Abstract

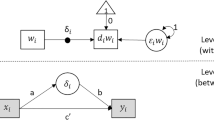

Ansari et al. (Psychometrika 67:49–77, 2002) applied a multilevel heterogeneous model for confirmatory factor analysis to repeated measurements on individuals. While the mean and factor loadings in this model vary across individuals, its factor structure is invariant. Allowing the individual-level residuals to be correlated is an important means to alleviate the restriction imposed by configural invariance. We relax the diagonality assumption of the residual covariance matrix and estimate it using a formal Bayesian Lasso method. The approach improves goodness of fit and avoids ad hoc one-at-a-time manipulation of entries in the covariance matrix via modification indices. We illustrate the approach using simulation studies and real data from an ecological momentary assessment.


References

Ansari, A., Jedidi, K., & Dubé, L. (2002). Heterogeneous factor analysis models: A Bayesian approach. Psychometrika, 67, 49–77.

Arminger, G., & Stein, P. (1997). Finite mixtures of covariance structure models with regressors. Sociological Methods and Research, 26, 148–182.

Asparouhov, T., Hamaker, E. L., & Muthén, B. (2017). Dynamic structural equation models. Structural Equation Modeling: A Multidisciplinary Journal, 25(3), 359–388.

Averill, J. R., Catlin, G., & Chon, K. K. (1990). Rules of Hope. New York, NY: Springer.

Benjamini, Y., & Yekutieli, D. (2001). The control of the false discovery rate in multiple testing under dependency. Annals of Statistics, 29, 1165–1188.

Bollen, K. A. (1989). Structural equation models. New York: Wiley.

Borkenau, P., & Ostendorf, F. (1998). The Big Five as states: How useful is the five-factor model to describe intra-individual variations over time? Journal of Research in Personality, 32, 202–221.

Bringmann, L. F., Vissers, N., Wichers, M., Geschwind, N., Kuppens, P., Peeters, F., et al. (2013). A network approach to psychopathology: New insights into clinical longitudinal data. PLoS ONE, 8(4), e60188.

Browne, M. W., & Nesselroade, J. R. (2005). Representing psychological processes with dynamic factor models: Some promising uses and extensions of ARMA time series models. In A. Maydeu-Olivares & J. J. McArdle (Eds.), Contemporary psychometrics: A Festschrift for Roderick P. McDonald (pp. 415–452). Mahwah, NJ: Erlbaum.

Chow, S. M., Tang, N., Yuan, Y., Song, X., & Zhu, H. (2011). Bayesian estimation of semiparametric dynamic latent variable models using the Dirichlet process prior. British Journal of Mathematical and Statistical Psychology, 64(1), 69–106.

Csikszentmihalyi, M., & Larson, R. (1987). Validity and reliability of the experience-sampling method. Journal of Nervous and Mental Disease, 175, 526–536.

De Roover, K. D., Vermunt, J. K., Timmerman, M. E., & Ceulemans, E. (2017). Mixture simultaneous factor analysis for latent variables between higher level units of multilevel data. Structural Equation Modeling: A Multidisciplinary Journal, 24(4), 506–523.

Diener, E., & Emmons, R. A. (1984). The independence of positive and negative affect. Journal of Personality and Social Psychology, 47, 1105–1117.

Dunn, E. C., Masyn, K. E., Johnston, W. R., & Subramanian, S. V. (2015). Modeling contextual effects using individual-level data and without aggregation: An illustration of multilevel factor analysis (MLFA) with collective efficacy. Population Health Metrics, 13, 12–22.

Gelfand, A. E. (1996). Model determination using sampling-based methods. In W. R. Gilks, S. Richardson, & D. J. Spiegelhalter (Eds.), Markov Chain Monte Carlo in Practice (pp. 145–161). London: Chapman & Hall.

Gelman, A. (1996). Inference and monitoring convergence. In W. R. Gilks, S. Richardson, & D. J. Spiegelhalter (Eds.), Markov Chain Monte Carlo in Practice (pp. 131–144). London: Chapman & Hall.

Gelman, A., Roberts, G. O., & Gilks, W. R. (1996). Efficient Metropolis jumping rules. In J. M. Bernardo, J. O. Berger, A. P. Dawid, et al. (Eds.), Bayesian statistics (Vol. 5, pp. 599–607). New York: Oxford University Press.

Gelman, A., & Meng, X. L. (1998). Simulating normalizing constants: From importance sampling to bridge sampling to path sampling. Statistical Science, 13, 163–185.

Geman, S., & Geman, D. (1984). Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Transactions on Pattern Analysis and Machine Intelligence, 6, 721–741.

Gilks, W. R., Richardson, S., & Spiegelhalter, D. J. (1996). Markov chain Monte Carlo in practice. London: Chapman & Hall.

Geisser, S., & Eddy, E. (1979). A predictive approach to model selection. Journal of the American Statistical Association, 74, 153–160.

Goldstein, H., & Browne, W. (2005). Multilevel factor analysis models for continuous and discrete data. In A. Maydeu-Olivares & J. J. McArdle (Eds.), Contemporary psychometrics: A Festschrift for Roderick P. McDonald (pp. 453–475). Mahwah, NJ: Erlbaum.

Goldstein, H., Healy, M. J. R., & Rasbash, J. (1994). Multilevel time series models with applications to repeated measure data. Statistics in Medicine, 13, 1643–1655.

Hans, C. (2009). Bayesian lasso regression. Biometrika, 96(4), 835–845.

Hastings, W. K. (1970). Monte Carlo sampling methods using Markov chains and their application. Biometrika, 57, 97–109.

Heck, R. H. (1999). Multilevel modeling with SEM. In S. L. Thomas & R. H. Heck (Eds.), Introduction to multilevel modeling techniques (pp. 89–127). Mahwah, NJ: Lawrence Erlbaum Associates Inc.

Jöreskog, K. G., & Sörbom, D. (1984). LISREL VI user’s guide. Mooresville, IN: Scientific Software.

Kaplan, D. (1990). Evaluating and modifying covariance structure models: A review and recommendation. Multivariate Behavioral Research, 25, 137–155.

Kass, R. E., & Raftery, A. E. (1995). Bayes factors. Journal of the American Statistical Association, 90, 773–795.

Kelderman, H., & Molenaar, P. C. (2007). The effect of individual differences in factor loadings on the standard factor model. Multivariate Behavioral Research, 42(3), 435–456.

Khondker, Z. S., Zhu, H. T., Chu, H. T., Lin, W. L., & Ibrahim, J. G. (2013). The Bayesian covariance lasso. Statistics and Its Interface, 6(2), 243–259.

Krone, T., Albers, C. J., Kuppens, P., & Timmerman, M. E. (2018). A multivariate statistical model for emotion dynamics. Emotion, 18(5), 739–754.

Lee, S. Y. (2007). Structural equation modelling: A Bayesian approach. New York: Wiley.

Lee, S. Y., & Song, X. Y. (2012). Basic and advanced Bayesian structural equation modeling: With applications in the medical and behavioral sciences. New York: Wiley.

Longford, N. T., & Muthén, B. O. (1992). Factor analysis for clustered observations. Psychometrika, 57, 581–597.

Lopes, H. F., & West, M. (2004). Bayesian model assessment in factor analysis. Statistica Sinica, 14, 41–67.

Lu, Z. H., Chow, S. M., & Loken, E. (2016). Bayesian factor analysis as a variable-selection problem: Alternative priors and consequences. Multivariate Behavioral Research, 51(4), 519–539.

Lu, J., Huet, C., & Dubé, L. (2011). Emotional reinforcement as a protective factor for healthy eating in home settings. The American Journal of Clinical Nutrition, 94(1), 254–261.

Maydeu-Olivares, A., & Coffman, D. L. (2006). Random intercept item factor analysis. Psychological Methods, 11(4), 344–362.

MacCallum, R. C. (1995). Model specification: Procedures, strategies, and related issues. In R. H. Hoyle (Ed.), Structural equation modeling: Concepts, issues, and applications. Thousand Oaks, CA: SAGE.

MacCallum, R. C., Roznowski, M., & Necowitz, L. B. (1992). Model modifications in covariance structure analysis: The problem of capitalization on chance. Psychological Bulletin, 111(3), 490–504.

McArdle, J. J. (1982). Structural equation modeling of an individual system: Preliminary results from ‘A case study in episodic alcoholism’. Department of Psychology, University of Denver. (Unpublished manuscript).

Metropolis, N., Rosenbluth, A. W., Rosenbluth, M. N., Teller, A. H., & Teller, E. (1953). Equation of state calculations by fast computing machines. Journal of Chemical Physics, 21, 1087–1091.

Merz, E. L., & Roesch, S. C. (2011). Modeling trait and state variation using multilevel factor analysis with PANAS daily diary data. Journal of Research in Personality, 45(1), 2–9.

Molenaar, P. C. M. (1985). A dynamic factor model for the analysis of multivariate time series. Psychometrika, 50(2), 181–202.

Molenaar, P. C. M., & Campbell, C. G. (2009). The new person-specific paradigm in psychology. Current Directions in Psychological Science, 18(2), 112–117.

Muthén, B. O. (1991). Multilevel factor analysis of class and student achievement components. Journal of Educational Measurement, 28, 338–354.

Muthén, B. O. (1994). Multilevel covariance structure analysis. Sociological Methods and Research, 22, 376–398.

Muthén, B. O. (2007). Latent variable hybrids: Overview of old and new models. In G. R. Hancock & K. M. Samuelsen (Eds.), Advances in latent variable mixture models (pp. 1–24). Charlotte, NC: Information Age.

Muthén, L. K., & Muthén, B. O. (1998–2013). Mplus user’s guide (7th ed.). Los Angeles, CA: Muthén & Muthén.

Nesselroade, J. R., McArdle, J. J., Aggen, S. H., & Meyers, J. M. (2002). Alternative dynamic factor models for multivariate time-series analyses. In D. M. Moskowitz & S. L. Hershberger (Eds.), Modeling intraindividual variability with repeated measures data: Advances and techniques (pp. 235–265). Mahwah, NJ: Erlbaum.

O’Malley, A. J., & Zaslavsky, A. M. (2008). Domain-level covariance analysis for multilevel survey data with structured nonresponse. Journal of the American Statistical Association, 103(484), 1405–1418.

Park, T., & Casella, G. (2008). The Bayesian lasso. Journal of the American Statistical Association, 103(482), 681–686.

Pan, J. H., Ip, E. H., & Dubé, L. (2017). An alternative to post-hoc model modification in confirmatory factor analysis: The Bayesian Lasso. Psychological Methods, 22(4), 687–704.

Reise, S. P., Kim, D. S., Mansolf, M., & Widaman, K. F. (2016). Is the bifactor model a better model or is it just better at modeling implausible responses? Application of iteratively reweighted least squares to the Rosenberg self-esteem scale. Multivariate Behavioral Research, 51(6), 818–838.

Reise, S. P., Ventura, J., Nuechterlein, K. H., & Kim, K. H. (2005). An illustration of multilevel factor analysis. Journal of Personality Assessment, 84, 126–136.

Schmukle, S. C., Egloff, B., & Burns, L. R. (2002). The relationship between positive and negative affect in the positive and negative affect schedule. Journal of Research in Personality, 36(5), 463–475.

Shiffman, S. (2000). Real-time self-report of momentary states in the natural environment: Computerized Ecological Momentary Assessment. In A. Stone, J. Turkkan, J. Jobe, et al. (Eds.), The science of self-report: Implications for research and practice (pp. 277–296). Mahwah, NJ: Lawrence Erlbaum Associates.

Song, H., & Ferrer, E. (2012). Bayesian estimation of random coefficient dynamic factor models. Multivariate Behavioral Research, 47(1), 26–60.

Song, X. Y., Tang, N. S., & Chow, S. M. (2012). A Bayesian approach for generalized random coefficient structural equation models for longitudinal data with adjacent time effects. Computational Statistics & Data Analysis, 56(12), 4190–4203.

Sörbom, D. (1989). Model modification. Psychometrika, 54(3), 371–384.

Stakhovych, S., Bijmolt, T. H., & Wedel, M. (2012). Spatial dependence and heterogeneity in Bayesian factor analysis: A cross-national investigation of Schwartz values. Multivariate Behavioral Research, 47(6), 803–839.

Tanner, M. A., & Wong, W. H. (1987). The calculation of posterior distributions by data augmentation (with discussion). Journal of the American Statistical Association, 82, 528–550.

Wang, H. (2012). Bayesian graphical lasso models and efficient posterior computation. Bayesian Analysis, 7(4), 867–886.

Yung, Y. F. (1997). Finite mixtures in confirmatory factor analysis models. Psychometrika, 62, 297–330.

Zhang, Z., & Nesselroade, J. R. (2007). Bayesian estimation of categorical dynamic factor models. Multivariate Behavioral Research, 42, 729–756.


This work was supported by the National Natural Science Foundation of China (NSFC 31871128) and the MOE (Ministry of Education) Project of Humanities and Social Science of China (18YJA190013), and the NSF Grant (SES-1424875) and NIH Grant (1UL1TR001420) from the United States.

Appendix: Conditional Distributions for the Block Gibbs Sampler


1. The full conditional distribution for the latent factor scores \(\varvec{\omega}_{1gi}\) is multivariate normal and is given by

$$\begin{aligned} \varvec{\omega}_{1gi} \mid \mathbf{y}_{gi}, \varvec{\mu}_g, \varvec{\theta} \sim N[\varvec{\omega}_{1gi}^*, \mathbf{V}_{\omega_{1gi}}], \end{aligned} \tag{15}$$

where \(\mathbf{V}_{\omega_{1gi}} = (\varvec{\Lambda}_1^T \varvec{\Psi}_{1g}^{-1} \varvec{\Lambda}_1 + \varvec{\Phi}_{1g}^{-1})^{-1}\) and \(\varvec{\omega}_{1gi}^* = \mathbf{V}_{\omega_{1gi}} \varvec{\Lambda}_1^T \varvec{\Psi}_{1g}^{-1} (\mathbf{y}_{gi} - \varvec{\mu}_g)\).
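As a rough illustration of Eq. (15), the following NumPy sketch draws one vector of level-1 factor scores. The function name `draw_factor_scores`, its argument names, and the toy values are assumptions made for this example and do not come from the article.

```python
import numpy as np

def draw_factor_scores(y, mu_g, Lambda1, Psi1g, Phi1g, rng):
    """Draw omega_1gi from its normal full conditional, Eq. (15).

    Returns the draw together with the conditional mean and covariance.
    All names are illustrative, not taken from the article.
    """
    Psi_inv = np.linalg.inv(Psi1g)
    # V = (Lambda1' Psi^{-1} Lambda1 + Phi^{-1})^{-1}
    V = np.linalg.inv(Lambda1.T @ Psi_inv @ Lambda1 + np.linalg.inv(Phi1g))
    V = (V + V.T) / 2                      # remove round-off asymmetry
    # omega* = V Lambda1' Psi^{-1} (y - mu_g)
    mean = V @ Lambda1.T @ Psi_inv @ (y - mu_g)
    return rng.multivariate_normal(mean, V), mean, V

# Toy example: three indicators loading on one factor (arbitrary values).
rng = np.random.default_rng(0)
Lambda1 = np.array([[1.0], [0.8], [0.6]])
Psi1g = np.array([[1.0, 0.2, 0.0],
                  [0.2, 1.0, 0.1],
                  [0.0, 0.1, 1.0]])        # non-diagonal residual covariance
Phi1g = np.array([[1.0]])
y = np.array([1.2, 0.7, 0.1])
mu_g = np.zeros(3)
omega, omega_star, V_omega = draw_factor_scores(y, mu_g, Lambda1, Psi1g, Phi1g, rng)
```

Because \(\varvec{\Psi}_{1g}\) is not diagonal in this model, the sketch inverts the full matrix rather than dividing by a vector of residual variances.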

2. The full conditional distribution for the individual-specific intercept \(\varvec{\mu}_g\) is a multivariate normal distribution given by

$$\begin{aligned} \varvec{\mu}_g \mid \mathbf{Y}_g, \varvec{\Omega}_{1g}, \varvec{\Omega}_2, \varvec{\theta} \sim N[\varvec{\mu}_g^*, \mathbf{V}_{\mu_g}], \end{aligned} \tag{16}$$

where \(\mathbf{V}_{\mu_g} = (N_g \varvec{\Psi}_{1g}^{-1} + \varvec{\Psi}_2^{-1})^{-1}\) and \(\varvec{\mu}_g^* = \mathbf{V}_{\mu_g}[\varvec{\Psi}_2^{-1}(\varvec{\mu} + \varvec{\Lambda}_2\varvec{\omega}_{2g}) + \sum_{i=1}^{N_g}\varvec{\Psi}_{1g}^{-1}(\mathbf{y}_{gi} - \varvec{\Lambda}_1\varvec{\omega}_{1gi})]\).

3. Let \(\varvec{\Lambda}_{1k}^T\) be the kth row of \(\varvec{\Lambda}_1\), \(\varvec{\Lambda}_{1(-k)}\) be the submatrix of \(\varvec{\Lambda}_1\) with the kth row deleted, \(\mathbf{Y}_{gk}\) be the submatrix of \(\mathbf{Y}_g\) consisting of only the kth row, and \(\mathbf{Y}_{g(-k)}\) be the submatrix of \(\mathbf{Y}_g\) with the kth row deleted. Note that \(\varvec{\Psi}_{1g}\) is not diagonal; without loss of generality, we partition and rearrange the columns of \(\varvec{\Psi}_{1g}\) as follows:

$$\begin{aligned} \varvec{\Psi}_{1g} = \left( \begin{array}{cc} \varvec{\Psi}_{1(-kk)} & \varvec{\psi}_{1k} \\ \varvec{\psi}_{1k}^T & \psi_{1kk} \end{array}\right), \end{aligned}$$

where \(\psi_{1kk}\) is the kth diagonal element of \(\varvec{\Psi}_{1g}\), \(\varvec{\psi}_{1k} = (\psi_{1k1}, \cdots, \psi_{1k,k-1}, \psi_{1k,k+1}, \cdots, \psi_{1kp})^T\) is the vector of all off-diagonal elements of the kth column, and \(\varvec{\Psi}_{1(-kk)}\) is the \((p-1) \times (p-1)\) matrix resulting from deleting the kth row and kth column from \(\varvec{\Psi}_{1g}\). We suppress the subscript g in the definitions of \(\varvec{\Psi}_{1(-kk)}\), \(\varvec{\psi}_{1k}\) and \(\psi_{1kk}\). It can be shown that, for \(k=1, 2, \ldots, p\),

$$\begin{aligned} \varvec{\Lambda}_{1k} \mid \mathbf{Y}, \varvec{\Omega}_1, \varvec{\mu}_g, \varvec{\theta} \sim N[\varvec{\Lambda}_{1k}^*, \mathbf{V}_{\Lambda_{1k}}], \end{aligned} \tag{17}$$

where

$$\begin{aligned} \mathbf{V}_{\Lambda_{1k}} &= \left( \sum_{g=1}^G \varvec{\Psi}_{1gk}^{*-1}\varvec{\Omega}_{1g}\varvec{\Omega}_{1g}^T + \mathbf{H}_{01k}^{-1}\right)^{-1}, \\ \varvec{\Lambda}_{1k}^* &= \mathbf{V}_{\Lambda_{1k}}\left( \sum_{g=1}^G \varvec{\Psi}_{1gk}^{*-1} \varvec{\Omega}_{1g} \mathbf{Y}_{gk}^{*T} + \mathbf{H}_{01k}^{-1}\varvec{\Lambda}_{01k}\right), \\ \varvec{\Psi}_{1gk}^* &= \psi_{1kk} - \varvec{\psi}_{1k}^T\varvec{\Psi}_{1(-kk)}^{-1}\varvec{\psi}_{1k}, \end{aligned}$$

and \(\mathbf{Y}_{gk}^*\) is the matrix with elements

$$\begin{aligned} y_{gik}^* = y_{gik} - \mu_{gk} - \varvec{\psi}_{1k}^T\varvec{\Psi}_{1(-kk)}^{-1}(\mathbf{y}_{gi(-k)} - \varvec{\mu}_{g(-k)} - \varvec{\Lambda}_{1(-k)}\varvec{\omega}_{1gi}), \end{aligned}$$

where \(\mathbf{y}_{gi(-k)}\) is the vector \(\mathbf{y}_{gi}\) with the kth element deleted and \(\varvec{\mu}_{g(-k)}\) is the vector \(\varvec{\mu}_g\) with the kth element deleted.
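The conditional variance \(\varvec{\Psi}_{1gk}^*\) and the adjusted response \(y_{gik}^*\) are the usual quantities obtained when one coordinate of a multivariate normal residual is conditioned on the others. A minimal NumPy sketch for a single observation is given below; the function name `adjusted_response` and the toy numbers are hypothetical and serve only to make the indexing concrete.

```python
import numpy as np

def adjusted_response(y, mu, Lambda1, omega, Psi, k):
    """Return (y_star, cond_var) for indicator k of one observation.

    y_star   : y_k - mu_k minus the part predicted by the other residuals
    cond_var : psi_kk - psi_k' Psi_(-kk)^{-1} psi_k  (Psi*_{1gk} in the text)
    Names are illustrative, not taken from the article.
    """
    rest = np.arange(len(y)) != k                 # mask for every index except k
    psi_k = Psi[rest, k]                          # off-diagonal part of column k
    # w = Psi_(-kk)^{-1} psi_k, via a linear solve instead of an explicit inverse
    w = np.linalg.solve(Psi[np.ix_(rest, rest)], psi_k)
    cond_var = Psi[k, k] - psi_k @ w
    other_resid = y[rest] - mu[rest] - Lambda1[rest] @ omega
    y_star = y[k] - mu[k] - w @ other_resid
    return y_star, cond_var

# Toy example with p = 3 indicators and one factor (arbitrary values).
Psi = np.array([[1.0, 0.3, 0.0],
                [0.3, 1.0, 0.2],
                [0.0, 0.2, 1.0]])
Lambda1 = np.array([[1.0], [0.8], [0.6]])
y = np.array([1.2, 0.7, 0.1])
mu = np.zeros(3)
omega = np.array([0.5])
y_star, cond_var = adjusted_response(y, mu, Lambda1, omega, Psi, k=1)
```

One way to check such a routine is the Schur-complement identity: `cond_var` should equal the reciprocal of the kth diagonal element of the inverse of `Psi`, and for a diagonal `Psi` the adjustment vanishes so that `y_star` reduces to `y[k] - mu[k]`.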

4. For \(g=1, 2, \ldots, G\), let \(\tilde{\mathbf{S}}_g = \sum_{i=1}^{N_g} (\mathbf{y}_{gi} - \varvec{\mu}_g - \varvec{\Lambda}_1\varvec{\omega}_{1gi})(\mathbf{y}_{gi} - \varvec{\mu}_g - \varvec{\Lambda}_1\varvec{\omega}_{1gi})^T\). Following Wang (2012), an efficient block Gibbs sampler updates \(\varvec{\Sigma}_g\) one column at a time after appropriate reparametrization. Denote the running index for observed measurements by k. For \(k=1, 2, \ldots, p\), partition and rearrange the columns of \(\varvec{\Sigma}_g\) and \(\tilde{\mathbf{S}}_g\) as follows:

$$\begin{aligned} \varvec{\Sigma}_g = \left( \begin{array}{cc} \varvec{\Sigma}_{(-kk)} & \varvec{\sigma}_k \\ \varvec{\sigma}_k^T & \sigma_{kk} \end{array}\right), \quad \tilde{\mathbf{S}}_g = \left( \begin{array}{cc} \tilde{\mathbf{S}}_{(-kk)} & \tilde{\mathbf{s}}_k \\ \tilde{\mathbf{s}}_k^T & \tilde{s}_{kk} \end{array}\right), \end{aligned}$$

where \(\sigma_{kk}\) is the kth diagonal element of \(\varvec{\Sigma}_g\), \(\varvec{\sigma}_k = (\sigma_{k1}, \ldots, \sigma_{k,k-1}, \sigma_{k,k+1}, \ldots, \sigma_{kp})^T\) is the vector of all off-diagonal elements of the kth column, and \(\varvec{\Sigma}_{(-kk)}\) is the \((p-1) \times (p-1)\) matrix resulting from deleting the kth row and kth column from \(\varvec{\Sigma}_g\). Similarly, \(\tilde{s}_{kk}\) is the kth diagonal element of \(\tilde{\mathbf{S}}_g\), \(\tilde{\mathbf{s}}_k\) is the vector of all off-diagonal elements of the kth column of \(\tilde{\mathbf{S}}_g\), and \(\tilde{\mathbf{S}}_{(-kk)}\) is the matrix with the kth row and kth column of \(\tilde{\mathbf{S}}_g\) deleted. For notational simplicity, we also suppress the subscript g in the above definitions. Let \(\varvec{\beta}_g = \varvec{\sigma}_k\) and \(\gamma_g = \sigma_{kk} - \varvec{\sigma}_k^T\varvec{\Sigma}_{(-kk)}^{-1}\varvec{\sigma}_k\). It can be shown that

$$\begin{aligned} \varvec{\beta}_g \mid \varvec{\Sigma}_{(-kk)}, \mathbf{Y}, \varvec{\Omega}_1, \varvec{\theta}, \varvec{\tau}_g, \lambda_g &\sim N[-\mathbf{V}_{\beta_g}\tilde{\mathbf{s}}_k, \mathbf{V}_{\beta_g}], \end{aligned} \tag{18}$$

$$\begin{aligned} \gamma_g \mid \varvec{\Sigma}_{(-kk)}, \mathbf{Y}, \varvec{\Omega}_1, \varvec{\theta}, \varvec{\tau}_g, \lambda_g &\sim Gamma\left( \frac{N_g}{2}+1, \frac{\tilde{s}_{kk} + \lambda_g}{2}\right), \end{aligned} \tag{19}$$

where \(\mathbf{V}_{\beta_g} = \left[ (\tilde{s}_{kk} + \lambda_g)\varvec{\Sigma}_{(-kk)}^{-1} + \mathbf{M}_{\varvec{\tau}_g}^{-1} \right]^{-1}\) and \(\mathbf{M}_{\varvec{\tau}_g}\) is the diagonal matrix with diagonal elements \(\tau_{g,k1}, \ldots, \tau_{g,k(k-1)}, \tau_{g,k(k+1)}, \ldots, \tau_{g,kp}\). After simulating observations from the above conditional distributions, we obtain \(\varvec{\sigma}_k = \varvec{\beta}_g\), \(\varvec{\sigma}_k^T = \varvec{\beta}_g^T\) and \(\sigma_{kk} = \gamma_g + \varvec{\sigma}_k^T\varvec{\Sigma}_{(-kk)}^{-1}\varvec{\sigma}_k\); the last column and row of the rearranged \(\varvec{\Sigma}_g\) are thus updated together. At the end, \(\varvec{\Psi}_{1g} = \varvec{\Sigma}_g^{-1}\) is computed. The conditional distribution of \(\varvec{\tau}_g\) can be expressed as follows: for \(i<j\),

$$\begin{aligned} \frac{1}{\tau_{g,ij}} \mid \mathbf{Y}, \varvec{\Omega}_1, \varvec{\theta}, \varvec{\Sigma}_g, \lambda_g \sim IG\left( \sqrt{\frac{\lambda_g^2}{\sigma_{g,ij}^2}}, \lambda_g^2\right), \end{aligned} \tag{20}$$

where IG(a, b) indicates the inverse Gaussian distribution with mean a and shape parameter b. Additionally, it can be shown that the conditional distribution of \(\lambda_g\) is

$$\begin{aligned} \lambda_g \mid \mathbf{Y}, \varvec{\Omega}_1, \varvec{\theta}, \varvec{\Sigma}_g, \varvec{\tau}_g \sim Gamma\left( \alpha_g + \frac{p(p+1)}{2}, \beta_g + \frac{1}{2}\sum_{i=1}^p \sum_{j=1}^p |\sigma_{g,ij}|\right). \end{aligned} \tag{21}$$
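The column-wise update in Eqs. (18)–(21) can be sketched in NumPy as follows, for a single individual g. This is an illustrative reading of the formulas rather than the authors' implementation: the function names, the toy data, and the hyperparameter values are assumptions, and the gamma distributions are assumed to be in shape-rate form (as in Wang, 2012), so the rate is inverted to give NumPy's scale argument. NumPy's `wald` generator is the inverse Gaussian distribution parameterized by mean and shape.

```python
import numpy as np

def update_column(Sigma, S_tilde, tau, lam, n_g, k, rng):
    """Update column/row k of Sigma via Eqs. (18)-(19); returns a new matrix."""
    p = Sigma.shape[0]
    rest = np.arange(p) != k                        # all indices except k
    Sigma_rest_inv = np.linalg.inv(Sigma[np.ix_(rest, rest)])
    s_k, s_kk = S_tilde[rest, k], S_tilde[k, k]
    # V_beta = [(s_kk + lam) Sigma_(-kk)^{-1} + M_tau^{-1}]^{-1}
    V_beta = np.linalg.inv((s_kk + lam) * Sigma_rest_inv
                           + np.diag(1.0 / tau[rest, k]))
    V_beta = (V_beta + V_beta.T) / 2                # remove round-off asymmetry
    beta = rng.multivariate_normal(-V_beta @ s_k, V_beta)
    # Assumption: Gamma(shape, rate); NumPy expects scale = 1 / rate.
    gamma = rng.gamma(n_g / 2 + 1, 2.0 / (s_kk + lam))
    new = Sigma.copy()
    new[rest, k] = beta
    new[k, rest] = beta
    new[k, k] = gamma + beta @ Sigma_rest_inv @ beta
    return new

def update_tau(Sigma, lam, rng):
    """Draw 1/tau_ij for i < j from the inverse Gaussian in Eq. (20)."""
    p = Sigma.shape[0]
    iu = np.triu_indices(p, k=1)
    inv_tau = rng.wald(np.sqrt(lam**2 / Sigma[iu]**2), lam**2)
    tau = np.ones((p, p))                           # diagonal entries are unused
    tau[iu] = 1.0 / inv_tau
    tau[(iu[1], iu[0])] = tau[iu]                   # mirror to the lower triangle
    return tau

def update_lambda(Sigma, alpha, beta_rate, rng):
    """Draw lambda from the gamma full conditional in Eq. (21)."""
    p = Sigma.shape[0]
    shape = alpha + p * (p + 1) / 2
    rate = beta_rate + 0.5 * np.abs(Sigma).sum()
    return rng.gamma(shape, 1.0 / rate)

# Toy run: residuals for one individual, p = 4 indicators, N_g = 50 occasions.
rng = np.random.default_rng(1)
p, n_g = 4, 50
resid = rng.standard_normal((n_g, p))
S_tilde = resid.T @ resid
Sigma, tau, lam = np.eye(p), np.ones((p, p)), 1.0
for _ in range(20):
    for k in range(p):
        Sigma = update_column(Sigma, S_tilde, tau, lam, n_g, k, rng)
    tau = update_tau(Sigma, lam, rng)
    lam = update_lambda(Sigma, alpha=1.0, beta_rate=0.01, rng=rng)  # hypothetical hyperparameters
Psi_1g = np.linalg.inv(Sigma)                       # residual covariance matrix
```

Because \(\gamma_g\) is positive and \(\varvec{\Sigma}_{(-kk)}\) is positive definite, each column update keeps \(\varvec{\Sigma}_g\) positive definite, which is the main practical appeal of this reparametrization.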

5. The full conditional distribution for the latent factor scores \(\varvec{\omega}_{2g}\) is multivariate normal and is given by

$$\begin{aligned} \varvec{\omega}_{2g} \mid \varvec{\mu}_g, \varvec{\theta} \sim N[\varvec{\omega}_{2g}^*, \mathbf{V}_{\omega_{2g}}], \end{aligned} \tag{22}$$

where \(\mathbf{V}_{\omega_{2g}} = (\varvec{\Lambda}_2^T \varvec{\Psi}_2^{-1} \varvec{\Lambda}_2 + \varvec{\Phi}_2^{-1})^{-1}\) and \(\varvec{\omega}_{2g}^* = \mathbf{V}_{\omega_{2g}} \varvec{\Lambda}_2^T \varvec{\Psi}_2^{-1} (\varvec{\mu}_g - \varvec{\mu})\).

6. The full conditional distribution of \(\varvec{\mu}\) can be written as a multivariate normal distribution given by

$$\begin{aligned} \varvec{\mu} \mid \mathbf{U}, \varvec{\Omega}_2, \varvec{\theta} \sim N[\varvec{\mu}^*, \mathbf{V}_{\mu}], \end{aligned} \tag{23}$$

where \(\mathbf{U} = (\varvec{\mu}_1, \ldots, \varvec{\mu}_G)\), \(\mathbf{V}_{\mu} = (G \varvec{\Psi}_2^{-1} + \mathbf{H}_{02}^{-1})^{-1}\), and \(\varvec{\mu}^* = \mathbf{V}_{\mu}[\mathbf{H}_{02}^{-1}\varvec{\mu}_{02} + \sum_{g=1}^G \varvec{\Psi}_2^{-1} (\varvec{\mu}_g - \varvec{\Lambda}_2\varvec{\omega}_{2g})]\).

7. The full conditional distribution of \(\varvec{\Phi}_2\) can be written as an inverse Wishart distribution given by

$$\begin{aligned} \varvec{\Phi}_2 \mid \mathbf{U}, \varvec{\Omega}_2, \varvec{\theta} \sim IW\left( \sum_{g=1}^G \varvec{\omega}_{2g}\varvec{\omega}_{2g}^T + \mathbf{R}_{02}^{-1}, \rho_{01} + G\right), \end{aligned} \tag{24}$$

where \(IW(\cdot, \cdot)\) denotes the inverse Wishart distribution.

8. The full conditional distribution of \(\psi_{2k}^{-1}\) can be written as a gamma distribution: for \(k=1, 2, \ldots, p\),

$$\begin{aligned} \psi_{2k}^{-1} \mid \mathbf{U}, \varvec{\Omega}_2, \varvec{\theta} \sim Gamma\left( \frac{G}{2} + \alpha_{02k}, \frac{1}{2}\sum_{g=1}^G(\mu_{gk} - \mu_k - \varvec{\Lambda}_{2k}^T\varvec{\omega}_{2g})^2 + \beta_{02k}\right), \end{aligned} \tag{25}$$

where \(\mu_{gk}\) and \(\mu_k\) are the kth elements of \(\varvec{\mu}_g\) and \(\varvec{\mu}\), respectively, and \(\varvec{\Lambda}_{2k}^T\) is the kth row of \(\varvec{\Lambda}_2\).
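Since Eq. (25) has the same form for every k, the p draws can be made in one vectorized call. The sketch below assumes that the gamma distribution is in shape-rate form and that \(\mathbf{U}\) is stored as a p × G array with one column per individual; the function name `draw_psi2`, the common hyperparameters, and the simulated inputs are illustrative only.

```python
import numpy as np

def draw_psi2(U, mu, Lambda2, Omega2, alpha0, beta0, rng):
    """Draw the level-2 residual variances psi_2k, k = 1..p, via Eq. (25).

    U       : p x G array whose columns are the individual intercepts mu_g
    Omega2  : q x G array of level-2 factor scores
    Assumption: Gamma(shape, rate), so NumPy's scale argument is 1 / rate.
    """
    resid = U - mu[:, None] - Lambda2 @ Omega2       # p x G residual matrix
    shape = U.shape[1] / 2 + alpha0
    rate = 0.5 * (resid ** 2).sum(axis=1) + beta0    # one rate per indicator
    precision = rng.gamma(shape, 1.0 / rate)         # draws of psi_2k^{-1}
    return 1.0 / precision

# Toy data: p = 3 indicators, one level-2 factor, G = 40 individuals.
rng = np.random.default_rng(2)
p, q, G = 3, 1, 40
Lambda2 = np.array([[1.0], [0.7], [0.5]])
Omega2 = rng.standard_normal((q, G))
mu = np.zeros(p)
U = mu[:, None] + Lambda2 @ Omega2 + 0.5 * rng.standard_normal((p, G))
psi2 = draw_psi2(U, mu, Lambda2, Omega2, alpha0=2.0, beta0=1.0, rng=rng)
```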

9. The full conditional distribution of \(\varvec{\Lambda}_{2k}\) can be written as a multivariate normal distribution given by

$$\begin{aligned} \varvec{\Lambda}_{2k} \mid \mathbf{U}, \varvec{\Omega}_2, \varvec{\theta} \sim N[\varvec{\Lambda}_{2k}^*, \mathbf{V}_{\Lambda_{2k}}], \end{aligned} \tag{26}$$

where \(\mathbf{V}_{\Lambda_{2k}} = (\psi_{2k}^{-1}\varvec{\Omega}_2\varvec{\Omega}_2^T + \mathbf{H}_{02k}^{-1})^{-1}\), \(\varvec{\Lambda}_{2k}^* = \mathbf{V}_{\Lambda_{2k}}(\psi_{2k}^{-1} \varvec{\Omega}_2 \mathbf{U}_k^* + \mathbf{H}_{02k}^{-1}\varvec{\Lambda}_{02k})\) and \(\mathbf{U}_k^{*T} = (\mu_{1k} - \mu_k, \mu_{2k} - \mu_k, \ldots, \mu_{Gk} - \mu_k)\).

10. The full conditional distribution for \(\mathbf{R}\) can be expressed as an inverse Wishart distribution as follows:

$$\begin{aligned} \mathbf{R} \mid \varvec{\Phi}_{11}, \varvec{\Phi}_{12}, \ldots, \varvec{\Phi}_{1G}, \varvec{\theta} \sim IW\left( \sum_{g=1}^G \varvec{\Phi}_{1g}^{-1} + \mathbf{R}_0^{-1}, \rho_0 + G\rho\right). \end{aligned} \tag{27}$$

11. According to the model and the prior distribution defined, respectively, in Eqs. (5) and (12), the full conditional distribution of \(\rho'\) is as follows:

$$\begin{aligned} p(\rho' \mid \varvec{\Phi}_{11}, \ldots, \varvec{\Phi}_{1G}, \varvec{\theta}) \propto \prod_{g=1}^G p(\varvec{\Phi}_{1g} \mid \rho', \mathbf{R}) \times p(\rho'), \end{aligned} \tag{28}$$

where the distribution of \(\varvec{\Phi}_{1g}\) is Wishart and the prior distribution of \(\rho'\) is truncated univariate normal. This full conditional distribution is therefore nonstandard, and the Metropolis–Hastings (MH) algorithm is employed to sample from it. Given the current value \(\rho'^{(l)}\), we simulate a new candidate \(\rho'^*\) from the proposal distribution \(N(\rho'^{(l)}, \varphi)\). The candidate \(\rho'^*\) is then accepted as the new observation \(\rho'^{(l+1)}\) with probability

$$\begin{aligned} \text{min}\left\{ 1, \frac{\prod_{g=1}^G p(\varvec{\Phi}_{1g} \mid \rho'^*, \mathbf{R}) \times p(\rho'^*)}{\prod_{g=1}^G p(\varvec{\Phi}_{1g} \mid \rho'^{(l)}, \mathbf{R}) \times p(\rho'^{(l)})}\right\}, \end{aligned}$$

where the tuning parameter \(\varphi\) is selected such that the average acceptance rate is around 0.25 (Gelman et al. 1996).
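The accept/reject rule above is a random-walk Metropolis–Hastings step with a symmetric normal proposal. A generic sketch, working on the log scale for numerical stability, is shown below. The target used here is a toy stand-in (a normal density truncated below at 3), not the Wishart-based conditional of Eq. (28); the function names are hypothetical, and `step_sd` treats the proposal spread as a standard deviation, which is an assumption about how \(\varphi\) is parameterized.

```python
import numpy as np

def rw_metropolis(log_target, x0, step_sd, n_iter, rng):
    """Random-walk Metropolis sampler for a scalar parameter.

    log_target : function returning the log of the unnormalized target;
                 it should return -inf outside the support (truncation).
    x0         : starting value, which must lie inside the support.
    Returns the chain and the observed acceptance rate.
    """
    x, lp = x0, log_target(x0)
    draws = np.empty(n_iter)
    accepted = 0
    for l in range(n_iter):
        cand = x + step_sd * rng.standard_normal()   # symmetric proposal
        lp_cand = log_target(cand)
        # Accept with probability min{1, target(cand) / target(x)}.
        if np.log(rng.uniform()) < lp_cand - lp:
            x, lp = cand, lp_cand
            accepted += 1
        draws[l] = x
    return draws, accepted / n_iter

def toy_log_target(x):
    """Stand-in target: N(5, 1) truncated to x > 3 (unnormalized, log scale)."""
    if x <= 3.0:
        return -np.inf
    return -0.5 * (x - 5.0) ** 2

rng = np.random.default_rng(3)
draws, rate = rw_metropolis(toy_log_target, x0=5.0, step_sd=2.4,
                            n_iter=2000, rng=rng)
```

In practice `step_sd` would be adjusted over pilot runs until `rate` is near the 0.25 target mentioned in the text; candidates outside the truncation region receive a log target of minus infinity and are always rejected.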

12. The full conditional distribution of \(\varvec{\Phi}_{1g}\) can be written as an inverse Wishart distribution given by: for \(g=1, 2, \ldots, G\),

$$\begin{aligned} \varvec{\Phi}_{1g} \mid \varvec{\Omega}_1, \varvec{\theta} \sim IW\left( \sum_{i=1}^{N_g} \varvec{\omega}_{1gi}\varvec{\omega}_{1gi}^T + \mathbf{R}^{-1}, \rho + N_g\right). \end{aligned} \tag{29}$$


Cite this article

Pan, J., Ip, E.H. & Dubé, L. Multilevel Heterogeneous Factor Analysis and Application to Ecological Momentary Assessment. Psychometrika 85, 75–100 (2020). https://doi.org/10.1007/s11336-019-09691-4
