Abstract

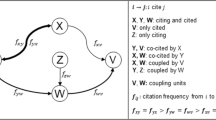

The debate over which similarity measure should be used in co-citation analysis has lasted for many years. The most debated measure is Pearson’s correlation coefficient r, which has been used as a similarity measure in the literature since the technique was introduced in the 1980s. However, some researchers have criticized the use of Pearson’s r as a similarity measure because it does not fully satisfy the mathematical conditions of a good similarity metric and/or because it does not meet some natural requirements that a similarity measure should satisfy. Alternative similarity measures, such as the cosine measure and the chi-square measure, have also been proposed and studied, which has led to further controversy over which similarity measure to use in co-citation analysis. In this article, we put forth the hypothesis that researchers with high mutual information are closely related to each other and that mutual information can be used as a similarity measure in author co-citation analysis. Given two researchers, the mutual information between them can be calculated from their publications and their co-citation frequencies. A mutual information proximity matrix is then constructed. This proximity matrix meets the two requirements formulated by Ahlgren et al. (J Am Soc Inf Sci Technol 54(6):550–560, 2003). We conduct several experimental studies to validate our hypothesis and compare the results obtained using mutual information with those obtained using other similarity measures.


References

Ahlgren, P., Jarneving, B., & Rousseau, R. (2003). Requirements for a cocitation similarity measure, with special reference to Pearson’s correlation coefficient. Journal of the American Society for Information Science and Technology, 54(6), 550–560.

Bennasar, M., Hicks, Y., & Setchi, R. (2015). Feature selection using joint mutual information maximisation. Expert Systems with Applications, 42(22), 8520–8532.

Berger, A., & Lafferty, J. (2017). Information retrieval as statistical translation. ACM SIGIR Forum, 51(2), 219–226.

Cover, T. M., & Thomas, J. A. (2006). Elements of information theory (2nd ed.). Hoboken: Wiley.

Egghe, L. (2010). Good properties of similarity measures and their complementarity. Journal of the American Society for Information Science and Technology, 61(10), 2151–2160.

Fiedor, P. (2014). Networks in financial markets based on the mutual information rate. Physical Review E, 89(5), 052801.

Gao, S., Ver Steeg, G., & Galstyan, A. (2015). Efficient estimation of mutual information for strongly dependent variables. In Artificial Intelligence and Statistics (pp. 277–286).

Hausser, J., & Strimmer, K. (2014). Entropy: Estimation of entropy, mutual information and related quantities. R package version, 1(1).

Leydesdorff, L. (2008). On the normalization and visualization of author co-citation data: Salton’s Cosine versus the Jaccard index. Journal of the American Society for Information Science and Technology, 59(1), 77–85.

Leydesdorff, L., & Vaughan, L. (2006). Co-occurrence matrices and their applications in information science: Extending ACA to the Web environment. Journal of the American Society for Information Science and Technology, 57(12), 1616–1628.

Megnigbeto, E. (2013). Controversies arising from which similarity measures can be used in co-citation analysis. Malaysian Journal of Library & Information Science, 18(2), 25–31.

Paninski, L. (2003). Estimation of entropy and mutual information. Neural Computation, 15(6), 1191–1253.

Sammon, J. W. (1969). A nonlinear mapping for data structure analysis. IEEE Transactions on Computers, C-18(5), 401–409.

Shannon, C. E. (1948). A mathematical theory of communication. Bell System Technical Journal, 27(3), 379–423; 27(4), 623–656.

Van Eck, N. J., & Waltman, L. (2008). Appropriate similarity measures for author co-citation analysis. Journal of the American Society for Information Science and Technology, 59(10), 1653–1661.

White, H. D. (2003). Author cocitation analysis and Pearson’s r. Journal of the American Society for Information Science and Technology, 54(13), 1250–1259.

White, H. D., & Griffith, B. C. (1981). Author cocitation: A literature measure of intellectual structure. Journal of the American Society for Information Science, 32, 163–171.

Zhang, Z. (2012). Entropy estimation in Turing’s perspective. Neural Computation, 24(5), 1368–1389.

Zhang, Z., & Zheng, L. (2015). A mutual information estimator with exponentially decaying bias. Statistical Applications in Genetics and Molecular Biology, 14(3), 243–252.


Appendices

The Estimation of MIPM

Given \(M\) authors, the mutual information proximity matrix (MIPM) is an \(M\times M\) matrix with \(M(M-1)\) off-diagonal entries and \(M\) diagonal entries. The off-diagonal entries are the mutual information values, defined in Eq. (1), between all pairs of the \(M\) authors. The diagonal entries are the entropies, defined in Eq. (3), of the \(M\) authors. These entries are as follows:

- Off-diagonal entries: \(\mathbb{MI}(X_s,X_t)\), \(s,t=1,\dots,M\), \(s\ne t\).
- Diagonal entries: \(\mathbb{MI}(X_m,X_m)=H(X_m)\), \(m=1,\dots,M\).
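Written out in full, the matrix described by these two kinds of entries has the following layout (a restatement of the definitions above, using the same symbols); the identity on the right expresses the symmetry of mutual information in its two arguments.

```latex
\mathrm{MIPM} =
\begin{pmatrix}
H(X_1)               & \mathbb{MI}(X_1,X_2) & \cdots & \mathbb{MI}(X_1,X_M)\\
\mathbb{MI}(X_2,X_1) & H(X_2)               & \cdots & \mathbb{MI}(X_2,X_M)\\
\vdots               & \vdots               & \ddots & \vdots\\
\mathbb{MI}(X_M,X_1) & \mathbb{MI}(X_M,X_2) & \cdots & H(X_M)
\end{pmatrix},
\qquad
\mathbb{MI}(X_s,X_t) = \mathbb{MI}(X_t,X_s).
```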

The mutual information \(\mathbb{MI}(X_s,X_t)\) and entropy \(H(X_m)\) in the MIPM can be estimated using Eqs. (2) and (4), respectively, given the corresponding co-citation frequency distribution tables (like Table 1) and citation frequency distribution tables (like Table 2).

For illustration, we show how to estimate the off-diagonal and diagonal entries from Tables 1 and 2, respectively.

Off-diagonal entries

Assume that the co-citation frequency distribution table between the first and second oeuvres is given by Table 1. The mutual information \(\mathbb{MI}(X_1,X_2)\) between them can then be estimated as follows:

1. Obtain the total co-citation count: \(n=\sum_{i}\sum_{j}f_{ij}=230\).
2. Calculate the joint and marginal relative frequencies: \(\hat{p}_{ij}=f_{ij}/230\), \(\hat{p}_{i,\cdot}=\sum_j\hat{p}_{ij}\), and \(\hat{p}_{\cdot,j}=\sum_i\hat{p}_{ij}\).
3. Substitute these values into Eq. (2) (in the “Methods” section) to obtain \(\widehat{\mathbb{MI}}(X_1,X_2)=0.2197\).
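The three steps can be sketched in Python as follows. This is a minimal sketch that assumes Eq. (2) is the plug-in (maximum-likelihood) estimator \(\sum_i\sum_j \hat{p}_{ij}\ln[\hat{p}_{ij}/(\hat{p}_{i,\cdot}\,\hat{p}_{\cdot,j})]\) with natural logarithms. Eq. (2) is not reproduced in this appendix and the paper also cites bias-corrected estimators (Zhang and Zheng 2015), so this sketch may not reproduce the reported 0.2197 for Table 1. The function name `plugin_mutual_information` is hypothetical.

```python
import math
from typing import Sequence


def plugin_mutual_information(table: Sequence[Sequence[float]]) -> float:
    """Plug-in estimate of mutual information (in nats) from a co-citation
    frequency table, where table[i][j] is the frequency f_ij.

    Assumption: Eq. (2) is the plug-in estimator; this is an illustration,
    not necessarily the paper's exact formula.
    """
    # Step 1: total co-citation count n = sum_i sum_j f_ij.
    n = sum(sum(row) for row in table)
    if n <= 0:
        raise ValueError("table must contain a positive total count")

    # Step 2: joint relative frequencies p_ij and the two marginals.
    p = [[f / n for f in row] for row in table]
    p_row = [sum(row) for row in p]        # p_{i,.}
    p_col = [sum(col) for col in zip(*p)]  # p_{.,j} (rows must have equal length)

    # Step 3: sum p_ij * ln(p_ij / (p_{i,.} p_{.,j})) over nonzero cells;
    # cells with p_ij = 0 contribute 0 by the convention 0 ln 0 = 0.
    mi = 0.0
    for i, row in enumerate(p):
        for j, pij in enumerate(row):
            if pij > 0:
                mi += pij * math.log(pij / (p_row[i] * p_col[j]))
    return mi
```

Because the estimate depends on the table only through the sum over cells and the two marginals, transposing the table gives the same value, which matches the symmetry of the MIPM.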

Since the MIPM is symmetric, \(\widehat{\mathbb{MI}}(X_2,X_1)=\widehat{\mathbb{MI}}(X_1,X_2)=0.2197\). Hence each co-citation frequency distribution table yields estimates of two entries of the MIPM.

Repeating this procedure for each of the corresponding co-citation frequency distribution tables gives estimates of the other off-diagonal entries of the MIPM.

Diagonal entries

Assume that the citation frequency distribution table of the \(m\)-th oeuvre is given by Table 2. The \(m\)-th diagonal entry of the MIPM can then be estimated as follows:

1. Obtain the total citation count: \(n_m=\sum_{i}f_{mi}=92\).
2. Calculate the relative frequencies: \(\hat{p}_{mi}=f_{mi}/92\).
3. Substitute these values into Eq. (4) (in the “Methods” section) to obtain \(\hat{H}(X_m)=1.6802\).
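A corresponding Python sketch of the diagonal-entry procedure, assuming Eq. (4) is the plug-in estimator \(-\sum_i \hat{p}_{mi}\ln\hat{p}_{mi}\) in nats. Eq. (4) may instead be a bias-corrected estimator such as that of Zhang (2012), in which case the values will differ. The function name `plugin_entropy` is hypothetical.

```python
import math
from typing import Sequence


def plugin_entropy(freqs: Sequence[float]) -> float:
    """Plug-in estimate of Shannon entropy (in nats) from the citation
    frequencies f_mi of one oeuvre.

    Assumption: Eq. (4) is the plug-in estimator; use math.log2 instead of
    math.log if entropies are wanted in bits.
    """
    # Step 1: total citation count n_m = sum_i f_mi.
    n_m = sum(freqs)
    if n_m <= 0:
        raise ValueError("frequencies must have a positive total")

    h = 0.0
    for f in freqs:
        if f > 0:  # zero-frequency categories contribute 0 (0 ln 0 = 0)
            p = f / n_m          # Step 2: relative frequency p_mi
            h -= p * math.log(p)  # Step 3: accumulate -p ln p
    return h
```

Feeding in the frequencies of Table 2 (total 92) would reproduce the reported 1.6802 only if Eq. (4) is indeed the plug-in estimator. Under the same plug-in assumption, the identity \(\mathbb{MI}(X_m,X_m)=H(X_m)\) also holds at the estimate level: a diagonal co-citation table with entries \(f_{mi}\) has plug-in mutual information equal to `plugin_entropy` of those frequencies.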

Similarly, repeating this procedure for each of the corresponding citation frequency distribution tables gives estimates of the other diagonal entries of the MIPM.
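Once the individual estimates are available, the MIPM can be assembled as in the sketch below. Because the matrix is symmetric, only the \(M(M-1)/2\) pairs with \(s<t\) need a co-citation table. The function name `assemble_mipm`, the 0-based indexing (the text uses \(1,\dots,M\)), and the dictionary layout are illustrative assumptions.

```python
from typing import Dict, List, Sequence, Tuple


def assemble_mipm(entropies: Sequence[float],
                  pairwise_mi: Dict[Tuple[int, int], float]) -> List[List[float]]:
    """Build the M x M mutual information proximity matrix.

    entropies[m] is the estimate of H(X_m) (diagonal entries);
    pairwise_mi[(s, t)] with s < t is the estimate of MI(X_s, X_t).
    Indices are 0-based here, unlike the 1-based text.
    """
    m = len(entropies)
    mipm = [[0.0] * m for _ in range(m)]

    # Diagonal entries: MI(X_m, X_m) = H(X_m).
    for k, h in enumerate(entropies):
        mipm[k][k] = h

    # Off-diagonal entries: one estimate fills two symmetric cells.
    for (s, t), mi in pairwise_mi.items():
        if not (0 <= s < t < m):
            raise ValueError(f"expected 0 <= s < t < {m}, got {(s, t)}")
        mipm[s][t] = mi
        mipm[t][s] = mi

    # Every unordered pair must have been supplied.
    missing = [(s, t) for s in range(m) for t in range(s + 1, m)
               if (s, t) not in pairwise_mi]
    if missing:
        raise ValueError(f"missing pairwise estimates for {missing}")
    return mipm
```

Rejecting pairs with \(s\ge t\) keeps each co-citation table mapped to exactly one key, so a pair cannot silently receive two conflicting estimates.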

Cite this article

Zheng, L. Using mutual information as a cocitation similarity measure. Scientometrics 119, 1695–1713 (2019). https://doi.org/10.1007/s11192-019-03098-9
