Abstract

An effective method of construction of a linear estimator of AUC in the finite interval, optimal in the minimax sense, is developed and demonstrated for five PK models. The models may be given as an explicit C(t) relationship or defined by differential equations. For high variability and rich sampling the optimal method is only moderately advantageous over optimal trapezoid or standard numerical approaches (Gauss-Legendre or Clenshaw-Curtis quadratures). The difference between the optimal estimator and other methods becomes more pronounced with a decrease in sample size or decrease in the variability. The described estimation method may appear useful in development of limited-sampling strategies for AUC determination, as an alternative to the widely used regression-based approach. It is indicated that many alternative approaches are also possible.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The estimation of integral of a function, or area under the curve (AUC), plays an important role in biomedicine including in pharmacokinetic (PK) or toxicokinetic studies that are designed to estimate the integral of concentration of the investigated compound in plasma or tissue taken over time in a given interval.

Within the framework of linear compartmental models AUC established after an intravenous administration is used to calculate the drug clearance. Regardless of which one of the possible linear models is valid, the result is determined solely by the drug dose and AUC. This is one of the reasons for AUC to be a central concept of the so-called model-independent pharmacokinetics. Regulatory institutions use AUC as a measure of extent of absorption in order to assess a bioequivalence of different formulations of the same drug [1–3].

Many authors have addressed the problem of practical determination of AUC. A few papers contain reviews of numerous algorithms designed to estimate this parameter [4, 5]. Their authors do not pay any particular attention to the choice of sampling times, assuming they are given a priori, maybe following a certain traditional pattern. On the other hand, several authors have investigated the optimal designs which should yield the most accurate results using specific approaches.

The optimal sampling is especially important if the number of measured concentrations is limited due to ethical and economical reasons. Duffull et al. searched for the optimal design with limited sampling for the log-trapezoid rule applied to the two-exponential equation [6]. A vast number of authors (MEDLINE reports about 200 papers [7]) developed limited sampling strategies for estimating AUC either of specific drugs, for instance cyclosporine [8, 9] or midazolam [10], or in the general situation [11].

Katz and D’Argenio found optimal sampling times for estimating the integral of bi- and triexponential equations using the trapezoid rule [12]. In their original form these results are of limited usefulness, since the authors have assumed fixed values of parameters of those equations. In practice such parameters are more or less uncertain (if they were certain then the exact AUC would also be known and no estimation would be necessary). The present paper extends, in several directions, the ideas of that work. Namely, the aims of this work are threefold:

-

1.

Find an optimal sample schedule design for trapezoid rule under parameter uncertainty.

-

2.

Find an optimal quadrature within the class of linear combination (LC) quadrature approximations [13]. This is to be achieved by simultaneous adjustment of both sampling design and coefficients (weights) of quadrature.

-

3.

Evaluate obtained quadratures for five common PK models by means of simulation.

In order to reach these aims it is required to:

-

1.

set up transparent criteria of optimality;

-

2.

state necessary assumptions to make the problem tractable;

-

3.

express it as an optimization problem (in this case a minimax problem);

-

4.

invent an approach to practically solve this optimization problem;

-

5.

implement it (as a numerical analysis task);

-

6.

plan and execute optimum searches and simulations;

-

7.

evaluate the results.

These points would be reported in the following sections after introducing the necessary background.

Method

Background: theory of point estimation

While concepts to be introduced here are quite general elements of a statistical decision theory, the presentation will focus on their application to the theory of point estimation or, even more specifically, to the estimation of AUC in PK models. Let \(\varvec{\theta }\) be a vector of standard (primary) PK parameters. True AUC may be expressed as a function of these parameters, \(AUC(\varvec{\theta })\).

An estimator of an unknown quantity is a function of observations that in some way approximates that quantity. As any experiment suffers from various nuisance factors, the observations do not follow any deterministic model exactly. Thus the estimation can be imperfect. One may intuitively expect that certain estimators can perform better than others. However, if one would like to transform this intuition into a scientific method, a rigorous criterion is needed that would enable comparison of estimators.

Towards this end, a statistical theory of point estimation [14] introduces a loss function \(L ( \hat{Q}, \varvec{\theta })\) to assess the precision of an estimator \(\hat{Q}\). A loss function is always non-negative and it should yield 0 if an estimation is exact, i.e. if \(\hat{Q} = AUC (\varvec{\theta }).\) A quadratic loss function

is a typical choice, and it is one of two that will be considered here. The intuition behind this concept is rather simple: a wrong estimation causes a loss. The worse the estimate is, the higher the loss will be. Or: the closer the estimator value to the true AUC is, the lower the loss will be.

Another important concept is that of risk function. It is defined as an expectation of a loss. The expectation is taken over a joint probability distribution of all \(\varepsilon _i\).

The expectation of a continuous random variable \(X\) is defined as the first moment of its probability density function \(\varphi (x)\):

While this definition might appear somewhat abstract, the expectation has quite simple interpretation, due to a fundamental law of statistics: The Law of Large Numbers. If one repeatedly observes a quantity that is random, then the average result should tend to the expectation of that quantity (for a rigorous formulation of that law refer to any textbook on statistics, e.g. [15]). Thus, if one were to repeat estimation with a given estimator, then the average loss should tend to that estimator’s risk.

Estimators may be compared on a basis of their risk. Unfortunately, there is no estimator which is better than any other estimator for any parameter vector \(\varvec{\theta }\) [14]. Nonetheless, an optimal estimator in that sense (called a uniformly optimal estimator) could be found if some restrictions were applied to the class of considered estimators. Perhaps the most popular one is the case of unbiased estimators with quadratic loss function. They are called minimum variance unbiased estimators (MVUE).

There can be little benefit from MVUE in pharmacokinetics, however, as unbiased estimators do not exist for standard PK models with usual parameters (or, at least, they remain unknown).

Despite these problems, a good or even the best estimator, according to reasonable criteria, can be constructed in a somewhat different manner. The choice between two standard solutions depends on whether \(\varvec{\theta }\) is treated as a random variable with known distribution or as an unknown parameter. In the first case an estimator that minimizes expectation of the risk (over \(\varvec{\theta }\) distribution) is searched for. It corresponds to a Bayesian approach. In the second case a maximum possible risk is minimized. That is a minimax problem.

The former approach requires a knowledge of statistical distribution of PK parameters, while in the second method one needs to only know the range of those parameters. In what follows, the latter choice is analysed in detail.

Approach of Katz and D’Argenio

The trapezoid rule may be expressed by the following equation

where

In practice, an integral is calculated based on measured concentrations. Assume the integrand follows a certain PK model with a parameter vector \(\varvec{\theta }\). Thus, what is measured can be expressed by the equation:

where \(\varepsilon _i\) is a random error. The result

is therefore a random variable. It may be considered as a linear estimator of an unknown integral AUC.

In their paper Katz and D’Argenio proposed “selecting observation times so to minimize the expected value of the squared difference between the estimator and the exact value of the integral”. These authors assumed specific parameters of a multiexponential equation (i.e. they fixed \(\varvec{\theta }\)) and numerically found a minimum over a vector of sampling times, \(\varvec{t}\):

In terms of previous subsection a sampling schedule that minimizes the risk of trapezoid rule estimator was found. In order to include a variance model of \(\varepsilon _i\) they used the decomposition of the expectation of the squared error into a variance of estimator and its squared bias

the well-known result (for derivation see, for instance, Lehmann [14] or Katz and D’Argenio [12]). If all \(\varepsilon _i\) are independently distributed with the mean 0 and variance \(\sigma _i ^2\), then

Note that detailed knowledge of the statistical distribution of \(\varepsilon _i\) is not required; any distribution with existing and known variance can be accepted. One way to make \(\sigma _i^2\) known is to express it as a function of C. The heteroschedastic model with a constant coefficient of variation (c v ) is often assumed in PK models. It will be followed in the present study.

As the specific values of parameters need to be assumed, AUC may be calculated based on them and there is no need to use concentrations at all. Katz and D’Argenio made a rudimentary analysis of how the precision of the trapezoid method in an optimal setting changes while changing some (not all) parameters. It may be done in a more systematic manner within the framework of minimax approach and this will be one extension the present paper makes to the ideas of Katz and D’Argenio.

Optimal sample schedule design

Using the minimax approach the minimization of the risk for a given \(\varvec{\theta }\) should be replaced by minimization of the maximum risk that can be obtained for any possible vector of parameters. Thus the problem in Eq. 3 should be rewritten as

For highly variable drugs it might be more useful to minimize a relative rather than an absolute error. This corresponds to the division of the loss function L by the squared \(AUC(\varvec{\theta })\).

An optimum based on the above loss function (let it be called relative, in contrast to an absolute function L) will be analysed in the present study. The corresponding risk function will be denoted by \(R_r(\varvec{\theta })\) and an expression for the required optimum takes on the form:

In bioequivalence studies AUC is being compared on a logarithmic scale; equivalently the comparison focuses on ratios and not differences of AUC values. Also, in clinical application, an estimation error of 10 units is certainly more important if the true AUC equals 50 units than in a case when it is as large as 300. In both situations use of a relative risk would be preferable over an absolute risk.

Substituting Eqs. 5 and 6 into Eq. 4 and taking into account Eq. 7 yields a useful expression for the risk function that is a subject of minimax optimization:

This is a nested problem: there is a maximization over \(\varvec{\theta }\) within a minimization over \(\varvec{t}\). It means that for each trial sampling schedule the maximization in \(\varvec{\theta }\) has to be conducted and finally that sampling schedule which yielded the smallest maximum is to be chosen. An optimization is constrained on both levels: PK parameter values should stay within a reasonable range; sampling times should be arranged in ascending order and they should be included in the integration interval. This constrained optimization problem cannot be solved analytically and an application of numerical algorithms is required. The optimization seems to be one of the most difficult branches of numerical analysis. There is always the possibility that the solution found would appear suboptimal. In order to minimize this possibility, the advanced methods are required using as much information as is available. This is especially important on an inner level: unstable results may mislead outer level optimization routine and thwart convergence.

The necessary information includes first and second derivatives of the inner objective in \(\varvec{\theta }\), since they describe important geometrical properties of the hypersurface along which the maximum is searched for. The first derivative, i.e. the gradient, is a local measure of the descent of the surface, while the second derivative (the Hessian) is a measure of the local curvature. More details are given in a subsection on numerical methods.

Optimal quadrature design

Another dimension in which the described approach can be improved on is the choice of a quadrature. Trapezoid and log-trapezoid rules are the simplest approaches to determine AUC. Their drawbacks were frequently indicated [16, 17]. In the present work not only sampling points are free parameters. Some additional freedom is allowed regarding the choice of a quadrature. This may be done by considering a certain class of quadratures parameterized in a reasonable manner. Here the class of LC methods, as previously introduced by the present author [13], will be considered.

The LC-type quadrature by definition has the form given by Eq. 1, but w i can now be arbitrary; they do not need to satisfy Eq. 2. t i are knots of the quadrature and w i are its weights.

Surprisingly, many approaches used in pharmacokinetics belong to this class. In particular, linear trapezoidal, hyperbolic trapezoidal [18], Lagrange [19] and spline [4] methods all are of the LC type. The same applies to other popular general methods of numerical analysis, like Newton-Côtes, Gauss-Legendre (GL) or Clenshaw-Curtis (CC) quadratures [20].

Allowing weights vector \(\varvec{w}\) as well as knots vector \(\varvec{t}\) to be manipulated, results in a final statement for the minimum that should be reached by the optimal method:

Equation 8 remains valid.

In this problem a maximization over \(\varvec{\theta }\) is nested within a minimization in both \(\varvec{t}\) and \(\varvec{w}\). The dimension of search space of outer minimization is twice as large as it is for the optimal trapezoid problem from previous subsection. The optimization task is thus more difficult than in the previous case, and gradient and Hessian of inner maximization, as discussed in the preceding subsection, may prove even more useful.

Examples chosen for evaluation

Five examples of hypothetical models were analyzed:

-

1.

one-compartment linear model with first-order absorption, single dose;

-

2.

one-compartment linear model with first-order absorption, steady state;

-

3.

two-compartment linear model with iv bolus administration;

-

4.

one-compartment model with iv bolus administration and Michaelis-Menten elimination;

-

5.

one-compartment model with first-order absorption and Michaelis-Menten elimination.

An interval from \(t_1=0\) to \(t_2=24\mathrm {h}\) was chosen for \(AUC\). The dosing interval (\(\tau\)) for model 2 also matched that interval: \(\tau =24\mathrm {h}\). For each model either ranges or fixed values of parameters were assumed. They are given in Table 1 along with the resultant AUC range. It is explained in the Appendix why some parameters can be fixed and which of them to choose.

Three levels of coefficient of variation were assumed: 10%, 5% and 0% (no random error). They were combined with three sample sizes chosen for presentation: \(n=2\), 4 and 6. This is a range of sample sizes considered, among others, while developing limited sampling strategies. As it will be seen, at greater samples the difference between different approaches becomes less evident.

Numerical methods

MATLAB 7.11 (R2011b) software (The MathWorks, Inc.) with Minimization Toolbox [21, 22] was used to perform the required computations. A set of M-files written for that purpose is available as supplementary material to this paper. In order to obtain a solution to the minimax problem the ‘fmincon’ procedure from Minimization Toolbox was used on two levels of recursion. This is a general-purpose constrained nonlinear minimization procedure. It contains a variety of optimization algorithms that can be chosen by the user. At outer level (minimization in \(\varvec{w}\) and \(\varvec{t}\)) an active-set optimization was chosen, the choice of which implies application of sequential quadratic programming (SQP) algorithm. This algorithm belongs to the quasi-Newton family and it can perform better, if derivatives of the objective function are available. It is explained in the Appendix, how the gradient of maximum risk can be computed.

At the inner level (maximization of risk in \(\varvec{\theta }\)) a trust-region-reflective algorithm was preferred. It requires both gradient (first-order derivatives) and Hessian (second-order derivatives) of the objective (in this case the risk function itself) in model parameters.

If a concentration-time dependence and AUC can be expressed in a closed-form by model parameters, then the exact calculation of derivatives imposes no significant difficulty. This is the case with linear compartment models. In the case of one-compartment nonlinear model, with Michaelis-Menten elimination and bolus iv input,C(t) cannot be expressed in a closed-form, but it may be represented by an implicit function. By theorem on implicit function derivative, differentiation of C(t) in this case does not introduce true complications either. Moreover, there is a closed-form expression for an AUC given C(t) at integral limits [17]. However, in order to keep the software as simple as possible, this solution has not actually been used. Conversely, the same solution has been applied to model 4, as it is described just below for model 5.

For the one-compartment nonlinear model, with Michaelis-Menten elimination and first-order input no closed-form solution exists, and a differential equation of the model has to be numerically solved. The MATLAB procedure ‘ode113’, implementing a variable order Adams-Bashforth-Moulton method was used to that purpose (for detailed description of all numerical algorithms used refer to MATLAB documentation [21, 22]). In addition to the required C(t) values, a differential equation solver can also yield a value of AUC along with derivatives of both C(t) and AUC. The necessary details are given in the Appendix.

For each combination of model, sample size and c v an optimal minimax method was found. Moreover, an optimal trapezoid method and either Gauss-Legendre or Clenshaw-Curtis approximation (whichever performed better) were also found.

The trapezoid method was subject to the following restriction: the last knot always had to be placed at the end of the time interval. On the other hand a linear extrapolation to the time zero was allowed for models with \(C_0>0\). This asymmetry was due to the fact that the trapezoid method with a linear extrapolation to \(t=\tau\) no longer belongs to the LC class. An extrapolation to \(t=0\) does not introduce that problem [13].

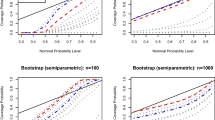

Simulations

In order to evaluate results, for each case 20,000 PK random profiles were simulated. PK parameters were uniformly drawn from their ranges. Based on them concentrations were calculated according to assumed models and Gaussian random noise with assumed c v was subsequently applied. Using those samples a bias, a root mean squared relative error (RMSRE), minimum and maximum absolute errors and their relative counterparts were estimated.

While the present approach has been developed with mild assumptions on statistical distribution of \(\varvec{\theta }\) and \(\varvec{\varepsilon }\), for simulation purposes a particular distribution had to be chosen. In order to perform a more demanding evaluation, one may use for \(\varvec{\theta }\) a distribution that results in a harder test than normal distribution or log-normal distribution, which are usually applied. A (multidimensional) uniform distribution creates the opportunity to scan a parameter space. It is a common choice in a Monte-Carlo optimum search.

The main rationale for simulations was to investigate those aspects of performance of optimal methods that do not comprise the criteria of optimality. The statistical parameters (RMSRE, bias, etc.) provide simple measures for that purpose. In addition they facilitate a simple check of results. The following inequality is to be expected for any method:

where \(Obj\), the objective, is the maximum risk found at chosen knots and weights. Moreover, in a case without random noise, the maximum observed relative deviance cannot be greater than the square root of the objective unless the optimization failed. This relation may be reversed in the presence of random error.

These inequalities are discussed in detail in the Appendix.

Results

The results of the optimum method search and the related simulations are compiled in Tables 2, 3, 4, 5, and 6. Footnote labels in the body of Table 2 are referred to in the discussion. They indicate examples of specific behaviour of results.

A few representative plots showing the quality of the predictions can be found in Figs. 1, 2, and 3. These plots show each simulated case as a small gray dot. Its abscissa equals to the true AUC value, i.e. calculated based on PK parameters values assumed in the simulation, and its ordinate represents the result of estimation. As it is quite common that maximum risk is reached at the extremal values of some or all parameters the special points simulated for these extremal values are indicated by open square symbols (\(\Box\)). Open triangles indicate those points at which the maximum estimation error appeared: \(\triangleleft\) and \(\triangleright\) are for maximum relative under- and overestimates, respectively; while \(\triangledown\) and \(\vartriangle\) are for maximum absolute under- and overestimated results in a plot. In these figures, on separate plots, the knots and weights of all three methods are also depicted. The position of each bar is that of a knot while its height represents a value of weight.

Performance of the investigated methods for Model 1 with n=6 and \(c_v=0.10\). Panels depict true vs estimated AUC by (a) optimal, (b) optimal trapezoid, and (c) Gauss-Legendre methods. Panel (d) displays knots and weights of these methods (one bar of optimal method is hidden behind bars of other methods)

Figure 4 contains sample spaghetti plots for all models at \(c_v=0.1\) and \(n=4\). Each 200th PK profile (of 20,000) is shown along with the corresponding concentrations measured at knots of investigated methods. Yet another manner of comparison of methods is displayed in Fig. 5. It contains a “mean” profile for Model 1, i.e. a profile simulated at midpoint values of PK parameters. The symbols are plotted at knots of the corresponding methods. The area covered by each symbol is proportional to its contribution to the total AUC.

Discussion

The objective for the optimal method was not always significantly less than for the optimal trapezoid (Table 2, footnote \(^{\mathrm{a}}\)). For a richer sampling (\(n=6\)) and higher variability (\(c_v=0.1\)) the maximum risk of all methods was comparable: the objective of the optimal method was never lower than 30% of the worst method’s objective. On the other hand for \(c_v=0\) the objectives differed to even more than five orders of magnitude. Differences also appeared to be more pronounced with a decrease in the sample size. It can also be confirmed by inspection of the Figures: patterns on Fig. 1 (richer sampling, higher variability) are rather similar across all three methods, while on Fig. 2 (very sparse sampling) and Fig. 3 (no variability, in addition) patterns are quite different.

Several maximum absolute deviances appeared to be inferior for the optimal method (Table 2, footnote \(^{\mathrm{b}}\)). Furthermore, it happened that the maximum relative deviance observed was worse for an optimal method than for one of the other methods (Table 2, footnote \(^{\mathrm{c}}\)). There was no contradiction in this, since the neighbourhood of such \(\varvec{\theta }\), at which other methods would perform worse than the optimal one, might simply has been missing in the simulation.

There was no clear superiority of either bias or RMSRE for the optimal method in comparison to other methods. Also, one cannot clearly indicate which one of the alternative methods had lower risk across the investigated models.

Even for as large a sample as \(n=6\), the maximum relative deviance was of order of 20% for any method at \(c_v=0.10\). Assuming the deviance of 20% is at the limit of usefulness, it could be provided in most cases also for \(n=2\) and \(c_v=0.05\) using the optimal method with relative risk.

Inequality 18 discussed in the Appendix was satisfied for samples with \(c_v>0\), confirming that random errors outweigh quadrature errors. For samples with \(c_v=0\) inequality 17 was satisfied, indicating successful optimization.

The precise definition of the optimal AUC estimation method depends on the choice of risk function, considered class of quadratures, and the interpretation of the PK parameters vector (Bayesian or minimax estimation). The present paper contains an analysis of the specific combination of these factors: quadratic loss function, LC quadratures, and minimax estimator. It was demonstrated how much progress may be made by transition from the simple trapezoid method to the optimal LC quadrature in the above sense.

That LC-quadratures are distinguished may be argued as follows: In the framework of linear pharmacokinetics they guarantee linearity of the AUC estimates. With non-linear quadratures the calculation of risk function can be quite difficult and it may require full knowledge of probability distribution of random errors. Also, an application of numerical integration algorithms, including Monte-Carlo, might be necessary. Likewise, the minimax approach enables more general treatment than the Bayesian framework, since the latter depends on the prior distribution of model parameters, which is not necessarily known. On the other hand, in order to successfully apply the present method, the appropriate PK model has to be identified beforehand and the range of certain PK parameters as well as \(c_v\) should be estimated.

Thus prior investigation of the drug on the target population is required. Along with observed clearer superiority on small samples it suggests that developing of limited sampling strategies may constitute an area of application for this approach.

At this point one may wonder, why not simply fit the model to the data and use obtained PK parameters to calculate AUC? Although it is possible in principle, it is an indirect solution, and as such it does not need to be optimal. To illustrate this the following analogy can be developed:

Gauss-Legendre quadrature of order n (i.e. having n knots) is exact for any polynomial of an order up to \(2n-1\). For instance, if a polynomial value is known at three properly chosen knots, this polynomial can be exactly integrated, provided its order is 5 or less. But no polynomial coefficients can be determined for polynomials of an order \(\ge 3\). In fact, there is a continuum of polynomials, passing through those given points, with the same integral; a few of them are depicted in Fig. 6.

While it is believed that the case discussed herein is an important one, it is by no means the only one that deserves analysis. In particular, non-linear quadratures are certainly worth investigation, despite the difficulties indicated above, as they are more general. The widely used log-trapezoid rule is a very simple instance of such a quadrature.

The AUC in the finite interval is more appropriate to the steady state. Amisaki gives an important insight into the problem of integration in the infinite interval [23]; this topic also appears to be worth further analysis.

Conclusions

Optimal linear minimax estimator of AUC in the finite interval can be effectively constructed for PK models, regardless of whether they are given by an explicit C(t) relationship or defined by the differential equations. The developed method may also be applied in other disciplines, where estimation of integrals from sparse and noisy data is essential.

The optimal method may appear significantly better than other considered methods for low variability samples. On the other hand, for larger samples with higher variability it is less advantageous and it may be replaced by the simpler method. In particular, GL and CC algorithms may then be considered, since their weights and knots do not depend on the model nor the range of model parameters.

There is no optimal AUC estimator in the universal sense, but what is meant by 'optimal' depends on so many factors that it appears that the answer to the question in the title should be positive.

The benefits of the minimax estimator with the LC quadrature and constant c v may be summarized as follows:

-

1.

To obtain the estimator one does not need to know PK parameters distribution; no covariance matrix is necessary. Only a reasonable range of parameters should be determined.

-

2.

Detailed knowledge on experimental error is also unimportant. Zero mean and constant c v conditions suffice.

-

3.

A construction of the estimator does not involve multidimensional integrals and their numerical approximations.

-

4.

A constant c v condition with a relative risk simplifies the estimator construction process, even for nonlinear models. This is discussed in the first subsection of the Appendix.

-

5.

In a sense, this estimator is more conservative than the Bayesian approach, since it minimizes an error in the worst possible scenario and not in an average situation as does the Bayesian approach.

As a closing remark a comment on the methodology being used for elaborating limited-sampling strategy for a number of drugs [6, 8–10] can be given. Formally, the applied quadrature differs from the LC type only by an additional constant term (the intercept), but the interpretation is quite different. Linear regression is postulated between AUC and concentrations measured at knots. The weights have the meaning of multiple regression coefficients. It might appear, that some kind of maximum likelihood estimator of AUC is constructed in this way. Note however, that postulate of linear dependency is not necessarily true in PK applications. The present paper also uses linear quadrature, but does not assume it is exact in the absence of random errors, as the linear regression approach does.

References

US Food and Drug Administration (2003) Guidance for Industry: Bioavailability and bioequivalence studies for orally administered drug products - general considerations. Center for Drug Evaluation and Research, Rockville

US Food and Drug Administration (2001) Guidance for Industry: Statistical approaches to establishing bioequivalence. Center for Drug Evaluation and Research, Rockville

European Medicines Agency (2010) Guideline on the investigation of bioequivalence. London

Yeh K, Kwan K (1978) A comparison of numerical integrating algorithms by trapezoidal, Lagrange, and spline approximation. J Pharmacokinet Biopharm 6:79–98

Purves R (1992) Optimum numerical integration methods for estimation of area-under-the curve (AUC) and area-under-the-moment-curve (AUMC). J Pharmacokinet Biopharm 20:211–226

Duffull S, Begg E, Deely J (1999) Development of a general method of limited sampling for the determination of AUC for a drug that displays two-compartment pharmacokinetics. Eur J Clin Pharmacol 55:213–219

Medline database (2014). Bethesda, Maryland: National Library of Medicine. http://pubmed.gov/. Accessed Sep 20 2014

Wilhelm AJ, de Graf P, Veldkamp AI, Janssen JJWM, Huijgens PC, Swart EL (2011) Populaton pharmacokinetics of ciclosporin in haematopoietic allogeneic stem cell transplantation with emphasis on limited sampling strategy. Br J Clin Pharmacol 73:553–563

Sibbald C, Seto W, Taylor T, Saunders EF, Doyle J, Dupuis LL (2008) Determination of area under the whole blood concentration versus time curve after first intravenous cyclosporine dose in children undergoing hematopoietic stem cell transplant: limited sampling strategies. Ther Drug Monit 30:434–438

Mueller SC, Drewelow B (2013) Evaluation of limited sampling models for prediction of oral midazolam AUC for CYP3A phenotyping and drug interaction studies. Eur J Clin Pharmacol 69:1127–1134

Sallas WM (1995) Development of limited sampling strategies for characteristics of a pharmacokinetic profile. J Pharmacokinet Biopharm 23(5):515–529

Katz D, D’Argenio DZ (1983) Experimental design for estimating integrals by numerical quadrature, with application to pharmacokinetic studies. Biometrics 39:621–628

Jawień W (1999) Variablility of the model independent AUC. The one sample per individual case. J Pharmacokinet Biopharm 27(4):437–464

Lehmann EL, Casella G (1998) Theory of point estimation, 2nd edn. Springer-Verlag, New York

Wasserman L (2004) All of statistics: a concise course in statistical inference. Springer texts in statistics. Springer, New York

Chiou W (1978) Critical evaluation of the potential error in pharmacokinetic studies of using linear trapezoidal rule method for the calculation of the area under the plasma level-time curve. J Pharmacokinet Biopharm 6:539–546

Gibaldi M, Perrier D (1982) Pharmacokinetics. Marcel Dekker, New York

Nedelman J, Gibiansky E (1996) The variance of a better AUC estimator for sparse, destructive sampling in toxicokinetics. J Pharm Sci 88:884–886

Rocci ML Jr, Jusko WJ (1983) Lagran program for area and moments in pharmacokinetic analysis. Comput Prog Biomed 16:203–216

Press WH, Teukolsky SA, Vetterling WT, Flannery BP (2007) Numerical recipes. The art of scientific computing, 3rd edn. Cambridge University Press, Cambridge

MathWorks (2008) Matlab 7 Getting Started Guide. The MathWorks, Natick

MathWorks (2011) Matlab Optimization Toolbox User’s Guide. The MathWorks, Natick

Amisaki T (2001) Gaussian quadrature as a numerical integration method for estimating area under the curve. Biol Pharm Bull 24(1):70–77

Author information

Authors and Affiliations

Corresponding author

Electronic supplementary material

Below is the link to the electronic supplementary material.

Appendix

Appendix

Reducing the search space

General considerations

Consider two parameter vectors, \(\varvec{\theta }\) and \(\varvec{\theta }'\), both in the assumed parameter range, such that there exists \(\kappa\) so that for any t

Then, in the case of constant c v , \(R(\varvec{\theta }')=\kappa ^2 R(\varvec{\theta })\) and \(R_r(\varvec{\theta }')=R_r(\varvec{\theta })\).

Thus, if there exists a subset \(\varTheta '\), of the parameter range \(\varTheta\), such that for any \(\varvec{\theta }\) in \(\varTheta\) there exists \(\varvec{\theta }'\) in \(\varTheta '\) so that Eq. 9 holds with \(\kappa \ge 1\), then the search space \(\varTheta\) can be reduced to \(\varTheta '\).

From practical reasons \(\varTheta\) is chosen as a multidimensional interval (or combination of such intervals):

If \(\varTheta '\) can be chosen so that for some i, \(\theta _i\) is fixed, then a dimension of search space will be reduced. This may significantly decrease the computing time.

Application to PK models

In the case of linear PK models Eq. 9 is satisfied automatically. For models 1 and 2 let the parameter range be chosen as

and \(\varTheta '\) as

For model 3 the choices might be

and

For model 4 one can write

This implies that if \(C(t)\) is a solution of model equation with parameters \(K_M\) and \(V_m\) and initial condition \(C(0)=C_0\), then \(\kappa C(t)\) is a solution of the same equation with model parameters (including initial concentration) multiplied by \(\kappa\). It may be viewed as a result of change in mass unit by factor \(\kappa\). If one chooses \(\varTheta '\) as

where \(\lambda =C_{0\;min}/C_{0\;max}\), then the choice for \(\varTheta\) might be

Finally, for model 5 one obtains

and

Outer objective derivative derivation

Let \(M(\varvec{\omega }) = \max _{\varvec{\theta }} R(\varvec{\theta },\varvec{\omega })\), where \(\varvec{\omega }\) is a vector combined from \(\varvec{t}\) and \(\varvec{w}\). The \(\varvec{\theta }^{(m)}\) that maximizes \(R\) clearly depends on \(\varvec{\omega }\), so one can write:

Thus

If at the maximizer \(\frac{\partial {R}}{\partial {\theta _i ^{(m)}}} \ne 0\), it implies that \(\theta _i^{(m)}\) must be at one of its bounds. Assuming that \(\frac{\partial {R}}{\partial {\theta _i ^{(m)}}}\) is continuous in \(\varvec{\omega }\), it will remain non-zero after an infinitesimal change in \(\omega _k\) and therefore the maximizer will remain at its bound. It means that \(\frac{\partial {\theta _i ^{(m)}}}{\partial {\omega _k}} = 0\). A maximizer may also be in the interior of \(\varTheta\) implying that \(\frac{\partial {R}}{\partial {\theta _i ^{(m)}}} = 0\). In any case

and

Treatment of models defined by differential equations

In order to obtain \(AUC\) along with \(C(t)\) as well as their derivatives in parameters the model differential equation

has to be supplemented with additional equations, the first of them being:

where \(AUC(t,\varvec{\theta })\) is calculated in the time interval from 0 to \(t\).

Define:

Then

what may be written as the subsequent equations:

and, in the similar way:

Finally, for the Hessian one obtains:

which yields the following equations:

and

The differential Eqs. (10 – 15) with initial conditions:

if solved, form the complete data needed by the optimization procedure.

In the case of iv administration, if \(C_0\) is chosen as a model parameter (along with \(K_M\) and \(V_m\)), so that \(\varvec{\theta }=(K_M,V_m,C_0)\) then

and

For the first-order absorption with no initial concentration \(\varvec{\theta }=(K_M,V_m,k_a,A)\) where \(A=F D k_a / V_d\)

and

Inequalities

In practice, the expectation of a random variate is always less than its maximal possible value. In most cases it should also be less than the maximum value observed, even for a sample of moderate size. Thus the following inequality must hold:

and also, almost certainly:

On the other hand, randomly drawn vectors \(\varvec{\theta }\) may miss the maximizer:

\(MSRE\) estimates an expectation of the risk and for a large sample it should provide a good approximation. On average, inequality 16 should also hold for expectation replaced by \(MSRE\). The relation between objective and maximum observed loss is more complicated, because inequalities 17 and 18 work in opposite directions. If the coefficient of variation \(c_v\) is very small, or there is no random error at all, the inequality in 17 may be replaced by an equal sign and inequality 18 becomes important. In a more realistic situation and with a sample large enough one may expect that random error will dominate the quadrature error, as Katz and D’Argenio pointed out. Then inequality 17 should prevail.

Taking square roots of these inequalities one may summarize them as

unless the \(c_v\) is 0 or very small, in which case the second inequality sign may be reversed.

Supplementary material

Additional documentation to this paper is available as electronic supplementary material. It consists of a set of Matlab M-files implementing minimax optimization, and plots of all simulations for Model 1. There are also several sample job configuration files used by a Matlab code. Interested readers may modify the Matlab code and/or configuration files to develop their own models.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Jawień, W. Searching for an optimal AUC estimation method: a never-ending task?. J Pharmacokinet Pharmacodyn 41, 655–673 (2014). https://doi.org/10.1007/s10928-014-9392-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10928-014-9392-y