Abstract

This article is an editorial, which makes the case that fusion breeding (that is using fusion neutrons to breed nuclear fuel for use in conventional nuclear reactors) is the best objective for the fusion program. To make the case, it reviews a great deal of plasma physics and fusion data. Fusion breeding could potentially play a key role in delivering large-scale sustainable carbon-free commercial power by mid-century. There is almost no chance that pure fusion can do that. The leading magnetic fusion concept, the tokamak, is subject to well-known constraints, which we have called conservative design rules, and review in this paper. These constraints will very likely prevent tokamaks from ever delivering economical pure fusion. Inertial fusion, in pure fusion mode, may ultimately be able to deliver commercial power, but the failure to date of the leading inertial fusion experiment, the National Ignition Campaign, shows that there are still large gaps in our understanding of laser fusion. Fusion breeding, based on either magnetic fusion or inertial fusion, greatly relaxes the requirements on the fusion reactor. It is also a much better fit to today’s and tomorrow’s nuclear infrastructure than is its competitor, fission breeding. This article also shows that the proposed fusion and fission infrastructure, ‘The Energy Park’, reviewed here, is sustainable, economically and environmentally sound, and poses little or no proliferation risk.


Introduction

The fusion program, both short term and long term, is in trouble, certainly in the United States, and likely worldwide. In addition to large cost overruns and failures to meet milestones, surely another reason is that pure fusion has almost no chance of meeting energy requirements on a time scale that anyone alive today can relate to. Hence the assertion of this article is that fusion breeding of conventional nuclear fuel is a likely way out of fusion’s current and future difficulties. Fusion breeding substantially reduces the requirements on the fusion reactor. It significantly reduces the necessary Q (fusion power divided by input power), wall loading, and availability fraction. The capital cost of a reactor, estimated based on ITER’s capital cost, is affordable for fusion breeding, but definitely is not for pure fusion. It is likely that fusion breeding can produce fuel at a reasonable cost by mid century. The entire fusion and fission infrastructure would be sustainable, economical, environmentally sound, and have little or no proliferation risk. This article’s mission then, is to hopefully convince a much larger portion of the fusion establishment to make this case. At the very least it hopes to broaden the discussion in the fusion community from where we are now, where one prestigious review committee after another insists that every existing project is absolutely vital, nothing can be changed; except give us more $$$. The inevitable result of this process is that one fusion project after another gets knocked off.

The choice of fusion breeding versus pure fusion does not mean an immediate departure from the current course, but perhaps a 30 degree course correction. Many, but not all initial tasks are common to both options. However even with a 30 degree correction, after some distance traveled, the two paths diverge and are quite far apart. What is needed immediately then is a change in psychology in the fusion effort, that is a realization that breeding is a different, perfectly acceptable, and perhaps even a better option for fusion; and certainly one that is more achievable.

There is a natural symbiosis between fission and fusion. Fusion is neutron rich and energy poor; fission is energy rich and neutron poor. A fusion reaction, which produces about 20 MeV, creates 2–3 neutrons after neutron multiplication. The fission reaction produces the same 2–3 neutrons, but 200 MeV. Thus, for equivalent output power, fusion generates ten times as many neutrons as fission. A fusion reactor can therefore be a very prolific fuel producer for fission reactors, which would be the primary energy producers.
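The factor of ten follows directly from the numbers just quoted. The short sketch below only restates that arithmetic; the choice of 2.5 neutrons per reaction (the midpoint of the 2–3 range) and all variable names are assumptions made for illustration.

```python
# Energies per reaction are the round numbers quoted in the text.
FUSION_MEV_PER_REACTION = 20.0
FISSION_MEV_PER_REACTION = 200.0
# Assumption: take the midpoint of the quoted 2-3 neutrons for both reactions.
NEUTRONS_PER_REACTION = 2.5


def neutrons_per_mev(neutrons, energy_mev):
    """Neutrons released per MeV of energy produced."""
    return neutrons / energy_mev


fusion_rate = neutrons_per_mev(NEUTRONS_PER_REACTION, FUSION_MEV_PER_REACTION)
fission_rate = neutrons_per_mev(NEUTRONS_PER_REACTION, FISSION_MEV_PER_REACTION)

# At equal output power, the neutron yield ratio is the ratio of these rates.
neutron_ratio = fusion_rate / fission_rate
print(f"fusion / fission neutrons at equal power: {neutron_ratio:.1f}")
```

Because the same neutron count is assumed for both reactions, the ratio depends only on the two energies and does not change if 2 or 3 neutrons are used instead of 2.5.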

Fusion proponents, for the most part, see fusion as an inherently safe energy source with minimal issues of hazardous waste and an inexhaustible fuel supply. They would prefer not to tie their fortunes to fission, which might not even want them, and which they see as having issues of safety, proliferation, and long-term radioactive waste. But they should consider realities. Fusion breeding is at least an order of magnitude easier to pull off than pure fusion power. It should be possible, whereas commercial application of pure fusion may turn out not to be. Even in the best of circumstances, commercial fusion breeding should be available quite a few decades earlier than commercial pure fusion. Thus it could serve as an intermediate objective, of genuine economic value, on the path to pure fusion.

With the recent disaster at Fukushima, and the sudden advent of an era of inexpensive natural gas from hydraulic fracturing (fracking), this hardly seems to be the optimum time to argue for an energy source that is largely nuclear. However, this article takes a longer view.

An “inconvenient truth” is that the world may well have a climate problem by mid century, forcing it to move away from carbon-based fossil fuels even if they are not on a rapid path to depletion, which they may well be. But another “inconvenient truth” is that advanced economies require energy, and lots of it. And a third “inconvenient truth” is that the world is very unequal today, but the less-developed nations are pushing very hard for economic equality. It will be apparent by mid-century that the world has a very big long-term energy problem. Today 20 % or so of the world’s population uses the lion’s share of the 14 terawatts (TW) of power the world generates. By midcentury this will no longer be acceptable; everybody will demand the better life style abundant power makes possible. In a much cited paper, Hoffert et al. [1] argue that the world will need an additional 10–30 TW of carbon-free energy by mid-century.

And where will the world get this carbon-free energy? This author is convinced [2] that solar energy (solar thermal, solar photovoltaic, wind and biofuel) will contribute only a small fraction of this energy. As an example consider wind. First of all, much of the information we read is misleading. This author has seen mostly the ‘nameplate power’ quoted. This is the power the windmill or wind farm produces when the wind blows at exactly the right speed and direction. The average power, or the actual power, is considerably less. According to the Wikipedia article on wind power (http://en.wikipedia.org/wiki/Wind_power), the actual wind power produced in 2011 was about 50 gigawatts (GW), about 0.36 % of the total world power of 14 TW. In other words, after more than 20 years of heavily subsidized development, if one takes the percentage of world power produced by wind, and rounds it off to the nearest whole number, that percentage is zero.

In the absence of carbon free power sources, right now the world gets its energy from coal. Coal is overtaking oil as the largest source of power, and its use is rapidly accelerating [3]. Rapidly developing countries like China, Indonesia, Turkey… are greatly increasing their use of coal. But if fossil fuel use, and especially coal, has to be reduced because of fears of climate change and/or depletion of a finite resource; and if wind, solar and biofuel cannot do it; what else is there? If not nuclear, then what?

Fission and fusion are the only carbon-free technologies that could power a world with 10 billion people. Fusion has many potential advantages as an energy source, but even its most optimistic proponents recognize that commercial fusion energy will not be ready any time near mid century. For nearly half a century, fusion’s advocates have been planning for a DEMO (a precursor to a commercial reactor) 35 years from t = 0, and who knows when t = 0 will be? [4].

Fission, on the other hand, is a technology that works well now, but the supply of fissile ²³⁵U is limited. Fission can be a long-term, sustainable solution only if breeders are used to convert ²³⁸U or thorium into fissile fuel. There are two options for breeders: fast-neutron fission reactors or fusion reactors. Fission breeding has a head start, but fusion breeding, advocated by this author for 15 years now, is by far the more attractive option [4–11]. Fusion breeding is a much easier technology than pure fusion as an energy source; it could be commercialized by mid-century. Furthermore, fusion breeding could naturally serve as an economical bridge to pure fusion in the much more distant future.

Then it is also worth noting that both Andrei Sakharov [12] and Hans Bethe [13] advocated fusion breeding instead of pure fusion. These are two physicists we ignore at our peril.

We will see that for pure fusion to become economically feasible, either ITER or NIF would have to be greatly improved. Yet all experience shows that once systems get to be that size, progress comes with glacial lethargy, if it comes at all. For instance it was 20 years from when ITER was proposed until it was approved, then another 15 years until first plasma, and another 7 (if all goes well) until DT experiments can even begin. This 42 year period, just to get to the starting gate, is longer than the total career of a typical physicist.

Furthermore, as we will also shortly see, pure fusion may well be out of reach for tokamaks. However an ITER or NIF scale system is more than adequate for fusion breeding. We will see that the Q that ITER or NIF hopes to achieve is not nearly sufficient for pure fusion, but is definitely fine for breeding. The first wall of a pure fusion reactor would have to be able to withstand a neutron flux of at least 4 MW/m²; but as we will see, a fusion breeder is fine with ITER scale wall loadings of one, or perhaps even half a megawatt per square meter. If the fusion reactor principally breeds fuel, and is only secondarily a power source, its availability fraction is not such a critical consideration either.

One obvious question then is why develop fusion breeding, decades in the future; when we are not developing fission breeding today? One answer is that there is no immediate need for either fission or fusion breeding. For the next few decades, fissile fuel is readily available. However the need for one or both will be pressing by mid century [1]. A key advantage of fusion breeding, is that it is about an order of magnitude more prolific than fission breeding. That is one fusion breeder can fuel about five light water reactors (LWR’s) of equal power, whereas it takes two fission breeders, for instance two integral fast reactors (IFR) at maximum breeding rate to fuel a single LWR of equal power [14–18], (http://en.wikipedia.org/wiki/Integral_fast_reactor). Clearly fusion breeding fits in well with today’s (and very likely tomorrow’s) nuclear infrastructure; fission breeding does not. In other words, in a fission breeder economy, at least 2/3 of the reactors would have to be breeders and this will represent a staggering cost. In a fusion breeder economy, all of the LWR’s, here and in the future, would remain in place.
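The reactor-mix figures in this paragraph can be checked with a few lines of arithmetic. The sketch below is only a bookkeeping illustration using the ratios quoted above (one fusion breeder per five LWRs, two fission breeders per LWR, all of equal power); the function and variable names are invented for this example.

```python
from fractions import Fraction


def breeder_fraction(breeders, lwrs):
    """Fraction of all reactors in the fleet that must be breeders."""
    return Fraction(breeders, breeders + lwrs)


# Ratios quoted in the text, for reactors of equal power.
fission_breeder_share = breeder_fraction(breeders=2, lwrs=1)  # two IFRs per LWR
fusion_breeder_share = breeder_fraction(breeders=1, lwrs=5)   # one breeder per 5 LWRs

# LWRs fueled per breeder: 5 for fusion, 1/2 for fission.
prolificacy_ratio = Fraction(5, 1) / Fraction(1, 2)

print(f"fission breeder economy: {fission_breeder_share} of reactors are breeders")
print(f"fusion breeder economy:  {fusion_breeder_share} of reactors are breeders")
print(f"fusion breeder fuels {prolificacy_ratio}x as many LWRs per breeder")
```

The 2/3 breeder share reproduces the "at least 2/3" figure in the text, and the factor of ten in LWRs fueled per breeder is the "order of magnitude" advantage claimed for fusion breeding.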

Thus fusion breeding does not have to compete with LWR’s, a competition likely unwinnable if fuel supply for LWR’s were no consideration. It only has to compete with fission breeders, a competition it might well win. But more realistically, in a competition between fission and fusion breeding, there will likely be room in the midcentury economy for both, at least if fusion breeding makes a real effort to compete.

A fusion breeder economy does envision a vital role for fast neutron fission reactors. A single IFR (which in fact can run as either a breeder or burner) can burn any actinide. If run as a perfectly efficient burner, it can burn the actinide wastes of about 5 LWR’s (an LWR each year releases about 1/5 of the fuel it starts with in the form of actinide by-products) [19]. Thus we can envision a sustainable, mid century, fusion breeding based energy architecture, where a single fusion breeder fuels 5 LWR’s, the nuclear reactor of choice up to now, and a single IFR burns up the actinide waste of these 5 LWR’s. The only waste products would be those fission decay products (cesium 137, strontium 90, etc.) which have no commercial value, but which have half lives of about 30 years. These could be stored for 300–600 years, a time human society can reasonably plan for, not the half million or so years it would take for a long-lived actinide such as ²³⁹Pu (half life 24,000 years) to decay away.

There is some dispute about the amount of uranium fuel available. Hoffert et al. [1], measuring it in terawatt years, estimate that 60–300 TW years of uranium fuel is available. They note that if the lowest estimate is correct, and 10 TW are needed (right now the world uses about 300 GWe, or about 1 TWth of nuclear power), there is only enough fuel for 6 years, hardly enough to justify creating a large, multi terawatt infrastructure. However other sources estimate that the available fuel is much greater [20]. This paper certainly cannot sort out these conflicting estimates. But one thing is indisputable. No matter how much nuclear fuel fission advocates think they have, they must admit that their supply of fissile nuclear fuel is <1 % of the uranium resource, and 0 % of the thorium. Fusion breeding makes about 50–100 % of each available. This author does not believe the world is so well endowed with energy resources that we can afford to discard more than 99 % of them. To get an idea of the size of this resource, thermal nuclear reactors have been delivering about 300 GWe for about 40 years, about 12 TWe years in all, while burning <1 % of the uranium mined. This means that in depleted uranium alone, there is sufficient fuel for 3 TWe for 200–400 years! But as we will see, a much better option is to use thorium as a fuel, and there is three times as much thorium as uranium. Nuclear fuel, for all practical purposes, is inexhaustible in the same sense as wind or solar [21].
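The two estimates in this paragraph (6 years of supply at the low end, and 200–400 years from depleted uranium) can be reproduced as follows. This is a rough sketch under a stated assumption: the text says only that less than 1 % of the uranium is used today, and the code takes that figure to be exactly 1 %. All names are illustrative.

```python
def years_of_supply(resource_tw_years, demand_tw):
    """How long a resource lasts at a constant demand."""
    return resource_tw_years / demand_tw


# Lowest resource estimate quoted from Hoffert et al., at a 10 TW demand.
low_estimate_years = years_of_supply(60.0, 10.0)

# Energy already extracted: about 300 GWe (0.3 TWe) for about 40 years.
burned_twe_years = 0.3 * 40.0

# Assumption: today's reactors use exactly 1 % of the uranium ("<1 %" in the text).
FRACTION_USED_TODAY = 0.01


def depleted_uranium_years(usable_fraction, demand_twe=3.0):
    """Years of supply if breeding makes `usable_fraction` of that uranium usable."""
    resource = burned_twe_years * usable_fraction / FRACTION_USED_TODAY
    return years_of_supply(resource, demand_twe)


low_years = depleted_uranium_years(0.5)   # 50 % of the uranium made available
high_years = depleted_uranium_years(1.0)  # 100 % made available
print(f"low uranium estimate lasts {low_estimate_years:.0f} years at 10 TW")
print(f"depleted uranium lasts {low_years:.0f}-{high_years:.0f} years at 3 TWe")
```

If the fraction used today is smaller than 1 %, the depleted-uranium resource is correspondingly larger, so the 200–400 year range is on the conservative side of this estimate.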

Hence a conservative approach is to assume the worst as regards nuclear fuel supply and support a relatively low cost, economical way of enhancing it by about two orders of magnitude, i.e. fusion breeding. Supporting fusion breeding will not produce appreciable fuel until mid century at best; and by then there could well be a crying need for it.

To reiterate, this article makes the case that (1) the optimum strategy is not pure fusion, i.e. using the neutron’s 14 MeV kinetic energy to boil water; but fusion breeding, i.e. using what, for want of a better term, we call the neutron’s potential energy, to breed 10 times more fuel, and its kinetic energy to boil water. This is especially true if one adopts the tokamak approach, because conservative design rules, which we will discuss shortly, and which have limited tokamak operation for half a century, appear to forbid pure fusion, but allow fusion breeding. Of all the hybrid fusion options, the most sensible is breeding. Recently a summary of many different types of fusion fission hybrids has appeared [20]. While not explicitly concluding that breeding is the best option, it does seem to point toward it. Our own book chapter summarizing hybrids does explicitly recommend breeding among the options [11]. A valuable resource for anyone interested in fusion breeding or other forms of hybrid fusion is a web site set up by Ralph Moir (www.ralphmoir.com). (2) The fusion configurations should continue along the paths being blazed by ITER and NIF, and not divert scarce resources to other approaches. (3) The mid century energy infrastructure will necessarily have a large nuclear component. Fission now supplies about 300 GWe, or about 13 % of the 2.5 TW the world generates. There are about 400 fission power plants now, and about 70 more are in various stages of construction or planning. There is no reason to think that nuclear power generation cannot be considerably increased. By mid century, fusion breeders could be supplying their fuel and fast neutron reactors could be burning the actinide wastes.

Recently Ralph Moir, along with this author, contributed a chapter on hybrid fusion to a textbook [11]. That chapter concentrated on the nuclear aspects, while not ignoring the plasma. By contrast, this article concentrates mostly on the plasma aspects, while not ignoring the nuclear, and may be regarded as a companion to the book chapter.

Now let’s get to fusion’s difficulties. Consider first ITER [22]. When it was approved in 2005, the construction cost was estimated at about 5 billion euros, with a start date of 2016. Within 4 years, the cost had tripled to 16 billion euros, and the start date had slipped to 2019 [23]. Some estimates have put the cost of ITER even higher. Here is Senator Dianne Feinstein [24], chair of the Senate subcommittee on Energy and Water Development, on the rapidly increasing cost of ITER:

“We provide no funding for ITER until the department (of energy) provides this committee with a baseline cost, schedule and scope.”

Now consider NIF, another multibillion dollar machine. It was supposed to achieve ignition in FY 2012. As of this writing (fall 2013 and winter 2013–2014) it has not only failed, but has failed in spectacular fashion. While it now routinely generates laser shots of well over a megajoule, the best gains achieved are still <10⁻², more than three orders of magnitude below original predictions. The Lawrence Livermore Laboratory has recently published a paper on where NIF is now, pointing out that on their best shot, they achieved about 10¹⁵ neutrons, about 3 kJ [25]. Recent efforts have done somewhat better [26].

Here is the House Appropriations Committee [27] on the failure of NIF to achieve ignition:

“As the first ignition campaign comes to a close in fiscal year 2012, it is a distinct possibility that the NNSA will not achieve ignition during these initial experiments. While achieving ignition was never scientifically assured, the considerable costs will not have been warranted if the only role of the National Ignition Facility (NIF) is that of an expensive platform for routine high energy density physics experiments.”

Thus fusion’s two gigantic flagships, ITER and NIF, appear to be taking in water. However NIF is, and ITER unquestionably will be, a unique, priceless, world class resource, and this author is confident that both will ultimately make very major contributions to fusion. But fusion has long term problems, which are even more serious. While these short and long term problems are not obviously related, a more credible long term strategy will help in the short term as well. The fact that fusion breeding fits in very well with today’s and tomorrow’s likely nuclear infrastructure, so that it could be introduced gradually and seamlessly, enhances the case further.

To illustrate ITER’s long term problems, consider its goal as stated on the ITER web site (www.iter.org):

“The Q in the formula on the right symbolizes the ratio of fusion power to input power. Q ≥ 10 represents the scientific goal of the ITER project: to deliver ten times the power it consumes. From 50 MW (megawatts) of input power, the ITER machine is designed to produce 500 MW of fusion power—the first of all fusion experiments to produce net energy.”

So how close would we be to a reactor? The 500 MW of fusion thermal power typically has an efficiency of conversion to electric power of about 1/3, so it generates about 170 MWe. However the drivers (beams or radiation) need to deliver about 50 MW. But accelerators and radiation sources are not 100 % efficient either. Again, one third is a more reasonable estimate, so the drivers would need 150 MW of the electric power produced, leaving all of 20 MWe for the grid. Clearly ITER would have to be greatly improved before it could begin to be regarded as an economical power source.
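The power balance above is simple enough to write down explicitly. The sketch below is a minimal illustration using the efficiencies assumed in the text (one third for thermal-to-electric conversion and one third for the drivers); the function name and parameters are invented for this example and do not come from any ITER document.

```python
def net_electric_mw(fusion_thermal_mw, heating_mw,
                    thermal_to_electric=1 / 3, driver_efficiency=1 / 3):
    """Electric power left for the grid after feeding the plasma heating systems.

    fusion_thermal_mw: fusion power produced, in MW thermal
    heating_mw: power the drivers must deliver to the plasma, in MW
    """
    gross_electric = fusion_thermal_mw * thermal_to_electric
    driver_draw = heating_mw / driver_efficiency  # wall-plug power for the drivers
    return gross_electric - driver_draw


# ITER design point: 500 MW of fusion power from 50 MW of heating (Q = 10).
iter_net = net_electric_mw(500.0, 50.0)

# Same machine if external heating could be halved to 25 MW.
iter_net_half_heating = net_electric_mw(500.0, 25.0)

print(f"Q = 10, 50 MW heating: {iter_net:.0f} MWe to the grid")
print(f"25 MW heating:         {iter_net_half_heating:.0f} MWe to the grid")
```

Without rounding the gross output up to 170 MWe, the margin is about 17 MWe rather than 20 MWe; halving the heating power raises it to roughly 90 MWe. Either way the conclusion of the paragraph is unchanged.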

But what about the fact that ITER could be a burning plasma, so that much less input power is needed? Again, here is the ITER web site:

“The ITER Tokamak will rely on three sources of external heating that work in concert to provide the input heating power of 50 MW required to bring the plasma to the temperature necessary for fusion. These are neutral beam injection and two sources of high-frequency electromagnetic waves.

Ultimately, researchers hope to achieve a “burning plasma”—one in which the energy of the helium nuclei produced by the fusion reaction is enough to maintain the temperature of the plasma. The external heating can then be strongly reduced or switched off altogether. A burning plasma in which at least 50 percent of the energy needed to drive the fusion reaction is generated internally is an essential step to reaching the goal of fusion power generation.”

Hence a burning plasma does not seem to be anything like one of ITER’s initial goals, but taking their figure of a reduction of input power by 50 %, this means that only 25 MW of external power is needed, leaving perhaps 100 MWe for the grid; small power for a $20B facility. In this paper the original Large ITER is also considered [28]. Roughly, it produces about 3 times the power, still with Q = 10. At the time the switch was made, the cost of Large ITER was estimated to be about a factor of 2 larger than the cost of ITER. However the cost is very much a moving target; who knows what Large ITER would cost, were it estimated today. Here we still do consider mostly a Large ITER based breeder.

In any case, the performance of ITER would have to be upgraded by a very great amount before it could be considered as a potential economical power source. But this could be particularly difficult for ITER, even if it requires no input power. The reason is that tokamak performance has always been constrained by what this author has called ‘conservative design rules’, to be discussed in the next section. These rules indicate that a pure fusion tokamak could be extremely difficult, whereas a tokamak breeder can operate well within these constraints.

Now let’s consider NIF, another multibillion dollar machine. Let’s stipulate a gain of 100 from a megajoule laser pulse (current best gains 10⁻³–10⁻²), a laser wall plug efficiency of 3 % (currently <1 %), and a pulse rate of 15 Hz (currently about 1–2 shots per day), generating the same 1.5 GWth, or 500 MWe, just as one would estimate for Large ITER. However each laser pulse needs about 30 MJ of electricity to drive it, or about 450 MW at 15 Hz, leaving all of 50 megawatts for the grid.
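The same bookkeeping can be applied to the stipulated laser fusion plant. The sketch below is an illustration only: the one third thermal-to-electric efficiency is carried over from the ITER estimate as an assumption, and the function and variable names are invented for this example.

```python
def laser_plant_balance(laser_mj, gain, rep_rate_hz, wall_plug_efficiency,
                        thermal_to_electric=1 / 3):
    """Return (thermal MW, gross MWe, driver MWe, net MWe) for a pulsed laser plant."""
    thermal_mw = laser_mj * gain * rep_rate_hz           # MJ per second is MW
    gross_mwe = thermal_mw * thermal_to_electric
    electric_mj_per_pulse = laser_mj / wall_plug_efficiency
    driver_mwe = electric_mj_per_pulse * rep_rate_hz
    return thermal_mw, gross_mwe, driver_mwe, gross_mwe - driver_mwe


# Stipulated case from the text: 1 MJ laser, gain 100, 15 Hz.
# An efficiency of 1/30 corresponds to the rounded 30 MJ of electricity per pulse.
thermal, gross, driver_rounded, net_rounded = laser_plant_balance(1.0, 100.0, 15.0, 1 / 30)

# Using the quoted 3 % directly gives about 33 MJ per pulse instead.
_, _, driver_exact, net_exact = laser_plant_balance(1.0, 100.0, 15.0, 0.03)

print(f"thermal {thermal:.0f} MW, gross {gross:.0f} MWe")
print(f"30 MJ per pulse: driver {driver_rounded:.0f} MWe, net {net_rounded:.0f} MWe")
print(f"3 % efficiency:  driver {driver_exact:.0f} MWe, net {net_exact:.0f} MWe")
```

The 50 MW margin quoted in the text depends on rounding the electricity per pulse down to 30 MJ. With the 3 % efficiency taken literally, the laser consumes essentially all of the 500 MWe produced, which only strengthens the point being made.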

Hence even stipulating the maximum success for either ITER or NIF, we are still very, very far from economical fusion. This is the serious long term problem that fusion has. After all, if neither ITER nor NIF brings us very close to fusion, what are our sponsors paying for; another half century, or century of effort starting with their success? The great advantage of fusion breeding is that ITER and NIF sized devices could be ends in themselves rather than stepping stones to who knows what DEMO, built who knows how many decades later. Using breeding, the author believes fusion could very likely make a major impact on the energy systems in our grandchildren’s prime (mine are 9, 12, and 14); without it, it may be in their grandchildren’s.

Yet what are the alternatives to tokamaks and lasers? Each of these technologies was developed through a four to five decade international effort, costing billions to get to where it is today. Many other concepts have their proponents who assure us that they can achieve fusion very quickly if only we fund them at the required level. For instance Robert Burke claims that we could get there very quickly via heavy ion beam fusion [29]. In the past, the proponents of these concepts have sought government funding, but recently they have been seeking venture capital funding. One such enterprise, Fusion Power Corp. of Sacramento, CA, promises commercial power in 10 years [30] via heavy ion beam inertial fusion. But the high-current ion accelerators and/or storage rings required for heavy ion fusion are at this point merely concepts that have been studied theoretically for over 30 years at Lawrence Berkeley National Laboratory (LBNL) and other labs, but never built. Their feasibility has not been demonstrated, and they would cost billions to build. Is that kind of money available in the private sector for a speculative concept? If so, we can only wish them the best of luck. General Fusion Company in Vancouver, Canada, funded by Jeff Bezos of Amazon at $30 M, puts even that time scale to shame. Using magnetized target fusion [31], a concept that was studied over the past 30 years at the Naval Research Laboratory (NRL) and the Los Alamos National Laboratory (LANL), they promise break-even by 2014 and a commercial reactor by 2020.

Maybe; and of course maybe a genius will invent a commercial fusion reactor in his or her garage.

But more realistically, any other concept, starting from where it is now, will probably take the same time and effort to get to where tokamaks and lasers are today. And this of course assumes that these other concepts are even as good, despite the fact that they have already been rejected in favor of tokamaks and lasers. Also, without getting very far into the politics, is there really any chance that Congress would appropriate many billions more for, say, a stellarator if ITER fails, or a heavy ion accelerator if NIF continues to fail? What with fracking and cheap gas now, do our sponsors really have the stomach for this? Who knows, in the dim distant future, stellarators or heavy ion accelerators may prove to be superior to tokamaks or lasers. But realistically, the only way we will learn this is if they are follow on projects to successes at ITER and NIF.

To this author’s mind the best hope for fusion is to get an ITER or NIF like system to be an economical power producer. The best way to achieve this is to use fusion neutrons to breed fuel for conventional nuclear reactors, likely, but not necessarily light water reactors (LWR’s). The more than order of magnitude increase in Q in the breeding blanket could provide just the boost needed to accomplish this. Let us be clear here that, in speaking of an order of magnitude increase in Q, we include the power produced directly by the fusion reactor plus the power of the fuel, which is produced by the fusion reactor, but is burned elsewhere.

But of course this results in a difficult dilemma. Fission people mostly (but not unanimously) think they have enough fuel, and are fighting for their own survival on other fronts. For instance Germany plans to mothball its 17 nuclear reactors, and hence is going through something of an energy crisis now. Also fusion people do not want to get their hands dirty. This paper attempts to take on and answer these arguments.

Section II reviews magnetic fusion. It concentrates on the tokamak in some detail. It discusses other options in much less detail, and mostly makes the case that none of these are nearly ready for prime time. Section III does the same for inertial fusion, concentrating mostly on lasers, and making the case that these are the only reasonable option at this point. Section IV reviews the nuclear issues, concentrating on fusion breeding and gives a possible road map for fusion to produce large scale power by mid century or shortly thereafter. Section V reviews the ‘energy park’, a sustainable approach to producing terawatts of economical, environmentally viable power for a world with 10 billion people. A fusion breeder produces fuel for about 5 thermal reactors, likely 5 LWR’s, of equal power, and a fast neutron reactor, also of equal power, which burns the actinide wastes. Only thorium goes in, only electricity and/or manufactured liquid fuel go out. Section VI draws conclusions.

I will close this introduction with an anecdote. A few years ago I was at an international fusion workshop. The head of the fusion effort of a small European country told all he and his group were doing for ITER. In one of my own less than stellar moments, I jumped on him, saying that he was only getting lip service, and the larger European countries did not value his contribution. He answered that they are indeed a small, but important part of ITER. Then when I got up and gave my pitch for fusion breeding, he jumped on me and said only pure fusion made sense and breeding or any other nuclear option only was a waste of effort.

By serendipity, he and I rode together to the airport. First I apologized for my remarks, and admitted that it did indeed look like his group was doing important work. Then he went on to say that he actually understood that fusion breeding or some other hybrid approach was ultimately the only hope for ITER. I asked him why he landed on me like a ton of bricks. He said that I did not understand: the German Greens are very powerful, and with any hint of nuclear, they would go on the attack against ITER, and likely derail it.

This article has no concern for the sensitivities of the German Greens.

Magnetic Fusion

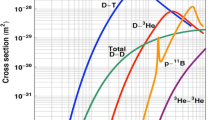

There are many different possible approaches to magnetic fusion. How does one compare them? There are many different potential yardsticks, but we will choose two. The first is the triple fusion product nTτ, where n is the density in m⁻³, T is the temperature in keV, and τ is the energy confinement time in seconds. At fusion temperatures, the DT fusion reaction rate 〈σv〉 is roughly proportional to the ion temperature squared. For instance at 10 keV, 〈σv〉 = 1.1 × 10⁻¹⁶ cm³/s; at 20 keV, 4.2 × 10⁻¹⁶ cm³/s. Since the fusion power per unit volume is n_D n_T W〈σv〉, where n_D and n_T are the deuterium and tritium densities and W is the fusion energy per reaction (14 MeV for the neutron plus 3.5 MeV for the alpha particle), this power density is roughly proportional to n²T². However the input power density is simply nT/τ, so the ratio of fusion power to input power, the Q of the device, is roughly proportional to nTτ.
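The scaling argument can be checked numerically from the two reaction rates quoted above. The sketch below is illustrative only: it assumes a 50/50 DT mix (n_D = n_T = n/2), uses the simplified input power density nT/τ from the text, and drops constant factors such as W, so only ratios of the returned Q values are meaningful. All names are invented for this example.

```python
import math

# DT reactivity values quoted in the text, in cm^3/s, keyed by temperature in keV.
SIGMA_V = {10.0: 1.1e-16, 20.0: 4.2e-16}


def local_exponent(t1_kev, t2_kev, table=SIGMA_V):
    """Power-law exponent p such that sigma_v ~ T**p between two temperatures."""
    return math.log(table[t2_kev] / table[t1_kev]) / math.log(t2_kev / t1_kev)


def relative_q(n, t_kev, tau):
    """Q up to a constant factor, assuming sigma_v ~ T**2 and n_D = n_T = n/2."""
    sigma_v = SIGMA_V[10.0] * (t_kev / 10.0) ** 2
    fusion_power_density = (n / 2) * (n / 2) * sigma_v  # W omitted (constant)
    input_power_density = n * t_kev / tau
    return fusion_power_density / input_power_density


exponent = local_exponent(10.0, 20.0)
print(f"sigma_v scales roughly as T^{exponent:.2f} between 10 and 20 keV")

base = relative_q(1e20, 10.0, 1.0)
print(f"doubling n:   Q x {relative_q(2e20, 10.0, 1.0) / base:.2f}")
print(f"doubling T:   Q x {relative_q(1e20, 20.0, 1.0) / base:.2f}")
print(f"doubling tau: Q x {relative_q(1e20, 10.0, 2.0) / base:.2f}")
```

The exponent between the two quoted points comes out a little under 2, which is why the text describes the T² dependence, and hence Q ∝ nTτ, as rough rather than exact.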

Since the whole idea of magnetic fusion is to contain the energy of the plasma, the second obvious measure of a magnetic fusion device is how much energy E, in joules, the device confines. With these two measures of performance, we will compare the various magnetic confinement configurations.

We first discuss the tokamak, which is the main part of this section. Then we briefly discuss other confinement schemes. Not only is the tokamak way ahead of the other devices, many of these others have what appear to this author to be fatal flaws. These flaws are, in many cases, quite simple in concept and indeed appear to be irrefutable. The very simplicity of these flaws, combined with the many orders of magnitude in performance measure that separate these devices from tokamaks, argue very powerfully in favor of tokamaks. Alternative systems undoubtedly can provide a great deal of fundamental information on plasma confinement, but is this the goal? Is it worth the additional billions to achieve it? To this author, the goal is to achieve fusion breeding or pure fusion in the shortest possible time, and the way to get there is to concentrate on the device, which is, by many orders of magnitude, the closest to the goal.

This is not to say tokamaks are a slam dunk. There are serious obstacles that must be overcome before tokamaks can be viable fusion breeders, or pure fusion devices. However they have many fewer obstacles than any other configuration.

Tokamaks

History and Development

The worldwide magnetic fusion effort has concentrated on the tokamak approach for the last 40 years. Very briefly, a tokamak is a toroidal plasma carrying a toroidal current, confined in part by a toroidal magnetic field. In various modern high performance tokamaks around the world, the major radius varies from less than a meter to more than 3 m, the minor radius from 0.2 to more than 1 m and the toroidal field is typically between about 2.5 and 10 T (with 5 T being an estimate if one wishes to use a single parameter). Electron densities are in the range of 10¹⁹–10²⁰/m³, electron and ion temperatures between 1 and 15 keV, and plasma currents are typically in the megaampere range. Generally today’s tokamaks use copper toroidal field coils. However while satisfactory for pulsed experiments, these would be enormous power drains in continuous or high duty cycle operation. Hence there is wide agreement that superconducting coils would have to be used in any reactor. The plasma is heated by Ohmic heating, but this is not nearly enough; supplementary heating is necessary. Usually neutral beams are used for heating, but various types of electromagnetic waves are also being considered.

There have been three large tokamak experiments using 40 MW or more of neutral beam power: JT-60 in Japan, JET in England, and TFTR in the United States. TFTR, however, was decommissioned in 1998. Only the latter two have run with DT plasmas. In a brief digression, the author expresses the opinion that it was a great mistake to decommission TFTR. The logic might have been that it had achieved everything it could, but that logic did not convince the sponsors of JET and JT-60, and those tokamaks have gone on another 15 years and have achieved a great deal. The main effect of our decision is that the United States can no longer play in the big leagues in tokamak research. However the author is convinced that there is enough residual tokamak knowledge here that we can rejoin at any time. This article will propose that we do just that.

The tokamak effort has been incredibly successful. A thorough review of tokamak performance, up through the late 1990s has recently been given by the author [5]. In Fig. 1a is shown a very rough plot of the triple fusion product as a function of year (much more detail is given in Ref. [5] ). In Fig. 1b is shown the number of transistors on a chip as a function of year (Moore’s law) [32]. The latter has been called a “25 year record of innovation unmatched in history”. But the slopes of the two graphs are about the same. To this author, the period from about 1970 to about 2000 can be regarded as the golden age of tokamaks.

However there is one important difference between Fig. 1a and Fig. 1b. At every point along the curve in Fig. 1b, the semiconductor industry was able to produce something useful and profitable. Furthermore, after the late 1990s that curve kept advancing, while Fig. 1a leveled off. The tokamak program, despite its success, is still several orders of magnitude away from producing anything economically viable, and the cost of the follow on projects gets very high. For tokamaks, the period of rapid advance shown in Fig. 1a corresponded to the period of constructing larger and larger tokamaks. No large tokamaks have been built since, so the performance, as measured by the triple fusion product, is about where it was in 1997. What has improved are other figures of merit, principally the discharge time, going from say a second to tens of seconds (in JT-60) [33–38].

Both TFTR and JET have operated with DT plasmas, so they have produced 14 MeV neutrons in appreciable quantities. In a particular shot, JET has generated about 10¹⁹ neutrons in a 1 s pulse [39, 40], that is, about 20 MJ of neutron energy, giving a Q of about 0.5. JT-60, in deuterium plasma, has produced equivalent Q’s higher than unity [33, 34] by using what they called a W shaped divertor. However, for one reason or another, these plasmas all terminated prematurely (after about 1 s). In plasmas which persist as long as the beam, the Q is much smaller, typically about 0.2. In Fig. 2, redrawn from Ref. [39], are shown neutron rates from two separate discharges in JET, one which terminates and one which persists. It would seem that one very important milestone for both JET and JT-60 would be to see if they can achieve a persistent Q ~ 1 discharge.
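The neutron energy quoted for the JET shot can be checked with a few lines of arithmetic. The sketch below assumes the standard DT values of 14.1 MeV per neutron and 17.6 MeV per reaction; the function names are illustrative only and do not come from the cited references.

```python
# Energy carried by DT fusion neutrons, as a rough check of the JET figure.
# Assumed constants: 14.1 MeV per neutron, 17.6 MeV per DT reaction.
MEV_TO_J = 1.602176634e-13
NEUTRON_ENERGY_MEV = 14.1
REACTION_ENERGY_MEV = 17.6


def neutron_energy_mj(n_neutrons):
    """Total kinetic energy of n_neutrons DT neutrons, in MJ."""
    return n_neutrons * NEUTRON_ENERGY_MEV * MEV_TO_J / 1e6


def fusion_energy_mj(n_neutrons):
    """Total fusion energy (neutron plus alpha) for the same number of reactions, in MJ."""
    return n_neutrons * REACTION_ENERGY_MEV * MEV_TO_J / 1e6


if __name__ == "__main__":
    n = 1e19  # neutrons in the 1 s pulse quoted in the text
    print(f"neutron energy: {neutron_energy_mj(n):.1f} MJ")
    print(f"total fusion energy: {fusion_energy_mj(n):.1f} MJ")
```

The neutron energy comes out a little above 20 MJ, in line with the rounded figure in the text.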

The JT-60 web site specifies that it has achieved a triple fusion product of 1.6 × 10²¹ m⁻³ keV s, and contains plasma energy of about 9 MJ. The web site shows the progress of nTτ as a function of year. It shows a large advance, up to the late 1990s, followed by about 15 years of flat line.

The International Thermonuclear Experimental Reactor (ITER)

Since follow on experiments to TFTR size tokamaks get so large and expensive, the world has made a decision to cooperate on the construction of a single large reactor, ITER. It has had a troubled political and economic history. Its origin was in the 1985 summit meeting between President Ronald Reagan and General Secretary Mikhail Gorbachev. They proposed cooperating on a single large joint tokamak. It was originally called INTOR for international torus. Design work began on it but few decisions were made. In 1999, the project was to be an 8 m major radius machine, in which about 150 MW of neutral beam heating would generate about 1.5 GW of neutron power for a Q of about 10 [28]. The cost then was estimated at about $20 billion, $10B for construction, and then another $10B for operating expenses over a 10 year period. The international partners were the Soviet Union (and subsequently Russia), the United States, the European Community, China, Japan and South Korea.

Subsequently the United States, deciding that the project was too expensive, pulled out. The remaining partners ultimately decided to agree on a smaller, less expensive machine. The new ITER was to be a 6 m major radius machine which would use about 40 MW of beam power to produce about 500 MW of fusion power [22]. The cost was estimated at about $10B, now $5B for construction and $5B for operation over 10 years. The United States rejoined in 2003. However there was now another controversy: where to build it. Both the European Community and Japan put in strong proposals to host it. The United States, Japan and Korea voted for Japan, while Europe, Russia and China voted for Europe (Cadarache, France). With the vote tied, there was a standoff, which lasted for several years. Finally in 2005, the partners decided to construct ITER in France. By this time, India joined the consortium, bringing the total number of partners to 7. Shown in Fig. 3 is a schematic of ITER taken from its web site (www.iter.org).

However with agreement on the site, ITER’s problems were far from over. The construction cost was greatly underestimated. The original 5B euro estimate in 2006 more than tripled to over 16B euros in 2010, and the completion date slipped from 2016 to 2019 [23]. This undoubtedly motivated Senator Dianne Feinstein’s comment cited in the introduction. However at least since 2010, according to the ITER web site, the completion date has not slipped any further.

Whether construction of ITER was a wise decision or not, ITER is what we have got. The only realistic option for magnetic fusion is to get it to work as well as possible. On the ITER web site, there is talk of a DEMO as a follow on project. This would potentially generate electricity for the grid. However as we will show in the next section, in order to do so, this DEMO must get over a series of obstacles, which have constrained tokamak operation for half a century. It is extremely important that while these obstacles prevent pure fusion, they do not prevent fusion breeding.

Conservative Design Rules (CDR’s) for Tokamaks

The Rules

Tokamak operation is subject to four very simple parameter constraints, which are well-grounded in theory, extensively confirmed by experiment, and generally accepted by the fusion physics community. Although these constraints have been known for decades, there has not been much discussion of their impact collectively on fusion reactor operation. We have coined the term “Conservative Design Rules” (CDRs) to describe this set of constraints, and in Ref. [8] we discussed in detail the limits that these rules, taken collectively, impose on fusion power output. We emphasize that the Conservative Design Rules are not controversial. The paper [8] has been in the literature 5 years; its conclusions have not been challenged. The author has discussed the CDRs in seminars at MIT, University of Maryland, NRL, and APS headquarters, again without challenge. Thus, there is a heavy burden of explanation and proof on any proponent of pure fusion who assumes reactor performance in excess of the CDRs.

The other thing about conservative design rules is their simplicity. One does not have to know about any of the complexities of tokamaks: fishbones, transport barriers, grassy ELM’s, ITGI’s, density pedestals, etc. In fact the Sam Cooke approach (“Don’t know much about tokamaks…”) suffices. There are surely much more complicated physical effects further limiting tokamak behavior (i.e. the aforementioned), but conservative design rules bound all of these, are very simple, and are well established in the tokamak science base. But they have not been emphasized in the literature, nor have their very important implications for fusion been discussed.

It is important to note that conservative design rules have nothing to do with transport. Good transport cannot improve things, bad transport can only make things worse. In fact even if there is no input power, for instance an ignited tokamak, conservative design rules still place the same limits on the fusion power a tokamak can produce. They almost certainly prevent economical pure fusion.

The conservative design rules were discussed in detail in Ref. [8]. Here we will give a shortened version and leave additional details to the reference.

Any tokamak run as a reactor can in all likelihood withstand existing levels of transport. What it cannot tolerate are many (or even any) major disruptions. Thus in the relevant parameter space, there is a boundary separating the region where a tokamak can operate safely from the region where it may disrupt. A commercial reactor should operate as far from this red zone as possible, thereby motivating the author’s term ‘conservative design rules’. While disruptions are still not yet fully understood, they are almost certainly rooted in MHD (ideal and resistive) effects in the plasma. MHD instabilities are driven by the current and pressure gradient. The first and most important design principle concerns the plasma beta.

To simplify the discussion here, we will assume the plasma has circular cross section in the poloidal plane; more general configurations are discussed in Ref. [8].

Troyon and Gruber [41, 42] achieved a theoretical breakthrough in understanding the pressure limit. They determined that the maximum beta was governed by what they called the maximum normalized beta βN. In terms of βN, the volume averaged plasma beta β was given by

β = βN I/(aB), with β expressed in percent,

where I is the current in Megamps, a is the minor radius in meters, and B is the toroidal field in teslas. Their calculations gave a value for βN, and from this β could be determined. If the plasma had no wall stabilization, they found a maximum stable βN of about 2.5 or a little greater; and with strong wall stabilization, it might be as large as 5.
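As an illustration of how the normalized beta translates into an actual pressure limit, the following sketch evaluates the Troyon scaling in its standard form, β(%) = βN I/(aB). The function name and the sample machine parameters (roughly TFTR sized) are assumptions made for this example, not values taken from Refs. [41, 42].

```python
def troyon_beta_percent(beta_n, current_ma, minor_radius_m, b_tesla):
    """Volume averaged beta in percent from the standard Troyon scaling.

    beta_n          normalized beta (dimensionless)
    current_ma      plasma current in megaamperes
    minor_radius_m  minor radius in meters
    b_tesla         toroidal field in teslas
    """
    return beta_n * current_ma / (minor_radius_m * b_tesla)


if __name__ == "__main__":
    # Hypothetical operating point: I = 2.5 MA, a = 0.9 m, B = 5 T.
    no_wall = troyon_beta_percent(2.5, 2.5, 0.9, 5.0)
    strong_wall = troyon_beta_percent(5.0, 2.5, 0.9, 5.0)
    print(f"beta limit without wall stabilization: {no_wall:.2f} %")
    print(f"beta limit with strong wall stabilization: {strong_wall:.2f} %")
```

Because β is linear in βN, giving up wall stabilization halves the allowed pressure at fixed current, radius and field.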

In our conservative design, we will neglect wall stabilization. In a DT tokamak reactor, the wall is doing enough; absorbing and multiplying neutrons, dissipating heat from fast ions and radiation, being one end of a heat exchanger and breeder of 233U and/or T, etc. Furthermore tokamaks always operate with either divertors or limiters, so the wall cannot get that close to the plasma in any case. Hence we take Troyon’s most conservative value, since it will be furthest from the disruption threshold. Thus we take for our first principle of conservative design the condition that βN is 2.5 or less.

To make further progress while keeping our analysis as simple as possible, we assume a density and temperature profile for the plasma. For circular cross section with minor plasma radius a (more complicated geometries are analyzed in [8]), we take parabolic profiles

ne(r) = no(1 − r²/a²), Ti,e(r) = Tio,eo(1 − r²/a²)

where ne is the electron density, assumed to be twice the deuterium density and twice the tritium density, and Ti,e is the ion (electron) temperature. The spatially averaged density is no/2. The pressure is the product of the two, and the spatially averaged pressure is no(Teo + Tio)/3. Of course there may be effects from different profiles but they should not be major. For instance at the average beta, the center temperature may be higher (giving more fusion power) but cover a smaller average volume (giving less fusion power). In fact most profiles do not fill out nearly as much as a parabolic profile does, so the parabolic profile choice is rather optimistic.
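The two averages quoted above follow directly from parabolic profiles of the form 1 − r²/a², and can be confirmed numerically. The sketch below assumes a circular cross section and averages over the poloidal disk only (toroidal weighting is ignored, as in a large aspect ratio approximation); the helper name is illustrative.

```python
def disk_average(profile, steps=100_000):
    """Average of profile(x) over a unit disk, where x = r/a.

    Uses the midpoint rule on the integral of profile(x) * 2x dx from 0 to 1.
    """
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * h
        total += profile(x) * 2.0 * x * h
    return total


def parabolic(x):
    """Normalized parabolic profile, equal to 1 on axis and 0 at the edge."""
    return 1.0 - x * x


if __name__ == "__main__":
    # Density average: expected no/2.
    print(disk_average(parabolic))
    # Pressure average (density times temperature): expected 1/3 of the
    # central value, giving no(Teo + Tio)/3 when both species are summed.
    print(disk_average(lambda x: parabolic(x) ** 2))
```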

If density and temperature are totally unrestricted, 〈σv〉, as a function of temperature, has a broad maximum at a temperature of Ti ~ 50 keV. However if β, that is, the total plasma pressure, is held constant, the maximum is at lower temperature, because this means the density is higher. Since β depends on Te + Ti, whereas the fusion rate depends only on Ti, we must make some assumption here. We assume Te = Ti/2, as is often characteristic of today’s beam heated tokamaks. (If the temperatures were equilibrated, the neutron power would be lower; obviously one can do this calculation for any electron temperature.)

Calculating the neutron power by integrating over volume 〈σv〉 times the tritium density times the deuterium density times the neutron energy, we find that it is

where we have assumed nD = nT = no/2 (recall the units of no are 10²⁰ m⁻³; Pn, MW; lengths, meters; and temperatures, keV).

If beta is specified, then the density is proportional to T⁻¹. The function χ(Tio)/Tio² is plotted in Fig. 4. It has a maximum at Tio of about 15 keV ≡ Tio(β). To get the average reactivity for the plasma, just multiply the ordinate by Tio². Now expressing the density at which the maximum fusion rate occurs, we get

To determine no(β), note that the maximum βN can be is 2.5, consistent with our first design principle.

Now we introduce the second conservative design principle. Decades of plasma experience have shown that tokamaks cannot operate at densities above the Greenwald limit [43, 44]. While this is more of an empirical law than one grounded in solid theory, it has held for two decades already. The Greenwald density limit (equal to no/2 for our assumed parabolic density profile) is given by

nG = I/(πa²),

with nG in units of 10²⁰ m⁻³, I in megaamperes, and a in meters.

However the failure mode in approaching the Greenwald limit is often a shrinking of the plasma profile followed by a major disruption. Since major disruptions are basically intolerable in any reactor, we take as our second principle of conservative design that the density cannot be above ¾ of the Greenwald limit. To simplify the discussion, we do not consider further the Greenwald limit here; it places an additional restriction on the density as fully discussed in Eq. (8). Considering only the beta limited density gives a good idea of the constraints the tokamak operates under.
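To make the second design principle concrete, the sketch below evaluates the Greenwald limit in its standard form, nG = I/(πa²) (nG in 10²⁰ m⁻³, I in MA, a in m), and then applies the ¾ margin described above. The function names and the sample current and radius are assumptions chosen for illustration.

```python
import math


def greenwald_density(current_ma, minor_radius_m):
    """Greenwald density limit in units of 1e20 m^-3 (standard empirical form)."""
    return current_ma / (math.pi * minor_radius_m ** 2)


def conservative_density_limit(current_ma, minor_radius_m, margin=0.75):
    """Density ceiling under the second design principle: margin times nG."""
    return margin * greenwald_density(current_ma, minor_radius_m)


if __name__ == "__main__":
    # Hypothetical TFTR sized example: I = 2.5 MA, a = 0.9 m.
    n_g = greenwald_density(2.5, 0.9)
    n_max = conservative_density_limit(2.5, 0.9)
    print(f"Greenwald limit:    {n_g:.2f} x 1e20 m^-3")
    print(f"conservative limit: {n_max:.2f} x 1e20 m^-3")
```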

Both density limits depend on the current, and if this could increase indefinitely, there would be no problem. But from ideal and resistive MHD, we know that q, the safety factor equal to Br/BpR, where B is the toroidal field and Bp is the poloidal field, evaluated at the limiter or divertor, r = a, is nearly always greater than three. This then is the third principle of conservative design, namely that q(a) > 3. For tokamaks of circular cross section, the relations then simplify considerably since one can express q(a) very easily in terms of current. For circular cross section, the beta limited density is simply

and q(a) is given by

q(a) = 5a²B/(RI),

with I in megaamperes, B in teslas, and lengths in meters.

In this case, the maximum density depends only on magnetic field and aspect ratio (or geometry if the cross section is not circular) as well as βN, taken to maximize at 2.5, and q(a) taken to minimize at 3. Equation (6), as well as Eq. (3a) and Fig. 4 then give the maximum fusion power any tokamak can generate if it is limited by conservative design rules.
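The chain of reasoning in this subsection (minimum q fixes the maximum current, the current fixes the maximum beta, and beta fixes the density at a chosen temperature) can be sketched numerically. The code below is a rough reconstruction under stated assumptions, not the formula of Ref. [8]: it uses the standard circular cross section expressions q(a) = 5a²B/(RI) and β(%) = βN I/(aB), defines β relative to the toroidal field as 2μ0〈p〉/B², and takes 〈p〉 = no(Teo + Tio)/3 from the parabolic profiles. All function names are illustrative.

```python
import math

MU0 = 4e-7 * math.pi          # vacuum permeability, T m / A
KEV_TO_J = 1.602176634e-16    # joules per keV


def max_current_ma(a, R, B, q_min=3.0):
    """Largest plasma current (MA) allowed by q(a) >= q_min, circular cross section."""
    return 5.0 * a * a * B / (R * q_min)


def beta_limited_central_density(a, R, B, beta_n=2.5, q_min=3.0,
                                 t_io_kev=15.0, te_over_ti=0.5):
    """Central density no (units of 1e20 m^-3) at the beta limit.

    Assumes parabolic profiles, so the average pressure is no*(Teo + Tio)/3.
    """
    current = max_current_ma(a, R, B, q_min)
    beta = 0.01 * beta_n * current / (a * B)           # fraction, not percent
    p_avg = beta * B * B / (2.0 * MU0)                 # pascals
    t_sum_j = t_io_kev * (1.0 + te_over_ti) * KEV_TO_J  # Teo + Tio in joules
    return 3.0 * p_avg / t_sum_j / 1e20


if __name__ == "__main__":
    # TFTR like geometry quoted later in the text: R = 2.6 m, a = 0.9 m, B = 5 T.
    print(f"max current: {max_current_ma(0.9, 2.6, 5.0):.2f} MA")
    print(f"no(beta):    {beta_limited_central_density(0.9, 2.6, 5.0):.2f} x 1e20 m^-3")
```

Doubling both a and R leaves the result unchanged, which illustrates the statement that the beta limited density depends only on the field and the aspect ratio once βN and q(a) are fixed.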

Notice that confinement does not come into these principles at all. This is not to say it is unimportant; the confinement and transport determine the external power needed to maintain the plasma profiles. However even if there were no losses (or else for instance an ignited plasma), these three design rules put serious constraints on what a tokamak can and cannot do. Good confinement cannot make things better, bad confinement can only make them worse.

We now discuss the fourth conservative design rule, the size of the blanket. For ITER and Large ITER, reactor sized tokamaks, our assumption is that the existing designs have room for an appropriate blanket which absorbs neutrons, breeds tritium, handles the heat load, etc. But if one wants to build a smaller tokamak, such as the scientific prototype, which we will discuss shortly, how thick does the blanket have to be? Here, the author has little expertise so only very qualitative matters are considered. The mean free path of neutrons with energies between about 1 and 14 MeV is about 15 cm in lithium, and about 6 cm in beryllium and thorium. All of these are important blanket materials for either pure or hybrid fusion. The mean free path for breeding and slowing down is even longer. Obviously the blanket has to be many mean free paths thick so as to prevent neutron leakage out the back, if one desires long life of the machine. Also one clearly desires to prevent activation of materials behind the blanket. Behind the blanket is usually a neutron shield, which itself is not thin.

Many references on fusion hybrids show schematics of the reactor along with the 2 m man standing alongside it, and the blanket is about his size. Rarely are dimensions given. One exception is a rough schematic of a blanket shown in Ref. [45], reproduced here in Fig. 5. In this schematic, the blanket is between 1.5 and 2 m thick, and presumably there is no long term neutron leakage or activation of materials in back. Lidsky [45], when discussing a blanket for a fission suppressed thorium cycle, postulates a blanket 80 cm thick for just the fertile material. Hence, as a very rough rule of thumb, we will specify that the blanket has to be 1.5 m thick. We will call this the fourth conservative design principle. It applies only where one wishes to design a small (i.e. less than commercial size) reactor, and it imposes a certain minimum size on the experimental device which strives for steady state operation with DT. This design principle is more approximate than the other three, and it may be possible to design thinner blankets.

To summarize, the conservative design rules are:

βN ≤ 2.5 (8a)
n ≤ ¾ nG (8b)
q(a) > 3 (8c)
blanket thickness of about 1.5 m (8d)

That is it. What could be simpler? All one needs is the toroidal field, temperature ratio and geometry, and conservative design rules determine the maximum fusion power a tokamak can produce.
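Since the four rules discussed above are simple inequalities (βN no greater than 2.5, density no greater than ¾ of the Greenwald limit, q(a) of at least 3, and a blanket about 1.5 m thick), checking an operating point against them is straightforward. The following is a minimal sketch; the class, the function, and the two sample operating points are hypothetical and are not data from any machine.

```python
from dataclasses import dataclass


@dataclass
class OperatingPoint:
    beta_n: float               # normalized beta
    q_edge: float               # safety factor q(a)
    greenwald_fraction: float   # n / nG
    blanket_thickness_m: float  # blanket thickness in meters


def rule_violations(point, max_beta_n=2.5, min_q=3.0,
                    max_greenwald_fraction=0.75, min_blanket_m=1.5):
    """Return the names of the conservative design rules that a point violates."""
    violations = []
    if point.beta_n > max_beta_n:
        violations.append("normalized beta")
    if point.greenwald_fraction > max_greenwald_fraction:
        violations.append("Greenwald fraction")
    # q(a) = 3 is treated as the limiting allowed case, as in the text.
    if point.q_edge < min_q:
        violations.append("edge safety factor")
    if point.blanket_thickness_m < min_blanket_m:
        violations.append("blanket thickness")
    return violations


if __name__ == "__main__":
    conservative = OperatingPoint(2.5, 3.0, 0.6, 1.5)   # hypothetical
    aggressive = OperatingPoint(4.0, 2.5, 0.9, 1.0)     # hypothetical
    print(rule_violations(conservative))
    print(rule_violations(aggressive))
```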

Once the magnetic field and geometry are specified, this specifies the minimum q(a), Eq. (8c), that is, the maximum current. If the tokamak is pressure limited, Eq. (8a) specifies the maximum total pressure, no(Teo + Tio)/3. Take the ion temperature of 15 keV, make an assumption of the electron temperature and one has the no, and from no, the neutron power. For a circular cross section and beta limited density, the formula for the maximum power allowed by conservative design rules is very simple;

Here we have assumed Ti = 2Te, as is typical for today’s beam heated tokamaks. However temperature equilibration is likely a more reasonable assumption for a reactor. Then, since more of the allowed pressure is taken up by nonreacting electrons, the fusion power is less. The density is reduced to ¾ of its previous value and the power to 9/16. This then gives the maximum fusion power a tokamak can give. More complicated geometries are treated in [8], but very roughly, the power is increased by the elliptical elongation factor k.
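The factors ¾ and 9/16 follow from holding the total pressure and the ion temperature fixed while raising the electron temperature from Ti/2 to Ti. A short sketch of that arithmetic, assuming only that the fusion power scales as density squared at fixed ion temperature (the function name is illustrative):

```python
def equilibration_factors(te_over_ti_before=0.5, te_over_ti_after=1.0):
    """Density and fusion power ratios when Te/Ti changes at fixed pressure and Ti.

    Pressure is proportional to n * (Te + Ti), so at fixed pressure and Ti the
    density scales as 1 / (1 + Te/Ti). Fusion power scales as n squared.
    """
    density_ratio = (1.0 + te_over_ti_before) / (1.0 + te_over_ti_after)
    power_ratio = density_ratio ** 2
    return density_ratio, power_ratio


if __name__ == "__main__":
    density_ratio, power_ratio = equilibration_factors()
    print(f"density ratio: {density_ratio}")
    print(f"power ratio:   {power_ratio}")
```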

If the ion temperature could be maintained at twice the electron temperature, it would certainly be advantageous. One way this might be accomplished is through what is called alpha channeling [46, 47]. Waves and particles in a tokamak interact in a complicated way in which the interacting particles move on some prescribed path in the six dimensional phase space. The idea of alpha channeling is to find a wave or set of waves, which move the alpha particles from the center of the tokamak to the edge, while at the same time significantly lowering their energy. The energy thus goes from the alphas to the waves, which can then be used for other purposes. The most obvious purposes are current drive and heating of the plasma ions. (The alpha particles preferentially heat the electrons by collisions.) In this way it might be possible to maintain an ion temperature twice the electron temperature in a reactor. As a stretch, it might be possible to convert these waves to other waves which can exit the plasma and thereby harvest their energy directly, but this is obviously very speculative. So far the preliminary thought is to use ion Bernstein waves driven by lower hybrid waves, and Alfvén waves.

If the density is Greenwald limited rather than beta limited, the maximum power is reduced [8]. Basically where the density has to be lower than the beta limited optimum, the fusion power optimizes if the ion temperature is higher than 15 keV. By applying these conservative design rules to existing and proposed tokamaks in the next few sections, we will see that the maximum neutron power that can be expected from any proposed tokamak is well below the minimum level needed for economical power production.

Unlike the argument given in the Introduction, where driver power and its efficiency, and output power and its efficiency in converting to electricity, were considered, conservative design rules are independent of all of that. They show the maximum power a tokamak can deliver, even for a burning plasma with no power needed to drive the plasma or current (Q = infinity). Let us make this extremely optimistic assumption. Even then, the capital cost of building a tokamak such as Large ITER, which we optimistically assume to be at least $25B for a 500 MWe reactor, together with a likely large operating cost, will in practice render it unsuitable for economical power production. But we will also see that tokamak breeders can function well within these limits, even though their Q is ‘only’ 10, well below infinity.

Recent Tokamak Experiments in the Light of These Design Principles

We summarize results here for TFTR and JT-60 in the light of conservative design rules. Results for other tokamaks, including JET, DIII-D and ASDEX are summarized in [8]. No tokamak up to now has exceeded the limits imposed by conservative design rules; in fact conservative design rules overestimate fusion power by at least a factor of two in all cases.

TFTR: Results from TFTR were summarized in [48]. Briefly, it achieved spectacular results when operating in the hot ion supershot mode. These have peaked profiles, and the beam is important for both heating and fueling the plasma. It achieved a maximum fusion power of 10 MW for perhaps half a second. However it terminated by rapidly dumping all or a significant part of the plasma energy. The major radius was 2.6 m, the limiter radius was 0.9 m and the magnetic field was over 5 T. This is all that is needed to get maximum parameters of the device according to the conservative design principles.

In Ref. [48] there was a table of parameters of 4 supershots. A portion of the table is presented in Table 1. The rows in bold are from Ref. [48], the rows in ordinary type are from conservative design principles. The central ion temperature is much higher than the optimum value of 15 keV, but the beta is still consistent because the hot part of the plasma is so narrow compared to the parabolic profile we have been assuming. In fact, their measured βN’s are smaller than what we have assumed in the conservative design. While they do not give q(a), for their circular cross section one can estimate it easily enough. In all cases q(a) > 3, so that the results are consistent with conservative design principles in this respect. There are two rows for the calculation of the central density from conservative design rules for q(a) = 3 and βN = 2.5, no(β) and no(G). For TFTR, the former is slightly smaller, so the density is beta limited, not Greenwald limited. In the last row of the table is given neutron power from Eq. (9). Notice that the neutron power per the conservative design is at least double the actual neutron power observed. Hence even though TFTR managed to get a much higher ion temperature than 15 keV, it did not help very much. The reaction rate was higher in this region of high temperature, but the density was lower, and the volume of strongly reacting plasma was also smaller; the net effect being less neutron power than the conservative design rules would specify. Thus for TFTR, the conservative design rules overestimate the fusion power, typically by at least a factor of two.

In Ref. [8] we show that conservative design rules also overestimate the neutron power in JET, in both hot ion and long lived modes, by at least a factor of 2.

JT-60U: JT-60, and more recently its upgrade JT-60U, is the largest of the tokamaks, but so far has not operated with DT (http://www-jt60.naka.jaea.go.jp/english/index-e.html). Its parameters are a major radius of 3.4 m, a minor radius of 1.2 m (to the vacuum wall) and an elongation of about 1.4. The maximum magnetic field is about 4.2 T. Although JT-60U has not yet operated with DT, it has operated with DD plasmas, and from the DD neutron rate, they extrapolate to get the DT rate. In all their reported data, as regards βN (virtually always equal to or <2.5), q(a) (virtually always >3), and n/nG (virtually always <0.75), their results are consistent with the conservative design rules.

A great deal of their earlier effort consisted in developing what they called a W shaped divertor. Here, they reported their largest amount of fusion power, with the equivalent Q in a DT plasma going above unity, and with a great deal of the improvement coming from the new divertor. Figure 6 redraws their plot of equivalent Q vs current from these references. It reaches a maximum of 1.25, nearly double their previous results. However they point out that these are all transient results. In quasi steady operation, their DT equivalent Q’s were below 0.2. This result is similar to the experience of JET.

Fig. 6 Some JT-60 data confirming conservative design rules: a plot of DT equivalent Q as a function of current for JT-60 for normal and W shaped divertors; a scatter plot of tokamak operation points in the parameter space of q(a) and normalized beta (long lived discharges only operate in the region specified by conservative design rules); a plot of H factor as a function of density over Greenwald density, confirming another aspect of conservative design rules; and a plot of normalized beta as a function of time in a long lived discharge

In their results for quasi steady plasmas, plasmas lasting longer than 5 times the energy confinement time, all their q(a)’s were greater than 3 and all their βN’s were <2.5. A scatter plot of their data is shown in Fig. 6.

Regarding density in their earlier results, they were always below the Greenwald limit. In Fig. 6 is shown a redrawn plot of their H factor, the fractional increase in confinement time when they operate in the H mode, as a function of n/nG. They have a single point at 0.8, at the worst confinement, and virtually all of their data is for 0.4 < n/nG < 0.6.

In their later results, they emphasized long time operation. This involved getting bootstrap current of over 50 % sustained for a long time and a βN sustained for over 20 s. A redrawn plot of βN as a function of time is shown in Fig. 6. While sustained for a long time, it is still no greater than 2.5. Their q(a)’s were everywhere greater than 3, and their maximum densities reported were at about 0.5nG.

The Upshot

To summarize, the conservative design rules are very well based in theory and so far have constrained tokamak operation. In fact so far, as regards neutron production, a tokamak is doing well to achieve half the neutron rate specified by conservative design rules. To get powers like 3 GWth, as needed in a commercial reactor, from a tokamak that is smaller, much higher in Q, and less expensive than Large ITER would stretch conservative design rules well beyond the breaking point. This then is the basis for the author’s assertion that commercial pure fusion reactors based on tokamak configurations are unlikely, at least until one can find a way around conservative design rules. However as we will show shortly, a breeding tokamak can operate well within the limits of conservative design rules.

Disruptions and Their Implications

To get an appreciation of the disruption problem tokamaks have, it is instructive to look at the energies involved. In the case of hot ion modes, JT-60, JET and TFTR typically dump about 15 % of the plasma energy abruptly on the walls. As JT-60 confined about 10 MJ, this means in these partial disruptions, about 1.5 MJ is dumped, presumably mostly in the form of radiation and energetic ions and electrons. This is significant energy to dump in an uncontrolled manner, nearly a pound of TNT.

In a worst case scenario, if the plasma density is sufficiently low, the disruption energy could be, and has been, manifested as a relativistic electron ring. It could have much more than 1 MJ of energy. Once formed, there is nothing in the plasma to stop it; it will eventually hit the wall, and this could be enormously destructive.

But this is only for a partial 15 % disruption. Major disruptions dump all of the energy of both the plasma (10 MJ in JT-60) and poloidal field (about the same as the plasma energy), so in this case about 20 MJ of energy, in one form or other, are suddenly dumped on the wall. This is about 1.25 % of the total magnetic field energy for each channel (plasma and current) for about 2.5 % total. The plasma beta is likely quoted as considerably larger than 1.25 %, but then there is a good bit of magnetic field energy outside the plasma.

To estimate the energy available for disruptions in future devices, we will take as a rule of thumb that it is 2.5 % of the total magnetic field energy. Now the energies available get serious. For ITER it is about 160 MJ, about the energy of an 80 pound bomb, and for large ITER, which this paper sees as an optimal end product, it is 550 MJ, the energy of nearly a 300 pound bomb. If more energy is stored in the plasma than our 2.5 % of total magnetic energy, this is good for fusion, but makes disruptions still more dangerous.

But this is only the beginning of the problem. An 80 pound bomb equivalent going off in the confined space of ITER might cause the superconducting toroidal field coils to uncontrollably quench. This could happen. It has happened in the Large Hadron Collider at CERN, bringing the accelerator down for a year. However the CERN tunnel has a huge volume compared to the interior of ITER. An uncontrolled quench in ITER would turn the 80 pound bomb into a 3200 pound bomb. It is unlikely that the building, or much around it, could survive. It is important to note that ITER stores an enormous amount of energy.
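The TNT equivalents quoted in this subsection can be reproduced from the 2.5 % rule of thumb. The sketch below assumes the conventional value of 4.184 MJ per kilogram of TNT; the function names are illustrative, and the total magnetic energy for ITER is inferred here from the 160 MJ figure rather than taken from a design document.

```python
MJ_PER_KG_TNT = 4.184      # conventional TNT equivalent
KG_PER_POUND = 0.45359237


def tnt_pounds(energy_mj):
    """Mass of TNT (pounds) releasing the given energy in MJ."""
    return energy_mj / MJ_PER_KG_TNT / KG_PER_POUND


def total_magnetic_energy_mj(disruption_energy_mj, fraction=0.025):
    """Total magnetic energy implied by the rule of thumb used in the text."""
    return disruption_energy_mj / fraction


if __name__ == "__main__":
    for label, energy in [("JT-60 partial disruption", 1.5),
                          ("ITER major disruption", 160.0),
                          ("Large ITER major disruption", 550.0)]:
        print(f"{label}: {energy} MJ ~ {tnt_pounds(energy):.1f} lb TNT")
    iter_total = total_magnetic_energy_mj(160.0)
    print(f"ITER total magnetic energy: {iter_total:.0f} MJ ~ {tnt_pounds(iter_total):.0f} lb TNT")
```

The factor of 40 between the 80 pound and 3200 pound figures is simply the reciprocal of 2.5 %.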

But hasn’t JT-60 shown that tokamaks can have very long pulses? See Fig. 6 for example. The answer is yes and no. Tokamaks can have very long pulses, but that certainly does not mean that they never disrupt. A very interesting statistical analysis of disruptions on JET has recently been published [49]. One of their important figures of merit is the disruptivity, the rate of disruptions in s⁻¹, as a function of various parameters. Shown in Fig. 7 are disruptivities vs inverse q(a) and density over Greenwald density. There is a similar curve for disruptivity vs normalized beta.

There are two important conclusions to take from these data. The first is that they confirm conservative design rules. Disruptivities rise sharply, by well over an order of magnitude, as conservative design rules become violated. Secondly, the harmless looking disruptivities at low normalized beta, reciprocal q and density are not harmless at all. A disruptivity of 10⁻² s⁻¹ means a disruption about every 2 min! Clearly this is unacceptable in a reactor.
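To see what a given disruptivity implies for a long pulse, one can treat disruptions as a constant rate random process. That Poisson assumption is made here for illustration only and is not taken from Ref. [49]; the function names are likewise illustrative.

```python
import math


def mean_time_between_disruptions_s(disruptivity_per_s):
    """Average interval between disruptions for a constant rate process."""
    return 1.0 / disruptivity_per_s


def survival_probability(disruptivity_per_s, pulse_length_s):
    """Probability that a pulse of the given length completes without disrupting."""
    return math.exp(-disruptivity_per_s * pulse_length_s)


if __name__ == "__main__":
    rate = 1e-2  # s^-1, the value discussed in the text
    interval = mean_time_between_disruptions_s(rate)
    print(f"mean interval: {interval:.0f} s ({interval / 60:.1f} min)")
    # A 400 s pulse is used as an example of a long, ITER scale discharge.
    print(f"chance a 400 s pulse survives: {survival_probability(rate, 400.0):.3f}")
```

Under this assumption a 400 s pulse at that disruptivity would complete without disruption only about 2 % of the time.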

Probably the most important job for both ITER and the scientific prototype (to be discussed shortly) is to find a fusion relevant regime where the disruption rate is close enough to zero that a reactor is possible. Clearly this will be much easier to do within the confines of conservative design rules, where a breeder can operate. As of now, there is no reason to believe that a disruption free regime exists outside of the conservative design rules where a pure fusion reactor must run.

A large airplane at speed and altitude has enormous kinetic and gravitational potential energy (in fact about the same as the stored magnetic energy in Large ITER), but people are inside, and the aircrew is able to control it. This is a reasonable analogy for tokamaks, and this author’s expectation is that a tokamak’s stored energy can also ultimately be safely controlled, but only within the constraints of conservative design rules.

The Scientific Prototype and Neutron Power of Various Tokamaks

In all his work, the author has argued [5–10, 50] that the next logical step for the American Magnetic Fusion Energy program is a steady state tokamak, called the scientific prototype, which is about the size of TFTR, runs with a DT plasma, achieves Q ~ 1, and breeds its own tritium. After all, tritium self sufficiency ultimately is absolutely essential, for either pure fusion or fusion breeding. If we do not tackle it now, then when will we? The scientific prototype is not some small thing to add to the American base MFE program; rather it is a very large project which would replace the entire base program. The tremendous resource of theoretical, experimental and computational expertise in the American base program would be refocused on this single large project. If the ~$350 M per year that comprises the base program were focused on the scientific prototype for 15 years, that would make over $5B available, not much less than the cost of a 1 GWe LWR.

There are two crucial plasma physics problems, which must be solved by the scientific prototype. The first is steady state operation. Ideally this would mean no Ohmic current drive. However in practice, a small amount of Ohmic drive may be unavoidable, and may even be acceptable if the plasma on time is long compared to the off time necessary to recharge the transformer. This is particularly the case if the tokamak is viewed principally as a fuel factory and only secondarily as an on line power source. JT-60 has already demonstrated discharge durations of much more than 5 energy confinement times at the required normalized betas, q(a)’s, and density. One of their runs, at a normalized beta of 2.5, for half a minute is shown in Fig. 6. ITER is designed to operate with 400 s pulses, about 100 energy confinement times. But in all of these, the current is driven at least in part Ohmically.

Recently Luce [51] wrote a review article showing the advances in achieving steady state behavior. At lower power, some tokamaks with superconducting toroidal field coils have run for very long times. The question is whether this can be extended to more powerful tokamaks. Another problem he sees is that the power required to drive the current (Watts per Amp) might be too high. However Luce envisions only pure fusion. If fusion breeding is the goal, where the fusion reactor produces ten times the neutron power in ²³³U fuel, a bit more power to drive the current is not necessarily such a big deal.

The second crucial plasma physics goal to achieve in the scientific prototype would be to eliminate or greatly reduce disruptions. Since a disruption in the scientific prototype would release only a small fraction of the energy released in a major disruption in ITER, it could be an ideal vehicle for this study. To achieve these twin plasma physics goals will take every bit of expertise in the American MFE effort, as well as whatever help we can get from the rest of the world. As previously stated, this is no cake walk, but the author believes it is achievable.

The scientific prototype will be a prodigious source of alpha particles even if not enough to dominate the heating. Hence it could also be a laboratory for testing ideas of alpha channeling [46, 47], at least the basic principles even if there is not enough alpha particle power to heat the plasma very much.

While the author has proposed this in the context of fusion breeding [5–10], where the blanket would also breed ²³³U, it is also a viable concept for pure fusion, where the blanket would only have to breed tritium [50]. Since Q ~ 1, the Quixotic attempt to do a small scale ignition experiment would be abandoned. Let ITER and NIF handle the burning plasma and ignition issues. The American MFE effort has proposed many such burning plasma experiments: CIT, BPX, IGNITOR, FIRE…. Would we have been better off proposing the scientific prototype instead? We could hardly have been worse off. None of these burning plasma experiments sold, and the United States has had no large (i.e. the size of TFTR, JT-60 and JET) tokamak experiment for more than 15 years now.

While the scientific prototype would not be a burning plasma, it still would produce alphas, and unlike ITER, would do it on a steady state, or at least a high duty cycle basis. Hence it could also investigate extraction of the alpha energy on a small, but steady state basis.

The scientific prototype cannot be exactly like TFTR, because TFTR’s major radius is only 2.6 m and its center is filled with all sorts of stuff (e.g. toroidal field coils, etc.). Thus we must take a larger major radius. We take a major radius of 4 m, that is, roughly TFTR’s major radius plus room for a 1.5 m blanket. The minor radius is 1.3 m, so as to keep the aspect ratio as in TFTR, and the prototype would keep the circular cross section as TFTR did (although other geometries could obviously be considered). The hope is that it would produce about 40 MW of neutron power with about a 0.25 MW/m² neutron wall loading. The scientific prototype would reverse conventional wisdom, which has proposed the long list of ignition experiments. Instead of sacrificing every tokamak figure of merit for ignition, the scientific prototype would sacrifice ignition for a significant advance in every other figure of merit, i.e. steady state operation, eliminating disruption in fusion relevant regimes, extended DT operation, tritium breeding…. No other country is ahead of the United States here at this point, we have plenty of nuclear expertise, the project seems achievable, and it is hard to see how either pure fusion or fusion breeding could advance very far without the knowledge provided by the scientific prototype.
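As a rough cross-check of the wall loading, the sketch below divides the hoped-for neutron power by the surface area of a torus with circular cross section, A = 4π²Ra. This is only an order-of-magnitude estimate under stated assumptions: the wall is taken to sit at the plasma boundary (a = 1.3 m), the neutron flux is taken as uniform, and poloidal peaking and the real first-wall geometry are ignored. The function name is illustrative.

```python
import math


def torus_surface_area(major_radius_m: float, minor_radius_m: float) -> float:
    """Surface area of a circular-cross-section torus, A = 4 * pi^2 * R * a."""
    return 4.0 * math.pi ** 2 * major_radius_m * minor_radius_m


# Values quoted in the text for the scientific prototype.
MAJOR_RADIUS_M = 4.0
MINOR_RADIUS_M = 1.3
NEUTRON_POWER_MW = 40.0

# ASSUMPTION: wall at the plasma boundary, uniform neutron flux.
area_m2 = torus_surface_area(MAJOR_RADIUS_M, MINOR_RADIUS_M)
wall_loading_mw_per_m2 = NEUTRON_POWER_MW / area_m2

print(f"Surface area: {area_m2:.0f} m^2")
print(f"Average neutron wall loading: {wall_loading_mw_per_m2:.2f} MW/m^2")
```

With these inputs the area is about 205 m² and the average loading is about 0.2 MW/m², the same order as the roughly 0.25 MW/m² quoted above.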

In Table 2 are shown parameters of JT-60, the scientific prototype, ITER, and large ITER, the latter two taken from Refs. [22, 28]. The scientific prototype gives about 55 MW of neutron power according to conservative design rules. If one takes the rule of thumb that actual tokamaks produce about half the power of the conservative design estimates, as with TFTR and JET, then the estimate of 20–40 MW seems reasonable for the scientific prototype.

The conservative design principles show ITER and large ITER both doing better than the actual predicted design values. However, if one takes the typical estimate that predicted neutron power is about double the best achieved, large ITER gives about the design value, but ITER does especially well. It may well turn out to do somewhat better than expected. It has higher current density than either large ITER or the scientific prototype. But despite this larger current density, since q(a) is 3.5 according to Ref. [22], the current could still be increased by about 15 % and still remain consistent with conservative design rules, so power might be increased by 30 %. Note that for both ITER and Large ITER, the Greenwald density is considerably less than the beta optimized density, meaning that the ion temperature has to be considerably more than 15 keV (22 keV for the former, 36 keV for the latter). See Ref. [8] for a more complete discussion of the case where the density is Greenwald limited rather than beta limited. In any case, when quoting power levels expected for ITER and large ITER, we stick to those calculated by the designers, 500 MW and 1.5 GW.
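The link between a 15 % current increase and a roughly 30 % power increase can be illustrated with a short sketch. It rests on two scaling assumptions that are not spelled out in this section: that q(a) varies inversely with plasma current at fixed field and geometry, and that fusion power scales as the square of the current when normalized beta is held fixed. Both function names are hypothetical.

```python
def q_after_current_increase(q_initial: float, fractional_increase: float) -> float:
    """Edge safety factor after raising the current.

    ASSUMPTION: q(a) is inversely proportional to plasma current at
    fixed toroidal field and geometry.
    """
    return q_initial / (1.0 + fractional_increase)


def fractional_power_gain(fractional_increase: float, exponent: float = 2.0) -> float:
    """Fractional gain in fusion power from raising the current.

    ASSUMPTION: power scales as current**exponent, with exponent = 2
    for a beta-limited plasma at fixed normalized beta.
    """
    return (1.0 + fractional_increase) ** exponent - 1.0


Q_ITER = 3.5             # q(a) quoted in the text from Ref. [22]
CURRENT_INCREASE = 0.15  # 15 % increase quoted in the text

q_new = q_after_current_increase(Q_ITER, CURRENT_INCREASE)
gain = fractional_power_gain(CURRENT_INCREASE)

print(f"q(a) after a 15 % current increase: {q_new:.2f}")
print(f"Fractional power gain: {gain:.0%}")
```

Under these assumptions q(a) falls from 3.5 to about 3.0 and the power rises by about 32 %, in line with the 30 % figure in the text.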

Several alternate American MFE proposals seem to be in the works and under serious discussion. We seem to be on the threshold of proposing a large stellarator effort, but this is a bad idea. Two large American stellarator projects have already been canceled. We are hopelessly behind the Germans and Japanese in this effort and have no prospect of surpassing them. Better to let them run with the ball and support their stellarator projects, if they would support the scientific prototype. Another idea attracting interest is a larger spherical tokamak experiment. This is, if anything, a worse idea. Later, this paper will argue against such a proposal, as well as several other alternative concepts.

Stellarators

Our discussion of stellarators and other fusion devices will be very brief, and will mostly make the case that none are viable alternatives to tokamaks at this time. Also, this section and subsequent sections on MFE will cite many fewer references. Information given here which is not specifically cited can generally be confirmed by doing a Google search of the device and going to the appropriate web site, using the information directly on the web site as well as the various articles and reports linked to it.

Despite this negative introduction, this author has no reason to think stellarators cannot ultimately work; they just have not so far. If they could work, they would have real advantages. The rotational transform is produced by external coils, so there is no plasma current. Current is one of the two causes of MHD instabilities, pressure gradient being the other. Hence disruption should be much less of a problem than in tokamaks, but it is worth noting that the stellarator, like a tokamak, also stores a tremendous amount of energy.

Counterbalancing these advantages is the fact that a stellarator is an inherently three dimensional configuration, unlike a tokamak, which is a two dimensional configuration. In my view, as a theoretical physicist of normal capability, who has trouble analyzing a complex two dimensional configuration, anyone who can analyze a complex three dimensional configuration is a physicist of rare and special talent. Not only is the problem much more difficult, the parameter space is much greater. For instance Wendelstein 7-X has 5 bumps going around the major circumference. Why not 4 or 6? Also because of the three dimensional configuration, neoclassical losses are greater than in a tokamak. A great deal of effort in the stellarator community is dedicated to coming up with configurations which minimize these losses. Because of its three dimensional nature and the demands of minimizing losses, the coil configurations are very complicated and must be engineered to very precise tolerances. As difficult as it may be to fit a blanket around a tokamak plasma, it might be even more difficult in a stellarator.

So far the largest stellarator is the Large Helical Device (LHD) in Japan (http://www.lhd.nifs.ac.jp/en/). To this author’s mind, its achievements are not very encouraging. It seems to run in either one of two modes, a low density, high temperature mode, or a high density, low temperature mode. It has not been able to achieve high density and high temperature so far. The LHD web site gives the triple product as 4 × 10¹⁹, about 1/40 that of JT-60. Also the web site gives the maximum contained energy so far as 1.4 MJ, about 1/6 that of JT-60.

The Germans are constructing a larger stellarator still, Wendelstein 7-X. The construction is expected to be finished in 2014, with first plasma in 2015. They hope to achieve both high temperature and high density simultaneously, and they hope to achieve a triple fusion product of 4 × 10²⁰, 10 times higher than LHD, but still below JT-60. A schematic of the plasma and some of the field coils is shown in Fig. 8.

The nearly infinite parameter space available for stellarators may be an opportunity, but it also poses a programmatic danger. How can we explore all of it with our very finite resources of dollars and time? LHD is a disappointment, so build Wendelstein 7-X; if that does not work, build a heliotron; if that disappoints, build a torsatron…. Where does it end?

While some in the United States argue that we should start our own stellarator program, this is a bad idea. We are hopelessly behind the Germans and Japanese. Better for now to let them run with the ball and help them any way we can, at least until they can prove that a stellarator is definitely superior to tokamaks.

Spherical Tokamaks (ST’s)

The spherical tokamak is like a tokamak except that it has an aspect ratio of approximately unity, so the plasma is nearly spherical in shape. Shown in Fig. 9 is an image of the plasma in MAST, the ST at the Culham Laboratory. It is nearly spherical with a radius of about 1.5 m (http://www.ccfe.ac.uk/MAST.aspx). However notice that the toroidal field coils all merge to a narrow conductor running down the center, a conductor whose radius is about 10–20 cm judging from Fig. 9. One of the strongest assets of the ST is that it runs at a much lower magnetic field and much higher beta than a conventional tokamak.

The ST at PPPL is called NSTX. This author has not found information on the web sites of either MAST or NSTX which allows one to easily discern the energy contained or the triple fusion product (unlike the JT-60 and LHD web sites, where this information is prominently displayed). However recent studies of confinement enhancement by lithium walls give this information [52]. The presence of the lithium walls in NSTX increases the confinement time from about 35 ms to about 70 ms. The energy contained is about 150 kJ, or about 20 kJ/m³, and the triple product is about 5 × 10¹⁸. This is about where tokamaks were 30 years ago according to Fig. 1. On a more detailed plot [5], one can see that NSTX is about where ASDEX and T-10 were in about 1985, but about an order of magnitude below PLT then. Thus ST’s have a long way to go before they are in the league of tokamaks, and possibly will not get there at all.
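The triple products quoted in this and the preceding section can be put side by side with a few lines of arithmetic. The sketch below uses only the numbers given in the text. The JT-60 value is not stated directly, so it is inferred here from the statement that LHD reaches about 1/40 of it, and all values are assumed to be in the same (unstated) units.

```python
# Triple products as quoted in the text (units not stated there;
# ASSUMPTION: all values share the same units).
LHD = 4e19
W7X_GOAL = 4e20
NSTX = 5e18

# ASSUMPTION: JT-60 value inferred from "LHD is about 1/40 that of JT-60".
JT60_INFERRED = 40 * LHD

ratios_to_jt60 = {
    "LHD": LHD / JT60_INFERRED,
    "Wendelstein 7-X (goal)": W7X_GOAL / JT60_INFERRED,
    "NSTX": NSTX / JT60_INFERRED,
}

for device, ratio in ratios_to_jt60.items():
    print(f"{device}: {ratio:.4f} of JT-60 (about 1/{1 / ratio:.0f})")

# Plasma volume implied by the quoted NSTX energy and energy density.
nstx_volume_m3 = 150.0 / 20.0  # 150 kJ divided by 20 kJ/m^3
print(f"Implied NSTX plasma volume: {nstx_volume_m3:.1f} m^3")
```

On these figures the Wendelstein 7-X goal is about a quarter of the inferred JT-60 value, and NSTX is more than two orders of magnitude below it.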

But they have a much bigger problem than their late start in the race. How will the center post handle the intense flux of 14 MeV neutrons in a reacting plasma? According to the fourth conservative design rule, plasma facing surfaces should have a depth of about a meter and a half! Certainly the coil cannot be superconducting, so the field coils will necessarily dissipate a tremendous amount of energy. And how does one cool the center post?