Abstract

We define new families of noncommutative symmetric functions and quasi-symmetric functions depending on two matrices of parameters, and more generally on parameters associated with paths in a binary tree. Appropriate specializations of both matrices then give back the two-vector families of Hivert, Lascoux, and Thibon and the noncommutative Macdonald functions of Bergeron and Zabrocki.


1 Introduction

The theory of Hall–Littlewood, Jack, and Macdonald polynomials is one of the most interesting subjects in the modern theory of symmetric functions. It is well-known that combinatorial properties of symmetric functions can be explained by lifting them to larger algebras (the so-called combinatorial Hopf algebras), the simplest examples being Sym (Noncommutative symmetric functions [3]) and its dual QSym (Quasi-symmetric functions [5]).

There have been several attempts to lift Hall–Littlewood and Macdonald polynomials to Sym and QSym [1, 7, 8, 11, 12]. The analogues defined by Bergeron and Zabrocki in [1] were similar to, though different from, those defined by Hivert, Lascoux, and Thibon in [8]. The latter admitted multiple parameters \(q_i\) and \(t_i\) which, however, could not be specialized to recover the Bergeron–Zabrocki version.

The aim of this article is to show that many more parameters can be introduced in the definition of such bases. In fact, one can have a pair of \(n\times n\) matrices \((Q_n,T_n)\) for each degree n. The main properties established in [1] and [8] remain true in this general context, and one recovers the BZ and HLT polynomials for appropriate specializations of the matrices.

In the last section, another possibility, involving quasideterminants, is explored. One can then define bases involving two almost-triangular matrices of parameters in each degree. We shall see in some examples that if these matrices are chosen so as to give a special basis for the row and column compositions, special properties arise for hook compositions \((n-k,1^{k})\). For example, one can obtain a basis whose commutative image reduces to the Macdonald P-polynomials for hook compositions.

One should not expect that constructions at the level of Sym and QSym could lead to general results on ordinary Macdonald polynomials. Even for Schur functions, one has to work in the algebra of standard tableaux, FSym, to understand the Littlewood–Richardson rule. However, the analogues of Macdonald polynomials that can be defined in Sym and QSym have enough in common with the ordinary ones to suggest interesting ideas. The most startling one is that the usual Macdonald polynomials could be specializations of a family of symmetric functions with many more parameters.¹

2 Notations

Our notations for noncommutative symmetric functions will be as in [3, 10]. Here is a brief reminder.

The Hopf algebra of noncommutative symmetric functions is denoted by Sym, or by Sym(A) if we consider the realization in terms of an auxiliary alphabet A. Bases of \(\mathbf{Sym}_{n}\) are labeled by compositions I of n. The noncommutative complete and elementary functions are denoted by \(S_n\) and \(\Lambda_n\), and the notation \(S^{I}\) means \(S_{i_{1}}\cdots S_{i_{r}}\). The ribbon basis is denoted by \((R_I)\). The notation \(I\vDash n\) means that I is a composition of n. The conjugate composition is denoted by \(I^{\sim}\).

The graded dual of Sym is QSym (quasi-symmetric functions). The dual basis of \((S^{I})\) is \((M_I)\) (monomial), and that of \((R_I)\) is \((F_I)\). The descent set of \(I=(i_1,\ldots,i_r)\) is \(\mathrm{Des\,}(I) = \{ i_{1},\ i_{1}+i_{2}, \ldots, i_{1}+\cdots+i_{r-1}\}\).

Finally, let us recall two operations on compositions: if \(I=(i_1,\ldots,i_r)\) and \(J=(j_1,\ldots,j_s)\), the composition \(I\cdot J\) is \((i_1,\ldots,i_r,j_1,\ldots,j_s)\) and \(I\triangleright J\) is \((i_1,\ldots,i_r+j_1,\ldots,j_s)\).
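The following is a minimal sketch of these conventions; the function names are ours and purely illustrative. The conjugate is computed under the assumption that \(\mathrm{Des\,}(I^{\sim})=\{n-d : d\in[n-1]\setminus\mathrm{Des\,}(I)\}\), i.e., that the ribbon of I is read column by column from right to left. This agrees with the example K=(211) of Section 6.

```python
# Sketch of the composition conventions of Section 2 (names are illustrative).

def descent_set(I):
    """Des(I) = {i1, i1+i2, ..., i1+...+i_{r-1}}."""
    des, s = set(), 0
    for part in I[:-1]:
        s += part
        des.add(s)
    return des

def from_descent_set(D, n):
    """Inverse of descent_set: the composition of n whose descent set is D."""
    cuts = [0] + sorted(D) + [n]
    return tuple(b - a for a, b in zip(cuts, cuts[1:]))

def concat(I, J):
    """I.J = (i1, ..., ir, j1, ..., js)."""
    return tuple(I) + tuple(J)

def near_concat(I, J):
    """I |> J = (i1, ..., ir + j1, ..., js)."""
    return tuple(I[:-1]) + (I[-1] + J[0],) + tuple(J[1:])

def conjugate(I):
    """I~, assuming Des(I~) = {n - d : d in [n-1], d not in Des(I)}."""
    n, D = sum(I), descent_set(I)
    return from_descent_set({n - d for d in range(1, n) if d not in D}, n)

print(descent_set((2, 1, 3)))        # {2, 3}
print(concat((2, 1), (1, 3)))        # (2, 1, 1, 3)
print(near_concat((2, 1), (1, 3)))   # (2, 2, 3)
print(conjugate((2, 1, 3)))          # (1, 1, 3, 1)
```

Encoding a composition by its descent set makes both operations and the conjugation simple set manipulations. This is also the encoding used throughout the Grassmann-algebra description below.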

3 \(\mathbf{Sym}_{n}\) as a Grassmann algebra

Since, for n>0, \(\mathbf{Sym}_{n}\) has dimension \(2^{n-1}\), it can be identified (as a vector space) with a Grassmann algebra on n−1 generators \(\eta_1,\ldots,\eta_{n-1}\) (that is, \(\eta_i\eta_j=-\eta_j\eta_i\), so that in particular \(\eta_{i}^{2}=0\)). This identification is meaningful, for example, in the representation theory of the 0-Hecke algebras \(H_n(0)\). Indeed (see [2]), the quiver of \(H_n(0)\) admits a simple description in terms of this identification. Of course, this identification is not an isomorphism of algebras.
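The dimension count can be made concrete. A composition of n is determined by its descent set, which is a subset of [n−1], so there are \(2^{n-1}\) of them. The identification sends \(R_I\) to the product of the \(\eta_d\) over the descents d of I. The sketch below uses helper names of our own choosing. It also shows that listing the boolean descent vectors in increasing binary order reproduces the reverse lexicographic order of compositions used in the tables of the paper.

```python
from itertools import product

def composition_from_bits(u):
    """Composition whose descent vector is u (u[i-1] = 1 iff i is a descent)."""
    parts, length = [], 1
    for bit in u:
        if bit:
            parts.append(length)
            length = 1
        else:
            length += 1
    parts.append(length)
    return tuple(parts)

def compositions(n):
    """All 2^(n-1) compositions of n, by increasing binary value of the descent vector."""
    return [composition_from_bits(u) for u in product((0, 1), repeat=n - 1)]

def eta_indices(I):
    """Indices d1 < ... < dk of the Grassmann monomial eta_{d1}...eta_{dk} attached to R_I."""
    out, s = [], 0
    for part in I[:-1]:
        s += part
        out.append(s)
    return tuple(out)

print(compositions(3))          # [(3,), (2, 1), (1, 2), (1, 1, 1)]
print(eta_indices((2, 1, 3)))   # (2, 3)
```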

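If I is a composition of n with descent set \(D=\{d_1<\cdots<d_k\}\), we make the identification

\[ R_I \longleftrightarrow \eta_{d_1}\eta_{d_2}\cdots\eta_{d_k}. \]

For example, \(R_{213}\leftrightarrow\eta_2\eta_3\). We then have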

and

where \(\theta_i(I)=\eta_i\) if \(i\not\in D\) and \(\theta_i(I)=1+\eta_i\) otherwise. Other families of noncommutative symmetric functions have simple expressions under this identification, e.g., the primitive elements \(\Psi_n\) and \(\Phi_n\) of [3]

and

where \(E_{k} = \sum_{j_{1}<\cdots<j_{k}} \eta_{j_{1}} \cdots \eta_{j_{k}}\). The q-Klyachko element [3]

is

and Hivert’s Hall–Littlewood basis [7] is

3.1 Structure on the Grassmann algebra

Let ∗ be the anti-involution given by \(\eta_{i}^{*}=(-1)^{i}\eta_{i}\). Recall that the Grassmann integral of any function f is defined by

We define a bilinear form on \(\mathbf{Sym}_{n}\) by

Then,

so that this is (up to an inessential sign) the Bergeron–Zabrocki scalar product [1, Eq. (4)]. Indeed, if \(\mathrm{Des\,}(I)=\{d_{1},\ldots,d_{r}\}\) and \(\mathrm{Des\,}(J)=\{e_{1},\ldots,e_{s}\}\), then

and the coefficient of \(\eta_1\cdots\eta_{n-1}\) in this product is zero if \(\mathrm{Des\,}(I)\) and \(\mathrm{Des\,}(J)\) are not complementary subsets of \([n-1]\). When they are, moving \(\eta_{d_{k}}\) to its place among the \(\eta_{e_i}\) produces a sign \((-1)^{d_{k}-1}\), which together with the factor \((-1)^{d_{k}}\) results in a single factor \(-1\). Hence the final sign is \((-1)^{r}=(-1)^{\ell(I)-1}\).
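The sign argument can be checked mechanically. The defining display of the form is not reproduced above. The sketch below assumes that \(\langle f,g\rangle\) is the coefficient of \(\eta_1\cdots\eta_{n-1}\) in \(f^{*}g\), which is the convention the argument follows. It computes that coefficient for Grassmann monomials by counting inversions (the helper names are ours) and confirms the sign \((-1)^{r}\) for complementary descent sets.

```python
from itertools import combinations

def sort_sign(seq):
    """Sign of the permutation sorting a word of distinct generator indices:
    each swap of two adjacent anticommuting eta's contributes -1."""
    inversions = sum(1 for a in range(len(seq)) for b in range(a + 1, len(seq))
                     if seq[a] > seq[b])
    return -1 if inversions % 2 else 1

def star(indices):
    """(eta_{d1}...eta_{dk})^* = prod (-1)^{d_i} * eta_{dk}...eta_{d1},
    since * is an anti-involution with eta_i^* = (-1)^i eta_i."""
    return (-1) ** sum(indices), tuple(reversed(indices))

def pairing(D, E, n):
    """Coefficient of eta_1...eta_{n-1} in (eta_D)^* eta_E (assumed form of the pairing)."""
    sign, reversed_D = star(D)
    word = reversed_D + tuple(E)
    if sorted(word) != list(range(1, n)):
        return 0  # a repeated generator (eta_i^2 = 0) or a missing one
    return sign * sort_sign(word)

# Complementary descent sets give (-1)^r, with r = |Des(I)| = l(I) - 1.
for n in range(2, 7):
    for r in range(n):
        for D in combinations(range(1, n), r):
            E = tuple(i for i in range(1, n) if i not in D)
            assert pairing(D, E, n) == (-1) ** r
```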

3.2 Factorized elements in the Grassmann algebra

Now, for a sequence of parameters \(Z=(z_1,\ldots,z_{n-1})\), let

Note that this is equivalent to defining, as was already done in [8, Eq. (18)],

For example, with n=4, if one orders compositions as usual by reverse lexicographic order, the coefficients of the expansion of \(K_n\) on the basis \((R_I)\) are

We then have

Lemma 3.1

Proof

By induction. For n=1, the scalar product is 1, and

□
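The defining display of \(K_n(Z)\) is not reproduced above. The following sketch assumes \(K_n(Z)=(1+z_1\eta_1)\cdots(1+z_{n-1}\eta_{n-1})\), so that the coefficient of \(R_I\) is \(\prod_{i\in\mathrm{Des\,}(I)}z_i\). This is the rule the Kostka entries of Section 5 follow. Under that assumption, it lists the coefficients in reverse lexicographic order of I. The function name kn_coefficients is hypothetical.

```python
from itertools import product

def kn_coefficients(n):
    """Coefficients of K_n(Z) on (R_I), I in reverse lexicographic order
    (= increasing binary order of the descent vector u).  Assumes the
    coefficient of R_I is the product of the z_i over i in Des(I)."""
    coeffs = []
    for u in product((0, 1), repeat=n - 1):
        factors = [f"z{i}" for i, bit in enumerate(u, start=1) if bit]
        coeffs.append("*".join(factors) if factors else "1")
    return coeffs

print(kn_coefficients(4))
# ['1', 'z3', 'z2', 'z2*z3', 'z1', 'z1*z3', 'z1*z2', 'z1*z2*z3']
```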

3.3 Bases of Sym

We shall investigate bases of \(\mathbf{Sym}_{n}\) of the form

where \(Z_I\) is a sequence of parameters depending on the composition I of n.

The bases defined in [8] and [1] are of the previous form and for both of them, the determinant of the Kostka matrix \(\mathcal{K}=(\tilde{\mathbf{k}}_{IJ})\) is a product of linear factors (as for ordinary Macdonald polynomials). This is explained by the fact that these matrices have the form

where A and B have a similar structure, and so on recursively. Indeed, for such matrices,

Lemma 3.2

Let A,B be two m×m matrices. Then,
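The display of Lemma 3.2 is not reproduced here. The sketch below assumes that the block form (19) is \(\begin{pmatrix}A&xA\\B&yB\end{pmatrix}\). This is the shape obtained by splitting the Kostka matrices of Sections 4 and 5 according to the first bit of the descent vectors. For such a matrix, subtracting x times the first block column from the second leaves a block-triangular matrix, so the determinant is \((y-x)^{m}\det A\det B\). The code checks this numerically with exact rational arithmetic; the helper names det and block_matrix are our own.

```python
from fractions import Fraction

def det(mat):
    """Exact determinant by Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in mat]
    n, result = len(m), Fraction(1)
    for c in range(n):
        pivot = next((r for r in range(c, n) if m[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            m[c], m[pivot] = m[pivot], m[c]
            result = -result
        result *= m[c][c]
        for r in range(c + 1, n):
            factor = m[r][c] / m[c][c]
            for k in range(c, n):
                m[r][k] -= factor * m[c][k]
    return result

def block_matrix(A, B, x, y):
    """The 2m x 2m matrix [[A, xA], [B, yB]] (assumed shape of Eq. (19))."""
    top = [list(row) + [x * a for a in row] for row in A]
    bottom = [list(row) + [y * b for b in row] for row in B]
    return top + bottom

A = [[1, 2], [3, 4]]
B = [[2, 0], [1, 5]]
x, y = Fraction(2), Fraction(7)
print(det(block_matrix(A, B, x, y)) == (y - x) ** 2 * det(A) * det(B))  # True
```

Applied recursively to A and B, this is what makes the Kostka determinants products of linear factors.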

3.4 Duality

Similarly, the dual vector space \(\mathit{QSym}_{n}=\mathbf{Sym}_{n}^{*}\) can be identified with a Grassmann algebra on another set of generators \(\xi_1,\ldots,\xi_{n-1}\). Encoding the fundamental basis \(F_I\) of Gessel [5] by

the usual duality pairing, for which the \(F_I\) are dual to the \(R_I\), is given in this setting by

Let

Then, as above, we have a factorization identity:

Lemma 3.3

Proof

By definition

and

so that

□

Note that, alternatively, assuming that the \(\xi_i\) and the \(\eta_j\) commute with each other and that \(\xi_i\eta_i=1\), one can define \(\langle f,g\rangle\) as the constant term of the product fg.

Using this formalism, one can, for example, find the following expression for the dual basis \({\varPhi}_{I}^{*}\) of \((\Phi^{I})\):

where \({\varPhi}^{*}_{1^{r}} \Longleftrightarrow f_{r-1}(\xi_{1},\ldots,\xi_{r-1})\), which is simpler than the description of [3, Proposition 4.29]. Moreover, one can show that

where \({\mathbb{E}}\) is the exponential alphabet defined by \(S_{n}({\mathbb{E}}) = 1/n!\), so that

where \(a_D\) is the number of permutations in \(\mathfrak{S}_{r}\) with descent set D.
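The numbers \(a_D\) are easy to tabulate by brute force for small r. The sketch below (helper names ours) counts the permutations of \(\mathfrak{S}_{r}\) by descent set. Every subset of [r−1] occurs, and the counts add up to r!.

```python
from collections import Counter
from itertools import permutations

def perm_descent_set(perm):
    """Des(sigma) = {i : sigma(i) > sigma(i+1)}, with 1-based positions."""
    return frozenset(i + 1 for i in range(len(perm) - 1) if perm[i] > perm[i + 1])

def descent_counts(r):
    """a_D for every D in [r-1]: number of permutations of S_r with descent set D."""
    return Counter(perm_descent_set(p) for p in permutations(range(1, r + 1)))

a = descent_counts(4)
print(a[frozenset()], a[frozenset({2})], a[frozenset({1, 2, 3})])  # 1 5 1
```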

4 Bases associated with paths in a binary tree

The most general way to build bases whose Kostka matrix can be recursively decomposed into blocks of the form (19) is as follows. Let \(y=\{y_u\}\) be a family of indeterminates indexed by all nonempty boolean words of length \(\le n-1\). For example, for n=3, we have the six parameters \(y_0,y_1,y_{00},y_{01},y_{10},y_{11}\).

We can encode a composition I with descent set D by the boolean word \(u=(u_1,\ldots,u_{n-1})\) such that \(u_i=1\) if \(i\in D\) and \(u_i=0\) otherwise.

Let us denote by \(u_{m\ldots p}\) the sequence \(u_m u_{m+1}\cdots u_p\) and define

or, equivalently,

Similarly, let

where \(w_{1\ldots k}=u_{1}\cdots u_{k-1} \overline{u_{k}}\), with \(\overline{u_{k}}=1-u_{k}\), so that

For n=4, we have the following tables:
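The tables themselves are not reproduced here. The words that index the two parameter sequences of a composition can be generated directly, however. These are the prefixes \(u_{1\ldots k}\) and the words \(w_{1\ldots k}\) obtained by flipping the last bit. The helper names in this sketch are hypothetical.

```python
def descent_word(I):
    """Boolean word u_1...u_{n-1} of I (u_i = 1 iff i is a descent)."""
    n, s, des = sum(I), 0, set()
    for part in I[:-1]:
        s += part
        des.add(s)
    return "".join("1" if i in des else "0" for i in range(1, n))

def prefix_words(I):
    """u_{1..k} for k = 1, ..., n-1."""
    u = descent_word(I)
    return [u[:k] for k in range(1, len(u) + 1)]

def flipped_words(I):
    """w_{1..k} = u_1...u_{k-1} followed by the complement of u_k."""
    u = descent_word(I)
    return [u[:k - 1] + ("0" if u[k - 1] == "1" else "1") for k in range(1, len(u) + 1)]

print(prefix_words((2, 1, 1)))   # ['0', '01', '011']
print(flipped_words((2, 1, 1)))  # ['1', '00', '010']
```

The two words of each index k differ only in their last letter. This is what drives the orthogonality argument of Proposition 4.1.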

4.1 Kostka matrices

The Kostka matrix, which is defined as the transpose of the transition matrix from \((P_I)\) to \((R_J)\), is, for n=4:

For example,

Note that this matrix is recursively of the form of Eq. (19). Thus, its determinant is

Consequently, given a specialization of the \(y_w\), one can easily check whether the \(P_I\) form a linear basis of \(\mathbf{Sym}_{n}\).

Proposition 4.1

The bases \((P_I)\) and \((Q_I)\) are adjoint to each other, up to normalization:

which is indeed zero unless I=J.

Proof

If I=J, then \(y^k(I)\not=y_k(I)\) by definition. If \(I\not=J\), let d be the smallest integer which is a descent of either I or J but not both. Then, \(y_{w_{1\dots d}}=y_{u_{1\dots d}}\) and \(\langle Q_I,P_J\rangle=0\). □

From this, it is easy to derive a product formula for the basis \((P_I)\). Note that we are considering the usual product from \(\mathbf{Sym}_{n}\times\mathbf{Sym}_{m}\) to \(\mathbf{Sym}_{n+m}\), not the product of the Grassmann algebra.

Proposition 4.2

Let I and J be two compositions of respective sizes n and m. The product \(P_I P_J\) is a sum over an interval of the lattice of compositions

where

where \(Y_I\cdot 1\cdot Y_J\) stands for the sequence \((y_1(I),\ldots,y_{n-1}(I),1,y_1(J),\ldots,y_{m-1}(J))\).

Proof

The usual product from \(\mathbf{Sym}_{n}\times\mathbf{Sym}_{m}\) to \(\mathbf{Sym}_{n+m}\) can be expressed as

Indeed, this formula is clearly satisfied for \(R_I R_J\). Then,

Then, for any K, the coefficient of \(P_K\) is given by Formula (41). Thanks to Proposition 4.1, it is 0 if the boolean vector corresponding to I is not a prefix of the boolean vector corresponding to K, that is, if K is not in the interval \([I\triangleright(m),I\cdot(1^{m})]\). □
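The support described in the proof can be listed explicitly. The compositions K of n+m whose boolean vector starts with that of I are obtained by appending all \(2^{m}\) binary words of length m. The smallest and largest of them in the refinement order are \(I\triangleright(m)\) and \(I\cdot(1^{m})\). A sketch with hypothetical helper names:

```python
from itertools import product

def descent_word(I):
    """Boolean word u_1...u_{n-1} of I (u_i = 1 iff i is a descent)."""
    n, s, des = sum(I), 0, set()
    for part in I[:-1]:
        s += part
        des.add(s)
    return "".join("1" if i in des else "0" for i in range(1, n))

def composition_from_word(u):
    """Composition whose descent word is the binary string u."""
    parts, length = [], 1
    for bit in u:
        if bit == "1":
            parts.append(length)
            length = 1
        else:
            length += 1
    parts.append(length)
    return tuple(parts)

def product_support(I, m):
    """Compositions K of |I| + m whose descent word has that of I as a prefix:
    the only P_K that can appear in P_I P_J with |J| = m."""
    u = descent_word(I)
    return [composition_from_word(u + "".join(tail)) for tail in product("01", repeat=m)]

print(product_support((2, 1), 2))  # [(2, 3), (2, 2, 1), (2, 1, 2), (2, 1, 1, 1)]
```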

For example,

4.2 The quasi-symmetric side

As we have seen before, since the \((Q_I)\) are dual to the \((P_I)\), the inverse Kostka matrix is given by a simple construction:

Proposition 4.3

The inverse of the Kostka matrix is given by

Proof

This follows from Proposition 4.1. □

One can check the answer against table (35), which gives the \(Q_I\) in terms of the \(F_I\) for n=4.

4.3 Some specializations

Let us now consider the specialization sending all \(y_w\) to 1 if w ends with a 1, and denote by \(\mathcal{K}'\) the matrix obtained by this specialization. Then, as in [8, p. 10],

Proposition 4.4

Let n be an integer. Then

is lower triangular. More precisely, let \(Y'_{J}\) be the image of \(Y_J\) under the previous specialization, and define \(Y'^{J}\) in the same way. Then the coefficient \(s_{IJ}\) indexed by (I,J) is

Proof

This follows from the explicit form of \(\mathcal{K}_{n}\) and \({\mathcal{K}'_{n}}^{-1}\) (see Proposition 4.3). □

Similarly, the specialization sending all \(y_w\) to 1 if w ends with a 0 leads to an upper triangular matrix.

These properties can be regarded as analogues of the characterization of Macdonald polynomials given in [6, Proposition 2.6] (see also [8, p. 10]).

5 The two-matrix family

5.1 A specialization of the paths in a binary tree

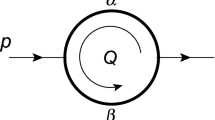

The above bases can now be specialized to bases \({\tilde{\mathrm{H}}}(A;Q,T)\), depending on two infinite matrices of parameters. Label the cells of the ribbon diagram of a composition I of n with their matrix coordinates as follows:

We associate a variable \(z_{ij}\) with each cell except (1,1) by setting \(z_{ij}:=q_{i,j-1}\) if (i,j) has a cell on its left, and \(z_{ij}:=t_{i-1,j}\) if (i,j) has a cell on its top. The alphabet \(Z(I)=(z_j(I))\) is the sequence of the \(z_{ij}\) in their natural order. For example,

Next, if J is a composition of the same integer n, form the monomial

For example, with I=(4,1,2,1) and J=(2,1,1,2,2), we have \(\mathrm{Des\,}(J)=\{2,3,4,6\}\) and \(\tilde{\mathbf{k}}_{IJ}=q_{12}q_{13}t_{14}q_{34}\).
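The monomial itself is not displayed above. The worked example is consistent with \(\tilde{\mathbf{k}}_{IJ}=\prod_{d\in\mathrm{Des\,}(J)}z_d(I)\), which we assume in the following sketch. Here we take the cell rows of the ribbon to go downward, each row starting directly below the last cell of the previous one. Parameters are represented as triples (letter, i, j); all helper names are ours.

```python
def ribbon_cells(I):
    """Cells (row, column) of the ribbon of I in reading order; each new row
    starts directly below the last cell of the previous one."""
    cells, row, col = [], 1, 1
    for k, part in enumerate(I):
        if k > 0:
            row += 1
        cells.extend((row, col + c) for c in range(part))
        col += part - 1
    return cells

def z_alphabet(I):
    """Z(I): q_{i,j-1} for a cell with a left neighbour, t_{i-1,j} for a cell
    with a top neighbour, as triples (letter, i, j)."""
    cells = ribbon_cells(I)
    return [("q", i, j - 1) if pi == i else ("t", i - 1, j)
            for (i, j), (pi, _pj) in zip(cells[1:], cells)]

def descent_set(J):
    des, s = set(), 0
    for part in J[:-1]:
        s += part
        des.add(s)
    return des

def kostka_entry(I, J):
    """Factors of k~_{IJ}, assumed to be z_d(I) for d in Des(J)."""
    z = z_alphabet(I)
    return [z[d - 1] for d in sorted(descent_set(J))]

print(kostka_entry((4, 1, 2, 1), (2, 1, 1, 2, 2)))
# [('q', 1, 2), ('q', 1, 3), ('t', 1, 4), ('q', 3, 4)]
```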

Definition 5.1

Let \(Q=(q_{ij})\) and \(T=(t_{ij})\) (\(i,j\ge 1\)) be two infinite matrices of commuting indeterminates. For a composition I of n, the noncommutative (Q,T)-Macdonald polynomial \({\tilde{\mathrm{H}}}_{I}(A;Q,T)\) is

Note that \({\tilde{\mathrm{H}}}_{I}\) depends only on the \(q_{ij}\) and \(t_{ij}\) with \(i+j\le n\).

Note 5.2

Since the kth element of \(Z_I\) depends only on the prefix of size k of the boolean vector associated with I, the \({\tilde{\mathrm{H}}}_{I}\) are specializations of the \(P_I\) defined in Eq. (32). More precisely, if u=w0 is a binary word ending in 0, the specialization is \(y_{u}= q_{|w|_{1}+1,|w|_{0}+1}\), and if u=w1, it is \(y_{u}=t_{|w|_{1}+1,|w|_{0}+1}\). Note also that, for any binary word w, \(y_{w0}\) and \(y_{w1}\) are different (one is a q, the other a t), so that the determinant of this specialized Kostka matrix is generically nonzero. Finally, since these \({\tilde{\mathrm{H}}}_{I}\) are specializations of the \(P_I\), the product formula of Proposition 4.2 gives a simple generic product formula for the \({\tilde{\mathrm{H}}}_{I}\).
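The binary-word rule of this note can be applied directly to the descent word of I, without drawing the ribbon. The sketch below (helper names ours) does so. On I=(4,1,2,1) it reproduces the alphabet \((q_{11},q_{12},q_{13},t_{14},t_{24},q_{34},t_{35})\) given by the cell-labelling rule of Section 5.1.

```python
def descent_word(I):
    """Boolean word u_1...u_{n-1} of I (u_i = 1 iff i is a descent)."""
    n, s, des = sum(I), 0, set()
    for part in I[:-1]:
        s += part
        des.add(s)
    return "".join("1" if i in des else "0" for i in range(1, n))

def z_from_word(I):
    """For the k-th prefix u = w b of the descent word, the k-th parameter is
    q_{|w|_1+1, |w|_0+1} if b = 0 and t_{|w|_1+1, |w|_0+1} if b = 1."""
    z, ones, zeros = [], 0, 0
    for b in descent_word(I):
        z.append(("t" if b == "1" else "q", ones + 1, zeros + 1))
        if b == "1":
            ones += 1
        else:
            zeros += 1
    return z

print(z_from_word((4, 1, 2, 1)))
# [('q', 1, 1), ('q', 1, 2), ('q', 1, 3), ('t', 1, 4), ('t', 2, 4), ('q', 3, 4), ('t', 3, 5)]
```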

For example, translating Eqs. (44) and (45), one gets

5.2 (Q,T)-Kostka matrices

Here are the (Q,T)-Kostka matrices for n=3,4 (compositions are as usual in reverse lexicographic order):

The factorization property of the determinant of the (Q,T)-Kostka matrix, which is valid for the usual Macdonald polynomials as well as for the noncommutative analogues of [8] and [1], still holds, since the \({\tilde{\mathrm{H}}}_{I}\) are specializations of the \(P_I\). More precisely,

Theorem 5.3

Let n be an integer. Then

where \(e(i,j)=\binom{i+j-2}{i-1}\, 2^{n-i-j}\).

Proof

The matrix \(\mathcal{K}_{n}(Q,T)\) is of the form

where A and B are obtained from \(\mathcal{K}_{n-1}\) by the replacements \(q_{ij}\mapsto q_{i,j+1}\), \(t_{ij}\mapsto t_{i,j+1}\), and \(q_{ij}\mapsto q_{i+1,j}\), \(t_{ij}\mapsto t_{i+1,j}\), respectively. So the result follows from Lemma 3.2. □
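The displayed formula of Theorem 5.3 is not reproduced above. We read it as \(\det\mathcal{K}_n(Q,T)=\prod_{i+j\le n}(t_{ij}-q_{ij})^{e(i,j)}\); for n=2 the matrix is \(\begin{pmatrix}1&q_{11}\\1&t_{11}\end{pmatrix}\), with determinant \(t_{11}-q_{11}\). The following sketch checks this reading numerically for small n. It builds the Kostka matrix from the binary-word rule of Note 5.2, under the assumption \(\tilde{\mathbf{k}}_{IJ}=\prod_{d\in\mathrm{Des\,}(J)}z_d(I)\). It then substitutes arbitrary rational values for the \(q_{ij}\) and \(t_{ij}\) (our choice, not the paper's) and compares exact determinants.

```python
from fractions import Fraction
from itertools import product
from math import comb

def det(mat):
    """Exact determinant by Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in mat]
    n, result = len(m), Fraction(1)
    for c in range(n):
        pivot = next((r for r in range(c, n) if m[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            m[c], m[pivot] = m[pivot], m[c]
            result = -result
        result *= m[c][c]
        for r in range(c + 1, n):
            factor = m[r][c] / m[c][c]
            for k in range(c, n):
                m[r][k] -= factor * m[c][k]
    return result

def z_from_bits(u, q, t):
    """Z(I) via Note 5.2, for the descent vector u of I (tuple of 0/1)."""
    z, ones, zeros = [], 0, 0
    for b in u:
        z.append((t if b else q)(ones + 1, zeros + 1))
        ones, zeros = ones + b, zeros + 1 - b
    return z

def kostka_qt(n, q, t):
    """(Q,T)-Kostka matrix: rows I, columns J, entry prod_{d in Des(J)} z_d(I)."""
    words = list(product((0, 1), repeat=n - 1))
    matrix = []
    for u in words:
        z = z_from_bits(u, q, t)
        row = []
        for v in words:
            entry = Fraction(1)
            for d, bit in enumerate(v):
                if bit:
                    entry *= z[d]
            row.append(entry)
        matrix.append(row)
    return matrix

def predicted_det(n, q, t):
    """prod over i + j <= n of (t_ij - q_ij)^e(i,j), e(i,j) = C(i+j-2, i-1) 2^(n-i-j)."""
    result = Fraction(1)
    for i in range(1, n):
        for j in range(1, n + 1 - i):
            result *= (t(i, j) - q(i, j)) ** (comb(i + j - 2, i - 1) * 2 ** (n - i - j))
    return result

def q(i, j):  # hypothetical sample values, chosen so that t_ij != q_ij
    return Fraction(i + 2 * j)

def t(i, j):
    return Fraction(3 * i + 5 * j)

for n in range(2, 6):
    print(n, det(kostka_qt(n, q, t)) == predicted_det(n, q, t))  # True for each n
```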

For example, with n=4,

5.3 Specializations

For appropriate specializations, we recover (up to indexation) the Bergeron–Zabrocki polynomials \({\tilde{\mathrm{H}}}_{I}^{\mathrm{BZ}}\) of [1] and the multiparameter Macdonald functions \({\tilde{\mathrm{H}}}_{I}^{\mathrm{HLT}}\) of [8]:

Proposition 5.4

Let \((q_i)\), \((t_i)\), \(i\ge 1\), be two sequences of indeterminates. For a composition I of n,

- (i) Let ν be the anti-involution of Sym defined by \(\nu(S_n)=S_n\). Under the specialization \(q_{ij}=q_{i+j-1}\), \(t_{ij}=t_{n+1-i-j}\), \({\tilde{\mathrm{H}}}_{I}(Q,T)\) becomes a multiparameter version of \(\mathrm{iv}({\tilde{\mathrm{H}}}_{I}^{\mathrm{BZ}})\), to which it reduces under the further specialization \(q_i=q^{i}\) and \(t_i=t^{i}\).

- (ii) Under the specialization \(q_{ij}=q_j\), \(t_{ij}=t_i\), \({\tilde{\mathrm{H}}}_{I}(Q,T)\) reduces to \({\tilde{\mathrm{H}}}_{I}^{\mathrm{HLT}}\).

Proof

Equation (52) gives directly [1, Eq. (36)] under the specialization (i) and [8, Eqs. (2), (6)] under the specialization (ii). □
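At the level of the alphabets Z(I), both specializations are plain index substitutions. The sketch below (helper names ours) applies them to the generic alphabet obtained from Note 5.2. It shows that specialization (i) gives \(z_k=q_k\) when k is not a descent of I and \(z_k=t_{n-k}\) when it is. The reason is that the (k+1)th cell of the ribbon lies on the antidiagonal i+j=k+2. The sketch only treats the alphabets, not the polynomials themselves.

```python
def descent_word(I):
    """Boolean word u_1...u_{n-1} of I (u_i = 1 iff i is a descent)."""
    n, s, des = sum(I), 0, set()
    for part in I[:-1]:
        s += part
        des.add(s)
    return "".join("1" if i in des else "0" for i in range(1, n))

def z_generic(I):
    """Z(I) as triples (letter, i, j), from the binary-word rule of Note 5.2."""
    z, ones, zeros = [], 0, 0
    for b in descent_word(I):
        z.append(("t" if b == "1" else "q", ones + 1, zeros + 1))
        ones, zeros = (ones + 1, zeros) if b == "1" else (ones, zeros + 1)
    return z

def specialize_bz(z, n):
    """Specialization (i): q_ij -> q_{i+j-1}, t_ij -> t_{n+1-i-j}."""
    return [(c, i + j - 1) if c == "q" else (c, n + 1 - i - j) for c, i, j in z]

def specialize_hlt(z):
    """Specialization (ii): q_ij -> q_j, t_ij -> t_i."""
    return [(c, j) if c == "q" else (c, i) for c, i, j in z]

I = (4, 1, 2, 1)
print(specialize_bz(z_generic(I), sum(I)))
# [('q', 1), ('q', 2), ('q', 3), ('t', 4), ('t', 3), ('q', 6), ('t', 1)]
print(specialize_hlt(z_generic(I)))
# [('q', 1), ('q', 2), ('q', 3), ('t', 1), ('t', 2), ('q', 4), ('t', 3)]
```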

5.4 The quasi-symmetric side

Families of (Q,T)-quasi-symmetric functions can now be defined by duality, by specializing the \((Q_I)\) defined in the general case. The dual basis of \(({\tilde{\mathrm{H}}}_{J})\) in QSym will be denoted by \((\tilde{\mathrm{G}}_{I})\). We have

where the coefficients are given by the transposed inverse of the Kostka matrix: \((\tilde{\mathbf{g}}_{IJ})={}^{t}(\tilde{\mathbf{k}}_{IJ})^{-1}\).

Let \(Z'(I)(Q,T)=Z(I)(T,Q)=Z(\bar{I}^{\sim})(Q,T)\). Then, thanks to Proposition 4.3 and to the fact that changing the last bit of a binary word amounts to changing a q into a t, we have

Proposition 5.5

The inverse of the (Q,T)-Kostka matrix is given by

Note that, as in the more general case of parameters indexed by binary words (see Proposition 4.4), if one specializes all t (resp., all q) to 1, one then gets lower (resp., upper) triangular matrices with explicit coefficients, hence generalizing the observation of [8].

6 Multivariate BZ polynomials

In this section, we restrict our attention to the multiparameter version of the Bergeron–Zabrocki polynomials, obtained by setting \(q_{ij}=q_{i+j-1}\) and \(t_{ij}=t_{n+1-i-j}\) in degree n.

6.1 Multivariate BZ polynomials

As in the case of the two matrices of parameters Q and T, one can deduce the product in the \({\tilde{\mathrm{H}}}\) basis by specializing the general case. However, since the specialization of \(t_{ij}\) depends on the degree n, one has to be a little more careful to get the correct answer.

Theorem 6.1

Let I and J be two compositions of respective sizes p and r. Let \(K=I\cdot\bar{J}^{\sim}\) and \(n=|K|=p+r\). Then

where the sum is computed as follows. Let I′ and J′ be the compositions such that \(|I'|=|I|\) and either \(K'=I'\cdot J'\) or \(K'=I'\triangleright J'\). If I′ is not coarser than I or if J′ is not finer than J, then \({\tilde{\mathrm{H}}}_{K'}\) has coefficient 0. Otherwise, \(z_k(K')=q_k\) if k is a descent of K′ and \(t_{n-k}\) otherwise. Finally, \(z'_{k}(K')\) does not depend on K′ and is (Z(I),1,Z(J)).

For example, with I=J=(2), we have K=(211). Note that \(Z^{\mathrm{BZ}}(K)=[q_1,t_2,t_1]\) and \(Z^{\mathrm{BZ}}(\bar{K}^{\sim})=[t_{3},q_{2},q_{3}]\). The compositions K′ having a nonzero coefficient are (4), (31), (22), and (211). Here are the (modified) Z and Z′ restricted to the descents of K for these four compositions.
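Both alphabets of this example can be recomputed from the rule \(z_k=q_k\) if k is not a descent and \(z_k=t_{n-k}\) if it is. This rule is what the specialization \(q_{ij}=q_{i+j-1}\), \(t_{ij}=t_{n+1-i-j}\) gives on ribbon cells. The sketch takes \(\bar{K}^{\sim}\) to be the composition whose descent set is the complement of \(\mathrm{Des\,}(K)\) in [n−1], which matches the example; the helper names are ours.

```python
def descent_set(I):
    des, s = set(), 0
    for part in I[:-1]:
        s += part
        des.add(s)
    return des

def from_descent_set(D, n):
    cuts = [0] + sorted(D) + [n]
    return tuple(b - a for a, b in zip(cuts, cuts[1:]))

def z_bz(I):
    """Z^BZ(I): z_k = q_k if k is not a descent of I, t_{n-k} if it is."""
    n, D = sum(I), descent_set(I)
    return [f"t{n - k}" if k in D else f"q{k}" for k in range(1, n)]

def bar_conjugate(I):
    """bar(I)~, taken as the composition with descent set [n-1] minus Des(I)."""
    n, D = sum(I), descent_set(I)
    return from_descent_set(set(range(1, n)) - D, n)

K = (2, 1, 1)
print(z_bz(K))                  # ['q1', 't2', 't1']
print(bar_conjugate(K))         # (1, 3)
print(z_bz(bar_conjugate(K)))   # ['t3', 'q2', 'q3']
```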

6.2 The ∇ operator

The ∇ operator of [1] can be extended by

Then,

Proposition 6.2

The action of ∇ on the ribbon basis is given by

Proof

This is a direct adaptation of the proof in [1]: Lemma 21, Corollary 22, and Lemma 23 of [1] remain valid if one interprets \(q^{i}\) and \(t^{j}\) as \(q_i\) and \(t_j\). In particular, Eq. (66) reduces to [1, (54)] under the specialization \(q_i=q^{i}\), \(t_i=t^{i}\). □

Note also that if \(I=(1^{n})\), one has

As a positive sum of ribbons, this is the multigraded characteristic of a projective module of the 0-Hecke algebra. Its dimension is the number of packed words of length n (called preference functions in [1]). Recall that a packed word is a word w over \(\{1,2,\ldots\}\) such that if \(i>1\) appears in w, then \(i-1\) also appears in w. The set of all packed words of size n is denoted by \(\mathrm{PW}_n\).
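For small n, packed words can be enumerated by brute force. A word is packed exactly when its set of letters is \(\{1,\ldots,\max(w)\}\). Packed words of length n are in bijection with ordered set partitions of [n], so their numbers are the ordered Bell (Fubini) numbers 1, 3, 13, 75, 541, …. The sketch below uses hypothetical helper names.

```python
from itertools import product

def is_packed(w):
    """w is packed iff its letters form an initial interval {1, ..., max(w)}."""
    return set(w) == set(range(1, max(w) + 1))

def packed_words(n):
    """PW_n by brute force over {1, ..., n}^n (only sensible for small n)."""
    return [w for w in product(range(1, n + 1), repeat=n) if is_packed(w)]

print([len(packed_words(n)) for n in range(1, 6)])  # [1, 3, 13, 75, 541]
```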

Then the multigraded dimension of the previous module is

where the statistic ϕ(w) is obtained as follows.

Let \(\sigma_{w}=\overline{\mathrm{std}(\overline{w})}\), where \(\overline{w}\) denotes the mirror image of w. Then

where \(x_i=q_i\) if \(w^{\uparrow}_{i}=w^{\uparrow}_{i+1}\) and \(x_i=t_{n-i}\) otherwise, \(w^{\uparrow}\) being the nondecreasing reordering of w.

For example, with w=22135411, we have \(\sigma_w=54368721\) and \(w^{\uparrow}=11122345\); the recoils of \(\sigma_w\) are 1, 2, 3, 4, 7, and \(\phi(w)=q_1q_2t_5q_4t_1\).
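The display defining ϕ(w) is not reproduced above. The worked example is consistent with reading it as the product of the \(x_i\) over the recoils i of \(\sigma_w\), where i is a recoil when i+1 appears to the left of i. Under that assumption, the following sketch recomputes the example. The helper names std, sigma_w, recoils, and phi are ours.

```python
def std(w):
    """Standardization: letters replaced by 1..n, equal letters numbered left to right."""
    order = sorted(range(len(w)), key=lambda p: (w[p], p))
    result = [0] * len(w)
    for rank, p in enumerate(order, start=1):
        result[p] = rank
    return result

def sigma_w(w):
    """sigma_w = mirror of std(mirror of w)."""
    return std(w[::-1])[::-1]

def recoils(sigma):
    """i is a recoil of sigma when i+1 appears to the left of i."""
    pos = {v: p for p, v in enumerate(sigma)}
    return [i for i in range(1, len(sigma)) if pos[i + 1] < pos[i]]

def phi(w):
    """Factors x_i over the recoils i of sigma_w: q_i if the sorted word has
    equal letters in positions i, i+1, and t_{n-i} otherwise."""
    n, up = len(w), sorted(w)
    return [f"q{i}" if up[i - 1] == up[i] else f"t{n - i}" for i in recoils(sigma_w(w))]

w = [2, 2, 1, 3, 5, 4, 1, 1]
print(sigma_w(w), recoils(sigma_w(w)), phi(w))
# [5, 4, 3, 6, 8, 7, 2, 1] [1, 2, 3, 4, 7] ['q1', 'q2', 't5', 'q4', 't1']
```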

Actually, we have the following slightly stronger result.

Proposition 6.3

Denote by \(d_I\) the number of permutations σ with descent composition C(σ)=I. Then,

Proof

If σ is any permutation such that \(C(\sigma^{-1})=I\), the coefficient of \(R_I\) in the right-hand side of (71) can be rewritten as

The words \(w\in\mathrm{PW}_n\) such that \(\sigma_w=\sigma\) are obtained by the following construction. For \(i=\sigma^{-1}(1)\), we have \(w_i=1\). Next, if k+1 is to the right of k in σ, we must have \(w_{\sigma^{-1}(k+1)}=w_{\sigma^{-1}(k)}+1\). Otherwise, we have two choices: \(w_{\sigma^{-1}(k+1)}=w_{\sigma^{-1}(k)}\) or \(w_{\sigma^{-1}(k+1)}=w_{\sigma^{-1}(k)}+1\). These choices are independent, and each contributes a factor \((q_k+t_{n-k})\) to the sum (72). □
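The counting behind this proof can be checked by brute force. For small n, the following sketch uses the same conventions as above (helper names ours). It groups the packed words by \(\sigma_w\) and confirms that each fiber has \(2^{r}\) elements, r being the number of recoils of σ. This is what the independent binary choices predict.

```python
from collections import Counter
from itertools import permutations, product

def std(w):
    order = sorted(range(len(w)), key=lambda p: (w[p], p))
    result = [0] * len(w)
    for rank, p in enumerate(order, start=1):
        result[p] = rank
    return result

def sigma_w(w):
    return tuple(std(w[::-1])[::-1])

def recoils(sigma):
    pos = {v: p for p, v in enumerate(sigma)}
    return [i for i in range(1, len(sigma)) if pos[i + 1] < pos[i]]

def fiber_sizes(n):
    """Number of packed words w of length n with sigma_w = sigma, for each sigma."""
    counts = Counter()
    for w in product(range(1, n + 1), repeat=n):
        if set(w) == set(range(1, max(w) + 1)):  # w is packed
            counts[sigma_w(w)] += 1
    return counts

for n in range(1, 6):
    counts = fiber_sizes(n)
    assert all(counts[s] == 2 ** len(recoils(s)) for s in permutations(range(1, n + 1)))
print("each fiber has 2^(number of recoils) elements")
```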

This can again be generalized:

Theorem 6.4

For any composition I of n,

where σ is any permutation such that \(C(\sigma^{-1})=\bar{I}^{\sim}\), and

Proof

First, if ev(w)≤I, then \(C(\sigma_{w}^{-1})\ge \bar{I}^{\sim}\). For any \(J\ge \bar{I}^{\sim}\), the coefficient of \(R_J\) in (73) is, for any permutation τ such that \(C(\tau^{-1})=J\),

For a packed word w such that σ w =τ, we have

In the second product, one always has \(x_j=q_j\), since \(w^{\uparrow}_{j}=w^{\uparrow}_{j+1}\). In the first one, there are, as before, two possible independent choices for each j. □

The behavior of the multiparameter BZ polynomials with respect to the scalar product

is the natural generalization of [1, Proposition 1.7]:

7 Quasideterminantal bases

Another way to introduce two matrices of parameters is via the quasi-determinantal expressions of some bases. This method leads to a different kind of deformation, yielding for example noncommutative analogues of the Macdonald P-polynomials.

7.1 Quasideterminants of almost triangular matrices

Quasideterminants [4] are noncommutative analogues of the ratio of a determinant to one of its maximal minors. Thus, the quasideterminants of a generic matrix are not polynomials, but complicated rational expressions living in the free field generated by the coefficients. However, for almost triangular matrices, i.e., such that \(a_{ij}=0\) for \(i>j+1\), all quasideterminants are polynomials, with a simple explicit expression. We shall only need the formula (see [3, Proposition 2.6]):

Recall that the quasideterminant \(|A|_{pq}\) is invariant under scaling of the rows of index different from p and of the columns of index different from q. It is homogeneous of degree 1 with respect to row p and column q. Also, the quasideterminant is invariant under permutations of rows and columns.
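The formula itself is not reproduced above. For orientation, the sketch below assumes that it expresses \(|A|_{1n}\) as the sum, over \(1\le j_1<\cdots<j_k<n\), of \((-1)^{k}a_{1j_1}a_{j_1+1,j_1}^{-1}a_{j_1+1,j_2}\cdots a_{j_k+1,j_k}^{-1}a_{j_k+1,n}\). It checks only the commutative image of this expansion against \(|A|_{1n}=(-1)^{1+n}\det A/\det A^{1n}\). Here the minor \(A^{1n}\) is upper triangular with diagonal \(a_{21},\ldots,a_{n,n-1}\). The helper names are ours, and nothing noncommutative is tested.

```python
from fractions import Fraction
from itertools import combinations

def det(mat):
    """Exact determinant by Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in mat]
    n, result = len(m), Fraction(1)
    for c in range(n):
        pivot = next((r for r in range(c, n) if m[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            m[c], m[pivot] = m[pivot], m[c]
            result = -result
        result *= m[c][c]
        for r in range(c + 1, n):
            factor = m[r][c] / m[c][c]
            for k in range(c, n):
                m[r][k] -= factor * m[c][k]
    return result

def quasidet_1n(a):
    """Assumed expansion of |A|_{1n} for almost triangular A, with commuting entries."""
    n = len(a)
    total = Fraction(0)
    for k in range(n):
        for js in combinations(range(1, n), k):          # 1 <= j_1 < ... < j_k < n
            term, row = Fraction((-1) ** k), 1           # row is 1-based
            for j in js:
                term *= Fraction(a[row - 1][j - 1]) / a[j][j - 1]  # a_{row,j} / a_{j+1,j}
                row = j + 1
            total += term * a[row - 1][n - 1]            # closing factor a_{row,n}
    return total

A = [[2, 3, 5, 7],
     [1, 4, 6, 8],
     [0, 2, 9, 1],
     [0, 0, 3, 5]]
n = len(A)
subdiagonal = A[1][0] * A[2][1] * A[3][2]   # det of the upper triangular minor A^{1n}
print(quasidet_1n(A) == (-1) ** (1 + n) * det(A) / subdiagonal)  # True
```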

The quasideterminant (79) coincides with the row-ordered expansion of an ordinary determinant

which will be denoted as an ordinary determinant in the sequel.

7.2 Quasideterminantal bases of Sym

Many interesting families of noncommutative symmetric functions can be expressed as quasi-determinants of the form

(or of the transposed form), where \((G_k)\) is some sequence of free generators of Sym, and W an almost-triangular (\(w_{ij}=0\) for \(i>j+1\)) scalar matrix. For example (see [3, (37)–(41)]),

or (see [10, Eq. (78)])

where \(\Theta_n(q)=(1-q)^{-1}S_n((1-q)A)\). These examples illustrate relations between sequences of free generators. Quasi-determinantal expressions for some linear bases can be recast in this form as well. For example, the formula for ribbons

can be rewritten as follows. Let U and V be the n×n almost-triangular matrices

Given the pair (U,V), define, for each composition I of n, a matrix W(I) by

and set

Then,

Indeed, \(H_I(U,V;A)\) is obtained by substituting in (79)

This yields

For example,

For a generic pair of almost-triangular matrices (U,V), the \(H_I\) form a basis of \(\mathbf{Sym}_{n}\). Without loss of generality, we may assume that \(u_{1j}=v_{1j}=1\) for all j. Then, the transition matrix M expressing the \(H_I\) in terms of the \(S^{J}\), where \(J=(j_1,\ldots,j_p)\), satisfies

where \(x_{ij}=u_{ij}\) if i−1 is not a descent of I and \(x_{ij}=v_{ij}\) otherwise.

As we shall sometimes need different normalizations, we also define for arbitrary almost triangular matrices U,V

and

7.3 Expansion on the basis \((S^{I})\)

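For a composition \(I=(i_1,\ldots,i_r)\) of n, let \(I^{\sharp}\) be the integer vector of length n obtained by replacing each entry k of I by the sequence (k,0,…,0) (k−1 zeros):

\[ I^{\sharp}=(i_1,0^{i_1-1},i_2,0^{i_2-1},\ldots,i_r,0^{i_r-1}), \]

e.g., for compositions of 3,

\[ (3)^{\sharp}=(3,0,0),\quad (21)^{\sharp}=(2,0,1),\quad (12)^{\sharp}=(1,2,0),\quad (111)^{\sharp}=(1,1,1). \]

Adding (componentwise) the sequence (0,1,2,…,n−1) to \(I^{\sharp}\), we obtain a permutation \(\sigma_I\). For example,

\[ \sigma_{3}=(3,1,2),\quad \sigma_{21}=(2,1,3),\quad \sigma_{12}=(1,3,2),\quad \sigma_{111}=(1,2,3). \]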

Proposition 7.1

The expansion of H′(W,S) on the S-basis is given by

Thus, for n=3,

7.4 Expansion on the basis \((R_I)\)

Proposition 7.2

For a composition \(I=(i_1,\ldots,i_r)\) of n, denote by \(W_I\) the product of the diagonal minors of the matrix W taken over the first \(i_1\) rows and columns, then the next \(i_2\) ones, and so on. Then,
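The proposition's display is not reproduced here, but the quantity \(W_I\) it uses is straightforward to compute. The following sketch (helper names ours) takes the product of the determinants of the consecutive diagonal blocks of sizes \(i_1,i_2,\ldots\), evaluated on a small sample matrix.

```python
from fractions import Fraction

def det(mat):
    """Exact determinant by Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in mat]
    n, result = len(m), Fraction(1)
    for c in range(n):
        pivot = next((r for r in range(c, n) if m[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            m[c], m[pivot] = m[pivot], m[c]
            result = -result
        result *= m[c][c]
        for r in range(c + 1, n):
            factor = m[r][c] / m[c][c]
            for k in range(c, n):
                m[r][k] -= factor * m[c][k]
    return result

def block_minor_product(W, I):
    """W_I: product of the diagonal minors of W on consecutive index blocks
    of sizes i_1, i_2, ..., i_r."""
    result, start = Fraction(1), 0
    for k in I:
        block = [row[start:start + k] for row in W[start:start + k]]
        result *= det(block)
        start += k
    return result

W = [[1, 2, 0],
     [3, 4, 5],
     [0, 6, 7]]
for I in [(3,), (2, 1), (1, 2), (1, 1, 1)]:
    print(I, block_minor_product(W, I))
# (3,) -44
# (2, 1) -14
# (1, 2) -2
# (1, 1, 1) 28
```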

7.5 Examples

We shall now describe a class of pairs (U,V) depending on a sequence \((q_i)\) and extra parameters x, y, a, b, for which the coefficients on the ribbon basis are products of binomials. For special values of the parameters, the resulting bases can be regarded as analogues of Schur functions of (1−t)X/(1−q) or of Macdonald P-polynomials, which are indeed obtained for hook compositions.

7.5.1 A family with factoring coefficients

Theorem 7.3

Let U and V be defined by

Then the coefficients \(W_J\) of the expansion of \(H'_{I}(U,V)\) on the ribbon basis all factor as products of binomials.

Proof

Observe first that the substitutions \(b=a^{-1}\) and \(u_{n+1-i}=q_{i-1}^{-1}\) change the determinant of V into \(c_n\det(U)\) with \(c_n=a^{n-1}q_1\cdots q_{n-1}\). Expanding det(U) along its first column yields a two-term recurrence, which easily implies the factorized expression

so that as well

Now, all the matrices W(I) built from U and V, and all their diagonal minors have the same structure, and their determinants factor similarly. □

The formula for the coefficient of \(R_n\) is simple enough: if one orders the factors of det(U) and det(V) as

and

then the coefficient of \(R_n\) in \(H'_{I}(U,V)\) is

For example,

A more careful analysis allows one to compute directly the coefficient \(c_{IJ}\) of \(R_J\) in \(H'_{I}\): denote by u the boolean vector of I and by v the boolean vector of J, and consider the biword \(w=\binom{u}{v}\). Start with \(c_{IJ}=c:=1\). First, there are factors coming from the boundaries of the biword:

- If \(w_{1}=\binom{0}{0}\) then \(c:=(x-q_{1}y)c\).
- If \(w_{1}=\binom{1}{0}\) then \(c:=(x-u_{n_1}y) c\).
- If \(w_{n-1}=\binom{0}{1}\) then \(c:=(x-q_{n-1}y)c\).
- If \(w_{n-1}=\binom{1}{1}\) then \(c:=(x-u_{1}y)c\).

Then, for any \(i\in[1,n-2]\), the pair of biletters \(w_{i} w_{i+1}\) contributes as follows:

- if \(w_{i} w_{i+1}=\binom{.\ 0}{0\ 0}\) then \(c:=(x-q_{i+1}y)c\),
- if \(w_{i} w_{i+1}=\binom{.\ 1}{0\ 0}\) then \(c:=(xu_{n-i-1}-y)c\),
- if \(w_{i} w_{i+1}=\binom{0\ .}{1\ 1}\) then \(c:=(xq_{i}-y)c\),
- if \(w_{i} w_{i+1}=\binom{1\ .}{1\ 1}\) then \(c:=(x-u_{n-i}y)c\),
- if \(w_{i} w_{i+1}=\binom{0\ 0}{1\ 0}\) then \(c:=(xq_{i}-q_{i+1}y)(x-y)c\),
- if \(w_{i} w_{i+1}=\binom{0\ 1}{1\ 0}\) then \(c:=(xq_{i}u_{n-i-1}-y)(x-y)c\),
- if \(w_{i} w_{i+1}=\binom{1\ 0}{1\ 0}\) then \(c:=(x-q_{i+1}u_{n-i}y)(x-y)c\),
- if \(w_{i} w_{i+1}=\binom{1\ 1}{1\ 0}\) then \(c:=(xu_{n-i-1}-u_{n-i}y)(x-y)c\),

where the dot indicates any possible value. Note that if \(v_i=0\) and \(v_{i+1}=1\), no factor is added to \(c_{IJ}\).

7.5.2 An analogue of the (1−t)/(1−q) transform

Recall that for commutative symmetric functions, the (1−t)/(1−q) transform is defined in terms of the power sums by

\[ p_n\!\left[X\,\frac{1-t}{1-q}\right]=\frac{1-t^{n}}{1-q^{n}}\,p_n[X]. \]

There exist several noncommutative analogues of this transformation. One can define it on a sequence of generators and require that it be an algebra morphism. This is the case of the versions chaining internal products on the right by \(\sigma_1((1-t)A)\) and \(\sigma_1(A/(1-q))\) (the order matters). Taking internal products on the left instead, one obtains linear maps which are not algebra morphisms but still lift the commutative transform.
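On the commutative side, the transform acts diagonally on power sums. It sends \(p_n\) to \(\frac{1-t^{n}}{1-q^{n}}p_n\), hence \(p_\lambda\) to \(\prod_i\frac{1-t^{\lambda_i}}{1-q^{\lambda_i}}\,p_\lambda\). The sketch below evaluates this scalar at sample rational values of q and t; the function name is ours. It models only the commutative transform, not any of the noncommutative analogues just discussed.

```python
from fractions import Fraction

def transform_factor(partition, q, t):
    """Scalar multiplying p_lambda under the commutative (1-t)/(1-q) transform:
    prod_i (1 - t^{lambda_i}) / (1 - q^{lambda_i})."""
    result = Fraction(1)
    for part in partition:
        result *= (1 - t ** part) / (1 - q ** part)
    return result

print(transform_factor((2, 1), Fraction(1, 2), Fraction(1, 3)))  # 128/81
```

For t=q the factor is 1 for every partition, as expected, since the transform is then the identity.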

With the specialization x=1, y=t, \(q_i=q^{i}\), \(u_i=1\), a=b=1, one obtains a basis such that, for a hook composition \(I=(n-k,1^{k})\), the commutative image of \(H'_{I}(U,V)\) becomes the (1−t)/(1−q) transform of the Schur function \(s_{n-k,1^{k}}\).

7.5.3 An analogue of the Macdonald P-basis

With the specialization x=1, y=t, \(q_i=q^{i}\), \(u_i=t^{i}\), a=b=1, one obtains an analogue of the Macdonald P-basis, in the sense that for hook compositions \(I=(n-k,1^{k})\), the commutative image of \(H'_{I}\) is proportional to the Macdonald polynomial \(P_{n-k,1^{k}}(q,t;X)\).

For example, the following determinant

is equal to

In general, the commutative image of \(H'_{n-k,1^{k}}(U,V)\) is

Notes

¹ This idea has been explored in unpublished work [9], in which rather convincing (q,t)-Kostka matrices have been constructed up to n=5.

References

[1] Bergeron, N., Zabrocki, M.: q and q, t-analogs of non-commutative symmetric functions. Discrete Math. 298(1–3), 79–103 (2005)

[2] Duchamp, G., Hivert, F., Thibon, J.-Y.: Noncommutative symmetric functions VI: free quasi-symmetric functions and related algebras. Int. J. Algebra Comput. 12, 671–717 (2002)

[3] Gelfand, I.M., Krob, D., Lascoux, A., Leclerc, B., Retakh, V.S., Thibon, J.-Y.: Noncommutative symmetric functions. Adv. Math. 112, 218–348 (1995)

[4] Gelfand, I., Retakh, V.: Determinants of matrices over noncommutative rings. Funct. Anal. Appl. 25, 91–102 (1991)

[5] Gessel, I.: Multipartite P-partitions and inner products of skew Schur functions. Contemp. Math. 34, 289–301 (1984)

[6] Haiman, M.: Macdonald polynomials and geometry. In: New Perspectives in Algebraic Combinatorics. MSRI Publications, vol. 38, pp. 207–254 (1999)

[7] Hivert, F.: Hecke algebras, difference operators, and quasi-symmetric functions. Adv. Math. 155, 181–238 (2000)

[8] Hivert, F., Lascoux, A., Thibon, J.-Y.: Noncommutative symmetric functions with two and more parameters. Preprint arXiv:math.CO/0106191

[9] Hivert, F., Lascoux, A., Thibon, J.-Y.: Macdonald polynomials with two vector parameters. Unpublished notes (2001)

[10] Krob, D., Leclerc, B., Thibon, J.-Y.: Noncommutative symmetric functions II: Transformations of alphabets. Int. J. Algebra Comput. 7, 181–264 (1997)

[11] Novelli, J.-C., Thibon, J.-Y., Williams, L.K.: Combinatorial Hopf algebras, noncommutative Hall–Littlewood functions, and permutation tableaux. Adv. Math. 224, 1311–1348 (2010)

[12] Tevlin, L.: Noncommutative symmetric Hall–Littlewood polynomials. In: Proc. FPSAC 2011, DMTCS Proc. AO, pp. 915–926 (2011)


Lascoux, A., Novelli, JC. & Thibon, JY. Noncommutative symmetric functions with matrix parameters. J Algebr Comb 37, 621–642 (2013). https://doi.org/10.1007/s10801-012-0378-9
