Abstract

Geodesign tools are increasingly used in collaborative planning. An important element in these tools is the communication of stakeholder values. As there are many ways to present these values, it is important to know how these tools should be designed to communicate them effectively. The objective of this study is to analyse how the design of a tool influences its effectiveness. To do this, stakeholder values were included in four different geodesign tools, using different ways of ranking and aggregation. The communicative performance of these tools was evaluated in an online survey to assess their ability to communicate information effectively. The survey assessed how complexity influences user performance. Performance was considered high if a user was able to complete an assignment correctly using the information presented. Knowledge of tool performance is important both for selecting the right tool for a given use and for tool design. The survey showed that tools should be as simple as possible. Adding ranking and aggregation steps makes the tools more difficult to understand and reduces performance. However, an increase in the amount of information to be processed by the user also has a negative effect on performance. Ranking and aggregation steps may be needed to limit this amount. This calls for careful tailoring of the tool to the task to be performed. For all tools, perhaps the most important characteristic found was that they allow for trial and error, as this increases the opportunity for experimentation and learning by doing.

1 Introduction

In spatial planning, maps can be used to combine stakeholder values with different types of spatial information. Maps can also serve different functions in the planning process, such as reaching agreements, exchanging information, and setting objectives. However, the role of map representations is not always well understood. The influence of maps depends both on the quality and presentation of the information and on the processing capabilities of the decision-maker (Duhr 2007). Maps integrated in geodesign tools are used to support stakeholders in collaborative planning. Geodesign tools combine geography with design by providing stakeholders with tools that support the evaluation of design alternatives against the impacts of those designs (Flaxman 2010). Little research has been undertaken on the communicative function of map graphics in planning (Duhr 2007). Researchers in the field still remark on a lack of extensive testing and quantitative evaluation of spatial planning and decision support tools (Vonk et al. 2005; Geertman and Stillwell 2004; Geertman and Toppen 2013). Only a few studies have explicitly tested tool effectiveness (e.g. Inman et al. 2011; Arciniegas et al. 2012). It is not self-evident that when information is put in a map, it is also understood by the viewer (Steinitz 2012). Multiple attributes are usually combined in a suitability map. However, a suitability map of a single objective shows the spatial differentiation of the performance of this objective but does not present the values of other objectives. Furthermore, a suitability map derived from combining multiple objectives shows only the total suitability and gives no detail about the aggregated objectives. Maps that present a combination of multiple attributes are often complex. Janssen and Uran (2003), for example, showed that participants overestimated their ability to use this type of map.

Geodesign tools are intended to increase the effectiveness of spatial planning. However, effectiveness is a broad concept that can include many aspects. Previous studies have discussed various aspects of effectiveness (Nyerges et al. 2006; Salter et al. 2008). Effectiveness has been associated with the usability of a system in the context of human-computer interaction (Sidlar and Rinner 2009; Meng and Malczewski 2009). Jonsson et al. (2011) characterize effectiveness as making sure that the right things are done and that they are done right. Budic (1994) distinguishes between operational effectiveness and decision-making effectiveness: the former concerns improvements in the quality and quantity of data, whereas the latter concerns the facilitation of planning-related decision making. Goodhue and Thompson (1995) describe effectiveness in terms of the extent to which instruments enable stakeholders to carry out the intended tasks and the fit of the instruments to the capabilities and demands of the stakeholders. Gudmundsson (2011) states that, besides measuring effectiveness to assess instrumental use, a tool can also have a more conceptual role in which use involves general enlightenment. Use of information can be described as receiving, reading or understanding information. Use can also be described as the amount of influence the information has on decision making in terms of contributions or actions.

This study focused on visualizing the spatial pattern of multiple stakeholder values simultaneously. Four types of geodesign tools for communicating these values were compared. The tools were tested in an online survey to assess their ability to communicate information effectively. The potential of interactive geodesign tools to contribute to decision processes is increasingly recognized (Steinitz 2012; Dias et al. 2013). Few studies, however, address the effectiveness of these tools. A unique element of our study is that it directly links effectiveness to task performance and therefore explicitly includes the interactive element of the tool in the evaluation.

The tools designed for this study vary in the way information on values is processed and presented. The tools differ in the use of an aggregation or ranking step (Fig. 1). Aggregation means that the values are weighted and summed into a total value. Aggregated values support stakeholders by combining multiple sources into a single attribute, so that stakeholders do not have to combine objectives themselves. Ranking means that the rank order of the values is used to select values. The result is information that shows only the best and worst objective values. The variations resulted in four geodesign tools: (1) objective value tool, (2) relative objective value tool, (3) total value tool and (4) stakeholder value tool.

The objective value tool just presents the objective value and does not require any aggregation or ranking. The relative objective value tool shows how each parcel performs compared to all other parcels and therefore requires ranking. The total value tool aggregates the objective values into an overall value. Finally, the stakeholder value tool uses both aggregation and ranking in order to visualize which exchange is best for each stakeholder.

A pre-test was conducted to finalize the graphic design of the tools. Next, the tools were evaluated in an online survey. Section 2 first reviews the current literature on the use of geovisualisation for stakeholder value mapping and then presents the results of the pre-test. The methodology of the survey is described in Sect. 3. Section 4 presents the four geodesign tools that were developed to present stakeholder information. Section 5 shows the results of the survey comparing the performance of the four tools. Finally, Sect. 6 provides conclusions on the usefulness of these tools to support spatial planning.

2 Visualisation of Stakeholder Values in Geodesign Tools

Geovisualisations can be used to present the spatial distribution of stakeholder values in decision support and planning tools. A stakeholder value is the score on the objectives that a stakeholder considers important; it can consist of multiple objectives of equal or unequal importance. Maps are useful for spatial communication (Arciniegas et al. 2011; Carton 2007) and can be even more effective when incorporated in a decision support tool. Multiple decision and planning support tools integrated in a geographical information system (GIS) can be found in the literature (e.g. Geertman and Stillwell 2009; Batty 2008). Maps are evolving into more exploratory and interactive tools that serve as an interface (Kraak 2004). Recent literature has also emphasized the need for a multi-objective view of cartographic design (Xiao and Armstrong 2012).

2.1 Mapping Stakeholder Values

The interests of each stakeholder can be presented in the form of maps, and the information in these maps has to be combined. Instead of offering all available information to the planners, spatial evaluation methods can help decision makers to structure and simplify the decision problem (Herwijnen 1999). Two ways to aggregate information can be distinguished: (1) approaches that start with individual problem solving followed by aggregation of the solution maps, and (2) approaches that start with the aggregation of stakeholder values, which are then processed in a multi-criteria analysis (Boroushaki and Malczewski 2010; Herwijnen 1999). Depending on the decision issue, values may also need to be ranked; ranking is often used in multi-criteria analysis (Belton and Stewart 2002).

The need for testing the effectiveness of decision support has been recognized for a long time (Densham 1991; Crossland et al. 1995). Only a few researchers have explicitly studied the effectiveness of visualizations of the spatial distribution of stakeholder values in spatial planning and decision support tools (e.g. Inman et al. 2011; Arciniegas et al. 2012). The review by Brömmelstroet (2012) showed that different types of evaluation criteria are applied and concluded that a systematic analysis of performance is missing. Inman et al. (2011) described the application of a quantitative approach to evaluate environmental decision support systems with small groups of stakeholders in two case studies. The objective of these case studies was to facilitate the participatory decision-making process in water management projects. Stakeholders’ perceptions of effectiveness were elicited and compared using statistical analysis. The results of the two case studies suggested that stakeholders’ backgrounds influence their perceptions of effectiveness.

Experiments by Arciniegas et al. (2012) with a set of collaborative spatial decision support tools showed that the cognitive effort related to the volume and format of information is a critical issue in spatial decision support. Effectiveness was evaluated on the dimensions of usefulness, clarity and impact. Ozimec et al. (2010) evaluated multiple types of symbols and tasks that differed in their level of complexity. The evaluation was based on decision accuracy, measured by performance; decision efficiency, measured by duration; and decision confidence and ease of task, derived from ratings. The results of this study show that the type of symbolization strongly influences decision performance. The findings indicated that graduated circles are appropriate symbolizations for thematic maps and that their successful use seems to be virtually independent of personal characteristics such as spatial ability and map experience.

There are also studies that explicitly test different uses of symbols in maps. Dong et al. (2012) measured deviation and response time to assess the quality of dynamic symbols. The results show that size is more efficient and more effective than colour for dynamic maps. Garlandini and Fabrikant (2009) and Fuchs et al. (2009) used eye tracking to study the effectiveness of maps. Garlandini and Fabrikant (2009) propose an empirical, perception-based evaluation approach for assessing the effectiveness and efficiency of longstanding cartographic design principles. The visual variable size was found to be the fastest and most accurate for detecting change when it was flashed on and off on the map. The form, style and use of cartographic visualizations in spatial planning differ between nations and even between regions (Duhr 2004), mainly because of the functions that plans have in the planning system. This means that the above findings cannot be applied directly to the communication of stakeholder values in a small study area. However, the studies do suggest that symbols are useful for map design. In this study, the evaluation of the tools is limited to testing communicative tool performance and task functionality. Criteria to measure the impact of the tools on the decision-making process, such as user confidence and satisfaction, were not studied. The evaluation of the cartographic design was not the main focus of this study and was limited to the pre-test described below.

2.2 Pre-test

An empirical pre-test was used to find perceived preferences for map presentations. A site was selected from a previously studied area (Janssen et al. 2014; Brouns et al. 2014). The key issue in the region is the trade-off between the prevention of soil subsidence and the conservation of agricultural production. A small study area was selected with three types of land use: intensive grassland, extensive grassland and nature. The map includes 13 numbered parcels. Three main objectives were identified: (1) maximize agricultural production; (2) minimize soil subsidence; and (3) maximize natural value. The objective values depend on both land use and water level. A high water level results in high objective values for soil subsidence and nature, but in low values for agriculture. A high value for soil subsidence means low subsidence rates, a high value for nature means high natural quality, and a low value for agriculture means low productivity. Different symbolizations, such as squares, patterns and bar charts, were used to develop the semiology of the maps in Figs. 2 and 3 (Bertin 1983; Slocum et al. 2009).

Figure 2 shows the current land uses with the stakeholder values for three objectives in each parcel. In Fig. 2a, boxes are used to show, for each of the objectives, whether its performance is good (green), intermediate (yellow) or bad (red). Nature has a low value for all parcels. For intensive and extensive grasslands, the value for agriculture is on average high, except for parcels 3 and 4. In Fig. 2b, the value of each objective is reflected by the height of the bars. These reveal that for the extensive grasslands, values for soil subsidence are close to the values for agriculture. The bar charts also reveal the variation in values for nature, whereas with the boxes all nature values were classified as low.

In the pre-test, a laboratory experiment was set up with 41 students and researchers. The respondents were asked whether, when given the task of changing the land use pattern, they would choose the traffic light (boxes) or the bar chart presentation (Fig. 2). Respondents who favoured the bar charts mentioned the importance of being able to see the actual height of the objective values. Although the boxes provide less information, they were preferred by 63 % of the respondents. This map was found easier to read and better suited for identifying parcels that need to change. The visualisation of stakeholder interests with boxes was therefore used for the geodesign tools presented in Sect. 4 of this study.

Figure 3 uses the same information to present the land use changes that result in an increase in the total value of the plan. A weighting and aggregation of the three main objectives was applied to determine whether a land use change would increase the total value of the plan. The map presented in Fig. 3a combines colours and symbols: the colour of each parcel presents the current land use, and the colour of the box indicates the land use that would result in the highest increase in total value. If the current land use is already the best land use for the parcel, the parcel is left blank. In Fig. 3b, primary colours are used for the original land use and secondary colours to indicate the preferred transitions; the original land use is shown by the colour of the parcel border and the preferred change by the colour of the parcel itself. Finally, in Fig. 3c, the colour of the parcel indicates the current land use and the texture indicates in which direction a land use change is favourable. As before, the respondents were asked to select one of the three presentations for changing the land use.

The symbols map (Fig. 3a) was preferred by 83 % of the respondents over the map using borders with primary and secondary colours (Fig. 3b). The main reasons were that respondents (1) preferred the more intuitive colours, (2) preferred the use of symbols on top of the current map layer, and (3) found the limited number of legend classes easier to read. One respondent expressed this as: “it’s quicker to compare two sets of different variables than to go back and forth between the legend and the map with the extensive colour use”. Respondents in favour of the colours presentation named as an advantage that the legend identifies all possible changes, but also mentioned that they did not like the coloured outlines. In comparing the colours map (Fig. 3b) with the patterns map (Fig. 3c), 61 % of the respondents voted for the patterns map. One of the respondents remarked: “I like the mixing colours because the colour showing the change combines the colours of the old and new land use, so you can pick out patterns easier. The patterns are not so easy to read but can be recognized easily from the legend.”

It is interesting to see how stakeholder values and environmental indicators are visualised in existing geodesign tools. There have been only a few studies in which multiple objectives were presented in a single map layer (Alexander et al. 2012; Arciniegas et al. 2011). A more widely used approach is to combine multiple criteria in a single map or indicator (Jankowski et al. 2001). The planning support system ‘UrbanSim’ presents separate maps of, for example, costs and number of residents (Waddell 2002). ‘Urban strategy’ uses indicator maps of, for example, noise (Borst 2010). The online planning support system ‘What if’ uses suitability mapping (Pettit et al. 2013). A suitability map advises positively and negatively about the suitability of locations for a specific change: regions are marked as ‘suitable’, ‘less suitable’ or ‘unsuitable’ (Carton 2007). Carton and Thissen (2009) show that different stakeholder frames result in different preferences regarding suitability maps.

3 Method

Four geodesign tools were developed based on a pre-test and a literature review. These four geodesign tools were evaluated in an online survey. This section first describes the structure of the survey, next the questions and assignments are explained and finally some practical issues are discussed. The aim of the survey was to test tool performance. Tool performance was divided into communicative performance and task performance. Communicative performance is defined as the ability to deliver information from the map to the user. Task performance is defined as tool functionality and describes how well a tool supports a specific task (Vonk and Ligtenberg 2010).

3.1 The survey

The survey consisted of 40 multiple-choice questions and was designed to take about 30 min to complete. This method of data collection was selected to (1) expose the tools to a large number of students and researchers, (2) ask in-depth questions about the tools, and (3) test the tools with independent respondents. Students and researchers from the Faculty of Earth Sciences of VU University were asked to complete the online survey. The survey consisted of four categories of questions: (1) respondent characteristics, (2) communicative performance, (3) task functionality, and (4) user perception. Each question was accompanied by a map including a title, legend and map description (Fig. 4).

Tool performance was assessed for each tool and for each dimension. The five dimensions are map pattern, map relation, map change, tool selection and tool application. Because each of the four tools was assessed on each of the five dimensions, a total of 20 questions (4 × 5) was used to determine overall tool performance. The remaining questions consisted of respondent characteristics, a ranking question, perception of tool difficulty and an open question for respondents to leave comments. Communicative performance was evaluated in three dimensions of map interpretation: (1) map pattern, (2) map relation and (3) map change. The first two refer to static performance. Map pattern refers to the spatial pattern of the information. Map relation refers to how the various map layers lead to the map pattern. Map change refers to dynamic performance, that is, the extent to which a change in the map pattern was understood. The assessment of dynamic performance provided insight into the ability to use the tools in an interactive setting with dynamic attributes. Interactive maps provide opportunities for including spatio-temporal changes and allow user interaction with spatial data (McCall and Dunn 2012). Task performance was evaluated by (4) tool selection and (5) tool application.
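The 20 performance questions form a 4 × 5 design: four tools, each assessed on five dimensions. The Python sketch below shows one way per-tool scores could be tabulated from that design. It assumes each answer has already been coded 1 (correct) or 0 (incorrect), and that a ‘do not know’ answer counts as incorrect; the function name `tool_scores` and the dictionary layout are hypothetical and are not the authors’ analysis code.

```python
from statistics import mean

TOOLS = (
    "objective value tool",
    "relative objective value tool",
    "total value tool",
    "stakeholder value tool",
)
DIMENSIONS = ("map pattern", "map relation", "map change", "tool selection", "tool application")


def tool_scores(responses):
    """Mean proportion correct per tool across respondents.

    `responses` holds one dict per respondent mapping (tool, dimension) -> 0 or 1
    (hypothetical layout; 'do not know' assumed already coded as 0).
    """
    scores = {}
    for tool in TOOLS:
        # Proportion correct for this tool, per respondent, over the five dimensions.
        per_respondent = [mean(r[(tool, dim)] for dim in DIMENSIONS) for r in responses]
        scores[tool] = mean(per_respondent)
    return scores
```

Keeping the per-respondent proportions (rather than pooling all answers) preserves the pairing needed if tools are later compared within respondents.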

The survey started with a short explanation of the stakeholder objectives and the relationships between the objectives and the physical conditions. The parcels were numbered so that questions could refer to the tool information and characteristics of specific parcels. Tool performance was assessed with multiple-choice questions with four options, of which only one was correct. Answers were coded as correct (1) or incorrect (0), and paired t-tests were used to find significant differences in respondent performance between the four tools. All questions had to be answered, but each question contained a ‘do not know’ option to discourage guessing. The survey was developed with SurveyMonkey® (www.surveymonkey.com, last accessed February 2013), an online survey tool that provides questionnaire software to design surveys and to collect and analyse data. The final questionnaire was pre-tested to check whether the questions were understood and to test the length of the survey. Access to the survey was distributed by e-mail.
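Because every respondent answered questions for all four tools, the comparison is within-subject, which is what a paired t-test handles: it tests whether the mean of the per-respondent differences between two tools departs from zero. A minimal standard-library sketch of the t statistic follows; `paired_t` is a hypothetical helper, and the p-value (from a t distribution with n − 1 degrees of freedom) is left to a statistics package such as SciPy’s `scipy.stats.ttest_rel`.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(scores_a, scores_b):
    """Paired t statistic and degrees of freedom for two equal-length score lists.

    scores_a[i] and scores_b[i] must belong to the same respondent.
    """
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two paired samples of equal length >= 2")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean(diffs) / (sd / sqrt(len(diffs)))
    return t, len(diffs) - 1
```

For example, per-respondent scores of [1, 1, 1, 0] and [0, 1, 0, 0] give differences [1, 0, 1, 0] and t = √3 ≈ 1.73 with 3 degrees of freedom.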

3.2 Assignments

First, characteristics of the respondents were collected. Experience levels were scored on a 5-point scale ranging from very low (−2) to very high experience (+2). Experience with maps was divided into experience with maps in general, experience with land use maps and experience with GIS. Experience with maps was used to divide the respondents into ‘experts’ and ‘non-experts’. Non-experts are respondents with an experience level up to average on the 5-point Likert scale; experts are respondents who classified their experience level with maps as high or very high.
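The expert/non-expert split amounts to a threshold on the −2 to +2 scale. In this sketch the intermediate scale points are assumed to be −1 (low), 0 (average) and +1 (high), since the text names only the endpoints and ‘average’; `expertise_group` is a hypothetical name.

```python
def expertise_group(map_experience: int) -> str:
    """Classify a respondent by self-rated experience with maps.

    Assumed scale: -2 very low, -1 low, 0 average, +1 high, +2 very high.
    """
    if map_experience not in (-2, -1, 0, 1, 2):
        raise ValueError("experience must be an integer from -2 to +2")
    # High (+1) or very high (+2) -> expert; up to average (<= 0) -> non-expert.
    return "expert" if map_experience >= 1 else "non-expert"
```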

Static communicative performance was assessed in terms of map patterns and map relations. For map patterns, the respondents were, for instance, asked: ‘What is the nature score for parcels 10–13?’ (Fig. 4a). Next, a question was asked to find out whether the respondent understood the underlying relations, such as ‘Why do parcels 3 and 4 have a low value for agriculture?’ Dynamic communicative performance was evaluated to determine whether a change in the map was understood after changes were made. Respondents were asked to explain how the new map originated from the original map, for example: ‘For which parcels did the land use change result in an improved value for the agriculture objective?’ (Fig. 4b).

The last part of the survey evaluated how the respondents linked the tools to specific tasks. Task functionality was evaluated with four questions about tool selection and four questions about tool application. Four tasks were formulated, each expected to be best supported by one of the tools. Figure 5 shows a question for one of the tasks. The respondents were asked to select the tool most appropriate for the task and then to complete the task. If a respondent chose the associated tool and was able to complete the assignment, this was counted as a plus for task functionality. The order of the task assignments was randomized to prevent a learning effect. Finally, the respondents were asked to indicate their opinion of the individual tools on a 5-point scale ranging from very difficult (−2) to very easy (+2).
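The task-functionality rule pairs each task with the tool it was designed for (Sect. 4) and credits a respondent only when both the tool choice and the completed assignment are right. A hedged Python sketch follows; the short task labels and function names are hypothetical, and drawing a fresh random task order per respondent is an assumption about how the randomization was implemented.

```python
import random

# Task -> tool designed for it, following the pairing described in Sect. 4.
# The short task labels are hypothetical.
ASSOCIATED_TOOL = {
    "assess spatial pattern": "objective value tool",
    "identify bottlenecks": "relative objective value tool",
    "find best compromise": "total value tool",
    "discover trade-offs": "stakeholder value tool",
}


def randomized_task_order(rng: random.Random) -> list:
    """Return the four tasks in a random order (assumed: drawn per respondent)."""
    tasks = list(ASSOCIATED_TOOL)
    return rng.sample(tasks, k=len(tasks))


def task_functionality(task: str, selected_tool: str, completed_correctly: bool) -> int:
    """1 if the respondent chose the associated tool AND completed the task, else 0."""
    return int(selected_tool == ASSOCIATED_TOOL[task] and completed_correctly)
```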

4 Geodesign Tools

This section describes the planning tasks that were formulated and the tools that were developed to support these tasks. First the tasks that were formulated are explained, followed by a detailed description of each of the tools that were assumed to support these tasks. Four geodesign tools were developed to present multiple stakeholder values differently. Section 5 describes the survey results.

4.1 Stakeholder Tasks

As the tools were developed based on stakeholder tasks, this section first describes the planning tasks that were formulated. A stakeholder task is an assignment that has to be accomplished during a planning stage. Spatial planning and decision making consist of multiple planning stages, and each stage is assumed to contain multiple stakeholder tasks (Eikelboom and Janssen 2013). This study evaluated the influence of aggregation and ranking in presenting stakeholder values in geodesign tools. These variations correspond to the following stakeholder tasks: (1) assess the spatial pattern of the objective values, (2) identify bottlenecks, (3) find compromises and (4) discover trade-offs to support negotiation (Fig. 6).

The first task was the assessment of the spatial pattern of the objective values. Spatial planning typically starts with an exploration of the state of an area. In terms of stakeholder objectives, this means that each stakeholder searches for high and low scoring areas. Stakeholders that have interests in multiple objectives also search for areas with acceptable or alternative values for multiple objectives. For this task it was necessary to have information about each objective simultaneously for each spatial decision unit.

The second task was the identification of bottlenecks. Bottlenecks are situations where change is needed. Respondents had to select areas that are sub-optimal or problematic. In case of multiple problematic areas and a limited budget, information on priorities is needed. Stakeholders need to know which regions have the lowest performance. Time and energy can also be saved when parcels that are close to optimal are excluded or neglected. The identification of outliers in the regions is a task that calls for ranking of the objective values. The first two tasks require separate presentation of each objective.

The third task was the search for the best compromise for all stakeholders. This results in direct advice on what to change in the interest of all stakeholders. This task is supported by a tool that shows the best compromise by combining the stakeholder objectives in a predefined manner. For the fourth task, respondents were asked to find parcels that are candidates for negotiation with other stakeholders, that is, to find information that supports the identification of desirable exchanges of land use. To support this task, information is needed on which measure leads to the highest value for each of the stakeholders. The last two tasks required integration of stakeholder objectives into aggregated values. The tasks were operationalized in assignments such as ‘create extensive grassland when extensive grassland is the best land use’ or ‘raise the water level for parcels when the soil objective value is among the worst 20 %’.

4.2 Tools

To support these tasks, four geodesign tools were made available: (1) objective value tool, (2) relative objective value tool, (3) total value tool, and (4) stakeholder value tool. Each tool was designed for one of the specific tasks. The four stakeholder tasks were: (1) assess the spatial pattern of the objective values, (2) identify bottlenecks, (3) find the best compromise, and (4) discover trade-offs to support negotiation. Parcels were used as the spatial unit for evaluation, with land use or water level as background. Within each parcel, values were presented in one, two or three boxes. The tools were designed for dynamic use, as the effects of a change on the objective values were shown immediately. The tools were constructed with Community Viz software version 4.3 (http://placeways.com/communityviz, last accessed December 2014). An overview of the tools is given in Fig. 7.

The first tool is named the ‘objective value tool’. The value of an objective depends on land use and water level and each objective responds differently. When more than one objective is shown, a comparison between objectives can be made at the same time that land use or water level is changed. The objective values vary between 0 and 10 and are represented in three classes: worst (0–7), average (7,8) and best (9,10) (Fig. 7a). Consequently, three red boxes indicate a low value for all objectives.
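The three-class scheme of the objective value tool can be sketched as a threshold function. The class limits in the text overlap at 7 (worst 0–7, average 7–8); this sketch assumes half-open intervals, worst [0, 7), average [7, 9) and best [9, 10], which is only one possible reading. Red for worst and green for best follow the text; yellow for the average class is assumed from the pre-test boxes (Fig. 2a). The function names are hypothetical.

```python
def value_class(value: float) -> str:
    """Classify a 0-10 objective value using assumed half-open class limits."""
    if not 0 <= value <= 10:
        raise ValueError("objective values range from 0 to 10")
    if value < 7:
        return "worst"
    if value < 9:
        return "average"
    return "best"


# Red = worst and green = best as in the text; yellow for 'average' is assumed.
BOX_COLOUR = {"worst": "red", "average": "yellow", "best": "green"}


def parcel_boxes(objective_values: dict) -> dict:
    """Map each objective's value in one parcel to the colour of its box."""
    return {obj: BOX_COLOUR[value_class(v)] for obj, v in objective_values.items()}
```

Under this reading, a parcel scoring below 7 on all three objectives shows three red boxes, matching the example in the text.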

Second, the ‘total value tool’ visualises the best option for the stakeholders as one group and is a consensus-driven approach. The total value is derived by weighting the stakeholder objectives for each land use. The total value tool has a different map layout, as it shows only one box instead of three (Fig. 7b). The background colour of each parcel represents the current land use. The colour of the box in the middle of a parcel shows the land use type that results in the highest total value. If the current land use is the same as the best land use, no box is shown. The weights used to calculate the total values are listed in Table 1.
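The total value tool can be sketched as a weighted sum per candidate land use (aggregation) followed by picking the land use with the highest total. The weights and objective values below are hypothetical placeholders, not the values in Table 1, and resolving ties in favour of the first land use listed is an assumption.

```python
# Hypothetical weights (NOT Table 1) and hypothetical objective values for one parcel.
EXAMPLE_WEIGHTS = {"agriculture": 0.4, "soil": 0.3, "nature": 0.3}
EXAMPLE_PARCEL = {
    "intensive grassland": {"agriculture": 10, "soil": 2, "nature": 1},
    "extensive grassland": {"agriculture": 7, "soil": 7, "nature": 5},
    "nature": {"agriculture": 1, "soil": 9, "nature": 9},
}


def total_value(objective_values: dict, weights: dict) -> float:
    """Weighted sum of the objective values (aggregation step)."""
    return sum(weights[obj] * value for obj, value in objective_values.items())


def total_value_box(current_land_use: str, values_by_land_use: dict, weights: dict):
    """Land use to show in the parcel's box, or None if the current land use is already best."""
    # max() keeps the first of several equal totals, so ties go to the earlier land use.
    best = max(values_by_land_use, key=lambda lu: total_value(values_by_land_use[lu], weights))
    return None if best == current_land_use else best
```

With these placeholder numbers the totals are 4.9 (intensive), 6.4 (extensive) and 5.8 (nature), so an intensively used parcel would get an extensive-grassland box and an extensively used parcel would get no box.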

The ‘relative objective value tool’ highlights the best- and worst-scoring parcels for each objective. The tool can be seen as a reduced version of the first tool, as it shows only relative values on the map. The aim is to have less information on the map so that the selection of areas of interest becomes easier or faster. For each objective, the current values of the parcels are ranked, and this ranking is used to identify the highest- and lowest-ranking parcels. In this study, the highest and lowest 20 % were presented (Fig. 7c). The relative value of each objective is presented in three classes: red represents the lowest 20 %, green the best 20 % and white all intermediate parcels. The relative objective values depend on both land use and water level. After a parcel is improved by changing either land use or water level, a new ranking determines which parcels have the 20 % highest and lowest values at that moment. This can be used to search for bottlenecks and identify areas in need of improvement. The amount of information to be processed by stakeholders is reduced, as only the top and bottom 20 % are presented.
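The ranking step can be sketched as follows: sort the parcels by their current value for one objective, flag the lowest 20 % red and the highest 20 % green, and leave the rest white; running the function again after a change reproduces the re-ranking described above. The text does not say how 20 % of 13 parcels (2.6) is rounded, so the sketch assumes rounding to the nearest whole parcel (3) and breaks ties by parcel number; both are assumptions, and `relative_classes` is a hypothetical name.

```python
def relative_classes(values: dict, share: float = 0.2) -> dict:
    """Flag the lowest `share` of parcels red, the highest green, the rest white.

    `values` maps parcel id -> current value for ONE objective.
    """
    n = len(values)
    # Assumed: flagged count rounded to the nearest parcel, at least 1, and small
    # enough that the red and green groups never overlap.
    k = min(max(1, round(share * n)), n // 2)
    # Ascending order; ties broken by parcel id (assumption).
    ranked = sorted(values, key=lambda p: (values[p], p))
    colours = {p: "white" for p in values}
    for p in ranked[:k]:
        colours[p] = "red"
    for p in ranked[n - k:]:
        colours[p] = "green"
    return colours
```

With parcel values equal to the parcel numbers 1–13, parcels 1–3 are red and 11–13 green; raising parcel 1 to 20 shifts the red set to parcels 2–4 and turns parcel 1 green.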

The final tool is the ‘stakeholder value tool’, which is linked to land use. The map shows which change is preferable for which stakeholder. Table 2 shows the three stakeholders and how the objectives are linked to them. Intensive farmers are assumed to be interested only in agriculture. Extensive farmers are assumed to also have an interest in soil and nature, while the stakeholder responsible for nature is assumed to have an interest in soil and nature only. By weighting the objectives, the stakeholder values can be calculated. This tool shows potential values for each stakeholder: the value that would result if the land use were changed to the stakeholder’s preferred land use. The map shows the best and worst 20 % of the parcels for each stakeholder. The three boxes now represent the three land uses (Fig. 7d): the left box represents intensive grassland, the middle box extensive grassland and the right box nature. A threshold is specified, here 20 %, indicating the best and worst 20 %. A box is red or green if it falls within the worst or best 20 %; otherwise it is white. These values are independent of the current land use of a parcel: the land use type that leads to a high value is green and the land use type that leads to a low value is red. In response, users can change the land use if the current land use is presented with a red box or if another land use type is presented with a green box.
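The stakeholder value tool combines the two previous steps: a weighted sum per stakeholder (aggregation) and a top/bottom 20 % flag across parcels (ranking). The sketch assumes that each box’s land use is the preferred land use of one stakeholder, and the weights are hypothetical stand-ins for Table 2; only which objectives each stakeholder cares about is taken from the text. Rounding and tie-breaking follow the same assumptions as a plain top/bottom flag with k ≈ 0.2 n, and all names are hypothetical.

```python
# stakeholder -> (preferred land use = box shown, HYPOTHETICAL objective weights).
# Which objectives each stakeholder cares about follows the text; the numbers do not.
STAKEHOLDERS = {
    "intensive farmers": ("intensive grassland", {"agriculture": 1.0}),
    "extensive farmers": ("extensive grassland", {"agriculture": 1 / 3, "soil": 1 / 3, "nature": 1 / 3}),
    "nature stakeholder": ("nature", {"soil": 0.5, "nature": 0.5}),
}


def potential_value(objective_values: dict, weights: dict) -> float:
    """Stakeholder value if the parcel had this land use (aggregation step)."""
    return sum(w * objective_values[obj] for obj, w in weights.items())


def flag_top_bottom(scores: dict, share: float = 0.2) -> dict:
    """Ranking step: lowest `share` red, highest green, rest white (assumed rounding)."""
    n = len(scores)
    k = min(max(1, round(share * n)), n // 2)
    ranked = sorted(scores, key=lambda p: (scores[p], p))
    flags = {p: "white" for p in scores}
    for p in ranked[:k]:
        flags[p] = "red"
    for p in ranked[n - k:]:
        flags[p] = "green"
    return flags


def stakeholder_boxes(parcels: dict) -> dict:
    """parcels: parcel id -> {land use: {objective: value}}.

    Returns parcel id -> {land use: box colour}, independent of the current land use.
    """
    boxes = {p: {} for p in parcels}
    for land_use, weights in STAKEHOLDERS.values():
        scores = {p: potential_value(parcels[p][land_use], weights) for p in parcels}
        for p, colour in flag_top_bottom(scores).items():
            boxes[p][land_use] = colour
    return boxes
```

Following the text, a user would then consider changing a parcel whose current land use has a red box, or one where another land use has a green box.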

In summary, the tools differ in the amount of information, the number of calculation steps needed to process the underlying information, and the degree of ambiguity. The first three tools show information about three objective values, each with three legend classes, and provide information that leaves room for discussion. The last tool only shows a single value and directly suggests a change; it is therefore more prescriptive about the decisions to be made. The tools also differ in their calculation steps: the second tool includes an additional ranking, the third tool is even more complex as weighting was included, and the aggregation in the last tool results in less information on the map. The values are presented as boxes, which leave the background map visible. Linking the boxes with different background maps can provide insight into how different factors influence the objective values. From Fig. 7a, for example, it is not clear why parcels 3 and 4 have a low value for agriculture. In Fig. 8 the tools are shown on top of the current water level. Figure 8a reveals that parcels 3 and 4 have a high water level, which decreases the value for agriculture.

5 Results

This section presents the results of the survey, in which four geodesign tools developed to present stakeholder objectives were compared. The tools vary in the way the values of these objectives are presented. Tool performance was divided into communicative performance and task performance. Communicative performance is defined as the ability to deliver information from the map to the user. Task performance is defined as tool functionality and describes how well a tool supports a specific task. The results of the survey are described in four steps: (1) respondents' characteristics, (2) communicative performance, (3) task performance, and (4) overall performance.

5.1 Respondents

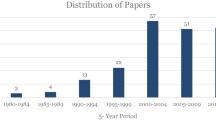

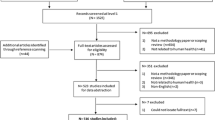

The online survey was completed by 49 of the 78 respondents (completion rate of 63 %). All respondents who finished the questions about the first tool went on to complete the survey; those who dropped out had already stopped after the first substantive question. The respondents were students (63 %) and researchers (27 %). They were experienced or very experienced with maps (51 %), land use maps (35 %) and GIS (22 %). The average time taken to complete the survey was 43 min, though it was not registered whether respondents took short breaks between questions. The questions in the survey were found to be difficult by 39 % of the respondents (i.e. those who checked difficult or very difficult on a five-point Likert scale).

5.2 Communicative Performance

A distinction was made between static and dynamic performance. Static performance was assessed in two dimensions: first, the respondents were asked how they interpreted the map patterns; second, they were asked about the underlying relations. Dynamic performance was tested by changing the land use in all four tools, followed by questions about the resulting changes in the maps. For static performance, 22 respondents (45 %) answered all questions correctly. Of the 27 (55 %) who made mistakes, 15 made multiple mistakes. The 22 % who answered incorrectly on the relative objective value tool all picked the same wrong answer: they interpreted the map as if it showed absolute values, as in the objective value tool. For dynamic performance, 9 respondents (18 %) were completely correct and 31 (63 %) made only one or two mistakes. The communicative performance scores for all respondents are shown in Table 3.

The objective value tool includes no additional calculation steps. As expected, this tool scored high on all categories. A score of 96 % implies that two respondents (4 %) gave the wrong answer. The tools that include a ranking step, the relative objective value tool and the stakeholder value tool, have lower rates, especially for dynamic performance.
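The percentages reported for the respondents and for communicative performance are shares of the 49 completed surveys (and of the 78 started surveys for the completion rate). A quick arithmetic check, with the helper name `pct` chosen for illustration:

```python
def pct(count, total):
    """Percentage rounded to a whole number, as reported in the text."""
    return round(100 * count / total)


N_STARTED, N_COMPLETED = 78, 49

completion_rate = pct(N_COMPLETED, N_STARTED)       # completed / started
static_all_correct = pct(22, N_COMPLETED)           # static: no mistakes
static_mistakes = pct(27, N_COMPLETED)              # static: any mistake
dynamic_all_correct = pct(9, N_COMPLETED)           # dynamic: no mistakes
dynamic_one_or_two = pct(31, N_COMPLETED)           # dynamic: 1 or 2 mistakes
objective_tool = pct(N_COMPLETED - 2, N_COMPLETED)  # two wrong answers
```

These evaluate to 63, 45, 55, 18, 63 and 96 %, matching the values given in the text.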

Map patterns were found most difficult to understand with the relative objective value tool, compared with the objective value tool, the total value tool and the stakeholder value tool. Understanding the underlying relationships was easier with the objective value tool than with the relative objective value tool and the total value tool. This suggests that aggregation as well as ranking decreases the ability to understand the relations that formed the map. The dynamic performance of the total value tool is low partly because of the relatively high percentage of ‘do not know’ answers, which were counted as wrong (12 %, compared with 0–6 % for all the other questions).

The relative objective value tool and the stakeholder value tool use a percentage to select the best and worst parcels. This percentage depends on the planning task: for example, 20 % when the assignment is to allocate extensive grassland in 20 % of the area. It was tested whether the respondents understood the effects of a change in this percentage. The respondents were presented with a map based on a different percentage and were asked whether they thought the percentage had been increased, decreased or left unchanged, or whether they had no idea. Only 51 % of the respondents correctly identified the direction in which the percentage had changed; 14 % had no idea and another 33 % had the direction of change wrong. From this it can be concluded that tools presenting the individual performance of spatial units are easier to understand than tools based on a ranking of these units. The objective value tool and the total value tool were best understood based on dynamic performance.
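The direction of a change in the percentage can, in principle, be read from the map by counting coloured boxes. Under the ranking rule assumed in this sketch (group size rounded down, ties broken by parcel order), raising the percentage for the same underlying values only adds parcels to the red and green classes, while previously highlighted parcels stay highlighted. The function `highlighted` and the parcel values are hypothetical.

```python
def highlighted(values, percent):
    """Return the sets of red (worst) and green (best) parcel indices."""
    n = len(values)
    k = n * percent // 100
    order = sorted(range(n), key=lambda i: values[i])
    return set(order[:k]), set(order[n - k:])


values = [55, 20, 80, 35, 90, 60, 40, 70, 10, 50]  # hypothetical parcels
red_10, green_10 = highlighted(values, 10)
red_20, green_20 = highlighted(values, 20)
red_30, green_30 = highlighted(values, 30)
```

Each larger percentage yields strict supersets of the earlier red and green sets, so a map with more red and green boxes than before (with land use unchanged) implies that the percentage was increased.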

5.3 Task Performance

Task performance was evaluated with two questions. First, respondents were asked to select the tool they found most suitable for a specific task. In a follow-up question, respondents were asked to apply the selected tool to complete the task. The results are shown in Table 4.

Tool application (/Total) performance represents the percentage of respondents who selected the correct tool and also applied it correctly; it can therefore never be higher than the tool selection score. The last row, tool application (/Correct tool), shows the success rate as a percentage of the respondents who selected the correct tool. It was not possible to derive the correct answer with the wrong tool. Table 4 shows that in general respondents picked the right tool for the assigned task, with the exception of the stakeholder value tool, which was selected for the associated task by only 67 % of the respondents.
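The relation between the three rows of Table 4 can be made explicit with a small sketch. The record type `TaskAnswer`, the function `task_scores` and the ten respondents below are hypothetical; the rule that a correct answer requires the correct tool is taken from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskAnswer:
    selected_correct_tool: bool
    applied_correctly: bool


def task_scores(answers):
    """Return (tool selection %, application /Total %, application /Correct tool %)."""
    for a in answers:
        # The correct answer could not be derived with the wrong tool.
        if a.applied_correctly and not a.selected_correct_tool:
            raise ValueError("correct application requires the correct tool")
    n = len(answers)
    selected = sum(a.selected_correct_tool for a in answers)
    both = sum(a.applied_correctly for a in answers)
    selection = 100 * selected / n
    application_total = 100 * both / n
    application_correct = 100 * both / selected if selected else float("nan")
    return selection, application_total, application_correct


# Ten hypothetical respondents for one task.
answers = ([TaskAnswer(True, True)] * 6 + [TaskAnswer(True, False)] * 2
           + [TaskAnswer(False, False)] * 2)
selection, app_total, app_correct = task_scores(answers)
```

Here selection is 80 %, application (/Total) 60 % and application (/Correct tool) 75 %. The /Total score equals the selection score multiplied by the /Correct tool rate (0.80 × 75 % = 60 %), which is why it can never exceed the selection score.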

Although the objective value tool scored best on communicative performance, it scored lowest on tool application (/Correct tool). This can possibly be explained by the amount of information that needs to be processed to perform the task: although this tool is the easiest to understand, it requires the most information to be processed. The tools that involve ranking, the relative objective value tool and the stakeholder value tool, only present the best and worst parcels, so there is less information to process. In addition, the stakeholder value tool aggregates the information for each stakeholder, which could explain the high performance of this tool. Of the respondents, 47 % selected the correct tool for all tasks, 33 % made one mistake and 20 % went wrong on multiple tools.

5.4 Overall Performance

The previous sections showed differences in performance rates for both communicative and task performance. Communicative performance covers the dimensions (1) map patterns, (2) map relations and (3) map change; task performance covers (4) tool selection and (5) tool application. For each tool five questions were asked. If every respondent answered all questions correctly, the total performance would be 100 %. The influence of each dimension on the overall performance is shown in Fig. 9.
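The aggregation of the five dimensions over the four tools can be sketched as follows. The nested-dictionary answer sheet and the function names are hypothetical; the sketch only shows how per-respondent totals (as in Fig. 11) and per-tool totals (as in Fig. 10) follow from the same answers.

```python
TOOLS = ("objective value", "total value", "relative objective value",
         "stakeholder value")
DIMENSIONS = ("map patterns", "map relations", "map change",
              "tool selection", "tool application")


def all_correct():
    """Answer sheet for one respondent with every question correct."""
    return {t: {d: True for d in DIMENSIONS} for t in TOOLS}


def respondent_performance(sheet):
    """Share of the 4 x 5 = 20 questions answered correctly, in percent."""
    correct = sum(sheet[t][d] for t in TOOLS for d in DIMENSIONS)
    return 100 * correct / (len(TOOLS) * len(DIMENSIONS))


def tool_performance(sheets, tool):
    """Share of correct answers for one tool over all respondents, in percent."""
    correct = sum(s[tool][d] for s in sheets for d in DIMENSIONS)
    return 100 * correct / (len(sheets) * len(DIMENSIONS))


# Two hypothetical respondents: A answers everything correctly, B misses three.
a, b = all_correct(), all_correct()
b["stakeholder value"]["map change"] = False
b["stakeholder value"]["tool application"] = False
b["relative objective value"]["map change"] = False
sheets = [a, b]
```

Respondent B scores 17 of 20 (85 %), while the stakeholder value tool scores 80 % and the relative objective value tool 90 % over both respondents.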

The summation of all performance questions gives the total performance for each of the tools. The tools differ in the level of aggregation and the use of ranking. The results in Fig. 10 show that the objective value tool has the highest percentage of correct answers and the stakeholder value tool the lowest. The stakeholder value tool includes both ranking and aggregation and was therefore also expected to be the most complex.

The bars in Fig. 11 show the performance on each dimension for each respondent, ranked from low to high performance, for the two levels of expertise. Respondents 1–25 are non-experts and 26–49 are experts. Those with low performance rates have no correct answers for dynamic performance (map change) and task performance (tool application and tool selection). Performance scores range from 30 to 100 %. Almost all respondents answered more than half of the questions correctly, but only two reached a score of 100 %.

The overall performance is also influenced by the user's level of experience. Comparing the total performance of the non-experts (M = 14.8, SD = 3.01) with that of the experts (M = 17.0, SD = 2.02) showed a significant difference, t(23) = 2.01, p = 0.004. The performance rates are higher for experts than for non-experts on all survey dimensions. In addition, the variance in performance is higher for the non-experts (Fig. 12).
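A comparison of this kind can be computed directly from the reported means and standard deviations. The sketch below uses Welch's unequal-variance t-test; the study does not state which variant was used, so Welch's test is an assumption, as are the group sizes (25 non-experts and 24 experts), which are taken from the respondent numbering in Fig. 11.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and degrees of freedom from summary statistics."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2   # squared standard errors
    t = (mean2 - mean1) / math.sqrt(v1 + v2)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Non-experts (respondents 1-25) versus experts (respondents 26-49).
t, df = welch_t(14.8, 3.01, 25, 17.0, 2.02, 24)
```

With these assumed inputs the sketch gives t ≈ 3.0 with about 42 degrees of freedom; a two-sided p-value then follows from the t distribution, for example as `2 * scipy.stats.t.sf(abs(t), df)`. This differs from the reported t(23) = 2.01, so the study may have used a different test variant or grouping than assumed here.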

At the end of the survey, respondents were asked to rate the difficulty of the tools on a five-point scale from very easy to very difficult. The results show that the objective value tool was perceived as easier (M = 2.86, SD = 0.91) than the relative objective value tool (M = 3.22, SD = 0.90), t(49) = 1.98, p < 0.05. No correlation with completion time or with experience could be found.

From the performance scores it can be concluded that none of the tools was too difficult (performances > 72 %). However, the static dimensions of communicative performance showed that the inclusion of ranking has a negative influence on the interpretation of map patterns and on understanding the underlying mechanisms (map relations), although the differences remain small. The assignment on understanding map change suggests that the tool that included both ranking and aggregation was less suitable for interactive use. The analysis of performance by respondent characteristics indicated that the tools were better understood by users with some experience with maps, but were not too difficult for non-experts. In general, the tools without ranking were perceived as easier than those including ranking or aggregation. The tools described were used to present a maximum of three objectives. This is in accordance with the findings of Arciniegas et al. (2012), who concluded from an empirical analysis of the effectiveness of map presentations of stakeholder values that no more than three objectives should be presented simultaneously. Recently, Pelzer et al. (2014) qualitatively evaluated the added value of planning support systems (PSS) as perceived by frequent users of a touch table device. Their results show that the practitioners saw improved collaboration and communication as the main advantages of the tools. The tools in the present study were specifically designed for interactive use, which required short calculation times and a limited need for explanation.

6 Conclusions

Geodesign tools are used to increase the effectiveness of spatial planning. However, effectiveness is a broad concept that can include many aspects. Only a few studies have explicitly tested the effectiveness of geodesign (e.g. Inman et al. 2011; Arciniegas et al. 2012). This study measured the effectiveness of geodesign tools as tool performance. Performance was considered high if a user was able to complete an assignment correctly using the information presented. Knowledge of tool performance is important for future tool use and tool design, as it can provide arguments for selecting a specific type of tool or for designing a new tool.

6.1 Ranking and aggregation

The tools differed in their use of an aggregation or ranking step. Aggregation means that the values are weighted and summed into a total value. Aggregated values support stakeholders by combining multiple sources in a single attribute, so that stakeholders do not have to combine objectives themselves. Ranking means that the order of the values is used to select values; the result shows only the best and worst objective values.
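In notation, the two steps can be written as follows. The symbols are introduced here for illustration only: parcels \(p = 1, \dots, n\), objective values \(x_{pj}\) for objectives \(j\), stakeholder weights \(w_j\), and a highlighted share \(q\) (0.2 in this study).

\[
V_p = \sum_{j} w_j \, x_{pj} \qquad \text{(aggregation)}
\]

\[
k = \lfloor q\,n \rfloor, \qquad R = \{\, p : r_p \le k \,\}, \qquad G = \{\, p : r_p > n - k \,\} \qquad \text{(ranking)}
\]

Here \(r_p\) is the rank of parcel \(p\) (1 = lowest value) for the objective or stakeholder value being shown, \(R\) is the set of parcels with a red box and \(G\) the set with a green box; all other parcels are white.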

The tool that performed best on communicative performance was the objective value tool, the simplest tool without an additional aggregation or ranking step. Adding a ranking step lowered performance, especially dynamic performance, which was in line with the results on the perceived difficulty of the tools. Map patterns were found most difficult to interpret with the relative objective value tool. Understanding the underlying relations was easier with the objective value tool than with the relative objective value tool and the total value tool. This suggests that aggregation as well as ranking decreased the ability to understand the relations that formed the map. Although the objective value tool scored best on communicative performance, it scored lowest on tool application. This could possibly be explained by the amount of information that needed to be processed to perform the task: although this tool was the easiest to understand, it required the most information to be processed. The tools that involve ranking, the relative objective value tool and the stakeholder value tool, only presented the best and worst parcels, so there was much less information to process. In addition, the stakeholder value tool aggregated the information for each stakeholder. The summation of all performance questions gave the total performance for each tool. The results showed that the objective value tool performed best and the stakeholder value tool had the lowest performance. The stakeholder value tool included both ranking and aggregation and was therefore also expected to be the most complex. The overall performance was also influenced by the user's level of experience: the average performance rates for dynamic and task performance were higher for experts than for non-experts.

6.2 In conclusion

Tools should be as simple as possible. Adding ranking and aggregation steps makes the tools more difficult to understand. On the other hand, tools should also limit the amount of information to be processed by the user, which may well call for including ranking and aggregation steps. This stresses the importance of tailoring methods to tasks. Further research is needed to experiment with the tools in a workshop setting (Eikelboom and Janssen 2015), to test the tools in practice (see for example Janssen et al. 2014) and to test the tools in different contexts (see for example Alexander et al. 2012). But perhaps the most important characteristic of the tools is that they allow for trial and error. Steinitz (2012) emphasizes that this is very important, as it increases the opportunity for experimentation and learning by doing.

References

Alexander KA, Janssen R, Arciniegas G, O’Higgins TG, Eikelboom T, Wilding TA (2012) Interactive marine spatial planning: siting tidal energy arrays around the Mull of Kintyre. PLoS ONE 7(1):1–8

Arciniegas GA, Janssen R, Omtzigt N (2011) Map-based multicriteria analysis to support interactive land use allocation. Int J Geogr Inf Sci 25:1931–1947

Arciniegas G, Janssen R, Rietveld P (2012) Effectiveness of collaborative map-based decision support tools: results of an experiment. Environ Model Softw 1–17

Batty M (2008) Progress, predictions, and speculations on the shape of things to come. In: Brail RK (ed) Planning support systems for cities and regions. Lincoln Institute of Land Policy, Cambridge, pp 3–30

Belton V, Stewart TJ (2002) Multiple criteria decision analysis: an integrated approach. Springer, Berlin

Bertin J (1983) Semiology of graphics: diagrams, networks, maps. University of Wisconsin Press, Madison

Boroushaki S, Malczewski J (2010) Measuring consensus for collaborative decision-making: a GIS-based approach. Comput Environ Urban Syst 34:322–332

Borst J (2010) Urban strategy: interactive spatial planning for sustainable cities. In: Third international conference on infrastructure systems and services: next generation infrastructure systems for eco-cities (INFRA 2010). IEEE, pp 1–5

Brouns K, Eikelboom T, Jansen PC, Janssen R, Kwakernaak C, Van den Akker JJH, Verhoeven JTA (2014) Spatial analysis of soil subsidence in peat meadow areas in Friesland in relation to land and water management, climate change and adaptation. Environ Manag. doi:10.1007/s00267-014-0392-x

Budic IZ (1994) Effectiveness of geographic information systems in local planning. J Am Plan Assoc 60(2):244–263

Carton LJ (2007) Map making and map use in a multi-actor context. Spatial visualizations and frame conflicts in regional policymaking in the Netherlands. PhD thesis, TU Delft, Delft

Carton LJ, Thissen WAH (2009) Emerging conflict in collaborative mapping: towards a deeper understanding? J Environ Manag 90:1991–2001

Crossland MD, Wynne BE, Perkins WC (1995) Spatial decision support systems: an overview of technology and a test of efficacy. Decis Support Syst 14:219–235

Densham PJ (1991) Spatial decision support systems. Geogr Inf Syst 1:403–412

Dias E, Linde M, Rafiee A, Koomen E, Scholten H (2013) Beauty and brains: integrating easy spatial design and advanced urban sustainability models. In: Geertman S, Toppen F, Stillwell J (eds) Planning support systems for sustainable urban development. Springer, Berlin, pp 469–484

Dong W, Ran J, Wang J (2012) Effectiveness and efficiency of map symbols for dynamic geographic information visualization. Cartogr Geogr Inf Sci 39:98–106

Duhr S (2004) The form, style, and use of cartographic visualisations in European spatial planning: examples from England and Germany. Environ Plan A 36:1961–1989

Duhr S (2007) The visual language of spatial planning. Routledge, London

Eikelboom T, Janssen R (2013) Interactive spatial tools for the design of regional adaptation strategies. J Environ Manag 127:S6–S14

Eikelboom T, Janssen R (2015) Collaborative use of geodesign tools to support adaptation planning. Mitig Adapt Strateg Glob Change (in press)

Flaxman M (2010) Fundamentals of geodesign. In: Buhmann E, Pietsch M, Kretzler E (eds) Proceedings of digital landscape architecture conference 2010. Wichmann, Berlin, pp 28–41

Fuchs S, Spachinger K, Dorner W, Rochman J, Serrhini K (2009) Evaluating cartographic design in flood risk mapping. Environ Hazards 8:52–70

Garlandini S, Fabrikant S (2009) Evaluating the effectiveness and efficiency of visual variables for geographic information visualization. In: Hornsby K, Claramunt C, Denis M, Ligozat G (eds) Spatial information theory. Springer, Berlin, pp 195–211

Geertman S, Stillwell J (2004) Planning support systems: an inventory of current practice. Comput Environ Urban Syst 28:291–310

Geertman S, Stillwell J (2009) Planning support systems: best practices and new methods. Springer, Berlin

Geertman S, Toppen F (2013) Planning support systems for sustainable urban development. Springer, Berlin

Goodhue DL, Thompson RL (1995) Task-technology fit and individual performance. MIS Q 213–236

Gudmundsson H (2011) Analysing models as a knowledge technology in transport planning. Transp Rev 31(2):145–159

Herwijnen M (1999) Spatial decision support for environmental management. PhD thesis, VU University, Amsterdam

Inman D, Blind M, Ribarova I, Krause A, Roosenschoon O, Kassahun A, Scholten H, Arampatzis G, Abrami G, McIntosh B, Jeffrey P (2011) Perceived effectiveness of environmental decision support systems in participatory planning: evidence from small groups of end-users. Environ Model Softw 26:302–309

Jankowski P, Andrienko N, Andrienko G (2001) Map-centred exploratory approach to multiple criteria spatial decision making. Int J Geogr Inf Sci 15:101–127

Janssen R, Uran O (2003) Presentation of information for spatial decision support: a survey on the use of maps by participants in quantitative water management in the IJsselmeer region, The Netherlands. Phys Chem Earth Parts A/B/C 28:611–620

Janssen R, Eikelboom T, Brouns K, Verhoeven JTA (2014) Using geodesign to develop a spatial adaptation strategy for south east Friesland. In: Dias E, Lee D, Scholten HJ (eds) Geodesign for land use planning. Springer, New York, pp 103–116

Jonsson D, Berglund S, Almström P, Algers S (2011) The usefulness of transport models in Swedish planning practice. Transp Rev 31(2):251–265

Kraak M (2004) The role of the map in a Web-GIS environment. J Geogr Syst 6:83–93

McCall MK, Dunn CE (2012) Geo-information tools for participatory spatial planning: fulfilling the criteria for ‘good’ governance? Geoforum 43:81–94

Meng Y, Malczewski J (2009) Usability evaluation for a web-based public participatory GIS: a case study in Canmore, Alberta. Cartographie, Imagerie, SIG 483

Nyerges T, Jankowski P, Tuthill D, Ramsey K (2006) Collaborative water resource decision support: results of a field experiment. Ann Assoc Am Geogr 96(4):699–725

Ozimec AM, Natter M, Reutterer T (2010) Geographical information systems-based marketing decisions: effects of alternative visualizations on decision quality. J Mark 74:94–110

Pelzer P, Geertman S, van der Heijden R, Rouwette E (2014) The added value of planning support systems: a practitioner’s perspective. Comput Environ Urban Syst 48:16–27

Pettit C, Klosterman R, Nino-Ruiz M, Widjaja I, Russo P, Tomko M, Sinnott R, Stimson R (2013) The online what if? Planning support system. In: Geertman S, Toppen F, Stillwell J (eds) Planning support systems for sustainable urban development. Springer, Berlin, pp 349–362

Salter JD, Campbell C, Journeay M, Sheppard SRJ (2008) The digital workshop: exploring the use of interactive and immersive visualisation tools in participatory planning. J Environ Manag 90(6):2090–2101

Sidlar CL, Rinner C (2009) Utility assessment of a map-based online geo-collaboration tool. J Environ Manag 90(6):2020–2026

Slocum TA, McMaster RB, Kessler FC, Howard HH (2009) Thematic cartography and geovisualization. Pearson Prentice Hall, Upper Saddle River, NJ

Steinitz C (2012) A framework for geodesign: changing geography by design. ESRI Press, Redmond

Te Brömmelstroet M (2012) Performance of planning support systems: what is it, and how do we report on it? Comput Environ Urban Syst 41:299–308

Vonk G, Geertman S, Schot P (2005) Bottlenecks blocking widespread usage of planning support systems. Environ Plan A 37:909–924

Vonk G, Ligtenberg A (2010) Socio-technical PSS development to improve functionality and usability: sketch planning using a Maptable. Landsc Urban Plan 94:166–174

Waddell P (2002) UrbanSim: modeling urban development for land use, transportation, and environmental planning. J Am Plan Assoc 68:297–314

Xiao N, Armstrong MP (2012) Towards a multiobjective view of cartographic design. Cartogr Geogr Inf Sci 39:76–87

Acknowledgments

This research was funded by the ‘Knowledge for Climate’ research program of the Netherlands. The respondents are acknowledged for their contribution to the results of the surveys.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

Cite this article

Eikelboom, T., Janssen, R. Comparison of Geodesign Tools to Communicate Stakeholder Values. Group Decis Negot 24, 1065–1087 (2015). https://doi.org/10.1007/s10726-015-9429-7
