Abstract

Neural rhythms or oscillations are ubiquitous in neuroimaging data. These spectral responses have been linked to several cognitive processes; including working memory, attention, perceptual binding and neuronal coordination. In this paper, we show how Bayesian methods can be used to finesse the ill-posed problem of reconstructing—and explaining—oscillatory responses. We offer an overview of recent developments in this field, focusing on (i) the use of MEG data and Empirical Bayes to build hierarchical models for group analyses—and the identification of important sources of inter-subject variability and (ii) the construction of novel dynamic causal models of intralaminar recordings to explain layer-specific activity. We hope to show that electrophysiological measurements contain much more spatial information than is often thought: on the one hand, the dynamic causal modelling of non-invasive (low spatial resolution) electrophysiology can afford sub-millimetre (hyper-acute) resolution that is limited only by the (spatial) complexity of the underlying (dynamic causal) forward model. On the other hand, invasive microelectrode recordings (that penetrate different cortical layers) can reveal laminar-specific responses and elucidate hierarchical message passing and information processing within and between cortical regions at a macroscopic scale. In short, the careful and biophysically grounded modelling of sparse data enables one to characterise the neuronal architectures generating oscillations in a remarkable detail.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Neural rhythms have been associated with a variety of cognitive functions; including working memory (Pesaran et al. 2002; Siegel et al. 2009), visual attention (Buschman and Miller 2007; Fries 2009; Kornblith et al. 2015; Womelsdorf et al. 2006), cortical representations (Buzsáki and Chrobak 1995; Schoffelen et al. 2005), feature binding (Tallon-Baudry et al. 1996) and information propagation in feedforward/feedback directions in cortical hierarchies (Bastos et al. 2012; Friston et al. 2015). Oscillatory activity is also thought to be the signature of aberrant neuronal processing in psychiatric diseases (Uhlhaas and Singer 2012), such as autism (Dickinson et al. 2015) or schizophrenia (Gonzalez-Burgos and Lewis 2008) and can be used to disclose mechanisms underlying intersubject variability (Pinotsis et al. 2013). Gamma band responses in particular, have been shown to reflect various input attributes, like the size of visual objects (Pinotsis et al. to appeara; Perry et al. 2013), luminance (Swettenham et al. 2013) and contrast (Pinotsis et al. 2014; Ray and Maunsell 2010; Roberts et al. 2013). Here, we consider powerful tools from Bayesian inference to illustrate the wealth of information about brain function that neural rhythms and electrophysiological responses afford. In this setting, Bayesian deconvolution and empirical Bayes are used to finesse the ill-posed problem of reconstructing and explaining electromagnetic sources and oscillatory responses. In this setting, neural activity is described by probability densities parameterized by physiological or anatomical (lead field) parameters and hyper-parameters that embody assumptions about random effects. This description provides a generative model of how underlying signals are caused, which can be used to optimise the model—and its (physiological) parameters. This approach calls on a combination of forward and backward modelling that involves simulating predicted responses (using biologically plausible anatomical models) and Bayesian inversion to estimate cortical structure and function. We will showcase two applications of Variational Bayes to extract information from neuroimaging data, see also (Pinotsis and Friston 2014a): First, we will illustrate the richness of non-invasive recordings by reviewing recent studies that use parametric empirical Bayes (PEB) to characterise intersubject variability—in cortical function—using non-invasive electrophysiology. Second, we will preview a new study that uses laminar data and Bayesian model comparison to analyse oscillatory recordings obtained from the prefrontal cortex during a delayed saccade task. These complementary examples show how biologically informed modelling of electrophysiological measurements can, on the one hand, allow questions about microcircuitry to be answered using macroscopic (non-invasive) data, while on the other hand microscopic (invasive) data can be used to inform hypotheses about neuronal interactions at a macroscopic scale.

Hierarchical Bayesian Models and the Analysis of Neuroimaging Data

In this section, we summarize some theoretical results and show how (i) parametric empirical Bayes (PEB) can be used to quantify group effects in multi-subject studies—by optimizing hierarchical Bayesian models and (ii) how Bayesian model comparison allows us to reconcile formally distinct (compartmental and mean field) models and construct DCMs of laminar probe data.

The first application provides a fresh perspective on the use of non-invasive electrophysiology: M/EEG are often thought to have excellent temporal but limited spatial resolution, see e.g., (Lütkenhöner 2003). However, in previous work we have shown how variational Bayesian inference can be used as a mathematical microscope to yield non-invasive estimates of cortical anatomy, structure and function, see e.g., (Pinotsis et al. 2013). At the same time, M/EEG responses can illuminate the neurobiological mechanisms underpinning neural rhythms (Friston et al. 2015; Pinotsis et al. 2014). Here, we present further results that illustrate the rich spatial information in non-invasive data and argue that this richness is (only) bounded by the models used to explain empirical data—and the experimental design used to elicit those state (e.g., experimental artefacts, number and location of the sensors, length of recording sessions etc.), see also (Troebinger et al. 2014). Exploiting this rich spatial information rests upon formulating appropriate hierarchical Bayesian models that generate predictions of neural activity and group effects (Friston et al. 2016). A general form of these models accommodates both within and between subject effects. For example:

where \(y_{i}\) is a matrix of i-th subject responses, \(\varGamma_{i} (\theta^{(1)} )\) represents the (differential equation or dynamic causal) model that generates these responses with parameters \(\theta^{(1)}\), \(\varGamma (\theta^{(2)} )\) is the between subject (second level) model that describes intersubject variability in the parameters of the first level model. The second level maps second to first level parameters (e.g., group means to subject-specific parameters), where \(\varepsilon^{(i)}\) represent random effects at each level (e.g., intersubject variability and observation noise). Below, we combine these second level models with models of brain activity that make predictions about the dynamics of coupled excitatory and inhibitory populations. In these applications, \(\varGamma_{i} (\theta^{(1)} )\) captures biophysical (see e.g. (Deco et al. 2008; Pinotsis and Friston 2014a) behaviours that are caused by key architectures and (synaptic) connectivity parameters of interest. Bayesian procedures allow us to identify the form of hierarchical models and estimate their (hidden) parameters using observed responses and Variational Bayesian inference (Friston et al. 2007, 2008).

In the context of non-invasive electrophysiology, the hierarchical model (2) poses the difficult inversion problem of finding neural source estimates in the context of intersubject variability. This involves (i) partitioning the covariance of observed data into observation error and components that can be explained in terms of neuronal responses, that themselves entail components due to second level (between-subject) level variability; (ii) exploiting differential equation models to provide anatomical and physiological constraints on the explanation for first level (within subject) responses—usually in terms of (synaptic) connectivity estimates. The second level covariance components specify whether the parameters of the dynamical model at the first level are random or fixed effects, while dynamical models provide predictions of the dynamics at source and sensor space, which depend upon cortical anatomy and physiology.

In summary, hierarchical or empirical Bayesian modelling of the sort implied by Eq. (2) allows us to perform efficient source reconstruction and obtain connectivity estimates by replacing phenomenological constraints (e.g., based on autoregressive modelling and temporal smoothness considerations) by spatiotemporal constraints based on models of neuronal activity. This can be thought as an alternative to autoregressive models, which model statistical dependencies among measured signals—as opposed to the neuronal processes generating measurements. In dynamic causal modelling, one uses a forward or generative model of distributed processing to estimate the (coupling) parameters of that model. Inference then proceeds assuming nonlinear within-subject effects and linear between subject effects. This allows one to distinguish among competing hypotheses about the mechanisms and architectures generating the data and the nature of group effects in multiple subject studies (Friston et al. 2015, 2016; Pinotsis et al.; to appear).

Crucially, the use of Bayesian model reduction (BMR) allows one to reduce the computational burden of inverting PEB models from multiple subjects and enables an efficient scoring and averaging of large sets of (nested) models (Friston and Penny 2011). Bayesian model reduction entails the estimation of a posterior density over hidden model parameters for a reduced model (defined in terms of a prior density) using just the posterior density estimated from a full model (with a complete set of parameters). We can express the generative model in Eq. 1 in terms of a likelihood model and the implicit (empirical) priors:

Here, \(y\) denotes the data obtained from all subjects (indexed by i) and the generative model \(\varGamma_{i}\) is a function of model parameters at the first or within-subject level: \(\theta^{(1)}\). These parameterize the connectivity architecture mediating responses, the observation function \(\varphi \subset \theta^{(1)}\) and the spectra of the inputs and channel noise, \(\{ \alpha_{n} ,\alpha_{u} ,\beta_{n} ,\beta_{u} \} \subset \theta^{(1)}\). Gaussian assumptions about sampling errors \(\varepsilon^{(1)}\) provide the likelihood model at the first (within-subject) level: \(p(y_{i} |\theta^{(1)} )\). To explain intersubject variability this model is supplemented with a mapping from group means to subject-specific estimates: \(\theta^{(1)} = (X \otimes I)\theta^{(2)} + \varepsilon^{(2)}\), where \(\varepsilon^{(2)}\) are random effects (at the between–subject level) and X is a design matrix containing between-subject explanatory variables. Below, using Bayesian model reduction, we adjudicate among competing hypotheses about the intrinsic connections that show intersubject variability. Effectively, this involves comparing the evidence for random effects models with and without (combinations of) between subject effects on (combinations) of connectivity parameters.

In this hierarchical model, constraints on the posterior density over model parameters are provided by the level above. In variational Bayesian inference, the approximate posterior over the second level parameters is obtained by optimising its sufficient statistics (i.e., mean and covariance) with respect to a (second level) free energy:

The key thing about this free energy is that it can be evaluated (using BMR) without optimising the first level posterior. This means the second level parameters (e.g., group means) can be optimised or estimated, for any given model of priors, without reinventing the model at the first level. Technically, the inversion of the hierarchical or empirical Bayesian model only requires the posterior density from the inversion of each subject’s DCM. In short, the use of BMR allows one to make inferences at the group level without having to re-estimate subject-specific parameters; see (Friston et al. 2015, 2016) for details and a study of robustness of this scheme—and (Litvak et al. in press) for a reproducibility study using independent data under formally distinct models. Finally, after obtaining optimized second level estimates, these can be used as empirical priors to recursively optimize densities over parameters at the first level. The latter approach is not necessary but can finesse the local minima problem inherent in nonlinear (dynamic causal) modelling at the first level and allows one to estimate subject—or trial—specific parameters when a subset of subjects (or trials) provide more informative data than others (e.g. because of differences in lead fields), see (Friston et al. 2016).

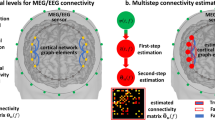

The second application of (approximate) Bayesian inference considered below uses posterior means obtained from compartmental (conductance based) models (Jones et al. 2007; Mainen and Sejnowski 1996; Prinz et al. 2003; Traub et al. 1991) as empirical priors. We first obtain simulated responses from a compartmental model that has been previously shown to faithfully represent the cortical microarchitecture—and has been used to model MEG responses during a tactile stimulation paradigm (Bush and Sejnowski 1993; Jones et al. 2007). We then use these simulated data to optimize the mean-field (lumped) parameters of a homologous neural mass model. The resulting parameters provide prior constraints on neural mass models that can be used for subsequent dynamic causal modelling of empirical responses. This approach ensures the neural mass model has construct validity, in relation to more detailed (compartmental) models of cortical microcircuitry; see Fig. 1 for a summary of this approach.

Schematic of the validation steps. a We first establish the functional equivalence between the model of Jones et al. (2007) and its symmetric variant. Here, horizontal arrows of different widths in the left panel denote asymmetric connectivities and delays between mini-columns—depicted as rectangles containing superficial and deep pyramidal cells (SP and DP) and inhibitory interneurons (II). In the right panel a mean field reduction of the model (and the symmetry assumptions about lateral connections) reveals a setup similar to that adopted in neural mass models. b We then establish the construct validity of the corresponding mass model in relation to mean field model above. This is achieved by fitting the model to synthetic data obtained from its compartmental homologue. c Finally, we show how this model can distinguish between superficial and deep responses obtained with laminar probes. We exploit Bayesian model selection and compute the relative log-evidence for plausible (left) and implausible (right) experimental setups, where the probes of laminar sensors are placed in the correct and inverted locations, see below

Our aim, in this second study, is to make inferences using data collected with laminar probes (see below). The first level generative model makes predictions of layer specific responses, where we eschew the difficult problem of inverting detailed (compartmental) models (due model complexity and conditional dependencies) by using simulated data obtained with a microscopic (compartmental) model \(m_{CM}\) to inform a (mean field) neural mass model \(m_{MF}\) of empirical data. In other words, we consider the joint optimization of compartmental and neural mass models, assuming they are homologous (i.e., they explain the same underlying cortical function and structure, see Pinotsis et al. under review, for more details). Effectively, we use the conditional densities obtained after fitting simulated data as empirical priors for subsequent analyses of empirical data. In what follows, we illustrate this approach after first describing a study of individual differences in gamma oscillations.

Neural Models and Their Inversion with Variational Bayes

Neural models describe brain activity at different scales, ranging from single cells to whole brain networks. In this review, we consider both population and compartmental models. In the first application using Bayesian model reduction, we consider a neural field model with a canonical cortical microcircuitry following (Bastos et al. 2012; Pinotsis et al. 2014). In the second application—that focuses on the analysis of interlaminar data—we consider a compartmental model that follows the microcircuitry introduced in (Bush and Sejnowski 1993) and its neural mass counterpart introduced in (Pinotsis et al. under review), see Fig. 2.

The Bush and Sejnowski mass model. This figure shows the evolution equations that specify a neural mass of a single source. This model contains four populations occupying different cortical layers: the pyramidal cell population of the Jansen and Rit model is here split into two subpopulations allowing a separation of the sources of forward and backward connections in cortical hierarchies. Firing rates within each sub-population provide inputs to other populations and subsequent convolution of presynaptic activity generates postsynaptic depolarization. We treat the activity in superficial and deep populations as separate predictors—as opposed to common neural mass model applications that use weighted mixtures of activity from all subpopulations. Excitatory connections are in black and inhibitory connections are in red

Population models come in different flavours, for a review see (Deco et al. 2008; Moran et al. 2015) with some cardinal distinctions; namely the distinction between convolution and conductance dynamics, the distinction between neural mass and mean field formulations and the distinction between point sources and neural field models. The first distinction pertains to the dynamics or equations of motion within a single population. Convolution models formulate synaptic dynamics in terms of a (linear) convolution operator; whereas conductance based models consider the (non-linear) coupling between conductance and voltage. The second distinction is between the behaviour of a neuronal population or ensemble of neurons—as described with their mean or a point probability mass over state space. This contrasts with mean field approaches that model the ensemble density, where different ensemble densities are coupled through their expectations and covariances; in other words, these models include a nonlinearity that follows from the interaction between first and second order moments. This extra realism allows them to reproduce faster population dynamics; for example, somatosensory evoked potentials (Marreiros et al. 2010; Pinotsis et al. 2013b). Finally, there is a distinction between models of populations as point sources (c.f., equivalent current dipoles) and models that have an explicit spatial domain over (cortical) manifolds that call on neural fields. Neural field models are defined in terms of (integro-) differential equations that describe cortical dynamics in terms of (spatially) distributed sources sending afferent connections, conduction delays and lumped synaptic time constants (Pinotsis and Friston 2014b). These equations prescribe the activity in neuronal populations occupying bounded manifolds (patches) in different layers that lie beneath the cortical surface. In summary, field and mass models offer a coarse-grained description of spatiotemporal dynamics of brain sources in terms of smooth (analytic) connectivity matrices that also depend on time (and perhaps space).

Compartmental models on the other hand, operate at the single cell level. They yield precise descriptions of the anatomy, morphology and biophysical properties of the neurons that constitute populations. These models provide detailed descriptions of intracellular (longitudinal) currents within the long apical dendrites of synchronized cortical pyramidal cells, see e.g. (Bazhenov et al. 2002; Einevoll 2014; Krupa et al. 2008; Lindén et al. 2010; Ramirez-Villegas et al. 2015; Roth and Häusser 2001; Santaniello et al. 2015). These models embody the laminar structure of a cortical column and can characterize the cellular and circuit level processes that are measured with multielectrode arrays, MEG or electrocorticography. They provide characterizations of neuronal morphology and how neurons are grouped together to form spatially extended networks with well-behaved intrinsic (inter-and intra-laminar) connectivity. In the analysis of microelectrode data below, we employ a compartmental model that was originally used to explain somatosensory evoked responses measured with MEG during a tactile stimulation paradigm (Jones et al. 2007), and its neural mass analogue. Having specified the particular generative or forward model of observed that physiological responses the next step is to estimate the evidence and parameters of competing models; usually using dynamic causal modelling (DCM).

DCM offers a framework for the inversion of state space models using a Variational Bayesian algorithm known as Variational Laplace. This is based on the optimization of a cost function called variational Free Energy, \({\mathcal{F}}\). This provides a bound on the model log-evidence that—under Gaussian assumptions about the posterior density and random effects—acquires a simple form: see (Friston et al. 2007) for details. A standard model inversion corresponds to the case when \({\mathcal{F}}\) is given by Eq. (3), while the empirical Bayesian approach used here considers the case where \({\mathcal{F}}\) is defined at the first and second level on a hierarchical model (within and between subject respectively)—and is optimized with respect to first and second level posteriors (Eq. 3). Crucially, this optimization is computationally efficient, because the second level free energy receives a contribution from the first level that can be computed easily for any (reduced) priors, given the (pre-computed) posterior under full priors: see Friston et al. (2015, 2016) and subsequent applications for more details.

Compartmental models usually include a large number of parameters that renders the (ill-posed) inverse problem of mapping responses to laminar-specific neuronal sources quite hard. This mapping has been addressed using methods like current source density (Freeman and Nicholson 1975; Koo et al. 2015; Mitzdorf and Singer 1977; Sakamoto et al. 2015) and more recently Laminar population analysis (Einevoll et al. 2007; Ness et al. 2015). We show below that this problem can be bypassed using an alternative approach. This approach uses Bayesian model comparison and predictions from a compartmental model to establish a formal equivalence with a population model. After the this equivalence or construct validity has been established, variational Bayes can be used to invert the population model as described above. Bayesian model selection is based on the (variational free energy approximation to the) relative log-evidence of competing models (Bayes factor):

If \(B_{ij} > 3\), we can say that model mi is better than mj—or more exactly, there is strong evidence for the i-th model relative to the j-th model.

Explaining Intersubject Variability in Gamma Responses Using Neural Fields

Neural rhythms are thought to reflect summed activity from excitatory and inhibitory pools of neurons under various input stimuli and in several cortical sources, e.g. (Hauck et al. 2007; Xing et al. 2009). We show below that by analysing such responses with neural field models and PEB, we can understand which mechanisms (manifested in the dynamics of coupled populations and lateral connections) are important for explaining individual differences in local gamma responses (or other phenotypes). In the following, we consider a particular form of Eq. (1) where \(\varGamma_{i} (\theta^{(1)} )\) is a likelihood model that produces observed (cross spectral) responses at sensors l and m (Pinotsis et al. 2014):

Here, \(L_{r} (k,\varphi )\) is the Fourier transform of the lead field of the q-th sensor, \(\dag\) denotes the conjugate transpose matrix and \(Q = [q_{1} ,q_{2} ,q_{3} ,q_{4} ]\) is a vector of coefficients that weights the contributions of each neuronal population to the observed MEG signal. Here, \(g_{u} (\omega )\) is a spatiotemporal representation of fluctuations or inputs driving induced responses, which we assume to be a mixture of white and pink temporal components. These contributions are based on anatomical properties and the lead field configuration of each population (e.g. inhibitory neurons do not generate a large dipole), where each electrode or sensor has its own sensitivity profile, reflecting the topographic structure of the underlying cortical source.

Equation (5) describes the predicted cross spectra as a function of the power of underlying neuronal fluctuations \(g_{u} (\omega )\) and transfer functions \(T(k,\omega ,\theta^{(1)} )\) that depend upon model parameters at the first or within-subject level: \(\theta^{(1)}\), see Table 1. For the explicit form of the transfer functions \(T(k,\omega ,\theta^{(1)} )\) we refer the interested reader to (Pinotsis et al. 2014).

Below we use the likelihood model given by Eq. (5) and PEB to study intersubject variability in (stimulus-locked) oscillations recorded with MEG during a visual perception paradigm (Perry et al. 2013). Technical details of this analysis can be found in (Pinotsis et al. to appear). Here, our focus is on explaining these results from the vantage point of hierarchical Bayesian inference (see above). We used cross spectral densities as data features that were taken from observed responses, while the subject was looking at stationary, vertically oriented bars. These spectral responses showed sustained activity in the 30–80 Hz range that varied across individuals with stimulus size: these responses either showed an approximately linear (monotonic) increase in the gamma-band response or a saturating response with increasing size, akin to surround suppression. So what are the key mechanisms that could explain these individual differences?

As intimated earlier, inferences and Bayesian model comparison at the group level necessitate the use of hierarchical models. In other words, \(\varGamma (\theta^{(2)} ) = (X \otimes W)\beta\), where X and W are design matrices describing group and within subject effects respectively. In our application, we assume (for simplicity), \(W = I\) and consider three proxies to describe phenotypic variations between subjects; namely, the change in amplitude of gamma responses with increasing stimulus size, the peak frequency over all stimuli and the amplitude of gamma responses averaged over stimuli (Perry et al. 2013). These proxies or phenotypes enter the design matrix X, creating the model space depicted in Fig. 3. First, we fit the (first level) model to individual subject data as in standard DCM approach. Then, we use Bayesian model reduction to invert the hierarchical model (2). The Kronecker tensor product with the identity matrix \(X \otimes I\) means that we have a second level parameter for every second level (phenotypic) variable and every first level (connectivity) parameter. This means one can identify the combination of connectivity parameters and phenotypic variables that best explains intersubject variability. This model space corresponds to all combinations of between subject effects. Having defined the hierarchical model we can now establish the significance of any group effects using Bayesian model reduction over (second level) models; see Fig. 3.

Above design matrix containing the between subject effects; these include a constant term and three parametric variables based upon electrophysiological characterisations of each subject. Left model space comprising second level effects encoded by the design matrix. Right posterior probability over models shows all three between subject effects are necessary to explain between subject gamma response variability

Having established the importance of the (between subject) explanatory variables, we can now focus on the main question; in particular, which factors mediate intersubject variability in gamma band responses. To address this question, we performed an exhaustive search over combinations of second level parameters, that is, the 30 parameters describing the effects of the three explanatory variables on the ten intrinsic connections of our neural field model. Using BMR, we scored every combination of parameters and identified key connections explaining intersubject variability in gamma responses. Bayesian model averages of these parameters are shown in Fig. 4: interestingly, individual differences can be explained by connections to and from inhibitory interneurons within a local source in the visual cortex. This speaks to crucial rule of fast inhibitory interneurons for the genesis of gamma rhythms—often referred to as the ING hypothesis (Lytton and Sejnowski 1991; Whittington et al. 2000). This example shows how one can obtain mechanistic explanations for intersubject variability in the peak gamma frequency, observed during visually induced oscillations and follows a similar study that asked whether this variability can be attributed to cortical structure or function (Pinotsis et al. 2013a). In contrast to the earlier paper—that used a summary statistic approach—the current study used a purely probabilistic approach based on empirical Bayesian models.

Posterior estimates obtained using BMR. Second level effects comprised differences in gamma responses with stimulus size and associated gamma peak frequency. Posterior means are in grey and 90 % confidence intervals are depicted in red. Individual differences in spectral responses seem to implicate connections to and from inhibitory interneurons (intrinsic connections five and nine are highlighted in thick lines in the insert on the right: inhibitory cells and connections are shown in red, while excitatory populations and connections are shown in black). Model posteriors for models with and without each second level parameter are shown separately for the constant term or group mean (bottom left panel) and group effects (bottom middle and right panels)

In short, this example shows how non-invasive (macroscopic) data can be used to make inferences at a microcircuitry level. In this instance, we have taken the opportunity to highlight inferences in the setting of hierarchical or empirical Bayesian models that accommodate intersubject variability. Our conclusion is that intersubject variability in visually induced gamma responses is best explained by differences in the intrinsic (laminar) connectivity to and from inhibitory interneurons.

Modelling Layer-Specific Activity Using Neural Mass Models

We now turn to our second example that involves laminar responses and a slightly different neural circuitry that follows the architecture used in (Bush and Sejnowski 1993). We consider two versions of Eqs. (1); these correspond to a compartmental and a neural mass model respectively

The first of the above models is a well-known conductance based (microscopic) model (Bush and Sejnowski 1993). In this model, neurons and their constituent parts (axonal arbours, soma etc.) are considered as cylindrical conductors (segments) and transmembrane potentials are given by aggregates of Ohmic currents. These currents flow across the compartment, forming an RC circuit and obey Kirchhoff’s law. \(L_{im}\) are lead field coefficients for each compartment and sensor, \(A_{m} ,l_{m}\) are the cross-sectional area and the length of compartment m (projected in a direction perpendicular to apical dendrites). \(\rho_{m}\), \(c_{m}^{{}}\) are the axial resistivity and membrane capacitance and \(J_{m} (t)\) is the longitudinal current density. This model yields detailed descriptions of intracellular longitudinal currents—within the long apical dendrites of synchronized cortical pyramidal cells—that follow from cable theory. Neuronal populations are modelled as spatially organised networks with the soma of principal cells in supragranular and infragranular layers. This model captures the laminar structure of cortical columns and can characterize the cellular and circuit level processes that are measured with multi-electrode arrays or MEG. It also provides a model of neuronal morphology and how neurons are grouped together to form spatially extended networks, with precise connectivity.

The second model considered above is the neural mass variant of the Bush and Sejnowski 1993) model, see Fig. 2. The crucial difference between the two models in Eq. (6) is that the latter operates at the mesoscale and cannot describe microscopic effects like dendritic delays or back propagation. However, by fitting responses generated by its homologous microscopic model (using DCM), we obtain a prior distribution of neural mass model parameters that can faithfully explain responses recorded with laminar probes, see also Pinotsis et al. under review. In other words, we can establish a mapping between detailed compartmental models based upon conductances and simpler neural mass models based upon (implicit) synaptic convolutions. This mapping uses exactly the same inference machinery used to analyse empirical data but, in this instance, we are fitting neural mass models to responses that are generated by detailed compartmental models.

A Working Memory Task and Experimental Data

Recordings were obtained from a monkey performing a memory guided saccade task. The monkey was trained to fixate on a central white dot during the 250 ms presentation of a red dot (cue) in the periphery of the animal's vision. This cue was presented at one of six potential locations evenly spread on an annulus 10° from the fixation point. After the cue, dots appeared at all of the six locations while the monkey maintained central fixation over a two second memory delay. Then, the central fixation dot turned purple and the peripheral stimuli disappeared. This told the monkey to make a direct saccade to the remembered location of the red cue dot to receive a juice reward. We recorded local field potentials from a 24 channel multi-contact laminar electrode implanted within prefrontal cortex.

To help locate the electrode relative to the cortical layers, we also trained the monkey to maintain fixation while a white disk with a radius of 11 visual degrees was repeatedly flashed for 50 ms intervals. A current source density analysis of the visually evoked potentials during this task permitted the identification of the cortical lamina surrounding the electrode. (Godlove et al. 2014). An example of this type of analysis is shown in Fig. 5. This figure illustrates peristimulus time responses of current source density channels (top) and the corresponding current source density depth variations (bottom) from a laminar probe placed in the prefrontal cortex over all cortical layers (Mitzdorf and Singer 1978). This analysis reveals current sinks and sources and allowed us to identify the first active sink, which corresponds to middle (granular) layers of cortex. Thus, we can localize each of the 24 channels of the laminar electrode in relation to the granular layer—and determine whether they lie in the superficial as opposed to deep layers. In the final part of this review, we use this analysis to establish the validity of our neural mass model above and show that it can successfully explain layer specific responses.

Current source density channels (top) and profile across channels (bottom). We find that the first active sink corresponds to unipolar channel 7. This enables us to distinguish contacts that measure responses from distinct cortical layers, that is superficial (contacts 3–4) and deep (contacts 9–10) populations

Dynamic Causal Modelling of Laminar Probe Data

After equiping the mass model of Fig. 2 with with priors that are consistent with compartmental models (see above), we inverted the empirical responses induced during the delay period in the working memory task. We then used Bayesian model comparison to test whether the model could successfully identify the layer (superficial vs deep) from which we recorded responses.

We find that although this model is formulated at a mesoscopic scale, it could indeed distinguish between activity arising from different layers, see Fig. 6. For example, Bayesian model comparison revealed a relative log-evidence of 26 when we swapped supercial and deep recordings. Generally, a relative log-evidence of three or more can be taken as strong evidence for one model over another (Kass and Raftery 1995). The remarkable thing about this result is that the neural mass model has no explicit notion of space or laminar depth. In other words, the distinction between superficial and deep populations rests purely on their connectivity and synaptic time constants, without any explicit reference to their spatial deployment across multiple cortical layers. The (correct) mapping between superficial and deep populations to their laminar depth endorses or validates the prior constraints on their respective (synaptic) parameters—that can generate complicated mixtures of spectral responses.

Spectral responses and model fits during the delay period from pairs of superficial and deep contacts across the laminar probe. These fits used bipolar data from the delay period, averaged across all conditions, calculated using Hanning tapers. Model predictions are in red and empirical (spectral) data features in blue. The inset shows a log-evidence difference for models with the correct and incorrect designation of laminar depth (two superficial and deep pyramidal cell populations). This relative evidence shows that the model can correctly distinguish between responses originating from different layers

This sort of validation is potentially important as laminar probes offer unbiased estimates of laminar specific activity—and the hierarchical architecture of extrinsic (between-source) connections rests primarily on laminar specific connectivity. Laminar probes are therefore an exciting technique that allows us to measure brain responses at an unprecedented resolution. When combined with dynamic causal modelling, these responses could be used to address several important questions that we review in the Conclusions below.

Conclusion

We have reviewed two recent advances in hierarchical or empirical Bayesian modelling that enable us to deal with the ill-posed (inverse) problem of source reconstruction and disclose processes that generate neural rhythms—and mediate the propagation of information within and between cortical sources. In the first illustration, we showed that non-invasive recordings contain rich spatial information, despite the low resolution of M/EEG; while in the second, we attempted to reconcile models operating at the microscopic and mesoscopic scale—and show that DCM can correctly assign superficial and deep cortical dynamics to laminar-specific responses. This intralaminar DCM affords the same computational efficiency and advantages as all other models in DCM, but can exploit microscopic (laminar-specific) data that embody effects like antidromic currents and back-propagation.

Dynamic causal modelling of electrophysiological responses obtained with laminar probes is in a position to address several neurobiological questions: one of the key reasons to use laminar probes is that they can provide direct evidence that distinct cortical layers are involved in particular oscillations and computations. Previous studies of visual cortex suggest that oscillatory activity in the gamma and alpha bands are segregated by layers. Neurons in deep layers (layers 5 and 6) show spike-field coherence in the alpha band while superficial layer (layers 2 and 3) neurons show spike-field coherence in the gamma band (Buffalo et al. 2011). A question of outstanding importance is whether this laminar segregation is preserved in prefrontal cortex, which is involved in top-down control of sensory cortex (Miller and Cohen 2001).

Superficial and deep cortical layers also tend to have distinct cortical targets. For example, superficial-layer neurons form the strongest source of cortico-cortical feedforward projections, while deep-layer neurons contribute predominately to cortico-cortical feedback (Markov et al. 2013). Recently, it was shown that the laminar connectivity pattern of a particular inter-areal (extrinsic) connection predicts how inter-areal oscillatory activity is coordinated between the areas: when a given connection is dominated by superficial-layer projection neurons (characteristic of feedforward connectivity), gamma and theta oscillations predominate. On the other hand, when a reciprocal connection is dominated by deep-layer projection neurons (characteristic of feedback connectivity), beta oscillations appear to mediate neuronal communication (Bastos et al. 2015). These results suggest that the precise laminar pattern of extrinsic connectivity profoundly shapes inter-areal communication, and the frequencies over which it occurs.

Therefore, it appears that the functional role of oscillations is shaped both by cortical layer and inter-areal connection types, as reviewed above. This provides an important motivation for using multi-laminar probes to examine cortical activity during cognitive tasks. An equally important motivation, from our perspective, is to interrogate the canonical microcircuit hypothesis, which predicts that neurons in distinct cortical layers contribute to distinct computations (Bastos et al. 2012; Friston and Kiebel 2009; Friston et al. 2015). In particular, it has been hypothesized that superficial layer neurons can encode prediction error, while deep layer neurons encode expectations that are used to generate descending (feedback) predictions. The hierarchical message passing of prediction errors and predictions is thought to be a crucial part of predictive coding under the Bayesian brain hypothesis (Friston and Kiebel 2009; Rao and Ballard 1999; Summerfield et al. 2008). Therefore, multilaminar data may provide the critical test for these hypotheses: the modelling of these data could establish whether neuronal activities (spikes and LFPs) from different cortical layers are indeed involved in distinct computations implied by predictive coding. In parallel, these data can be used to inform and nuance laminar-resolved dynamic causal models of the sort we entertain here. In turn, more advanced models will provide more precise descriptions of laminar-resolved activity, allowing more mechanistic questions to be asked about the role of specific neuronal populations, intrinsic connectivity and their neuromodulators in cognition.

References

Bastos AM, Usrey WM, Adams RA, Mangun GR, Fries P, Friston KJ (2012) Canonical microcircuits for predictive coding. Neuron 76:695–711

Bastos AM, Vezoli J, Bosman CA, Schoffelen J-M, Oostenveld R, Dowdall JR, De Weerd P, Kennedy H, Fries P (2015) Visual areas exert feedforward and feedback influences through distinct frequency channels. Neuron 85:390–401

Bazhenov M, Timofeev I, Steriade M, Sejnowski TJ (2002) Model of thalamocortical slow-wave sleep oscillations and transitions to activated states. J Neurosci 22:8691–8704

Buffalo EA, Fries P, Landman R, Buschman TJ, Desimone R (2011) Laminar differences in gamma and alpha coherence in the ventral stream. Proc Natl Acad Sci 108:11262–11267

Buschman TJ, Miller EK (2007) Top-down versus bottom-up control of attention in the prefrontal and posterior parietal cortices. Science 315:1860–1862

Bush PC, Sejnowski TJ (1993) Reduced compartmental models of neocortical pyramidal cells. J Neurosci Methods 46:159–166

Buzsáki G, Chrobak JJ (1995) Temporal structure in spatially organized neuronal ensembles: a role for interneuronal networks. Curr Opin Neurobiol 5:504–510

Deco G, Jirsa VK, Robinson PA, Breakspear M, Friston K (2008) The dynamic brain: from spiking neurons to neural masses and cortical fields. PLoS Comput Biol 4:e1000092

Dickinson A, Bruyns-Haylett M, Jones M, Milne E (2015) Increased peak gamma frequency in individuals with higher levels of autistic traits. Eur J Neurosci 41:1095–1101

Einevoll GT, Pettersen KH, Devor A, Ulbert I, Halgren E, Dale AM (2007) Laminar population analysis: estimating firing rates and evoked synaptic activity from multielectrode recordings in rat barrel cortex. J Neurophysiol 97(3):2174–2190

Einevoll GT (2014) LFPy: a tool for biophysical simulation of extracellular potentials generated by detailed model neurons. Front Neuroinform 7:41

Freeman JA, Nicholson CH (1975) Experimental optimization of current source-density technique for anuran cerebellum. J Neurophysiol 38(2):369–382

Fries P (2009) Neuronal gamma-band synchronization as a fundamental process in cortical computation. Annu Rev Neurosci 32:209–224

Friston K, Kiebel S (2009) Predictive coding under the free-energy principle. Philos Trans R Soc B Biol Sci 364:1211–1221

Friston K, Penny W (2011) Post hoc Bayesian model selection. Neuroimage 56:2089–2099

Friston K, Mattout J, Trujillo-Barreto N, Ashburner J, Penny W (2007) Variational free energy and the Laplace approximation. Neuroimage 34:220–234

Friston K, Harrison L, Daunizeau J, Kiebel S, Phillips C, Trujillo-Barreto N, Henson R, Flandin G, Mattout J (2008) Multiple sparse priors for the M/EEG inverse problem. Neuroimage 39:1104–1120

Friston KJ, Bastos AM, Pinotsis D, Litvak V (2015) LFP and oscillations—what do they tell us? Curr Opin Neurobiol 31:1–6

Friston KJ, Litvak V, Oswal A, Razi A, Stephan KE, vanWijk BC, Ziegler G, Zeidman P (2016) Bayesian model reduction and empirical Bayes for group (DCM) studies. Neuroimage 128:413–431

Godlove DC, Maier A, Woodman GF, Schall JD (2014) Microcircuitry of agranular frontal cortex: testing the generality of the canonical cortical microcircuit. J Neurosci 34:5355–5369

Gonzalez-Burgos G, Lewis DA (2008) GABA neurons and the mechanisms of network oscillations: implications for understanding cortical dysfunction in schizophrenia. Schizophr Bull 34:944–961

Hauck M, Lorenz J, Engel AK (2007) Attention to painful stimulation enhances γ-band activity and synchronization in human sensorimotor cortex. J Neurosci 27:9270–9277

Jones SR, Pritchett DL, Stufflebeam SM, Hämäläinen M, Moore CI (2007) Neural correlates of tactile detection: a combined magnetoencephalography and biophysically based computational modeling study. J Neurosci 27:10751–10764

Kass RE, Raftery AE (1995) Bayes factors. J Am Stat Assoc 90:773–795

Koo PC, Thome J, Berger C, Foley P, Hoeppner J (2015) Current source density analysis of resting state EEG in depression: a review. J Neural Transm 1–10. doi:10.1007/s00702-015-1432-2

Kornblith S, Buschman TJ, Miller EK (2015) Stimulus load and oscillatory activity in higher cortex. Cereb Cortex 26:3772–3784

Krupa M, Popović N, Kopell N, Rotstein HG (2008) Mixed-mode oscillations in a three time-scale model for the dopaminergic neuron. Chaos Interdiscip J Nonlinear Sci 18:15106

Lindén H, Pettersen KH, Einevoll GT (2010) Intrinsic dendritic filtering gives low-pass power spectra of local field potentials. J Comput Neurosci 29:423–444

Lütkenhöner B (2003) Magnetoencephalography and its Achilles’ heel. J Physiol Paris 97:641–658

Lytton WW, Sejnowski TJ (1991) Simulations of cortical pyramidal neurons synchronized by inhibitory interneurons. J Neurophysiol 66:1059–1079

Mainen ZF, Sejnowski TJ (1996) Influence of dendritic structure on firing pattern in model neocortical neurons. Nature 382:363–366

Markov NT, Ercsey-Ravasz M, Van Essen DC, Knoblauch K, Toroczkai Z, Kennedy H (2013) Cortical high-density counterstream architectures. Science 342:1238406

Marreiros AC, Kiebel SJ, Friston KJ (2010) A dynamic causal model study of neuronal population dynamics. Neuroimage 51:91–101

Miller EK, Cohen JD (2001) An integrative theory of prefrontal cortex function. Annu Rev Neurosci 24:167–202

Mitzdorf ULLA, Singer WOLF (1977) Laminar segregation of afferents to lateral geniculate nucleus of the cat: an analysis of current source density. J Neurophysiol 40(6):1227–1244

Mitzdorf U, Singer W (1978) Prominent excitatory pathways in the cat visual cortex (A 17 and A 18): a current source density analysis of electrically evoked potentials. Exp Brain Res 33:371–394

Moran R, Pinotsis DA, Friston K (2015) Neural masses and fields in dynamic causal modeling. Neural Masses Fields Model Dyn Brain Act 7:190

Ness TV, Chintaluri C, Potworowski J, Łęski S, Głąbska H, Wójcik DK, Einevoll GT (2015) Modelling and analysis of electrical potentials recorded in microelectrode arrays (MEAs). Neuroinformatics 13(4):403–426

Perry G, Hamandi K, Brindley LM, Muthukumaraswamy SD, Singh KD (2013) The properties of induced gamma oscillations in human visual cortex show individual variability in their dependence on stimulus size. Neuroimage 68:83–92

Pesaran B, Pezaris JS, Sahani M, Mitra PP, Andersen RA (2002) Temporal structure in neuronal activity during working memory in macaque parietal cortex. Nat Neurosci 5:805–811

Pinotsis D, Friston K (2014a) Gamma oscillations and neural field DCMs can reveal cortical excitability and microstructure. AIMS Neurosci 1:4–24

Pinotsis D, Friston K (2014b) Extracting novel information from neuroimaging data using neural fields. EPJ Nonlinear Biomed Phys 2:5

Pinotsis Schwarzkopf DS, Litvak V, Rees G, Barnes G, Friston KJ (2013) Dynamic causal modelling of lateral interactions in the visual cortex. Neuroimage 66:563–576

Pinotsis D, Leite M, Friston K (2013) On conductance-based neural field models. Front Comput Neurosci 7:158

Pinotsis DA, Brunet N, Bastos A, Bosman CA, Litvak V, Fries P, Friston KJ (2014) Contrast gain-control and horizontal interactions in V1: a DCM study. Neuroimage 92:143–155

Prinz AA, Billimoria CP, Marder E (2003) Alternative to hand-tuning conductance-based models: construction and analysis of databases of model neurons. J Neurophysiol 90:3998–4015

Ramirez-Villegas JF, Logothetis NK, Besserve M (2015) Sharp wave-ripple complexes in a reduced model of the hippocampal CA3-CA1 network of the macaque monkey. BMC Neurosci 16:P15

Rao RP, Ballard DH (1999) Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat Neurosci 2:79–87

Ray S, Maunsell JH (2010) Differences in gamma frequencies across visual cortex restrict their possible use in computation. Neuron 67:885

Roberts MJ, Lowet E, Brunet NM, Ter Wal M, Tiesinga P, Fries P, De Weerd P (2013) Robust gamma coherence between macaque V1 and V2 by dynamic frequency matching. Neuron 78:523–536

Roth A, Häusser M (2001) Compartmental models of rat cerebellar Purkinje cells based on simultaneous somatic and dendritic patch-clamp recordings. J Physiol 535:445–472

Sakamoto K, Kawaguchi N, Yagi K, Mushiake H (2015) Spatiotemporal patterns of current source density in the prefrontal cortex of a behaving monkey. Neural Networks 62:67–72

Santaniello S, McCarthy MM, Montgomery EB, Gale JT, Kopell N, Sarma SV (2015) Therapeutic mechanisms of high-frequency stimulation in Parkinson’s disease and neural restoration via loop-based reinforcement. Proc Natl Acad Sci 112:E586–E595

Schoffelen J-M, Oostenveld R, Fries P (2005) Neuronal coherence as a mechanism of effective corticospinal interaction. Science 308:111–113

Siegel M, Warden MR, Miller EK (2009) Phase-dependent neuronal coding of objects in short-term memory. Proc Natl Acad Sci 106:21341–21346

Summerfield C, Trittschuh EH, Monti JM, Mesulam M-M, Egner T (2008) Neural repetition suppression reflects fulfilled perceptual expectations. Nat Neurosci 11:1004–1006

Swettenham JB, Muthukumaraswamy SD, Singh KD (2013) BOLD responses in human primary visual cortex are insensitive to substantial changes in neural activity. Front Hum Neurosci 7:76

Tallon-Baudry C, Bertrand O, Delpuech C, Pernier J (1996) Stimulus specificity of phase-locked and non-phase-locked 40 Hz visual responses in human. J Neurosci 16:4240–4249

Traub RD, Wong RK, Miles R, Michelson H (1991) A model of a CA3 hippocampal pyramidal neuron incorporating voltage-clamp data on intrinsic conductances. J Neurophysiol 66:635–650

Troebinger L, López JD, Lutti A, Bestmann S, Barnes G (2014) Discrimination of cortical laminae using MEG. Neuroimage 102:885–893

Uhlhaas PJ, Singer W (2012) Neuronal dynamics and neuropsychiatric disorders: toward a translational paradigm for dysfunctional large-scale networks. Neuron 75:963–980

Whittington MA, Traub RD, Kopell N, Ermentrout B, Buhl EH (2000) Inhibition-based rhythms: experimental and mathematical observations on network dynamics. Int J Psychophysiol 38:315–336

Womelsdorf T, Fries P, Mitra PP, Desimone R (2006) Gamma-band synchronization in visual cortex predicts speed of change detection. Nature 439:733–736

Xing D, Yeh C-I, Shapley RM (2009) Spatial spread of the local field potential and its laminar variation in visual cortex. J Neurosci 29:11540–11549

Acknowledgments

This work was funded by the Wellcome Trust Grant No 088130/Z/09/Z, NIMH R37MH087027 and The MIT Picower Innovation Fund.

Author information

Authors and Affiliations

Corresponding author

Additional information

This is one of several papers published together in Brain Topography on the “Special Issue: Controversies in EEG Source Analysis”.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Pinotsis, D.A., Loonis, R., Bastos, A. et al. Bayesian Modelling of Induced Responses and Neuronal Rhythms. Brain Topogr 32, 569–582 (2019). https://doi.org/10.1007/s10548-016-0526-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10548-016-0526-y