Abstract

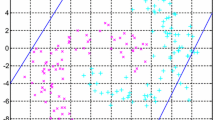

This paper presents an efficient ν-Twin Support Vector Machine Based Regression Model with Automatic Accuracy Control (ν-TWSVR). The ν-TWSVR model is motivated by the celebrated ν-SVR model (Schölkopf et al. 1998) and the recently introduced ε-TSVR model (Shao et al., Neural Comput Applic 23(1):175–185, 2013). The ν-TWSVR model automatically optimizes the parameters \(\epsilon_{1}\) and \(\epsilon_{2}\) according to the structure of the data, such that at most a specified fraction \(\nu_{1}\) (respectively \(\nu_{2}\)) of the data points contribute to the errors in the up (respectively down) bound regressor. The ν-TWSVR formulation constructs a pair of optimization problems that are mathematically derived from a related ν-TWSVM formulation (Peng, Neural Netw 23(3):365–372, 2010) by making use of an important result of Bi and Bennett (Neurocomputing 55(1):79–108, 2003). The experimental results on artificial and UCI benchmark datasets show the efficacy of the proposed model in practice.

References

Burges JC (1998) A tutorial on support vector machines for pattern recognition. Data Min Knowl Disc 2(2):121–167

Cortes C, Vapnik V (1995) Support vector networks. Mach Learn 20(3):273–297

Bradley P, Mangasarian OL (2000) Massive data discrimination via linear support vector machines. Optim Methods Softw 13(1):1–10

Cherkassky V, Mulier F (2007) Learning from data: concepts, theory and methods. Wiley, New York

Bi J, Bennett KP (2003) A geometric approach to support vector regression. Neurocomputing 55(1):79–108

Jayadeva, Khemchandani R, Chandra S (2007) Twin support vector machines for pattern classification. IEEE Trans Pattern Anal Mach Intell 29(5):905–910

Peng X (2010) TSVR: an efficient twin support vector machine for regression. Neural Netw 23(3):365–372

Khemchandani R, Goyal K, Chandra S (2016) TWSVR: regression via twin support vector machine. Neural Netw 74:14–21

Shao YH, Zhang C, Yang Z, Deng N (2013) An 𝜖-twin support vector machine for regression. Neural Comput & Applic 23(1):175–185

Schölkopf B, Bartlett P, Smola AJ, Williamson RC (1998) Support vector regression with automatic accuracy control. In: ICANN, vol 98. Springer, London, pp 111–116

Peng X (2010) A ν-twin support vector machine (ν-TSVM) classifier and its geometric algorithms. Inf Sci 180(20):3863–3875

Schölkopf B, Smola AJ, Williamson RC, Bartlett PL (2000) New support vector algorithms. Neural Comput 12(5):1207–1245

Blake CI, Merz CJ (1998) UCI repository for machine learning databases. http://www.ics.uci.edu/~mlearn/MLRepository.html

Xu Y, Wang L (2014) K-nearest neighbor-based weighted twin support vector regression. Appl Intell 41(1):299–309

Vapnik V (1998) Statistical learning theory, vol 1. Wiley, New York

Acknowledgments

The authors are extremely thankful to the learned referees whose valuable comments have helped to improve the content and presentation of the paper.

Appendices

Appendix A

Proposition 1

Suppose ν-TWSVR is applied to a dataset and results in \(\epsilon_{1} > 0\) (respectively \(\epsilon_{2} > 0\)). Then the following statements hold.

(a) \(\nu_{1}\) (respectively \(\nu_{2}\)) is an upper bound on the fraction of points with nonzero error \(\xi_{i}\) (respectively \(\eta_{i}\)).

(b) \(\nu_{1}\) (respectively \(\nu_{2}\)) is a lower bound on the fraction of support vectors for the up bound (respectively down bound) regressor.

Proof

(a) Using the KKT conditions (21) and (25) for the up bound regressor, we find that \(\xi_{i} > 0\) implies \(\beta_{i} = 0\) and \(\alpha_{i} = \frac{c_{2}}{l}\). From (22) and (26), \(e^{T}\alpha \leq c_{2}\nu_{1}\). Since every point with \(\xi_{i} \neq 0\) contributes \(\frac{c_{2}}{l}\) to \(e^{T}\alpha\), there can be at most \(l\nu_{1}\) such points. In a similar way, using the KKT conditions for the down bound regressor, we can prove that there are at most \(l\nu_{2}\) points for which \(\eta_{i} \neq 0\).

(b) Using the KKT conditions (22) and (25) with \(\epsilon_{1} \neq 0\), we find that \(\gamma = 0\). This implies that \(e^{T}\alpha = c_{2}\nu_{1}\). Since \(0 \leq \alpha_{i} \leq \frac{c_{2}}{l}\), there must be at least \(l\nu_{1}\) points for which \(\alpha_{i} \neq 0\). In a similar way, using the KKT conditions for the down bound regressor, we can prove that there are at least \(l\nu_{2}\) points for which \(\lambda_{i} \neq 0\).

□
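The counting argument in Proposition 1 can be checked numerically. The following is a minimal sketch, not the authors' code: the dual vector `alpha`, the values of `l`, `c2` and `nu1`, and the helper name `bound_fractions` are all hypothetical, chosen only so that \(0 \leq \alpha_{i} \leq c_{2}/l\) and \(e^{T}\alpha = c_{2}\nu_{1}\) hold.

```python
import numpy as np

def bound_fractions(alpha, c2, tol=1e-9):
    """Return (fraction of alpha_i at the upper bound c2/l, fraction of alpha_i > 0)."""
    alpha = np.asarray(alpha, dtype=float)
    l = alpha.size
    # Points with xi_i > 0 must have alpha_i = c2/l, so this count
    # is an upper bound on the number of error points.
    at_bound = int(np.sum(alpha >= c2 / l - tol))
    # Points with alpha_i != 0 are the support vectors.
    nonzero = int(np.sum(alpha > tol))
    return at_bound / l, nonzero / l

# Hypothetical dual solution (illustrative values, not taken from the paper).
l, c2, nu1 = 10, 1.0, 0.3
alpha = np.array([0.1, 0.1, 0.05, 0.05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])

frac_err, frac_sv = bound_fractions(alpha, c2)
print(frac_err, nu1, frac_sv)  # error fraction <= nu1 <= support vector fraction
```

Here two points sit at the bound \(c_{2}/l\) and four have nonzero multipliers, so the error fraction is at most 0.2 and the support vector fraction is 0.4, on either side of \(\nu_{1} = 0.3\).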

Appendix B: ν-TWSVR via ν-TWSVM

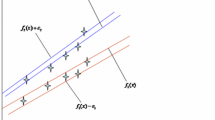

Bi and Bennett [5] have shown the equivalence between a given regression problem and an appropriately constructed classification problem. They have shown that, for a given regression training set \((A, Y)\), a regressor \(y = w^{T}x + b\) is an \(\epsilon\)-insensitive regressor if and only if the sets \(D^{+}\) and \(D^{-}\) lie on different sides of the \((n+1)\)-dimensional hyperplane \(w^{T}x - y + b = 0\), where \(D^{+} = \{(A_{i}, y_{i} + \epsilon),\ i = 1, 2, \ldots, l\}\) and \(D^{-} = \{(A_{i}, y_{i} - \epsilon),\ i = 1, 2, \ldots, l\}\).

In view of this result of Bi and Bennett [5], the regression problem is equivalent to the classification problem of the sets \(D^{+}\) and \(D^{-}\) in \(\mathbb{R}^{n+1}\). If we use the TWSVM methodology [6] to classify these two sets, we obtain TWSVM-based regression [8]. It is relevant to mention here that the classification of \(D^{+}\) and \(D^{-}\) is a special case of classification in which we have the following privileged information.

(a) The classes \(D^{+}\) and \(D^{-}\) are symmetric in nature and have an equal number of sample points.

(b) Points in the classes \(D^{+}\) and \(D^{-}\) are separated by the distance \(2\epsilon\).
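The equivalence and the two properties above can be illustrated on toy data. This is a minimal sketch under a stated assumption: the sets are taken to be \(D^{+} = \{(x_{i}, y_{i} + \epsilon)\}\) and \(D^{-} = \{(x_{i}, y_{i} - \epsilon)\}\), following the construction of Bi and Bennett [5]. The function names and the data are hypothetical.

```python
import numpy as np

def shifted_sets(A, y, eps):
    """Build D+ and D- in R^{n+1} by shifting the targets up and down by eps."""
    d_plus = np.column_stack([A, y + eps])
    d_minus = np.column_stack([A, y - eps])
    return d_plus, d_minus

def is_eps_insensitive(A, y, w, b, eps):
    """True if every residual |y_i - (w^T x_i + b)| is at most eps."""
    return bool(np.all(np.abs(y - (A @ w + b)) <= eps))

def separates(d_plus, d_minus, w, b):
    """True if w^T x - y + b <= 0 on D+ and >= 0 on D-."""
    normal = np.append(w, -1.0)  # normal vector of w^T x - y + b = 0
    return bool(np.all(d_plus @ normal + b <= 0) and np.all(d_minus @ normal + b >= 0))

# Toy data (illustrative only): a noisy line y = x.
A = np.array([[0.0], [1.0], [2.0]])
y = np.array([0.1, 0.9, 2.05])
w, b = np.array([1.0]), 0.0

for eps in (0.2, 0.05):
    d_plus, d_minus = shifted_sets(A, y, eps)
    print(eps, is_eps_insensitive(A, y, w, b, eps), separates(d_plus, d_minus, w, b))
```

For each value of `eps` the two checks agree: the regressor is \(\epsilon\)-insensitive exactly when the hyperplane separates the shifted sets. By construction the two sets have the same number of points, and corresponding points differ by \(2\epsilon\) in the last coordinate.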

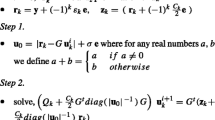

This privileged information must be exploited for better classification, since a better classification of the sets \(D^{+}\) and \(D^{-}\) will eventually lead to a better regressor. The classification of the sets \(D^{+}\) and \(D^{-}\) in \(\mathbb{R}^{n+1}\) using ν-TWSVM results in the following QPPs

and

Let us first consider problem (40). Here we note that \(\eta_{1} \neq 0\) and therefore, without loss of generality, we can assume that \(\eta_{1} > 0\). The constraint of (40) can be rewritten as

On replacing \(w_{1} := -w_{1}/\eta_{1}\), \(b_{1} := -b_{1}/\eta_{1}\) and noting that \(\eta_{1} > 0\), (40) reduces to

Next, if we replace \(e b_{1} := e b_{1} - \epsilon e\) in (42), then it reduces to

Let \(\left (2e\epsilon -\frac {\rho _{+}}{\eta _{1}}\right ):=e\epsilon _{1}\); then it reduces to

In a similar manner, assuming \(\eta_{2} > 0\) and using the replacements \(w_{2} := -w_{2}/\eta_{2}\), \(b_{2} := -b_{2}/\eta_{2}\), problem (41) can be written as

If we replace \(e b_{2} := e b_{2} + \epsilon e\) and \((2e\epsilon -\frac {\rho _{-}}{\eta _{2}}):=e\epsilon _{2}\), then the problem reduces to

Looking at problems (44) and (45), we observe that our approach is valid provided we can show that \(\epsilon _{1}=\left (2\epsilon -\frac {\rho _{+}}{\eta _{1}}\right ) \geq 0\) and \(\epsilon _{2} = \left (2\epsilon -\frac {\rho _{-}}{\eta _{2}}\right ) \geq 0\). We can prove this assertion as follows.

Since the first hyperplane \(w_{1}^{T}x + \eta_{1}y + b_{1} = 0\) is the least-squares fit for the class \(D^{+}\), there certainly exists an index \(j\) such that

Also from (40),

In particular, taking (47) for j we get

Adding (47) and (48) we get \(\epsilon _{1} = \left (2\epsilon -\frac {\rho _{+}}{\eta _{1}}\right ) \geq 0\). Similarly we can prove that \(\epsilon _{2} = \left (2\epsilon -\frac {\rho _{-}}{\eta _{2}}\right ) \geq 0\).
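The change of variables used above can be traced on the constraint alone. The following is only a sketch: it assumes that the constraint of (40) has the usual ν-TWSVM form written for the sets \(D^{+}\) and \(D^{-}\), with a slack vector \(\xi\). This is an assumption about the form of (40), not a restatement of it.

```latex
\begin{align*}
% Assumed form of the constraint of (40): nu-TWSVM constraint on D^-
-\bigl(A w_{1} + \eta_{1}(Y - \epsilon e) + e b_{1}\bigr) &\geq \rho_{+} e - \xi \\
% Substitute w_1 := -w_1/\eta_1, b_1 := -b_1/\eta_1, then divide by \eta_1 > 0
(A w_{1} + e b_{1}) - (Y - \epsilon e) &\geq \frac{\rho_{+}}{\eta_{1}} e - \frac{\xi}{\eta_{1}} \\
% Shift the bias: e b_1 := e b_1 - \epsilon e
Y - (A w_{1} + e b_{1}) &\leq \Bigl(2\epsilon - \frac{\rho_{+}}{\eta_{1}}\Bigr) e + \frac{\xi}{\eta_{1}} \\
% Set \epsilon_1 := 2\epsilon - \rho_+/\eta_1
Y - (A w_{1} + e b_{1}) &\leq \epsilon_{1} e + \frac{\xi}{\eta_{1}}
\end{align*}
```

Under this assumption the slack is only rescaled by \(1/\eta_{1}\), and the last line has the shape of an up bound constraint with tolerance \(\epsilon_{1}\), which is why the approach requires \(\epsilon_{1} \geq 0\).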

Remark 2

The above proof can be appropriately modified to show that the ε-TSVR formulation of Shao et al. [9] also follows from the results of Bi and Bennett [5] and the TWSVM methodology.

Cite this article

Rastogi, R., Anand, P. & Chandra, S. A ν-twin support vector machine based regression with automatic accuracy control. Appl Intell 46, 670–683 (2017). https://doi.org/10.1007/s10489-016-0860-5

DOI: https://doi.org/10.1007/s10489-016-0860-5