Abstract

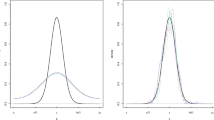

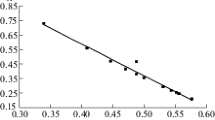

We derive the asymptotic properties of the least squares cross-validation (LSCV) selector and the direct plug-in rule (DPI) selector in kernel density estimation for circular data. The DPI selector has a convergence rate of \(O(n^{-5/14})\), whereas the LSCV selector has a rate of \(O(n^{-1/10})\). Our simulation shows that the DPI selector is more stable than the LSCV selector for both small and large sample sizes. In other words, the DPI selector outperforms the LSCV selector in both theoretical and practical performance.
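To give a feel for how far apart the two orders are, the following minimal sketch evaluates \(n^{-5/14}\) and \(n^{-1/10}\) for a few sample sizes. It is only an illustration: the constants in front of the rates are unknown here and are assumed to equal 1, and the helper name `rate` is hypothetical.

```python
# Compare the two orders quoted in the abstract.
# Assumption: leading constants are set to 1, so only the orders are compared.

def rate(n: int, exponent: float) -> float:
    """Return n**(-exponent)."""
    return n ** (-exponent)

DPI_EXPONENT = 5 / 14   # DPI selector: O(n^{-5/14})
LSCV_EXPONENT = 1 / 10  # LSCV selector: O(n^{-1/10})

for n in (100, 1_000, 10_000, 100_000):
    dpi = rate(n, DPI_EXPONENT)
    lscv = rate(n, LSCV_EXPONENT)
    print(f"n={n:>7}  DPI ~ {dpi:.4f}  LSCV ~ {lscv:.4f}  ratio = {lscv / dpi:.1f}")
```

Because the ratio of the two orders is \(n^{5/14-1/10}=n^{9/35}\), the gap widens as \(n\) grows.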

References

Abe, T., Pewsey, A. (2011). Sine-skewed circular distributions. Statistical Papers, 52, 683–707.

Agostinelli, C., Lund, U. (2017). R package ‘circular’: Circular Statistics (version 0.4-93). https://r-forge.r-project.org/projects/circular/.

Di Marzio, M., Panzera, A., Taylor, C. C. (2011). Kernel density estimation on the torus. Journal of Statistical Planning and Inference, 141, 2156–2173.

Di Marzio, M., Fensore, S., Panzera, A., Taylor, C. C. (2017). Nonparametric estimating equations for circular probability density functions and their derivatives. Electronic Journal of Statistics, 11, 4323–4346.

Di Marzio, M., Fensore, S., Panzera, A., Taylor, C. C. (2018). Circular local likelihood. Test, 1–25.

Hall, P. (1984). Central limit theorem for integrated square error of multivariate nonparametric density estimators. Journal of Multivariate Analysis, 14, 1–16.

Hall, P., Marron, J. S. (1987). Extent to which least-squares cross-validation minimises integrated square error in nonparametric density estimation. Probability Theory and Related Fields, 74, 567–581.

Hall, P., Watson, G. S., Cabrera, J. (1987). Kernel density estimation with spherical data. Biometrika, 74, 751–762.

Mardia, K. V., Jupp, P. E. (1999). Directional statistics, p. 40. London: Wiley.

R Core Team. (2018). R: A language and environment for statistical computing. Vienna: R Foundation for Statistical Computing.

Scott, D. W., Terrell, G. R. (1987). Biased and unbiased cross-validation in density estimation. Journal of the American Statistical Association, 82, 1131–1146.

Sheather, S. J., Jones, M. C. (1991). A reliable data-based bandwidth selection method for kernel density estimation. Journal of the Royal Statistical Society. Series B (Methodological), 53, 683–690.

Tsuruta, Y., Sagae, M. (2017). Higher order kernel density estimation on the circle. Statistics and Probability Letters, 131, 46–50.

Wand, M. P., Jones, M. C. (1994). Bandwidth selection. Kernel smoothing, pp. 58–88. USA: Chapman & Hall/CRC.

Acknowledgements

We would like to thank the reviewers for the helpful comments. This work was partially supported by JSPS KAKENHI Grant Nos. JP16K00043, JP24500339, and JP16H02790.

Appendix A

Proof of Theorem 2.

We set \(\gamma (y_{ij})=\gamma _{ij}\) to ease the notation. First, we calculate the expectation of \(\overline{{{\mathrm {CV}}}}(\kappa )\), given by

We set \(\gamma _{i}={{E}}_{f}[\gamma _{ij}|\varTheta _{i}]\). Then, the conditional expectation \(\gamma _{i}\) is given by

“Appendix B” in ESM presents the details. It follows from (17) that

Lemma 1

(Tsuruta and Sagae 2017) The term \(R(K(\theta )\theta ^{t})\) is equal to

where \( d_{2t}(L):=2^{-1}\mu ^{-2}_{0}(L)\delta _{2t}(L)\) and \(d(L):=d_{0}(L)\).

By considering Lemma 1, (18), and \({{E}}_{f}[f(\varTheta _{i})]=R(f)\), we find that \({{E}}_{f}[\overline{{{\mathrm {CV}}}}(\kappa )]\) is equivalent to (6).

We calculate the variance of \(\overline{{{\mathrm {CV}}}}(\kappa )\). That is,

where \(j\ne k\). Let \(I_{1}:=R((f^{(4)})^{1/2}f)\), \(I_{2}:=R(f^{(2)})R(f)\), and \(I_{3}:=R(f^{3/2})-R(f)^{2}\). Each term on the right-hand side of (19) is given by

and

“Appendix C” in ESM provides the details of (20)–(23). By considering (19)–(23), we find that \({{\mathrm {Var}}}_{f}[\overline{{{\mathrm {CV}}}}(\kappa )]\) is equivalent to (7). \(\square \)

Proof of Corollary 1.

We set \(c:={\hat{\kappa }}_{{{\mathrm {CV}}}}/\kappa _{*}\). Then, we combine Theorems 1 and 2 and find that

and

Equation (26) is a convex function with a minimum at \(c=1\). Thus, if \(c\ne 1\) and \(n\) is large, then it follows from combining (24) and (26) that

Suppose that \(c\) does not converge to 1. Recall that \(\overline{{{\mathrm {CV}}}}(c\kappa _{*})\le \overline{{{\mathrm {CV}}}}(\kappa )\) must hold for any \(\kappa \), because \({\hat{\kappa }}_{{{\mathrm {CV}}}}\) is the minimizer of \(\overline{{{\mathrm {CV}}}}(\kappa )\). Additionally, if \(n\) is large, then \(\overline{{{\mathrm {CV}}}}(\kappa )\) is a convex function with a minimum at \(\kappa =c\kappa _{*}\), because Theorem 2 shows that \(\overline{{{\mathrm {CV}}}}(\kappa )\) approximates \({\mathrm {AMISE}}(\kappa )\). Therefore, it follows that

as \(n\rightarrow \infty \). From (25) and (28), it holds that

as \(n\rightarrow \infty \). The contradiction between (27) and (29) completes the proof. \(\square \)
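The role of \({\hat{\kappa }}_{{{\mathrm {CV}}}}\) as the minimizer of a cross-validation criterion can be illustrated numerically. The sketch below is not the authors' implementation: it assumes a von Mises kernel, uses the textbook LSCV criterion \(\int {\hat{f}}^{2}-2n^{-1}\sum _{i}{\hat{f}}_{-i}(\varTheta _{i})\) rather than the centred \(\overline{{{\mathrm {CV}}}}(\kappa )\) of this paper, and minimizes over a coarse grid. The function names `vm_norm`, `vm_kernel`, and `lscv`, the grid, and the simulated sample are all hypothetical choices.

```python
import math
import random
from typing import Sequence

def vm_norm(kappa: float, m: int = 360) -> float:
    """Integral of exp(kappa*(cos t - 1)) over one period (periodic trapezoid rule)."""
    h = 2 * math.pi / m
    return h * sum(math.exp(kappa * (math.cos(-math.pi + k * h) - 1.0)) for k in range(m))

def vm_kernel(theta: float, kappa: float, norm: float) -> float:
    """von Mises kernel; the exp(-kappa) scaling avoids overflow for large kappa."""
    return math.exp(kappa * (math.cos(theta) - 1.0)) / norm

def lscv(kappa: float, data: Sequence[float], m: int = 360) -> float:
    """Textbook LSCV criterion: int fhat^2 - (2/n) * sum_i fhat_{-i}(Theta_i)."""
    n = len(data)
    norm = vm_norm(kappa, m)
    h = 2 * math.pi / m
    # Integral of the squared density estimate on a periodic grid.
    int_f2 = 0.0
    for k in range(m):
        t = -math.pi + k * h
        fhat = sum(vm_kernel(t - x, kappa, norm) for x in data) / n
        int_f2 += fhat * fhat * h
    # Leave-one-out density estimates evaluated at the observations.
    loo = 0.0
    for i, xi in enumerate(data):
        loo += sum(vm_kernel(xi - xj, kappa, norm)
                   for j, xj in enumerate(data) if j != i) / (n - 1)
    return int_f2 - 2.0 * loo / n

# Hypothetical example: 60 draws from a von Mises distribution.
random.seed(0)
sample = [random.vonmisesvariate(0.0, 2.0) for _ in range(60)]
grid = [2.0 ** k for k in range(-1, 7)]  # candidate concentrations 0.5, ..., 64
scores = {kappa: lscv(kappa, sample) for kappa in grid}
kappa_cv = min(scores, key=scores.get)
print("grid minimizer of LSCV:", kappa_cv)
```

A grid search is used only for simplicity; a one-dimensional optimizer would refine the minimizer, and the criterion can have several local minima in small samples.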

Proof of Theorem 3.

Let \(U_{ij}=T^{(4)}_{g}(\varTheta _{i}-\varTheta _{j})\), and \(U_{i}={{E}}_{f}[U_{ij}|\varTheta _{i}]\). The expectation of \({\hat{\psi }}_{4}(g)\) is given by

It follows from (9) that

Lemma 2

(Tsuruta and Sagae 2017) The term \(C_{\kappa }(L)\) is given by

By combining (31) and Lemma 2, we find that the first term on the right side of (30) is equal to

Lemma 3

(Tsuruta and Sagae 2017) We set \(\alpha _{j}(K_{\kappa }):=\int ^{\pi }_{-\pi }K_{\kappa }(\theta )\theta ^{j}\mathrm{d}\theta \). The terms \(\alpha _{2t}(K_{\kappa })\) for \(t=1,2\) are given by

and \(\alpha _{4}(K_{\kappa }) = O(\kappa ^{-2})\). Lemma 2 in Tsuruta and Sagae (2017) presents the general form of \(\alpha _{2t}(K_{\kappa })\).

It follows from Lemma 3 that

\({{E}}_{f}[U_{ij}]\) in (30) is given by the expectation of (33) over \(\varTheta _{i}\).

We obtain the bias (13) by combining (30), (32), and (34).

We now derive the variance of \({\hat{\psi }}_{4}(g)\). We set \(W_{ij}:=U_{ij}-U_{i}-U_{j}+{{E}}_{f}[U_{i}]\) and \(Z_{i}:=U_{i}-{{E}}_{f}[U_{i}]\). Then, we obtain \({{E}}_{f}[W_{ij}]=0\), \({{E}}_{f}[Z_{i}]=0\), and \({\mathrm {Cov}}_{f}[Z_{i}W_{ij}]=0\). By using \(W_{ij}\) and \(Z_{i}\), we present \({\hat{\psi }}_{4}(g)-{{E}}_{f}[{\hat{\psi }}_{4}(g)]\) as

Equation (35) shows that the variance of \({\hat{\psi }}_{4}(g)\) is equal to

By combining (33) and (34), \({{\mathrm {Var}}}_{f}[Z_{i}]\) reduces to

By considering (34), \({{E}}_{f}[U^{2}_{ij}]=g^{9/2}[G_{1,0}(S_{4})\psi _{0}+o(1)]\), and \({{E}}_{f}[U_{i}^{2}]={{E}}_{f}[U_{i}]^{2}=O(1)\) (“Appendix D” in ESM provides the details of \({{E}}_{f}[U^{2}_{ij}]\) and \({{E}}_{f}[U_{i}^{2}]\)), we obtain \({{\mathrm {Var}}}_{f}[W_{ij}]\). That is,

We obtain (11) by combining (36), (37), and (38). \(\square \)

Proof of Theorem 4.

If n is large, it follows from Lemma 3 that

The derivative of (39) is given by

where \(V_{ij}:=\kappa ^{-1/2}[\gamma (y_{ij})+\rho (y_{ij})+3/4\mu ^{-1}_{0}(L)\mu _{2}(L)\kappa ^{-1}\tau (y_{ij})]\), \(\phi _{\kappa }(y_{ij}):=\kappa C^{-1}_{\kappa }(L)\frac{\mathrm{d}}{\mathrm{d}\kappa }L_{\kappa }(y_{ij})\), \(\rho (y_{ij}):=K_{\kappa }(y_{ij})+\int ^{\pi }_{-\pi }\{\phi _{\kappa }(w)K_{\kappa }(w+y_{ij})+K_{\kappa }(w)\phi _{\kappa }(w+y_{ij})\}\mathrm{d}w-2\phi _{\kappa }(y_{ij})\), and \(\tau (y_{ij}):=\int ^{\pi }_{-\pi } K_{\kappa }(w)K_{\kappa }(w+y_{ij})\mathrm{d}w-K_{\kappa }(y_{ij})\).

“Appendix E” in ESM provides the details. The selector \({\hat{\kappa }}_{{{\mathrm {CV}}}}\) satisfies \(\mathrm{d}{{\mathrm {CV}}}(\kappa )/\mathrm{d}\kappa \mid _{\kappa ={\hat{\kappa }}_{{{\mathrm {CV}}}}}=0\). This is equivalent to

Note that \(V_{i}:={{E}}_{f}[V_{ij}|\varTheta _{i}]\). Then, we set \(H_{ij}:=V_{ij}-V_{i}-V_{j}+{{E}}_{f}[V_{i}]\) and \(X_{i}:=V_{i}-{{E}}_{f}[V_{i}]\). Then, we rewrite \(2n^{-2}\sum _{i<j}\{V_{ij}-{{E}}_{f}[V_{ij}]\}\) as

where \(2n^{-2}\sum _{i<j}H_{ij}\) is a degenerate U-statistic. We obtain the asymptotic normality for \(2n^{-1}\sum _{i}X_{i}\) from the standard Central Limit Theorem (CLT). That is,

where \(B:=16\mu ^{4}_{2}(L)\{R(f^{(4)}f^{1/2})-R(f^{\prime \prime })^{2}\}/\{\mu ^{4}_{0}(L)\}\). “Appendix F” in ESM presents the details.

We give the definition of a degenerate U-statistic. A U-statistic is defined as \(U_{n}:=\sum _{i<j}H_{ij}\), where \(H_{ij}:=H(\varTheta _{i},\varTheta _{j})\) is symmetric and \({{E}}_{f}[H_{ij}]=0\). A degenerate U-statistic is a U-statistic satisfying \({{E}}_{f}[H_{ij}|\varTheta _{i}]=0\). The following lemma describes the asymptotic normality of a degenerate U-statistic.
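As a brief check of this definition, consider the centred kernel \(H_{ij}:=V_{ij}-V_{i}-V_{j}+{{E}}_{f}[V_{i}]\) introduced earlier in this proof. Assuming that \(\varTheta _{1},\ldots ,\varTheta _{n}\) are independent and identically distributed with density \(f\), so that \({{E}}_{f}[V_{j}|\varTheta _{i}]={{E}}_{f}[V_{j}]={{E}}_{f}[V_{i}]\) for \(j\ne i\), the degeneracy condition follows directly:

\[
{{E}}_{f}[H_{ij}|\varTheta _{i}]={{E}}_{f}[V_{ij}|\varTheta _{i}]-V_{i}-{{E}}_{f}[V_{j}|\varTheta _{i}]+{{E}}_{f}[V_{i}]=V_{i}-V_{i}-{{E}}_{f}[V_{i}]+{{E}}_{f}[V_{i}]=0.
\]

The same argument applies to any kernel centred in this way.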

Lemma 4

(Hall 1984) Assume that \(H_{ij}\) is symmetric, \({{E}}_{f}[H_{ij}|\varTheta _{i}]=0\) almost surely, and \({{E}}_{f}[H^{2}_{ij}]<\infty \) for each \(n\). We set \(G_{ij}:={{E}}_{f}[H_{ii}H_{ij}]\). If

as \(n\rightarrow \infty \), then,

We obtain the asymptotic normality for \(2n^{-2}\sum _{i<j}H_{ij}\) from Lemma 4. That is,

See “Appendix G” in ESM for details. We combine (42) and (44) to derive the asymptotic normality of \(2n^{-2}\sum _{i<j}V_{ij}\) as

where \(\sigma ^{2}_{1}:=B n^{-1}\kappa ^{-5}+2n^{-2}\kappa ^{-1/2}M_{1,0}(L)R(f)\). We take \(\kappa ={\hat{\kappa }}_{{{\mathrm {CV}}}}\) in (45). Then, by Corollary 1, we replace \({\hat{\kappa }}_{{{\mathrm {CV}}}}\) in the variance with \(\kappa _{*}\). Thus, it follows from combining (41) and (45) that

where \(\sigma ^{2}_{2}:=B n^{-1}\kappa _{*}^{-5}+2n^{-2}\kappa _{*}^{-1/2}M_{1,0}(L)R(f)\). We ignore the first term of the variance in (46), because the first term is \(O(n^{-3})\), whereas the second term is \(O(n^{-11/5})\), using \(\kappa _{*}=O(n^{2/5})\). From (3), we obtain \(R(f^{\prime \prime })\mu ^{2}_{2}(L)n/(d(L)\mu _{0}(L))=\kappa ^{5/2}_{*}\). Thus, (46) reduces to

Let \(g(x)=x^{-5/2}\). Then, it follows that \(g(1)=1\) and \(\{g^{\prime }(1)\}^{2}=25/4\). We obtain the asymptotic normality for \({\hat{\kappa }}_{{{\mathrm {CV}}}}/\kappa _{*}\) by applying the delta method to (47). That is,

Theorem 4 follows from (48), which completes the proof. \(\square \)
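Two pieces of arithmetic in this proof are easy to verify mechanically: the orders of the two terms of \(\sigma ^{2}_{2}\) under \(\kappa _{*}=O(n^{2/5})\), and the value \(\{g^{\prime }(1)\}^{2}=25/4\) for \(g(x)=x^{-5/2}\) used in the delta method. The following is a small sketch for that purpose; the helper `central_diff` is a hypothetical name, and the derivative is approximated numerically rather than symbolically.

```python
from fractions import Fraction

# kappa_* = O(n^{2/5}), as used in the proof.
KAPPA_EXP = Fraction(2, 5)

# Orders in n of the two terms of sigma_2^2:
#   B n^{-1} kappa_*^{-5}   and   2 n^{-2} kappa_*^{-1/2} M_{1,0}(L) R(f)
first = Fraction(-1) + Fraction(-5) * KAPPA_EXP
second = Fraction(-2) + Fraction(-1, 2) * KAPPA_EXP
print(first, second)  # -3 -11/5

def g(x: float) -> float:
    """Transformation used in the delta method step."""
    return x ** -2.5

def central_diff(func, x: float, h: float = 1e-6) -> float:
    """Central finite-difference approximation of func'(x)."""
    return (func(x + h) - func(x - h)) / (2 * h)

slope = central_diff(g, 1.0)
print(round(slope, 4), round(slope ** 2, 4))  # approximately -2.5 and 6.25
```

Since \(-3<-11/5\), the first term vanishes faster and is dominated by the second, which is why it is dropped.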

Proof of Theorem 5.

The Taylor expansion of \({\hat{\kappa }}_{{\mathrm {PI}}}= {\hat{\kappa }}_{{\mathrm {PI}}}({\hat{\psi }}_{4}(g_{*}))\) is given by

(49) reduces to

Noting \(W_{ij}:=U_{ij}-U_{i}-U_{j}+{{E}}_{f}[U_{i}]\), and \(Z_{i}:=U_{i}-{{E}}_{f}[U_{i}]\), it follows that (35) becomes

where \(2n^{-2}\sum _{i<j}W_{ij}\) is a degenerate U-statistic. From (37), we obtain the asymptotic normality from the standard CLT. That is,

If we choose \(g_{*}=W(S)n^{2/7}\), then applying Lemma 4 to \(2n^{-2}\sum _{i<j}W_{ij}\) gives

as \(n\rightarrow \infty \). “Appendix H” in ESM presents the details. By combining (52) and (53), we obtain the asymptotic distribution of (51). That is,

Theorem 3 shows that \({{\mathrm {Var}}}_{f}[{\hat{\psi }}_{4}(g_{*})]\) is of order \(n^{-5/7}\). Thus, (54) reduces to

The main term \({\hat{\psi }}_{4}(g_{*})-\psi _{4}\) on the right side of (50) is equivalent to

Corollary 2 shows that \({{\mathrm {Bias}}}_{f}[{\hat{\psi }}_{4}(g_{*})]=O(n^{-4/7})\). Hence, \(n^{5/14}{{\mathrm {Bias}}}_{f}[{\hat{\psi }}_{4}(g_{*})]\) is \(O(n^{-3/14})\), and this term is negligible when \(n\) is large. Therefore, the asymptotic normal distribution for \(n^{5/14}\{{\hat{\psi }}_{4}(g_{*})-\psi _{4}\}\) is given by

Therefore, Theorem 5 follows from (50) and (57) as \(n\rightarrow \infty \), which completes the proof. \(\square \)
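The exponent bookkeeping behind the \(n^{5/14}\) scaling in this proof can be written out explicitly. The display below only restates the orders quoted above (variance of order \(n^{-5/7}\) and bias of order \(n^{-4/7}\)) and is meant as a reading aid rather than an additional result:

\[
\sqrt{O(n^{-5/7})}=O(n^{-5/14}),\qquad n^{5/14}\cdot O(n^{-4/7})=O(n^{5/14-8/14})=O(n^{-3/14})\rightarrow 0 \quad \text{as } n\rightarrow \infty .
\]

The standard deviation therefore sets the \(O(n^{-5/14})\) rate, while the scaled bias vanishes.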

About this article

Cite this article

Tsuruta, Y., Sagae, M. Theoretical properties of bandwidth selectors for kernel density estimation on the circle. Ann Inst Stat Math 72, 511–530 (2020). https://doi.org/10.1007/s10463-018-0701-x

DOI: https://doi.org/10.1007/s10463-018-0701-x