Abstract

This article is concerned with proving the consistency of Efron’s bootstrap for the Kaplan–Meier estimator on the whole support of a survival function. While previous works address the asymptotic Gaussianity of the Kaplan–Meier estimator without restricting time, we enable the construction of bootstrap-based time-simultaneous confidence bands for the whole survival function. Other practical applications include bootstrap-based confidence bands for the mean residual lifetime function or the Lorenz curve as well as confidence intervals for the Gini index. Theoretical results are complemented with a simulation study and a real data example which result in statistical recommendations.

Similar content being viewed by others

References

Akritas, M. G. (1986). Bootstrapping the Kaplan–Meier Estimator. Journal of the American Statistical Association, 81(396), 1032–1038.

Akritas, M. G., Brunner, E. (1997). Nonparametric methods for factorial designs with censored data. Journal of the American Statistical Association, 92(438), 568–576.

Allignol, A., Beyersmann, J., Gerds, T., Latouche, A. (2014). A competing risks approach for nonparametric estimation of transition probabilities in a non-Markov illness-death model. Lifetime Data Analysis, 20(4), 495–513.

Andersen, P. K., Borgan, Ø., Gill, R. D., Keiding, N. (1993). Statistical models based on counting processes. New York: Springer.

Billingsley, P. (1999). Convergence of probability measures (2nd ed.). New York: Wiley.

Dobler, D. (2016). Nonparametric inference procedures for multi-state Markovian models with applications to incomplete life science data. PhD thesis Universität Ulm, Deutschland.

Dobler, D., Pauly, M. (2017). Bootstrap- and permutation-based inference for the Mann–Whitney effect for right-censored and tied data. TEST early view:1–20. https://doi.org/10.1007/s11749-017-0565-z.

Efron, B. (1981). Censored data and the bootstrap. Journal of the American Statistical Association, 76(374), 312–319.

Gastwirth, J. L. (1971). A general definition of the Lorenz curve. Econometrica, 39(6), 1037–1039.

Gill, R. D. (1980). Censoring and stochastic integrals. Mathematical Centre Tracts 124, Amsterdam: Mathematisch Centrum.

Gill, R. D. (1983). Large sample behaviour of the product-limit estimator on the whole line. The Annals of Statistics, 11(1), 49–58.

Gill, R. D. (1989). Non- and semi-parametric maximum likelihood estimators and the von mises method (part 1) [with discussion and reply]. Scandinavian Journal of Statistics, 16(2), 97–128.

Grand, M. K., Putter, H. (2016). Regression models for expected length of stay. Statistics in Medicine, 35(7), 1178–1192.

Horvath, L., Yandell, B. (1987). Convergence rates for the bootstrapped product-limit process. The Annals of Statistics, 15(3), 1155–1173.

Janssen, A., Pauls, T. (2003). How do bootstrap and permutation tests work? The Annals of Statistics, 31(3), 768–806.

Klein, J. P., Moeschberger, M. L. (2003). Survival analysis: Techniques for censored and truncated data (2nd ed.). New York: Springer.

Lo, S.-H., Singh, K. (1986). The product-limit estimator and the bootstrap: Some asymptotic representations. Probability Theory and Related Fields, 71(3), 455–465.

Loprinzi, C. L., Laurie, J. A., Wieand, H. S., Krook, J. E., Novotny, P. J., Kugler, J. W., et al. (1994). Prospective evaluation of prognostic variables from patient-completed questionnaires. Journal of Clinical Oncology, 12(3), 601–607.

Meilijson, I. (1972). Limiting properties of the mean residual lifetime function. The Annals of Mathematical Statistics, 43(1), 354–357.

Pocock, S. J., Ariti, C. A., Collier, T. J., Wang, D. (2012). The win ratio: A new approach to the analysis of composite endpoints in clinical trials based on clinical priorities. European Heart Journal, 33(2), 176–182.

Pollard, D. (1984). Convergence of stochastic processes. New York: Springer.

R Development Core Team. (2016). R: A language and environment for statistical computing. R Foundation for Statistical Computing Vienna, Austria. http://www.R-project.org.

Revuz, D., Yor, M. (1999). Continuous martingales and Brownian motion (3rd ed.). Berlin: Springer.

Stute, W., Wang, J.-L. (1993). The strong law under random censorship. The Annals of Statistics, 21(3), 1591–1607.

Tattar, P., Vaman, H. (2012). Extension of the Harrington–Fleming tests to multistate models. Sankhya B, 74(1), 1–14.

Therneau, T. M., Lumley, T. (2017). A package for survival analysis in S. http://CRAN.R-project.org/package=survival. version 2.41-3.

Tse, S.-M. (2006). Lorenz curve for truncated and censored data. Annals of the Institute of Statistical Mathematics, 58(4), 675–686.

van der Vaart, A. W., Wellner, J. (1996). Weak convergence and empirical processes. New York: Springer.

Wang, J.-G. (1987). A note on the uniform consistency of the Kaplan–Meier estimator. The Annals of Statistics, 15(3), 1313–1316.

Wellner, J. A. (1978). Limit theorems for the ratio of the empirical distribution function to the true distribution function. Zeitschrift für Wahrscheinlichkeitstheorie und verwandte Gebiete, 45(1), 73–88.

Ying, Z. (1989). A note on the asymptotic properties of the product-limit estimator on the whole line. Statistics & Probability Letters, 7(4), 311–314.

Acknowledgements

The author would like to thank Markus Pauly (Ulm University) for helpful discussions. Furthermore, the discussion with a referee and his / her suggestions to present numerical results and to illustrate the methods with the help of real data have helped to improve this manuscript significantly.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix

Denote by \(\mathbf X \) the \(\sigma \)-algebra generated by all observations \(X_1, \delta _1, X_2, \delta _2, \dots \). Some of the following proofs (in “Appendix A”) rely on the ideas of Gill (1983). In order to also apply (variants of) his lemmata in our bootstrap context, “Appendix B” below contains all required results. ‘Tightness’ in the support’s right boundary \(\tau \) for the bootstrapped Kaplan–Meier estimator is essentially shown via a bootstrap version of the approximation theorem for truncated estimators as in Theorem 3.2 in Billingsley (1999); cf. “Appendix C.” Define by \(Y(u) = n {\widehat{H}}_{n-}(u)\) the process counting the number of individuals at risk of dying, and by \(Y^*(u)\) its bootstrap version.

Proofs

Proof of Lemma 2

Proof of (a): This part of the lemma is proven by showing the convergence of the integral from 0 to \(\tau \) in probability. Since, by the continuous mapping theorem and the boundedness away from zero of \(\frac{1}{G}\) on [0, t], the convergence \( -\int _0^{t} \frac{\mathrm d{\widehat{S}}_n}{{\widehat{G}}_{n-}} {\mathop {\longrightarrow }\limits ^{p}}- \int _0^{t} \frac{\mathrm dS}{G_-}\) holds as \(n \rightarrow \infty \), the assertion then follows from an application of the continuous mapping theorem to the difference functional.

Let \(t < \tau \) and suppose (4) holds. Letting \(t \uparrow \tau \), the integral \(- \int _0^{t} \frac{\mathrm dS}{G_-}\) converges toward \(- \int _0^{\tau } \frac{\mathrm dS}{G_-} < \infty \). It remains to apply Theorem 3.2 of Billingsley (1999) to the distance \(\rho (-\int _0^{t} \frac{\mathrm d{\widehat{S}}_n}{{\widehat{G}}_{n-}}, -\int _0^{\tau } \frac{\mathrm d{\widehat{S}}_n}{{\widehat{G}}_{n-}}) = | -\int _0^{\tau } \frac{\mathrm d{\widehat{S}}_n}{{\widehat{G}}_{n-}} + \int _0^t \frac{\mathrm d{\widehat{S}}_n}{{\widehat{G}}_{n-}} |\) for a verification of the assertion for \(t=0\). Thus, we show that for all \(\varepsilon > 0\),

Let \({\widehat{T}}_n = X_{n:n}\) again be the largest observation among \(X_1, \dots , X_n\) and define, for any \(\beta > 0\),

By Lemmata 6 and 7, the probability \( p_\beta := 1 - P(B_\beta ) \leqslant \beta + \frac{e}{\beta } \exp (-1 / \beta )\) is arbitrary small for sufficiently small \(\beta > 0\). Hence, by Theorem 1.1 of Stute and Wang (1993) (applied for the concluding convergence),

For large \(t < \tau \) and by the continuity of S, the far right-hand side of the previous display equals \(p_\beta \).

Suppose now that (4) is violated. By the Glivenko–Cantelli theorems in Stute and Wang (1993) for Kaplan–Meier estimators of continuous survival functions and by letting G be continuous w.l.o.g. (distributing the atoms of G uniformly on small intervals with no mass of S, without affecting the integral), the integral over (0, t] converges almost surely to \(-\int _0^t (\mathrm dS)/ G_-\) for each \(t < \tau \) by the continuous mapping theorem. But this integral is arbitrarily large for sufficiently large \(t \uparrow \tau \). Hence, the stated a.s. convergence follows.

Proof of (b): First note that the uniform convergences in probability in Theorems IV.3.1 and IV.3.2 of Andersen et al. (1993), p. 261ff., yield, for any \(\varepsilon > 0\),

Further, the dominated convergence theorem and \( S_- \mathrm dA = - \mathrm dS\) show that

as \(u,v \rightarrow \tau \). Hence, it remains to verify the remaining Condition (3.8) of Theorem 3.2 in Billingsley (1999) in order to conclude this proof. That is, for each positive \(\delta \) we show

To this end, rewrite \({\widehat{\varGamma }}_n(u,v) - {\widehat{\varGamma }}_n(\tau , \tau )\) as

The left-hand integral is bounded in absolute value by \(-\int _{u \wedge v}^\tau \frac{\mathrm d{\widehat{S}}_n}{{\widehat{G}}_{n-}}\) which goes to \(-\int _{u \wedge v}^\tau \frac{\mathrm dS}{G_{-}}\) in probability as \(n \rightarrow \infty \) by (a). For large u, v this is arbitrarily small.

The remaining integral is bounded in absolute value by

By Lemmata 6 and 7 this integral is bounded from above by a constant times

on a set with arbitrarily high probability. For sufficiently large n we also have \({\widehat{T}}_n > u \wedge v\) with arbitrarily high probability. Next, Theorem 1.1 in Stute and Wang (1993) yields

As above the dominated convergence theorem shows the negligibility of this integral as \(u,v\rightarrow \tau \). \(\square \)

Proof of Theorem 1

For the proof of weak convergence of the bootstrapped Kaplan–Meier estimator on each Skorohod space D[0, t], \(t < \tau \), see, for example, Akritas (1986), Lo and Singh (1986) or Horvath and Yandell (1987). By defining these processes as constant functions after t, the convergences equivalently hold on \(D[0,\tau ]\). This takes care of Condition (a) in Lemma 9, while (c) is obviously fulfilled by the continuity of the limit Gaussian process.

To close the indicated gap for the bootstrapped Kaplan–Meier process on the whole support \([0,\tau ]\), it remains to analyze Condition (b). This is first verified for the truncated process by following the strategy of Gill (1983) while applying the martingale theory of Akritas (1986) for the bootstrapped counting processes. Thus, the truncation technique of Lemma 9 shows the convergence in distribution of the truncated process. Finally, the negligibility of the remainder term is shown similarly as in Ying (1989).

We will make use of the fact that our martingales, stopped at arbitrary stopping times, retain the martingale property; cf. Andersen et al. (1993), p. 70, for sufficient conditions on this matter. Similarly to the largest event or censoring time \({\widehat{T}}_n\), introduce the largest bootstrap time \(T^*_n = \max _{i=1, \dots , n} X_i^*\), being an integrable stopping time with respect to the filtration of Akritas (1986) who used Theorem 3.1.1 of Gill (1980): Hence, we choose the filtration given by

see also Gill (1980), p. 26, for a similar minimal filtration. Note that we did not include the indicators \(\varvec{1}\{X_i^* \leqslant t\}\) into the filtration since their values are already determined by all the \(X_i^*\varvec{1}\{X_i^* \leqslant t\}\): According to our assumptions, \(X_i^* > 0\) a.s. for all \(i=1,\dots ,n\).

We would first like to verify condition (b) in Lemma 9 for the stopped bootstrap Kaplan–Meier process. That is, for each \(\varepsilon > 0\) and an arbitrary subsequence \((n') \subset (n)\) there is another subsequence \((n'') \subset (n')\) such that

for all \(\varepsilon > 0\). Here \(\sigma (\mathbf X ) = \mathcal F_0\) summarizes the collected data. Due to the boundedness away from zero, i.e., \(\inf _{t \leqslant s < {\widehat{T}}_n} {\widehat{S}}_n(s) > 0\), we may rewrite the bootstrap process

for each \(s \in [t,{\widehat{T}}_n)\) of which the bracket term is a square integrable martingale; see Akritas (1986) again. Hence, the term \(\sqrt{n} (S_n^* - {\widehat{S}}_n)(s)\) in (7) equals

whereof \((M^*_n(s))_{s \in [0,{\widehat{T}}_n)}\) is again a square integrable martingale. Indeed, its predictable variation process evaluated at the stopping time \(s = T_n^*\) is finite (having the sufficient condition of Andersen et al. (1993), p. 70, for a stopped martingale to be a square integrable martingale in mind): The predictable variation is given by

where \(H^*_n\) is the empirical survival function of \(X_1^*, \dots , X_n^*\) and \(\varDelta f\) denotes the increment process \(s \mapsto f(s+) - f(s-)\) of a monotone function f. The supremum in (7) is bounded by

of which the right-hand term is not greater than \( | M_n^*(t) | {\widehat{S}}_n(t \wedge T^*_n) \). By the convergence in distribution of the bootstrapped Kaplan–Meier estimator on each \(D[0,{\widetilde{\tau }}]\), \({\widetilde{\tau }} < \tau \), we have convergence in conditional distribution of \( M^*_n(t) {\widehat{S}}_n(t \wedge T^*_n) \) given \(\mathbf X \) toward \(N(0, S^2(t) \varGamma (t,t))\) in probability. Hence,

almost surely along subsequences \((n'')\) of arbitrary subsequences \((n') \subset (n)\). Since the variance of the normal distribution in the previous display goes to zero as \(t \uparrow \tau \), cf. (2.4) in Gill (1983), the above probability vanishes as \(t \uparrow \tau \).

By Lemma 8, the remainder \(\sup _{t \leqslant s < {\widehat{T}}_n} | M^*_n(s) - M^*_n(t) | {\widehat{S}}_n(s \wedge T^*_n) \) is not greater than

Since, given \(\mathbf X \), \({\widehat{S}}_n\) is a bounded and predictable process, this integral is a square integrable martingale on \([t,{\widehat{T}}_n)\). We proceed as in Gill (1983) by applying Lenglart’s inequality, cf. Sect. II.5.2 in Andersen et al. (1993): For each \(\eta > 0\) we have

We intersect the event on the right-hand side of (10) with \(B_{H,n,\beta }^* := \{H^*_n(s-) \geqslant \beta {\widehat{H}}_n(s-)\) for all \(s \in [t,T^*_n]\}\) and also with \(B_{S,n,\beta }^* := \{S^*_n(s) \leqslant \beta ^{-1} {\widehat{S}}_n(s)\) for all \(s \in [t,T^*_n]\}\). According to Lemmata 6 and 7, the conditional probabilities of these events are at least \( 1- \exp (1-1/\beta )/\beta \) and \(1-\beta \), respectively, for any \(\beta \in (0,1)\). Thus, (10) is less than or equal to

In order to show the almost sure negligibility of the indicator function as \(n \rightarrow \infty \) and then \(t \uparrow \tau \), we analyze the corresponding convergence of the integral. Since \(- \mathrm d{\widehat{S}}_n = {\widehat{S}}_{n-} \mathrm d{\widehat{A}}_n\), the integral is less than or equal to

Lemma 2 implies that for each subsequence \((n') \subset (n)\) there is another subsequence \((n'') \subset (n')\) such that \(-\int _t^{\tau } \frac{\mathrm d{\widehat{S}}_{n}}{{\widehat{G}}_{n-}} \rightarrow - \int _t^{\tau } \frac{\mathrm dS}{G_-}\) a.s. for all \(t \in [0, \tau ] \cap \mathbb {Q}\) along \((n'')\). Due to \(P(Z_1 \in \mathbb {Q}) = 0\), the same convergence holds for all \( t \leqslant \tau \). Letting now \(t \uparrow \tau \) shows that the indicator function in (11) vanishes almost surely in limit superior along \((n'')\). The remaining terms are arbitrarily small for sufficiently small \(\eta , \beta > 0\). Hence, all conditions of Lemma 9 are met and the assertion follows for the stopped process

Finally, we show the asymptotic negligibility of

cf. Ying (1989) for similar considerations. Again by Lemma 6, we have for any \(\varepsilon > 0, \beta \in (0,1)\) that

Define the generalized inverse \({\widehat{S}}_n^{-1}(u) := \inf \{ s \leqslant \tau : {\widehat{S}}_n(s) \geqslant u\}\). The independence of the bootstrap drawings as well as arguments of quantile transformations yields

The cardinality in the display goes to infinity in probability, and hence almost surely along subsequences. Indeed, for any constant \(C >0\),

Clearly, this indicator function goes to 1 as \(n \rightarrow \infty \). \(\square \)

Proof of Lemma 3

For the most part, we follow the lines of the above proof of Lemma 2 by verifying Condition (3.8) of Theorem 3.2 in Billingsley (1999). To point out the major difference to the previous proof, we consider

where \(J^*(u) = \mathbf 1 \{ Y^*(u) > 0 \}\). The arguments of Akritas (1986) show that

is a square integrable martingale with predictable variation process given by

After writing \(S_n^* G^*_n = H_n^*\), a twofold application of Lemmata 6 and 7 (at first to the bootstrap quantities \(S_n^*\) and \(H_n^*\), then to the Kaplan–Meier estimators \({\widehat{S}}_n\) and \({\widehat{H}}_n\)) shows that the predictable variation in the previous display is bounded from above by

on a set with arbitrarily large probability depending on \(\beta \in (0,1)\). Here we also used that \({\widehat{S}}_{n-} \mathrm d{\widehat{A}}_n = \mathrm d{\widehat{S}}_n\). Due to (5), Theorem 1.1 of Stute and Wang (1993) yields

and hence the asymptotic negligibility of the predictable variation process in probability. By Rebolledo’s theorem (Theorem II.5.1 in Andersen et al. 1993, p. 83), \(\int _{u \wedge v}^\tau \frac{S^*_{n-}}{G^*_{n-}} J^* \mathrm d( A_n^* - {\widehat{A}}_n) \) hence goes to zero in conditional probability. The remaining integral \( \int _{u \wedge v}^\tau \frac{S^*_{n-}}{G^*_{n-}} J^* \mathrm d{\widehat{A}}_n\) is treated similarly with Lemmata 6 and 7 and Theorem 1.1 of Stute and Wang (1993) yielding a bound in terms of \(\int _{u \wedge v}^\tau \frac{\mathrm dS}{G_-} \). This is arbitrarily small for sufficiently large \(u,v < \tau \). \(\square \)

Proof of Lemma 4

Let the function spaces \(D[t_1,\tau ]\) and \({\widetilde{D}}[t_1,t_2]\) be equipped with the supremum norm. For some sequences \(t_n \downarrow 0\) and \(h_n \rightarrow h\) in \(D[t_1,\tau ]\) such that \(\theta + t_n h_n \in {\widetilde{D}}[t_1,t_2]\), consider the supremum distance

The proof is concluded if (12) goes to zero. For an easier access the expression in the previous display is first analyzed for each fixed \(s \in [t_1,t_2]\):

For large n, each denominator is bounded away from zero: To see this, denote \(\varepsilon := \inf _{s \in [t_1,t_2]}|\theta (s)|\) and \(C := \sup _{s \in [t_1,t_2]} |h(u)|\). Thus,

for each n large enough. It follows that, for each such n additionally satisfying \(t_n \leqslant \varepsilon (2\varepsilon + 2C)^{-1}\), the denominators are bounded away from zero, in particular, \(\inf _{s \in [t_1,t_2]}|\theta (s) + t_n h_n(s)| \geqslant \varepsilon / 2\). Thus, taking the suprema over \(s \in [t_1,t_2]\), the first two terms in (13) become arbitrarily small by letting \(t_n\) be sufficiently small. The remaining two terms converge to zero since \(\sup _{s \in [t_1,t_2]} | h_n(s) - h(s) | \rightarrow 0\) and \(\sup _{u \in [t_1,\tau ]} | \theta (u) | < \infty \). Note here that

\(\square \)

Proof of Lemma 5

The convergences are immediate consequences of the functional delta method, Theorem 1 and the bootstrap version of the delta method; cf. Sect. 3.9 in van der Vaart and Wellner (1996). Simply note that all considered survival functions are elements of \(D_{< \infty } \cap {\widetilde{D}}[t_1,t_2]\) (on increasing sets with probability tending to one) and that the survival function of the lifetimes is assumed continuous and bounded away from zero on compact subsets of \([0,\tau )\). Further, there is a version of the limit Gaussian processes with almost surely continuous sample paths.

For the representation of the variance of the limit distribution in part (a) we refer to van der Vaart and Wellner (1996), p. 383 and 397. The asymptotic covariance structure in part (b) is easily calculated using Fubini’s theorem—for its applicability note that the variances \(\varGamma (r,r)\) of the limit process W of the Kaplan–Meier estimator exist at all points of time \(r \in [0,\tau ]\). Thus, since W is a zero-mean process, we have for any \(0 \leqslant r \leqslant s < \tau \),

Inserting the definition \(\varGamma (r,s) = S(r) S(s) \sigma ^2(r \wedge s)\) and splitting the first integral into \(\int _r^\tau = \int _r^s + \int _s^\tau \) yields that the last display equals

\(\square \)

Proof of Theorem 2

The theorem follows from Lemma 5 combined with the continuous mapping theorem applied to the supremum functional \(D[t_1,t_2] \rightarrow \mathbb {R}\), \(f \mapsto \sup _{t \in [t_1,t_2]} | f(t) |\) which is continuous on \(C[t_1,t_2]\). For the connection between the consistency of a bootstrap distribution of a real statistic and the consistency of the corresponding tests (and the equivalent formulation in terms of confidence regions), see Lemma 1 in Janssen and Pauls (2003). \(\square \)

Adaptations of Gill’s (1983) Lemmata

Abbreviate again the sigma algebra containing all the information of the original sample as \(\mathbf X := \sigma (X_i,\delta _i: i = 1,\dots ,n)\). The proofs in “Appendix A” rely on bootstrap versions of Lemmata 2.6, 2.7 and 2.9 in Gill (1983). Since those are stated under the assumption of a continuous distribution function S, but ties in the bootstrap sample are inevitable, these lemmata need a slight extension. For completeness, parts (a) of the following two Lemmata correspond to the original Lemmata 2.6 and 2.7 in Gill (1983).

Lemma 6

(Extension of Lemma 2.6 in Gill 1983) For any \(\beta \in (0,1)\),

-

(a)

\(P( {\widehat{S}}_n(t) \leqslant \beta ^{-1} S(t) \text { for all } t \leqslant {\widehat{T}}_n) \geqslant 1 - \beta \),

-

(b)

\(P( S^*_n(t) \leqslant \beta ^{-1} {\widehat{S}}_n(t) \text { for all } t \leqslant T^*_n \ | \ \mathbf X ) \geqslant 1 - \beta \) almost surely.

Proof of \(\text {(b)}\). All equalities and inequalities concerning conditional expectations are understood as to hold almost surely. As in the proof of Theorem 1, \((S^*_n(t \wedge T^*_n) / {\widehat{S}}_n(t \wedge T^*_n) )_{t \in [0, {\widehat{T}}_n)}\) defines a right-continuous martingale for each fixed n and for almost every given sample \(\mathbf X \). Hence, Doob’s \(L_1\)-inequality (e.g., Revuz and Yor 1999, Theorem 1.7 in Chapter II) yields for each \(\beta \in (0,1)\)

This implies \(P( S_n^* \leqslant \beta ^{-1} {\widehat{S}}_n \text { on } [0,T_n^*) \ | \ \mathbf X ) \geqslant 1 - \beta .\) It remains to extend this result to the interval’s endpoint. If the observation corresponding to \(T^*_n\) is uncensored, we have \(0 = S^*_n(T^*_n) \leqslant \beta ^{-1} {\widehat{S}}_n(T^*_n)\). Else, the event of interest \(\{ S_n^* \leqslant \beta ^{-1} {\widehat{S}}_n \text { on } [0,T_n^*) \}\) (given \(\mathbf X \)) implies that

Thus, for given \(\mathbf X \), \( \{ S_n^*(T^*_n) \leqslant \beta {\widehat{S}}_n(T^*_n) \} \subset \{ S^*_n \leqslant \beta {\widehat{S}}_n \text { on } [0,T^*_n) \}\). \(\square \)

Lemma 7

(Extension of Lemma 2.7 in Gill 1983) For any \(\beta \in (0,1)\),

-

(a)

\(P( {\widehat{H}}_n(t-) \geqslant \beta {H(t-)} \ \text { for all } \ t \leqslant {\widehat{T}}_n) \geqslant 1 - \frac{e}{\beta } \exp (- 1/ \beta )\),

-

(b)

\(P( H_n^*(t-) \geqslant \beta {\widehat{H}}_n(t-) \ \text { for all } \ t \leqslant T^*_n \ | \ \mathbf X ) \geqslant 1 - \frac{e}{\beta } \exp (- 1/ \beta )\) almost surely.

Proof of \(\text {(a)}\). As pointed out by Gill (1983), the assertion follows from the inequality for the uniform distribution in Remark 1(ii) of Wellner (1978). By using quantile transformations, his inequality can be shown to hold for random variables having an arbitrary, even discontinuous distribution function.

Proof of \(\text {(b)}\). Fix \(X_i(\omega ),\delta _i(\omega ), i=1,\dots ,n\). Since H in part (a) is allowed to have discontinuities, (b) follows from (a) for each \(\omega \). \(\square \)

Let \(a, b \in D[0,\tau ]\) be two (stochastic) jump processes, i.e., processes being constant between two discontinuities. If b has bounded variation, we define the integral of a with respect to b via

where the sum is over all discontinuities of b inside the interval (0, s]. If a has bounded variation, we define the above integral via integration by parts: \(\int _0^s a \mathrm db = a(s) b(s) - a(0) b(0) - \int _0^s b_- \mathrm da\).

Lemma 8

(Adaptation of Lemma 2.9 in Gill 1983) Let \(h \in D[0,\tau ]\) be a nonnegative and non-increasing jump process such that \(h(0)=1\) and let \(Z \in D[0,\tau ]\) be a jump process which is zero at time zero. Then, for all \(t \leqslant \tau \),

Proof

The original proof of Lemma 2.9 in Gill (1983) still applies for the most part with the assumptions of this lemma. For the sake of completeness, we present the whole proof.

Let \(U(t) = \int _0^t h(s) \mathrm dZ(s) \) with a \(t \leqslant \tau \) such that \(h(t) > 0\). Then,

Thus, following the lines of the original proof,

\(\square \)

Bootstrap version of the truncation technique for weak convergence

The following lemma is a conditional variant of Theorem 3.2 in Billingsley (1999). Let \(\rho \) be the modified Skorohod metric \(J_1\) on \(D[0,\tau ]\) as in Billingsley (1999), i.e., \(\rho (f,g) = \inf _{\lambda \in \varLambda } ( \Vert \lambda \Vert ^o \vee \sup _{t \in [0, \tau ]} | f(t) - g(\lambda (t)) | ) \), where \(\varLambda \) is the collection of non-decreasing functions onto \([0, \tau ]\) and

For an application in the proof of Theorem 1, note that \(\rho (f,g) \leqslant \sup _{t \in [0,\tau ]} | f(t) - g(t) |\).

Lemma 9

Let \(X: (\varOmega , {\mathcal {A}}, P) \rightarrow (D[0,\tau ], \rho )\) be a stochastic process and let the sequences of stochastic processes \(X_{un}\) and \(X_n\) satisfy the following convergences given a \(\sigma \)-algebra \({\mathcal {C}}\):

-

(a)

\(X_{un} {\mathop {\longrightarrow }\limits ^{d}}Z_u\) given \({\mathcal {C}}\) in probability as \(n \rightarrow \infty \) for every fixed u,

-

(b)

\(Z_{u} {\mathop {\longrightarrow }\limits ^{d}}X\) given \({\mathcal {C}}\) in probability as \(u \rightarrow \infty \),

-

(c)

for all \(\varepsilon > 0\) and for each subsequence \((n') \subset (n)\) there exists another subsequence \((n'') \subset (n')\) such that

$$\begin{aligned} \lim _{u \rightarrow \infty } \limsup _{n'' \rightarrow \infty } P( \rho (X_{u n''}, X_{n''}) > \varepsilon \ | \ {\mathcal {C}} ) = 0 \quad \text {almost surely}. \end{aligned}$$

Then, \(X_{n} {\mathop {\longrightarrow }\limits ^{d}}X\) given \({\mathcal {C}}\) in probability as \(n \rightarrow \infty \).

Proof

Choose a sequence \(\varepsilon _m \downarrow 0\). Let \((n') \subset (n)\) be an arbitrary subsequence and choose subsequences \((n''(\varepsilon _m)) \subset (n')\) and \((u') \subset (u)\) such that (a) and (b) hold almost surely and also such that (c) holds along these subsequences. Replace \((n''(\varepsilon _m))\) by their diagonal sequence \((n'')\) ensuring (c) simultaneously for all \(\varepsilon _m\). Let \(F \subset D[0, \tau ]\) be a closed subset and let \(F_{\varepsilon _m} = \{f \in D[0, \tau ]: \rho (f, F) \leqslant \varepsilon _m \}\) be its closed \(\varepsilon _m\)-enlargement. We proceed as in the proof of Theorem 3.2 in Billingsley (1999), whereas all inequalities now hold almost surely.

The Portmanteau theorem in combination with (a) yields

Condition (b) and another application of the Portmanteau theorem imply that

Let \(m \rightarrow \infty \) to deduce \( \limsup _{n'' \rightarrow \infty } P(X_{n''} \in F \ | \ {\mathcal {C}}) \leqslant P(X \in F \ | \ {\mathcal {C}}) \) almost surely. Thus, a final application of Portmanteau theorem as well as the subsequence principle leads to the conclusion that \(X_{n} {\mathop {\longrightarrow }\limits ^{d}}X\) given \({\mathcal {C}}\) in probability. \(\square \)

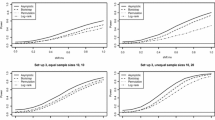

Further simulation results for mean residual lifetime confidence bands

As suggested by a referee, we now evaluate the influence of the choice of the underlying time span \([t_1,t_2]\) on the coverage probabilities of the confidence bands for the mean residual lifetime function. To this end, we again carried out the simulations of Sect. 5.2 while allowing for any combination of the above-chosen time intervals in all setups, i.e., with underlying exponential and Gompertz distributions with any of the three scenarios (i)–(iii). From these results, we conclude that the choice of time span does not have a great impact on the linear bands’ coverage probabilities. In the exponential setup (Table 5), these empirical probabilities vary only slightly, whereas broader intervals \([t_1, t_2]\) appear to make the bands more conservative in the case of an underlying Gompertz distribution (Table 6).

The behavior of the \(\log \)-transformed confidence bands is quite surprising: The empirical coverage probabilities deteriorate considerably for small sample sizes in the case of increased interval lengths \(t_2 - t_1\): They may drop by several dozens of percentage points (see the Exponential setup (i) and \(n \in \{30, 50\}\)) or even from 94.9 to 0.3% (see the Gompertz setup (i) and \(n = 50\)) (Table 6).

We will find the reason for the sometimes good, sometimes dramatic behavior of the confidence bands in the eventual survival probabilities; Table 7 shows the survival probabilities at the right boundaries of the time intervals for each considered setup. Even in the case of a final survival probability of about \(6\%\) and sample sizes \(n \geqslant 500\), the linear confidence bands have satisfactory empirical coverage probabilities within 95.2 and 96.2%. On the other hand, the reason for the bad performance of both types of confidence bands in setup (i) with an underlying Gompertz distribution is the very small survival probability at the right boundary of the time interval: With survival probabilities between 0.01 and 0.17%, one cannot clearly see the asymptotic exactness of the confidence bands, even with sample sizes as big as \(n=1000\). The linear bands tend to be quite conservative, whereas the \(\log \)-transformed bands are very liberal. Much larger samples are necessary to get reliable confidence bands in this extreme setup. These results also indicate that the asymptotic behavior breaks down whenever one seeks to derive confidence bands for the mean residual lifetime function on its whole support.

These observations again lead us to the conclusion that the linear confidence bands appear to be the best choice for the mean residual lifetime function. Depending on the length of the underlying time interval, it may be slightly conservative. But this improves with an increasing sample size if the survival probability at the right boundary is not excessively small.

About this article

Cite this article

Dobler, D. Bootstrapping the Kaplan–Meier estimator on the whole line. Ann Inst Stat Math 71, 213–246 (2019). https://doi.org/10.1007/s10463-017-0634-9

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10463-017-0634-9