Abstract

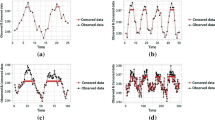

For time series nonparametric regression models with discontinuities, we propose to use polynomial splines to estimate locations and sizes of jumps in the mean function. Under reasonable conditions, test statistics for the existence of jumps are given and their limiting distributions are derived under the null hypothesis that the mean function is smooth. Simulations are provided to check the powers of the tests. A climate data application and an application to the US unemployment rates of men and women are used to illustrate the performance of the proposed method in practice.

References

Alley, R. B., Marotzke, J., Nordhaus, W. D., Overpeck, J. T., Peteet, D. M., Pielke, R., et al. (2003). Abrupt climate change. Science, 299, 2005–2010.

Bowman, A. W., Pope, A., Ismail, B. (2006). Detecting discontinuities in nonparametric regression curves and surfaces. Statistics and Computing, 16, 377–390.

Chen, G. M., Choi, Y. K., Zhou, Y. (2008). Detections of changes in return by a wavelet smoother with conditional heteroscedastic volatility. Journal of Econometrics, 143, 227–262.

de Boor, C. (2001). A practical guide to splines. New York: Springer.

Gijbels, I., Lambert, A., Qiu, P. H. (2007). Jump-preserving regression and smoothing using local linear fitting: a compromise. Annals of the Institute of Statistical Mathematics, 59, 235–272.

Huang, J. Z. (2003). Local asymptotics for polynomial spline regression. The Annals of Statistics, 31, 1600–1635.

Huang, J. Z., Yang, L. (2004). Identification of nonlinear additive autoregressive models. Journal of the Royal Statistical Society Series B, 66, 463–477.

Hui, E. C. M., Yu, C. K. W., Ip, W. C. (2010). Jump point detection for real estate investment success. Physica A, 389, 1055–1064.

Ivanov, M. A., Evtimov, S. N. (2010). 1963: The break point of the northern hemisphere temperature trend during the twentieth century. International Journal of Climatology, 30, 1738–1746.

Johnson, R. A., Wichern, D. W. (1992). Applied multivariate statistical analysis. Englewood Cliffs: Prentice Hall.

Joo, J., Qiu, P. H. (2009). Jump detection in a regression curve and its derivative. Technometrics, 51, 289–305.

Koo, J. Y. (1997). Spline estimation of discontinuous regression functions. Journal of Computational and Graphical Statistics, 6, 266–284.

Lin, Z. Y., Li, D., Chen, J. (2008). Change point estimators by local polynomial fits under a dependence assumption. Journal of Multivariate Analysis, 99, 2339–2355.

Ma, S. J., Yang, L. J. (2011). A jump-detecting procedure based on spline estimation. Journal of Nonparametric Statistics, 23, 67–81.

Matyasovszky, I. (2011). Detecting abrupt climate changes on different time scales. Theoretical and Applied Climatology, 105, 445–454.

Müller, H. G. (1992). Change-points in nonparametric regression analysis. The Annals of Statistics, 20, 737–761.

Müller, H. G., Song, K. S. (1997). Two-stage change-point estimators in smooth regression models. Statistics and Probability Letters, 34, 323–335.

Müller, H. G., Stadtmüller, U. (1999). Discontinuous versus smooth regression. The Annals of Statistics, 27, 299–337.

National Bureau of Economic Research (2011). US business cycle expansions and contractions. http://www.nber.org/cycles/cyclesmain.html#announcements.

Qiu, P. H. (1991). Estimation of a kind of jump regression functions. Systems Science and Mathematical Sciences, 4, 1–13.

Qiu, P. H. (1994). Estimation of the number of jumps of the jump regression functions. Communications in Statistics-Theory and Methods, 23, 2141–2155.

Qiu, P. H. (2003). A jump-preserving curve fitting procedure based on local piecewise-linear kernel estimation. Journal of Nonparametric Statistics, 15, 437–453.

Qiu, P. H. (2005). Image processing and jump regression analysis. New York: Wiley.

Qiu, P. H., Yandell, B. (1998). A local polynomial jump detection algorithm in nonparametric regression. Technometrics, 40, 141–152.

Qiu, P. H., Asano, C., Li, X. (1991). Estimation of jump regression function. Bulletin of Informatics and Cybernetics, 24, 197–212.

Shiau, J. H. (1987). A note on MSE coverage intervals in a partial spline model. Communications in Statistics Theory and Methods, 16, 1851–1866.

Silverman, B. W. (1986). Density estimation for statistics and data analysis. London: Chapman and Hall.

Song, Q., Yang, L. (2009). Spline confidence bands for variance function. Journal of Nonparametric Statistics, 21, 589–609.

Wang, L., Yang, L. (2007). Spline-backfitted kernel smoothing of nonlinear additive autoregression model. The Annals of Statistics, 35, 2474–2503.

Wang, L., Yang, L. (2010). Simultaneous confidence bands for time-series prediction function. Journal of Nonparametric Statistics, 22, 999–1018.

Wong, H., Ip, W., Li, Y. (2001). Detection of jumps by wavelets in a heteroscedastic autoregressive model. Statistics and Probability Letters, 52, 365–372.

Wu, J. S., Chu, C. K. (1993). Kernel type estimators of jump points and values of a regression function. The Annals of Statistics, 21, 1545–1566.

Wu, W. B., Zhao, Z. B. (2007). Inference of trends in time series. Journal of the Royal Statistical Society Series B, 69, 391–410.

Xue, L., Yang, L. (2006). Additive coefficient modeling via polynomial spline. Statistica Sinica, 16, 1423–1446.

Zhou, Y., Wan, A. T. K., Xie, S. Y., Wang, X. J. (2010). Wavelet analysis of change-points in a non-parametric regression with heteroscedastic variance. Journal of Econometrics, 159, 183–201.

Appendix

The following notation is used throughout the proof. We define \(||\cdot ||_{\infty }\) as the supremum norm of a function \(r\) on \([a,b]\), i.e. \(||r||_{\infty }=\sup _{x \in [a,b]}|r(x)|.\) We use \(c,C\) to denote generic positive constants throughout the proof.

To prove Theorem 1, we decompose the estimation error \(\hat{m}_p(x)-m_p(x)\) into a bias term and a noise term. Denoting \(\mathbf{m}=\left( m\left( X_{1}\right) ,\ldots ,m\left( X_{n}\right) \right) ^\mathrm{T}\) and \(\mathbf{E}_{\sigma }=\left( \sigma \left( X_{1}\right) \varepsilon _{1},\ldots , \sigma \left( X_{n}\right) \varepsilon _{n}\right) ^\mathrm{T}\), we can rewrite \(\mathbf{Y}\) as \(\mathbf{Y}=\mathbf{m}+\mathbf{E}_{\sigma }\). We project the response \(\mathbf{Y}\) onto the spline space \(G^{(p-2)}_N\) spanned by \(\{\mathbf{B}_{j,p}(\mathbf{X})\}_{j=1-p}^N\), where \(\mathbf{B}_{j,p}\left( \mathbf{X}\right) \) is denoted as

with \(B_{j,p}\left( x\right) \) introduced in Sect. 2.1. We obtain the following decomposition

where

The bias term is \(\tilde{m}_p(x)-m_p(x)\) and the noise term is \(\tilde{\varepsilon }_p(x)\).
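The split into a bias term and a noise term relies only on the linearity of the least-squares projection. The following minimal sketch checks this numerically. It is an illustration under stated assumptions, not the authors' implementation: the design points are drawn i.i.d. uniform rather than from a time series, the mean function, noise level and number of knots are arbitrary choices, and `constant_spline_basis` and `project` are hypothetical helper names.

```python
import numpy as np

def constant_spline_basis(x, a, b, n_interior):
    """Design matrix of piecewise-constant splines: indicator functions of
    the n_interior + 1 equal-width subintervals of [a, b] (hypothetical helper)."""
    n_bins = n_interior + 1
    idx = np.minimum(((x - a) / (b - a) * n_bins).astype(int), n_bins - 1)
    basis = np.zeros((x.size, n_bins))
    basis[np.arange(x.size), idx] = 1.0
    return basis

def project(basis, v):
    """Least-squares projection of the vector v onto the column space of basis."""
    coef, *_ = np.linalg.lstsq(basis, v, rcond=None)
    return basis @ coef

rng = np.random.default_rng(0)
n = 500
x = rng.uniform(0.0, 1.0, n)            # assumption: i.i.d. design for simplicity
m = np.sin(2 * np.pi * x)               # assumed smooth mean function
e_sigma = 0.3 * rng.standard_normal(n)  # assumed noise vector sigma(X_i) * eps_i
y = m + e_sigma                         # Y = m + E_sigma

B = constant_spline_basis(x, 0.0, 1.0, n_interior=9)
m_hat = project(B, y)            # spline estimator evaluated at the design points
m_tilde = project(B, m)          # projection of the mean vector (bias part)
eps_tilde = project(B, e_sigma)  # projection of the noise vector (noise part)

# Linearity of the projection: m_hat = m_tilde + eps_tilde up to rounding error.
decomposition_gap = float(np.max(np.abs(m_hat - (m_tilde + eps_tilde))))
print(decomposition_gap)
```

The same check works for any spline order, since only the design matrix changes.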

Lemma 1

As \(n \rightarrow \infty \),

where \(\max _{0 \le j \le N}\left| r_{j,n,1}\right| +\max _{-1 \le j \le N}\left| r_{j,n,2}\right| +\max _{-1 \le j \le N-1}\left| \tilde{r}_{j,n,2}\right| \le C \omega \left( f,h\right) \) and \(\omega (f,h)= \max _{x,x^{\prime }\in [a,b],|x-x^{\prime }| \le h}|f(x)-f(x^{\prime })|\) is the modulus of continuity of a continuous function \(f\) on \([a,b]\). Furthermore,

Proof of Theorem 1 for \(p=1\)

When \(p=1\), \(\mathbf{V}_{n,1}^{-1}\) is a diagonal matrix, and \(\tilde{\varepsilon }_1(x)\) in Eq. (10) can be rewritten as

We define \(\hat{\varepsilon }_1(x)=\sum _{j=0}^N \varepsilon _j^* B_{j,1}(x)\), and it is straightforward that \(\hat{\varepsilon }_1(t_{j})=B_{j,1}(t_{j})\varepsilon _{j}^*, \, j=0,\ldots ,N\). We treat the variance of \(\hat{\varepsilon }_1(t_{j+1})-\hat{\varepsilon }_1(t_{j})\) as follows.

Lemma 2

The variance of \(\hat{\varepsilon }_1(t_{j+1})-\hat{\varepsilon }_1(t_{j}),\,j=0, \ldots ,N-1,\) is \(\sigma ^2_{n,1,j}\) in Eq. (5), which satisfies

Accordingly, under Assumption \((\mathrm{A2})\), one has \(c(nh)^{-1/2}\le \sigma _{n,1,j}\le C(nh)^{-1/2}\) for any \(j=0,\ldots ,N-1\) when \(n\) is sufficiently large.

The proof follows easily from Lemma A.1 combined with the fact that \(\langle B_{j,1},B_{j+1,1}\rangle =0\).
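The orthogonality \(\langle B_{j,1},B_{j+1,1}\rangle =0\) holds because piecewise-constant B-splines have disjoint supports, which is also why the inner-product matrix is diagonal when \(p=1\). The sketch below illustrates this with an empirical Gram matrix. It assumes unnormalized indicator functions on equal-width bins and i.i.d. uniform design points, so the matrix `gram` only stands in for the matrix the paper inverts, whose basis functions may be scaled differently.

```python
import numpy as np

rng = np.random.default_rng(1)
n, n_bins = 400, 8
x = rng.uniform(0.0, 1.0, n)  # assumption: i.i.d. uniform design on [0, 1]

# Each row has a single 1 in the column of the bin containing x_i.
idx = np.minimum((x * n_bins).astype(int), n_bins - 1)
B = np.zeros((n, n_bins))
B[np.arange(n), idx] = 1.0

# Empirical inner products <B_j, B_k>_n = n^{-1} * sum_i B_j(X_i) * B_k(X_i).
gram = B.T @ B / n

# Disjoint supports make every off-diagonal entry exactly zero.
off_diagonal = gram - np.diag(np.diag(gram))
max_off_diagonal = float(np.max(np.abs(off_diagonal)))
print(max_off_diagonal)
```

Because the Gram matrix is diagonal, its inverse is diagonal as well, and each spline coefficient depends only on the observations falling in its own subinterval.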

Denote, for \(0\le j \le N-1,\,\tilde{\xi }_{n,1,j}=\sigma _{n,1,j}^{-1}\{\tilde{ \varepsilon }_1(t_{j+1} )-\tilde{\varepsilon }_1(t_j)\}\) and \(\hat{\xi }_{n,1,j}=\sigma _{n,1,j}^{-1}\{\hat{\varepsilon }_1 (t_{j+1})-\hat{\varepsilon }_1(t_j)\}\). The next lemma follows from Lemma A.6 of Wang and Yang (2010).

Lemma 3

Under Assumptions \((\mathrm{A2}{-}\mathrm{A4})\), as \(n\rightarrow \infty \),

Proof

Rewrite \(\hat{\varepsilon }_1(t_{j+1})-\hat{\varepsilon }_1(t_{j})\) as \(\hat{\varepsilon }_1(t_{j+1})-\hat{\varepsilon }_1(t_{j})={\mathbf{D}_{j,1}}^\mathrm{T}{{\varvec{\Lambda }}_{j,1}},\,j=0,\ldots ,N-1\), where

It follows that \(\sigma ^2_{n,1,j}={\mathbf{D}_{j,1}}^\mathrm{T}Cov\left( {\varvec{\Lambda }}_{j,1} \right) \mathbf{D}_{j,1}\) with

Let \(\mathbf{Z}_j=(Z_{j1},Z_{j2})^\mathrm{T}= {\varvec{\Lambda }}_{j,1}^\mathrm{T}\{Cov({\varvec{\Lambda }}_{j,1})\}^{-1/2}\). Specifically, for \(0\le j\le N-1\),

By Lemmas 3.2, 3.3 and A.7 of Wang and Yang (2010), we have uniformly in \(j\),

Therefore, for \(\gamma =1,2\),

Denote \(\mathbf{Q}_{j,1}={\varvec{\Lambda }}_{j,1}^\mathrm{T}\left\{ Cov\left( {\varvec{\Lambda }}_{j,1} \right) \right\} ^{-1}{\varvec{\Lambda }}_{j,1}=\mathbf{Z}_j\mathbf{Z}_j^\mathrm{T}=\sum _{\gamma =1,2}Z_{j\gamma }^2,\,j=0,\ldots ,N-1\). According to the maximization lemma of Johnson and Wichern (1992),

Hence,

Note that \(\hat{m}_1(t_{j+1})-\hat{m}_1(t_{j})=[\tilde{m}_1(t_{j+1})-m (t_{j+1})]-[\tilde{m}_1(t_j )-m(t_j)]+[m(t_{j+1}) -m(t_j)]+[\tilde{\varepsilon }_1 (t_{j+1})-\tilde{\varepsilon }_1(t_j)]\). The theorem of de Boor (2001) on page 149 and Theorem 5.1 of Huang (2003) entail that under \(\mathcal{H }_0\) the orders of the first three terms are all \(O_p\left( h\right) \), which makes

We finally apply Lemma 3 to get

\(\square \)
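The maximization lemma invoked in the proof above states that, for a positive definite matrix \(\varvec{\Sigma }\) and a fixed vector \(\varvec{\Lambda }\), the ratio \((\mathbf{d}^\mathrm{T}\varvec{\Lambda })^2/(\mathbf{d}^\mathrm{T}\varvec{\Sigma }\mathbf{d})\) is bounded above by \(\varvec{\Lambda }^\mathrm{T}\varvec{\Sigma }^{-1}\varvec{\Lambda }\) for every nonzero \(\mathbf{d}\), with equality when \(\mathbf{d}\) is proportional to \(\varvec{\Sigma }^{-1}\varvec{\Lambda }\). The following sketch checks this numerically on a randomly generated \(2\times 2\) example. The matrix `sigma` and vector `lam` are arbitrary stand-ins for \(Cov({\varvec{\Lambda }}_{j,1})\) and \({\varvec{\Lambda }}_{j,1}\), not quantities taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(2)

# Arbitrary symmetric positive definite matrix and vector (illustrative only).
A = rng.standard_normal((2, 2))
sigma = A @ A.T + np.eye(2)
lam = rng.standard_normal(2)

def ratio(d):
    """Value of (d^T lam)^2 / (d^T sigma d) for a nonzero direction d."""
    return float((d @ lam) ** 2 / (d @ sigma @ d))

# Upper bound given by the lemma: lam^T sigma^{-1} lam.
bound = float(lam @ np.linalg.solve(sigma, lam))

# The bound is attained at d proportional to sigma^{-1} lam.
d_star = np.linalg.solve(sigma, lam)

# Random directions never exceed the bound.
random_ratios = [ratio(rng.standard_normal(2)) for _ in range(1000)]
print(max(random_ratios) <= bound + 1e-12, abs(ratio(d_star) - bound) < 1e-10)
```

In the proof, this bound is what allows the standardized difference to be controlled by the quadratic form \(\mathbf{Q}_{j,1}\), whatever the particular vector \(\mathbf{D}_{j,1}\) is.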

Proof of Theorem 1 for \(p=2\)

For \(p=2\), we can rewrite the noise term \(\tilde{\varepsilon }_2\left( x\right) \) in Eq. (10) as \(\tilde{\varepsilon }_2\left( x\right) = \sum _{j=-1}^N \tilde{a}_j B_{j,2}\left( x\right) \), where

As before, we denote \(\hat{\varepsilon }_2\left( x\right) =\sum _{j=-1}^N \hat{a}_j B_{j,2}\left( x\right) \), where \(\hat{\mathbf{a}}= (\hat{a}_{-1},\ldots ,\hat{a}_N)^\mathrm{T}\) is defined by replacing \({\mathbf{V}}_{n,2}^{-1}\) in the above formula with \(\mathbf{S}=\mathbf{V}_2^{-1}\), i.e.

Thus, for any \(x \in [a,b]\),

Denote \(\tilde{\xi }_{2,j}=\tilde{\varepsilon }_2(t_{j+1})-\tilde{ \varepsilon }_2(t_j),\, \hat{\xi }_{2,j}=\hat{\varepsilon }_2(t_{j+1})-\hat{ \varepsilon }_2(t_j)\), and \(\tilde{\xi }_{n,2,j}=\sigma _{n,2,j}^{-1}\tilde{\xi }_{2,j},\, \hat{\xi }_{n,2,j}=\sigma _{n,2,j}^{-1}\hat{\xi }_{2,j}\). It follows that \(\hat{\xi }_{2,j}={\mathbf{D}_{j,2}}^\mathrm{T}{{\varvec{\Lambda }}_{j,2}},\,j=0,\ldots ,N-1\), where

In the next lemma, we calculate the variance of \(\hat{\xi }_{2,j}\).

Lemma 4

The variance of \(\hat{\xi }_{2,j}\) is \(\sigma ^2_{n,2,j}\) in Eq. (6), which satisfies

And for large enough \(n,\,c\left( nh\right) ^{-1/2}\le \sigma _{n,2,j} \le C \left( nh\right) ^{-1/2}\).

Proof

Since \(\sigma ^2_{n,2,j}=E\hat{\xi }^2_{2,j}=\mathbf{D}_{j,2}^\mathrm{T}Cov({\varvec{\Lambda }}_{j,2})\mathbf{D}_{j,2}\), applying Lemma A.10 of Wang and Yang (2010) gives the desired results.\(\square \)

Arguments similar to those used in Lemmas A.11 and A.12 of Wang and Yang (2010) yield that

The proof is then completed in the same way as for \(p=1\). \(\square \)

Cite this article

Yang, Y., Song, Q. Jump detection in time series nonparametric regression models: a polynomial spline approach. Ann Inst Stat Math 66, 325–344 (2014). https://doi.org/10.1007/s10463-013-0411-3

DOI: https://doi.org/10.1007/s10463-013-0411-3