Abstract

Ecosystem budgets of water and elements can be difficult to estimate and are often unreplicated, making it challenging to provide confidence in estimates of ecosystem pools and fluxes. We conducted a survey to learn about current practices in reporting uncertainties in precipitation, streamflow, soils, and vegetation. Uncertainty derives from natural variation, which is commonly characterized by replicate samples, and from imperfect knowledge, which includes measurement error and model error (model fit and model selection). We asked questions about whether researchers report uncertainties in these sources, whether they know how to do so, and how important they believe the sources to be. We also asked questions about identifying missing or unusable values, filling gaps in data, and dealing with analytical concentrations below detection limits. We obtained responses from 140 researchers representing 90 research sites around the world. Natural variation was the most important source of uncertainty in calculations of biomass and soil pools, according to respondents in these fields, and sampling error was the source they most often reported. In contrast, uncertainty in the chemical analysis of precipitation and stream water was the source most commonly reported by hydrologists, although they rated this one of the least important sources of uncertainty to calculations of hydrologic flux. Awareness of types of uncertainty can help identify sources of uncertainty that may have been overlooked, and quantifying them will help determine which sources are most important to report.

Introduction

Measurements from ecosystems are often reported without taking uncertainty into account. This omission stems in part from the fact that each ecosystem is unique, making it challenging to identify replicate sampling units. Even in cases where replication may be possible, it can be prohibitively expensive to monitor the number of ecosystems required for an acceptable level of uncertainty. Without replication, it is still possible to propagate the sources of error and report the uncertainty in estimated ecosystem pools or fluxes (for example, Campbell and others 2016). However, the calculations can be complex when multiple sources of uncertainty are involved. In light of these complexities, it is not surprising that in these types of studies, estimates of uncertainty are often incorrect, incomplete, or omitted entirely.

Sources of Uncertainty: A Conceptual Taxonomy

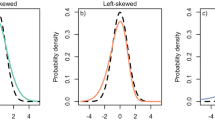

Ecological data are characterized by many different types of uncertainty, even in seemingly straightforward studies (Regan and others 2002; Harmon and others 2007; Ascough and others 2008). In the broadest sense, uncertainty arises both from natural variability and from imperfect knowledge (Figure 1). Natural variability is inherent to the system and is the result of both spatial and temporal heterogeneity. Natural variability cannot be decreased by making more measurements or by using better equipment, but better measurements allow us to better describe it. In other words, we can increase our confidence in our estimate of the dispersion of the population, although this statistic is rarely reported (Johnson and others 1990).

Figure 1. Sources of uncertainty in ecosystem studies (modified from Harmon and others 2007). Red arrows indicate sources of uncertainty that contribute to other sources of uncertainty (Color figure online).

In contrast, knowledge uncertainty is due to imperfect understanding of, or ability to characterize, a system (Harmon and others 2007). The error due to uncertain knowledge can be systematic (biased) or random. Measurement error is a form of knowledge uncertainty that arises from measurement devices and sampling strategies (Csavina and others 2017). Knowledge uncertainty also includes model error, and model error includes not only fit statistics, which are easy to describe, but also uncertainty in the structure of models. There may be more than one possible model, and thus, the selection of a model is another source of knowledge uncertainty. Interpolating between observations is a source of uncertainty that can be considered a form of modeling.

We conducted a survey to ascertain the current state of knowledge and of practices for reporting uncertainty in ecosystem studies, specifically addressing vegetation, soils, precipitation, and streamflow. We expected to find sampling error to be the most commonly reported source of uncertainty, followed by measurement error, with model uncertainty, especially model selection uncertainty, the least commonly reported. We were interested in finding out which sources of uncertainty are considered important by researchers and which sources researchers do not know how to quantify.

Survey Design and Implementation

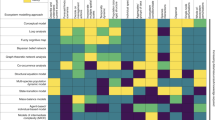

We distributed an on-line survey to assess the current reporting practices among researchers studying precipitation, streamflow, biomass, and soils. We listed the sources of uncertainty in estimates of these ecosystem components (for example, pool sizes for biomass and soil, water and nutrient fluxes for precipitation and streamflow), including sources due to natural variation, measurement error, model prediction, and model selection (Figure 2). Survey respondents were asked whether they commonly report each source of uncertainty, whether they know how to quantify each source of uncertainty, and how important they believe each source to be (rarely, occasionally, or often important). Additionally, respondents were asked how they identify missing or unusable values, how they fill data gaps, and how they deal with analytical concentrations below detection limits.

We made the survey available to researchers as part of a workshop at the 2015 All Scientists Meeting of the Long Term Ecological Research (LTER) Network. We followed up with researchers at LTER sites from which we did not have responses from a primary investigator, research scientist, or postdoctoral researcher, and obtained responses from all of the LTER sites. We also announced the survey via the Ecological Society of America’s listserv, the LTER Network, the Soil Science Society of America’s Forest Soils list, and the Quantifying Uncertainty in Ecosystem Studies (QUEST) Research Coordination Network. Our collaborators distributed the survey to researchers in the National Ecological Observatory Network (NEON), Environmental Protection Agency (EPA), United States Geological Survey (USGS), National Atmospheric Deposition Program (NADP), United States Forest Service (USFS), and the Clean Air Status and Trends Network (CASTNET). Responses were collected between August 30, 2015, and January 12, 2016. In total, we received 140 responses from individuals at 90 different research sites or networks. Although our outreach efforts targeted research in the USA, we received responses from researchers in 13 countries. The number of responses by study type was 44 for vegetation, 29 for soils, 46 for streamflow, and 35 for precipitation. Many individuals responded for more than one study type.

Reporting Sources of Uncertainty

Sampling Error

As expected, sampling error was the most often reported of all sources of error for vegetation (Figure 3) and soils (Figure 4), which are generally described by replicate measurements. The highest rate of reporting of the 42 sources we listed was 96% for sampling error in soil chemistry (23 of 24 respondents). Sampling error in soil mass was less often reported (16 of 22 respondents for bulk density and 8 of 17 for coarse fraction). Sampling error in soil horizon depth was reported by about half of those respondents for which this source was relevant.

For vegetation, sampling error in biomass was reported by 30 of 35 respondents. Fewer of the respondents study vegetation chemistry, and the proportion of respondents who report sampling error in vegetation chemistry was somewhat less than for biomass (18 of 23 respondents).

We did not include questions about sampling error for precipitation and streams, because streams are not usually treated as replicates in ecosystem studies, and precipitation, when it is sampled at multiple points, is used to characterize spatial pattern rather than to establish confidence in a mean.

Measurement Error

Sources of measurement error varied according to the object of study. For analysis of precipitation and stream water (Figure 5), the source most often reported was the uncertainty of chemical analyses. In studies of streams, 84% (of 31 respondents) report the uncertainty of chemical analyses; in studies of precipitation, 63% (of 24 respondents) report this source. Uncertainty in chemical analyses is also reported by a majority of soil chemistry studies (68%) and vegetation chemistry studies (60%). Quality control in laboratory analyses is standardized, widely practiced, and routinely reported, at least by the laboratories. It may be more common for researchers to report the QC data than to propagate the uncertainty to see the effect on the reported results.

Other sources of measurement uncertainty differed by discipline. For vegetation studies (Figure 3), there are some sources of error that are thought not to be very important and are usually not reported, such as error in plot boundaries, plot area, and tree status (live, snag, or stump). Error in identifying species was more often rated as at least sometimes important, but was still usually not reported. Measurement error (for example, diameter of trees, clipping height for grasses) was rated as at least sometimes important by 60% of respondents, and 52% said they report this source of uncertainty.

For precipitation studies, measurement error of precipitation gauges was rated as often important but not often reported, even though most respondents indicated that they know how to report this source (Figure 5). This could mean that measuring this source of uncertainty is feasible but rarely achieved (Yang and others 1998). For stream studies, the relationship between stage height and discharge was perceived as the most important source of measurement error and was also the most often reported. Area of the watershed is another source of measurement error in stream loads. This is the least often reported of all stream sources (39%), and while it was also rated least important, it still has the biggest gap between perceived importance (76%) and reporting.

Missing information in a time series is a source of uncertainty in precipitation and stream water studies. While the source of uncertainty is a gap in measurements, the method of filling gaps may entail model error. For stream studies, the rate of reporting error due to gap filling in both discharge (76%) and concentration (74%) is very high (Figure 5). For precipitation studies, error due to gap filling is less often reported: 54% for gaps in volume and 35% for gaps in chemistry. Gaps were rated as more important for streams (85% for volume, 92% for chemistry) than for precipitation (67% for volume, 65% for chemistry). Filling gaps in stream chemistry had the highest importance rating of all error sources in precipitation and stream studies. In most studies, stream chemistry is not measured continuously, and interpolation is required to make estimates of loads. In contrast, precipitation collectors integrate over a time period, and gaps as a source of uncertainty were less often rated as important, as described above. Gaps in stream flow records are more common than gaps in precipitation records, as some downtime is inevitable for weir maintenance, and accidents that prevent data collection are more common for streams than for precipitation (Campbell and others 2016).

Model Error

In studies of forest vegetation, biomass is commonly estimated from measurements of tree diameter (and sometimes height) using allometric regression models. Of the respondents who rated this source of uncertainty, most said that uncertainty in biomass models was at least sometimes important (Figure 3), with 39% reporting the uncertainty in predicting individuals (the prediction interval) and 70% reporting uncertainty in the regression (the confidence interval). Only 35% report both sources of error. Uncertainty in model selection was also considered to be important, and 53% claim to report this source.
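The distinction between the two intervals can be sketched in a few lines of Python. This is a minimal illustration, assuming a simple linear regression on log-transformed diameter and biomass; the data values and the function names `fit_line` and `standard_errors` are hypothetical and are not taken from the survey or from any published allometric equation. The standard error of the fitted mean underlies the confidence interval, and the standard error of a new individual underlies the prediction interval.

```python
import math

def fit_line(x, y):
    """Ordinary least squares fit of y = a + b*x.

    Returns (a, b, s, xbar, sxx, n), where s is the residual standard error.
    """
    n = len(x)
    xbar = sum(x) / n
    ybar = sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = ybar - b * xbar
    resid_ss = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    s = math.sqrt(resid_ss / (n - 2))
    return a, b, s, xbar, sxx, n

def standard_errors(x0, fit):
    """Standard error of the fitted mean and of a new individual at x0."""
    a, b, s, xbar, sxx, n = fit
    leverage = 1.0 / n + (x0 - xbar) ** 2 / sxx
    se_mean = s * math.sqrt(leverage)              # basis of the confidence interval
    se_individual = s * math.sqrt(1.0 + leverage)  # basis of the prediction interval
    return se_mean, se_individual

# Hypothetical log(diameter) and log(biomass) values for eight trees.
log_d = [1.6, 1.9, 2.3, 2.6, 2.8, 3.0, 3.3, 3.5]
log_m = [1.1, 2.0, 2.9, 3.8, 4.1, 4.9, 5.4, 6.1]

fit = fit_line(log_d, log_m)
se_mean, se_individual = standard_errors(2.5, fit)
print(f"SE of fitted mean: {se_mean:.3f}; SE of individual: {se_individual:.3f}")
```

The individual standard error always exceeds that of the mean because it adds the residual variance to the uncertainty in the fitted line.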

Models are used in precipitation studies for interpolating spatially between gauges (Figure 5). Surprisingly, the uncertainty in model selection (45%) is more often reported than the model error itself (23%). For some types of interpolation (for example, regression models, kriging), the fit statistics provide estimates of uncertainty in the predictions. Simply assigning every point in space the value of the nearest collector (Thiessen polygons) is a common practice in studies established before the advent of high-speed computing, and it is not clear how to assign error to this approach. However, 65% of respondents said that they know how to estimate uncertainty in interpolation models, while only 54% said they know how to estimate uncertainty in model selection, so lack of knowledge does not explain the low rate of reporting of model uncertainty in precipitation interpolation. This was the least often reported of all error sources in precipitation studies.
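For readers unfamiliar with the nearest-collector approach, the following Python sketch shows the assignment rule. The gauge coordinates, precipitation values, and the function name `nearest_collector_value` are hypothetical. Note that the rule returns a value with no accompanying fit statistic, which is why assigning error to it is not straightforward.

```python
import math

def nearest_collector_value(point, collectors):
    """Return the value of the collector closest to `point`.

    `collectors` is a list of ((x, y), value) tuples.
    """
    def distance(entry):
        (cx, cy), _ = entry
        return math.hypot(point[0] - cx, point[1] - cy)
    return min(collectors, key=distance)[1]

# Hypothetical gauge coordinates (km) and annual precipitation (mm).
gauges = [((0.0, 0.0), 1200.0), ((4.0, 0.0), 1350.0), ((2.0, 3.0), 1500.0)]
print(nearest_collector_value((3.5, 0.5), gauges))
```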

Although precipitation is monitored at points on the landscape requiring spatial interpolation, streams integrate over a catchment area. Stream chemistry is usually measured infrequently (for example, weekly or monthly), requiring interpolation over time. Model error is much more often reported for stream studies than precipitation studies, with 74% and 68% of respondents claiming to report model error and model selection error, respectively. Fewer respondents claim to know how to report this source of error than the number of respondents who report it, which may seem odd, but there was only one case where this source was reported by a respondent who did not know how to report it; there were many who answered the question about knowledge even though they answered “not applicable” for the question about reporting this source of error.

Knowledge of Error Estimation

We expected sampling error to be the best understood of all the sources of uncertainty. Indeed, for soils, all 24 respondents reported that they know how to report sampling error in soil chemistry (Figure 4). Only one person admitted not knowing how to report sampling error in soil mass (bulk density) and coarse fraction. Knowledge was slightly lower for uncertainty in spatial or temporal pattern, averaging 86% for soil chemistry and 78% for soil mass. Soil chemistry can be characterized from grab samples, whereas uncertainty in soil mass requires propagating errors in measurements of soil volume and bulk density, which are difficult to measure in rocky soils (Throop and others 2012; Vadeboncoeur and others 2012).

For vegetation, again, the highest knowledge rating was for sampling error (replicate plots) with 94% of 35 respondents for biomass and 87% of 23 respondents for vegetation chemistry claiming knowledge of how to report these sources (Figure 3). The sources with the lowest knowledge ratings were the measurement errors that are rarely reported (Holdaway and others 2014): errors in plot boundaries, plot area, species identification, and tree status (for example, live, snag, stump), with 25–37% of respondents claiming knowledge. Knowledge of how to report model error was intermediate, with 65% knowing how to report prediction intervals, 81% knowing how to report confidence intervals, and 67% knowing how to report model selection uncertainty. Knowledge among the vegetation scientists about reporting uncertainty in spatial and temporal patterns was high but slightly lower than among the soil scientists, averaging 71% for vegetation chemistry and 72% for biomass.

Rates of reported knowledge were highest among hydrologists (Figure 5). This field has a long history of uncertainty analysis (Hornbeck 1965; Kundzewicz 1995; Beven and Binley 2013). Within this group, the lowest rates of knowledge were reported for model selection error for both stream water fluxes and precipitation interpolation models.

Spatial and Temporal Patterns

For both vegetation and soils, we asked questions about uncertainties in spatial and temporal patterns. These sources of error were more often reported for soils than for vegetation (Figures 3, 4). As was the case for sampling error, uncertainty in soil patterns across space and time was more often reported for chemistry (81%) than for soil mass (62%). Uncertainty in temporal variation within years was less commonly reported for soils than uncertainty in temporal variation across years.

In contrast, for vegetation studies, uncertainty in tissue chemistry was rated more often important for pattern within years than across years. Uncertainty in changes in biomass over time was even more often reported (71%) than uncertainty in changes in tissue chemistry (47%).

Most researchers reported some sort of uncertainty in temporal patterns (for example, error in the slope over time), but the sources they chose to report varied. For example, researchers interested in vegetation often reported the error associated with changes in biomass over time (71% of respondents), but not changes in tissue chemistry (47% of respondents). In contrast, soil researchers were more likely to report the error associated with changes in soil chemistry (88% of respondents) than the error associated with estimates of soil mass (69% of respondents). This difference may be due in part to differences in the focus of monitoring efforts. For example, repeated “grab” samples of soil for chemical analysis are relatively easy to obtain compared to estimates of soil volume and density, and it is more common to take repeated measures of tree diameter than of wood tissue chemistry (Yang and others 2016). Reporting uncertainty in changes in tissue chemistry was less common for scientists studying forests (1 of 6 respondents) than for those working in other vegetation types (6 of 9 respondents), in which vegetation is destructively harvested to assess biomass.

Researchers interested in spatial patterns in biomass commonly reported error associated with the interpolation of biomass (67% of respondents) and tissue chemistry (47% of respondents), but of the researchers that reported at least one of these, only 20% reported both. For soil scientists, 91% of those interested in spatial patterns in soil reported the uncertainty associated with their interpolation of chemistry, while only 71% reported uncertainty associated with interpolation of mass.

Practices for Handling Problematic Data

We asked respondents how they handle problems with unusable values, such as outliers and unrealistic or impossible values such as negative masses or concentrations. Fifty-five researchers described how they identify unusable values (Figure 6). The great majority of these reported using expert judgment (85%), often in combination with statistical filtering (42%). A minority (15%) reported using statistical filtering without expert judgment.

Values below detection can be common when, for example, measuring chemical concentrations of precipitation, stream water, soils, or vegetation. A surprising number of respondents (15 of 60, or 25%) reported replacing these values with zeros, which would introduce a bias, as zero is always lower than the true value. A smaller number (9) reported omitting the values, which would introduce a bias in the other direction, as omitting low values would leave the others to overestimate the true mean. Using half the detection limit was about as common (17) as using zero or omitting the values. This may or may not be closer to the truth than using zero; half the detection limit is a reasonable estimate if the frequency distribution of concentrations is linear between zero and the detection limit. Using the detection limit divided by \( \sqrt{2} \) may be better yet, assuming that the distribution resembles the tail of a normal distribution (Croghan and Egeghy 2003).
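The direction of these biases can be checked with a small simulation. The sketch below assumes a hypothetical set of lognormally distributed "true" concentrations and a hypothetical detection limit of 0.2 mg/L; neither comes from the survey, and the function name `substituted_mean` is illustrative. It compares the mean obtained under each substitution rule with the mean of the uncensored values.

```python
import math
import random

def substituted_mean(values, detection_limit, substitute):
    """Mean after replacing values below the detection limit.

    `substitute` is the replacement value, or None to omit censored values.
    """
    kept = []
    for v in values:
        if v >= detection_limit:
            kept.append(v)
        elif substitute is not None:
            kept.append(substitute)
    return sum(kept) / len(kept)

rng = random.Random(42)  # fixed seed so the sketch is repeatable
# Hypothetical "true" concentrations (mg/L), lognormally distributed.
true_values = [rng.lognormvariate(-1.0, 1.0) for _ in range(1000)]
dl = 0.2  # hypothetical detection limit (mg/L)

true_mean = sum(true_values) / len(true_values)
results = {
    "zero": substituted_mean(true_values, dl, 0.0),
    "omit": substituted_mean(true_values, dl, None),
    "DL/2": substituted_mean(true_values, dl, dl / 2),
    "DL/sqrt(2)": substituted_mean(true_values, dl, dl / math.sqrt(2)),
}
print(f"true mean: {true_mean:.4f}")
for name, value in results.items():
    print(f"{name:>10}: {value:.4f}")
```

Substituting zero necessarily gives a mean below the true mean, and omitting censored values gives a mean above it. Which of the intermediate substitutions lands closest depends on the assumed distribution, so the result for this one hypothetical data set should not be generalized.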

A variety of methods are used to fill gaps in the chemical record, as might occur when samples are judged to be unusable. For soils, the most common approach is to omit the values (67%). Soil sampling is commonly conducted with multiple spatially explicit observations, such that omitting an observation might amount to substituting the mean value. For precipitation studies, there was not a single most common approach: using regression (23%), the average of surrounding values (27%), and spatial interpolation (20%) were all common, while simulation modeling was less common (7%). Other approaches were also important (23%); these included using the values from the closest site and leaving the values unfilled. Methods for filling gaps in stream chemistry mirrored those of precipitation: the most common gap-filling method was linear interpolation (30%), followed by regression modeling (20%) and averaging surrounding values (20%). Simulation modeling was the least common method (7%), and nearly a quarter of respondents (23%) chose “other.” For gaps in precipitation volume, spatial interpolation was the most common method (36%), followed by regression (28%) and averaging surrounding values (15%).
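Linear interpolation, the most commonly reported method for stream chemistry, can be sketched as follows. The weekly concentrations and the function name `fill_gaps_linear` are hypothetical. The sketch fills only gaps that are bracketed by observations on both sides and leaves leading or trailing gaps unfilled, which is one possible design choice rather than a reported practice.

```python
def fill_gaps_linear(times, values):
    """Fill None entries by linear interpolation between bracketing observations.

    Gaps at the start or end of the record are left as None because
    there is no observation on one side to interpolate from.
    """
    filled = list(values)
    known = [i for i, v in enumerate(values) if v is not None]
    for left, right in zip(known, known[1:]):
        for i in range(left + 1, right):
            frac = (times[i] - times[left]) / (times[right] - times[left])
            filled[i] = values[left] + frac * (values[right] - values[left])
    return filled

# Hypothetical weekly stream calcium concentrations (mg/L); None marks a gap.
week = [0, 1, 2, 3, 4, 5]
ca = [1.0, None, None, 1.6, None, 1.2]
print(fill_gaps_linear(week, ca))
```

The filled values carry no uncertainty of their own in this sketch; estimating the error introduced by the interpolation would require an additional step, such as withholding known observations and comparing them with their interpolated values.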

Finally, we asked whether methods for filling gaps and identifying unusable values are standardized or left to the researcher’s discretion. Standardization was more common in studies of precipitation (72%) and stream flow (54%) than in studies of soils (42%) or vegetation (39%).

Other Considerations

Confidence in the Survey Results

We did not attempt to verify whether the researchers surveyed were accurately reporting their practices. Some of the sources of uncertainty are difficult to distinguish, and respondents may not have interpreted the questions in the way we intended. Future research will address the reporting of uncertainty sources in publications in these areas. It is interesting to note that our analysis of the calcium budget at Hubbard Brook (Campbell and others 2016) found gaps in precipitation chemistry to be the largest source of error to the net hydrologic flux, but this was ranked by researchers as the least important source of error in our survey. Likewise, our respondents ranked the runoff calculation and the stage-discharge model as the most important sources of error, but these do not appear to be important contributors to error at Hubbard Brook. Without comprehensive uncertainty budgets for many more sites, we cannot determine whether the errors reported for Hubbard Brook are unusual or whether other researchers are mistaken about error at their sites.

Future Research Needs

There is still a need for development of approaches to uncertainty analysis. Personal computers are powerful enough to implement Monte Carlo approaches to error propagation, which makes it possible to include multiple sources of uncertainty without making the assumptions needed for parametric statistics. It would be inefficient, though, to include all possible sources of error, and it is difficult to judge whether errors that are not accounted for are in fact important. Increased awareness of the possible sources of error and multiple reports of their magnitudes will improve our collective wisdom on the importance of various sources in different types of systems.

One area that requires attention is the issue of overlapping uncertainties (Figure 1 shows these relationships with red arrows). For example, measurement error affects estimates of every other error source. When measurements are taken to describe, for example, spatial pattern, we attribute the unexplained variation to sampling error. If both measurement error and sampling error are included in a Monte Carlo analysis, the measurement error is accounted for twice. Similarly, if two models are compared, we call the difference model selection error, but error in model fit can contribute to the observed difference. Model fit has traditionally been described using a confidence interval for the uncertainty in the mean and a prediction interval for the uncertainty in predicting an individual. Although the prediction interval is considered to include the confidence interval, randomly applying uncertainty in the individuals in a Monte Carlo implementation results in an underestimate of uncertainty in model fit (Yanai and others 2012), whereas including both would overestimate uncertainty. Bayesian approaches may provide a better method for propagating multiple overlapping uncertainty sources (Clark 2007).

Recommendations

There is still room for improvement in the application of uncertainty analysis in ecosystem studies. Some sources of uncertainty are well understood and commonly reported, such as the contribution of natural variation to sampling error. It is easy to overlook sources that are not routinely quantified. One way to attempt a comprehensive inventory of uncertainty sources is to scrutinize all the steps in data collection and analysis. This approach might reveal sources that are not usually reported, such as measurement of plot area for vegetation or of watershed area for runoff. Repeating the measurements is one approach to quantifying these sources (Holdaway and others 2014).

Uncertainty in chemical analyses is routinely quantified but less routinely reported, and may be confusing. There are multiple ways of reporting uncertainty in measurements, and the language used to describe them has been inconsistent (Csavina and others 2017; JCGM 2008). There are efforts to standardize terminology in the metrology community (JCGM 2008, 2012), but usage varies across disciplines. Manufacturers may overstate the performance of their instruments, and the quality metrics they provide are not always well documented (Csavina and others 2017); where possible, these should be independently verified.

Propagating multiple sources of uncertainty through an ecosystem calculation can be challenging. Monte Carlo approaches are attractive because they are computationally straightforward, even for complex calculations, and require few assumptions (IPCC 2006). If errors are independent and normally distributed, which is rarely the case, then summing in quadrature (for example, Harmel and others 2006) would give the same result as a Monte Carlo simulation. The Monte Carlo approach has an advantage over analytical uncertainty propagation because actual distributions of inputs can be used (for example, Campbell and others 2016) without making assumptions about statistical distributions.
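The agreement between the two approaches under these idealized conditions can be demonstrated with a short Python sketch. It assumes a net flux calculated as input minus output, with independent, normally distributed errors; the flux values, standard deviations, and function names are hypothetical.

```python
import math
import random
import statistics

def quadrature(*sds):
    """Combine independent standard deviations by summing in quadrature."""
    return math.sqrt(sum(sd ** 2 for sd in sds))

def monte_carlo_net_flux(inp, sd_in, out, sd_out, n_iter=50_000, seed=1):
    """Standard deviation of (input - output) from random normal draws."""
    rng = random.Random(seed)
    draws = [rng.gauss(inp, sd_in) - rng.gauss(out, sd_out) for _ in range(n_iter)]
    return statistics.stdev(draws)

# Hypothetical element fluxes (kg/ha/yr) and their standard deviations.
precip_in, sd_in = 1.0, 0.1
stream_out, sd_out = 10.0, 0.5

analytical = quadrature(sd_in, sd_out)
simulated = monte_carlo_net_flux(precip_in, sd_in, stream_out, sd_out)
print(f"quadrature: {analytical:.3f}; Monte Carlo: {simulated:.3f}")
```

To use empirical input distributions instead, the calls to `rng.gauss` could be replaced with draws from observed values (for example, with `rng.choice`), at which point the quadrature result would no longer be expected to match.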

Any approach will give biased results if it fails to account for correlated uncertainties. For example, propagating uncertainties in the slope and intercept of a regression as if they were independent overestimates the uncertainty in the statistical model. Uncertainty is underestimated if model error is applied independently to individuals in a sample; the same model error should be applied to all the individuals at each iteration of a Monte Carlo simulation (Yanai and others 2010). Modern computing makes it quick and easy to implement error propagation; the challenges are in identifying the sources of error that are important and in correctly formulating approaches to quantify them.
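The effect of applying model error independently to individuals can be illustrated with a deliberately simplified Python sketch. It assumes that model error acts as a single multiplicative error on predicted tree biomass, which stands in for resampling regression parameters in a full implementation; the plot data, the 10% error, and the function name `total_sd` are hypothetical.

```python
import random
import statistics

def total_sd(tree_biomass, relative_sd, shared, n_iter=5_000, seed=7):
    """SD of plot biomass across Monte Carlo iterations.

    shared=True draws one model error per iteration and applies it to every
    tree; shared=False draws a separate error for each tree.
    """
    rng = random.Random(seed)
    totals = []
    for _ in range(n_iter):
        if shared:
            error = rng.gauss(0.0, relative_sd)
            totals.append(sum(b * (1.0 + error) for b in tree_biomass))
        else:
            totals.append(sum(b * (1.0 + rng.gauss(0.0, relative_sd))
                              for b in tree_biomass))
    return statistics.stdev(totals)

# Hypothetical plot: 100 trees of 50 kg each, 10% uncertainty in the model.
trees = [50.0] * 100
sd_shared = total_sd(trees, 0.10, shared=True)
sd_independent = total_sd(trees, 0.10, shared=False)
print(f"shared: {sd_shared:.1f} kg; independent: {sd_independent:.1f} kg")
```

When the error is drawn separately for each tree, the errors largely cancel in the sum, and the uncertainty in the plot total shrinks roughly in proportion to the square root of the number of trees. Applying the same draw to all trees at each iteration preserves the model uncertainty in the total.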

Value of Uncertainty Analysis

While scientists recognize that uncertainties are inevitable in any estimate, non-scientists may expect a single best answer and are dissatisfied with a distribution of potential values. For example, the wide variation in climate projections among models has been depicted as a scientific weakness. Avoiding knowledge of shortcomings would be a worse impediment to progress; only by quantifying sources of uncertainty can we determine how best to allocate limited resources to improve estimates. Uncertainty analysis can improve efficiency of environmental monitoring, evaluation of research priorities, and predictions of future environmental change that are essential for risk assessment and decision making.

References

Ascough JC, Maier HR, Ravalico JK, Strudley MW. 2008. Future research challenges for incorporation of uncertainty in environmental and ecological decision-making. Ecol Model 219:383–99.

Beven K, Binley A. 2013. GLUE: 20 years on. Hydrol Process, pp. 5877–18.

Campbell JL, Yanai RD, Green MB, Likens GE, See CR, Bailey AS, Buso DC, Yang D. 2016. Uncertainty in the net hydrologic flux of calcium in a paired-watershed harvesting study. Ecosphere 7(6):e01299.

Clark JS. 2007. Models for ecological data: an introduction. Princeton: Princeton University Press.

Croghan C, Egeghy PP. 2003. Methods of dealing with values below the limit of detection using SAS. St Petersburg: Southeastern SAS User Group.

Csavina J, Roberti JA, Taylor JR, Loescher HW. 2017. Traceable measurements and calibration: a primer on uncertainty analysis. Ecosphere 8(2):e01683.

Harmel RD, Cooper RJ, Slade RM, Haney RL, Arnold JG. 2006. Cumulative uncertainty in measured streamflow and water quality data for small watersheds. Trans ASABE 49:689–701.

Harmon ME, Phillips DL, Battles J, Rassweiler A, Hall ROJ, Lauenroth WK. 2007. Quantifying uncertainty in net primary production measurements. In: Fahey TJ, Knapp AK, Eds. Principles and standards for measuring net primary production in long-term ecological studies. Cary: Oxford University Press.

Holdaway RJ, McNeill SJ, Mason NW, Carswell FE. 2014. Propagating uncertainty in plot-based estimates of forest carbon stock and carbon stock change. Ecosystems 17:627–40.

Hornbeck JW. 1965. Accuracy in streamflow measurements on the Fernow Experimental Forest. Research Note NE-29. Upper Darby, PA: U.S. Department of Agriculture, Forest Service, Northeastern Forest Experiment Station.

IPCC. 2006. 2006 IPCC Guidelines for National Greenhouse Gas Inventories. Prepared by the National Greenhouse Gas Inventories Programme. In: Eggleston HS, Buendia L, Miwa K, Ngara T, Tanabe K, Eds. Published: IGES, Japan. Volume 1, Chapter 3.

Joint Committee for Guides in Metrology (JCGM). 2008. Evaluation of measurement data—Guide to the expression of uncertainty in measurement (GUM). Genève, Switzerland: International Organization for Standardization.

Joint Committee for Guides in Metrology (JCGM). 2012. International vocabulary of metrology—Basic and general concepts and associated terms. Geneva: ISO Copyright Office.

Johnson CE, Johnson AH, Huntington TG. 1990. Sample size requirements for the determination of changes in soil nutrient pools. Soil Science 150:637–44.

Kundzewicz ZW. 1995. New uncertainty concepts in hydrology and water resources. International Hydrology Series. Cambridge: Cambridge University Press.

Regan HM, Colyvan M, Burgman MA. 2002. A taxonomy and treatment of uncertainty for ecology and conservation biology. Ecol Appl 12:618–28.

Throop HL, Archer SR, Monger HC, Waltman S. 2012. When bulk density methods matter: Implications for estimating soil organic carbon pools in rocky soils. J Arid Environ 77:66–71.

Vadeboncoeur MA, Hamburg SP, Blum JD, Pennino MJ, Yanai RD, Johnson CE. 2012. The quantitative soil pit method for measuring belowground carbon and nitrogen stocks. Soil Sci Soc Am J 76:2241–55.

Yanai RD, Levine CR, Green MB, Campbell JL. 2012. Quantifying uncertainty in forest nutrient budgets. J For 110:448–56.

Yanai RD, Battles JJ, Richardson AD, Rastetter EB, Wood DM, Blodgett C. 2010. Estimating uncertainty in ecosystem budget calculations. Ecosystems 13:239–48.

Yang D, Goodison BE, Metcalfe JR, Golubev VS, Bates R, Pangburn T, Hanson CL. 1998. Accuracy of NWS 8” standard nonrecording precipitation gauge: results and application of WMO intercomparison. J Atmos Ocean Technol 15:54–68.

Yang Y, Yanai RD, Fatemi FR, Levine CR, Lilly PJ, Briggs RD. 2016. Sources of variability in tissue chemistry in northern hardwood species. Can J For Res 46:285–96.

Acknowledgements

This project was launched at the 2015 All Scientists Meeting of the Long Term Ecological Research (LTER) Network, funded by the National Science Foundation (NSF). Mark Harmon guided our early thinking about how to identify uncertainty sources, and Janae Csavina advised us on measurement uncertainty. This paper is a contribution to the Hubbard Brook LTER and a product of QUEST (Quantifying Uncertainty in Ecosystem Studies), an NSF-funded Research Coordination Network. Please visit our website at www.quantifyinguncertainty.org for papers, sample code, presentations, tutorials, and discussion.

Author Contributions

This was a quintessentially collaborative effort, especially considering that 140 survey respondents provided the results. CRS designed the survey and analyzed the results, with input from RDY and JLC. JLC designed the graphical presentation of results, with input from CRS and RDY. RDY wrote the text, with input from JLC and CRS. All the authors learned a lot and had a good time.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Yanai, R.D., See, C.R. & Campbell, J.L. Current Practices in Reporting Uncertainty in Ecosystem Ecology. Ecosystems 21, 971–981 (2018). https://doi.org/10.1007/s10021-017-0197-x

DOI: https://doi.org/10.1007/s10021-017-0197-x