Abstract

We consider a new family of derivatives whose payoffs become strictly positive when the price of their underlying asset falls relative to its historical maximum. We derive the solution to the discretionary stopping problems arising in the context of pricing their perpetual American versions by means of an explicit construction of their value functions. In particular, we fully characterise the free-boundary functions that provide the optimal stopping times of these genuinely two-dimensional problems as the unique solutions to highly nonlinear first order ODEs that have the characteristics of a separatrix. The asymptotic growth of these free-boundary functions can take qualitatively different forms depending on parameter values, which is an interesting new feature.

1 Introduction

Put options are the most common financial derivatives that can be used by investors to hedge against asset price falls as well as by speculators betting on falling prices. In particular, out-of-the-money put options can yield strictly positive payoffs only if the price of their underlying asset falls below a percentage of its initial value. In a related spirit, equity default swaps (EDSs) pay out if the price of their underlying asset drops by more than a given percentage of its initial value (EDSs were introduced by J.P. Morgan London in 2003, and their pricing was studied by Medova and Smith [16]). Further derivatives whose payoffs depend on other quantifications of asset price falls include the European barrier and binary options studied by Carr [2] and Večeř [27], as well as the perpetual lookback American options with floating strike that were studied by Pedersen [20] and Dai [5].

In this paper, we consider a new class of derivatives whose payoffs depend on asset price falls relative to their underlying asset’s historical maximum price. Typically, a hedge fund manager’s performance fees are linked with the value of the fund exceeding a “high watermark”, which is an earlier maximum. We have therefore named this new class of derivatives “watermark” options. Deriving formulas for the risk-neutral pricing of their European-type versions is a cumbersome but standard exercise. On the other hand, the pricing of their American-type versions is a substantially harder problem, as expected. Here, we derive the complete solution to the optimal stopping problems associated with the pricing of their perpetual American versions.

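To fix ideas, we assume that the underlying asset price process \(X\) is modelled by a geometric Brownian motion given by

\[ dX_{t} = \mu X_{t} \, dt + \sigma X_{t} \, dW_{t} , \quad X_{0} = x > 0 , \tag{1.1} \]

for some constants \(\mu\) and \(\sigma\neq0\), where \(W\) is a standard one-dimensional Brownian motion. Given a point \(s \geq x\), we denote by \(S\) the running maximum process defined by

\[ S_{t} = \max\Bigl\{ s , \, \max_{0 \leq u \leq t} X_{u} \Bigr\} . \tag{1.2} \]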

In this context, we consider the discretionary stopping problems whose value functions are defined by

and

for some constants \(a, b, r, K > 0\), where \({\mathcal {T}}\) is the set of all stopping times. In practice, the inequality \(r \geq\mu\) should hold true. For instance, in a standard risk-neutral valuation context, \(r>0\) should stand for the short interest rate whereas \(r - \mu\geq0\) should be the underlying asset’s dividend yield rate. Alternatively, we could view \(\mu> 0\) as the short rate and \(r-\mu\geq0\) as additional discounting to account for counterparty risk. Here, we solve the problems without assuming that \(r \geq\mu\) because such an assumption would not simplify the analysis (see also Remark 2.2).
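To make this setting concrete, the following Python sketch simulates (1.1) exactly on a uniform time grid, computes the running maximum (1.2) on that grid and estimates the expected discounted payoff of one simple, hypothetical exercise rule: stop at the first grid time at which \(X \leq \theta S\) for a fixed \(\theta \in (0,1)\), and never stop if this has not happened by a finite horizon \(T\). We assume, in line with the special cases listed later in this introduction, that the reward associated with (1.3) is \((S_{\tau}^{b}/X_{\tau}^{a} - K)^{+}\). Any stopping time yields a lower bound for the supremum, and the grid maximum can only underestimate \(S\), which lowers the payoff when \(b>0\). The estimate is therefore, up to Monte Carlo error, a lower bound for the value function rather than a pricing method. The function names, horizon, grid and parameter values are our own illustrative choices.

```python
import numpy as np


def simulate_gbm_and_max(x, s, mu, sigma, T, n_steps, n_paths, rng):
    """Exact samples of the GBM (1.1) on a uniform grid, plus the running
    maximum (1.2) computed on that grid (it underestimates the continuous max)."""
    dt = T / n_steps
    z = rng.standard_normal((n_paths, n_steps))
    increments = (mu - 0.5 * sigma**2) * dt + sigma * np.sqrt(dt) * z
    log_x = np.log(x) + np.concatenate(
        [np.zeros((n_paths, 1)), np.cumsum(increments, axis=1)], axis=1
    )
    X = np.exp(log_x)
    S = np.maximum(s, np.maximum.accumulate(X, axis=1))  # S_0 = max(s, x)
    t = np.linspace(0.0, T, n_steps + 1)
    return t, X, S


def threshold_rule_value(x, s, a, b, r, K, mu, sigma, theta,
                         T=20.0, n_steps=1000, n_paths=1000, seed=0):
    """Monte Carlo estimate of E[e^{-r tau} (S_tau^b / X_tau^a - K)^+ 1{tau < inf}]
    for the hypothetical rule tau = first grid time with X <= theta * S
    (tau = inf if this does not happen by time T)."""
    rng = np.random.default_rng(seed)
    t, X, S = simulate_gbm_and_max(x, s, mu, sigma, T, n_steps, n_paths, rng)
    hit = X <= theta * S
    stopped = hit.any(axis=1)
    first = hit.argmax(axis=1)  # index of the first True (0 if there is none)
    rows = np.arange(n_paths)
    X_tau, S_tau = X[rows, first], S[rows, first]
    payoff = np.exp(-r * t[first]) * np.maximum(S_tau**b / X_tau**a - K, 0.0)
    return float(np.mean(np.where(stopped, payoff, 0.0)))


print(threshold_rule_value(x=1.0, s=1.0, a=1.0, b=0.5, r=0.05, K=0.5,
                           mu=0.03, sigma=0.2, theta=0.7))
```

Varying \(\theta\) in such a sketch only explores a one-parameter family of suboptimal rules; the optimal rule characterised in Sect. 2 stops at a curved free boundary rather than at a constant ratio \(X/S\).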

Watermark options can be used for the same reasons as the existing options we have discussed above. In particular, they could be used to hedge against relative asset price falls as well as to speculate by betting on prices falling relative to their historical maximum. For instance, they could be used by institutions that are constrained to hold investment-grade assets only and wish to trade products that have risk characteristics akin to the ones of speculative-grade assets (see Medova and Smith [16] for further discussion that is relevant to such an application). Furthermore, these options can provide useful risk-management instruments, particularly when faced with the possibility of an asset bubble burst. Indeed, the payoffs of watermark options increase as the running maximum process \(S\) increases and the price process \(X\) decreases. As a result, the more the asset price increases before dropping to a given level, the deeper they may be in the money.

Watermark options can also be of interest as hedging instruments to firms investing in real assets. To fix ideas, consider a firm that invests in a project producing a commodity whose price or demand is modelled by the process \(X\). The firm’s future revenue depends on the stochastic evolution of the economic indicator \(X\), which can collapse for reasons such as extreme changes in the global economic environment (see e.g. the recent slump in commodity prices) and/or reasons associated with the emergence of disruptive new technologies (see e.g. the fate of DVDs or firms such as Blackberry or NOKIA). In principle, such a firm could diversify risk by going long in watermark options.

The applications discussed above justify the introduction of watermark options as derivative structures. These options can also provide alternatives to existing derivatives that can better fit a range of investors’ risk preferences. For instance, the version associated with (1.5) effectively identifies with the Russian option (see Remark 3.5). It is straightforward to check that if \(s \geq1\), then the price of the option is increasing as the parameter \(b\) increases, ceteris paribus. In this case, the watermark option is cheaper than the corresponding Russian option if \(b< a\). On the other hand, increasing values of the strike price \(K\) result in ever lower option prices. We have not attempted any further analysis in this direction because this involves rather lengthy calculations and is beyond the scope of this paper.

The parameters \(a, b > 0\) and \(K \geq0\) can be used to fine-tune different risk characteristics. For instance, the choice of the relative value \(b/a\) can reflect the weight assigned to the underlying asset’s historical best performance relative to the asset’s current value. In particular, it is worth noting that larger (resp. smaller) values of \(b/a\) attenuate (resp. magnify) the payoff’s volatility that is due to changes of the underlying asset’s price. In the context of the problem with value function given by (1.5), the choice of \(a\), \(b\) can be used to factor in a power utility of the payoff received by the option’s holder. Indeed, if we set \(a = \tilde{a} q\) and \(b = \tilde{b} q\), then \(S_{\tau}^{b} / X_{\tau}^{a} = ( S_{\tau}^{\tilde{b}} / X_{\tau}^{\tilde{a}} )^{q}\) is the CRRA utility with risk-aversion parameter \(1-q\) of the payoff \(S_{\tau}^{\tilde{b}} / X_{\tau}^{\tilde{a}}\) received by the option’s holder if the option is exercised at time \(\tau\).

From a modelling point of view, the use of geometric Brownian motion as an asset price process, which is standard in the mathematical finance literature, is an approximation that is largely justified by its tractability. In fact, such a process is not an appropriate model for an asset price that may be traded as a bubble. In view of the applications we have discussed above, the pricing of watermark options when the underlying asset’s price process is modelled by diffusions associated with local volatility models that have been considered in the context of financial bubbles (see e.g. Cox and Hobson [3]) presents an interesting problem for future research.

The Russian options introduced and studied by Shepp and Shiryaev [25, 26] are the special cases that arise if \(a=0\) and \(b=1\) in (1.5). In fact, the value function given by (1.5) identifies with the value function of a Russian option for any \(a, b > 0\) (see Remark 3.5). The lookback American options with floating strike that were studied by Pedersen [20] and Dai [5] are the special cases that arise for the choices \(a=b=1\) and \(a=b=K=1\) in (1.4), respectively (see also Remark 3.3). Other closely related problems that have been studied in the literature include the well-known perpetual American put options (\(a = 1\), \(b = 0\) in (1.4)), which were solved by McKean [15], the lookback American options studied by Guo and Shepp [10] and Pedersen [20] (\(a=0\), \(b=1\) in (1.3)), and the \(\pi\)-options introduced and studied by Guo and Zervos [11] (\(a < 0\) and \(b>0\) in (1.3)).

Further works on optimal stopping problems involving a one-dimensional diffusion and its running maximum (or minimum) include Jacka [13], Dubins et al. [8], Peskir [21], Graversen and Peskir [9], Dai and Kwok [6, 7], Hobson [12], Cox et al. [4], Alvarez and Matomäki [1], and references therein. Furthermore, Peskir [22] solves an optimal stopping problem involving a one-dimensional diffusion, its running maximum as well as its running minimum. Papers on optimal stopping problems with an infinite time horizon involving spectrally negative Lévy processes and their running maximum (or minimum) include Ott [18, 19], Kyprianou and Ott [14], and references therein.

In Sect. 2, we solve the optimal stopping problem whose value function is given by (1.3) for \(a =1\) and \(b \in(0, \infty) \setminus\{ 1 \}\). To this end, we use the well-known approach of constructing an appropriately smooth solution to the problem’s variational inequality that satisfies the so-called transversality condition. In particular, we fully determine the free-boundary function separating the “waiting” region from the “stopping” region as the unique solution to a first order ODE that has the characteristics of a separatrix. It turns out that this free-boundary function conforms with the maximality principle introduced by Peskir [21]: it is the maximal solution to the ODE under consideration that does not intersect the diagonal part of the state space’s boundary. The asymptotic growth of this free-boundary function is notably different in each of the cases \(1< b\) and \(1>b\), which is a surprising result (see Remark 2.6).

In Sect. 3, we use an appropriate change of probability measure to solve the optimal stopping problem whose value function is given by (1.4) for \(a =1\) and \(b \in(0, \infty)\setminus\{ 1 \}\) by reducing it to the problem studied in Sect. 2. We also outline how the optimal stopping problem defined by (1.1)–(1.3) for \(a = b =1\) reduces to the problem given by (1.1), (1.2) and (1.4) for \(a = b =1\), which is the one arising in the pricing of a perpetual American lookback option with floating strike that has been solved by Pedersen [20] and Dai [5] (see Remark 3.3). We then explain how a simple re-parametrisation reduces the apparently more general optimal stopping problems defined by (1.1)–(1.4) for any \(a>0\), \(b>0\) to the corresponding cases with \(a=1\), \(b>0\) (see Remark 3.4). Finally, we show that the optimal stopping problem defined by (1.1), (1.2) and (1.5) reduces to the one arising in the context of pricing a perpetual Russian option that has been solved by Shepp and Shiryaev [25, 26] (see Remark 3.5).

2 The solution to the main optimal stopping problem

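We now solve the optimal stopping problem defined by (1.1)–(1.3) for \(a =1\) and \(b = p \in(0, \infty) \setminus\{ 1 \}\), namely, the problem defined by (1.1), (1.2) and

\[ v(x,s) = \sup_{\tau\in{\mathcal {T}}} {\mathbb {E}} \Bigl[ e^{-r\tau} \Bigl( \frac{S_{\tau}^{p}}{X_{\tau}} - K \Bigr)^{+} {\mathbf{1}}_{\{ \tau< \infty\}} \Bigr] . \tag{2.1} \]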

To fix ideas, we assume in what follows that a filtered probability space \((\varOmega,\! {\mathcal {F}},\! ({\mathcal {F}}_{t}),\! {\mathbb {P}})\) satisfying the usual conditions and carrying a standard one-dimensional \(({\mathcal {F}}_{t})\)-Brownian motion \(W\) has been fixed. We denote by \({\mathcal {T}}\) the set of all \(({\mathcal {F}}_{t})\)-stopping times.

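The solution to the optimal stopping problem that we consider involves the general solution to the ODE

\[ \tfrac{1}{2} \sigma^{2} x^{2} w''(x) + \mu x w'(x) - r w(x) = 0 , \tag{2.2} \]

which is given by

\[ w(x) = A x^{m} + B x^{n} , \tag{2.3} \]

for some \(A, B \in{\mathbb {R}}\), where the constants \(m < 0 < n\) are the solutions to the quadratic equation

\[ \tfrac{1}{2} \sigma^{2} k^{2} + \bigl( \mu- \tfrac{1}{2} \sigma^{2} \bigr) k - r = 0 , \tag{2.4} \]

given by

\[ m, n = - \frac{\mu- \frac{1}{2} \sigma^{2}}{\sigma^{2}} \mp\sqrt{ \biggl( \frac{\mu- \frac{1}{2} \sigma^{2}}{\sigma^{2}} \biggr)^{2} + \frac{2r}{\sigma^{2}} } . \tag{2.5} \]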
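The roots \(m < 0 < n\) are used throughout this section, so a short numerical helper may be useful. The Python sketch below (the function names are our own) computes them as the roots of \(\tfrac12\sigma^2k^2 + (\mu-\tfrac12\sigma^2)k - r = 0\) and checks that \(x^m\) and \(x^n\) solve \(\tfrac12\sigma^2x^2w'' + \mu x w' - rw = 0\). The parameter values are purely illustrative.

```python
import math


def characteristic_roots(mu: float, r: float, sigma: float) -> tuple[float, float]:
    """Return (m, n), the roots m < 0 < n of
    0.5*sigma**2*k**2 + (mu - 0.5*sigma**2)*k - r = 0 (requires r > 0, sigma != 0)."""
    if r <= 0 or sigma == 0:
        raise ValueError("need r > 0 and sigma != 0")
    beta = (mu - 0.5 * sigma**2) / sigma**2
    root = math.sqrt(beta**2 + 2.0 * r / sigma**2)
    return -beta - root, -beta + root


def ode_residual(k: float, x: float, mu: float, r: float, sigma: float) -> float:
    """Residual of 0.5*sigma^2*x^2*w'' + mu*x*w' - r*w for w(x) = x**k."""
    w = x**k
    w_prime = k * x ** (k - 1)
    w_second = k * (k - 1) * x ** (k - 2)
    return 0.5 * sigma**2 * x**2 * w_second + mu * x * w_prime - r * w


m, n = characteristic_roots(mu=0.03, r=0.05, sigma=0.2)
print(m, n)  # approximately -1.850781 1.350781
```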

We make the following assumption.

Assumption 2.1

The constants \(p \in(0, \infty) \setminus \{ 1 \}\), \(r, K > 0\), \(\mu\in{\mathbb {R}}\) and \(\sigma\neq0\) are such that

Remark 2.2

We can check that given any \(r>0\), the equivalences

hold true. It follows that Assumption 2.1 holds true for a range of parameter values with \(r \geq\mu\), which is the case associated with the applications we have discussed in the introduction, as well as for a range of parameter values with \(r < \mu\).

We prove the following result in the Appendix.

Lemma 2.3

Consider the optimal stopping problem defined by (1.1), (1.2) and (2.1). If the problem data is such that either \(m+1 > 0\) or \(n+1-p < 0\), then \(v \equiv\infty\).
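Lemma 2.3 is easy to check numerically. The Python sketch below (hypothetical helper names, illustrative parameters) recomputes \(m<0<n\) as the roots of \(\tfrac12\sigma^2k^2 + (\mu-\tfrac12\sigma^2)k - r = 0\) and returns `True` exactly when the lemma's sufficient condition \(m+1>0\) or \(n+1-p<0\) holds. A `False` answer does not by itself establish that \(v\) is finite; that requires the full Assumption 2.1, whose conditions are not reproduced here.

```python
import math


def characteristic_roots(mu: float, r: float, sigma: float) -> tuple[float, float]:
    """Roots m < 0 < n of 0.5*sigma**2*k**2 + (mu - 0.5*sigma**2)*k - r = 0."""
    beta = (mu - 0.5 * sigma**2) / sigma**2
    root = math.sqrt(beta**2 + 2.0 * r / sigma**2)
    return -beta - root, -beta + root


def lemma_2_3_gives_infinite_value(p: float, mu: float, r: float, sigma: float) -> bool:
    """True if the sufficient condition of Lemma 2.3 for v = infinity holds."""
    m, n = characteristic_roots(mu, r, sigma)
    return (m + 1 > 0) or (n + 1 - p < 0)


print(lemma_2_3_gives_infinite_value(p=0.5, mu=0.03, r=0.05, sigma=0.2))  # False
print(lemma_2_3_gives_infinite_value(p=3.0, mu=0.03, r=0.05, sigma=0.2))  # True
```

In the second call, \(n \approx 1.35\), so \(n+1-p<0\): the reward grows along the diagonal \(x=s\) faster than discounting can compensate.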

We solve the problem studied in this section by constructing a classical solution \(w\) to the variational inequality

with boundary condition

that identifies with the value function \(v\). Given such a solution, we denote by \({\mathcal {S}}\) and \({\mathcal {W}}\) the so-called stopping and waiting regions, which are defined by

In particular, we show that the first hitting time \(\tau_{\mathcal {S}}\) of \({\mathcal {S}}\), defined by

is an optimal stopping time.

To construct the required solution to (2.6) and (2.7), we first note that it is not optimal to stop whenever the state process \((X,S)\) takes values in the set

On the other hand, the equivalences

imply that the set

should be a subset of the continuation region \({\mathcal {W}}\) as well. Furthermore, since

the half-line \(\{ (x,s) \in{\mathbb {R}}^{2} : x = s > 0 \}\), which is part of the state space’s boundary, should also be a subset of \({\mathcal {W}}\) because the boundary condition (2.7) cannot hold otherwise.

In view of the preceding observations, we look for a strictly increasing function \(H: {\mathbb {R}}_{+} \rightarrow{\mathbb {R}}\) satisfying

where

such that

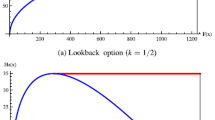

(see Fig. 1).

To proceed further, we recall the fact that the function \(w(\cdot, s)\) should satisfy the ODE (2.2) in the interior of the waiting region \({\mathcal {W}}\). Since the general solution to (2.2) is given by (2.3), we therefore look for functions \(A\) and \(B\) such that

To determine the free-boundary function \(H\) and the functions \(A\), \(B\), we first note that the boundary condition (2.7) requires that

where \(\dot{A}\), \(\dot{B}\) denote the first derivatives of \(A\), \(B\). In view of the regularity of the optimal stopping problem’s reward function, we expect that the so-called “principle of smooth fit” should hold true. Accordingly, we require that \(w(\cdot, s)\) should be \(C^{1}\) at the free-boundary point \(H(s)\) for all \(s>0\). This requirement yields the system of equations

which is equivalent to
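As a sketch of what this system amounts to, assume (consistently with the surrounding discussion, although the displays are not reproduced here) that the reward in (2.1) is \((s^{p}/x - K)^{+}\) and that the stopping region is \(\{ x \leq H(s) \}\), where this reward is positive. Writing \(H\) for \(H(s)\), continuity of \(w(\cdot,s)\) and of its \(x\)-derivative (multiplied by \(H\)) at \(x = H(s)\) give

\[ \begin{aligned} A(s) H^{m} + B(s) H^{n} &= s^{p} H^{-1} - K , \\ m A(s) H^{m} + n B(s) H^{n} &= - s^{p} H^{-1} , \end{aligned} \]

a linear system in \(A(s)\), \(B(s)\) whose solution is

\[ A(s) = \frac{(n+1) s^{p} H^{-m-1} - nK H^{-m}}{n-m} , \qquad B(s) = \frac{mK H^{-n} - (m+1) s^{p} H^{-n-1}}{n-m} . \]

These are our own calculations and should agree with (2.13) and (2.14) under the stated assumptions. Since \(m<0<n\), it follows that \(A(s)>0\) if and only if \(H(s) < \frac{n+1}{nK} s^{p}\), and that \(B(s)>0\) if and only if \(H(s) < \frac{m+1}{mK} s^{p}\). The latter ratio coincides with the constant \(c\) of Lemma 2.4 (I).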

Differentiating these expressions with respect to \(s\) and substituting the results for \(\dot{A}\) and \(\dot{B}\) in (2.12), we can see that \(H\) should satisfy the ODE

where

In view of (2.9), we need to determine in the domain

the solution to (2.15) that satisfies \(H(0) = 0\). To this end, we cannot just solve (2.15) with the initial condition \(H(0) = 0\) because \({\mathcal {H}}(0,0)\) is not well defined. Therefore, we need to consider all solutions to (2.15) in \({\mathcal {D}}_{H}\) and identify the unique one that coincides with the actual free-boundary function \(H\). It turns out that this solution is a separatrix. Indeed, it divides \({\mathcal {D}}_{H}\) into two open domains \({\mathcal {D}}_{H}^{u}\) and \({\mathcal {D}}_{H}^{l}\) such that the solution to (2.15) that passes through any point in \({\mathcal {D}}_{H}^{u}\) hits the boundary of \({\mathcal {D}}_{H}\) at some finite \(\hat{s}\), while the solution to (2.15) that passes through any point in \({\mathcal {D}}_{H}^{l}\) has asymptotic growth as \(s \rightarrow\infty\) such that the corresponding solution to the variational inequality (2.6) does not satisfy the transversality condition that is captured by the limits on the right-hand side of (2.27) (see also Remark 2.5 and Figs. 2 and 3).

Fig. 2 (\(\pmb{p<1}\)) Illustration of Lemma 2.4 (I) for \(s_{\circ}(\delta) = s^{\circ}(\delta)\). The free-boundary function \(H(\cdot) = H(\cdot; s_{\circ})\) that separates the stopping region \({\mathcal {S}}\) from the waiting region \({\mathcal {W}}\) is plotted in red. The intersection of \({\mathbb {R}}^{2}\) with the boundary of the domain \({\mathcal {D}}_{H}\) in which we consider solutions to the ODE (2.15) satisfying (2.18) is designated by green. Every solution to the ODE (2.15) satisfying (2.18) with \(s_{*} \in(s_{\dagger}, s_{\circ})\) (resp. \(s_{*} > s_{\circ}\)) hits the upper part of the boundary of \({\mathcal {D}}_{H}\) in the picture (resp. has asymptotic growth as \(s \rightarrow\infty\) of a different order than that of the free boundary); such solutions are plotted in blue

Fig. 3 (\(\pmb{p>1}\)) Illustration of Lemma 2.4 (II) for \(s_{\circ}(\delta) = s^{\circ}(\delta)\). The free-boundary function \(H(\cdot) = H(\cdot; s_{\circ})\) that separates the stopping region \({\mathcal {S}}\) from the waiting region \({\mathcal {W}}\) is plotted in red. The intersection of \({\mathbb {R}}^{2}\) with the boundary of the domain \({\mathcal {D}}_{H}\) in which we consider solutions to the ODE (2.15) satisfying (2.18) is designated by green. Every solution to the ODE (2.15) satisfying (2.18) with \(s_{*} \in(s_{\dagger}, s_{\circ})\) (resp. \(s_{*} > s_{\circ}\)) hits the upper part of the boundary of \({\mathcal {D}}_{H}\) in the picture (resp. has asymptotic growth as \(s \rightarrow\infty\) of a different order than that of the free boundary); such solutions are plotted in blue

To identify this separatrix, we fix any \(\delta> 0\) and consider the solution to (2.15) that is such that

where \(s_{\dagger}(\delta) \geq\delta\) is the intersection of \(\{ (x,s) \in{\mathbb {R}}^{2} : x = \delta\text{ and } s>0 \}\) with the boundary of \({\mathcal {D}}_{H}\), which is the unique solution to the equation

The following result, which we prove in the Appendix, is primarily concerned with identifying \(s_{*} > s_{\dagger}\) such that the solution to (2.15) that passes through \((\delta, s_{*})\), i.e., satisfies (2.18), coincides with the separatrix. Using purely analytical techniques, we have not managed to show that this point \(s_{*}\) is unique, i.e., that there exists a separatrix rather than a funnel. For this reason, we establish the result for an interval \([s_{\circ}, s^{\circ}]\) of possible values for \(s_{*}\) such that the corresponding solution to (2.15) has the required properties. The fact that \(s_{\circ}= s^{\circ}\) follows immediately from Theorem 2.8, our main result, thanks to the uniqueness of the optimal stopping problem’s value function.
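The numerical counterpart of identifying \(s_{*}\) is a shooting method: classify each trial solution by its fate and bisect on the initial value. Since (2.15) is not reproduced here, the Python sketch below applies this idea to a toy ODE of our own choosing, \(y'(t) = y(t) - t\). Its solutions are \(y(t) = t + 1 + (y(0) - 1) e^{t}\): initial values above 1 escape upwards, those below 1 escape downwards, and \(y(t) = t + 1\) is the separatrix. The sketch only illustrates the technique and is not the construction used in Lemma 2.4.

```python
def toy_rhs(t: float, y: float) -> float:
    """Toy ODE y' = y - t (hypothetical example); its separatrix is y = t + 1."""
    return y - t


def classify(y0: float, h: float = 0.05, t_max: float = 60.0, bound: float = 1e6) -> int:
    """Integrate the toy ODE from y(0) = y0 with classical RK4.
    Return +1 if the solution escapes above `bound`, -1 if it escapes below
    `-bound`, and 0 if neither happens before t_max."""
    t, y = 0.0, y0
    for i in range(int(round(t_max / h))):
        k1 = toy_rhs(t, y)
        k2 = toy_rhs(t + h / 2, y + h * k1 / 2)
        k3 = toy_rhs(t + h / 2, y + h * k2 / 2)
        k4 = toy_rhs(t + h, y + h * k3)
        y += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t = (i + 1) * h
        if y > bound:
            return 1
        if y < -bound:
            return -1
    return 0


def shoot_separatrix(lo: float, hi: float, tol: float = 1e-9) -> float:
    """Bisection on the initial value: `lo` must escape downwards, `hi` upwards."""
    if classify(lo) != -1 or classify(hi) != 1:
        raise ValueError("bracket does not straddle the separatrix")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        fate = classify(mid)
        if fate == 1:
            hi = mid
        elif fate == -1:
            lo = mid
        else:  # undecided before t_max: numerically on the separatrix
            return mid
    return 0.5 * (lo + hi)


print(shoot_separatrix(0.0, 2.0))  # approximately 1.0
```

If the set of initial values whose solutions never escape were an interval of positive length (a funnel rather than a separatrix), this bisection would return some point of that interval without detecting the ambiguity. This is why the uniqueness of \(s_{*}\) is not taken for granted above.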

Lemma 2.4

Suppose that the problem data satisfy Assumption 2.1. Given any \(\delta> 0\), there exist points \(s_{\circ}= s_{\circ}(\delta)\) and \(s^{\circ}= s^{\circ}(\delta)\) satisfying

where \(s_{\dagger}(\delta) \geq\delta\) is the unique solution to (2.19), such that the following statements hold true for each \(s_{*} \in[s_{\circ}, s^{\circ}]\):

(I) If \(p \in(0,1)\), the ODE (2.15) has a unique solution

satisfying (2.18) that is a strictly increasing function such that

where \(c = \frac{m+1}{mK} \in(0, \varGamma)\) (see Fig. 2).

(II) If \(p>1\), the ODE (2.15) has a unique solution

satisfying (2.18) that is a strictly increasing function such that

where \(c = \bigl( \frac{(m+1) (p-n-1)}{(n+1) (p-m-1)} \bigr) ^{1/(n-m)} \in(0,1)\) (see Fig. 3).

(III) The corresponding functions \(A\) and \(B\) defined by (2.13) and (2.14) are both strictly positive.
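The constants \(c\) in (I) and (II) are explicit. The Python sketch below (hypothetical function names, illustrative parameter values) evaluates them and can be used to check the dependence on \(K\) noted in Remark 2.6: for \(p<1\), \(c\) scales like \(K^{-1}\), while for \(p>1\) it does not involve \(K\). The function only rejects parameters for which the sufficient condition of Lemma 2.3 (or its boundary case) holds; it does not check Assumption 2.1 in full.

```python
import math


def characteristic_roots(mu: float, r: float, sigma: float) -> tuple[float, float]:
    """Roots m < 0 < n of 0.5*sigma**2*k**2 + (mu - 0.5*sigma**2)*k - r = 0."""
    beta = (mu - 0.5 * sigma**2) / sigma**2
    root = math.sqrt(beta**2 + 2.0 * r / sigma**2)
    return -beta - root, -beta + root


def lemma_2_4_constant(p: float, K: float, mu: float, r: float, sigma: float) -> float:
    """The constant c of Lemma 2.4 (I) if p < 1, or of Lemma 2.4 (II) if p > 1."""
    if p <= 0 or p == 1 or K <= 0:
        raise ValueError("need p in (0, inf) minus {1} and K > 0")
    m, n = characteristic_roots(mu, r, sigma)
    if m + 1 >= 0 or n + 1 - p <= 0:
        raise ValueError("Lemma 2.3 (or its boundary case) applies")
    if p < 1:
        return (m + 1) / (m * K)
    ratio = (m + 1) * (p - n - 1) / ((n + 1) * (p - m - 1))
    return ratio ** (1.0 / (n - m))


print(lemma_2_4_constant(p=0.5, K=1.0, mu=0.03, r=0.05, sigma=0.2))
print(lemma_2_4_constant(p=2.0, K=1.0, mu=0.03, r=0.05, sigma=0.2))
```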

Remark 2.5

Beyond the results given in the last lemma, we can prove the following:

(a) Given any \(s_{*} \in(s_{\dagger}, s_{\circ})\), there exist a point \(\hat{s} = \hat{s} (s_{*}) \in(0, \infty)\) and a function \(H(\cdot) := H(\cdot; s_{*}) : (0, \hat{s}) \rightarrow{\mathcal {D}}_{H}\) that satisfies the ODE (2.15) as well as (2.18). In particular, \(H\) is strictly increasing and \(\lim_{s \uparrow\hat{s}} H(s) = (\varGamma\hat{s}^{p}) \wedge\hat{s}\).

(b) Given any \(s_{*} > s^{\circ}\), there exists a function \(H(\cdot) := H(\cdot; s_{*}) : (0, \infty) \rightarrow{\mathcal {D}}_{H}\) which is strictly increasing and satisfies the ODE (2.15) as well as (2.18).

Any solution to (2.15) that is as in (a) does not identify with the actual free-boundary function \(H\) because the corresponding solution to the variational inequality (2.6) does not satisfy the boundary condition (2.7). On the other hand, any solution to (2.15) that is as in (b) also does not identify with the actual free-boundary function \(H\) because we can show that its asymptotic growth as \(s \rightarrow\infty\) is such that the corresponding solution \(w\) to the variational inequality (2.6) does not satisfy (2.24), and the transversality condition, which is captured by the limits on the right-hand side of (2.27), is also not satisfied. To keep the paper at a reasonable length, we do not expand on these issues, which are not needed for our main results thanks to the uniqueness of the value function.

Remark 2.6

The asymptotic growth of \(H(s)\) as \(s \rightarrow\infty\) takes qualitatively different forms in each of the cases \(p<1\) and \(p>1\) (recall that the parameter \(p\) stands for the ratio \(b/a\), where the parameters \(a\), \(b\) are as in the introduction (see also Remark 3.4)). Indeed, if we denote the free-boundary function by \(H(\cdot; p)\) to indicate its dependence on the parameter \(p\), then

where \(c>0\) is the constant appearing in (I) or (II) of Lemma 2.4, according to the case. Furthermore, \(c\) is proportional to (resp. independent of) \(K^{-1}\) if \(p<1\) (resp. \(p>1\)).

We now consider a solution \(H\) to the ODE (2.15) that is as in Lemma 2.4. In the following result, which we prove in the Appendix, we show that the function \(w\) defined by

is such that

and satisfies (2.6) and (2.7).

Lemma 2.7

Suppose that the problem data satisfy Assumption 2.1. Also, consider any \(s_{*} \in[s_{\circ}(\delta), s^{\circ}(\delta) ]\), where \(s_{\circ}(\delta) \leq s^{\circ}(\delta)\) are as in Lemma 2.4 for some \(\delta> 0\), and let \(H(\cdot) = H(\cdot; s_{*})\) be the corresponding solution to the ODE (2.15) that satisfies (2.18). The function \(w\) defined by (2.20) and (2.21) is strictly positive, satisfies the variational inequality (2.6) outside the set \(\{ (x,s) \in{\mathbb {R}}^{2} : s > 0\textit{ and }x = H(s) \}\) as well as the boundary condition (2.7), and is such that (2.22) and (2.23) hold true. Furthermore, given any \(s>0\), there exists a constant \(C = C (s) > 0\) such that

where

We can now prove our main result.

Theorem 2.8

Consider the optimal stopping problem defined by (1.1), (1.2) and (2.1), and suppose that the problem data satisfy Assumption 2.1. The optimal stopping problem’s value function \(v\) identifies with the solution \(w\) to the variational inequality (2.6) with boundary condition (2.7) described in Lemma 2.7, and the first hitting time \(\tau_{\mathcal {S}}\) of the stopping region \({\mathcal {S}}\), which is defined as in (2.8), is optimal. In particular, \(s_{\circ}(\delta) = s^{\circ}(\delta)\) for all \(\delta> 0\), where \(s_{\circ}\leq s^{\circ}\) are as in Lemma 2.4.

Proof

Fix any initial condition \((x,s) \in{\mathcal {S}}\cup{\mathcal {W}}\). Using Itô’s formula, the fact that \(S\) increases only on the set \(\{ X = S \}\) and the boundary condition (2.7), we can see that

where

It follows that

Given a stopping time \(\tau\in{\mathcal {T}}\) and a localising sequence of bounded stopping times \((\tau_{j})\) for the local martingale \(M\), these calculations imply that

In view of the fact that \(w\) satisfies the variational inequality (2.6) and by Fatou’s lemma, we can see that

and the inequality

follows.

To prove the reverse inequality and establish the optimality of \(\tau_{\mathcal {S}}\), we note that given any constant \(T>0\), (2.25) with \(\tau= \tau_{\mathcal {S}}\wedge T\) and the definition (2.8) of \(\tau_{\mathcal {S}}\) yield

In view of (2.24), Lemma A.1 in the Appendix, the fact that \(S\) is an increasing process and the dominated and monotone convergence theorems, we can see that

Combining this result with (2.26), we obtain the identity \(v = w\) and the optimality of \(\tau_{\mathcal {S}}\). Finally, given any \(\delta> 0\), the identity \(s_{\circ}(\delta) = s^{\circ}(\delta)\) follows from the uniqueness of the value function \(v\). □
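The first display of the proof comes from Itô's formula. As a formal sketch, assume that \(w\) is regular enough for Itô's formula to apply (the required regularity is what Lemma 2.7 provides) and write \({\mathcal {L}}w := \tfrac{1}{2}\sigma^{2} x^{2} w_{xx} + \mu x w_{x} - r w\) (our shorthand). The expansion then reads

\[ e^{-rT} w(X_{T},S_{T}) = w(x,s) + \int_{0}^{T} e^{-rt} {\mathcal {L}}w(X_{t},S_{t}) \, dt + \int_{0}^{T} e^{-rt} w_{s}(S_{t},S_{t}) \, dS_{t} + M_{T} , \qquad M_{T} = \sigma\int_{0}^{T} e^{-rt} X_{t} w_{x}(X_{t},S_{t}) \, dW_{t} , \]

where the fact that \(S\) increases only on \(\{ X = S \}\) has been used to replace \(w_{s}(X_{t},S_{t})\) by \(w_{s}(S_{t},S_{t})\) in the \(dS_{t}\) integral. No cross-variation term involving \(S\) appears because \(S\) is continuous and of finite variation. Assuming that the boundary condition (2.7) is \(w_{s}(s,s) = 0\), which for \(w(x,s) = A(s)x^{m} + B(s)x^{n}\) is the requirement \(\dot{A}(s) s^{m} + \dot{B}(s) s^{n} = 0\) on \(\dot{A}\), \(\dot{B}\), the \(dS_{t}\) integral vanishes.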

3 Ramifications and connections with the perpetual American lookback with floating strike, and with Russian options

We now solve the optimal stopping problem defined by (1.1), (1.2) and (1.4) for \(a =1\) and \(b = p \in(0, \infty) \setminus\{ 1 \}\), i.e., the problem given by (1.1), (1.2) and

by means of an appropriate change of probability measure that reduces it to the one we solved in Sect. 2. To this end, we denote

and we make the following assumption that mirrors Assumption 2.1.

Assumption 3.1

The constants \(p \in(0, \infty) \setminus\{ 1 \}\), \(r, K > 0\), \(\mu\in{\mathbb {R}}\) and \(\sigma\neq0\) are such that

where \(\tilde{m} < 0 < \tilde{n}\) are the solutions to the quadratic equation (2.4), which are given by (2.5) with \(\tilde{\mu}\) and \(\tilde{r}\) defined by (3.2) in place of \(\mu\) and \(r\).

Theorem 3.2

Consider the optimal stopping problem defined by (1.1), (1.2) and (3.1) and suppose that the problem data satisfy Assumption 3.1. The problem’s value function is given by

and the first hitting time \(\tau_{\mathcal {S}}\) of the stopping region \({\mathcal {S}}\) is optimal, where \(v\) is the value function of the optimal stopping problem defined by (1.1), (1.2) and (2.1), given by Theorem 2.8, and \({\mathcal {S}}\) is defined by (2.11) with \(\tilde{\mu}\), \(\tilde{r}\) defined by (3.2) and the associated \(\tilde{m}\), \(\tilde{n}\) in place of \(\mu\), \(r\) and \(m\), \(n\).

Proof

We are going to establish this result by means of an appropriate change of probability measure. We therefore consider a canonical underlying probability space because the problem we solve is over an infinite time horizon. To this end, we assume that \(\varOmega= C({\mathbb {R}}_{+})\), the space of continuous functions mapping \({\mathbb {R}}_{+}\) into ℝ, and we denote by \(W\) the coordinate process on this space, given by \(W_{t} (\omega) = \omega(t)\). Also, we denote by \(({\mathcal {F}}_{t})\) the right-continuous regularisation of the natural filtration of \(W\), which is defined by \({\mathcal {F}}_{t} = \bigcap_{\varepsilon> 0} \sigma( W_{s} , \, s \in[0, t+\varepsilon] )\), and we set \({\mathcal {F}}= \bigvee_{t \geq0} {\mathcal {F}}_{t}\). In particular, we note that the right-continuity of \(({\mathcal {F}}_{t})\) implies that the first hitting time of any open or closed set by an \({\mathbb {R}}^{d}\)-valued continuous \(({\mathcal {F}}_{t})\)-adapted process is an \(({\mathcal {F}}_{t})\)-stopping time (see e.g. Protter [24, Theorems I.3 and I.4]). Furthermore, we denote by ℙ (resp. \(\tilde{\mathbb {P}}\)) the probability measure on \((\varOmega, {\mathcal {F}})\) under which the process \(W\) (resp. the process \(\tilde{W}\) defined by \(\tilde{W}_{t} = - \sigma t + W_{t}\)) is a standard \(({\mathcal {F}}_{t})\)-Brownian motion starting from 0. The measures ℙ and \(\tilde{\mathbb {P}}\) are locally equivalent, and their density process is given by

where \(Z\) is the exponential martingale defined by

Given any \(({\mathcal {F}}_{t})\)-stopping time \(\tau\), we use the monotone convergence theorem, the fact that \({\mathbb {E}}[Z_{T} | {\mathcal {F}}_{\tau}] {\mathbf{1}} _{\{ \tau\leq T \}} = Z_{\tau}{\mathbf{1}} _{\{ \tau\leq T \}}\) and the tower property of conditional expectation to calculate

and the conclusions of the theorem follow from the fact that

and Theorem 2.8. □
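The calculation behind this proof can be sketched under two assumptions of ours, since the corresponding displays are not reproduced here. First, the reward in (3.1) is \((S_{\tau}^{p} - K X_{\tau})^{+}\). Second, the density process is \(Z_{t} = \exp(\sigma W_{t} - \frac{1}{2}\sigma^{2} t)\), which is the Girsanov density making \(\tilde{W}_{t} = -\sigma t + W_{t}\) a \(\tilde{\mathbb {P}}\)-Brownian motion. Since \(X_{t} = x \exp((\mu- \frac{1}{2}\sigma^{2}) t + \sigma W_{t})\), we have \(e^{-\mu t} X_{t} = x Z_{t}\), and so, for any \(T>0\),

\[ \begin{aligned} {\mathbb {E}} \bigl[ e^{-r\tau} ( S_{\tau}^{p} - K X_{\tau} )^{+} {\mathbf{1}}_{\{ \tau\leq T \}} \bigr] &= x \, {\mathbb {E}} \Bigl[ Z_{\tau} \, e^{-(r-\mu)\tau} \Bigl( \frac{S_{\tau}^{p}}{X_{\tau}} - K \Bigr)^{+} {\mathbf{1}}_{\{ \tau\leq T \}} \Bigr] \\ &= x \, {\mathbb {E}} \Bigl[ Z_{T} \, e^{-(r-\mu)\tau} \Bigl( \frac{S_{\tau}^{p}}{X_{\tau}} - K \Bigr)^{+} {\mathbf{1}}_{\{ \tau\leq T \}} \Bigr] \\ &= x \, \tilde{\mathbb {E}} \Bigl[ e^{-(r-\mu)\tau} \Bigl( \frac{S_{\tau}^{p}}{X_{\tau}} - K \Bigr)^{+} {\mathbf{1}}_{\{ \tau\leq T \}} \Bigr] , \end{aligned} \]

where the second step uses \({\mathbb {E}}[Z_{T} | {\mathcal {F}}_{\tau}] {\mathbf{1}}_{\{ \tau\leq T \}} = Z_{\tau}{\mathbf{1}}_{\{ \tau\leq T \}}\) and the tower property. Under \(\tilde{\mathbb {P}}\), \(dX_{t} = (\mu+ \sigma^{2}) X_{t} \, dt + \sigma X_{t} \, d\tilde{W}_{t}\). Letting \(T \rightarrow\infty\) by monotone convergence, the problem becomes \(x\) times a problem of the form (2.1) with \((\mu, r)\) replaced by \((\mu+ \sigma^{2}, r - \mu)\). We expect this to be the content of (3.2), but that identification is our assumption.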

Remark 3.3

Using a change of probability measure argument such as the one in the proof of the theorem above, we can see that if \(p=1\), the value function defined by (2.1) admits the expression

where \(X\) is given by

and expectations are computed under an appropriate probability measure \(\overline{{\mathbb {P}}}\) under which \(\overline{W}\) is a standard Brownian motion. This observation reveals that the optimal stopping problem defined by (1.1), (1.2) and (2.1) for \(p=1\) reduces to the one arising in the pricing of a perpetual American lookback option with floating strike, which has been solved by Pedersen [20] and Dai [5].

Remark 3.4

If \(\hat{X}\) is the geometric Brownian motion given by

then

and given any \(\hat{s} \geq\hat{x}\),

In view of these observations, we can see that the solution to the optimal stopping problem defined by (3.3), (3.4) and

which identifies with the problem (1.1)–(1.3) discussed in the introduction, can be immediately derived from the solution to the problem given by (1.1), (1.2) and (2.1). Similarly, we can see that the solution to the optimal stopping problem defined by (3.3), (3.4) and

which identifies with the problem (1.1), (1.2) and (1.4) discussed in the introduction, can be obtained from the solution to the problem given by (1.1), (1.2) and (3.1). In particular,

for

Therefore, having restricted attention to the problems given by (1.1), (1.2) and (2.1) or (3.1) has not involved any loss of generality.
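The reduction can be sketched under the assumption (ours, since the displays are not reproduced here) that \(\hat{X} = X^{a}\) for the given \(a>0\) and \(\hat{s} = s^{a}\). Itô's formula gives

\[ d\hat{X}_{t} = \hat{X}_{t} \Bigl[ \bigl( a\mu+ \tfrac{1}{2} a(a-1) \sigma^{2} \bigr) dt + a\sigma\, dW_{t} \Bigr] , \]

so \(\hat{X}\) is a geometric Brownian motion with drift \(a\mu+ \frac{1}{2} a(a-1)\sigma^{2}\) and volatility \(a\sigma\). Since \(y \mapsto y^{a}\) is increasing, the running maximum of \(\hat{X}\) started from \(\hat{s} = s^{a}\) is \(\hat{S} = S^{a}\), and therefore

\[ \frac{S_{t}^{b}}{X_{t}^{a}} = \frac{\hat{S}_{t}^{b/a}}{\hat{X}_{t}} , \qquad S_{t}^{b} - K X_{t}^{a} = \hat{S}_{t}^{b/a} - K \hat{X}_{t} . \]

These are the rewards of the problems with \(a=1\) and \(p = b/a\), in line with the interpretation of \(p\) as the ratio \(b/a\) in Remark 2.6.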

Remark 3.5

Consider the geometric Brownian motion \(X\) given by (1.1) and its running maximum \(S\) given by (1.2). If \(\tilde{X}\) is the geometric Brownian motion defined by

and \(\tilde{S}\) is its running maximum given by

then

As a consequence, we obtain that the value function \(\upsilon_{0}\) defined by (1.5) admits the expression \(\upsilon_{0} (x,s) = \tilde{\upsilon}_{0} (x^{b}, s^{b})\), where

Using a change of probability measure argument such as the one in the proof of Theorem 3.2, we can see that

where expectations are computed under an appropriate probability measure \(\overline{{\mathbb {P}}}\) under which the dynamics of \(\tilde{X}\) are given by

for a standard Brownian motion \(\overline{W}\). It follows that the optimal stopping problem defined by (1.1), (1.2) and (1.5) reduces to the one arising in the context of pricing a perpetual Russian option, which has been solved by Shepp and Shiryaev [25, 26].

References

[1] Alvarez, L.H.R., Matomäki, P.: Optimal stopping of the maximum process. J. Appl. Probab. 51, 818–836 (2014)

[2] Carr, P.: Options on maxima, drawdown, trading gains, and local time. Working paper (2006). Available online at www.math.csi.cuny.edu/probability/Notebook/skorohod3.pdf

[3] Cox, A.M.G., Hobson, D.: Local martingales, bubbles and option prices. Finance Stoch. 9, 477–492 (2005)

[4] Cox, A.M.G., Hobson, D., Obłój, J.: Pathwise inequalities for local time: applications to Skorokhod embeddings and optimal stopping. Ann. Appl. Probab. 18, 1870–1896 (2008)

[5] Dai, M.: A closed-form solution for perpetual American floating strike lookback options. J. Comput. Finance 4, 63–68 (2001)

[6] Dai, M., Kwok, Y.K.: American options with lookback payoff. SIAM J. Appl. Math. 66, 206–227 (2005)

[7] Dai, M., Kwok, Y.K.: Characterization of optimal stopping regions of American Asian and lookback options. Math. Finance 16, 63–82 (2006)

[8] Dubins, L.E., Shepp, L.A., Shiryaev, A.N.: Optimal stopping rules and maximal inequalities for Bessel processes. Theory Probab. Appl. 38, 226–261 (1993)

[9] Graversen, S.E., Peskir, G.: Optimal stopping and maximal inequalities for geometric Brownian motion. J. Appl. Probab. 35, 856–872 (1998)

[10] Guo, X., Shepp, L.: Some optimal stopping problems with non-trivial boundaries for pricing exotic options. J. Appl. Probab. 38, 647–658 (2001)

[11] Guo, X., Zervos, M.: \(\pi\) options. Stoch. Process. Appl. 120, 1033–1059 (2010)

[12] Hobson, D.: Optimal stopping of the maximum process: a converse to the results of Peskir. Stochastics 79, 85–102 (2007)

[13] Jacka, S.D.: Optimal stopping and best constants for Doob-like inequalities. I. The case \(p=1\). Ann. Probab. 19, 1798–1821 (1991)

[14] Kyprianou, A.E., Ott, C.: A capped optimal stopping problem for the maximum process. Acta Appl. Math. 129, 147–174 (2014)

[15] McKean, H.P.: A free boundary problem for the heat equation arising from a problem of mathematical economics. Ind. Manage. Rev. 6, 32–39 (1965)

[16] Medova, E.A., Smith, R.G.: A structural approach to EDS pricing. Risk 19, 84–88 (2006)

[17] Merhi, A., Zervos, M.: A model for reversible investment capacity expansion. SIAM J. Control Optim. 46, 839–876 (2007)

[18] Ott, C.: Optimal stopping problems for the maximum process with upper and lower caps. Ann. Appl. Probab. 23, 2327–2356 (2013)

[19] Ott, C.: Bottleneck options. Finance Stoch. 18, 845–872 (2014)

[20] Pedersen, J.L.: Discounted optimal stopping problems for the maximum process. J. Appl. Probab. 37, 972–983 (2000)

[21] Peskir, G.: Optimal stopping of the maximum process: the maximality principle. Ann. Probab. 26, 1614–1640 (1998)

[22] Peskir, G.: Quickest detection of a hidden target and extremal surfaces. Ann. Appl. Probab. 24, 2340–2370 (2014)

[23] Piccinini, L.C., Stampacchia, G., Vidossich, G.: Ordinary Differential Equations in \(R^{n}\). Springer, New York (1984)

[24] Protter, P.E.: Stochastic Integration and Differential Equations, 2nd edn. Springer, Berlin (2005)

[25] Shepp, L., Shiryaev, A.N.: The Russian option: reduced regret. Ann. Appl. Probab. 3, 631–640 (1993)

[26] Shepp, L., Shiryaev, A.N.: A new look at the “Russian option”. Theory Probab. Appl. 39, 103–119 (1994)

[27] Večeř, J.: Maximum drawdown and directional trading. Risk 19, 88–92 (2006)

Acknowledgements

We are grateful to the Editor, the Associate Editor and two referees for their extensive comments and suggestions that significantly improved the paper.

Appendix: Proof of results in Sect. 2

We need the following result, the proof of which can be found e.g. in Merhi and Zervos [17, Lemma 1].

Lemma A.1

Given any constants \(T>0\) and \(\zeta\in(0,n)\), there exist constants \(\varepsilon_{1} , \varepsilon_{2} > 0\) such that

Proof of Lemma 2.3

Suppose first that \(m+1 > 0\). Since \(m<0\) is a solution to the quadratic equation (2.4), this inequality implies that

It follows that

On the other hand, the inequality \(p-1 > n\) and the fact that \(n>0\) is a solution to the quadratic equation (2.4) imply that

In view of this inequality, we can see that

and the proof is complete. □
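The proof above separates the degenerate cases \(m+1>0\) and \(p-1>n\), while the rest of the appendix works under \(m+1<0\) and \(p-1<n\). These sign conditions can be checked numerically for given problem data. The sketch below assumes (this is not stated in this section) that the quadratic (2.4) is the characteristic equation \(\tfrac{1}{2}\sigma^{2}k^{2} + (\mu - \tfrac{1}{2}\sigma^{2})k - r = 0\) of a geometric Brownian motion with drift \(\mu\), volatility \(\sigma\) and discount rate \(r\); the function names and the sample value of \(p\) are hypothetical.

```python
import math


def characteristic_roots(mu, sigma, r):
    """Roots m < 0 < n of 0.5*sigma**2*k**2 + (mu - 0.5*sigma**2)*k - r = 0.

    Assumed form of the quadratic (2.4); sigma > 0 and r > 0 ensure m < 0 < n.
    """
    a = 0.5 * sigma ** 2
    b = mu - 0.5 * sigma ** 2
    disc = math.sqrt(b * b + 4.0 * a * r)  # b^2 - 4ac with c = -r
    return (-b - disc) / (2.0 * a), (-b + disc) / (2.0 * a)


def sign_conditions(mu, sigma, r, p):
    """Report the sign conditions that appear in the proofs of Lemmas 2.3 and 2.4."""
    m, n = characteristic_roots(mu, sigma, r)
    return {
        "m": m,
        "n": n,
        "m_plus_1_negative": m + 1 < 0,
        # The quadratic is convex with roots m < 0 < n, so its value at k = -1,
        # which equals sigma**2 - mu - r, is negative exactly when m < -1.
        "quadratic_at_minus_1": sigma ** 2 - mu - r,
        "p_minus_1_below_n": p - 1 < n,
    }


print(sign_conditions(mu=0.05, sigma=0.2, r=0.05, p=1.5))
```

With \(\mu = r = 0.05\) and \(\sigma = 0.2\), the roots are \(m = -2.5\) and \(n = 1\) up to rounding, so \(m+1<0\), and the hypothetical choice \(p = 1.5\) satisfies \(p-1<n\).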

Proof of Lemma 2.4

Throughout the proof, we fix any \(\delta> 0\) and denote by \(s_{\dagger}= s_{\dagger}(\delta)\) the unique solution to (2.19). Combining the assumption \(m+1 < 0\) with the observation that

by (2.10) and the definition (2.17) of \({\mathcal {D}}_{H}\), we can see that

which implies that the function ℋ defined by (2.16) is strictly positive in \({\mathcal {D}}_{H}\). Since ℋ is locally Lipschitz in the open domain \({\mathcal {D}}_{H}\), it follows that given any \(s_{*} > s_{\dagger}\), there exist points \(\underline{s}_{*} \in[0, s_{*})\) and \(\overline{s}_{*} \in (s_{*}, \infty]\) and a unique strictly increasing function \(H(\cdot) = H(\cdot; s_{*}) : (\underline{s}_{*}, \overline{s}_{*}) \rightarrow{\mathcal {D}}_{H}\) that satisfies the ODE (2.15) with initial condition (2.18) and such that

(see Piccinini et al. [23, Theorems I.1.4 and I.1.5]). Furthermore, we note that uniqueness implies that

Given a point \(s_{*} > s_{\dagger}\) and the solution \(H(\cdot; s_{*})\) to (2.15)–(2.18) discussed above, we define

with the usual conventions that \(\inf\emptyset= \infty\) and \(\sup\emptyset= -\infty\), where \(c > 0\) is as in the statement of the lemma, depending on the case. We also denote

and we note that

In particular, we can see that these equivalences and (A.2) imply that

while (A.3) implies the equivalence

In view of the definitions (A.4)–(A.6), the required claims follow if we prove that

as well as

To prove these results, we distinguish between the two cases of the lemma: although the main ideas are the same, the calculations involved differ substantially (compare Figs. 4 and 5).
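In this proof, the point \(s_{\circ}\) (and likewise \(s^{\circ}\)) appears to be defined, with the conventions \(\inf\emptyset = \infty\) and \(\sup\emptyset = -\infty\), as the boundary between starting points \(s_{*}\) whose solutions \(H(\cdot; s_{*})\) leave \({\mathcal {D}}_{H}\) in qualitatively different ways; Figs. 4 and 5 plot three such solutions. Numerically, the same kind of separatrix can be approximated by bisection on the initial condition ("shooting"). Since \(\mathcal{H}\) from (2.16) and the initial condition (2.18) are not reproduced in this section, the sketch below is a hypothetical toy rather than the paper's ODE: it uses \(y' = y - t\) on the strip \(0 < y < 2(t+1)\), whose solutions are \(y = t + 1 + (y_{0} - 1)e^{t}\), so that the separatrix starts at \(y_{0} = 1\).

```python
def rk4_step(f, t, y, h):
    """One classical fourth-order Runge-Kutta step for y' = f(t, y)."""
    k1 = f(t, y)
    k2 = f(t + h / 2, y + h / 2 * k1)
    k3 = f(t + h / 2, y + h / 2 * k2)
    k4 = f(t + h, y + h * k3)
    return y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)


def exits_through_upper(f, upper, lower, y0, t_end=30.0, h=0.02):
    """Integrate from y(0) = y0; True if the path reaches `upper` before `lower` and before t_end."""
    t, y = 0.0, y0
    while t < t_end:
        y = rk4_step(f, t, y, h)
        t += h
        if y >= upper(t):
            return True
        if y <= lower(t):
            return False
    return False  # neither boundary reached: grouped with the lower exits


def shoot_separatrix(f, upper, lower, lo, hi, tol=1e-10):
    """Bisect on y0, assuming paths from lo do not exit through the upper boundary and paths from hi do."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if exits_through_upper(f, upper, lower, mid):
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


# Hypothetical toy problem, not the ODE (2.15): y' = y - t on 0 < y < 2(t + 1).
y_sep = shoot_separatrix(lambda t, y: y - t, lambda t: 2 * (t + 1), lambda t: 0.0,
                         lo=0.5, hi=1.5)
print(y_sep)
```

Neighbouring solutions diverge from a separatrix exponentially, so the horizon `t_end` limits the attainable accuracy: in this toy, solutions starting within roughly \(3 \times 10^{-12}\) of 1 reach neither boundary before \(t = 30\).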

Fig. 4 (\(p<1\)): Illustration of the proof of Lemma 2.4 (I) for \(s_{\circ}(\delta) = s^{\circ}(\delta)\). The identity \(h(s;s_{*}) = s^{-p} H(s;s_{*})\), valid for all \(s > 0\), relates the solutions to (A.14) for \(s_{*} = s_{*}^{1}, s_{\circ}, s_{*}^{2}\) plotted here to the solutions to the ODE (2.15) satisfying (2.18) that are plotted in Fig. 2. The intersection of \({\mathbb {R}}^{2}\) with the boundary of the domain \({\mathcal {D}}_{h}^{1}\) in which we consider solutions to (A.14) is shown in green.

Fig. 5 (\(p>1\)): Illustration of the proof of Lemma 2.4 (II) for \(s_{\circ}(\delta) = s^{\circ}(\delta)\). The identity \(h(s;s_{*}) = s^{-1} H(s;s_{*})\), valid for all \(s > 0\), relates the solutions to (A.19) for \(s_{*} = s_{*}^{1}, s_{\circ}, s_{*}^{2}\) plotted here to the solutions to the ODE (2.15) satisfying (2.18) that are plotted in Fig. 3. The intersection of \({\mathbb {R}}^{2}\) with the boundary of the domain \({\mathcal {D}}_{h}^{2}\) in which we consider solutions to (A.19) is shown in green.

Proof of (I) (\(p<1\)). In this case, which is illustrated by Fig. 4, we calculate

where

In the arguments that we develop, the inequalities

which are relevant to the case we now consider, are worth keeping in mind. In view of the inequalities

due to (A.7), we can see that

where the function \(\mathfrak{s}\) is defined by

Furthermore, we calculate

and we note that

In particular, this limit and (A.15) imply that

where \(\mathfrak{s}^{-1 }\) is the inverse function of \(\mathfrak{s}\). In view of these observations, we can see that

as well as

for a unique \(s^{\dagger}= s^{\dagger}(\delta) > s_{\dagger}\).

The conclusions (A.17) and (A.18) imply immediately that

Combining this inequality with (A.9) and (A.17), we can see that

In view of this observation, (A.9), (A.16), (A.17) and a straightforward contradiction argument, we can see that \(s_{\circ}\in(s_{\dagger}, s^{\circ}]\) holds true, the function \(h(\cdot; s^{\circ})\) is strictly increasing on \((0,\infty)\) and

It follows that (A.10)–(A.13) are all true.

Proof of (II) (\(p>1\)). In this case, which is illustrated by Fig. 5, we calculate

where

In what follows, we use the inequalities

that are relevant to the case we now consider. In view of (A.8) and the inequalities

we can see that

where the function \(\mathfrak{s}\) is defined by

for \(\bar{h} \in(0, c)\). It is straightforward to check that

To proceed further, we define

we note that \(\varGamma= \varGamma_{1} \wedge \varGamma_{2}\), and we observe that

If \(\varGamma= \varGamma_{2}\), then we can use the inequality \((m+1)(n+1) - nm < 0\), which holds true in this case (see also (2.10)), to verify that

for all \(\bar{h} \in(0,1)\). Using this inequality, we can see that if \(\varGamma= \varGamma_{2}\), then

Combining (A.20) with (A.23) and (A.24), we obtain

where \(\mathfrak{s}^{-1 }\) is the inverse function of \(\mathfrak{s}\). It follows that

as well as

for a unique \(s^{\dagger}= s^{\dagger}(\delta) > s_{\dagger}\).

Arguing in exactly the same way as in Case (I) above by using (A.9), (A.25) and (A.26), we can see that (A.11)–(A.13) are all true. Furthermore, (A.10) follows from (A.21)–(A.24).
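As a worked check of the inequality \((m+1)(n+1) - nm < 0\) used in the proof of (II), suppose (an assumption, since (2.4) is not reproduced in this section) that (2.4) is the characteristic equation \(\tfrac{1}{2}\sigma^{2}k^{2} + (\mu - \tfrac{1}{2}\sigma^{2})k - r = 0\) of a geometric Brownian motion with drift \(\mu\), volatility \(\sigma\) and discount rate \(r\). Vieta's formulas for its roots \(m<0<n\) then give

\[
\begin{aligned}
m + n &= -\frac{\mu - \frac{1}{2}\sigma^{2}}{\frac{1}{2}\sigma^{2}} = 1 - \frac{2\mu}{\sigma^{2}}, \qquad mn = -\frac{2r}{\sigma^{2}},\\
(m+1)(n+1) - nm &= m + n + 1 = \frac{2(\sigma^{2} - \mu)}{\sigma^{2}},
\end{aligned}
\]

so that, under this assumption, \((m+1)(n+1) - nm < 0\) if and only if \(\mu > \sigma^{2}\). The same comparison between \(\mu\) and \(\sigma^{2}\) reappears in the proof of (A.29) below.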

Proof of (III). In view of (2.17), (A.1) and the observation that

we can see that

If \(p<1\), then we can use the fact that

which we have established in part (I) of the lemma, to see that

is indeed true. On the other hand, if \(p>1\), then we can verify that

Using this inequality, we obtain

Combining this calculation with (2.17), we can see that

because otherwise \(H\) would exit the domain \({\mathcal {D}}_{H}\). □

Proof of Lemma 2.7

We first note that the strict positivity of \(w\) follows immediately from its definition in (2.20) and (2.21) and Lemma 2.4 (III). To establish (2.24), we fix any \(s>0\) and note that Lemma 2.4 implies that there exists a point \(\overline{s} \geq s\) such that

Combining this observation with the fact that \(H\) is continuous, we can see that there exists a constant \(C_{1} (s) > 0\) such that

and

In view of these calculations, the definition (2.20) and (2.21) of \(w\) and the inequalities \(m+1 < 0 < n\), we can see that there exists a constant \(C = C(s) > 0\) such that (2.24) holds true. For future reference, we note that the second of the estimates above implies that given any \(s>0\),

In view of the construction of \(w\), to prove that the positive function \(w\) is a solution to the variational inequality (2.6) with boundary condition (2.7) that satisfies (2.22) and (2.23), it suffices to show that

and

Proof of (A.28). In view of the assumption that \(m+1<0\) and the fact that \(m<0<n\) are the solutions to the quadratic equation (2.4) given by (2.5), we can see that

Combining this with the fact that

(see (2.9) and (2.10)), we calculate

and

It follows that \(f(x,s) < 0\) for all \(s > 0\) and \(x \in [0, H(s)]\), and (A.28) has been established.

A probabilistic representation of \(g\). Before addressing the proof of (A.29), we first show that, given any stopping time \(\tau\leq\tau_{\mathcal {S}}\), where \(\tau_{\mathcal {S}}\) is defined by (2.8), we have

To this end, we assume \((x,s) \in{\mathcal {W}}\) in what follows without loss of generality. Since the function \(w(\cdot,s)\) satisfies the ODE (2.2) in the waiting region \({\mathcal {W}}\), we can see that

Using Itô’s formula, (2.7), the definition of \(g\) in (A.29) and this calculation, we obtain

It follows that

where \((\tau_{j})\) is a localising sequence of stopping times for the stochastic integral.

Combining (2.24), (A.27) and the positivity of \(w\) with the definition of \(g\) in (A.29) and the fact that \(S\) is an increasing process, we can see that

On the other hand, (A.27), the definition of \(f\) in (A.28), (A.31) and the fact that \(S\) is an increasing process imply that there exists a constant \(C_{2} = C_{2} (s) > 0\) such that

These estimates, the fact that \(\gamma\in (0,n)\), the assumption that \(p-1 < n\) and Lemma A.1 imply that

and

In view of these observations and the fact that \(S\) is an increasing process, we can pass to the limits as \(j \rightarrow\infty\) and \(T \rightarrow\infty\) in (A.35) by using the dominated and the monotone convergence theorems to obtain (A.33).

Proof of (A.29). We first note that (A.30) and the definition (A.22) of \(\varGamma_{1}\) imply that

Combining these inequalities with (A.31) and (A.32), we can see that

where \(\tilde{x} (s)\) is a unique point in \(( H(s), s )\) for all \(s>0\) such that \(s^{p-1} < \varGamma_{1}^{-1}\). In view of these inequalities, (A.34) and the maximum principle, we can see that given any \(s > 0\),

To proceed further, we use the identity

which holds true in \({\mathcal {W}}\) by the definition (2.20), (2.21) of \(w\), as well as (A.30) and (A.32) to calculate

This result and the identities \(g ( H(s), s ) = g_{x} ( H(s), s ) = 0\), which follow from the fact that \(w(\cdot,s)\) is continuously differentiable at \(H(s)\), imply that

Combining this observation with (A.36), we obtain (A.29) for all \(s>0\) such that \(s^{p-1} \geq \varGamma_{1}^{-1}\) and \(x \in( H(s), s )\). On the other hand, combining (A.39) with (A.37) and (A.38), we obtain (A.29) for all \(s>0\) such that \(s^{p-1} \leq K\) and \(x \in( H(s), s )\), because \(g(s,s) \geq0\) if \(s^{p-1} \leq K\) thanks to the positivity of \(w\). It follows that

In particular, (A.29) holds true if the problem data are such that \(\mu\geq\sigma^{2}\), thanks to the equivalences

To establish (A.29) if \(\mu< \sigma^{2}\), we argue by contradiction. In view of (A.37), (A.38), (A.40) and (A.41), we therefore assume that there exist strictly positive \(\tilde{s}_{1} < \tilde{s}_{2}\) such that \(\tilde{s}_{1}^{p-1} , \tilde{s}_{2}^{p-1} \in [K, \varGamma_{1}^{-1}]\),

Also, we note that (A.39) implies that there exists \(\tilde{\varepsilon} > 0\) such that

Fixing such an \(\tilde{\varepsilon} > 0\), we consider the solution to (1.1) with initial condition \(X_{0} = \tilde{s}_{2} - \tilde{\varepsilon}\) and the running maximum process \(S\) given by (1.2) with initial condition \(S_{0} = \tilde{s}_{2} - \tilde{\varepsilon}\). Also, we define

Using (A.33), (A.42), (A.43), the identity \(g (H(s), s ) = 0\) that holds true for all \(s>0\) and the fact that the function \(f(x,\cdot) : [x,\infty) \rightarrow{\mathbb {R}}\) is strictly decreasing for all \(x>0\), which follows from the calculation

due to (A.30), we obtain

which is a contradiction. □

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Rodosthenous, N., Zervos, M. Watermark options. Finance Stoch 21, 157–186 (2017). https://doi.org/10.1007/s00780-016-0319-x

DOI: https://doi.org/10.1007/s00780-016-0319-x

Keywords

- Optimal stopping

- Running maximum process

- Variational inequality

- Two-dimensional free-boundary problem

- Separatrix