Abstract

Enumeration of all combinatorial types of point configurations and polytopes is a fundamental problem in combinatorial geometry. Although many studies have been done, most of them deal with 2-dimensional and non-degenerate cases. Finschi and Fukuda (Discrete Comput Geom 27:117–136, 2002) published the first database of oriented matroids that includes degenerate (i.e., non-uniform) ones and ones of higher ranks. In this paper, we investigate algorithmic ways to classify them in terms of realizability, although the underlying decision problem of realizability checking is NP-hard. As an application, we determine all possible combinatorial types (including degenerate ones) of 3-dimensional configurations of 8 points, 2-dimensional configurations of 9 points, and 5-dimensional configurations of 9 points. We also determine all possible combinatorial types of 5-polytopes with nine vertices.


1 Introduction

Point configurations and convex polytopes play central roles in computational geometry and discrete geometry. For many problems, their combinatorial structures, or types, are more important than their metric structures. The combinatorial type of a point configuration is defined by all possible partitions of the points by a hyperplane (see the definition (2.1)), and it encodes important information such as the convexity, the face lattice of the convex hull, and all possible triangulations. One of the most significant merits of considering combinatorial types is that there are only finitely many of them for any fixed size (dimension and number of elements), whereas a fixed combinatorial type has infinitely many realizations. This enables us to enumerate these objects and study them through computational experiments. For example, Finschi and Fukuda [21] constructed a counterexample to the conjecture of da Silva and Fukuda [16] using their database [19]. Aichholzer et al. [4] and Aichholzer and Krasser [3] developed a database of point configurations [1] and showed its usefulness by presenting various applications in computational geometry [2–4].

Despite its merits, enumerating combinatorial types of point configurations is known to be a hard task. In fact, the recognition problem of combinatorial types of point configurations is polynomially equivalent to the Existential Theory of the Reals (ETR), the problem of deciding whether a given system of polynomial equalities and inequalities with integer coefficients has a real solution, which is a well-known NP-hard problem. Furthermore, the realizability problem remains NP-hard even in the 2-dimensional (rank 3) case [37, 46]. To overcome this difficulty, enumerations have been done in the following two steps.

The first step is to enumerate a suitable superset of combinatorial types of point configurations whose members can be recognized easily. One of the most frequently used such structures has been oriented matroids, which are defined by a simple set of axioms and for which various enumeration techniques exist. Exploiting a canonical representation of oriented matroids and algorithmic advances, Finschi and Fukuda [20, 21] enumerated oriented matroids including non-uniform ones (Definition 2.2), i.e., abstractions of degenerate configurations, on up to 10 elements of rank 3 and on up to 8 elements for every rank. In addition, Finschi, Fukuda and Moriyama enumerated uniform oriented matroids (Definition 2.2) in \(\mathrm{OM }(4,9)\) and \(\mathrm{OM }(5,9)\) using OMLIB [19], where \(\mathrm{OM }(r,n)\) denotes the set of all rank \(r\) oriented matroids on \(n\) elements. Aichholzer et al. [4] and Aichholzer and Krasser [3] enumerated uniform oriented matroids on up to 11 elements of rank 3. The enumeration results are summarized in Table 1.

In the second step, to obtain all possible combinatorial types of point configurations, we need to extract the oriented matroids that are acyclic and realizable. Realizable oriented matroids (Definition 2.6) are those that can be represented by vector configurations, and acyclicity (Definition 2.3) abstracts the condition that a vector configuration can be associated with a point configuration. While checking acyclicity is straightforward, the realizability problem is polynomially equivalent to ETR [37, 46] and thus NP-hard. In this paper, we show that the realizability problem can be solved in practice for small instances by exploiting sufficient conditions for realizability or for non-realizability.

1.1 Brief History of Related Enumeration

The enumeration of realizable oriented matroids has a long history. First, Grünbaum [29, 30] enumerated all realizable rank 3 oriented matroids on up to six elements through the enumeration of hyperplane arrangements. Then Canham [13] and Halsey [32] enumerated all realizable rank 3 oriented matroids on seven elements. Goodman and Pollack [26, 27] proved that all rank 3 oriented matroids on up to eight elements are realizable. The enumeration of rank 3 uniform realizable oriented matroids on nine elements is due to Richter [41] and Gonzalez-Sprinberg and Laffaille [28]. The case of rank 4 uniform oriented matroids on eight elements was resolved by Bokowski and Richter-Gebert [10]. Bokowski, Laffaille and Richter resolved the case of rank 3 uniform oriented matroids on ten elements (unpublished). Recently, Aichholzer et al. [4] developed a database of all realizable non-degenerate acyclic oriented matroids of rank 3 on ten elements, and Aichholzer and Krasser [3] did the same for uniform ones of rank 3 on 11 elements. The enumeration results are summarized in Table 2.

The enumeration of combinatorial types of convex polytopes also has a long history. The combinatorial types of convex polytopes are defined by their face lattices [29, 51]. All combinatorial types of 3-polytopes can be enumerated using Steinitz' theorem [49, 50]. We can also obtain all combinatorial types of \(d\)-polytopes with \(n\) vertices for \(n \le d+3\) using Gale diagrams [25, 33]. On the other hand, enumerating the combinatorial types of \(d\)-polytopes with \(d+4\) vertices and those of 4-polytopes is known to be quite difficult [37, 42]. Grünbaum and Sreedharan [31] listed all combinatorial types of simplicial 4-polytopes with eight vertices, Altshuler et al. [6] those of simplicial 4-polytopes with nine vertices, and Altshuler and Steinberg [5] those of non-simplicial 4-polytopes with eight vertices. The enumeration results are summarized in Table 3.

However, no database of these objects that includes degenerate ones or ones of higher dimensions currently exists. Many problems in combinatorial geometry remain open, especially in higher dimensions or in degenerate cases, so a database of combinatorial types covering these cases will be of great importance. For example, characterizing the \(f\)-vectors of \(d\)-polytopes is a major open problem for \(d \ge 4\), while the same questions for 3-polytopes and for simplicial polytopes have already been solved [7, 47, 48].

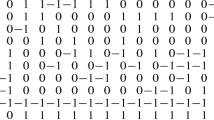

Since Finschi and Fukuda [19, 20] developed a database of oriented matroids containing non-uniform ones, the realizability classification of larger oriented matroids, including non-uniform ones, has begun. Existing non-realizability certificates such as non-Euclideanness [18, 35] and biquadratic final polynomials [9], and existing realizability certificates such as non-isolated elements [44] and solvability sequences [11], were applied to \(\mathrm{OM }(4,8)\) and \(\mathrm{OM }(3,9)\) [24, 39, 40]. A new realizability certificate using polynomial optimization and generalized mutation graphs [40], as well as new non-realizability certificates, namely non-HK* [24] and a certificate based on semidefinite programming [36], were proposed and applied to \(\mathrm{OM }(4,8)\) and \(\mathrm{OM }(3,9)\). The results of these classifications are summarized in Fig. 1.

It is important to observe that there are 4,803 oriented matroids in \(\mathrm{OM }(4,8)\) and 8,548 oriented matroids in \(\mathrm{OM }(3,9)\) whose realizability was previously unknown.

1.2 Our Contribution

In this paper, we complete the classification of \(\mathrm{OM }(4,8)\), \(\mathrm{OM }(3,9)\), and \(\mathrm{OM }(6,9)\) (Theorem 1.1) by providing a new method that successfully finds realizations of all previously unclassified oriented matroids.

As mentioned above, the realizability problem is, asymptotically, as hard as solving general systems of polynomial inequalities. There are several methods for solving general polynomial inequalities, such as cylindrical algebraic decomposition [14], but the problem size that can be handled in practice is quite limited, and our instances turned out to be intractable. One reason is that those methods compute a complete description of a cylindrical decomposition of the solution set, which is not necessary for our purpose: to decide realizability, it suffices to find one solution of the polynomial system, which is usually a much easier task. It has recently been reported that methods based on random realizations were applied successfully to the realizability classification of uniform oriented matroids [3, 4] and of triangulated surfaces [34]. However, those methods are not directly applicable to non-uniform oriented matroids, whose zero values of the chirotope give rise to polynomial equations that randomly chosen values satisfy only with probability zero.

In this paper, we take a fresh look at the solvability sequence method [11], which certifies the realizability of a given oriented matroid provided that all variables of the polynomial system can be eliminated using a certain elimination rule. We extend the elimination rule and introduce some additional techniques so that the method applies to a broader class of oriented matroids. We also use random realizations when some variables remain in the final step. Using this method, we succeed in realizing all realizable oriented matroids in \(\mathrm{OM }(4,8)\), \(\mathrm{OM }(3,9)\), and \(\mathrm{OM }(6,9)\). This in turn proves that every non-realizable oriented matroid in these classes admits a biquadratic final polynomial certificate.

Theorem 1.1

(a) Among \(181{,}472\) oriented matroids in \(\mathrm{OM }(4, 8)\) (reorientation classes), \(177{,}504\) are realizable and \(3{,}968\) are non-realizable.

(b) Among \(461{,}053\) oriented matroids in \(\mathrm{OM }(3, 9)\) (reorientation classes), \(460{,}779\) are realizable and \(274\) are non-realizable.

(c) Among \(508{,}321\) oriented matroids in \(\mathrm{OM }(6,9)\) (reorientation classes), \(508{,}047\) are realizable and \(274\) are non-realizable.

We note here that the classification of \(\mathrm{OM }(6,9)\) is obtained from the classification of \(\mathrm{OM }(3,9)\) via the duality of oriented matroids [8], since an oriented matroid is realizable if and only if its dual is. As a byproduct, we obtain the following results.

Theorem 1.2

(a) There are exactly \(15{,}287{,}993\) combinatorial types of 2-dimensional configurations of 9 points, \(105{,}128{,}749\) of 5-dimensional configurations of 9 points, and \(10{,}559{,}305\) of 3-dimensional configurations of 8 points.

(b) There are exactly \(47{,}923\) combinatorial types of 5-dimensional polytopes with nine vertices. Among them, \(322\) are simplicial and \(126\) are simplicial neighborly.

Our classification results, with certificates, are available at http://www-imai.is.s.u-tokyo.ac.jp/~hmiyata/oriented_matroids/. To make the results as reliable as possible, we recomputed realizability or non-realizability even for oriented matroids whose realizability had been known previously. On this web page, realizations of all realizable oriented matroids and final polynomials of all non-realizable oriented matroids are provided, so that readers can check the correctness of our results.

Organization of the paper. In Sect. 2, we present some basic notions on oriented matroids. In Sect. 3, we discuss a standard method to find realizations: we first review the existing methods for decreasing the size of a polynomial system, and then explain a new method to search for solutions of polynomial systems. We apply these methods to the classification of \(\mathrm{OM }(4,8)\), \(\mathrm{OM }(3,9)\), and \(\mathrm{OM }(6,9)\) in Sect. 4, and conclude the paper in Sect. 5.

2 Preliminaries

In this section, we review basic notions on oriented matroids that are used in the paper.

For further details about oriented matroids, see [8].

2.1 Point Configurations and Their Combinatorial Abstractions

Let \(P=\{ p_{1},\ldots ,p_{n} \}\) be a point configuration in \(\mathbb R ^{r-1}\). We define a map \(\chi :\{1,\ldots ,n\}^{r} \rightarrow \{ +,-,0 \}\) by

$$\begin{aligned} \chi (i_{1},\ldots ,i_{r}) := \mathrm{sign} \det (v_{i_{1}},\ldots ,v_{i_{r}}), \qquad (2.1) \end{aligned}$$

where \(v_{1}:=\big (\begin{array}{l}p_{1} \\ 1\end{array}\big )\), \(\ldots \), \(v_{n}:=\big (\begin{array}{l}p_{n} \\ 1 \end{array}\big ) \in \mathbb R ^{r}\) are the associated vectors of \(p_{1},\ldots ,p_{n}\). We call the map \(\chi \) the combinatorial type of \(P\); it satisfies the following properties (a), (b), and (c) with \(E= \{ 1,\ldots ,n\}\).

Definition 2.1

(Chirotope axioms) Let \(E\) be a finite set and \(r\ge 1\) an integer. A chirotope of rank \(r\) on \(E\) is a mapping \(\chi :E^{r} \rightarrow \{ +1,-1,0\}\) which satisfies the following properties for any \(i_{1},\ldots ,i_{r},j_{1},\ldots ,j_{r} \in E\).

(a) \(\chi \) is not identically zero.

(b) \(\chi (i_{\sigma (1)},\ldots ,i_{\sigma (r)}) = \mathrm{sgn} (\sigma ) \chi (i_{1},\ldots ,i_{r})\) for all \(i_1,\dots ,i_r \in E\) and any permutation \(\sigma \).

(c) If \(\chi (j_{s},i_{2},\ldots ,i_{r})\cdot \chi (j_{1},\ldots ,j_{s-1},i_{1},j_{s+1},\ldots ,j_{r}) \ge 0\) for all \(s=1,\ldots ,r\), then \(\chi (i_{1},\ldots ,i_{r}) \cdot \chi (j_{1},\ldots ,j_{r}) \ge 0.\)

We note here that the third property is an abstraction of the Grassmann–Plücker relations:

$$\begin{aligned} [i_{1},\ldots ,i_{r}]\cdot [j_{1},\ldots ,j_{r}] = \sum _{s=1}^{r} [j_{s},i_{2},\ldots ,i_{r}]\cdot [j_{1},\ldots ,j_{s-1},i_{1},j_{s+1},\ldots ,j_{r}], \end{aligned}$$

where \([i_{1},\ldots ,i_{r}]:=\det (v_{i_{1}},\ldots ,v_{i_{r}})\) for all \(i_{1},\ldots ,i_{r} \in E\); if every summand on the right-hand side is non-negative, then so is the left-hand side. We define an oriented matroid by a finite set \(E\) together with a chirotope \(\chi :E^{r} \rightarrow \{ +1,-1,0 \}\) satisfying the above axioms. From now on, we set \(E:= \{ 1,\ldots ,n\}\) throughout this section. Since \(\chi \) is completely determined by its values on \(\Lambda (n,r):=\{ (i_1,\ldots ,i_r) \in \mathbb N ^r \mid 1 \le i_1 < \cdots < i_r \le n \}\), we sometimes regard the restriction \(\chi |_{\Lambda (n,r)}\) as the chirotope itself. Identifying \(\chi \) with \(-\chi \), we call the pair \((E, \{ \chi ,-\chi \} )\) a rank \(r\) oriented matroid with \(n(=|E|)\) elements. The concept of degeneracy is also defined for oriented matroids as follows.

Definition 2.2

A rank \(r\) oriented matroid \((E,\{ \chi ,-\chi \})\) is said to be uniform if \(\chi (i_{1},\dots ,i_{r}) \ne 0\) for all \((i_{1},\ldots ,i_{r}) \in \Lambda (n,r)\), otherwise non-uniform.

Note that every \((d+1)\)-subset of a \(d\)-dimensional point configuration affinely spans \(\mathbb R ^d\) (i.e., the configuration is in general position) if and only if the underlying oriented matroid is uniform.
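The definition (2.1) and Definition 2.2 translate directly into code. A minimal sketch, assuming points with integer or rational coordinates and 1-based labels (the helper names `det`, `chirotope`, and `is_uniform` are ours): each point is lifted to \((p_i, 1)\), and exact rational arithmetic from Python's `fractions` module avoids rounding errors in the sign of a vanishing determinant.

```python
from fractions import Fraction
from itertools import combinations


def det(rows):
    """Exact determinant of a square matrix (list of rows) by Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    size = len(m)
    result = Fraction(1)
    for c in range(size):
        pivot = next((k for k in range(c, size) if m[k][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            m[c], m[pivot] = m[pivot], m[c]
            result = -result  # a row swap flips the sign
        result *= m[c][c]
        for k in range(c + 1, size):
            factor = m[k][c] / m[c][c]
            for col in range(c, size):
                m[k][col] -= factor * m[c][col]
    return result


def sign(x):
    return (x > 0) - (x < 0)


def chirotope(points):
    """chi on increasing r-tuples (1-based labels) for the lifted vectors v_i = (p_i, 1)."""
    vectors = [tuple(p) + (1,) for p in points]
    n, r = len(vectors), len(vectors[0])
    return {idx: sign(det([vectors[i - 1] for i in idx]))
            for idx in combinations(range(1, n + 1), r)}


def is_uniform(chi):
    """Definition 2.2: no r-tuple has chi = 0."""
    return all(value != 0 for value in chi.values())


square = chirotope([(0, 0), (1, 0), (0, 1), (1, 1)])
degenerate = chirotope([(0, 0), (1, 0), (2, 0), (0, 1)])  # points 1, 2, 3 are collinear
```

Exact arithmetic matters here: a floating-point determinant of a collinear triple may come out as a tiny nonzero number and silently turn a non-uniform configuration into a uniform one.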

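For a point configuration \(P=\{ p_1,\ldots ,p_n \} \subset \mathbb R ^d\), the data of the chirotope is known to be equivalent to the following set of sign vectors:

$$\begin{aligned} \mathcal{V}^*_P := \{ (\mathrm{sign}(a^{T}p_{1}+a_{0}),\ldots ,\mathrm{sign}(a^{T}p_{n}+a_{0})) \mid a \in \mathbb R ^{d},\ a_{0} \in \mathbb R \}. \end{aligned}$$

An element of \(\mathcal{V}^*_P\) is called a covector. Another axiom system of oriented matroids (the covector axioms) can be obtained by abstracting properties of \(\mathcal{V}_P^*\); see [8, Chap. 3] for details.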

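Note that for every point configuration \(P\), the set \(\mathcal{V}^*_P\) contains the covector \((++\cdots +)\): take any hyperplane that has all points of \(P\) strictly on its positive side. Acyclic oriented matroids are defined by abstracting this property.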

Definition 2.3

An oriented matroid is said to be acyclic if it has the covector \({(++ \cdots +)}\).

We shall see that there is a one-to-one correspondence between the acyclic realizable oriented matroids and the combinatorial types of point configurations.

From \(\mathcal{V}_P^*\), we can read off the convexity of \(P\): for \(i=1,\ldots ,n\), the point \(p_i\) is an extreme point of \(P\) if and only if \(\mathcal{V}_P^*\) contains the covector \({(+\cdots +0+\cdots +)}\) with \(0\) in the \(i\)th position. In this way, matroid polytopes are introduced as abstractions of point configurations in convex position.

Definition 2.4

An acyclic oriented matroid on a ground set \(\{ 1,\ldots ,n\}\) is called a matroid polytope if for all \(i=1,\ldots ,n\), it has the covector \({(+\cdots +0+\cdots +)}\) where \(0\) is on the \(i\)th position.

For a matroid polytope, its facets correspond to its non-negative cocircuits, i.e., the non-negative covectors whose support is minimal among nonzero covectors. For details on matroid polytopes, see [8, Chap. 9].
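For a realized matroid polytope, the facets can be read off from the chirotope: every independent \((r-1)\)-subset \(S\) spans a hyperplane whose cocircuit is \(k \mapsto \chi (S,k)\), and the hyperplane supports a facet exactly when this sign vector is non-negative up to a global sign. The sketch below is an illustration under our own conventions (hypothetical helper names, points lifted to \((p_i,1)\), exact arithmetic); the square pyramid shows a non-uniform facet with four vertices.

```python
from fractions import Fraction
from itertools import combinations


def det(rows):
    """Exact determinant of a square matrix (list of rows) by Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    size = len(m)
    result = Fraction(1)
    for c in range(size):
        pivot = next((k for k in range(c, size) if m[k][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            m[c], m[pivot] = m[pivot], m[c]
            result = -result
        result *= m[c][c]
        for k in range(c + 1, size):
            factor = m[k][c] / m[c][c]
            for col in range(c, size):
                m[k][col] -= factor * m[c][col]
    return result


def sign(x):
    return (x > 0) - (x < 0)


def chirotope_function(points):
    """chi on arbitrary index sequences: chi(seq) = sign det(v_seq) with v_i = (p_i, 1)."""
    vectors = [tuple(p) + (1,) for p in points]
    return lambda seq: sign(det([vectors[i - 1] for i in seq]))


def facets(chi, n, r):
    """Facets as zero sets of non-negative cocircuits (assumes a matroid polytope)."""
    result = set()
    for span in combinations(range(1, n + 1), r - 1):
        # Cocircuit of the hyperplane spanned by `span`: k -> chi(span + (k,)).
        cocircuit = [chi(span + (k,)) for k in range(1, n + 1)]
        if all(c == 0 for c in cocircuit):
            continue  # the elements of `span` are dependent and span no hyperplane
        if all(c >= 0 for c in cocircuit) or all(c <= 0 for c in cocircuit):
            result.add(frozenset(k for k in range(1, n + 1) if cocircuit[k - 1] == 0))
    return result


square = [(0, 0), (1, 0), (1, 1), (0, 1)]
pyramid = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0), (0, 0, 1)]  # 4 coplanar base points
```

Duplicates such as the four spanning triples of the square base collapse to a single facet because the zero sets are collected in a set.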

2.2 The Realizability Problem

Every vector configuration determines an underlying oriented matroid, but not every oriented matroid arises in this way, because non-realizable oriented matroids exist.

Definition 2.5

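(The realizability problem of oriented matroids) Given a rank \(r\) oriented matroid \(M=(E,\{ \chi ,-\chi \} )\) with \(n\) elements, the realizability problem for \(M\) is to decide whether the following polynomial system has a real solution \(v_{1},\dots ,v_{n} \in \mathbb R ^{r}\):

$$\begin{aligned} \mathrm{sign} \det (v_{i_{1}},\ldots ,v_{i_{r}}) = \chi (i_{1},\ldots ,i_{r}) \quad \text{for all } (i_{1},\ldots ,i_{r}) \in \Lambda (n,r). \qquad (2.2) \end{aligned}$$

Using \(-\chi \) instead of \(\chi \) gives an equivalent problem, since a reflection negates all determinants.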
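Checking a claimed solution of the system in Definition 2.5 only needs exact determinant signs, which is why a list of realizations serves as a certificate that anyone can re-verify. A minimal sketch, assuming the realization is given as integer or rational vectors in \(\mathbb R ^r\) (the function names are hypothetical, and this is not the file format used on the authors' web page); because \((E,\{\chi ,-\chi \})\) identifies \(\chi \) with \(-\chi \), a match with either sign is accepted.

```python
from fractions import Fraction
from itertools import combinations


def det(rows):
    """Exact determinant of a square matrix (list of rows) by Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    size = len(m)
    result = Fraction(1)
    for c in range(size):
        pivot = next((k for k in range(c, size) if m[k][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            m[c], m[pivot] = m[pivot], m[c]
            result = -result
        result *= m[c][c]
        for k in range(c + 1, size):
            factor = m[k][c] / m[c][c]
            for col in range(c, size):
                m[k][col] -= factor * m[c][col]
    return result


def sign(x):
    return (x > 0) - (x < 0)


def solves_system(vectors, chi):
    """True if sign det(v_{i_1}, ..., v_{i_r}) = chi(i_1, ..., i_r) for every increasing tuple."""
    return all(sign(det([vectors[i - 1] for i in idx])) == value for idx, value in chi.items())


def realizes(vectors, chi):
    """Certificate check for the oriented matroid (E, {chi, -chi}): match chi or -chi."""
    negated = {idx: -value for idx, value in chi.items()}
    return solves_system(vectors, chi) or solves_system(vectors, negated)


vectors = [(1, 0), (1, 1), (0, 1), (-1, 1)]
all_plus = {idx: 1 for idx in combinations(range(1, 5), 2)}
all_minus = {idx: -1 for idx in all_plus}
bad = dict(all_plus)
bad[(1, 3)] = -1  # not a chirotope at all, so no vectors can realize it
```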

Definition 2.6

A rank \(r\) oriented matroid is said to be realizable if it arises from a vector configuration in \(\mathbb R ^{r}\), and non-realizable otherwise.

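Not every realizable oriented matroid arises from a point configuration, because the vectors of a vector configuration need not all lie in one open halfspace of a linear hyperplane. In fact, this property characterizes the realizable oriented matroids that arise from point configurations: if all vectors lie in an open halfspace \(\{ x \mid a^{T}x > 0\}\), then rescaling each \(v_i\) by the positive factor \(1/(a^{T}v_i)\), which does not change the sign of any determinant, and applying a suitable linear transformation yields vectors of the form \(\big (\begin{array}{l}p_{i} \\ 1\end{array}\big )\). This condition holds exactly when the associated oriented matroid has the covector \((++\cdots +)\), i.e., when it is acyclic. It follows that the combinatorial types of point configurations can be obtained by extracting the acyclic ones from the complete list of realizable oriented matroids.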

2.3 Isomorphism Classes

In this paper, we consider only simple oriented matroids, i.e., those without loops or parallel elements (see [8]). For simple oriented matroids, we consider the following two equivalence relations.

Definition 2.7

Let \(M=(E,\{ \chi , -\chi \} )\) and \(M^{\prime }=(E,\{ \chi ^{\prime }, -\chi ^{\prime }\} )\) be oriented matroids.

(a) \(M\) and \(M^{\prime }\) are relabeling equivalent if

$$\begin{aligned} \chi (i_{1},\ldots ,i_{r})=\chi ^{\prime }(\phi (i_{1}),\ldots ,\phi (i_{r}))\, \quad \, \mathrm{for \, all} \, i_1,\ldots ,i_r \in E \end{aligned}$$or

$$\begin{aligned} \chi (i_{1},\ldots ,i_{r})=- \chi ^{\prime }(\phi (i_{1}),\ldots ,\phi (i_{r}))\, \quad \, \mathrm{for \, all}\, i_1,\ldots ,i_r \in E \end{aligned}$$for some permutation \(\phi \) on \(\{ 1,\ldots ,n\}\).

(b) \(M\) and \(M^{\prime }\) are reorientation equivalent if \(M\) and \(-_{A}M^{\prime }\) are relabeling equivalent for some \(A \subset E\), where \(-_{A}M^{\prime }\) is the oriented matroid determined by the chirotope \(-_{A}\chi ^{\prime }\) defined as follows.

$$\begin{aligned} -_{A}\chi ^{\prime } (i_1,\ldots ,i_r):=(-1)^{|A \cap \{ i_1,\ldots ,i_r\} |}\chi ^{\prime } (i_1,\ldots ,i_r) \, \quad \,\mathrm{for}\, i_1,\ldots ,i_r \in E. \end{aligned}$$

We note here that realizability depends only on the reorientation class of an oriented matroid, i.e., its equivalence class under reorientation equivalence. Indeed, if vectors \(v_1,\ldots ,v_n\) realize \(\chi ^{\prime }\), then replacing \(v_i\) by \(-v_i\) for each \(i \in A\) realizes \(-_{A}\chi ^{\prime }\), and permuting the vectors realizes any relabeling; hence if one oriented matroid in a reorientation class is realizable, then every oriented matroid in the class is realizable. The database of oriented matroids by Finschi and Fukuda [19] consists of representatives of the reorientation classes. On the other hand, we say that point configurations \(P,P^{\prime }\) have the same combinatorial type if the combinatorial type of \(P\) and that of \(P^{\prime }\) belong to the same relabeling class.
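The two equivalences of Definition 2.7, and the reason why realizability is a property of reorientation classes, can be checked on a small example. In the sketch below (our own illustration; `reorient`, `relabel`, and `canonical_form` are hypothetical names), negating the vectors indexed by \(A\) realizes \(-_A\chi \), permuting the vectors realizes a relabeling, and a brute-force canonical form picks the lexicographically smallest sign vector over all relabelings, reorientations and global signs. The brute force examines \(n!\,2^{n+1}\) candidates and is only meant for tiny \(n\); databases such as [19] rely on far more efficient canonical representations.

```python
from fractions import Fraction
from itertools import combinations, permutations


def det(rows):
    """Exact determinant of a square matrix (list of rows) by Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    size = len(m)
    result = Fraction(1)
    for c in range(size):
        pivot = next((k for k in range(c, size) if m[k][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            m[c], m[pivot] = m[pivot], m[c]
            result = -result
        result *= m[c][c]
        for k in range(c + 1, size):
            factor = m[k][c] / m[c][c]
            for col in range(c, size):
                m[k][col] -= factor * m[c][col]
    return result


def sign(x):
    return (x > 0) - (x < 0)


def permutation_sign(seq):
    inversions = sum(1 for a in range(len(seq)) for b in range(a + 1, len(seq)) if seq[a] > seq[b])
    return -1 if inversions % 2 else 1


def vector_chirotope(vectors):
    n, r = len(vectors), len(vectors[0])
    return {idx: sign(det([vectors[i - 1] for i in idx]))
            for idx in combinations(range(1, n + 1), r)}


def reorient(chi, A):
    """-_A chi: multiply chi(idx) by (-1)^{|A & idx|}."""
    return {idx: (-1) ** len(A.intersection(idx)) * v for idx, v in chi.items()}


def relabel(chi, phi):
    """The chi' with chi(i_1, ..., i_r) = chi'(phi(i_1), ..., phi(i_r)); phi is a dict."""
    new = {}
    for idx, v in chi.items():
        image = tuple(phi[i] for i in idx)
        new[tuple(sorted(image))] = permutation_sign(image) * v
    return new


def canonical_form(chi, n):
    """Lexicographically smallest sign vector in the reorientation class (brute force)."""
    keys = sorted(chi)
    best = None
    for perm in permutations(range(1, n + 1)):
        phi = dict(zip(range(1, n + 1), perm))
        for size in range(n + 1):
            for A in combinations(range(1, n + 1), size):
                candidate = relabel(reorient(chi, set(A)), phi)
                for eps in (1, -1):  # chi and -chi give the same oriented matroid
                    key = tuple(eps * candidate[k] for k in keys)
                    if best is None or key < best:
                        best = key
    return best


vectors = [(0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]
chi = vector_chirotope(vectors)
A = {2, 4}
negated = [tuple(-c for c in v) if i in A else v for i, v in enumerate(vectors, start=1)]
phi = {1: 3, 2: 1, 3: 4, 4: 2}
permuted = [None] * 4
for i, v in enumerate(vectors, start=1):
    permuted[phi[i] - 1] = v  # w_{phi(i)} = v_i
```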

3 Methods to Recognize Realizability of Oriented Matroids

Recognizing that a given oriented matroid is realizable amounts to finding a solution of the associated polynomial system (2.2) in Definition 2.5.

Our strategy is as follows. We first simplify the polynomial system as much as possible, namely, eliminate as many variables as possible using simple elimination rules and then try random realizations if no further elimination of variables is possible by the elimination rules.

3.1 Inequality Reduction Techniques

There are three critical parameters for measuring the difficulty of solving a polynomial system: the number of variables, the degrees of the polynomials, and the number of constraints. We try to keep each of them small. For this purpose, we employ some techniques used in [11, 12, 38, 40], which we briefly review below.

3.1.1 Fixing a Basis

The technique explained below was introduced in [11] and was used in [11, 38, 40] to reduce the degrees of the polynomials and the number of variables.

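We assume that \(\chi (i_1,\ldots ,i_r)=+\) for some \(i_1,\ldots ,i_r\) by taking the negative of \(\chi \) if necessary. Let \(M_V:=(v_1,\ldots ,v_n) \in \mathbb R ^{r \times n}\) be the representation matrix of a vector configuration \(V\), and let \(v_{kl}\) denote the \(k\)th component of \(v_l\). Because an invertible linear transformation with positive determinant does not change \(\chi \), we can assume that \(v_{b_{1}}=(1,0,\ldots ,0)^{T},v_{b_{2}}=(0,1,0,\ldots ,0)^{T},\ldots ,v_{b_{r}}=(0,\ldots ,0,1)^{T}\) for an \(r\)-tuple \((b_1,\ldots ,b_r) \in \Lambda (n,r)\) such that \(\chi (b_1,\ldots ,b_r) = +\) (apply the inverse of the matrix \((v_{b_1},\ldots ,v_{b_r})\), whose determinant is positive). Such an \(r\)-tuple of indices is called a basis. Writing \(e_k\) for the \(k\)th unit vector, we obtain the following new polynomial system:

$$\begin{aligned}&\mathrm{sign} \det (v_{i_{1}},\ldots ,v_{i_{r}}) = \chi (i_{1},\ldots ,i_{r}) \quad \text{for all } (i_{1},\ldots ,i_{r}) \in \Lambda (n,r), \\&v_{b_{k}} = e_{k} \quad \text{for } k=1,\ldots ,r. \qquad (3.1) \end{aligned}$$

The resulting polynomial system (3.1) depends on the choice of the basis. In the next section, we present how to find a suitable basis.

Finally, note that the degree of each constraint can be computed easily by the following formula:

$$\begin{aligned} \deg \det (v_{i_{1}},\ldots ,v_{i_{r}}) = |\{ i_{1},\ldots ,i_{r}\} \setminus \{ b_{1},\ldots ,b_{r}\} |, \end{aligned}$$

and that the sign of each variable \(v_{kl}\) is determined by the obvious equation

$$\begin{aligned} v_{kl} = \det (v_{b_{1}},\ldots ,v_{b_{k-1}},v_{l},v_{b_{k+1}},\ldots ,v_{b_{r}}), \quad \text{so that} \quad \mathrm{sign}(v_{kl}) = \chi (b_{1},\ldots ,b_{k-1},l,b_{k+1},\ldots ,b_{r}). \end{aligned}$$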
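Fixing a basis amounts to multiplying \(M_V\) by the inverse of \((v_{b_1},\ldots ,v_{b_r})\). The sketch below (hypothetical helper names, exact arithmetic, 1-based labels) does this by solving linear systems, then checks on a five-point example that \(\mathrm{sign}(v_{kl}) = \chi (b_1,\ldots ,b_{k-1},l,b_{k+1},\ldots ,b_r)\) and that the degree of \(\det (v_{i_1},\ldots ,v_{i_r})\) equals the number of indices outside the basis.

```python
from fractions import Fraction


def det(rows):
    """Exact determinant of a square matrix (list of rows) by Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    size = len(m)
    result = Fraction(1)
    for c in range(size):
        pivot = next((k for k in range(c, size) if m[k][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            m[c], m[pivot] = m[pivot], m[c]
            result = -result
        result *= m[c][c]
        for k in range(c + 1, size):
            factor = m[k][c] / m[c][c]
            for col in range(c, size):
                m[k][col] -= factor * m[c][col]
    return result


def sign(x):
    return (x > 0) - (x < 0)


def solve(columns, rhs):
    """Solve sum_k x_k * columns[k] = rhs exactly (Gauss-Jordan); columns must be independent."""
    r = len(rhs)
    m = [[Fraction(columns[k][row]) for k in range(r)] + [Fraction(rhs[row])] for row in range(r)]
    for c in range(r):
        pivot = next(k for k in range(c, r) if m[k][c] != 0)
        m[c], m[pivot] = m[pivot], m[c]
        for k in range(r):
            if k != c and m[k][c] != 0:
                factor = m[k][c] / m[c][c]
                m[k] = [a - factor * b for a, b in zip(m[k], m[c])]
    return [m[k][r] / m[k][k] for k in range(r)]


def fix_basis(vectors, basis):
    """Apply the inverse of (v_{b_1}, ..., v_{b_r}) to every vector, so v_{b_k} becomes e_k."""
    columns = [vectors[b - 1] for b in basis]
    return [solve(columns, v) for v in vectors]


def degree(idx, basis):
    """Degree of det(v_{i_1}, ..., v_{i_r}) in the free variables: indices outside the basis."""
    return len(set(idx) - set(basis))


V = [(0, 0, 1), (4, 0, 1), (0, 4, 1), (1, 1, 1), (3, 2, 1)]  # lifted points (p_i, 1)
basis = (1, 2, 3)
W = fix_basis(V, basis)
```

Because \(\det (v_{b_1},\ldots ,v_{b_r}) > 0\) here, the transformed configuration has exactly the same chirotope, so the sign of each coordinate of \(W\) can be predicted from \(\chi \) alone, before any variable is chosen.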

3.1.2 Minimal Reduced Systems

Most of the techniques in this section were first introduced in [11] for uniform oriented matroids to reduce the number of constraints and then extended to general oriented matroids in [38, 40].

Recall that the polynomial system (3.1), which describes the condition of realizability, has \(\binom{n}{r}\) constraints. It may contain redundancies, since one may reconstruct \(\chi \) from partial values of \(\chi \) by using Axiom (c) of chirotopes. For example, if \(\chi (1,2,3)=\chi (1,4,5)=\chi (1,2,4)=\chi (1,2,5)=+\) and \(\chi (1,3,5)=-\), then we obtain \(\chi (1,3,4)=-\) by Axiom (c). For a subset \(R\) of \(\Lambda (n,r)\), we denote by \(\langle R \rangle \) the set of all \(r\)-tuples whose \(\chi \) signs are implied by the sign information on \(\chi \) over the \(r\)-tuples in \(R\) and the chirotope axioms. An inclusion-minimal subset \(R(\chi )\) of \(\Lambda (n,r)\) sufficient to reconstruct \(\chi \), i.e., with \(\langle R(\chi ) \rangle = \Lambda (n,r)\), is called a minimal reduced system for \((E,\{ \chi , - \chi \} )\). First, we observe that the generalized mutations defined below must be contained in every minimal reduced system of \(\chi \).
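This redundancy can be exploited with a simple inference rule. The sketch below is our own simplified stand-in for \(\langle R \rangle \) (hypothetical names; it may determine fewer \(r\)-tuples than the full closure under the chirotope axioms): for an unknown \(r\)-tuple \(x\) and a known \(y\) with \(\chi (y) \ne 0\), it evaluates the \(r\) products \(\chi (y_s,x_2,\ldots ,x_r)\,\chi (y_1,\ldots ,y_{s-1},x_1,y_{s+1},\ldots ,y_r)\) from Axiom (c). If they are all zero, or all of one sign with at least one nonzero, the chirotope axioms (the abstract form of the Grassmann–Plücker relation) force the sign of \(\chi (x)\chi (y)\). For example, from \(\chi (1,2,3)=\chi (1,4,5)=\chi (1,2,4)=\chi (1,2,5)=+\) and \(\chi (1,3,5)=-\) it deduces \(\chi (1,3,4)=-\).

```python
from itertools import combinations


def permutation_sign(seq):
    inversions = sum(1 for a in range(len(seq)) for b in range(a + 1, len(seq)) if seq[a] > seq[b])
    return -1 if inversions % 2 else 1


def lookup(known, seq):
    """chi(seq) from the partial data via Axiom (b); None if not yet known."""
    if len(set(seq)) < len(seq):
        return 0
    key = tuple(sorted(seq))
    return permutation_sign(seq) * known[key] if key in known else None


def term(known, first, second):
    """chi(first) * chi(second); a known zero factor decides the product on its own."""
    a, b = lookup(known, first), lookup(known, second)
    if a == 0 or b == 0:
        return 0
    return None if a is None or b is None else a * b


def infer(known, x, y):
    """Deduce chi(x) from chi(y) != 0 and one relation of Axiom (c) type, if possible."""
    r = len(x)
    for pos in range(r):
        x_seq = (x[pos],) + x[:pos] + x[pos + 1:]  # which element plays the role of i_1
        terms = [term(known, (y[s],) + x_seq[1:], y[:s] + (x_seq[0],) + y[s + 1:]) for s in range(r)]
        if None in terms:
            continue
        if all(t == 0 for t in terms):
            prod = 0
        elif all(t >= 0 for t in terms):
            prod = 1
        elif all(t <= 0 for t in terms):
            prod = -1
        else:
            continue  # mixed signs: this relation decides nothing
        # chi(x_seq) * chi(y) = prod with chi(y) = +-1; convert to the increasing tuple x.
        return permutation_sign(x_seq) * prod * known[y]
    return None


def closure(known, n, r):
    """Apply `infer` repeatedly until no further r-tuple can be determined."""
    known = dict(known)
    changed = True
    while changed:
        changed = False
        for x in combinations(range(1, n + 1), r):
            if x in known:
                continue
            for y in [t for t, v in known.items() if v != 0]:
                value = infer(known, x, y)
                if value is not None:
                    known[x], changed = value, True
                    break
    return known


known = {(1, 2, 3): 1, (1, 4, 5): 1, (1, 2, 4): 1, (1, 2, 5): 1, (1, 3, 5): -1}
result = closure(known, 5, 3)
```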

Definition 3.1

An \(r\) tuple \(\lambda \in \Lambda (n,r)\) is called a generalized mutation of \(\chi \) if there exists an oriented matroid \((E ,\{ \chi ^{\prime },-\chi ^{\prime }\})\) such that \(\chi (\mu ) = \chi ^{\prime }(\mu )\) for all \(\mu \in \Lambda (n,r) \setminus \{ \lambda \}\) and \(\chi (\lambda ) \ne \chi ^{\prime } (\lambda )\).

This definition differs from that of [38, 40], but it is more natural in view of the proposition below.

Let us denote the set of all generalized mutations of \(\chi \) by \(\mathrm{GMut}(\chi )\). A nice characterization of the mutations of uniform oriented matroids is given in [45, Theorem 3.4.], but such a characterization is not known for generalized mutations. However, one can compute \(\mathrm{GMut}(\chi )\) using the following proposition, which is a straightforward extension of [45, Proposition 3.3.].

Proposition 3.2

An \(r\)-tuple \(\lambda :=(i_1,\ldots ,i_r) \in \Lambda (n,r)\) is a generalized mutation of \(\chi \) if and only if \(\lambda \) is not determined by Grassmann–Plücker relations, i.e., the following condition holds:

for all \(1 \le j_1 < \cdots < j_r \le n\).

Proof

In this proof, we use the notation \(\langle k_1,\ldots ,k_r \rangle \) to represent an \(r\)-tuple \((k^{\prime }_1,\ldots ,k^{\prime }_r)\) such that \(\{ k^{\prime }_1,\ldots ,k^{\prime }_r\} = \{ k_1,\ldots ,k_r\}\) and \(k^{\prime }_1 \le \cdots \le k^{\prime }_r\), for \(k_1,\ldots ,k_r \in \mathbb N \).

To prove the if-part, let us take a map \(\chi :\Lambda (n,r) \rightarrow \{ +,-,0\}\) satisfying Condition (3.2). We consider a map \(\chi ^{\prime }:\{1,\ldots ,n\}^{r} \rightarrow \{ +,-,0\}\) satisfying Axioms (a) and (b) of chirotopes such that \(\chi ^{\prime } (\mu ) = \chi (\mu ) \) for all \(\mu \in \Lambda (n,r) \setminus \{ \lambda \}\) and \(\chi ^{\prime } (\lambda ) \ne \chi (\lambda )\). We prove that \(\chi ^{\prime }\) also satisfies Axiom (c). For \((k_1,\ldots ,k_r), (l_1,\ldots ,l_r) \in \Lambda (n,r)\), assume that

Under this condition, we prove \(\chi ^{\prime } (k_1,\ldots ,k_r)\chi ^{\prime } (l_1,\ldots ,l_r) \ge 0\) by the following case analyses.

(I) \(k_1 \in \{ l_1,\ldots ,l_r\}\). Let \(t \in \{ 1,\ldots ,r\}\) be an integer such that \(k_1 = l_t\). Then the assumption for \(s=t\) gives

$$\begin{aligned}&\chi ^{\prime } (l_{t},k_{2},\ldots ,k_{r})\cdot \chi ^{\prime } (l_{1},\ldots ,l_{t-1},k_{1},l_{t+1},\ldots ,l_{r}) \\&\quad = \chi ^{\prime } (k_1,\ldots ,k_{r})\cdot \chi ^{\prime } (l_{1},\ldots ,l_{r}) \ge 0. \end{aligned}$$

(II) \(k_1 \notin \{ l_1,\ldots ,l_r\}\).

(II-A) \(|\{ k_1,\ldots ,k_r\} \cap \{ l_1,\ldots ,l_r \} | = r-1\). Let us take \(l_u \notin \{ k_1,\ldots ,k_r \}\). Then the assumption for \(s=u\) gives

$$\begin{aligned}&\chi ^{\prime } (l_{u},k_{2},\ldots ,k_{r}) \cdot \chi ^{\prime } (l_{1},\ldots ,l_{u-1},k_{1},l_{u+1},\ldots ,l_{r}) \\&\quad = (-1)^{u-1} \cdot \chi ^{\prime } (l_{1},\ldots ,l_{r}) \cdot (-1)^{u-1} \cdot \chi ^{\prime } (k_{1},\ldots ,k_{r}) \\&\quad = \chi ^{\prime } (l_{1},\ldots ,l_{r}) \cdot \chi ^{\prime } (k_{1},\ldots ,k_{r}) \ge 0. \end{aligned}$$

(II-B) \(|\{ k_1,\ldots ,k_r\} \cap \{ l_1,\ldots ,l_r\}| < r-1\).

(i) \(\langle k_1,\ldots ,k_r \rangle = \lambda \). Since \(k_1 \notin \{ l_1,\ldots ,l_r\}\) and \(|\{ k_1,\ldots ,k_r\} \cap \{ l_1,\ldots ,l_r\}| < r-1\), we have \(\langle l_{s},k_{2},\ldots ,k_{r} \rangle \ne \lambda \) and \(\langle l_{1},\ldots ,l_{s-1},k_{1},l_{s+1},\ldots ,l_{r} \rangle \ne \lambda \) for \(s=1,\ldots ,r\). Therefore, Condition (3.3) implies

$$\begin{aligned} \chi (l_{s},k_{2},\ldots ,k_{r})\cdot \chi (l_{1},\ldots ,l_{s-1},k_{1},l_{s+1},\ldots ,l_{r}) \ge 0 \, \quad \, \mathrm{for\, all }\, s=1,\ldots ,r. \end{aligned}$$

To satisfy Condition (3.2), the following must hold:

$$\begin{aligned} \chi ^{\prime } (l_1,\ldots ,l_r) = \chi (l_1,\ldots ,l_r) = 0. \end{aligned}$$

Hence \(\chi ^{\prime } (k_1,\ldots ,k_r)\chi ^{\prime } (l_1,\ldots ,l_r) = 0\).

(ii) \(\langle l_1,\ldots ,l_r \rangle = \lambda \). Proved similarly to Case (i).

(iii) \(\langle k_1,\ldots ,k_r \rangle \ne \lambda \), \(\langle l_1,\ldots ,l_r \rangle \ne \lambda \).

First, we consider the case when there exists \(s_0\) such that \( \lambda = \langle l_{s_0},k_2,\ldots ,k_{r} \rangle \) and \(\chi (l_1,\ldots ,l_{s_0-1},k_1,l_{s_0+1},\ldots ,l_r) \ne 0\). Let us write down Condition (3.2) for \(\chi \) with \(i_1:=l_{s_0},i_2:=k_2,\ldots ,i_r:=k_r\) and \(j_1:=l_1,\ldots ,j_{s_0-1}:=l_{s_0-1},j_{s_0}:=k_1,j_{s_0+1}:=l_{s_0+1},\ldots ,j_r:=l_r\):

This implies \(\chi ^{\prime } (k_{1},\ldots ,k_{r})\chi ^{\prime } (l_{1},\ldots , l_{r}) = +\).

We consider the other case. In this case, Condition (3.3) implies

and thus

Finally, we conclude \(\chi ^{\prime } (k_{1},\ldots , k_{r})\chi ^{\prime } (l_{1},\ldots , l_{r}) \ge 0\) for all cases. This proves the if-part.

We prove the only-if-part by contradiction. Suppose that there exist \(1 \le j_1 < \cdots < j_r \le n\) such that

In this case, we obtain \(\chi (i_1,\ldots ,i_r) = \chi (j_1,\ldots ,j_r) \chi (j_{1},i_{2},\ldots , i_{r}) \chi (i_{1},j_{2},\ldots , j_{r})\) using Axiom (c) of chirotopes. On the other hand, for a map \(\chi ^{\prime }:\Lambda (n,r) \rightarrow \{ +,-,0 \}\) that agrees with \(\chi \) everywhere except at \(\lambda \), the following holds.

This means that \(\chi ^{\prime }\) violates the chirotope Axiom (c). \(\square \)
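For very small instances, Definition 3.1 can also be applied literally: change the value of \(\chi \) at \(\lambda \) and test whether the result still satisfies the chirotope axioms of Definition 2.1. The brute-force sketch below (hypothetical names) does this for four vectors in \(\mathbb R ^2\) at angles 0°, 45°, 90° and 135°, where only \((1,2)\), \((2,3)\), \((3,4)\) and \((1,4)\) turn out to be generalized mutations. Proposition 3.2 is what replaces this exhaustive search at realistic sizes.

```python
from itertools import product


def permutation_sign(seq):
    inversions = sum(1 for a in range(len(seq)) for b in range(a + 1, len(seq)) if seq[a] > seq[b])
    return -1 if inversions % 2 else 1


def satisfies_axioms(chi, n, r):
    """Brute-force check of Definition 2.1; Axiom (b) is built into the helper x."""
    if all(v == 0 for v in chi.values()):
        return False  # Axiom (a)

    def x(seq):
        if len(set(seq)) < len(seq):
            return 0
        return permutation_sign(seq) * chi[tuple(sorted(seq))]

    tuples = list(product(range(1, n + 1), repeat=r))
    for i in tuples:
        for j in tuples:
            if x(i) * x(j) < 0 and all(
                x((j[s],) + i[1:]) * x(j[:s] + (i[0],) + j[s + 1:]) >= 0 for s in range(r)
            ):
                return False  # Axiom (c) is violated
    return True


def generalized_mutations(chi, n, r):
    """Definition 3.1 by brute force: tuples whose value alone can be changed."""
    result = set()
    for lam, value in chi.items():
        for other in (-1, 0, 1):
            if other == value:
                continue
            modified = dict(chi)
            modified[lam] = other
            if satisfies_axioms(modified, n, r):
                result.add(lam)
                break
    return result


chi = {(1, 2): 1, (1, 3): 1, (1, 4): 1, (2, 3): 1, (2, 4): 1, (3, 4): 1}
```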

Hence \(\mathrm{GMut}(\chi )\) is contained in every minimal reduced system \(R(\chi )\), and the proposition above allows us to compute \(\mathrm{GMut}(\chi )\). This leads to the following procedure to compute \(R(\chi )\), which is a slightly modified version of an algorithm in [12, 38].

Algorithm 3.3

(Computing a minimal reduced system for \(\chi \))

Input: A chirotope \(\chi :\{ 1,\ldots ,n \}^{r} \rightarrow \{ +,-,0 \}\) and \(b \in \Lambda (n,r)\) such that \(\chi (b) \ne 0\).

Output: A minimal reduced system \(R(\chi )\).

Step 1: Compute \(\mathrm{GMut}(\chi )\) and set \(R:=\mathrm{GMut}(\chi )\).

Step 2: Set \(C:= \langle R \rangle \). If \(C =\Lambda (n,r)\), return \(R\). Otherwise go to Step 3.

Step 3: Choose \(\mu \in \Lambda (n,r) \setminus C\) that minimizes \(|\mu \setminus b|\), and add it to \(R\). Go to Step 2.
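Algorithm 3.3 can be sketched as follows, under two assumptions of ours: the closure \(\langle R \rangle \) in Step 2 is replaced by a simple, sound inference rule derived from Axiom (c) (so the returned \(R\) may be larger than a true minimal reduced system), and ties in Step 3 are broken lexicographically. \(\mathrm{GMut}(\chi )\) is passed in by the caller, for example as computed via Proposition 3.2; all function names are hypothetical.

```python
from itertools import combinations


def permutation_sign(seq):
    inversions = sum(1 for a in range(len(seq)) for b in range(a + 1, len(seq)) if seq[a] > seq[b])
    return -1 if inversions % 2 else 1


def lookup(known, seq):
    """chi(seq) from partial data via Axiom (b); None if not yet known."""
    if len(set(seq)) < len(seq):
        return 0
    key = tuple(sorted(seq))
    return permutation_sign(seq) * known[key] if key in known else None


def term(known, first, second):
    """chi(first) * chi(second); a known zero factor decides the product."""
    a, b = lookup(known, first), lookup(known, second)
    if a == 0 or b == 0:
        return 0
    return None if a is None or b is None else a * b


def infer(known, x, y):
    """Deduce chi(x) from chi(y) != 0 and one relation of Axiom (c) type, if possible."""
    r = len(x)
    for pos in range(r):
        x_seq = (x[pos],) + x[:pos] + x[pos + 1:]
        terms = [term(known, (y[s],) + x_seq[1:], y[:s] + (x_seq[0],) + y[s + 1:]) for s in range(r)]
        if None in terms:
            continue
        if all(t == 0 for t in terms):
            prod = 0
        elif all(t >= 0 for t in terms):
            prod = 1
        elif all(t <= 0 for t in terms):
            prod = -1
        else:
            continue
        return permutation_sign(x_seq) * prod * known[y]
    return None


def closure(known, n, r):
    """Simplified stand-in for <R>: apply `infer` until nothing changes."""
    known = dict(known)
    changed = True
    while changed:
        changed = False
        for x in combinations(range(1, n + 1), r):
            if x in known:
                continue
            for y in [t for t, v in known.items() if v != 0]:
                value = infer(known, x, y)
                if value is not None:
                    known[x], changed = value, True
                    break
    return known


def reduced_system(chi, n, r, basis, gmut):
    """Algorithm 3.3 with the simplified closure above."""
    R = set(gmut)  # Step 1
    everything = set(combinations(range(1, n + 1), r))
    while True:
        covered = closure({mu: chi[mu] for mu in R}, n, r)  # Step 2
        missing = everything - set(covered)
        if not missing:
            return R
        # Step 3: smallest |mu \ b| first; ties broken lexicographically (our choice).
        R.add(min(missing, key=lambda mu: (len(set(mu) - set(basis)), mu)))


chi = {(1, 2): 1, (1, 3): 1, (1, 4): 1, (2, 3): 1, (2, 4): 1, (3, 4): 1}  # rank 2 example
gmut = {(1, 2), (2, 3), (3, 4), (1, 4)}  # assumed given, e.g. via Proposition 3.2
R = reduced_system(chi, 4, 2, (1, 2), gmut)
```

In this example the four generalized mutations leave \(\chi (1,3)\) and \(\chi (2,4)\) undetermined; Step 3 adds \((1,3)\), after which the single three-term relation fixes \(\chi (2,4)\).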

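As a result, we obtain a new polynomial system:

$$\begin{aligned}&\mathrm{sign} \det (v_{i_{1}},\ldots ,v_{i_{r}}) = \chi (i_{1},\ldots ,i_{r}) \quad \text{for all } (i_{1},\ldots ,i_{r}) \in R(\chi ), \\&v_{b_{k}} = e_{k} \quad \text{for } k=1,\ldots ,r, \end{aligned}$$

where \(e_k\) denotes the \(k\)th unit vector.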

One might be able to simplify the reduced polynomial system further by selecting a different basis, for example, a basis \(b^{\prime }\) minimizing \(\sum _{\beta \in R(\chi )}{|b^{\prime } \setminus \beta |}\), the sum of the degrees of the constraints in the system. Other weight functions for constraints are considered in [12, 38]. This is the subject of the next section.
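The basis-selection heuristic just mentioned is easy to state in code. The sketch below (hypothetical names) scores every \(r\)-tuple \(b^{\prime }\) with \(\chi (b^{\prime }) \ne 0\) by \(\sum _{\beta \in R(\chi )} |b^{\prime } \setminus \beta |\) and keeps the best. It holds \(R(\chi )\) fixed for simplicity, although Step 3 of Algorithm 3.3 itself depends on the basis; if \(\chi (b^{\prime }) = -\), one negates \(\chi \) as in Sect. 3.1.1.

```python
def total_degree(candidate, R):
    """Sum over beta in R of |b' minus beta|, i.e. the total degree of the reduced system."""
    return sum(len(set(candidate) - set(beta)) for beta in R)


def best_basis(chi, R):
    """An r-tuple with chi != 0 minimizing total_degree; ties broken lexicographically."""
    candidates = [b for b, value in chi.items() if value != 0]
    return min(candidates, key=lambda b: (total_degree(b, R), b))


chi = {(1, 2): 1, (1, 3): 1, (1, 4): 1, (2, 3): 1, (2, 4): 1, (3, 4): 1}
R = [(1, 2), (2, 3), (3, 4), (1, 4), (1, 3)]
```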

3.1.3 Normalization (Eliminating Homogeneity)

The technique explained here is used in [38, 40] to reduce the number of variables and the degrees of polynomials, and in addition, to eliminate some of the equality constraints.

First, we negate the negative variables to make all variables non-negative, and obtain the following new polynomial system:

where \(s_{i_{1}\ldots i_{r}}\) denotes the number of negative variables in \(v_{1i_{1}},\ldots ,v_{ri_{1}},v_{1i_{2}},\ldots ,v_{ri_{r}}\).

Let \(\alpha _{1},\ldots ,\alpha _{n},\beta _{1},\ldots ,\beta _{r}\) be arbitrary positive numbers and \(w_{1},\ldots ,w_{r} \in \mathbb R ^{n}\) be row vectors of \(M_{V^{\prime }}:=(v^{\prime }_{1},\ldots ,v^{\prime }_{n})\). Then

where \(w_{j}(i_{1},\ldots ,i_{r})\) denotes the vector whose \(k\)th element is the \(i_k\)th element of \(w_{j}\) for \(1 \le i_1 < \ldots < i_r \le n\) and \(1 \le j \le r\). Since all \(\alpha _i\) and \(\beta _j\) are positive, rescaling the columns and rows of \(M_{V^{\prime }}\) by them preserves the signs of all \(r \times r\) minors. This allows us to fix two indices \(1 \le i \le n\) and \(1 \le j \le r\) and to assume that every component of the vectors \(v^{\prime }_{i}\) and \(w_{j}\) is \(0\) or \(1\).
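Normalization relies on the fact that multiplying rows and columns of a matrix by positive numbers multiplies every maximal minor by a positive factor. The sketch below (our own illustration; `normalize` and `minor_signs` are hypothetical names) chooses the column factors so that the nonzero entries of one row become 1, then the row factors so that the nonzero entries of one column become 1, and confirms that all \(3 \times 3\) minor signs are unchanged. It assumes a non-negative matrix, as produced by the sign flips above, and it does not rescale the basis columns back to unit vectors.

```python
from fractions import Fraction
from itertools import combinations


def det(rows):
    """Exact determinant of a square matrix (list of rows) by Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    size = len(m)
    result = Fraction(1)
    for c in range(size):
        pivot = next((k for k in range(c, size) if m[k][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            m[c], m[pivot] = m[pivot], m[c]
            result = -result
        result *= m[c][c]
        for k in range(c + 1, size):
            factor = m[k][c] / m[c][c]
            for col in range(c, size):
                m[k][col] -= factor * m[c][col]
    return result


def sign(x):
    return (x > 0) - (x < 0)


def normalize(matrix, row, col):
    """Positive row/column scaling that turns the nonzero entries of one row and one column into 1."""
    m = [[Fraction(x) for x in line] for line in matrix]
    n_rows, n_cols = len(m), len(m[0])
    # Column factors alpha_l: every nonzero entry of the chosen row becomes 1.
    alpha = [1 / m[row][l] if m[row][l] != 0 else Fraction(1) for l in range(n_cols)]
    # Row factors beta_k: every nonzero entry of the chosen column becomes 1.
    beta = [Fraction(1)] * n_rows
    for k in range(n_rows):
        if k != row and m[k][col] != 0:
            beta[k] = 1 / (m[k][col] * alpha[col])
    return [[beta[k] * m[k][l] * alpha[l] for l in range(n_cols)] for k in range(n_rows)]


def minor_signs(matrix):
    r = len(matrix)
    return [sign(det([[matrix[k][l] for l in cols] for k in range(r)]))
            for cols in combinations(range(len(matrix[0])), r)]


M = [[1, 0, 0, 2, 3], [0, 1, 0, 5, 1], [0, 0, 1, 4, 6]]  # non-negative, basis columns 1-3
N = normalize(M, 0, 3)
```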

Furthermore, we can eliminate some of the equality constraints as follows. Let us classify the constraints arising from \(\chi (i_1,\ldots ,i_r)\) according to the value of \(|\{ b_1,\ldots ,b_r \} \setminus \{ i_1,\ldots ,i_r \} |\).

Case (a) \(|\{ b_1,\ldots ,b_r \} \setminus \{ i_1,\ldots ,i_r \} | = 1\). The constraint corresponds to the sign constraint “\(v^{\prime }_{ij} > 0\)” or “\(v^{\prime }_{ij} = 0\)” for certain \(1 \le i \le n\), \(1 \le j \le r\).

Case (b) \(|\{ b_1,\ldots ,b_r \} \setminus \{ i_1,\ldots ,i_r \} | = 2\). Let \(\{ b_1,\ldots ,b_r \} \setminus \{ i_1,\ldots ,i_r \} =: \{ i,i^{\prime }\}\) and \(\{ i_1,\ldots ,i_r \} \setminus \{ b_1,\ldots ,b_r \} =: \{ j,j^{\prime }\}\). Then an equality constraint is of the form \(v^{\prime }_{ij}v^{\prime }_{i^{\prime }j^{\prime }}-v^{\prime }_{i^{\prime }j}v^{\prime }_{ij^{\prime }}=0\), where some of the variables may be fixed to 0 or 1. If row \(i\) is normalized, the constraint becomes \(v^{\prime }_{i^{\prime }j^{\prime }}-v^{\prime }_{i^{\prime }j}=0\), and we can eliminate it trivially by substituting one variable for the other, without increasing the degree of the polynomial system. If column \(j\) is normalized, the constraint becomes \(v^{\prime }_{ij^{\prime }}-v^{\prime }_{i^{\prime }j^{\prime }}=0\) and can be eliminated in the same way.

Case (c) \(|\{ b_1,\ldots ,b_r \} \setminus \{ i_1,\ldots ,i_r \} | > 2\). The constraint cannot be eliminated trivially.
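The case distinction above depends only on how many basis elements are missing from \((i_1,\ldots ,i_r)\), so it is cheap to tabulate for every candidate basis. A small sketch with hypothetical names; the example chirotope is that of the points (0,0), (1,0), (0,1), (2,0), in which points 1, 2 and 4 are collinear.

```python
from collections import Counter
from itertools import combinations


def constraint_case(idx, basis):
    """Case of the constraint for chi(idx), by the number of basis elements missing from idx."""
    missing = len(set(basis) - set(idx))
    if missing == 0:
        return "basis"  # the basis determinant itself, fixed to +
    if missing == 1:
        return "a"  # sign constraint on a single variable
    if missing == 2:
        return "b"  # 2x2 minor: eliminable if a suitable row or column is normalized
    return "c"  # higher degree: not eliminated trivially


def case_counts(n, r, basis):
    return Counter(constraint_case(idx, basis) for idx in combinations(range(1, n + 1), r))


def equality_case_counts(chi, basis):
    """Cases of the equality constraints, i.e. of the r-tuples with chi = 0."""
    return Counter(constraint_case(idx, basis) for idx, value in chi.items() if value == 0)


# Chirotope of the points (0,0), (1,0), (0,1), (2,0); points 1, 2, 4 are collinear.
collinear = {(1, 2, 3): 1, (1, 2, 4): 0, (1, 3, 4): -1, (2, 3, 4): -1}
```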

Remark 3.4

Equality constraints are a main obstacle for random realizations, since randomly chosen values satisfy a nontrivial polynomial equation only with probability zero. The normalization technique turns out to be very useful for removing equations so that random realizations can be applied. In addition, the technique decreases the number of variables and that of constraints by 1. Therefore, we minimize the number of equality constraints over all choices of the basis and of the row and column to be normalized; this minimization can be carried out easily.
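The remark above also suggests why random realizations work well once equalities are gone. The following sketch of a random search is our own simplified illustration, not the authors' procedure: after fixing a basis with \(\chi (b) = +\), the sign of every free variable \(v_{kl}\) is read off from \(\chi \) (by Cramer's rule it equals \(\chi (b_1,\ldots ,b_{k-1},l,b_{k+1},\ldots ,b_r)\)), so only the magnitudes are drawn at random and every degree-1 constraint holds automatically. The function name, the magnitude grid \(\{1/1000,\ldots ,1\}\), and the number of tries are assumptions.

```python
import random
from fractions import Fraction
from itertools import combinations


def det(rows):
    """Exact determinant of a square matrix (list of rows) by Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    size = len(m)
    result = Fraction(1)
    for c in range(size):
        pivot = next((k for k in range(c, size) if m[k][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            m[c], m[pivot] = m[pivot], m[c]
            result = -result
        result *= m[c][c]
        for k in range(c + 1, size):
            factor = m[k][c] / m[c][c]
            for col in range(c, size):
                m[k][col] -= factor * m[c][col]
    return result


def sign(x):
    return (x > 0) - (x < 0)


def permutation_sign(seq):
    inversions = sum(1 for a in range(len(seq)) for b in range(a + 1, len(seq)) if seq[a] > seq[b])
    return -1 if inversions % 2 else 1


def chi_of(chi, seq):
    """chi on an arbitrary sequence via Axiom (b)."""
    if len(set(seq)) < len(seq):
        return 0
    return permutation_sign(seq) * chi[tuple(sorted(seq))]


def random_realization(chi, n, r, basis, tries=20000, seed=0):
    """Random search for a solution of the basis-fixed system; None if all tries fail."""
    assert chi[basis] == 1, "choose a basis with chi(b) = + (negate chi if necessary)"
    rng = random.Random(seed)
    free = [l for l in range(1, n + 1) if l not in basis]
    # Known sign of v_{kl} (k is 0-based here): chi(b_1, ..., l in position k, ..., b_r).
    signs = {(k, l): chi_of(chi, basis[:k] + (l,) + basis[k + 1:]) for l in free for k in range(r)}
    vectors = {b: tuple(1 if k == pos else 0 for k in range(r)) for pos, b in enumerate(basis)}
    for _ in range(tries):
        for l in free:
            # Only magnitudes are random; variables with sign 0 are set to 0 exactly.
            vectors[l] = tuple(signs[(k, l)] * Fraction(rng.randint(1, 1000), 1000) for k in range(r))
        if all(sign(det([vectors[i] for i in idx])) == value for idx, value in chi.items()):
            return [vectors[i] for i in range(1, n + 1)]
    return None


points = [(0, 0), (4, 0), (0, 4), (1, 1), (3, 2)]  # in general position
lifted = [p + (1,) for p in points]
target = {idx: sign(det([lifted[i - 1] for i in idx])) for idx in combinations(range(1, 6), 3)}
found = random_realization(target, 5, 3, (1, 2, 3))
```

On this uniform five-point example only the three degree-2 constraints remain to be hit by chance. For a non-uniform \(\chi \), any equality constraint of degree at least 2 that survives normalization would almost never be satisfied by such sampling, which is the obstacle described in Remark 3.4.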

3.2 Searching for Solutions of Polynomial Systems

Now, we present a practical method to find a solution of a polynomial system.

The cylindrical algebraic decomposition method [14] eliminates variables while preserving feasibility until the polynomial system contains only one variable, solves the resulting one-variable system, and then lifts the projected solution. Although this sounds simple, each step is very hard in general. We therefore employ the solvability sequence method [11], which can eliminate some variables in a simpler way.

Proposition 3.5

([11]) Let \(l_{1},l_{2},l_3 \ge 0\) be integers and \(R^{(1)}_i,R^{(2)}_i,L^{(1)}_j,L^{(2)}_j,P_k\) be polynomials for \(i=1,\ldots ,l_1, j=1,\ldots ,l_2, k=1,\ldots ,l_3\). Then the feasibility of the rational polynomial system:

is equivalent to that of the following polynomial system:

under the condition \(R^{(2)}_{i}(x_{1},\ldots ,x_{n})L^{(2)}_{j}(x_{1},\ldots ,x_{n}) > 0\) for \(i=1,\ldots ,l_{1},j=1,\ldots ,l_{2}\).

Note that a solution \((y^{*},x_{1}^{*},\ldots ,x_{n}^{*})\) of the original system (3.5) can be constructed from a solution \((x_{1}^{*},\ldots ,x_{n}^{*})\) of the resulting system by setting

This elimination rule is used in the solvability sequence method, under the bipartiteness condition, for determinant systems, i.e., polynomial systems whose constraints are of the form “\(\mathrm{sign}(\det (v_{i_{1}},\ldots ,v_{i_{r}})) = \chi (i_{1},\ldots ,i_{r})\).” In a determinant system, one can determine the signs of \(R^{(2)}_{i}(x_{1},\ldots ,x_{n})\) and \(L^{(2)}_{j}(x_{1},\ldots ,x_{n})\) in advance from the information in \(\chi \), and can therefore solve the polynomial system for \(y\).
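The construction of \(y^{*}\) from a solution of the reduced system can be illustrated by the following Python sketch. It assumes that the constraints on \(y\) have the form \(R^{(1)}_i/R^{(2)}_i < y\) and \(y < L^{(1)}_j/L^{(2)}_j\), with the \(P_k\) free of \(y\); this shape is our reading of Proposition 3.5, not a quotation of it. Under \(R^{(2)}_iL^{(2)}_j > 0\), multiplying out gives the \(y\)-free constraints \(R^{(1)}_iL^{(2)}_j < L^{(1)}_jR^{(2)}_i\). Polynomials are modeled as Python callables, exact arithmetic uses `Fraction`, and taking the midpoint of the admissible interval is our own choice.

```python
from fractions import Fraction
from typing import Callable, Optional, Sequence, Tuple

Poly = Callable[[Sequence[Fraction]], Fraction]   # a polynomial evaluated at x


def reduced_system_holds(x, lower, upper) -> bool:
    """Cross-multiplied constraints R1_i*L2_j < L1_j*R2_i; these are equivalent
    to R1_i/R2_i < L1_j/L2_j whenever R2_i(x)*L2_j(x) > 0."""
    return all(r1(x) * l2(x) < l1(x) * r2(x)
               for r1, r2 in lower for l1, l2 in upper)


def lift_y(x, lower: Sequence[Tuple[Poly, Poly]],
           upper: Sequence[Tuple[Poly, Poly]]) -> Optional[Fraction]:
    """Pick y with R1_i/R2_i < y < L1_j/L2_j for all i, j (None if impossible)."""
    lo = max((Fraction(r1(x)) / r2(x) for r1, r2 in lower), default=None)
    hi = min((Fraction(l1(x)) / l2(x) for l1, l2 in upper), default=None)
    if lo is None and hi is None:
        return Fraction(0)            # y is unconstrained
    if hi is None:
        return lo + 1                 # only lower bounds
    if lo is None:
        return hi - 1                 # only upper bounds
    return (lo + hi) / 2 if lo < hi else None
```

With \(x=(1,2)\), the bounds \(x_1 < y < 3x_2\) give \(y^{*}=7/2\).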

Definition 3.6

([11]) Consider the polynomial system (3.5) arising from a determinant system. Then each constraint can be written as “\(\mathrm{sign}(\det (v_{i_{1}},\ldots ,v_{i_{r}})) = \chi (i_{1},\ldots ,i_{r})\)” with \((i_1,\dots ,i_r) \in \Lambda (n,r)\), and we index it by \(\{ i_1,\ldots ,i_r \}\). Let \(A\) be the set of indices that define constraints of the form

and \(B\) the set of indices that define constraints of the form

The polynomial system (3.5) is said to be bipartite if \(|\{ i_1,\ldots ,i_r \} \cap \{ j_1,\ldots ,j_r\}| = r-1\) for all \(\{ i_1,\ldots ,i_r \} \in A, \{ j_1,\ldots ,j_r\} \in B\).
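The bipartiteness condition of Definition 3.6 is a direct pairwise test. A minimal Python sketch, in which index sets are represented as frozensets (a representation chosen for illustration):

```python
from typing import FrozenSet, Iterable


def is_bipartite(A: Iterable[FrozenSet[int]], B: Iterable[FrozenSet[int]], r: int) -> bool:
    """True iff |I ∩ J| = r - 1 for every I in A and every J in B."""
    B = list(B)  # B is traversed once per element of A
    return all(len(I & J) == r - 1 for I in A for J in B)
```

For \(r=3\), the sets \(\{1,2,3\}\) and \(\{1,2,4\}\) meet in two elements, whereas \(\{1,2,3\}\) and \(\{1,4,5\}\) meet in only one, so any system containing the latter pair is not bipartite.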

In [11], it is proved that the feasibility of the polynomial system (3.5) is equivalent to that of the following polynomial system under the bipartiteness condition:

Therefore, under the bipartiteness condition the elimination produces no new constraints, and one may continue eliminating without creating inconsistencies. The method certifies realizability if all variables are eliminated by this rule. However, the bipartiteness condition is very restrictive. It is worth pointing out that this restriction may be relaxed by allowing the elimination rules to destroy the structure of the determinant system. This relaxation turns out to be very useful when combined with the branching rules explained below.

Before explaining the branching rules, we also consider an elimination rule for polynomial systems containing equalities.

Proposition 3.7

Let \(l_{1},l_{2},l_3 \ge 0\) be integers and \(P_i,E_j,E,Q_k\) be rational polynomials for \(i=1,\ldots ,l_1, j=1,\ldots ,l_2, k=1,\ldots ,l_3\). Then the feasibility of a rational polynomial system:

is equivalent to that of the following rational polynomial system:

Proof

A solution \((x_{1}^{*},\ldots ,x_{n}^{*},y^*)\) to the original system (3.7) can be constructed from a solution \((x_{1}^{*},\ldots ,x_{n}^{*})\) of the resulting system (3.8) by setting \(y^{*}{:=}E(x_{1}^{*},\ldots ,x_{n}^{*})\). \(\square \)
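The substitution behind Proposition 3.7 can be sketched in Python as follows. Assuming, as the proof suggests, that the system contains the equation \(y = E(x_1,\ldots ,x_n)\) and that every other constraint is a sign condition on a polynomial in \(x\) and \(y\), eliminating \(y\) replaces each such polynomial \(f(x,y)\) by \(f(x,E(x))\), and a solution is lifted by \(y^{*}:=E(x^{*})\). The representation of constraints as (callable, sign) pairs is hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Constraint = Tuple[Callable[[Sequence[float], float], float], str]  # (f(x, y), sign)


def sign(v) -> str:
    return "+" if v > 0 else "-" if v < 0 else "0"


def eliminate_by_equation(constraints: List[Constraint],
                          E: Callable[[Sequence[float]], float]):
    """Return constraints on x alone, obtained by substituting y := E(x)."""
    # f=f binds each constraint now, avoiding Python's late-binding pitfall.
    return [(lambda x, f=f: f(x, E(x)), s) for f, s in constraints]


def holds(constraints, x) -> bool:
    """Check every sign condition of a system in x alone."""
    return all(sign(g(x)) == s for g, s in constraints)
```

Note that the degree of the reduced constraints can grow, since \(E\) is substituted into every occurrence of \(y\).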

In Propositions 3.5 and 3.7, we regard a variable \(y\) appearing in these forms as redundant. To make as many variables as possible eliminable by these rules, we introduce the following additional rules, called branching rules.

Proposition 3.8

Let \(l_1,l_2 \ge 0\). The polynomial system:

is feasible if and only if one of the following rational polynomial systems

for \(s:\{ 1,\ldots ,l_1\} \rightarrow \{ +,-,0\}\) is feasible.

Proof

We partition \(\mathbb R ^{n+1}\) into \(3^{l_1}\) parts \(R_s:=\{ (x_1,\ldots ,x_n,y) \mid \mathrm{sign}(A_{i}(x_{1},\ldots ,x_{n})) = s(i) \ (i=1,\ldots ,l_1) \}\) for \(s:\{ 1,\ldots ,l_1\} \rightarrow \{ +,-,0\}\). Let \(S\) be the solution set of the polynomial system (3.9). Then \(S \cap R_s\) is the solution set of the polynomial system (3.10). Since the sets \(R_s\) cover \(\mathbb R ^{n+1}\), the set \(S\) is non-empty if and only if \(S \cap R_s\) is non-empty for some \(s:\{ 1,\ldots ,l_1\} \rightarrow \{ +,-,0\}\). \(\square \)
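The branches of Proposition 3.8 are indexed by sign patterns \(s\). The hypothetical Python helpers below enumerate all \(3^{l_1}\) patterns (or only the \(2^{l_1}\) patterns over \(\{+,-\}\)) and compute the pattern \(s\) of the part \(R_s\) containing a given point, which is the partition argument of the proof in executable form.

```python
from itertools import product
from typing import Callable, List, Sequence, Tuple


def sign(v) -> str:
    return "+" if v > 0 else "-" if v < 0 else "0"


def sign_patterns(l1: int, allow_zero: bool = True) -> List[Tuple[str, ...]]:
    """All maps s: {1,...,l1} -> {+,-,0} (or {+,-}), as tuples (s(1),...,s(l1))."""
    return list(product("+-0" if allow_zero else "+-", repeat=l1))


def region_of(A: Sequence[Callable], x: Sequence[float]) -> Tuple[str, ...]:
    """The pattern s with (x, y) in R_s; it depends on x only, since A_i = A_i(x)."""
    return tuple(sign(a(x)) for a in A)
```

Every point lands in exactly one \(R_s\), so trying each pattern in turn covers the whole solution set; the number of branches, however, grows exponentially in \(l_1\).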

Observe that it is possible to reduce the search range as follows:

for \(s:\{ 1,\ldots ,l_1\} \rightarrow \{ +,-\}\).

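This is because for any solution \((x^*_1,\ldots ,x^*_n,y^*)\) of (3.9), \((x^*_1+\epsilon _1,\ldots ,x^*_n+\epsilon _n,y^*+\epsilon _{n+1})\) is also a solution of (3.9) for sufficiently small \(\epsilon _1,\ldots ,\epsilon _{n+1} > 0\), and because the zero set of a polynomial that is not identically zero has empty interior, the \(\epsilon _i\) can be chosen so that no such \(A_i\) vanishes. The following is a branching rule for the case when \(y\) appears in equations.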

Proposition 3.9

Let \(l_1,l_2,l_3 \ge 0\). The polynomial system:

is feasible if and only if one of the following rational polynomial systems

is feasible.

Proof

The proof is similar to that of Proposition 3.8, by considering the following partition of \(\mathbb R ^{n+1}\): \(R_s:=\{(x_1,\ldots ,x_n,y) \mid \mathrm{sign}(A(x_1,\ldots ,x_n)) = s\}\) for \(s=+,-,0\). \(\square \)

To solve a polynomial system, we apply the following operations repeatedly. We first choose a variable \(y\) that can be eliminated by the above four rules. Then we apply the branching rule of Proposition 3.8 or 3.9 to obtain a set of polynomial systems of the form (3.10) or (3.13). We choose a sign pattern, apply the elimination rule of Proposition 3.5 or 3.7, and check feasibility recursively. If the polynomial system for this sign pattern is certified to be feasible, the original polynomial system is proved to be feasible. Otherwise, we backtrack and try another sign pattern. If no feasible polynomial system is found, we give up on deciding feasibility. We adopt the following termination conditions. If all variables are eliminated and the system is consistent, the original system is feasible. If a system has no variable that can be eliminated, we try random realizations to prove feasibility. Many polynomial systems are trivially feasible but hard to solve algebraically. For example, the following polynomial system is clearly feasible but algebraically complicated.

Random realizations work well as long as the solution set is sufficiently large, and they are hardly affected by the algebraic complexity of the system.
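A minimal random-realization routine in Python, under our own choices: rational sample points on a uniform grid in \([-1,1]^n\), a fixed seed, and a trial budget. Because evaluation is exact over `Fraction`, any point returned satisfies the sign conditions exactly and can serve as a certificate; returning `None` corresponds to ‘unknown.’ The function name and parameters are hypothetical.

```python
import random
from fractions import Fraction
from typing import Callable, List, Optional, Sequence, Tuple

Constraint = Tuple[Callable[[Sequence[Fraction]], Fraction], str]  # (poly, sign)


def sign(v) -> str:
    return "+" if v > 0 else "-" if v < 0 else "0"


def random_realization(constraints: Sequence[Constraint], n_vars: int,
                       trials: int = 10_000, grid: int = 1_000,
                       seed: int = 0) -> Optional[List[Fraction]]:
    """Sample rational points in [-1, 1]^n_vars; return the first point that
    meets every sign condition exactly, or None ('unknown')."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [Fraction(rng.randint(-grid, grid), grid) for _ in range(n_vars)]
        if all(sign(f(x)) == s for f, s in constraints):
            return x
    return None
```

A system such as \(x_1>0,\ x_2>0,\ x_1x_2>1/100\) has a large solution set and is hit almost immediately, whatever the algebraic form of its constraints.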

Algorithm 3.10

Sol(\(P\)) (\(P\): polynomial system)

1. If \(P\) contains no variable that can be eliminated by the above four rules, try random assignments to the remaining variables. If a solution is found, return ‘feasible’; otherwise, return ‘unknown.’

2. Choose a variable \(y\) of \(P\) to eliminate. Apply one of the branching rules (Proposition 3.8 or 3.9) to obtain a set of polynomial systems \(P^{\prime }_1,\dots ,P^{\prime }_m\).

3. For \(i = 1,\dots ,m\), apply one of the elimination rules (Proposition 3.5 or 3.7) to \(P^{\prime }_i\) to obtain a new polynomial system \(Q_i\). If Sol(\(Q_i\)) returns ‘feasible,’ return ‘feasible.’

4. Return ‘unknown.’
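Algorithm 3.10 can be transcribed as a generic recursive skeleton in Python. The four callbacks (choosing a variable, branching, eliminating, random search) stand in for the rules of Propositions 3.5 to 3.9; they and their names are hypothetical placeholders, not the authors' implementation.

```python
from typing import Any, Callable, Hashable, Iterable, Optional


def sol(P: Any,
        choose_variable: Callable[[Any], Optional[Hashable]],
        branch: Callable[[Any, Hashable], Iterable[Any]],
        eliminate: Callable[[Any, Hashable], Any],
        random_search: Callable[[Any], bool]) -> str:
    """Recursive transcription of Algorithm 3.10 with hypothetical hooks."""
    y = choose_variable(P)
    if y is None:                           # step 1: nothing left to eliminate
        return "feasible" if random_search(P) else "unknown"
    for P_i in branch(P, y):                # step 2: branching rule (3.8 or 3.9)
        Q_i = eliminate(P_i, y)             # step 3: elimination rule (3.5 or 3.7)
        if sol(Q_i, choose_variable, branch, eliminate, random_search) == "feasible":
            return "feasible"
    return "unknown"                        # step 4
```

For a toy check, a "system" can be a pair (remaining variables, chosen signs) that branches on \(\{+,-\}\), with random search succeeding only for one target sign pattern; the recursion then backtracks until it reaches that pattern.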

Since this procedure sometimes generates too many branches, we apply iterative lengthening search to the resulting tree search problem. The root node corresponds to the original polynomial system. Starting from the root node, we expand nodes by applying the elimination rules and the branching rules repeatedly, and we decide whether a node is a goal node by trying random assignments to its remaining variables. In this setting, we define the cost of each node \(x\) by \(c(x):=\log _{2}(n_b)\), where \(n_b\) is the number of branches at \(x\), and apply iterative lengthening search, increasing the limit on the total cost by 1 in each iteration.
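The iterative lengthening variant can be sketched in Python by charging \(\log_2(n_b)\) for each expansion and re-running a depth-first search with the cost limit raised by 1 each round. The callbacks play the same hypothetical roles as in the Sol skeleton; the cap `max_limit` is our addition to guarantee termination.

```python
import math
from dataclasses import dataclass
from typing import Any, Callable, Hashable, Iterable, Optional, Tuple


@dataclass
class Hooks:
    # Hypothetical problem-specific callbacks (same roles as in Sol).
    choose_variable: Callable[[Any], Optional[Hashable]]
    branch: Callable[[Any, Hashable], Iterable[Any]]
    eliminate: Callable[[Any, Hashable], Any]
    random_search: Callable[[Any], bool]


def bounded_search(P: Any, budget: float, h: Hooks) -> bool:
    """Depth-first search that refuses expansions exceeding the cost budget."""
    y = h.choose_variable(P)
    if y is None:                        # goal test by random assignments
        return h.random_search(P)
    children = list(h.branch(P, y))
    cost = math.log2(len(children)) if children else 0.0   # c(x) = log2(n_b)
    if cost > budget:
        return False
    return any(bounded_search(h.eliminate(c, y), budget - cost, h) for c in children)


def iterative_lengthening(P: Any, h: Hooks, max_limit: int = 30) -> Tuple[str, int]:
    """Raise the total-cost limit by 1 per round until a goal node is found."""
    for limit in range(max_limit + 1):
        if bounded_search(P, limit, h):
            return "feasible", limit
    return "unknown", max_limit
```

Since the total cost along a path is the base-2 logarithm of the product of the branching numbers, a round with cost limit \(c\) visits at most \(2^{c}\) leaves.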

Remark 3.11

Equalities containing no square-free variables cannot be eliminated by the above rules. Random realizations satisfy an equality constraint with probability 0, so if we cannot eliminate all equality constraints, the above method is highly unlikely to generate a realization. In this case, which is quite rare for small instances, we need general algorithms such as cylindrical algebraic decomposition [14] to find solutions.

We point out another tractable case, namely, when the ideal generated by the equality constraints is zero-dimensional (as an ideal in \(\mathbb C [x_s \mid s \in S]\), the polynomial ring over \(\mathbb C \) in the variables \(x_s\) appearing in the equality constraints). In other words, the equality constraints have only finitely many complex solutions. In this case, we extract the real solutions, substitute each of them into the original polynomial system, and apply the above methods. Zero-dimensionality can be checked, and the equalities solved, using Gröbner bases [15].

4 Realizability Classification

We apply our method to \(\mathrm{OM }(4,8)\) and \(\mathrm{OM }(3,9)\). All computations were performed on a cluster of four servers, each with 16 CPU cores running at 2.2 GHz and 128 GB of RAM; each process uses a single core.

First, we apply the polynomial reduction techniques described in Sect. 3.1 to \(\mathrm{OM }(3,9)\). Tables 4 and 5 show the distributions of the number of variables and constraints of the resulting polynomial systems for \(\mathrm{OM }(3,9)\).

We note that systems with more than ten variables occur only in an exceptional case, in which the polynomial system consists of constraints of the following type.

Nakayama [38] proved that oriented matroids whose polynomial systems are of this type and do not admit biquadratic final polynomials are realizable. We detect polynomial systems of this type and stop reducing them, because our realization method solves such systems easily.

We apply our method for searching realizations to the resulting polynomial systems and succeed in finding realizations of 460,778 oriented matroids in \(\mathrm{OM }(3,9)\). Table 6 shows the distribution of the running times our method needed to find realizations.

The last remaining undecided oriented matroid turns out to be irrational; it was found by Perles (see [30, p. 73]). A realization of it can be found using Gröbner bases.

As a result, we obtain a complete classification of \(\mathrm{OM }(3,9)\). This also yields the classification of \(\mathrm{OM }(6,9)\), because the realizability of oriented matroids is preserved under duality [8]. Similarly, we apply our method to \(\mathrm{OM }(4,8)\) and obtain realizations of all realizable oriented matroids in \(\mathrm{OM }(4,8)\) (Theorem 1.1) except for two irrational ones found by Nakayama [38]. These two oriented matroids can also be realized using Gröbner bases.

Theorem 4.1

There are precisely 1, 1 and 2 irrational realizable oriented matroids in \(\mathrm{OM }(3,9)\), \(\mathrm{OM }(6,9)\) and \(\mathrm{OM }(4,8)\), respectively.

From these results, we obtain the combinatorial types of point configurations (Theorem 1.2) by generating the relabeling classes of acyclic realizable oriented matroids. From these, we extract the matroid polytopes (i.e., acyclic oriented matroids in which every element is an extreme point; for details, see [8]). We then compute the face lattices of the matroid polytopes and decide whether each of them arises from some realizable matroid polytope, in order to obtain the combinatorial types of polytopes (Theorem ). All face lattices of matroid polytopes in \(\mathrm{OM }(4,8)\), \(\mathrm{OM }(3,9)\), and \(\mathrm{OM }(6,9)\) turned out to be realizable as face lattices of convex polytopes. Tables 7 and 8 summarize the results.

The number of non-isomorphic face lattices of 3-polytopes with eight vertices coincides with the number given in [30, p. 424, Table 2]. We also observe that the combinatorial type of every 5-polytope with nine vertices can be realized by a point configuration with rational coordinates, which gives the following theorem.

Theorem 4.2

The combinatorial type of every 5-polytope with nine vertices can be realized by a rational polytope.

5 Concluding Remarks

In this paper, we complete the realizability classification of \(\mathrm{OM }(4,8)\), \(\mathrm{OM }(3,9)\), and \(\mathrm{OM }(6,9)\) by developing new techniques for searching for a realization of a given oriented matroid. Surprisingly, the biquadratic final polynomial method [9], which is based on a linear programming relaxation, detects all non-realizable oriented matroids in these classes. This method also detects all non-realizable uniform oriented matroids in \(\mathrm{OM }(3,10)\) and \(\mathrm{OM }(3,11)\) [3, 4]. A known minimal non-realizable oriented matroid whose non-realizability cannot be detected by this method lies in \(\mathrm{OM }(3,14)\) [42]. It would be interesting to find a minimal example with this property.

Our classification nearly reaches the limit of today’s computational power. Near this frontier, we were able to apply our method to \(\mathrm{OM }(4,9)\) and \(\mathrm{OM }(5,9)\) in order to find hyperplane arrangements maximizing the average diameter [17] and PLCP-orientations of the 4-cube [23]. Our classification results, including certificates, are available at http://www-imai.is.s.u-tokyo.ac.jp/~hmiyata/oriented_matroids/

References

Aichholzer, O.: Order types for small point sets. http://www.ist.tugraz.at/staff/aichholzer/research/rp/triangulations/ordertypes/

Aichholzer, O., Krasser, H.: The point set order type data base: a collection of applications and results. In: Proceedings of the 13th Canadian Conference on Computational Geometry (CCCG 2001), pp. 17–20. University of Waterloo, Waterloo (2001)

Aichholzer, O., Krasser, H.: Abstract order type extension and new results on the rectilinear crossing number. Comput. Geom. Theory Appl. 36, 2–15 (2006)

Aichholzer, O., Aurenhammer, F., Krasser, H.: Enumerating order types for small point sets with applications. Order 19, 265–281 (2002)

Altshuler, A., Steinberg, L.: The complete enumeration of the \(4\)-polytopes and \(3\)-spheres with eight vertices. Pac. J. Math. 117, 1–16 (1985)

Altshuler, A., Bokowski, J., Steinberg, L.: The classification of simplicial 3-spheres with nine vertices into polytopes and nonpolytopes. Discrete Math. 31, 115–124 (1980)

Billera, L.J., Lee, C.W.: A proof of the sufficiency of McMullen’s conditions for \(f\)-vectors of simplicial convex polytopes. J. Comb. Theory A 31, 237–255 (1981)

Björner, A., Las Vergnas, M., Sturmfels, B., White, N., Ziegler, G.: Oriented Matroids. Cambridge University Press, Cambridge (1999)

Bokowski, J., Richter, J.: On the finding of final polynomials. Eur. J. Comb. 11, 21–34 (1990)

Bokowski, J., Richter-Gebert, J.: On the classification of non-realizable oriented matroids, Preprint Nr. 1345. Technische Hochschule, Darmstadt (1990)

Bokowski, J., Sturmfels, B.: On the coordinatization of oriented matroids. Discrete Comput. Geom. 1, 293–306 (1986)

Bokowski, J., Richter, J., Sturmfels, B.: Nonrealizability proofs in computational geometry. Discrete Comput. Geom. 5, 333–350 (1990)

Canham, R.J.: Arrangements of hyperplanes in projective and euclidean spaces. Ph.D. Thesis, University of East Anglia, Norwich (1971)

Collins, G.E.: Quantifier elimination for real closed fields by cylindrical algebraic decomposition. Lecture Notes in Computer Science, vol. 33, pp. 515–532. Springer, Berlin (1975)

Cox, D., Little, J., O’Shea, D.: Ideals, varieties, and algorithms. Springer, Berlin (1992)

da Silva, I.P.F., Fukuda, K.: Isolating points by lines in the plane. J. Geom. 62(1–2), 48–65 (1998)

Deza, A., Miyata, H., Moriyama, S., Xie, F.: Hyperplane arrangements with large average diameter: a computational approach. Adv. Stud. Pure Math. 62, 59–74 (2012)

Edmonds, J., Fukuda, K.: Oriented matroid programming. Ph.D. Thesis, University of Waterloo, Waterloo (1982)

Finschi, L.: Homepage of oriented matroids. http://www.om.math.ethz.ch/

Finschi, L., Fukuda, K.: Generation of oriented matroids—a graph theoretical approach. Discrete Comput. Geom. 27, 117–136 (2002)

Finschi, L., Fukuda, K.: Combinatorial generation of small point configurations and hyperplane arrangements. In: Discrete and Computational Geometry: The Goodman–Pollack Festschrift, Algorithms and Combinatorics, vol. 25, pp. 425–440. Springer, Berlin (2003)

Folkman, J., Lawrence, J.: Oriented matroids. J. Comb. Theory B 25, 199–236 (1978)

Fukuda, K., Klaus, L., Miyata, H.: Work in progress

Fukuda, K., Moriyama, S., Okamoto, Y.: The Holt–Klee condition for oriented matroids. Eur. J. Comb. 30, 1854–1867 (2009)

Fusy, É.: Counting \(d\)-polytopes with \(d+3\) vertices. Electr. J. Comb. 13, R23 (2006)

Goodman, J.E., Pollack, R.: On the combinatorial classification of nondegenerate configurations in the plane. J. Comb. Theory A 29, 220–235 (1980)

Goodman, J.E., Pollack, R.: Proof of Grünbaum’s conjecture on the stretchability of certain arrangements of pseudolines. J. Comb. Theory A 29, 385–390 (1980)

Gonzalez-Sprinberg, G., Laffaille, G.: Sur les arrangements simples de huit droites dans \(\mathbb{RP}^2\). C. R. Acad. Sci. Paris Sér. I Math. 309, 341–344 (1989)

Grünbaum, B.: Arrangements and Spreads. American Mathematical Society, New York (1972)

Grünbaum, B.: Convex Polytopes, 2nd edn. Springer, Berlin (2003)

Grünbaum, B., Sreedharan, V.P.: An enumeration of simplicial 4-polytopes with 8 vertices. J. Comb. Theory 2, 437–465 (1967)

Halsey, E.: Zonotopal complexes on the d-cube. Ph.D. Dissertation, University of Washington, Seattle (1971)

Lloyd, K.: The number of \(d\)-polytopes with (\(d+3\)) vertices. Mathematika 17, 120–132 (1970)

Lutz, F.H.: Enumeration and random realization of triangulated surfaces. In: Bobenko, A.I., Schröder, P., Sullivan, J.M., Ziegler, G.M. (eds.) Discrete Differential Geometry. Oberwolfach Seminars, vol. 38, pp. 235–253. Birkhäuser, Basel (2008)

Mandel, A.: Topology of oriented matroids. Ph.D. Thesis, University of Waterloo, Waterloo (1982)

Miyata, H., Moriyama, S., Imai, H.: Deciding non-realizability of oriented matroids by semidefinite programming. Pac. J. Optim. 5, 211–224 (2009)

Mnëv, M.N.: The universality theorems on the classification problem of configuration varieties and convex polytopes varieties, Topology and Geometry. Lecture Notes in Mathematics, vol. 1346, pp. 527–544. Springer, Heidelberg (1988)

Nakayama, H.: Methods for realizations of oriented matroids and characteristic oriented matroids. Ph.D. Thesis, The University of Tokyo, Tokyo (2007)

Nakayama, H., Moriyama, S., Fukuda, K., Okamoto, Y.: Comparing the strengths of the non-realizability certificates for oriented matroids. In: Proceedings of 4th Japanese–Hungarian Symposium on Discrete Mathematics and Its Applications, pp. 243–249 (2005)

Nakayama, H., Moriyama, S., Fukuda, K.: Realizations of non-uniform oriented matroids using generalized mutation graphs. In: Proceedings of 5th Hungarian–Japanese Symposium on Discrete Mathematics and Its Applications, Sendai, pp. 242–251 (2007)

Richter, J.: Kombinatorische Realisierbarkeitskriterien für orientierte Matroide. Master’s Thesis, Technische Hochschule, Darmstadt, 1988. Published in Mitteilungen Mathem. Seminar Giessen, Heft 194, Giessen (1989)

Richter-Gebert, J.: Realization spaces of polytopes. Lecture Notes in Mathematics, vol. 1643. Springer, Berlin (1996)

Richter-Gebert, J.: Two interesting oriented matroids. Doc. Math. 1, 137–148 (1996)

Richter, J., Sturmfels, B.: On the topology and geometric construction of oriented matroids and convex polytopes. Trans. Am. Math. Soc. 325, 389–412 (1991)

Roudneff, J.-P., Sturmfels, B.: Simplicial cells in arrangements and mutations of oriented matroids. Geom. Dedicata 27, 153–170 (1988)

Shor, P.W.: Stretchability of pseudolines is NP-hard. In: Gritzmann, P., Sturmfels, B. (eds.) Applied Geometry and Discrete Mathematics: The Victor Klee Festschrift. DIMACS Series in Discrete Mathematics and Theoretical Computer Science, vol. 4, pp. 531–554. American Mathematical Society, Providence (1991)

Stanley, R.P.: The number of faces of simplicial convex polytopes. Adv. Math. 35, 236–238 (1980)

Steinitz, E.: Über die Eulerschen Polyederrelationen. Arch. Math. Phys. 11, 86–88 (1906)

Steinitz, E.: Polyeder und Raumeinteilungen. Encyclopädie der mathematischen Wissenschaften, Band 3 (Geometrie), pp. 1–139 (1922)

Steinitz, E.: Vorlesungen über die Theorie der Polyeder. Springer, Berlin (1934)

Ziegler, G.M.: Lectures on Polytopes. Springer, Berlin (1995)

Acknowledgments

The authors would like to thank Dr. Hiroki Nakayama for providing his programs. The computations throughout the paper were done on a cluster server of the ERATO-SORST Quantum Computation and Information Project, Japan Science and Technology Agency. This research was partially supported by the Swiss National Science Foundation Project No. 200021-124752/1 (Komei Fukuda); by a Grant-in-Aid for Scientific Research from the Japan Society for the Promotion of Science and a Grant-in-Aid for JSPS Fellows (Hiroyuki Miyata); and by a Grant-in-Aid for Scientific Research from the Ministry of Education, Science and Culture, Japan (Sonoko Moriyama).


Cite this article

Fukuda, K., Miyata, H. & Moriyama, S. Complete Enumeration of Small Realizable Oriented Matroids. Discrete Comput Geom 49, 359–381 (2013). https://doi.org/10.1007/s00454-012-9470-0
