Abstract

We prove that a random distribution in two dimensions which is conformally invariant and satisfies a natural domain Markov property is a multiple of the Gaussian free field. This result holds subject only to a fourth moment assumption.


1 Introduction

1.1 Setup and main result

The Gaussian free field (abbreviated GFF) has emerged in recent years as an object of central importance in probability theory. In two dimensions in particular, the GFF is conjectured (and in many cases proved) to arise as a universal scaling limit from a broad range of models, including the Ginzburg–Landau \(\nabla \varphi \) interface model [19, 27, 29], the height function associated to planar domino tilings and the dimer model [3, 4, 12, 13, 20, 23], and the characteristic polynomial of random matrices [17, 18, 32]. It also plays a crucial role in the mathematically rigorous description of Liouville quantum gravity; see in particular [10, 22] and [14] for some recent major developments (we refer to [30] for the original physics paper). Note that the interpretations of Liouville quantum gravity in the references above are slightly different from one another, and are in fact more closely related to the GFF with Neumann boundary conditions than the GFF with Dirichlet boundary conditions treated in this paper.

As a canonical random distribution enjoying conformal invariance and a domain Markov property, the GFF is also intimately linked to the Schramm–Loewner Evolution (SLE). In particular SLE\(_4\) and related curves can be viewed as level lines of the GFF [11, 31, 35, 36]. In fact, this connection played an important role in the approach to Liouville quantum gravity developed in [14, 15, 26, 38] (see also [5] for an introduction).

It is natural to seek an axiomatic characterisation of the GFF which could explain this ubiquity. In the present article we propose one such characterisation, in the spirit of Schramm’s celebrated characterisation of SLE as the unique family of conformally invariant laws on random curves satisfying a domain Markov property [34].

As the GFF is a random distribution (and not a random function) we will need to pay attention to the measure-theoretic formulation of the problem. We start by introducing some notations. Let D be a simply connected domain and let \(C_c^\infty (D)\) be the space of smooth functions that are compactly supported in D (the space of so-called test functions). We equip it with the topology such that \(\phi _n\rightarrow 0\) if and only if there is some \(M\Subset D\) containing the supports of all the \(\phi _n\), and all the derivatives of \(\phi _n\) converge uniformly to 0. (Here and in the rest of the paper, the notation \(M\Subset D\) means that the closure of M is compact and contained in the open set D.) For any two test functions \(\phi _1,\phi _2\), we define

$$\begin{aligned} (\phi _1,\phi _2) := \int _D \phi _1(z)\phi _2(z)\, dz, \end{aligned}$$

and for any test function \(\phi \) we call \((\phi ,1)\) the mass of \(\phi \).

In order to avoid discussing random variables taking values in the space of distributions in D we take the simpler and more general point of view that we have a stochastic process \(h^D = (h^D_\phi )_{\phi \in C^\infty _c(D) }\) indexed by test functions and which is linear in \(\phi \): that is, for any \(\lambda , \mu \in {\mathbb {R}}\) and \(\phi , \phi '\in C_c^\infty (D)\),

$$\begin{aligned} h^D_{\lambda \phi +\mu \phi '} = \lambda h^D_\phi +\mu h^D_{\phi '} \end{aligned}$$

almost surely. We then write, with an abuse of notation, \((h^D, \phi ) = h^D_\phi \) for \(\phi \in C_c^\infty (D)\). We call \(\Gamma ^D \) the law of the stochastic process \((h^D_\phi )_{\phi \in C_c^\infty (D)}\). Thus \(\Gamma ^D\) is a probability distribution on \({\mathbb {R}}^{C^\infty _c(D)}\) equipped with the product topology; by Kolmogorov’s extension theorem \(\Gamma ^D\) is characterised by its consistent finite-dimensional distributions, i.e., by the joint law of \((h^D, \phi _1), \ldots , (h^D, \phi _k)\) for any \(k \geqslant 1\) and any \(\phi _1, \ldots , \phi _k \in C_c^\infty (D)\).

Suppose that \(\Gamma :=\{ \Gamma ^D\}_{D \subset {\mathbb {C}}}\) is a collection of such measures, where \(D\subset {\mathbb {C}}\) ranges over all simply connected proper domains and \(\Gamma ^D\) is as above for each simply connected proper domain D. We will always denote by \({\mathbb {D}}\) the unit disc of the complex plane. We now state our assumptions:

Assumptions 1.1

Let \(D \subset {\mathbb {C}}\) be a proper simply connected open domain, and let \(h^D\) be a sample from \(\Gamma ^D\). We assume the following:

- (i)

(Moments, stochastic continuity) For every \(\phi \in C_c^\infty (D)\),

$$\begin{aligned} {\mathbb {E}}[(h^D,\phi )]=0 \;\; \text {and} \;\; {\mathbb {E}}[(h^D,\phi )^4]<\infty . \end{aligned}$$

Moreover, there exists a continuous bilinear form \(K_2^D\) on \(C_c^\infty (D)\times C_c^\infty (D)\) such that

$$\begin{aligned} {\mathbb {E}}[(h^D,\phi )(h^D,\phi ')]=K_2^D(\phi ,\phi '), \quad \quad \phi , \phi ' \in C_c^\infty (D). \end{aligned}$$

- (ii)

(Dirichlet boundary conditions) Suppose that \((f_n)_{n \geqslant 1}\) is a sequence of nonnegative, radially symmetric functions in \(C_c^\infty ({\mathbb {D}})\), with uniformly bounded mass and such that for every \(M \Subset {\mathbb {D}}\), \({{\,\mathrm{Support}\,}}(f_n) \cap M = \varnothing \) for all large enough n. Then we have \({{\,\mathrm{Var}\,}}((h^{\mathbb {D}},f_n)) \rightarrow 0 \text { as } n \rightarrow \infty .\)

- (iii)

(Conformal invariance) Let \(f: D \rightarrow D'\) be a bijective conformal map. Then \( \Gamma ^{D} = \Gamma ^{D'}\circ f, \) where \(\Gamma ^{D'} \circ f\) is the law of the stochastic process \((h^{D'}, |(f^{-1})'|^2 (\phi \circ f^{-1}))_{\phi \in C_c^\infty (D)}\).

- (iv)

(Domain Markov property) Suppose \(D' \subset D\) is a simply connected Jordan domain. Then we can decompose \( h^D= h^{D'}_D+\varphi _D^{D'} \) where:

\( h^{D'}_D\) is independent of \(\varphi _D^{D'}\);

\((\varphi _D^{D'},\phi )_{\phi \in C_c^\infty (D)}\) is a stochastic process indexed by \(C_c^\infty (D)\) that is a.s. linear in \(\phi \) and such that \((\varphi _D^{D'},\phi )_{\phi \in C_c^\infty (D')}\) a.s. corresponds to integrating against a harmonic function in \(D'\);

\(((h^{D'}_D,\phi ))_{\phi \in C_c^\infty (D)}\) is a stochastic process indexed by \(C_c^\infty (D)\), such that \((h^{D'}_D,\phi )_{\phi \in C_c^\infty (D')}\) has law \(\Gamma ^{D'}\) and \((h^{D'}_D,\phi )=0\) a.s. for any \(\phi \) with \({{\,\mathrm{Support}\,}}(\phi )\subset D{\setminus } D'\).

Remark 1.2

Note that in the domain Markov property, we have (by linearity) that if \(D'\subset D\) is simply connected, and \(\phi _1=\phi _2\) on \(D'\), then \((h^{D'}_D,\phi _1)=(h^{D'}_D,\phi _2)\) almost surely.

When we discuss the domain Markov property later in the paper, we will often simply say that

These statements should be interpreted as described rigorously in Assumptions 1.1.

Remark 1.3

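The finite fourth moment condition implies, in particular, that there exists a quadrilinear form \(K_4^D\) on \((C_c^\infty (D))^{\otimes 4}\) such that for every \(\phi _1,\ldots , \phi _4 \in C_c^\infty (D)\),

$$\begin{aligned} {\mathbb {E}}\Big [\prod _{i=1}^4 (h^D,\phi _i)\Big ] = K_4^D(\phi _1,\ldots ,\phi _4). \end{aligned}$$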

Lemma 1.4

The assumption of zero boundary conditions implies that the domain Markov decomposition from (iv) is unique.

Proof

Suppose that we have two such decompositions:

$$\begin{aligned} h^D = h^{D'}_D+\varphi _D^{D'} = {\tilde{h}}^{D'}_D+{\tilde{\varphi }}_D^{D'}. \qquad \text {(1.2)} \end{aligned}$$

Pick any \(z\in D'\) and let \(F:D'\rightarrow {\mathbb {D}}\) be a conformal map that sends z to 0. Further, let \((f_n)_{n\geqslant 1}\) be a sequence of nonnegative radially symmetric, mass one functions in \(C_c^\infty ({\mathbb {D}})\), that are eventually supported outside any \(K\Subset {\mathbb {D}}\), and set \(g_n := |F'|^2 (f_n \circ F)\) for each n. Then the assumption of Dirichlet boundary conditions plus conformal invariance implies that \((h^{D'}_D-{\tilde{h}}^{D'}_D,g_n )\rightarrow 0\) in probability as \(n\rightarrow \infty \). In turn, by (1.2), this means that \((\varphi _D^{D'}-{\tilde{\varphi }}_D^{D'},g_n) \rightarrow 0\) in probability.

However, since \((\varphi _D^{D'} - {\tilde{\varphi }}_D^{D'})\) restricted to \(D'\) is a.s. equal to a harmonic function, and since the \(f_n\)’s are radially symmetric with mass one, we have

$$\begin{aligned} (\varphi _D^{D'}-{\tilde{\varphi }}_D^{D'},g_n) = \varphi _D^{D'}(z)-{\tilde{\varphi }}_D^{D'}(z) \end{aligned}$$

for every n. This implies that for each fixed \(z\in D'\), \(\varphi _D^{D'}(z)={\tilde{\varphi }}_D^{D'}(z)\) a.s. Applying this to a countable dense subset of \(z\in D'\), together with the fact that \(h^D=\varphi _D^{D'}={\tilde{\varphi }}_D^{D'}\) a.s. outside of \(D'\), see Remark 1.2, then implies that \(\varphi _D^{D'}\) and \({{\tilde{\varphi }}}_D^{D'}\) are a.s. equal as stochastic processes indexed by \(C_c^\infty (D)\). \(\square \)

Definition 1.5

A mean zero Gaussian free field \(h_{{{\,\mathrm{GFF}\,}}} =h^D_{{{\,\mathrm{GFF}\,}}} \) with zero boundary conditions is a stochastic process indexed by test functions \((h_{{{\,\mathrm{GFF}\,}}}, \varphi )_{\varphi \in C_c^\infty (D)}\) such that:

\(h_{{{\,\mathrm{GFF}\,}}}\) is a centered Gaussian field; for any \(n\geqslant 1\) and any set of test functions \(\phi _1,\ldots , \phi _n \in C_c^\infty (D)\), \(((h_{{{\,\mathrm{GFF}\,}}},\phi _1),\ldots , (h_{{{\,\mathrm{GFF}\,}}},\phi _n))\) is a Gaussian random vector with mean \({{\mathbf {0}}}\);

for any two test functions \(\phi _1,\phi _2 \in C_c^\infty (D)\),

$$\begin{aligned} {\mathbb {E}}[(h_{{{\,\mathrm{GFF}\,}}},\phi _1) (h_{{{\,\mathrm{GFF}\,}}},\phi _2)] = \int _{D\times D} G^D(z,w) \phi _1(z)\phi _2(w)\,dz\,dw, \end{aligned}$$

where \(G^D\) is the Green’s function with Dirichlet boundary conditions on D.
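As a quick consistency check, the covariance above can be seen to be compatible with Assumption (iii). The sketch below assumes the standard fact (not proved in this section) that the Dirichlet Green’s function is conformally invariant, i.e. \(G^{D'}(f(z),f(w))=G^D(z,w)\) for a conformal bijection \(f:D\rightarrow D'\). Writing \(g=f^{-1}\) (a shorthand introduced here) and substituting \(u=f(z)\), \(v=f(w)\), so that \(du=|f'(z)|^2dz\) and \(|g'(f(z))|^2|f'(z)|^2=1\), one gets

$$\begin{aligned} & \int _{D'\times D'} G^{D'}(u,v)\, |g'(u)|^2\phi _1(g(u))\, |g'(v)|^2\phi _2(g(v))\, du\, dv \\ &\quad = \int _{D\times D} G^{D'}(f(z),f(w))\, \phi _1(z)\phi _2(w)\, dz\, dw = \int _{D\times D} G^{D}(z,w)\, \phi _1(z)\phi _2(w)\, dz\, dw . \end{aligned}$$

The first expression is the covariance of \((h^{D'}_{{{\,\mathrm{GFF}\,}}}, |g'|^2 (\phi _1\circ g))\) and \((h^{D'}_{{{\,\mathrm{GFF}\,}}}, |g'|^2 (\phi _2\circ g))\), and the last is that of \((h^{D}_{{{\,\mathrm{GFF}\,}}},\phi _1)\) and \((h^{D}_{{{\,\mathrm{GFF}\,}}},\phi _2)\). Since a centered Gaussian process is determined by its covariance, this is the transformation rule required in (iii).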

It is well known and easy to check (see e.g. [5]) that Assumptions 1.1 are satisfied for the collection of laws \(\{\Gamma ^D_{{{\,\mathrm{GFF}\,}}}; D \subset {\mathbb {C}}\}\) obtained by considering the GFF, \(h^D_{{{\,\mathrm{GFF}\,}}}\), in proper simply connected domains. More generally any multiple of the GFF \(\alpha h^D_{{{\,\mathrm{GFF}\,}}}\) (with \(\alpha \in {\mathbb {R}}\)) will verify these assumptions. (In fact, the boundary conditions satisfied by the GFF are much stronger than what we assume: it is not just the average value of the GFF on the unit circle which is zero, but, e.g., the average value on any open arc of the unit circle.) The main result of this paper is the following converse:

Theorem 1.6

Suppose the collection of laws \(\{\Gamma ^D\}_{D\subset {\mathbb {C}}}\) satisfies Assumptions 1.1 and let \(h^D\) be a sample from \(\Gamma ^D\). Then there exists \(\alpha \in {\mathbb {R}}\) such that \(h^D = \alpha h_{{{\,\mathrm{GFF}\,}}}^D\) in law, as stochastic processes.

Remark 1.7

Given the close relationship between the GFF and SLE, it is natural to wonder if the characterisation Theorem 1.6 could be deduced from Schramm’s celebrated characterisation (and discovery) of SLE curves [34]. Perhaps if one is also given an appropriately defined notion of local sets in addition to the field (see [2, 36]), one could identify these local sets as SLE type curves with some unknown parameter. However, even this would not be sufficient to identify the field as the GFF. Indeed, note that the CLE\(_\kappa \) nesting fields [28] provide examples of conformally invariant random fields coupled with SLE-type local sets, yet are only believed to be Gaussian in the case \(\kappa = 4\).

1.2 Role of our assumptions

We take a moment to discuss the role of our assumptions. The fundamental assumptions of Theorem 1.6 are (ii), (iii) and (iv) which cannot be dispensed with. To see that they are necessary, the reader might consider the following two examples:

In both these examples, conformal invariance (or at least conformal covariance) and even a form of domain Markov property (but not exactly the one formulated here) hold; yet neither of these is the GFF (except in the second case when \(\kappa = 4\)). These two examples are the kind of possible counterexamples to keep in mind when considering Theorem 1.6 or possible variants.

The role of Assumption (i) however is more technical and is instead the result of a choice and/or limitations of our proof.

We do not know whether a fourth moment assumption is necessary. We use this assumption to rule out, via Kolmogorov estimates, the possibility of Poissonian-type jumps. To explain the problem, the reader might think of the following rough analogy: if a centered process has independent and stationary increments, it does not follow that it is Brownian motion even if it has finite second moment; for instance, \((N_t - t)_{t \geqslant 0}\), where \(N_t\) is a standard Poisson process, satisfies these assumptions. See the section on open problems for more discussion.
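To make the analogy concrete, the following short computation (a sketch using only the standard central moments of the Poisson distribution) compares the compensated Poisson process with a standard Brownian motion \(B_t\), a symbol introduced here for illustration. Both processes are centered with variance t, but their fourth moments differ:

$$\begin{aligned} {\mathbb {E}}[(N_t-t)^2] = t = {\mathbb {E}}[B_t^2], \qquad {\mathbb {E}}[(N_t-t)^4] = 3t^2+t, \qquad {\mathbb {E}}[B_t^4] = 3t^2. \end{aligned}$$

The extra term t reflects the jumps. For small t it dominates \(3t^2\), so the fourth moment of an increment over a time interval of length t is only of order t. A bound of order \(t^{1+\beta }\) with \(\beta >0\), as required by the Kolmogorov continuity criterion, is therefore unavailable for \((N_t-t)_{t\geqslant 0}\).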

Regarding the assumption of stochastic continuity, we point out that \((\phi , \phi ') \mapsto K(\phi , \phi ') = {\mathbb {E}}[ (h^D, \phi ) (h^D, \phi ')]\) is clearly a bilinear map. So the assumption we make is simply that this map is jointly continuous. Another way to rephrase this assumption is to say that \(\varphi \mapsto (h^D, \varphi )\) is continuous in \(L^2({\mathbb {P}})\) (referred to as stochastic continuity by some authors), which seems quite basic.

1.3 The one-dimensional case

In one dimension, the zero boundary GFF reduces to a Brownian bridge (see e.g. Sheffield [37]). However, even in this classical setup it seems that a characterisation of the Brownian bridge along the lines we have proposed in Theorem 1.6 was not known. Of course we need to pay some attention to the assumptions here, since it is not the case that a GFF is scale-invariant in dimension \(d\ne 2\). Instead, the Brownian bridge enjoys Brownian scaling.

Let \({\mathcal {I}}\) be the space of all closed, bounded intervals of \({\mathbb {R}}\) and assume that for each \(I\in {\mathcal {I}}\) we have a stochastic process \(X^I=(X^I(t))_{t \in I }\) indexed by the points of I. We let \(\mu ^I\) be the law of the stochastic process \((X^I(t))_{t\in I}\), so that \(\mu ^I\) is a probability distribution on \({\mathbb {R}}^{I}\) equipped with the product topology. Similarly to the two-dimensional case, by Kolmogorov’s extension theorem, \(\mu ^I\) is characterised by its consistent finite-dimensional distributions, i.e., by the joint law of \(X^I(t_1), \ldots , X^I(t_k)\) for any \(k \geqslant 1\) and any \(t_1,\ldots , t_k\in I\).

Assumptions 1.8

We make the following assumptions.

- (i)

(Tails) For each I and \(t\in I\), \({\mathbb {E}}[\log ^+|X^I(t)|]<\infty \).

- (ii)

(Stochastic continuity) For each I the process \((X^I(t))_{t\in I}\) is stochastically continuous: that is, \(\lim _{s\rightarrow t} {\mathbb {P}}(|X^I(t)-X^I(s)|>\varepsilon )=0\) for every \(\varepsilon >0\).

- (iii)

(Zero boundary condition) For each interval \(I =[a,b]\), \(X^{I}(a)=X^I(b) =0\).

- (iv)

(Domain Markov property) For each \(I' =[a,b] \subset I\), conditioned on \((X^I(t))_{t \in I \setminus I'}\), the law of \((X^I(s))_{ s \in I'}\) is the same as

$$\begin{aligned} L(s) + {{\tilde{X}}}^{I'}(s); \quad s \in I' \end{aligned}$$

where L(s) is a linear function interpolating between \(X^I(a)\) and \(X^I(b)\) and \({{\tilde{X}}}^{I'}\) is an independent copy of \(X^{I'}\).

- (v)

(Translation invariance and scaling) For any \(a\in {\mathbb {R}}, c>0\)

$$\begin{aligned} (X^{I-a}(t-a))_{t \in I} {\mathop {=}\limits ^{(d)}}(X^{I}(t))_{t \in I} \end{aligned}$$

and

$$\begin{aligned} \left( \frac{1}{\sqrt{c}}X^{cI}(ct)\right) _{t \in I} {\mathop {=}\limits ^{(d)}}(X^I(t))_{t \in I}. \end{aligned}$$

Our result in this case is as follows:

Theorem 1.9

Subject to Assumptions 1.8, a sample \(X^I\) has the law of a multiple \(\sigma \) of a Brownian bridge on the interval I, from zero to zero.
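As a sanity check on the scaling in Assumption (v), one can verify that the conclusion of Theorem 1.9 is consistent with it. The sketch below assumes the standard covariance of a Brownian bridge from zero to zero on \(I=[a,b]\), so that for \(s\leqslant t\) in I the process \(X^I\) has covariance \(\sigma ^2 (s-a)(b-t)/(b-a)\). Applying the same formula on \(cI=[ca,cb]\) gives

$$\begin{aligned} \frac{1}{c}\,{{\,\mathrm{Cov}\,}}\big (X^{cI}(cs),X^{cI}(ct)\big ) = \frac{\sigma ^2}{c}\,\frac{(cs-ca)(cb-ct)}{cb-ca} = \sigma ^2\,\frac{(s-a)(b-t)}{b-a} = {{\,\mathrm{Cov}\,}}\big (X^{I}(s),X^{I}(t)\big ). \end{aligned}$$

Both sides describe centered Gaussian processes, so equality of covariances gives the equality in law stated in (v). The same constant \(\sigma \) must be used on every interval for this to hold.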

Interestingly, the proof in this case is substantially different from the planar case, and relies on stochastic calculus arguments. The definition in Assumptions 1.8 is reminiscent of the classical notion of harness in one dimension: roughly speaking, a square integrable continuous process such that conditionally on the process outside of any interval, the process inside has an expectation which is the linear interpolation of the data outside. If such a process is defined on the entire nonnegative half-line, then Williams [39] proved that a harness is a multiple of Brownian motion plus drift; see Mansuy and Yor [25] for a survey and extensions. Theorem 1.9 may therefore be seen as a generalisation of Williams’ result to the case where the underlying domain is bounded, without assuming continuity and assuming only logarithmic tails (but assuming more in terms of the domain Markov property). To our knowledge, this result has not been previously considered in the literature.

1.4 Outline

We now summarise the structure of the proof of the main result (Theorem 1.6) and explain the organisation of the paper.

Our first goal is to make sense of circle averages of the field, which exist as a result of the domain Markov property, conformal invariance and zero boundary condition (Sect. 2.1). These circle averages can then fairly easily be seen to give rise to a two-point function \({{\tilde{K}}}_2(z_1, z_2)\) (Sect. 2.2). Intuitively, the bilinear form \(K_2\) in the assumption is simply the integral operator associated with this two-point function, but we do not need to establish this immediately (instead, it will follow from some estimates obtained later; see Lemma 2.18). In Sect. 2.5 we establish a priori logarithmic bounds on the two-point (and four-point) functions which are needed to control errors later on. The Markov property and conformal invariance are easily seen to imply that the two point function is harmonic off the diagonal (Sect. 2.4). This point of view culminates in Sect. 2.6, where it is shown that the two point function is necessarily a multiple of the Green’s function. (Intuitively, we rely on the fact that the Green’s function is characterised by harmonicity and logarithmic divergence on the diagonal, though our proof exploits an essentially equivalent but slightly shorter route). At this point we still have not made use of our fourth moment assumption.

To conclude it remains to show that the field is Gaussian, in the sense that for any test function \(\varphi \), the random variable \((h, \varphi )\) is centered Gaussian. This is the subject of Sect. 3 and is the most delicate and interesting part of the argument. The Gaussianity comes from an application of Lévy’s characterisation of Brownian motion, or more precisely, from the Dubins–Schwarz theorem. For this we need a certain process to be a continuous martingale, and it is only here that our fourth moment assumption is required: we use it in combination with a Kolmogorov continuity criterion and a deformation argument exploiting the form of a well-chosen family of conformal maps to prove continuity. The arguments are combined in Sect. 4 to conclude the proof of Theorem 1.6. Finally, the last section (Sect. 5) gives a proof in the one-dimensional case (Theorem 1.9) using stochastic calculus techniques. The paper concludes with a discussion of open problems in Sect. 6.

2 Two-point and four-point functions

To begin with, we make sense of circle averages of our field. These will play a key role in the proof of Theorem 1.6, as we will be able to identify the law of the circle average process around a point with a one-dimensional Brownian motion.

In fact, we will define something more general. Let \(\gamma \) be the boundary of a Jordan domain \(D'\subseteq D\). We will, given \(z\in D'\), define the harmonic average (as seen from z) of h on \(\gamma \) and will denote this average by \((h^D,\rho _z^\gamma )\). Note that since h can only be tested a priori against smooth functions, and therefore not necessarily against the harmonic measure on \(\gamma \), this is a slight abuse of notation. We will define the average in two equivalent ways: through an approximation procedure, and using the domain Markov property of the field.

2.1 Circle average

Let D be a simply connected domain such that \({\mathbb {D}}\subseteq D\) where \({\mathbb {D}}\) is the unit disc. We will first try to define \((h^{D}, \rho _0^{\partial {\mathbb {D}}} )\) as described above. To this end, let \({{\tilde{\psi }}}_0^{\delta }\) be a smooth radially symmetric function taking values in [0, 1], that is equal to 1 on \(A:=\{z:1-\delta \leqslant |z|\leqslant 1-\delta /2\}\) and is equal to 0 outside of the \(\delta /10\) neighbourhood of the annulus A. Let \(\psi _0^{\delta }= {\tilde{\psi }}_0^\delta /\int {\tilde{\psi }}_0^\delta \). Then for all \(\delta \in [0,1]\), since \(\psi _0^\delta \in C_c^\infty (D)\), the quantity \((h^D,\psi _0^{\delta })\) is well defined. We will take a limit as \(\delta \rightarrow 0\) to define the circle average (the precise definition of \(\psi _0^{\delta }\) does not matter, as will become clear from the proof).

Lemma 2.1

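The limit

$$\begin{aligned} (h^D,\rho _0^{\partial {\mathbb {D}}}) := \lim _{\delta \rightarrow 0} (h^D,\psi _0^{\delta }) \end{aligned}$$

exists in probability and in \(L^2({\mathbb {P}})\). Moreover,

$$\begin{aligned} (h^D,\rho _0^{\partial {\mathbb {D}}}) = \varphi _D^{{\mathbb {D}}}(0) \quad \text {a.s.,} \end{aligned}$$

where \(h^D=h_D^{{\mathbb {D}}}+\varphi _D^{{\mathbb {D}}}\) is the domain Markov decomposition of \(h^D\) in \({\mathbb {D}}\) described in Assumptions 1.1.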

Proof

We write \((h^D,\psi _0^{\delta })=(h_D^{{\mathbb {D}}},\psi _0^{\delta })+(\varphi _D^{{\mathbb {D}}}, \psi _0^{\delta })\) using the domain Markov decomposition. Note that because \(\psi _0^\delta \) is radially symmetric with mass 1, and is supported strictly inside \({\mathbb {D}}\) for each \(\delta \), by harmonicity \((\varphi _D^{{\mathbb {D}}},\psi _0^{\delta })\) must be constant and equal to \(\varphi _D^{\mathbb {D}}(0)\). Thus, we need only show that

$$\begin{aligned} {{\,\mathrm{Var}\,}}((h_D^{{\mathbb {D}}},\psi _0^{\delta })) \rightarrow 0 \quad \text {as } \delta \rightarrow 0. \end{aligned}$$

However this follows from the fact that \(h^{{\mathbb {D}}}_D\overset{(d)}{=} h^{\mathbb {D}}\) has zero boundary conditions (see the definition in Assumptions 1.1), since for any \(M\Subset {\mathbb {D}}\), \(\psi _0^\delta \) is supported outside of M for small enough \(\delta \) and is radially symmetric. Note that the rate of convergence of the variance to 0 is uniform in the choice of domain D. \(\square \)
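The harmonicity step in the proof above rests on the mean value property. As a brief sketch, write u for a function harmonic in \({\mathbb {D}}\) (playing the role of \(\varphi _D^{{\mathbb {D}}}\) restricted to \({\mathbb {D}}\)) and \(\psi \) for a radially symmetric function of mass 1 that is compactly supported in \({\mathbb {D}}\), with radial profile \({\bar{\psi }}(r)=\psi (re^{i\theta })\); the symbols u and \({\bar{\psi }}\) are introduced only for this computation. In polar coordinates,

$$\begin{aligned} \int _{{\mathbb {D}}} u(w)\psi (w)\, dw = \int _0^1 {\bar{\psi }}(r) \left( \int _0^{2\pi } u(re^{i\theta })\, d\theta \right) r\, dr = u(0) \int _0^1 2\pi r\, {\bar{\psi }}(r)\, dr = u(0). \end{aligned}$$

The second equality is the mean value property of u on each circle of radius r, and the last one uses that \(\psi \) has mass 1. In particular the value does not depend on the profile \({\bar{\psi }}\), which is why the precise choice of \(\psi _0^\delta \) is immaterial for the harmonic part.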

Remark 2.2

We could have simply defined \((h^D,\rho _0^{\partial {\mathbb {D}}} ):=\varphi _D^{{\mathbb {D}}}(0)\) as above. The reason we use the definition in terms of limits is so that later we are able to estimate its moments.

2.2 Harmonic average

Now, let \(D'\subset D\) be a Jordan domain bounded by a curve \(\gamma \). Given \(z\in D'\), also let \(f:D'\rightarrow {\mathbb {D}}\) be the unique conformal map sending \(z\mapsto 0\) and with \(f'(z)>0\). We define

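$$\begin{aligned} {\hat{\psi }}_z^\delta (w) := |f'(w)|^2\, \psi _0^\delta (f(w)), \quad w\in D', \end{aligned}$$

and then set

$$\begin{aligned} (h^D,\rho _z^{\gamma }) := \lim _{\delta \rightarrow 0} (h^D,{\hat{\psi }}_z^\delta ), \end{aligned}$$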

which we know exists in \(L^2\) and in probability by the same argument as in the proof of Lemma 2.1 (note that by conformal invariance, \((h_D^{D'}, {\hat{\psi }}_z^\delta )\) is equal to \((h^{\mathbb {D}}, \psi _0^\delta )\) in law if \(h^D=h_D^{D'}+\varphi _D^{D'}\) is the domain Markov decomposition of \(h^D\) in \(D'\)). Again, we could have simply defined the harmonic average to be equal to \(\varphi _D^{D'}(z)\).

It is clear that the harmonic average is always a random variable with mean 0. We record here another useful property:

Lemma 2.3

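Suppose \(D''\subset D'\subset D\) are Jordan domains and \(z\in D''\). Then

$$\begin{aligned} {{\,\mathrm{Var}\,}}((h^D,\rho _z^{\partial D''})) \geqslant {{\,\mathrm{Var}\,}}((h^D,\rho _z^{\partial D'})). \end{aligned}$$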

Proof

Let \(h^D=h_D^{D'}+\varphi _D^{D'}\) according to the domain Markov decomposition of \(h^D\) in \(D'\). Then we have that \((h^D,\rho _z^{\partial D'})=\varphi _D^{D'}(z)\). We can also decompose \(h_D^{D'}\) inside \(D''\) as \(h_D^{D'}=h_{D'}^{D''}+\varphi _{D'}^{D''}\), which means (by uniqueness of the decomposition) that \((h^D,\rho _z^{\partial D''})=\varphi _D^{D'}(z)+\varphi _{D'}^{D''}(z)\). By independence of \(\varphi _D^{D'}(z)\) and \(\varphi _{D'}^{D''}(z)\), and the fact that the harmonic average has mean 0, the result follows. \(\square \)
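The final step of this proof can be spelled out as follows. This is a sketch writing \(X=\varphi _D^{D'}(z)\) and \(Y=\varphi _{D'}^{D''}(z)\), a shorthand introduced here. Since X and Y are independent and centered with finite variance,

$$\begin{aligned} {{\,\mathrm{Var}\,}}((h^D,\rho _z^{\partial D''})) = {{\,\mathrm{Var}\,}}(X+Y) = {{\,\mathrm{Var}\,}}(X)+{{\,\mathrm{Var}\,}}(Y) \geqslant {{\,\mathrm{Var}\,}}(X) = {{\,\mathrm{Var}\,}}((h^D,\rho _z^{\partial D'})). \end{aligned}$$

In words, the variance of the harmonic average seen from z is monotone: shrinking the Jordan domain around z can only increase it.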

Later on in the proof we will also use some alternative approximations to \((h^D,\rho _z^\gamma )\), as different approximations will be useful in different contexts.

2.3 Circle average field

Now consider a general simply connected domain D. By the above construction, we can define

$$\begin{aligned} h^D_\varepsilon (z) := (h^D,\rho _z^{\partial B_z(\varepsilon )}) \end{aligned}$$

for all \(z \in D\) and all \(\varepsilon \) small enough, depending on z. We call this the circle average field. It will be important to know that this is a good approximation to our field when \(\varepsilon \) is small. To show this, we will first need the following lemma.

Lemma 2.4

For \(z_1\ne z_2\) distinct points in D,

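$$\begin{aligned} {\tilde{K}}_2^D(z_1,z_2) := \lim _{\varepsilon \rightarrow 0} {\mathbb {E}}\big [(h^D,\rho _{z_1}^{\partial B_{z_1}(\varepsilon )})(h^D,\rho _{z_2}^{\partial B_{z_2}(\varepsilon )})\big ] \end{aligned}$$

exists. Moreover, for any \(D_1,D_2 \subset D\) Jordan subdomains such that \(D_1\cap D_2=\varnothing \) and \(z_1\in D_1, z_2\in D_2\), we have

$$\begin{aligned} {\tilde{K}}_2^D(z_1,z_2) = {\mathbb {E}}\big [(h^D,\rho _{z_1}^{\partial D_1})(h^D,\rho _{z_2}^{\partial D_2})\big ]. \end{aligned}$$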

Proof

Let \(D_1,D_2\) be as above and write, by the domain Markov property,

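$$\begin{aligned} h^D = h^{D_1}_D+\varphi ^{D_1}_D = h^{D_2}_D+\varphi ^{D_2}_D, \end{aligned}$$

so that for \( \varphi =\varphi ^{D_1}_D-h^{D_2}_D=\varphi ^{D_2}_D-h^{D_1}_D\) we have

$$\begin{aligned} h^D = h^{D_1}_D+h^{D_2}_D+\varphi . \qquad \text {(2.1)} \end{aligned}$$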

By definition of the domain Markov property, we can see that \((\varphi ,\phi )_{\phi \in C_c^\infty (D)}\) is a stochastic process that a.s. corresponds to a harmonic function when restricted to \(\phi \) in \( C_c^\infty (D_1)\) or \(C_c^\infty (D_2)\): in fact, we have that \(\varphi =\varphi _D^{D_1}\) in \(D_1\) and \(\varphi =\varphi _D^{D_2}\) in \(D_2\). Note that \(h^{D_2}_D\) is measurable with respect to \(\varphi _D^{D_1}\) by Remark 1.2 (and conversely with the indices 1 and 2 switched), so the three terms in (2.1) are pairwise independent.

Now let \(\varepsilon <\min \{|z_1-z_2|/2, d(z_1,\partial D), d(z_2,\partial D)\}\). Choosing \(D\supset D_1\supset B_{z_1}(\varepsilon )\) and \(D\supset D_2\supset B_{z_2}(\varepsilon )\), this means (also using uniqueness of the domain Markov decomposition) that \(\varphi _D^{B_{z_i}(\varepsilon )}=\varphi +\varphi _i\) for \(i=1,2\) where \(\varphi ,\varphi _1,\varphi _2\) are pairwise independent and centered (indeed, \(\varphi _1,\varphi _2\) are measurable with respect to \(h^{D_1}_D, h^{D_2}_D\) respectively). This implies that

$$\begin{aligned} {\mathbb {E}}\big [\varphi _D^{B_{z_1}(\varepsilon )}(z_1)\,\varphi _D^{B_{z_2}(\varepsilon )}(z_2)\big ] = {\mathbb {E}}[\varphi (z_1)\varphi (z_2)]. \end{aligned}$$

Hence the limit as \(\varepsilon \rightarrow 0\) exists, and we also see that it is equal to \({\mathbb {E}}[\varphi _D^{D_1}(z_1)\varphi _D^{D_2}(z_2)]\) for any \(D_1,D_2\) as in the statement of the Lemma. \(\square \)

Similarly, we have the following:

Lemma 2.5

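Let \(z_1,z_2,z_3,z_4\) be pairwise distinct points in D. Then

$$\begin{aligned} {\tilde{K}}_4^D(z_1,\ldots ,z_4) := \lim _{\varepsilon \rightarrow 0} {\mathbb {E}}\Big [\prod _{i=1}^4 (h^D,\rho _{z_i}^{\partial B_{z_i}(\varepsilon )})\Big ] \end{aligned}$$

exists. Moreover, for any \(D_1,D_2,D_3,D_4 \subset D\) Jordan subdomains such that \(D_i\cap D_j=\varnothing \) for every \(1\leqslant i\ne j\leqslant 4\) and \(z_i\in D_i\) for \(1\leqslant i \leqslant 4\), we have

$$\begin{aligned} {\tilde{K}}_4^D(z_1,\ldots ,z_4) = {\mathbb {E}}\Big [\prod _{i=1}^4 (h^D,\rho _{z_i}^{\partial D_i})\Big ]. \end{aligned}$$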

It will also be convenient in what follows to have an alternative, “hands-on” way of approximating \({\tilde{K}}_2^D\) and \({\tilde{K}}_4^D\), which corresponds to directly testing the field against smooth test functions (rather than using the slightly abstract notion of circle averages).

Definition 2.6

(Mollified field) Let \(\phi \) be a smooth radially symmetric function, supported in the unit disc, and with total mass 1. Let \(\phi ^z_\varepsilon (\cdot )=\varepsilon ^{-2}\phi (\frac{|\cdot -z|}{\varepsilon })\) so that \(\phi _{\varepsilon }^z\) is smooth, radially symmetric, has mass 1, and is supported in \(B_z(\varepsilon )\). Define \({\tilde{h}}_\varepsilon ^D(z):=(h^D,\phi _{\varepsilon }^z)\). Then by the domain Markov property again, we see that we can equivalently write

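$$\begin{aligned} {\tilde{K}}_2^D(z_1,z_2) = \lim _{\varepsilon \rightarrow 0} {\mathbb {E}}[{\tilde{h}}_\varepsilon ^D(z_1){\tilde{h}}_\varepsilon ^D(z_2)] \end{aligned}$$

and

$$\begin{aligned} {\tilde{K}}_4^D(z_1,\ldots ,z_4) = \lim _{\varepsilon \rightarrow 0} {\mathbb {E}}\Big [\prod _{i=1}^4 {\tilde{h}}_\varepsilon ^D(z_i)\Big ]. \end{aligned}$$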

Note that here we do not have \({\tilde{h}}_\varepsilon ^D(z)=\varphi _D^{B_z(\varepsilon )}(z)\) for every \(\varepsilon \) (because \(\phi _\varepsilon ^z\) has support inside \(B_z(\varepsilon )\)), but we still have for small enough \(\varepsilon \) (depending on \(z_1,z_2\)) that \({\mathbb {E}}[{\tilde{h}}_\varepsilon ^D(z_1){\tilde{h}}_\varepsilon ^D(z_2)]={\tilde{K}}_2^D(z_1,z_2)\).

2.4 Properties of the two point kernel

We can now prove some of the important properties of our two point kernel \({\tilde{K}}_2^D\). Namely:

Proposition 2.7

(Harmonicity) For any \(x\in D\), \({\tilde{K}}_2^D(x,y)\), viewed as a function of y, is harmonic in \(D{\setminus } \{x\}\).

Proposition 2.8

(Conformal invariance) Let \(f:D\rightarrow f(D)\) be a conformal map. Then for any distinct \(x\ne y\) in D

$$\begin{aligned} {\tilde{K}}_2^D(x,y) = {\tilde{K}}_2^{f(D)}(f(x),f(y)). \end{aligned}$$

Proof of Proposition 2.7

This is a direct consequence of the following Lemma (Lemma 2.9) and [16, §2.2, Theorem 3]. \(\square \)

Lemma 2.9

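Fix \(x\in D\). Then \({\tilde{K}}_2^D(x,\cdot )\in C^2(D{\setminus } \{x\})\). Moreover, for any \(\eta >0\) and \(y\in D\) such that \(|x-y|\wedge d(y,\partial D)>\eta \):

$$\begin{aligned} {\tilde{K}}_2^D(x,y) = \frac{1}{|\partial B_y(\eta )|}\int _{\partial B_y(\eta )} {\tilde{K}}_2^D(x,w)\, dw. \qquad \text {(2.2)} \end{aligned}$$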

Proof

In fact, the first regularity statement follows from (2.2). Indeed, take \(y\in D\setminus \{x\}\), pick \(\eta <|x-y|\wedge d(y,\partial D)\), and also take a smooth radially symmetric function \(\phi \) that has mass 1 and is supported on \(B_0(\eta /2)\). Set \(f(z)=\int _{D} {\tilde{K}}^D_2(x,w) \phi (z-w)dw \). Then \(f\in C^\infty (U)\) where \(U=B_y(\eta /2)\). Moreover, \(f(z)={\tilde{K}}_2^D(x,z)\) for \(z\in U\) by (2.2). This implies that f is twice continuously differentiable at y.

Thus, we only need to prove (2.2). However, this follows almost immediately from the definition of \({\tilde{K}}_2^D\). Take \(\eta \) and y as in the statement, and pick \(\varepsilon>0,\eta '>\eta \) such that \(B_x(\varepsilon )\) and \(B_y(\eta ')\) lie entirely in D and are disjoint.

Then by Lemma 2.4 we have

This allows us to conclude, since

which by harmonicity of \(\varphi \) in \(B_y(\eta ')\) is equal to \(|\partial B_y(\eta )|\) times

\(\square \)

Proof of Proposition 2.8

Let \(D_x\ni x\), \(D_y \ni y\) be two Jordan subdomains of D such that \(D_x\cap D_y=\varnothing \). Then we have

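$$\begin{aligned} {\tilde{K}}_2^D(x,y) = {\mathbb {E}}\big [(h^D,\rho _x^{\partial D_x})(h^D,\rho _y^{\partial D_y})\big ] = {\mathbb {E}}\big [(h^{f(D)},\rho _{f(x)}^{\partial f(D_x)})(h^{f(D)},\rho _{f(y)}^{\partial f(D_y)})\big ] = {\tilde{K}}_2^{f(D)}(f(x),f(y)), \end{aligned}$$

where we have used Lemma 2.4 in the first and final equalities, and conformal invariance of \(h^D\) in the second. \(\square \)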

2.5 Estimates on two- and four-point functions

Before we can proceed to identify the two-point function as the Green’s function, we need to derive some bounds on \({\tilde{K}}_2^D\) and \({\tilde{K}}_4^D\). For any set of pairwise distinct points \(z_1,\ldots , z_k \in D\), we define

where R(z, D) is the conformal radius of z in the domain D. We also set

The following logarithmic bounds are the main results of this section. We will use these repeatedly in the sequel, in order to justify the use of Fubini’s theorem and the dominated convergence theorem. We will also use the four-point function bound in Sect. 3 to prove the estimate described in Proposition 3.2, which is essential to showing Gaussianity of the process.

Proposition 2.10

Fix D and let \(z_1,\ldots , z_4 \in D\). Then there exists some universal constant \(C>0\) such that for \(\varepsilon \) with \(B_{z_i}(\varepsilon )\subset D\) for all i:

In particular, using Definition 2.6, we see that

Remark 2.11

Using the fact that \(R(z_i;z_1,\ldots , z_4)/R(z_i,D)\leqslant 1/10\) for all \(i\leqslant 4\), the AM-GM inequality and Koebe’s quarter theorem, we see that we can also write

This alternative formulation will be useful in Sect. 3.

We first prove an intermediate lemma. Let \(\phi , (\phi _r^z)_{r>0,z\in D}: {\mathbb {C}}\rightarrow {\mathbb {R}}\) be as in Definition 2.6 and \(({\tilde{h}}^D_r(z))_{r>0,z\in D}\) be the mollified field. Then we have the following:

Lemma 2.12

Fix \(D \subset {\mathbb {C}}\). There exists \(C>0\) universal such that for all z, r with \(r\leqslant R(z,D)/10\),

Also,

Proof

Let \(N =\lfloor \log _2 (R(z,D)/5r) \rfloor \) and set \(B_k = B_z(2^kr)\) for \(k \leqslant N\); \(B_{N+1} = D\). By the domain Markov property, we can write

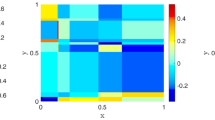

where \(\varphi _D^{B_N}\) is harmonic in \(B_N\) and \(h^{B_N}_D\) is independent of \(\varphi _D^{B_N}\) and is 0 outside \(B_N\) (Fig. 1).

Iterating this decomposition, we get

where:

the \(\varphi _k\)’s are independent and \(\varphi _k\) is harmonic in \(B_k\);

\({\tilde{h}}\) is an independent copy of \(h^{B_0}\) and is 0 outside of \(B_0=B_z(r)\).

Recall that \(\phi ^z_r\) is radially symmetric (about z) and has mass 1, so that

for every \(0\leqslant k \leqslant N\). Note that by scale and translation invariance we have \(({\tilde{h}},\phi ^z_r)\overset{(d)}{=}(h^{{\mathbb {D}}},\phi )\), and therefore \(({\tilde{h}},\phi ^z_r)\) has finite variance (by Assumptions 1.1) that is independent of r and z. Also note that since \(\varphi _k\) is equal (in law) to the harmonic part in the decomposition \(h^{B_{k+1}}=h_{B_{k+1}}^{B_k}+\varphi _{B_{k+1}}^{B_k}\), we have by conformal invariance and the domain Markov property that

for \(0\leqslant k \leqslant N-1\). Combining this information and (2.6), we finally obtain that

This completes the proof using our finite variance assumption. Note that \(\text {Var}(\varphi ^{B_N}_D(z))\) can be bounded above by something which does not depend on either z or D. Indeed, by the Koebe quarter theorem, we can conformally map D to \({\mathbb {D}}\), with \(z\mapsto 0\) and \(B_N \mapsto D_N\), for some \(D_N\subset {\mathbb {D}}\) such that \(d(0,\partial D_N)\geqslant 1/40\). Then by conformal invariance and Lemma 2.3, \({{\,\mathrm{Var}\,}}(\varphi ^{B_N}_D(z))={{\,\mathrm{Var}\,}}(\varphi ^{D_N}_{\mathbb {D}}(0))\leqslant {{\,\mathrm{Var}\,}}(\varphi ^{B_0(1/40)}_{{\mathbb {D}}}(0))\).

Using the same decomposition, (2.5) and (2.6), and the fact that every variable in the decomposition has mean 0, we also obtain the fourth moment bound

for some constant \(C' >0\). \(\square \)
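The multi-scale decomposition used in this proof can be illustrated numerically. The following is a minimal sketch, assuming only the definitions \(N =\lfloor \log _2 (R(z,D)/5r) \rfloor \) and \(B_k = B_z(2^kr)\) from the proof; the function name `dyadic_scales` and the sample values are hypothetical and chosen purely for illustration.

```python
import math

def dyadic_scales(conformal_radius, r):
    """Return N = floor(log2(R / (5 r))) and the radii 2^k * r for k = 0..N.

    `conformal_radius` plays the role of R(z, D); the hypothesis of the
    lemma is r <= R(z, D) / 10, which guarantees N >= 1.
    """
    if not 0 < r <= conformal_radius / 10:
        raise ValueError("need 0 < r <= R(z, D) / 10")
    n = math.floor(math.log2(conformal_radius / (5 * r)))
    radii = [2 ** k * r for k in range(n + 1)]
    return n, radii

# Illustrative values (not taken from the paper).
n, radii = dyadic_scales(conformal_radius=1.0, r=1e-3)
print("number of dyadic scales N =", n)
print("largest radius 2^N r      =", radii[-1])
```

The largest ball has radius at most \(R(z,D)/5\), which is smaller than \(R(z,D)/4\leqslant d(z,\partial D)\) by the Koebe quarter theorem, so every \(B_k\) with \(k\leqslant N\) lies inside D. The number of independent harmonic pieces in the decomposition grows like \(\log _2(R(z,D)/r)\).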

We now prove a corollary which gives the same bound for the variance of the field convolved with a mollifier at a point that is near the boundary.

Corollary 2.13

There exists a constant \(c>0\) such that for any point z with \(R(z,D)/10< r<d(z,\partial D)\),

Proof

We can find a domain \(D' \) containing D such that \(10r \leqslant R(z,D') \leqslant 11r \). Also we can write

for \(h_D^{D'}\overset{(d)}{=}h^D\) and \(\varphi _D^{D'}\) independent and harmonic inside D. We know from Lemma 2.12 that \({{\,\mathrm{Var}\,}}(h^{D'} ,\phi ^z_r) \leqslant c\). Since adding \(\varphi _D^{D'}\) only increases the variance, the proof is complete. \(\square \)

We now extend this to the full covariance structure of the mollified field to prove Proposition 2.10.

Proof of Proposition 2.10

We first prove (2.4) in the case of two points \(z_1\ne z_2\). Observe that by the domain Markov property, as in the proof of Proposition 4.1, if \(\varepsilon _0:= |z_1-z_2|/10 \wedge R(z_1,D)/10 \wedge R(z_2,D)/10\) then for all \(\varepsilon <\varepsilon _0\) we have that \({\mathbb {E}}[{\tilde{h}}_\varepsilon ^D(z_1){\tilde{h}}_\varepsilon ^D(z_2)]={\mathbb {E}}[{\tilde{h}}_{\varepsilon _0}^D(z_1){\tilde{h}}_{\varepsilon _0}^D(z_2)]\). Thus we need only prove the inequality for \(\varepsilon _0\leqslant \varepsilon < d(z,\partial D)\). However, this follows simply by applying Cauchy–Schwarz and using Lemma 2.12 and/or Corollary 2.13 as necessary (depending on whether \(\varepsilon _0\) is less than or greater than \(R(z_1,D)/10\) and \(R(z_2,D)/10\)). The case of four points follows in the same manner. \(\square \)

2.6 Identifying the two point function

In this section we prove that for \(z_1,z_2\) distinct

for some \(a>0\), where \(G^D\) is the Green’s function on D with Dirichlet boundary conditions.

We first need a technical lemma, namely, an exact expression for the variance of harmonic averages, derived from the bounds of the previous section together with the properties of the two-point kernel deduced in Sect. 2.4.

Lemma 2.14

Let \(\gamma \) be the boundary of a Jordan domain \(D'\subset D\), such that \(\gamma \cap \partial D=\varnothing .\) Let \(z\in D'\). Then

where \(\rho _z^\gamma \) is the harmonic measure seen from z on \(\gamma \).

Note that although the statement of this lemma may seem obvious, recall from Sect. 2.2 that the notation for the harmonic average \((h^D,\rho _z^\gamma )\) is an abuse of notation (the way we define it does not a priori have anything to do with integrating against harmonic measure).

Proof

Let \(\varphi :D'\rightarrow {\mathbb {D}}\) be the unique conformal map with \(\varphi (z)=0\) and \(\varphi '(z)>0\). Then by definition of the harmonic average,

where the last equality follows by definition of \({\hat{\psi }}^\delta _z\) and the harmonic average. Recall that \(\psi _0^{\delta }\) is defined by normalising a smooth radially symmetric function from \({\mathbb {D}}\) to [0, 1], that is equal to 1 on \(\{z: 1-\delta \leqslant |z| \leqslant 1-\delta /2\}\) and 0 on the \(\delta /10\) neighbourhood of this annulus, to have total mass 1.

We define

Observe that for every \(x\in {\mathbb {D}}\), by analyticity of \(\varphi \) and Proposition 2.7, f(x, y) viewed as a function of y is harmonic in \({\mathbb {D}}{\setminus } \{x\}.\) We also have the bound

for every \(x\ne y\) and some \(C=C(D)\) by Proposition 2.10. The dependence on the domain here comes from the bounded conformal radius term in (2.3). Now fix \(\delta _2>0\) and take \(\delta _1<\frac{4}{11} \delta _2\), so that the support of \(\psi _0^{\delta _1}\) lies entirely outside of \(B_0(1-4{\delta _2}/10)\supset \text {supp}(\psi _0^{\delta _2})\). Pick \(x \in \text {supp}(\psi _0^{\delta _1})\). Then it follows from harmonicity of f(x, y) in \(B_0(1-4{\delta _2}/10)\) that

Now, (2.8) tells us that (since the above expression does not depend on \(\delta _2\))

Furthermore, Proposition 2.7 together with the fact that \(\gamma \) lies strictly within D, implies that f(x, 0) extends to a continuous function on \(x\in \partial {\mathbb {D}}\). This means that the right hand side is equal to \(\int _{{\mathbb {D}}} f(x,0) \, \rho _0^{\partial {\mathbb {D}}}(dx)\), which is equal to \(\int _D {\tilde{K}}_2^D(w,z)\rho _z^\gamma (dw)\) by a change of variables. \(\square \)

Remark 2.15

As a direct consequence of the above proof we see that if \(\varepsilon <1\) then

We are now ready to prove (2.7): we start with the case \(x=0\) and \(D={\mathbb {D}}\).

Lemma 2.16

There exists \(a>0\) such that \({\tilde{K}}_2^{{\mathbb {D}}}(0,y)=-a\log |y|\) for all \(y\in {\mathbb {D}}{\setminus } \{0\}\).

Proof

First, we prove that there exists an \(a>0\) such that \(f(r):= {\mathbb {E}}[h_r^{\mathbb {D}}(0)^2]\) is equal to \(-a\log (r)\) for all \(r\in (0,1]\). To see this, note that by the domain Markov property and conformal invariance we have \(f(rs)=f(r)+f(s)\) for all \(r,s<1\). Moreover, f is continuous (by Remark 2.15 and Lemma 2.7) and decreasing (by Lemma 2.3), with \(f(1)=0\). This proves the claim.
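For completeness, the functional equation step can be spelled out as follows. This is the standard reduction to the additive Cauchy equation; the auxiliary function g is introduced here only for illustration and does not appear elsewhere in the text.

$$\begin{aligned} g(t):=f(e^{-t}),\ t\geqslant 0 \quad \Longrightarrow \quad g(t+u)=f(e^{-t}e^{-u})=f(e^{-t})+f(e^{-u})=g(t)+g(u), \qquad g(0)=f(1)=0. \end{aligned}$$

A continuous additive function on \([0,\infty )\) is linear, so \(g(t)=at\) with \(a=g(1)\), and since f is decreasing, g is non-decreasing and \(a\geqslant 0\), with \(a>0\) as soon as f is not identically zero. Substituting \(t=-\log r\) gives \(f(r)=-a\log (r)\).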

With this in hand, by Remark 2.15 we can write

where by conformal invariance (in particular, rotational invariance) \({\tilde{K}}_2^{\mathbb {D}}(0,w)\) must be constant and equal to \({\tilde{K}}_2^{\mathbb {D}}(0,|y|)\) on \(\partial B_{0}(|y|)\). Since \(\rho _0^{\partial B_{0}(|y|)}(\cdot )\) has total mass 1 we obtain the result. \(\square \)

In particular, combining this with conformal invariance (Proposition 2.8) and Lemma 2.18, we obtain:

Corollary 2.17

\({\tilde{K}}_2^D=aG^D\), where \(G^D\) is the Green’s function with zero boundary conditions and \(a\geqslant 0\) is some constant.
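As a sanity check on how conformal invariance propagates the formula from Lemma 2.16 to general pairs of points, here is a minimal numerical sketch for \(D={\mathbb {D}}\). It assumes the normalisation \(G^{{\mathbb {D}}}(0,y)=-\log |y|\), consistent with Lemma 2.16; the function names and the sample points are hypothetical.

```python
import math

def mobius(w, z):
    """Automorphism of the unit disc sending w to 0."""
    return (z - w) / (1 - w.conjugate() * z)

def green_disc(x, y):
    """Dirichlet Green's function of the unit disc.

    Normalised (by assumption) so that green_disc(0, y) == -log|y|.
    """
    return -math.log(abs(mobius(x, y)))

# Arbitrary sample points in the unit disc (illustrative only).
x, y, w = 0.3 + 0.2j, -0.5 + 0.1j, 0.4 - 0.6j

before = green_disc(x, y)
after = green_disc(mobius(w, x), mobius(w, y))
print("G(x, y)             =", before)
print("G(phi(x), phi(y))   =", after)
```

The two printed values agree up to rounding, reflecting the fact that the Green's function is invariant under automorphisms of the disc.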

2.7 The circle average approximates the field

We conclude this section by showing that, in fact, the covariance kernel \(K_2^D\) defined in Assumptions 1.1 (which we recall is a bilinear form on \(C_c^\infty (D)\times C_c^\infty (D)\)) corresponds to integrating against the two-point function \({\tilde{K}}_2^D\). Thus, due to Corollary 2.17, we can say that our field has “covariance given by a multiple of the Green’s function”. In particular, there exists \(a>0\) such that for any test function \(\phi \in C^\infty _c(D)\),

Lemma 2.18

For any \(\psi _1,\psi _2 \in C_c^\infty (D)\)

In particular, if \(h_\varepsilon ^D\) is the circle average field and \(\psi \in C_c^\infty (D)\), then

as \(\varepsilon \rightarrow 0\).

We will need this last statement for the conclusion of the proof: see Sect. 4.

Proof

We have, by Proposition 2.10 and dominated convergence (for this we use that \(\psi _1\) and \(\psi _2\) are compactly supported, meaning that for some \(\varepsilon _0>0\), \(B_x(\varepsilon _0)\subset D\) for all \(x\in \text {Support}(\psi _1)\cup \text {Support}(\psi _2)\)),

where the last line follows from the definition of \(K_2^D\). Here \(K_2^D( \psi _1(x)\phi _{\varepsilon }^x,\psi _2(y)\phi _\varepsilon ^y )\) means the value of \(K_2^D(f,g)\) where \(f:z\mapsto \phi _{\varepsilon }^x(z)\psi _1(x)\) and \(g: z\mapsto \phi _{\varepsilon }^y(z)\psi _2(y)\) are both in \( C_c^\infty (D)\). Now we use the fact that \(K_2^D\) is a continuous bilinear form on \(C_c^\infty (D)\times C_c^\infty (D)\) with the topology discussed in the introduction. This means that if we fix \(y\in D\) and consider the map \(f\mapsto K_2^D(f,\phi _\varepsilon ^y\psi _2(y) )\), then this is a continuous linear map on \(C_c^\infty (D)\), i.e., it is a distribution. Standard theory of distributions (associativity of convolution, see for example [33, Theorem 6.30]) then tells us that

where \(\psi _1 * \phi _{\varepsilon }(z)=\int _D \psi _1 (x) \phi _{\varepsilon }^z (x) \, dx\). Now applying the same argument in the y-variable gives that the right hand side of (2.10) is equal to \(K_2^D(\psi _1 * \phi _{\varepsilon }, \psi _2* \phi _{\varepsilon })\), and we have overall attained the equality

Finally, since \(\psi _i*\phi _{\varepsilon }\rightarrow \psi _i\) in \(C_c^\infty (D)\) for each i as \(\varepsilon \rightarrow 0\) [16, §5.3, Theorem 1], and \(K_2^D\) is continuous, we can deduce the result.

For the statement concerning the variance, we expand

where the final equality follows by the same reasoning that led us to (2.11). Again, since \(\psi * \phi _\varepsilon \rightarrow \psi \) in \(C_c^\infty (D)\) as \(\varepsilon \rightarrow 0\), this allows us to conclude that the final expression converges to 0 as \(\varepsilon \rightarrow 0\). \(\square \)

Similarly, we deduce the following:

Lemma 2.19

For any \(\psi _1,\ldots , \psi _4 \in C_c^\infty (D)\)

Remark 2.20

Lemma 2.18 and Corollary 2.17 imply that Assumptions 1.1 (ii) (Dirichlet boundary conditions) is satisfied by a much wider family of test functions \(f_n\): in particular, the requirement that \(f_n\) be rotationally symmetric can be partly relaxed. However, \(f_n\) cannot be completely arbitrary: it is not sufficient to assume that the support of \(f_n\) leaves any compact set and that \(f_n\) has bounded mass, as can be seen by taking \(f_n\) to have unit mass within a ball of radius 1/n at distance 1/n from the boundary.

3 Gaussianity of the circle average

In this section, we argue that from Assumptions 1.1, we can deduce that the circle average field of \(h^D\) is Gaussian. This is where we will need to use our finite fourth moment assumption. Let \((h_\varepsilon ^D(z))_{z\in D}\) be the circle average field. The key result we prove here is the following:

Proposition 3.1

Let \(z_1,\ldots ,z_k\) be k pairwise distinct points in D with \(d(z_i,z_j)>2\varepsilon \) for every \(1\leqslant i \ne j\leqslant k\) and \(d(z_i,\partial D)>2\varepsilon \) for \(1\leqslant i \leqslant k\). Then the law of \((h^D_\varepsilon (z_1),\ldots ,h^D_\varepsilon (z_k))\) is that of a multivariate Gaussian random variable.

3.1 Bounds for the 4 point kernel

Let \(z_1,\ldots , z_4\) be pairwise distinct points in \(D={\mathbb {D}}\) and let

for \(1\leqslant i\leqslant 4\), where \(u(x)=x/|x|\) and \(|\cdot |_1\) is distance (with respect to arc length) on the unit circle. In words, we divide the disc into four wedges each containing one of the four distinguished points. By definition, the boundary between two adjacent wedges \(V_i\) and \(V_j\) is the ray emanating from the origin which bisects the rays going through \(z_i\) and \(z_j\) (Fig. 2).

We have (by definition of the harmonic average, and Lemma 2.5) the following expression for the four point kernel:

In the next section, we will require some bounds on these quantities when the \(z_i\)’s are close to the boundary of \({\mathbb {D}}\). We can estimate them as follows:

Proposition 3.2

Suppose that \(z_1,\ldots , z_4\) are pairwise distinct points in \({\mathbb {C}}\), each with modulus between \(1-\varepsilon \) and 1. Then if \(V_j\) is as described above (with respect to \(z_1,\ldots , z_4\)) and \(a_j=\text {min}_{i\ne j} \{|u(z_j)-u(z_i)|_1\}\) is the isolation radius of \(u(z_j)\) in \(\{u(z_1),\ldots , u(z_4)\}\) we have

for some universal constant c.

We remark that the bound above is much improved compared to Proposition 2.10 if \(\varepsilon \ll \text {min}_ja_j\). This is where the effect of the Dirichlet boundary condition assumption is manifested. Also, the choice of Voronoi cells is not crucial: any partition of the domain separating the points would work. This particular choice of cells is simply to make the calculations explicit.
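The angular Voronoi partition and the isolation radii \(a_j\) are easy to compute explicitly. The sketch below is an illustration under the verbal description given above (a point x belongs to the wedge of the \(z_i\) whose direction \(u(z_i)\) is closest to \(u(x)\) in arc length); the function names and the sample points are hypothetical.

```python
import cmath
import math

def arc_distance(theta1, theta2):
    """Arc-length distance between two angles on the unit circle."""
    d = abs(theta1 - theta2) % (2 * math.pi)
    return min(d, 2 * math.pi - d)

def wedge_index(x, points):
    """Index i of the wedge V_i containing x (nearest direction u(z_i))."""
    ax = cmath.phase(x)
    return min(range(len(points)),
               key=lambda i: arc_distance(ax, cmath.phase(points[i])))

def isolation_radii(points):
    """a_j = min over i != j of the arc distance between u(z_j) and u(z_i)."""
    angles = [cmath.phase(p) for p in points]
    return [min(arc_distance(a, b) for i, b in enumerate(angles) if i != j)
            for j, a in enumerate(angles)]

# Four illustrative points close to the unit circle.
zs = [0.9 + 0j, 0.9j, -0.9 + 0j, -0.9j]
print(isolation_radii(zs))
print(wedge_index(0.2 * cmath.exp(2.0j), zs))
```

Only the directions \(u(z_i)\) enter the computation, which is why the wedges are bounded by rays from the origin.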

Proof

First suppose that \(a_j>\varepsilon \). By Lemma 2.3 and Cauchy–Schwarz, it is enough to consider the wedge

for every \(\varepsilon \leqslant a \leqslant \pi /2\) and prove that \({\mathbb {E}}[(h^{\mathbb {D}},\rho _w^{\partial W_a})^4]\leqslant c\frac{\varepsilon ^4}{a^4}\log ^4(a)\) when \(w:=1-\varepsilon \). To begin, we describe how to approximate \((h^{\mathbb {D}},\rho _{w}^{\partial W_a})\) in a slightly different way. This is very similar to the approximation used in Sect. 2.2 (we take some smooth approximations to the harmonic measure on the boundary of a sequence of domains increasing to \(W_a\) from the inside) but is more explicit, which will be an advantage here.

For \(\delta \ll \varepsilon \), let

and let \(W_a^\delta = \{ \delta< |z| <1 - \delta ; \arg (z) \in (- a + \delta , a - \delta )\}\). Let \({{\hat{\nu }}}^\delta \) be the harmonic measure seen from w on the boundary of the domain \(W_a^\delta \) and let \(\nu ^\delta \) be the same harmonic measure, but restricted to the lines \(r_1^\delta \) and \( r_2^\delta \). Finally, let \(\phi \) be a smooth radially symmetric function with mass 1, supported on \({\mathbb {D}}\), and denote \(\phi ^z_\delta (\cdot )=\delta ^{-2}\phi (|z-\cdot |/\delta )\) as usual. Set \(p^\delta (z) = \int \phi ^z_{\delta /10}(x) \nu ^\delta (dx)\). We claim the following.

Claim

(a) \((h^{\mathbb {D}},p^\delta )\rightarrow (h^{\mathbb {D}}, \rho _{w}^{\partial W_a})\) in \(L^2({\mathbb {P}})\) and in probability as \(\delta \rightarrow 0\).

(b) \(p^\delta (z)\) is bounded above by some universal constant times \(\delta ^{-1}\frac{\varepsilon }{a}\frac{\pi }{2(a-\delta )}(\frac{|z|}{1-\delta })^{\frac{\pi }{2(a-\delta )}-1}\).

Proof of claim

For (a), we first prove the same statement with \(p^\delta \) replaced by

To do this, we apply the Markov property in \(W_a\), writing \(h^{\mathbb {D}}=h_{\mathbb {D}}^{W_a}+\varphi _{{\mathbb {D}}}^{W_a}\). First, we consider the part with zero boundary conditions: by Corollary 2.17, we have that

as \(\delta \rightarrow 0\) by standard properties of the Dirichlet Green’s function (note that \({{\hat{p}}}^\delta \) is simply a perturbation of the harmonic measure on \(\partial W_a\)).

Then we consider the harmonic part: we have

for every \(\delta \), since \(\phi \) is radially symmetric with mass 1, \(\varphi _{{\mathbb {D}}}^{W_a}\) is harmonic, and \({\hat{\nu }}^\delta \) is the harmonic measure on \(W_a^\delta \subset W_a\), meaning that \(\varphi _{\mathbb {D}}^{W_a}\) is a true harmonic function on \(W_a^\delta \). Combining these two facts, it follows that \((h^{\mathbb {D}}, {\hat{p}}^\delta )\) converges to \((h^{\mathbb {D}}, \rho _{w}^{\partial W_a})\) in \(L^2({\mathbb {P}})\) and in probability as \(\delta \rightarrow 0\). Now to conclude (a), simply observe that \({{\,\mathrm{Var}\,}}(h^{\mathbb {D}},p^\delta -{\hat{p}}^\delta )\) converges to 0 as \(\delta \rightarrow 0\): again, this follows from Corollary 2.17 and elementary properties of the Green’s function since \(p^\delta - {{\hat{p}}}^\delta \) is supported on an arbitrarily small neighbourhood of a fixed arc of the unit circle (and converges to the harmonic measure on that arc seen from w).

We now move on to (b). For \(z\in {\mathbb {D}}\) we have

by definition. Consider the maps

where \((1-\eta )=\varphi _2^\delta \circ \varphi _1^\delta (w).\) Then \(\varphi _1^\delta \) maps \({\tilde{W}}_a^\delta \) to the half disc \( {\mathbb {D}}\cap \{\mathfrak {R}(z) >0 \}\), where \({\tilde{W}}_a^\delta = \{ |z| < 1- \delta : \arg (z) \in ( - a + \delta , a - \delta )\}\). It can also be checked using elementary properties of Möbius maps that \(\varphi _2^\delta \) maps the half disc to the full disc \({\mathbb {D}}\), and \(\varphi _3^\delta \) maps \({\mathbb {D}}\) to itself so that \(\varphi _2^\delta \circ \varphi _1^\delta (w)\) is sent to 0. Hence \(\varphi _3^\delta \circ \varphi _2^\delta \circ \varphi _1^\delta \) is a conformal map from \({\tilde{W}}_a^\delta \) to \({\mathbb {D}}\) sending w to 0.
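The first step of this construction can be checked numerically. The sketch below assumes that the map from the truncated wedge to the half disc is the power map \(z\mapsto (z/(1-\delta ))^{\pi /(2(a-\delta ))}\); this explicit form is an assumption made here for illustration (it is the natural candidate, and its derivative reproduces the factor appearing in part (b) of the claim), and the function names are hypothetical.

```python
import cmath
import math

def wedge_to_half_disc(z, a, delta):
    """Assumed power map sending the wedge
    {|z| < 1 - delta, |arg z| < a - delta} to the right half disc."""
    p = math.pi / (2 * (a - delta))
    return (z / (1 - delta)) ** p  # principal branch of the power

def power_map_derivative_modulus(z, a, delta):
    """|d/dz| of the map above: (p / (1 - delta)) * (|z| / (1 - delta))**(p - 1)."""
    p = math.pi / (2 * (a - delta))
    return p / (1 - delta) * (abs(z) / (1 - delta)) ** (p - 1)

a, delta = 0.5, 0.01
inside = 0.5 * cmath.exp(0.3j)                  # interior point of the wedge
on_ray = 0.5 * cmath.exp(1j * (a - delta))      # point on the straight boundary
on_arc = (1 - delta) * cmath.exp(0.2j)          # point on the circular boundary

print(wedge_to_half_disc(inside, a, delta))     # positive real part, modulus < 1
print(wedge_to_half_disc(on_ray, a, delta))     # real part ~ 0 (imaginary axis)
print(abs(wedge_to_half_disc(on_arc, a, delta)))  # modulus ~ 1
```

The straight sides of the wedge are sent to the imaginary axis and the circular arc to the unit circle, as required of a conformal map onto the half disc.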

A computation verifies that for any \(z\in {\mathbb {D}}\), \(\varphi _3^\delta \circ \varphi _2^\delta \circ \varphi _1^\delta (B_z(\delta )\cap \{r_1^\delta \cup r_2^\delta \} )\) is an arc of the unit circle with length less than \(\frac{c\varepsilon \delta \pi }{2a(a-\delta )}(\frac{|z|}{1-\delta })^{\frac{\pi }{2(a-\delta )}-1}\) for some universal constant c. In particular, we use that

and

where \(\eta \leqslant \frac{1}{a}c\varepsilon \) for some such c. By definition of \({\hat{\nu }}^\delta \), and the fact that the harmonic measure with respect to \(W_a^\delta \) is less than the harmonic measure with respect to \({\tilde{W}}_a^\delta \) for any fixed subset of \(\{r_1^\delta \cup r_2^\delta \}\), this finishes the proof of the claim. \(\square \)

With this claim in hand, we have by Fatou’s Lemma and Lemma 2.19

and then by Proposition 2.10 and Remark 2.11, we see that this is less than or equal to

for another universal c (which may now change from line to line).

We can simplify this expression. Because \(p^\delta \) is supported in a strip of width \(\delta /10\) around the lines \(r_1^\delta \) and \(r_2^\delta \), we can change variables by considering the orthogonal projection onto \(r_1^\delta \cup r_2^\delta \), so that we can write

Note then that \( \log ^2 | x_i - x_j| \leqslant \log ^2 ( \big ||z_i| - |z_j| \big |) \). Performing the change of variables \((u_i)_{1\leqslant i\leqslant 4} = (\frac{|z_i|}{1-\delta })_{1\leqslant i \leqslant 4}\), we obtain that the above is less than or equal to

Thus, to conclude the proof in the case \(a_j\geqslant \varepsilon \), we need to show that

for some constant C and all \(b\geqslant 1\). To see this, we break up the integral into 4 regions. The first is \(S_1:=\{x\leqslant 1-\log (b)/b\}\cap \{y\leqslant 1-\log (b)/b\}\), and on this region \(bx^{b-1}\) and \(by^{b-1}\) are uniformly bounded in b (indeed, one can easily check that \(b(1-\log b/b)^{b-1}\rightarrow 1\) as \(b \rightarrow \infty \)). Since \(\iint _{[0,1]^2} \log ^2|x-y| dx dy\) is finite, this means that the integral over \(S_1\) is less than or equal to a universal constant. The second is \(S_2:=\{x \leqslant 1-\log (b)/b\}\cap \{y> 1-\log (b)/b\}\), and on this region, \(bx^{b-1}y^{b-1}\) is uniformly bounded in b for the same reason. Thus integrating over \(S_2\), and using that \(\int _0^a \log ^2(u) \, du = O(a\log ^2(a))\) as \(a\rightarrow 0\), we obtain something of order at most \(\log ^3(b)\). Symmetrically, the integral over the region \(S_3:=\{y \leqslant 1-\log (b)/b\}\cap \{x> 1-\log (b)/b\}\) is at most order \(\log ^3(b)\). The last region is \(S_4:=\{x\geqslant 1-\log (b)/b\}\cap \{y\geqslant 1-\log (b)/b\}\). Using that \(\iint _{[0,a]^2} \log ^2(|x-y|) dx dy=O(a^2\log ^2(a))\) as \(a\rightarrow 0\), we see that the integral over \(S_4\) is \(O(\log ^4(b))\), and this completes this part of the proof.
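Two elementary facts used in this paragraph can be verified numerically: the convergence \(b(1-\log b/b)^{b-1}\rightarrow 1\), and the estimate \(\int _0^a \log ^2(u) \, du = O(a\log ^2(a))\), which follows from the closed form \(\int _0^a \log ^2(u) \, du = a(\log ^2 a-2\log a+2)\). The following is a minimal sketch; the function names, the grid of values of b and the quadrature resolution are illustrative choices, not part of the argument.

```python
import math

def boundary_density(b):
    """Value of b * x**(b - 1) at the cut-off x = 1 - log(b) / b."""
    return b * math.exp((b - 1) * math.log1p(-math.log(b) / b))

def log_sq_integral(a):
    """Closed form of the integral of log(u)**2 over [0, a]."""
    return a * (math.log(a) ** 2 - 2 * math.log(a) + 2)

def log_sq_midpoint(a, n=100_000):
    """Midpoint-rule approximation of the same integral."""
    h = a / n
    return h * sum(math.log((k + 0.5) * h) ** 2 for k in range(n))

for b in (10.0, 1e3, 1e6):
    print(b, boundary_density(b))

a = 0.1
print(log_sq_integral(a), log_sq_midpoint(a))
print(log_sq_integral(a) / (a * math.log(a) ** 2))
```

Since \(x\mapsto bx^{b-1}\) is increasing on \([0,1]\) for \(b\geqslant 1\), its value at the cut-off bounds it on the whole region \(\{x\leqslant 1-\log (b)/b\}\).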

Finally, suppose that \(a_j<\varepsilon \). Then we have \(B_{z_j}(a_j/10)\subset V_j\), so by Lemma 2.3

Using Proposition 2.10, we see that this is less than \(c\log ^2(a_j)\) for some universal c.

\(\square \)

3.2 Proof of Gaussianity

The proof of Proposition 3.1 is based on the following result. Let \(D'\Subset D\) be an analytic Jordan domain (see Footnote 1) containing k pairwise distinct points \(z_1,\ldots , z_k\).

Proposition 3.3

\(((h^D,\rho _{z_1}^{\partial D'}), \ldots , (h^D,\rho _{z_k}^{\partial D'}))\) is a Gaussian vector.

By conformal invariance, we can assume for the proof that \(D={\mathbb {D}}\). To prove this we will need the following technical lemma.

Lemma 3.4

Let \(D'\Subset {\mathbb {D}}\) be an analytic Jordan domain containing k pairwise distinct points \(z_1,\ldots , z_k\). Then there exists a sequence of increasing domains \((D_s)_{s\in [0,1]}\) with \(D_0=D'\) and \(D_1={\mathbb {D}}\), such that

\(D_s\) is an analytic Jordan domain for every \(s\in [0,1]\).

\(d_H({\overline{D}}_s,{\overline{D}}_t)\leqslant c|s-t|\) for all \(s,t\in [0,1]\) where \(d_H\) is the Hausdorff distance and c does not depend on \(s,t\in [0,1]\).

If \(\phi _{j,s}: {\overline{D}}_s\rightarrow {\overline{{\mathbb {D}}}}\) for each \(1\leqslant j \leqslant k\) and \(s\in [0,1]\) is the unique conformal map sending \(z_j\mapsto 0\) and with \(\phi _{j,s}'(z_j)>0\) then

$$\begin{aligned} \sup _{s\in [0,1]}\sup _{1\leqslant j\leqslant k} \sup _{z\in D_s}|\phi _{j,s}'(z)| <\infty \end{aligned}$$

Proof

This fact seems intuitive and may well be known but we could not find a reference. The proof we give here is elementary and relies on Brownian motion estimates as well as explicit constructions of Riemann maps.

Consider the doubly connected domain \({\mathbb {D}}\setminus \overline{D'}\). Then, by the Riemann mapping theorem for doubly connected domains [1, Ch6, §5, Theorem 10], there exists a conformal map \(\phi \) from \({\mathbb {D}}{\setminus } \overline{D'}\) to the annulus \({\mathbb {D}}\setminus r{\overline{{\mathbb {D}}}}\) for some unique \(r<1\). We set

for each \(s\in [0,1]\) so that \(D_0=D'\), \(D_1={\mathbb {D}}\) and the \((D_s)_{s}\) are increasing as required. It is also clear that \(D_s\) is an analytic Jordan domain for each s.

Moreover, as \(D'=D_0\) has analytic boundary we know that \(\phi ^{-1}\) can be extended analytically, by Schwarz reflection, to \({\mathbb {D}}{\setminus } u{\overline{{\mathbb {D}}}}\) for some \(u<r\) (and we can pick u such that \(z_i\notin \phi ^{-1}({\mathbb {D}}{\setminus } u{\overline{{\mathbb {D}}}})\) for each \(1\leqslant i\leqslant k\)). We also have that \(|\phi '|\) is a continuous function on the compact set \({\overline{{\mathbb {D}}}}{\setminus } D' \) (because \(\phi \) extends analytically to \({\bar{{\mathbb {D}}}}{\setminus } {D'}\)) so is bounded above and below on this set. This provides the second statement of the lemma (concerning Hausdorff distance).

For the third statement, we pick \(u<v<r\) and define V to be the domain given by the interior of the Jordan curve \(\phi ^{-1}(\partial (v{\mathbb {D}}))\). Similarly, we define U to be the domain bounded by the curve \(\phi ^{-1}(\partial (u{\mathbb {D}}))\), so that \(U\Subset V\Subset D'\). Then we set

where \(K^{V}(x,\cdot )\) is the boundary Poisson kernel on \(\partial V\). That is, \(K^{V}(x,y)\) is the density, with respect to arc length, of the harmonic measure on \(\partial V\) viewed from \(x\in V\). We recall here that for an analytic Jordan domain D, and \(x\in D\), \(y\in \partial D\)

for \(\varphi _x:{\overline{D}}\rightarrow {\overline{{\mathbb {D}}}}\) the unique conformal map with \(\varphi _x(x)=0\) and \(\varphi _x'(x)>0\) (see Footnote 2). In particular, since \(\partial V\) is an analytic Jordan curve, \(|\varphi _{x}'(y)|\) is a continuous function on \(\partial U \times \partial V\), and this means that M defined above is finite.

We will use the fact that for any \(s\in [0,1]\), by definition of \(\phi \) and conformal invariance, the image under \(\phi \) of a Brownian motion started at \(y\in \partial V\) and stopped when it leaves \(D_s{\setminus } {\overline{U}}\) is a Brownian motion started at \(\phi (y)\in \partial (v{\mathbb {D}})\) and stopped when it leaves \((r+(1-r)s){\mathbb {D}}{\setminus } u{\overline{{\mathbb {D}}}}\). We refer to this elementary fact as (\(\dagger \)).

First, we will use (\(\dagger \)) to prove that for any \(z\in \partial D_s\), if \({\mathbf {n}}(z)\) is the inward unit normal vector to \(\partial D_s\) at z, then

where the constant c is independent of \(1\leqslant i \leqslant k\), \(s\in [0,1]\) and \(z\in \partial D_s\). To do this, without loss of generality we take \(i=1\). Assume that \(\delta \) is always small enough that \(z+\delta {\mathbf {n}}(z)\) does not intersect \({\overline{V}}\). Then we take a Brownian motion \((B_t)_{t\geqslant 0}\) in \({\mathbb {C}}\) started from \(z_1\), and define the following series of stopping times:

where \(\tau _{D_s}\) is the hitting time of \(\partial D_s\). Then for each time interval \([T_j,S_j]\), writing \(p_t\) for the transition density of Brownian motion in \({\mathbb {C}}\), we have

where \(|\partial V|\) is the length of the curve \(\partial V\). The inequality follows from (\(\dagger \)) since the expected time that a Brownian motion started at \(x\in \partial (v{\mathbb {D}})\) spends at any given point before exiting \((r+(1-r)s){\mathbb {D}}{\setminus } {\overline{U}}\) is less than the expected time spent there before exiting \((r+(1-r)s){\mathbb {D}}\). This gives us that

Now, since \(|\{j: S_j<\infty \}|\) is dominated by a geometric random variable with success probability uniformly bounded below (for example, the probability that a Brownian motion started on \(\partial (v{\mathbb {D}})\) hits \(\partial {\mathbb {D}}\) before \(\partial (u{\mathbb {D}})\)) we see that the expectation is bounded, independently of z and \(s\in [0,1]\). Thus we only need to consider the limsup term in the above. For this, we first note that \(|\phi (z+\delta {\mathbf {n}}(z))|\geqslant (r+(1-r)s)(1-K\delta )\) for some K depending only on \(\phi \) (since \(\phi \) has uniformly bounded derivative). Then, an explicit calculation using the Green’s function in the unit disc tells us that

where \(a:=v/(r+(1-r)s)\). Since \(|a|\leqslant v/r <1\), we obtain (3.2).

Now recall the definition of \(\phi _{j,s}\) from the statement of the lemma. We have just proved, by the second equality in (3.1), that

for some c not depending on j or s. However, since \(\phi '_{j,s}\) is analytic up to the boundary of \(D_s\) we obtain the same upper bound for \(\sup _{z\in {{\overline{D}}}_s}|\phi _{j,s}'(z)|.\) \(\square \)

Proof of Proposition 3.3

To prove Proposition 3.3, we take a sequence of increasing domains \((D_s)_{s\in [0,1]}\) as described by Lemma 3.4. Then we define

for all i, s and let

(note the reversal of time here: we now want to move inwards from \(\partial {\mathbb {D}}\) to \(\partial D_s\)). We will prove that for every s, \(\varvec{X}_s\) is distributed as a multivariate Gaussian random vector. Setting \(s=1\), this proves the proposition.

In fact, we will prove the following equivalent statement: for every vector \((a_1,\ldots , a_k) \in {\mathbb {R}}^k\), and \(s>0\)

is a Gaussian random variable. Note that \(Y_0=0\) because \(h^{\mathbb {D}}\) has zero boundary conditions, and it is also straightforward to check using the domain Markov property that \(Y_s\) has independent mean zero increments. By the Dubins–Schwarz theorem, these observations tell us that as long as \(Y_s\) has a continuous modification, it must be a Gaussian process (because it is a continuous martingale with deterministic quadratic variation process).

To prove that \(Y_s\) has continuous modification, we shall prove that for any \(\eta >0\) there exists some constant C such that for all \(\varepsilon >0\) and \(s\in [0,1]\)

Using Kolmogorov’s continuity criterion, (3.3) is enough to conclude that \(Y_s\) admits a continuous modification.
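For reference, the standard form of Kolmogorov's continuity criterion reads as follows. The symbols \(\alpha , \beta , \gamma \) are generic and are introduced here only to state the criterion. If a process \((Y_s)_{s\in [0,1]}\) satisfies, for some constants \(\alpha ,\beta ,C>0\),

$$\begin{aligned} {\mathbb {E}}\big [|Y_t-Y_s|^{\alpha }\big ]\leqslant C|t-s|^{1+\beta } \quad \text {for all } s,t\in [0,1], \end{aligned}$$

then Y admits a modification whose paths are Hölder continuous of every order \(\gamma \in (0,\beta /\alpha )\). In the present setting it would therefore suffice to have a fourth moment bound (\(\alpha =4\)) on the increments of \(Y_s\) with an exponent strictly greater than 1 in the increment size.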

Fix some \(0<\eta <1\), let \(s\in [0,1)\) and let \(\gamma _\varepsilon \) be the curve defined by \(\partial D_{1-s-\varepsilon }\) inside \(D_{1-s}\). Then by definition, expansion and Cauchy–Schwarz,

In light of the above inequality, it is enough to show that there exists a C such that for all \(1\leqslant j\leqslant k\), \(s\in [0,1]\) and \(\varepsilon >0\)

For this, we use our hypotheses on the family of domains \((D_s)_{0\leqslant s \leqslant 1}\). These tell us that if \(\phi _{j,s}:D_s \rightarrow {\mathbb {D}}\) is the unique conformal map sending \(z_j\mapsto 0\) and with \(\phi '_{j,s}(z_j)>0\), we have that \(\phi _{j,s}(\gamma _{\varepsilon })\) is contained in \(\{z: 1-b\varepsilon<|z|<1\}\) for some \(b>0\) not depending on j, s or \(\varepsilon \). Then by conformal invariance, we can write

where the inequality follows from Lemma 2.3.

So we estimate the final quantity; without loss of generality, we assume that \(b=1\). By Fatou’s Lemma we have

recalling the definition of \({{\hat{\psi }}}^\delta \) from Sect. 2.2: it is a smooth function, bounded above by some constant multiple of \(\delta ^{-1}\), that is supported on the annulus \(\Omega _{\delta ,\varepsilon }:=\{z: (1-\varepsilon )(1-11\delta /10) \leqslant |z| \leqslant (1-\varepsilon )(1-4\delta /10) \}\). Suppose that \(\delta <\varepsilon \). Then the integrand is only supported on points \((x_1,x_2,x_3,x_4)\) all lying in \(\Omega _{\delta ,\varepsilon }\). Moreover, if \((x_1,\ldots , x_4)\) are 4 such points, then by Proposition 3.2

for some universal constant c, where \(a_j=\text {min}_{i\ne j}\{|u(x_i)-u(x_j)|_{1}\}\) (and \(u(x)=x/|x|\).) Using the bound on \({{\hat{\psi }}}^\delta \), we see that (3.5) is bounded above by

for another universal \(c'\).

Now, we rewrite the integral in polar coordinates \(x_j=r_j\text {e}^{i\theta _j}\) (so \(u_j=\text {e}^{i\theta _j}\)) and then, noticing that \(a_j\) depends only on the angular coordinate, integrate over \(r_1,\ldots , r_4\). This gives us that (3.6) is less than or equal to

where \(a_j=a_j(\theta _1,\ldots , \theta _4).\) Now, we divide the integral over the \(\theta _j\)’s into several parts, depending on which \(a_j\)’s are smaller or bigger than \(\varepsilon \). Let

A computation yields that the integral over \(A_j\) is \(O(\varepsilon ^{2-\eta })\) for all j, the integral over \(B_j\) is \(O( \varepsilon ^{3-\eta })\) for all j, the integral over D is \(O(\varepsilon ^{2-\eta })\), and the integral over E is \(O(\varepsilon ^{2-\eta })\). This completes the proof of (3.4) and hence of the proposition. \(\square \)

Proof of Proposition 3.1

The strategy of the proof is to construct a sequence of analytic Jordan domains \((D_n)_{n\geqslant 1}\), all contained in D, such that \(((h^D,\rho _{z_1}^{D_n}),\ldots , (h^D,\rho _{z_k}^{D_n})) \rightarrow (h_\varepsilon (z_1) ,\ldots , h_\varepsilon (z_k))\) in a precise sense as \(n\rightarrow \infty \). More concretely, it is enough to show that for any \((a_1,\ldots , a_k)\in {\mathbb {R}}^k\), we can choose a sequence of analytic domains \((D_n)_{n\geqslant 1}\), such that setting

we have

as \(n\rightarrow \infty \). Since \(Y_n\) is Gaussian for every n (by Proposition 3.3) and Z has finite variance, this shows that Z is Gaussian.

So, we choose the \(D_n\). This will involve first defining a sequence of auxiliary domains \(D_n'\), that need not be analytic, and then using them to define the analytic domains \(D_n\).

To begin, we observe that for \(n\in {\mathbb {N}}\) with \(1/n<\varepsilon \), the balls \(\{B_{z_i}(\varepsilon +1/n): 1\leqslant i \leqslant k\}\) are disjoint. Let us choose a further point \(z\in D\), that does not lie in any of these balls. It is easy to see (since the set \(\{z_i\}_{1\leqslant i \leqslant k}\) is finite) that one can choose such a z, along with a smooth curve \(\gamma _i\) from z to \(z_i\) for each \(1\leqslant i \leqslant k\), and \(c,c'\in (0,1)\) such that:

\(\gamma _i\cap \partial B(z_j,\varepsilon )\) is empty for \(i\ne j\) and consists of exactly one point when \(i=j\), \(1\leqslant i \leqslant k\);

the c / n fattenings \(\gamma _i^n:= \{z\in D: d(z,\gamma _i)<c/n\}\) of the \(\gamma _i\) are such that \(D'_n:=\bigcup _{1\leqslant i \leqslant k} \gamma _i^n \cup B_{z_i}(\varepsilon +1/n)\) is a simply connected domain strictly contained in D for every \(n>1/\varepsilon \);

the boundary of \(D_n'\) contains, for each \(1\leqslant i \leqslant k\), the curve \(\partial B_{z_i}(\varepsilon +1/n){\setminus } A_i^n\), where \(A_i^n\) is an arc of \(\partial B_{z_i}(\varepsilon )\) that has length \(\leqslant c'/n\).

We need the following basic statement that says, in some sense, that \(D_n'\) is a good approximation to \(\cup _i B_{z_i}(\varepsilon )\) for large n.

Lemma 3.5

For every \(1\leqslant i \leqslant k\),

as \(n\rightarrow \infty \), where the supremum is over all simply connected domains \(D'\) satisfying the indicated inclusions.

Proof

Without loss of generality, we prove the result for \(i=1\), and assume that \(\text {diam}(D)\leqslant 1\). Fix \(D_n'\subset D' \subset D_{n/2}'\) simply connected. Then by harmonicity of the Green’s function, we have

and also for \(y\in \partial B_{z_1}(\varepsilon )\),

where the expectation \({\mathbb {E}}_y\) is for a Brownian motion B starting from y, and \(\tau _{D''}\) is its exit time from \(D'':=D_{n/2}'\).

Moreover, we have the lower bound \({\mathbb {E}}_y[\log (1/|B_{\tau _{D''}}-z_1|)]\geqslant \log (1/(\varepsilon +2/n))(1-p_{y,n}),\) where \(p_{y,n}\) is the probability that a Brownian motion started from y exits \(B_{z_1}(\varepsilon +2/n)\) through the boundary arc \(A_1^{n/2}\) (here we use that \(\text {diam}(D)\leqslant 1\), so that the logarithm is non-negative at the exit point). Since \(p_{y,n}\) tends to 0 as \(n\rightarrow \infty \) for almost every \(y\in \partial B_{z_1}(\varepsilon )\) (in fact, the only y for which this fails to hold is the single intersection point of \(\partial B_{z_1}(\varepsilon )\) and \(\gamma _1\)), it follows by dominated convergence that \(\int G^{D''}(z_1,y)\rho _{z_1}^\varepsilon (dy)\rightarrow 0\) as \(n\rightarrow \infty \) (Fig. 3). The lemma then follows from the inequalities on the left hand side of (3.8). \(\square \)
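The behaviour of \(p_{y,n}\) in the proof above can be checked explicitly, since the exit distribution of Brownian motion from a disc is given by the Poisson kernel. The following sketch is illustrative only. It places y at angle 0 on \(\partial B_{z_1}(\varepsilon )\), takes the arc to have length exactly \(2c'/n\) and to be centred at angle `theta0`, and assumes the normalisation \(G^{D''}(z_1,y)=\log (1/|y-z_1|)-{\mathbb {E}}_y[\log (1/|B_{\tau _{D''}}-z_1|)]\) of the Green's function. The function names and numerical values are hypothetical.

```python
import math


def arc_exit_probability(eps, n, c_prime, theta0):
    """Probability that Brownian motion started at radius eps, angle 0, exits the
    disc of radius eps + 2/n through an arc of length 2*c_prime/n centred at theta0.

    Uses the antiderivative of the Poisson kernel of the disc, which is valid as
    long as the arc stays inside the angular window (-pi, pi).
    """
    R = eps + 2.0 / n              # radius of the larger disc
    r = eps                        # distance of the starting point from the centre
    half_angle = (c_prime / n) / R  # half of the angle subtended by the arc
    k = (R + r) / (R - r)

    def cumulative(theta):
        # Harmonic measure of the arc from angle 0 to angle theta.
        return math.atan(k * math.tan(theta / 2.0)) / math.pi

    return cumulative(theta0 + half_angle) - cumulative(theta0 - half_angle)


def green_upper_bound(eps, n, p):
    """Upper bound log(1/eps) - (1 - p) * log(1/(eps + 2/n)) on G(z_1, y) for
    |y - z_1| = eps, under the assumed normalisation of the Green's function."""
    return math.log(1.0 / eps) - (1.0 - p) * math.log(1.0 / (eps + 2.0 / n))


if __name__ == "__main__":
    eps, c_prime = 0.1, 0.5
    for n in (100, 1000, 10000):
        p_generic = arc_exit_probability(eps, n, c_prime, theta0=1.0)
        p_facing = arc_exit_probability(eps, n, c_prime, theta0=0.0)
        print(n, p_generic, green_upper_bound(eps, n, p_generic), p_facing)
```

For a starting point at a fixed positive angle from the arc, the exit probability and the resulting bound decay as n grows. For the point directly facing the arc (`theta0 = 0`), the probability stays bounded away from 0, which matches the single exceptional point in the proof.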

Fig. 3 The domain \(D_n'\) from the proof of Proposition 3.1. The boundary of \(D_n'\) overlaps with \(\partial B_{z_i}(\varepsilon +1/n)\) for \(1\leqslant i \leqslant k\) except on k small arcs \((A_i^n)_{1\leqslant i\leqslant k}\) with maximum length tending to 0. Here \(k=4\).

Now from the \((D_n')_n\) we define our sequence of domains \((D_n)_n\), such that \(D_n\) is analytic, and also \(D_n'\subset D_n\subset D_{n/2}'\) for each n. This second condition will allow us to apply Lemma 3.5.

By the Riemann mapping theorem for doubly connected domains, we know that we can choose a conformal map \(\phi \) from \(D{\setminus } \overline{D_n'}\) to the annulus \({\mathbb {D}}{\setminus } \overline{r{\mathbb {D}}}\) for some unique \(r\in (0,1)\). For each \(r<s<1\), denote by \(D_n'(s)\) the complement in D of the preimage of \({\mathbb {D}}{\setminus } \overline{s{\mathbb {D}}}\) under \(\phi \). Then \(D_n'(s)\) is a simply connected domain containing \(D_n'\) for every \(s\in (r,1)\), and \(\cap _{s\in (r,1)}D_n'(s)\) is equal to \(D_n'\). Hence there exists some \(1>s_n>r\) such that \(D'_n(s_n)\) is contained in \(D_{n/2}'\). We then define

\[ D_n:=D_n'(s_n). \]

It is clear that \(D_n\) is analytic for every n (since by definition its boundary is the image of a circle under a conformal map that is defined in a neighbourhood of the circle) and also, by construction, that \(D_n'\subset D_n\subset D_{n/2}'\).

Having defined the \(D_n\), we just need to prove (3.7). Without loss of generality it is enough to show that \({\mathbb {E}}[(h^D_\varepsilon (z_1)-(h^D,\rho _{z_1}^{\partial D_n}))^2]\rightarrow 0\) as \(n\rightarrow \infty \). For this, write \(h^D=h_D^{D_n}+\varphi _D^{D_n}\) using the domain Markov decomposition, so that \((h^D,\rho _{z_1}^{\partial D_n})=\varphi _D^{D_n}(z_1).\) Then since \(B_{z_1}(\varepsilon )\subset D_n\) we can further write \(h_D^{D_n}=h_{D_n}^{B_{z_1}(\varepsilon )}+\varphi _{D_n}^{B_{z_1}(\varepsilon )}\), and by uniqueness, we must have

Thus, we need to show that \({\mathbb {E}}[\varphi _{D_n}^{B_{z_1}(\varepsilon )}(z_1)^2]\rightarrow 0\) as \(n\rightarrow \infty \). However, from the definition of the circle average as an \(L^2\) limit (Lemma 2.1) and the identification of the covariance structure (2.9), we know that

The result then follows from Lemma 3.5. \(\square \)

4 Proof of Theorem 1.6

To conclude we prove convergence of the circle average field, which then implies Theorem 1.6 by Lemma 2.18.

Lemma 4.1

For any \(\phi \in C_c^\infty (D)\), \((h_\varepsilon ^D,\phi )\) converges to \((h^D_{\text {GFF}},\phi )\) in distribution as \(\varepsilon \rightarrow 0\).

We first see how this implies Theorem 1.6.

Proof of Theorem 1.6

To prove that \(h^D\overset{(d)}{=} h^D_{\text {GFF}}\) we need to show that for any \((\phi _1,\ldots , \phi _n)\) with \((\phi _i)_{1\leqslant i \leqslant n}\in C_c^\infty (D)\), \(((h^D,\phi _1), \ldots , (h^D,\phi _n))\) is a Gaussian vector with mean 0 and the correct covariance matrix. Equivalently, we need to show that for any \((u_1,\ldots , u_n)\in {\mathbb {R}}^n\), the sum \(\sum _1^n u_i (h^D,\phi _i)\) is a centered Gaussian variable with the correct variance. By linearity, we therefore need only prove that for any \(\phi \in C_c^\infty (D)\),

\[ (h^D,\phi )\overset{(d)}{=} (h^D_{\text {GFF}},\phi ). \]

So, we fix such a \(\phi \). By Lemma 2.18, we know that \({{\,\mathrm{Var}\,}}((h_\varepsilon ^D,\phi )-(h^D,\phi ))\rightarrow 0\) as \(\varepsilon \rightarrow 0\). Thus \((h_\varepsilon ^D,\phi )\) converges to \((h^D,\phi )\) in distribution. From here, Lemma 4.1 implies the result. \(\square \)

Proof of Lemma 4.1

We will prove that for every \(n\in {\mathbb {N}}\), \({\mathbb {E}}[(h_\varepsilon ^D,\phi )^n]\rightarrow {\mathbb {E}}[(h_{\text {GFF}}^D,\phi )^n]\) as \(\varepsilon \rightarrow 0\), which implies the result by the method of moments [6, Theorem 30.2], since the Gaussian distribution is determined by its moments. This requires a bit of care however, since a priori it is not even clear that this moment is well defined when \(n \geqslant 4\).

To show the convergence, we need to compute the limit as \(\varepsilon \rightarrow 0\) of

where in the middle term, we have decomposed the integral over \(D^n\) into the integrals over \(A_\varepsilon := \{ (z_1,\ldots , z_n) \in D^n: |z_i- z_j|>2\varepsilon \text { for all } i\ne j\}\) and \(E_\varepsilon := D^n {\setminus } A_\varepsilon \). We assume that \(\varepsilon >0\) is always small enough that \(d(z,\partial D)>2\varepsilon \) for every z in the support of \(\phi \). We will consider the right hand side and show that both terms are well defined and finite, from which it will follow by Fubini’s theorem that the moment on the left hand side is also finite.

Let us first show that \(I^{E_\varepsilon }\rightarrow 0\) as \(\varepsilon \rightarrow 0\). This follows from our a priori bounds on the two point function in Lemma 2.12. Indeed, for any \(z_1,\ldots , z_n\) in the support of \(\phi \), we have that the \(h_\varepsilon (z_i)\) are marginally Gaussian (Proposition 3.1), and therefore the nth moment of \(|h_\varepsilon (z_i)|\) is at most \(c {\mathbb {E}}( h_\varepsilon (z_i)^2)^{n/2}\) for some constant c depending only on n. Therefore by Hölder’s inequality and Lemma 2.12, we have

for some constant c depending on n but not on \(\varepsilon \) (note this already implies that for fixed \(\varepsilon >0\), \((h_\varepsilon , \phi )\) has finite nth moment). Hence we can apply Fubini to bring the expectation inside the integral in \(I^{E_\varepsilon }\), and conclude that

Here we have used that the integral of \(\prod _i |\phi (z_i)|\) over \(E_\varepsilon \) is \(O(\varepsilon ^2)\): indeed, for fixed \(n \geqslant 2\), \(E_\varepsilon \) is a finite union over pairs \(i\ne j\) of sets of the form \(\{(z_1,\ldots , z_n)\in D^n: |z_i-z_j|\leqslant 2\varepsilon \}\), each of which has Lebesgue measure \(O(\varepsilon ^2)\), and \(\phi \) is bounded.
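The two ingredients of this estimate can be made concrete. The sketch below computes the constant in the Gaussian moment bound, using the standard identity \({\mathbb {E}}|X|^n = 2^{n/2}\Gamma ((n+1)/2)\pi ^{-1/2}\, {\mathbb {E}}(X^2)^{n/2}\) for a centred Gaussian X, together with a crude union bound on the Lebesgue measure of \(E_\varepsilon \). The function names and the parameter `area` (standing for the area of D) are hypothetical and chosen for illustration.

```python
import math


def gaussian_abs_moment_constant(n):
    """Constant c_n with E|X|^n = c_n * (E[X^2])^(n/2) for a centred Gaussian X."""
    return 2.0 ** (n / 2.0) * math.gamma((n + 1) / 2.0) / math.sqrt(math.pi)


def close_pairs_volume_bound(n, eps, area):
    """Union bound on the Lebesgue measure of E_eps inside D^n.

    For each of the n*(n-1)/2 pairs {i, j}, the set {|z_i - z_j| <= 2*eps} has
    measure at most pi * (2*eps)^2 * area^(n-1): z_j ranges over D, z_i over a
    disc of radius 2*eps around z_j, and the remaining n-2 points over D.
    """
    pairs = n * (n - 1) // 2
    return pairs * math.pi * (2.0 * eps) ** 2 * area ** (n - 1)


if __name__ == "__main__":
    for n in (1, 2, 4, 6):
        print(n, gaussian_abs_moment_constant(n))
    for eps in (0.1, 0.05, 0.025):
        print(eps, close_pairs_volume_bound(4, eps, area=1.0))
```

Halving `eps` divides the volume bound by four, which is the \(O(\varepsilon ^2)\) behaviour used above; the moment constant depends on n only.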

Consequently, we need only consider the term \(I^{A_\varepsilon }\) on the right-hand side of (4.1). For this we use Proposition 3.1, which tells us that for every \((z_1,\ldots , z_n)\in A_\varepsilon \) , \((h_\varepsilon ^D(z_1),\ldots , h_\varepsilon ^D(z_n))\) is multivariate normal with mean \((0, \ldots , 0)\). Therefore, by the Wick rule (to be more precise, Isserlis’ theorem), we have that

on \(A_\varepsilon \), where the above sum is over all pairings of \(\{1,2,\ldots , n\}\). In fact, by (2.7), Proposition 2.10, Lemma 2.14 and Cauchy–Schwarz, we know that for any \((z_1,\ldots , z_n) \in A_\varepsilon \) and any \(i\ne j\),

This allows us to deduce that the right hand side of (4.3) is bounded above by a function independent of \(\varepsilon \), that is also integrable over \(D^n\). Thus we can apply Fubini and then the dominated convergence theorem in (4.1), to see that

where the penultimate line follows by (2.7), and the final line by the same reasoning as in (4.2). From this, it follows that \(\lim _{\varepsilon \rightarrow 0} {\mathbb {E}}[(h_\varepsilon ^D,\phi )^n]= {\mathbb {E}}[(h_{\text {GFF}}^D,\phi )^n]\), and hence we have concluded the proof of Lemma 4.1 and of Theorem 1.6. \(\square \)
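Isserlis' theorem, as used in the proof above, expresses a mixed moment of a centred Gaussian vector as a sum over pairings of products of covariances. The following sketch enumerates the pairings directly. The function names are hypothetical, and the covariance matrix in the usage example is an arbitrary illustrative choice that is not taken from the paper.

```python
def pairings(indices):
    """Yield every way of splitting the list `indices` into unordered pairs."""
    if not indices:
        yield []
        return
    first, rest = indices[0], indices[1:]
    for k, partner in enumerate(rest):
        remaining = rest[:k] + rest[k + 1:]
        for tail in pairings(remaining):
            yield [(first, partner)] + tail


def isserlis_moment(cov, indices):
    """E[X_{i_1} ... X_{i_m}] for a centred Gaussian vector X with covariance `cov`.

    `indices` may contain repeated entries; the moment vanishes when m is odd.
    """
    if len(indices) % 2 == 1:
        return 0.0
    total = 0.0
    for pairing in pairings(list(indices)):
        term = 1.0
        for i, j in pairing:
            term *= cov[i][j]
        total += term
    return total


if __name__ == "__main__":
    cov = [[1.0, 0.5], [0.5, 2.0]]  # illustrative covariance, not from the paper
    print(isserlis_moment(cov, [0, 0, 0, 0]))  # fourth moment of X_0: 3 * Var(X_0)^2
    print(isserlis_moment(cov, [0, 0, 1, 1]))  # E[X_0^2 X_1^2]
```

The number of pairings of n points is \((n-1)!!\) for even n, so the right hand side of the Wick formula has finitely many terms for each fixed n, and each term is a product of two point functions.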

5 Proof of Theorem 1.9

First, we prove that the family \(\{X^{[0,2^n]}(1)\}_{n\in {\mathbb {N}}}\) is tight:

Lemma 5.1

For any \(\varepsilon >0\) there exists \(M>0\) such that \({\mathbb {P}}(|X^{[0,2^n]}(1)|\geqslant M)\leqslant \varepsilon \) for all \(n\in {\mathbb {N}}\).

Proof

First observe that \(X^{[0,2^n]}(1){\mathop {=}\limits ^{(d)}}2^{\frac{n}{2}} X^{[0,1]}(2^{-n})\), by the assumption of Brownian scaling. Then, by iteratively dividing the interval [0, 1] into two and using scaling and the Markov property again, we can write

where the \((X^{[0,1]}_k \, : \, 0\leqslant k \leqslant n-1)\) are independent copies of \(X^{[0,1]}\). Write \(Y_n\) for the right hand side of (5.1), and let X have the law of \(X^{[0,1]}(1/2)\). By Assumptions 1.8 we know that

The idea is to derive a uniform bound (in n) for \({\mathbb {P}}(|Y_n|>M)\) by recursion.

To do this, write

(where \(X_n^{[0,1]}(1/2)\) has the same distribution as X). This means that if we pick some \(a\in (1,\sqrt{2})\) and set \(b=1-\frac{a}{\sqrt{2}}\in (0,1)\) we have that

Since \({\mathbb {P}}(|Y_0|\geqslant M)={\mathbb {P}}(|X|\geqslant M/2)\) we have by iteration that

and we can bound this sum above by

By (5.2) the right hand side converges to 0 as \(M\rightarrow \infty \), and it is clearly uniform in n, which completes the proof. \(\square \)
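As a sanity check on the scaling identity and on the tightness statement, one can take the Brownian bridge as a model example. The sketch below assumes that \(X^{[0,T]}\) is a standard Brownian bridge from 0 to 0 on [0, T], so that its variance at time t is \(t(T-t)/T\). This is an illustrative assumption and is not used in the proof, and the function names are hypothetical. It compares the variances on both sides of the scaling identity and uses Chebyshev's inequality to obtain a tail bound that is uniform in n.

```python
def bridge_variance(T, t):
    """Variance at time t of a standard Brownian bridge from 0 to 0 on [0, T]."""
    return t * (T - t) / T


def scaled_variance(n):
    """Variance of 2^(n/2) * X^{[0,1]}(2^-n) when X^{[0,1]} is a Brownian bridge."""
    return 2.0 ** n * bridge_variance(1.0, 2.0 ** (-n))


def chebyshev_tail_bound(n, M):
    """Chebyshev bound on P(|X^{[0,2^n]}(1)| >= M) in the Brownian bridge example."""
    return bridge_variance(2.0 ** n, 1.0) / M ** 2


if __name__ == "__main__":
    for n in range(0, 6):
        print(n, bridge_variance(2.0 ** n, 1.0), scaled_variance(n),
              chebyshev_tail_bound(n, M=10.0))
```

In this example the variance of \(X^{[0,2^n]}(1)\) equals \(1-2^{-n}\), which is bounded by 1 for every n, so a single M works for all n. The proof above obtains the same uniformity without assuming Gaussianity or finite variance.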

We now claim that, locally, the process \(X^{[0,2^n]}\) (in the large n limit) has to be a constant times a Brownian motion.

Lemma 5.2

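We have the following convergence in the sense of finite dimensional distributions:

\[ (X^{[0,2^n]}(t))_{t\in [0,1]}\rightarrow (\sigma B(t))_{t\in [0,1]} \quad \text {as } n\rightarrow \infty , \]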

for some constant \(\sigma \geqslant 0\) where \((B(t))_{t\geqslant 0}\) is a standard Brownian motion.

Proof

Step one is to show that for any sequence of natural numbers going to infinity, there exists a subsequence n(k) such that \((X^{[0,2^{n(k)}]}(t))_{t\in [0, 1]}\) converges as \(k \rightarrow \infty \) (in the sense of finite-dimensional distributions). To do this, we write by the domain Markov property applied to the subinterval \([0,1] \subset [0,2^n]\):

where \({\tilde{X}}^{[0,1]}\) is an independent copy of \(X^{[0,1]}\). This means that to show convergence of (all) the finite dimensional distributions of \(X^{[0,2^n]}\) along (the same) subsequence, it suffices to show that \(X^{[0,2^n]}(1)\) has subsequential limits. However, this is just a consequence of Lemma 5.1.
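The domain Markov decomposition on the subinterval [0, 1] can also be checked at the level of variances in the Brownian bridge example. The sketch below assumes two things that are not stated in this form in the text: that \(X^{[0,T]}\) is a standard Brownian bridge on [0, T], and that the decomposition reads \(X^{[0,2^n]}(t)=tX^{[0,2^n]}(1)+{\tilde{X}}^{[0,1]}(t)\) for \(t\in [0,1]\), that is, the linear interpolation of the endpoint value plus an independent bridge. The function names are hypothetical.

```python
def bridge_variance(T, t):
    """Variance at time t of a standard Brownian bridge from 0 to 0 on [0, T]."""
    return t * (T - t) / T


def decomposition_variance(n, t):
    """Variance of t * X^{[0,2^n]}(1) + (independent bridge on [0,1] at time t)."""
    endpoint_part = t ** 2 * bridge_variance(2.0 ** n, 1.0)
    independent_part = bridge_variance(1.0, t)
    return endpoint_part + independent_part


if __name__ == "__main__":
    t = 0.3
    for n in (1, 2, 5, 10, 20):
        # Both columns agree, and they approach t as n grows.
        print(n, bridge_variance(2.0 ** n, t), decomposition_variance(n, t))
```

Under these assumptions both expressions equal \(t(2^n-t)/2^n\), which tends to t as \(n\rightarrow \infty \). This is the variance of a standard Brownian motion at time t, and it illustrates why the only randomness that needs to be controlled along a subsequence is that of \(X^{[0,2^n]}(1)\).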

So now assume that we have a subsequence \((n(k): k\geqslant 1)\) such that \((X^{[0,2^{n(k)}]}(t))_{t\in [0, 1]}\) converges to \((Y(t))_{t\in [0, 1]}\) in the sense of finite-dimensional distributions. If we can show that \(Y(t) = \sigma B(t)\) in the sense of finite-dimensional distributions for some \(\sigma \geqslant 0\) (not depending on the subsequence), then we will have completed the proof.

We first show that Y has independent and stationary increments. Pick \(0=t_0\leqslant t_1\leqslant \cdots \leqslant t_l \leqslant t_{l+1} =1\), and observe that by the Markov property,

is a limit in distribution as \(k\rightarrow \infty \) of

where the \(X^{[0,2^{n(k)}-t_{j-1}]}_j\) (for \(1\leqslant j\leqslant l+1\)) are independent copies of \(X^{[0,2^{n(k)}-t_{j-1}]}\) ; and \(Z^{k}_j\) for \(2\leqslant j \leqslant l\) is defined recursively by

Now we claim that for any \(s\in [0,1]\) and \(u\in [0,1)\), \(X^{[0,2^{n(k)}-s]}(u)\) converges in distribution as \(k\rightarrow \infty \) to the same limit as \(X^{[0,2^{n(k)}]}(u)\). To see this, we write by scaling and (5.3), whenever k is large enough that \(2^{n(k)}(2^{n(k)}-s)^{-1}u\leqslant 1\):

where \({\tilde{X}}^{[0,1]}\) is an independent copy of \(X^{[0,1]}\). Since \(X^{[0,1]}\) is stochastically continuous, the claim follows.

By the above claim, an induction argument, and the fact that \((t_{j+1}-t_j)/(2^{n(k)}-t_j) \rightarrow 0\) as \(k\rightarrow \infty \), it follows that \((t_{j+1}-t_j)/(2^{n(k)}-t_j) \times Z_j^k\) converges to 0 in distribution as \(k\rightarrow \infty \) for every \(1\leqslant j\leqslant l\). This means that the law of (5.4) is the same as the limit in distribution of

For this last step we have also used the independence of the \((X_j)\), the fact that the \((t_{j+1}-t_j)/(2^{n(k)}-t_j)\times Z_j^k\) actually converge in probability (because they converge in distribution to a constant), and the claim one more time.

Finally, by independence of the \(X_j\) again, we deduce that the entries in (5.4) (and so the increments of Y) must be independent. Furthermore the distribution of the jth entry depends only on \(t_j-t_{j-1}\) and so the increments are stationary. Hence, \((Y(t))_{t\in [0,1]}\) has independent and stationary increments. Y is also continuous in probability at every t, because of (5.3) and Assumptions 1.8. Thus Y is a Lévy process on [0, 1] (and can be extended to a Lévy process on all of \([0, \infty )\) by adding independent copies on \([1,2], [2,3], \ldots \)).

Now it is clear that Y also enjoys the scaling property: for \(t \leqslant 1\),

where all the equalities above are in law and the limits are in the sense of distribution. To justify the last equality we write, by the domain Markov property,

where X and \({\tilde{X}}\) are independent. Since the first term converges to \(\sqrt{t}Y(1)\) in distribution, and the second, by scaling, is equal in distribution to \(2^{-n(k)/2} X^{[0,1]}(t)\), we obtain the result.