Abstract

We introduce a graph-theoretic model of interface dynamics called competitive erosion. Each vertex of the graph is occupied by a particle that can be either red or blue. New red and blue particles alternately get emitted from their respective bases and perform random walk. On encountering a particle of the opposite color they kill it and occupy its position. We prove that on the cylinder graph (the product of a path and a cycle) an interface spontaneously forms between red and blue and is maintained in a predictable position with high probability.
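The dynamics described above can be sketched in a few lines. This is only an illustrative simulation, not the construction used in the proofs: the initial colouring (red on the bottom half, blue on the top half), the choice of the bottom and top rows as bases, the holding rule at the two ends of the path, and the names `competitive_erosion` and `red_count` are all assumptions made for the example.

```python
import random

def competitive_erosion(width, height, pairs, seed=0):
    """Run `pairs` red/blue emission rounds on a width x height cylinder.

    Assumptions (not from the source): red starts on the bottom half and is
    emitted uniformly from row 0; blue starts on the top half and is emitted
    uniformly from the top row; a walker that tries to step off the top or
    bottom row stays where it is for that step.
    """
    rng = random.Random(seed)
    # colour[y][x] is 'R' or 'B'; x is the cycle coordinate, y the path coordinate.
    colour = [['R' if y < height // 2 else 'B' for _ in range(width)]
              for y in range(height)]
    steps = [(1, 0), (-1, 0), (0, 1), (0, -1)]
    for i in range(2 * pairs):
        mine = 'R' if i % 2 == 0 else 'B'
        x = rng.randrange(width)
        y = 0 if mine == 'R' else height - 1
        # Walk until the particle stands on a site of the opposite colour.
        while colour[y][x] == mine:
            dx, dy = rng.choice(steps)
            x = (x + dx) % width
            if 0 <= y + dy < height:
                y += dy
        colour[y][x] = mine  # kill the occupant and take its place
    return colour

def red_count(colour):
    """Number of red sites in the configuration."""
    return sum(row.count('R') for row in colour)
```

Each red particle adds one red site and each blue particle removes one, so the number of red sites is unchanged after every complete red/blue round; only the shape of the interface evolves.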

References

Asselah, A., Gaudillière, A.: From logarithmic to subdiffusive polynomial fluctuations for internal DLA and related growth models. Ann. Probab. 41(3A), 1115–1159 (2013)

Asselah, A., Gaudillière, A.: Lower bounds on fluctuations for internal DLA. Probab. Theory Relat. Fields 158(1–2), 39–53 (2014)

Candellero, E., Ganguly, S., Hoffman, C., Levine, L.: Oil and water: a two-type internal aggregation model, arXiv preprint. arXiv:1408.0776 (2014)

Fayolle, G., Malyshev, V.A., Menshikov, M.V.: Topics in the Constructive Theory of Countable Markov Chains. Cambridge University Press, Cambridge (1995)

Jerison, D., Levine, L., Sheffield, S.: Logarithmic fluctuations for internal DLA. J. Am. Math. Soc. 25(1), 271–301 (2012)

Jerison, D., Levine, L., Sheffield, S.: Internal DLA and the Gaussian free field. Duke Math. J. 163(2), 267–308 (2014)

Jerison, D., Levine, L., Sheffield, S.: Internal DLA for cylinders. In: Fefferman, C., Ionescu, A.D., Phong, D.H., Wainger, S. (eds.) Advances in Analysis: The Legacy of Elias M. Stein, pp. 189–214. Princeton University Press, Princeton (2014)

Kozma, G., Schreiber, E.: An asymptotic expansion for the discrete harmonic potential. Electron. J. Probab. 9(1), 1–17 (2004)

Lawler, G.F.: Intersections of Random Walks. Modern Birkhäuser Classics, Birkhäuser (2012)

Lawler, G.F., Bramson, M.: Internal diffusion limited aggregation. Ann. Probab. 20(4), 2117–2140 (1992)

Levin, D.A., Peres, Y., Wilmer, E.L.: Markov Chains and Mixing Times. American Mathematical Society, Providence (2009)

Levine, L., Peres, Y.: Strong spherical asymptotics for rotor-router aggregation and the divisible sandpile. Potential Anal. 30(1), 1–27 (2009)

Levine, L., Peres, Y.: Scaling limits for internal aggregation models with multiple sources. Journal d’Analyse Mathématique 111(1), 151–219 (2010)

Stanley, R.: Promotion and evacuation. Electron. J. Comb. 16(2), R9 (2009)

Timár, Á.: Boundary-connectivity via graph theory. Proc. Am. Math. Soc. 141(2), 475–480 (2013)

Acknowledgments

We thank Gerandy Brito and Matthew Junge for helpful comments. We also thank the anonymous referees for many useful comments and suggestions that helped improve the paper. The work was initiated when S.G. was an intern with the Theory Group at Microsoft Research, Redmond and a part of it was completed when L.L. and J.P. were visiting. They thank the group for its hospitality.

Additional information

Supported by NSF Grant DMS-1243606 and a Sloan Fellowship.

Partially supported by NSF Grant DMS-1001905.

Appendices

Appendix 1: IDLA on the cylinder

The proof of Theorem 6 follows by adapting the ideas of the proof appearing in [10]. The proof in [10] follows from a series of lemmas which we now state in our setting. Recall (74). Let \(\tau _{z}\), \({\tilde{\tau }}_{kn}\) be the hitting times of \(\phi (z)\) and \(\phi (Y_{kn,n})\) respectively.

Lemma 17

For any \(z=(x,y)\in {\mathcal {C}}_n\) with \(y\le kn\)

where \({\mathbb {P}}_{\phi (w)}\) and \({\mathbb {P}}_{Y_{0,n}}\) are the random walk measures on \({\mathcal {C}}_n\) with starting point \(\phi (w)\) and uniform over \(Y_{0,n}\) respectively.

Proof

By symmetry in the first coordinate, under \({\mathbb {P}}_{Y_{0,n}},\) for any j, the distribution of the random walk when it first hits the set \(Y_{j,n}\) is uniform over \(Y_{j,n}\). Hence by the Markov property the chance that the random walk hits \(\phi (z)\) before \(Y_{\phi (kn),n}\) after reaching the line \(Y_{\phi (j),n}\) is

Thus clearly for any \(j< kn\)

The lemma follows by summing over j from 0 through \(kn-1\). \(\square \)

Lemma 18

Given positive numbers k and \({\epsilon }\) with \({\epsilon }< 1\), there exists \(\beta =\beta (k,{\epsilon })\) such that for all \(z=(x,y)\) with \(y\le (1-{\epsilon })kn\)

Proof

Since \(\phi (kn)-\phi (y)\ge {\epsilon }kn\), the semi-disc of radius \(\min ({\epsilon }k,1/2)n\) around z lies below the line \(Y_{\phi (kn),n}\). The random walk starting uniformly on \(Y_{0,n}\) hits the interval \((z-\min ({\epsilon }k/2 ,1/4)n,z+\min ({\epsilon }k/2 ,1/4)n)\) with probability at least \(\min ({\epsilon }k,1/2)\). The lemma now follows from the standard result that a random walk starting within radius \(n/2\) has \(\Omega (\frac{1}{\log n})\) chance of hitting the origin before exiting the ball of radius n in \({\mathbb {Z}}^2.\) This fact can be found in [9, Prop 1.6.7]. \(\square \)

The next result is the standard Azuma-Hoeffding inequality stated for sums of indicator variables.

Lemma 19

For any positive integer n, if \(X_i\), \(i=1,2, \ldots , n\), are independent indicator variables then

where \({\mu ={{\mathbb {E}}}\sum _{i=1}^{n}X_i}.\)

1.1 Hitting estimates

Consider the simple random walk \((Y(t))_{t \ge 0}\) on \({\mathbb {Z}}^2\).

Lemma 20

For \((x,y) \in {\mathbb {Z}}^2\), let \(h(x,y) = {{\mathbb {P}}}_{(x,y)} \{ Y(\tau ({\mathbb {Z}}\times \{0\})) = (0,0) \}\) be the probability of first hitting the x-axis at the origin. Then

Proof

Let

The discrete Laplacian

vanishes except when \((x,y) = (0,\pm 1)\), and \(\Delta \widetilde{h}(0,\pm 1) = \pm \frac{1}{4}\). Since \({{\widetilde{h}}}\) vanishes at \(\infty \) it follows that

where

is the recurrent potential kernel for \({\mathbb {Z}}^2\) (see [8]). Here \(\kappa \) is a constant whose value is irrelevant because it cancels in the difference (92). \(\square \)

Let \(X(\cdot )\) be the simple symmetric random walk on the half-infinite cylinder \({\mathcal {C}}_n=C_n \times {\mathbb {Z}}_{\ge 0}.\)

Lemma 21

For any positive integers \(j<k\), with \(\Delta =k-j<n \):

\(\mathrm{i.}\) for any \(w \in Y_{k,n}\),

where \(\tau (j)\) and \(\tau ^{+}(k)\) are the hitting and positive hitting times of \(Y_{j,n}\) and \(Y_{k,n}\) respectively for \(X(\cdot )\).

\(\mathrm{ii.}\) there exists a constant J such that for \(w\in Y_{j,n}\) and any subset \(B\subset Y_{k,n}\),

Proof

i. is the following standard result about one-dimensional random walk: starting from 1 the probability of hitting \(\Delta \) before 0 is \(\frac{1}{\Delta }.\)

Now we prove ii. Clearly it suffices to prove it in the case when B consists of a single element. Notice that, if \(Y(t)=(Y_1(t),Y_2(t))\) is the simple random walk on \({\mathbb {Z}}^2\), then

is distributed as the simple random walk on \({\mathcal {C}}_n.\) For any \(\ell \in {\mathbb {Z}}\) let \(\tau _1(\ell )\) be the hitting time of the line \(y=\ell ,\) for Y(t). Clearly by (93) for \(w=(0,j),z=(z_1,k)\in {\mathcal {C}}_n,\)

By union bound the RHS is at most

Using the notation in Lemma 20 we can write the above sum as

By Lemma 20 the above sum is

Hence we are done. \(\square \)
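The one-dimensional fact quoted in part i. can be checked exactly. The sketch below is an illustration only; the function name `hit_top_before_zero` and the holding probability `stay` are hypothetical and not taken from the text. It solves the harmonic equations for a lazy symmetric walk on \(\{0,\ldots ,\Delta \}\) by shooting from \(h(0)=0\), \(h(1)=1\) and then normalising so that \(h(\Delta )=1\).

```python
from fractions import Fraction

def hit_top_before_zero(delta, start=1, stay=Fraction(1, 2)):
    """Exact P(hit delta before 0) for a lazy symmetric walk on {0, ..., delta}.

    `stay` is the holding probability at interior points (must be < 1);
    the walk moves up or down with probability (1 - stay) / 2 each.
    """
    move = (1 - stay) / 2
    h = [Fraction(0), Fraction(1)]  # unnormalised: h(0) = 0, h(1) = 1
    for i in range(1, delta):
        # Solve h[i] = stay * h[i] + move * (h[i - 1] + h_next) for h_next.
        h_next = (h[i] * (1 - stay) - move * h[i - 1]) / move
        h.append(h_next)
    return h[start] / h[delta]  # rescale so that h(delta) = 1
```

For example, `hit_top_before_zero(5)` returns `Fraction(1, 5)`, and the value is the same for any holding probability, since laziness changes the time scale but not the order in which levels are visited.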

1.2 Proof of Theorem 6

Equipped with the results in the previous subsection the proof of Theorem 6 will now be completed by following the steps in [10].

Lower bound It suffices to show \(C_n\times {[0,(1-\epsilon )kn]}\subset A({(1+\epsilon )kn^2})\). Fix \(z\in C_n\times \{(1-\epsilon )kn\}\). For any positive integer i we associate the following stopping times to the \(i^{th}\) walker:

- \(\sigma ^i\): the stopping time in the IDLA process
- \(\tau ^{i}_z\): the hitting time of \(\phi (z)\)
- \(\tau ^{i}_{kn,n}\): the hitting time of the set \(\phi (Y_{kn,n}).\)

Now we define the random variables

Thus

Hence

where the last inequality holds for any a. Now by definition

We now bound the expectation of L. Define the following quantity: let independent random walks start from each \(w\in {\phi (C_n\times [0,kn])}\) and let

Clearly \(L\le {\tilde{L}}.\) Hence the RHS of (94) can be upper bounded by \({\mathbb {P}}(M<a)+{\mathbb {P}}({\tilde{L}}>a)\). Now

Choose \(a=(1+\epsilon /4)\max \bigl (\frac{\beta kn^2}{\ln n},{{\mathbb {E}}}({\tilde{L}})\bigr )\) where the \(\beta \) appears in Lemma 18. Now using Lemma 19, we get,

for some constant \(d=d({\epsilon },k)>0.\) Thus in (94) we get

The proof of the lower bound now follows by taking the union bound:

where the last inequality holds for large enough n when c is smaller than d.

Upper bound In [10] the upper bound is proven by showing that the growth of the cluster above level \((1+\epsilon )kn\) is dominated by a multitype branching process. However here we slightly modify the proof to take into account that in our situation the initial cluster is not empty. We define some notation. Let us denote the particles making it out of level \(\phi (Y_{kn,n})\) by \(w_1,w_2,\ldots \) and define

Choose \(k_0=k(1+\sqrt{{\epsilon }})n.\) We define

Given the above notation let

Define

Lemma 22

[10, Lemma 7] There exists a universal \(J_1>0\) such that for all \(k, {\epsilon }\in (0,1),\) \(n\ge N(k,{\epsilon })\) and all positive integers \(j,\ell \)

We include the proof of Lemma 22 for completeness. However first we show how it implies the upper bound in Theorem 6. Let us define the event

Now let \(B=B(k)>0\) be a constant to be specified later. Then

where \(n'=(k B)\sqrt{\epsilon }n-1 -k\sqrt{{\epsilon }} n.\) To see why these inequalities are true, first note that the set \({\tilde{Y}}_{n',n}\) is at height less than \(k(1+B\sqrt{\epsilon })n.\) Hence the cluster at time \(kn^2\) must intersect \({\tilde{Y}}_{n',n}\) to grow beyond height \(k(1+B\sqrt{\epsilon })n.\) However, on the event F at most \(2{\epsilon }k n^2\) particles out of the first \(kn^2\) move beyond height \(kn\). Hence the size of the intersection of \({\tilde{Y}}_{n',n}\) and the cluster is at most \(Z_{n' }(2k \epsilon n^2).\) Thus we get the first inequality. The second inequality follows from the fact that for a non-negative integer-valued random variable the expectation is at least as big as the probability of the random variable being positive. Using Lemma 22 we get

Thus

Now by choosing B such that \(4J_1 <(B-1)k\) we are done. \(\square \)

Proof of Lemma 22

The rate at which \({\tilde{Y}}_{\ell ,n}\) grows is at most the rate at which a particle exiting height kn reaches the occupied sites in \({\tilde{Y}}_{\ell -1,n}\). Thus if X(t) is the random walk on \(C_n\) defined in (73) then for any m

where the second inequality follows by Lemma 21 ii. Summing over \(m=0,1,\ldots ,j\) we get

Iterating the above relation in \(\ell \) with fixed j gives us

The lemma follows by using the inequality

\(\square \)

Appendix 2: Green’s function and flows

We prove Lemma 6. We start by discussing some properties of the ordinary random walk on \(\mathrm {Cyl}_n\) [defined in (8)]. For any \(v \in \mathrm {Cyl}_n\) define

Lemma 23

For any point \((x,y)\in \mathrm {Cyl}_n\)

Proof

Consider the lazy symmetric random walk on the interval [0, n] where at 0 the chance that it moves to 1 is \(\frac{1}{4}\) and everywhere else the chance that it jumps is \(\frac{1}{2}.\) By symmetry of \(\mathrm {Cyl}_n\) in the x-coordinate it is clear that for all \((x,y)\in \mathrm {Cyl}_n,\) \(4n G_{n}(x,y)\) is the expected number of times that the above one-dimensional random walk starting from ny hits 0 before hitting n. The above quantity is easy to compute and is \(4n(1-y).\ \square \)
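The value \(4n(1-y)\) for the one-dimensional walk in the proof can be confirmed by solving the first-step equations exactly. The following is a sketch under the stated transition rule (move to 1 with probability \(\frac{1}{4}\) at 0, move up or down with probability \(\frac{1}{4}\) each elsewhere, absorption at n); the function name `expected_visits_to_zero` is hypothetical, and the visit at time 0 is counted when the walk starts at 0.

```python
from fractions import Fraction

def expected_visits_to_zero(n):
    """Return [G(0), ..., G(n-1)], where G(j) is the expected number of
    visits to 0 before hitting n for the lazy walk started at j."""
    q = Fraction(1, 4)
    # Unknowns G(0..n-1), with G(n) = 0.  Row j encodes
    # G(j) - sum_k P(j, k) G(k) = 1 if j == 0 else 0.
    A = [[Fraction(0)] * n for _ in range(n)]
    b = [Fraction(0)] * n
    A[0][0] = q              # 1 - P(0, 0) = 1 - 3/4
    b[0] = Fraction(1)
    if n > 1:
        A[0][1] = -q
    for j in range(1, n):
        A[j][j] = 2 * q      # 1 - P(j, j) = 1 - 1/2
        A[j][j - 1] = -q
        if j + 1 < n:
            A[j][j + 1] = -q
    # Gauss-Jordan elimination in exact rational arithmetic.
    for col in range(n):
        piv = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[piv] = A[piv], A[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(n):
            if r != col and A[r][col] != 0:
                f = A[r][col] / A[col][col]
                A[r] = [x - f * y for x, y in zip(A[r], A[col])]
                b[r] -= f * b[col]
    return [b[j] / A[j][j] for j in range(n)]
```

With \(j = ny\), the solution is \(G(j) = 4(n-j) = 4n(1-y)\): it is linear in j because the walk is symmetric in the interior, and the slope is fixed by the equation at 0.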

Remark 5

Thus for any \(\sigma \in \Omega \cup \Omega '_{n}\)

where \(h(\cdot )\) is defined in (14).

We now define the stopped Green’s function. For any \(A\subset \mathrm {Cyl}_n\) and \(v\in \mathrm {Cyl}_n\) define

Lemma 24

Given \(A \subset \mathrm {Cyl}_n\) such that \(A\cap (C_n \times \{1\})=\emptyset ,\) for all (x, y) in \(\mathrm {Cyl}_n\) we have

where \(H_{A}(\cdot )\) was defined in (27).

Proof

Let \(y_{t}\) be the height of the walk at time \(t\le {\tau }(A^c)\). Consider the following telescopic series:

Notice that since \(A\cap (C_n \times \{1\}) =\emptyset \), \(t<\tau (A^c)\) implies \(y_t<1\). We make the following simple observation:

where \({\mathcal {F}}_t\) is the filtration generated by the random walk up to time t. Taking expectations on both sides of (102), we get

and hence we are done. \(\square \)

Remark 6

Note that the above lemma implies for any \((x,y)\,\in \,\mathrm {Cyl}_n\),

since the Green’s function is a non-negative quantity.

Next we relate the Green’s function to the solution of a variational problem. The results are well known and classical even though our setup is slightly different. Hence we choose to include the proofs for clarity. As defined in Sect. 4.1 let \(\vec {E}\) denote the set of directed edges of \(\mathrm {Cyl}_n.\)

For any function \(F: \mathrm {Cyl}_n \rightarrow {\mathbb {R}}\) define the gradient \(\nabla F : \vec {E} \rightarrow {\mathbb {R}}\) by

and the discrete Laplacian \(\Delta F : \mathrm {Cyl}_n \rightarrow {\mathbb {R}}\) by

Note that the graph \(\mathrm {Cyl}_n\) is 4-regular.

Recall the definition of energy from Sect. 4.1. The next result is a standard summation-by-parts formula.

Lemma 25

For any function \(F: \mathrm {Cyl}_n\rightarrow {\mathbb {R}}\)

The proof follows by definition and expanding the terms.
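A summation-by-parts identity of this kind can be verified numerically on a small cylinder. The sketch below uses conventions that are assumptions and may differ from the paper's normalisation: \(\nabla F(v,w) = F(w)-F(v)\), \(\Delta F(v) = \frac{1}{4}\sum _{w\sim v}(F(w)-F(v))\), and self-loops at the two ends of the path so that every vertex has degree 4. Under these conventions the identity reads \(\sum _{(v,w)\in \vec {E}} (\nabla F(v,w))^2 = -8\sum _{v} F(v)\Delta F(v)\). The helper names are hypothetical.

```python
from fractions import Fraction

def cylinder_edges(width, height):
    """Directed edges of a width x height cylinder (cycle x path).

    Assumption: a vertical step off either end becomes a self-loop,
    which keeps the graph 4-regular.
    """
    edges = []
    for x in range(width):
        for y in range(height):
            for dx, dy in [(1, 0), (-1, 0), (0, 1), (0, -1)]:
                ny = y + dy
                if not 0 <= ny < height:
                    ny = y
                edges.append(((x, y), ((x + dx) % width, ny)))
    return edges

def dirichlet_sum(F, edges):
    """Sum of squared gradients over all directed edges."""
    return sum((F[w] - F[v]) ** 2 for v, w in edges)

def laplacian(F, edges):
    """Delta F(v) = (1/4) * sum over neighbours w of (F(w) - F(v))."""
    lap = {v: Fraction(0) for v in F}
    for v, w in edges:
        lap[v] += Fraction(F[w] - F[v], 4)
    return lap
```

The identity holds for every integer-valued F because the directed edge set is symmetric: expanding the square and swapping the roles of v and w in half of the terms turns the sum of squares into \(-2\sum _{(v,w)}F(v)(F(w)-F(v))\).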

For a subset \(A \subset \mathrm {Cyl}_n\) recalling the definition of stopped Green’s function let

Also recall the definition of divergence (30).

Lemma 26

For any \((x,y)\in A\)

Proof

For any \(v=(x,y)\in A\) by definition

The last equality follows by the definition of \(G_{A}\) in (101) by looking at the first step of the random walk started from v. \(\square \)

We now prove that the random walk flow \(f_{A}\) on a set A is the flow with minimal energy.

Lemma 27

where the infimum is taken over all flows from \(\left( C_n \times \{0\}\right) \bigcap A\) to \(A^c\) such that for \((x,y)\in A\)

Proof

The proof follows by standard arguments, see [11, Theorem 9.10]. We sketch the main steps. One begins by observing that the flow \(f_{A}\) satisfies the cycle law, i.e. the sum of the flow along any cycle is 0. To see this notice that for any cycle

where the \(x_{i}\in \mathrm {Cyl}_n,\)

The proof is then completed by first showing that the flow with the minimum energy must satisfy the cycle law, followed by showing that there is a unique flow satisfying the given divergence conditions and the cycle law. \(\square \)

Now suppose \(A\subset \mathrm {Cyl}_{n}{\setminus } \left( C_{n}\times \{1\}\right) \). Then

The first equality is by definition. The second equality follows from Lemma 25 and the fact that \(G_{A}\) is 0 outside A. The third equality is by (106). The last equality is by Lemma 24 since by hypothesis \(A \cap (C_{n}\times \{1\})=\emptyset \).

Proof of Lemma 6

The proof now follows from (107) and Lemma 27. \(\square \)

Appendix 3: Proof of Lemma 10

We first prove i. Looking at the process \(\omega (t)\) started from \(\omega (0)=\omega \) we see by (70) that the process

with \(X_0=g(\omega )\) is a submartingale with respect to the filtration \({\mathcal {F}}_t\). Also by hypothesis \(|Z_{t+1}-Z_{t}|\le 2A_2.\)

Now by the standard Azuma–Hoeffding inequality for submartingales, for any time \(t>0\) such that \(a_2-a_1t<0\) we have

Let T be as in the hypothesis of the lemma. We observe that the event \(\{X_{0} \ge A_1-a_2\} \cap \{ \tau (B)>T\}\) implies that

This is because by hypothesis \(Z_0=X_0\ge A_1-a_2\). Hence on the event \(\tau (B)>T\)

since \(X_T\le A_1\) by (68). Thus by (108)

To prove ii. let \(\omega _0=\omega (\tau (B^c)).\) By hypothesis

since by (69) the process cannot jump by more than \(A_2.\) Clearly it suffices to show

Now consider the submartingale

with \(W_0=x\), where \(\tau '=\tau (B)\) and \(\tau ''=\tau (B')\). We first claim that

To see this notice that by the Azuma-Hoeffding inequality it follows that

On the other hand the event \(\tau '\wedge \tau ''> T\) implies

Thus the event \(\tau '\wedge \tau ''> T\) implies

since by hypothesis \(T> \frac{2A_2}{a_1}\). (109) now follows from (110).

Now on the event \(\{\tau '\wedge \tau '' \le T\}\bigcap \{\tau ''< \tau '\}\),

Hence

since by hypothesis \(a_4> 2A_2\). Thus by (110) we have

Observe that

This along with (109) implies that \(\tau ''\) stochastically dominates a geometric variable with success probability at most

Thus we are done. \(\square \)

Future directions and related models

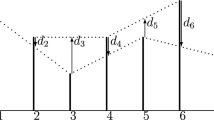

Fluctuations This article establishes that competitive erosion on the cylinder forms a macroscopic interface quickly. A natural next step is to find the order of magnitude of its fluctuations. Theorem 1 only shows that the fluctuations are o(n).

Fig. 11 A variant of competitive erosion on \({\mathbb {Z}}^2\) with \(\mu _1 = \mu _2 = \delta _0\). From left to right, the set of all converted sites after \(n= 10^8, 10^9, 10^{10}\) particles have been released. Most red particles convert blue sites and vice versa, so that a relatively small number of sites are converted.

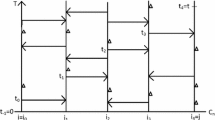

Randomly evolving interfaces Competitive erosion on the cylinder models a random interface fluctuating around a fixed line. It can also model a moving interface if the measures \(\mu _1\) and \(\mu _2\) are allowed to depend on time.

Another model of a randomly evolving interface arises in the case of fixed but equal measures \(\mu _1 = \mu _2\). Figure 11 shows a variant of competitive erosion in the square grid \({\mathbb {Z}}^2\). Initially all sites are colored white. Red and blue particles are alternately released from the origin. Each particle performs random walk until reaching a site in \({\mathbb {Z}}^2 -\{(0,0)\}\) colored differently from itself, and converts that site to its own color. Particles that return to the origin before converting a site are killed. One would not necessarily expect any interface to emerge from this process, but simulations show surprisingly coherent red and blue territories.
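The rules of this variant are simple enough to sketch directly. The code below is an illustrative implementation of the description above at a much smaller scale than the simulations in the figure; the function name `erosion_from_origin`, the choice of red for the first particle, and the bookkeeping of killed particles are assumptions.

```python
import random

def erosion_from_origin(particles, seed=0):
    """Release red and blue particles alternately from the origin of Z^2.

    Returns (colour, killed): `colour` maps each converted site to 'R' or
    'B' (sites absent from the dict are still white) and `killed` counts
    particles that returned to the origin before converting a site.
    """
    rng = random.Random(seed)
    colour = {}
    steps = [(1, 0), (-1, 0), (0, 1), (0, -1)]
    killed = 0
    for i in range(particles):
        mine = 'R' if i % 2 == 0 else 'B'  # assumption: red goes first
        x, y = 0, 0
        while True:
            dx, dy = rng.choice(steps)
            x, y = x + dx, y + dy
            if (x, y) == (0, 0):
                killed += 1                # returned to the origin: killed
                break
            if colour.get((x, y)) != mine:
                colour[(x, y)] = mine      # white or opposite colour: convert
                break
    return colour, killed
```

The number of coloured sites is at most the number of particles that were not killed, and it is typically much smaller once a cluster has formed, because a particle converting a site of the opposite colour does not enlarge the coloured region.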

Conformal invariance Our choice of the cylinder graph with uniform sources \(\mu _i\) on the top and bottom is designed to make the function g in the level set heuristic (see (5)) as simple as possible: \(g(x,y) = 1-y\). A candidate Lyapunov function for more general graphs is

whose maximum over \(S \subset V\) of cardinality k is attained by the level set (7).

A case of particular interest is the following: Let \(V = D \cap (\frac{1}{n} {\mathbb {Z}}^2)\) where D is a bounded simply connected planar domain. We take \(\mu _i = \delta _{z_i}\) for points \(z_1,z_2 \in D\) adjacent to \(D^c\). As the edges of our graph we take the usual nearest-neighbor edges of \(\frac{1}{n} {\mathbb {Z}}^2\) and delete every edge between D and \(D^c\). In the case that D is the unit disk with \(z_1 = 1\) and \(z_2 = -1\), the level lines of g are circular arcs meeting \(\partial D\) at right angles. The location of the interface for general D can then be predicted by conformally mapping D to the disk. Extending the key Theorem 5 to the above setup is a technical challenge we address in a subsequent paper.
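The geometry in the disk example can be made concrete. The sketch below assumes, which the text does not state explicitly, that the level sets of g in the unit disk coincide with the sets \(\{z : |z+1| = c\,|z-1|\}\) for \(c>0\); this is what one obtains if g is, up to normalisation, \(\log |z+1| - \log |z-1|\). For \(c\ne 1\) each such set is a circle centred on the real axis, and the function `level_arc` (a hypothetical name) returns its centre and radius.

```python
import math

def level_arc(c):
    """Centre (on the real axis) and radius of {z : |z + 1| = c |z - 1|}.

    Requires c > 0 and c != 1; for c = 1 the level set is the imaginary axis.
    """
    centre = (c * c + 1) / (c * c - 1)
    radius = 2 * c / abs(c * c - 1)
    return centre, radius

def is_orthogonal_to_unit_circle(c):
    """Two circles meet at right angles iff d^2 = r1^2 + r2^2,
    where d is the distance between centres; here r1 = 1."""
    centre, radius = level_arc(c)
    return math.isclose(centre ** 2, 1 + radius ** 2)
```

Since \(\text{centre}^2 - \text{radius}^2 = 1\) for every admissible c, each of these circles meets the unit circle at right angles, which matches the description of the level lines as circular arcs perpendicular to \(\partial D\).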

Cite this article

Ganguly, S., Levine, L., Peres, Y. et al. Formation of an interface by competitive erosion. Probab. Theory Relat. Fields 168, 455–509 (2017). https://doi.org/10.1007/s00440-016-0715-3

DOI: https://doi.org/10.1007/s00440-016-0715-3