Abstract

Information geometry provides a geometric approach to families of statistical models. The key geometric structures are the Fisher quadratic form and the Amari–Chentsov tensor. In statistics, the notion of sufficient statistic expresses the criterion for passing from one model to another without loss of information. This leads to the question of how the geometric structures behave under such sufficient statistics. While this is well studied in the finite sample size case, in the infinite case we encounter technical problems concerning the appropriate topologies. Here, we introduce notions of parametrized measure models and tensor fields on them that exhibit the right behavior under statistical transformations. Within this framework, we can then handle the topological issues and show that the Fisher metric and the Amari–Chentsov tensor on statistical models in the class of symmetric 2-tensor fields and 3-tensor fields can be uniquely (up to a constant) characterized by their invariance under sufficient statistics, thereby achieving a full generalization of the original result of Chentsov to infinite sample sizes. More generally, we decompose Markov morphisms between statistical models in terms of statistics. In particular, a monotonicity result for the Fisher information naturally follows.

Similar content being viewed by others

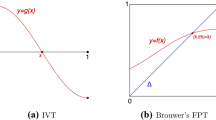

1 Introduction

Let us begin with a short synopsis of our work and its context. Parametrized statistics deals with families of probability measures on some sample space \(\Omega \) parametrized by a parameter \(x\) from some space \(M\), which we shall take to be a Banach manifold, for instance a finite-dimensional manifold. This parameter is to be estimated, and for that purpose, one wishes to quantify the dependence of the model on that parameter. That is achieved by the Fisher metric, as first suggested by Rao [36], followed by Jeffreys [20] and Efron [16], and then systematically developed by Chentsov [13, 14] and Morozova and Chentsov [32]. Moreover, there exists a natural affine structure on spaces of probability measures, as discovered by Amari [1, 2] and Chentsov [15]. We refer the reader to [24, 31–33] and [4] for more extensive historical remarks and for guidance on other important contributions to the field. Such structures should be invariant under reparametrizations, and this leads us into the realm of differential geometry, the field of mathematics that systematically investigates geometric invariances. Statistics, however, requires more. There is the concept of a sufficient statistic, that is, a mapping between sample spaces that preserves all information about the parameter \(x\). Therefore, it is natural to also require the invariance of the geometric structures under sufficient statistics. It is relatively easy to see that the Fisher metric and the Amari–Chentsov tensor are invariant, but whether they are the only such invariant structures is more subtle. This is the question we address and solve in the present paper. For finite sample spaces, this was achieved by Chentsov long ago [14]; see also Remark 3.18. The case of infinite sample spaces, however, is more difficult. The space of probability measures on an infinite sample space is infinite dimensional, and therefore standard constructions from finite-dimensional differential geometry may fail.
The first successful application of Banach space techniques to the space of probability measures on an infinite sample space is due to Pistone and Sempi [35] and to further work of Pistone with other coauthors [11, 18]. However, there are technical difficulties, caused for instance by the fact that the topology on the considered Banach manifolds is so strong that the space of bounded random variables is not dense in that topology [11, Lemma 2].

Our approach is different. Our essential idea is that while the space of all probability measures \({\mathcal M}({\Omega })\) on the sample space \(\Omega \) in general will not carry the required geometric structures, it can still induce such structures on all finite or infinite dimensional models, that is, on statistical families with a Banach manifold of parameters. For that purpose, however, those families need to be embedded into the space of all measures, and including the embedding \(p\) as part of our notion of a statistical model allows us to treat the elements of \(M\) as measures on \(\Omega \). We can then pull back tensors from \({\mathcal M}({\Omega })\) to \(M\) and then require the needed regularity properties not on \({\mathcal M}({\Omega })\), where we might not be able to define them, but on \(M\), where they can be naturally defined. This leads us to a notion of a statistical model or statistical manifold [25–27] as a manifold \(M\) equipped with a (Fisher) metric \(g\) and an (Amari–Chentsov) 3-tensor which are induced by an embedding \(p\) into \({\mathcal M}({\Omega })\).

Our approach combines concepts from measure theory, information theory, and statistics. It thus belongs to information geometry, a relatively young mathematical field in which geometric ideas and methods serve as the principal tools for studying mathematical statistics and related problems in information theory, neural networks, and system theory [4]. Information geometry has also been identified as a natural formalism for complexity theory [5, 6]; in particular, complex networks can be analyzed with tools from information geometry [34]. We note that parameter spaces in information geometry are assumed to be smooth manifolds. This assumption reflects the limitations of the methods of differential geometry. With the recent extension of differential geometric methods to singular spaces, e.g. in [29], we hope to widen the range of applications of information geometry in the future.

The structure of our paper is as follows. In Sect. 2 we introduce the notion of a \(k\)-integrable parametrized measure model, which encompasses all known examples in information geometry considered by Chentsov, Amari and Pistone–Sempi. We compare our concept with the concept of a geometrically regular statistical model proposed by Amari. At the end of that section, we state our Main Theorem 2.10. In Sect. 3 we introduce the notion of sufficient statistics based on the Fisher–Neyman characterization (Definition 3.1, Lemma 3.3). We give a simple proof that the Amari–Chentsov structure is invariant under sufficient statistics (Theorem 3.5). We then discuss Chentsov’s results on the uniqueness of the Fisher metric and the Amari–Chentsov tensor (Proposition 3.19, Remark 3.18, Corollary 3.20), and at the end of the section we prove our Main Theorem. In Sect. 4 we introduce the notion of a Markov morphism. A novel aspect of our concept of Markov morphisms between parametrized measure models is the consideration of smooth maps between the parameter spaces (Definition 4.4, Example 4.5). Thus, the geometry of parametrized measure models is intrinsic. We decompose a Markov morphism as a composition of the inverse of a Markov morphism, defined by a sufficient statistic, and a statistic (Theorem 4.10). As a consequence, we give a geometric proof of the monotonicity of the Fisher information under Markov morphisms (Corollary 4.11). Finally, in Sect. 5, we study the relations between \(k\)-integrable parametrized measure models and statistical models in the Pistone–Sempi theory.

2 Parametrized measure models

In this section we describe the geometry of spaces of measures and of parametrized families of measures. In technical terms, we introduce the notion of a \(k\)-integrable parametrized measure model (Definition 2.4) and the notion of tensor fields on them satisfying the locality and continuity condition (Definitions 2.1 and 2.8, Remark 2.5). We show that our notion of generalized statistical models encompasses all statistical models considered by Chentsov, Amari and Pistone–Sempi (Remark 2.5, Example 2.6), and we compare our concept with that of Amari (Remark 2.7).

Let \(({\Omega }, \Sigma )\) be a measurable space. Later on, \({\Omega }\) will also have to carry a differentiable structure.

We consider the Banach space of all finite signed measures on \({\Omega }\) with the total variation \({\Vert \cdot \Vert }_{TV}\) as Banach norm. More precisely, the total variation of such a measure \(\mu \) is defined as

$$\begin{aligned} {\Vert \mu \Vert }_{TV} := \sup \sum _{i=1}^n |\mu (A_i)|, \end{aligned}$$

where the supremum is taken over all finite partitions \(\Omega = A_1 \dot{\cup }\cdots \dot{\cup }A_n\) with disjoint sets \(A_i \in \Sigma \). We consider the subset \({\mathcal M}({\Omega })\) of all finite non-negative measures on \({\Omega }\), and, with a \(\sigma \)-finite non-negative measure \(\mu _0\), we also consider the subspace

$$\begin{aligned} {\mathcal S}({\Omega }, \mu _0) := \{ \mu = \phi \, \mu _0 \;:\; \phi \in L^1({\Omega }, \mu _0)\} \end{aligned}$$

of signed measures dominated by \(\mu _0\). This space can be identified with \(L^1({\Omega }, \mu _0)\) via the canonical map \(i_{can}: {\mathcal S}({\Omega }, \mu _0) \rightarrow L^1({\Omega }, \mu _0)\), \(\mu \mapsto \frac{d \mu }{ d \mu _0}\). Note that

$$\begin{aligned} {\Vert \phi \, \mu _0 \Vert }_{TV} = \int _{\Omega }|\phi |\, d\mu _0 = {\Vert \phi \Vert }_{L^1({\Omega }, \mu _0)}, \end{aligned}$$

which implies that \(i_{can}\) is a Banach space isomorphism. Therefore, we also refer to the topology of \({\mathcal S}({\Omega }, \mu _0)\) as the \(L^1\)-topology. This topology is independent of the particular choice of the reference measure \(\mu _0\), because if \(\phi \in L^1({\Omega },\mu _0)\) and \(\psi \in L^1({\Omega },\phi \mu _0)\), then \(\psi \phi \in L^1({\Omega },\mu _0)\). Throughout the paper, we consider the following hierarchy of subsets of \({\mathcal S}({\Omega },\mu _0)\):
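
$$\begin{aligned} {\mathcal P}_+({\Omega },\mu _0) &:= \Big \{ \phi \, \mu _0 \;:\; \phi >0 \ \mu _0\text{-a.e.},\ \int _{\Omega }\phi \, d\mu _0 = 1\Big \} \\ &\subset {\mathcal M}_+({\Omega },\mu _0) := \{ \phi \, \mu _0 \;:\; \phi >0 \ \mu _0\text{-a.e.}\} \\ &\subset {\mathcal M}({\Omega },\mu _0) := \{ \phi \, \mu _0 \;:\; \phi \ge 0 \ \mu _0\text{-a.e.}\} \subset {\mathcal S}({\Omega },\mu _0), \quad \text{with } \phi \in L^1({\Omega },\mu _0). \end{aligned}$$
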
In particular, for \(\mu =\phi \mu _0 \in {\mathcal M}_+({\Omega }, \mu _0)\), i.e., \(\phi >0\), the measures \(\mu _0\) and \(\mu \) have the same null sets and are equivalent, that is, \(\mu _0 =\phi ^{-1} \mu \in {\mathcal M}_+({\Omega },\mu )\). Thus, we have some kind of multiplicative structure on \({\mathcal M}_+({\Omega }, \mu _0)\), and one might hope to generate this via an exponential map from the linear structure on \(L^1({\Omega },\mu _0)\). The problem, however, is that if \(f\in L^1({\Omega },\mu _0)\), then we do not necessarily have \(e^f \in L^1({\Omega },\mu _0)\). If we do, then \(e^f \mu _0 \in {\mathcal M}_+({\Omega }, \mu _0)\); if not, the measure \(e^f\mu _0\) is not finite and hence does not belong to \({\mathcal M}_+({\Omega }, \mu _0)\). Thus, certain infinitesimal deformations are obstructed, that is, they cannot be integrated into local ones. Of course, this does not happen when \({\Omega }\) is finite, the case treated by Chentsov, and this is the technical reason why we need to work harder for our main result. (Pistone and Sempi have analyzed the underlying topological structure, and we shall describe their construction from our perspective in Sect. 5. The essential point for an intuitive understanding of this topology is that if \(e^f \in L^1\), then for \(0<t<1\), \(e^{tf}\in L^p\) for \(p=1/t >1\).)
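A standard one-dimensional example, added here purely for illustration (the choice \(\Omega = (0,1)\) with Lebesgue measure \(\mu _0\) is ours), shows both phenomena. Let \(f(\omega ) := \ln (1/\omega )\) and \(f_s := s f\) with \(0<s<1\), and let \(0<t<1\). Then

$$\begin{aligned} \int _0^1 f\, d\omega &= \big [\omega - \omega \ln \omega \big ]_0^1 = 1 < \infty , \qquad \int _0^1 e^{f}\, d\omega = \int _0^1 \frac{d\omega }{\omega } = \infty ,\\ \int _0^1 e^{f_s}\, d\omega &= \int _0^1 \omega ^{-s}\, d\omega = \frac{1}{1-s} < \infty , \qquad \int _0^1 \big (e^{t f_s}\big )^{1/t}\, d\omega = \int _0^1 e^{f_s}\, d\omega < \infty . \end{aligned}$$

Thus \(f \in L^1({\Omega },\mu _0)\) while \(e^f\mu _0\) is not a finite measure, whereas \(e^{f_s} \in L^1({\Omega },\mu _0)\) and, as stated above, \(e^{t f_s} \in L^{1/t}({\Omega },\mu _0)\).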

In order to avoid this issue and in order to make contact with the basic construction of parametric statistics, we shall consider parametrized families of measures, that is, maps \(M \rightarrow {\mathcal M}({\Omega })\) of smooth Banach manifolds \(M\) into the “universal measure set” \({\mathcal M}(\Omega )\) and attempt to pull geometric structures from \({\mathcal M}({\Omega })\) back to \(M\) by such maps, which are, in a sense, similar to differentiable maps. Since, however, we may not be able to fully define these objects on \({\mathcal M}({\Omega })\), we shall have to push forward tensors from \(M\) instead, and integrate them w.r.t. the measures \(p(x)\) defined by a parametrized family. We shall now introduce the technical conditions needed to realize universal objects on \({\mathcal M}({\Omega })\) on such parametrized families.

Definition 2.1

A covariant \(n\)-tensor field on \({\mathcal M}({\Omega })\) assigns to each \(\mu \in {\mathcal M}({\Omega })\) a multilinear map \(\tau _\mu : \bigoplus ^n L^n ({\Omega }, \mu ) \rightarrow {\mathbb {R}}\) that is continuous w.r.t. the product topology on \(\bigoplus ^n L^n ({\Omega }, \mu )\).

In this definition, continuity refers to the continuity of the multilinear maps \(\tau _\mu \) for fixed \(\mu \). (This is different from requiring that \(\tau _\mu \) be continuous as a function of \(\mu \).)

Such objects will then be pulled back to \(M\) under a map \(p:M\rightarrow {\mathcal M}({\Omega })\), where they operate on \(n\) vector fields on \(M\). When these vector fields are continuous, their evaluation under the pulled-back covariant tensor field should also be continuous. Note that the (Banach) manifold structure on \(M\) canonically induces the structure of a (Banach) vector bundle on \(TM \times _{n \, times} TM \rightarrow M\), regarded as the \(n\)-fold Whitney sum of the (Banach) vector bundle \(TM \rightarrow M\). In contrast to direct sums, there is no canonical topology on the tensor product \(T_x^*M \otimes _{n\, times} T_x^* M\); hence the tensor product \(T^*M \otimes _{n\, times} T^* M \rightarrow M\) is not canonically a (Banach) vector bundle if \(M\) is infinite dimensional. However, we can define weak continuity of its sections as follows.

Definition 2.2

A continuous \(n\)-vector field \(V_n\) on a Banach manifold \(M\) is a continuous section of the bundle \(TM \times _{n \, times} TM \rightarrow M\). A section \(\tau \) of the bundle \(T^*M \otimes _{n\, times} T^* M\) is called a weakly continuous covariant \(n\)-tensor, if the value \(\tau (V_n)\) is a continuous function for any continuous \(n\)-vector field \(V_n\) on \(M\).

For a map \(p: M \rightarrow {\mathcal M}({\Omega },\mu )\) the composition \({\bar{p}} := i_{can}\circ p: M \rightarrow L^1(\Omega , \mu )\), \(x \mapsto {\bar{p}}(x) := \frac{d p(x)}{d \mu }\), will play a central role. Since \({\bar{p}}\) is a map from \(M\) to \(L^1(\Omega ,\mu )\), we can also regard \({\bar{p}}\) as a map \(M \times \Omega \rightarrow {\mathbb R}, (x, \omega ) \mapsto {\bar{p}}(x, \omega )\) such that

$$\begin{aligned} p(x)(A) = \int _A {\bar{p}}(x, {\omega })\, d\mu ({\omega }) \quad \text{for all } x \in M \text{ and } A \in \Sigma . \end{aligned} \tag{2.1}$$

Of course, for a fixed \(x \in M\), the function \(\omega \mapsto {\bar{p}}(x, \omega )\) is only defined up to changes on a \(\mu \)-null set in \(\Omega \). We refer to a function \({\bar{p}}: M \times \Omega \rightarrow {\mathbb R}\) satisfying (2.1) as a density potential. This terminology is slightly misleading, however, since the tangent vectors of the family are naturally expressed in terms of \(\ln {\bar{p}}(x, \omega )\) rather than \({\bar{p}}\) itself (recall our discussion above of the exponentiation of \(f\in L^1({\Omega },\mu )\); taking the logarithm is, of course, the inverse of exponentiation). In particular, the pushforward of a tangent vector \(V\in T_x M\) is \({\partial }_V\ln {\bar{p}}(x, {\omega })\), and we often simply identify \(V\) with its pushforward when the map \(p\) is fixed in a given context.

Our parametrized families of measures will need to satisfy some further important technical requirements that we shall now list and that will lead us to our technical concept of a parametrized measure model.

-

1.

The parameter space \(M\) is a (finite or infinite dimensional) Banach manifold of class at least \(C^1\).

-

2.

There is a continuous mapping \(p : M \rightarrow {\mathcal M}_+({\Omega }, \mu )\), where the latter is provided with the \(L^1\)-topology.

-

3.

The composition \({\bar{p}} = i_{can}\circ p\) is Gâteaux-differentiable as a map from the manifold \(M\) to the Banach space \(L^1({\Omega }, \mu )\).

-

4.

The 1-form

$$\begin{aligned} A(V)_x:= \int _{\Omega }{\partial }_V \ln {\bar{p}}(x, {\omega }) \, dp(x), \end{aligned} \tag{2.2}$$

the Fisher quadratic form

$$\begin{aligned} g^F(V, W)_x :=\int _{\Omega }{\partial }_V \ln {\bar{p}}(x, {\omega }) {\partial }_W \ln {\bar{p}}(x, {\omega }) \, dp(x) \end{aligned} \tag{2.3}$$

and the Amari–Chentsov 3-symmetric tensor

$$\begin{aligned} T^{AC} (V,W, X)_x : = \int _{\Omega }{\partial }_V \ln {\bar{p}}(x, {\omega }) {\partial }_W \ln {\bar{p}} (x, {\omega }){\partial }_X \ln {\bar{p}}(x, {\omega }) \ d p(x) \end{aligned} \tag{2.4}$$

are well-defined and continuous in the sense of Definition 2.2.
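As a sanity check of (2.2)–(2.4), the following Python sketch evaluates the three tensors for a one-parameter exponential family on a four-point sample space with counting measure. The family, the values of the statistic \(T\) and all helper names are illustrative assumptions of ours, and the score \({\partial }_V \ln {\bar{p}}\) is approximated by a central finite difference. Since the score of this family is \(T(\omega ) - \psi '(x)\), one expects \(A = 0\) (the model is a statistical model), \(g^F = \mathrm{Var}\, T\), and \(T^{AC}\) equal to the third central moment of \(T\).

```python
import math

# Hypothetical finite sample space Omega = {0, 1, 2, 3} with counting measure mu,
# and an arbitrarily chosen statistic T; p(x)({w}) = exp(x * T(w) - psi(x)).
OMEGA = [0, 1, 2, 3]
T_STAT = {0: 0.0, 1: 1.0, 2: 2.0, 3: 5.0}


def log_density(x, w):
    """ln p̄(x, w) = x * T(w) - psi(x), psi being the log-partition function."""
    psi = math.log(sum(math.exp(x * T_STAT[v]) for v in OMEGA))
    return x * T_STAT[w] - psi


def score(x, w, h=1e-5):
    """∂_V ln p̄(x, w) for V = d/dx, approximated by a central difference."""
    return (log_density(x + h, w) - log_density(x - h, w)) / (2 * h)


def tensors(x):
    """Return A(V)_x, g^F(V, V)_x and T^AC(V, V, V)_x as in (2.2)-(2.4)."""
    mass = {w: math.exp(log_density(x, w)) for w in OMEGA}  # p(x)({w})
    s = {w: score(x, w) for w in OMEGA}
    a = sum(s[w] * mass[w] for w in OMEGA)
    g = sum(s[w] ** 2 * mass[w] for w in OMEGA)
    t = sum(s[w] ** 3 * mass[w] for w in OMEGA)
    return a, g, t


def central_moments(x):
    """Variance and third central moment of T under p(x) (closed forms for g^F, T^AC)."""
    mass = {w: math.exp(log_density(x, w)) for w in OMEGA}
    mean = sum(T_STAT[w] * mass[w] for w in OMEGA)
    var = sum((T_STAT[w] - mean) ** 2 * mass[w] for w in OMEGA)
    third = sum((T_STAT[w] - mean) ** 3 * mass[w] for w in OMEGA)
    return var, third
```

Calling `tensors(0.3)` returns a first entry that vanishes up to finite-difference error, and the other two entries agree with the values returned by `central_moments(0.3)`.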

Remark 2.3

The name of Amari and Chentsov was attached to the tensor \(T^{AC}\) in [26] because the one-parameter family of affine connections that differ from the Levi-Civita connection of the Fisher metric by constant multiples of \(T^{AC}\) was discovered independently by Chentsov and Amari. These connections are also called the Amari–Chentsov connections [26]. Earlier, Lauritzen [25] introduced the notion of a statistical manifold, that is, a smooth manifold equipped with a Riemannian metric and a 3-symmetric tensor.

We can now state our general definition of a parametrized measure model.

Definition 2.4

(cf. [3, §2, p. 25], [4, §2.1]) Let \(k \ge 1\). A \(k\)-integrable parametrized measure model is a quadruple \((M, {\Omega }, \mu , p)\) consisting of a smooth (finite or infinite dimensional) Banach manifold \(M\) and a continuous map \( p: M \rightarrow {\mathcal M}_+({\Omega }, \mu )\), where the latter is provided with the \(L^1\)-topology, such that there exists a density potential \({\bar{p}} = {d p \over d\mu }: M \times {\Omega }\rightarrow {\mathbb {R}}\) satisfying (2.1) with the following properties:

-

1.

the function \(x \mapsto \ln {\bar{p}} (x, {\omega }) = \ln \frac{dp(x)}{d\mu }({\omega }): M \rightarrow {\mathbb {R}}\) is defined and continuously Gâteaux-differentiable for \(\mu \)-almost all \(\omega \in {\Omega }\), and the correspondence \(V \mapsto \partial _V \ln \frac{dp(x)}{d\mu }({\omega })\) depends continuously on \(V \in TM\) and is linear in each \(T_xM\),

-

2.

for all continuous vector fields \(V\) on \(M\) the function \( \omega \mapsto {\partial }_{V} \ln {\bar{p}}(x, {\omega })\) belongs to \(L^k({\Omega }, p(x))\); moreover, the function \(x \mapsto ||{\partial }_{V} \ln {\bar{p}}(x, {\omega })||_{L^k({\Omega }, p(x))}\) is continuous on \(M\).

We call \(M\) the parameter space of \((M, {\Omega }, \mu , p)\). We call \((M, {\Omega }, \mu , p)\) a statistical model if \(p(M)\subset {\mathcal P}_+ ({\Omega }, \mu )\). A \(k\)-integrable parametrized measure model \((M, {\Omega }, \mu , p)\) is called immersed if \(d_x \ln {\bar{p}}: T_xM \rightarrow L^k({\Omega }, p(x))\) is injective for all \(x \in M\).

Here the continuous Gâteaux-differentiability of \(\ln {\bar{p}}(x, {\omega })\), for a fixed \({\omega }\in {\Omega }\), is understood as the continuity of the Gâteaux differential as a function on \(TM\) [19, chapter I.3].
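When \(M\) is finite dimensional and \(k \ge 2\), the immersion condition can be tested through the Fisher quadratic form: since \(g^F(V,V)_x = \Vert {\partial }_V \ln {\bar{p}}(x)\Vert ^2_{L^2({\Omega }, p(x))}\), the differential \(d_x \ln {\bar{p}}\) is injective exactly when the Gram matrix of \(g^F\) at \(x\) is nonsingular. The Python sketch below, with two hypothetical families on a three-point sample space of our own choosing and finite-difference scores, contrasts an immersed model with one that is not immersed.

```python
import math


def normalize(logits):
    """Softmax: turn logits into a probability vector on a finite Omega."""
    z = sum(math.exp(v) for v in logits)
    return [math.exp(v) / z for v in logits]


def immersed_family(x1, x2):
    """Hypothetical 2-parameter statistical model on Omega = {0, 1, 2}."""
    return normalize([x1, x2, 0.0])


def degenerate_family(x1, x2):
    """Depends on x1 + x2 only, so V = (1, -1) lies in the kernel of d ln p̄."""
    return normalize([x1 + x2, 0.0, 0.0])


def fisher_matrix(family, x1, x2, h=1e-5):
    """Gram matrix G[i][j] = g^F(e_i, e_j), scores from central differences of ln p̄."""
    probs = family(x1, x2)
    scores = []
    for dx1, dx2 in ((h, 0.0), (0.0, h)):
        up = family(x1 + dx1, x2 + dx2)
        down = family(x1 - dx1, x2 - dx2)
        scores.append([(math.log(u) - math.log(d)) / (2 * h) for u, d in zip(up, down)])
    return [[sum(si * sj * p for si, sj, p in zip(scores[i], scores[j], probs))
             for j in range(2)] for i in range(2)]


def det2(m):
    """Determinant of a 2x2 matrix given as nested lists."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]
```

Here `det2(fisher_matrix(immersed_family, 0.2, -0.4))` is clearly positive (for this softmax family it equals the product of the three probabilities), whereas the same quantity for `degenerate_family` vanishes up to rounding, so the second model is not immersed.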

Remark 2.5

-

1.

Note that, as explained above, the choice of a reference measure in \({\mathcal M}_+({\Omega }, \mu )\) is immaterial for a \(k\)-integrable parametrized measure model \((M, {\Omega }, \mu , p)\).

-

2.

For a statistical model, (2.2) vanishes identically. Recalling the identification of a tangent vector \(V\) on \(M\) with its pushforward \({\partial }_V \ln {\bar{p}}\), this simply means

$$\begin{aligned} \int _{\Omega }V\, dp(x) =0. \end{aligned} \tag{2.5}$$

To obtain (2.5) we argue as follows. For a curve \(x(t), \, t \in (-{\varepsilon },{\varepsilon }),\) on \(M\) with \({\partial }_t := \dot{x}(t) = V(x(t))\), condition (2) in Definition 2.4 implies that

$$\begin{aligned} f(t): = \int _{{\Omega }}{\partial }_t \ln {\bar{p}} (x(t), {\omega })\,d p(x(t)) \end{aligned}$$

is continuous and hence integrable over \((-{\varepsilon }, {\varepsilon })\). In particular, \(A(V)_x\) is continuous in \(x\). Applying the Fubini theorem and condition (1) in Definition 2.4, and using \(({\partial }_t \ln {\bar{p}})\, {\bar{p}} = {\partial }_t {\bar{p}}\), we obtain

$$\begin{aligned}&\int _{-{\varepsilon }} ^{\varepsilon }\int _{\Omega }({\partial }_t \ln {\bar{p}} (x(t), {\omega }))\,{\bar{p}}(x(t), {\omega })\,d\mu \,dt = \int _{{\Omega }} \int _{-{\varepsilon }}^{\varepsilon }{\partial }_t {\bar{p}} (x(t), {\omega }) \,dt\, d\mu \\&\quad =\int _{\Omega }\big ( {\bar{p}}(x({\varepsilon }), {\omega }) - {\bar{p}}(x(-{\varepsilon }), {\omega })\big )\,d\mu = 0, \end{aligned}$$

where the last equality holds because \(\int _{\Omega }dp(x) = 1\) for all \(x \in M\). The same argument applies to every subinterval of \((-{\varepsilon },{\varepsilon })\), so the continuous function \(f\) vanishes identically; in particular, \(A(V)_{x(0)} = f(0) = 0\). For general \(k\)-integrable parametrized measure models, where \(\int _{\Omega }dp(x)\) need not be constant, the same computation over a subinterval \([a,b]\) yields \(\int _{\Omega }dp(x(b)) - \int _{\Omega }dp(x(a)) = \int _a^b f(t)\, dt\), and differentiating in \(b\) gives

$$\begin{aligned} {\partial }_V \int _\Omega \, dp(x) = \int _{{\Omega }} {\partial }_V \ln {\bar{p}}(x, {\omega })\, dp(x) \end{aligned} \tag{2.6}$$

for all \(x \in M\) and for all tangent vectors \(V \in T_x M\).

-

3.

For any \(k\)-integrable parametrized measure model \((M,{\Omega }, \mu , p)\) the composition \({\bar{p}} = i_{can}\circ p: M \rightarrow L ^1 ({\Omega }, \mu )\) is Gâteaux-differentiable, by (2.6) together with the estimate

$$\begin{aligned} \int _{\Omega }|{\partial }_V e ^{ \ln {\bar{p}}(x)}| d\mu = \int _{{\Omega }} | {\bar{p}} (x) {\partial }_V \ln {\bar{p}} (x)| d\mu = \int _{{\Omega }} |{\partial }_V \ln {\bar{p}}(x)| d p(x)< \infty . \end{aligned}$$

-

4.

Any \(3\)-integrable parametrized measure model carries the Fisher quadratic form and the Amari–Chentsov tensor, which are continuous in the sense of Definition 2.2. More generally, on a \(k\)-integrable parametrized measure model \((M, {\Omega }, \mu , p)\), the covariant symmetric \(n\)-tensor field \(T^n(V, \ldots , V)_x := \int _{\Omega }({\partial }_V \ln {\bar{p}}(x, {\omega }))^n\, dp(x)\) with \(n \le k\) satisfies the locality and continuity conditions of Definition 2.8.

-

5.

In [15] Chentsov considered only statistical models \((M, {\Omega }_n, \mu _n, p)\) where \(M\) is a submanifold in \({\mathcal P}_{+} ({\Omega }_n, \mu _n)\) and \(p \) is the canonical embedding, see also Example 2.6. Amari and all authors before Pistone and Sempi considered statistical models \((M, {\Omega }, \mu , p)\) where \(M\) is finite dimensional and \(p(M) \subset {\mathcal P}_{+} ({\Omega }, \mu )\) [4]. Their examples satisfy the conditions in Definition 2.4.

-

6.

In [7, Chapter 3] and in [28, Definition 4.10] we propose different refinements of the notion of a \(k\)-integrable parametrized measure model, for which the validity of condition (2) for some \(k\ge 1\) implies its validity for all \(1\le l \le k\). Though the present notion of a \(k\)-integrable parametrized measure model is not as elegant as we would wish, it seems to us closest to the suggestions of Amari and Cramér; see Remark 2.7.
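Identity (2.6) does not require normalization, and it can be checked numerically on an infinite sample space. In the Python sketch below (an example of our own choosing), \(\Omega = {\mathbb N}\) carries the counting measure \(\mu \), \(M = (0, \infty )\) and \({\bar{p}}(x, n) = x^n/n!\), so that \(\int _{\Omega }dp(x) = e^x\) and \({\partial }_x \ln {\bar{p}}(x, n) = n/x\). The infinite sums are truncated at a hypothetical cutoff, which is harmless here because the terms decay factorially.

```python
import math


def density(x, n):
    """p̄(x, n) = x**n / n!, evaluated in log space to avoid overflow."""
    return math.exp(n * math.log(x) - math.lgamma(n + 1))


def total_mass(x, cutoff=200):
    """∫ dp(x) = sum over n of p̄(x, n), truncated at a hypothetical cutoff."""
    return sum(density(x, n) for n in range(cutoff))


def lhs(x, h=1e-5):
    """Left side of (2.6): ∂_V ∫ dp(x) for V = d/dx, by a central difference."""
    return (total_mass(x + h) - total_mass(x - h)) / (2 * h)


def rhs(x, cutoff=200):
    """Right side of (2.6): ∫ ∂_V ln p̄(x, n) dp(x), with ∂_x ln p̄(x, n) = n / x."""
    return sum((n / x) * density(x, n) for n in range(cutoff))
```

For `x = 2.0`, both `lhs(2.0)` and `rhs(2.0)` approximate the derivative of the total mass \(e^x\) at \(x = 2\), i.e. \(e^2\).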

Example 2.6

-

1.

Let \({\Omega }_n\) be a finite set of \(n\) elements and \(\mu _n\) a measure of maximal support on \({\Omega }_n\). It is evident that \({\mathcal M}_{+} ({\Omega }_n, \mu _n)\) is diffeomorphic to \({\mathbb {R}}^n\). Let \(S\) be a \(C^1\)-submanifold in \({\mathcal P}_{+}({\Omega }_n, \mu _n)\) and \(i_S : S \rightarrow {\mathcal P}_{+} ({\Omega }_n, \mu _n)\) the canonical embedding. Then \((S, {\Omega }_n, \mu _n, i_S)\) is an immersed \(k\)-integrable statistical model for all \(k \ge 1\). In particular, \(({\mathcal P}_{+}({\Omega }_n, \mu _n), {\Omega }_n, \mu _n, Id)\) is a \(k\)-integrable statistical model. Conversely, for any immersed \(1\)-integrable statistical model \((M, {\Omega }_n, \mu _n, p)\) the map \(p : M \rightarrow {\mathcal P}_{+}({\Omega }_n, \mu _n)\) defines an immersion \(M \rightarrow {\mathcal P}_{+} ({\Omega }_n, \mu _n)\) between differentiable manifolds.

-

2.

If \(s: N \rightarrow M\) is a smooth map and \((M, {\Omega }, \mu , p)\) is a \(k\)-integrable parametrized measure model, then \((N, {\Omega }, \mu , p\circ s)\) is a \(k\)-integrable parametrized measure model.

-

3.

For a measure space \(({\Omega }, \mu _0)\) we define the set

$$\begin{aligned} {\mathcal M}^{bd}_+({\Omega }, \mu _0) := \{ \mu = e^f \mu _0 \;: \;f \in L^\infty ({\Omega }, \mu _0)\}. \end{aligned}$$

With the canonical identification \({\mathcal M}^{bd}_+({\Omega }, \mu _0) \ni \mu \mapsto \ln \left( \frac{d\mu }{d\mu _0}\right) \in L^\infty ({\Omega }, \mu _0)\), we may regard \({\mathcal M}^{bd}_+({\Omega }, \mu _0)\) as a Banach manifold, and it is straightforward to verify that the inclusion

$$\begin{aligned} p : {\mathcal M}^{bd}_+({\Omega }, \mu _0) \hookrightarrow {\mathcal M}_+({\Omega }, \mu _0) \end{aligned}$$

is \(k\)-integrable for all \(k\).

-

4.

Let \({\Omega }_1, {\Omega }_2\) be smooth manifolds with their Borel \(\sigma \)-algebras, and let \(\kappa : {\Omega }_1 \rightarrow \Omega _2\) be differentiable. For a (signed) finite measure \(\mu \) on \({\Omega }_1\), we define its push-forward \(\kappa _*(\mu )\) as

$$\begin{aligned} \kappa _*(\mu ) (A) := \mu (\kappa ^{-1}(A))\quad \text{for a Borel subset } A \subset \Omega _2. \end{aligned}$$

Moreover, let \(\mu _1\) be a Lebesgue measure on \(\Omega _1\), i.e., a measure locally equivalent to the Lebesgue measure on \({\mathbb R}^n\), and let \(\mu _2 := \kappa _*(\mu _1)\). Then the set \(\Omega _2^{sing}\) of the singular values of \(\kappa \) is a null set w.r.t. \(\mu _2\), and for \({\omega }_2 \in \Omega _2^{reg} := \Omega _2 \backslash \Omega _2^{sing}\), there is a transverse measure \(\mu _{{\omega }_2}^\perp \) on \(\kappa ^{-1}({\omega }_2) \subset \Omega _1\) such that for each Borel set \(A \subset {\Omega }_2\)

$$\begin{aligned} \int _{\kappa ^{-1}(A)} d\mu _1 = \int _A \left( \int _{\kappa ^{-1}({\omega }_2)} d\mu _{{\omega }_2}^\perp \right) d\mu _2({\omega }_2). \end{aligned}$$

Then the map

$$\begin{aligned} \kappa _*: {\mathcal M}^{bd}_+({\Omega }_1, \mu _1) \longrightarrow {\mathcal M}_+(\Omega _2, \mu _2) \end{aligned}$$

with \({\mathcal M}^{bd}_+({\Omega }_1, \mu _1)\) from above is a \(k\)-integrable parametrized measure model for any \(k\).
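Example 2.6.2 reflects the chain rule \({\partial }_W \ln {\bar{p}}(s(y), {\omega }) = {\partial }_{ds(W)} \ln {\bar{p}}(s(y), {\omega })\), so the Fisher quadratic form of \((N, {\Omega }, \mu , p\circ s)\) is the pull-back \(s^*g^F\) of that of \((M, {\Omega }, \mu , p)\). The Python sketch below checks this numerically for a one-dimensional \(M\); the logistic model on a two-point sample space and the map \(s(y) = y^3 + y\) are hypothetical choices made only for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def model_m(x):
    """Statistical model p: M = R -> P_+({0, 1}), x -> (sigmoid(x), 1 - sigmoid(x))."""
    q = sigmoid(x)
    return [q, 1.0 - q]


def reparam(y):
    """Hypothetical smooth map s: N = R -> M = R with s'(y) = 3y^2 + 1."""
    return y ** 3 + y


def model_n(y):
    """The composed model p ∘ s on N, as in Example 2.6.2."""
    return model_m(reparam(y))


def fisher_1d(family, x, h=1e-5):
    """g^F(d/dx, d/dx) at x; scores from central differences of ln p̄."""
    probs = family(x)
    up, down = family(x + h), family(x - h)
    scores = [(math.log(u) - math.log(d)) / (2 * h) for u, d in zip(up, down)]
    return sum(s * s * p for s, p in zip(scores, probs))
```

For this logistic model \(g^F(x) = \sigma (x)(1-\sigma (x))\), so at \(y = 0.5\) one expects `fisher_1d(model_n, 0.5)` to match \(s'(0.5)^2\, \sigma (0.625)(1-\sigma (0.625))\) with \(s(0.5) = 0.625\) and \(s'(0.5) = 1.75\).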

On a 3-integrable parametrized measure model \((M, {\Omega }, \mu , p)\) the pair of the Fisher quadratic form and the Amari–Chentsov tensor will be called the Amari–Chentsov structure.

Remark 2.7

We would like to compare our concept of a \(k\)-integrable parametrized measure model with the concept of a geometrically regular statistical model proposed by Amari, for instance in [3, §2]. Amari listed six properties A\(_1\)–A\(_6\) that a geometrically regular statistical model \(\{p (x)\in {\mathcal P}_+({\Omega }, \mu )\}\) must satisfy [3, p. 25]. Condition A\(_1\) says that the domain of the parameter \(x\) is homeomorphic to \({\mathbb {R}}^n\). Conditions A\(_2\) and A\(_3\) are equivalent to our condition (2) listed just before Definition 2.4. Condition A\(_4\) requires that \({\bar{p}} (x, {\omega })\) be smooth in \(x\) uniformly in \({\omega }\) and, moreover, that relation (2.6) hold. Condition A\(_5\) requires that a statistical model be 3-integrable. The last condition, A\(_6\), requires that the Fisher quadratic form be positive definite. Amari’s conditions are slightly stronger than ours, but in general our concept agrees with his. Note that similar regularity conditions were posed by Cramér [12, pp. 500–501]; see also [23, Chapter 2, §6].

As mentioned above, we consider tensor fields on parametrized measure models \((M, \Omega , \mu ,p)\) that are inherited from a corresponding field on the “universal measure set” \({\mathcal M}(\Omega )\) in terms of the parametrization \(p\).

Note that we do not impose any strong regularity conditions on tensor fields on \({\mathcal M}(\Omega )\). Instead, we assume the required regularity and continuity conditions to be satisfied by the pull-back of the field with respect to a parametrization \(p : M \rightarrow {\mathcal M}(\Omega )\). In addition to these conditions, the existence of a global tensor on \({\mathcal M}(\Omega )\) imposes some compatibility constraints on the associated fields on the class of parametrized measure models \((M, \Omega , \mu ,p)\). In the following definition we summarize the necessary regularity and compatibility conditions for tensor fields, which are, in particular, satisfied by the Fisher quadratic form and the Amari–Chentsov tensor.

Definition 2.8

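(Locality and continuity condition) A statistical covariant continuous \(n\)-tensor field \(A\) assigns to each parametrized measure model \((M, {\Omega }, \mu , p)\) a continuous (in the sense of Definition 2.2) covariant \(n\)-tensor field \(A|_{(M, {\Omega }, \mu , p)}\) on \(M\) (cf. Definition 2.1). A statistical covariant continuous \(n\)-tensor field \(A\) is called local if there is a pointwise continuous covariant \(n\)-tensor field \(\tilde{A}\) on \({\mathcal M}({\Omega })\) with the following property

$$\begin{aligned} A|_{(M, {\Omega }, \mu , p)}(V_1, \ldots , V_n)_x = \tilde{A}_{p(x)}\big ({\partial }_{V_1} \ln {\bar{p}}(x), \ldots , {\partial }_{V_n} \ln {\bar{p}}(x)\big ) \quad \text{for all } x \in M,\ V_1, \ldots , V_n \in T_xM. \end{aligned} \tag{2.7}$$
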
In particular, this means that the value depends only on \(p(x)\), but not on the manifold \(M\) defining the parametrized family of which \(p(x)\) is a member.
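To see how (2.7) operates in the simplest case, consider, as an illustration, the pointwise field \(\tilde{g}_\mu (f_1, f_2) := \int _{\Omega }f_1 f_2\, d\mu \) on \(L^2({\Omega },\mu )\) (the notation \(\tilde{g}\) is ours). Evaluating it on the pushforwards of \(V, W \in T_xM\) recovers the Fisher quadratic form (2.3):

$$\begin{aligned} \tilde{g}_{p(x)}\big ({\partial }_V \ln {\bar{p}}(x), {\partial }_W \ln {\bar{p}}(x)\big ) = \int _{\Omega }{\partial }_V \ln {\bar{p}}(x, {\omega })\, {\partial }_W \ln {\bar{p}}(x, {\omega })\, dp(x) = g^F(V, W)_x . \end{aligned}$$

The middle expression involves \(M\) only through the measure \(p(x)\) and the two functions \({\partial }_V \ln {\bar{p}}(x), {\partial }_W \ln {\bar{p}}(x) \in L^2({\Omega }, p(x))\), which is the sense in which the Fisher quadratic form is local.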

Remark 2.9

-

1.

Assume that \(A\) is a local statistical covariant continuous \(n\)-tensor field. Using Example 2.6.3, we note that there exists at most one pointwise continuous \(n\)-tensor field \(\tilde{A}\) on \({\mathcal M}({\Omega })\) such that \(A\) is defined by \(\tilde{A}\) as in (2.7). Thus, in order to define \(A\) it suffices to determine the associated pointwise continuous \(n\)-tensor field \(\tilde{A}\) on \({\mathcal M}({\Omega })\) and then verify whether the resulting statistical field \(A\) is continuous. Condition (2.7) holds for the Fisher quadratic form field and the Amari–Chentsov tensor field. The choice of \({\partial }_V \ln {\bar{p}} (x)\) is also related to the Gâteaux-differentiability of \(p\) (Remark 2.5.3). We choose \(L^n ({\Omega }, p(x))\) as the space for the values \({\partial }_{V}\ln {\bar{p}}(x)\), since this is a natural extension of the condition for the existence of the Fisher quadratic form and the Amari–Chentsov tensor on a parametrized measure model.

-

2.

The locality and continuity condition holds obviously for tensor fields on statistical models associated with finite sample spaces as in the Chentsov work [15].

-

3.

In [26] and [27], Lê proved the following variant of the locality condition, answering a question raised by Lauritzen [25] and Amari–Nagaoka [4]: for any statistical manifold \((M, g, T)\) there exist a finite sample space \({\Omega }_n\) provided with a dominant measure \(\mu _n\) and an immersion \(p: M \rightarrow {\mathcal M}({\Omega }_n, \mu _n)= {\mathcal M}({\Omega }_n)\) such that the statistical structure \((g, T)\) is induced from the Amari–Chentsov structure on \(({\mathcal M}({\Omega }_n, \mu _n), {\Omega }_n, \mu _n, Id)\) via \(p\).

Our Main Theorem uses the notion of a sufficient statistic and the associated invariance property. As already stated in the introduction, sufficient statistics are important transformations between parametrized measure models, since they preserve the information of the underlying models. Although we introduce the corresponding definitions later in the paper, we present our Main Theorem already here so that its main structure guides the arguments and motivates further results of the paper.

Theorem 2.10

(Main theorem)

-

1.

Assume that \(A\) is a local statistical continuous 1-form field. If \(A\) is invariant under sufficient statistics then there is a continuous function \(c: {\mathbb {R}}\rightarrow {\mathbb {R}}\) such that for all finite measures \(\mu \) on \({\Omega }\) and for all \(V \in L^1 ({\Omega }, \mu )\) we have

$$\begin{aligned} \tilde{A}_\mu (V) = c\left( \int _{\Omega }d\mu \right) \cdot \int _{\Omega }V d\mu . \end{aligned}$$

In particular, recalling (2.5), there is no nonvanishing weakly continuous 1-form field on statistical models that is invariant under sufficient statistics. On a parametrized measure model \((M, {\Omega }, \mu , p)\) the field \(A\) is expressed as follows:

$$\begin{aligned} A (V)_x = c\left( \int _{\Omega }dp(x)\right) \cdot {\partial }_V \left( \int _{\Omega }dp (x)\right) . \end{aligned} \tag{2.8}$$

-

2.

Assume that \(F\) is a local statistical continuous quadratic form field. If \(F\) is invariant under sufficient statistics, then there are continuous functions \(f, d: {\mathbb {R}}\rightarrow {\mathbb {R}}\) such that \(F(x) = f(\int _{\Omega }d p(x))g^F(x) + d(\int _{\Omega }d p(x))A(x)^2\), where \(A\) is the field in \((1)\) with \(c=1\) and \(g^F\) is the Fisher quadratic form. In particular, up to a constant factor, the Fisher quadratic form is the unique weakly continuous quadratic form field on statistical models that is invariant under sufficient statistics.

-

3.

Assume that \(T\) is a local statistical continuous covariant symmetric 3-tensor field. If \(T\) is invariant under sufficient statistics, then there is a continuous function \(t: {\mathbb {R}}\rightarrow {\mathbb {R}}\) such that \(T(x) = t(\int _{\Omega }dp(x))T^{AC}(x) + A_1(x)^3 + A_2(x) \cdot g^F(x)\), where \(A_1, A_2\) are fields as described in \((1)\), and \(g^F\) and \(T^{AC}\) are the Fisher quadratic form and the Amari–Chentsov tensor, respectively. In particular, up to a constant factor, the Amari–Chentsov tensor is the unique weakly continuous 3-symmetric tensor field on statistical models that is invariant under sufficient statistics.
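The passage from the formula for \(\tilde{A}_\mu \) in part (1) to (2.8) is a short computation, spelled out here for convenience; it combines the locality condition (2.7) with identity (2.6):

$$\begin{aligned} A(V)_x &= \tilde{A}_{p(x)}\big ({\partial }_V \ln {\bar{p}}(x)\big ) = c\Big (\int _{\Omega }dp(x)\Big ) \int _{\Omega }{\partial }_V \ln {\bar{p}}(x, {\omega })\, dp(x) \\ &= c\Big (\int _{\Omega }dp(x)\Big ) \cdot {\partial }_V \Big (\int _{\Omega }dp(x)\Big ). \end{aligned}$$

On a statistical model, \(\int _{\Omega }dp(x) \equiv 1\), so the last factor vanishes and \(A \equiv 0\), in accordance with the statement in part (1).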

Campbell noticed that the Fisher metric on parametrized measure models associated with a finite sample space \({\Omega }_n\) coincides with the Shahshahani metric [10], which is important in mathematical biology and game theory [37]. It would be interesting to find applications in this direction for the Fisher metric and for the other natural metrics on generalized statistical models described in the Main Theorem.

3 Sufficient statistics and the Amari–Chentsov structure

A statistic \(\kappa \) is a measurable map from a measure space \(({\Omega }_1, \mu _1)\) to a measurable space \({\Omega }_2\). One of the most important properties of the Fisher quadratic form and the Amari–Chentsov tensor is their invariance under statistics \(\kappa : {\Omega }_1 \rightarrow {\Omega }_2\) that are sufficient (a notion introduced by Fisher in 1922 [17]) for the parameter \(x\in (M, {\Omega }_1, \mu , p)\) (Definition 3.1, Theorem 3.5). In other words, if \(\kappa \) is sufficient, then the Fisher quadratic form and the Amari–Chentsov tensor on \((M,{\Omega }_1, \mu , p)\) and on \((M, {\Omega }_2, \kappa _*(\mu ), \kappa _*(p))\) coincide. Sufficient statistics are important transformations between parametrized measure models, since they preserve the information of the underlying models. Thus one wishes to know whether there are other quadratic forms and symmetric 3-tensors on parametrized measure models that are invariant under sufficient statistics. For statistical models associated with finite sample spaces, Chentsov answered this question in the negative [15], see Proposition 3.19. However, one naturally wishes to consider infinite sample spaces \(\Omega \); in this case the space of measures becomes infinite dimensional, and the topological aspects become more subtle. More precisely, the main difficulty in extending the Chentsov theorem to all parametrized measure models is that Chentsov’s argument rests on two facts that are specific to finite sample spaces. Firstly, a statistical model associated with a finite sample space can be regarded locally as a submanifold of a universal statistical model \(({\mathcal P}_+ ({\Omega }_n, \mu _n), {\Omega }_n, \mu _n)\), which is a finite-dimensional open simplex (Example 2.6). In this case, it suffices to consider the Fisher metric, the Amari–Chentsov tensor and other tensor fields on this open simplex.
Secondly, the structure of sufficient statistics associated with the considered statistical models can be described in terms of Markov congruent embeddings [15], see also our discussion at the end of Sect. 4. It is not easy to generalize these facts to statistical models associated with infinite sample spaces since, in particular, there is no canonical smooth structure on the set \({\mathcal M}_{+}({\Omega }, \mu )\) of all measures equivalent to \(\mu \), or on the set \({\mathcal M}({\Omega }, \mu )\) of all measures dominated by \(\mu \).

In this section, we first give a simple proof that the Amari–Chentsov structure is invariant under sufficient statistics (Theorem 3.5). We also give a geometric proof of the Fisher–Neyman factorization theorem, which characterizes a sufficient statistic \(\kappa : ({\Omega }_1, \mu _1) \rightarrow {\Omega }_2\) under the assumption that \(\kappa : {\Omega }_1 \rightarrow {\Omega }_2\) is a smooth map (Theorem 3.10). Using Theorem 3.10, we present a proof of the monotonicity theorem (Theorem 3.11). We also consider examples of sufficient statistics associated with Markov congruent embeddings from \({\mathcal M}_{+}({\Omega }_n, \mu _n)\) to \({\mathcal M}_{+}({\Omega }_m, \mu _m)\) (Example 3.14). Using them, we discuss Chentsov’s results on geometric structures that are invariant under sufficient statistics between finite sample spaces (Proposition 3.19, Lemma 3.16). We shall then be in a position to prove our Main Theorem.

For a measurable map \(\kappa :({\Omega }_1,\mu _1) \rightarrow {\Omega }_2\) let us denote by \(\kappa _* (\mu _1)\) the push-forward measure on \({\Omega }_2\).

Definition 3.1

(cf. [4, (2.17)], [9, Theorem 1, p. 117]) Assume that \((M, {\Omega }_1, \mu _1, p_1)\) is a \(k\)-integrable parametrized measure model and \({\Omega }_2\) is a measurable space. A statistic \(\kappa : ({\Omega }_1, \mu _1) \rightarrow {\Omega }_2\) is said to be sufficient for the parameter \(x\in M\) if there exist a function \(s: M \times {\Omega }_2 \rightarrow {\mathbb {R}}\) and a function \(t \in L^1({\Omega }_1, \mu _1)\) such that for all \(x \in M\) we have \(s(x, {\omega }_2) \in L^1 ({\Omega }_2, \kappa _*(\mu _1))\) and

$$\begin{aligned} {\bar{p}}_1(x,{\omega }_1)=s(x,\kappa ({\omega }_1))\cdot t({\omega }_1) \quad \text {for almost all } {\omega }_1 \in ({\Omega }_1, \mu _1). \end{aligned}\tag{3.1}$$

Remark 3.2

Definition 3.1 is a version of the Fisher–Neyman characterization theorem, which states that a statistic is sufficient for the parameter \(x \in M\) if and only if (3.1) holds.

A measurable map \(\kappa :({\Omega }_1,\mu _1) \rightarrow {\Omega }_2\) transforms a parametrized measure model \((M, {\Omega }_1, \mu _1, p_1)\) into the parametrized measure model \((M, {\Omega }_2, \kappa _*(\mu _1),\kappa _*(p_1))\), whose density potential \(\kappa _*({\bar{p}}_1)\) is defined by

$$\begin{aligned} \kappa _*(p_1(x)) = \kappa _*({\bar{p}}_1)(x)\cdot \kappa _*(\mu _1). \end{aligned}$$

Lemma 3.3

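A statistic \(\kappa : ({\Omega }_1, \mu _1) \rightarrow {\Omega }_2\) is sufficient for the parameter \(x\in M\) if and only if the function

$$\begin{aligned} r(x, {\omega }_1) := \frac{{\bar{p}}_1(x, {\omega }_1)}{\kappa _*({\bar{p}}_1)(x, \kappa ({\omega }_1))} \end{aligned}$$

does not depend on \(x\) for almost all \({\omega }_1\in ({\Omega }_1,\mu _1).\)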

Proof

The “if” part of Lemma 3.3 is obvious. Now we assume that (3.1) holds, i.e. \({\bar{p}}_1(x,{\omega }_1)=s(x,\kappa ({\omega }_1))\cdot t({\omega }_1)\) for all \(x\in M\) and almost everywhere on \(({\Omega }_1, \mu _1)\). Then for all \(x \in M\) and almost all \({\omega }_1 \in ({\Omega }_1, \mu _1)\) we have

From (3.3) we obtain for all \(x\in M\)

This completes the proof of Lemma 3.3.\(\square \)
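The criterion of Lemma 3.3 can be checked numerically on a small example. The sketch below assumes, for illustration, \(N = 3\) i.i.d. Bernoulli trials on \({\Omega }_1 = \{0,1\}^3\) with the counting measure as reference measure, and evaluates the ratio \({\bar{p}}_1(x,{\omega }_1)/\kappa _*({\bar{p}}_1)(x,\kappa ({\omega }_1))\) for two statistics: the number of successes, which is sufficient, and the first trial alone, which is not. All helper names are hypothetical.

```python
import itertools
import math

N = 3
OMEGA1 = list(itertools.product([0, 1], repeat=N))
MU1 = {w: 1.0 for w in OMEGA1}  # counting measure as reference (an assumption)


def p1_bar(x, w):
    """Density of N i.i.d. Bernoulli(x) trials w.r.t. the counting measure MU1."""
    k = sum(w)
    return x ** k * (1 - x) ** (N - k)


def pushforward(measure, kappa):
    """kappa_*(measure) for a measure given as a dict outcome -> mass."""
    out = {}
    for w, m in measure.items():
        out[kappa(w)] = out.get(kappa(w), 0.0) + m
    return out


def r(x, w, kappa):
    """Ratio p1_bar(x, w) / kappa_*(p1_bar)(x, kappa(w))."""
    push_p = pushforward({v: p1_bar(x, v) * MU1[v] for v in OMEGA1}, kappa)
    push_mu = pushforward(MU1, kappa)
    return p1_bar(x, w) / (push_p[kappa(w)] / push_mu[kappa(w)])


def depends_on_x(kappa, xs=(0.2, 0.5, 0.8)):
    """True if the ratio changes with x for some outcome w."""
    return any(
        not all(math.isclose(r(xs[0], w, kappa), r(x, w, kappa)) for x in xs)
        for w in OMEGA1
    )


def count(w):
    return sum(w)  # number of successes: sufficient


def first(w):
    return w[0]  # first trial only: not sufficient


print(depends_on_x(count), depends_on_x(first))  # False True
```

For the count statistic the ratio is identically 1 here, because the counting measure makes all outcomes with the same number of successes equally likely under every \(x\).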

We get immediately

Corollary 3.4

Assume that \(\kappa : {\Omega }_1 \rightarrow {\Omega }_2\) is a sufficient statistic for the parameter \(x\in M\) where \((M, {\Omega }_1, \mu _1, p_1)\) is a \(k\)-integrable parametrized measure model. Then \((M, {\Omega }_2, \kappa _*(\mu _1), \kappa _*(p_1))\) is also a \(k\)-integrable parametrized measure model.

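Let \(\kappa : ({\Omega }_1, \mu _1) \rightarrow ({\Omega }_2, \mu _2)\) be a statistic and \((M, {\Omega }_1, \mu _1, p_1)\) a \(k\)-integrable parametrized measure model. The Fisher quadratic form \(\tilde{g} ^F\) on the transformed parametrized measure model \((M, {\Omega }_2, \kappa _*(\mu _1), \kappa _*(p_1))\) is defined by

$$\begin{aligned} \tilde{g}^F_x(V) := \int _{{\Omega }_2} \left( {\partial }_V \ln \kappa _*({\bar{p}}_1)(x, {\omega }_2)\right) ^2 d\kappa _*(p_1(x))({\omega }_2). \end{aligned}$$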

Theorem 3.5

If a statistic \(\kappa \) is sufficient for the parameter \(x\in M,\) then the Amari–Chentsov structure transformed by \(\kappa \) is equal to the original structure.

Proof

Assume that a statistic \(\kappa \) is sufficient for the parameter \(x \in M\). By Lemma 3.3 we have for all \(x\in M\)

Hence for all \(x\in M\) and all \(V \in T_x M\)

It follows for all \(x\in M\) and all \(V \in T_x M\)

This proves the invariance of the Fisher metric under sufficient statistics. The invariance of the Amari–Chentsov tensor under sufficient statistics is proved in the same way.

\(\square \)
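As a numerical illustration of Theorem 3.5, the following sketch compares the Fisher form and the Amari–Chentsov tensor of \(N = 4\) i.i.d. Bernoulli trials with those of the push-forward model under the sufficient statistic "number of successes". The model, the point \(x_0 = 0.3\) and the finite-difference step are assumptions made for illustration; the score is computed by central differences.

```python
import itertools
import math

N = 4  # number of Bernoulli trials (illustrative choice)


def model(x):
    """Law of N i.i.d. Bernoulli(x) trials: dict outcome -> probability."""
    return {w: x ** sum(w) * (1 - x) ** (N - sum(w))
            for w in itertools.product([0, 1], repeat=N)}


def push(measure, kappa):
    """Push-forward kappa_*(measure) of a finite measure."""
    out = {}
    for w, m in measure.items():
        out[kappa(w)] = out.get(kappa(w), 0.0) + m
    return out


def fisher_and_ac(family, x, h=1e-5):
    """(g^F(d/dx, d/dx), T^AC(d/dx, d/dx, d/dx)) at x for a 1-parameter family.

    The score d/dx ln p(x)(w) is computed by central differences; it does not
    depend on the choice of an x-independent reference measure.
    """
    p, up, down = family(x), family(x + h), family(x - h)
    g = t = 0.0
    for w, pw in p.items():
        s = (math.log(up[w]) - math.log(down[w])) / (2 * h)
        g += s ** 2 * pw
        t += s ** 3 * pw
    return g, t


def sufficient_family(x):
    """Push-forward under kappa = number of successes (sufficient for x)."""
    return push(model(x), sum)


x0 = 0.3
print(fisher_and_ac(model, x0))
print(fisher_and_ac(sufficient_family, x0))
# Closed forms for this model: g^F = N/(x(1-x)), T^AC = N(1-2x)/(x(1-x))^2
```

Both lines should agree with each other and with the closed forms in the last comment, up to finite-difference error.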

Corollary 3.6

Assume that \({\Omega }\) is a differentiable manifold provided with the Borel \(\sigma \)-algebra. The Amari–Chentsov structure on any \(k\)-integrable parametrized measure model \((M, {\Omega }, \mu , p)\) is invariant under the action of the diffeomorphism group of \({\Omega }\).

Remark 3.7

The first known variant of Theorem 3.5 is the second part of the monotonicity theorem (Theorem 3.11). The invariance under sufficient statistics of the Amari–Chentsov structure on statistical models associated with finite sample spaces was first discovered by Chentsov [15].

In what follows we interpret the function \(r(x, {\omega }_1)\) assuming that \({\Omega }_1\) and \({\Omega }_2\) are smooth manifolds supplied with the Borel \(\sigma \)-algebra and \(\kappa \) is smooth. Furthermore, we assume that \(\mu _1\) is dominated by a Lebesgue measure on \({\Omega }_1\), i.e. a measure that is locally equivalent to the Lebesgue measure on \({\mathbb {R}}^n\). Then the set \({\Omega }_2^{sing}\) of singular values of \(\kappa \) is a null set in \(({\Omega }_2, \kappa _*(\mu _1))\). Let \({\omega }_2\) be a regular value of \(\kappa \). Then \(\kappa ^{-1}({\omega }_2)\) is a smooth submanifold of \({\Omega }_1\). Furthermore, any sufficiently small open neighborhood \(U_{\varepsilon }({\omega }_2)\subset {\Omega }_2\) of \({\omega }_2\) consists only of regular values of \(\kappa \). Without loss of generality we assume that the preimage \(\kappa ^{-1} ( U_{\varepsilon }({\omega }_2))\) is a direct product \(U_{\varepsilon }({\omega }_2) \times \kappa ^{-1} ({\omega }_2)\), which is the case if \(U_{\varepsilon }({\omega }_2)\) is diffeomorphic to a ball. The measure \(\mu _1\) (respectively, \(p_1(x)\)) on the source space and the induced measure \(\kappa _*(\mu _1)\) (respectively, \(\kappa _*(p_1(x))\)) on the target space define a “vertical” measure \(\mu ^\perp _{{\omega }_2}\), which depends on \(\mu _1\), on each fiber \(\kappa ^{-1} ({\omega }_2)\) by the following formula:

for all \(y \in \kappa ^{-1} ({\omega }_2)\). (Respectively, we replace \(\mu _1\) by \(p_1(x)\) on both sides of (3.8).) Here we identify a point \(({\omega }_2, y\in \kappa ^{-1} ({\omega }_2))\) with the image of \(y\) in \({\Omega }_1\) under the inclusion \(\kappa ^{-1} ({\omega }_2) \rightarrow {\Omega }_1\). Note that \(d\mu _{{\omega }_2}^\perp (\mu _1, y)\) is well-defined only if \({\omega }_2 \in \kappa ({\Omega }_1)\).

Lemma 3.8

Assume that the value \({\omega }_2\) of a statistic \(\kappa \) is regular. Then \(\mu ^\perp _{{\omega }_2}(\mu _1)\) is a probability measure on \(\kappa ^{-1} ({\omega }_2)\) for any finite measure \(\mu _1\) on \({\Omega }_1\).

Proof

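We need to show that

$$\begin{aligned} \int _{\kappa ^{-1}({\omega }_2)} d\mu ^\perp _{{\omega }_2}(\mu _1, y) = 1. \end{aligned}\tag{3.9}$$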

Let \(g\) be a Riemannian metric on \({\Omega }_2\). Denote by \(D_{\varepsilon }({\omega }_2)\) the disk with center at \({\omega }_2\) and of radius \({\varepsilon }\). Using (3.8) and Fubini’s formula we obtain

Taking into account

we derive from (3.10)

This proves (3.9) and Lemma 3.8.\(\square \)

Remark 3.9

The measure \(\mu ^\perp _{{\omega }_2}\) is the conditional distribution \((d{\omega }_1| {\omega }_2)\) of the variable (elementary event) \({\omega }_1\) subject to the condition \(\kappa = {\omega }_2\). In general, a conditional distribution \( (d{\omega }_1|{\omega }_2)\) of the variable \({\omega }_1\) subject to condition \(\kappa = {\omega }_2\) can be defined for measurable mappings, which need not be smooth. We refer to [21, p. 81], [9, p. 106] for a definition of a conditional distribution in a general case.

Theorem 3.10

Assume that \({\Omega }_1\) and \({\Omega }_2\) are smooth manifolds supplied with Borel \(\sigma \)-algebras and \(\mu _1\) is a measure on \({\Omega }_1\) dominated by a Lebesgue measure. Let \((M, {\Omega }_1, \mu _1, p_1)\) be a \(k\)-integrable parametrized measure model. A smooth statistic \(\kappa : ({\Omega }_1, \mu _1) \rightarrow {\Omega }_2\) is sufficient for the parameter \(x\in M\) if and only if the conditional distribution \(\mu _{{\omega }_2}^\perp (p_1(x))\) defined on the set of regular values \({\omega }_2\) of \(\kappa \) is independent of \(x\in M\).

Proof

Representing a point \({\omega }_1\) by the pair \((\kappa ({\omega }_1), y)\), \(y \in \kappa ^{-1} (\kappa ({\omega }_1))\), we write

Observe that (3.13) is equivalent to the following

(3.14) implies that \(\tilde{\mu }^\perp _{\kappa ({\omega }_1)} (x, y)\) coincides with \( r(x, {\omega }_1)\). Now we obtain Theorem 3.10 from Lemma 3.3 immediately.

\(\square \)

Using Lemma 3.3 and Theorem 3.10 we will present a proof of the monotonicity theorem (Theorem 3.11), which characterizes sufficient statistics in terms of the Fisher information metric.

Theorem 3.11

(Monotonicity theorem, cf. [4, Theorem 2.1]) Assume that \({\Omega }_1\) and \({\Omega }_2\) are smooth manifolds provided with Borel \(\sigma \)-algebras and \(\mu _1\) is a Lebesgue measure on \({\Omega }_1\). Let \((M, {\Omega }_1, \mu _1, p_1)\) be a \(k\)-integrable parametrized measure model and \(\kappa : {\Omega }_1 \rightarrow {\Omega }_2\) a statistic. Denote by \(\tilde{g}^F\) the Fisher metric on the transformed parametrized measure model \((M, {\Omega }_2, \kappa _*(\mu _1), \kappa _*(p_1))\). For each \(x\in M\) and each \(V \in T_xM\) we have

$$\begin{aligned} \tilde{g}^F_x(V, V) \le g^F_x(V, V), \end{aligned}\tag{3.15}$$

where \(g^F\) is the Fisher metric on \((M, {\Omega }_1, \mu _1, p_1)\).

Inequality (3.15) becomes an equality for all \(x \in M\) and for all \(V\in T_x M\) if and only if the statistic \(\kappa \) is sufficient for the parameter \(x\in M\).

Proof

Denote by \({\Omega }^{reg}_2\) the set of regular values of \(\kappa \). Using (3.8), we obtain

Recall that

To prove Theorem 3.11, comparing (3.16) with (3.17), it suffices to show that for each \(x\in M\) and for each \({\omega }_2 \in {\Omega }^{reg}_2\) the following inequality holds

and the equality holds for all \(x\in M\) and all regular values \({\omega }_2\) if and only if \(\kappa \) is sufficient for the parameter \(x\in M\).

Taking into account (3.14) and Lemma 3.8, we note that (3.18) is equivalent to the following inequality


Lemma 3.12

For all \(x\in M\) we have

Proof

Writing \(\mu _{\kappa ({\omega }_1)}^\perp (p_1(x))= \tilde{\mu }^\perp _{\kappa ({\omega }_1)}(x, y)\mu _{\kappa ({\omega }_1)}^\perp (\mu _1)\), we observe that (3.20) is a consequence of the following identity for all \(x\in M\):

whose validity follows from Lemma 3.8.\(\square \)

Clearly (3.19) follows from Lemma 3.12, since \({\partial }_V \ln \kappa _*({\bar{p}}_1)(x, {\omega }_2)\) does not depend on \(y\). Note that (3.19), and hence (3.18), becomes an equality if and only if \(\mu ^\perp _{\kappa ({\omega }_1)}(p_1(x))\) is independent of \(x\). By Theorem 3.10 the last condition is equivalent to the sufficiency of the statistic \(\kappa \) for the parameter \(x\in M\). This proves Theorem 3.11. \(\square \)
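Inequality (3.15) and its equality case can be observed in a discrete analogue, which is not literally covered by the smooth setting of Theorem 3.11. In this sketch a hypothetical one-parameter exponential family on six points is pushed forward under several statistics; only the bijective one, which is trivially sufficient, preserves the Fisher information, while the others lose some of it. The feature values, the uniform reference measure and the statistics are assumptions.

```python
import math

PHI = [0.0, 1.0, -1.0, 2.0, 0.5, -0.3]  # hypothetical feature on {0, ..., 5}


def model(x):
    """p(x)(w) proportional to exp(x * PHI[w]) (uniform reference), normalized."""
    weights = [math.exp(x * f) for f in PHI]
    z = sum(weights)
    return {w: v / z for w, v in enumerate(weights)}


def push(measure, kappa):
    """Push-forward kappa_*(measure) of a finite measure."""
    out = {}
    for w, m in measure.items():
        out[kappa(w)] = out.get(kappa(w), 0.0) + m
    return out


def fisher(family, x, h=1e-5):
    """Fisher information at x, with the score taken by central differences."""
    p, up, down = family(x), family(x + h), family(x - h)
    return sum(((math.log(up[w]) - math.log(down[w])) / (2 * h)) ** 2 * pw
               for w, pw in p.items())


STATISTICS = {
    "identity": lambda w: w,   # bijective, hence sufficient
    "parity": lambda w: w % 2,
    "pairs": lambda w: w // 2,
    "constant": lambda w: 0,   # forgets everything
}

x0 = 0.4
g_full = fisher(model, x0)
for name, kappa in STATISTICS.items():
    g_push = fisher(lambda x, k=kappa: push(model(x), k), x0)
    print(f"{name:>8}: {g_push:.6f} <= {g_full:.6f}")
```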

Remark 3.13

Assume that a statistic \(\kappa \) is smooth. Denote by \(\hat{g} ^F_{{\omega }_2}\) the Fisher quadratic form on the statistical model \(\mu ^\perp _{{\omega }_2}(p_1(x), y)\) with respect to the reference measure \(\mu ^\perp _{\kappa ({\omega }_1)}(\mu _1, y)\) as in (3.13). Taking into account (3.16), (3.17) and (3.20) we obtain immediately the following equality for all \(x\in M\) and all \(V \in T_x M\) (cf. [4, Theorem 2.1])

The integral in the RHS of (3.21) is called the information loss [4, p.30].
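The information loss can also be computed on a finite example. The sketch below assumes a hypothetical exponential family on six points and the statistic \(\kappa (\omega ) = \lfloor \omega /2\rfloor \), and reads (3.21) as the decomposition of \(g^F\) into \(\tilde{g}^F\) plus the \(\kappa _*(p_1(x))\)-average of the Fisher forms of the conditional distributions \(\mu ^\perp _{{\omega }_2}(p_1(x))\); both this reading and the discrete setting are assumptions.

```python
import math

PHI = [0.0, 1.0, -1.0, 2.0, 0.5, -0.3]  # hypothetical feature on {0, ..., 5}


def model(x):
    """p(x)(w) proportional to exp(x * PHI[w]) (uniform reference), normalized."""
    weights = [math.exp(x * f) for f in PHI]
    z = sum(weights)
    return {w: v / z for w, v in enumerate(weights)}


def kappa(w):
    """Hypothetical statistic grouping {0, 1}, {2, 3}, {4, 5}."""
    return w // 2


def push(p):
    """Push-forward kappa_*(p)."""
    out = {}
    for w, m in p.items():
        out[kappa(w)] = out.get(kappa(w), 0.0) + m
    return out


def conditional(p, k):
    """Conditional distribution of w given kappa(w) = k."""
    fiber = {w: m for w, m in p.items() if kappa(w) == k}
    total = sum(fiber.values())
    return {w: m / total for w, m in fiber.items()}


def fisher(family, x, h=1e-5):
    """Fisher information at x, with the score taken by central differences."""
    p, up, down = family(x), family(x + h), family(x - h)
    return sum(((math.log(up[w]) - math.log(down[w])) / (2 * h)) ** 2 * pw
               for w, pw in p.items())


x0 = 0.4
g_full = fisher(model, x0)                     # g^F on the original model
g_push = fisher(lambda x: push(model(x)), x0)  # g~^F on the push-forward model
q = push(model(x0))
# Information loss: q-average of the Fisher information of the fiber conditionals.
loss = sum(q[k] * fisher(lambda x, k=k: conditional(model(x), k), x0) for k in q)
print(g_full - g_push, loss)
```

The two printed numbers agree up to finite-difference error, since the score of the full model splits into the score of \(\kappa _*(p)\) plus a conditional score whose conditional mean vanishes.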

Example 3.14

Let \({\Omega }_n\) be a finite set of \(n\) elements \(E_1,\ldots ,E_n\). Let \(\mu _n\) be the probability distribution on \({\Omega }_n\) such that \(\mu _n (E_i) = 1/ n\) for \(i \in [1,n]\). Clearly, the space \({\mathcal P}({\Omega }_n, \mu _n)\) consists of all probability distributions \(p\) on \({\Omega }_n\) which can be represented as

$$\begin{aligned} p = f\cdot \mu _n \end{aligned}$$

for some non-negative function \(f: {\Omega }_n \rightarrow {\mathbb {R}}\) such that \(\sum _{i = 1} ^n f(E_i) = n\). Denote by \(E_i^*\) the Dirac measure on \({\Omega }_n\) concentrated at \(E_i\). The space \({\mathcal M}_{+}({\Omega }_n, \mu _n)\) of measures equivalent to \(\mu _n\) consists of all measures \(p = \sum _{i=1}^n p_i E_i^*, p_i > 0\), so it is the positive cone \({\mathbb {R}}^n_+\). Let \(n \le m< \infty \). Let \(\{ \hat{F}_1,\ldots ,\hat{F}_n \}\) be a partition of the set \({\Omega }_m :=\{F_1,\ldots , F_m\}\) into disjoint subsets. Denote this partition by \(\bar{\kappa }\). We associate \(\bar{\kappa }\) with a map \(\kappa : {\Omega }_m \rightarrow {\Omega }_n\) by setting

$$\begin{aligned} \kappa (F_j) := E_i \quad \text {if } F_j \in \hat{F}_i . \end{aligned}$$

We identify \({\mathcal M}_{+}({\Omega }_m, \mu _m)\) with \({\mathbb {R}}^m_+\), the interior of the convex cone generated by the Dirac measures \(F^*_j, j \in [1,m]\). Recall that a linear mapping \(\Pi : {\mathbb {R}}^n \rightarrow {\mathbb {R}}^m, \, \Pi (E^*_k) : = \sum _{j=1}^m\Pi _{kj}F^*_j,\) is called a Markov mapping if \(\Pi _{kj} \ge 0\) and \(\sum _{j =1} ^m \Pi _{kj} = 1\) for all \(k\) (cf. Example 4.6). Following Chentsov [15, p. 56 and Lemma 9.5, p. 136], we call \(\Pi \) a Markov congruent embedding subject to a partition \(\bar{\kappa }\) if

- \(F_j \not \in \kappa ^{-1} (E_i) \;\; \Longrightarrow \;\; \Pi (E_i ^*) (F_j) = 0\),
- \(\Pi (E_i ^*) \not = 0\) for all \(i \in [1,n]\).

Note that \(\Pi ({\mathcal M}({\Omega }_n, \mu _n)) \subset {\mathcal M}({\Omega }_m, \mu _m)\). The restrictions of \(\Pi \) to \({\mathcal P}_+({\Omega }_n, \mu _n)\) and to \({\mathcal M}_+({\Omega }_n, \mu _n) = {\mathbb {R}}^n_+\) are also denoted by \(\Pi \).
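A Markov congruent embedding subject to a partition can be built by spreading the mass of each \(E_k^*\) over the block \(\hat{F}_k\) with positive weights summing to one. The sketch below does this for a hypothetical partition of \({\Omega }_5\) into two blocks, checks the Markov and congruence conditions above, and checks that \(\kappa _* \circ \Pi \) is the identity. Indices start at 0, and the weights are illustrative.

```python
import math

# Hypothetical partition of Omega_5 = {F_0, ..., F_4} into n = 2 blocks,
# hat F_0 = {F_0, F_1} and hat F_1 = {F_2, F_3, F_4}, with positive weights.
PARTITION = [[0, 1], [2, 3, 4]]
WEIGHTS = [[0.3, 0.7], [0.5, 0.25, 0.25]]  # each row sums to 1
M = 5


def congruent_embedding(partition, weights, m):
    """Matrix Pi with Pi(E_k^*) = sum_j Pi[k][j] F_j^*, supported on hat F_k."""
    pi = [[0.0] * m for _ in partition]
    for k, (block, ws) in enumerate(zip(partition, weights)):
        for j, wt in zip(block, ws):
            pi[k][j] = wt
    return pi


def kappa(j):
    """The statistic kappa(F_j) = E_k for F_j in hat F_k."""
    return next(k for k, block in enumerate(PARTITION) if j in block)


def embed(pi, p):
    """Image of the measure sum_k p[k] E_k^* under the linear map Pi."""
    return [sum(p[k] * pi[k][j] for k in range(len(p))) for j in range(len(pi[0]))]


def push(q, n):
    """kappa_*(q) for a measure q on Omega_m, as a vector of length n."""
    out = [0.0] * n
    for j, mass in enumerate(q):
        out[kappa(j)] += mass
    return out


PI = congruent_embedding(PARTITION, WEIGHTS, M)
# Markov mapping: non-negative entries, rows summing to 1.
assert all(v >= 0 for row in PI for v in row)
assert all(math.isclose(sum(row), 1.0) for row in PI)
# Congruence: Pi(E_k^*) vanishes outside kappa^{-1}(E_k), and Pi(E_k^*) != 0.
assert all(PI[k][j] == 0 for k in range(2) for j in range(M) if kappa(j) != k)
assert all(any(row) for row in PI)

p = [0.8, 2.5]  # a point of M_+(Omega_2)
print(embed(PI, p), push(embed(PI, p), 2))  # second vector equals p up to rounding
```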

Proposition 3.15

1. Let \(\Pi : {\mathcal P}_+({\Omega }_n, \mu _n) \rightarrow {\mathcal M}({\Omega }_m, \mu _m)\) be the restriction of a Markov mapping such that \(({\mathcal P}_+({\Omega }_n, \mu _n), {\Omega }_m, \mu _m, \Pi )\) is an immersed statistical model of dimension \(n-1\). A statistic \(\kappa : {\Omega }_m \rightarrow {\Omega }_n\) is sufficient for the parameter \(x\in ({\mathcal P}_+({\Omega }_n, \mu _n), {\Omega }_m, \mu _m, \Pi )\) if \(\Pi \) is a Markov congruent embedding subject to \(\kappa .\)

2. Let \(\Pi : {\mathcal M}_+({\Omega }_n, \mu _n) \rightarrow {\mathcal M}({\Omega }_m, \mu _m)\) be the restriction of a Markov mapping such that \(({\mathcal M}_+({\Omega }_n, \mu _n), {\Omega }_m, \mu _m, \Pi )\) is an immersed parametrized measure model of dimension \(n\). A statistic \(\kappa : {\Omega }_m \rightarrow {\Omega }_n\) is sufficient for the parameter \(x\in ({\mathcal M}_+({\Omega }_n, \mu _n), {\Omega }_m, \mu _m, \Pi )\) if \(\Pi \) is a Markov congruent embedding subject to \(\kappa \).

Proof

The first assertion of Proposition 3.15 follows directly from Chentsov’s results [15, Lemma 6.1, p. 77 and Lemma 9.5, p. 136].

The second assertion of Proposition 3.15 is a consequence of the first assertion and the following.

Lemma 3.16

Assume \((M, {\Omega }, \mu , p)\) is a parametrized measure model and \(\kappa : {\Omega }\rightarrow {\Omega }' \) is sufficient for the parameter \(x \in (M, {\Omega }, \mu , p)\). Then \(\kappa \) is also sufficient for the parameter \(x \in (M\times (0,1), {\Omega }, \mu , \hat{p}(x, t): = t p (x))\).

Proof of Lemma 3.16

Since \(\kappa _* (t\mu ) = t\kappa _*(\mu )\) for any finite measure \(\mu \) on \({\Omega }\) and \( t \in {\mathbb {R}}^+\), we get

Taking into account Lemma 3.3, this proves Lemma 3.16.\(\square \)

This completes the proof of Proposition 3.15.\(\square \)

Since \(\kappa _* \circ \Pi = Id\) for Markov congruent embeddings \(\Pi \), using Theorem 3.5 we obtain immediately

Corollary 3.17

Let \(\Pi : {\mathcal M}({\Omega }_n, \mu _n) \rightarrow {\mathcal M}({\Omega }_m, \mu _m)\) be a Markov congruent embedding. Then the Amari–Chentsov structure on \({\mathcal M}_{+}({\Omega }_n, \mu _n)\) coincides with the Amari–Chentsov structure on \(({\mathcal M}_{+}({\Omega }_n, \mu _n), {\Omega }_m, \mu _m, \Pi )\).
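In the parametrization \(Id\) of \({\mathcal M}_+({\Omega }_n, \mu _n)\) one has \({\partial }_V \ln p_i = V_i/p_i\), so the Fisher metric is \(\sum _i V_iW_i/p_i\) and the Amari–Chentsov tensor is \(\sum _i U_iV_iW_i/p_i^2\). Since \(\Pi \) is linear, it acts on tangent vectors by the same matrix. The following sketch checks Corollary 3.17 numerically for a hypothetical embedding of \({\Omega }_2\) into \({\Omega }_5\) at random points and vectors.

```python
import math
import random

# Hypothetical congruent embedding of Omega_2 into Omega_5 (rows sum to 1,
# row k supported on the block hat F_k = {0, 1} or {2, 3, 4}).
PI = [[0.3, 0.7, 0.0, 0.0, 0.0],
      [0.0, 0.0, 0.5, 0.25, 0.25]]


def embed(v):
    """(Pi v)_j = sum_k v_k Pi[k][j]; used for points and tangent vectors alike."""
    return [sum(v[k] * PI[k][j] for k in range(len(v))) for j in range(len(PI[0]))]


def fisher(p, v, w):
    """g^F_p(V, W) = sum_i p_i (V_i/p_i)(W_i/p_i) in the Id parametrization."""
    return sum(vi * wi / pi for pi, vi, wi in zip(p, v, w))


def amari_chentsov(p, u, v, w):
    """T^AC_p(U, V, W) = sum_i p_i (U_i/p_i)(V_i/p_i)(W_i/p_i)."""
    return sum(ui * vi * wi / pi ** 2 for pi, ui, vi, wi in zip(p, u, v, w))


random.seed(0)
p = [random.uniform(0.1, 2.0) for _ in range(2)]  # a point of M_+(Omega_2)
u, v, w = ([random.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(3))

lhs, rhs = fisher(p, v, w), fisher(embed(p), embed(v), embed(w))
print(lhs, rhs, math.isclose(lhs, rhs))
lhs3 = amari_chentsov(p, u, v, w)
rhs3 = amari_chentsov(embed(p), embed(u), embed(v), embed(w))
print(lhs3, rhs3, math.isclose(lhs3, rhs3))
```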

Remark 3.18

A variant of Proposition 3.15 has been proved by Chentsov [15, Lemma 6.1, p.77 and Lemma 9.5, p.136], see also Proposition 4.7 below. It plays a decisive role in the Chentsov theorem [15] on geometric structures on statistical models \((M, {\Omega }_n, \mu _n, p)\) that are invariant under sufficient statistics, which we reformulate in Proposition 3.19 below, see also the explanation that follows Proposition 3.19. Proposition 3.15 implies that such geometric structures are preserved under Markov congruent embeddings, which are easier to understand.

Proposition 3.19

1. (cf. [15, Lemma 11.1, p. 157]) Assume that \(C\) is a continuous function on statistical models \(({\mathcal P}_+({\Omega }_n, \mu _n), {\Omega }_n,\mu _n, Id )\) such that \(C\) is invariant under Markov congruent embeddings. Then \(C\) is a constant.

2. (cf. [15, Lemma 11.2, p. 158]) Assume that \(A\) is a continuous 1-form field on statistical models \(({\mathcal P}_+({\Omega }_n, \mu _n), {\Omega }_n, \mu _n, Id)\) such that \(A\) is invariant under Markov congruent embeddings. Then \(A\) equals zero.

3. (cf. [15, Theorem 11.1, p. 159]) Assume that \(F\) is a continuous quadratic form field on statistical models \(({\mathcal P}_+({\Omega }_n, \mu _n), {\Omega }_n, \mu _n, p)\) such that \(F\) is invariant under Markov congruent embeddings. Then there is a constant \(c\) such that \(F = c\cdot g^F\), where \(g^F\) is the Fisher metric.

4. (cf. [15, Theorem 12.2, p. 175]) Assume that \(T\) is a continuous covariant 3-tensor field on statistical models \(({\mathcal P}_+({\Omega }_n, \mu _n), {\Omega }_n, \mu _n, Id)\) such that \(T\) is invariant under Markov congruent embeddings. Then there is a continuous function \(t: {\mathbb {R}}\rightarrow {\mathbb {R}}\) such that \(T = t (\sum _{i=1}^n p_i(x))\cdot T^{AC}\), where \(T^{AC}\) is the Amari–Chentsov tensor.

The argument of Chentsov for proving (1) (actually for its general form in [15, Lemma 11.1]) is based on the fact that the elementary geometry (with respect to the Markov congruent embeddings \(\Pi \)) of the spaces \(({\mathcal P}_+ ({\Omega }_n, \mu _n), {\Omega }_m, \mu _m, \Pi )\) is almost homogeneous. The Chentsov proof of (2) rests on (1) and on permutation invariance, since a map from \({\Omega }_n\) to itself that permutes the points of \({\Omega }_n\) is clearly a sufficient statistic. The Chentsov proof of (3) uses similar arguments. Chentsov gave a proof of (4) in an equivalent formulation, namely the uniqueness of the Amari–Chentsov connections among those affine connections that are invariant under Markov congruent embeddings, see also Remark 2.3.

In [10] Campbell gave a generalization of the second assertion of Proposition 3.19 for parametrized measure models associated with finite sample spaces. A generalization of Proposition 3.19 to parametrized measure models associated with finite sample spaces is given in the following corollary.

Corollary 3.20

1. Assume that \(A\) is a continuous 1-form field on parametrized measure models \(({\mathcal M}_+({\Omega }_n, \mu _n), {\Omega }_n, \mu _n, Id)\) such that \(A\) is invariant under Markov congruent embeddings. Then there is a continuous function \(c: {\mathbb {R}}\rightarrow {\mathbb {R}}\) such that for all \(x \in M\) and all \(V \in T_x M \subset {\mathbb {R}}^n,\) \(A_x(V) = c(\sum _{i=1} ^n p_i(x))\sum _{i =1} ^n p_i {\partial }_V \ln p_i(x).\)

2. Assume that \(F\) is a continuous quadratic form field on parametrized measure models \(({\mathcal M}_+({\Omega }_n, \mu _n), {\Omega }_n, \mu _n, Id)\) such that \(F\) is invariant under Markov congruent embeddings. Then there are continuous functions \(f, d: {\mathbb {R}}\rightarrow {\mathbb {R}}\) such that \( F = f (\sum _{i=1}^n p_i(x))\cdot g^F + d(\sum _{i=1}^n p_i(x)) A^2\), where \(A\) is the 1-form field described in (1) with \(c = 1\) and \( g^F\) is the Fisher metric.

3. Assume that \(T\) is a continuous covariant symmetric 3-tensor field on parametrized measure models \(({\mathcal M}_+({\Omega }_n,\mu _n), {\Omega }_n, \mu _n, p)\) associated with finite sample spaces \(\{{\Omega }_n\}\) such that \(T\) is invariant under Markov congruent embeddings. Then there is a continuous function \(t: {\mathbb {R}}\rightarrow {\mathbb {R}}\) such that \(T = t (\sum _{i=1}^n p_i(x))\cdot T^{AC} + g^F \cdot A_2 + A^3_1 \), where \(g^F\) and \(T^{AC}\) are the Fisher metric and the Amari–Chentsov tensor, respectively, and \(A_1, A_2\) are fields as described in (1).

Proof

1. Using the induced Fisher metric on \(T^*{\mathcal M}_+({\Omega }_n, \mu _n)\), we decompose the 1-form \(A\in T^*{\mathcal M}_{+}({\Omega }_n, \mu _n)\) into a sum of two orthogonal 1-forms \(A_0 \) and \( A ^\perp \), where \(A_0\) annihilates the tangent hyperplane \(T {\mathcal M}^{ p_1+ \cdots +p_n}_{+} ({\Omega }_n, \mu _n) \subset T {\mathcal M}_{+} ({\Omega }_n,\mu _n)\) and \(A^\perp = A- A_0\). Since the Fisher metric is invariant under the Markov congruent embeddings, each component \(A_0\) and \(A^\perp \) is also invariant under the Markov congruent embeddings. Taking into account the first assertion (1) of Proposition 3.19, it follows that \(A_0(V)= c(\sum _{i =1}^n p_i (x))\cdot \sum _{i =1} ^n p_i {\partial }_V\ln p_i \) for some continuous function \(c\). By the second assertion of Proposition 3.19, the component \(A^\perp \) vanishes. This proves the first assertion (1) of Corollary 3.20.

2. Using the same argument, i.e. decomposing \(F\) into three orthogonal components, we obtain the second assertion of Corollary 3.20 from the third assertion of Proposition 3.19 and the first assertion of Corollary 3.20.

3. The last assertion of Corollary 3.20 is obtained from its particular case for statistical models (the last assertion of Proposition 3.19) and from the first and the second assertion of Corollary 3.20. \(\square \)
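In the coordinates \(Id\), \(\sum _i p_i {\partial }_V \ln p_i = \sum _i V_i\), so the fields of Corollary 3.20 are easy to evaluate. The sketch below picks arbitrary continuous coefficient functions (\(\cos \), \(\exp \) and \(s \mapsto s^2\), purely for illustration) and a hypothetical congruent embedding, and compares each form before and after embedding; a Euclidean quadratic form, which is not of the type in Corollary 3.20, is included for contrast.

```python
import math

# Hypothetical congruent embedding of Omega_2 into Omega_5 (rows sum to 1).
PI = [[0.3, 0.7, 0.0, 0.0, 0.0],
      [0.0, 0.0, 0.5, 0.25, 0.25]]


def embed(v):
    """(Pi v)_j = sum_k v_k Pi[k][j]; used for points and tangent vectors alike."""
    return [sum(v[k] * PI[k][j] for k in range(len(v))) for j in range(len(PI[0]))]


# Arbitrary continuous coefficient functions of the total mass (illustrative).
c = math.cos
f = math.exp


def d(s):
    return s ** 2


def one_form(p, v):
    """A_p(V) = c(sum p_i) * sum_i p_i d_V ln p_i = c(sum p_i) * sum_i V_i."""
    return c(sum(p)) * sum(v)


def quad_form(p, v):
    """F_p(V) = f(sum p_i) g^F_p(V, V) + d(sum p_i) A_p(V)^2, with c = 1 inside A."""
    fisher = sum(vi * vi / pi for pi, vi in zip(p, v))
    return f(sum(p)) * fisher + d(sum(p)) * sum(v) ** 2


def euclidean(p, v):
    """A quadratic form that is not of the type in Corollary 3.20."""
    return sum(vi * vi for vi in v)


p, v = [0.8, 2.5], [0.4, -1.1]
for form in (one_form, quad_form, euclidean):
    print(form.__name__, form(p, v), form(embed(p), embed(v)))
```

The first two forms take the same value before and after the embedding because \(\Pi \) preserves total mass and \(\sum _i V_i\); the Euclidean form does not.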

Our proof of the Main Theorem (Theorem 2.10) is based on the following main observation. For each step function \(\tau \) on \(({\Omega }, \mu )\) subject to a statistic \(\kappa : ({\Omega }, \mu )\rightarrow {\Omega }_n: =\{ E_1,\ldots ,E_n\}\) (Definition 3.21), there exist a parametrized measure model \((M, {\Omega }, \mu , p)\) and a vector \(V\in T_xM\) such that \(p(x) = \mu \) and \({\partial }_V \ln {\bar{p}} = \tau \); moreover, \(\kappa \) is sufficient for the parameter \(x\in M\) (Lemma 3.22). Thus, the computation of any pointwise continuous covariant \(k\)-tensor field on \({\mathcal M}({\Omega })\) whose induced \(k\)-tensor field on parametrized measure models is invariant under sufficient statistics is reduced to the case \({\Omega }= {\Omega }_n\), which has been considered by Chentsov for \(k = 1,2,3\).

Definition 3.21

(cf. Example 3.14) Let \(({\Omega }, \mu )\) be a finite measure space and let \(\bar{\kappa }\) be a decomposition \({\Omega }= D_1 \dot{\cup }\cdots \dot{\cup }D_n\) into measurable subsets \(D_i\). Denote by \(\kappa \) the associated statistic \({\Omega }\rightarrow {\Omega }_n, \, \kappa (D_i): = E_i\). A function \(\tau : {\Omega }\rightarrow {\mathbb {R}}\) is called a step function subject to \(\kappa \) if \(\tau ( {\omega }) = \sum _{i=1}^n \tau _i \cdot \chi _{D_i}({\omega })\), where \(\tau _i \in {\mathbb {R}}\) and \(\chi _{D_i}\) is the characteristic function of \(D_i\).

Lemma 3.22

Let \(M = (0,1)\) and \({\Omega }\) be a smooth manifold. Given a finite measure \(\mu \in {\mathcal M}(\Omega ),\) a point \(x_0 \in M,\) and a step function \(\tau : = \sum _i \tau _i \chi _{D_i}\) on \({\Omega }\) subject to a statistic \(\kappa : ({\Omega }, \mu ) \rightarrow {\Omega }_n,\) there exist a \(k\)-integrable parametrized measure model \((M, {\Omega }, \mu , p)\) and \(V \in T_{x_0} M\) such that

1. \(\kappa \) is sufficient for the parameter in \(M,\)

2. \(p(x_0) = \mu ,\)

3. \({\partial }_V \ln {\bar{p}} =\sum _i \tau _i \chi _{D_i}.\)

Proof

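Note that \(\kappa \) is a sufficient statistic for a \(k\)-integrable parametrized measure model \((M, {\Omega }, \mu , p)\) iff \(p\) is given as in Definition 3.1, i.e.

$$\begin{aligned} \ln {\bar{p}}(x, {\omega }) = \sum _{i=1}^n s_i(x) \chi _{D_i}({\omega }) + \ln t({\omega }) \end{aligned}$$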

for smooth functions \(s_i: M \rightarrow {\mathbb {R}}\) and \(t \in L^1 ({\Omega })\). For such \(\ln {\bar{p}} (x, {\omega })\) the conditions (2) and (3) are equivalent to the following

- \(\sum _{i =1}^n s_i(x_0) \chi _{D_i}(\omega ) + \ln t(\omega )= 0\),
- \(\sum _{i =1}^n {\partial }_V s_i(x_0) \chi _{D_i}(\omega ) = \sum _i \tau _i \chi _{D_i}({\omega })\).

Set \(t({\omega }) = 1\). The existence of functions \(s_i (x)\) satisfying the listed conditions is obvious: it suffices to choose smooth \(s_i \) such that \(s_i (x_0) = 0\) and \({\partial }_V s_i (x_0) = \tau _i\). In fact, we can simply take \(V={\partial }_x\) and \(s_i(x) = (x-x_0) \tau _i \). Finally, the parametrized measure model defined in this way is \(k\)-integrable, since the \(s_i\) are smooth and \(\tau \) takes only finitely many values. \(\square \)
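The construction in this proof can be sketched on a finite stand-in for \(({\Omega }, \mu )\). The eight atoms, their masses, the blocks \(D_i\) and the values \(\tau _i\) below are hypothetical; the density is \({\bar{p}}(x,\omega ) = \exp ((x-x_0)\tau (\omega ))\), i.e. \(s_i(x) = (x-x_0)\tau _i\) and \(t = 1\). The sufficiency check uses the ratio \({\bar{p}}/(\kappa _*({\bar{p}})\circ \kappa )\), assuming the ratio form of the criterion in Lemma 3.3.

```python
import math

# A finite stand-in for (Omega, mu): 8 atoms with hypothetical masses.
MU = [0.1, 0.2, 0.05, 0.3, 0.15, 0.1, 0.05, 0.25]
BLOCKS = [0, 0, 1, 1, 1, 2, 2, 0]  # BLOCKS[w] = i means w lies in D_i
TAU = [1.5, -0.4, 2.0]  # step function tau = sum_i TAU[i] * chi_{D_i}
X0 = 0.5


def p_bar(x, w):
    """Density exp(sum_i s_i(x) chi_{D_i}(w)) with s_i(x) = (x - X0) * TAU[i], t = 1."""
    return math.exp((x - X0) * TAU[BLOCKS[w]])


def push_density(x, i):
    """kappa_*(p_bar)(x, E_i) = kappa_*(p(x))(E_i) / kappa_*(mu)(E_i)."""
    fiber = [w for w in range(len(MU)) if BLOCKS[w] == i]
    return sum(p_bar(x, w) * MU[w] for w in fiber) / sum(MU[w] for w in fiber)


def r(x, w):
    """Ratio p_bar(x, w) / kappa_*(p_bar)(x, kappa(w))."""
    return p_bar(x, w) / push_density(x, BLOCKS[w])


h = 1e-6
for w in range(len(MU)):
    assert math.isclose(p_bar(X0, w) * MU[w], MU[w])  # (2): p(x0) = mu
    dlog = (math.log(p_bar(X0 + h, w)) - math.log(p_bar(X0 - h, w))) / (2 * h)
    assert math.isclose(dlog, TAU[BLOCKS[w]], rel_tol=1e-6)  # (3): d_V ln p_bar = tau
    assert all(math.isclose(r(x, w), 1.0) for x in (0.1, 0.5, 0.9))  # (1): sufficiency
print("conditions (1)-(3) of Lemma 3.22 hold on this example")
```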

Proof of the Main Theorem

1. Let \(A\) be a pointwise continuous 1-tensor field on \({\mathcal M}({\Omega })\) satisfying condition (1) in the Main Theorem. To prove the first assertion of the Main Theorem, it suffices, by continuity of \(A\) and density of step functions, to assume that \(V\) is a step function \(\sum _i \tau _i \chi _{D_i}\) (using again the identification between the tangent vector \(V\) and \({\partial }_V \ln {\bar{p}}\)) subject to a statistic \(\kappa :{\Omega }\rightarrow {\Omega }_n\). By Lemma 3.22 there exists a \(k\)-integrable parametrized measure model \((M, {\Omega }, \mu , p)\) such that

(1) \({\bar{p}} (x, {\omega }) =e^{\sum _i s_i(x)\chi _{D_i}({\omega })}\), where \(s_i \in C^\infty (M)\); hence \(\kappa \) is sufficient for the parameter \(x\in M\),

(2) \(p(x_0) = \mu \),

(3) \({\partial }_V \ln {\bar{p}}(x, {\omega }) = \sum _i \tau _i \chi _{D_i}\).

Set

$$\begin{aligned} d_i: = \int _{D_i}d\mu . \end{aligned}$$

Then \(\kappa _*(\mu ) = \sum _{i=1}^n d_i E_i ^*\), where \(E_i^*\) is the Dirac measure concentrated at \(E_i\). Since \(A\) is associated with a statistical field which is invariant under \(\kappa _*\), we have

$$\begin{aligned} A_\mu (\tau ) = A_{\kappa _*(\mu )}\left( {\partial }_V \ln \kappa _*({\bar{p}})\right) = A_{(d_1,\ldots ,d_n)}(\tau _1,\ldots ,\tau _n) = c \left( \sum _{i=1}^n d_i\right) \sum _{i=1}^n d_i \tau _i , \end{aligned}\tag{3.23}$$

where \(c\) is the function defined in Corollary 3.20(1). Note that

$$\begin{aligned}&\sum _i d_i = \int _{\Omega }d\mu , \\&\sum _i d_i\tau _i =\sum _i \left( \int _{D_i} \tau _id\mu \right) = \int _{\Omega }\tau d\mu . \end{aligned}$$

This proves the first assertion of the Main Theorem. The remaining assertions of the Main Theorem concerning the 1-form field \(A\) follow immediately.


2. Now assume that \(F\) is a pointwise continuous quadratic form on \({\mathcal M}({\Omega })\) and \(\mu \) is a finite measure. To prove the second assertion of the Main Theorem we follow the same line of argument as above. Since \(F\) is a quadratic form, it suffices to prove the second assertion for a single step function \(\tau \) on \({\Omega }\) (otherwise we would have to consider step functions subject to different statistics). We deduce the second assertion of the Main Theorem from Corollary 3.20(2), using the observation that the Fisher metric on \({\mathcal M}({\Omega })\) applied to \(\tau \),

$$\begin{aligned} g^F_\mu (\tau ) = \int _{\Omega }\tau ^2 d\mu =\sum _{i =1}^n d_i \tau _i^2 , \end{aligned}$$

is equal to the Fisher metric applied to \(\kappa _*(\tau ) = (\tau _1,\ldots ,\tau _n)\):

$$\begin{aligned} g^F_{ (d_1,\ldots ,d_n)} ([\tau _1,\ldots ,\tau _n])=\sum _{i =1}^n d_i\tau _i^2. \end{aligned}$$

3. The last assertion of the Main Theorem is proven in the same way. It follows from Corollary 3.20(3), using the observation that the Amari–Chentsov symmetric 3-tensor on \({\mathcal M}({\Omega })\) applied to \(\tau \),

$$\begin{aligned} T^{AC}_\mu (\tau ) = \int _{\Omega }\tau ^3d\mu =\sum _{i =1}^n d_i \tau _i^3 , \end{aligned}$$

is equal to the Amari–Chentsov tensor applied to \(\kappa _*(\tau ) = (\tau _1,\ldots ,\tau _n)\):

$$\begin{aligned} T^{AC}_{ (d_1,\ldots , d_n)} ([\tau _1,\ldots , \tau _n]) = \sum _{i =1}^n d_i \tau _i^3. \end{aligned}$$

To complete the proof of the Main Theorem we need to show that

(1) all the tensor fields described in the Main Theorem are weakly continuous on \(n\)-integrable parametrized measure models,

(2) the tensor field \(A\) is invariant under sufficient statistics.

Note that (1) holds since the value \(A (V)\) (resp. \(F(V)\), \(T(V)\)) of a tensor field \(A\) (resp. \(F\), \(T\)) in the Main Theorem at a continuous vector field \(V\) on \(M\) is an algebraic function whose arguments are tensor fields of the following forms: \((x, V) \mapsto c(\int _{\Omega }dp(x))\) and \((x, V) \mapsto \int _{\Omega }({\partial }_V\ln {\bar{p}})^k \, d p(x)\) with \( k = 1\) (resp. \(k =2, 3\)), which are continuous by condition (2) of Definition 2.4. The proof of (2) is similar to the proof of Theorem 3.5, using

$$\begin{aligned} {\partial }_V p(x) = {\partial }_V \ln {\bar{p}}(x) \, {\bar{p}}(x)\mu \end{aligned}$$

for \(p(x) = {\bar{p}} (x) \mu \) (cf. Remark 2.5), and is therefore omitted. \(\square \)
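The reduction used in this proof rests on the identity \(\int _{\Omega }\tau ^k\, d\mu = \sum _i d_i \tau _i^k\) for a step function \(\tau \) and \(k = 1, 2, 3\). A minimal check on a hypothetical finite measure, with \(d_i = \mu (D_i)\) computed as the masses of \(\kappa _*(\mu )\), looks as follows; the atoms, blocks and values \(\tau _i\) are illustrative assumptions.

```python
import math

# Hypothetical finite measure mu on 8 atoms, blocks D_i given by kappa, and tau_i.
MU = [0.1, 0.2, 0.05, 0.3, 0.15, 0.1, 0.05, 0.25]
BLOCKS = [0, 0, 1, 1, 1, 2, 2, 0]  # BLOCKS[w] = i means w lies in D_i
TAU = [1.5, -0.4, 2.0]


def on_omega(k):
    """Integral over Omega of tau^k d(mu), with tau = sum_i TAU[i] chi_{D_i}."""
    return math.fsum(TAU[BLOCKS[w]] ** k * m for w, m in enumerate(MU))


def on_omega_n(k):
    """sum_i d_i tau_i^k, where d_i = mu(D_i) are the masses of kappa_*(mu)."""
    d = [0.0] * len(TAU)
    for w, m in enumerate(MU):
        d[BLOCKS[w]] += m
    return math.fsum(di * ti ** k for di, ti in zip(d, TAU))


for k, name in [(1, "integral of tau"), (2, "g^F(tau)"), (3, "T^AC(tau)")]:
    print(f"{name}: {on_omega(k):.12f} vs {on_omega_n(k):.12f}")
```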


Remark 3.23

It is not hard to prove a version of the Main Theorem for local continuous statistical covariant tensor fields on statistical models that are invariant under sufficient statistics, which is a direct generalization of the Chentsov theorem [14], see its formulation in Proposition 3.19. In particular, it implies the uniqueness of the Amari–Chentsov connections among those affine connections on statistical models that are invariant under sufficient statistics, see also Remark 2.3. All the arguments in the proof of the Main Theorem also hold for this “statistical” version, since the image of a statistical model under a sufficient statistic is also a statistical model.

4 Markov morphisms and sufficient statistics

In this section we introduce the notions of a Markov morphism, a \(\mu \)-representable Markov morphism, and a restricted Markov morphism (Definitions 4.1, 4.2, 4.4), which extend Chentsov's notion of a Markov morphism [13] and the notion of a statistical morphism introduced independently by Morse and Sacksteder [30]. These notions are needed for comparing two statistical models; they stem from Blackwell's concept of “comparison of experiments” [8]. A novel aspect is that we regard a parametrization of the parameter space \(M\) of a parametrized measure model \((M, {\Omega }, \mu , p)\) as a restricted Markov morphism (Definition 4.4, Example 4.5); in this sense, the geometry of parametrized measure models is intrinsic. We decompose a Markov morphism associated with a (positive) Markov transition kernel as a composition of a right inverse of a sufficient statistic and a statistic (Theorem 4.10). As a consequence we give a geometric proof of the monotonicity of the Fisher metric under Markov morphisms (Corollary 4.11).

Positivity assumption. In this section, for simplicity of exposition, we restrict ourselves to positive Markov transition kernels.

Definition 4.1

(cf. [13, p. 194], [30, p. 205]) A Markov transition from a measurable space \(({\Omega }, \Sigma )\) to a measurable space \(({\Omega }', \Sigma ')\) is a map \(T: {\Omega }\rightarrow {\mathcal P}({\Omega }', \Sigma ')\) such that for each \(S \in \Sigma '\) the function \({\omega }\mapsto \int _S d(T({\omega })) = T({\omega })(S)\) is \(\Sigma \)-measurable. A Markov transition \(T: {\Omega }\rightarrow {\mathcal P}({\Omega }', \Sigma ')\) defines a Markov morphism \(T_*: {\mathcal M}({\Omega }, \Sigma ) \rightarrow {\mathcal M}({\Omega }', \Sigma ')\) by

$$\begin{aligned} T_*(\nu )(S) := \int _{{\Omega }} \int _S d(T({\omega }))\, d\nu ({\omega }) = \int _{{\Omega }} T({\omega })(S)\, d\nu ({\omega }) \end{aligned}\tag{4.1}$$

for \(S \in \Sigma '\).

Since \( T({\Omega }) \subset {\mathcal P}({\Omega }',\Sigma ')\), substituting \(S : = {\Omega }'\) in (4.1), we obtain

for all \(a \in {\mathbb {R}}^+\).
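On finite sample spaces a Markov transition is a row-stochastic matrix, row \({\omega }\) being the probability vector \(T({\omega })\), and \(T_*\) acts on point masses as a row vector times this matrix. The short Python sketch below (exact rational arithmetic, hypothetical data `T` and `nu`) shows this and checks that the total mass is preserved, as substituting \(S := {\Omega }'\) in the definition of \(T_*\) indicates, and that \(T_*\) commutes with multiplication by \(a \in {\mathbb {R}}^+\).

```python
from fractions import Fraction


def markov_push(nu, T):
    """T_*(nu) for finite spaces: T_*(nu)({j}) = sum_w T(w)({j}) nu({w}).

    nu: masses of a finite measure on Omega = {0, ..., n-1}.
    T:  n x m row-stochastic matrix; row w is the probability measure T(w)
        on Omega' = {0, ..., m-1}.
    """
    m = len(T[0])
    return [sum(nu[w] * T[w][j] for w in range(len(nu))) for j in range(m)]


def measure_of(masses, S):
    """Mass of the subset S (a set of point indices)."""
    return sum(masses[j] for j in S)


# Hypothetical Markov transition from a 2-point space to a 3-point space.
T = [[Fraction(1, 2), Fraction(1, 2), Fraction(0)],
     [Fraction(0), Fraction(1, 3), Fraction(2, 3)]]
nu = [Fraction(3), Fraction(6)]
pushed = markov_push(nu, T)
print(pushed)  # [Fraction(3, 2), Fraction(7, 2), Fraction(4, 1)]
```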

Next, we assume that \(T({\omega })\) is dominated by a probability measure \(\mu ' \in {\mathcal P}({\Omega }', \Sigma ')\). Then, by the Radon–Nikodym theorem, there exists a measurable function \(\Pi _{\omega }: {\Omega }' \rightarrow {\mathbb {R}}\) such that for all \(S \in \Sigma '\) we have

$$\begin{aligned} T({\omega })(S) = \int _S \Pi _{\omega }\, d\mu '. \end{aligned}$$

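If \( T({\Omega }) \subset {\mathcal P}({\Omega }', \mu ')\), by (4.2), there exists a Markov transition kernel \(\Pi : {\Omega }\times {\Omega }' \rightarrow {\mathbb {R}}\) from \({\Omega }\) to \({\mathcal M}({\Omega }', \mu ')\) such that

$$\begin{aligned} T({\omega })(S) = \int _S \Pi ({\omega }, {\omega }')\, d\mu '({\omega }') \quad \text {for all } {\omega }\in {\Omega },\ S \in \Sigma '. \end{aligned}\tag{4.3}$$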

Definition 4.2

If (4.3) holds, \(T(\Pi ) : = T\) is called a \(\mu '\) -representable Markov transition, and \(T(\Pi )_*\) is called a \(\mu '\) -representable Markov morphism.

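Note that any Markov transition kernel \(\Pi : {\Omega }\times {\Omega }'\rightarrow {\mathbb {R}}\) from \({\Omega }\) to \( {\mathcal P}({\Omega }', \mu ')\) satisfies

$$\begin{aligned} \int _{{\Omega }'} \Pi ({\omega }, {\omega }')\, d\mu '({\omega }') = 1 \quad \text {for all } {\omega }\in {\Omega }. \end{aligned}$$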

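Abbreviate \(T(\Pi )_*\) as \(\Pi _*\). For any measure \(\nu \in {\mathcal M}({\Omega })\) and \(S \in \Sigma '\) we have

$$\begin{aligned} \Pi _*(\nu )(S) = \int _{{\Omega }} \int _S \Pi ({\omega }, {\omega }')\, d\mu '({\omega }')\, d\nu ({\omega }). \end{aligned}$$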

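It follows that

$$\begin{aligned} \Pi _*(\nu ) = \Big (\int _{{\Omega }} \Pi ({\omega }, \cdot )\, d\nu ({\omega })\Big )\, \mu ', \quad \text {in particular } \Pi _*({\mathcal M}({\Omega })) \subset {\mathcal M}({\Omega }', \mu '). \end{aligned}$$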
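In the finite case the kernel representation can be made concrete. The sketch below assumes a three-point space \({\Omega }'\) with a hypothetical probability measure `MU_PRIME` and a hypothetical kernel `PI`, whose rows are densities of \(T({\omega })\) with respect to \(\mu '\). It checks the normalization \(\int _{{\Omega }'} \Pi ({\omega }, \cdot )\, d\mu ' = 1\), builds the transition \(T({\omega }) = \Pi ({\omega }, \cdot )\mu '\), and verifies that \(\Pi _*(\nu )\) has density \(\sum _{\omega }\Pi ({\omega }, \cdot )\, \nu (\{{\omega }\})\) with respect to \(\mu '\).

```python
from fractions import Fraction

# Hypothetical probability measure mu' on Omega' = {0, 1, 2}.
MU_PRIME = [Fraction(1, 4), Fraction(1, 4), Fraction(1, 2)]
# Hypothetical kernel: row w is the density of T(w) with respect to mu'.
PI = [[Fraction(2), Fraction(2), Fraction(0)],
      [Fraction(0), Fraction(1), Fraction(3, 2)]]


def is_kernel(Pi, mu):
    """Pi >= 0 and sum_{w'} Pi(w, w') mu'({w'}) = 1 for every w."""
    return all(min(row) >= 0 and sum(p * m for p, m in zip(row, mu)) == 1
               for row in Pi)


def transition_from_kernel(Pi, mu):
    """T(w)({w'}) = Pi(w, w') mu'({w'}), i.e. T(w) = Pi(w, .) mu'."""
    return [[p * m for p, m in zip(row, mu)] for row in Pi]


def markov_push(nu, T):
    """Masses of T_*(nu): sum_w nu({w}) T(w)({j})."""
    return [sum(nu[w] * T[w][j] for w in range(len(nu))) for j in range(len(T[0]))]


def pushforward_density(nu, Pi):
    """Density of Pi_*(nu) with respect to mu': w' -> sum_w Pi(w, w') nu({w})."""
    return [sum(nu[w] * Pi[w][j] for w in range(len(nu))) for j in range(len(Pi[0]))]


nu = [Fraction(3), Fraction(6)]
density = pushforward_density(nu, PI)                 # densities 6, 12, 9
masses = markov_push(nu, transition_from_kernel(PI, MU_PRIME))
print(density, masses)
```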

If \({\Omega },{\Omega }'\) are finite sets, then any Markov morphism \(T : {\mathcal M}({\Omega }, \Sigma ) \rightarrow {\mathcal M}({\Omega }', \Sigma ')\) is \(\mu \)-representable for any dominant measure \(\mu \) on \({\Omega }'\), see Example 4.6. This fails if \({\Omega }, {\Omega }'\) are open domains in \({\mathbb {R}}^n\), \(n \ge 1\), as the following example shows.

Example 4.3

1. (cf. [13, p. 511]) Let \(({\Omega }, \Sigma )\) be a measurable space. We define a Markov transition \(T^{Id}\) on \(({\Omega }, \Sigma )\) by setting

$$\begin{aligned} T ^{Id}({\omega }) (A) : = \chi _A ({\omega })\quad \text {for } {\omega }\in {\Omega }, \end{aligned}$$

where \(\chi _A\) is the indicator function of \(A\in \Sigma \). Clearly \(T^{Id}_*\) defines a Markov morphism which is the identity transformation of \({\mathcal P}({\Omega }, \Sigma )\). Note that \(T^{Id}_*\) is not a \(\mu \)-representable Markov morphism for any measure \(\mu \in {\mathcal M}({\Omega }, \Sigma )\) if \({\Omega }\) is an open domain in \({\mathbb {R}}^n\), \(n \ge 1\), with Borel \(\sigma \)-algebra \(\Sigma \). To see this, note that if \(\mu \) dominates all the Dirac measures \(T^{Id} ({\omega }) = \delta _{\omega }\), \({\omega }\in {\Omega }\), then the empty set is the only \(\mu \)-null set; in particular \(\mu (\{{\omega }\})>0\) for all \({\omega }\in {\Omega }\). This is impossible, since \({\Omega }\) is uncountable for \(n \ge 1\), whereas a \(\sigma \)-finite measure assigns positive mass to at most countably many points.

2. Assume that \(\kappa : {\Omega }_1\rightarrow {\Omega }_2\) is a statistic. Then \(\kappa \) defines a Markov transition \(T^\kappa \) from \(({\Omega }_1,\Sigma _1)\) to \(({\Omega }_2, \Sigma _2)\) by setting

$$\begin{aligned} T^\kappa ({\omega }_1)(A):=\chi _A( \kappa ({\omega }_1))\quad \text {for } {\omega }_1 \in {\Omega }_1 \text { and } A \in \Sigma _2. \end{aligned}\tag{4.8}$$

For \(\nu \in {\mathcal M}({\Omega }_1)\) and \(S \in \Sigma _2\), using (4.1), we get

$$\begin{aligned} T^\kappa _* (\nu )(S) = \int _{{\Omega }_1} \int _S d\big (T^\kappa ({\omega }_1)\big )\, d\nu ({\omega }_1) = \int _{{\Omega }_1} \chi _S(\kappa ({\omega }_1))\, d\nu ({\omega }_1) = \int _{\kappa ^{-1}( S)}d\nu . \end{aligned}$$

Hence \(T^\kappa _* = \kappa _*\). However, \(T^{\kappa }_*\) is not a \(\mu _2\)-representable Markov morphism for any \(\mu _2 \in {\mathcal M}({\Omega }_2)\) if, for instance, \(\kappa ({\Omega }_1)\) and \({\Omega }_2\) are open domains in \({\mathbb {R}}^n\), \(n \ge 1\), since there exists \(\nu \in {\mathcal M}({\Omega }_1)\) such that \(\kappa _*(\nu )\) is not dominated by \(\mu _2\) (for instance \(\nu = \delta _{{\omega }_1}\) for some \({\omega }_1\) with \(\mu _2(\{\kappa ({\omega }_1)\}) = 0\), which exists by the argument in 1.).

Denote by \(C^1(M_1, M_2)\) the space of all continuously differentiable maps from a differentiable manifold \(M_1\) to a differentiable manifold \(M_2\). Let \(({\Omega }_1, \Sigma _1)\) and \(({\Omega }_2, \Sigma _2)\) be measurable spaces. Denote by \({\mathfrak M}({\Omega }_1, {\Omega }_2)\) the set of all Markov morphisms from \({\mathcal M}({\Omega }_1)\) to \({\mathcal M}({\Omega }_2)\).

Definition 4.4

Assume that \((M_1, {\Omega }_1, \mu _1, p_1)\) and \((M_2, {\Omega }_2, \mu _2, p_2)\) are parametrized measure models. A pair \((f\in C^1 (M_1, M_2), T \in {\mathfrak M} ({\Omega }_1, {\Omega }_2))\) is called a restricted Markov morphism if for all \(x\in M_1\)

$$\begin{aligned} T(p_1(x)) = p_2(f(x)). \end{aligned}\tag{4.9}$$

Example 4.5

1. Assume that \((M, {\Omega }_1, \mu _1, p_1)\) is a parametrized measure model and \(\kappa : {\Omega }_1\rightarrow {\Omega }_2\) is a statistic. Then \((M, {\Omega }_2, \kappa _*(\mu _1), \kappa _* (p_1))\) is a parametrized measure model. By Example 4.3.2 the pair \((Id,\kappa _*)\) is a restricted Markov morphism. If no misunderstanding can occur, we also call \((Id, \kappa _*)\) a statistic.

2. Assume that \((M_2, {\Omega }_2, \mu _2, p_2)\) is a parametrized measure model and \(f: M_1\rightarrow M_2\) is a smooth map. Then \((M_1, {\Omega }_2, \mu _2, p_1: = p_2 \circ f)\) is a parametrized measure model and the pair \((f, Id)\) is a restricted Markov morphism. We say that such a restricted Markov morphism is generated by the smooth map \(f\). It is easy to see that the Amari–Chentsov structure on \( M_1\) is obtained from the Amari–Chentsov structure on \(M_2\) via the pull-back map \(f^*\).
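The pull-back statement can be illustrated in the simplest case. The Python sketch below assumes \(M_2 = (0,1)\) with the Bernoulli model \(p_2(\theta ) = (\theta , 1-\theta )\) on a two-point sample space with counting measure, for which the Fisher metric is \(1/(\theta (1-\theta ))\) and the Amari–Chentsov tensor is \(1/\theta ^2 - 1/(1-\theta )^2\). It takes the logistic function as a hypothetical smooth map \(f: {\mathbb {R}}\rightarrow M_2\) and compares the structures of \(p_1 = p_2\circ f\), computed by finite differences, with \(f'(t)^2 g_2(f(t))\) and \(f'(t)^3 T_2(f(t))\).

```python
import math


def p2(theta):
    """Bernoulli model on a two-point sample space, theta in (0, 1)."""
    return [theta, 1.0 - theta]


def f(t):
    """Hypothetical smooth map f: R -> (0, 1), here the logistic function."""
    return 1.0 / (1.0 + math.exp(-t))


def f_prime(t):
    """Derivative of the logistic function."""
    s = f(t)
    return s * (1.0 - s)


def p1(t):
    """Pulled-back model p1 = p2 o f on M1 = R."""
    return p2(f(t))


def fisher_and_ac(model, x, h=1e-5):
    """(sum (dp)^2 / p, sum (dp)^3 / p^2) with a central finite difference."""
    p = model(x)
    dp = [(a - b) / (2 * h) for a, b in zip(model(x + h), model(x - h))]
    return (sum(d * d / v for d, v in zip(dp, p)),
            sum(d ** 3 / v ** 2 for d, v in zip(dp, p)))


def pulled_back(t):
    """(f^* g2)(t) and (f^* T2)(t) from the closed-form Bernoulli structures."""
    th, d = f(t), f_prime(t)
    g2 = 1.0 / (th * (1.0 - th))
    t2 = 1.0 / th ** 2 - 1.0 / (1.0 - th) ** 2
    return d ** 2 * g2, d ** 3 * t2


for t in (-1.0, 0.0, 2.0):
    print(t, fisher_and_ac(p1, t), pulled_back(t))
```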

Example 4.6

Let \(({\Omega }_n, \mu _n)\) and \(({\Omega }_m, \mu _m)\) be the measure spaces in Example 3.14. Let \(\Pi : {\Omega }_n \times {\Omega }_m \rightarrow {\mathbb {R}}\) be a mapping such that \(\Pi _{i,j}:=\Pi (E_i, F_j)\) satisfies the following conditions

Clearly, \(\Pi \) is a Markov transition kernel from \({\Omega }_n\) to \({\mathcal M}({\Omega }_m, \mu _m)\). By (4.6) \(\Pi \) induces a map

Hence

Let

be statistical models. By (4.9), a pair \((f \in \mathrm{Diff}(M_1, M_2), \Pi \in {\mathfrak M}({\Omega }_n,{\Omega }_m))\) is a restricted Markov morphism if and only if for all \(x\in M_1\)

Thus for \(\Pi \in {\mathfrak M}({\Omega }_n,{\Omega }_m) \) the pair \( (f, \Pi )\) is a restricted Markov morphism if and only if \(f = \Pi _*|_{ M_1}\). If no misunderstanding can occur, we also abbreviate \((\Pi _*|_{M_1}, \Pi )\) as \(\Pi \).

Next we drop the assumption that \(n \le m\). Note that there is a canonical map

Let \(\kappa : {\Omega }_n\rightarrow {\Omega }_m\) be a statistic. The composition \(\chi _m \circ \kappa : {\Omega }_n \rightarrow {\mathcal M}({\Omega }_m, \mu _m)\) defines the following map \(\Pi ^\kappa : {\Omega }_n \times {\Omega }_m \rightarrow {\mathbb {R}}\)

Clearly \(\sum _{j =1} ^m\Pi ^\kappa (E_i, F_j) = 1\) for all \(i\). Hence \(\Pi ^\kappa \) is a Markov transition kernel. Note that \(\Pi ^\kappa _*: {\mathcal M}({\Omega }_n, \mu _n) \rightarrow {\mathcal M}({\Omega }_m, \mu _m)\) coincides with the push-forward map \(\kappa _*: {\mathcal M}({\Omega }_n, \mu _n)\rightarrow {\mathcal M}({\Omega }_m, \mu _m)\).
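The kernel of a statistic can be written down explicitly in the finite case. The sketch below assumes that \(\Pi ^\kappa (E_i, F_j)\) equals 1 if \(\kappa (E_i) = F_j\) and 0 otherwise, which matches the row sums stated above, and it represents measures by their point masses (an assumption about the bookkeeping, chosen so that applying the kernel is a row vector times a matrix). For a hypothetical statistic from five points onto three points it checks that \(\Pi ^\kappa _*\) coincides with \(\kappa _*\), which sums masses over the fibres \(\kappa ^{-1}(F_j)\).

```python
def kernel_of_statistic(kappa, m):
    """Assumed form of Pi^kappa: Pi[i][j] = 1 if kappa(E_i) = F_j, else 0."""
    return [[1 if kappa[i] == j else 0 for j in range(m)] for i in range(len(kappa))]


def apply_kernel(x, Pi):
    """Pi_*(x)(F_j) = sum_i x(E_i) Pi[i][j] for point masses x."""
    return [sum(x[i] * Pi[i][j] for i in range(len(x))) for j in range(len(Pi[0]))]


def pushforward(x, kappa, m):
    """kappa_*(x)(F_j) = x(kappa^{-1}(F_j))."""
    out = [0] * m
    for i, j in enumerate(kappa):
        out[j] += x[i]
    return out


kappa = [0, 2, 2, 1, 0]  # hypothetical statistic from 5 points onto 3 points
Pi = kernel_of_statistic(kappa, 3)
x = [1, 2, 3, 4, 5]
print(apply_kernel(x, Pi), pushforward(x, kappa, 3))  # [6, 4, 5] [6, 4, 5]
```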

Proposition 4.7

A linear mapping \(\Pi : {\mathbb {R}}^n \rightarrow {\mathbb {R}}^m \) is a Markov congruent embedding subordinate to a statistic \( \kappa \) if and only if \(\Pi ^\kappa _* \circ \Pi (x) = x \) for all \(x \in {\mathbb {R}}^n_{\ge 0}\). A Markov mapping \(\Pi : {\mathbb {R}}^n \rightarrow {\mathbb {R}}^m\) has a left inverse if and only if it is a Markov congruent embedding.

The first assertion of Proposition 4.7 is obvious; the second is a reformulation of [15, Lemma 6.1, p. 77 and Lemma 9.5, p. 136].
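The first assertion of Proposition 4.7 can be checked on a small example. The sketch below assumes the usual description of a Markov congruent embedding subordinate to a surjective statistic \(\kappa : {\Omega }_m \rightarrow {\Omega }_n\): the point \(E_i\) is sent to a probability vector supported on the fibre \(\kappa ^{-1}(E_i)\). The statistic `kappa`, the fibre weights `weights` and the vector `x` are hypothetical. Composing with the push-forward by \(\kappa \) recovers the input, whereas a Markov matrix whose rows are not supported on the fibres does not have this property.

```python
from fractions import Fraction


def congruent_embedding(kappa, weights, n):
    """Markov matrix Q (n x m): Q[i][j] = weights[j] if kappa[j] == i, else 0.

    kappa:   statistic Omega_m -> Omega_n, a list of length m onto range(n).
    weights: positive numbers whose sum over each fibre kappa^{-1}(i) is 1.
    """
    m = len(kappa)
    return [[weights[j] if kappa[j] == i else Fraction(0) for j in range(m)]
            for i in range(n)]


def apply_markov(x, Q):
    """Image of x in R^n under the Markov mapping with matrix Q (row vector x)."""
    return [sum(x[i] * Q[i][j] for i in range(len(x))) for j in range(len(Q[0]))]


def push(y, kappa, n):
    """Push-forward kappa_*: sum the entries of y over each fibre of kappa."""
    out = [Fraction(0)] * n
    for j, i in enumerate(kappa):
        out[i] += y[j]
    return out


kappa = [0, 1, 0, 2, 1]  # hypothetical statistic from 5 points onto 3 points
weights = [Fraction(1, 3), Fraction(1, 2), Fraction(2, 3), Fraction(1), Fraction(1, 2)]
Q = congruent_embedding(kappa, weights, 3)
x = [Fraction(2), Fraction(5), Fraction(7)]
print(push(apply_markov(x, Q), kappa, 3) == x)  # True
```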

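Let \((M, {\Omega }_1,\mu _1, p_1)\) be a parametrized measure model, \(({\Omega }_2, \mu _2)\) a probability space and \(\Pi :{\Omega }_1 \times {\Omega }_2 \rightarrow {\mathbb {R}}\) a Markov transition kernel from \({\Omega }_1\) to \({\mathcal P}({\Omega }_2, \mu _2)\). Writing \(p_1(x) = {\bar{p}}_1(x, \cdot )\mu _1\), we define a function \(\Pi ^{[p_1]}: M \times {\Omega }_1 \times {\Omega }_2 \rightarrow {\mathbb {R}}\) by setting

$$\begin{aligned} \Pi ^{[p_1]}(x, {\omega }_1, {\omega }_2) := {\bar{p}}_1(x, {\omega }_1)\, \Pi ({\omega }_1, {\omega }_2). \end{aligned}\tag{4.15}$$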

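Using (4.5), we get for all \(x \in M\) and any measurable set \(S\subset {\Omega }_1\)

$$\begin{aligned} \int _{S \times {\Omega }_2} \Pi ^{[p_1]}(x, {\omega }_1, {\omega }_2)\, d\mu _1 d\mu _2 = p_1(x)(S). \end{aligned}\tag{4.16}$$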

Lemma 4.8

Then \( (M, {\Omega }_1 \times {\Omega }_2, \mu _1 \mu _2, \Pi ^{[p_1]}(x, {\omega }_1, {\omega }_2) d\mu _1d\mu _2)\) is a parametrized measure model. Moreover, the Amari–Chentsov structure on \((M, {\Omega }_1\times {\Omega }_2,\mu _1\mu _2, \Pi ^{[p_1]}(x, {\omega }_1, {\omega }_2)d\mu _1d\mu _2)\) coincides with the Amari–Chentsov structure on \((M, {\Omega }_1, \mu _1,p_1)\).

Proof

Let \(\pi _1: {\Omega }_1 \times {\Omega }_2 \rightarrow {\Omega }_1\) be the projection onto the first factor. Since \(\mu _2\) is a probability measure, \((\pi _1)_*(\mu _1\mu _2) = \mu _1\). Comparing (3.1) with (4.15), we observe that \(\pi _1\) is a sufficient statistic with respect to the parameter \(x\) of \((M, {\Omega }_1 \times {\Omega }_2, \mu _1 \mu _2, \Pi ^{[p_1]}(x,{\omega }_1, {\omega }_2)d\mu _1d\mu _2)\). By (4.16), the parametrized measure model \((M,{\Omega }_1, \mu _1,p_1)\) is the image of \((M, {\Omega }_1 \times {\Omega }_2, \mu _1 \mu _2, \Pi ^{[p_1]}(x,{\omega }_1, {\omega }_2)d\mu _1d\mu _2)\) under the Markov morphism \((Id, (\pi _1)_*)\). Combining this with Lemma 3.3, we immediately obtain Lemma 4.8.\(\square \)
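Lemma 4.8 can be illustrated numerically on finite spaces. The sketch below assumes \(\Pi ^{[p_1]}(x, {\omega }_1, {\omega }_2) = {\bar{p}}_1(x, {\omega }_1)\, \Pi ({\omega }_1, {\omega }_2)\) with \(p_1(x) = {\bar{p}}_1(x, \cdot )\mu _1\), so that the joint model has point masses \(p_1(x)(\{{\omega }_1\})\, \Pi ({\omega }_1, {\omega }_2)\, \mu _2(\{{\omega }_2\})\). The family `p1`, the measure `MU2` and the positive kernel `PI` are hypothetical, and derivatives are central finite differences. The check confirms that the first marginal of the joint model is \(p_1(x)\) and that the Fisher metrics of the two models agree, as expected since \(\pi _1\) is sufficient.

```python
import math

MU2 = [0.25, 0.75]  # hypothetical probability measure on Omega_2 = {0, 1}
# Hypothetical positive kernel with sum_{w2} PI[w1][w2] * MU2[w2] = 1 for each w1.
PI = [[2.0, 2.0 / 3.0],
      [1.0, 1.0],
      [0.4, 1.2]]


def p1(x):
    """Hypothetical model on Omega_1 = {0, 1, 2}: point masses of p1(x)."""
    z = [math.exp(x), 1.0, math.exp(-2.0 * x)]
    s = sum(z)
    return [v / s for v in z]


def joint(x):
    """Point masses p1(x)({w1}) * PI[w1][w2] * MU2[w2], flattened in (w1, w2) order."""
    p = p1(x)
    return [p[a] * PI[a][b] * MU2[b] for a in range(3) for b in range(2)]


def first_marginal(masses):
    """(pi_1)_*: add up the two entries belonging to each w1."""
    return [masses[2 * a] + masses[2 * a + 1] for a in range(3)]


def fisher(model, x, h=1e-5):
    """sum (dp)^2 / p with a central finite difference in x."""
    p = model(x)
    dp = [(u - v) / (2 * h) for u, v in zip(model(x + h), model(x - h))]
    return sum(d * d / q for d, q in zip(dp, p))


print(fisher(joint, 0.3), fisher(p1, 0.3))
```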

The proof of Lemma 4.8 immediately yields the following.

Corollary 4.9

Let \((M, {\Omega }_1, \mu _1, p_1)\) be a parametrized measure model, \(\mu _2\) a probability measure on \({\Omega }_2\) and \(\Pi \) a Markov transition kernel from \({\Omega }_1\) to \({\mathcal P}({\Omega }_2, \mu _2)\). The projection \(\pi _1 : {\Omega }_1 \times {\Omega }_2\rightarrow {\Omega }_1\) is a sufficient statistic for the parametrized measure model \((M, {\Omega }_1 \times {\Omega }_2,\mu _1\mu _2, \Pi ^{[p_1]}(x,{\omega }_1, {\omega }_2) d\mu _1d\mu _2 )\).

Next, we consider a decomposition of a restricted Markov morphism.

Theorem 4.10

Let \((Id, \Pi _*):(M_1, {\Omega }_1, \mu _1, p_1) \rightarrow (M_1, {\Omega }_2, \mu _2, p_2)\) be a restricted Markov morphism between statistical models, where \(\Pi _*\) is \(\mu _2\)-representable by a positive Markov kernel. Then \((Id, \Pi _*)\) is the composition of the inverse of a Markov morphism associated with a sufficient statistic, followed by a statistic.

Proof

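Let \(\pi _2: {\Omega }_1 \times {\Omega }_2 \rightarrow {\Omega }_2\) be the projection onto the second factor. Then for any \(x\in M_1\) and any measurable set \(S \subset {\Omega }_2\) we have

$$\begin{aligned} \int _{{\Omega }_1 \times S} \Pi ^{[p_1]}(x, {\omega }_1, {\omega }_2)\, d\mu _1 d\mu _2 = \Pi _*(p_1(x))(S) = p_2(x)(S). \end{aligned}\tag{4.17}$$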

Let \((Id, \Pi _{1,12}): (M_1, {\Omega }_1, \mu _1, p_1) \rightarrow (M_1, {\Omega }_1 \times {\Omega }_2, \mu _1\mu _2, \Pi ^{[p_1]}(x, {\omega }_1, {\omega }_2)d\mu _1d\mu _2)\) be the map between statistical models defined by

$$\begin{aligned} \Pi _{1,12}(p_1(x)) := \Pi ^{[p_1]}(x, \cdot , \cdot )\, \mu _1\mu _2 . \end{aligned}$$

Then \((Id,\Pi _{1,12})\) is the inverse of the Markov morphism \((Id, (\pi _1)_*)\), which is associated with a sufficient statistic by Corollary 4.9. By (4.17), \((Id, \Pi _*)\) is the composition of \((Id,\Pi _{1,12})\) with \((Id, (\pi _2)_*)\), i.e. \((Id, \Pi _*) = (Id, (\pi _2)_*) \circ (Id,\Pi _{1,12})\). This completes the proof of Theorem 4.10.\(\square \)
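The decomposition in Theorem 4.10 can be traced on finite spaces with exact arithmetic. In the sketch below the measure `MU2`, the positive kernel `PI` and the probability vector `p` (standing for \(p_1(x)\) at one fixed \(x\)) are hypothetical, and the lifted measure is assumed to have point masses \(p(\{{\omega }_1\})\, \Pi ({\omega }_1,{\omega }_2)\, \mu _2(\{{\omega }_2\})\). Projecting the lift back to \({\Omega }_1\) returns \(p\), while projecting it to \({\Omega }_2\) gives \(\Pi _*(p)\), matching \((Id, \Pi _*) = (Id, (\pi _2)_*) \circ (Id, \Pi _{1,12})\).

```python
from fractions import Fraction

MU2 = [Fraction(1, 4), Fraction(3, 4)]  # hypothetical mu2 on Omega_2 = {0, 1}
# Hypothetical positive kernel with sum_{w2} PI[w1][w2] * MU2[w2] = 1 for each w1.
PI = [[Fraction(2), Fraction(2, 3)],
      [Fraction(1), Fraction(1)],
      [Fraction(2, 5), Fraction(6, 5)]]


def markov_image(p, Pi, mu2):
    """Pi_*(p)({w2}) = sum_{w1} p({w1}) Pi(w1, w2) mu2({w2})."""
    return [sum(p[a] * Pi[a][b] for a in range(len(p))) * mu2[b] for b in range(len(mu2))]


def lift(p, Pi, mu2):
    """Lift of p to Omega_1 x Omega_2: masses p({w1}) Pi(w1, w2) mu2({w2})."""
    return {(a, b): p[a] * Pi[a][b] * mu2[b]
            for a in range(len(p)) for b in range(len(mu2))}


def project(joint, axis, size):
    """Push-forward of a joint measure (dict keyed by (w1, w2)) under pi_1 or pi_2."""
    out = [Fraction(0)] * size
    for key, mass in joint.items():
        out[key[axis]] += mass
    return out


p = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)]  # stands for p1(x) at a fixed x
J = lift(p, PI, MU2)
print(project(J, 0, 3) == p, project(J, 1, 2))  # True [Fraction(7, 20), Fraction(13, 20)]
```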

Let \(M_1 = M_2\). A restricted Markov morphism of the form \((f, T_*)\) is called representable if \(f\) is a diffeomorphism and \(T_*\) is \(\mu \)-representable.

Corollary 4.11

(cf. [4, p. 31])

1. Representable restricted Markov morphisms do not increase the Fisher metric on \(k\)-integrable statistical models \((M, {\Omega }, \mu , p)\) where \({\Omega }\) is a smooth manifold and \(\mu \) is a Lebesgue probability measure.

2. Up to a constant factor, the Fisher metric is the unique weakly continuous quadratic 2-form field on statistical models associated with finite sample spaces \(\{{\Omega }_n\}\) that is monotone under representable restricted Markov morphisms.
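A finite-dimensional analogue of item 1 is easy to test. For a positive probability vector \(p\), any vector \(v\) and a positive row-stochastic matrix \(K\), the Cauchy–Schwarz inequality gives \(\sum _j (vK)_j^2/(pK)_j \le \sum _i v_i^2/p_i\), i.e. the Fisher quadratic form does not increase under the Markov morphism \(p \mapsto pK\). The sketch below checks this inequality with exact rational arithmetic on randomly generated (hypothetical) instances; it illustrates the finite case only and is not a proof of Corollary 4.11.

```python
import random
from fractions import Fraction


def normalize(row):
    """Turn positive integers into an exact probability vector."""
    s = sum(row)
    return [Fraction(r, s) for r in row]


def fisher_quadratic(p, v):
    """Fisher quadratic form g_p(v, v) = sum_i v_i^2 / p_i on a finite space."""
    return sum(Fraction(vi) ** 2 / pi for vi, pi in zip(v, p))


def push(vec, K):
    """Row vector times Markov matrix: (vec K)_j = sum_i vec_i K[i][j]."""
    return [sum(vec[i] * K[i][j] for i in range(len(vec))) for j in range(len(K[0]))]


def random_instance(rng, n, m):
    """Hypothetical random data: positive p, integer v, positive n x m Markov matrix K."""
    p = normalize([rng.randint(1, 9) for _ in range(n)])
    v = [rng.randint(-5, 5) for _ in range(n)]
    K = [normalize([rng.randint(1, 9) for _ in range(m)]) for _ in range(n)]
    return p, v, K


rng = random.Random(0)
p, v, K = random_instance(rng, 3, 4)
print(fisher_quadratic(push(p, K), push(v, K)) <= fisher_quadratic(p, v))  # True
```

For a permutation matrix, which is invertible, the same computation gives equality.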

Proof