Abstract

We introduce ellipticity criteria for random walks in i.i.d. random environments under which we can extend the ballisticity conditions of Sznitman and the polynomial effective criteria of Berger, Drewitz and Ramírez, originally defined for uniformly elliptic random walks. Under these criteria we prove the equivalence of Sznitman’s \((T')\) condition with the polynomial effective criterion \((P)_M\), for \(M\) large enough. We furthermore give ellipticity criteria under which a random walk satisfying the polynomial effective criterion is ballistic, satisfies the annealed central limit theorem or satisfies the quenched central limit theorem.


1 Introduction

We introduce ellipticity criteria for random walks in random environment which enable us to extend the ballisticity conditions of Sznitman [14] and of Berger et al. [2], originally formulated for uniformly elliptic environments, to environments which are not necessarily uniformly elliptic, together with their equivalences and some of their consequences [3, 7, 13, 14, 16].

For \(x\in \mathbb R ^d\), denote by \(|x|_1\) and \(|x|_2\) its \(l_1\) and \(l_2\) norms, respectively. Call \(U:=\{e\in \mathbb Z ^d:|e|_1=1\}=\{e_1,\ldots ,e_{2d}\}\) the set of canonical vectors, with the convention that \(e_{d+i}=-e_i\) for \(1\le i\le d\), and let \(\mathcal{P }:=\{p(e): p(e)\ge 0,\sum _{e\in U}p(e)=1\}\). An environment is an element \(\omega \) of the environment space \(\Omega :=\mathcal{{P}}^{\mathbb{Z }^d}\), so that \(\omega :=\{\omega (x):x \in \mathbb{Z }^d\}\), where \(\omega (x) \in \mathcal {P}\). We denote the components of \(\omega (x)\) by \(\omega (x,e)\). The random walk in the environment \(\omega \) starting from \(x\) is the Markov chain \(\{X_n: n \ge 0\}\) in \(\mathbb{Z }^d\) with law \(P_{x,\omega }\) defined by the condition \(P_{x,\omega }(X_0=x)=1\) and the transition probabilities

$$P_{x,\omega }(X_{n+1}=X_n+e\,|\,X_0,\ldots ,X_n)=\omega (X_n,e),$$

for each \(x \in \mathbb{Z }^d\) and \(e\in U\). Let \(\mathbb{P }\) be a probability measure defined on the environment space \(\Omega \) endowed with its Borel \(\sigma \)-algebra. We will assume that \(\{\omega (x):x\in \mathbb Z ^d\}\) are i.i.d. under \(\mathbb{P }\). We will call \(P_{x,\omega }\) the quenched law of the random walk in random environment (RWRE) starting from \(x\), while \(P_x:=\int P_{x,\omega }d\mathbb{P }\) the averaged or annealed law of the RWRE starting from \(x\).
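The distinction between the quenched and the annealed law can be made concrete with a small simulation. The following Python sketch is only an illustration, not code from this paper: it samples the i.i.d. environment lazily (the vector \(\omega (x,\cdot )\) is drawn the first time the walk visits \(x\)) and orders \(U\) as \(e_1,\ldots ,e_d,-e_1,\ldots ,-e_d\), following the convention \(e_{d+i}=-e_i\). The names `LazyIIDEnvironment`, `quenched_path` and the per-site sampler argument are hypothetical.

```python
import random
from typing import Callable, Dict, List, Tuple

Site = Tuple[int, ...]
# omega(x, .) as a list ordered e_1, ..., e_d, -e_1, ..., -e_d
Probs = List[float]


def unit_vectors(d: int) -> List[Site]:
    """U = {e_1, ..., e_2d} with the convention e_{d+i} = -e_i."""
    pos = [tuple(1 if j == i else 0 for j in range(d)) for i in range(d)]
    return pos + [tuple(-c for c in v) for v in pos]


class LazyIIDEnvironment:
    """An i.i.d. environment whose sites are sampled on first visit."""

    def __init__(self, sample_site: Callable[[random.Random], Probs], rng: random.Random):
        self.sample_site = sample_site
        self.rng = rng
        self.omega: Dict[Site, Probs] = {}

    def __getitem__(self, x: Site) -> Probs:
        if x not in self.omega:
            self.omega[x] = self.sample_site(self.rng)
        return self.omega[x]


def quenched_path(env: LazyIIDEnvironment, d: int, n: int, rng: random.Random) -> List[Site]:
    """X_0 = 0, X_1, ..., X_n under P_{0,omega}: from x, jump to x + e w.p. omega(x, e)."""
    U = unit_vectors(d)
    x: Site = tuple([0] * d)
    path = [x]
    for _ in range(n):
        e = rng.choices(U, weights=env[x])[0]
        x = tuple(a + b for a, b in zip(x, e))
        path.append(x)
    return path
```

Reusing one `LazyIIDEnvironment` for many paths samples the quenched law \(P_{0,\omega }\) of that fixed \(\omega \), while drawing a fresh environment for every path samples the annealed law \(P_0\). Lazy sampling keeps memory proportional to the number of visited sites.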

We say that the law \(\mathbb{P }\) of the RWRE is elliptic if for every \(x\in \mathbb Z ^d\) and \(e\in U\) one has that \(\mathbb{P }(\omega (x,e)>0)=1\). We say that \(\mathbb{P }\) is uniformly elliptic if there exists a constant \(\kappa >0\) such that for every \(x\in \mathbb Z ^d\) and \(e\in U\) it is true that \(\mathbb{P }(\omega (x,e)\ge \kappa )=1\). Given \(l\in \mathbb S ^{d-1}\) we say that the RWRE is transient in direction \(l\) if

$$P_0(A_l)=1,$$

where

$$A_l:=\{\lim _{n\rightarrow \infty }X_n\cdot l=\infty \}.$$

We say that it is ballistic in direction \(l\) if \(P_0\)-a.s.

$$\liminf _{n\rightarrow \infty }\frac{X_n\cdot l}{n}>0.$$

The following is conjectured (see for example [15]).

Conjecture 1.1

Let \(l\in \mathbb S ^{d-1}\). Consider a random walk in a uniformly elliptic i.i.d. environment in dimension \(d\ge 2\), which is transient in direction \(l\). Then it is ballistic in direction \(l\).

Some partial progress towards the resolution of this conjecture has been made in [2, 4, 5, 13, 14]. In 2001 and 2002 Sznitman in [13, 14] introduced a class of ballisticity conditions under which he could prove the above statement. For each subset \(A\subset \mathbb Z ^d\) define the first exit time from the set \(A\) as

$$T_A:=\inf \{n\ge 0: X_n\notin A\}.$$

For \(L>0\) and \(l\in \mathbb S ^{d-1}\) define the slab

$$U_{l,L}:=\{x\in \mathbb Z ^d: -L<x\cdot l<L\}.\tag{1.2}$$

Given \(l\in \mathbb S ^{d-1}\) and \(\gamma \in (0,1)\), we say that condition \((T)_\gamma \) in direction \(l\) (also written as \((T)_\gamma |l\)) is satisfied if there exists a neighborhood \(V\subset \mathbb S ^{d-1}\) of \(l\) such that for all \(l'\in V\)

$$\limsup _{L\rightarrow \infty }L^{-\gamma }\log P_0\left( X_{T_{U_{l',L}}}\cdot l'<0\right) <0.$$

Condition \((T')|l\) is defined as the fulfillment of condition \((T)_\gamma |l\) for all \(\gamma \in (0,1)\). Sznitman [14] proved that if a random walk in an i.i.d. uniformly elliptic environment satisfies \((T')|l\) then it is ballistic in direction \(l\). He also showed that if \(\gamma \in (0.5,1)\), then \((T)_\gamma \) implies \((T')\). In 2011, Drewitz and Ramírez [4] showed that there is a \(\gamma _d\in (0.37,0.39)\) such that if \(\gamma \in (\gamma _d,1)\), then \((T)_\gamma \) implies \((T')\). In 2012, in [5], they were able to show that for dimensions \(d\ge 4\), if \(\gamma \in (0,1)\), then \((T)_\gamma \) implies \((T')\). Recently in [2], Berger et al. introduced a polynomial ballisticity condition, weakening further the conditions \((T)_\gamma \). The condition is effective, in the sense that it can a priori be verified explicitly for a given environment. To define it, for each \(L,\tilde{L}>0\) and \(l\in \mathbb S ^{d-1}\) consider the box

where \(R\) is a rotation of \(\mathbb R ^d\) defined by the condition

$$R(e_1)=l.\tag{1.4}$$

Given \(M\ge 1\) and \(L\ge 2\), we say that the polynomial condition \((P)_M\) in direction \(l\) is satisfied on a box of size \(L\) (also written as \((P)_M|l\)) if there exists an \(\tilde{L}\le 70 L^3\) such that the following upper bound for the probability that the walk does not exit the box \(B_{l,L,\tilde{L}}\) through its front side is satisfied

In [2], Berger et al. prove that every random walk in an i.i.d. uniformly elliptic environment which satisfies \((P)_M\) for \(M\ge 15 d+5\) is necessarily ballistic.
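Both \((T)_\gamma \) and \((P)_M\) are bounds on exit probabilities, so for a concrete law of the environment one can at least look at Monte Carlo estimates of such probabilities at fixed scales. The Python sketch below is a hypothetical illustration, not a verification procedure from this paper: it estimates the annealed probability that the walk leaves the slab \(\{x\in \mathbb Z ^d:-L<x\cdot l<L\}\) through its back side \(\{x\cdot l\le -L\}\), drawing a fresh i.i.d. environment for every trial. The function name, the `max_steps` truncation and the ordering of \(U\) are assumptions of the sketch.

```python
import random
from typing import Callable, Dict, List, Sequence, Tuple

Site = Tuple[int, ...]


def unit_vectors(d: int) -> List[Site]:
    # e_1, ..., e_d followed by e_{d+i} = -e_i
    pos = [tuple(1 if j == i else 0 for j in range(d)) for i in range(d)]
    return pos + [tuple(-c for c in v) for v in pos]


def slab_back_exit_probability(
    sample_site: Callable[[random.Random], List[float]],
    l: Sequence[float],
    L: float,
    trials: int,
    seed: int = 0,
    max_steps: int = 10**6,
) -> float:
    """Estimate P_0(X_T . l < 0), with T the exit time of the slab -L < x.l < L.

    Each trial uses a fresh lazily sampled i.i.d. environment (annealed law).
    Trials still inside the slab after max_steps are counted as not
    exiting through the back side.
    """
    rng = random.Random(seed)
    d = len(l)
    U = unit_vectors(d)
    back_exits = 0
    for _ in range(trials):
        omega: Dict[Site, List[float]] = {}
        x: Site = tuple([0] * d)
        for _ in range(max_steps):
            proj = sum(a * b for a, b in zip(x, l))
            if not (-L < proj < L):  # the walk has left the slab
                if proj < 0:
                    back_exits += 1
                break
            if x not in omega:
                omega[x] = sample_site(rng)
            e = rng.choices(U, weights=omega[x])[0]
            x = tuple(a + b for a, b in zip(x, e))
    return back_exits / trials
```

Such an estimate proves nothing by itself about \((T)_\gamma \), which is an asymptotic statement in \(L\), and very small probabilities require a very large number of trials; it only gives numerical intuition at fixed scales.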

On the other hand, it is known (see for example Sabot-Tournier [10]) that in dimension \(d\ge 2\), there exist elliptic random walks which are transient in a given direction but not ballistic in that direction. The purpose of this paper is to investigate to what extent the assumption of uniform ellipticity can be weakened. To this end, we introduce several classes of ellipticity conditions on the environment. Define for each \(\alpha \ge 0\),

and

Let \(\alpha \ge 0\). We say that the law of the environment satisfies the ellipticity condition \((E)_\alpha \) if for every \(e\in U\) we have that

Note that \((E)_0\) is equivalent to the existence of an \(\alpha >0\) such that \(\eta _\alpha <\infty \). To state the first result of this paper, we need to define the following constant

Throughout the rest of this paper, whenever we assume the polynomial condition \((P)_M\) is satisfied, it will be understood that this happens on a box of size \(L\ge c_0\).

Theorem 1.1

Consider a random walk in an i.i.d. environment in dimensions \(d\ge 2\). Let \(l\in \mathbb S ^{d-1}\) and \(M\ge 15 d+5\). Assume that the environment satisfies the ellipticity condition \((E)_0\). Then the polynomial condition \((P)_M|l\) is equivalent to \((T')|l\).

It is important to remark that we have not made any particular effort to optimize the values of \(15d+5\) and \(c_0\) in the above theorem.

In this paper we go further than Theorem 1.1: assuming \((T')\), we obtain sufficiently good tail estimates for the distribution of the regeneration times of the random walk. Let us recall that there exists an asymptotic direction if the limit

$$\hat{v}:=\lim _{n\rightarrow \infty }\frac{X_n}{|X_n|_2}$$

exists \(P_0\)-a.s. The polynomial condition \((P)_M\) implies the existence of an asymptotic direction (see for example Simenhaus [11]). Whenever the asymptotic direction exists, let us define the half space

Let \(\alpha >0\). We say that the law of the environment satisfies the ellipticity condition \((E')_\alpha \) if there exists an \(\{\alpha (e):e\in U\}\in (0,\infty )^{2d}\) such that

and

Note that \((E')_{\alpha '}\) implies \((E)_\alpha \) for

Furthermore, we say that the ellipticity condition \((E')_\alpha \) is satisfied towards the asymptotic direction if there exists an \(\{\alpha (e):e\in U\}\) satisfying (1.7) and (1.8) and such that

The second main result of this paper is the following theorem.

Theorem 1.2

(Law of large numbers) Consider a random walk in an i.i.d. environment in dimensions \(d\ge 2\). Let \(l\in \mathbb S ^{d-1}\) and \(M\ge 15 d+5\). Assume that the random walk satisfies the polynomial condition \((P)_M|l\) and the ellipticity condition \((E')_1\) towards the asymptotic direction (cf. (1.7), (1.8) and (1.10)). Then the random walk is ballistic in direction \(l\) and there is a \(v\in \mathbb R ^d\), \(v\ne 0\) such that

$$\lim _{n\rightarrow \infty }\frac{X_n}{n}=v,\qquad P_0\text{-a.s.}$$

The following two corollaries follow directly; the second one is a consequence of Hölder’s inequality.

Corollary 1.1

Consider a random walk in an i.i.d. environment in dimensions \(d\ge 2\). Let \(l\in \mathbb S ^{d-1}\) and \(M\ge 15 d+5\). Assume that the random walk satisfies condition \((P)_M|l\) and that there is an \(\alpha >\frac{1}{4d-2}\) such that

Then the random walk is ballistic in direction \(l\).

Corollary 1.2

Consider a random walk in an i.i.d. environment in dimensions \(d\ge 2\). Let \(l\in \mathbb S ^{d-1}\) and \(M\ge 15 d+5\). Assume that the random walk satisfies condition \((P)_M|l\) and the ellipticity condition \((E)_{1/2}\). Then the random walk is ballistic in direction \(l\).

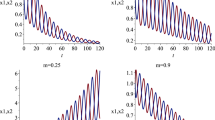

Theorems 1.1 and 1.2 give ellipticity criteria for ballistic behavior of general random walks in i.i.d. environments which, as we will see below, should not be far from optimal. Indeed, the value \(1\) of condition \((E')_1\) in Theorem 1.2 is optimal: within the context of random walks in Dirichlet random environments (RWDRE), it is well known that there are examples of walks which satisfy \((E')_\alpha \) towards the asymptotic direction (cf. (1.10)) for \(\alpha \) smaller than but arbitrarily close to \(1\), and which are transient but not ballistic in a given direction (see [8–10]). A RWDRE with parameters \(\{\beta _e:e\in U\}\) is a random walk in an i.i.d. environment whose law at a single site has the density

$$\frac{\Gamma \left( \sum _{e\in U}\beta _e\right) }{\prod _{e\in U}\Gamma (\beta _e)}\prod _{e\in U}p(e)^{\beta _e-1}$$

with respect to the Lebesgue measure on \(\mathcal P \). We can also explicitly construct examples like those mentioned above, in analogy with the random conductance model (see for example Fribergh [6], who characterizes the ballistic random walks among the directionally transient ones). In fact, for every \(\epsilon >0\), one can construct an environment such that condition \((E')_{1-\epsilon }\) is satisfied towards the asymptotic direction, and the walk is transient in direction \(e_1\) but not ballistic in direction \(e_1\). Let \(\phi \) be any random variable taking values in the interval \((0,1/4)\) such that the expected value of \(\phi ^{-1/2}\) is infinite, while for every \(\epsilon >0\) the expected value of \(\phi ^{-(1/2-\epsilon )}\) is finite. Let \(X\) be a Bernoulli random variable of parameter \(1/2\). We now define \(\omega _1(0,e_1)=2\phi \), \(\omega _1(0,-e_1)=\phi \), \(\omega _1(0,-e_2)=\phi \), \(\omega _1(0,e_2)=1-4\phi \) and \(\omega _2(0,e_1)=2\phi \), \(\omega _2(0,-e_1)=\phi \), \(\omega _2(0,e_2)=\phi \), \(\omega _2(0,-e_2)=1-4\phi \). We then let the environment at site \(0\) be given by the random variable \(\omega (0):=1_{\{X=1\}}\omega _1(0) +1_{\{X=0\}}\omega _2(0)\). This environment has the property that traps can appear, where the random walk gets caught in an edge, as shown in Fig. 1, and it satisfies \((E')_{1-\epsilon }\) towards the asymptotic direction. Furthermore, it is not difficult to check that the random walk in this random environment is transient in direction \(e_1\) but not ballistic. It will be shown in a future work that this environment satisfies the polynomial condition \((P)_M\) for \(M\ge 15d+5\).
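The trap example can be sampled directly. The Python sketch below makes one concrete, hypothetical choice of \(\phi \) satisfying the stated moment conditions: \(\phi :=V^2/4\) with \(V\) uniform on \((0,1)\). Then \(\phi \in (0,1/4)\), \(P(\phi \le t)=2\sqrt{t}\) for \(t\in (0,1/4]\), \(E[\phi ^{-a}]=4^a/(1-2a)\) for \(a<1/2\), and \(E[\phi ^{-1/2}]=E[2/V]=\infty \). A site of type \(\omega _1\) at \(x\) next to a site of type \(\omega _2\) at \(x+e_2\) forms a trapping edge: from either endpoint the walk leaves the edge with probability \(4\phi \) of the current site. The function names are illustrative only.

```python
import random
from typing import Dict, Tuple

Direction = Tuple[int, int]
E1, E2, ME1, ME2 = (1, 0), (0, 1), (-1, 0), (0, -1)


def sample_phi(rng: random.Random) -> float:
    """phi = V^2 / 4 with V uniform on (0, 1); takes values in (0, 1/4)."""
    v = rng.random()
    while v == 0.0:  # random() may return 0.0, but phi must be positive
        v = rng.random()
    return v * v / 4.0


def sample_trap_site(rng: random.Random) -> Dict[Direction, float]:
    """Draw omega(0) = 1_{X=1} omega_1(0) + 1_{X=0} omega_2(0), X Bernoulli(1/2)."""
    phi = sample_phi(rng)
    if rng.random() < 0.5:  # X = 1: omega_1 pushes towards +e_2
        return {E1: 2 * phi, ME1: phi, ME2: phi, E2: 1 - 4 * phi}
    # X = 0: omega_2 pushes towards -e_2
    return {E1: 2 * phi, ME1: phi, E2: phi, ME2: 1 - 4 * phi}


def local_drift(site: Dict[Direction, float]) -> Tuple[float, float]:
    """sum_e omega(0, e) e; its e_1-component is 2 phi - phi = phi > 0."""
    return (
        sum(p * e[0] for e, p in site.items()),
        sum(p * e[1] for e, p in site.items()),
    )


def phi_negative_moment(a: float) -> float:
    """E[phi^{-a}] = 4^a / (1 - 2a) for a < 1/2, and +infinity for a >= 1/2."""
    if a >= 0.5:
        return float("inf")
    return 4.0 ** a / (1.0 - 2.0 * a)
```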

As mentioned above, similar examples of random walks in elliptic i.i.d. random environment which are transient in a given direction but not ballistic have been exhibited within the context of the Dirichlet environment. Here, the environment is chosen i.i.d. with a Dirichlet distribution at each site \(D(\beta _1,\ldots ,\beta _{2d})\) of parameters \(\beta _1,\ldots ,\beta _{2d}>0\) (see for example [8–10]), the parameter \(\beta _i\) being associated with the direction \(e_i\). For a random walk in Dirichlet random environment, condition \((E')_1\) is equivalent to

This is the characterization of ballisticity given by Sabot in [9] for random walks in random Dirichlet environments in dimension \(d\ge 3\). Tournier proved in [17] that if \(\lambda \le 1\), then the RWDRE is not ballistic in any direction. Sabot showed in [9] that if \(\lambda >1\) and there is an \(i=1,\ldots ,d\) such that \(\beta _i\ne \beta _{i+d}\), then the random walk is ballistic. It is thus natural to ask which general condition (not restricted to random Dirichlet environments) corresponds to the characterization of Sabot and Tournier. In Sect. 2, we will see that there are several formulations of the necessary and sufficient condition for ballisticity of Sabot and Tournier for RWDRE (cf. (1.11)), which, however, are not equivalent for general RWRE. Among these formulations, the following one is in general the weakest. We say that condition \((ES)\) is satisfied if

We have furthermore the following proposition whose proof will be presented in Sect. 2.

Proposition 1.1

Consider a random walk in a random environment. Assume that condition \((ES)\) is not satisfied. Then the random walk is not ballistic.

The proof of Proposition 1.1 shows the important role played by edges like the one depicted in Fig. 1, which act as traps.

Another consequence of Theorem 1.1 and the machinery that we develop to estimate the tails of the regeneration times is the following theorem.

Theorem 1.3

Consider a random walk in an i.i.d. environment in dimensions \(d\ge 2\). Let \(l\in \mathbb S ^{d-1}\) and \(M\ge 15 d+5\). Assume that the random walk satisfies condition \((P)_M|l\).

-

(a)

(Annealed central limit theorem) If \((E')_1\) is satisfied towards the asymptotic direction then

$$\begin{aligned} \epsilon ^{1/2}(X_{[\epsilon ^{-1}n]}-[\epsilon ^{-1}n]v) \end{aligned}$$converges in law under \(P_0\) as \(\epsilon \rightarrow 0\) to a Brownian motion with non-degenerate covariance matrix.

-

(b)

(Quenched central limit theorem) If \((E')_{88d}\) is satisfied towards the asymptotic direction, then \(\mathbb{P }\)-a.s. we have that

$$\begin{aligned} \epsilon ^{1/2}(X_{[\epsilon ^{-1}n]}-[\epsilon ^{-1}n]v) \end{aligned}$$converges in law under \(P_{0,\omega }\) as \(\epsilon \rightarrow 0\) to a Brownian motion with non-degenerate covariance matrix.

Part \((b)\) of the above theorem is based on a result of Rassoul-Agha and Seppäläinen [7], which shows that an elliptic random walk satisfies the quenched central limit theorem provided that the regeneration times have moments of order higher than \(176d\). As they point out in their paper, this particular lower bound on the order of the moments has no particular significance, and it is likely that it could be improved. For example, Berger and Zeitouni [3] also prove the quenched central limit theorem under lower order moment conditions on the regeneration times, but under the assumption of uniform ellipticity. It should be possible to extend their methods to elliptic random walks in order to improve the moment condition of part \((b)\) of Theorem 1.3.

Using the methods developed in this paper, it is possible to obtain slowdown large deviation estimates for the position of the random walk, in the spirit of the estimates obtained by Sznitman in [12] for the case where the environment is plain nestling (see also [1]), under hypotheses of the form

for some \(\beta >1\). Nevertheless, the estimates we would obtain would not be sharp, in the sense that we would obtain an upper bound for the probability that at time \(n\) the random walk is slowed down of the form

where \(\beta '(d)>1\) but \(\beta '(d)<d\) (as discussed in [15] and shown in [1], the exponent \(d\) is optimal). In fact, in the uniformly elliptic case a sharp bound has been obtained by Berger [1] only in dimensions \(d\ge 4\), using an approach different from the one presented in this paper. We have therefore not included these estimates in this article.

The proof of Theorem 1.1 requires extending the methods that have already been developed for random walks in uniformly elliptic random environments. It is presented in Sect. 3. To do this, we first need to show, as in [2], that the polynomial condition \((P)_M\) for \(M\ge 15d+5\) implies the so-called effective criterion, defined by Sznitman in [14] for random walks in uniformly elliptic environments and extended here to random walks in random environments satisfying condition \((E)_0\). Two renormalization methods are employed here, which need to take into account the fact that the environment is not necessarily uniformly elliptic. These are developed in Sects. 3.1 and 3.2. In Sect. 3.4 it is shown, following [14], that the effective criterion implies condition \((T')\). The adaptation of the methods of [2] and [14] from uniformly elliptic environments to environments satisfying some of the ellipticity conditions introduced here is far from straightforward.

The proofs of Theorems 1.2 and 1.3 are presented in Sects. 4 and 5. In Sect. 4, an atypical quenched exit estimate is derived, which requires a very careful choice of the renormalization method and includes the definition of an event which we call the constrainment event; this event ensures that the random walk will be able to find a path to an exit column where it behaves as if the environment were uniformly elliptic. In Sect. 5, we derive the moment estimates of the regeneration time of the random walk using the atypical quenched exit estimate of Sect. 4. Here, condition \((E')_1\) towards the asymptotic direction is required, and appears as the possibility of finding an appropriate path among \(4d-2\) possibilities connecting two points in the lattice.

2 Notation and preliminary results

Here we fix the notation of the paper and introduce the main tools that will be used. In Sect. 2.2 we prove Proposition 1.1, whose proof is straightforward but instructive.

2.1 Setup and background

Throughout the whole paper we will use letters without subscripts, like \(c\), \(\rho \) or \(\kappa \), to denote generic constants, while we will use the notation \(c_{3,1},c_{3,2},\ldots , c_{4,1},c_{4,2},\ldots \) to denote the specific constants which appear in each section of the paper. Thus, for example, \(c_{4,2}\) is the second constant of Sect. \(4\). On the other hand, we will use \(c_1,c_2,c_3,c_4, c_1'\) and \(c_2'\) for specific constants which appear in several sections. Let \(c_1\ge 1\) be any constant such that for any pair of points \(x,y\in \mathbb Z ^d\), there exists a nearest neighbor path between \(x\) and \(y\) with fewer than

sites. Given \(U\subset \mathbb Z ^d\), we will denote its outer boundary by

$$\partial U:=\{x\in \mathbb Z ^d\setminus U: |x-y|_1=1 \text{ for some } y\in U\}.$$

We define \(\{\theta _n:n\ge 1\}\) as the canonical time shift on \({(\mathbb Z ^d)}^\mathbb{N }\). For \(l\in \mathbb S ^{d-1}\) and \(u\ge 0\), we define the times

$$T_u^l:=\inf \{n\ge 0: X_n\cdot l\ge u\}$$

and

Throughout, we will denote any nearest neighbor path with \(n\) steps joining two points \(x,y\in \mathbb Z ^d\) by \((x_1,x_2,\ldots ,x_n)\), where \(x_1=x\) and \(x_n=y\). Furthermore, we will employ the notation

for \(1\le i\le n-1\), to denote the directions of the jumps along this path. Finally, we will call \(\{t_x:x\in \mathbb Z ^d\}\) the canonical shift defined on \(\Omega \), so that for \(\omega =\{\omega (y):y\in \mathbb Z ^d\}\),

$$t_x\omega :=\{\omega (x+y):y\in \mathbb Z ^d\}.$$

Let us now define the concept of regeneration times with respect to direction \(l\). Let

and

Define \(S_0:=0\), \(M_0:=X_0\cdot l\),

and recursively for \(k\ge 1\),

Define the first regeneration time as

The condition (2.5) on \(a\) will eventually be used to prove the non-degeneracy of the covariance matrix in part \((a)\) of Theorem 1.3. Now define recursively in \(n\) the \((n + 1)\)-st regeneration time \(\tau _{n+1}\) as \(\tau _1(X_\cdot )+\tau _n(X_{\tau _1+\cdot }-X_{\tau _1})\). Throughout the sequel, we will occasionally write \(\tau _1^l,\tau _2^l,\ldots \) to emphasize the dependence of the regeneration times on the chosen direction. It is a standard fact that the sequence \(( (\tau _1, X_{\tau _1\wedge \cdot }), (\tau _2-\tau _1, X_{(\tau _1+\cdot )\wedge \tau _2}-X_{\tau _1}), (\tau _3-\tau _2, X_{(\tau _2+\cdot )\wedge \tau _3}-X_{\tau _2}), \ldots )\) is independent and, except for its first term, also i.i.d., each term having the same law as \((\tau _1, X_{\tau _1\wedge \cdot })\) under the conditional probability measure \(P_0(\cdot |D^l=\infty )\) (see for example Sznitman and Zerner [16], whose proof of this fact is also valid without the uniform ellipticity assumption). This implies the following theorem (see Zerner [18], Sznitman and Zerner [16] and Sznitman [12]).
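The i.i.d. structure of the regeneration blocks is what produces a law of large numbers: if \(E_0[\tau _1|D^l=\infty ]<\infty \), the strong law of large numbers applied to the blocks gives \(X_{\tau _n}/\tau _n\rightarrow E_0[X_{\tau _1}|D^l=\infty ]/E_0[\tau _1|D^l=\infty ]\), which is the standard identification of the velocity \(v\) in Theorem 2.1 (see [16]). The Python sketch below only illustrates this renewal-reward mechanism on synthetic blocks produced by a hypothetical `sample_block` function; for simplicity it treats every block, including the first, as identically distributed, which does not change the limit since the first block is a.s. finite.

```python
import random
from typing import Callable, List, Optional, Tuple

# One regeneration block: (tau_{k+1} - tau_k, X_{tau_{k+1}} - X_{tau_k})
Block = Tuple[int, List[int]]


def velocity_from_blocks(
    sample_block: Callable[[random.Random], Block], n_blocks: int, seed: int = 0
) -> List[float]:
    """Return X_{tau_n} / tau_n after summing n i.i.d. regeneration blocks."""
    if n_blocks < 1:
        raise ValueError("need at least one block")
    rng = random.Random(seed)
    total_time = 0
    total_disp: Optional[List[int]] = None
    for _ in range(n_blocks):
        dt, dx = sample_block(rng)
        total_time += dt
        if total_disp is None:
            total_disp = list(dx)
        else:
            total_disp = [a + b for a, b in zip(total_disp, dx)]
    return [c / total_time for c in total_disp]
```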

Theorem 2.1

(Sznitman and Zerner [16], Zerner [18], Sznitman [12]) Consider a RWRE in an elliptic i.i.d. environment. Let \(l\in \mathbb S ^{d-1}\) and assume that there is a neighborhood \(V\) of \(l\) such that for every \(l'\in V\) the random walk is transient in the direction \(l'\). Then there is a deterministic \(v\) such that \(P_0\)-a.s. one has that

$$\lim _{n\rightarrow \infty }\frac{X_n}{n}=v.$$

Furthermore, the following are satisfied.

-

(a)

If \(E_0[\tau _1]<\infty \), the walk is ballistic and \(v\ne 0\).

-

(b)

If \(E_0[\tau _1^2]<\infty \) we have that

$$\begin{aligned} \epsilon ^{1/2}\left( X_{[\epsilon ^{-1}n]}-[\epsilon ^{-1}n]v\right) \end{aligned}$$converges in law under \(P_0\) as \(\epsilon \rightarrow 0\) to a Brownian motion with non-degenerate covariance matrix.

In 2009, both Rassoul-Agha and Seppäläinen in [7] and Berger and Zeitouni in [3] were able to prove a quenched central limit theorem under sufficiently strong moment conditions on the regeneration times. The result of Rassoul-Agha and Seppäläinen, which does not require a uniform ellipticity assumption, is the following.

Theorem 2.2

(Rassoul-Agha and Seppäläinen [7]) Consider a RWRE in an elliptic i.i.d. environment. Let \(l\in \mathbb S ^{d-1}\) and let \(\tau _1\) be the corresponding regeneration time. Assume that

$$E_0\left[ \tau _1^{p}\right] <\infty $$

for some \(p>176d\). Then \(\mathbb{P }\)-a.s. we have that

$$\epsilon ^{1/2}\left( X_{[\epsilon ^{-1}n]}-[\epsilon ^{-1}n]v\right) $$

converges in law under \(P_{0,\omega }\) to a Brownian motion with non-degenerate covariance matrix.

We now define the \(n\)-th regeneration radius as

The following theorem, stated and proved by Sznitman as Theorem A.2 of [14] without using uniform ellipticity, provides control on the lateral displacement of the random walk with respect to the asymptotic direction. We need to define for \(z\in \mathbb R ^d\)

Theorem 2.3

(Sznitman [14]) Let \(l\in \mathbb S ^{d-1}\) and \(\gamma \in (0,1)\). Consider a RWRE in an elliptic i.i.d. environment satisfying condition \((T)_\gamma |l\). Then, for any \(c>0\) and \(\rho \in (0.5,1)\),

where \(T_u^l\) is defined in (2.2).

Define the function \(\gamma _L: [3,\infty )\rightarrow \mathbb R \) as

$$\gamma _L:=\frac{\log 2}{\log \log L}.\tag{2.6}$$

Given \(l\in \mathbb S ^{d-1}\), we say that condition \((T)_{\gamma _L}\) in direction \(l\) (also written as \((T)_{\gamma _L}|l\)) is satisfied if there exists a neighborhood \(V\subset \mathbb S ^{d-1}\) of \(l\) such that for all \(l'\in V\)

$$\limsup _{L\rightarrow \infty }L^{-\gamma _L}\log P_0\left( X_{T_{U_{l',L}}}\cdot l'<0\right) <0,$$

where the slabs \(U_{l',L}\) are defined in (1.2).
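To see how weak \((T)_{\gamma _L}\) is compared with any fixed \((T)_\gamma \), note that \(\gamma _L\rightarrow 0\) very slowly. Taking \(\gamma _L=\log 2/\log \log L\) (the exponent that also appears in Theorem 2.4(ii)), one has \(\gamma _L\le \gamma \) exactly when \(\log L\ge 2^{1/\gamma }\). The Python sketch below, whose function names are hypothetical, evaluates both quantities; it works with \(\log L\) rather than \(L\) to avoid floating-point overflow.

```python
import math


def gamma_L(L: float) -> float:
    """gamma_L = log 2 / log log L, defined for L >= 3 so that log log L > 0."""
    if L < 3:
        raise ValueError("gamma_L is defined on [3, infinity)")
    return math.log(2) / math.log(math.log(L))


def log_scale_where_gamma_L_reaches(gamma: float) -> float:
    """Smallest value of log L with gamma_L <= gamma, namely 2 ** (1 / gamma)."""
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    return 2.0 ** (1.0 / gamma)
```

For instance, \(\gamma _L\le 1/10\) requires \(\log L\ge 1024\), so at any moderate scale the bound \(\exp \{-cL^{\gamma _L}\}\) is much weaker than \(\exp \{-cL^{\gamma }\}\) for a fixed \(\gamma \in (0,1)\).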

Throughout this paper we will also need the following generalization of an equivalence proved by Sznitman [14] for \(\gamma \in (0,1)\), which does not require uniform ellipticity. It is easy to extend Sznitman’s proof to include the case \(\gamma =\gamma _L\).

Theorem 2.4

(Sznitman [14]) Consider a RWRE in an elliptic i.i.d. environment. Let \(\gamma \in [0, 1)\) or \(\gamma =\gamma _L\), and let \(l \in \mathbb S ^{d-1}\). Then the following are equivalent.

-

(i)

Condition \((T)_\gamma |l\) is satisfied.

-

(ii)

\(P_0(A_l) = 1\) and, if \(\gamma \in (0,1)\), we have that \(E_0[ \exp \{c(X^{*(1)})^\gamma \}] < \infty \) for some \(c > 0\), while if \(\gamma =\gamma _L\) we have that \(E_0\left[ \exp \left\{ c(X^{*(1)})^{\frac{\log 2}{\log \log \left( 3\vee X^{*(1)}\right) }}\right\} \right] < \infty \) for some \(c>0\).

-

(iii)

There is an asymptotic direction \(\hat{v}\) such that \(l\cdot \hat{v}>0\) and for every \(l'\) such that \(l'\cdot \hat{v}>0\) one has that \((T)_\gamma |l'\) is satisfied.

The following corollary of Theorem 2.4 will be important.

Corollary 2.1

(Sznitman [14]) Consider a RWRE in an elliptic i.i.d. environment. Let \(\gamma \in (0,1)\) and \(l\in \mathbb S ^{d-1}\). Assume that \((T)_{\gamma }|l\) holds. Then there exists a constant \(c\) such that for every \(L\) and \(n\ge 1\) one has that

2.2 Comments and proof of Proposition 1.1

Here we will show that \((E')_1\) implies \((ES)\). We will do this by passing through another ellipticity condition. We say that condition \((ES')\) is satisfied if there exist non-negative real numbers \(\alpha _1,\ldots ,\alpha _d\) and \(\alpha '_1,\ldots ,\alpha '_d\) such that

and

We have the following lemma.

Lemma 2.1

Consider a random walk in an i.i.d. random environment. Then condition \((E')_{1}\) implies \((ES')\) which in turn implies \((ES)\). Furthermore, for a random walk in a random Dirichlet environment, \((E')_1\), \((ES)\) and \((ES')\) are equivalent to \(\lambda >1\) (cf. (1.11)).

Proof

We first prove that \((ES')\) implies \((ES)\). Note first that by the independence between \(\omega (0,e_i)\) and \(\omega (e_i,-e_i)\), (2.8) is equivalent to

Then it is enough to prove that for each pair of real numbers \(u_1, u_2\) in \((0,1)\) one has that

for any \(\alpha \), \(\alpha ' \ge 0\) such that \(\alpha +\alpha '>1\). Now, writing \(v_1:=1-u_1\) and \(v_2:=1-u_2\), (2.9) is equivalent to

But (2.10) follows easily from our conditions on \(v_1\), \(v_2\), \(\alpha \) and \(\alpha '\). To prove that \((E')_1\) implies \((ES')\), we choose for each \(1 \le i \le d\)

Note in particular that

Now, it is easy to check that \((E')_1\) implies that

Therefore, by the monotonicity of the function \(\log x\) we have that \((E')_1\) implies that

and the corresponding inequalities with \(e_i\) replaced by \(-e_i\), for each \(1 \le i \le d\). Then \((ES')\) follows from (2.11) and (2.12). Let us now consider a Dirichlet random environment of parameters \(\{\beta _e:e\in U\}\) and assume that \((ES)\) is satisfied. It is well known that for each \(e\in U\), the random variable \(\omega (0,e)\) is distributed according to a Beta distribution of parameters \((\beta _e ,\sum _{e'\in U} \beta _{e'}-\beta _e)\), so that it has the density

$$\frac{\Gamma \left( \sum _{e'\in U}\beta _{e'}\right) }{\Gamma (\beta _e)\,\Gamma \left( \sum _{e'\in U}\beta _{e'}-\beta _e\right) }\,x^{\beta _e-1}(1-x)^{\sum _{e'\in U}\beta _{e'}-\beta _e-1}$$

with respect to the Lebesgue measure on \([0,1]\). On the other hand, if we define \(v_1:=1-\omega (0,e)\) and \(v_2:=1-\omega (e,-e)\), we see that

is satisfied if and only if

is integrable. But by (2.13) this happens if and only if

This proves that for a Dirichlet random environment \((ES)\) implies that \(\lambda >1\). It is obvious that for a Dirichlet random environment \(\lambda >1\) implies \((E')_1\). \(\square \)
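The proof above uses that each coordinate of a Dirichlet vector has a Beta marginal. A standard way to sample \(D(\beta _1,\ldots ,\beta _{2d})\), sketched below in Python, is to normalize independent Gamma\((\beta _i,1)\) variables; the helper names are illustrative. The check compares empirical moments of \(\omega (0,e)\) with those of the Beta\((\beta _e,S-\beta _e)\) law, namely mean \(\beta _e/S\) and variance \(\beta _e(S-\beta _e)/(S^2(S+1))\), where \(S:=\sum _{e'\in U}\beta _{e'}\).

```python
import random
from typing import List, Sequence, Tuple


def sample_dirichlet(betas: Sequence[float], rng: random.Random) -> List[float]:
    """One site omega(0, .) ~ D(beta_1, ..., beta_2d): normalised Gamma(beta_i, 1) draws."""
    gammas = [rng.gammavariate(b, 1.0) for b in betas]
    total = sum(gammas)
    return [g / total for g in gammas]


def beta_marginal_moments(betas: Sequence[float], i: int) -> Tuple[float, float]:
    """Mean and variance of Beta(beta_i, S - beta_i), the law of the i-th coordinate."""
    s = sum(betas)
    b = betas[i]
    return b / s, b * (s - b) / (s * s * (s + 1.0))


def empirical_moments(betas: Sequence[float], i: int, n: int, seed: int = 0) -> Tuple[float, float]:
    """Sample mean and unbiased sample variance of the i-th coordinate over n sites."""
    rng = random.Random(seed)
    xs = [sample_dirichlet(betas, rng)[i] for _ in range(n)]
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs) / (n - 1)
    return mean, var
```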

Let us now prove Proposition 1.1. If the random walk is not transient in any direction, there is nothing to prove. So assume that the random walk is transient in a direction \(l\), so that the corresponding regeneration times are well defined. Essentially, we will exhibit a trap like the one depicted in Fig. 1, in the edge \(\{0,e_i\}\). Define the first exit time of the random walk from the edge \(\{0,e_i\}\), so that

We then have for every \(k\ge 0\) that

and

This proves that under the annealed law,

We can now show, using the strong Markov property under the quenched measure and the i.i.d. nature of the environment, that for each positive integer \(m\) the time \(T_{m}:=\min \{n\ge 0:X_n\cdot l>m\}\) is bounded from below by the sum of random variables \(F_1,\ldots ,F_m\) which, under the annealed measure, are i.i.d. and distributed as \(F\). Since \(F\) has infinite expectation under the annealed law, the strong law of large numbers then gives that \(P_0\)-a.s. \(T_{m}/m\rightarrow \infty \), which implies that the random walk is not ballistic in direction \(l\).
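The quenched exit time from a single trapping edge can be computed exactly. Write \(p:=\omega (0,e_i)\) and \(q:=\omega (e_i,-e_i)\). Starting from \(0\), the walk stays on the edge \(\{0,e_i\}\) during the first \(2k\) steps with probability \((pq)^k\), and solving \(m_0=1+p\,m_1\), \(m_1=1+q\,m_0\) for the expected exit times from the two endpoints gives \(E_{0,\omega }[F]=(1+p)/(1-pq)\). Since \(1\le 1+p\le 2\), the annealed expectation \(E_0[F]\) is finite if and only if \(E[1/(1-\omega (0,e_i)\omega (e_i,-e_i))]<\infty \). The Python sketch below, with hypothetical function names, checks this closed form against simulation.

```python
import random


def edge_exit_time(p: float, q: float, rng: random.Random, max_steps: int = 10**7) -> int:
    """Number of steps until the walk started at 0 leaves the edge {0, e_i}.

    p = omega(0, e_i) and q = omega(e_i, -e_i) are the probabilities of
    moving along the edge from its two endpoints. Returns max_steps if the
    walk is still on the edge at that time (a truncation, relevant if p q = 1).
    """
    at_origin = True
    for n in range(1, max_steps + 1):
        stay = p if at_origin else q
        if rng.random() >= stay:  # the n-th step leaves the edge
            return n
        at_origin = not at_origin
    return max_steps


def expected_exit_time(p: float, q: float) -> float:
    """E_{0,omega}[F] = (1 + p) / (1 - p q), valid when p q < 1."""
    return (1.0 + p) / (1.0 - p * q)
```

For \(p=0.9\) and \(q=0.8\) the closed form gives \(1.9/0.28\approx 6.79\); as \(pq\) approaches \(1\) the quenched expectation blows up, which is the trapping mechanism used in the proof.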

3 Equivalence between the polynomial ballisticity condition and \((T')\)

Here we will prove Theorem 1.1, establishing the equivalence between the polynomial condition \((P)_M\) and condition \((T')\). To do this, we will pass through both the effective criterion and a version of condition \((T)_\gamma \) which corresponds to the choice \(\gamma =\gamma _L\) according to (2.6) (see [2]). We will first show in Sect. 3.1 that \((P)_M\) implies \((T)_{\gamma _L}\) for \(M\ge 15d +5\). In Sect. 3.2, we will prove that \((T)_{\gamma _L}\) implies a weak kind of atypical quenched exit estimate. In these first two steps, we will generalize the methods presented in [2] to random walks satisfying condition \((E)_0\). In Sect. 3.3, we will see that this estimate implies the effective criterion. Finally, in Sect. 3.4, we will show that the effective criterion implies \((T')\), generalizing the method presented by Sznitman [14] to random walks satisfying \((E)_0\).

Before we continue, we will need some additional notation. Let \(l\in \mathbb S ^{d-1}\). Let \(L,L'>0\), \(\tilde{L}>0\),

and

Here \(R\) is the rotation defined by (1.4). When there is no risk of confusion, we will drop the dependence of \(B(R,L,L',\tilde{L})\) and \(\partial _+B(R,L,L',\tilde{L})\) with respect to \(R\), \(L\), \(L'\) and \(\tilde{L}\) and write \(B\) and \(\partial _+B\) respectively. Let also,

where \(q_B:=P_{0,\omega }(X_{T_B}\notin \partial _+B)\) and \(p_B:=P_{0,\omega }(X_{T_B}\in \partial _+B)\).

3.1 Polynomial ballisticity implies \((T)_{\gamma _L}\)

Here we will prove that the polynomial ballisticity condition implies \((T)_{\gamma _L}\). To do this, we will use a multi-scale renormalization scheme as presented in Sect. 3 of [2]. Let us note that [2] assumes that the walk is uniformly elliptic.

Proposition 3.1

Let \(M \ge 15d+5\) and \(l \in \mathbb S ^{d-1}\). Assume that conditions \((P)_M|l\) and \((E)_0\) are satisfied. Then \((T)_{\gamma _L}|l\) holds.

Let us now prove Proposition 3.1. Let \(N_0\ge \frac{3}{2}c_0\), where \(c_0\) is defined in (1.6). For \(k \ge 0\), define recursively the scales

Define also for \(k \ge 0\) and \(x \in \mathbb{R }^d\) the boxes

and their middle frontal part

with the convention that \(N_{-1}:=2N_0/3\). We also define the front side

the back side

and the lateral sides

We need to define for each \(n, m \in \mathbb{N }\) the sub-lattices

and refer to the elements of

as boxes of scale \(k\). When there is no risk of confusion, we will denote a typical element of this set by \(B_k\) or simply \(B\) and its middle part as \(\tilde{B}_k\) or \(\tilde{B}\). Furthermore, we have

which is an important property that will be used below. In this subsection, it is enough to assume a weaker condition than \((P)_M|l\). The following lemma gives a practical version of the polynomial condition which will be used in the proof of Proposition 3.1.

Lemma 3.1

Let \(M>0\) and \(l\in \mathbb S ^{d-1}\). Assume that condition \((P)_M|l\) is satisfied. Then, for \(N_0\ge \frac{3}{2}c_0\) one has that

Proof

For each \(x\in \tilde{B}_0\), consider the box

Note that

Let \(L:=\frac{2}{3}N_0\). Note now that for all \(x\), \(A_0(x)\) is a translation of the box \(B_{l,L,81L^3}\) (cf. (1.3)), so that the probability on the right-hand side of (3.2) is bounded from above by \(L^{-M}=(3/2)^{M}N_0^{-M}\). \(\square \)

We now say that a box \(B \in \mathcal B _0\) is good if

Otherwise, we say that the box \(B\in \mathcal B _0\) is bad. The following lemma appears in [2] as Lemma 3.7, so its proof will be omitted.

Lemma 3.2

Let \(M>0\) and \(l \in \mathbb S ^{d-1}\). Assume that \((P)_M|l\) holds. Let \(N_0\ge \frac{3}{2}c_0\). Then for all \(B_0 \in \mathcal B _0\) we have that

Now, we want to extend the concept of good and bad boxes of scale \(0\) to boxes of any scale \(k\ge 1\). To do this, due to the lack of uniform ellipticity, we need to modify the notion of good and bad boxes for scales \(k\ge 1\) presented in Berger et al. [2]. Consider a box \(Q_{k-1}\) of scale \(k-1\ge 1\). For each \(x \in \tilde{Q}_{k-1}\) we associate a natural number \(n_x\) and a self-avoiding path \(\pi ^{(x)}:=(\pi ^{(x)}_1,\ldots , \pi ^{(x)}_{n_x})\) starting from \(x\) so that \(\pi ^{(x)}_1=x\), such that \((\pi ^{(x)}_{n_x}-x)\cdot l\ge N_{k-2}\) and so that

for some

and

Now, let

We say that the box \(Q_{k-1} \in \mathcal B _{k-1}\) is elliptically good if for each \(x \in \tilde{Q}_{k-1}\) one has that

Otherwise the box is called elliptically bad. We can now recursively define the concept of good and bad boxes. For \(k \ge 1\) we say that a box \(B_k \in \mathcal B _k\) is good, if the following are satisfied:

-

(a)

There is a box \(Q_{k-1} \in \mathcal B _{k-1}\) which is elliptically good.

-

(b)

Each box \(C_{k-1} \in \mathcal B _{k-1}\) of scale \(k-1\) satisfying \(C_{k-1} \cap Q_{k-1} \ne \emptyset \) and \(C_{k-1} \cap B_k \ne \emptyset \) is elliptically good.

-

(c)

Each box \(B_{k-1} \in \mathcal B _{k-1}\) of scale \(k-1\) satisfying \(B_{k-1} \cap Q_{k-1} = \emptyset \) and \(B_{k-1} \cap B_k \ne \emptyset \), is good.

Otherwise, we say that the box \(B_k\) is bad. We now derive an important estimate on the probability that a box of scale \(k\ge 1\) is good, corresponding to Proposition 3.8 of [2]. Note, however, that here we must work with our modified definition of good and bad boxes, which is forced by the lack of uniform ellipticity. Let

We first need the following estimate.

Lemma 3.3

For each \(k \ge 1\) we have that

Proof

By translation invariance and using Chebyshev’s inequality as well as independence, we have that for any \(\alpha >0\)

where \( N_{k-1}N_k^{3(d-1)}\) is an upper bound for \(|\tilde{B}_k|\), we have used the inequality \(N_k\le 12 N_{k-1}^3\), and we have assumed without loss of generality that \(\alpha <1\). By our choice of \(N_0\), this expression can in turn be bounded by \(e^{9dN_{k-1}}\). Then, using the definition of \(\Xi \) in (3.3), we have that

\(\square \)

The following lemma estimates the probability that a box of scale \(k\ge 0\) is bad. Its proof relies on Lemmas 3.2 and 3.3.

Lemma 3.4

Let \(l \in \mathbb S ^{d-1}\) and \(M\ge 15d+5\). Assume that \((P)_M|l\) is satisfied. Then for \(N_0\ge \frac{3}{2}c_0\) one has that for all \(k \ge 0\) and all \(B_k \in \mathcal B _k\),

Proof

By Lemma 3.2 we see that

where

We will show that this implies for all \(k\ge 1\) that

for a sequence of constants \(\{c'_{3,k}: k\ge 0\}\) defined recursively by

We will now prove (3.5) using induction on \(k\). To simplify notation, we will denote by \(q_k\) for \(k\ge 0\), the probability that the box \(B_k\) is bad. Assume that (3.5) is true for some \(k\ge 0\). Let \(A\) be the event that all boxes of scale \(k\) that intersect \(B_{k+1}\) are elliptically good, and \(B\) the event that each pair of bad boxes of scale \(k\) that intersect \(B_{k+1}\), have a non-empty intersection. Note that the event \(A\cap B\) implies that the box \(B_{k+1}\) is good. Therefore, the probability \(q_{k+1}\) that the box \(B_{k+1}\) is bad is bounded by the probability that there are at least two bad boxes \(B_{k}\) which intersect \(B_{k+1}\) plus the probability that there is at least one elliptically bad box of scale \(k\), so that by Lemma 3.3, for each \(k \ge 0\) one has that

where \(m_k\) is the total number of bad boxes of scale \(k\) that intersect \(B_{k+1}\). Now note that

But by the fact that \(c_{3,3} N_k \ge c'_{3,k}2^{k+1}\) for \(k\ge 0\) we have that

Hence, substituting this estimate and estimate (3.8) back into (3.7) and using the induction hypothesis, we conclude that

Now note that the recursive definition (3.6) implies that

Using the inequality \(\log (a+b)\le \log a+\log b\) valid for \(a,b\ge 2\), we see that

From these estimates we see that whenever \(M\ge 15d+5\) and

then for every \(k\ge 0\), one has that \(c'_{3,k}\ge 1\). But (3.9) is clearly satisfied for \(N_0\ge 3^{29d}\).

\(\square \)
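Two elementary facts carry the induction in the proof of Lemma 3.4: the union bound over pairs behind the estimate of the probability that at least two boxes of scale \(k\) intersecting \(B_{k+1}\) are bad, and the inequality \(\log (a+b)\le \log a+\log b\) for \(a,b\ge 2\), which holds because \(ab-(a+b)=(a-1)(b-1)-1\ge 0\). The Python sketch below checks both numerically. For illustration only, it assumes \(m\) independent boxes, each bad with the same probability \(q\); in the proof, independence is only available for non-intersecting boxes. All function names are hypothetical.

```python
import math


def log_sum_gap(a: float, b: float) -> float:
    """(log a + log b) - log(a + b); nonnegative whenever a, b >= 2."""
    return math.log(a) + math.log(b) - math.log(a + b)


def prob_at_least_two_bad(m: int, q: float) -> float:
    """Exact probability that at least two of m boxes are bad, assuming
    (illustratively) that each box is bad independently with probability q."""
    return 1.0 - (1.0 - q) ** m - m * q * (1.0 - q) ** (m - 1)


def pair_union_bound(m: int, q: float) -> float:
    """Union bound over unordered pairs of boxes: C(m, 2) * q**2."""
    return math.comb(m, 2) * q ** 2
```

The union bound over pairs does not need independence; independence only enters when each pair probability is replaced by \(q^2\).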

The next lemma establishes that the probability that a random walk exits a box \(B_k\) through its lateral or back side is small if this box is good.

Lemma 3.5

Assume that \(N_0\ge \frac{3}{2}c_0\). Then, there is a constant \(c_{3,4}>0\) such that for each \(k\ge 0\) and \(B_k \in \mathcal B _k\) which is good one has

Proof

Since the proof follows closely that of Proposition 3.9 of [2], we will only give a sketch indicating the steps where the lack of uniform ellipticity requires a modification. We first note that for each \(k\ge 0\),

We denote by \(p_k:=\sup _{x \in \tilde{B}_k}P_{x,\omega } \left( X_{T_{B_k}}\in \partial _{l} B_k \right) \) and \(r_k:=\sup _{x \in \tilde{B}_k}P_{x,\omega } \left( X_{T_{B_k}}\in \partial _{-} B_k \right) \). We show by induction on \(k\) that

where

and \(\Xi \) is defined in (3.3). The case \(k=0\) follows easily by the definition of good box at scale \(0\) with

We then assume that (3.10) and (3.11) hold for some \(k \ge 0\) and, following the argument in the proof of Proposition 3.9 of [2], show that this implies (3.10) for \(k+1\). Next, assuming (3.10) and (3.11) for some \(k \ge 0\), we show that (3.11) is satisfied for \(k+1\). This is done essentially as in Proposition 3.9 of [2], by coupling the random walk to a one-dimensional random walk. To perform this coupling we cannot rely on uniform ellipticity as in [2]; instead we use the elliptical goodness of the corresponding subboxes. In fact, we only need the elliptical goodness of the bad subbox and of the subboxes which intersect it. We finally choose

Note that for \(N_0\ge \frac{3}{2}c_0\) we have that \(\log 27(N_0+j)^4\le 3(N_0+j-1)^2\) for \(j\ge 1\) and hence the second term in the definition of \(c_{3,k}''\) is bounded from above by \(4/N_0\). Also, the last term is bounded from above by \((10+6d(\log \Xi )^2)/N_0\). Hence, we conclude that \(c_{3,4}>0\). \(\square \)
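The inequality \(\log 27(N_0+j)^4\le 3(N_0+j-1)^2\) used above depends only on \(m:=N_0+j-1\). The Python sketch below is a numerical sanity check rather than a proof; it suggests that the inequality holds exactly when \(m\ge 2\), so that \(N_0\ge 2\) already suffices for all \(j\ge 1\). The value of \(c_0\) is not specified in this section, and the helper names are hypothetical.

```python
import math


def lhs(n0: int, j: int) -> float:
    """log(27 (N_0 + j)^4)."""
    return math.log(27 * (n0 + j) ** 4)


def rhs(n0: int, j: int) -> int:
    """3 (N_0 + j - 1)^2."""
    return 3 * (n0 + j - 1) ** 2


def holds_for_all_j(n0: int, j_max: int = 10_000) -> bool:
    """Check the inequality for j = 1, ..., j_max (finite check, not a proof)."""
    return all(lhs(n0, j) <= rhs(n0, j) for j in range(1, j_max + 1))
```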

We can now repeat the last argument of Proposition 2.1 of [2], which does not require uniform ellipticity, to finish the proof of Proposition 3.1.

3.2 Condition \((T)_{\gamma _L}\) implies a weak atypical quenched exit estimate

In this subsection we will prove that the condition \((T)_{\gamma _L}\) implies a weak atypical quenched exit estimate. Throughout, we will denote by \(B\) the box

as defined in (3.1), with \(R\) the rotation which maps \(e_1\) to \(l\). Let

Proposition 3.2

Let \(l\in \mathbb S ^{d-1}\). Assume that the ellipticity condition \((E)_0\) and that \((T)_{\gamma _L}|l\) are fulfilled. Then, for each function \(\beta _L:(0, \infty ) \rightarrow (0, \infty )\) and each \(c>0\) there exists \(c_{3,11}>0\) such that

where \(B\) is the box defined in (3.12).

Let us now prove Proposition 3.2. Let \(\varsigma >0\). We will perform a one scale renormalization analysis involving boxes of side \(\varsigma L^{\frac{\epsilon _L}{d+1}}\) which intersect the box \(B\). Without loss of generality, we assume that \(e_1\) belongs to the intersection of the half-spaces so that

and

Define the hyperplane perpendicular to direction \(e_1\) as

We will need to work with the projection onto the direction \(l\) along the hyperplane \(H\), defined for \(z\in \mathbb Z ^d\) as

and with the projection of \(z\) onto \(H\) along \(l\), defined by

Let \(r>0\) be a fixed number which will eventually be chosen large enough. For each \(x \in \mathbb Z ^d\) and \(n\) define the mesoscopic box

and its front boundary

Define the set of mesoscopic boxes intersecting \(B\) as

From now on, when there is no risk of confusion, we will write \(D\) instead of \(D_n\) for a typical box in \(\mathcal D \). Also, let us set \(n:=\varsigma L^{\frac{\epsilon _L}{d+1}}\). We now say that a box \(D(x)\in \mathcal D \) is good if

Otherwise we will say that \(D(x)\) is bad.

Lemma 3.6

Let \(l\in \mathbb S ^{d-1}\) and \(M > 15d+5\). Consider a RWRE satisfying condition \((P)_M|l\) and the ellipticity condition \((E)_0\). Then, there is a \(c_{3,5}\) such that for \(r\ge c_{3,5}\) one has that

Proof

By (3.19) and Markov's inequality we have that

Now, by Proposition 3.1 of Sect. 3.1, we know that the polynomial condition \((P)_M|l\) and the ellipticity condition \((E)_\alpha \) imply \((T)_{\gamma _L}|l\). But by Theorem 2.4, and the fact that \(e_1\) is in the half spaces determined by \(l\) and \(\hat{v}\) (see (3.14) and (3.15)), we can conclude that \((T)_{\gamma _L}|l\) implies \((T)_{\gamma _L}|_{e_1}\). On the other hand, it is straightforward to check that there are constants \(c_{3,5}\), \(c_{3,6}>0\) such that for \(r\ge c_{3,5}\), \((T)_{\gamma _L}|_{e_1}\) implies that

Substituting this back into inequality (3.21) we see that (3.20) follows. \(\square \)

For each \(m\) such that \(0\le m\le \left\lceil \frac{2L(l\cdot e_1)}{n}\right\rceil \) define the block \( R_m\) as the collection of mesoscopic boxes (see Fig. 2)

The collection of these blocks is denoted by \(\mathcal R \). We will say that a block \(R_m\) is good if every box \(D \in R_m\) is good. Otherwise, we will say that the block \(R_m\) is bad. Now, for each \(x \in R_m\) we associate a self-avoiding path \(\pi ^{(x)}\) such that

-

(a)

The path \(\pi ^{(x)}=(\pi ^{(x)}_1,\ldots , \pi ^{(x)}_{2n+1})\) has \(2n\) steps.

-

(b)

\(\pi ^{(x)}_1=x\) and the end-point \(\pi ^{(x)}_{2n+1} \in D\) for some \(D \in R_{m+1}\).

-

(c)

Whenever \(D(x)\) does not intersect \(\partial _+B\), the path \(\pi ^{(x)}\) is contained in \(B\). Otherwise, the end-point \(\pi _{2n+1}^{(x)}\in \partial _{+}B\).

Define next \(J\) as the total number of bad boxes of the collection \(\mathcal D \) and define

We will now denote by \(\{m_1,\ldots ,m_N\}\) a generic subset of \(\{0, \ldots , |\mathcal R |-1\}\) having \(N\) elements. Let \(\xi \in (0,1)\). Define

where the first supremum runs over \(N\le L^{\beta _L+\frac{d}{d+1}\epsilon _L}\) and all subsets \(\{m_1,\ldots ,m_N\}\) of the set of block indices. We then have that

Let us now show that the first term on the right-hand side of (3.23) vanishes. Indeed, on the event \(G_1\cap G_2\), the probability \(p_B\) is bounded from below by the probability that the random walk exits every mesoscopic box through its front side. Since \(\omega \in G_1\), the random walk has to do this in at most \(L^{\beta _L+\frac{d}{d+1}\epsilon _L}\) bad boxes. In each bad box \(D(x)\) we let it follow the path \(\pi ^{(x)}\) defined above, and on the event \(G_2\) we control the product of the probabilities of traversing all these paths through the bad boxes. Hence, applying the strong Markov property and using the definition of good box, we conclude that there is a \(c_{3,7}>0\) such that for \(0<\varsigma \le c_{3,7}\) and on the event \(G_1\cap G_2\),

Let us now estimate the term \(\mathbb{P }(G_1^c)\) of (3.23). Note first that the set \(\mathcal D \) of mesoscopic boxes can be divided into fewer than \(2^dr^{d-1}\varsigma ^d L^{\frac{d\epsilon _L}{d+1}}\) collections whose union is \(\mathcal D \), each consisting of pairwise disjoint boxes. Let us call \(M\) the number of such collections. For \(1\le i\le M\), we denote by \(\mathcal D _i\) the \(i\)-th collection and by \(J_i\) the number of bad boxes in it. We then have that

Now, by Chebyshev's inequality

where \(p_L\) is the probability that a box is bad. Now the last factor of each summand on the right-hand side of (3.25) is bounded by

which tends to \(1\) as \(L\rightarrow \infty \), by Lemma 3.6, the definition of \(\epsilon _L\) and the fact that \(|\mathcal D _i|\le c_{3,8}L^d\) for some \(c_{3,8}>0\). Thus, there is a constant \(c_{3,9}>0\) such that

Substituting this back into (3.24) we hence see that

Let us now bound the term \(\mathbb{P }(G_2^c)\) of (3.23). Define \(\beta '_L:=\beta _L+\frac{d}{d+1}\epsilon _L\). Note that for each \(0<\alpha <\bar{\alpha }\) one has that

where in the last line, we have used that \(|\mathcal{R}|\) can be bounded by \(\lceil \frac{2L(l\cdot e_1)}{n}\rceil \). It now follows that for \(\xi \) such that \(\log \left( \frac{1}{\xi ^{2\alpha }\eta _\alpha ^3}\right) >0\) one can find a constant \(c_{3,10}\) such that

Substituting (3.26) and (3.27) back into (3.23), we conclude the proof of Proposition 3.2.
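The estimate of \(\mathbb{P }(G_1^c)\) combines two standard steps. First, if \(J=\sum _{i=1}^M J_i\ge t\), then \(J_i\ge t/M\) for some \(i\). Second, assuming, as the disjointness of the boxes in \(\mathcal D _i\) is meant to guarantee, that their badness indicators are independent, \(J_i\) is binomial with parameters \(|\mathcal D _i|\) and \(p_L\), and the exponential Chebyshev inequality gives \(P(J_i\ge s)\le e^{-\lambda s}(1-p_L+p_Le^{\lambda })^{|\mathcal D _i|}\). The Python sketch below compares this bound with the exact binomial tail; the parameter values are arbitrary illustrations, not those of the proof, and the names are hypothetical.

```python
import math


def binomial_tail(n: int, p: float, s: int) -> float:
    """Exact P(J >= s) for J ~ Binomial(n, p)."""
    return sum(math.comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(s, n + 1))


def chernoff_bound(n: int, p: float, s: int, lam: float) -> float:
    """Exponential Chebyshev bound exp(-lam s) E[exp(lam J)], where
    E[exp(lam J)] = (1 - p + p e^lam)^n; computed in log space to avoid overflow."""
    return math.exp(-lam * s + n * math.log1p(p * math.expm1(lam)))


def best_chernoff_bound(n: int, p: float, s: int) -> float:
    """Minimize the bound over the grid lam = 0.05, 0.10, ..., 5.0."""
    return min(chernoff_bound(n, p, s, 0.05 * i) for i in range(1, 101))
```

In the proof the probability \(p_L\) tends to \(0\), so \(\lambda \) can be taken large, which is what makes the bound decay.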

3.3 Condition \((T)_{\gamma _L}\) implies the effective criterion

Here we introduce a generalization of Sznitman's effective criterion for RWRE [14], dropping the assumption of uniform ellipticity and replacing it by the ellipticity condition \((E)_0\). Let \(l \in \mathbb S ^{d-1}\) and \(d \ge 2\). We will say that the effective criterion in direction \(l\) holds if

where

while \(c_2(d)\) and \(c_3(d)\) are dimension dependent constants that will be introduced in Sect. 3.4. Note that in particular, the effective criterion in direction \(l\) implies that condition \((E)_0\) is satisfied. Here we will prove the following proposition.

Proposition 3.3

Let \(l\in \mathbb S ^{d-1}\). Assume that the ellipticity condition \((E)_0\) and that \((T)_{\gamma _L}|l\) are fulfilled. Then, the effective criterion in direction \(l\) is satisfied.

To prove Proposition 3.3, we begin by defining the following quantities

We will write \(\rho \) instead of \(\rho _{B}\), where \(B\) is the box defined in (3.29) (see (3.1)) with \(\tilde{L}=L^3\). Following [2], it is convenient to split \(\mathbb{E }\rho ^a\) according to

where

for \(j \in \{1, \ldots , n-1\}\), and

with parameters

for \(2 \le j \le n(L)\). We will now estimate each of the \(n\) terms appearing in (3.30). For the first \(n-1\) terms, we now state two lemmas proved by Berger, Drewitz and Ramírez in [2], whose proofs we omit. The following lemma is a consequence of Jensen’s inequality.

Lemma 3.7

Assume that \((T)_{\gamma _L}\) is satisfied. Then

as \(L \rightarrow \infty \).

The second lemma follows from Proposition 3.2.

Lemma 3.8

Assume that the weak atypical quenched exit estimate (3.13) is satisfied. Then there exists a constant \(c_{3,12}>0\) such that for all \(L\) large enough and all \(j \in \{1, \ldots , n-1\}\) one has that

In [2], where the environment is assumed to be uniformly elliptic, one has \(\mathcal E _n=0\). Since we do not assume uniform ellipticity here, \(\mathcal E _n\) need not vanish, and it is estimated in the following lemma.

Lemma 3.9

Assume that \((E)_0\) and \((T)_{\gamma _L}\) are satisfied. Then there exists a constant \(c_{3,16}>0\) such that for all \(L\) large enough we have

Proof

Choose \(0<\alpha <\bar{\alpha }\). Consider a nearest neighbor path \((x_1,\ldots ,x_m)\) from \(0\) to \(\partial _+ B\), so that \(x_1=0\) and \(x_m\in \partial _+ B\), \(x_1,\ldots ,x_{m-1} \in B\) and which has the minimal number of steps \(m\) (note that it is then self-avoiding). Then,

where in the first line, we have used that for any \(\alpha >0\), \(a \le \frac{\alpha }{2}\) for \(L\) large. Now, using the Cauchy-Schwarz inequality, Chebyshev's inequality, (3.13) and the fact that the probability of exiting a square box through its front side is smaller than the probability of exiting a box of width \(L^3\) and length \(L\) through its front side, we can see that the right-hand side of (3.31) is smaller than

for some constant \(c_{3,13}>0\). Now, using the fact that there are constants \(c_{3,14}\) and \(c_{3,15}\) such that

we can substitute (3.32) into (3.31) to conclude that there is a constant \(c_{3,16}\) such that

\(\square \)
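The bound in Lemma 3.9, like the estimate of \(\mathbb{P }(G_2^c)\) in Sect. 3.2, rests on the fact that along a self-avoiding path the jump probabilities sit at distinct sites and are therefore independent under \(\mathbb{P }\). Hence the \(-\alpha \) moment of their product factorizes, and the exponential Chebyshev inequality gives \(\mathbb{P }(\prod _{i}\omega (x_i,\Delta x_i)\le \xi ^m)\le (\xi ^\alpha \eta )^m\) with \(\eta :=\mathbb{E }[\omega (0,e)^{-\alpha }]\). The Python sketch below verifies both facts by exact enumeration for a hypothetical three-valued law of a single jump probability. It assumes every step has the same law, so this \(\eta \) stands in for \(\eta _\alpha \) of (1.5) only under that simplification.

```python
import math
from itertools import product

# Hypothetical law of one jump probability omega(x, e): it takes the value
# VALUES[i] with probability WEIGHTS[i]. Purely illustrative numbers.
VALUES = [0.05, 0.2, 0.5]
WEIGHTS = [0.1, 0.3, 0.6]


def eta(alpha: float) -> float:
    """E[omega^{-alpha}] for the single-site law above."""
    return sum(w * v ** (-alpha) for v, w in zip(VALUES, WEIGHTS))


def path_statistics(m: int, alpha: float, xi: float):
    """Enumerate all environments seen along an m-step self-avoiding path.

    The m jump probabilities are i.i.d. because the sites are distinct.
    Returns the exact moment E[prod^{-alpha}] and the exact tail P(prod <= xi**m).
    """
    moment = 0.0
    tail = 0.0
    for combo in product(range(len(VALUES)), repeat=m):
        prob = math.prod(WEIGHTS[i] for i in combo)
        weight = math.prod(VALUES[i] for i in combo)
        moment += prob * weight ** (-alpha)
        if weight <= xi ** m:
            tail += prob
    return moment, tail
```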

It is now straightforward to conclude the proof of Proposition 3.3 using the estimates of Lemmas 3.7, 3.8 and 3.9.

3.4 The effective criterion implies \((T')\)

We will prove that the generalized effective criterion and the ellipticity condition \((E)_0\) imply \((T')\). To do this, it is enough to prove the following.

Proposition 3.4

Throughout, fix \(0<\alpha <\bar{\alpha }\). Let \(l\in \mathbb S ^{d-1}\) and \(d\ge 2\). If the effective criterion in direction \(l\) holds then there exists a constant \(c_{3,28}>0\) and a neighborhood \(V_l\) of direction \(l\) such that for all \(l'\in V_l\) one has that

In particular, if (3.28) is satisfied, condition \((T')|l\) is satisfied.

To prove this proposition, we will follow the same strategy used by Sznitman in [14] to prove Proposition 2.3 of that paper under the assumption of uniform ellipticity. Firstly we need to define some constants. Let

and

where \(c_1\) is defined in (2.1). Define for \(k\ge 0\) the sequence \(\{N_k:k\ge 0\}\) by

where \(u_0 \in (0,1)\). Let \(L_0,\tilde{L}_0, L_1\) and \(\tilde{L}_1\) be constants such that

Now, for \(k\ge 0\) define recursively the sequences \(\{L_k:k\ge 0\}\) and \(\{\tilde{L}_k:k\ge 0\}\) by

It is straightforward to see that for each \(k\ge 1\)

Furthermore, we also consider for \(k\ge 0\) the box

and the positive part of its boundary \(\partial _+B_k\), and will use the notations \(\rho _k=\rho _{B_k}, p_k=p_{B_k}, q_k=q_{B_k}\) and \(n_k=[N_k]\). Following Sznitman [14], we introduce for each \(i\in \mathbb Z \)

We also define the function \(I:\mathbb Z ^d\rightarrow \mathbb Z \) by

Consider now the successive times of visits of the random walk to the sets \(\{\mathcal{H}_i:i\in \mathbb Z \}\), defined recursively as

and

For \(\omega \in \Omega \), \(x \in \mathbb{Z }^d\), \(i \in \mathbb{Z }\), let

while \(\widehat{p}(x, \omega ):=1-\widehat{q}(x, \omega )\), and

We consider also the stopping time

and the function \(f:\{n_0+2, n_0+1, \ldots \} \times \Omega \rightarrow \mathbb R \) defined by

We will frequently write \(f(n)\) instead of \(f(n,\omega )\). We now proceed to prove Proposition 3.4. The following proposition is the first step of the induction argument used to prove Proposition 3.4.

Proposition 3.5

Let \(\alpha >0\). Let \(L_0, L_1,\tilde{L}_0 \) and \(\tilde{L}_1\) be constants satisfying (3.34), with \(N_0\ge 7\). Then, there exist \(c_{3,17}, c_{3,18}(d), c_{3,19}(d)>0\) such that for \(L_0 \ge c_{3,17}\), \(a \in (0,\min \{\alpha ,1\}]\), \(u_0 \in [\xi ^{L_0/d},1]\), \(0<\xi <\frac{1}{\eta _\alpha ^{2/\alpha }}\) and

the following is satisfied

Proof

The following inequality, which is inequality (2.18) in [14], is proved there by Sznitman without any uniform ellipticity assumption. For every \(\omega \in \Omega \)

Consider now the event

and write

The first term \(\mathbb{E }[\rho _1^{a/2},G]\) of (3.43), can in turn be decomposed as

where we have defined

Furthermore, note that

where

Therefore,

We now divide the rest of the proof into several steps, each of which estimates one of the terms in inequality (3.45).

Step 1: estimate of \(\mathbb{E }[\rho _1^{a/2},G,A_1]\). Here we estimate the first term of display (3.44). To do this, we can follow the argument presented by Sznitman in Sect. 2 of [14] to prove that inequality (3.42) implies that there exists a constant \(c_{3,20}(d)\) such that

Indeed on \(G \cap A_1\) and with the help of (3.42) one gets that

where in the first inequality we have used the fact that \(\omega \in G\), while in the second that \(\omega \in A_1\). Regarding the term in the denominator in the last expression, we can use the definition of the function \(f\) and obtain

where we have used that \(\omega \in A_1\) in the last inequality. Substituting this estimate in (3.47), we conclude that for \(\omega \in G \cap A_1\) one has that

At this point, using (3.48), the inequality \((u+v)^{a/2} \le u^{a/2}+v^{a/2}\) for \(u,v \ge 0\) (valid since \(a\in [0,1]\)), the fact that \(\{\widehat{\rho }(j,\omega ), j \,\text{ even } \}\) and \(\{\widehat{\rho }(j,\omega ), j \,\text{ odd } \}\) are two collections of independent random variables, and the Cauchy-Schwarz inequality, we can assert that

In view of (3.38) one gets easily that for \(i\in \mathbb Z \) and \(x \in \mathcal H _i\),

where the canonical shift \(\{t_x:x\in \mathbb Z ^d\}\) has been defined in (2.4). Hence, for \(i \in \mathbb{Z }\) and \(x \in \mathcal H _i\),

Following Sznitman [14] with the help of (3.37) the estimate (3.46) follows.
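Step 1 uses the subadditivity \((u+v)^{r}\le u^{r}+v^{r}\) for \(u,v\ge 0\) with \(r=a/2\in (0,1/2]\), a consequence of the concavity of \(t\mapsto t^r\) together with \(0^r=0\). The short Python check below illustrates it on a grid and shows that it fails for exponents larger than \(1\); the function name is hypothetical.

```python
def subadditivity_gap(u: float, v: float, r: float) -> float:
    """u**r + v**r - (u + v)**r.

    Nonnegative for u, v >= 0 and 0 < r <= 1; in Step 1, r = a/2 with a in (0, 1].
    """
    return u ** r + v ** r - (u + v) ** r
```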

Step 2: estimate of \(\mathbb{E }[\rho _1^{a/2},A_2]\). Here we will prove the following estimate for the second term of inequality (3.45),

By the definition of \(c_1\) (see (2.1)), there exists a path with fewer than \(c_1(L_1+1+\sqrt{d})\) steps between the origin and \(\partial _+B_1\). Therefore, for \(L_0\ge 1+\sqrt{d}\), there is a nearest neighbor self-avoiding path \((x_1,\ldots ,x_n)\) with \(n\) steps from the origin to \(\partial _+B_1\), such that \(2c_1L_1\le n\le 2c_1L_1+1\), \(x_1, \ldots , x_n \in B_1\) and \(x_n \cdot l\ge L_1+1\). Thus, for every \(r\ge 0\) we have that

where \(\Delta x_i:=x_{i+1}-x_i\) for \(1\le i\le n-1\), as defined in (2.3). Applying inequality (3.49) with \(r=a/2\), we then have that

Regarding the second term on the right-hand side of (3.50), we apply the Cauchy-Schwarz inequality and the exponential Chebyshev inequality, and use the fact that the jump probabilities \(\{\omega (x_i,\Delta x_i):1\le i\le n-1\}\) are independent (the path is self-avoiding, so they sit at distinct sites), to conclude that

Meanwhile, note that the first term on the right side of (3.50) can be bounded by

Hence, we need an adequate estimate for \(\mathbb{P }(A_2)\). Now,

The two terms on the rightmost side of display (3.53) will be estimated by similar methods: in both cases we use the fact that \(\{\widehat{\rho }(j,\omega ), j \,\text{ even } \}\) and \(\{\widehat{\rho }(j,\omega ), j \,\text{ odd } \}\) are two collections of independent random variables, the Cauchy-Schwarz inequality and Chebyshev's inequality. Specifically, for the first term on the rightmost side of (3.53) we have that

By an estimate analogous to (3.49), for \(L_0\ge 1+\sqrt{d}\), each \(j \in \{0, \ldots , n_0+1\}\) and each \(x\in \mathcal H _j\), there exists a nearest neighbor self-avoiding path \((y_1,\ldots ,y_m)\) between \(x\) and \(\mathcal{{H}}_{j+1}\) with \(m\) steps, where \(2c_1L_0\le m\le 2c_1L_0+1\). Moreover, \(y_1 \cdot l, \ldots , y_{m-1}\cdot l \in (1-L_0, L_0+1)\) and \(y_m\cdot l \ge L_0+1\). Then, in view of (3.35), (3.36), (3.38) and (3.39), we have that for each \(j \in \{0,\ldots , n_0+1\}\)

where the summation goes over all \(x \in \mathcal{{H}}_j\) such that \( \sup _{2 \le i \le d}|R(e_i)\cdot x|<\widetilde{L}_1\). Substituting the estimate (3.55) back into (3.54) we see that

where we have used the fact that for \(L_0\ge 2\log c_4\) it is true that \(\frac{\log N_0L_0}{L_0} \le 1+\log \left( \frac{1}{\xi }\right) \) for all \(u_0 \in [\xi ^{L_0/d},1]\). Meanwhile, for the second term of the rightmost side of (3.53), we have that

In analogy to (3.55), we can conclude that \(\mathbb{E }\left[ \widehat{\rho }(j,\omega )^{-\alpha }\right] ^{1/2} \le 2L_1^{3(d-1)}e^{\frac{(\log \eta _\alpha )m}{2}}\). Therefore, for \(L_0\ge 2\log c_4\) we see that

Now, in view of (3.52), (3.53), (3.56) and (3.57) the first term on the right side of (3.50) is bounded by

Now, since \(c_1'\ge 13+\frac{24d}{\alpha }+\frac{24d+12\log \eta _\alpha }{\alpha \log \frac{1}{\xi }}\), we conclude that

and therefore, by (3.51) and (3.58) we have that

Step 3: estimate of \(\mathbb{E }[\rho ^{a/2}_1,G, A_3]\). Here we will estimate the third term of the inequality (3.45). Specifically we will show that

This upper bound is obtained almost exactly as (3.59) in the previous step. Indeed, in analogy with the derivation of (3.47) in Step 1, one has that for \(\omega \in G\),

But, if \(\omega \in A_3\) also, one easily gets that \(0<\rho _1 \le 1\) if \(L_0\ge \frac{\alpha \log 4}{2 \log \eta _{\alpha }}\). Thus,

Therefore, since \(c_1'\ge 13+\frac{24d}{\alpha }+\frac{24d+12\log \eta _\alpha }{\alpha \log \frac{1}{\xi }}\), it is enough to prove that

To justify this inequality, note that

and hence we are in a situation very similar to that of (3.53); arguing as in (3.54) and (3.57), we derive (3.61).

Step 4: estimate of \(\mathbb{E }[\rho _1^{a/2}, G^c]\). Here we will prove that there exist constants \(c_{3,21}(d)\) and \(c_{3,22}(d)\) such that

Firstly, we need to consider the event

In the case that \(\omega \in G^c \cap A_4\), the walk effectively behaves as if it satisfied a uniform ellipticity condition with constant \(\kappa =\xi \), so that we can follow exactly the reasoning presented by Sznitman in [14] leading to inequality (2.32) of that paper, showing that there exist constants \(c_{3,21}(d)\), \(c_{3,22}(d)\) such that whenever \(\widetilde{L}_1 \ge 48 N_0 \widetilde{L}_0\) one has that

The second inequality of (3.63) does not use any uniform ellipticity assumption. It is therefore enough to prove that

To do this we will follow the reasoning presented in Step 2. Namely, for \(L_0\ge 1+\sqrt{d}\), there is a nearest neighbor self-avoiding path \((x_1,\ldots ,x_n)\) with \(n\) steps from \(0\) to \(\partial _+B_1\) such that \(2c_1L_1\le n\le 2c_1L_1+1\), \(x_1,\ldots ,x_n\in B_1\) and \(x_n\cdot l\ge L_1+1\). Therefore

so that

which proves (3.64) and finishes Step 4.

Step 5: conclusion. Combining the estimates (3.46) of step 1, (3.59) of step 2, (3.60) of step 3 and (3.62) of step 4, we have (3.41). \(\square \)

We will now prove a corollary of Proposition 3.5, which will imply Proposition 3.4. For this, it is important to note that the statement of Proposition 3.5 remains valid if, given \(k\ge 1\), we replace \(L_0\) by \(L_k\), \(L_1\) by \(L_{k+1}\), \(\tilde{L}_0\) by \(\tilde{L}_k\) and \(\tilde{L}_1\) by \(\tilde{L}_{k+1}\). To see this, it is enough to note that inequality (3.40) is satisfied with these replacements. Define

Corollary 3.1

Let \(0 <\xi <\min \{c_{3,23}, e^{-1/24} \} \) and \(\alpha >0\). Let \(\{L_k:k\ge 0\}\) and \(\{\widetilde{L}_k:k\ge 0\}\) be sequences satisfying (3.33), (3.34) and (3.35). Then there exists \(c_{3,25}(d,\alpha )>0\), such that when for some \(L_0 \ge c_{3,25}\), \(a_0 \in (0,\alpha ]\), \(u_0 \in [\xi ^{L_0/d},1]\), it is true that

then for all \(k \ge 0\),

with \(a_k:=a_0 2^{-k}\), \(u_k:=u_0 8^{-k}\).

Proof

We will prove (3.66) by induction on \(k\). Since (3.66) holds for \(k=0\) by hypothesis, we only need to show that it holds for \(k+1\) whenever it holds for \(k\). To do this, note that by Proposition 3.5, for any \(k \ge 0\)

so that, for \(k \ge 0\) and with the help of (3.35)

Since \(\xi < c_{3,23} \), we can assert that \(c_{3,18}c_{3,19} \!\widetilde{L}_{k+2}^{(d-1)}L_{k+1} e^{\!-\!c_{1} L_{k\!+\!1} \log \frac{1}{\xi ^\alpha \eta _\alpha ^2}} \!\le \xi ^{ \alpha \!u_{k+1}L_{k+1}}\). Hence, we only need to prove that

Firstly, note that for \(L_0\) large enough by the induction hypothesis, (3.35) and the fact that \(\xi < e^{-\frac{1}{24}}\)

Substituting this estimate back into (3.67) and using the induction hypothesis again, we obtain that

where \(c_{3,24}:=2c_{3,18}c_{3,19}\). Thus, in order to show that \(\phi _{k+1} \le (k+2)\xi ^{\alpha u_{k+1}L_{k+1}}\) it is enough to prove that

First, note that by the induction hypothesis,

From (3.34), (3.35) and (3.36), we can say that

But, note that

for \(L_0\) large enough. Hence, substituting this estimate back into (3.70) and (3.69) we deduce that

by our choice of \(c_1'\). Finally, choosing \(L_0\) large enough, we have that \(N_k^{6d}\xi ^{77c_1L_k} \le 1\) for all \(k \ge 1\). In the case \(k=0\), we have that

by our assumption on \(u_0\). Then (3.68) follows and thus we get (3.66) by induction and choosing \(L_0 \ge c_{3,25}\) for some constant \(c_{3,25}>0\). \(\square \)

The following corollary implies Proposition 3.4. Since such a derivation follows exactly the argument presented by Sznitman in [14], we omit it.

Corollary 3.2

Let \(l\in \mathbb S ^{d-1}\), \(d\ge 2\) and \(\Upsilon =\max \left\{ \frac{\alpha }{24}, \left( \frac{2c_1}{c_1-1}\right) \log \eta _{\alpha }^2 \right\} \). Then, there exist constants \(c_{3,26}=c_{3,26}(d)>0\) and \(c_{3,27}=c_{3,27}(d)>0\) such that if the following inequality is satisfied

where \(B=B(R,L_0-1,L_0+1,\tilde{L}_0)\), then there exists a constant \(c_{3,28}>0\) such that

Proof

If (3.71) holds then there is a \(\xi >0\) such that

with \(\xi <\min \{c_{3,23}, e^{-1/24}\}\). Then, by (3.33) and (3.34),

Now, the maximum of \(u_0^{3(d-1)}\xi ^{\alpha u_0L_0}\), as a function of \(u_0\) for \(u_0 \in [\xi ^{\frac{L_0}{d}},1]\), is given by \(c_{3,29}(d)\left( \alpha L_0 \log \frac{1}{\xi }\right) ^{-3(d-1)}\) for \(u_0=\frac{3(d-1)}{\alpha L_0 \log \frac{1}{\xi }}\), when \(L_0\) is large enough, where \(c_{3,29}(d):=\left( \frac{3(d-1)}{e}\right) ^{3(d-1)}\). Thus if (3.72) holds, (3.65) holds as well. Hence, applying Corollary 3.1 we can say that (3.66) is true for all \(k \ge 0\). The same reasoning used by Sznitman in [14] to derive Proposition 2.3 of that paper gives the estimate

for some constant \(c_{3,28}>0\) and \(L\) large enough, where we have chosen \(u_0=\frac{3(d-1)}{\alpha L_0 \log \frac{1}{\xi }}\). \(\square \)
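The maximization in the proof of Corollary 3.2 is a one-variable calculus step. Writing \(k:=3(d-1)\) and \(c:=\alpha L_0\log \frac{1}{\xi }\), the function \(u\mapsto u^{k}\xi ^{\alpha uL_0}=u^ke^{-cu}\) has vanishing derivative at \(u^*=k/c\), where it attains the maximum \((k/e)^k c^{-k}\); this \(u^*\) lies in \([\xi ^{L_0/d},1]\) once \(L_0\) is large. The Python sketch below compares the closed form with a brute-force grid search for one arbitrary choice of parameters; the function names are hypothetical.

```python
import math


def objective(u: float, d: int, alpha: float, L0: float, xi: float) -> float:
    """u^{3(d-1)} * xi^{alpha u L0}, the function maximized over u_0."""
    k = 3 * (d - 1)
    return u ** k * xi ** (alpha * u * L0)


def closed_form_max(d: int, alpha: float, L0: float, xi: float):
    """Return (u_star, maximum value) from the first-order condition k/u = c."""
    k = 3 * (d - 1)
    c = alpha * L0 * math.log(1 / xi)
    u_star = k / c
    return u_star, (k / math.e) ** k * c ** (-k)
```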

4 An atypical quenched exit estimate

Here we will prove a crucial atypical quenched exit estimate for tilted boxes, which will subsequently enable us in Sect. 5 to show that the regeneration times of the random walk are integrable. Let us first introduce some basic notation.

Without loss of generality, we will assume that \(e_1\) is contained in the open half-space defined by the asymptotic direction so that

Recall the definition of the hyperplane perpendicular to direction \(e_1\) in (3.16) so that

Let \(P:=P_{\hat{v}}\) (see (3.17)) be the projection on the asymptotic direction along the hyperplane \(H\) defined for \(z\in \mathbb Z ^d\)

and \(Q:=Q_{\hat{v}}\) (see (3.18)) be the projection of \(z\) onto \(H\) along \(\hat{v}\), so that

Now, for \(x\in \mathbb Z ^d\), \(\beta >0\), \(\varrho >0\) and \(L>0\), define the tilted boxes with respect to the asymptotic direction \(\hat{v}\) as

and its front boundary by

See Fig. 3 for a picture of the box \(B_{\beta ,L}\) and its front boundary.
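Assuming that the hyperplane perpendicular to \(e_1\) in (3.16) is \(H=\{x\in \mathbb R ^d: x\cdot e_1=0\}\), the two projections have the explicit form \(P(z)=\frac{z\cdot e_1}{\hat{v}\cdot e_1}\hat{v}\) and \(Q(z)=z-P(z)\), which are well defined because \(\hat{v}\cdot e_1>0\). The Python sketch below implements these formulas with exact rational arithmetic and checks that \(Q(z)\in H\), that \(P(z)\) is parallel to \(\hat{v}\), and that \(P(z)+Q(z)=z\). The function names are hypothetical, and the direction may be passed as any nonzero multiple of \(\hat{v}\), since \(P\) does not depend on its normalization.

```python
from fractions import Fraction


def project_along_hyperplane(z, v):
    """P(z) = (z . e_1 / v . e_1) v: the component of z along v, taken parallel to H."""
    if v[0] == 0:
        raise ValueError("need v . e_1 != 0")
    t = Fraction(z[0]) / Fraction(v[0])
    return tuple(t * Fraction(vi) for vi in v)


def project_onto_hyperplane(z, v):
    """Q(z) = z - P(z): the projection of z onto H = {x : x . e_1 = 0} along v."""
    p = project_along_hyperplane(z, v)
    return tuple(Fraction(zi) - pi for zi, pi in zip(z, p))
```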

Proposition 4.1

Let \(\alpha >0\) and assume that \(\eta _\alpha <\infty \) as defined in (1.5). Let \(l\in \mathbb S ^{d-1}\) and \(M\ge 15d+5\). Assume that \((P)_M|l\) is satisfied. Let \(\beta _0 \in (1/2,1)\), \(\beta \in \left( \frac{\beta _0+1}{2},1\right) \) and \(\zeta \in (0,\beta _0)\). Then, for each \(\kappa >0\) we have that

where

We will now prove Proposition 4.1 following ideas similar in spirit to those presented by Sznitman in [14].

4.1 Preliminaries

Firstly we need to define an appropriate mesoscopic scale to perform a renormalization analysis. Let \(\beta _0\in (0.5,1)\), \(\beta \in (\beta _0,1)\) and \(\chi :=\beta _0+1-\beta \in (\beta _0,1]\). Define

Now, for each \(x \in \mathbb{R }^d\) we consider the mesoscopic box

and its central part

Define also

and

We now say that a box \(\tilde{B}(x)\) is good if

Otherwise the box is called bad. At this point, by Theorem 1.1 proved in Sect. 3, we have the following version of Theorem 2.3 (Theorem A.2 of Sznitman [14]).

Theorem 4.1

Let \(l\in \mathbb S ^{d-1}\) and \(M\ge 15d+5\). Consider a RWRE satisfying condition \((P)_M|l\) and the ellipticity condition \((E)_0\). Then, for any \(c>0\) and \(\rho \in (0.5,1)\),

where \(T_u^{e_1}\) is defined in (2.2).

The following lemma is an important corollary of Theorem 4.1.

Lemma 4.1

Let \(l\in \mathbb S ^{d-1}\) and \(M\ge 15d+5\). Consider a RWRE satisfying the ellipticity condition \((E)_0\) and condition \((P)_M|l\). Then

Proof

By Chebyshev’s inequality we have that

By Theorem 4.1, the first summand can be estimated as

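To estimate the second summand, note that since \((P)_M|l\) is satisfied, by Theorem 1.1 and the equivalence given by Theorem 2.4 we can choose \(\gamma \) close enough to \(1\) so that \(\gamma \beta _0 \ge \beta _0+\beta -1\) (this is possible because the inequality is equivalent to \(\gamma \ge 1-\frac{1-\beta }{\beta _0}\), and the right-hand side is smaller than \(1\) since \(\beta <1\)) and such that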

\(\square \)

Let \(k_1,\ldots , k_d\in \mathbb Z \). From now on, we will use the notation \(x=(k_1,\ldots ,k_d)\in \mathbb R ^d\) to denote the point

Define the following set of points which will correspond to the centers of mesoscopic boxes.

We will subsequently use the following property of the lattice \(\mathcal L \): there exist \(2^d\) disjoint sub-lattices \(\mathcal L _1,\ldots , \mathcal L _{2^d}\) such that \(\mathcal L =\cup _{i=1}^{2^d} \mathcal L _i\) and for each \(1\le i\le 2^d\), the sub-lattice \(\mathcal L _i\) corresponds to the centers of mesoscopic boxes which are pairwise disjoint. Let \(\mathcal L _0\) be the set defined by

For each \(x \in \mathcal L _0\) we define the column of mesoscopic boxes as

See Fig. 4 for a picture of the column \(C_x\), for some \(x \in \mathcal L _0\).
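The sub-lattice decomposition of \(\mathcal L \) used above can be illustrated in a simplified, hypothetical setting. The sketch below does not use the actual lattice \(\mathcal L \) or the boxes \(\tilde{B}(x)\) defined earlier in this section. Instead, it assumes centers \(a k\), \(k\in \mathbb Z ^d\), and half-open cubes of half-width \(h\le a\). Splitting the indices \(k\) by the parity of each coordinate gives \(2^d\) classes. Within each class the cubes are pairwise disjoint, even though neighboring cubes overlap. The function names are illustrative only.

```python
from itertools import product

def parity_classes(d, n):
    """Split the index set {0, ..., n-1}^d into 2^d classes by coordinate parity."""
    classes = {}
    for k in product(range(n), repeat=d):
        key = tuple(ki % 2 for ki in k)
        classes.setdefault(key, []).append(k)
    return classes

def box_points(k, a, h):
    """Integer points of the half-open cube centred at a*k with half-width h."""
    return set(product(*(range(a * ki - h, a * ki + h) for ki in k)))

def classes_are_disjoint(d, n, a, h):
    """True if, inside every parity class, the cubes are pairwise disjoint."""
    for members in parity_classes(d, n).values():
        seen = set()
        for k in members:
            pts = box_points(k, a, h)
            if seen & pts:
                return False
            seen |= pts
    return True

# With h = a, a cube overlaps its immediate neighbour ...
print(bool(box_points((0, 0), 3, 3) & box_points((1, 0), 3, 3)))  # True
# ... yet cubes are pairwise disjoint inside each of the 2^d parity classes.
print(len(parity_classes(2, 4)), classes_are_disjoint(2, 4, 3, 3))  # 4 True
```

Two distinct indices with the same parity vector differ by at least \(2\) in some coordinate. Their cubes are therefore separated by \(2a\ge 2h\) in that coordinate, which is why the boxes within a class are disjoint.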

The collection of these columns will be denoted by \(\mathcal C \). Now, for each \(C_x \in \mathcal C \) and \(-1\le k\le [L^{1-\chi }]\), define

For each point \(y \in \partial _{k,1}{C}_{x}\) we assign a path \(\pi ^{(k)} =\{\pi _1^{(k)},\ldots ,\pi _{n_1}^{(k)}\}\) in \(\tilde{B}(x)\) with \(n_1:=\left[ 2c_1 \frac{\varrho }{\hat{v}\cdot e_1} L_0\right] \) steps from \(y\) to \(\partial _{k+1,1}{C}_{x}\), so that \(\pi _1^{(k)}=y\) and \(\pi _{n_1}^{(k)}\in \partial _{k+1,1}{C}_{x}\). For each point \(z \in \partial _{k,2}{C}_{x}\) we assign a path \(\bar{\pi }^{(k)} =\{\bar{\pi }_1^{(k)},\ldots ,\bar{\pi }_{n_2}^{(k)}\}\) with \(n_2:=\left[ 2c_1 \varrho L^{\beta _0}\right] \) steps from \(z\) to \(\partial _{k,1}{C}_{x}\), so that \(\bar{\pi }_1^{(k)}=z\) and \(\bar{\pi }_{n_2}^{(k)}\in \partial _{k,1}{C}_{x}\). We will also use the notation \(\{m_1, \ldots , m_N\}\) to denote some subset of \(\{-1, \ldots , [L^{1-\chi }] \}\) with \(N\) elements.

Let \(x\in \mathcal L _0\) and \(\xi >0\). A column of boxes \(C_x \in \mathcal C \) will be called elliptically good if it satisfies the following two conditions:

and

If either (4.5) or (4.6) is not satisfied, we will say that the column \(C_x\) is elliptically bad.

Lemma 4.2

For any \(x \in \mathcal L _0\), \(\beta \in \left[ \frac{\beta _0+1}{2}, 1\right) \) and \(\xi >0\) such that \(\log \frac{1}{\xi ^{2\alpha }\eta _{\alpha }^3}>0\) we have that

Proof

Let us first note that \(\frac{L^{\beta }}{L_0} \ge 1\) by our condition on \(\beta \). Now, it is clear that

Regarding the first term on the right-hand side of (4.8), since \(2 \beta -\beta _0-1<\beta -\beta _0<\beta \), we have that

for some constant \(c_{4,1}>0\) if \(L\) is large enough and \(\log \frac{1}{\xi ^{2\alpha }\eta _{\alpha }^3}>0\).

Similarly, for the rightmost term of (4.8) we have that

for some constant \(c_{4,2}>0\) if \(L\) is large enough and \(\log \frac{1}{\xi ^{2\alpha }\eta _{\alpha }^3}>0\). Substituting (4.9) and (4.10) back into (4.8), (4.7) follows. \(\square \)

The proof of Proposition 4.1 will be reduced to controlling the probabilities of three events. The first, treated in Sect. 4.2, gives a control on the number of bad boxes. The second, treated in Sect. 4.3, gives a control on the number of elliptically bad columns. The third, treated in Sect. 4.4, gives a control on the probability that the random walk can find an appropriate path leading to an elliptically good column.
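In generic form, this reduction rests on an elementary inclusion. Let \(A\) be an arbitrary event; the precise event appearing in (4.17) is not reproduced here. With the events \(G_1\), \(G_2\) and \(G_3\) introduced in Sects. 4.2, 4.3 and 4.4, one has \(A\subset G_1^c\cup G_2^c\cup G_3^c\cup (A\cap G_1\cap G_2\cap G_3)\), and hence

\[
\mathbb P(A)\le \mathbb P(G_1^c)+\mathbb P(G_2^c)+\mathbb P(G_3^c)+\mathbb P(A\cap G_1\cap G_2\cap G_3).
\]

The first three terms are estimated by Lemmas 4.3, 4.4 and 4.5. The last term is handled by exhibiting an explicit strategy for the walk on \(G_1\cap G_2\cap G_3\), as in Sect. 4.5.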

4.2 Control on the number of bad boxes

We will need to consider only the mesoscopic boxes which intersect the box \(B_{\beta ,L}(0)\) and whose \(k_1\) index is larger than or equal to \(-1\). We hence define the collection of mesoscopic boxes

In addition, we denote the number of bad mesoscopic boxes in \(\mathcal B \) by

and, for each \(1\le i\le 2^d\), the number of bad mesoscopic boxes in \(\mathcal B \) with centers in the sub-lattice \(\mathcal L _i\) by

Define

Lemma 4.3

Assume that \(\beta >\frac{\beta _0+1}{2}\). Then, there is a constant \(c_{4,3}>0\) such that for every \(L>1\) we have that

Proof

Note that the number of columns intersecting the box \(B_{\beta ,L}(0)\) is equal to

Hence, whenever \(\omega \in G_1\), there necessarily exist at least \(\left\lceil \frac{\varrho ^{d-1} L^{(d-1)(\beta -\beta _0)}}{2(1+\varrho )^{d-1}}\right\rceil \) columns, each with at most \(\left\lceil \frac{L^{\beta }}{L_0}\right\rceil \) bad boxes. Let us take \(m_1:=\left[ \frac{\varrho ^{d-1} L^{(d-1)(\beta -\beta _0)}L^{\beta }}{2(1+\varrho )^{d-1}L_0} \right] \) and \(m_2:=|\mathcal B |=\left[ \frac{\varrho ^{d-2}L^{d(\beta -\beta _0)}}{(1+\varrho )^{d-1}}+ \frac{\varrho ^{d-1}L^{(d-1)(\beta -\beta _0)}}{(1+\varrho )^{d-1}} \right] \). We now use the fact that the mesoscopic boxes in each sub-lattice \(\mathcal L _i\), \(1\le i\le 2^d\), are pairwise disjoint, together with the estimate (4.4) of Lemma 4.1. By independence, there exists a constant \(c_{4,3}>0\) such that for every \(L\ge 1\),

Note that in the second-to-last inequality we have used the fact that \(2 \beta +\beta _0-2>0\), which is equivalent to the condition \(\beta >\frac{2-\beta _0}{2}\). This last condition is implied by the requirement \(\beta >\frac{\beta _0+1}{2}\), since \(\beta _0>\frac{1}{2}\) gives \(\frac{\beta _0+1}{2}>\frac{2-\beta _0}{2}\). \(\square \)
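The first step of the proof passes from a bound on the total number of bad boxes to many columns with few bad boxes. This is a pigeonhole argument. A column with more than \(t\) bad boxes contains at least \(t+1\) of them, so when the total is \(T\), at most \(\lfloor T/(t+1)\rfloor \) columns can exceed \(t\). In particular, if \(2T\le Nt\) with \(t\ge 1\), then more than half of the \(N\) columns have at most \(t\) bad boxes. The sketch below checks both statements exhaustively on small configurations. The function names are hypothetical, and so is the representation of an environment as a plain list of bad-box counts per column; neither is taken from the paper.

```python
from itertools import product

def light_columns(bad_counts, t):
    """Number of columns containing at most t bad boxes."""
    return sum(1 for b in bad_counts if b <= t)

def heavy_upper_bound(total_bad, t):
    """At most total_bad // (t + 1) columns can contain more than t bad boxes."""
    return total_bad // (t + 1)

def check_all(n_columns, t, max_bad):
    """Exhaustively verify both pigeonhole statements for small configurations."""
    for counts in product(range(max_bad + 1), repeat=n_columns):
        total = sum(counts)
        light = light_columns(counts, t)
        # Statement 1: light >= N - floor(T / (t + 1)).
        if light < n_columns - heavy_upper_bound(total, t):
            return False
        # Statement 2: if 2T <= N t, strictly more than half the columns are light.
        if 2 * total <= n_columns * t and 2 * light <= n_columns:
            return False
    return True

print(light_columns([0, 5, 1, 2, 4, 0, 0, 0], 3))  # 6
print(check_all(4, 2, 4), check_all(5, 1, 3))        # True True
```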

4.3 Control on the number of elliptically bad columns

Let \(m_3:=\left[ \frac{\varrho ^{d-1} L^{(d-1)(\beta -\beta _0)}}{2(1+\varrho )^{d-1}}\right] \). Define the event that every sub-collection of the set of columns with cardinality at least \(m_3\) contains at least one elliptically good column:

Here we will prove the following lemma.

Lemma 4.4

There is a constant \(c_{4,4}>0\) such that for every \(L\ge 1\),

Proof

Note that the total number of columns intersecting the box \(B_{\beta ,L}(0)\) is equal to

Since distinct columns are disjoint, the events \(\{C_x \text{ is elliptically bad}\}\) and \(\{C_y \text{ is elliptically bad}\}\) are independent whenever \(x \ne y\). We conclude that there is a constant \(c_{4,4}>0\) such that for all \(L\ge 1\),

where in the last inequality we have used the estimate (4.7) of Lemma 4.2 which provides a bound for the probability of a column to be elliptically bad. \(\square \)
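The complement of \(G_2\) is the event that some sub-collection of at least \(m_3\) columns consists only of elliptically bad columns. Any larger sub-collection contains one of size exactly \(m_3\), so a union bound over the \(\binom{n}{m_3}\) sub-collections of size \(m_3\), together with independence, gives \(\mathbb P(G_2^c)\le \binom{n}{m_3}q^{m_3}\le 2^{n}q^{m_3}\). Here \(n\) is the number of columns and \(q\) is a bound on the probability that a column is elliptically bad. This is our reading of the step behind the omitted display, not a reproduction of it. The sketch below considers independent events of equal probability \(q\). It compares the exact probability that at least \(m\) of \(n\) such events occur with these two bounds; the parameter values are arbitrary.

```python
from math import comb

def exact_tail(n, m, q):
    """P(at least m of n independent events occur), each with probability q."""
    return sum(comb(n, j) * q**j * (1 - q) ** (n - j) for j in range(m, n + 1))

def subset_union_bound(n, m, q):
    """Union bound over the C(n, m) sub-collections of size m."""
    return comb(n, m) * q**m

def crude_bound(n, m, q):
    """Cruder bound obtained from C(n, m) <= 2**n."""
    return 2**n * q**m

n, m, q = 40, 20, 0.05
print(exact_tail(n, m, q) <= subset_union_bound(n, m, q) <= crude_bound(n, m, q))  # True
```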

4.4 The constrainment event

Here we obtain an adequate estimate for the probability that the random walk hits an elliptically good column. We need some notation for the box in which the random walk moves before hitting the elliptically good column, and for a certain class of hyperplanes in this region. First, let \(\zeta \in (0, \beta _0)\). This parameter gives the order of the width of the box \(\bar{B}_{\zeta , \beta , L}\) in which the random walk will be able to find a suitable path to the elliptically good column. The box is defined by

Note that this box is contained in \(B_{\beta ,L}(0)\) and contains the starting point \(0\) of the random walk. Now define, for each \(0\le z\le L^\zeta \), the truncated hyperplane

and consider the two collections of truncated hyperplanes defined as

Whenever there is no risk of confusion, we will drop the subscript from \(H_z\), writing \(H\) instead. Let \(r:=[2 \varrho L^{\beta }]\). Now, for each \(H \in \mathcal H ^{+} \cup \mathcal H ^{-}\) and each \(j\) such that \(e_j\ne \pm e_1\), we consider the set \(\Pi _j\) of paths with \(r\) steps defined by \(\pi = \{\pi _{1}, \ldots , \pi _{r}\}\in \Pi _j\) if and only if

In other words, \(\pi \) is contained in the truncated hyperplane \(H\) and all of its steps are in the direction \(e_j\). We now say that a hyperplane \(H\in \mathcal H ^+\cup \mathcal H ^-\) is elliptically good if for all paths \(\pi \in \cup _{j\ne 1, d+1}\Pi _j\) one has that

Otherwise, \(H\) will be called elliptically bad (see Fig. 5).

Fig. 5 The box \(\bar{B}_{\zeta , \beta ,L}\). The arrows indicate the ellipticity condition (4.12), which implies that each hyperplane is elliptically good

A routine counting argument and Chebyshev's inequality give the following. For each \(H \in \mathcal H ^{+} \cup \mathcal H ^{-}\) and each \(\xi >0\) such that \(\log \frac{1}{\xi ^{\alpha }\eta _{\alpha }^2}>0\), there is a constant \(c_{4,5}>0\) such that

Now choose a rotation \(\hat{R}\) such that \(\hat{R}(e_1)=\hat{v}\), and let \(\hat{v}_j:=\hat{R}(e_j)\) for \(j \ge 2\). We want a construction analogous to the one which led to the concept of an elliptically good hyperplane. Now, however, we need hyperplanes perpendicular to the directions \(\{\hat{v}_j\}\), which are not necessarily canonical vectors, so we work with strips instead of hyperplanes. For each even \(z\in \mathbb Z \) and each \(k \in \{2, \ldots , d\}\), consider the strip \(I_{k,z}:=\{x \in \bar{B}_{\zeta , \beta , L}(0): z-1<x \cdot \hat{v}_k<z+1\}\). Consider also the two sets of strips \(\mathcal I _k^{+}\) and \(\mathcal I _k^{-}\) defined by

Whenever there is no risk of confusion, we will drop the subscripts from a strip \(I_{k,z}\), writing \(I\) instead. We will need to work with the set of canonical directions contained in the closed positive half-space defined by the asymptotic direction, namely

Let \(s:=\left[ 2c_1\frac{L^{\zeta }}{\hat{v}\cdot e_1}\right] \). For each \(I \in \mathcal I _k^{+} \cup \mathcal I _k^{-}\) and each \(y \in I\) we associate a path \(\hat{\pi }=\{\hat{\pi }_{1}, \ldots , \hat{\pi }_{ n}\}\), with \(s\le n\le s+1\), which satisfies

and

Since the strip \(I\) has Euclidean width \(2\), it is indeed possible to find a path satisfying these conditions, although such a path is not necessarily unique. We denote by \(\hat{\Pi }_k\) a set of such paths, one associated with each point of the strip \(I\). A strip \(I\in \mathcal I _k^+\cup \mathcal I _k^-\) will be called elliptically good if for all paths \(\hat{\pi }\in \hat{\Pi }_k\) one has that

Otherwise, \(I\) will be called elliptically bad (see Fig. 6).

Fig. 6 In each strip \(I\), every path \(\hat{\pi }\) chosen previously satisfies the ellipticity condition (4.14), so that \(I\) is elliptically good

As before, a routine counting argument and Chebyshev's inequality give the following. For each \(k\in \{2,\ldots ,d\}\), \(I \in \mathcal I _{k}^{+} \cup \mathcal I _{k}^{-}\) and \(\xi >0\) satisfying \(\log \frac{1}{\xi ^{\alpha }\eta _{\alpha }^2}>0\), there exists a constant \(c_{4,6}>0\) such that

We now define the constrainment event as

We can now state the following lemma, which will eventually give a control on the probability that the random walk hits an elliptically good column.

Lemma 4.5

There is a constant \(c_{4,7}>0\) such that for every \(L \ge 1\),

Proof

Note that

Inequality (4.16) now follows from independence, translation invariance, the estimate (4.13) for the probability that a hyperplane is elliptically bad, and the estimate (4.15) for the probability that a strip is elliptically bad. \(\square \)
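The estimates (4.13) and (4.15) combine a count of paths with an exponential Chebyshev (Markov) bound for products of independent transition probabilities along a path. As a hypothetical reading of \(\eta _\alpha \), assume that it bounds \(\mathbb E[\omega (0,e)^{-\alpha }]\). Fix a path \(\pi \) with distinct sites and apply Markov's inequality to \(\prod _{i=1}^r\omega (\pi _i,e)^{-\alpha }\). This gives \(\mathbb P(\prod _{i=1}^r\omega (\pi _i,e)\le \xi ^r)\le (\xi ^\alpha \eta _\alpha )^r\), which decays exponentially in \(r\) when \(\xi ^\alpha \eta _\alpha <1\). The sketch below checks this inequality exactly for a hypothetical two-point law of the weights. It illustrates the technique and is not the paper's computation.

```python
import math

def product_tail(r, q, w_lo, w_hi, xi):
    """Exact P(W_1 * ... * W_r <= xi**r) for i.i.d. W_i equal to w_lo with
    probability q and to w_hi with probability 1 - q (a hypothetical law)."""
    total = 0.0
    for j in range(r + 1):  # j = number of factors equal to w_lo
        log_prod = j * math.log(w_lo) + (r - j) * math.log(w_hi)
        if log_prod <= r * math.log(xi):
            total += math.comb(r, j) * q**j * (1 - q) ** (r - j)
    return total

def chebyshev_bound(r, q, w_lo, w_hi, xi, alpha):
    """Markov's inequality applied to prod W_i^(-alpha): (xi^alpha * eta)^r."""
    eta = q * w_lo ** (-alpha) + (1 - q) * w_hi ** (-alpha)  # E[W^(-alpha)]
    return (xi**alpha * eta) ** r

args = (10, 0.1, 0.05, 0.5, 0.1)  # r, q, w_lo, w_hi, xi
print(product_tail(*args) <= chebyshev_bound(*args, 1))  # True
```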

4.5 Proof of Proposition 4.1

First, note that for any \(\kappa >0\),

We begin by bounding the first three terms on the right-hand side of (4.17). Let \(\beta \in \left( \frac{\beta _0+1}{2},1 \right) \) and \(\zeta \in (0,\beta _0)\). By Lemma 4.3 of Sect. 4.2, Lemma 4.4 of Sect. 4.3 and Lemma 4.5 of Sect. 4.4, there is a constant \(c_{4,8}>0\) such that

Since \(\beta <1\) is equivalent to \(\beta +(d-1)(\beta -\beta _0)>3\beta -2+(d-1)(\beta -\beta _0)\), the sum in (4.18) can be bounded as

for some constant \(c_{4,9}>0\), where \(g(\beta , \beta _0, \zeta ):=\min \{\beta +\zeta , 3\beta -2+(d-1)(\beta -\beta _0)\}\).
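The exponent relations used in this section can be sanity-checked with exact rational arithmetic. The sketch below assumes \(\beta _0\in (\frac12,1)\) and \(\beta \in (\frac{\beta _0+1}{2},1)\), as in this section. On a grid of admissible parameters it checks the following relations:

- \(\chi \in (\beta _0,1)\);
- \(2\beta -\beta _0-1<\beta -\beta _0<\beta \);
- \(2\beta +\beta _0-2>0\);
- \(\beta +(d-1)(\beta -\beta _0)>3\beta -2+(d-1)(\beta -\beta _0)\);
- the second argument of \(g\) exceeds \(\frac14\).

The last holds because \(3\beta -2>\frac{3(\beta _0+1)}{2}-2=\frac{3\beta _0-1}{2}>\frac14\). Since \(\zeta >0\), it follows that \(g(\beta ,\beta _0,\zeta )>0\). A grid check is no substitute for these one-line proofs, and the function names are hypothetical.

```python
from fractions import Fraction as F

def exponents_ok(beta0, beta, d):
    """Check the exponent relations of Sect. 4 for one parameter choice."""
    chi = beta0 + 1 - beta
    g_second = 3 * beta - 2 + (d - 1) * (beta - beta0)
    return all([
        beta0 < chi < 1,                              # chi lies in (beta0, 1)
        2 * beta - beta0 - 1 < beta - beta0 < beta,   # chain used for (4.9)
        2 * beta + beta0 - 2 > 0,                     # used in Lemma 4.3
        beta + (d - 1) * (beta - beta0) > g_second,   # equivalent to beta < 1
        g_second > F(1, 4),                           # since 3*beta - 2 > 1/4
    ])

def grid_check(d, steps=20):
    """Run exponents_ok on a rational grid of admissible (beta0, beta)."""
    for i in range(1, steps):
        beta0 = F(1, 2) + F(i, 2 * steps)             # beta0 in (1/2, 1)
        lower = (beta0 + 1) / 2
        for j in range(1, steps):
            beta = lower + (1 - lower) * F(j, steps)  # beta in ((beta0+1)/2, 1)
            if not exponents_ok(beta0, beta, d):
                return False
    return True

print(grid_check(2), grid_check(3))  # True True
```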

We now prove that, for \(L\) large enough, the fourth term on the right-hand side of inequality (4.17) satisfies

In fact, we will show that, for \(L\) large enough, on the event \(G_1\cap G_2\cap G_3\) one has

We will prove (4.21) by showing that the walk can exit \(B_{\beta , L}(0)\) through \(\partial _{+}B_{\beta , L}(0)\) by following a strategy consisting of paths which go through an elliptically good column. This strategy implies, in particular, that the walk successively exits the boxes \(\tilde{B}(x)\) through \(\partial _{+}\tilde{B}(x)\). The event \(G_1\) implies that there exist at least \(m_3=\left[ \frac{\varrho ^{d-1} L^{(d-1)(\beta -\beta _0)}}{2(1+\varrho )^{d-1}}\right] \) columns, each with at most \(\left[ \frac{L^{\beta }}{L_0}\right] \) bad boxes. Meanwhile, the event \(G_2\) asserts that any collection of columns with cardinality \(m_3\) or more contains at least one elliptically good column. Therefore, on the event \(G_1\cap G_2\) there exists at least one elliptically good column \(D\) with at most \(L^\beta /L_0\) bad boxes. Thus, on \(G_1\cap G_2\) we have that for any point \(y\in D\) and \(\xi >0\),

where the first factor is a lower bound for the probability that the random walk exits all the good boxes of the column through their front sides. The second factor is a lower bound for the probability that the walk traverses each bad box (of which there are at most \(L^\beta /L_0\)), exiting through its front side along a path with at most \(\frac{2c_1\varrho L_0}{\hat{v}\cdot e_1}\) steps; it is provided by condition (4.5) for elliptically good columns. The third factor is a lower bound for the probability that, each time the walk exits a box (of which there are at most \(L^{\beta -\beta _0}+1\)), it moves through the front boundary of that box to the central point of this front boundary along a path with at most \([2c_1\varrho L^{\beta _0}]\) steps; it is provided by condition (4.6) for elliptically good columns.
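The size of the last two factors can be read off from the number of steps they prescribe, using only the definitions of \(n_1\) and \(n_2\). We assume, as our reading of conditions (4.5) and (4.6), that along each prescribed path of \(n\) steps the product of the transition probabilities is at least \(\xi ^{n}\), with \(\xi \in (0,1]\). Under this assumption, note that

\[
\frac{L^{\beta }}{L_0}\,n_1\le \frac{L^{\beta }}{L_0}\cdot \frac{2c_1\varrho L_0}{\hat{v}\cdot e_1}=\frac{2c_1\varrho }{\hat{v}\cdot e_1}\,L^{\beta },\qquad \left(L^{\beta -\beta _0}+1\right)n_2\le 2c_1\varrho \left(L^{\beta }+L^{\beta _0}\right)\le 4c_1\varrho \,L^{\beta },
\]

where the last inequality uses \(L\ge 1\) and \(\beta _0<\beta \). Hence the product of the second and third factors is at least

\[
\xi ^{\,2c_1\varrho \left(\frac{1}{\hat{v}\cdot e_1}+2\right)L^{\beta }}=\exp \left\{-2c_1\varrho \left(\frac{1}{\hat{v}\cdot e_1}+2\right)\log \frac{1}{\xi }\,L^{\beta }\right\},
\]

a cost of order \(e^{-cL^{\beta }}\) in which \(L_0\) has cancelled. This is a heuristic count under the stated assumption, not a substitute for the displayed estimate.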