Abstract

Locally optimal designs are derived for generalized linear models with first order linear predictors. We consider models including a single factor, two factors and multiple factors. Mainly, the experimental region is assumed to be a unit cube. In particular, models without intercept are considered on arbitrary experimental regions. Analytic solutions for optimal designs are developed under the D- and A-criteria, and more generally, for Kiefer’s \(\Phi _k\)-criteria. The focus is on the vertex type designs. That is, the designs are only supported by the vertices of the respective experimental regions. By the equivalence theorem, necessary and sufficient conditions are developed for the local optimality of these designs. The derived results are applied to gamma and Poisson models.

1 Introduction

The generalized linear model (GLM) was developed by Nelder and Wedderburn (1972). It generalizes ordinary linear regression by allowing continuous or discrete observations from one-parameter exponential family distributions to be related to explanatory variables (factors) through suitable link functions. The class includes, among others, Poisson, gamma and logistic models. GLMs are therefore applied in many fields, such as the social and educational sciences, clinical trials, insurance and industry (Walker and Duncan 1967; Myers and Montgomery 1997; Fox 2015; Goldburd et al. 2016).

Optimal designs are typically derived by minimizing, in some sense, the variance-covariance matrix of the estimates; without loss of generality one may equivalently concentrate on maximizing the Fisher information matrix. For generalized linear models the Fisher information matrix depends on the model parameters. Therefore, an optimal design cannot be found without prior knowledge of the parameters. One approach is so-called local optimality, proposed by Chernoff (1953), which aims at deriving an optimal design at a given parameter value (best guess).

As in many research works, the results on optimal designs, in particular on a continuous experimental region, depend on the type of model used. For example, Ford et al. (1992) considered single-factor GLMs. Moreover, Gaffke et al. (2019) and Russell et al. (2009) considered gamma and Poisson models, respectively, while the logistic model was employed by Yang et al. (2011) and Atkinson and Haines (1996).

In this paper we focus on the problem of finding locally optimal designs for a class of generalized linear models. This is motivated by the work of Yang and Stufken (2009) and Tong et al. (2014), who provided analytic results for a general setup of the GLM with binary factors. Here, we are also interested in deriving locally optimal designs for a general setup of generalized linear models on continuous and discrete experimental regions. Schmidt and Schwabe (2017) showed that the support points of the optimal designs for GLMs on an experimental region given by a polytope are located at the edges of the experimental region. In particular, in Gaffke et al. (2019) we proved that the optimal designs for gamma models are supported by the vertices of the experimental region (polytope). In this paper, we restrict our attention to designs that are supported by the vertices of the experimental region for the corresponding generalized linear models. Throughout, we rely on the general equivalence theorem to establish necessary and sufficient conditions for a design to be locally optimal.

The remainder of the paper is organized as follows. In Sect. 2 we introduce the generalized linear model and optimality of designs. Approaches to determine the optimal weights for some particular designs under the D-, A- and Kiefer \(\Phi _k\)-criteria are characterized in Sect. 3. Then optimal designs are derived under the single-factor and the two-factor models in Sects. 4 and 5, respectively. First order models of multiple factors are presented in Sect. 6, and optimal designs are derived for such models with and without intercept. Applications of the results to gamma and Poisson models are discussed in Sect. 7.

2 Preliminary

In this section, we introduce the generalized linear model and give a characterization of optimal designs. Let the univariate observation (response) Y belong to a one-parameter exponential family distribution in the canonical form

$$\begin{aligned} p(y;\theta )=\exp \big (y\,\theta -b(\theta )+c(y)\big ), \end{aligned}$$ (2.1)

where \(b(\cdot )\) and \(c(\cdot )\) are known functions while \(\theta \) is a canonical parameter. In the generalized linear model each response Y of a statistical unit is observed at a certain value of a covariate \(\varvec{x}=(x_1, \dots , x_\nu )^\mathsf{T}\) that belongs to an experimental region \(\mathcal{X}\subseteq {\mathbb {R}}^{\nu }, \nu \ge 1\). Here, \(\theta :=\theta (\varvec{x},\varvec{\beta })\) varies with the value of \(\varvec{x}\in {\mathcal {X}}\) at a fixed value of the vector of model parameters \(\varvec{\beta }\in {\mathbb {R}}^{p}\). The mean response is given by \(\mathrm {E}(Y)=\mu (\varvec{x},\varvec{\beta })=b^\prime (\theta )\) with the variance function \(V\big (\mu (\varvec{x},\varvec{\beta })\big )=b^{\prime \prime }(\theta )\) [see McCullagh and Nelder (1989, Sect. 2.2.2)]. Let \(\varvec{f}(\varvec{x}):\mathcal{X}\rightarrow {\mathbb {R}}^{p}\) be a vector of continuous regression functions \(f_{1}(\varvec{x}),\dots , f_{p}(\varvec{x})\) which are assumed to be linearly independent. Denote the linear predictor by \(\eta =\varvec{f}^\mathsf{T}(\varvec{x})\varvec{\beta }\). In the generalized linear model it is assumed that \(\eta =g\big (\mu (\varvec{x},\varvec{\beta })\big )\), where g is a link function that is assumed to be one-to-one and differentiable. We can define the intensity function at a point \(\varvec{x} \in {\mathcal {X}}\) as

$$\begin{aligned} u(\varvec{x},\varvec{\beta })=\Big (\frac{\mathrm{d}\mu (\varvec{x},\varvec{\beta })}{\mathrm{d}\eta }\Big )^{2}\Big /V\big (\mu (\varvec{x},\varvec{\beta })\big ), \end{aligned}$$ (2.2)

where \(\mathrm{d}\mu (\varvec{x},\varvec{\beta })/\mathrm{d}\eta =1/g^\prime (\mu (\varvec{x},\varvec{\beta }))\). Obviously, \(u(\varvec{x},\varvec{\beta })\) is positive for all \(\varvec{x} \in {\mathcal {X}}\) and may be regarded as a weight for the corresponding unit at the point \(\varvec{x}\) (Atkinson and Woods 2015). The Fisher information matrix at \(\varvec{x}\in {\mathcal {X}}\) [see Fedorov and Leonov (2013, Sect. 1.3.2)] is given by

$$\begin{aligned} \varvec{M}(\varvec{x},\varvec{\beta })=u(\varvec{x},\varvec{\beta })\,\varvec{f}(\varvec{x})\,\varvec{f}^\mathsf{T}(\varvec{x}). \end{aligned}$$ (2.3)

An information matrix of the form (2.3) is also appropriate for other nonlinear models, e.g., models for survival times under proportional hazards (Schmidt and Schwabe 2017). Moreover, under homoscedastic regression models the intensity function is constantly equal to 1, whereas under heteroscedastic regression models the intensity equals \(1/\mathrm {var}(Y)\), which depends on \(\varvec{x}\) only. In that case the information matrix takes the form \( \varvec{M}(\varvec{x})=u(\varvec{x})\,\varvec{f}(\varvec{x})\,\varvec{f}^\mathsf{T}(\varvec{x})\) and does not depend on the model parameters. The latter case was discussed in Graßhoff et al. (2007) and in the book by Fedorov and Leonov (2013, p.13).
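To make the intensity function concrete, the following minimal sketch evaluates it for three common GLMs. It assumes the usual form u = (dμ/dη)² / V(μ); the helper names (`intensity`, `poisson_log`, `logistic_logit`, `gamma_inverse`) and the parameter values are hypothetical, and the gamma case assumes the inverse link η = 1/μ with V(μ) = μ², which this section does not spell out.

```python
import math

def intensity(dmu_deta, variance):
    """Intensity u = (dmu/deta)^2 / V(mu) at one design point."""
    return dmu_deta ** 2 / variance

def poisson_log(eta):
    # log link: mu = exp(eta), V(mu) = mu, dmu/deta = mu
    mu = math.exp(eta)
    return intensity(mu, mu)

def logistic_logit(eta):
    # logit link: mu = 1 / (1 + exp(-eta)), V(mu) = dmu/deta = mu (1 - mu)
    mu = 1.0 / (1.0 + math.exp(-eta))
    return intensity(mu * (1.0 - mu), mu * (1.0 - mu))

def gamma_inverse(eta):
    # ASSUMPTION: inverse link mu = 1/eta (eta > 0), V(mu) = mu^2, dmu/deta = -1/eta^2
    mu = 1.0 / eta
    return intensity(-1.0 / eta ** 2, mu ** 2)

def linear_predictor(f, beta):
    """eta = f(x)^T beta."""
    return sum(fi * bi for fi, bi in zip(f, beta))

beta = (0.5, -1.0, 2.0)                    # illustrative best guess, not from the paper
x = (0.3, 0.8)
eta = linear_predictor((1.0, *x), beta)    # first order predictor with intercept
u_poisson = poisson_log(eta)
u_logistic = logistic_logit(eta)
u_gamma = gamma_inverse(eta)
```

Under these assumptions the Poisson intensity reduces to exp(η), the logistic intensity to exp(η)/(1+exp(η))², and the gamma intensity to 1/η², so in each case the information at a point depends on the parameters only through the linear predictor.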

Throughout the present work we will deal with the approximate (continuous) design theory. An approximate design \(\xi \) can be defined as a probability measure with finite support on the experimental region \(\mathcal{X}\),

$$\begin{aligned} \xi =\big \{(\varvec{x}_i,\omega _i),\, i=1,\dots ,r\big \}, \end{aligned}$$ (2.4)

where \(r\in {\mathbb {N}}\), \(\varvec{x}_1,\varvec{x}_2, \dots ,\varvec{x}_r\in {\mathcal {X}}\) are pairwise distinct points and \(\omega _1, \omega _2, \dots , \omega _r>0\) with \(\sum _{i=1}^{r} \omega _i=1\). The set \(\mathrm{supp}(\xi )=\{\varvec{x}_1,\varvec{x}_2, \dots ,\varvec{x}_r\}\) is called the support of \(\xi \) and \(\omega _1,\ldots ,\omega _r\) are called the weights of \(\xi \) [see Silvey (1980, p.15)]. The information matrix of a design \(\xi \) from (2.4) at a parameter point \(\varvec{\beta }\) is defined by

$$\begin{aligned} \varvec{M}(\xi ,\varvec{\beta })=\sum _{i=1}^{r}\omega _i\,\varvec{M}(\varvec{x}_i,\varvec{\beta }). \end{aligned}$$ (2.5)

One might recognize \(\varvec{M}(\xi , \varvec{\beta })\) as a convex combination of the information matrices at the support points of \(\xi \). Another representation of the information matrix (2.5) can be utilized based on the \(r \times p\) design matrix \(\varvec{F}=[\varvec{f}(\varvec{x}_1),\dots ,\varvec{f}(\varvec{x}_r)]^\mathsf{T}\) and the \(r\times r\) weight matrix \(\varvec{V}=\mathrm {diag}(\omega _iu(\varvec{x}_i,\varvec{\beta }))_{i=1}^{r}\) and hence we can write

$$\begin{aligned} \varvec{M}(\xi ,\varvec{\beta })=\varvec{F}^\mathsf{T}\varvec{V}\varvec{F}. \end{aligned}$$

Remark

A particular type of designs appears frequently when the support size equals the dimension of \(\varvec{f}\), i.e., \(r=p\). In such a case the design is minimally supported and it is often called a minimal-support or a saturated design.
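As a small illustration of the two representations of the information matrix, the sketch below builds it once as a weighted sum of rank-one matrices and once as F^T V F, for a saturated design on three vertices of the unit square. The Poisson-type intensity exp(f(x)^T β), the parameter value and all function names are assumptions chosen for the example only.

```python
import math

def f(x):
    # first order regression functions with intercept: f(x) = (1, x1, x2)
    return [1.0, x[0], x[1]]

def u(x, beta=(0.0, -1.0, -1.0)):
    # ASSUMPTION: Poisson-type intensity exp(f(x)^T beta); beta is an illustrative guess
    return math.exp(sum(fi * bi for fi, bi in zip(f(x), beta)))

def info_sum(points, weights):
    """M = sum_i w_i * u(x_i) * f(x_i) f(x_i)^T."""
    p = len(f(points[0]))
    M = [[0.0] * p for _ in range(p)]
    for x, w in zip(points, weights):
        fx, ux = f(x), u(x)
        for a in range(p):
            for b in range(p):
                M[a][b] += w * ux * fx[a] * fx[b]
    return M

def info_ftvf(points, weights):
    """Same matrix written as F^T V F with V = diag(w_i * u(x_i))."""
    F = [f(x) for x in points]
    v = [w * u(x) for x, w in zip(points, weights)]
    p = len(F[0])
    return [[sum(F[i][a] * v[i] * F[i][b] for i in range(len(F)))
             for b in range(p)] for a in range(p)]

# saturated design: r = p = 3 support points with equal weights
points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
weights = [1.0 / 3.0] * 3
M1 = info_sum(points, weights)
M2 = info_ftvf(points, weights)
```

Both functions return the same symmetric 3 × 3 matrix; the F^T V F form is the one that makes determinants and inverses of saturated designs easy to handle, since F is then square.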

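This paper focuses on optimal designs within the family of Kiefer’s \(\Phi _k\)-criteria (Kiefer 1975). These criteria aim at minimizing the k-norm of the eigenvalues of the variance-covariance matrix and include the most common criteria for D-, A- and E-optimality. Denote by \(\lambda _i(\xi ,\varvec{\beta })\, (1\le i \le p)\) the eigenvalues of a nonsingular information matrix \(\varvec{M}(\xi ,\varvec{\beta })\). Denote by “\(\det \)” and “\(\mathrm{tr}\)” the determinant and the trace of a matrix, respectively. Kiefer’s \(\Phi _k\)-criteria are defined by

$$\begin{aligned} \Phi _k\big (\varvec{M}(\xi ,\varvec{\beta })\big )&=\Big (\frac{1}{p}\,\mathrm{tr}\big (\varvec{M}^{-k}(\xi ,\varvec{\beta })\big )\Big )^{\frac{1}{k}}=\Big (\frac{1}{p}\sum _{i=1}^{p}\lambda _i^{-k}(\xi ,\varvec{\beta })\Big )^{\frac{1}{k}},\quad 0<k<\infty ,\\ \Phi _0\big (\varvec{M}(\xi ,\varvec{\beta })\big )&=\lim _{k\rightarrow 0+}\Phi _k\big (\varvec{M}(\xi ,\varvec{\beta })\big )=\big (\det \varvec{M}^{-1}(\xi ,\varvec{\beta })\big )^{\frac{1}{p}},\\ \Phi _\infty \big (\varvec{M}(\xi ,\varvec{\beta })\big )&=\lim _{k\rightarrow \infty }\Phi _k\big (\varvec{M}(\xi ,\varvec{\beta })\big )=\max _{1\le i\le p}\lambda _i^{-1}(\xi ,\varvec{\beta }). \end{aligned}$$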

Note that \(\Phi _0\big (\varvec{M}(\xi ,\varvec{\beta })\big )\), \(\Phi _1\big (\varvec{M}(\xi ,\varvec{\beta })\big )\) and \(\Phi _\infty \big (\varvec{M}(\xi ,\varvec{\beta })\big )\) are the D-, A- and E-criteria, respectively. Since \(\varvec{M}(\xi , \varvec{\beta })\) depends on the values of the parameters, a best guess of \(\varvec{\beta }\) is adopted here and locally optimal designs are constructed (Chernoff 1953). A locally \(\Phi _k\)-optimal design \(\xi ^*\) (at \(\varvec{\beta }\)) minimizes the function \(\Phi _k\big (\varvec{M}(\xi ,\varvec{\beta })\big )\) over all designs \(\xi \) whose information matrix \(\varvec{M}(\xi ,\varvec{\beta })\) is nonsingular. For \(0\le k<\infty \) the strict convexity of \(\Phi _k\big (\varvec{M}(\xi ,\varvec{\beta })\big )\) implies that the information matrix of a locally \(\Phi _k\)-optimal design (at \(\varvec{\beta }\)) is unique. That is, if \(\xi ^*\) and \(\xi ^{**}\) are two locally \(\Phi _k\)-optimal designs (at \(\varvec{\beta }\)) then \(\varvec{M}(\xi ^*,\varvec{\beta })=\varvec{M}(\xi ^{**},\varvec{\beta })\) (Kiefer 1975). In particular, D-optimal designs are constructed to minimize the determinant of the variance-covariance matrix of the estimates or, equivalently, to maximize the determinant of the information matrix. The D-criterion is typically defined by the convex function \(\Phi _{\mathrm {D}}(\varvec{M}(\xi , \varvec{\beta }))=-\log \det \big (\varvec{M}(\xi , \varvec{\beta })\big )\). A-optimal designs are constructed to minimize the trace of the variance-covariance matrix of the estimates, i.e., to minimize the average variance of the estimates. The A-criterion is typically defined by \(\Phi _{\mathrm {A}}\big (\varvec{M}(\xi , \varvec{\beta })\big )=\mathrm{tr}\bigl (\varvec{M}^{-1}(\xi , \varvec{\beta })\bigr )\). Moreover, E-optimal designs maximize the smallest eigenvalue of \(\varvec{M}(\xi , \varvec{\beta })\).
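The relation between the three named criteria and the family index k can be checked numerically. The sketch below is a minimal illustration that assumes the normalisation Φ_k = ((1/p) Σ λ_i^{-k})^{1/k}, with the D- and E-criteria taken as the limits k → 0 and k → ∞; the function name `phi_k` and the eigenvalues are hypothetical.

```python
import math

def phi_k(eigenvalues, k):
    """Kiefer's Phi_k from the eigenvalues of a nonsingular information matrix.

    ASSUMPTION: normalisation ((1/p) * sum(lambda_i^-k))^(1/k).
    """
    p = len(eigenvalues)
    if k == 0:                       # limit k -> 0: (det M)^(-1/p)
        return math.exp(-sum(math.log(l) for l in eigenvalues) / p)
    if k == math.inf:                # limit k -> infinity: 1 / lambda_min
        return 1.0 / min(eigenvalues)
    return (sum(l ** (-k) for l in eigenvalues) / p) ** (1.0 / k)

lam = [0.5, 1.0, 4.0]                # illustrative eigenvalues, det M = 2
phi_d = phi_k(lam, 0)                # D-criterion value
phi_a = phi_k(lam, 1)                # tr(M^-1) / p
phi_e = phi_k(lam, math.inf)         # E-criterion value
near_d = phi_k(lam, 1e-6)            # small k approaches the D-criterion
near_e = phi_k(lam, 200)             # large k approaches the E-criterion
```

With this normalisation the A-criterion value differs from tr(M^{-1}) only by the constant factor 1/p, and the D-criterion value is a monotone transform of -log det M, so the minimizing designs are the same.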

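In order to verify the local optimality of a design the general equivalence theorem is commonly employed [see Atkinson et al. (2007, p.137)]. It provides necessary and sufficient conditions for a design to be optimal and thus the optimality of a suggested design can be easily verified or disproved. The design \(\xi ^*\) is \(\Phi _k\)-optimal if and only if

$$\begin{aligned} u(\varvec{x},\varvec{\beta })\,\varvec{f}^\mathsf{T}(\varvec{x})\,\varvec{M}^{-k-1}(\xi ^*,\varvec{\beta })\,\varvec{f}(\varvec{x})\le \mathrm{tr}\big (\varvec{M}^{-k}(\xi ^*,\varvec{\beta })\big )\,\, \text{ for } \text{ all } \varvec{x}\in {\mathcal {X}}. \end{aligned}$$ (2.6)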

Furthermore, if the design \(\xi ^*\) is \(\Phi _k\)-optimal then inequality (2.6) becomes equality at its support.

Remark

The left hand side of condition (2.6) of the general equivalence theorem is called the sensitivity function.

3 Determination of locally optimal weights

In this section we provide the optimal weights of particular types of designs that will appear throughout the paper with respect to Kiefer’s \(\Phi _k\)-criteria. Particular emphasis will be on the A-criterion (\(k=1\)) and the D-criterion (\(k=0\)). This work mostly deals with saturated designs (i.e., \(r=p\)). Let the support points be given by \(\varvec{x}_1^*,\dots ,\varvec{x}_p^*\) such that \(\varvec{f}(\varvec{x}_1^*),\dots , \varvec{f}(\varvec{x}_p^*)\) are linearly independent. For the A-criterion (\(k=1\)) the optimal weights are given according to Pukelsheim (1993, Sect. 8.8), which has been modified in Gaffke et al. (2019). The design \(\xi ^*\) which achieves the minimum value of \(\mathrm{tr}\bigl (\varvec{M}^{-1}(\xi ,\varvec{\beta })\bigr )\) over all designs \(\xi \) with \({\mathrm{supp}(\xi )=\{\varvec{x}_1^*,\ldots ,\varvec{x}_p^*\}}\) is given by

$$\begin{aligned} \omega _i^*=c^{-1}\Big (\frac{c_{ii}}{u_i}\Big )^{1/2}\,\,(1\le i\le p),\quad c=\sum _{k=1}^{p}\Big (\frac{c_{kk}}{u_k}\Big )^{1/2}, \end{aligned}$$ (3.1)

where \(u_i=u(\varvec{x}_i^*,\varvec{\beta })\) (\(1\le i\le p\)) and \(c_{ii}\) (\(1\le i\le p\)) are the diagonal entries of the matrix \(\varvec{C}=(\varvec{F}^{-1})^\mathsf{T}\varvec{F}^{-1}\) and \(\varvec{F}=\bigl [\varvec{f}(\varvec{x}_1^*),\ldots ,\varvec{f}(\varvec{x}_p^*)\bigr ]^\mathsf{T}\).
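The A-optimal weights of a saturated design can be computed directly from the design matrix. The sketch below assumes the weights are proportional to (c_ii/u_i)^{1/2}, which is consistent with the weights stated in Theorem 4.1(ii) and in Lemma 3.2 for k = 1; the function names and the numerical intensities are hypothetical.

```python
import math

def inverse(A):
    """Gauss-Jordan inverse of a small square matrix (partial pivoting)."""
    n = len(A)
    aug = [list(map(float, row)) + [float(i == j) for j in range(n)]
           for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        d = aug[col][col]
        aug[col] = [v / d for v in aug[col]]
        for r in range(n):
            if r != col:
                m = aug[r][col]
                aug[r] = [vr - m * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def a_optimal_weights(F, u):
    """ASSUMED form: weights proportional to sqrt(c_ii / u_i), C = (F^-1)^T F^-1."""
    Finv = inverse(F)
    n = len(F)
    # c_ii is the squared Euclidean norm of the i-th column of F^-1
    c_diag = [sum(Finv[r][i] ** 2 for r in range(n)) for i in range(n)]
    raw = [math.sqrt(c / ui) for c, ui in zip(c_diag, u)]
    total = sum(raw)
    return [r / total for r in raw]

# single factor, f(x) = (1, x), support {0, 1}; intensities are illustrative
w_single = a_optimal_weights([[1, 0], [1, 1]], [1.0, 0.25])
# two factors, f(x) = (1, x1, x2), support (0,0), (1,0), (0,1), equal intensities
w_two = a_optimal_weights([[1, 0, 0], [1, 1, 0], [1, 0, 1]], [1.0, 1.0, 1.0])
```

In the single-factor example the diagonal of C is (2, 1), which reproduces the factor √2 attached to the point 0; in the two-factor example it is (3, 1, 1), giving the factor √3 for the vertex (0, 0).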

For the D-criterion (\(k=0\)) the optimal weights are given by \(\omega _i^*=1/p\) (\(1\le i\le p\)), see Lemma 5.1.3 of Silvey (1980). This means that the locally D-optimal saturated design assigns equal weights to the support points. On the other hand, there are no unified formulas for the optimal weights of a non-saturated design, specifically with respect to the D-criterion. However, for \(p=3\) the following lemma provides the optimal weights of a design with four support points \(\xi ^*=\{(\varvec{x}_i^*,\omega _i^*), i=1,2,3,4 \}\) under certain conditions.

Lemma 3.1

Let \(p=3\). Let the design points \(\varvec{x}_1^*,\,\varvec{x}_2^*,\,\varvec{x}_3^*,\, \varvec{x}_4^* \in {\mathcal {X}}\) be given such that any three of the four vectors \(\varvec{f}(\varvec{x}_1^*)\), \(\varvec{f}(\varvec{x}_2^*)\), \(\varvec{f}(\varvec{x}_3^*)\), \(\varvec{f}(\varvec{x}_4^*)\) are linearly independent. Denote

such that \(d_i\ne 0,\,i=1, 2, 3, 4\). For a given parameter point \(\varvec{\beta }\) denote \(u_{i}=u(\varvec{x}_i^*,\varvec{\beta }),\,i=1, 2, 3, 4\). Assume that \(u_2=u_3\) and \(d_2^2=d_3^2\) and let

Assume that \(\omega _i^*>0 ,i=1,2,3,4\). Then the design \(\xi ^*\) which achieves the minimum value of \(-\log \det \bigl (\varvec{M}(\xi ,\varvec{\beta })\bigr )\) over all designs \(\xi \) with \(\mathrm{supp}(\xi )=\{\varvec{x}_1^*, \varvec{x}_2^*, \varvec{x}_3^*, \varvec{x}_4^*\}\) is given by \(\xi ^*=\{(\varvec{x}_i^*,\omega _i^*), i=1,2,3,4 \}\).

Proof

Let \(\varvec{f}_\ell =\varvec{f}(\varvec{x}_\ell ^*)\,\,(1\le \ell \le 4)\). The \(4 \times 3\) design matrix is given by \( \varvec{F}=\bigl [\varvec{f}_1,\varvec{f}_2,\varvec{f}_3,\varvec{f}_4\bigr ]^\mathsf{T}\). Denote \(\varvec{V}=\mathrm{diag}\bigl (\omega _\ell u_\ell \bigr )_{\ell =1}^{4}\). Then \(\varvec{M}(\xi ,\varvec{\beta })=\varvec{F}^\mathsf{T}\varvec{V}\varvec{F}\) and by the Cauchy–Binet formula the determinant of \(\varvec{M}(\xi ,\varvec{\beta })\) is given by the function \(\varphi (\omega _1,\omega _2,\omega _3,\omega _4)\) where

By the assumptions \(u_2=u_3\) and \(d_2^2=d_3^2\) the function \(\varphi (\omega _1,\omega _2,\omega _3,\omega _4)\) is invariant w.r.t. permuting \(\omega _2\) and \(\omega _3\), i.e., \(\varphi (\omega _1,\omega _2,\omega _3,\omega _4)=\varphi (\omega _1,\omega _3,\omega _2,\omega _4)\), and thus maximizing (3.2) has the same solutions for \(\omega _2\) and \(\omega _3\). Thus we can write \(\omega _4=1-\omega _{1}-2\omega _{2}\,\) and (3.2) reduces to

where \(\alpha _1=-2\,\alpha _2=-2\,d_4^2\,u_2^2\,u_4\), \(\alpha _3=-\alpha _5=-4\,d_2^2\,u_1\,u_2\,u_4\), \(\alpha _4=u_2^2 \left( d_1^2\,u_1-d_4^2\,u_4\right) -4\,d_2^2\,u_1\, u_2\,u_4\). Thus we obtain the system of two equations \(\partial \varphi /\partial \omega _1=0\), \({\partial \varphi /\partial \omega _2=0}\). Straightforward computations show that the solution of the above system is given by the optimal weights \(\omega _\ell ^*\,\,(1\le \ell \le 4)\) presented in the lemma. Hence, these weights maximize the determinant \(\varphi (\omega _1,\omega _2)\), i.e., they minimize \(-\log \det \bigl (\varvec{M}(\xi ,\varvec{\beta })\bigr )\). \(\square \)
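The Cauchy–Binet step used in the proof can be illustrated numerically. The sketch below compares the determinant of F^T V F, computed from the assembled 3 × 3 matrix, with the sum over all three-row subsets of squared minors times the corresponding diagonal entries of V. The four support points (vertices of the unit square), the weights and the intensities are illustrative assumptions, and the function names are hypothetical.

```python
from itertools import combinations

def det3(A):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = A
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def det_info_direct(F, v):
    """det(F^T V F) computed from the assembled 3x3 information matrix."""
    M = [[sum(F[r][a] * v[r] * F[r][b] for r in range(len(F)))
          for b in range(3)] for a in range(3)]
    return det3(M)

def det_info_cauchy_binet(F, v):
    """Sum over all 3-row subsets S of det(F_S)^2 * prod_{i in S} v_i."""
    total = 0.0
    for S in combinations(range(len(F)), 3):
        d = det3([F[i] for i in S])
        total += d * d * v[S[0]] * v[S[1]] * v[S[2]]
    return total

# rows f(x) = (1, x1, x2) at the four vertices of [0, 1]^2
F = [[1, 0, 0], [1, 1, 0], [1, 0, 1], [1, 1, 1]]
omega = [0.4, 0.2, 0.2, 0.2]          # illustrative weights summing to 1
u = [1.0, 0.5, 0.5, 0.25]             # illustrative intensities with u_2 = u_3
v = [w * ui for w, ui in zip(omega, u)]
direct = det_info_direct(F, v)
expanded = det_info_cauchy_binet(F, v)
```

Because each term of the expansion is a product of three weights, the determinant is a cubic polynomial in the weights, which is what makes the first-order conditions in the proof tractable.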

Moreover, the choice of optimal weights of the saturated design under Kiefer’s \(\Phi _k\)-criteria was given in Pukelsheim et al. (1991). It was stated in Schmidt (2019), Sect. 5, that the method of Pukelsheim et al. (1991) provides a system of equations that must be solved numerically. In the following, explicit optimal weights of the \(\Phi _k\)-optimal saturated design are derived for a GLM without intercept, specifically under the first order model \(\varvec{f}(\varvec{x})=(x_1,\dots ,x_\nu )^\mathsf{T}\) with parameter vector \(\varvec{\beta }=(\beta _1,\dots ,\beta _\nu )^\mathsf{T}\). The choice of locally \(\Phi _k\)-optimal weights which yields the minimum value of \(\Phi _k\big (\varvec{M}(\xi ,\varvec{\beta })\big )\) over all saturated designs with the same support is given by the following lemma.

Lemma 3.2

Consider a GLM without intercept with \(\varvec{f}(\varvec{x})=(x_1,\dots ,x_\nu )^\mathsf{T}\) on an experimental region \({\mathcal {X}}\). Denote by \(\varvec{e}_i\) for all \((1\le i \le \nu )\) the \(\nu \)-dimensional unit vectors. Let \(\varvec{x}_i^*=a_i\, \varvec{e}_i,\,a_i>0\) for all \((1\le i \le \nu )\) be design points in \({\mathcal {X}}\). For a given parameter point \(\varvec{\beta }=(\beta _1,\dots ,\beta _\nu )^\mathsf{T}\) let \(u_i=u(\varvec{x}_i^*,\varvec{\beta })\) for all \((1\le i \le \nu )\). Let a vector \(\varvec{a}=(a_1,\dots ,a_\nu )^\mathsf{T}\) be given with positive components. Then the design \(\xi _{\varvec{a}}^*\) which achieves the minimum value of \(\Phi _k\big (\varvec{M}(\xi _{\varvec{a}},\varvec{\beta })\big )\) over all designs \(\xi _{\varvec{a}}\) with \(\mathrm{supp}(\xi _{\varvec{a}})=\{\varvec{x}_1^*,\ldots ,\varvec{x}_\nu ^*\}\) assigns weights

$$\begin{aligned} \omega _i^*=\frac{(a_i^2u_{i})^{\frac{-k}{k+1}}}{\sum _{j=1}^{\nu }(a_j^2u_{j})^{\frac{-k}{k+1}}}\,\,(1\le i\le \nu ) \end{aligned}$$

to the corresponding design points \(\varvec{x}_1^*,\dots ,\varvec{x}_\nu ^*\). Hence,

for D-optimality (\(k=0\)), \(\omega _i^*=1/\nu \) \((1\le i\le \nu )\).

for A-optimality (\(k=1\)), \(\omega _i^*=\frac{(a_i^2u_{i})^{-1/2}}{\sum _{j=1}^{\nu }(a_j^2u_{j})^{-1/2}}\) \((1\le i\le \nu )\).

for E-optimality (\(k\rightarrow \infty \)), \(\omega _i^*=\frac{(a_i^2u_{i})^{-1}}{\sum _{j=1}^{\nu }(a_j^2u_{j})^{-1}}\) \((1\le i\le \nu )\).

Proof

Define the \(\nu \times \nu \) design matrix \(\varvec{F}=\mathrm {diag}(a_i)_{i=1}^{\nu }\) with the \(\nu \times \nu \) weight matrix \(\varvec{V}=\mathrm {diag}(u_{i}\omega _i)_{i=1}^\nu \). Then we have \( \varvec{M}\bigl (\xi _{\varvec{a}}, \varvec{\beta }\bigr )=\varvec{F}^\mathsf{T}\varvec{V}\varvec{F}=\mathrm {diag}(a_i^2u_{i}\omega _i)_{i=1}^\nu \) and \(\varvec{M}^{-k}\bigl (\xi _{\varvec{a}}, \varvec{\beta }\bigr )=\mathrm {diag}\big ((a_i^2u_{i}\omega _i)^{-k}\big )_{i=1}^\nu \) with \(\mathrm {tr}\big (\varvec{M}^{-k}(\xi _{\varvec{a}}, \varvec{\beta })\big ) =\sum _{i=1}^{\nu }(a_i^2u_{i}\omega _i)^{-k}\). Thus

$$\begin{aligned} \Phi _k\big (\varvec{M}(\xi _{\varvec{a}},\varvec{\beta })\big )=\Big (\frac{1}{\nu }\sum _{i=1}^{\nu }(a_i^2u_{i}\omega _i)^{-k}\Big )^{\frac{1}{k}}. \end{aligned}$$ (3.3)

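Now we aim at minimizing \(\Phi _k\big (\varvec{M}(\xi _{\varvec{a}},\varvec{\beta })\big )\) such that \(\omega _i>0\) and \(\sum _{i=1}^{\nu }\omega _i=1\). We write \(\omega _\nu =1-\sum _{i=1}^{\nu -1}\omega _i\) then (3.3) becomes

$$\begin{aligned} \Phi _k\big (\varvec{M}(\xi _{\varvec{a}},\varvec{\beta })\big )=\Big (\frac{1}{\nu }\Big [\sum _{i=1}^{\nu -1}(a_i^2u_{i}\omega _i)^{-k}+\Big (a_\nu ^2u_{\nu }\Big (1-\sum _{i=1}^{\nu -1}\omega _i\Big )\Big )^{-k}\Big ]\Big )^{\frac{1}{k}}. \end{aligned}$$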

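It is straightforward to see that the equation \(\frac{\partial \Phi _k\big (\varvec{M}(\xi _{\varvec{a}},\varvec{\beta })\big )}{\partial \omega _i}=0\) is equivalent to

$$\begin{aligned} (a_i^2u_{i})^{-k}\,\omega _i^{-(k+1)}=(a_\nu ^2u_{\nu })^{-k}\,\omega _\nu ^{-(k+1)}\,\,(1\le i\le \nu -1), \end{aligned}$$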

which gives \(\omega _i=\Big (a_\nu ^2u_\nu /(a_i^2u_i)\Big )^{\frac{k}{k+1}}\omega _\nu \) \({(1\le i\le \nu -1)}\), thus \(\omega _i\, (a_i^2u_{i})^\frac{k}{k+1}=\omega _\nu \) \((a_\nu ^2 u_{\nu })^\frac{k}{k+1}\) \({(1\le i\le \nu -1)}\). This means \(\omega _i\, (a_i^2u_{i})^\frac{k}{k+1}\) \({(1\le i\le \nu )}\) are all equal, i.e., \(\omega _i\, (a_i^2u_{i})^\frac{k}{k+1}=c\) \((1\le i\le \nu )\), where \(c>0\). It implies that \(\omega _i=c\,(a_i^2u_{i})^\frac{-k}{k+1}\,(1\le i\le \nu )\). Due to \(\sum _{i=1}^{\nu }\omega _i=1\) we get \({\sum _{i=1}^{\nu }c\,(a_i^2u_{i})^\frac{-k}{k+1}=c\sum _{i=1}^{\nu }(a_i^2u_{i})^\frac{-k}{k+1}=1}\), and thus \(c=\bigl (\sum _{i=1}^{\nu }(a_i^2u_{i})^\frac{-k}{k+1}\bigr )^{-1}\). So we finally obtain \(\omega _i=(a_i^2u_{i})^\frac{-k}{k+1}/\bigl (\sum _{j=1}^{\nu }(a_j^2u_{j})^\frac{-k}{k+1}\bigr )\) for all \((1\le i\le \nu )\) which are the optimal weights given by the lemma. \(\square \)
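The closed-form weights of Lemma 3.2 are easy to check numerically. The sketch below computes them for a hypothetical choice of `a`, `u` and `k`, and compares tr(M^{-k}) at these weights with its value at slightly perturbed weights; since Φ_k is an increasing function of tr(M^{-k}) for k > 0, a smaller trace means a smaller criterion value. Function names and numbers are illustrative assumptions, and a few perturbations are of course not a proof.

```python
def phi_k_weights(a, u, k):
    """Weights proportional to (a_i^2 u_i)^(-k/(k+1)) on the points a_i * e_i."""
    raw = [(ai * ai * ui) ** (-k / (k + 1.0)) for ai, ui in zip(a, u)]
    total = sum(raw)
    return [r / total for r in raw]

def trace_m_minus_k(a, u, w, k):
    """tr(M^-k) = sum_i (a_i^2 u_i w_i)^(-k) for the diagonal information matrix."""
    return sum((ai * ai * ui * wi) ** (-k) for ai, ui, wi in zip(a, u, w))

a = (1.0, 2.0, 0.5)        # illustrative scalings of the unit vectors
u = (0.8, 0.3, 1.5)        # illustrative intensities at the support points

results = {}
for k in (1, 2):
    w = phi_k_weights(a, u, k)
    best = trace_m_minus_k(a, u, w, k)
    # move a small amount of mass between the first two support points
    shifted = [trace_m_minus_k(a, u, (w[0] + e, w[1] - e, w[2]), k)
               for e in (-0.01, 0.01)]
    results[k] = (w, best, shifted)

w_d = phi_k_weights(a, u, 0)       # k = 0 gives equal weights
w_large = phi_k_weights(a, u, 1e6) # large k approaches the E-optimal weights
```

For k = 0 the exponent vanishes and the weights are equal, while for large k the exponent tends to -1, in line with the D- and E-optimal cases listed in the lemma.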

4 Single-factor model

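In this section we deal with the simplest case under a model with a single factor

$$\begin{aligned} \varvec{f}(x)=(1,x)^\mathsf{T} \text{ with } \varvec{\beta }=(\beta _0,\beta _1)^\mathsf{T}. \end{aligned}$$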

Let the experimental region be the continuous unit interval \({\mathcal {X}}=[0,1]\). In the following we introduce, for a fixed \(\varvec{\beta }=(\beta _0, \beta _1)^\mathsf{T}\), the function

which will be utilized for the characterization of the optimal designs. Consider the following conditions:

(i) \(u(x,\varvec{\beta })\) is positive and twice continuously differentiable on [0, 1].

(ii) \(u(x,\varvec{\beta })\) is strictly increasing on [0, 1].

(iii) \(h^{\prime \prime }(x)\) is an injective (one-to-one) function on [0, 1].

Recently, Lemma 1 in Konstantinou et al. (2014) showed that under the above conditions (i)-(iii) a locally D-optimal design on [0, 1] is only supported by two points a and b where \({0\le a< b\le 1}\). In what follows an analogous result is presented for locally optimal designs under various optimality criteria.

Lemma 4.1

Consider a GLM with \(\varvec{f}(x)=(1,x)^\mathsf{T}\) and the experimental region \({\mathcal {X}}=[0,1]\). Let a parameter point \(\varvec{\beta }=(\beta _0,\beta _1)^\mathsf{T}\) be given. Let conditions (i)-(iii) be satisfied. Denote by \(\varvec{A}\) a positive definite matrix and let c be a constant. Then if the condition of the general equivalence theorem is of the form

$$\begin{aligned} u(x,\varvec{\beta })\,\varvec{f}^\mathsf{T}(x)\,\varvec{A}\,\varvec{f}(x)\le c\,\, \text{ for } \text{ all } x\in {\mathcal {X}}, \end{aligned}$$

then the support of a locally optimal design \(\xi ^*\) consists of exactly two points a and b where \(0\le a< b\le 1\).

Proof

Let \(\varvec{A}=[a_{ij}]_{i,j=1,2}\). Then let \(p(x)=\varvec{f}^\mathsf{T}(x)\varvec{A}\varvec{f}(x)=a_{22}x^2+2a_{12}x+a_{11}\) which is a polynomial in x of degree 2 where \(x\in {\mathcal {X}}\). Hence, by the general equivalence theorem \(\xi ^*\) is locally optimal (at \(\varvec{\beta }\)) if and only if

$$\begin{aligned} u(x,\varvec{\beta })\,p(x)\le c\,\, \text{ for } \text{ all } x\in [0,1]. \end{aligned}$$

The above inequality is similar to that obtained in the proof of Lemma 1 in Konstantinou et al. (2014) and thus the rest of our proof is analogous to that. \(\square \)

Accordingly, for D-optimality we have \(c=2\) and \(\varvec{A}=\varvec{M}^{-1}(\xi ^*,\varvec{\beta })\). For A-optimality we have \(c=\mathrm {tr}(\varvec{M}^{-1}(\xi ^*,\varvec{\beta }))=\bigl (\sqrt{(a^2+1)/u_b}+\sqrt{(b^2+1)/u_a}\bigr )^2/(b-a)^2\) where \(u_{a}=u(a,\varvec{\beta })\) and \({u_{b}=u(b,\varvec{\beta })}\) with \(\varvec{A}=\varvec{M}^{-2}(\xi ^*,\varvec{\beta })\). In general, under Kiefer’s \(\Phi _k\)-criteria we denote \({c=\mathrm {tr}(\varvec{M}^{-k}(\xi ^*,\varvec{\beta }))}\) and \(\varvec{A}=\varvec{M}^{-k-1}(\xi ^*,\varvec{\beta })\). Moreover, the generalized D-criterion and the L-criterion can be applied (Atkinson and Woods 2015, Chapter 10).

As a consequence of Lemma 4.1, we next provide sufficient conditions for a design supported by the boundary points 0 and 1 to be locally D- or A-optimal on \({\mathcal {X}}=[0,1]\) at a given \(\varvec{\beta }\). Let \(q(x)=1/u(x,\varvec{\beta })\) and denote \(q_0=q^{\frac{1}{2}}(0)\) and \(q_1=q^{\frac{1}{2}}(1)\).

Theorem 4.1

Consider a GLM with \(\varvec{f}(x)=\bigl (1,x\bigr )^\mathsf{T}\) and the experimental region \({\mathcal {X}}=[0,1]\). Let a parameter point \(\varvec{\beta }=(\beta _0,\beta _1)^\mathsf{T}\) be given. Let q(x) be positive and twice continuously differentiable. Then:

(i) The unique locally D-optimal design \(\xi ^*\) (at \(\varvec{\beta }\)) is the two-point design supported by 0 and 1 with equal weights 1/2 if

$$\begin{aligned} q_{0}^{2}+q_{1}^{2} > q^{\prime \prime }(x)/2\, \text{ for } \text{ all } x \in (0,1). \end{aligned}$$ (4.1)

(ii) The unique locally A-optimal design \(\xi ^*\) (at \(\varvec{\beta }\)) is the two-point design supported by 0 and 1 with weights

$$\begin{aligned} \omega _0^*=\frac{\sqrt{2} q_{0}}{\sqrt{2}q_{0}+q_{1}} \text{ and } \omega _1^*=\frac{q_{1}}{\sqrt{2}q_{0}+q_{1}} \end{aligned}$$

if

$$\begin{aligned} q_{0}^{2}+q_{1}^{2}+\sqrt{2}q_{0}q_{1} > q^{\prime \prime }(x)/2\, \text{ for } \text{ all } x \in (0,1). \end{aligned}$$ (4.2)

Proof

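Part (i): Condition (2.6) of the general equivalence theorem for \(k=0\) implies that \(\xi ^*\) is locally D-optimal if and only if

$$\begin{aligned} (1-x)^2q_{0}^{2}+x^2q_{1}^{2}-q(x)\le 0\,\, \text{ for } \text{ all } x \in [0,1]. \end{aligned}$$ (4.3)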

Since the support points are \(\{0,1\}\), the l.h.s. of the above inequality equals zero at the boundaries of [0, 1]. Then it is sufficient to show that the aforementioned l.h.s. is convex on the interior (0, 1), and this convexity holds under condition (4.1) asserted in the theorem. Now to show that \(\xi ^*\) is unique at \(\varvec{\beta }\) assume that \(\xi ^{**}\) is locally D-optimal at \(\varvec{\beta }\). Then \(\varvec{M}(\xi ^*,\varvec{\beta })=\varvec{M}(\xi ^{**}, \varvec{\beta })\) and therefore, the condition of the equivalence theorem under \(\xi ^{**}\) is equivalent to (4.3), which holds with equality only at the support of \(\xi ^*\), i.e., at 0 and 1.

Part (ii): This case can be shown in analogy to Part (i) by employing condition (2.6) of the general equivalence theorem for \(k=1\) with \(\mathrm {tr}(\varvec{M}^{-1}(\xi ^*,\varvec{\beta }))=(\sqrt{2}q_{0}+q_{1})^2\). The optimal weights \(\omega _0^*\) and \(\omega _1^*\) are derived according to (3.1) in Sect. 3. \(\square \)
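As a hedged numerical illustration of Part (i), the sketch below checks condition (4.1) on a grid for a Poisson model with log link, for which u(x, β) = exp(β_0 + β_1 x) and hence q''(x) = β_1² q(x). It also evaluates the D-sensitivity function of the equally weighted design on {0, 1}. The choice of model, the parameter values and the function names are assumptions for the example, and a grid check is an illustration rather than a proof.

```python
import math

def q_poisson(x, beta0, beta1):
    """q(x) = 1/u(x, beta) for an assumed Poisson model with log link."""
    return math.exp(-(beta0 + beta1 * x))

def d_condition_holds(beta0, beta1, grid=1000):
    """Grid check of (4.1): q(0) + q(1) > q''(x)/2, using q'' = beta1^2 * q."""
    lhs = q_poisson(0.0, beta0, beta1) + q_poisson(1.0, beta0, beta1)
    return all(lhs > 0.5 * beta1 ** 2 * q_poisson(i / grid, beta0, beta1)
               for i in range(1, grid))

def d_sensitivity(x, beta0, beta1):
    """u(x) f(x)^T M^-1 f(x) for the design with weight 1/2 at 0 and at 1."""
    q0 = q_poisson(0.0, beta0, beta1)   # equals q_0^2 in the theorem's notation
    q1 = q_poisson(1.0, beta0, beta1)   # equals q_1^2 in the theorem's notation
    return 2.0 * ((1.0 - x) ** 2 * q0 + x ** 2 * q1) / q_poisson(x, beta0, beta1)

ok_mild = d_condition_holds(0.0, -1.0)      # mild slope
max_mild = max(d_sensitivity(i / 1000, 0.0, -1.0) for i in range(1001))
ok_steep = d_condition_holds(0.0, -3.0)     # steep slope
mid_steep = d_sensitivity(0.5, 0.0, -3.0)
```

For the mild slope the condition holds and the sensitivity function stays below p = 2, touching it at the endpoints; for the steep slope the sufficient condition fails and the sensitivity exceeds 2 in the interior, so the two-point design on {0, 1} is not D-optimal there.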

5 Two-factor model

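In this section we consider a first order model of two factors

$$\begin{aligned} \varvec{f}(\varvec{x})=(1,x_1,x_2)^\mathsf{T} \text{ with } \varvec{\beta }=(\beta _0,\beta _1,\beta _2)^\mathsf{T}. \end{aligned}$$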

5.1 Continuous factors

Let the experimental region be given by the unit square \({\mathcal {X}}=[0,1]^2\). Denote the vertices of \({\mathcal {X}}\) by \(\varvec{x}^*_1=(0,0)^\mathsf{T}\), \(\varvec{x}^*_2=(1,0)^\mathsf{T}\), \(\varvec{x}^*_3=(0,1)^\mathsf{T}\) and \(\varvec{x}^*_4=(1,1)^\mathsf{T}\). In the following we provide necessary and sufficient conditions for the designs that are supported by the vertices \(\varvec{x}^*_1\), \(\varvec{x}^*_2\), \(\varvec{x}^*_3\), \(\varvec{x}^*_4\) to be locally D- and A-optimal.

Theorem 5.1

Consider a GLM with \(\varvec{f}(\varvec{x})=\bigl (1,x_1,x_2\bigr )^\mathsf{T}\) and the experimental region \({\mathcal {X}}=[0,1]^2\). For a given parameter point \(\varvec{\beta }=(\beta _0,\beta _1,\beta _2)^\mathsf{T}\) let \(u_i=u(\varvec{x}^*_i,\varvec{\beta })\) (\(1\le i\le 4\)). Then:

(o) The locally D-optimal design \(\xi ^*\) (at \(\varvec{\beta }\)) is unique.

(1) \(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{} \varvec{x}^*_2 &{}\varvec{x}^*_3\\ [.5ex] 1/3&{}1/3&{}1/3\end{array}\right) \) if and only if

$$\begin{aligned} (1-x_1-x_2)^2u_{1}^{-1}+x_1^2 u_{2}^{-1}+x_2^{2}u_{3}^{-1}\le u^{-1}(\varvec{x},\varvec{\beta })\,\,\forall \varvec{x}\in [0,1]^2. \end{aligned}$$

(2) \( \xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{} \varvec{x}^*_2 &{}\varvec{x}^*_4\\ [.5ex] 1/3&{}1/3&{}1/3\end{array}\right) \) if and only if

$$\begin{aligned} (1-x_1)^2u_{1}^{-1}+(x_1-x_2)^2 u_{2}^{-1}+x_2^{2}u_{4}^{-1}\le u^{-1}(\varvec{x},\varvec{\beta })\,\,\forall \varvec{x}\in [0,1]^2. \end{aligned}$$

(3) \( \xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{} \varvec{x}^*_3 &{}\varvec{x}^*_4\\ [.5ex] 1/3&{}1/3&{}1/3\end{array}\right) \) if and only if

$$\begin{aligned} (1-x_2)^2u_{1}^{-1}+(x_2-x_1)^2 u_{3}^{-1}+x_1^{2}u_{4}^{-1}\le u^{-1}(\varvec{x},\varvec{\beta })\,\,\forall \varvec{x}\in [0,1]^2. \end{aligned}$$

(4) \( \xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_2 &{} \varvec{x}^*_3 &{}\varvec{x}^*_4\\ [.5ex] 1/3&{}1/3&{}1/3\end{array}\right) \) if and only if

$$\begin{aligned}&(1-x_2)^2 u_{2}^{-1}+(1-x_1)^2u_{3}^{-1}+(x_1+x_2-1)^{2}u_{4}^{-1}\\&\quad \quad \quad \quad \le u^{-1}(\varvec{x},\varvec{\beta })\,\,\forall \varvec{x}\in [0,1]^2. \end{aligned}$$

(5) Otherwise, \(\xi ^*\) is supported by the four design points \(\varvec{x}^*_1, \varvec{x}^*_2, \varvec{x}^*_3, \varvec{x}^*_4\).

Proof

The proof of cases (1) – (4) is demonstrated by making use of condition (2.6) for \(k=0\) of the general equivalence theorem. For case (\(\ell \)) (\(1\le \ell \le 4\)) denote the design matrix \(\varvec{F}=[\varvec{f}(\varvec{x}_i^*), \varvec{f}(\varvec{x}_j^*), \varvec{f}(\varvec{x}_k^* )]^\mathsf{T}\) and the weight matrix \(\varvec{U}=\text{ diag }\big (u_i , u_j, u_k\big )\) such that \(1\le i<j<k\le 4\) and \( i, j, k\ne 4-\ell +1\). We will show that the condition in each case (1)–(4) is equivalent to

$$\begin{aligned} u(\varvec{x},\varvec{\beta })\,\varvec{f}^\mathsf{T}(\varvec{x})\,\varvec{F}^{-1}\varvec{U}^{-1}\bigl (\varvec{F}^{-1}\bigr )^\mathsf{T}\varvec{f}(\varvec{x})\le 1\,\,\forall \varvec{x}\in [0,1]^2. \end{aligned}$$

To this end, for each case (1) – (4), we report the matrices \(\varvec{F}\), \(\varvec{F}^{-1}\) and \(\varvec{U}\)

It remains to show that the design \(\xi ^*\) is unique at \(\varvec{\beta }\). Suppose that \(\xi ^*\) and \(\xi ^{**}\) are locally D-optimal at \(\varvec{\beta }\). Then by the strict convexity of the D-criterion we have \(\varvec{M}(\xi ^*,\varvec{\beta })=\varvec{M}(\xi ^{**},\varvec{\beta })\). Thus \(\varvec{M}(\xi ^*,\varvec{\beta })-\varvec{M}(\xi ^{**},\varvec{\beta })=\sum _{i=1}^{4}(\omega _{i}^*-\omega _{i}^{**})u_i\varvec{f}(\varvec{x}_{i}^*)\varvec{f}^\mathsf{T}(\varvec{x}_{i}^*)=0\). The intensities \(u_i\,(1\le i\le 4)\) are positive and \(\varvec{f}(\varvec{x}_{i}^*)\varvec{f}^\mathsf{T}(\varvec{x}_{i}^*)\,(1\le i\le 4)\) are linearly independent. It follows that \({\omega _{i}^*-\omega _{i}^{**}=0\,(1\le i\le 4)}\). \(\square \)
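The condition of case (1) in Theorem 5.1 can be checked on a grid for a concrete model. The sketch below does this for a Poisson model with log link, so that u(x, β) = exp(β_0 + β_1 x_1 + β_2 x_2); the model choice, both parameter values and the function names are assumptions made for illustration, and a finite grid can only support, not prove, the inequality.

```python
import math

def u_poisson(x, beta):
    """Assumed Poisson intensity with log link: exp(beta0 + beta1*x1 + beta2*x2)."""
    return math.exp(beta[0] + beta[1] * x[0] + beta[2] * x[1])

def case1_gap(x, beta):
    """Right-hand side minus left-hand side of the condition in case (1)."""
    u1 = u_poisson((0.0, 0.0), beta)
    u2 = u_poisson((1.0, 0.0), beta)
    u3 = u_poisson((0.0, 1.0), beta)
    x1, x2 = x
    lhs = (1 - x1 - x2) ** 2 / u1 + x1 ** 2 / u2 + x2 ** 2 / u3
    return 1.0 / u_poisson(x, beta) - lhs

def case1_holds_on_grid(beta, n=50, tol=1e-12):
    """True if the case (1) inequality holds at all (n+1)^2 grid points of [0, 1]^2."""
    pts = [(i / n, j / n) for i in range(n + 1) for j in range(n + 1)]
    return all(case1_gap(x, beta) >= -tol for x in pts)

beta_mild = (0.0, -1.0, -1.0)     # illustrative parameter guess
beta_steep = (0.0, -3.0, -3.0)    # illustrative parameter guess
holds_mild = case1_holds_on_grid(beta_mild)
holds_steep = case1_holds_on_grid(beta_steep)
```

For the milder parameter value the inequality holds on the whole grid, with equality at the three support points, which is in line with the equally weighted design on (0,0), (1,0), (0,1) being locally D-optimal there; for the steeper value the inequality is violated, for instance at (0.5, 0), so case (1) does not apply.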

In analogy to Theorem 5.1 we introduce locally A-optimal designs in the next theorem.

Theorem 5.2

Consider the assumptions and notations of Theorem 5.1. Denote \(q_i=u_i^{-1/2}\) \({(1\le i \le 4)}\). Then:

(o) The locally A-optimal design \(\xi ^*\) (at \(\varvec{\beta }\)) is unique.

(1) \(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{}\varvec{x}^*_2 &{}\varvec{x}^*_3\\ \sqrt{3}q_{1}/c &{}q_{2}/c &{}q_{3}/c\end{array}\right) \) if and only if

$$\begin{aligned}&(1-x_1-x_2)^2q_1^{2}+x_1^2q_2^{2}+x_2^2q_3^{2}-\frac{2}{\sqrt{3}}(1-x_1-x_2)\big ( x_1q_2+x_2q_3\big )q_1\\&\quad \le u^{-1}(\varvec{x},\varvec{\beta })\,\,\forall \varvec{x}\in [0,1]^2. \end{aligned}$$

(2) \(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{} \varvec{x}^*_2 &{}\varvec{x}^*_4\\ \sqrt{2}q_{1}/c &{}\sqrt{2}q_{2}/c &{}q_{4}/c\end{array}\right) \) if and only if

$$\begin{aligned}&\quad \quad (1-x_1)^2q_1^{2}+(x_1-x_2)^2q_2^{2}+x_2^2q_4^{2}- (x_1-x_2)\big ((1-x_1)q_1+\sqrt{2}x_2q_4\big )q_2 \\&\quad \quad \quad \le u^{-1}(\varvec{x},\varvec{\beta })\,\,\forall \varvec{x}\in [0,1]^2. \end{aligned}$$

(3) \(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{} \varvec{x}^*_3 &{}\varvec{x}^*_4\\ \sqrt{2}q_{1}/c&{}\sqrt{2}q_{3}/c &{}q_{4}/c\end{array}\right) \) if and only if

$$\begin{aligned}&\quad \quad (1-x_2)^2q_1^{2}+(x_2-x_1)^2q_3^{2}+x_1^2q_4^{2}- (x_2-x_1)\big ((1-x_2)q_1+\sqrt{2}x_1q_4\big )q_3\\&\quad \quad \quad \le u^{-1}(\varvec{x},\varvec{\beta })\,\,\forall \varvec{x}\in [0,1]^2. \end{aligned}$$

(4) \(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_2 &{} \varvec{x}^*_3 &{}\varvec{x}^*_4\\ [.5ex] \sqrt{2}q_{2}/c&{}\sqrt{2}q_{3}/c &{}\sqrt{3}q_{4}/c\end{array}\right) \) if and only if

$$\begin{aligned}&\quad \quad \quad (1-x_2)^2q_2^{2}+(1-x_1)^2q_3^{2}+(x_2+x_1-1)^2q_4^{2}+ (1-x_1)(1-x_2)q_2q_3 \\&\quad \quad \quad -2\sqrt{\frac{2}{3}}(x_1+x_2-1)\big ((1-x_2)q_2+(1-x_1)q_3\big )q_4 \\&\quad \quad \quad \le u^{-1}(\varvec{x},\varvec{\beta })\,\,\forall \varvec{x}\in [0,1]^2. \end{aligned}$$

For each case (1)–(4), the constant c appearing in the weights equals the sum of the numerators of the three ratios.

(5) Otherwise, \(\xi ^*\) is supported by the four design points \(\varvec{x}^*_1, \varvec{x}^*_2, \varvec{x}^*_3, \varvec{x}^*_4\).

Proof

We make use of condition (2.6) for \(k=1\) of the general equivalence theorem. In analogy to the proof of Theorem 5.1 for case (\(\ell \)) (\(1\le \ell \le 4\)) denote \(\varvec{F}=[\varvec{f}(\varvec{x}_i^*), \varvec{f}(\varvec{x}_j^*), \varvec{f}(\varvec{x}_k^* )]^\mathsf{T}\), \(\varvec{U}=\text{ diag }\big (u_i , u_j, u_k\big )\) and \(\varvec{\Omega }=\text{ diag }(\omega _i^*, \omega _j^*, \omega _k^*)\) such that \(1\le i<j<k\le 4\) and \( i, j, k\ne 4-\ell +1\). Then we obtain \(\varvec{C}=\bigl (\varvec{F}^{-1}\bigr )^\mathsf{T}\varvec{F}^{-1}\). An elementary calculation shows that the weights given by (3.1) for an A-optimal design coincide with the \(\omega _i^*\) (\(1\le i\le 3\)) as stated in the theorem. Now we show that the design \(\xi ^*\) is locally A-optimal if and only if the corresponding condition holds. We have

Since \(\varvec{U}^{-1/2}\varvec{\Omega }^{-1}=c\,\mathrm{diag}\bigl (c_{11}^{-1/2}, c_{22}^{-1/2},c_{33}^{-1/2}\bigr )\), we obtain

where \(\varvec{C}^*=\mathrm{diag}\bigl (c_{11}^{-1/2}, c_{22}^{-1/2},c_{33}^{-1/2}\bigr )\,\varvec{C}\,\mathrm{diag}\bigl (c_{11}^{-1/2}, c_{22}^{-1/2}, c_{33}^{-1/2}\bigr )\). So, together with condition (2.6) of the general equivalence theorem for \(k=1\) the design \(\xi ^*\) is locally A-optimal (at \(\varvec{\beta }\)) if and only if

Straightforward calculation shows that condition (5.2) is equivalent to the respective condition in Case (\(\ell \)). \(\square \)

Remark

Yang et al. (2011) developed a method to find locally optimal designs for logistic models of multiple factors. It was assumed that one factor is defined on the whole real line while the other factors belong to a compact region which seems in conflict with the experimental region given in Theorem 5.1. Then a subclass of designs was established by Loewner semi ordering of nonnegative definite matrices and so, one could focus on this subclass to derive optimal designs. A similar strategy was used in Gaffke et al. (2019) for gamma models on the experimental region \([0,1]^\nu , \nu \ge 1\). Nevertheless, it seems that this strategy may not work for a general setup of the generalized linear model. However, consider a logistic model of two factors with \(\varvec{f}(\varvec{x})=(1, x_1, x_2)^\mathsf{T}\) and intensity function \(u(\varvec{x},\varvec{\beta })=\exp (\beta _0+\beta _1x_1+\beta _2x_2)/(1+\exp (\beta _0+\beta _1x_1+\beta _2x_2))^2\). According to Yang et al. (2011) the experimental region is assumed to be \({\mathcal {X}}= [0,1]\times {\mathbb {R}}\), i.e., \(x_2\in (-\infty ,\infty )\). From Yang et al. (2011), Corollary 1, a locally D-optimal design is given by

where \(c^*\) is the maximizer of \(c^2\,\bigl ( \exp (c)/(1+\exp (c))^2\bigr )^3\). In general, \(\xi ^*\) is not covered by Theorem 5.1. In contrast to that, for a particular parameter point \(\varvec{\beta }=(\beta _0, \beta _1,\beta _2)^\mathsf{T}\) such that \(\beta _1=0\), \(\beta _2=-2\beta _0\) and \(\beta _0=c^*\) the design \(\xi ^*\) is supported by the vertices of \([0,1]^2\).

5.2 Discrete factors

Here, we assume two factors each at two levels, i.e., 0 and 1. The experimental region is given by \(\tilde{{\mathcal {X}}}=\{0,1\}^2\) which consists of the vertices of the unit rectangle \([0,1]^2\). So we write \(\tilde{{\mathcal {X}}}=\{\varvec{x}_1^*, \varvec{x}_2^*, \varvec{x}_3^*, \varvec{x}_4^*\}\).

Corollary 5.1

Consider a GLM with \(\varvec{f}(\varvec{x})=\bigl (1,x_1,x_2\bigr )^\mathsf{T}\) and the experimental region \(\tilde{{\mathcal {X}}}=\{0,1\}^2\). For a given parameter point \(\varvec{\beta }=(\beta _0,\beta _1,\beta _2)^\mathsf{T}\) let \(u_i=u(\varvec{x}^*_i,\varvec{\beta })\) (\(1\le i\le 4\)). Denote by \(u_{(1)}\le u_{(2)}\le u_{(3)}\le u_{(4)}\) the intensity values \(u_1,u_2,u_3,u_4\) rearranged in ascending order. Then:

- (i):

-

The design \(\xi ^*\) is supported by the three design points whose intensity values are given by \(u_{(2)}\), \(u_{(3)}\), \(u_{(4)}\), with equal weights 1/3 if and only if

$$\begin{aligned} u_{(2)}^{-1}+u_{(3)}^{-1}+u_{(4)}^{-1}\,\le \, u_{(1)}^{-1}. \end{aligned}$$

- (ii):

-

The design \(\xi ^*\) is supported by the four design points \(\varvec{x}^*_1,\varvec{x}^*_2,\varvec{x}^*_3,\varvec{x}^*_4\) with weights \(\omega _1^*,\omega _2^*,\omega _3^*,\omega _4^*\) which are uniquely determined by the condition

$$\begin{aligned} \omega _i^*>0\ (1\le i\le 4),\ \sum _{i=1}^4\omega _i^*=1,\ \text{ and } \ u_i\omega _i^*\bigl ({\textstyle \frac{1}{3}}-\omega _i^*\bigr )\ \ (1\le i\le 4)\ \text{ are } \text{ equal } \end{aligned}$$ (5.3)

if and only if \(u_{(2)}^{-1}+u_{(3)}^{-1}+u_{(4)}^{-1}\,>\,u_{(1)}^{-1}\).

Proof

The result follows from Theorem 5.1. The condition in part (i) is the corresponding inequality in cases (1)–(4) of Theorem 5.1, evaluated at the vertex that is not a support point of the respective \(\xi ^*\). That the quantities \(u_i\omega _i^*\bigl ({\textstyle \frac{1}{3}}-\omega _i^*\bigr )\), \( i=1, 2, 3, 4\), are equal was proved in Gaffke et al. (2019). \(\square \)

Remark

In part (i) of Corollary 5.1 the design points with the highest intensities form the support of a locally D-optimal design.
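Corollary 5.1 can be turned into a small numerical routine. The following Python sketch is only an illustration under stated assumptions: the function name `d_optimal_vertex_design` is hypothetical, and for part (ii) it uses a standard multiplicative weight update rather than a method taken from the text. The update relies on the fact that, for the four regressors \((1,x_1,x_2)^\mathsf{T}\) at the vertices, every \(3\times 3\) minor has absolute value one, so that the determinant of the information matrix is proportional to \(\prod _j u_j\omega _j \sum _i (u_i\omega _i)^{-1}\).

```python
def d_optimal_vertex_design(u, iterations=500):
    """Locally D-optimal weights on the vertices x1*, x2*, x3*, x4* of {0,1}^2.

    u holds the four intensities u_1, ..., u_4 (hypothetical helper, not from the paper).
    """
    if len(u) != 4 or min(u) <= 0:
        raise ValueError("expected four positive intensities")
    inv = [1.0 / ui for ui in u]
    lowest = max(range(4), key=lambda i: inv[i])  # vertex with the smallest intensity
    # Part (i): the three remaining inverse intensities sum to at most the largest one.
    if sum(inv) - inv[lowest] <= inv[lowest]:
        return [0.0 if i == lowest else 1.0 / 3.0 for i in range(4)]
    # Part (ii): multiplicative update w_i <- w_i * d_i / 3, where the sensitivity is
    # d_i = 1/w_i - 1/(u_i w_i^2 S) with S = sum_j 1/(u_j w_j) (assumed closed form).
    w = [0.25] * 4
    for _ in range(iterations):
        r = [1.0 / (ui * wi) for ui, wi in zip(u, w)]
        total = sum(r)
        w = [(1.0 - ri / total) / 3.0 for ri in r]
    return w
```

At a fixed point of the update the quantities \(u_i\omega _i(\frac{1}{3}-\omega _i)\) coincide, which is exactly condition (5.3).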

Theorem 5.3

Under the assumptions of Corollary 5.1 let the parameter point \({\varvec{\beta }=(\beta _0,\beta _1,\beta _2)^\mathsf{T}}\) be given with \(\beta _1=\beta _2=\beta \) which fulfills assumption (ii) of Corollary 5.1. Then the locally D-optimal design (at \(\varvec{\beta }\)) is supported by the four design points \(\varvec{x}_1^*,\varvec{x}_2^*,\varvec{x}_3^*,\varvec{x}_4^*\) with positive weights

Proof

Since assumption (ii) of Corollary 5.1 is fulfilled by a point \(\varvec{\beta }\) the design is supported by all points \(\varvec{x}^*_1\), \(\varvec{x}^*_2\), \(\varvec{x}^*_3\), \(\varvec{x}^*_4\). Then the optimal weights are obtained according to Lemma 3.1 where we have \(d_i^2=1\,(1\le i \le 4)\) and \(u_2=u_3\). Hence, the results follow. \(\square \)

Now we restrict to A-optimal designs on the set of vertices \(\tilde{{\mathcal {X}}}=\{0,1\}^2\). It can also be noted that the design points with the highest intensities form the support of a locally A-optimal design at a given parameter value.

Corollary 5.2

Consider the assumptions and notations of Corollary 5.1. Denote \(q_i=u_i^{-1/2}\) \({(1\le i \le 4)}\). Then the unique locally A-optimal design \(\xi ^*\) is as follows.

-

(1)

\(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{}\varvec{x}^*_2 &{}\varvec{x}^*_3\\ \sqrt{3}q_{1}/c &{}q_{2}/c &{}q_{3}/c\end{array}\right) \) if and only if

$$\begin{aligned} q_{1}^{2} + q_{2}^{2} + q_{3}^{2} + \frac{2}{\sqrt{3}}q_{1}(q_{2} + q_{3})\le q_{4}^{2}. \end{aligned}$$ -

(2)

\(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{} \varvec{x}^*_2 &{}\varvec{x}^*_4\\ \sqrt{2}q_{1}/c &{}\sqrt{2}q_{2}/c &{}q_{4}/c\end{array}\right) \) if and only if

$$\begin{aligned} q_{1}^{2} + q_{2}^{2} + q_{4}^{2} + q_{1}q_{2} + \sqrt{2}q_{2}q_{4}\le q_{3}^{2}. \end{aligned}$$ -

(3)

\(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{} \varvec{x}^*_3 &{}\varvec{x}^*_4\\ \sqrt{2}q_{1}/c&{}\sqrt{2}q_{3}/c &{}q_{4}/c\end{array}\right) \) if and only if

$$\begin{aligned} q_{1}^{2}+q_{3}^{2}+q_{4}^{2}+ q_{1}q_{3}+\sqrt{2}q_{3}q_{4}\le q_{2}^{2}. \end{aligned}$$ -

(4)

\(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_2 &{} \varvec{x}^*_3 &{}\varvec{x}^*_4\\ \sqrt{2}q_{2}/c&{}\sqrt{2}q_{3}/c &{}\sqrt{3}q_{4}/c\end{array}\right) \) if and only if

$$\begin{aligned} q_{2}^{2}+q_{3}^{2}+q_{4}^{2}+ q_{2}q_{3}+2\sqrt{\frac{2}{3}} q_{4}(q_{2}+ q_{3})\le q_{1}^{2}. \end{aligned}$$

For each case (1)–(4), the constant \(c\) appearing in the weights equals the sum of the numerators of the three ratios.

-

(5)

Otherwise, \(\xi ^*\) is supported by the four design points \(\varvec{x}^*_1, \varvec{x}^*_2, \varvec{x}^*_3, \varvec{x}^*_4\).

As the optimal weights of the A-optimal designs depend on the model parameters each condition provided in the theorem characterizes a subregion of the parameter space where the corresponding designs with the same support are A-optimal.
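The case distinction of Corollary 5.2 can be evaluated mechanically. The Python sketch below is a minimal illustration, assuming the intensities \(u_1,\dots ,u_4\) are supplied in the vertex order \(\varvec{x}^*_1,\dots ,\varvec{x}^*_4\); the function name `a_optimal_saturated_design` is hypothetical. It returns the weights of the saturated design whose condition holds, or `None` in case (5), where the four-point weights are not given in closed form.

```python
import math

def a_optimal_saturated_design(u):
    """Weights of the saturated locally A-optimal design of Corollary 5.2, or None."""
    if len(u) != 4 or min(u) <= 0:
        raise ValueError("expected four positive intensities")
    q1, q2, q3, q4 = (1.0 / math.sqrt(ui) for ui in u)  # q_i = u_i^{-1/2}
    r2, r3 = math.sqrt(2.0), math.sqrt(3.0)
    # Each entry: (support indices, weight numerators, l.h.s., r.h.s.) of cases (1)-(4).
    cases = [
        ((0, 1, 2), (r3 * q1, q2, q3),
         q1**2 + q2**2 + q3**2 + (2.0 / r3) * q1 * (q2 + q3), q4**2),
        ((0, 1, 3), (r2 * q1, r2 * q2, q4),
         q1**2 + q2**2 + q4**2 + q1 * q2 + r2 * q2 * q4, q3**2),
        ((0, 2, 3), (r2 * q1, r2 * q3, q4),
         q1**2 + q3**2 + q4**2 + q1 * q3 + r2 * q3 * q4, q2**2),
        ((1, 2, 3), (r2 * q2, r2 * q3, r3 * q4),
         q2**2 + q3**2 + q4**2 + q2 * q3 + 2.0 * math.sqrt(2.0 / 3.0) * q4 * (q2 + q3),
         q1**2),
    ]
    for support, numerators, lhs, rhs in cases:
        if lhs <= rhs:
            c = sum(numerators)  # normalizing constant of the weights
            weights = [0.0] * 4
            for index, numerator in zip(support, numerators):
                weights[index] = numerator / c
            return weights
    return None  # case (5): all four vertices are needed
```

For equal intensities none of the four conditions holds, so the sketch returns `None`, in line with the four-point design of the ordinary linear model.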

6 Multiple regression model

6.1 Model with intercept

Consider a first order model of multiple factors

Here, we are interested in providing an extension of locally D- and A-optimal designs with support \((0,0)^\mathsf{T}, (1,0)^\mathsf{T}, (0,1)^\mathsf{T}\) that are given in part (1) of Theorems 5.1 and 5.2.

Theorem 6.1

Consider model (6.1) with experimental region \({\mathcal {X}}=[0,1]^\nu \), where \(\nu \ge 2\). Define particular design points by

For a given parameter point \(\varvec{\beta }=(\beta _0,\beta _1,\ldots ,\beta _\nu )^\mathsf{T}\) let \(u_i=u(\varvec{x}_i^*,\varvec{\beta })\,(1 \le i \le \nu +1)\). Then the design \(\xi ^*\) which assigns equal weights \(1/(\nu +1)\) to the design points \(\varvec{x}_i^*\) for all \(\,(1 \le i \le \nu +1)\) is locally D-optimal (at \(\varvec{\beta }\)) if and only if

Proof

Define the \((\nu +1)\times (\nu +1)\) design matrix \(\varvec{F}=\bigl [\varvec{f}(\varvec{x}_1^*),\ldots ,\varvec{f}(\varvec{x}_{\nu +1}^*)\bigr ]^\mathsf{T}\), then

We have

where \(\varvec{0}_{1\times \nu }\), \(\varvec{1}_{\nu \times 1}\), and \(\varvec{I}_\nu \) denote the \(\nu \)-dimensional row vector of zeros, the \(\nu \)-dimensional column vector of ones, and the \(\nu \times \nu \) unit matrix, respectively. So, by condition (2.6) of the general equivalence theorem for \(k=0\) the design is locally D-optimal if and only if

The l.h.s. of (6.4) reads as

and hence it is obvious that (6.4) is equivalent to (6.2). \(\square \)

Remark

The D-optimal design under a two-factor model with support \((0,0)^\mathsf{T}\), \((1,0)^\mathsf{T}\), \((0,1)^\mathsf{T}\) from Theorem 5.1, part (1), is covered by Theorem 6.1 for \(\nu =2\). It is clear that condition (6.2) for \(\nu =2\) is equivalent to the inequality \((1-x_1-x_2)^2u_{1}^{-1}+x_1^2 u_{2}^{-1}+x_2^{2}u_{3}^{-1}\le u^{-1}(\varvec{x},\varvec{\beta })\,\,\forall \varvec{x}\in [0,1]^2\).

In analogy to Theorem 6.1 we present locally A-optimal designs in the next theorem.

Theorem 6.2

Consider the assumptions and notations of Theorem 6.1. Denote \(q_i=u_i^{-1/2}\) \({(1\le i \le \nu +1)}\). Then the design \(\xi ^*\) which is supported by \(\varvec{x}_i^*\,(1\le i \le \nu +1)\) with weights

is locally A-optimal (at \(\varvec{\beta }\)) if and only if for all \(\varvec{x}=(x_1,\ldots ,x_\nu )^\mathsf{T}\in \bigl [0,1\bigr ]^\nu \)

Proof

As in the proof of Theorem 6.1 the design matrix \(\varvec{F}\) and its inverse are given by (6.3) and we obtain

This yields \(\sqrt{c_{11}/u_1}=\sqrt{\nu +1}q_1\) and \(\sqrt{c_{ii}/u_i}=q_i\) for \(i=2,\ldots ,\nu +1\) according to (3.1) in Sect. 3 with \(p=\nu +1\). An elementary calculation shows that the weights given by (3.1) for an A-optimal design coincide with the \(\omega _i^*\) (\(1\le i\le p\)) as stated in the theorem. Now we show that the design \(\xi ^*\) is locally A-optimal if and only if (6.5) holds. Let \(\varvec{U}=\mathrm{diag}\bigl (u_1,\ldots ,u_p\bigr )\), \(\varvec{\Omega }=\mathrm{diag}\bigl (\omega _1^*,\ldots ,\omega _p^*\bigr )\) and \(\varvec{V}=\varvec{\Omega }\varvec{U}\). Then we have

Since \(\varvec{U}^{-1/2}\varvec{\Omega }^{-1}=c\,\mathrm{diag}\bigl (c_{11}^{-1/2},\ldots ,c_{pp}^{-1/2}\bigr )\), we obtain

where \(\varvec{C}^*=\mathrm{diag}\bigl (c_{11}^{-1/2},\ldots ,c_{pp}^{-1/2}\bigr )\,\varvec{C}\,\mathrm{diag}\bigl (c_{11}^{-1/2},\ldots ,c_{pp}^{-1/2}\bigr )\). So, together with condition (2.6) of the general equivalence theorem for \(k=1\) the design \(\xi ^*\) is locally A-optimal (at \(\varvec{\beta }\)) if and only if

Straightforward calculation shows that condition (6.6) is equivalent to condition (6.5). \(\square \)

Remark

Theorem 6.2 with \(\nu =2\) covers the result stated in part (1) of Theorem 5.2. It can be seen that with the notations of Theorem 5.2, the inequality \(q_{1}^{2}+q_{2}^{2}+q_{3}^{2}+\frac{2}{\sqrt{3}}q_{1}q_{2}+\frac{2}{\sqrt{3}}q_{1}q_{3}\le q_{4}^{2}\) is equivalent to condition (6.5) of Theorem 6.2 for \(\nu =2\).
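The weights of Theorem 6.2 can be computed directly from the intensities. The sketch below reads the weights off the proof, where \(\sqrt{c_{11}/u_1}=\sqrt{\nu +1}\,q_1\) and \(\sqrt{c_{ii}/u_i}=q_i\) for \(i\ge 2\), and normalizes them to sum to one; this reading and the function name `a_optimal_simplex_weights` are assumptions made for illustration.

```python
import math

def a_optimal_simplex_weights(u):
    """A-optimal weights on the origin and the nu unit vectors (sketch).

    u = (u_1, ..., u_{nu+1}); u_1 belongs to the origin, u_{i+1} to the unit vector e_i.
    """
    if len(u) < 3 or min(u) <= 0:
        raise ValueError("expected nu + 1 >= 3 positive intensities")
    nu = len(u) - 1
    q = [1.0 / math.sqrt(ui) for ui in u]          # q_i = u_i^{-1/2}
    numerators = [math.sqrt(nu + 1.0) * q[0]] + q[1:]
    c = sum(numerators)                            # normalizing constant
    return [n / c for n in numerators]
```

For \(\nu =2\) the result agrees with the weights \(\sqrt{3}q_1/c\), \(q_2/c\), \(q_3/c\) of part (1) of Corollary 5.2.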

6.2 Model without intercept

Consider a model of multiple factors and without intercept. We assume a first order model

The experimental region \({\mathcal {X}}\) has an arbitrary form. Locally optimal designs will be derived under Kiefer’s \(\Phi _k\)-criteria. The support points are located at the boundary of \({\mathcal {X}}\) and the optimal weights are obtained according to Lemma 3.2.

Theorem 6.3

Consider model (6.7) on an experimental region \({\mathcal {X}}\). Let a vector \(\varvec{a}=(a_1,\dots ,a_\nu )^\mathsf{T}\) be given such that \(a_i>0\,\,(1\le i\le \nu )\). Denote the design points by \(\varvec{x}_i^*=a_i\varvec{e}_i\,\,(1\le i\le \nu )\) that are assumed to belong to \({\mathcal {X}}\). For a given parameter point \(\varvec{\beta }\) let \(u_i=u(\varvec{x}_{i}^*,\varvec{\beta })\) \((1\le i\le \nu )\). Let k with \(0\le k<\infty \) be given. Let \(\xi _{\varvec{a}}^*\) be the saturated design whose support consists of the points \(\varvec{x}_i^*\) \((1\le i\le \nu )\) with the corresponding weights

Then \(\xi _{\varvec{a}}^*\) is locally \(\Phi _k\)-optimal (at \(\varvec{\beta }\)) if and only if

Proof

Define the \(\nu \times \nu \) design matrix \(\varvec{F}=\mathrm {diag}(a_i)_{i=1}^\nu \) with the \(\nu \times \nu \) weight matrix

Then we have

Adopting these formulas simplifies the l.h.s. of condition (2.6) of the general equivalence theorem to \(u(\varvec{x},\varvec{\beta })\Big (\sum _{j=1}^{\nu }(a_{j}^{2}u_{j})^\frac{-k}{k+1}\Big )^{k+1}\sum _{i=1}^{\nu }u_{i}^{-1}a_{i}^{-2}x_i^2\) which is bounded by \(\Big (\sum _{j=1}^{\nu }(a_{j}^{2}u_{j})^\frac{-k}{k+1}\Big )^{k+1}\) if and only if condition (6.8) holds true. \(\square \)

The optimality condition (6.8) does not depend on the value of k. However, from Theorem 6.3 the locally D-optimal design (\(k=0\)) has weights \(\omega _i^*=1/\nu \,\, (1\le i\le \nu )\) and the locally A-optimal design (\(k=1\)) has weights \(\textstyle {\omega _i^*=(a_i^2u_{i})^{-1/2}/\sum _{j=1}^{\nu }(a_j^2u_{j})^{-1/2}}\) \((1\le i\le \nu )\).
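A short Python sketch makes the dependence of the weights on \(k\) explicit. It assumes the general weights are \(\omega _i^*\propto (a_i^2u_i)^{-k/(k+1)}\), which is consistent with the two special cases just stated and with the expression in the proof; the left-hand side of (6.8) is taken as \(u(\varvec{x},\varvec{\beta })\sum _i u_i^{-1}a_i^{-2}x_i^2\), as read off the proof. Both function names are hypothetical.

```python
def phi_k_weights(a, u, k):
    """Weights of the saturated design on a_i * e_i for Kiefer's Phi_k-criterion (sketch)."""
    if k < 0:
        raise ValueError("k must be nonnegative")
    exponent = -k / (k + 1.0)
    numerators = [(ai * ai * ui) ** exponent for ai, ui in zip(a, u)]
    total = sum(numerators)
    return [n / total for n in numerators]

def condition_68_lhs(x, u_x, a, u):
    """u(x, beta) * sum_i x_i^2 / (a_i^2 u_i); optimality requires this to be <= 1."""
    return u_x * sum(xi * xi / (ai * ai * ui) for xi, ai, ui in zip(x, a, u))
```

At a support point \(\varvec{x}=a_i\varvec{e}_i\) the second function returns one, reflecting that the bound of the equivalence theorem is attained on the support.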

7 Applications

In this section, we give a discussion on the application of the previous results for the generalized linear models. Here, emphasis will be laid on gamma and Poisson models. However, it is known that the linear regression model is a GLM. Therefore, to begin with, we briefly focus on the \(\Phi _k\)-optimality under a non-intercept linear model with \({\varvec{f}(\varvec{x})=(x_1,\dots ,x_\nu )^\mathsf{T}}\) on the continuous experimental region \({{\mathcal {X}}=[0,1]^\nu ,\,\nu \ge 2}\). Here, \(u(\varvec{x},\varvec{\beta })=1\) for all \(\varvec{x}\in {\mathcal {X}}\) so the information matrices in a linear model are independent of \(\varvec{\beta }\). Note that Theorem 6.3 does not cover a non-intercept linear model on \({\mathcal {X}}\) since condition (6.8) does not hold true for \(\nu \ge 2\). However, the l.h.s. of condition (2.6) of the general equivalence theorem under linear models, i.e., when \(u(\varvec{x},\varvec{\beta })=1\), is strictly convex and it attains its maximum at some vertices of \({\mathcal {X}}\). Thus the support of any \(\Phi _k\)(or D, A)-optimal design is a subset of \(\{0,1\}^\nu \). As a result, in particular for D- and A-optimality, one might apply the results of Theorem 3.1 in Huda and Mukerjee (1988), which were obtained under linear models on \(\{0,1\}^\nu \).

-

For an odd number of factors \(\nu =2q+1,\,\,q\in {\mathbb {N}}\), the equally weighted design \(\xi ^*\) supported by all \(\varvec{x}^*=(x_1,\dots ,x_\nu )\in \{0,1\}^\nu \) such that \(\sum _{i=1}^{\nu }x_i=q+1\) is both D- and A-optimal.

-

For even numbers of factors \(\nu =2q,\,\,q\in {\mathbb {N}}\), the equally weighted design \(\xi ^*\) supported by all \(\varvec{x}^*=(x_1,\dots ,x_\nu )\in \{0,1\}^\nu \) such that \(\sum _{i=1}^{\nu }x_i=q\) or \(\sum _{i=1}^{\nu }x_i=q+1\) is D-optimal. Moreover, the design \(\xi ^*\) which assigns equal weights to all points \(\varvec{x}^*=(x_1,\dots ,x_\nu )\in \{0,1\}^\nu \) such that \(\sum _{i=1}^{\nu }x_i=q\) is A-optimal.
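The two statements above can be checked numerically for small \(\nu \). The following sketch assumes that, for the linear model with \(u\equiv 1\), condition (2.6) takes the familiar forms \(\varvec{x}^\mathsf{T}\varvec{M}^{-1}\varvec{x}\le \nu \) for D-optimality and \(\varvec{x}^\mathsf{T}\varvec{M}^{-2}\varvec{x}\le \mathrm{tr}(\varvec{M}^{-1})\) for A-optimality, and that it suffices to check the vertices, as argued above. All function names are hypothetical, and the checks are only illustrated for \(\nu =3\) and \(\nu =4\).

```python
from itertools import product

def inverse(matrix):
    """Gauss-Jordan inverse with partial pivoting (small dense matrices only)."""
    n = len(matrix)
    aug = [list(map(float, row)) + [float(i == j) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def layer_design(nu, layers):
    """Vertices of {0,1}^nu whose coordinate sum lies in `layers`; equal weights implied."""
    return [x for x in product((0, 1), repeat=nu) if sum(x) in layers]

def information_matrix(points):
    """Information matrix of the equally weighted design for f(x) = x and u = 1."""
    nu, weight = len(points[0]), 1.0 / len(points)
    return [[weight * sum(x[i] * x[j] for x in points) for j in range(nu)]
            for i in range(nu)]

def quadratic_form(x, a):
    n = len(x)
    return sum(x[i] * a[i][j] * x[j] for i in range(n) for j in range(n))

def is_d_optimal(points, tol=1e-9):
    nu = len(points[0])
    m_inv = inverse(information_matrix(points))
    return all(quadratic_form(x, m_inv) <= nu + tol
               for x in product((0, 1), repeat=nu))

def is_a_optimal(points, tol=1e-9):
    nu = len(points[0])
    m_inv = inverse(information_matrix(points))
    m_inv2 = [[sum(m_inv[i][k] * m_inv[k][j] for k in range(nu)) for j in range(nu)]
              for i in range(nu)]
    trace = sum(m_inv[i][i] for i in range(nu))
    return all(quadratic_form(x, m_inv2) <= trace + tol
               for x in product((0, 1), repeat=nu))
```

For example, `is_d_optimal(layer_design(3, {2}))` checks the odd case with \(q=1\), and `is_a_optimal(layer_design(4, {2}))` checks the A-optimal design of the even case with \(q=2\).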

7.1 Gamma model

A gamma model is given by

Here, \(\kappa \) is the shape parameter of the gamma distribution, which is assumed to be fixed and positive. The mean \(\mu (\varvec{x},\varvec{\beta })\) of the gamma distribution is positive for all \(\varvec{x}\in {\mathcal {X}}\). The parameter space, i.e., the set of all admissible parameter vectors \(\varvec{\beta }\), is determined by the assumption \(\varvec{f}^\mathsf{T}(\varvec{x})\varvec{\beta }>0\) for all \(\varvec{x}\in {\mathcal {X}}\).

Let the experimental region be the cube \({\mathcal {X}}=[0,1]^\nu ,\nu >1\). In Gaffke et al. (2019) we showed that the locally optimal designs under a first order gamma model \(\varvec{f}(\varvec{x})=(1, x_1, \dots , x_\nu )^\mathsf{T}\) are only supported by the vertices of the cube \([0,1]^\nu \). Therefore, in the following we focus on the set of vertices \(\{0,1\}^\nu \).

In what follows, firstly we consider a two-factor gamma model with the linear predictor \(\eta (\varvec{x},\varvec{\beta })=\beta _0+\beta _1x_1+\beta _2x_2\) and experimental region \({\mathcal {X}}=[0,1]^2\). Denote \(\varvec{x}^*_1=(0,0)^\mathsf{T}\), \(\varvec{x}^*_2=(1,0)^\mathsf{T}\), \(\varvec{x}^*_3=(0,1)^\mathsf{T}\) and \(\varvec{x}^*_4=(1,1)^\mathsf{T}\). Let \(u_k=u(\varvec{x}^*_k,\varvec{\beta })\) (\(1\le k\le 4\)), i.e.,

In view of Corollaries 5.1 and 5.2 the following explicit results are immediate.

Corollary 7.1

Consider a gamma model with \(\varvec{f}(\varvec{x})=\bigl (1,x_1,x_2\bigr )^\mathsf{T}\) and the experimental region \({\mathcal {X}}=[0,1]^2\). Let \(\varvec{\beta }=(\beta _0,\beta _1,\beta _2)^\mathsf{T}\) be a parameter point such that \(\beta _0>0\), \(\beta _0+\beta _1>0\), \(\beta _0+\beta _2>0\), and \(\beta _0+\beta _1+\beta _2>0\). Then the unique locally D-optimal design \(\xi ^*\) (at \(\varvec{\beta }\)) is as follows.

-

(1)

\(\xi ^*\) assigns equal weights 1/3 to \(\varvec{x}^*_1,\varvec{x}^*_2,\varvec{x}^*_3\) if and only if \(\beta _0^2-\beta _1\beta _2\le 0 \).

-

(2)

\(\xi ^*\) assigns equal weights 1/3 to \(\varvec{x}^*_1,\varvec{x}^*_2,\varvec{x}^*_4\) if and only if \((\beta _0+\beta _1)^2+\beta _1\beta _2\le 0\).

-

(3)

\(\xi ^*\) assigns equal weights 1/3 to \(\varvec{x}^*_1,\varvec{x}^*_3,\varvec{x}^*_4\) if and only if \((\beta _0+\beta _2)^2+\beta _1\beta _2\le 0\).

-

(4)

\(\xi ^*\) assigns equal weights 1/3 to \(\varvec{x}^*_2,\varvec{x}^*_3,\varvec{x}^*_4\) if and only if \(\beta _0^2+\beta _1^2+\beta _2^2+\beta _1\beta _2+2\beta _0(\beta _1+\beta _2)\le 0\).

-

(5)

Otherwise, \(\xi ^*\) is supported by the four points \(\varvec{x}^*_1\), \(\varvec{x}^*_2\), \(\varvec{x}^*_3\), \(\varvec{x}^*_4\).

Proof

In view of Corollary 5.1, part (i), straightforward computations show that the corresponding conditions of parts (1)–(4) are equivalent to \(u_1^{-1}+u_2^{-1}+u_3^{-1}\le \, u_4^{-1}\), \(u_1^{-1}+u_2^{-1}+u_4^{-1}{\le \,u_3^{-1}}\), \(u_1^{-1}+u_3^{-1}+u_4^{-1}\le \,u_2^{-1}\), and \(u_2^{-1}+u_3^{-1}+u_4^{-1}\le \,u_1^{-1}\), respectively.
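The equivalence used in this proof can be illustrated numerically. The sketch below assumes the gamma intensity \(u(\varvec{x},\varvec{\beta })=(\varvec{f}^\mathsf{T}(\varvec{x})\varvec{\beta })^{-2}\), so that \(u_k^{-1}\) is the squared linear predictor at \(\varvec{x}^*_k\). The first function classifies \(\varvec{\beta }\) by the explicit conditions of Corollary 7.1, the second by the inequalities of Corollary 5.1; both names are hypothetical.

```python
def gamma_d_case(b0, b1, b2):
    """Case (1)-(5) of Corollary 7.1 from the explicit parameter conditions."""
    etas = [b0, b0 + b1, b0 + b2, b0 + b1 + b2]
    if min(etas) <= 0:
        raise ValueError("beta lies outside the parameter space")
    conditions = [
        (1, b0**2 - b1 * b2),
        (2, (b0 + b1)**2 + b1 * b2),
        (3, (b0 + b2)**2 + b1 * b2),
        (4, b0**2 + b1**2 + b2**2 + b1 * b2 + 2.0 * b0 * (b1 + b2)),
    ]
    for case, value in conditions:
        if value <= 0:
            return case
    return 5

def gamma_d_case_from_intensities(b0, b1, b2):
    """Same classification via u_k^{-1} = eta_k^2 (assumed gamma intensity)."""
    inv_u = [eta**2 for eta in (b0, b0 + b1, b0 + b2, b0 + b1 + b2)]
    total = sum(inv_u)
    # Case l omits the vertex x*_{5-l}; indices below are zero-based.
    for case, omitted in ((1, 3), (2, 2), (3, 1), (4, 0)):
        if total - inv_u[omitted] <= inv_u[omitted]:
            return case
    return 5
```

Away from the boundaries of the subregions, both functions return the same case for any admissible \(\varvec{\beta }\).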

Remark

According to Corollary 7.1, part (5), the subregion where a four-point design is D-optimal has been determined by computer algebra and is given below.

-

\(-\beta _0<\beta _1<0\) and \( \frac{1}{2}\big ( \sqrt{-(3\beta _1^2+4\beta _0\beta _1)}-(\beta _1+2\beta _0)\big )<\beta _2<-(\beta _1+\beta _0)^2/\beta _1\).

-

\(\beta _1=0\) and \(\beta _2>-\beta _0\).

-

\(\beta _1>0\) and \( \frac{1}{2}\big ( \sqrt{4\beta _0\beta _1+\beta _1^2}-(\beta _1+2\beta _0)\big )<\beta _2<\beta _0^2/\beta _1\).

On each subregion the optimal weights of a D-optimal design depend on the parameter values.

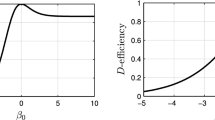

Since \(\beta _0>0\), define the ratios \(\gamma _1=\beta _1/\beta _0\) and \(\gamma _2=\beta _2/\beta _0\). Then we have \(\gamma _1>-1\), \(\gamma _2>-1\) and \(\gamma _1+\gamma _2>-1\). The conditions for the D-optimal designs given in Corollary 7.1 are homogeneous of degree two in \(\varvec{\beta }\), so without loss of generality they can be written in terms of \(\gamma _1\) and \(\gamma _2\). In the left panel of Fig. 1 the parameter subregions of \(\gamma _1\) and \(\gamma _2\) are depicted where the designs given by Corollary 7.1 are locally D-optimal. In particular, the design with support \(\varvec{x}^*_1,\varvec{x}^*_2,\varvec{x}^*_3\) is locally D-optimal over the largest subregion, which corresponds to large positive values of \(\gamma _1\) and \(\gamma _2\). The diagonal line represents the case of equal effects \(\beta _1=\beta _2=\beta \) where \( \beta >-(1/2)\beta _0\). In particular, in the case \(-(1/3)\beta _0< \beta <\beta _0\), i.e., \(\gamma =\beta /\beta _0\in (-1/3,1)\), the design is supported by the four design points with optimal weights

These weights as functions of \(\gamma \) are exhibited in Fig. 2. Obviously, the weights are positive over the respective domain \(\gamma \in (-1/3,1)\) and \(1/4\le \omega _2^*=\omega _3^* \le 1/3\). The design \(\xi ^*\) at \(\gamma =0\) assigns uniform weights 1/4 to the set of points \(\{\varvec{x}^*_1,\varvec{x}^*_2,\varvec{x}^*_3,\varvec{x}^*_4\}\). This case is equivalent to an ordinary linear regression model with two binary factors. At the limits of \((-1/3,1)\) the D-optimal four-point design becomes a D-optimal saturated design. This means that at \(\gamma =-1/3\) we have \(\omega _1^*=0\) and at \(\gamma =1\) we have \(\omega _4^*=0\).

Fig. 1 Dependence of optimal designs under gamma models on \(\varvec{\beta }\); Left panel: D-optimal designs. Right panel: A-optimal designs. \(\mathrm {supp}(\xi ^*_{ijk})=\{\varvec{x}^*_i,\varvec{x}^*_j,\varvec{x}^*_k\}\subset \{\varvec{x}^*_1,\varvec{x}^*_2,\varvec{x}^*_3,\varvec{x}^*_4\}\) and \(\mathrm {supp}(\xi ^*_{1234})=\{\varvec{x}^*_1,\varvec{x}^*_2,\varvec{x}^*_3,\varvec{x}^*_4\}\). The diagonal dashed line is \(\gamma _2=\gamma _1\) where \(\gamma _i=\beta _i/\beta _0, i=1,2\)

Corollary 7.2

Under the assumptions and notation of Corollary 7.1, the unique locally A-optimal design \(\xi ^*\) (at \(\varvec{\beta }\)) is as follows.

-

(1)

\(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{} \varvec{x}^*_2 &{}\varvec{x}^*_3\\ \sqrt{3}\beta _0/c &{} (\beta _0+\beta _1)/c &{} (\beta _0+\beta _2)/c \end{array}\right) \) if and only if

$$\begin{aligned} (1+2/\sqrt{3})\beta _0^2+(1/\sqrt{3})\beta _0(\beta _1+\beta _2)-\beta _1\beta _2\le 0. \end{aligned}$$ -

(2)

\(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{} \varvec{x}^*_2 &{}\varvec{x}^*_4\\ \sqrt{2}\beta _0/c &{} \sqrt{2}(\beta _0+\beta _1)/c &{} (\beta _0+\beta _1+\beta _2)/c \end{array}\right) \) if and only if

$$\begin{aligned} (3+\sqrt{2})\beta _0^2+(2+\sqrt{2})(\beta _1^2+\beta _1\beta _2)+(5+2\sqrt{2})\beta _0\beta _1+\sqrt{2}\beta _0\beta _2\le 0. \end{aligned}$$ -

(3)

\(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{} \varvec{x}^*_3 &{}\varvec{x}^*_4\\ \sqrt{2}\beta _0/c &{} \sqrt{2}(\beta _0+\beta _2)/c &{} (\beta _0+\beta _1+\beta _2)/c\end{array}\right) \) if and only if

$$\begin{aligned} (3+\sqrt{2})\beta _0^2+(2+\sqrt{2})(\beta _2^2+\beta _1\beta _2)+(5+2\sqrt{2})\beta _0\beta _2+\sqrt{2}\beta _0\beta _1\le 0. \end{aligned}$$ -

(4)

\(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_2 &{} \varvec{x}^*_3 &{}\varvec{x}^*_4\\ \sqrt{2}(\beta _0{+}\beta _1)/c &{} \sqrt{2}(\beta _0{+}\beta _2)/c &{} \sqrt{3}(\beta _0{+}\beta _1{+}\beta _2)/c\end{array}\right) \) if and only if

$$\begin{aligned} (3{+}4\sqrt{2/3})(\beta _0^2{+}\beta _1\beta _2){+}2(1+\sqrt{2/3})(\beta _1^2{+}\beta _2^2)+(5+6\sqrt{2/3})\beta _0(\beta _1+\beta _2)\le 0. \end{aligned}$$

For each case (1)–(4), the constant \(c\) appearing in the weights equals the sum of the numerators of the three ratios.

-

(5)

Otherwise, \(\xi ^*\) is supported by the four design points \(\varvec{x}^*_1, \varvec{x}^*_2, \varvec{x}^*_3, \varvec{x}^*_4\).

Proof

The result follows from Corollary 5.2 by denoting \(u_k=u(\varvec{x}^*_k,\varvec{\beta })\) (\(1\le k\le 4\)) and \(q_i=u_i^{-1/2}\,\,(1\le i \le 4)\). \(\square \)

Again, the conditions for the A-optimal designs can be written in terms of the ratios \(\gamma _1=\beta _1/\beta _0\) and \(\gamma _2=\beta _2/\beta _0\), \(\beta _0>0\). In the right panel of Fig. 1 the parameter subregions of \(\gamma _1\) and \(\gamma _2\) are depicted where the designs given by Corollary 7.2 are locally A-optimal. Compared with the left panel for D-optimality, a similar interpretation applies. In particular, the largest subregion of parameter points belongs to the A-optimal designs with support \(\{\varvec{x}^*_1,\varvec{x}^*_2,\varvec{x}^*_3\}\).

Remark

For the multiple-factor gamma model \(\varvec{f}(\varvec{x})=(1, x_1, \dots , x_\nu )^\mathsf{T}\) on the experimental region \({\mathcal {X}}=[0,1]^\nu ,\nu \ge 2\), the result of Burridge and Sebastiani (1994) can be applied. It was shown that the design which assigns equal weights \(1/(\nu +1)\) to the design points \(\varvec{x}_1^*=(0,\dots ,0)^\mathsf{T},\ \ \varvec{x}_2^*=(1,\dots ,0)^\mathsf{T},\ \ldots ,\ \varvec{x}_{\nu +1}^*=(0,\dots ,1)^\mathsf{T}\) is locally D-optimal for a given \(\varvec{\beta }=(\beta _0, \beta _1, \dots , \beta _\nu )^\mathsf{T}\) if and only if \(\beta _0^2\le \beta _i\beta _j,\,1\le i<j\le \nu \). This result can be considered as a special case of Theorem 6.1. Note that for \(\nu =2\) this result is also covered by part (1) of Corollary 7.1.
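The stated equivalence can be illustrated on the vertices of the cube. The sketch below assumes the gamma intensity \(u(\varvec{x},\varvec{\beta })=(\varvec{f}^\mathsf{T}(\varvec{x})\varvec{\beta })^{-2}\) and the \(\nu \)-factor analogue of the inequality in the remark after Theorem 6.1, namely \((1-\sum _i x_i)^2u_1^{-1}+\sum _i x_i^2u_{i+1}^{-1}\le u^{-1}(\varvec{x},\varvec{\beta })\). Only the vertices are checked, which relies on the vertex-support result for gamma models cited above. The function names are hypothetical.

```python
from itertools import product

def gamma_vertex_condition_holds(beta, tol=1e-9):
    """Check the assumed form of the Theorem 6.1 condition on all vertices of [0,1]^nu."""
    b0, slopes = beta[0], beta[1:]
    for x in product((0, 1), repeat=len(slopes)):
        eta = b0 + sum(b * xi for b, xi in zip(slopes, x))
        if eta <= 0:
            raise ValueError("beta lies outside the parameter space")
        # u_1^{-1} = b0^2 and u_{i+1}^{-1} = (b0 + beta_i)^2 under the assumed intensity.
        lhs = (1 - sum(x))**2 * b0**2 + sum(xi**2 * (b0 + b)**2
                                            for b, xi in zip(slopes, x))
        if lhs > eta**2 + tol:
            return False
    return True

def pairwise_condition_holds(beta):
    """The condition beta_0^2 <= beta_i * beta_j for all i < j."""
    b0, slopes = beta[0], beta[1:]
    return all(b0**2 <= slopes[i] * slopes[j]
               for i in range(len(slopes)) for j in range(i + 1, len(slopes)))
```

For instance, \(\varvec{\beta }=(1,2,2,2)^\mathsf{T}\) satisfies both checks, whereas \(\varvec{\beta }=(1,0.5,1,2)^\mathsf{T}\) violates both because \(\beta _1\beta _2<\beta _0^2\).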

Now consider a non-intercept gamma model with multiple factors. The linear predictor can be written as \(\eta (\varvec{x},\varvec{\beta })=\varvec{x}^\mathsf{T}\varvec{\beta }=\sum _{i=1}^\nu \beta _ix_i\). Let the experimental region be given by \({\mathcal {X}}=[0,\infty )^\nu \setminus \{\varvec{0}\}\) with intensity \(u(\varvec{x},\varvec{\beta })=(\varvec{x}^\mathsf{T}\varvec{\beta })^{-2}\) for all \(\varvec{x}\in {\mathcal {X}}\). The parameter space is determined by \(\varvec{\beta }\in (0,\infty )^\nu \), i.e., \(\beta _i>0\) for all (\(1 \le i \le \nu \)). From Theorem 6.3 the following corollary is obtained for the gamma model without intercept.

Corollary 7.3

Consider a non-intercept gamma model with \(\varvec{f}(\varvec{x})=\varvec{x}\) on the experimental region \({{\mathcal {X}}=[0,\infty )^\nu \setminus \{\varvec{0}\}}\) and intensity \(u(\varvec{x},\varvec{\beta })=(\varvec{x}^\mathsf{T}\varvec{\beta })^{-2}\) for all \(\varvec{x}\in {\mathcal {X}}\). For a given vector \(\varvec{a}=(a_1,\dots ,a_\nu )^\mathsf{T}\) where \(a_i>0\,\,(1\le i\le \nu )\) let \({\varvec{x}_i^*=a_i\varvec{e}_i}\) for all \(i=1,\dots , \nu \). Let k with \(0\le k <\infty \) be given. For a given parameter point \(\varvec{\beta }\in (0,\infty )^\nu \) let \(\xi _{\varvec{a}}^*\) be the saturated design whose support consists of the points \(\varvec{x}_i^*\) \((1\le i\le \nu )\) with the corresponding weights

Then \(\xi _{\varvec{a}}^*\) is locally \(\Phi _k\)-optimal (at \(\varvec{\beta }\)).

Proof

Let \(u_i=u(\varvec{x}_i^*,\varvec{\beta })\,\,(1\le i \le \nu )\). Thus \(u_i=(a_i\beta _i)^{-2}\,\,(1\le i \le \nu )\). Then condition (6.8) of Theorem 6.3 is equivalent to \(-2\sum _{i<j=1}^{\nu }\beta _i\beta _jx_ix_j\le 0\) for all \(\varvec{x} \in {\mathcal {X}}\). Since \({\beta _i>0, x_i\ge 0\,\,\, (1\le i \le \nu )}\) the condition holds true. \(\square \)

The optimal weights given in Corollary 7.3 are the same irrespective of the values \(a_1,\dots ,a_\nu \). The reason is that the information matrix under a gamma model without intercept is invariant with respect to simultaneous scaling of the factors. Therefore, we get \(\varvec{M}(a_i\varvec{e}_i,\varvec{\beta })=\varvec{M}(\varvec{e}_i,\varvec{\beta }), i=1,\dots , \nu \). Note also that Corollary 7.3 covers Theorem 3.1 in Idais and Schwabe (2020) who provided locally D- and A-optimal designs for non-intercept gamma models.
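The invariance with respect to \(\varvec{a}\) is easy to see numerically. The sketch below assumes the weights of Theorem 6.3 in the form \(\omega _i^*\propto (a_i^2u_i)^{-k/(k+1)}\) with \(u_i=(a_i\beta _i)^{-2}\), which reduces to \(\omega _i^*\propto \beta _i^{2k/(k+1)}\); the function name is hypothetical.

```python
def gamma_nonintercept_weights(beta, a, k):
    """Phi_k-optimal weights on a_i * e_i for the non-intercept gamma model (sketch)."""
    if min(beta) <= 0 or min(a) <= 0 or k < 0:
        raise ValueError("expected beta_i > 0, a_i > 0 and k >= 0")
    u = [(ai * bi) ** -2.0 for ai, bi in zip(a, beta)]   # intensities at the support
    exponent = -k / (k + 1.0)
    numerators = [(ai * ai * ui) ** exponent for ai, ui in zip(a, u)]
    total = sum(numerators)
    return [n / total for n in numerators]
```

For \(k=1\) the weights are proportional to \(\beta _i\), and for \(k=0\) they are uniform, whatever scaling vector \(\varvec{a}\) is used.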

7.2 Poisson model

A Poisson model is given by

Here, the expected mean \(\mu (\varvec{x},\varvec{\beta })\) for the Poisson distribution is positive for all \(\varvec{x}\in {\mathcal {X}}\). The parameter vector \(\varvec{\beta }\in {\mathbb {R}}^{p}\) is a real-valued vector.

For a two-factor model with linear predictor \(\eta (\varvec{x},\varvec{\beta })=\beta _0+\beta _1x_1+\beta _2x_2\) and experimental region \({\mathcal {X}}=\{0,1\}^2\) the next corollary presents the locally D-optimal designs. The same results for D-optimality were obtained in Graßhoff et al. (2013) under the Rasch Poisson counts model in item response theory. Denote \(\varvec{x}^*_1=(0,0)^\mathsf{T}\), \(\varvec{x}^*_2=(1,0)^\mathsf{T}\), \(\varvec{x}^*_3=(0,1)^\mathsf{T}\) and \(\varvec{x}^*_4=(1,1)^\mathsf{T}\). Let \(u_k=u(\varvec{x}^*_k,\varvec{\beta })\) (\(1\le k\le 4\)), i.e.,

Corollary 7.4

Consider a Poisson model with \(\varvec{f}(\varvec{x})=\bigl (1,x_1,x_2\bigr )^\mathsf{T}\) and the experimental region \({\mathcal {X}}=\{0,1\}^2\). Let \(\varvec{\beta }=(\beta _0,\beta _1,\beta _2)^\mathsf{T}\) be a given parameter point. Then the unique locally D-optimal design \(\xi ^*\) (at \(\varvec{\beta }\)) is as follows.

-

(1)

\(\xi ^*\) assigns equal weights 1/3 to \(\varvec{x}^*_1,\varvec{x}^*_2,\varvec{x}^*_3\) if and only if \(\beta _2\le \log \bigl ((1-\exp (\beta _1))/(1+\exp (\beta _1))\bigr )\).

-

(2)

\(\xi ^*\) assigns equal weights 1/3 to \(\varvec{x}^*_1,\varvec{x}^*_2,\varvec{x}^*_4\) if and only if \( \beta _2\le \log \bigl ((1-\exp (-\beta _1))/(1+\exp (-\beta _1))\bigr )\).

-

(3)

\(\xi ^*\) assigns equal weights 1/3 to \(\varvec{x}^*_1,\varvec{x}^*_3,\varvec{x}^*_4\) if and only if \( \beta _2\ge -\log \bigl ((1-\exp (\beta _1))/(1+\exp (\beta _1))\bigr )\).

-

(4)

\(\xi ^*\) assigns equal weights 1/3 to \(\varvec{x}^*_2,\varvec{x}^*_3,\varvec{x}^*_4\) if and only if \(\beta _2\ge -\log \bigl ((1-\exp (-\beta _1))/(1+\exp (-\beta _1))\bigr )\).

-

(5)

Otherwise, \(\xi ^*\) is supported by the four points \(\varvec{x}^*_1,\varvec{x}^*_2,\varvec{x}^*_3,\varvec{x}^*_4\).

Proof

The proof is analogous to that of Corollary 7.1. \(\square \)
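The analogy can be made concrete with a few lines of Python. The sketch below assumes the Poisson intensity \(u(\varvec{x},\varvec{\beta })=\exp (\varvec{f}^\mathsf{T}(\varvec{x})\varvec{\beta })\) and applies the inequalities of Corollary 5.1, part (i), directly to \(u_1^{-1},\dots ,u_4^{-1}\); the common factor \(\exp (-\beta _0)\) cancels, so only \(\beta _1\) and \(\beta _2\) enter. The function name is hypothetical.

```python
import math

def poisson_d_case(b1, b2):
    """Case (1)-(5) for the two-factor Poisson model via Corollary 5.1 (sketch)."""
    # Inverse intensities at x1*, ..., x4*, up to the common factor exp(-beta_0).
    inv_u = [1.0, math.exp(-b1), math.exp(-b2), math.exp(-(b1 + b2))]
    total = sum(inv_u)
    # Case l omits the vertex x*_{5-l}; indices below are zero-based.
    for case, omitted in ((1, 3), (2, 2), (3, 1), (4, 0)):
        if total - inv_u[omitted] <= inv_u[omitted]:
            return case
    return 5
```

A saturated design omits the vertex with the lowest intensity: strongly negative effects, for example \(\beta _1=\beta _2=-2\), lead to case (1), and strongly positive effects, for example \(\beta _1=\beta _2=2\), lead to case (4).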

Remark

Graßhoff et al. (2013) showed that a four-point design from part (5) of Corollary 7.4 is locally D-optimal in the subregion

Next we provide the locally A-optimal designs.

Corollary 7.5

Under the assumptions and notations of Corollary 7.4 the unique locally A-optimal design \(\xi ^*\) (at \(\varvec{\beta }\)) is as follows.

-

(1)

\(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{} \varvec{x}^*_2 &{}\varvec{x}^*_3\\ \sqrt{3}/c &{} \exp (-\beta _1/2)/c &{} \exp (-\beta _2/2)/c\end{array}\right) \) if and only if \(\exp (\beta _1)+\exp (\beta _2)+\exp (\beta _1+\beta _2)+\frac{2}{\sqrt{3}}\bigl (\exp (\beta _2+\beta _1/2)+\exp (\beta _1+\beta _2/2)\bigr )-1\le 0.\)

-

(2)

\(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{} \varvec{x}^*_2 &{}\varvec{x}^*_4\\ \sqrt{2}/c &{} \sqrt{2}\exp (-\beta _1/2)/c &{} \exp (-(\beta _1+\beta _2)/2)/c\end{array}\right) \) if and only if

$$\begin{aligned}&\exp (\beta _2)+\exp (-\beta _1)+\exp (\beta _2-\beta _1)\\&\quad +\exp (\beta _2-\beta _1/2)+\sqrt{2}\exp (\beta _2/2-\beta _1)-1\le 0. \end{aligned}$$

-

(3)

\(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_1 &{} \varvec{x}^*_3 &{}\varvec{x}^*_4\\ \sqrt{2}/c &{} \sqrt{2}\exp (-\beta _2/2)/c &{} \exp (-(\beta _1+\beta _2)/2)/c\end{array}\right) \) if and only if

$$\begin{aligned}&\exp (\beta _1)+\exp (-\beta _2)+\exp (\beta _1-\beta _2)\\&\quad +\exp (\beta _1-\beta _2/2)+\sqrt{2}\exp (\beta _1/2-\beta _2)-1\le 0. \end{aligned}$$

-

(4)

\(\xi ^*=\left( \begin{array}{ccc} \varvec{x}^*_2 &{} \varvec{x}^*_3 &{}\varvec{x}^*_4\\ \sqrt{2}\exp (-\beta _1/2)/c &{} \sqrt{2}\exp (-\beta _2/2)/c &{} \sqrt{3}\exp (-(\beta _1+\beta _2)/2)/c\end{array}\right) \) if and only if

$$\begin{aligned}&\exp (-\beta _1)+\exp (-\beta _2)+\exp (-(\beta _1+\beta _2))+\exp (-(\beta _1+\beta _2)/2)\\&\quad +2\sqrt{2/3}\bigl (\exp (-(2\beta _1+\beta _2)/2)+\exp (-(2\beta _2+\beta _1)/2)\bigr )-1\le 0. \end{aligned}$$

For each case (1)–(4), the constant \(c\) appearing in the weights equals the sum of the numerators of the three ratios.

-

(5)

Otherwise, \(\xi ^*\) is supported by the four design points \(\varvec{x}^*_1, \varvec{x}^*_2, \varvec{x}^*_3, \varvec{x}^*_4\).
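As a cross-check, the case distinction can be obtained directly from Corollary 5.2 by inserting \(q_i=u_i^{-1/2}\) for the Poisson intensity \(u(\varvec{x},\varvec{\beta })=\exp (\varvec{f}^\mathsf{T}(\varvec{x})\varvec{\beta })\). The Python sketch below does this; the common factor \(\exp (-\beta _0/2)\) of the \(q_i\) is dropped because every inequality is homogeneous in \(q\). The function name is hypothetical.

```python
import math

def poisson_a_case(b1, b2):
    """Case (1)-(5) for the two-factor Poisson model via Corollary 5.2 (sketch)."""
    # q_i = u_i^{-1/2} at x1*, ..., x4*, up to the common factor exp(-beta_0 / 2).
    q1, q2, q3, q4 = 1.0, math.exp(-b1 / 2), math.exp(-b2 / 2), math.exp(-(b1 + b2) / 2)
    r2, r23 = math.sqrt(2.0), math.sqrt(2.0 / 3.0)
    conditions = [
        (1, q1**2 + q2**2 + q3**2 + (2.0 / math.sqrt(3.0)) * q1 * (q2 + q3), q4**2),
        (2, q1**2 + q2**2 + q4**2 + q1 * q2 + r2 * q2 * q4, q3**2),
        (3, q1**2 + q3**2 + q4**2 + q1 * q3 + r2 * q3 * q4, q2**2),
        (4, q2**2 + q3**2 + q4**2 + q2 * q3 + 2.0 * r23 * q4 * (q2 + q3), q1**2),
    ]
    for case, lhs, rhs in conditions:
        if lhs <= rhs:
            return case
    return 5
```

As for D-optimality, the vertex with the lowest intensity is the one left out of a saturated design, so \(\beta _1=\beta _2=-3\) gives case (1) and \(\beta _1=\beta _2=3\) gives case (4).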

Figure 3 shows the dependence of the locally D- and A-optimal designs from Corollaries 7.4 and 7.5, respectively on the parameters \(\beta _1\) and \(\beta _2\).

Fig. 3 Dependence of optimal designs under Poisson models on \(\varvec{\beta }\); Left panel: D-optimal designs. Right panel: A-optimal designs. \(\mathrm {supp}(\xi ^*_{ijk})=\{\varvec{x}^*_i,\varvec{x}^*_j,\varvec{x}^*_k\}\subset \{\varvec{x}^*_1,\varvec{x}^*_2,\varvec{x}^*_3,\varvec{x}^*_4\}\) and \(\mathrm {supp}(\xi ^*_{1234})=\{\varvec{x}^*_1,\varvec{x}^*_2,\varvec{x}^*_3,\varvec{x}^*_4\}\). The diagonal dashed line is \(\beta _2=\beta _1\)

Remark

For the multiple-factor Poisson model \(\varvec{f}(\varvec{x})=(1, x_1, \dots , x_\nu )^\mathsf{T}\) on the experimental region \({\mathcal {X}}=[0,1]^\nu ,\nu \ge 2\), the result of Russell et al. (2009) can be applied. It was shown that the design which assigns equal weights \(1/(\nu +1)\) to the design points \(\varvec{x}_1^*=(0,\dots ,0)^\mathsf{T},\ \ \varvec{x}_2^*=(1,\dots ,0)^\mathsf{T},\ \ldots ,\ \varvec{x}_{\nu +1}^*=(0,\dots ,1)^\mathsf{T}\) is locally D-optimal at a given \(\varvec{\beta }=(\beta _0, \beta _1, \dots , \beta _\nu )^\mathsf{T}\) such that \(\beta _i=-2, i=1, \dots ,\nu \).
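This statement can be related to Theorem 6.1 by a simple grid check. The sketch below assumes the Poisson intensity \(u(\varvec{x},\varvec{\beta })=\exp (\varvec{f}^\mathsf{T}(\varvec{x})\varvec{\beta })\) and the \(\nu \)-factor analogue of the inequality in the remark after Theorem 6.1, \((1-\sum _i x_i)^2u_1^{-1}+\sum _i x_i^2u_{i+1}^{-1}\le u^{-1}(\varvec{x},\varvec{\beta })\). A finite grid can only indicate, not prove, that the inequality holds on the whole cube. The function names are hypothetical.

```python
import math
from itertools import product

def poisson_gap(x, beta):
    """u^{-1}(x, beta) minus the l.h.s. of the assumed Theorem 6.1 inequality."""
    b0, slopes = beta[0], beta[1:]
    inv_u = math.exp(-(b0 + sum(b * xi for b, xi in zip(slopes, x))))
    lhs = (1.0 - sum(x))**2 * math.exp(-b0) + sum(
        xi**2 * math.exp(-(b0 + b)) for b, xi in zip(slopes, x))
    return inv_u - lhs

def holds_on_grid(beta, steps=20, tol=1e-9):
    """True if the inequality holds at every point of a regular grid on [0,1]^nu."""
    nu = len(beta) - 1
    grid = [i / steps for i in range(steps + 1)]
    return all(poisson_gap(x, beta) >= -tol for x in product(grid, repeat=nu))
```

With \(\beta _i=-2\) the inequality holds on the grid for any \(\beta _0\), with equality at the support points, while for \(\beta _i=-3\) it fails close to the unit vectors.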

Now consider a non-intercept Poisson model with multiple factors. The linear predictor can be written as \(\eta (\varvec{x},\varvec{\beta })=\varvec{x}^\mathsf{T}\varvec{\beta }=\sum _{i=1}^\nu \beta _ix_i\). Let the experimental region be given by \({\mathcal {X}}=\{0,1\}^\nu \) with intensity \(u(\varvec{x},\varvec{\beta })=\exp \big (\varvec{x}^\mathsf{T}\varvec{\beta }\big )\) for all \(\varvec{x}\in {\mathcal {X}}\). In the following we will apply Theorem 6.3 for a Poisson model without intercept. Let us restrict to the case of \(a_i=1\,\,(1 \le i \le \nu )\), i.e., the design points are the unit vectors \(\varvec{e}_i\) \((1\le i \le \nu )\). As a result, condition (6.8) is simplified as presented in the following corollary.

Corollary 7.6

Consider a non-intercept Poisson model with \(\varvec{f}(\varvec{x})=\varvec{x}\) on the experimental region \({\mathcal {X}}=\{0,1\}^\nu ,\,\,\nu \ge 2\) and intensity \(u(\varvec{x},\varvec{\beta })=\exp \big (\varvec{x}^\mathsf{T}\varvec{\beta }\big )\) for all \(\varvec{x}\in {\mathcal {X}}\). For a given parameter point \(\varvec{\beta }=(\beta _1,\dots ,\beta _\nu )^\mathsf{T}\) define \(u_i=\exp (\beta _i)\,\,(1\le i\le \nu )\) and denote by \(u_{[1]}\ge u_{[2]}\ge \dots \ge u_{[\nu ]}\) the descending order of \(u_{1},u_{2},\dots ,u_{\nu }\). Let \(k\) be given with \(0\le k<\infty \). Let \(\xi ^* \) be the saturated design supported by the unit vectors \(\varvec{e}_i\) \((1\le i \le \nu )\) with weights \(\omega _i^*=u_i^{-k/(k+1)}/\sum _{j=1}^\nu u_j^{-k/(k+1)}\,\,(1\le i \le \nu )\). Then \(\xi ^*\) is locally \(\Phi _k\)-optimal (at \(\varvec{\beta }\)) if and only if

$$\begin{aligned} u_{[1]}+u_{[2]}\le 1. \end{aligned}$$

Proof

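Condition (6.8) of Theorem 6.3 reduces to inequality (7.2):

$$\begin{aligned} \exp \big (\varvec{x}^\mathsf{T}\varvec{\beta }\big )\sum _{i=1}^\nu \exp (-\beta _i)\,x_i^2\le 1\quad \text{ for } \text{ all } \varvec{x}\in \{0,1\}^\nu . \end{aligned}$$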

For any \(\varvec{x}=(x_1,\dots ,x_\nu )^\mathsf{T} \in \{0,1\}^\nu ,\nu \ge 2\), define the index set \(S\subseteq \{1,\ldots ,\nu \}\) such that \(x_i=1\) if \(i\in S\) and \(x_i=0\) otherwise, and let \(s=\#S\). If \(s=0\) (i.e., \(S=\emptyset \)) then the l.h.s. of (7.2) is zero. If \(s=1\), inequality (7.2) becomes an equality. Let \(s\ge 2\). Then the l.h.s. of (7.2) is equal to \(\exp (\sum _{i\in S}\beta _i)\sum _{i\in S}\exp (-\beta _i)\), which can be rewritten as \( \prod _{i\in S}u_i\sum _{i\in S}u_i^{-1}\) or equivalently as \(\sum _{i\in S}\prod _{j\in S\setminus \{i\}}u_j\). The latter expression is nondecreasing in each \(u_j\). Hence, by the descending order \(u_{[1]}\ge u_{[2]}\ge \dots \ge u_{[\nu ]}\) of \(u_{1},u_{2},\dots ,u_{\nu }\) we obtain for all subsets \(S\subseteq \{1,\ldots ,\nu \}\) of the same size \(s\ge 2\),

$$\begin{aligned} \prod _{i\in S}u_i\sum _{i\in S}u_i^{-1}\le \prod _{j=1}^{s}u_{[j]}\sum _{i=1}^{s}u_{[i]}^{-1}. \end{aligned}$$

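Denote \(T_s=\sum _{i=1}^{s}u_{[i]}^{-1}\prod _{j=1}^{s}u_{[j]}\). Hence, inequality (7.2) is equivalent to \(T_s \le 1\) for all \(s=2,\dots ,\nu \). Then it is sufficient to show that

$$\begin{aligned} u_{[1]}+u_{[2]}\le 1\ \Longleftrightarrow \ T_s\le 1\quad \text{ for } \text{ all } s=2,\dots ,\nu . \end{aligned}$$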

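For “\(\Longleftarrow \)”, taking \(s=2\) gives \(u_{[1]}+u_{[2]}=T_2\le 1\). For “\(\Longrightarrow \)”, we use induction. Firstly, note that \(T_2=u_{[1]}+u_{[2]}\), thus \(T_s\le 1\) is true for \(s=2\). Now assume \(T_s\le 1\) is true for some \(s=q\) where \(2 \le q\le \nu -1\). We want to show that it is true for \(s=q+1\). We can write

$$\begin{aligned} T_{q+1}=u_{[q+1]}\,T_q+\prod _{i=1}^{q}u_{[i]}. \end{aligned}$$

Since \(u_{[1]}+u_{[2]}\le 1\), we have \(u_{[i]}\le 1\) for all \(i\), and therefore \(\prod _{i=1}^{q}u_{[i]}\le u_{[1]}\). Together with \(u_{[q+1]}\le u_{[2]}\) and \(T_q\le 1\) this yields \(T_{q+1}\le u_{[2]}+u_{[1]}\le 1\).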
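The reduction used in this proof can be checked numerically for small \(\nu \). The sketch below computes the weights \(\omega _i^*\) of Corollary 7.6, evaluates the expression \(\exp (\sum _{i\in S}\beta _i)\sum _{i\in S}\exp (-\beta _i)\) for every subset \(S\) with \(s\ge 2\) by brute force, and compares the outcome with the simpler test \(T_2=u_{[1]}+u_{[2]}\le 1\). All function names and the tolerance are hypothetical choices made for illustration.

```python
import itertools
import math


def phi_k_weights(beta, k):
    """Weights proportional to u_i^(-k/(k+1)) with u_i = exp(beta_i)."""
    raw = [math.exp(b) ** (-k / (k + 1.0)) for b in beta]
    total = sum(raw)
    return [r / total for r in raw]


def subset_condition_holds(beta, tol=1e-12):
    """Brute-force check over all index sets S with at least two elements."""
    nu = len(beta)
    for s in range(2, nu + 1):
        for subset in itertools.combinations(range(nu), s):
            lhs = math.exp(sum(beta[i] for i in subset)) * sum(
                math.exp(-beta[i]) for i in subset
            )
            if lhs > 1.0 + tol:
                return False
    return True


def two_largest_condition_holds(beta, tol=1e-12):
    """Check T_2 = u_[1] + u_[2] <= 1 using the two largest intensities."""
    u = sorted((math.exp(b) for b in beta), reverse=True)
    return u[0] + u[1] <= 1.0 + tol
```

For instance, at \(\varvec{\beta }=(-1,-2,-3)^\mathsf{T}\) both checks succeed, and at \(\varvec{\beta }=(-0.5,-0.6,-3)^\mathsf{T}\) both fail. The brute-force loop grows exponentially in \(\nu \), which is why the two-term test is the practical one.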

References

Atkinson A, Haines L (1996) Designs for nonlinear and generalized linear models. In: Ghosh S, Rao C (eds) Design and analysis of experiments. Handbook of statistics, vol 13. Elsevier, Amsterdam, pp 437–475

Atkinson AC, Woods DC (2015) Designs for generalized linear models. In: Dean A, Morris M, Stufken J, Bingham D (eds) Handbook of design and analysis of experiments. Chapman & Hall/CRC Press, Boca Raton, pp 471–514

Atkinson A, Donev A, Tobias R (2007) Optimum experimental designs, with SAS, vol 34. Oxford University Press, Oxford

Burridge J, Sebastiani P (1994) D-optimal designs for generalised linear models with variance proportional to the square of the mean. Biometrika 81:295–304

Chernoff H (1953) Locally optimal designs for estimating parameters. Ann Math Stat 24:586–602

Fedorov VV, Leonov SL (2013) Optimal design for nonlinear response models. CRC Press, Boca Raton

Ford I, Torsney B, Wu CFJ (1992) The use of a canonical form in the construction of locally optimal designs for non-linear problems. J R Stat Soc Ser B (Methodol) 54:569–583

Fox J (2015) Applied regression analysis and generalized linear models, 3rd edn. SAGE Publications, Los Angeles

Gaffke N, Idais O, Schwabe R (2019) Locally optimal designs for gamma models. J Stat Plan Inference 203:199–214

Goldburd M, Khare A, Tevet D (2016) Generalized linear models for insurance rating. Casualty Actuarial Society, Virginia

Graßhoff U, Großmann H, Holling H, Schwabe R (2007) Design optimality in multi-factor generalized linear models in the presence of an unrestricted quantitative factor. J Stat Plan Inference 137:3882–3893

Graßhoff U, Holling H, Schwabe R (2013) Optimal design for count data with binary predictors in item response theory. In: Ucinski D, Atkinson AC, Patan M (eds) mODa 10-advances in model-oriented design and analysis. Springer, Heidelberg, pp 117–124

Huda S, Mukerjee R (1988) Optimal weighing designs: approximate theory. Statistics 19:513–517

Idais O, Schwabe R (2020) Analytic solutions for locally optimal designs for gamma models having linear predictors without intercept. Metrika. https://doi.org/10.1007/s00184-019-00760-3

Kiefer J (1975) Optimal design: variation in structure and performance under change of criterion. Biometrika 62:277–288

Konstantinou M, Biedermann S, Kimber A (2014) Optimal designs for two-parameter nonlinear models with application to survival models. Stat Sin 24:415–428

McCullagh P, Nelder J (1989) Generalized linear models, 2nd edn. Chapman & Hall, London

Myers RH, Montgomery DC (1997) A tutorial on generalized linear models. J Qual Technol 29:274–291

Nelder JA, Wedderburn RWM (1972) Generalized linear models. J R Stat Soc Ser A (Gen) 135:370–384

Pukelsheim F (1993) Optimal design of experiments. Wiley, New York

Pukelsheim F, Torsney B (1991) Optimal weights for experimental designs on linearly independent support points. Ann Stat 19:1614–1625

Russell KG, Woods DC, Lewis SM, Eccleston JA (2009) D-optimal designs for Poisson regression models. Stat Sin 19:721–730

Schmidt D (2019) Characterization of c-, l- and \(\phi _k\)-optimal designs for a class of non-linear multiple-regression models. J R Stat Soc Ser B (Stat Methodol) 81:101–120

Schmidt D, Schwabe R (2017) Optimal design for multiple regression with information driven by the linear predictor. Stat Sin 27:1371–1384

Silvey SD (1980) Optimal design. Chapman & Hall, London

Tong L, Volkmer HW, Yang J (2014) Analytic solutions for D-optimal factorial designs under generalized linear models. Electron J Stat 8:1322–1344

Walker SH, Duncan DB (1967) Estimation of the probability of an event as a function of several independent variables. Biometrika 54:167–179

Yang M, Stufken J (2009) Support points of locally optimal designs for nonlinear models with two parameters. Ann Stat 37:518–541

Yang M, Zhang B, Huang S (2011) Optimal designs for generalized linear models with multiple design variables. Stat Sin 21:1415–1430

Acknowledgements

Open Access funding provided by Projekt DEAL. The author acknowledges the support by a scholarship of the Landesgraduiertenförderung Sachsen-Anhalt [GradFVO (GVBl. LSA 1992, 402)].

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Idais, O. On local optimality of vertex type designs in generalized linear models. Stat Papers 62, 1871–1898 (2021). https://doi.org/10.1007/s00362-020-01158-4

DOI: https://doi.org/10.1007/s00362-020-01158-4