Abstract

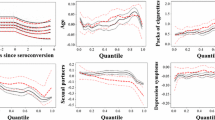

HIV damages the immune system by targeting CD4 cells, so modeling CD4 count data is important in the analysis of HIV infection. This paper considers a semiparametric mixed effects model for the analysis of longitudinal CD4 data. The model is a natural extension of linear mixed models and semiparametric models. It uses a parametric linear component to represent the covariate effects, an arbitrary smooth nonparametric function to model the time effect, and random effects to account for the within-subject correlation. We approximate the nonparametric function by the profile-kernel method and use weighted least squares to estimate the regression coefficients. Under some regularity conditions, we establish the asymptotic normality of the resulting estimator and compare its performance with that of the backfitting method. The two estimators share the same asymptotic covariance matrix for independent data. However, it is shown that, asymptotically, backfitting often produces larger bias and variance than the profile-kernel method. Consequently, the backfitting method is not recommended for semiparametric mixed effects longitudinal models. For practical implementation and to improve efficiency, the covariance function is estimated with an iterative algorithm. The performance of the proposed methods is compared through a simulation study and an analysis of the CD4 data.
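The estimation scheme summarized above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation:

- The smoother \(S\) is a Nadaraya-Watson kernel smoother with a Gaussian kernel, applied under working independence.
- The working covariance \(V\) is block-diagonal with a known random-intercept structure \(V_i=\sigma_b^2 J+\sigma_e^2 I\). The paper's iterative covariance estimation step is omitted.
- The profile-kernel step takes the Speckman-type form \(\hat\beta=\{X^\top(I-S)^\top V^{-1}(I-S)X\}^{-1}X^\top(I-S)^\top V^{-1}(I-S)Y\), followed by \(\hat g=S(Y-X\hat\beta)\).

The function names, the bandwidth and the simulated data are hypothetical.

```python
import numpy as np

def nw_smoother(t, h):
    """N x N Nadaraya-Watson smoother matrix S with a Gaussian kernel (assumed choice)."""
    u = (t[:, None] - t[None, :]) / h
    w = np.exp(-0.5 * u**2)
    return w / w.sum(axis=1, keepdims=True)      # each row sums to one

def profile_kernel_fit(X, y, t, V, h):
    """Hypothetical profile-kernel fit with working covariance V.

    beta_hat = (Xt' V^{-1} Xt)^{-1} Xt' V^{-1} yt with Xt = (I - S) X, yt = (I - S) y;
    the smooth part is then recovered as g_hat = S (y - X beta_hat).
    """
    S = nw_smoother(t, h)
    R = np.eye(len(y)) - S                        # I - S
    Xt, yt = R @ X, R @ y
    Vinv_Xt = np.linalg.solve(V, Xt)              # V^{-1} Xt without forming V^{-1}
    beta = np.linalg.solve(Xt.T @ Vinv_Xt, Vinv_Xt.T @ yt)
    g_hat = S @ (y - X @ beta)
    return beta, g_hat

# Illustrative simulated data: n subjects, m visits each, random intercepts b_i.
rng = np.random.default_rng(0)
n, m = 100, 5
N = n * m                                         # total number of observations
sb2, se2 = 1.0, 0.25                              # variance components, treated as known
beta_true = np.array([1.5, -0.8])
t = rng.uniform(0.0, 1.0, N)
X = np.column_stack([rng.normal(size=N), t + rng.normal(scale=0.5, size=N)])
b = np.repeat(rng.normal(scale=np.sqrt(sb2), size=n), m)   # b_i shared within subject i
y = X @ beta_true + np.sin(2 * np.pi * t) + b + rng.normal(scale=np.sqrt(se2), size=N)

Vi = sb2 * np.ones((m, m)) + se2 * np.eye(m)      # V_i for a random-intercept model
V = np.kron(np.eye(n), Vi)                        # block-diagonal N x N working covariance
beta_hat, g_hat = profile_kernel_fit(X, y, t, V, h=0.05)
print(beta_hat)
```

`X` deliberately has no intercept column. Because the rows of `S` sum to one, \((I-S)\) annihilates constants, and the intercept is absorbed into \(g(t)\).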


References

Akdeniz F, Roozbeh M (2017) Generalized difference-based weighted mixed almost unbiased ridge estimator in partially linear models. Stat Papers. https://doi.org/10.1007/s00362-017-0893-9

Carroll RJ, Fan J, Gijbels I, Wand MP (1997) Generalized partially linear single-index models. J Am Stat Assoc 92:477–489

Fan JQ, Li RZ (2004) New estimation and model selection procedures for semiparametric modeling in longitudinal data analysis. J Am Stat Assoc 99:710–723

Fan JQ, Huang T, Li RZ (2007) Analysis of longitudinal data with semiparametric estimation of covariance function. J Am Stat Assoc 102:632–641

Fitzmaurice GM, Laird NM, Ware JH (2004) Applied longitudinal analysis. Wiley, Hoboken

He XM, Zhu ZY, Fung WK (2002) Estimation in a semiparametric model for longitudinal data with unspecified dependence structure. Biometrika 89:579–590

Hu Z, Wang N, Carroll RJ (2004) Profile-kernel versus backfitting in the partially linear models for longitudinal/clustered data. Biometrika 91:251–262

Li Z, Zhu L (2010) On variance components in semiparametric mixed models for longitudinal data. Scand J Stat 37:442–457

Liang H (2009) Generalized partially linear mixed-effects models incorporating mismeasured covariates. Ann Inst Stat Math 61:27–46

Lin X, Carroll RJ (2001) Semiparametric regression for clustered data using generalized estimating equations. J Am Stat Assoc 96:1045–1056

Opsomer JD, Ruppert D (1999) A root-n consistent backfitting estimator for semiparametric additive modeling. J Comput Graph Stat 8:715–732

Qin GY, Zhu ZY (2007) Robust estimation in generalized semiparametric mixed models for longitudinal data. J Mult Anal 98:1658–1683

Qin GY, Zhu ZY (2009) Robustified maximum likelihood estimation in generalized partial linear mixed model for longitudinal data. Biometrics 65:52–59

Rice JA (1986) Convergence rates for partially splined models. Stat Prob Lett 4:204–208

Roozbeh M (2015) Shrinkage ridge estimators in semiparametric regression models. J Mult Anal 136:56–74

Roozbeh M (2016) Robust ridge estimator in restricted semiparametric regression models. J Mult Anal 147:127–144

Severini TA, Staniswalis JG (1994) Quasi-likelihood estimation in semi-parametric models. J Am Stat Assoc 89:501–512

Speckman PE (1988) Regression analysis for partially linear models. J R Stat Soc B 50:413–436

Wang N (2003) Marginal nonparametric kernel regression accounting for within-subject correlation. Biometrika 90:43–52

Wang WL, Fan TH (2010) ECM-based maximum likelihood inference for multivariate linear mixed models with autoregressive errors. Comput Stat Data Anal 54:1328–1341

Wang WL, Lin TI (2014) Multivariate t nonlinear mixed-effects models for multi-outcome longitudinal data with missing values. Stat Med 33:3029–3046

Wang WL, Lin TI (2015) Bayesian analysis of multivariate t linear mixed models with missing responses at random. J Stat Comput Simul 85:3594–3612

Wang N, Carroll RJ, Lin X (2005) Efficient semiparametric marginal estimation for longitudinal/clustered data. J Am Stat Assoc 100:147–157

Wang WL, Lin TI, Lachos VH (2015) Extending multivariate-t linear mixed models for multiple longitudinal data with censored responses and heavy tails. Stat Methods Med Res 27:48–64

Wu CFJ (1983) On the convergence properties of the EM algorithm. Ann Stat 11:95–103

Yu Y, Ruppert D (2002) Penalized spline estimation for partially linear single-index models. J Am Stat Assoc 97:1042–1054

Zeger SL, Diggle PJ (1994) Semi-parametric models for longitudinal data with application to CD4 cell numbers in HIV seroconverters. Biometrics 50:689–699

Zhang D (2004) Generalized linear mixed models with varying coefficients for longitudinal data. Biometrics 60:8–15

Acknowledgements

We would like to thank the reviewers for their constructive comments, which significantly improved the presentation and led us to include many additional details in the paper.


Appendix


In this section, we provide the proofs of the main results along with a brief introduction to the EM algorithm, which underlies the convergence of Algorithm 1. In the following proofs, recall that \({\varvec{t}}\), \(\varvec{X}\) and \({\varvec{Y}}\) denote the observations over all the subjects; that is, \({\varvec{t}}=({\varvec{t}}_1^\top , \ldots , {\varvec{t}}_n^\top )^\top \), \({\varvec{t}}_i=(t_{i1}, \ldots , t_{in_i})\), and similarly for \(\varvec{X}\) and \({\varvec{Y}}\). Also, \({\varvec{V}}\) and \({\varvec{V}}_{o}\) stand for the \(N \times N\) assumed (working) and true covariance matrices of all the data, respectively, where \(N=\sum _{i=1}^n n_i\).

1.1 Proof of Theorem 1

For the kernel estimator, based on expression (4.1),

where

and

Denote,

Simple calculations show that \(C_n\) can be expanded as \(C_n = C_{1n}- C_{2n} + o_p(1)\), where, denoting \({\varvec{\mu }}_i=\varvec{X}^\top _i {\varvec{\beta }}+ {\varvec{g}}({\varvec{t}}_i)\) and \({\varvec{W}}_{1i}={\varvec{V}}_i^{-1}{\tilde{\varvec{X}}}_i\),

and

Obtaining the asymptotic distribution of \(\sqrt{n}C_{1n}\) is straightforward. We now examine the distribution of \(\sqrt{n}C_{2n}\). Following Lin and Carroll (2001), we have

where \({\varvec{W}}_{2i}=\lbrace {\varvec{W}}_{2i1}, \ldots ,{\varvec{W}}_{2im}\rbrace ^\top \) and

It follows that

where the bias term \({\varvec{b}}_K({\varvec{\beta }}, {\varvec{g}})=D^{-1}E\lbrace {\tilde{\varvec{X}}}^\top {\varvec{V}}^{-1}{\varvec{g}}^{(2)}({\varvec{t}}) \rbrace \). Equivalently,

where \({\varvec{V}}_K=D^{-1} E\big \lbrace ({\varvec{W}}_1-{\varvec{W}}_2)^\top {\varvec{V}}_o({\varvec{W}}_1-{\varvec{W}}_2)\big \rbrace D^{-1}\) with \({\varvec{V}}_o=\operatorname{var}({\varvec{Y}}|\varvec{X},{\varvec{t}})\).

1.2 Proof of Theorem 3

The central component of the asymptotic variance \({\varvec{V}}_{BF}\) is generated by \(\varvec{X}^\top {\varvec{V}}^{-1}({\varvec{I}}-{\varvec{S}}){\varvec{\varepsilon }}^*\), where \({\varvec{\varepsilon }}^*={\varvec{Z}}^\top {\varvec{b}}+{\varvec{\varepsilon }}\). Similarly, the central component of the asymptotic variance \({\varvec{V}}_K\) arises from \(\varvec{X}^\top ({\varvec{I}}-{\varvec{S}})^\top {\varvec{V}}^{-1} ({\varvec{I}}-{\varvec{S}}){\varvec{\varepsilon }}^*\), which is asymptotically \({\tilde{\varvec{X}}}^\top {\varvec{V}}^{-1} ({\varvec{I}}-{\varvec{S}}){\varvec{\varepsilon }}^*\). To compare \({\varvec{V}}_K\) and \({\varvec{V}}_{BF}\), it therefore suffices to compare the variances of these two central terms.

We now show that, under the condition that \({\varvec{V}}\) and \({\varvec{V}}_o\) depend only on \({\varvec{t}}\),

For the backfitting estimator,

In this expression, \({\varvec{\mu }}_X({\varvec{t}})\) is generally nonzero and the first term is positive semidefinite because \({\varvec{V}}^{-1}({\varvec{I}}-{\varvec{S}}){\varvec{V}}_o({\varvec{I}}-{\varvec{S}})^\top {\varvec{V}}^{-1}\) is positive semidefinite. Also,

Note that

Therefore, the first term in (A2) is 0, and the second terms in (A1) and (A2) are identical. It follows that \(\hbox {cov} \lbrace \varvec{X}^\top {\varvec{V}}^{-1}({\varvec{I}}-{\varvec{S}}){\varvec{\varepsilon }}^* \rbrace \ge \hbox {cov} \lbrace {\tilde{\varvec{X}}}^\top {\varvec{V}}^{-1} ({\varvec{I}}-{\varvec{S}}){\varvec{\varepsilon }}^* \rbrace \) in the positive semidefinite (Loewner) ordering, and consequently \({\varvec{V}}_{BF}\ge {\varvec{V}}_K\).
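To see the two estimators side by side, the following Monte Carlo sketch computes two estimates for each simulated data set:

- the profile-kernel estimator \(\{X^\top(I-S)^\top V^{-1}(I-S)X\}^{-1}X^\top(I-S)^\top V^{-1}(I-S)Y\);
- a backfitting-type estimator \(\{X^\top V^{-1}(I-S)X\}^{-1}X^\top V^{-1}(I-S)Y\), whose form is consistent with the central term \(X^\top V^{-1}(I-S)\varepsilon^*\) above.

The following are illustrative assumptions, and the sketch does not reproduce the paper's simulation study:

- the Gaussian-kernel Nadaraya-Watson smoother and its bandwidth;
- the known random-intercept covariance;
- the covariate design, in which the second covariate has a nonzero conditional mean given \(t\).

```python
import numpy as np

def nw_smoother(t, h):
    """N x N Nadaraya-Watson smoother matrix with a Gaussian kernel (assumed choice)."""
    u = (t[:, None] - t[None, :]) / h
    w = np.exp(-0.5 * u**2)
    return w / w.sum(axis=1, keepdims=True)

def fit_both(X, y, t, V, h):
    """Return (beta_K, beta_BF): profile-kernel and backfitting-type closed forms."""
    R = np.eye(len(y)) - nw_smoother(t, h)        # I - S
    Xt = R @ X                                    # X tilde = (I - S) X
    VinvR = np.linalg.solve(V, R)                 # V^{-1} (I - S)
    # profile kernel: {X'(I-S)' V^{-1}(I-S) X}^{-1} X'(I-S)' V^{-1}(I-S) y
    beta_K = np.linalg.solve(Xt.T @ VinvR @ X, Xt.T @ VinvR @ y)
    # backfitting-type: {X' V^{-1}(I-S) X}^{-1} X' V^{-1}(I-S) y
    beta_BF = np.linalg.solve(X.T @ VinvR @ X, X.T @ VinvR @ y)
    return beta_K, beta_BF

rng = np.random.default_rng(1)
n, m, reps, h = 40, 4, 300, 0.08                  # hypothetical simulation settings
N = n * m
sb2, se2 = 1.0, 0.25
beta_true = np.array([1.0, -0.5])
V = np.kron(np.eye(n), sb2 * np.ones((m, m)) + se2 * np.eye(m))   # known working = true V

est_K, est_BF = [], []
for _ in range(reps):
    t = rng.uniform(0.0, 1.0, N)
    # second covariate depends on t, so its conditional mean given t is nonzero
    X = np.column_stack([rng.normal(size=N),
                         np.cos(2 * np.pi * t) + rng.normal(scale=0.5, size=N)])
    b = np.repeat(rng.normal(scale=np.sqrt(sb2), size=n), m)
    y = X @ beta_true + np.sin(2 * np.pi * t) + b + rng.normal(scale=np.sqrt(se2), size=N)
    bk, bbf = fit_both(X, y, t, V, h)
    est_K.append(bk)
    est_BF.append(bbf)

est_K, est_BF = np.array(est_K), np.array(est_BF)
cov_K = np.cov(est_K, rowvar=False)               # empirical covariance over replications
cov_BF = np.cov(est_BF, rowvar=False)
print("mean K :", est_K.mean(axis=0), " mean BF:", est_BF.mean(axis=0))
print("var  K :", np.diag(cov_K), " var  BF:", np.diag(cov_BF))
```

Comparing `cov_BF` with `cov_K` gives a finite-sample view of the ordering \(V_{BF}\ge V_K\). Results vary with \(n\), \(h\) and the seed, so a single run should not be over-interpreted.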

1.3 EM algorithm related to Algorithm 1

The EM algorithm consists of an expectation (E) step and a maximization (M) step at each iteration. It has several appealing features, such as simplicity of implementation and the fact that the likelihood never decreases from one iteration to the next. Let \({\varvec{\Theta }}=({\varvec{\beta }}, {\varvec{D}}, {\varvec{\Sigma }}, \phi )\) denote the full set of model parameters. Treating the unobservable random effects \({\varvec{b}}_i\) as missing data, the complete data are \(\lbrace ({\varvec{y}}_i, {\varvec{b}}_i), i = 1, \ldots ,n\rbrace \). Let the density function of \(({\varvec{y}}_i, {\varvec{b}}_i)\) be \( f({\varvec{y}}_i, {\varvec{b}}_i|{\varvec{\Theta }}) \) with parameters \( {\varvec{\Theta }}\in \Omega \), and let the density function of \( {\varvec{y}}_i \) be given by

\[ f({\varvec{y}}_i|{\varvec{\Theta }}) = \int f({\varvec{y}}_i, {\varvec{b}}_i|{\varvec{\Theta }})\, d{\varvec{b}}_i . \]

The parameters \( {\varvec{\Theta }}\) are to be estimated by maximizing \( f({\varvec{y}}_i|{\varvec{\Theta }}) \) over \( {\varvec{\Theta }}\in \Omega \). In many statistical problems, maximizing the complete-data specification \( f({\varvec{y}}_i,{\varvec{b}}_i|{\varvec{\Theta }}) \) is simpler than maximizing the incomplete-data specification \( f({\varvec{y}}_i|{\varvec{\Theta }}) \). The EM algorithm exploits this by working with the complete-data likelihood. Since \( f({\varvec{y}}_i|{\varvec{\Theta }}^{'}) = f({\varvec{y}}_i,{\varvec{b}}_i|{\varvec{\Theta }}^{'})/f({\varvec{b}}_i|{\varvec{y}}_i,{\varvec{\Theta }}^{'}) \), we take expectations with respect to the conditional distribution of \({\varvec{b}}_i\) given \({\varvec{y}}_i\) under \({\varvec{\Theta }}\). This shows that the log-likelihood function is

\[ L({\varvec{\Theta }}^{'}) = \log f({\varvec{y}}_i|{\varvec{\Theta }}^{'}) = Q({\varvec{\Theta }}^{'}|{\varvec{\Theta }}) - H({\varvec{\Theta }}^{'}|{\varvec{\Theta }}), \]

where \( Q({\varvec{\Theta }}^{'}|{\varvec{\Theta }})=\hbox {E} \{\log f({\varvec{y}}_i,{\varvec{b}}_i|{\varvec{\Theta }}^{'}) \mid {\varvec{y}}_i,{\varvec{\Theta }}\} \) and \( H({\varvec{\Theta }}^{'}|{\varvec{\Theta }})= \hbox {E}\{\log f({\varvec{b}}_i|{\varvec{y}}_i,{\varvec{\Theta }}^{'}) \mid {\varvec{y}}_i,{\varvec{\Theta }}\} \) are assumed to exist for all pairs \(({\varvec{\Theta }}^{'}, {\varvec{\Theta }})\). We now define the EM iteration \( {\varvec{\Theta }}_{r}\rightarrow {\varvec{\Theta }}_{r+1} \in M({\varvec{\Theta }}_{r}) \) as follows:

- E-step: Determine \(Q({\varvec{\Theta }}|{\varvec{\Theta }}_{r})\).
- M-step: Choose \( {\varvec{\Theta }}_{r+1} \) to be any value of \( {\varvec{\Theta }}\in \Omega \) which maximizes \( Q({\varvec{\Theta }}|{\varvec{\Theta }}_{r}) \).
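As a concrete instance of these two steps, consider the toy balanced random-intercept model \(y_{ij}=\mu+b_i+\varepsilon_{ij}\), with \(b_i\sim N(0,d)\) and \(\varepsilon_{ij}\sim N(0,s)\). This is a hypothetical example chosen because both steps have closed forms; it is not Algorithm 1:

- The E-step needs only the posterior mean and variance of each \(b_i\).
- The M-step maximizes \(Q(\Theta|\Theta_r)\) exactly.
- The marginal log-likelihood is tracked to check that it never decreases.

```python
import numpy as np

def loglik(y, mu, d, s):
    """Marginal log-likelihood: each row y_i ~ N(mu 1, s I + d 11')."""
    n, m = y.shape
    r = y - mu
    quad = (np.sum(r**2, axis=1) - d * r.sum(axis=1)**2 / (s + m * d)) / s
    logdet = (m - 1) * np.log(s) + np.log(s + m * d)
    return -0.5 * np.sum(m * np.log(2 * np.pi) + logdet + quad)

def em_step(y, mu, d, s):
    n, m = y.shape
    # E-step: b_i | y_i ~ N(b_mean_i, v) under the current parameters
    v = d * s / (s + m * d)
    b_mean = v * (y - mu).sum(axis=1) / s
    # M-step: closed-form maximizers of Q(Theta | Theta_r)
    mu_new = np.mean(y - b_mean[:, None])
    d_new = np.mean(b_mean**2 + v)
    s_new = np.mean((y - mu_new - b_mean[:, None])**2 + v)
    return mu_new, d_new, s_new

rng = np.random.default_rng(2)
n, m = 200, 5                                     # hypothetical design
y = 2.0 + rng.normal(scale=1.0, size=(n, 1)) + rng.normal(scale=np.sqrt(0.5), size=(n, m))
theta = (float(y.mean()), 0.5, 2.0)               # starting values (mu, d, s)
trace = [loglik(y, *theta)]
for _ in range(100):
    theta = em_step(y, *theta)
    trace.append(loglik(y, *theta))
print(theta)
print(np.all(np.diff(trace) >= -1e-8))            # True: the likelihood never decreases
```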

Note that M is a point-to-set map; that is, \( M({\varvec{\Theta }}_{r}) \) is the set of \( {\varvec{\Theta }}\) values that maximize \(Q({\varvec{\Theta }}|{\varvec{\Theta }}_{r})\). Many applications of EM involve the curved exponential family, for which the E-step and M-step take special forms. Sometimes, however, it may not be numerically feasible to perform the M-step exactly. We therefore define a generalized EM (GEM) algorithm to be an iterative scheme \( {\varvec{\Theta }}_{r}\rightarrow {\varvec{\Theta }}_{r+1} \in M({\varvec{\Theta }}_{r})\), where \( {\varvec{\Theta }}\rightarrow M({\varvec{\Theta }}) \) is a point-to-set map such that

\[ Q({\varvec{\Theta }}^{'}|{\varvec{\Theta }}) \ge Q({\varvec{\Theta }}|{\varvec{\Theta }}) \quad \text {for every } {\varvec{\Theta }}^{'} \in M({\varvec{\Theta }}). \]

EM is a special case of GEM. For any instance \( \{{\varvec{\Theta }}_{r}\} \) of a GEM algorithm,

and

See Wu (1983) for more details.
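The monotonicity behind these results rests on the decomposition \(L(\Theta')=Q(\Theta'|\Theta)-H(\Theta'|\Theta)\). Here \(Q(\Theta'|\Theta)=E\{\log f(y,b|\Theta')\mid y,\Theta\}\) and \(H(\Theta'|\Theta)=E\{\log f(b|y,\Theta')\mid y,\Theta\}\), as in Wu (1983). The decomposition is combined with \(H(\Theta'|\Theta)\le H(\Theta|\Theta)\), which is Gibbs' inequality. Hence, for any GEM step,

\[ L(\Theta_{r+1})-L(\Theta_r)=\{Q(\Theta_{r+1}|\Theta_r)-Q(\Theta_r|\Theta_r)\}+\{H(\Theta_r|\Theta_r)-H(\Theta_{r+1}|\Theta_r)\}\ge 0. \]

The sketch below checks both facts numerically for a single subject. It assumes the hypothetical model \(y_j=\mu+b+\varepsilon_j\), with \(b\sim N(0,d)\) and \(\varepsilon_j\sim N(0,s)\). The data and parameter values are arbitrary.

```python
import numpy as np

# Hypothetical single-subject model: y_j = mu + b + e_j, b ~ N(0, d), e_j ~ N(0, s).

def posterior(y, mu, d, s):
    """Mean and variance of b | y under parameters (mu, d, s)."""
    m = len(y)
    v = d * s / (s + m * d)
    return v * np.sum(y - mu) / s, v

def log_marginal(y, mu, d, s):
    """log f(y | Theta) with y ~ N(mu 1, s I + d 11'), via Sherman-Morrison."""
    m = len(y)
    r = y - mu
    quad = (r @ r - d * r.sum() ** 2 / (s + m * d)) / s
    return -0.5 * (m * np.log(2 * np.pi) + (m - 1) * np.log(s) + np.log(s + m * d) + quad)

def Q(new, cur, y):
    """E{log f(y, b | new) | y, cur}."""
    mu, d, s = new
    bm, v = posterior(y, *cur)                    # expectation taken under the current Theta
    m = len(y)
    e_sq = np.sum((y - mu - bm) ** 2) + m * v     # E sum_j (y_j - mu - b)^2
    return (-0.5 * m * np.log(2 * np.pi * s) - e_sq / (2 * s)
            - 0.5 * np.log(2 * np.pi * d) - (bm ** 2 + v) / (2 * d))

def H(new, cur, y):
    """E{log f(b | y, new) | y, cur}."""
    bm, v = posterior(y, *cur)
    bm_new, v_new = posterior(y, *new)
    return -0.5 * np.log(2 * np.pi * v_new) - ((bm - bm_new) ** 2 + v) / (2 * v_new)

y = np.array([1.2, 0.4, 2.1, 1.7])
cur, new = (1.0, 0.8, 0.6), (1.3, 1.1, 0.4)
gap = log_marginal(y, *new) - (Q(new, cur, y) - H(new, cur, y))
print(abs(gap) < 1e-10)                    # True: L(new) = Q(new|cur) - H(new|cur)
print(H(new, cur, y) <= H(cur, cur, y))    # True: Gibbs' inequality
```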


Cite this article

Taavoni, M., Arashi, M. Kernel estimation in semiparametric mixed effect longitudinal modeling. Stat Papers 62, 1095–1116 (2021). https://doi.org/10.1007/s00362-019-01125-8
