Abstract

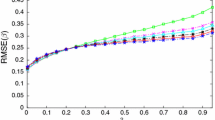

To assess the precision of measurement systems that classify items dichotomously and allow repeated ratings, the maximum likelihood method is commonly used to estimate misclassification probabilities. A computationally simpler and more intuitive alternative is the method of simple majority, in which each item is labelled as conforming if the majority of its repeated classification outcomes are conforming. A previous study indicated that this technique yields estimators with a lower mean squared error than the corresponding maximum likelihood estimators while sharing their asymptotic properties. However, some circumstances require measurement systems with a wider scale of responses. In this paper, we propose estimators based on simple majority results for evaluating the classification errors of measurement systems that rate items in a trichotomous domain. We investigate their properties and compare their performance with that of maximum likelihood estimators, focusing on the context in which the true quality states of the items cannot be determined. The simple majority procedure is modelled using a mixture of three multinomial distributions. The proposed estimators are shown to be a competitive alternative because they have closed-form expressions and perform similarly to maximum likelihood estimators.

References

Blischke WR (1962) Moment estimators for the parameters of a mixture of two binomial distributions. Ann Math Stat 33:444–454

Blischke WR (1964) Estimating the parameters of mixtures of binomial distributions. J Am Stat Assoc 59:510–528

Boyles RA (2001) Gage capability for pass-fail inspection. Technometrics 43:223–229

Bross I (1954) Misclassification in 2\(\times \)2 tables. Biometrics 10:478–486

Chen LH, Chang FM, Chen YL (2011) The application of multinomial control charts for inspection error. Int J Ind Eng 18(5):244–253

De Mast J, Van Wieringen WN (2007) Measurement system analysis for categorical measurements: Agreement and kappa-type indices. J Qual Technol 39:191–202

De Mast J, Van Wieringen WN (2010) Modeling and evaluating repeatability and reproducibility of ordinal classifications. Technometrics 52:94–106

Fujisawa H, Izumi S (2000) Inference about misclassification probabilities from repeated binary responses. Biometrics 56:706–711

Greenberg BS, Stokes SL (1995) Repetitive testing in the presence of inspection errors. Technometrics 37(1):102–111

Gustafson PI (2003) Measurement error and misclassification in statistics and epidemiology: impacts and Bayesian adjustments. Chapman & Hall, New York

Hui SL, Zhou XH (1998) Evaluation of diagnostic tests without gold standards. Stat Methods Med Res 7:354–370

Johnson NL, Kotz S (1985) Some tests for detection of faulty inspection. Stat Pap 26:19–29

Johnson NL, Kotz S (1988) Estimation from binomial data with classifiers of known and unknown imperfections. Nav Res Logist 35:147–156

Johnson NL, Kotz S, Wu X (1991) Inspection errors for attributes in quality control. Chapman & Hall, London

Li J, Tsung F, Zou C (2014) Multivariate binomial/multinomial control chart. IIE Trans 46:526–542

McLachlan GJ, Krishnan T (1997) The EM algorithm and extensions. Wiley, New York

Pham-Gia T, Turkkan N (2005) Bayesian decision criteria in the presence of noises under quadratic and absolute value loss functions. Stat Pap 46:247–266

Quinino RC, Ho LL, Suyama E (2013) Alternative estimator for the parameters of a mixture of two binomial distributions. Stat Pap 54:47–69

Van Wieringen WN, De Mast J (2008) Measurement system analysis for binary data. Technometrics 50:468–478

Acknowledgements

The authors would like to thank the Editor and the anonymous reviewers for their valuable comments, which enabled great improvement of the manuscript. The fourth author would also like to acknowledge CNPq (Process # 304670/2014-6) for partial financial support.

Additional information

The first author is a member of the professorial staff of the Federal Center of Technological Education of Minas Gerais and is grateful for the institutional support received during the preparation of this article.

Appendix

Proof of Lemma:

The condition “\(W_0^i > W_1^i \text{ and } W_0^i > W_2^i\)” in the definition of \(F_i\) is equivalent to the event “\(2 W_1^i + W_2^i < r \text{ and } W_1^i + 2 W_2^i < r\)”, because \( W_0^i + W_1^i + W_2^i = r \). Therefore,

and according to Fig. 3, \( \Big [ W_1^i + W_2^i < \displaystyle { \frac{r}{2}} \Big ] \subset \big [ F_i = 0 \big ] \); consequently,

However, \( P \Big ( W_1^i + W_2^i < \displaystyle { \frac{r}{2}}\ \Big |\ Y_i =0 \Big ) = P \Big ( W_0^i > \displaystyle { \frac{r}{2} }\ \Big |\ Y_i =0 \Big )\), and \(W_0^i \Big |\ Y_i = 0 \) follows a binomial distribution with r trials and a probability of “success” of \(1 - \pi _0^1 - \pi _0^2\). Therefore, for sufficiently large r, we obtain the following approximation:

where Z follows a standard normal distribution and \(\Phi \) is its cumulative distribution function. The restrictions expressed in (3) imply that

Hence, \( \displaystyle { \lim _{r \rightarrow \infty } }\ P \Big ( W_1^i + W_2^i < \displaystyle { \frac{r}{2}}\ \Big |\ Y_i =0 \Big ) = 1\); thus,

The proofs are analogous for \(k=1 \) and \( k =2 \). This completes the demonstration of the assertion presented in (8). The equality given in (9) is an immediate consequence of (8), and from

it follows that \( \displaystyle { \lim _{r \rightarrow \infty } }\ P \big ( F_i = k \big ) = \theta _k \) for \(k=0\). Similarly, we can demonstrate that this fact is valid for \(k=1\) and \(k=2\), and thus, equality (10) is proven. \(\square \)
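The displays dropped from the proof of the Lemma can be sketched as follows. This is a reconstruction from the surrounding text rather than the authors' typeset equations. The shorthand \(p_0 = 1 - \pi _0^1 - \pi _0^2\) is introduced here for convenience, and the sketch assumes that the restrictions in (3) give \(1/2< p_0 < 1\), that (9) states that the off-diagonal conditional probabilities vanish in the limit, and that \(\theta _y = P(Y_i = y)\).

```latex
\begin{align*}
P\big(F_i = 0 \mid Y_i = 0\big)
  &\ge P\Big(W_1^i + W_2^i < \tfrac{r}{2} \,\Big|\, Y_i = 0\Big)
   = P\Big(W_0^i > \tfrac{r}{2} \,\Big|\, Y_i = 0\Big) \\
  &\approx P\bigg(Z > \frac{r/2 - r p_0}{\sqrt{r\, p_0 (1 - p_0)}}\bigg)
   = \Phi\bigg(\frac{\sqrt{r}\,\big(p_0 - \tfrac{1}{2}\big)}{\sqrt{p_0 (1 - p_0)}}\bigg)
   \xrightarrow[r \to \infty]{} 1, \\
P\big(F_i = 0\big)
  &= \sum_{y=0}^{2} P\big(F_i = 0 \mid Y_i = y\big)\, \theta_y
   \xrightarrow[r \to \infty]{} 1 \cdot \theta_0 + 0 \cdot \theta_1 + 0 \cdot \theta_2 = \theta_0 .
\end{align*}
```

The first line uses the inclusion read from Fig. 3; the argument of \(\Phi \) diverges to \(+\infty \) precisely because \(p_0 > 1/2\) under the stated assumption.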

Proof of Theorem 1:

Note that

Therefore, \( \text{ E } \big [ \widehat{\theta }_1 \big ] = \displaystyle {- \frac{1}{n} } \displaystyle {\sum _{i= 1 }^{n}} \text{ E } \big [ F_i ( F_i -2) \big ] = P\big ( F_i =1 \big ) \), and by the limit given in (10), we can conclude that \(\displaystyle { \lim _{r \rightarrow \infty } }\, \text{ E } \big [ \widehat{\theta }_k \big ] = \theta _k \) for \(k=1\). This equality can also be established for \(k=0\) and \(k=2\) in a similar manner. \(\square \)
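The step behind the expectation above is that, for \(F_i \in \{0, 1, 2\}\), the polynomial \(-F_i (F_i - 2)\) acts as the indicator of the event \([F_i = 1]\). A brief worked check, offered as a sketch of the omitted display rather than the authors' exact equation:

```latex
\begin{align*}
F_i (F_i - 2) &=
  \begin{cases}
    0,  & F_i = 0, \\
    -1, & F_i = 1, \\
    0,  & F_i = 2,
  \end{cases}
  \qquad \text{so} \qquad -F_i (F_i - 2) = I_{[F_i = 1]}, \\
\mathrm{E}\big[F_i (F_i - 2)\big]
  &= 0 \cdot P(F_i = 0) + (-1) \cdot P(F_i = 1) + 0 \cdot P(F_i = 2)
   = -P(F_i = 1).
\end{align*}
```

The same device gives \(I_{[F_i = 0]} = (F_i - 1)(F_i - 2)/2\) and \(I_{[F_i = 2]} = F_i (F_i - 1)/2\), which is why the cases \(k=0\) and \(k=2\) follow in the same way.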

Proof of Theorem 2:

The variance of \(\widehat{\theta }_1\) can be written as

Moreover,

Therefore,

and thus, \( \begin{array}{lll} \text{ Var }\big [\widehat{\theta }_1 \big ]= & {} \displaystyle { \frac{1}{n} \cdot P\big (F_i =1 \big ) \big [ 1 - P\big (F_i =1 \big ) \big ]}. \end{array}\) By Chebyshev’s inequality, for all real \(\epsilon >0 \),

However, \( \text{ Var }\big [\widehat{\theta }_1 \big ] \rightarrow 0 \) as \( n \rightarrow \infty \), and \( \text{ E } \big [ \widehat{\theta }_1 \big ] \rightarrow \theta _1\) as \(r\rightarrow \infty \). Consequently, \(\widehat{\theta }_1\) converges in probability to \(\theta _1\) as \( n \rightarrow \infty \) and \(r\rightarrow \infty \), that is, \(\widehat{\theta }_1\) is consistent. The same reasoning and conclusion are valid for the statistics \(\widehat{\theta }_0\) and \(\widehat{\theta }_2\). \(\square \)
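The variance computation and the Chebyshev bound used in the proof of Theorem 2 can be written out as below. This is a sketch of the omitted displays, assuming that the \(F_i\) are independent and identically distributed across the n items and that \(\widehat{\theta }_1\) is the sample mean of the indicators \(I_{[F_i = 1]} = -F_i (F_i - 2)\).

```latex
\begin{align*}
\mathrm{Var}\big[\widehat{\theta}_1\big]
  &= \frac{1}{n^2} \sum_{i=1}^{n} \mathrm{Var}\big[I_{[F_i = 1]}\big], \\
\mathrm{Var}\big[I_{[F_i = 1]}\big]
  &= \mathrm{E}\big[I_{[F_i = 1]}^2\big] - \Big(\mathrm{E}\big[I_{[F_i = 1]}\big]\Big)^2
   = P(F_i = 1)\,\big[1 - P(F_i = 1)\big], \\
P\Big(\big|\widehat{\theta}_1 - \mathrm{E}\big[\widehat{\theta}_1\big]\big| \ge \epsilon\Big)
  &\le \frac{\mathrm{Var}\big[\widehat{\theta}_1\big]}{\epsilon^2}
   = \frac{P(F_i = 1)\,\big[1 - P(F_i = 1)\big]}{n\, \epsilon^2}
   \le \frac{1}{4 n \epsilon^2}.
\end{align*}
```

The final bound does not depend on r, so the variance vanishes as \(n \rightarrow \infty \) whatever the number of repetitions; r enters only through the bias \(P(F_i = 1) - \theta _1\).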

Proof of Theorem 3:

To prove that \(\widehat{\pi }_0^1\) satisfies the conditions described, we note that the sequence \( \displaystyle { \Bigg \{ \frac{(F_i-1) (F_i - 2)}{2} \Bigg \}_{r \ge 1}} \) converges in probability \(\big (\overset{P}{\longrightarrow }\big )\) to the variable \({\displaystyle { \frac{(Y_i -1)(Y_i -2)}{2}}}\), that is, for all real \(\epsilon > 0 \),

In fact, if we define \( I_{\big [F_i = 0\big ]} = \displaystyle { \frac{(F_i-1) (F_i - 2)}{2}} \) and \(I_{\big [Y_i = 0\big ]} = \displaystyle { \frac{(Y_i -1)(Y_i -2)}{2}} \), then we can write

where \( A = \{ (0,1),\ (0,2),\ (1,0),\ (2,0) \} \). Therefore, by applying (9), we obtain the equality given in (14). In addition, the sequence \( \displaystyle { \Bigg \{ \frac{ W_1^i}{r} \cdot \frac{(F_i-1) (F_i - 2)}{2}} \Bigg \}_{ r \ge 1}\) converges in mean square to the variable \(\displaystyle { \pi _{0}^{1}} \cdot \frac{(Y_i -1)(Y_i -2)}{2}\), that is,

Indeed, if we let

then we can write \(\displaystyle { \text{ E } \Bigg [ \Bigg \{ \frac{ W_1^i}{r} \cdot I_{\big [F_i = 0\big ]} - \pi _{0}^{1} \cdot I_{\big [Y_i = 0\big ]} \Bigg \}^2 \Bigg ]}\)

where \(\mathfrak {R} = \displaystyle { \sum _{(y,f) \in A} \text{ E }_{y,f} \cdot P\big (F_i = f \big | Y_i =y \big )\cdot P\big ( Y_i = y \big )}\) and A is the set defined previously. Because the expected values that appear in \(\mathfrak {R}\) are bounded, the limits given in (9) imply that \( \displaystyle { \lim _{r \rightarrow \infty } } \mathfrak {R} = 0 \). For this reason, we now focus on the asymptotic behaviour of \(\text{ E }_{0,0}\).

Note that

Thus, \( \text{ E }_{0,0} = \text{ E } \Bigg [ \Bigg \{ \displaystyle { \frac{ W_1^i}{r} } - \pi _{0}^{1} \Bigg \}^2 \Bigg | Y_i =0,\ F_i = 0 \Bigg ] \)

Furthermore, as \( W_1^i \big |\, Y_i = 0 \) follows a binomial distribution with r trials and a probability of “success” of \( \pi _0^1 \), we conclude that \( \displaystyle { \text{ E } \Bigg [ \frac{ W_1^i}{r} \ \Bigg |\ Y_i = 0 \Bigg ] = \pi _0^1} \) and

From the last equality, inequality (16) and result (10), it follows that \(\displaystyle {\lim _{r \rightarrow \infty } \text{ E }_{0,0} } = 0 \). This proves (15) and establishes the convergence in mean square stated above.

Markov’s inequality guarantees that

Thus, as a consequence of the probability limits established above, we have

and

which imply that

i.e., \(\widehat{\pi }_{0}^{1}\) is a consistent estimator. Additionally, as this statistic is bounded,

that is, \( \widehat{\pi }_{0}^{1} \) is an asymptotically unbiased estimator. By the same reasoning, it can be shown that the other statistics \(\widehat{\pi }_k^{k'}\) are consistent and asymptotically unbiased. \(\square \)
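The asymptotic statements in Theorems 1 to 3 can be illustrated numerically. The following Python sketch simulates the mixture of three multinomial distributions and computes simple-majority estimates. Several ingredients are assumptions made for illustration and are not taken from the paper: the function names, the parameter values, the random tie-breaking rule, and the form of \(\widehat{\pi }_k^{k'}\) as the average of \(W_{k'}^i / r\) over the items with \(F_i = k\) (suggested by, but not confirmed in, the proof of Theorem 3).

```python
import numpy as np


def simulate_ratings(n, r, theta, pi, rng):
    """Draw latent states Y_i and the rating counts (W_0^i, W_1^i, W_2^i)."""
    y = rng.choice(3, size=n, p=theta)  # latent true states
    # Given Y_i = k, the r repeated ratings are multinomial with row pi[k].
    w = np.vstack([rng.multinomial(r, pi[k]) for k in y])
    return y, w


def simple_majority(w, rng):
    """Label each item with its most frequent rating.

    Ties are broken uniformly at random (an assumption of this sketch):
    jitter below 0.5 cannot reorder distinct integer counts.
    """
    jitter = 0.5 * rng.random(w.shape)
    return np.argmax(w + jitter, axis=1)


def majority_estimators(f, w, r):
    """Closed-form estimates built from the majority labels F_i."""
    theta_hat = np.array([(f == k).mean() for k in range(3)])
    pi_hat = np.zeros((3, 3))
    for k in range(3):
        mask = f == k
        if mask.any():
            # Assumed form: mean of W_{k'}^i / r over items with F_i = k.
            pi_hat[k] = w[mask].mean(axis=0) / r
    return theta_hat, pi_hat


rng = np.random.default_rng(0)
theta = np.array([0.6, 0.3, 0.1])          # hypothetical P(Y_i = k)
pi = np.array([[0.80, 0.15, 0.05],         # hypothetical P(rating k' | Y_i = k)
               [0.10, 0.80, 0.10],
               [0.05, 0.15, 0.80]])
n, r = 5000, 15

y, w = simulate_ratings(n, r, theta, pi, rng)
f = simple_majority(w, rng)
theta_hat, pi_hat = majority_estimators(f, w, r)
print(np.round(theta_hat, 3))
print(np.round(pi_hat, 3))
```

Each row of `pi` places more than one half of its mass on the diagonal, mirroring the kind of restriction the Lemma relies on. Rerunning with a small `r` (for example 3) shows the estimates drifting away from the true values, which is the finite-r bias that the limits in (8)–(10) remove.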

Proof of Remark 2:

The convergence of the first two components follows directly from (10). To prove \(\displaystyle { \lim _{r \rightarrow \infty } \pi _{k,r}^{k'}} = \pi _k^{k'}\), note that Bayes’ Theorem and limits (8)–(10) together imply that

Moreover, we can write

and through a similar strategy, we obtain

From the above inequality and limits (8)–(10) and (18), it can be found that \( \displaystyle { \lim _{r \rightarrow \infty } \pi _{0,r}^1 = \pi _0^1} \). Using the same arguments, this result can be proven for the other values of k and \(k'\). \(\square \)
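The Bayes step invoked at the start of this proof can be sketched for \(k=0\) as follows. This is a plausible form of the omitted display, not the authors' exact equation; it assumes \(\theta _0 = P(Y_i = 0) > 0\) and that (8) and (10) give the limits of \(P(F_i = 0 \mid Y_i = 0)\) and \(P(F_i = 0)\), respectively.

```latex
\[
P\big(Y_i = 0 \mid F_i = 0\big)
  = \frac{P\big(F_i = 0 \mid Y_i = 0\big)\, \theta_0}{P\big(F_i = 0\big)}
  \xrightarrow[r \to \infty]{} \frac{1 \cdot \theta_0}{\theta_0} = 1 .
\]
```

In words, once r is large the majority label identifies the latent state with probability approaching one, so quantities conditioned on \(F_i = 0\) behave like the corresponding quantities conditioned on \(Y_i = 0\).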

Cite this article

de Morais, R.S., Quinino, R.d.C., Suyama, E. et al. Estimators of parameters of a mixture of three multinomial distributions based on simple majority results. Stat Papers 60, 1283–1316 (2019). https://doi.org/10.1007/s00362-017-0875-y

DOI: https://doi.org/10.1007/s00362-017-0875-y

Keywords

- Misclassification

- Measurement system

- Mixture of multinomial distributions

- Simple majority procedure

- Latent class model

- Maximum likelihood estimators