Abstract

Accuracy, precision, repeatability and long-term stability are the most important requirements for reliable airborne humidity measurements, which are needed for climate models or to validate remote sensing instrumentation, e.g. on satellites. However, various hygrometer artifacts, which depend on the individual sensor principle and the application profile, frequently cause problems and significantly complicate the choice of hygrometer. Sensor intercomparisons are one way of providing the information needed for an optimal choice.

In this paper we present the first part of a blind, static, laboratory-based intercomparison of a new, calibration-free, 1.4 μm diode laser-based optical hygrometer (SEALDH) with the two most important measurement principles for airborne hygrometry (frost-point hygrometers, FPH, and Lyman-alpha fluorescence hygrometers, LAFH). During three days of measurement, the TDL hygrometer achieved an H2O resolution of up to 0.5 ppmv (Δt = 2 s) at tropospheric pressure and H2O concentration levels (100–800 hPa, 10 to 8000 ppmv H2O). Its absolute accuracy was investigated via blind intercomparison with two reference FPHs and a LAFH. Without any calibration of SEALDH, i.e. without a comparison to a water vapor standard, we achieve excellent agreement with the reference sensors, with an average systematic offset (over all three days) of −3.9 % ± 1.5 %, which is fully consistent with the sensor’s uncertainty bounds.

Furthermore, we reevaluated the SEALDH data of days 2 and 3 in a calibrated mode using an independent set of FPH data from the first day and found an 8-fold accuracy improvement, yielding an excellent overall relative deviation of only 0.52 % ± 1.5 % with respect to a LAFH and a D/FPH sensor.

1 Introduction

The significant influence of water vapor on the global climate was recognized early, and the first radiosonde soundings were carried out, largely by Hugo Hergesell, in the early 1890s to quantify the atmospheric water content. Currently, the somewhat limited quality of atmospheric water vapor data hinders its efficient use in climate models, forecast modeling or climate trend studies. Furthermore, atmospheric H2O profiles are needed for satellite validation [1]. Despite constant improvements in sensor accuracy and response time, it remains a technical challenge to measure atmospheric water vapor accurately and precisely with sufficient temporal resolution in harsh field situations. This is particularly true in the upper troposphere and lower stratosphere region (UT/LS).

The most important platforms for atmospheric H2O measurements are balloon-borne radiosondes [2], satellites [1], ground-based monitoring stations [3] and finally aircraft [4]. Radiosondes and balloon platforms offer the advantage of high-altitude, stratospheric coverage (up to 50 km), combined with well-known instrument properties and frequent validation in numerous intercomparisons, e.g. [2]. However, they provide only very limited spatial coverage, which is also strongly influenced by the wind conditions. Satellites (e.g. ENVISAT [5]), in contrast, are indispensable for large-scale spatial coverage, but only at very limited spatial resolution (10s to 100s of km), along fixed trajectories and at fixed observation intervals [6]. Further, local measurements are needed to validate the satellite retrieval processes. Ground-based [7], FTIR-based [8] or LIDAR-based [9] stationary monitoring stations [3] are easy to access but have only limited applications: they are often restricted to the lower troposphere and are difficult to apply to global questions, particularly in marine areas. Airborne platforms like the new German HALO research aircraft [10], with long range (12500 km), high-altitude capabilities (15500 m) and large payloads (2800 kg), provide a solution for numerous uncovered research tasks, since flight plans can easily be aligned with scientific questions, e.g. detailed investigations of cirrus clouds or intersections with satellite trajectories using a “flying” laboratory. The use of passenger [11] and cargo aircraft [12] as sensing platforms allows very frequent measurement cycles at relatively low cost and provides results along commercial aircraft routes as well as large long-term measurement series, e.g. in the MOZAIC [4] airborne program (with 7500 flights and 54000 flight hours during 3 years). Such programs will be an important future source for a broad meteorological database [13].

1.1 Airborne hygrometry

Historically, a very broad range of hygrometer principles has been used for atmospheric monitoring on the above platforms. The most commonly used water vapor sensors are dew-/frost-point hygrometers, solid state sensors and Lyman-alpha or tunable diode laser-based spectroscopic hygrometers.

Dew-/frost-point hygrometers (DPH/FPH) [14] determine the temperature needed for liquid (dew-point) or solid (frost-point) condensate formation on a small cooled surface, either via an optical reflection measurement (e.g. Buck CR1 [15]) or electronically, via the damping of a surface acoustic wave [16]. Today, special commercial DPH/FPH are available for high-accuracy metrological applications [17], for frost points even below −90 °C, as well as for airborne sensing [18]. Recently new, compact and very low weight FPHs have been presented for weather balloon measurements [16]. Common problems of DPH/FPH instruments are primarily the slow temporal response at low FPs, and the risk of unwanted interfering surface deposits when measuring polluted air.

Condensing D/FPHs are often replaced by solid state sensors (SSS) in applications with severe restrictions on sensor size, weight, power consumption and/or cost. Hygristors, which use bulk resistance changes during H2O uptake [19], offer the lowest cost and size, but only rather low accuracy and repeatability, which prevents their frequent use. Improved SSS performance is available via polymer-based thin film capacitance sensors (TFCS) [20]. Again, the time response is limited by adsorption/desorption processes, particularly at low humidities. Further, TFCS are “relative humidity (rH)” sensors, which require additional temperature and pressure measurements if absolute H2O values are needed. A risk in airborne TFCS use is interference by hydrocarbon compounds (e.g. kerosene vapor), which may permanently change the sensor calibration or, even worse, lead to a long-term drift of the sensor. With a sensor recalibration every 500 hours in a calibration facility [21], TFCS showed during the MOZAIC program [22] a long-term accuracy in the 5–10 % range and, in the troposphere, a time response better than 10 s [23]. However, TFCS performance is insufficient for stratospheric measurements with rH below 5 % and absolute humidity levels of 10 ppm and below.

Unlike FPH and SSS sensors, optical hygrometer principles (using UV lamps, IR lamps or diode lasers) do not require direct contact with the gas. Strong water absorption in the vacuum ultraviolet, i.e. at the Lyman-alpha (Lα) emission line of atomic hydrogen at 121.6 nm, is used for two powerful and frequently used sensor principles. The Lyman-alpha Absorption Hygrometer (LAAH) [24] uses the direct absorption of water vapor and allows sensitive and fast (ms) H2O detection with an optical absorption path of only a few millimeters. Problems are caused by O2 cross interference in the absorption signal, which requires efficient offset correction at low water vapor volume mixing ratios (WVMR). Further critical points requiring frequent calibration are the long-term stability of the UV lamps, long-term intensity fluctuations, and degradation of the MgF2 windows via solarization. Commercial LAAHs were available in the 1990s (Buck Research, e.g. the “L-5” [15]). This instrument covered a frost-/dew-point range of −80 °C to 20 °C at 100 Hz time resolution and achieved 4 % accuracy in the boundary layer but only 50 % in the upper troposphere [15]. An improved two path/two wavelength calibration strategy is described in [25]. In some cases the LAAH speed was combined with the FPH accuracy, e.g. for an in-flight calibration [26].

Significantly higher sensitivity and chemical selectivity were achieved with the development of the Lyman-alpha Fluorescence Hygrometer (LAFH), which uses H2O photodissociation to yield an excited OH∗ molecule and an H atom; the OH∗ is then very effectively detected against a zero background via its chemiluminescence at 305–325 nm [27]. A very well characterized airborne LAFH, called FISH (fast in situ stratospheric hygrometer) [28], which has been used in many balloon [29] and airplane campaigns [30], achieves a precision better than 0.2 ppmv at 1 s integration time, with an accuracy of 4 % in the range up to 1000 ppmv.

Instead of UV light, IR absorption hygrometers (IAH) use spectrally broadband absorption of IR light, typically in the short-wave mid-infrared around 2.6 μm. A mechanical chopper wheel selects two wavelength ranges from an infrared source, one in the fundamental H2O absorption band (e.g. 2.6 μm) and one in a non-absorbing reference range (e.g. 2.5 μm). IAHs [31, 32] achieve, at 50 cm optical path length, 1 % accuracy for H2O densities above 2 g/m3 and 6 % at around 0.1 g/m3. Typical airborne applications are measurements of fast vertical H2O fluxes in and above the planetary boundary layer [33].

Over the last years, tunable diode laser absorption spectroscopy (TDLAS), particularly in the near infrared spectral range between 1 and 3 μm, has had a large influence on atmospheric hygrometry [34–38]. This reflects the unique properties of diode lasers (DL), such as very high spectral resolution and spectral power density and continuous tunability, in combination with excellent technical properties, i.e. rather low cost, very small size, weight and power consumption, long laser lifetime, good beam quality and optical fiber coupling, to name a few. One interesting TDLAS variant employs photo-acoustic (PA) detection [39], but the linear dependence of the sensitivity on laser power makes diode lasers, with their rather low output power in the tens of mW, a suboptimal choice. Nevertheless, an airborne PA hygrometer with a differential acoustic detection scheme to suppress outside noise has been demonstrated, claiming detection limits below 1 ppmv at 5 s response time [40].

In most cases, however, TDLAS hygrometers use optical detection schemes. These can be classified according to (A) the light path type (single path [41], classical multipath beam [42], or cavity-enhanced (CE) techniques like Cavity-Ring-Down-Spectroscopy, CRDS [43], or Cavity-Enhanced-Absorption-Spectroscopy, CEAS [44]), (B) the wavelength modulation scheme (single modulation, i.e. direct TDLAS [45], or double modulation schemes like wavelength modulation spectroscopy, WMS [46], and others) and (C) the calibration strategy: calibration with the target gas (two- or multi-point calibration), a zero gas calibration (one-point calibration), or neither of them (calibration-free spectrometer, also called self-calibrating spectrometer).

The optical path length necessary for TDLAS depends on the required S/N ratio, the dynamic range, the line strength of the transition and the temporal response, but also on detector properties, choice of electronics, optical noise from fibers and other optical elements, and many other factors, making TDLAS system design a non-trivial optimization problem. Path length folding, such as in White [47], Herriott [35, 38], and astigmatic Herriott [48] arrangements, is a frequently applied principle, but difficult to optimize in airborne applications. Cavity-based techniques, e.g. [49, 50], combine, depending on mirror reflectivity, the longest path lengths with very small cell volumes. But this performance depends on mirror degradation and requires strict protection from mirror contamination, e.g. by aerosols, thereby excluding sampling-free open-path setups. Open-path TDLAS systems, however, avoid H2O adsorption/desorption and gas transport problems, maximize temporal response, can be combined with multipath arrangements to enhance sensitivity and can still be applied to harsh airborne applications like [37, 38, 51–53]. In addition, CRD systems have to “wait” for the individual build-up and ring-down events. This limits the wavelength scan speed, the absorption line recovery and therefore the temporal resolution, especially when resolving fine and fast water vapor structures during flight operations. Direct TDLAS allows kHz repetition rates and μs time resolution, so the time resolution is limited by the gas exchange rate, not by the spectroscopic properties. Most important for field and particularly for airborne instruments is the calibration strategy. WMS techniques [54–58] offer great sensitivity due to the small detection bandwidth and are very frequently employed in airborne hygrometers. However, they are severely hampered by the complicated dependence of their signal on line width, and hence on air pressure and temperature, and therefore need constant-pressure extractive detection or complicated 2-D calibration matrices. Due to its presumed S/N advantage, WMS is often combined with shorter absorption paths, giving smaller, lighter and more compact detection systems. This, however, comes at the price of decreased optical baseline stability, which limits the system performance or significantly increases the system complexity, the opto-mechanical and temperature stability requirements, as well as the effort for the calibration procedure, thereby offsetting the S/N “advantage”. In addition, it was shown recently that the WMS S/N advantage is only marginal with today’s high-bit-resolution DAQ cards [59].

Given the complexity of WMS, the missing robustness of cavity systems, and the need for complex and performance-limiting calibration procedures in most airborne hygrometers, it seems essential to develop a self-calibrating airborne TDLAS hygrometer based on direct absorption spectroscopy. Such an instrument is, in addition, much faster than frost/dew-point hygrometers, which represent the best absolute hygrometry principle.

In this paper we describe the application of the SEALDH spectrometer (Selective Extractive Airborne Laser Diode Hygrometer), an extractive, self-calibrating, absolute TDLAS instrument to a blind intercomparison with other hygrometer principles which took place in the framework of the joint research activity “Development and Evaluation of Novel Compact Hygrometers for Airborne Research” (DENCHAR) within the EU-project “European Facility for Airborne Research” (EUFAR). In addition we independently evaluated the SEALDH raw signals in a “calibrated mode” and discuss the performance and the risk of using an intercomparison-based sensor calibration.

2 Direct TDLAS

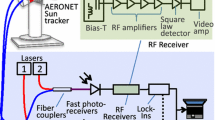

The basic principle of Tunable Diode Laser Absorption Spectroscopy (TDLAS, Fig. 1) [60] and the extraction of the absolute H2O volume mixing ratio [45] have been explained in detail in the literature. For completeness, the basics are discussed here briefly, with emphasis on the special features of this application.

TDLAS uses a narrow-band, continuously tunable diode laser as light source. The transmitted light is attenuated by wavelength-dependent molecule-specific absorption, while the laser wavelength is periodically modulated by a triangular laser current modulation. The repetition rate of this modulation is chosen depending on the individual application and is typically in the range between 100 Hz and 10 kHz. A time resolution in the ms to μs range is therefore possible and has already been demonstrated [61].

An appropriate absorption line near 1370 nm was selected in order to minimize cross-sensitivity to other gases or neighboring H2O lines as well as the temperature dependence of the line area [62]; the line was characterized with regard to line strength, air broadening and its temperature dependence [63]. Another advantage of this line is the excellent availability of high-quality telecom-grade fiber optical components and fiber-coupled laser modules, which minimizes size, weight and cost of the instrument and allows effective suppression of parasitic water absorption in air paths outside the intended measurement cell.

For low light intensities of a few mW, the amount of transmitted light I(ν) can be described by the extended Lambert–Beer equation, Eq. (1), which includes background radiation E(t) and broadband transmission losses Tr(t).

This can be converted to describe the H2O volume mixing ratio, c, Eq. (2) by applying the ideal gas law

Here c describes the H2O volume mixing ratio, \(k_B\) the Boltzmann constant, T the measured temperature, L the absorption path length, I(ν) the measured intensity at the detector, \(I_0(\nu)\) the initial light intensity, E(t) the background emission and Tr(t) the transmission losses. Finally, \(\frac{d\nu}{dt}\) describes the dynamic tuning coefficient of the laser, which has to be determined experimentally using the Airy signal obtained when the laser light is transmitted through a planar air-spaced etalon [45]. A closer look at Eq. (2) shows that no additional calibration parameter is needed to describe the transmitted laser light. Pressure and temperature sensors are calibrated, of course, but this is routinely done in metrology institutes with very high accuracy and stability (pressure uncertainty <10\(^{-4}\) (full scale), temperature uncertainty <0.1 K deviation). The length of the optical path is determined by the design of the mechanical structure. The temperature-dependent line strength S(T) is taken from HITRAN08 [64] or own measurements [65].
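For reference, a form of Eqs. (1) and (2) that is consistent with the symbol definitions above is sketched here. The normalized line shape function \(g(\nu-\nu_0)\) (with \(\int g\,d\nu = 1\)), the line center \(\nu_0\), the absorber number density N and the total gas pressure p are notation assumed for this sketch.

\[
I(\nu) = E(t) + I_0(\nu)\,\mathrm{Tr}(t)\,\exp\left[-S(T)\,g(\nu-\nu_0)\,N\,L\right] \tag{1}
\]

\[
c = -\frac{k_B\,T}{S(T)\,L\,p}\int \ln\left(\frac{I(\nu)-E(t)}{I_0(\nu)\,\mathrm{Tr}(t)}\right)\frac{d\nu}{dt}\,dt \tag{2}
\]

Eq. (2) follows from Eq. (1) by solving for the exponent, integrating over the line (which removes g), and inserting the ideal gas law in the form \(N = c\,p/(k_B T)\).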

Finally, no calibration with the target gas is required. The spectrometer can of course still be calibrated to increase its accuracy, also retrospectively if necessary. A typical raw data trace is shown (Fig. 2) to illustrate the data evaluation process, which is based entirely on Eq. (2). The raw signal shows a strong tuning-related amplitude modulation of the laser and a weak but clearly visible molecular H2O absorption signal. The entire fitting scheme is described in detail in [45] and is only explained briefly here. The model function used for all data evaluation in this paper is composed of a third-order polynomial for the baseline and a Voigt profile for the absorption line. Depending on the application and the signal quality, the line width is typically calculated from broadening coefficients and pressure and temperature measurements in order to minimize the degrees of freedom of the fit. The entire fit is realized using an in-house LabVIEW program which allows monitoring of and interaction with the fitting process at any time. A particular feature of the subsequent data evaluation is the decoupling of measurement and evaluation speed, which enables measurement rates of up to a few kHz and synchronous measurement of pressure and temperature. Each single measurement period (absorption scan) contains all the information needed to evaluate the signal in this absolute, self-calibrating way.

3 The SEALDH spectrometer

The spectrometer used in this intercomparison is based on the above-explained principle of direct TDLAS in combination with extractive gas sampling into a small optical measurement cell. Its basic configuration fully follows Fig. 1. The laser is modulated at a frequency of 140 Hz with a wavelength scan depth of 1.5 cm−1 across the (110) to (211) H2O absorption line at 1370 nm [65]. Successive scans (typically 20, depending on the application) are averaged and evaluated by fitting a model function consisting of a background polynomial and a Voigt line shape, from which the line area is extracted. Transmission and emission corrections according to Eq. (1) are applied as described in previous literature [45]. The line area, in combination with the ideal gas law, measured pressure, temperature and pressure broadening coefficient, and with HITRAN08 [64] line strength and partition function data, is used to compute the H2O volume mixing ratio. Full details of the instrument, e.g. the line locking scheme, the autonomous functions, details of the electronics and special instrumental adaptations to autonomous airborne measurements, will be published in [66].
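The last evaluation step, from fitted line area to mixing ratio, can be sketched in a few lines. This is a minimal illustration, not the instrument's LabVIEW evaluation: it assumes that Eq. (2) reduces to c = k_B·T·A/(S·L·p) once the fit has delivered the integrated line area A, and the line strength and line area used below are hypothetical round numbers, not the HITRAN08 or measured values.

```python
K_B = 1.380649e-23  # Boltzmann constant in J/K


def mixing_ratio(line_area_cm1, temperature_k, pressure_pa,
                 path_length_cm, line_strength_cm_per_molecule):
    """Volume mixing ratio from an integrated absorbance line area.

    line_area_cm1: integral of -ln((I - E) / (I0 * Tr)) over wavenumber, in cm^-1
    line_strength_cm_per_molecule: S(T) in cm^-1 / (molecule cm^-2)
    """
    # Total number density from the ideal gas law, converted from m^-3 to cm^-3.
    total_density_cm3 = pressure_pa / (K_B * temperature_k) * 1e-6
    return line_area_cm1 / (line_strength_cm_per_molecule
                            * path_length_cm * total_density_cm3)


# Hypothetical example: 400 hPa, 296 K, 1.1 m path, assumed S = 1e-20 cm/molecule.
c = mixing_ratio(line_area_cm1=0.009367, temperature_k=296.0,
                 pressure_pa=40000.0, path_length_cm=110.0,
                 line_strength_cm_per_molecule=1.0e-20)
print(f"H2O mixing ratio: {c * 1e6:.0f} ppmv")
```

Only measured quantities (A, T, p), a geometric quantity (L) and a spectroscopic constant (S) enter, which is what makes the evaluation calibration-free in the sense used here.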

The measurement cell of the SEALDH instrument is a new, fiber-coupled, extractive, multipath absorption cell in White configuration [47]. The external cell dimensions, 120 by 80 by 50 mm, yield an internal cell volume of about 300 cm3 and permit optical path lengths of about 1.1 meters. The built-in detector, thermocouple, and fiber coupling minimize parasitic water effects. An estimated flow of 5 sl/minute yields a response time of 10.8 seconds under the assumption of a turbulent flow (with approximately 98 % volume exchange (3 × cell volume)) and approximately 3.6 seconds at plug flow conditions with 1 cell volume exchange. In this configuration the cell is suitable for a concentration range of 50–25000 ppmv at pressures from 0 to 1200 hPa. Figure 3 shows two typical absorption signals for water concentration around 870 ppm and pressures of 400 and 200 hPa, both recovered with the compact White cell and fitted with the above-described Voigt profile.
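The quoted response times follow directly from the cell volume and the flow. The sketch below reproduces that arithmetic under the simplifying assumption that the stated 5 sl/minute can be treated as the volumetric flow through the cell; the function name is chosen here for illustration only.

```python
def exchange_time_s(cell_volume_cm3, flow_l_per_min, n_volumes=1.0):
    """Time needed to pass n_volumes cell volumes through the cell."""
    flow_cm3_per_s = flow_l_per_min * 1000.0 / 60.0
    return n_volumes * cell_volume_cm3 / flow_cm3_per_s


plug_flow = exchange_time_s(300.0, 5.0, n_volumes=1.0)   # one cell volume
turbulent = exchange_time_s(300.0, 5.0, n_volumes=3.0)   # three cell volumes
print(f"plug flow: {plug_flow:.1f} s, turbulent mixing: {turbulent:.1f} s")
```

With 300 cm3 and 5 l/min this gives 3.6 s for one volume exchange and 10.8 s for three, matching the values in the text.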

A quick evaluation of the very short-term (single scan) spectrometer performance is done using the absorption profiles (Fig. 3) via analysis of the residual (Fig. 3, below) between the measured data and the model. At 400 hPa we find excellent agreement between measurement and model fit, with a 1σ noise on the residual of about 10\(^{-4}\) OD. From the peak OD and the standard deviation of the residual at 400 hPa we determine an SNR of better than 480. The residual of the 200 hPa trace shows clear deviations between the Voigt model and the measured line shape, indicating that higher-order line shape models like Galatry might be needed. In order to determine the SNR in this case, we use the “local” residual in a spectral region (blue shaded in Fig. 3) which is not influenced by the line shape deficits, which then yields an SNR of 400. Using Eq. (2) we convert the measured line areas in the high (low) pressure case into absolute H2O concentrations of 860 (880) ppmv (Table 1), which in turn allows us to estimate 1σ noise equivalent concentrations of 1.8 (2.2) ppmv from the SNR. In a further computation step we can convert the noise equivalent concentrations into an approximate frost-point precision, or normalize \(C_{\mathrm{noise}}\) by path length and measurement bandwidth to yield \(C_{\mathrm{precision}}\).
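The step from SNR to noise equivalent concentration is a simple division, sketched below with the values quoted above. The helper `snr_from_residual` and its example residual are hypothetical and only illustrate the definition "peak OD divided by the standard deviation of the residual".

```python
from statistics import pstdev


def snr_from_residual(peak_od, residual_od):
    """SNR defined as peak optical density over the 1-sigma residual noise."""
    return peak_od / pstdev(residual_od)


def noise_equivalent_ppmv(concentration_ppmv, snr):
    """1-sigma noise equivalent concentration for a given SNR."""
    return concentration_ppmv / snr


# Values from the text: 400 hPa case and 200 hPa case.
print(f"400 hPa: {noise_equivalent_ppmv(860.0, 480.0):.1f} ppmv")
print(f"200 hPa: {noise_equivalent_ppmv(880.0, 400.0):.1f} ppmv")
```

This reproduces the 1.8 ppmv and 2.2 ppmv noise equivalent concentrations given for the two pressures.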

To investigate the spectrometer’s longer-term stability we measured the water vapor concentration of a humidified reference gas provided by a PTB humidity generator. The highly stable, constant water vapor concentration in air produced by the generator was measured by the spectrometer at a pressure \(P_{\mathrm{absolute}}\) = 907.54 hPa (\(P_{\mathrm{noise}}\) = 0.124 hPa (1σ)), a temperature \(T_{\mathrm{absolute}}\) = 21.47 °C (\(T_{\mathrm{noise}}\) = 0.045 K (1σ)) and a concentration \(C_{\mathrm{absolute}}\) = 1171.1 ppmv (\(C_{\mathrm{noise}}\) = 0.877 ppmv (1σ)). The statistical variations of the spectrometer H2O concentration signal were evaluated via the Allan variance [67, 68]. The total H2O variation (standard deviation) for an observation interval of about 45 minutes was 877 ppbv (1σ), resulting in a water vapor SNR of 1335 and indicating a very stable H2O reference from the PTB generator.

From these 45 min of data we derived an Allan plot (Fig. 4) for the SEALDH spectrometer, which indicated an optimal precision of 160 ppbv (equivalent to an FP precision of 8 mK at \(FP_{\mathrm{absolute}}\) = −19.737 °C) when 41 recorded scans (each pre-averaged over 20 scans) are averaged. In this configuration this is equivalent to a time resolution of 58 seconds and to a normalized precision of 2.4 ppmv m Hz\(^{-\frac{1}{2}}\). However, it should be noted that this Allan data set was acquired in a configuration identical to that of the later blind intercomparison, in which the spectrometer operating conditions were adapted to slow concentration variations. In this special case the data throughput is limited to around 10–20 % of the available scans per second, so that a speed-optimized version should provide a three-fold improvement in normalized precision.
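As an illustration of how such an Allan plot is obtained from a concentration time series, the following sketch computes a simple non-overlapping Allan deviation for several averaging factors. It is a generic textbook estimator, not the evaluation code used for Fig. 4, and the time series is synthetic white noise generated with the mean and 1σ noise quoted above.

```python
import math
import random


def allan_deviation(samples, m):
    """Non-overlapping Allan deviation for bins of m consecutive samples."""
    n_bins = len(samples) // m
    if n_bins < 2:
        raise ValueError("need at least two bins for this averaging factor")
    means = [sum(samples[i * m:(i + 1) * m]) / m for i in range(n_bins)]
    squared_diffs = [(means[i + 1] - means[i]) ** 2 for i in range(n_bins - 1)]
    return math.sqrt(0.5 * sum(squared_diffs) / len(squared_diffs))


# Synthetic stand-in for the measured series: white noise around 1171.1 ppmv.
random.seed(0)
series_ppmv = [random.gauss(1171.1, 0.877) for _ in range(4096)]

water_vapor_snr = 1171.1 / 0.877  # about 1335, as quoted in the text
print(f"SNR = {water_vapor_snr:.0f}")
for m in (1, 4, 16, 64):
    print(f"averaging {m:3d} points: {allan_deviation(series_ppmv, m):.3f} ppmv")
```

For pure white noise the Allan deviation keeps falling with the square root of the averaging factor; in real data, drifts make it rise again beyond some averaging time, and the minimum marks the optimal precision read from the Allan plot.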

3.1 Humidity sensor intercomparisons (IC)

The vast majority of humidity sensors work in an extractive sampling mode and need more or less frequent calibrations, which are done with a broad range of methods in different laboratories. Either two (or more) water sensors (one reference and one test device) are intercompared simultaneously by analyzing a dynamically generated humidified gas sample, or a well-validated reference humidity generator provides a well-defined humidified gas sample which is analyzed by the test device. Calibrated sensors thus depend critically on the quality and validation of the reference sensor or the reference generator (but also on the sampling capabilities of the sensors and the calibration lab, as the gas “transport” to the sensor may be strongly influenced by the strong adsorption of the water molecule). Large calibration infrastructures are set up by the national metrology institutes (which provide the national primary humidity standards) and by national calibration services like the German DAkkS or DKD, which are linked to these primary standards, to provide the necessary humidity references. While industry makes ample use of these calibration services, direct linkage to such reference infrastructures is much less common in the scientific communities, and proprietary calibration setups without sufficient validation are frequently used for calibration. Furthermore, it is quite uncommon for scientific publications to provide sufficient information about the calibration path, the infrastructure used and its validation, so that the validity of such “calibrated” values has to be critically reviewed; this probably explains a substantial fraction of the variation in sensor intercomparisons.

The performance of absolute, self-calibrating sensors does not depend on such intercomparisons. Nevertheless, intercomparisons are absolutely essential to validate the sensor. Furthermore, it is important to note that errors in the reference generator or instrument are not transferred (via the calibration) onto the absolute sensor and thus cannot falsify the instrument. Instead absolute sensors like D/FPH or direct TDLAS always work as a consistency check of the actual state of the setup and of the whole methodology.

Numerous in-flight intercomparisons (IIC) of humidity sensors on different platforms are published, e.g. between several radiosondes and a D/FPH [2], or between a suite of totally different instruments [69]. The results show significant scatter and document for certain instruments deviations of up to 110 % throughout the entire altitude region [69]. A static study on various capacitive humidity sensors [22] showed deviations of 5 % at 80–120 ppmv between the capacity sensors, but a temperature dependent 5–15 % deviation to the cryogenic reference FPH. A balloon-borne IIC between a research grade FPH and LAFH [70], resulted in a mean deviation of −2.4 %±3.1 % at heights of 15 to 25 km. An IIC of an in situ, 1.4 μm TDL instrument with a FPH resulted in a ±10 % deviation over a range of mixing ratios and altitudes [71]. On the other hand, a calibrated 1.4 μm open-path TDL instrument with a 0.3 ppmv average sensitivity within the tropopause (12 km) and 0.6 ppmv (average) under tropospheric boundary-layer conditions reported over 20 % deviation to a cryo FPH due to “increased pressure broadening” [72].

Surprisingly, lab-based IC under better controlled, quasi-static conditions are published much less frequently. Of course, lab comparisons do not directly provide a full evaluation of possible in-flight deviations between hygrometers, as numerous in-flight effects which influence sensor performance might be absent. But a static lab IC elucidates the absolute accuracy of the sensor principle much better and isolates it from problems due to sampling difficulties (e.g. from aircraft inlets) or due to the dynamic sensor behavior, and thus is an important sensor validation step. Under such quasi-static conditions an advanced, well-validated D/FPH is an excellent reference instrument. Accordingly, if the above-mentioned instruments, or e.g. the Fluorescence Water Vapor Sensor [73], which reported differences to a frost-point hygrometer mounted on the same aircraft, had been compared under static conditions, it would have been easier to evaluate whether there is a problem of principle or a problem of the harsh environment.

A quite recent, very comprehensive and simultaneous lab IC is “AquaVIT” [36], which was realized at the AIDA cloud chamber under well-controlled, quasi-static, UT/LS equivalent conditions. IC conditions were unusually strict, and the campaign was organized as a blind and externally refereed IC. AquaVIT encompassed 22 instruments (TDL, D/FPH, LAFH and other principles) from 17 international research groups. The instruments were categorized into well-validated “core” instruments (APicT, FISH, FLASH, HWV, JLH, CFH) and “younger” non-core instruments. Even between the core hygrometers, AquaVIT documented deviations of ±10 % in the important 1 to 150 ppmv WVMR range. A full, very detailed analysis covering 0.1 to a few 100 ppm is available online in a white paper [36]. Other, smaller lab IC were performed, e.g. [74], or a comparison of two FPH (MBW 373 and TOROS) against a calibrated, airborne 1.4 μm TDL (WVSS-II) with a maximum deviation of about ±15 % [75]. The latter is interesting, as the German Weather Service and others [12] use the WVSS to derive important meteorological data.

In summary, the above-mentioned intercomparisons show that even quasi-static, well-controlled laboratory campaigns still lead to deviations of more than 10 %. The harsh conditions of in-flight campaigns increase these differences, so it is useful to document the current state of development in laboratory campaigns, especially if they are carried out as blind intercomparisons.

3.2 Blind intercomparison (BIC)

The intercomparison from which data are shown here took place at the Research Center in Jülich within the EUFAR/DENCHAR project. The comparison was, similar to AquaVIT [36], designed as a “blind” comparison: participants receive no data from the reference or the other test instruments until all participants have submitted their own data. After the submission is complete, the data of the entire group are disclosed. Any change to the data after the disclosure had to be justified, and the justification had to be stored with the modified data. The idea of such a comparison is to prevent any intended or unintended influence of the “known” results on the respective data evaluation.

The following sensors participated in the intercomparison: As reference instruments, a Lyman (α) fluorescence hygrometer [27] and a D/FPH (General Eastern, Type D1311R), a 1.4 μm TDL instrument (WVSS-II–x) [54], a surface-acoustic-wave sensor SAW [16], a 1.4 μm diode laser-based photo-acoustic instrument called “WaSul-Hygro” [76] and finally two further D/FPH (MBW973, IfT, Leipzig). Further, the calibration bench used before the blind intercomparison contains another D/FPH sensor (DP30, MBW).

In this paper, we only discuss the intercomparison of our SEALDH instrument with the reference instruments. A publication covering all other test instruments is currently under discussion.

4 Results

4.1 Absolute comparison SEALDH vs. FPH (MBW DP30)

The comparison was split into two parts. A first set of experiments served as a calibration run for the calibrated sensor principles. Since SEALDH does not need to be calibrated, we used this pre-experiment as a further IC run with regard to the Jülich laboratory calibration bench [28]. In the calibration bench, dry synthetic air (H2O < 5 ppmv) from a pressurized gas cylinder is divided into two branches, one of which is moistened using a temperature-stabilized bubbler, while the other one serves as dry reference zero air. Zero and humidified air can be mixed in a 2 m long mixing loop via two flow controllers. The reference DPH sensor (MBW DP30, accuracy 0.1 K) in combination with the calibrated pressure sensor of the calibration bench is specified to permit an absolute accuracy of 4 %. The different H2O concentration levels during these experiments were chosen to reflect typical conditions of the upper troposphere.
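To give a feeling for how a frost-point reading and a pressure reading translate into a volume mixing ratio, and how a 0.1 K frost-point uncertainty propagates, the following sketch uses a Magnus-type approximation for the saturation vapor pressure over ice. The Magnus coefficients (6.112 hPa, 22.46, 272.62 °C) are an assumption of this sketch and are not taken from the DP30 or calibration bench documentation; the enhancement factor for moist air is neglected.

```python
import math


def saturation_pressure_ice_hpa(frost_point_c):
    """Magnus-type approximation of the saturation vapor pressure over ice."""
    return 6.112 * math.exp(22.46 * frost_point_c / (272.62 + frost_point_c))


def mixing_ratio_ppmv(frost_point_c, pressure_hpa):
    """H2O volume mixing ratio from frost point and total gas pressure."""
    return 1e6 * saturation_pressure_ice_hpa(frost_point_c) / pressure_hpa


def relative_sensitivity_percent(frost_point_c, pressure_hpa, delta_k=0.1):
    """Relative change of the mixing ratio for a frost-point change of delta_k."""
    base = mixing_ratio_ppmv(frost_point_c, pressure_hpa)
    shifted = mixing_ratio_ppmv(frost_point_c + delta_k, pressure_hpa)
    return 100.0 * (shifted - base) / base


# Frost point and pressure quoted for the stability measurement in Sect. 3.
print(f"{mixing_ratio_ppmv(-19.737, 907.54):.0f} ppmv")
print(f"{relative_sensitivity_percent(-19.737, 907.54):.2f} % per 0.1 K")
```

For a frost point of −19.737 °C at 907.54 hPa this evaluates to roughly 1167 ppmv, within about 0.4 % of the 1171.1 ppmv quoted in Sect. 3, and a 0.1 K frost-point error corresponds to a change of about 1 % in mixing ratio at this frost point.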

Figure 5 compares the concentration profiles simultaneously measured with SEALDH (in self-calibrating mode using HITRAN08 data) and the DP30 FPH, both connected in parallel to the H2O source of the calibration bench [28]. The sample air was drawn through the extractive multipath cell of SEALDH, while the cell flow and thus the cell pressure were adjusted manually using needle valves before and after the instruments. This stabilized the measurement conditions and also allowed us to examine pressure dependencies of the signals.

Absolute H2O concentration profiles simultaneously measured with the TDLAS spectrometer SEALDH (in self-calibrating mode using HITRAN08 data) and the FPH DP30 (MBW) as reference instrument. Relative deviations are discussed below in Fig. 7 and others

With an effective calibration of the DP30 temperature sensor, the DP30 is an absolute humidity sensor and thus an interesting, high-quality reference for validating the self-calibrating absolute detection capabilities of the SEALDH spectrometer. A first look at the overall deviations between DP30 and SEALDH over the entire DP30 data set shows a promising SEALDH performance, with an average negative deviation of only 2.85 %±1.22 %. This systematic deviation is fully compatible with the uncertainty of the H2O line strength data (5–10 % in HITRAN 2008 or 3 % in our own measurements [65]). However, if we look more closely at the deviations and their dependence on the boundary conditions, we find a strong correlation with the SEALDH cell pressure (Fig. 6).

No correlation of ΔH2Orel with the H2O concentration was found, aside from a noise increase at lower concentrations, which is expected due to the decrease in SEALDH’s signal-to-noise ratio. Other possible causes of the systematic deviations, such as a faulty pressure sensor or errors in the pressure calibration, could be ruled out by further tests. Problems with the HITRAN data or with the line shape model were excluded based on the good performance of our TDLAS instruments during the AquaVIT intercomparison [36], which work on the same absorption line. This led to the assumption of a very small leak. In that case we should see a correlation of ΔH2Orel with the pressure drop across the leak as well as with the outside H2O concentration, and the observed pressure dependence supports this. The leak is expected to behave like a critical nozzle once the pressure in the cell drops below half of the outside pressure, which then locks the mass flow through the leak. The sample gas, however, is drawn through the cell by a vacuum pump that delivers a constant volume flow. Reducing the cell pressure therefore reduces the mass flow of sample gas through the cell while the mass flow through the leak stays constant, which leads to a pressure-dependent “spiking” of the sample gas with humid lab air.

The ΔH2Orel vs. pressure relationship was fitted by a linear regression (Fig. 7) with a relatively good correlation coefficient. The good agreement between model assumption and measured deviation justified a systematic dismantling of the spectrometer (after the blind intercomparison campaign) and led to the detection and removal of a tiny leak caused by mechanical damage to the inner gasket of a metal fitting. The leak size can be estimated from the cell flow generated by the pump, 1 standard liter/min (slm) at about 100 hPa cell pressure. The deviation of the reported SEALDH value from the DP30 value is smaller than 4 % at a 300 ppmv absolute water vapor level (see Fig. 6). This gas flow is mixed with the leakage flow of outside air containing about 10000 ppmv of water vapor, so that the contaminating flow can finally be estimated to be on the order of 10⁻³ slm or below, which is a plausible size for the damaged fitting.
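
The order of magnitude of this estimate can be checked with a simple two-stream mixing balance. The following Python sketch is an illustration only and not the evaluation code used for this paper; the function name is hypothetical, ideal mixing of the sample flow with the leak flow is assumed, and the inputs are the rounded values quoted above, with the 4 % bound taken as the magnitude of the leak-induced deviation.

```python
def leak_flow_slm(rel_dev, x_sample_ppmv, x_outside_ppmv, sample_flow_slm):
    """Leak flow (slm) that causes a given relative deviation by ideal mixing.

    Mixing balance: x_meas = (Qs*xs + Ql*xo) / (Qs + Ql),
    with rel_dev = (x_meas - xs) / xs, solved for the leak flow Ql.
    """
    excess_ppmv = rel_dev * x_sample_ppmv  # x_meas - xs
    return sample_flow_slm * excess_ppmv / (
        x_outside_ppmv - x_sample_ppmv - excess_ppmv
    )


# Rounded values from the text: < 4 % deviation at 300 ppmv,
# about 10000 ppmv in the lab air, 1 slm sample flow.
q_leak = leak_flow_slm(0.04, 300.0, 10000.0, 1.0)
print(f"upper bound for the leak flow: {q_leak:.1e} slm")
```

With these inputs the upper bound comes out slightly above 10⁻³ slm, which agrees with the order-of-magnitude estimate given in the text.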

It has to be kept in mind that the small leak was found during the analysis of the raw data, weeks after the intercomparison campaign. Thus, all SEALDH intercomparison data in this paper are influenced by this small effect, which is still within the uncertainty bounds of the instruments. If we compare our approach based on a calibration-free sensor (here TDLAS) with the usual calibration strategies, typically a pre- and/or post-campaign laboratory calibration, it is obvious that a small discrepancy such as the one caused by our leak would in most cases have disappeared or have been “corrected” during the calibration procedure. The functional relationship between ΔH2Orel and pressure due to the leak would then have become “part” of the calibration curve. As long as the boundary conditions during the calibration are constant and identical to the campaign conditions, a sensor calibrated like this would, despite the hidden effects, probably provide correct results, and the malfunction (leak) would not be noticed. Constant calibration conditions are quite likely in a typical air-conditioned laboratory. In this case, the lab air humidity could shift from 10000 to 9500 ppmv and the absolute change in the relative deviation would still be only 0.15 % and therefore hardly detectable. However, if the calibrated sensor is used e.g. in the cargo compartment of an airplane, the “outside” humidity (and temperature) would change significantly and large deviations would appear, which are quite difficult to debug because the calibration is assumed to be valid.
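
The sensitivity argument can be illustrated with a short Python sketch. It is not part of the original evaluation and rests on two labeled assumptions: the leak-induced deviation scales linearly with the outside humidity (reasonable for a small leak and an outside humidity far above the sample humidity), and its value at 10000 ppmv is taken as 3 %, chosen because it reproduces the 0.15 % quoted above (the text itself only bounds the deviation at below 4 %). The 2000 ppmv value for an aircraft cargo compartment is purely hypothetical.

```python
def leak_deviation(x_outside_ppmv, dev_ref=0.03, x_outside_ref_ppmv=10000.0):
    """Leak-induced relative deviation, scaled linearly with outside humidity.

    dev_ref is the ASSUMED deviation at the reference outside humidity.
    """
    return dev_ref * x_outside_ppmv / x_outside_ref_ppmv


# Change of the deviation relative to the calibration condition (10000 ppmv)
lab_shift = leak_deviation(9500.0) - leak_deviation(10000.0)
cargo_shift = leak_deviation(2000.0) - leak_deviation(10000.0)  # hypothetical

print(f"lab air 10000 -> 9500 ppmv: {100 * lab_shift:+.2f} %")
print(f"cargo bay at 2000 ppmv:     {100 * cargo_shift:+.2f} %")
```

Under these assumptions the laboratory shift stays at the 0.15 % level, while the hypothetical cargo-compartment case changes the deviation by more than 2 %, a difference that a calibration curve recorded in the laboratory would not capture.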

At this point the unique advantage of a calibration-free sensor becomes clear. Such instruments, when carefully validated against other absolute references, allow much easier debugging, as the instrument is consistently checked until it reports correct values within the uncertainty range. For SEALDH (and other spectroscopic sensors) it also proves to be a particularly important strategy to store the raw, unprocessed data, as these allow at any time, even months after the campaign, diverse cross-checks and an independent analysis of the physical background of the measurement process. For TDLAS, examples are problems due to window fouling, detector offsets caused by stray light or misalignment, or an independent check of the pressure sensor by comparing the expected and the fitted absorption line width, based on signals provided by the spectrometer during operation.

4.2 Absolute comparison: SEALDH vs. LAFH and GE D/FPH

The second part of the campaign was organized as the “blind” part of the comparison. It took place at the environmental simulation facility chamber (ESF chamber) of the Research Center in Jülich and covered the simultaneous comparison with the reference instruments and the other participating instruments. The ESF chamber is a computer-controlled, stainless steel vacuum chamber (internal size 80×80×80 cm, volume 500 L) [21], which is used to simulate tropospheric to lower stratospheric temperature, pressure, and humidity conditions corresponding to altitudes of up to 15 km; frost-point temperatures down to −80 °C, for example, can be reached.

All sensors were connected to the ESF via heated stainless steel sample lines, which were kept as short as possible. The reference instrument for low water vapor volume mixing ratios (WVMR: 1–1000 ppmv) was a LAFH [27] with an overall accuracy of ±4 %, and for larger WVMR up to 20000 ppmv a built-in D/FPH (General Eastern, Type D1311R) with an accuracy of ±0.5 K.

The temporal ESF p, T profiles were chosen to mimic the requirements for airborne measurements as closely as possible. Temporarily constant conditions over longer time spans were intended to ensure that thermodynamic equilibrium is achieved in the rather large chamber. The relatively slow variations between the steps could be used as a test scenario for the low-frequency dynamic behavior. Two p, T, rH profiles were realized: (A) with strong WVMR variation and relatively small pressure changes and (B) the inverse, with near-constant WVMR and large pressure steps.

Figure 8 depicts the WVMR measurements with SEALDH and the LAFH instrument during the constant pressure profile 2A. Over a WVMR range from 25 to 8000 ppmv the two sensors show very good, consistent behavior, with an average negative relative deviation of 3.8±2.1 %, which is within the 4 % uncertainty of the LAFH instrument.

The largest deviations occur within the slopes of the changes, particularly for upward WVMR steps, indicating thermal inertia of, or adsorption/desorption processes in, the ESF chamber. The systematic offset between SEALDH and LAFH is consistent with the DP30 measurements shown in Fig. 6. Due to the small pressure variations (≈50 hPa) the pressure-dependent contributions are relatively constant. Removing the average offset reveals, even for slow transitions, further oscillatory deviations of up to 5 %, which are caused by different sensor response times as well as thermal hysteresis effects and therefore limit the direct point-by-point comparison of the sensor responses. Thus, the recorded values are suitable for a quasi-static absolute comparison only when the chamber is at least close to thermodynamic equilibrium. In Fig. 8 we therefore sectioned the relative deviation (2A to 2D in Fig. 8) and statistically evaluated only these data with respect to the average offset to the reference and the standard deviation of the offset. In addition to the slow variations, the relative deviation trace in Fig. 8 shows a strong noise-like component. It is investigated in Fig. 9, which shows an enlarged section of the original WVMR signals of SEALDH and the LAFH in area 2C of Fig. 8. Figure 9 clearly indicates that short-term fluctuations in the LAFH sensor are the source of this noise, particularly since the time resolution of both sensors is about one second and an averaging effect can therefore be neglected.
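
The sectioned evaluation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions and not the authors' analysis code: the function name, the window labels and limits, and the data values are all hypothetical, and the windows are assumed to be given as start and end times of the near-equilibrium plateaus.

```python
from statistics import mean, stdev


def window_stats(t, x_test, x_ref, windows):
    """Mean and standard deviation (in %) of the relative deviation per window.

    windows maps a label to an inclusive (t_start, t_end) interval.
    """
    stats = {}
    for name, (t_start, t_end) in windows.items():
        rel_dev = [
            100.0 * (xt - xr) / xr
            for ti, xt, xr in zip(t, x_test, x_ref)
            if t_start <= ti <= t_end
        ]
        stats[name] = (mean(rel_dev), stdev(rel_dev))
    return stats


# Synthetic illustration data (NOT measured values): two plateaus
# separated by a transition that is excluded from the statistics.
t = list(range(10))
x_ref = [100.0] * 10
x_test = [96.0, 97.0, 95.0, 96.0, 90.0, 80.0, 96.0, 96.0, 96.0, 96.0]
windows = {"plateau 1": (0, 3), "plateau 2": (6, 9)}

for name, (mu, sigma) in window_stats(t, x_test, x_ref, windows).items():
    print(f"{name}: mu = {mu:+.2f} %, sigma = {sigma:.2f} %")
```

Restricting the statistics to such windows keeps the transition samples, where response time and hysteresis dominate, out of the quasi-static comparison.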

Detailed view of area 2C in Fig. 8 showing the comparison of SEALDH and LAFH. Top: absolute traces, Bottom: relative deviation of SEALDH to LAFH. Obviously the relative deviation noise results from short-term fluctuations of the reference device and not from the SEALDH TDL spectrometer

4.3 Absolute comparison with General Eastern, Type D1311R

The second part of the blind intercomparison targeted strong pressure variations similar to ascents or descents in flight applications in combination with near-constant, but relatively high, quasi-tropospheric WVMRs. The aim was to evaluate the pressure dependence of the instruments and validate the stability at a relatively constant H2O level. Since the LAFH is not suitable for such high WVMR, it was necessary to compare to a different reference instrument, which was in this case a D/FPH sensor (General Eastern, GE, Type D1311R). As shown in Fig. 10, the absolute values and overall performances of SEALDH and the GE FPH matched very well; SEALDH offsets and dynamic deviations are again consistent with the results of the previous comparisons shown above. Significant deviations beyond the −4 % offset show up only during highly dynamic and large pressure changes, which we attribute to the insufficient time response of the GE FPH.

The absolute validation showed very good results, especially due to the excellent error control processes of SEALDH in the data evaluation with its various debugging and cross-checking options.

Regardless of the leakage problem, a conservatively estimated uncertainty budget comprises the following contributions: a relative uncertainty of 5×10⁻⁴ (full scale of the pressure sensor, Baratron, 1000 hPa) and an absolute offset uncertainty of 2 hPa; a 10⁻³ relative uncertainty from the analog readout signal and its digitization; about 1 % (or 2 K) from the temperature sensor (a thermocouple, which was calibrated in a thermal bath) with its readout electronics and its long-term stability; 1.9 % (or 21 mm) for the uncertainty of the optical path length in the absorption cell; and 1 % for the fit process. In addition, the currently best uncertainty of the H2O line strength is 3.5 % [65]. This results in an overall uncertainty of 4.3 %. The largest relative contributions to this total uncertainty budget come from the line strength (65 %), followed by the path length (19 %) and the pressure and temperature measurements (5 % each).
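
The quoted total and the relative shares are reproduced if the individual contributions are treated as uncorrelated and combined in quadrature. The Python sketch below illustrates this; the combination rule is an assumption that happens to match the stated numbers, and the pressure term is set to 1 %, a value inferred from its stated share of about 5 % because the text gives the pressure contribution only in terms of sensor specifications.

```python
from math import sqrt

# Relative standard uncertainties in percent, as quoted in the text.
# ASSUMPTION: pressure is set to 1 %, inferred from its ~5 % share.
budget = {
    "line strength": 3.5,
    "path length": 1.9,
    "temperature": 1.0,
    "pressure": 1.0,
    "fit": 1.0,
    "readout": 0.1,
}

# Root-sum-square combination of uncorrelated contributions
total = sqrt(sum(u ** 2 for u in budget.values()))

# Share of each contribution in the combined variance, in percent
shares = {name: 100.0 * u ** 2 / total ** 2 for name, u in budget.items()}

print(f"combined uncertainty: {total:.1f} %")
for name, share in shares.items():
    print(f"  {name:13s} {share:5.1f} % of the variance")
```

The shares are fractions of the combined variance, which is why the line strength accounts for about 65 % of the budget although its uncertainty alone is about 80 % of the combined value.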

4.4 Calibrated SEALDH reevaluation

Of course, an absolute instrument like SEALDH can be calibrated, too. In principle, this contradicts our philosophy for this instrument development for the reasons stated in the introduction. For the measurements presented here, however, a belated “offline” calibration using data from the blind intercomparison offers the possibility to remove the small but significant, systematic and pressure-dependent errors caused by the leak, thereby avoiding the necessity to repeat all measurements. At the same time, this calibration of course also removes all other systematic effects, e.g. those caused by the line strength uncertainty. Another positive effect of the calibration is that we get a better estimate of the sensor performance to be expected once the leak is removed and the line strength data have been improved, which provides a better basis for an unbiased comparison between SEALDH and calibrated sensors.

To be compatible and comparable with the calibration approach of the other instruments in this blind IC, we use only the SEALDH-DP30 data for our SEALDH calibration, i.e. the linear function derived from the correlation in Fig. 7.

Figure 11 shows the modified SEALDH GE-FPH intercomparison data (Fig. 10) after calibrating SEALDH by use of this linear calibration (Fig. 7).
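
How such a linear, pressure-dependent calibration can be applied to the raw data is sketched below in Python. This is a hedged illustration, not the procedure actually coded for this paper: the function name is hypothetical, the relative deviation is assumed to be defined as (SEALDH − reference)/reference, and the intercept and slope are placeholder values, since the fit coefficients of Fig. 7 are not given in the text.

```python
def calibrate_wvmr(x_raw_ppmv, p_cell_hpa, intercept_pct, slope_pct_per_hpa):
    """Remove a linear, pressure-dependent relative deviation from a raw value.

    Assumes rel_dev(p) = (x_raw - x_ref) / x_ref = intercept + slope * p,
    so the corrected value is x_raw / (1 + rel_dev(p)).
    """
    rel_dev = (intercept_pct + slope_pct_per_hpa * p_cell_hpa) / 100.0
    return x_raw_ppmv / (1.0 + rel_dev)


# HYPOTHETICAL coefficients: -2 % intercept, -0.002 %/hPa slope,
# i.e. a -3 % deviation at 500 hPa cell pressure.
x_cal = calibrate_wvmr(970.0, 500.0, intercept_pct=-2.0, slope_pct_per_hpa=-0.002)
print(f"calibrated WVMR: {x_cal:.1f} ppmv")
```

Because the correction depends only on the cell pressure, it can be applied retrospectively to every stored raw data point without repeating the measurements.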

It is clearly visible that the SEALDH calibration (using Fig. 7) reduces the deviation from the reference D/FPH (General Eastern, Type D1311R) to almost zero (average deviation μ=0.17 % and 0.23 %). The same procedure is also applied to the SEALDH-LAFH intercomparison (Fig. 8). The average relative offset μ and the standard deviation σ of the relative deviation are calculated in the same data windows (2A–2D and 3A, 3B) as before (Table 2).

With calibration we find an 8-fold reduction of the average sensor offset to only +0.52 %. The LAFH and the GE FPH reference sensors thus show an excellent agreement with SEALDH. The “deviation noise” remains unchanged. This result is also notable because SEALDH is calibrated with the completely independent DP30 data set and then compared with two completely different sensors.

The effect of the SEALDH calibration would be even better for the leak-free version of the sensor, because in this case the major part of our uncertainty contributions stems from the line strength, so that the calibration of the repaired SEALDH effectively corrects the HITRAN08 line strength errors. This is important to note because S(T) is, at a given temperature, constant in time, so that a one-time calibration of SEALDH should be sufficient to significantly enhance the sensor accuracy. The long-term stability and repeatability of the system (which could not be analyzed with this short 3-day data set) should remain unaffected by this process. Repeated SEALDH calibrations would mainly act on errors in the p, T measurements as well as optical baseline effects, which seem to have only minor influence at our current accuracy level. On the other hand, it has to be taken into account that all limitations and errors of the calibration procedure itself are projected onto the sensor performance, so that a careful absolute evaluation of the calibrator itself would be required to exclude that influence.

5 Conclusion and outlook

A new, absolute, calibration-free, 1.4 μm diode laser-based hygrometer, SEALDH, was validated in a blind comparison at the Jülich Environmental Simulation Facility chamber with two dew/frost-point hygrometers (MBW DP30 and a General Eastern, Type D1311R) and a Lyman-alpha fluorescence hygrometer. During three days of measurement, the TDL hygrometer achieved a resolution of 0.5 ppmv (Δt=2 s) at tropospheric pressure levels (100–800 hPa). Without any calibration, i.e. without a comparison to a water vapor standard, we achieve an excellent absolute accuracy with an average systematic offset (over all three days) of −3.9 %±1.5 %. This offset is fully consistent with the sensor’s error bounds, which are dominated by the H2O line strength uncertainty (80 % thereof). Compared to calibrated sensors, which tend to compensate and thus “hide” sensor problems by projecting the performance of the reference instrument onto the device under test, our calibration-free, model-based sensor SEALDH was shown to be more robust and easier to troubleshoot due to the required self-consistency of the model parameters. In particular, the storage of all raw data with the possibility of a later, more precise reevaluation provided a powerful concept to detect faults and maximize sensor performance. Finally, we also evaluated for the first time the possibilities of calibrating the SEALDH sensor and found for the intercomparison data presented here an 8-fold accuracy improvement, yielding in the calibrated mode an excellent overall relative deviation of only 0.52 %±1.5 % with respect to a LAFH and a D/FPH sensor.

Within a recently started project (“EUMETRISPEC”) at PTB we aim at traceable, FTIR-based, high-accuracy line data measurements, which will allow us to directly improve the accuracy of absolute TDL spectrometers like SEALDH even further, without the need for calibrations. Such high-accuracy, calibration-free TDL hygrometers, in combination with their fast response time and high precision, will turn out to be an even more interesting tool for atmospheric research and a promising option, e.g. for applications such as the exploration of cirrus clouds [77] or as a reference instrument to validate other devices [78].

References

H. Oelhaf, A. Fix, C. Schiller, K. Chance, in Chemistry Validation, vol. 2004 (2004), pp. 3–7

L.M. Miloshevich, H. Vömel, D.N. Whiteman, B.M. Lesht, F.J. Schmidlin, F. Russo, J. Geophys. Res. 111, 1 (2006)

J. Zachariassen, K. Zeller, N. Nikolov, T. McClelland, A Review of the Forest Service Remote Automated Weather Station (RAWS) Network (US Dept. of Agriculture, Forest Service, Rocky Mountain Research Station, Fort Collins, 2003)

A. Marenco, V. Thouret, P. Nédélec, H. Smit, M. Helten, D. Kley, F. Karcher, P. Simon, K. Law, J. Pyle, G. Poschmann, R. Von Wrede, C. Hume, T. Cook, J. Geophys. Res. 103, 25631 (1998)

J. Louet, S. Bruzzi, in Geoscience and Remote Sensing Symposium, IGARSS, 1999, ’99 Proceedings. IEEE 1999 International, vol. 3 (1999), pp. 1680–1682

R. Mendelsohn, P. Kurukulasuriya, A. Basist, F. Kogan, C. Williams, Clim. Change 81, 71 (2007)

S.F. Clifford, J.C. Kaimal, R.J. Lataitis, R.G. Strauch, Proc. IEEE 82, 313 (1994)

M. Schneider, F. Hase, T. Blumenstock, Atmos. Chem. Phys. 6, 811 (2006)

P. Minnis, D.F. Young, K. Sassen, J.M. Alvarez, C.J. Grund, Mon. Weather Rev. 118, 2426 (1990)

High altitude and long range research aircraft (2012), http://www.halo.dlr.de/

C.A.M. Brenninkmeijer, P. Crutzen, F. Boumard, T. Dauer, B. Dix, R. Ebinghaus, D. Filippi, H. Fischer, H. Franke, U. Frieß, J. Heintzenberg, F. Helleis, M. Hermann, H.H. Kock, C. Koeppel, J. Lelieveld, M. Leuenberger, B.G. Martinsson, S. Miemczyk, H.P. Moret, H.N. Nguyen, P. Nyfeler, D. Oram, D. O’Sullivan, S. Penkett, U. Platt, M. Pupek, M. Ramonet, B. Randa, M. Reichelt, T.S. Rhee, J. Rohwer, K. Rosenfeld, D. Scharffe, H. Schlager, U. Schumann, F. Slemr, D. Sprung, P. Stock, R. Thaler, F. Valentino, P. van Velthoven, A. Waibel, A. Wandel, K. Waschitschek, A. Wiedensohler, I. Xueref-Remy, A. Zahn, U. Zech, H. Ziereis, Atmos. Chem. Phys. 7, 4953 (2007)

R. Petersen, L. Cronce, W. Feltz, E. Olson, in Meteorological Satellite (2010)

M. Baker, M. Curtis, M. Helms, M.A. Homans, Effectiveness of the water vapor sensing system, WVSS-II, in supporting airline operations and improved air traffic capacity (2010), ams.confex.com

P. Wiederhold, Water Vapor Measurement: Methods and Instrumentation (Marcel Dekker Inc., New York, 1997). 357 pp.

R. Busen, A.L. Buck, J. Atmos. Ocean. Technol. 12, 73 (1995)

G.M. Hansford, R.A. Freshwater, L. Eden, K.F.V. Turnbull, D.E. Hadaway, V.P. Ostanin, R.L. Jones, Rev. Sci. Instrum. 77, 014502 (2006)

MBW 373HX. MBW Calibration Ltd.

FAAM Aircraft Water Vapour Measurements, Change, p. 2010 (2010)

B. Jianchun, C. Hongbin, H. Vömel, D. Yunjun, X. Yuejian, L. Daren, Adv. Atmos. Sci. 28, 139 (2011)

E. Salasmaa, P. Kostamo, NATO Adv. Stud. Inst. Ser., Ser. E Appl. Sci. 111, 135 (1986)

H.G.J. Smit, W. Sträter, M. Helten, D. Kley, Environmental Simulation Facility to Calibrate Airborne Ozone and Humidity Sensors, Forschungszentrum Jülich Berichte, vol. 3796 (2000)

M. Helten, H.G.J. Smit, D. Kley, J. Ovarlez, H. Schlager, R. Baumann, U. Schumann, P. Nedelec, A. Marenco, J. Geophys. Res. 104, 26087 (1999)

M. Helten, H.G.J. Smit, W. Sträter, D. Kley, P. Nedelec, M. Zöger, R. Busen, J. Geophys. Res. 103, 25643 (1998)

A. Buck, in Moisture and Humidity Symposium (Instrument Society of America, Pittsburgh, 1985), pp. 411–436

A.J. Weinheimer, R.L. Schwiesow, J. Atmos. Ocean. Technol. 9, 407 (1992)

C. Friehe, R. Grossman, Y. Pann, J. Atmos. Ocean. Technol. 3, 299 (1986)

D. Kley, E.J. Stone, Rev. Sci. Instrum. 49, 691 (1978)

M. Zöger, A. Afchine, N. Eicke, M.-T. Gerhards, E. Klein, D.S. McKenna, U. Mörschel, U. Schmidt, V. Tan, F. Tuitjer, T. Woyke, C. Schiller, J. Geophys. Res. 104, 1807 (1999)

M. Zöger, A. Engel, D.S. McKenna, C. Schiller, U. Schmidt, T. Woyke, J. Geophys. Res. 104, 1817 (1999)

N.M. Sitnikov, V.A. Yushkov, A.A. Afchine, L.I. Korshunov, V.I. Astakhov, A.E. Ulanovskii, M. Kraemer, A. Mangold, C. Schiller, F. Ravegnani, Instrum. Exp. Tech. 50, 113 (2007)

E. Ohtaki, T. Matsui, Bound.-Layer Meteorol. 24, 109 (1982)

T.A. Cerni, J. Atmos. Ocean. Technol. 11, 445 (1994)

R. Desjardins, J. MacPherson, P. Schuepp, F. Karanja, Bound.-Layer Meteorol. 47, 55 (1989)

H.I. Schiff, G.I. Mackay, J. Bechara, Res. Chem. Intermed. 20, 525 (1994)

W. Gurlit, R. Zimmermann, C. Giesemann, T. Fernholz, V. Ebert, J. Wolfrum, U. Platt, J.P. Burrows, Appl. Opt. 44, 91 (2005)

D. Fahey, R. Gao, Summary of the AquaVIT water vapor intercomparison: static experiments (2009), https://aquavit.icg.kfa-juelich.de/WhitePaper/AquaVITWhitePaper_Final_23Oct2009_6MB.pdf

G.S. Diskin, J.R. Podolske, G.W. Sachse, T.A. Slate, Proc. Soc. Photo-Opt. Instrum. Eng. 4817, 196 (2002)

R.D. May, J. Geophys. Res. 103, 19161 (1998)

A. Miklós, P. Hess, Z. Bozóki, Rev. Sci. Instrum. 72, 1937 (2001)

M. Szakáll, Z. Bozóki, A. Mohácsi, A. Varga, G. Szabó, Appl. Spectrosc. 58, 792 (2004)

V. Ebert, T. Fernholz, H. Pitz, in Laser Applications to Chemical and Environmental Analysis (2000), pp. 4–6

S. Hunsmann, K. Wunderle, S. Wagner, U. Rascher, U. Schurr, V. Ebert, Appl. Phys. B, Lasers Opt. 92, 393 (2008)

B.A. Paldus, A.A. Kachanov, Can. J. Phys. 83, 975 (2005)

G. Berden, R. Engeln, Cavity Ring-Down Spectroscopy: Techniques and Applications (John Wiley and Sons, New York, 2006). 322 pp.

V. Ebert, J. Wolfrum, in Optical Measurements-Techniques and Applications, ed. by F. Mayinger (Springer, Berlin, 1994), pp. 273–312

J.A. Silver, Appl. Opt. 31, 4927 (1992)

F. Dingler, H. von Lucke, J. Anders, Appl. Opt. 38, 5338 (1999)

J. McManus, P. Kebabian, M. Zahniser, Appl. Opt. 34, 3336 (1995)

D. Romanini, M. Chenevier, S. Kassi, M. Schmidt, C. Valant, M. Ramonet, J. Lopez, H.-J. Jost, Appl. Phys. B, Lasers Opt. 83, 659 (2006)

H. Chen, J. Winderlich, C. Gerbig, A. Hoefer, C.W. Rella, E.R. Crosson, A.D. Van Pelt, J. Steinbach, O. Kolle, V. Beck, B.C. Daube, E.W. Gottlieb, V.Y. Chow, G.W. Santoni, S.C. Wofsy, Atmos. Meas. Tech. 3, 375 (2010)

Kaise-Threde Gmbh, W. Strafle, Science 37, 33 (1996)

J.A. Silver, D.C. Hovde, Rev. Sci. Instrum. 65, 1691 (1994)

G. Durry, N. Amarouche, L. Joly, X. Liu, B. Parvitte, V. Zéninari, Appl. Phys. B, Lasers Opt. 90, 573 (2007)

A.K. Vance, A. Woolley, R. Cotton, K. Turnbull, S. Abel, C. Harlow, Final Report on the WVSS-II Sensors fitted to the FAAM BAe 146. Met Office (2011), 31 pp.

J.A. Silver, D.C. Hovde, Rev. Sci. Instrum. 65, 1691 (1994)

J. Silver, M. Zondlo, in SPIE 6378, 63780J-1 (2006)

C.R. Webster, G.J. Flesch, K. Mansour, R. Haberle, J. Bauman, Appl. Opt. 43, 4436 (2004)

J. Podolske, M. Loewenstein, Appl. Opt. 32, 5324 (1993)

B. Lins, R. Engelbrecht, B. Schmauss, Appl. Phys. B, Lasers Opt. 106, 999 (2011)

C. Schulz, A. Dreizler, V. Ebert, J. Wolfrum, in Springer Handbook of Experimental Fluid Dynamics, ed. by C. Tropea, J. Foss, A. Yarin (2007), pp. 1241–1316

H. Teichert, T. Fernholz, V. Ebert, Appl. Opt. 42, 2043 (2003)

K. Wunderle, T. Fernholz, V. Ebert, VDI-Ber. 1959, 137 (2006)

S. Hunsmann, S. Wagner, H. Saathoff, O. Moehler, U. Schurath, V. Ebert, O. Möhler, VDI-Ber. 1959, 149 (2006)

L.S. Rothman, I.E. Gordon, A. Barbe, D.C. Benner, P.F. Bernath, M. Birk, V. Boudon, L.R. Brown, A. Campargue, J.-P. Champion, K. Chance, L.H. Coudert, V. Dana, V.M. Devi, S. Fally, J.-M. Flaud, R.R. Gamache, A. Goldman, D. Jacquemart, I. Kleiner, N. Lacome, W.J. Lafferty, J.-Y. Mandin, S.T. Massie, S.N. Mikhailenko, C.E. Miller, N. Moazzen-Ahmadi, O.V. Naumenko, A.V. Nikitin, J. Orphal, V.I. Perevalov, A. Perrin, A. Predoi-Cross, C.P. Rinsland, M. Rotger, M. Šimecková, M.A.H. Smith, K. Sung, S.A. Tashkun, J. Tennyson, R.A. Toth, A.C. Vandaele, J. Vander Auwera, J. Quant. Spectrosc. Radiat. Transf. 110, 533 (2009)

S. Hunsmann, S. Wagner, H. Saathoff, O. Möhler, U. Schurath, V. Ebert, in VDI Berichte 1959, (VDI, Düsseldorf, 2006), pp. 149–164

B. Buchholz, V. Ebert, Appl. Phys. B (2012, in preparation)

D.W. Allan, Proc. IEEE 54, 221 (1966)

P. Werle, R. Mücke, F. Slemr, Appl. Phys. B, Lasers Opt. 57, 131 (1993)

H. Vömel, D.E. David, K. Smith, J. Geophys. Res. 112, 1 (2007)

H. Vömel, V. Yushkov, S. Khaykin, L. Korshunov, E. Kyrö, R. Kivi, J. Atmos. Ocean. Technol. 24, 941 (2007)

S.A. Vay, B.E. Anderson, E.J. Jensen, G.W. Sachse, J. Ovarlez, G.L. Gregory, S.R. Nolf, J.R. Podolske, T.A. Slate, C.E. Sorenson, J. Geophys. Res. 105, 3745 (2000)

D.M. Sonnenfroh, W.J. Kessler, J.C. Magill, B.L. Upschulte, M.G. Allen, J.D.W. Barrick, Appl. Phys. B, Lasers Opt. 67, 275 (1998)

I. Keramitsoglou, J. Harried, J. Foot, Phys. Chem. Earth, Part B, Hydrol. Oceans Atmos. 24, 939 (1999)

A. Mangold, “Wodca” team, in EGS-AGU-EUG Joint Assembly, vol. 1 (2003), p. 9330

A. Hoff, WVSS-II Assessment at the DWD Deutscher Wetterdienst/German meteorological service climate chamber of the meteorological observatory lindenberg, Measurement, 1–13 (2009)

H. Huszár, Z. Bozóki, Á. Mohácsi, G. Szabó, A. Zahn, Assembly 10, 3 (2008)

J. Ovarlez, J. Gayet, K. Gierens, Geophys. Res. Lett. 29, 2 (2002)

A. Behrendt, V. Wulfmeyer, T. Schaberl, H.-S. Bauer, C. Kiemle, G. Ehret, C. Flamant, S. Kooi, S. Ismail, R. Ferrare, E.V. Browell, D.N. Whiteman, J. Atmos. Ocean. Technol. 24, 3 (2007)

Open Access

This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Buchholz, B., Kühnreich, B., Smit, H.G.J. et al. Validation of an extractive, airborne, compact TDL spectrometer for atmospheric humidity sensing by blind intercomparison. Appl. Phys. B 110, 249–262 (2013). https://doi.org/10.1007/s00340-012-5143-1
