Abstract

The present work proposes a second-order time splitting scheme for a linear dispersive equation with a variable advection coefficient subject to transparent boundary conditions. For the spatial discretization, a dual Petrov–Galerkin method is considered, which gives spectral accuracy. The main difficulty in constructing a second-order splitting scheme in such a situation lies in the compatibility condition at the boundaries of the sub-problems. In particular, the presence of an inflow boundary condition in the advection part results in order reduction. To overcome this issue, a modified Strang splitting scheme is introduced that retains second-order accuracy. A stability analysis is conducted for this numerical scheme. In addition, numerical results are shown to support the theoretical derivations.


1 Introduction

The aim of this paper is to develop time splitting schemes in combination with transparent boundary conditions that have spectral accuracy in space. Splitting schemes are based on the divide and conquer idea; i.e. to divide the original problem into smaller sub-problems which are, hopefully, easier to solve. However, obtaining an approximation of the solution of the original problem from the solutions of the sub-problems is not always straightforward: order reductions or strong CFL conditions that destroy the convergence of the numerical scheme are known to arise, see e.g. [8, 10, 18]. Furthermore, transparent boundary conditions are non-local in time and depend on the solution. Imposing them with splitting methods poses a challenge in the derivation of stable numerical schemes of order higher than one.

In this paper, we show that it is possible to construct a second-order splitting scheme that performs well in the context outlined above and can be implemented efficiently. In particular, we show that the proposed numerical method is stable independently of the spatial grid spacing (i.e. no CFL-type condition is needed). We focus our attention on a linearised version of the Korteweg–de Vries equation

\[ \begin{aligned} \partial _t u(t,x) + g(x)\, \partial _x u(t,x) + \partial _x^3 u(t,x) &= 0, && t\in (0,T],\ x\in \mathbb {R},\\ u(0,x) &= u^0(x), && x\in \mathbb {R}, \end{aligned} \qquad \text{(1)} \]

where \(T>0\). The same ideas, however, can be applied to a more general set of linear partial differential equations with variable coefficients. Note that the partial differential Eq. (1), despite being linear, finds many applications in a physical context. For example, it is used to model long waves in shallow water over an uneven bottom, see e.g. [17, 21].

The goal of this work is to design a splitting scheme that is second order in time with spectral accuracy in space. This paper can be seen as an extension of [12], where a splitting scheme of order one in time and spectral accuracy in space is presented. When solving (1), one of the main difficulties is the unbounded domain \(\mathbb {R}\). Numerical simulations typically consider a finite domain, which requires boundary conditions. Our goal is to design a numerical scheme that retains the same dynamics as the original problem (1), but on a finite domain. This can be achieved by imposing transparent boundary conditions. The advantage of such boundary conditions is that the solution is not reflected at the boundaries. Further, the solution can leave the finite domain and re-enter at a later time without any loss of information. On the downside, transparent boundary conditions are non-local in time (and in space for two- and three-dimensional problems); therefore, they become expensive for long-time simulations. In particular, memory requirements grow proportionally with the number of time steps. While it is still possible to employ them in 1D, they become impractical in the multidimensional case. A remedy is to approximate transparent boundary conditions, which yields so-called absorbing boundary conditions. In this way, information at the boundaries is lost, but memory requirements remain constant. A lot of work has been done for the Schrödinger equation in recent years, see [1, 3, 5] and references therein. For third-order problems, we refer the reader to [6, 7, 12, 23] and references therein.

In the present case, the third derivative in space renders any explicit integrator extremely expensive. Therefore, an implicit scheme should be used. While coupling an implicit time discretization with a spectral space discretization yields banded matrices for constant advection, it leads to full matrices if g varies in space. We therefore employ a time-splitting approach in order to separate the advection problem from the dispersive problem. Operator splitting methods for dispersive problems have been employed and studied before; we refer the reader to [9, 11, 12, 15]. For splitting methods with absorbing boundary conditions, we refer to [5]. Splitting methods allow us to design specific solvers for the variable coefficient problem. For example, [20] uses a technique based on preconditioning. However, a direct splitting of (1) is not advisable. The problem with separating the advection equation is that it may require inflow conditions at the boundaries. The actual inflow, however, is unknown and has to be estimated, for example, by extrapolation methods. This leads to instabilities when spectral methods are applied, unless a very restrictive CFL condition is satisfied. The idea to overcome this problem is to perform a modified splitting that allows us to treat the advection problem without prescribing any inflow condition. The boundary conditions are then transferred to the dispersive problem only. In this case, we can compute the values we need with the help of the \(\mathcal {Z}\)-transform, as has been done for a constant coefficient dispersive problem in [6, 7]. Another popular technique to avoid reflections at the boundaries is the perfectly matched layer (PML) method. This method was introduced in [4] for Maxwell’s equations. Subsequently, it has been adapted to the Schrödinger equation [22], and very recently a general PML approach in combination with pseudo-spectral methods has been proposed in [2].
To the best of our knowledge, a PML method for a linearised Korteweg–de Vries equation is not currently available.

The paper is organized as follows. In Sect. 2 we derive the semi-discrete scheme, discrete in time and continuous in space, by applying the Strang splitting method. In Sect. 3 we impose transparent boundary conditions for the scheme derived in Sect. 2. In particular, we determine the proper values of the numerical solution at the boundaries with the help of the \(\mathcal {Z}\)-transform. The stability of the resulting numerical method is then analyzed in Sect. 4. In Sect. 5 we describe a pseudo-spectral method for the spatial discretization which takes the transparent boundary conditions into account. Finally, in Sect. 6 we present numerical results that illustrate the theory.

2 Time discretization: modified splitting approach

In this section we derive a semi-discrete scheme by applying the Strang splitting method to problem (1) restricted to a finite interval [a, b], where \(a<b\). Inspired by the ideas in [12], we perform a time splitting in order to separate the advection problem \(\partial _t u(t,x) + g(x) \partial _x u(t,x) = 0\) from the dispersive problem \(\partial _t u(t,x) + \partial _x^3 u(t,x) =0\). In the following, for brevity, time and space dependence for the unknown \(u=u(t,x)\) are omitted.

In Sect. 2.1 we present the canonical splitting of (1). This approach illustrates the difficulty of prescribing the inflow condition for the advection equation. In Sect. 2.2 we then propose the modified splitting and show how this difficulty can be avoided.

2.1 Canonical splitting

Before applying any splitting, a preliminary analysis shows us that the inflow conditions to the advection problem depend on the sign of g(x) at \(x=a\) and \(x=b\). We summarise in Table 1 the four possible outcomes.
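The four cases of Table 1 follow from the direction of the characteristics of \(\partial _t u + g(x)\partial _x u = 0\): an inflow condition is needed at \(x=a\) when \(g(a)>0\) and at \(x=b\) when \(g(b)<0\). A minimal Python sketch of this rule; the function name `inflow_boundaries` is ours, and treating \(g=0\) at a boundary as requiring no inflow condition is our assumption.

```python
def inflow_boundaries(g_a, g_b):
    """Boundaries of [a, b] where u_t + g(x) u_x = 0 needs an inflow condition.

    g_a, g_b are the values g(a), g(b). A zero value is treated as "no inflow"
    (an assumption made for this sketch).
    """
    needs = []
    if g_a > 0:  # characteristics enter the domain through x = a
        needs.append("a")
    if g_b < 0:  # characteristics enter the domain through x = b
        needs.append("b")
    return needs


# The four sign combinations summarised in Table 1
for g_a, g_b in [(1.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]:
    print(f"g(a)={g_a:+}, g(b)={g_b:+}: inflow at {inflow_boundaries(g_a, g_b)}")
```

With \(g>0\) on \([a,b]\), as assumed below, only \(x=a\) requires an inflow condition.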

For this presentation, we restrict our attention to \(g(x) > 0\) for \(x\in [a,b]\). This setting requires an inflow condition at \(x=a\). Let \(M\in \mathbb {N}\), \(M>0\), be the number of time steps, \(\tau = T/M\) the step size and \(t^m = m\tau \), \(m=0,\dots ,M\). We apply the Strang splitting method to (1), which results in the two sub-problems

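Let \(\varphi _{t}^{[1]}\) be the flow of (2a) and let \(\varphi _{t}^{[2]}\) be the flow of (2b). Let u(t, x) be the solution of (1) at time t. Then, the solution to (1) at time \(t + \tau \) is approximated by the Strang splitting

\[ u(t+\tau ,\cdot ) \approx \varphi ^{[1]}_{\frac{\tau }{2}}\circ \varphi ^{[2]}_{\tau }\circ \varphi ^{[1]}_{\frac{\tau }{2}}\big (u(t,\cdot )\big ). \qquad \text{(3)} \]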

In order to get a numerical scheme, we apply the Peaceman–Rachford scheme to (3). This consists of computing the first flow \(\varphi _{\frac{\tau }{2}}^{[1]}\) by the explicit Euler method, the middle flow \(\varphi _{\tau }^{[2]}\) by the Crank–Nicolson method and the last flow \(\varphi _{\frac{\tau }{2}}^{[1]}\) by the implicit Euler method. Let \(u^m(x)\) denote the numerical approximation of \(u(t^m,x)\). Then, we get

The latter numerical scheme is known to be second-order in time due to its symmetry. Notice that Eq. (5) is a time approximation of

The function f(t) encodes the inflow condition at \(x=a\). For \(t\in (0,\tau ]\) the inflow condition is unknown. It can be approximated by extrapolation methods, which typically leads to instabilities. The idea to overcome this problem is to formulate the advection problem without any inflow condition. For this purpose, we next introduce a modified splitting.

2.2 Modified splitting

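Based on the observations in Sect. 2.1, we rewrite the governing equation in (1) as follows

\[ \partial _t u + p_g(x)\, \partial _x u + \partial _x^3 u + \big (g(x)-p_g(x)\big )\partial _x u = 0, \qquad \text{(7)} \]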

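where \(p_g(x)\) is the line connecting the points \(\left( a,g(a)\right) \) and (b, g(b)). We now apply a splitting method that results in the two sub-problems

\[ \begin{aligned} \partial _t u + p_g(x)\, \partial _x u + \partial _x^3 u &= 0, && \text{(8a)}\\ \partial _t u + \big (g(x)-p_g(x)\big )\partial _x u &= 0. && \text{(8b)} \end{aligned} \]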

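Let \(\varphi _{t}^{[1]}\) be the flow of (8a) and let \(\varphi _{t}^{[2]}\) be the flow of (8b). Let u(t, x) be the solution of (1) at time t. The solution to (1) at time \(t + \tau \) is then approximated by the Strang splitting

\[ u(t+\tau ,\cdot ) \approx \varphi ^{[1]}_{\frac{\tau }{2}}\circ \varphi ^{[2]}_{\tau }\circ \varphi ^{[1]}_{\frac{\tau }{2}}\big (u(t,\cdot )\big ). \qquad \text{(9)} \]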

By applying the Peaceman–Rachford scheme to (9), we get

where \(g^*(x) = g(x) - p_g(x)\). Notice that \(g^*(a) = g^*(b) = 0\). This means that no inflow or outflow condition needs to be prescribed to Eq. (11). The modified splitting allows us to solve the advection equation only for the interior points, i.e. \(x\in (a,b)\).
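The decomposition \(g = p_g + g^*\) is easy to reproduce. The Python sketch below builds the chord \(p_g\) and the remainder \(g^*\) for a given coefficient and checks \(g^*(a)=g^*(b)=0\), using \(g(x) = -x^3/54 + x + 3\) on \([-6,6]\) from Example 2 of Sect. 6. The helper name `chord_and_remainder` is hypothetical.

```python
def chord_and_remainder(g, a, b):
    """Return p_g (line through (a, g(a)) and (b, g(b))) and g_star = g - p_g."""
    slope = (g(b) - g(a)) / (b - a)

    def p_g(x):
        return g(a) + slope * (x - a)

    def g_star(x):
        return g(x) - p_g(x)  # vanishes at x = a and x = b by construction

    return p_g, g_star


def g_example2(x):
    """Variable advection coefficient of Example 2 in Sect. 6."""
    return -x**3 / 54 + x + 3


p_g, g_star = chord_and_remainder(g_example2, -6.0, 6.0)
print(g_star(-6.0), g_star(6.0))  # both (numerically) zero
```

For this g one finds \(p_g(x) = 3 + x/3\) and \(g^*(x) = -x^3/54 + 2x/3\).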

Remark

Notice that both problems (8a), (8b) have a variable coefficient advection. However, as shown in Sect. 5 the matrix associated to the space discretization of Problem (8a), despite the space dependent coefficient \(p_g\), is still banded. This is a property of the spectral space discretization that we employ.
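The second-order accuracy of the Peaceman–Rachford composition (explicit Euler half-step, Crank–Nicolson full step, implicit Euler half-step) can be observed on a toy linear system \(u' = -(A+B)u\) with non-commuting matrices. This Python sketch is only an illustration under that assumption: the 2×2 matrices are arbitrary stand-ins (A for the sub-problem treated by the Euler half-steps, B for the one treated by Crank–Nicolson) and are not discretizations of (8a), (8b).

```python
import numpy as np
from scipy.linalg import expm


def peaceman_rachford_step(u, A, B, tau):
    """Euler(tau/2) / Crank-Nicolson(tau) / implicit Euler(tau/2) step for u' = -(A + B) u."""
    I = np.eye(len(u))
    u_star = u - 0.5 * tau * (A @ u)                   # explicit Euler, step tau/2, u' = -A u
    rhs = u_star - 0.5 * tau * (B @ u_star)
    u_half = np.linalg.solve(I + 0.5 * tau * B, rhs)   # Crank-Nicolson, step tau, u' = -B u
    return np.linalg.solve(I + 0.5 * tau * A, u_half)  # implicit Euler, step tau/2, u' = -A u


def global_error(M, A, B, u0, T=1.0):
    """Error at time T against the exact solution expm(-T (A + B)) u0."""
    tau = T / M
    u = u0.copy()
    for _ in range(M):
        u = peaceman_rachford_step(u, A, B, tau)
    return np.linalg.norm(u - expm(-T * (A + B)) @ u0)


A = np.array([[2.0, -1.0], [-1.0, 2.0]])  # symmetric stand-in
B = np.array([[0.0, 1.0], [-1.0, 0.0]])   # skew-symmetric stand-in, AB != BA
u0 = np.array([1.0, 0.5])
errors = np.array([global_error(M, A, B, u0) for M in (32, 64, 128)])
orders = np.log2(errors[:-1] / errors[1:])  # observed orders, close to 2
print(orders)
```

The middle factors \((I-\tfrac{\tau }{2}A)(I+\tfrac{\tau }{2}A)^{-1}\) of consecutive steps form Crank–Nicolson steps for A, which is why the explicit half-step does not spoil stability over many steps.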

3 Discrete transparent boundary conditions

When it comes to numerical simulations, a finite spatial domain is typically considered. Problem (1) is then transformed into the following boundary value problem

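Due to the third-order dispersion term, three boundary conditions are required. In particular, depending on the sign of the dispersion coefficient, we have either two boundary conditions at the right boundary and one at the left boundary, or vice versa. In this work we consider a positive dispersion coefficient. We assume that g(x) is constant for \(x\in \mathbb {R}\setminus [a,b]\) and that \(u^0(x)\) is a smooth initial value with compact support in [a, b]. Transparent boundary conditions are established by considering (13) on the complementary unbounded domain \(\mathbb {R}\setminus (a,b)\). Let \(g_a\) and \(g_b\) denote the constant values of g(x) in \((-\infty , a]\) and \([b,\infty )\), respectively. In the interval \((-\infty , a]\) we consider the problem

\[ \begin{aligned} \partial _t u + g_a\, \partial _x u + \partial _x^3 u &= 0, && t\in (0,T],\ x<a,\\ u(0,x) &= 0, && x<a,\\ \lim _{x\rightarrow -\infty } u(t,x) &= 0, && t\in (0,T], \end{aligned} \qquad \text{(14)} \]

whereas in the interval \([b,\infty )\) we consider the problem

\[ \begin{aligned} \partial _t u + g_b\, \partial _x u + \partial _x^3 u &= 0, && t\in (0,T],\ x>b,\\ u(0,x) &= 0, && x>b,\\ \lim _{x\rightarrow \infty } u(t,x) &= 0, && t\in (0,T]. \end{aligned} \qquad \text{(15)} \]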

The initial value u(0, x) is set to 0 because \(u^0(x)\) has compact support in [a, b]. The boundary conditions at \(x\rightarrow \pm \infty \) are set to 0 because we ask for \(u\in L^2(\mathbb {R})\). Therefore, the solution u must decay for \(x\rightarrow \pm \infty \). We focus our attention on (14) and impose discrete transparent boundary conditions at \(x=a\). A similar procedure can be applied to (15).

The mathematical tool we employ in order to impose discrete transparent boundary conditions to (13) is the \(\mathcal {Z}\)-transform. We recall the definition and the main properties of the \(\mathcal {Z}\)-transform, which are used extensively in this section. For more details we refer the reader to [3]. The \(\mathcal {Z}\)-transform requires an equidistant time discretization. Given a sequence \(\mathbf {u} = \{u^l\}_l\), its \(\mathcal {Z}\)-transform is defined by

\[ \hat{u}(z) = \mathcal {Z}(\mathbf {u})(z) = \sum _{l=0}^{\infty } z^{-l}u^l, \qquad |z|>\rho , \qquad \text{(16)} \]

where \(\rho \) is the radius of convergence of the series. The following properties hold

- Linearity: for \(\alpha ,\beta \in \mathbb {R}\), \(\mathcal {Z}(\alpha \mathbf {u}+\beta \mathbf {v})(z) = \alpha \hat{u}(z)+ \beta \hat{v}(z)\);
- Time advance: for \(k>0\), \(\mathcal {Z}(\{u^{l+k}\}_{l\ge 0})(z) = z^k\hat{u}(z)-z^k\sum _{l=0}^{k-1}z^{-l}u^l\);
- Convolution: \(\mathcal {Z}\big (\mathbf {u} *_d \mathbf {v}\big )(z) = \hat{u}(z)\hat{v}(z)\),

where \(*_d\) denotes the discrete convolution

\[ (\mathbf {u} *_d \mathbf {v})^l = \sum _{k=0}^{l} u^k v^{l-k}, \qquad l\ge 0. \]
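The time advance and convolution properties can be checked numerically for finite sequences (zero after their last entry), for which the defining series of the \(\mathcal {Z}\)-transform is a finite sum. A small Python sketch; the helper `z_transform` and all test values are our choices, and `np.convolve` returns the discrete convolution \(\sum _{k=0}^{l} u^k v^{l-k}\) for all l where it is non-zero.

```python
import numpy as np


def z_transform(u, z):
    """Z-transform sum_l u^l z^(-l) of a finite sequence, taken as zero after its last entry."""
    u = np.asarray(u, dtype=complex)
    l = np.arange(len(u))
    return np.sum(u * z ** (-l))


u = np.array([1.0, -2.0, 0.5, 3.0])
v = np.array([0.3, 1.0, -1.0])
z = 1.3 + 0.4j
k = 2

# Time advance: Z({u^{l+k}}_l)(z) = z^k u_hat(z) - z^k sum_{l<k} z^(-l) u^l
shift_lhs = z_transform(u[k:], z)
shift_rhs = z**k * z_transform(u, z) - z**k * z_transform(u[:k], z)

# Convolution: Z(u *_d v)(z) = u_hat(z) v_hat(z)
conv_lhs = z_transform(np.convolve(u, v), z)
conv_rhs = z_transform(u, z) * z_transform(v, z)
print(abs(shift_lhs - shift_rhs), abs(conv_lhs - conv_rhs))
```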

Remark

The Peaceman–Rachford scheme given in (10)–(12) reduces to a Crank–Nicolson scheme outside the computational domain [a, b]. Therefore, discrete transparent boundary conditions are derived by discretizing (14) with the Crank–Nicolson method.
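For orientation, the chain of steps from the exterior problem to an implicit boundary relation can be sketched as follows. This is our own derivation, assuming the exterior problem at the left boundary reads \(\partial _t u + g_a\partial _x u + \partial _x^3 u = 0\) for \(x<a\) with zero initial value; it uses only the time advance property with \(u^0=0\) and Vieta's formulas, and is not claimed to coincide term by term with (17)–(21).

```latex
% Assumed exterior problem: \partial_t u + g_a \partial_x u + \partial_x^3 u = 0, x < a, u(0,x) = 0.
\begin{aligned}
&\text{Crank--Nicolson:} && \frac{u^{k+1}-u^k}{\tau} + \big(g_a\partial_x + \partial_x^3\big)\frac{u^{k+1}+u^k}{2} = 0,\\
&\mathcal{Z}\text{-transform } (u^0 = 0): && \frac{z-1}{\tau}\,\hat u + \frac{z+1}{2}\big(g_a\partial_x \hat u + \partial_x^3 \hat u\big) = 0,\\
&\text{i.e.} && \partial_x^3 \hat u + g_a\,\partial_x \hat u + \frac{2}{\tau}\,\frac{z-1}{z+1}\,\hat u = 0,\\
&\text{characteristic polynomial:} && \lambda^3 + g_a\lambda + \frac{2}{\tau}\,\frac{z-1}{z+1} = 0,\\
&\text{Vieta:} && \lambda_1+\lambda_2+\lambda_3 = 0,\qquad \lambda_2\lambda_3 = g_a + \lambda_1^2,\\
&\hat u = c_2 e^{\lambda_2 x} + c_3 e^{\lambda_3 x}\ \Rightarrow && \partial_x^2\hat u + \lambda_1\,\partial_x\hat u + \big(g_a+\lambda_1^2\big)\hat u = 0.
\end{aligned}
```

For \(|z|>1\) one has \(\mathrm {Re}\,\frac{z-1}{z+1}>0\), so no root \(\lambda = iy\) on the imaginary axis is possible; this is consistent with the root splitting \(\mathrm {Re}\,\lambda _1<0<\mathrm {Re}\,\lambda _{2,3}\) quoted from [6].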

Discretizing (14) by the Crank–Nicolson method gives

Let \(\mathbf {u}(x) = \{u^k(x)\}_k\) be the time sequence (x plays the role of a parameter) associated with the Crank–Nicolson scheme (17). Then its \(\mathcal {Z}\)-transform is given by

Taking the \(\mathcal {Z}\)-transform of (17) gives

where we used the time advance property of the \(\mathcal {Z}\)-transform and \(u^0(x) =0\). In particular, (18) is an ODE in the variable x. It can be solved by using the ansatz

where \(\lambda _i\), \(i=1,2,3\), are the roots of the characteristic polynomial associated with (18):

The roots \(\lambda _i\) can be ordered such that \(\mathrm {Re}\, \lambda _{1}<0\) and \(\mathrm {Re}\, \lambda _{2,3}>0\), see [6]. By the decay condition \(\hat{u}(x,z)\rightarrow 0\) for \(x\rightarrow -\infty \), we obtain \(c_1(z) = 0\) and

Since \(c_2\) and \(c_3\) are unknown, we use the derivatives of \(\hat{u}\) to derive an implicit formulation of the discrete transparent boundary conditions. Computing the first and second derivatives of \(\hat{u}\) gives

We then have

In the latter equation the z dependence is omitted. The roots \(\lambda _i\), \(i=1,2,3\) satisfy

This allows us to rewrite Eq. (20) in terms of the root \(\lambda _1\) to obtain

We can finally determine the value of \(u^{m+1}(a)\) by evaluating (21) at \(x=a\) and taking the inverse \(\mathcal {Z}\)-transform. Let

then

where we used the convolution property of the \(\mathcal {Z}\)-transform. We remark that to compute \(u^{m+1}(a)\) we need to know \(\partial _x u^{m+1}(a)\) and \(\partial _x^2 u^{m+1}(a)\). Similarly, for problem (15), we obtain

where

with \(\sigma _1\) a root of

The time discrete numerical scheme to problem (13) becomes (for \(0\le m\le M-1\))

where

Equations (25a)–(25c) constitute the Peaceman–Rachford scheme, Eq. (25d) prescribes the initial data, and Eqs. (25e)–(25g) are the discrete transparent boundary conditions.
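Assuming that the transformed exterior equation at \(x=a\) has the characteristic polynomial \(\lambda ^3 + g_a\lambda + \frac{2}{\tau }\frac{z-1}{z+1}\) (our reconstruction from a Crank–Nicolson discretization of \(\partial _t u + g_a\partial _x u + \partial _x^3 u = 0\), not taken from the original equations), the root splitting and the relation \(\partial _x^2\hat{u} + \lambda _1\partial _x\hat{u} + (g_a+\lambda _1^2)\hat{u} = 0\) can be checked numerically. The helper names, the values \(\tau =0.01\), \(g_a=1\), the circle \(|z|=1.1\) and the coefficients \(c_2,c_3\) are arbitrary choices.

```python
import numpy as np


def char_roots(z, tau, g_a):
    """Roots of lambda^3 + g_a*lambda + (2/tau)(z-1)/(z+1), sorted by increasing real part."""
    s = 2.0 / tau * (z - 1) / (z + 1)
    roots = np.roots([1.0, 0.0, g_a, s])
    return roots[np.argsort(roots.real)]  # roots[0] plays the role of lambda_1


def boundary_residual(z, tau, g_a, c2=0.7, c3=-0.3, x=-0.5):
    """|u_xx + lambda_1 u_x + (g_a + lambda_1^2) u| for u = c2 e^{lambda_2 x} + c3 e^{lambda_3 x}."""
    lam1, lam2, lam3 = char_roots(z, tau, g_a)
    e2, e3 = np.exp(lam2 * x), np.exp(lam3 * x)
    u = c2 * e2 + c3 * e3
    du = c2 * lam2 * e2 + c3 * lam3 * e3
    d2u = c2 * lam2**2 * e2 + c3 * lam3**2 * e3
    return abs(d2u + lam1 * du + (g_a + lam1**2) * u)


zs = 1.1 * np.exp(1j * np.linspace(0.0, 2 * np.pi, 17)[:-1])  # sample points with |z| = 1.1
for z in zs:
    print(char_roots(z, 0.01, 1.0).real, boundary_residual(z, 0.01, 1.0))
```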

Remark

(Computation of \(\mathbf {Y}_j\)) The quantities \(\mathbf {Y}_j\), \(j=1,\dots , 4\) are given by the inverse \(\mathcal {Z}\)-transform through Cauchy’s integral formula

where \(S_r\) is a circle with center 0 and radius \(r>\rho \), and \(\rho \) is the radius of convergence in (16). An exact evaluation of the contour integrals might be too complicated or even infeasible. Therefore, we employ a numerical procedure to approximate these quantities. In this work we use the algorithm described in [13, Sec. 2.3], which yields stable and accurate results.
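A generic way to approximate such contour integrals is the trapezoidal rule on \(S_r\), which reduces to a single inverse FFT. The Python sketch below is not claimed to be the algorithm of [13, Sec. 2.3]; it only illustrates the idea on a transform with a known inverse, \(\hat{Y}(z) = 1/(1-q/z)\) with coefficients \(y^n = q^n\) and radius of convergence \(|q|\). The function name and the parameters `r` and `K` are our choices.

```python
import numpy as np


def inverse_z_transform(Y_hat, n_terms, r=1.0, K=256):
    """Approximate y^n, n = 0, ..., n_terms - 1, from samples of Y_hat on the circle |z| = r.

    Uses y^n = (1/2pi) int_0^{2pi} Y_hat(r e^{it}) r^n e^{int} dt and the K-point
    trapezoidal rule, which equals r^n * ifft(samples)[n]. Requires n_terms <= K.
    Aliasing pollutes y^n with y^{n+K} r^{-K}, y^{n+2K} r^{-2K}, ...
    """
    theta = 2 * np.pi * np.arange(K) / K
    samples = Y_hat(r * np.exp(1j * theta))
    y = np.fft.ifft(samples)[:n_terms]  # ifft includes the 1/K factor
    return y * r ** np.arange(n_terms)


q = 0.5
y = inverse_z_transform(lambda z: 1.0 / (1.0 - q / z), 20)
print(np.max(np.abs(y - q ** np.arange(20))))
```

Choosing r close to \(\rho \) increases the aliasing error, whereas a large r amplifies round-off errors through the factor \(r^n\).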

4 Stability of the semi-discrete scheme

For this section it is convenient to adopt a more compact notation. Thus, we write \(D_3 = p_g\partial _x + \partial _x^3\) and \(D = g^*\partial _x\). Then, the Peaceman–Rachford scheme (25a)–(25c) becomes

for \(m=0,\dots ,M-1\). The scheme can be rewritten separating the first step, i.e. when \(m=0\), as follows:

Using the commutativity of \(I+\frac{\tau }{2}D_3\) and \(I-\frac{\tau }{2}D_3\) leads to

We now show that the semi-discrete numerical scheme (26) is stable. The proof follows an approach similar to that in [13].

Theorem 4.1

(Stability) The semi-discrete numerical scheme (26) is stable if \(\partial _x g^*\in L^{\infty }(a,b)\) and \(\tau < 4/\Vert \partial _x g^*\Vert _{\infty } \).

Proof

Let \((\cdot ,\cdot )\) be the usual inner product on \(L^2(a,b)\) and \(\Vert \cdot \Vert \) the induced norm. We define \(w := \left( I+\frac{\tau }{2}D\right) ^{-1}\left( I-\frac{\tau }{2}D\right) y^m\). Then

Taking the inner product with \(y^{m+1} + w\) gives

or equivalently

Integrating the right-hand side by parts gives

Notice that \(\partial _x p_g\) is constant since \(p_g\) is a polynomial of degree 1. In order to complete the proof, a bound for \(\Vert w\Vert ^2\) is needed. By definition of w, we have

Taking the inner product with \(w+y^m\) gives

Integrating by parts and using the fact that \(g^*(a) = g^*(b) = 0\) gives

Using the hypothesis \(\tau <4/\Vert \partial _x g^*\Vert _{\infty }\) leads to

Combining (27) with (29) gives the bound

where

In the definition of \(B^m\) we used \(p_g(a) = g(a)\), \(p_g(b) = g(b)\) and

Multiplying both sides of (30) by \(1- \frac{\tau }{4}\Vert \partial _x g^*\Vert _{\infty }\) and taking the sum over m gives

with

By Lemma 4.1, the quantity \(\sum B^m\) is non-positive. Therefore,

and stability follows by Gronwall’s inequality since \(c_2 =\mathcal {O}(\tau )\). \(\square \)
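To get a feeling for the step-size restriction of Theorem 4.1, consider \(g(x) = -x^3/54 + x + 3\) on \([a,b]=[-6,6]\) (Example 2 of Sect. 6). Then \(g^*(x) = -x^3/54 + 2x/3\), so \(\partial _x g^*(x) = -x^2/18 + 2/3\), whose maximum modulus on \([-6,6]\) is \(4/3\) (attained at \(x=\pm 6\)). The condition becomes \(\tau < 3\), which holds for every step size when \(T=1\). A short Python check of this arithmetic; sampling on a grid is our choice.

```python
import numpy as np


def dgstar_example2(x):
    """Derivative of g*(x) = -x^3/54 + 2x/3, i.e. of g - p_g for g(x) = -x^3/54 + x + 3."""
    return -x**2 / 18 + 2.0 / 3.0


x = np.linspace(-6.0, 6.0, 1201)  # sampling grid including the endpoints x = -6 and x = 6
sup_norm = np.max(np.abs(dgstar_example2(x)))
tau_max = 4.0 / sup_norm          # Theorem 4.1 requires tau < 4 / ||d_x g*||_inf
print(sup_norm, tau_max)
```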

Lemma 4.1

It holds \(\sum _{m=0}^{M-1} B^m \le 0\).

Proof

Consider

where

and

Inserting the discrete transparent boundary conditions (22)–(24) in \(B^M_a,\) \(B^M_b\) gives

Let us extend the sequences \(B^M_a,\) \(B^M_b\) by zero to infinite sequences and apply Parseval’s identity

We obtain

Notice that \(B^M_a\) and \(B^M_b\) are real; therefore, the imaginary parts of the right-hand sides in (31) must integrate to 0. Hence,

The quantities \(B^M_a\) and \(B^M_b\) now have the same form as in [13, Sect. 2.2]; hence, the result follows from [7, Prop. 2.4]. \(\square \)
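Parseval's identity for the \(\mathcal {Z}\)-transform on the unit circle, \(\sum _l u^l\overline{v^l} = \frac{1}{2\pi }\int _0^{2\pi }\hat{u}(e^{i\theta })\overline{\hat{v}(e^{i\theta })}\,\mathrm {d}\theta \), can be checked for finite sequences with the FFT, since `np.fft.fft(u, K)` evaluates \(\hat{u}\) at the K-th roots of unity. A Python sketch; the function name and the random test data are our choices.

```python
import numpy as np


def parseval_sides(u, v, K):
    """Both sides of Parseval's identity for equal-length sequences u, v (K >= len(u)).

    U[k] = sum_l u^l e^{-2 pi i k l / K} = u_hat(e^{2 pi i k / K}) with u_hat(z) = sum_l u^l z^{-l};
    for finite sequences the K-point rule evaluates the integral exactly.
    """
    U = np.fft.fft(u, K)  # zero-pads u to length K
    V = np.fft.fft(v, K)
    lhs = np.vdot(v, u)   # sum_l u^l * conj(v^l)
    rhs = np.sum(U * np.conj(V)) / K
    return lhs, rhs


rng = np.random.default_rng(2)
u = rng.standard_normal(10) + 1j * rng.standard_normal(10)
v = rng.standard_normal(10) + 1j * rng.standard_normal(10)
lhs, rhs = parseval_sides(u, v, 16)
print(lhs, rhs)
```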

5 Spatial discretization: pseudo-spectral approach

The spatial discretization of problem (25a)–(25g) is carried out by a dual Petrov–Galerkin method. In particular, we follow the approach of [19] for the dispersive part and that of [20] for the variable coefficient advection. It is well known that pseudo-spectral methods achieve high accuracy even for a modest number of collocation points N, provided the solution is smooth. However, these methods have to be carefully designed in order to obtain sparse mass and stiffness matrices in frequency space. The associated linear systems can then be solved in \(\mathcal {O}(N)\) operations.

In the following description we assume, without loss of generality, \(a=-1\) and \(b=1\). The idea is to choose the dual basis functions of the dual Petrov–Galerkin formulation in such a manner that boundary terms from integration by parts vanish. Let us introduce a variational formulation for

so that the discrete transparent boundary conditions are satisfied. To this end, let \(\mathcal {P}_N\) be the space of polynomials of degree at most N. For (33) and (35) we introduce the dispersive space

The conditions in \(V^d_N\) collect the left-hand sides of (25e)–(25g). Let \((u,v) = \int _{a}^b u(x)v(x)\,\mathrm {d}x\) be the usual \(L^2\) inner product. The dual space \(V_N^{d,*}\) is defined in the usual way, i.e. for every \(\phi ^d\in V_N^d\) and \(\psi ^d\in V_N^{d,*}\) it holds

Lemma 5.1

The dual space \(V_N^{d,*}\) of \(V_N^d\) is given by

Proof

Integrating \((p_g\partial _x \phi ^d,\psi ^d)\) by parts and integrating \((\partial _x^3 \phi ^d,\psi ^d)\) by parts three times gives

We want the boundary terms to vanish. For \(x=b\) we have

The last equality is obtained by substituting \(p_g(b) = g_b\) and using the relations given by the space \(V_N^d\) for \(\partial _x\phi ^d(b)\) and \(\partial _x^2\phi ^d(b)\). Similarly for \(x=a\) we have

which leads to the boundary relations of the dual space \(V_N^{d,*}\). \(\square \)

We proceed by introducing the advection space for (34):

Notice that due to the variable coefficient \(g^*\) the space \(V^a_N\) is free from inflow or outflow conditions. The dual space \(V_N^{a,*}\) is defined so that for every \(\phi ^a\in V_N^a\) it holds

for every \(\psi ^a\in V_N^{a,*}\). The dual space \(V^{a,*}_N\) is \(\mathcal {P}_N\). Let \(L_j\) be the jth Legendre polynomial. We define

where the coefficients \(\alpha _j,\beta _j,\gamma _j,\alpha _j^*,\beta _j^*,\gamma _j^*\) are chosen in such a way that \(\phi _j^d\), \(\psi _j^d\) belong to \(V_N^d\), \(V_N^{d,*}\), respectively, see “Appendix A”. The sequences \(\{\phi _j^d\}_{j=0}^{N-3}\) and \(\{\psi _j^d\}_{j=0}^{N-3}\) are a basis of \(V_N^d\) and \(V_N^{d,*}\) respectively. We are now ready to consider the variational formulation.

5.1 Variational formulation

The dual Petrov–Galerkin formulation of (33) reads: find \(u^*\in \mathcal {P}_N\) such that

holds for every \(\psi ^d_j\in V_N^{d,*}\), \(j=0,\dots ,N-3\). In general the function \(u^m\) does not belong to the space \(V_N^d\). Indeed \(u^m\) satisfies the discrete transparent boundary conditions

However, we can write \(u^m = u^m_h + p_2^m\), where \(u^m_h\in V_N^d\) and \(p_2^m\) is the unique polynomial of degree at most two such that

In general, the function \(u^*\) does not belong to \(V_N^d\) either. Similarly, we can write \(u^* = u^*_h + p_2^*\). We assume that \(u^*\) satisfies the same boundary conditions as \(u^m\); therefore, \(p^*_2 = p^m_2\). We thus obtain

We proceed with the dual Petrov–Galerkin formulation of (34). Find \(u^{m+1/2}\in \mathcal {P}_N\) such that

holds for every \(\psi ^a_j\in V_N^{a,*}\), \(j=0,\dots ,N\). Notice that \(u^*, u^{m+1/2}\in V_N^a\). Hence, unlike in the dispersive case, we obtain the solution \(u^{m+1/2}\) without performing any shift.

Similarly to (33), the dual Petrov–Galerkin formulation of (35) reads: find \(u^{m+1}\in \mathcal {P}_N\) such that

holds for every \(\psi ^d_j\in V_N^{d,*}\), \(j=0,\dots ,N-3\).

5.2 Implementation in frequency space

In this section we compute the mass and stiffness matrices for (39)–(41). For the numerical implementation, the \(L^2\) inner product (u, v) needs to be approximated. We use two different discrete inner products for the spaces \(V^d_N\) and \(V_N^a\), since these spaces satisfy different boundary conditions.

Definition

(Dispersive inner product) Let \(\langle \cdot ,\cdot \rangle _N^d\) be the dispersive inner product defined as

where \(y_l\) are the roots of the Jacobi polynomial \(P^{(2,1)}_{N-2}(y)\) and \(w_l\) the associated weights.

Definition

(Advection inner product) Let \(\langle \cdot ,\cdot \rangle _N^a\) be the advection inner product defined as

where \(y_l\) are the roots of the Jacobi polynomial \(P^{(0,0)}_{N+1}(y)\) and \(w_l\) the associated weights.

We have \((u,v) = \langle u,v\rangle _N^d\) for all polynomials u, v such that \(\deg u + \deg v \le 2N-2\) and \((u,v) = \langle u,v\rangle _N^a\) for all polynomials u, v such that \(\deg u + \deg v \le 2N+1\). For more details about generalized quadrature rules, we refer the reader to [16].
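The exactness degree \(2N+1\) of the advection inner product is the classical property of Gauss–Legendre quadrature with \(N+1\) nodes, the roots of \(P^{(0,0)}_{N+1} = L_{N+1}\). A Python check using NumPy's `numpy.polynomial.legendre.leggauss`; testing monomials on \([-1,1]\) and the tolerance are our choices. The dispersive rule, whose exact form is not reproduced here, is not covered by this sketch.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def max_exact_degree(N, tol=1e-12):
    """Largest d such that the (N+1)-point Gauss-Legendre rule integrates 1, x, ..., x^d exactly."""
    nodes, weights = leg.leggauss(N + 1)  # roots of L_{N+1} and the associated weights
    d = 0
    while True:
        exact = 0.0 if d % 2 else 2.0 / (d + 1)  # int_{-1}^{1} x^d dx
        approx = np.sum(weights * nodes**d)
        if abs(approx - exact) > tol:
            return d - 1
        d += 1


print(max_exact_degree(6))  # 2N + 1 with N = 6
```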

Stiffness and mass matrices for (39), (41) Since \(u^m_h\in V_N^d\), we can express it as a linear combination of the \(V_N^d\) basis functions, i.e.

The first step is to obtain the frequency coefficients \(\tilde{u}_{h,k}^{m,d}\) in (44). We take the dispersive inner product on both sides

The mass matrix is

Using the orthogonality relation between \(\phi ^d_k\) and \(\psi ^d_j\) gives \(\langle \phi ^d_k,\psi ^d_j\rangle ^d_N = 0\) if \(|k-j|>3\) and \(j+k\le 2N-8\), see “Appendix B”. Then, \(\mathbf {M}^d\) is a 7-diagonal matrix. Equation (45) in matrix form reads

The left-hand side of (46) can also be written in matrix form:

where

We obtain the frequency coefficients

The second step is to compute the stiffness matrix and the frequency coefficients of the second term of the sum in (39). The stiffness matrix is

Lemma 5.2

\(\mathbf {S}^d\) is a 7-diagonal matrix.

Proof

We know that \(\phi ^d_k\) is a polynomial of degree \(k+3\). Therefore, \( q(x):= p_g(x)\partial _x \phi _k^d(x) + \partial ^3_x\phi ^d_k(x)\) is a polynomial of degree \(\le k+3\). We write q as a linear combination of Legendre polynomials up to degree \(k+3\):

Let us consider the dispersive inner product \(\langle q,\psi ^d_j\rangle ^d_N\) and \(k+3<j\). Then,

The last equation follows from the definition of \(\psi ^d_j\) and the orthogonality property of the Legendre polynomials. Let \(j < k+3\) with \(k+j\le 2N-8\), then (see “Appendix B”)

The polynomial \(\tilde{q} = \partial _x(p_g\psi _j^d)+\partial _x^3\psi _j^d\) is of degree j. Similarly to q, we obtain \(\langle \phi _k^d,\tilde{q}\rangle ^d_N=0\) and the result follows. \(\square \)

The frequency coefficients of the second term on the right-hand side of (39) are given by

Notice that \(p_g\partial _xp_2^m\) is a polynomial of degree 2. Therefore, it can be written as a linear combination of the Legendre polynomials \(L_0\), \(L_1\) and \(L_2\). Using the orthogonality property of Legendre polynomials we obtain \(\langle p_g\partial _xp_2^m,\psi _j^d\rangle _N^d=0\) for \(j>2\). Problem (39) is equivalent to

A similar procedure applies to (41), where we obtain

with

Both linear systems (47)–(48) can be solved in \(\mathcal {O}(N)\) operations since \(\mathbf {M}^d\) and \(\mathbf {S}^d\) are 7-diagonal matrices.
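The \(\mathcal {O}(N)\) cost comes from banded LU factorization: a 7-diagonal matrix has three sub- and three super-diagonals, and SciPy's `scipy.linalg.solve_banded` works in the diagonal-ordered storage `ab[u + i - j, j] = A[i, j]`. The Python sketch uses a random diagonally dominant 7-diagonal stand-in, not the actual matrices \(\mathbf {M}^d\), \(\mathbf {S}^d\); the helper `to_banded` is ours.

```python
import numpy as np
from scipy.linalg import solve_banded


def to_banded(A, lower, upper):
    """Pack A into the (lower + upper + 1) x n diagonal-ordered storage used by solve_banded."""
    n = A.shape[0]
    ab = np.zeros((lower + upper + 1, n))
    for i in range(n):
        for j in range(max(0, i - lower), min(n, i + upper + 1)):
            ab[upper + i - j, j] = A[i, j]
    return ab


rng = np.random.default_rng(0)
n = 12
A = 10.0 * np.eye(n)            # strong diagonal keeps the stand-in well conditioned
for k in range(-3, 4):          # fill the 7 central diagonals (offsets -3, ..., 3)
    A += np.diag(rng.standard_normal(n - abs(k)), k)
b = rng.standard_normal(n)
x = solve_banded((3, 3), to_banded(A, 3, 3), b)  # banded LU: O(n) work for fixed bandwidth
```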

Stiffness and mass matrices for (40) We can express the functions \(u^*\) and \(u^{m+1/2}\) as linear combinations of \(V_N^a\) basis functions, i.e.

Similarly to the dispersive case, we need the frequency coefficients in (49), (50). We take the advection inner product in (49), (50) on both sides

Using the orthogonality relation between \(\phi ^a_k\) and \(\psi ^a_j\) gives \(\langle \phi ^a_k,\psi ^a_j\rangle ^a_N = 0\) if \(k\ne j\). Then, the mass matrix

is a diagonal matrix. Finally, Problem (40) is equivalent to

with the stiffness matrix \(\mathbf {S}^a\in \mathbb {R}^{(N+1)\times (N+1)}\) defined by

The stiffness matrix \(\mathbf {S}^a\) is in general a full matrix. Solving (53) directly requires \(\mathcal {O} (N^3)\) operations and is thus not advisable. Applying an iterative scheme is preferable, but multiplying the matrix \(\mathbf {S}^a\) with a vector costs \(\mathcal O(N^2)\) operations. A more efficient way is to compute \(g^*\partial _x u^*\) (and \(g^*\partial _x u^{m+1/2}\)) in physical space at the advection collocation points. The point-wise multiplication with \(g^*\) costs only \(\mathcal O(N)\) operations. The result is then transformed back to frequency space. Transforming back and forth between physical and frequency space can be done efficiently by employing the discrete Legendre transform (DLT) and the inverse discrete Legendre transform (IDLT) developed in [14], see “Appendix C”.
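The pseudo-spectral evaluation of \(g^*\partial _x u\) can be sketched as follows: differentiate in Legendre coefficient space, evaluate at the \(N+1\) Gauss–Legendre points, multiply point-wise by \(g^*\) and transform back. This Python sketch uses a naive \(\mathcal {O}(N^2)\) quadrature-based transform instead of the fast transforms of [14], and assumes the advection basis is the plain Legendre basis on \([-1,1]\); the function names are ours.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def values_to_legendre(values, nodes, weights):
    """Legendre coefficients of the interpolant at Gauss nodes (naive O(N^2) discrete transform)."""
    n = len(nodes)
    V = leg.legvander(nodes, n - 1)         # V[l, j] = L_j(y_l)
    scale = (2 * np.arange(n) + 1) / 2.0    # 1 / ||L_j||^2 on [-1, 1]
    return scale * (V.T @ (weights * values))


def advection_coefficients(u_coeffs, gstar):
    """Legendre coefficients of gstar(y) * u'(y), with the product formed point-wise."""
    n = len(u_coeffs)                                  # N + 1 coefficients
    nodes, weights = leg.leggauss(n)                   # N + 1 Gauss-Legendre points
    du_vals = leg.legval(nodes, leg.legder(u_coeffs))  # derivative, then values at the nodes
    return values_to_legendre(gstar(nodes) * du_vals, nodes, weights)


u_coeffs = np.zeros(8)
u_coeffs[2] = 1.0                     # u = L_2, so u' = 3y
res = advection_coefficients(u_coeffs, lambda y: 1.0 - y**2)
print(res)                            # (1 - y^2) 3y = 1.2 L_1 - 1.2 L_3
```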

Remark

For the special case where \(g^*\) is a polynomial of degree n, the stiffness matrix \(\mathbf {S}^a\) is banded with bandwidth at most 2n. This implies that for small n the linear system (53) is sparse and can be solved in \(\mathcal O(N)\) operations without switching from frequency to physical space.

Transition matrices In order to connect (53) to (47) and (48), it is necessary to transfer information from the dispersive space to the advection space and vice-versa. In particular, the aim is to translate the frequency coefficients from the dispersive space to the advection space in an efficient way. Let

By using the orthogonality property of Legendre polynomials, one can prove that \(\mathbf {M}^{da}\) and \(\mathbf {M}^{ad}\) are 4-diagonal matrices. Consider

for \(j=0,\dots ,N.\) Then,

The frequency coefficients \(\tilde{\mathbf {u}}^{*,a}\) are obtained directly from the coefficients \(\tilde{\mathbf {u}}^{*,d}\) in \(\mathcal {O}(N)\) operations. Similarly, consider

for \(j=0,\dots , N-3\). Then

The coefficients \(\tilde{\mathbf {u}}^{m+1/2,d}\) can be directly obtained from \(\tilde{\mathbf {u}}^{m+1/2,a}\) in \(\mathcal {O}(N)\) operations.
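Why the transition is cheap can be seen from the structure of the dispersive basis: if, as assumed here, \(\phi ^d_j = L_j + \alpha _j L_{j+1} + \beta _j L_{j+2} + \gamma _j L_{j+3}\) (the explicit coefficients of “Appendix A” are not reproduced) and the advection space uses Legendre coefficients, then each Legendre coefficient depends on at most four dispersive coefficients. The Python sketch uses random stand-in values for \(\alpha _j,\beta _j,\gamma _j\); the function name is ours.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def dispersive_to_legendre(c, alpha, beta, gamma):
    """Legendre coefficients of sum_j c_j phi_j with phi_j = L_j + alpha_j L_{j+1} + beta_j L_{j+2} + gamma_j L_{j+3}."""
    n = len(c)                 # N - 2 dispersive coefficients, j = 0, ..., N - 3
    out = np.zeros(n + 3)      # N + 1 Legendre coefficients, degrees 0, ..., N
    out[:n] += c
    out[1:n + 1] += alpha * c
    out[2:n + 2] += beta * c
    out[3:n + 3] += gamma * c  # four non-zero diagonals, O(N) work
    return out


rng = np.random.default_rng(1)
n = 6
c, alpha, beta, gamma = (rng.standard_normal(n) for _ in range(4))  # stand-in values
coeffs = dispersive_to_legendre(c, alpha, beta, gamma)
```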

Full discretization The implementation in frequency space results in

The solution \(u^{m+1}(x)\) can be reconstructed by

6 Numerical results

In this section, we present numerical results that illustrate the theoretical investigations of the previous sections. For that purpose, we consider

with final time \(T=1\) and initial value \(u^0(x)=\mathrm {e}^{-x^2}\). We restrict (61) to the interval \((-6,6)\) and impose transparent boundary conditions at \(x=\pm 6\). The initial data is chosen such that \(|u^0(\pm 6)|\le 10^{-15}\).

For the numerical simulations, we employ a time discretization with constant step size \(\tau = T/M\)

and a space discretization given by the dual Petrov–Galerkin variational formulation with N collocation points. We measure the full discretization error in the \(\ell ^2\) norm, defined as

where

is the relative \(\ell ^2\) spatial error computed at time \(t^m = \tau m\). The points \(x_j\) are chosen to be equidistant in \([-6,6]\) with \(0\le j\le J=2^7\). Finally, the function \(u^m_{\text {ref}}\) is either a reference solution or the exact solution, if available. The function \(u^m_N\) is the numerical solution at time \(t^m\) employing N collocation points.
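The relative spatial \(\ell ^2\) error on the equidistant evaluation grid can be sketched as below. The aggregation over the time levels \(t^m\) used in the full discretization error is not reproduced here, so this Python sketch only covers the spatial part; the function name and the use of the Gaussian initial value as sample data are our choices.

```python
import numpy as np


def relative_l2_error(u_num, u_ref):
    """Relative discrete l2 error ||u_num - u_ref|| / ||u_ref|| on a common grid."""
    return np.linalg.norm(u_num - u_ref) / np.linalg.norm(u_ref)


x = np.linspace(-6.0, 6.0, 2**7 + 1)  # x_j equidistant in [-6, 6], j = 0, ..., J = 2^7
u_ref = np.exp(-x**2)                 # sample data: the initial value u^0 of this section
print(relative_l2_error(1.01 * u_ref, u_ref))  # a uniform 1% perturbation
```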

Example 1

(Constant advection) We consider (61) with constant advection \(g(x)=6\). This problem is also considered in [6]. In this setting, the advection equation (8b) of the modified splitting reduces to the identity map. Although the time splitting is trivial in this case, the exact solution can be computed via the Fourier transform, see [6]. Consequently, the constant advection problem offers a good benchmark for testing the convergence of the proposed numerical method.

Fig. 1 Snapshots of the exact solution \(u_{\mathrm {ex}}\) and the numerical solution \(u^m_N\) for \(g=6\) and \(t=\frac{1}{4}\), \(\frac{1}{2}\), \(\frac{3}{4}\), 1 with \(\tau =2^{-12}\) and \(N=2^6\) collocation points. The cross marks representing the exact solution lie on the numerical solution.

In Fig. 1 snapshots of the numerical solution \(u^m_N\) for \(t = \frac{1}{4},\) \(\frac{1}{2},\) \(\frac{3}{4}\), 1 and \(\tau = 2^{-12}\) are shown. Notice that the numerical solution “leaves” the domain at the boundary \(x=-6\) without any reflection. As time increases, the solution moves to the right and re-enters the computational domain. Finally, the solution reaches the boundary at \(x=6\) without any reflection.

Table 2 reports the full discretization error between the numerical solution and the exact solution for varying N and M. In Table 2 (left), the number of time steps M is fixed to \(2^{12}\) and the number of collocation points N varies from 24 to 40. In this way, the time discretization error is small enough to be negligible compared with the spatial error. The value \(\alpha \) denotes the slope of the line connecting two subsequent error values in a semi-logarithmic plot with respect to N. More specifically, let \(N_1<N_2\) be two subsequent values of N and \(\Vert \mathrm {err}_1\Vert _{\ell ^2}\), \(\Vert \mathrm {err}_2\Vert _{\ell ^2}\) the associated error values. Then

Notice that \(\alpha \) remains constant when N is varying, which confirms the spectral accuracy of the numerical scheme.
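A constant slope in a semi-logarithmic plot corresponds to exponential convergence \(\Vert \mathrm {err}\Vert _{\ell ^2}\approx C\mathrm {e}^{-\alpha N}\). The Python sketch below computes such a slope from two error values, assuming the natural logarithm and a sign chosen so that decay gives \(\alpha >0\); the exact formula used for Table 2 is not reproduced, and a base-10 logarithm would rescale \(\alpha \) by \(1/\ln 10\). The error values are synthetic, not results from the paper.

```python
import numpy as np


def spectral_slope(N1, N2, err1, err2):
    """Slope of log(err) versus N between two data points, sign chosen positive for decay."""
    return np.log(err1 / err2) / (N2 - N1)


Ns = np.array([24, 28, 32])
errs = 3.0 * np.exp(-0.5 * Ns)  # synthetic exponentially decaying errors
alphas = [spectral_slope(Ns[i], Ns[i + 1], errs[i], errs[i + 1]) for i in range(len(Ns) - 1)]
print(alphas)                   # constant slope 0.5
```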

In Table 2 (right), the number of collocation points is fixed to \(2^6\) and the number of time steps M varies from \(2^5\) to \(2^8\). In this way, the spatial error is small enough to be negligible compared with the time error. The value \(\beta \) denotes the slope of the line connecting two subsequent error values in a double-logarithmic plot with respect to M. More specifically, let \(M_1<M_2\) be two subsequent values of M and \(\Vert \mathrm {err}_1\Vert _{\ell ^2}\), \(\Vert \mathrm {err}_2\Vert _{\ell ^2}\) the associated error values. Then

We clearly observe \(\beta \approx 2\), which confirms second-order accuracy in time.
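The sketch below computes the observed order \(\beta =\log (\Vert \mathrm {err}_1\Vert /\Vert \mathrm {err}_2\Vert )/\log (M_2/M_1)\) from consecutive pairs \((M_i,\Vert \mathrm {err}_i\Vert )\). It assumes \(\tau =1/M\), i.e. final time 1, which is consistent with \(\tau =2^{-12}\) for \(M=2^{12}\). The errors \(C\tau ^2\) are synthetic values for an ideal second-order method, not the data of Table 2, and the function name is hypothetical.

```python
import math


def temporal_orders(ms, errs):
    """Observed order beta = ln(err1 / err2) / ln(M2 / M1) for consecutive pairs."""
    pairs = list(zip(ms, errs))
    return [
        math.log(e1 / e2) / math.log(m2 / m1)
        for (m1, e1), (m2, e2) in zip(pairs, pairs[1:])
    ]


# Synthetic errors of a second-order method: err = C * tau**2 with tau = 1 / M.
ms = [2**5, 2**6, 2**7, 2**8]
errs = [0.7 * (1.0 / m) ** 2 for m in ms]
print(temporal_orders(ms, errs))  # approximately [2.0, 2.0, 2.0]
```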

Example 2

We consider (61) with \(g(x) = - x^3/54 + x + 3\). As mentioned in Sect. 5.2, when g is a low-degree polynomial the stiffness matrix \({\mathbf {S}}^{a}\) is banded. Therefore, the linear system associated with the advection equation can be solved in \(\mathcal O(N)\) operations. Since the exact solution of this problem is not known, we compare the numerical solution \(u^m_N\) with a reference solution \(u^m_{\text {ref}}\) computed with a significantly larger number of degrees of freedom, both in time and in space.
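The \(\mathcal O(N)\) cost rests on the band structure alone. The sketch below solves a general banded system by Gaussian elimination restricted to the band. With p sub-diagonals and q super-diagonals it costs \(\mathcal O(Npq)\) operations, which is \(\mathcal O(N)\) for a fixed bandwidth. Several details are assumptions made here: the band storage layout, the function name, and the absence of pivoting (safe, e.g., for diagonally dominant matrices). The actual entries and bandwidth of \({\mathbf {S}}^{a}\) from Sect. 5.2 are not reproduced.

```python
def solve_banded(band, p, q, rhs):
    """Solve A x = rhs for a banded matrix A by elimination without pivoting.

    band[i][d + p] holds A[i][i + d] for d = -p, ..., q; slots that fall
    outside the matrix are never read. Work is O(n * p * q).
    """
    n = len(rhs)
    a = [row[:] for row in band]  # copy so the caller's data stays untouched
    x = list(rhs)
    # Forward elimination: below the pivot only rows k+1..k+p are nonzero,
    # and row k is nonzero only in columns k..k+q, so no fill-in occurs.
    for k in range(n):
        pivot = a[k][p]
        for i in range(k + 1, min(k + p + 1, n)):
            factor = a[i][k - i + p] / pivot
            if factor == 0.0:
                continue
            for j in range(k, min(k + q + 1, n)):
                a[i][j - i + p] -= factor * a[k][j - k + p]
            x[i] -= factor * x[k]
    # Back substitution using the upper band only.
    for k in range(n - 1, -1, -1):
        s = x[k]
        for j in range(k + 1, min(k + q + 1, n)):
            s -= a[k][j - k + p] * x[j]
        x[k] = s / a[k][p]
    return x


# Usage: tridiagonal example (p = q = 1), -x[i-1] + 4 x[i] - x[i+1] = rhs[i],
# with the right-hand side chosen so that the solution is all ones.
n = 6
band = [[-1.0, 4.0, -1.0] for _ in range(n)]
rhs = [3.0] + [2.0] * (n - 2) + [3.0]
print(solve_banded(band, 1, 1, rhs))  # approximately all ones
```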

In Fig. 2 snapshots of the numerical solution \(u^m_N\) for \(t = \frac{1}{4}\), \(\frac{1}{2}\), \(\frac{3}{4}\), 1 are shown. The solution is transported to the right with increasing speed, and no appreciable reflections are visible at the boundaries. As in Example 1, Table 3 reports the full discretization errors for varying N and M, here with respect to a reference solution \(u^m_{\text {ref}}\) computed with \(N_{\text {ref}}=2^6\) and \(M_{\text {ref}}=2^{12}\).

Example 3

We consider (61) with \(g(x) = \mathrm {e}^{-(x+6)^2} + \mathrm {e}^{-x^2} + \mathrm {e}^{-(x-6)^2}-\frac{1}{2}\). This example is interesting because g is not a polynomial and changes sign. As a result, mass concentrates at the points \(\bar{x}\) where \(g(\bar{x}) = 0\) and \(\partial _x g(\bar{x}) < 0\), since on both sides of such a point the advection velocity g points towards \(\bar{x}\). Conversely, the solution thins out where \(g(\bar{x})=0\) and \(\partial _x g(\bar{x}) >0\). Snapshots of the numerical solution illustrating this phenomenon are shown in Fig. 3. As expected, no reflections are detected at the boundaries.
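The sign rule can be checked directly on this g. The sketch below locates the zeros of g by detecting sign changes on a uniform sampling followed by bisection, and then classifies each zero by the sign of \(\partial _x g\). It assumes the computational domain is \([-6,6]\), as in Example 1; the helper names are hypothetical. Near \(x=0\) only \(\mathrm {e}^{-x^2}\) matters, so the zeros lie close to \(\pm \sqrt{\ln 2}\approx \pm 0.83\) and \(\pm (6-\sqrt{\ln 2})\approx \pm 5.17\).

```python
import math


def g(x):
    return math.exp(-(x + 6) ** 2) + math.exp(-x**2) + math.exp(-(x - 6) ** 2) - 0.5


def dg(x):
    # Derivative of g, computed analytically.
    return (-2 * (x + 6) * math.exp(-(x + 6) ** 2)
            - 2 * x * math.exp(-x**2)
            - 2 * (x - 6) * math.exp(-(x - 6) ** 2))


def find_zeros(f, a, b, samples=1200, tol=1e-12):
    """Zeros of f on [a, b]: sign changes on a uniform grid, refined by bisection."""
    roots = []
    xs = [a + (b - a) * i / samples for i in range(samples + 1)]
    for lo, hi in zip(xs, xs[1:]):
        if f(lo) * f(hi) < 0:
            while hi - lo > tol:
                mid = 0.5 * (lo + hi)
                if f(lo) * f(mid) <= 0:
                    hi = mid
                else:
                    lo = mid
            roots.append(0.5 * (lo + hi))
    return roots


for xb in find_zeros(g, -6.0, 6.0):
    kind = "concentration" if dg(xb) < 0 else "thinning out"
    print(f"x = {xb:+.4f}: {kind}")
```

Following the rule stated above, concentration is predicted near \(x\approx -5.17\) and \(x\approx 0.83\), and thinning out near \(x\approx -0.83\) and \(x\approx 5.17\).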

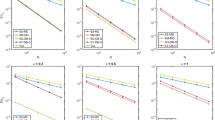

Fig. 4 Full discretization errors \(\Vert \mathrm {err}\Vert _{\ell ^2}\) (dotted lines) between the numerical solutions and the exact solution (Example 1) or the reference solutions (Examples 2 and 3), for Examples 1 (blue circles), 2 (red stars) and 3 (yellow squares). Left: errors with \(M=2^{12}\) fixed; the x-axis shows \(N^2\), where the number of collocation points N varies from 24 to 40. For \(N=40\) collocation points an accuracy of \(10^{-6}\) is achieved for Examples 1 and 2, and of \(10^{-4}\) for Example 3. Right: errors with \(N=2^6\) fixed; the x-axis shows the number of time steps M, varying from \(2^5\) to \(2^9\). The solid black line has slope \(-2\). Second order in time is observed for Examples 1, 2 and 3.

As in Example 2, Table 4 reports the full discretization error for varying N and M with respect to a reference solution \(u^m_{\text {ref}}\) computed with \(N_{\text {ref}}=2^6\) and \(M_{\text {ref}}=2^{12}\). In Table 4 (left) we observe smaller values of \(\alpha \) than in Tables 2 and 3. Hence spatial convergence is slower than in Examples 1 and 2, but spectral accuracy is still achieved. The slower convergence is related to the variations of the function \(g^*\), which are larger in magnitude than in Examples 1 and 2.

In Fig. 4 we collect the error plots for Examples 1, 2 and 3. In all numerical tests we observe second order in time and the typical exponential convergence \(\exp (-\alpha N^2)\), \(\alpha >0\), in space.

The numerical experiments confirm that the proposed approach performs well in the one-dimensional case. The extension to higher dimensions, however, is not straightforward. Both the transparent boundary conditions and the pseudo-spectral discretization become more involved to compute. This poses a real challenge and is the subject of future studies.

References

[1] Antoine, X., Arnold, A., Besse, C., Ehrhardt, M., Schädle, A.: A review of transparent and artificial boundary conditions techniques for linear and nonlinear Schrödinger equations. Commun. Comput. Phys. 4, 729–796 (2008)

[2] Antoine, X., Geuzaine, C., Tang, Q.: Perfectly matched layer for computing the dynamics of nonlinear Schrödinger equations by pseudospectral methods: application to rotating Bose-Einstein condensates. Commun. Nonlinear Sci. Numer. Simul. 90, 105406 (2020)

[3] Arnold, A., Ehrhardt, M., Sofronov, I.: Discrete transparent boundary conditions for the Schrödinger equation: fast calculation, approximation, and stability. Commun. Math. Sci. 1, 501–556 (2003)

[4] Berenger, J.-P.: A perfectly matched layer for the absorption of electromagnetic waves. J. Comput. Phys. 114, 185–200 (1994)

[5] Bertoli, G.B.: Splitting methods for the Schrödinger equation with absorbing boundary conditions. Master’s thesis, Université de Genève (2017). https://archive-ouverte.unige.ch/unige:121404

[6] Besse, C., Ehrhardt, M., Lacroix-Violet, I.: Discrete artificial boundary conditions for the linearized Korteweg-de Vries equation. Numer. Methods Partial Differ. Equ. 32, 1455–1484 (2016)

[7] Besse, C., Noble, P., Sanchez, D.: Discrete transparent boundary conditions for the mixed KdV-BBM equation. J. Comput. Phys. 345, 484–509 (2017)

[8] Einkemmer, L., Ostermann, A.: Overcoming order reduction in diffusion-reaction splitting. Part 1: Dirichlet boundary conditions. SIAM J. Sci. Comput. 37, 1577–1592 (2014)

[9] Einkemmer, L., Ostermann, A.: A splitting approach for the Kadomtsev-Petviashvili equation. J. Comput. Phys. 299, 716–730 (2015)

[10] Einkemmer, L., Ostermann, A.: Overcoming order reduction in diffusion-reaction splitting. Part 2: Oblique boundary conditions. SIAM J. Sci. Comput. 38, A3741–A3757 (2016)

[11] Einkemmer, L., Ostermann, A.: A split step Fourier/discontinuous Galerkin scheme for the Kadomtsev-Petviashvili equation. Appl. Math. Comput. 334, 311–325 (2018)

[12] Einkemmer, L., Ostermann, A., Residori, M.: A pseudospectral splitting method for linear dispersive problems with transparent boundary conditions. J. Comput. Appl. Math. 385, 113240 (2021)

[13] Fang, J., Wu, B., Liu, W.: An explicit spectral collocation method for the linearized Korteweg-de Vries equation on unbounded domain. Appl. Numer. Math. 126, 34–52 (2018)

[14] Hale, N., Townsend, A.: A fast FFT-based discrete Legendre transform. IMA J. Numer. Anal. 36, 1670–1684 (2015)

[15] Holden, H., Karlsen, K.H., Risebro, N.H., Tao, T.: Operator splitting for the KdV equation. Math. Comput. 80, 821–846 (2011)

[16] Huang, W., Sloan, D.: The pseudospectral method for third-order differential equations. SIAM J. Numer. Anal. 29, 1626–1647 (1992)

[17] Kakutani, T.: Effect of an uneven bottom on gravity waves. J. Phys. Soc. Jpn. 30, 272–276 (1971)

[18] Nakano, K., Kemmochi, T., Miyatake, Y., Sogabe, T., Zhang, S.-L.: Modified Strang splitting for semilinear parabolic problems. JSIAM Lett. 11, 77–80 (2019)

[19] Shen, J.: A new dual-Petrov-Galerkin method for third and higher odd-order differential equations: application to the KdV equation. SIAM J. Numer. Anal. 41, 1595–1619 (2004)

[20] Shen, J., Wang, L.-L.: Legendre and Chebyshev dual-Petrov–Galerkin methods for hyperbolic equations. Comput. Methods Appl. Mech. Eng. 196, 3785–3797 (2007)

[21] Whitham, G.: Linear and Nonlinear Waves. Wiley, New York (2011)

[22] Zheng, C.: A perfectly matched layer approach to the nonlinear Schrödinger wave equations. J. Comput. Phys. 227, 537–556 (2007)

[23] Zheng, C., Wen, X., Han, H.: Numerical solution to a linearized KdV equation on unbounded domain. Numer. Methods Partial Differ. Equ. 24, 383–399 (2008)

Funding

Open access funding provided by University of Innsbruck and Medical University of Innsbruck.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

A Finding the coefficients in (36)

The Legendre polynomials \(L_j(x)\) satisfy the orthogonality relation
\[\int _{-1}^{1} L_j(x)\,L_k(x)\,\mathrm {d}x = \frac{2}{2j+1}\,\delta _{jk}.\]

Further, at \(x=\pm 1\) we have
\[\partial _x^k L_j(\pm 1) = (\pm 1)^{j+k}\,\frac{(j-k+1)_{2k}}{2^k\,k!},\]

where \((j)_k = j(j+1)\cdots (j+k-1)\) denotes the Pochhammer symbol. Inserting the dispersive basis function \(\phi ^d_j\) given in (36) into the boundary relations of the space \(V^d_N\) leads to the following linear system for \((\alpha _j,\beta _j,\gamma _j)^T\):
\[\mathbf {A}\,(\alpha _j,\beta _j,\gamma _j)^T = \mathbf {b},\]

with \(\mathbf {A}\in \mathbb {R}^{3\times 3}\) and \(\mathbf {b}\in \mathbb {R}^3\).

Similarly, for \((\alpha _j^*,\beta _j^*,\gamma _j^*)^T\) we obtain an analogous \(3\times 3\) linear system.
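The sketch below makes the procedure concrete. It evaluates endpoint derivatives of \(L_j\) with the Pochhammer formula \(\partial _x^k L_j(\pm 1)=(\pm 1)^{j+k}(j-k+1)_{2k}/(2^k k!)\) and solves the resulting \(3\times 3\) system by Cramer's rule. The basis function is taken of the form \(L_j+\alpha _j L_{j+1}+\beta _j L_{j+2}+\gamma _j L_{j+3}\), which matches the degree \(j+3\) used in Appendix B. The boundary relations are an assumption: the stand-in conditions \(\phi (-1)=\phi (1)=\partial _x\phi (1)=0\), of the type used for third-order problems in [19], replace the transparent-boundary relations that actually define \(V^d_N\). All function names are hypothetical.

```python
from math import factorial


def pochhammer(a, k):
    """Rising factorial (a)_k = a (a+1) ... (a+k-1), with (a)_0 = 1."""
    out = 1
    for i in range(k):
        out *= a + i
    return out


def legendre_deriv_at_endpoint(j, k, s):
    """k-th derivative of L_j at x = s, s in {+1, -1}."""
    return s ** (j + k) * pochhammer(j - k + 1, 2 * k) / (2**k * factorial(k))


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def basis_coefficients(j, conditions=((0, -1), (0, 1), (1, 1))):
    """(alpha_j, beta_j, gamma_j) such that
    phi = L_j + alpha L_{j+1} + beta L_{j+2} + gamma L_{j+3}
    has a vanishing k-th derivative at x = s for every (k, s) in `conditions`.
    The default phi(-1) = phi(1) = phi'(1) = 0 is only a stand-in choice.
    """
    A = [[legendre_deriv_at_endpoint(j + i, k, s) for i in (1, 2, 3)]
         for k, s in conditions]
    b = [-legendre_deriv_at_endpoint(j, k, s) for k, s in conditions]
    d = det3(A)
    coeffs = []
    for col in range(3):  # Cramer's rule: replace column `col` by b
        Ac = [row[:col] + [b[r]] + row[col + 1:] for r, row in enumerate(A)]
        coeffs.append(det3(Ac) / d)
    return tuple(coeffs)


print(basis_coefficients(0))
```

For \(j=0\) this gives \(\alpha _0=-3/5\), \(\beta _0=-1\), \(\gamma _0=3/5\). In general, these stand-in conditions yield \(\alpha _j=-(2j+3)/(2j+5)\), \(\beta _j=-1\), \(\gamma _j=(2j+3)/(2j+5)\).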

B Inner product and discrete inner product

The entries of the mass matrix \(\mathbf {M}^d\) are given by

The discrete inner product is equal to the usual \(L^2\) inner product for all polynomials up to degree \(2N-2\). Since \(\phi _k^d\) is a polynomial of degree \(k+3\) and \(\psi ^d_j\) a polynomial of degree \(j+3\), we have

This means that all entries \(\mathbf {M}^d_{kj}\) except those with \((k,j)\in \{(N-4,N-3),(N-3,N-4),(N-3,N-3)\}\) can be pre-computed analytically. For these three entries the discrete inner product defined in (42) must be used. Consequently, for these entries the orthogonality relation between \(\phi ^d_k\) and \(\psi ^d_j\) might not hold for the dispersive inner product. However, the bandwidth of the matrix does not change. A similar analysis applies to the stiffness matrix \(\mathbf {S}^d\). For \(\mathbf {M}^{ad}\) we have

The entries with \((k,j) \in \{(N-1,N-3),(N,N-4),(N,N-3)\}\) must be computed using the dispersive inner product. The transition matrix \(\mathbf {M}^{da}\) is given by

Since \(0\le k\le N-3\) and \(0\le j\le N\), all entries can be computed analytically. A similar analysis applies to the advection mass matrix \(\mathbf {M}^a\).
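The degree bookkeeping behind these exceptions can be checked mechanically. The sketch below lists the index pairs for which the combined degree of trial and test function exceeds \(2N-2\), the exactness degree of the dispersive discrete inner product. For \(\mathbf {M}^d\) the degrees \(k+3\) and \(j+3\) are taken from the text. For \(\mathbf {M}^{ad}\) it is assumed here that the advection function with index k (\(0\le k\le N\)) has degree k and the dispersive one (\(0\le j\le N-3\)) degree \(j+3\). This assumption reproduces the three entries listed above. The function name is hypothetical.

```python
def non_exact_entries(N, k_max, j_max, deg_k, deg_j):
    """Index pairs (k, j) whose integrand degree exceeds 2N - 2."""
    exact = 2 * N - 2
    return [(k, j)
            for k in range(k_max + 1)
            for j in range(j_max + 1)
            if deg_k(k) + deg_j(j) > exact]


N = 16
# Mass matrix M^d: degrees k + 3 and j + 3 with 0 <= k, j <= N - 3.
print(non_exact_entries(N, N - 3, N - 3, lambda k: k + 3, lambda j: j + 3))
# M^{ad} under the stated assumption: degree k (k <= N) and j + 3 (j <= N - 3).
print(non_exact_entries(N, N, N - 3, lambda k: k, lambda j: j + 3))
```

For \(N=16\) the two calls return \([(12,13),(13,12),(13,13)]\) and \([(15,13),(16,12),(16,13)]\), i.e. \((N-4,N-3),(N-3,N-4),(N-3,N-3)\) and \((N-1,N-3),(N,N-4),(N,N-3)\).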

C DLT and IDLT

We briefly recall the definitions of the DLT and the IDLT; for more details we refer the reader to [14]. Given \(N+1\) values \(\tilde{u}_0, \tilde{u}_1,\dots ,\tilde{u}_{N}\), the discrete Legendre transform is defined by
\[u_k = \sum _{j=0}^{N} \tilde{u}_j\, L_j(y_k),\qquad k=0,\dots ,N,\]

where \(y_k\) are the roots of the Legendre polynomial \(L_{N+1}(y)\). The inverse discrete Legendre transform computes \(\tilde{u}_0,\tilde{u}_1,\dots ,\tilde{u}_N\) for given \(u_0,u_1,\dots ,u_N\). It takes the form
\[\tilde{u}_j = \frac{2j+1}{2}\sum _{k=0}^{N} w_k\, u_k\, L_j(y_k),\qquad j=0,\dots ,N,\]

where \(w_k\), \(k=0,\dots ,N\), are the Gauss–Legendre quadrature weights. (Note that \(y_k\) and \(w_k\) are the same collocation points and weights as in the advection inner product (43).) Both the DLT and the IDLT can be computed in \(\mathcal O(N(\log N)^2/\log \log N)\) operations, see [14].
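The sketch below is a direct \(\mathcal O(N^2)\) transcription of the two transforms. It assumes the DLT evaluates \(u_k=\sum _j \tilde{u}_j L_j(y_k)\) and the IDLT computes \(\tilde{u}_j=\frac{2j+1}{2}\sum _k w_k u_k L_j(y_k)\). It uses NumPy's `numpy.polynomial.legendre.leggauss` for the nodes and weights and `legvander` for the matrix \(L_j(y_k)\). This is not the fast algorithm of [14]; it only illustrates the formulas. The round trip is exact up to rounding, because the \((N+1)\)-point Gauss rule integrates polynomials of degree up to \(2N+1\) exactly. The function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def gauss_points(N):
    """Roots y_k of L_{N+1} and Gauss-Legendre weights w_k, k = 0..N."""
    return leg.leggauss(N + 1)


def dlt(u_tilde, y):
    """Coefficients -> values: u_k = sum_j u_tilde_j L_j(y_k)."""
    V = leg.legvander(y, len(u_tilde) - 1)  # V[k, j] = L_j(y_k)
    return V @ u_tilde


def idlt(u, y, w):
    """Values -> coefficients: u_tilde_j = (2j+1)/2 * sum_k w_k u_k L_j(y_k)."""
    N = len(u) - 1
    V = leg.legvander(y, N)
    j = np.arange(N + 1)
    return (2 * j + 1) / 2 * (V.T @ (w * u))


N = 8
y, w = gauss_points(N)
u_tilde = np.linspace(1.0, -1.0, N + 1)  # arbitrary test coefficients
print(np.allclose(idlt(dlt(u_tilde, y), y, w), u_tilde))  # True
```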

For our application, let

Clearly, to compute \(\tilde{\mathbf {s}}^{*}\) (and \(\tilde{\mathbf {s}}^{m+1/2}\)) we need to reconstruct \(\partial _x u^*\) (and \(\partial _x u^{m+1/2}\)) starting from the frequency coefficients \(\tilde{\mathbf {u}}^{*,a}\) (and \(\tilde{\mathbf {u}}^{m+1/2,a}\)). This can be done as follows. Note that we have

The first relation is obtained by simply differentiating (49) with respect to x. The second relation follows from the fact that \(\partial _x u^*\) is a polynomial of degree at most N and thus belongs to \(V^{a}_N\). Therefore, it can be written as a linear combination of the basis functions of \(V^a_N\). Taking the advection inner product of both relations with \(\psi ^a_j - \psi ^a_{j+2}\) for \(j=0,\dots ,N\) gives

The choice of the test functions is motivated by the fact that the resulting matrices

are both banded matrices with bandwidth two and three, respectively. In matrix form, (63) reads

from which we obtain \(\widetilde{\partial _x\mathbf {u}^{*}}^{a}\) in \(\mathcal {O}(N)\) operations. A similar procedure applies to the frequency coefficients of \(\partial _x u^{m+1/2}\). Finally, \(\partial _x u^*\) and \(\partial _x u^{m+1/2}\) are obtained by applying the DLT to the corresponding frequency coefficients. In summary, we have

Thus, (40) can be solved in \(\mathcal O(N(\log N)^2/\log \log N)\) operations for a general function \(g^*\).
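The procedure above obtains the coefficients of \(\partial _x u^*\) by solving two banded systems in the advection basis. The sketch below uses a simpler stand-in technique with the same \(\mathcal O(N)\) cost. It differentiates a plain Legendre expansion \(u=\sum _j a_j L_j\) with the classical backward recurrence \(b_{m-1}=(2m-1)\,(a_m+b_{m+1}/(2m+3))\), which follows from \((2m+1)L_m=L'_{m+1}-L'_{m-1}\). It then evaluates the result at the Gauss nodes, the DLT step. This is not the authors' procedure, the advection basis functions are not reproduced, and the function name is hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def legendre_derivative(a):
    """Legendre coefficients of u' given those of u = sum_j a_j L_j, in O(N) work.

    Backward recurrence b_{m-1} = (2m - 1) * (a_m + b_{m+1} / (2m + 3)),
    started from b_N = b_{N+1} = 0.
    """
    N = len(a) - 1
    b = np.zeros(N + 2)  # two trailing zeros serve as b_N and b_{N+1}
    for m in range(N, 0, -1):
        b[m - 1] = (2 * m - 1) * (a[m] + b[m + 1] / (2 * m + 3))
    return b[:N]


a = np.array([0.3, -1.0, 0.5, 2.0, 0.25])
y, _ = leg.leggauss(len(a))                  # Gauss nodes, roots of L_{N+1}
du = leg.legval(y, legendre_derivative(a))   # values of u' at the nodes
print(np.allclose(legendre_derivative(a), leg.legder(a)))  # True
```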

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Einkemmer, L., Ostermann, A. & Residori, M. A pseudo-spectral Strang splitting method for linear dispersive problems with transparent boundary conditions. Numer. Math. 150, 105–135 (2022). https://doi.org/10.1007/s00211-021-01252-1

DOI: https://doi.org/10.1007/s00211-021-01252-1