Abstract

This paper deals with testing for nondegenerate normality of a d-variate random vector X based on a random sample \(X_1,\ldots ,X_n\) of X. The rationale of the test is that the characteristic function \(\psi (t) = \exp (-\Vert t\Vert ^2/2)\) of the standard normal distribution in \({\mathbb {R}}^d\) is the only solution of the partial differential equation \(\varDelta f(t) = (\Vert t\Vert ^2-d)f(t)\), \(t \in {\mathbb {R}}^d\), subject to the condition \(f(0) = 1\), where \(\varDelta \) denotes the Laplace operator. In contrast to a recent approach that bases a test for multivariate normality on the difference \(\varDelta \psi _n(t)-(\Vert t\Vert ^2-d)\psi (t)\), where \(\psi _n(t)\) is the empirical characteristic function of suitably scaled residuals of \(X_1,\ldots ,X_n\), we consider a weighted \(L^2\)-statistic that employs \(\varDelta \psi _n(t) -(\Vert t\Vert ^2-d)\psi _n(t)\). We derive asymptotic properties of the test under the null hypothesis and alternatives. The test is affine invariant and consistent against general alternatives, and it exhibits high power when compared with prominent competitors. The main differences between the procedures are theoretically driven by different covariance kernels of the Gaussian limiting processes, which have a considerable effect on robustness with respect to the choice of the tuning parameter in the weight function.

1 Introduction

Useful tools for assessing the fit of data to a family of distributions are empirical counterparts of distributional characterizations. Such characterizations often emerge as solutions of an equation of the type \(\rho (\mathrm{D}f,f)=0\). Here, \(\rho (\cdot ,\cdot )\) is some distance on a suitable function space, while f may be either the moment generating function, the Laplace transform, or the characteristic function. Moreover, D denotes a differential operator, i.e., this operator can be regarded as ordinary differentiation if f is a function of only one variable or, for instance, the Laplace operator in the multivariate case. Such (partial) differential equations have been used to test for multivariate normality, see Dörr et al. (2020) and Henze and Visagie (2020), exponentiality, see Baringhaus and Henze (1991a), the gamma distribution, see Henze et al. (2012), the inverse Gaussian distribution, see Henze and Klar (2002), the beta distribution, see Riad and Mabood (2018), the univariate and multivariate skew-normal distribution, see Meintanis (2010) and Meintanis and Hlávka (2010), and the Rayleigh distribution, see Meintanis and Iliopoulos (2003). In all these references, the authors propose a goodness-of-fit test by plugging in an empirical counterpart \(f_n\) for f into \(\rho (\mathrm{D}f,f)\), and by measuring the deviation from the zero function in a suitable function space. If, under the hypothesis to be tested, the function f has a closed form and is known, there are two options for obtaining an empirical counterpart to the characterizing equation, namely \(\rho (\mathrm{D}f_n,f)=0\), or \(\rho (\mathrm{D}f_n,f_n)=0\). To the best of our knowledge, the effect of applying both options to the same testing problem, and the consequences for the performance of the resulting test statistics, have not yet been studied, neither from a theoretical point of view nor in a simulation study.
In this spirit, the purpose of this paper is to investigate the effect on the power of a recent test for multivariate normality based on a characterization of the multivariate normal law in connection with the harmonic oscillator, see Dörr et al. (2020).

In what follows, let \(d\ge 1\) be a fixed integer, and let \(X,X_1,\ldots ,X_n,\ldots \) be independent and identically distributed (i.i.d.) d-dimensional random (column) vectors, that are defined on a common probability space \((\varOmega ,{{\mathcal {A}}}, {\mathbb {P}})\). We write \({\mathbb {P}}^X\) for the distribution of X, and we denote the d-variate normal law with expectation \(\mu \) and nonsingular covariance matrix \(\varSigma \) by N\(_d(\mu ,\varSigma )\). Moreover, \({\mathcal {N}}_d=\{\mathrm{N}_d(\mu ,\varSigma ):\mu \in {\mathbb {R}}^d,\,\varSigma \in {\mathbb {R}}^{d\times d}\; \text{ positive } \text{ definite }\} \) stands for the class of all nondegenerate d-variate normal distributions. To check the assumption of multivariate normality means to test the hypothesis

$$\begin{aligned} H_0: {\mathbb {P}}^X \in {\mathcal {N}}_d \end{aligned}$$(1)

against general alternatives. The starting point of this paper is Theorem 1 of Dörr et al. (2020). To state this result, let \(\varDelta \) denote the Laplace operator, \(\Vert \cdot \Vert \) the Euclidean norm in \({\mathbb {R}}^d\), and I\(_d\) the identity matrix of size d. Then Theorem 1 of Dörr et al. (2020) states that the characteristic function \(\psi (t)=\exp \left( -\Vert t\Vert ^2/2\right) \), \(t\in {\mathbb {R}}^d,\) of the d-variate standard normal distribution N\(_d(0,\mathrm{I}_d)\) is the unique solution of the partial differential equation

$$\begin{aligned} \varDelta f(t) = \left( \Vert t\Vert ^2-d\right) f(t), \quad t \in {\mathbb {R}}^d, \qquad f(0) = 1. \end{aligned}$$(2)

Writing \(\overline{X}_n= n^{-1} \sum _{j=1}^nX_j\) for the sample mean and \(S_n= n^{-1} \sum _{j=1}^n(X_j-\overline{X}_n)(X_j -\overline{X}_n)^{\top }\) for the sample covariance matrix of \(X_1,\ldots ,X_n\), respectively, where the superscript \(\top \) means transposition, the standing tacit assumptions that \({\mathbb {P}}^X\) is absolutely continuous with respect to Lebesgue measure and \(n \ge d+1\) guarantee that \(S_n\) is invertible almost surely, see Eaton and Perlman (1973). The test statistic is based on the so-called scaled residuals

$$\begin{aligned} Y_{n,j} = S_n^{-1/2}\left( X_j - \overline{X}_n\right) , \quad j=1,\ldots ,n. \end{aligned}$$

Here, \(S_n^{-1/2}\) is the unique symmetric positive definite square root of \(S_n^{-1}\). Letting \(\psi _n(t)=n^{-1}\sum _{j=1}^n \exp (\mathrm{{i}}t^{\top } Y_{n,j})\), \(t\in {\mathbb {R}}^d\), denote the empirical characteristic function (ecf) of \(Y_{n,1},\ldots ,Y_{n,n}\), the test statistic proposed in Dörr et al. (2020) is

$$\begin{aligned} T_{n,a} = n \int _{{\mathbb {R}}^d} \left| \varDelta \psi _n(t) - \left( \Vert t\Vert ^2-d\right) \psi (t)\right| ^2 w_a(t)\, \mathrm{d}t, \end{aligned}$$(3)

where

$$\begin{aligned} w_a(t) = \exp \left( -a\Vert t\Vert ^2\right) , \quad t \in {\mathbb {R}}^d, \end{aligned}$$(4)

and \(a>0\) is a fixed constant. The statistic \(T_{n,a}\) has a nice closed-form expression as a function of \(Y_{n,i}^{\top } Y_{n,j}\), \(i,j \in \{1,\ldots ,n\}\) (see display (10)-(12) of Dörr et al. (2020)) and is thus invariant with respect to full-rank affine transformations of \(X_1,\ldots ,X_n\). Theorems 2 and 3 of Dörr et al. (2020) show that, elementwise on the underlying probability space, suitably rescaled versions of \(T_{n,a}\) have limits as \(a \rightarrow \infty \) and \(a \rightarrow 0\), respectively. In the former case, the limit is a measure of multivariate skewness, introduced in Móri et al. (1993), whereas Mardia’s time-honored measure of multivariate kurtosis (see Mardia 1970) shows up as \(a \rightarrow 0\). As \(n \rightarrow \infty \), the statistic \(T_{n,a}\) has a nondegenerate limit null distribution (Theorem 7 of Dörr et al. 2020), and a test of (1) that rejects \(H_0\) for large values of \(T_{n,a}\) is able to detect alternatives that approach \(H_0\) at the rate \(n^{-1/2}\), irrespective of the dimension d (Corollary 10 of Dörr et al. 2020). Under an alternative distribution satisfying \({\mathbb {E}}\Vert X\Vert ^4 <\infty \), \(n^{-1}T_{n,a}\) converges almost surely to a measure of distance \(\Delta _a\) between \({\mathbb {P}}^X\) and the class \({{\mathcal {N}}}_d\) (Theorem 11 of Dörr et al. 2020). As a consequence, the test for multinormality based on \(T_{n,a}\) is consistent against any such alternative. By Theorem 14 of Dörr et al. (2020), the sequence \(\sqrt{n}(n^{-1}T_{n,a} -{\Delta }_a)\) converges in distribution to a centered normal law. Since the variance of this limit distribution can be estimated consistently from \(X_1,\ldots ,X_n\) (Theorem 16 of Dörr et al. 2020), we have an asymptotic confidence interval for \(\Delta _a\).
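The scaled residuals and the empirical characteristic function introduced above can be computed in a few lines. The following is a minimal Python sketch, not the implementation used by the authors; the function names `scaled_residuals` and `ecf` and the test data are hypothetical. It uses the symmetric eigendecomposition of \(S_n\) to obtain the symmetric positive definite square root of \(S_n^{-1}\).

```python
import numpy as np


def scaled_residuals(x):
    """Return the (n, d) array whose rows are Y_{n,j} = S_n^{-1/2}(X_j - mean)."""
    x = np.asarray(x, dtype=float)
    n = x.shape[0]
    centered = x - x.mean(axis=0)
    s_n = centered.T @ centered / n            # sample covariance, divisor n
    eigval, eigvec = np.linalg.eigh(s_n)       # S_n is symmetric positive definite
    # Symmetric positive definite square root of S_n^{-1}.
    s_inv_sqrt = (eigvec * eigval ** -0.5) @ eigvec.T
    return centered @ s_inv_sqrt               # valid because s_inv_sqrt is symmetric


def ecf(y, t):
    """Empirical characteristic function psi_n(t) of the rows of y."""
    return np.mean(np.exp(1j * (y @ np.asarray(t, dtype=float))))


# Illustrative data: an affine image of standard normal vectors (assumption).
rng = np.random.default_rng(0)
mixing = np.array([[2.0, 0.0, 0.0], [1.0, 1.0, 0.0], [0.5, -1.0, 3.0]])
x = rng.standard_normal((50, 3)) @ mixing + np.array([1.0, -2.0, 0.5])
y = scaled_residuals(x)
```

By construction, the residuals have sample mean zero and sample covariance matrix \(\mathrm{I}_d\), so that \(\sum _{j=1}^n \Vert Y_{n,j}\Vert ^2 = nd\), a fact used repeatedly in the paper.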

The novel approach taken in this paper is to replace both of the functions f occurring in (2) by the ecf \(\psi _n\). Since, under \(H_0\), \(\varDelta \psi _n(t)\) and \((\Vert t\Vert ^2 -d)\psi _n(t)\) should be close to each other for large n, it is tempting to see what happens if, instead of \(T_{n,a}\) defined in (3), we base a test of \(H_0\) on the weighted \(L^2\)-statistic

$$\begin{aligned} U_{n,a} = n \int _{{\mathbb {R}}^d} \left| \varDelta \psi _n(t) - \left( \Vert t\Vert ^2-d\right) \psi _n(t)\right| ^2 w_a(t)\, \mathrm{d}t \end{aligned}$$(5)

and reject \(H_0\) for large values of \(U_{n,a}\).

Since \(\varDelta \psi _n(t)=- n^{-1} \sum _{j=1}^n\Vert Y_{n,j} \Vert ^2\exp (\mathrm{{i}}t^{\top } Y_{n,j})\), the relation

valid for \(c \in {\mathbb {R}}^d\) and \(a>0\), and tedious but straightforward calculations yield the representation

which is amenable to computational purposes. Moreover, \(U_{n,a}\) turns out to be affine invariant.
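To illustrate how such a computable representation arises, the sketch below evaluates \(U_{n,a}\) in closed form and checks it against numerical integration for \(d=1\). It is a derivation made here under the assumption \(w_a(t)=\exp (-a\Vert t\Vert ^2)\), and it is not claimed to coincide term by term with the layout of the representation given in the paper. The idea: with \(A_j = \Vert Y_{n,j}\Vert ^2-d\), the squared modulus equals \(n^{-2}\sum _{j,k}(A_j+\Vert t\Vert ^2)(A_k+\Vert t\Vert ^2)\cos (t^{\top }(Y_{n,j}-Y_{n,k}))\), and the integrals of \(\cos (t^{\top }c)\), \(\Vert t\Vert ^2\cos (t^{\top }c)\) and \(\Vert t\Vert ^4\cos (t^{\top }c)\) against the weight follow from the Gaussian integral \((\pi /a)^{d/2}\exp (-\Vert c\Vert ^2/(4a))\) by differentiating with respect to a. All function names are hypothetical.

```python
import numpy as np


def u_stat_closed_form(y, a):
    """U_{n,a} from scaled residuals y (shape (n, d)), assuming w_a(t) = exp(-a|t|^2)."""
    y = np.asarray(y, dtype=float)
    n, d = y.shape
    sq = np.sum(y ** 2, axis=1)
    big_a = sq - d                                        # A_j = |Y_j|^2 - d
    q = np.maximum(sq[:, None] + sq[None, :] - 2.0 * (y @ y.T), 0.0)  # |Y_j - Y_k|^2
    i0 = (np.pi / a) ** (d / 2) * np.exp(-q / (4.0 * a))  # integral of cos * w_a
    g = d / (2.0 * a) - q / (4.0 * a ** 2)
    i2 = i0 * g                                           # integral of |t|^2 cos * w_a
    i4 = i0 * (g ** 2 + d / (2.0 * a ** 2) - q / (2.0 * a ** 3))  # |t|^4 cos * w_a
    terms = (big_a[:, None] * big_a[None, :] * i0
             + (big_a[:, None] + big_a[None, :]) * i2 + i4)
    return terms.sum() / n


def u_stat_quadrature_1d(y, a, half_width=12.0, m=24001):
    """Brute-force check for d = 1: Riemann sum of the defining integral."""
    t = np.linspace(-half_width, half_width, m)
    dt = t[1] - t[0]
    phase = np.exp(1j * np.outer(t, y))                   # shape (m, n)
    diff = -np.mean((y ** 2 + t[:, None] ** 2 - 1.0) * phase, axis=1)
    return len(y) * np.sum(np.abs(diff) ** 2 * np.exp(-a * t ** 2)) * dt


rng = np.random.default_rng(1)
x = rng.standard_normal(30)
y = (x - x.mean()) / x.std()          # scaled residuals for d = 1 (divisor n)
closed = u_stat_closed_form(y[:, None], a=0.5)
numeric = u_stat_quadrature_1d(y, a=0.5)
```

Since the closed form depends on the data only through the inner products \(Y_{n,j}^{\top }Y_{n,k}\), affine invariance is visible directly from the code.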

The rest of the paper is organized as follows. In Sect. 2, we derive the elementwise limits of \(U_{n,a}\), after suitable transformations, as \(a \rightarrow 0\) and \(a \rightarrow \infty \). Section 3 deals with the limit null distribution of \(U_{n,a}\) as \(n \rightarrow \infty \). In Sect. 4, we show that, under the condition \({\mathbb {E}}\Vert X\Vert ^4 < \infty \), \(n^{-1}U_{n,a}\) has an almost sure limit as \(n \rightarrow \infty \) under a fixed alternative to normality. As a consequence, the test based on \(U_{n,a}\) is consistent against any such alternative. Moreover, we prove that the asymptotic distribution of \(U_{n,a}\), after a suitable transformation, is a centered normal distribution. In Sect. 5, we present the results of a simulation study that compares the power of the test for normality based on \(U_{n,a}\) with that of prominent competitors. Section 6 shows a real data example, and Sect. 7 contains some conclusions and gives an outlook on potential further work.

2 The limits \(a\rightarrow 0\) and \(a \rightarrow \infty \)

This section considers the (elementwise) limits of \(U_{n,a}\) as \(a \rightarrow 0\) and \(a \rightarrow \infty \). The results shed some light on the role of the parameter a that figures in the weight function \(w_a\) in (4). Notice that, from the definition of \(U_{n,a}\) given in (5), we have \(\lim _{a\rightarrow \infty } U_{n,a} =0\) and \(\lim _{a\rightarrow 0} U_{n,a} =\infty \), since \(\int \left| \varDelta \psi _n(t) -\left( \Vert t\Vert ^2-d\right) \psi _n(t)\right| ^2\, \text{ d }t=\infty \). Suitable transformations of \(U_{n,a}\), however, yield well-known limit statistics as \(a \rightarrow 0\) and \(a \rightarrow \infty \).

Theorem 1

Elementwise on the underlying probability space, we have

Proof

Starting with (7), \((a/\pi )^{d/2} U_{n,a}\) is, apart from the factor 1/n, a double sum over j and k. Since each summand for which \(j\ne k\) vanishes asymptotically as \(a \rightarrow 0\), we have

as \(a \rightarrow 0\), and the result follows from the fact that \(\sum _{j=1}^n \Vert Y_{n,j}\Vert ^2 = nd\). \(\square \)

Theorem 1 means that a suitable affine transformation of \(U_{n,a}\) has a limit as \(a \rightarrow 0\), and that this limit is — apart from the additive constant \(d^2\) — the time-honored measure of multivariate kurtosis in the sense of Mardia, see Mardia (1970). The same measure — without the subtrahend \(d^2\) — shows up as a limit of \((a/\pi )^{d/2} T_{n,a}\) as \(a \rightarrow 0\), see Theorem 3 of Dörr et al. (2020). The next result shows that \(U_{n,a}\) and \(T_{n,a}\), after multiplication with the same scaling factor, converge to the same limit as \(a \rightarrow \infty \), cf. Theorem 2 of Dörr et al. (2020).

Theorem 2

Elementwise on the underlying probability space, we have

Proof

The proof follows the lines of the Proof of Theorem 2 of Dörr et al. (2020) and is thus omitted. \(\square \)

The limit figuring on the right hand side of (9) is a measure of multivariate skewness, introduced by Móri et al. (1993). Theorems 1 and 2 show that the class of tests for \(H_0\) is in a certain sense “closed at the boundaries” \(a\rightarrow 0\) and \(a \rightarrow \infty \). However, in contrast to the test for multivariate normality based on \(U_{n,a}\) for fixed \(a \in (0,\infty )\), tests for \(H_0\) based on measures of multivariate skewness and kurtosis lack consistency against general alternatives, see, e.g., Baringhaus and Henze (1991b, 1992) and Henze (1994).
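The two boundary statistics are easy to compute from the scaled residuals. The sketch below uses the standard definitions, namely Mardia's kurtosis \(n^{-1}\sum _{j}\Vert Y_{n,j}\Vert ^4\) and the skewness measure \(\Vert n^{-1}\sum _{j}\Vert Y_{n,j}\Vert ^2 Y_{n,j}\Vert ^2\) of Móri, Rohatgi and Székely; the function names and the tiny example are hypothetical, and the input is assumed to consist of scaled residuals already.

```python
import numpy as np


def mardia_kurtosis(y):
    """Mardia's multivariate kurtosis: mean of |Y_j|^4 over the scaled residuals."""
    y = np.asarray(y, dtype=float)
    return float(np.mean(np.sum(y ** 2, axis=1) ** 2))


def mrs_skewness(y):
    """Skewness in the sense of Mori, Rohatgi and Szekely: |mean(|Y_j|^2 Y_j)|^2."""
    y = np.asarray(y, dtype=float)
    v = np.mean(np.sum(y ** 2, axis=1)[:, None] * y, axis=0)
    return float(v @ v)


# Hand-checkable example in d = 1: mean 0 and variance 1 (divisor n).
y = np.array([[-1.0], [-1.0], [2.0]]) / np.sqrt(2.0)
kurt = mardia_kurtosis(y)   # (0.25 + 0.25 + 4) / 3 = 1.5
skew = mrs_skewness(y)      # (1 / sqrt(2))^2 = 0.5
```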

3 The limit null distribution of \(U_{n,a}\)

In this section, we assume that the distribution of X is some nondegenerate d-variate normal law. In view of affine invariance, we may further assume that \({\mathbb {E}}(X) = 0\) and \({\mathbb {E}}(XX^{\top }) = \mathrm{I}_d\). By symmetry, it is readily seen that \(U_{n,a}\) defined in (5) takes the form

where

In view of (10), our setting for asymptotics will be the separable Hilbert space \({\mathbb {H}}\) of (equivalence classes of) measurable functions \(f: {\mathbb {R}}^d \rightarrow {\mathbb {R}}\) that satisfy \(\int f^2(t) w_a(t) \, \mathrm{d}t < \infty \). Here and in the sequel, each unspecified integral will be over \({\mathbb {R}}^d\). The scalar product and the norm in \({\mathbb {H}}\) are given by \(\langle f,g\rangle _{\mathbb {H}} = \int f(t)g(t) w_a(t)\, \mathrm{d}t\) and \(\Vert f\Vert _{\mathbb {H}} = \langle f,f\rangle _{\mathbb {H}}^{1/2}\), respectively. Notice that, in this notation, (10) takes the form \(U_{n,a} = \Vert S_n\Vert ^2_{\mathbb {H}}\), where \(S_n\) is given in (11).

Putting \(\psi (t) = \exp (-\Vert t\Vert ^2/2)\) as before, and writing \({\mathop {\longrightarrow }\limits ^{{\mathcal {D}}}}\) for convergence in distribution, the main result of this section is as follows.

Theorem 3

If X has some nondegenerate normal distribution, we have the following:

a) There is a centered Gaussian random element \({{\mathcal {S}}}\) of \({\mathbb {H}}\) having covariance kernel

$$\begin{aligned} K(s, t)= & {} \psi (s-t)\Big \{ 2d + \Vert s\Vert ^2 \Vert t\Vert ^2 - 2 s^{\top } t \Vert s -t\Vert ^2 -4 \Vert s-t\Vert ^2\Big \}\\&+ 2 \psi (s)\psi (t) \Big \{ 2\Vert s\Vert ^2 + 2 \Vert t\Vert ^2 - d - 2 s^{\top } t -4 (s^{\top } t)^2\Big \}, \quad s,t \in {\mathbb {R}}^d, \end{aligned}$$

such that, with \(S_n\) defined in (11), \(S_n {\mathop {\longrightarrow }\limits ^{{\mathcal {D}}}}{{\mathcal {S}}}\) as \(n \rightarrow \infty \).

b) We have

$$\begin{aligned} U_{n,a} {\mathop {\longrightarrow }\limits ^{{\mathcal {D}}}}\int {{\mathcal {S}}}^2(t) w_a(t) \, \mathrm{d}t \quad \text {as } n \rightarrow \infty . \end{aligned}$$(12)

Proof

Since the proof is analogous to the proof of Proposition 5 of Dörr et al. (2020), it will only be sketched. If \(S_n^0(t)\) stands for the modification of \(S_n(t)\) that results if we replace \(Y_{n,j}\) with \(X_j\), then a Hilbert space central limit theorem holds for \(S_n^0\), since the summands of \(S_n^0\) are square-integrable centered random elements of \({\mathbb {H}}\). The idea is thus to find a random element \(\widetilde{S}_n\) of \({\mathbb {H}}\) such that \(\widetilde{S}_n {\mathop {\longrightarrow }\limits ^{{\mathcal {D}}}}{{\mathcal {S}}}\) and \(\Vert S_n -\widetilde{S}_n \Vert _{\mathbb {H}} = o_{\mathbb {P}}(1)\). Putting \(Y_{n,j} = X_j +\varDelta _{n,j}\) in (11) and using the fact that \(\cos (t^{\top } Y_{n,j}) = \cos (t^{\top } X_j) -\sin (\varTheta _j) t^{\top } \varDelta _{n,j}\), \(\sin (t^{\top } Y_{n,j}) =\sin (t^{\top } X_j) + \cos (\varGamma _j) t^{\top } \varDelta _{n,j}\), where \(\varTheta _j, \varGamma _j\) depend on \(X_1,\ldots ,X_n\) and t and satisfy \(|\varTheta _j - t^{\top } X_j| \le |t^{\top } \varDelta _{n,j}|\), \(|\varGamma _j - t^{\top } X_j| \le |t^{\top } \varDelta _{n,j}|\), some algebra and Proposition 18 of Dörr et al. (2020) show that a choice of \(\widetilde{S}_n\) is given by

where

Tedious calculations then show that the covariance kernel of \({{\mathcal {S}}}\), which is \({\mathbb {E}}[h(X,s)h(X,t)]\), is equal to K(s, t) given above. \(\square \)

Notice that the covariance kernel figuring in Theorem 3 is much shorter than the corresponding kernel given in Theorem 7 in Dörr et al. (2020) for the related test statistic \(T_{n,a}\) defined in (3). Thus, the double estimation leads to a simpler covariance kernel. Let \(U_{\infty ,a}\) denote a random variable having the limit distribution of \(U_{n,a}\) given in (12). Since the distribution of \(U_{\infty ,a}\) is that of \(\Vert {{\mathcal {S}}}\Vert _{\mathbb {H}}^2\), where \({{\mathcal {S}}}\) is the Gaussian random element of \({\mathbb {H}}\) figuring in Theorem 3, it is the distribution of \(\sum _{j \ge 1} \lambda _jN_j^2\), where \(N_1,N_2, \ldots \) is a sequence of i.i.d. standard normal random variables, and \(\lambda _1,\lambda _2, \ldots \) are the positive eigenvalues corresponding to normalized eigenfunctions of the integral operator \(f \mapsto Af\) on \({\mathbb {H}}\), where \((Af)(s) = \int K(s,t) f(t) \, w_a(t) \, \mathrm{d}t\). It seems to be hopeless to obtain closed-form expressions of these eigenvalues. However, in view of Fubini’s theorem, we have

and thus straightforward manipulations of integrals yield the following result.

Theorem 4

Putting \(\gamma = (a/(a+1))^{d/2}\), we have

From this result, one readily obtains

It is interesting to compare this limit relation with (8). If the underlying distribution is standard normal, i.e., if \({\mathbb {P}}^X = \mathrm{N}_d(0,\mathrm{I}_d)\), we have \({\mathbb {E}}\Vert X\Vert ^4 = 2d + d^2\). Now, writing \(Y_{n,j} = X_j +\varDelta _{n,j}\) and using Proposition 18 of Dörr et al. (2020), the right hand side of (8) turns out to converge in probability to \({\mathbb {E}}\Vert X\Vert ^4 - d^2\) as \(n \rightarrow \infty \), and this expectation is the right hand side of (13). Regarding the case \(a \rightarrow \infty \), the representation of \({\mathbb {E}}[U_{\infty ,a}]\) easily yields

This result corresponds to (9), since, by Theorem 2.2 of Henze (1997), the right hand side of (9), after multiplication with n, converges in distribution to \(2(d+2)\chi ^2_d\) as \(n \rightarrow \infty \) if \({\mathbb {P}}^X = \mathrm{N}_d(0,\mathrm{I}_d)\). Here, \(\chi ^2_d\) is a random variable having a chi square distribution with d degrees of freedom.
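Although closed-form eigenvalues are out of reach, they can be approximated numerically. The following sketch does this for \(d=1\) by a Nyström-type discretization of the integral operator with the kernel K of Theorem 3, and then simulates \(\sum _j \lambda _j N_j^2\). This is a technique substituted here for illustration; it is not the Monte Carlo method of Dörr et al. (2020). The weight \(w_a(t)=\exp (-at^2)\), the grid parameters and all function names are assumptions.

```python
import numpy as np


def kernel_1d(s, t):
    """Covariance kernel K(s, t) of Theorem 3, specialised to d = 1."""
    def psi(u):
        return np.exp(-u ** 2 / 2.0)
    diff2 = (s - t) ** 2
    first = psi(s - t) * (2.0 + s ** 2 * t ** 2 - 2.0 * s * t * diff2 - 4.0 * diff2)
    second = 2.0 * psi(s) * psi(t) * (
        2.0 * s ** 2 + 2.0 * t ** 2 - 1.0 - 2.0 * s * t - 4.0 * (s * t) ** 2)
    return first + second


def approx_eigenvalues(a, half_width=8.0, m=801):
    """Eigenvalues of the discretised operator f -> int K(., t) f(t) w_a(t) dt."""
    t = np.linspace(-half_width, half_width, m)
    dt = t[1] - t[0]
    w = np.sqrt(np.exp(-a * t ** 2) * dt)      # square roots of quadrature weights
    mat = w[:, None] * kernel_1d(t[:, None], t[None, :]) * w[None, :]
    return np.linalg.eigvalsh(mat)[::-1]       # sorted in decreasing order


def simulate_limit(eigenvalues, reps, rng, keep=30):
    """Draws of sum_j lambda_j N_j^2 using the `keep` largest eigenvalues."""
    lam = np.clip(eigenvalues[:keep], 0.0, None)
    normals = rng.standard_normal((reps, lam.size))
    return (normals ** 2) @ lam


lams = approx_eigenvalues(a=1.0)
draws = simulate_limit(lams, reps=20000, rng=np.random.default_rng(2))
q95 = np.quantile(draws, 0.95)                 # approximate 95% quantile, d = 1, a = 1
```

The sum of the approximate eigenvalues equals a Riemann sum of \(\int K(t,t)\, w_a(t)\, \mathrm{d}t\), which is the expectation of \(U_{\infty ,a}\). For \(d=1\) and \(a=1\), elementary Gaussian integrals give \(\sqrt{\pi }\,(2.75 - 2.5/\sqrt{2}) \approx 1.741\), which serves as a sanity check.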

4 Limits of \(U_{n,a}\) under alternatives

In this section we assume that \(X,X_1,X_2, \ldots \) are i.i.d., and that \({\mathbb {E}}\Vert X\Vert ^4 < \infty \). Moreover, let \({\mathbb {E}}(X) =0\) and \({\mathbb {E}}(XX^{\top }) = \mathrm{I}_d\) in view of affine invariance, and recall the Laplace operator \(\varDelta \) from Sect. 1. The characteristic function of X will be denoted by \(\psi (t) = {\mathbb {E}}[\exp (\mathrm{i}t^{\top } X)]\), \(t \in {\mathbb {R}}^d\). Letting

we first present an almost sure limit for \(n^{-1} U_{n,a}\), which is the same limit as for \(T_{n,a}\), see Theorem 11 in Dörr et al. (2020).

Theorem 5

We have

where

Proof

In what follows, we write \(\mathrm{CS}^\pm (\xi ) = \cos (\xi ) \pm \sin (\xi )\), and we put \(Y_j = Y_{n,j}\), \(\varDelta _j = \varDelta _{n,j}\) for the sake of brevity. From (10) and (11), we have \(n^{-1}U_{n,a} = \Vert V_n +W_n\Vert _{\mathbb {H}}^2\), where

Putting

the strong law of large numbers in Hilbert spaces (see, e.g., Theorem 7.7.2 of Hsing and Eubank (2015)) yields \(\Vert V_n^0+ W_n^0\Vert _{\mathbb {H}}^2 {\mathop {\longrightarrow }\limits ^{\mathrm{a.s.}}}\varGamma _a\) as \(n \rightarrow \infty \), and thus it suffices to prove \(\Vert V_n+ W_n\Vert _{\mathbb {H}}^2 - \Vert V_n^0+ W_n^0\Vert _{\mathbb {H}}^2 {\mathop {\longrightarrow }\limits ^{\mathrm{a.s.}}}0\). From

the Cauchy–Schwarz inequality, the fact that \(\max (|W_n(t)|,|W_n^0(t)|) \le 2(d+\Vert t\Vert ^2)\), \(|V_n(t)| \le 2d\), \(|V_n^0(t)| \le 2n^{-1}\sum _{j=1}^n \Vert X_j\Vert ^2\) and Minkowski’s inequality, it suffices to prove \(\Vert V_n-V_n^0\Vert _{\mathbb {H}} {\mathop {\longrightarrow }\limits ^{\mathrm{a.s.}}}0\) and \(\Vert W_n - W_n^0\Vert _{\mathbb {H}} {\mathop {\longrightarrow }\limits ^{\mathrm{a.s.}}}0\) as \(n \rightarrow \infty \). As for \(W_n-W_n^0\), the inequalities \(|\cos (t^{\top } Y_j)- \cos (t^{\top } X_j)| \le \Vert t\Vert \, \Vert \varDelta _j\Vert \), \(|\sin (t^{\top } Y_j)- \sin (t^{\top } X_j)| \le \Vert t\Vert \, \Vert \varDelta _j\Vert \) and the Cauchy–Schwarz inequality yield \(|W_n(t)-W_n^0(t)| \le (\Vert t\Vert ^2 + d) 2 \Vert t\Vert (n^{-1}\sum _{j=1}^n \Vert \varDelta _j\Vert ^2)^{1/2}\). In view of Proposition 18 b) of Dörr et al. (2020), we have \(\Vert W_n - W_n^0\Vert _{\mathbb {H}} {\mathop {\longrightarrow }\limits ^{\mathrm{a.s.}}}0\). Regarding \(V_n-V_n^0\), we decompose this difference according to

The squared norm in \({\mathbb {H}}\) of the second summand on the right hand side converges to zero almost surely, see the treatment of \(U_{n,1}\) in the Proof of Theorem 11 of Dörr et al. (2020). The same holds for the first summand, since its modulus is bounded from above by \(4\Vert t\Vert n^{-1}\sum _{j=1}^n \Vert \varDelta _j\Vert +2n^{-1}\sum _{j=1}^n \Vert \varDelta _j\Vert ^2\), and the inequality \(n^{-1}\sum _{j=1}^n \Vert \varDelta _j\Vert \le (n^{-1}\sum _{j=1}^n \Vert \varDelta _j\Vert ^2)^{1/2}\), together with Proposition 18 b) of Dörr et al. (2020), yield the assertion. \(\square \)

Since, under the conditions of Theorem 5, \(\varGamma _a\) is strictly positive if the underlying distribution does not belong to \({{\mathcal {N}}}_d\), \(U_{n,a}\) converges almost surely to \(\infty \) under such an alternative, and we have the following result.

Corollary 1

The test that rejects the hypothesis \(H_0\) for large values of \(U_{n,a}\) is consistent against each fixed alternative satisfying \({\mathbb {E}}\Vert X\Vert ^4 < \infty \).

The next result, which corresponds to Theorem 13 of Dörr et al. (2020), shows that the (population) measure of multivariate skewness in the sense of Móri, Rohatgi and Székely emerges as the limit of \(\varGamma _a\), after a suitable scaling, as \(a\rightarrow \infty \).

Theorem 6

Under the condition \({\mathbb {E}}\Vert X\Vert ^6 < \infty \), we have

Proof

By definition,

In what follows, let Y, Z be independent copies of X. Since \(\psi ^+(t)^2 = {\mathbb {E}}[\mathrm{CS}^+(t^{\top } Y)\mathrm{CS}^+(t^{\top } Z)]\), the addition theorems for the cosine and the sine function and symmetry yield

Putting \(c= Y-Z\), display (6) then gives

Likewise, it follows that \(\psi ^+(t)\varDelta \psi ^+(t) =-{\mathbb {E}}[\Vert Y\Vert ^2 \cos (t^{\top }(Y-Z))]\), whence

Finally,

and it follows that

Now, dominated convergence yields

as \(a \rightarrow \infty \). Since \({\mathbb {E}}\Vert Y\Vert ^2 = d ={\mathbb {E}}\Vert Z\Vert ^2\) and \({\mathbb {E}}(Y) = {\mathbb {E}}(Z) =0\), we have

and the assertion follows. \(\square \)

We close this section with a result on the asymptotic normality of \(U_{n,a}\) under fixed alternatives. That such a result holds in principle follows from Theorem 1 of Baringhaus et al. (2017). To state the main idea, write again \(\mathrm{CS}^\pm (\xi ) = \cos (\xi ) \pm \sin (\xi )\) and notice that, by (10), \(U_{n,a} =\Vert {{\mathcal {S}}}_n\Vert _{\mathbb {H}}^2\), where \({{\mathcal {S}}}_n(t)\) is given in (11). Putting

where \({{\mathcal {V}}}_n^*(t) =\sqrt{n}({{\mathcal {S}}}_n^*(t) -z(t))\), \(t \in {\mathbb {R}}^d\). In the sequel, let \(\nabla (f)(t)\) denote the gradient of a differentiable function \(f:{\mathbb {R}}^d \rightarrow {\mathbb {R}}\), evaluated at t, and write \(\mathrm{H}f(t)\) for the Hessian matrix of f at t if f is twice continuously differentiable. By proceeding as in the Proof of Theorem 6 of Dörr et al. (2020), there is a centered Gaussian element \({{\mathcal {V}}}^*\) of \({\mathbb {H}}\) having covariance kernel

where

such that \({{\mathcal {V}}}_n^* {\mathop {\longrightarrow }\limits ^{{\mathcal {D}}}}{{\mathcal {V}}}^*\) as \(n \rightarrow \infty \). In view of (15) and the fact that the distribution of \(2\langle {{\mathcal {V}}}^*,z\rangle _{\mathbb {H}}\) is centered normal, we have the following result.

Theorem 7

Under the standing assumptions stated at the beginning of this section, we have

where

We remark that a consistent estimator of \(\sigma _a^2\) can be obtained by analogy with the reasoning given in Dörr et al. (2020), see Lemma 15, Theorem 16 and the Remark before Section 7 of that paper. Note that the above result differs from that of Theorem 6 in Dörr et al. (2020), since \(h^*(\cdot ,\cdot )\), compared to \(v(\cdot ,\cdot )\) in display (23) of Dörr et al. (2020), has different components. Interestingly and in contrast to the null distribution, the formulas for \(h^*(\cdot ,\cdot )\) are more involved.

5 Simulations

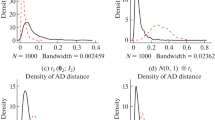

In this section, we present the results of a Monte Carlo simulation study on the finite-sample power of the tests based on \(U_{n,a}\) and \(T_{n,a}\). This study is twofold in the sense that we consider testing for both univariate and multivariate normality, where the latter case is restricted to dimensions \(d\in \{2,3,5\}\). Moreover, the study is designed to match and complement the counterparts in Dörr et al. (2020), Section 7, and Henze and Visagie (2020), since we take exactly the same setting with regard to sample size, nominal level of significance and selected alternative distributions. In this way, we facilitate an easy comparison with existing procedures. Note that the test families \(U_{n,a}\) and \(T_{n,a}\) have been implemented in the R package mnt, see Butsch and Ebner (2020). In the univariate case, we consider sample sizes \(n\in \{20,50,100\}\) and restrict the simulations to \(n\in \{20,50\}\) in the multivariate setting. The nominal level of significance is fixed throughout all simulations to 0.05. We simulated empirical critical values under \(H_0\) for \(d^{-2}\left( a/\pi \right) ^{d/2}U_{n,a}\) with 100,000 replications, see Table 1, and used Table 2 in Dörr et al. (2020) for critical values of \(T_{n,a}\). In each table, the rows entitled ’\(\infty \)’ give approximations of the quantiles of the limit random element \(U_{\infty ,a}=\int {{\mathcal {S}}}^2(t) w_a(t) \, \mathrm{d}t\) in Theorem 3(b). The entries have been calculated by the method presented in Dörr et al. (2020), Section 7, setting \(\ell = 100,000\) and \(m=2000\) for \(d\in \{2,3,5,10\}\). Note that this approach only relies on the structure of the covariance kernel given in Theorem 3(a), the multivariate normal distribution, and the weight function.
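The simulation of empirical critical values described above can be sketched as follows. This is a small-scale Python illustration, not the R package mnt and not the code behind Table 1: the closed form of \(U_{n,a}\) is derived here under the assumption \(w_a(t)=\exp (-a\Vert t\Vert ^2)\), the number of replications is far smaller than the 100,000 used in the paper, and all function names are hypothetical.

```python
import numpy as np


def scaled_residuals(x):
    """Rows are Y_{n,j} = S_n^{-1/2}(X_j - mean), with S_n using divisor n."""
    centered = x - x.mean(axis=0)
    eigval, eigvec = np.linalg.eigh(centered.T @ centered / x.shape[0])
    return centered @ ((eigvec * eigval ** -0.5) @ eigvec.T)


def u_stat(y, a):
    """Closed form of U_{n,a}, assuming the weight w_a(t) = exp(-a|t|^2)."""
    n, d = y.shape
    sq = np.sum(y ** 2, axis=1)
    big_a = sq - d
    q = np.maximum(sq[:, None] + sq[None, :] - 2.0 * (y @ y.T), 0.0)
    i0 = (np.pi / a) ** (d / 2) * np.exp(-q / (4.0 * a))
    g = d / (2.0 * a) - q / (4.0 * a ** 2)
    i4 = i0 * (g ** 2 + d / (2.0 * a ** 2) - q / (2.0 * a ** 3))
    terms = (big_a[:, None] * big_a[None, :] * i0
             + (big_a[:, None] + big_a[None, :]) * i0 * g + i4)
    return terms.sum() / n


def critical_value(n, d, a, level=0.05, reps=500, seed=0):
    """Empirical (1 - level)-quantile of d^{-2} (a/pi)^{d/2} U_{n,a} under H_0."""
    rng = np.random.default_rng(seed)
    scale = (a / np.pi) ** (d / 2) / d ** 2
    stats = np.array([
        scale * u_stat(scaled_residuals(rng.standard_normal((n, d))), a)
        for _ in range(reps)
    ])
    return float(np.quantile(stats, 1.0 - level))


cv = critical_value(n=20, d=2, a=1.0)
```

Because of affine invariance, it suffices to simulate from \(\mathrm{N}_d(0,\mathrm{I}_d)\) under \(H_0\); a test at level 0.05 would then reject when the rescaled statistic of the data exceeds `cv`.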

In the univariate case, we consider the following alternatives: symmetric distributions, like the Student t\(_{\nu }\)-distribution with \(\nu \in \{1,3, 5, 10\}\) degrees of freedom (note that t\(_1\) is the standard Cauchy distribution), as well as the uniform distribution U\((-\sqrt{3}, \sqrt{3})\), and asymmetric distributions, such as the \(\chi ^2_{\nu }\)-distribution with \(\nu \in \{5, 15\}\) degrees of freedom, the beta distributions B(1, 4) and B(2, 5), and the gamma distributions \(\varGamma (1, 5)\) and \(\varGamma (5, 1)\), both parametrized by their shape and rate parameter, the Gumbel distribution Gum(1, 2) with location parameter 1 and scale parameter 2, the Weibull distribution W(1, 0.5) with scale parameter 1 and shape parameter 0.5, and the lognormal distribution LN(0, 1). As representatives of bimodal distributions, we simulate the mixture of normal distributions NMix\((p, \mu , \sigma ^2)\), where the random variables are generated by \((1 - p) \, \mathrm{N}(0, 1) + p \, \mathrm{N}(\mu , \sigma ^2)\), \(p \in (0, 1)\), \(\mu \in {\mathbb {R}}\), \(\sigma > 0\). Note that these alternatives can also be found in the simulation studies presented in Betsch and Ebner (2020), Dörr et al. (2020), Romão et al. (2010). We chose these alternatives in order to ease the comparison with many other existing tests.

First, we compare the tests based on \(T_{n,a}\) and \(U_{n,a}\) in Table 2. Remarkably, the test based on \(U_{n,a}\) shows a better performance for the NMix-alternatives, especially for the choice of the tuning parameter \(a\in \{0.25,0.5\}\). On the other hand, \(U_{n,a}\) is almost uniformly dominated by \(T_{n,a}\) for the t\(_{\nu }\)-distribution. If the underlying distribution is \(\chi ^2\), beta, gamma, Weibull, Gumbel or lognormal, both procedures have comparable power. Table 4 in Dörr et al. (2020) also provides finite-sample powers of strong tests for normality, both time-honored and recent, such as the Shapiro–Wilk test, the Shapiro–Francia test, the Anderson–Darling test, the Baringhaus–Henze–Epps–Pulley test (BHEP), see Henze and Wagner (1997), the del Barrio–Cuesta-Albertos–Matrán–Rodríguez-Rodríguez test (BCMR), see del Barrio et al. (1999), and the Betsch–Ebner test, see Betsch and Ebner (2020). For a description of the test statistics and critical values, see Dörr et al. (2020) and the references therein. A comparison shows that, for a suitable choice of the tuning parameter, \(U_{n,a}\) can compete with each of these tests, sometimes outperforming them, for example in case of the uniform distribution, \(n=20\), and \(a=0.25\), and the \(\chi ^2_{15}\)-distribution for all sample sizes and \(a=5\), but mostly being on the same power level. It is interesting to see that the finite-sample power of \(U_{n,a}\) depends heavily on the choice of a. This observation is in contrast to the behavior of \(T_{n,a}\), the power of which depends much less on a.

In the multivariate case, the alternative distributions are selected to match those employed in the simulation studies in Dörr et al. (2020), Henze and Visagie (2020), and are given as follows. Let NMix\((p,\mu ,\varSigma )\) be the normal mixture distribution generated by

$$\begin{aligned} (1-p)\, \mathrm{N}_d(0,\mathrm{I}_d) + p \, \mathrm{N}_d(\mu ,\varSigma ), \end{aligned}$$

where \(p \in (0, 1)\), \(\mu \in {\mathbb {R}}^d\), and \(\varSigma \) is a positive definite matrix. In this notation, \(\mu =3\) stands for a d-variate vector of 3’s, and \(\varSigma =\mathrm{B}_d\) is the \((d \times d)\)-matrix with 1’s on the main diagonal and each off-diagonal entry equal to 0.9. We denote by t\(_\nu (0,{\mathrm{I}}_d)\) the multivariate t\(_{\nu }\)-distribution with \(\nu \) degrees of freedom, see Genz and Bretz (2009). The acronym DIST\(^d(\vartheta )\) stands for a d-variate random vector with i.i.d. marginal laws that belong to the distribution DIST with parameter \(\vartheta \). In the sequel, DIST is either the lognormal distribution LN, the gamma distribution \(\varGamma \), or the Pearson Type VII distribution P\(_{VII}\). For the Pearson Type VII distribution, \(\vartheta \) denotes the number of degrees of freedom. Note that t\(_1(0,{\mathrm{I}}_d)\) stands for the marginal standard Cauchy distribution C\(^d(0,1)\) in the previous notation. The spherically symmetric distributions have been simulated using the R package distrEllipse, see Ruckdeschel et al. (2006). These are denoted by \({\mathcal {S}}^d(\text{ DIST})\), where DIST stands for the distribution of the radii, which was chosen to be the exponential, the beta and the \(\chi ^2\)-distribution.
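As an illustration of how such alternatives can be generated, the sketch below draws from a normal mixture in which each observation comes from \(\mathrm{N}_d(\mu ,\varSigma )\) with probability p and from \(\mathrm{N}_d(0,\mathrm{I}_d)\) otherwise, by analogy with the univariate NMix definition. It is a Python sketch with hypothetical function names, not the code used for the reported simulations.

```python
import numpy as np


def equicorrelation(d, rho=0.9):
    """Matrix B_d: ones on the main diagonal, rho in every off-diagonal entry."""
    return (1.0 - rho) * np.eye(d) + rho * np.ones((d, d))


def sample_nmix(n, p, mu, sigma, rng):
    """Draw n vectors from (1 - p) N_d(0, I_d) + p N_d(mu, sigma)."""
    mu = np.asarray(mu, dtype=float)
    d = mu.shape[0]
    from_second = rng.random(n) < p            # component labels
    z = rng.standard_normal((n, d))
    chol = np.linalg.cholesky(sigma)           # sigma = chol @ chol.T
    out = z.copy()                             # first component: N_d(0, I_d)
    out[from_second] = mu + z[from_second] @ chol.T
    return out


rng = np.random.default_rng(4)
sample = sample_nmix(50, 0.9, np.full(2, 3.0), equicorrelation(2), rng)
```

The example call corresponds to the parameter pattern \((0.9, 3, \mathrm{B}_2)\) with \(n=50\); the mixture has mean \(p\mu \), which gives a quick check on large samples.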

Tables 3, 4, and 5 can be contrasted with Tables 5–7 in Dörr et al. (2020), and, for \(n=50\), with Tables 3–5 in Henze and Visagie (2020). Again, we start with a comparison of \(T_{n,a}\) and \(U_{n,a}\). For \(d=2\) (see Table 3 and Table 5 in Dörr et al. (2020)), \(T_{n,a}\) is outperformed by \(U_{n,a}\) for NMix\((0.1,3,I_2)\) and NMix\((0.9,3,B_2)\), but shows a stronger performance for NMix\((0.5,3,B_2)\). In case of the multivariate t\(_\nu \)-distributions, both procedures have a similar performance, as well as for the DIST\(^d(\vartheta )\) distributions. For the spherically symmetric distributions, \(U_{n,a}\) dominates for a suitable choice of the tuning parameter, except for the \({\mathcal {S}}^d(\chi ^2_{5})\) distribution, where a similar behaviour is observed. Again, \(U_{n,a}\) seems to be much more sensitive to the choice of a proper tuning parameter than \(T_{n,a}\). Competing tests of multivariate normality are the Henze–Visagie test, see Henze and Visagie (2020), the Henze–Jiménez-Gamero test, see Henze and Jiménez-Gamero (2019), the BHEP-test, the Henze–Jiménez-Gamero–Meintanis test, see Henze et al. (2019), and the energy test, see Székely and Rizzo (2005). A description of the test statistics, as well as procedures for computing critical values, can be found in Henze and Visagie (2020). The BHEP-test performs best for the NMix\((0.1,3,I_2)\)-distribution (NMIX1 in Henze and Visagie 2020) but is outperformed by \(T_{n,a}\) for NMix\((0.5,0,B_2)\), and by \(U_{n,a}\) for NMix\((0.9,3,B_2)\) (NMIX2 in Henze and Visagie 2020), where these procedures show the best performance of all tests considered. A similar behavior is observed for the t\(_\nu \)- and the spherically symmetric distributions, where again \(U_{n,a}\) and \(T_{n,a}\) are strong competitors to all procedures considered.

6 A real data example

As a real data example, we examine the meteorological data set weather provided in the R package RandomFields, see Schlather et al. (2019), which consists of differences between forecasts and observations (forecasts minus observations) of temperature and pressure at \(n=157\) locations in the North American Pacific Northwest. The data are pointwise realizations of a bivariate (\(d=2\)) error Gaussian random field, see Fig. 1. The forecasts are from the GFS member of the University of Washington regional numerical weather prediction ensemble, see Eckel and Mass (2005), and they were valid on December 18, 2003 at 4 p.m. local time, at a forecast horizon of 48 hours. We ignore the given locations of the measurements in this evaluation and test the hypothesis that the pairs of differences can be modeled as i.i.d. copies from a bivariate normal distribution. In Table 6, we report empirical p-values of \(U_{n,a}\), based on 10,000 replications, for the univariate differences of temperature and pressure as well as for the bivariate data, both for the whole data set (\(n=157\)) and for a random selection of \(n=50\) points (selected in R with the function sample() and the seed fixed to ’0721’). For the complete data set, we reject the hypothesis of normality in nearly all cases at the \(5\%\) level of significance, while at the \(1\%\) level we are not able to reject \(H_0\) for the differences in pressure. For the temperature and the bivariate data, however, the hypothesis of normality is nearly always rejected. These results are not surprising, since the weather data set is an example of the influence of spatial correlation, which has to be modeled carefully. In Gneiting et al. (2010), a bivariate Gaussian random field is fitted to the data, taking this spatial correlation into account; for a visualization of the locations, see Figure 3 in Gneiting et al. (2010).
For the subsample of points, we see that the structure vanishes, and we do not reject the hypothesis in any case. In Table 7, we conduct the same study using \(T_{n,a}\) in order to contrast the empirical p-values with those of \(U_{n,a}\). As would be expected, we draw nearly the same conclusions, although for the full data set we can reject the hypothesis of normality for pressure in all cases at the \(5\%\) level of significance. The p-values in Table 7 fluctuate less than those in Table 6. Here, we have only applied the methods as a proof of principle.
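The empirical p-values reported above are obtained by Monte Carlo replication under the null hypothesis. The following minimal sketch illustrates the principle for univariate data. Since the computational formula of \(U_{n,a}\) is not restated in this section, the sketch uses the absolute sample skewness as a stand-in statistic; this stand-in, the function names, the toy data and the reduced number of replications are assumptions made for illustration only. Because the statistic is location-scale invariant, replications can be drawn from the standard normal distribution, and the p-value is taken here as the plain share of replications that are at least as large as the observed value (other conventions, such as adding one to numerator and denominator, are also in use).

```python
import random


def abs_skewness(x):
    """Stand-in statistic (NOT U_{n,a}): absolute sample skewness."""
    n = len(x)
    m = sum(x) / n
    s2 = sum((v - m) ** 2 for v in x) / n
    m3 = sum((v - m) ** 3 for v in x) / n
    return abs(m3) / s2 ** 1.5


def empirical_p_value(data, statistic, replications, rng):
    """Share of null replications whose statistic is at least the observed one."""
    n = len(data)
    observed = statistic(data)
    exceed = 0
    for _ in range(replications):
        # Null sample of the same size; N(0,1) suffices for an invariant statistic.
        null_sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if statistic(null_sample) >= observed:
            exceed += 1
    return exceed / replications


rng = random.Random(721)
skewed = [rng.expovariate(1.0) for _ in range(157)]  # clearly nonnormal toy data
normal = [rng.gauss(5.0, 2.0) for _ in range(157)]   # normal toy data
p_skewed = empirical_p_value(skewed, abs_skewness, 2000, rng)
p_normal = empirical_p_value(normal, abs_skewness, 2000, rng)
```

Large values of the statistic are treated as evidence against normality, so a small p-value leads to rejection at the chosen level.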

7 Conclusions and outlook

We have introduced and studied a new affine invariant class of tests for multivariate normality that is easy to apply and consistent against general alternatives. Although consistency has only been proved under the condition \({\mathbb {E}}\Vert X\Vert ^4 < \infty \), the test should be “all the more consistent” if \({\mathbb {E}}\Vert X\Vert ^4 =\infty \), and we conjecture that, as is the case for the BHEP-tests, the test based on \(U_{n,a}\) is also consistent against each nonnormal alternative distribution. A further topic of research would be to choose the tuning parameter a in an adaptive way, similar to the bootstrap-based univariate approaches in Allison and Santana (2015) and Tenreiro (2019). It would also be of interest to obtain more information on the limit null distribution of \(U_{n,a}\). We finish the outlook by pointing out that a similar analysis can be performed for the other procedures and distributions referenced in the introduction. It is of theoretical and practical relevance to study the resulting statistics in order to assess the influence of estimating or not estimating some of the functions involved.

After a comparison of \(U_{n,a}\) and \(T_{n,a}\) from Dörr et al. (2020), and in view of the results of the simulation study, we recommend using \(T_{n,a}\), since it seems to be more robust with respect to the choice of the tuning parameter a. Nevertheless, \(U_{n,a}\) is a strong competitor, and with a suitable data-driven procedure for the choice of a at hand, \(U_{n,a}\) may turn out to be a favorable choice over the most classical and recent tests of uni- and multivariate normality.

References

Allison JS, Santana L (2015) On a data-dependent choice of the tuning parameter appearing in certain goodness-of-fit tests. J Stat Comput Simul 85(16):3276–3288

Baringhaus L, Ebner B, Henze N (2017) The limit distribution of weighted \(L^2\)-statistics under fixed alternatives, with applications. Ann Inst Stat Math 69(5):969–995

Baringhaus L, Henze N (1991a) A class of consistent tests for exponentiality based on the empirical Laplace transform. Ann Inst Stat Math 43(3):551–564

Baringhaus L, Henze N (1991b) Limit distributions for measures of multivariate skewness and kurtosis based on projections. J Multivar Anal 38(1):51–69

Baringhaus L, Henze N (1992) Limit distributions for Mardia’s measure of multivariate skewness. Ann Stat 20(4):1889–1902

del Barrio E, Cuesta-Albertos JA, Matran C, Rodriguez-Rodriguez JM (1999) Tests of goodness of fit based on the \({L}_2\)-Wasserstein distance. Ann Stat 27(4):1230–1239

Betsch S, Ebner B (2020) Testing normality via a distributional fixed point property in the Stein characterization. TEST 29(1):105–138

Butsch L, Ebner B (2020) mnt: Affine invariant tests of multivariate normality. https://CRAN.R-project.org/package=mnt. R package version 1.3

Dörr P, Ebner B, Henze N (2020) Testing multivariate normality by zeros of the harmonic oscillator in characteristic function spaces. Scand J Stat pp. 1–34. https://doi.org/10.1111/sjos.12477

Eaton ML, Perlman MD (1973) The non-singularity of generalized sample covariance matrices. Ann Stat 1(4):710–717

Eckel FA, Mass CF (2005) Aspects of effective mesoscale, short-range ensemble forecasting. Weather Forecast 20(3):328–350

Genz A, Bretz F (2009) Computation of multivariate normal and t probabilities. Lecture notes in statistics, 195. Springer, Dordrecht

Gneiting T, Kleiber W, Schlather M (2010) Matérn cross-covariance functions for multivariate random fields. J Am Stat Assoc 105(491):1167–1177

Henze N (1994) On Mardia’s kurtosis test for multivariate normality. Commun Stat – Theory Methods 23(4):1031–1045

Henze N (1997) Limit laws for multivariate skewness in the sense of Móri, Rohatgi and Székely. Stat Probab Lett 33(3):299–307

Henze N, Jiménez-Gamero MD (2019) A class of tests for multinormality with i.i.d. and GARCH data based on the empirical moment generating function. TEST 28(2):499–521

Henze N, Jiménez-Gamero MD, Meintanis SG (2019) Characterizations of multinormality and corresponding tests of fit, including for GARCH models. Econom Theory 35(3):510–546

Henze N, Klar B (2002) Goodness-of-fit tests for the inverse Gaussian distribution based on the empirical Laplace transform. Ann Inst Stat Math 54(2):425–444

Henze N, Meintanis SG, Ebner B (2012) Goodness-of-fit tests for the Gamma distribution based on the empirical Laplace transform. Commun Stat – Theory Methods 41(9):1543–1556

Henze N, Visagie J (2020) Testing for normality in any dimension based on a partial differential equation involving the moment generating function. Ann Inst Stat Math 72:1109–1136

Henze N, Wagner T (1997) A new approach to the BHEP tests for multivariate normality. J Multivar Anal 61(1):1–23

Hsing T, Eubank R (2015) Theoretical foundations of functional data analysis, with an introduction to linear operators. Wiley, New York

Mardia KV (1970) Measures of multivariate skewness and kurtosis with applications. Biometrika 57(3):519–530

Meintanis S, Iliopoulos G (2003) Tests of fit for the Rayleigh distribution based on the empirical Laplace transform. Ann Inst Stat Math 55(1):137–151

Meintanis SG (2010) Testing skew normality via the moment generating function. Math Methods Stat 19(1):64–72

Meintanis SG, Hlávka Z (2010) Goodness-of-fit tests for bivariate and multivariate skew-normal distributions. Scand J Stat 37(4):701–714

Móri TF, Rohatgi VK, Székely GJ (1993) On multivariate skewness and kurtosis. Teoriya Veroyatnostei I Yeye Primeniya 38(3):675–679

Riad M, Mabood OFAE (2018) A new goodness of fit test for the beta distribution based on the empirical Laplace transform. Adv Appl Stat 53(2):165–177

Romão X, Delgado R, Costa A (2010) An empirical power comparison of univariate goodness-of-fit tests for normality. J Stat Comput Simul 80(5):545–591

Ruckdeschel P, Kohl M, Stabla T, Camphausen F (2006) S4 classes for distributions. R News 6(2):2–6

Schlather M, Malinowski A, Oesting M, Boecker D, Strokorb K, Engelke S, Martini J, Ballani F, Moreva O, Auel J, Menck PJ, Gross S, Ober U, Ribeiro P, Ripley BD, Singleton R, Pfaff B, R Core Team (2019) RandomFields: simulation and analysis of random fields. https://cran.r-project.org/package=RandomFields. R package version 3.3.6

Székely GJ, Rizzo ML (2005) A new test for multivariate normality. J Multivar Anal 93:58–80

Tenreiro C (2019) On the automatic selection of the tuning parameter appearing in certain families of goodness-of-fit tests. J Stat Comput Simul 89(10):1780–1797

Acknowledgements

The authors thank two anonymous referees and the editor for valuable remarks.

Funding

Open Access funding provided by Projekt DEAL.

Ethics declarations

Conflict of Interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dörr, P., Ebner, B. & Henze, N. A new test of multivariate normality by a double estimation in a characterizing PDE. Metrika 84, 401–427 (2021). https://doi.org/10.1007/s00184-020-00795-x

DOI: https://doi.org/10.1007/s00184-020-00795-x