Abstract

Meta-analysis has become one of the most important tools in evaluation research. Heterogeneity is present in almost every meta-analysis, but its magnitude differs across meta-analyses. In this respect, Higgins’ \(I^2\) has emerged as one of the most widely used and, potentially, one of the most useful measures, as it quantifies the amount of heterogeneity involved in a given meta-analysis. Higgins’ \(I^2\) is conventionally interpreted, in the sense of a variance component analysis, as the proportion of total variance due to heterogeneity. However, this interpretation is not entirely justified, as the second component of the total variation, usually denoted by \(s^2\), is not an average of the study-specific variances but some other function of them. We show that \(s^2\) is asymptotically identical to the harmonic mean of the study-specific variances and, for any number of studies, is at least as large as the harmonic mean, with equality if all study-specific variances agree. This justifies, from our point of view, the interpretation as explained variance, at least for meta-analyses with a larger number of component studies or small variation in the study-specific variances. These points are illustrated by a number of empirical meta-analyses as well as simulation work.


1 Introduction and background

Meta-analysis is a statistical methodology for the analysis and integration of results from individual, independent studies. Over the last decades, meta-analysis has come to play a crucial role in many fields of science such as medicine and pharmacy, health science, psychology, and social science (Petitti 1994; Schulze et al. 2003; Böhning et al. 2008; Sutton et al. 2000; Egger et al. 2001). Consider the typical set-up in a meta-analysis: effect measure estimates \(\hat{\theta}_{1}, \ldots, \hat{\theta}_{k}\) are available from k studies with associated variances \(\sigma_1^2, \ldots, \sigma_k^2\), which are assumed known and equal to their sampling counterparts. Typically, the random effects model with overall effect \(\theta\)

\[\hat{\theta}_i = \theta + \delta_i + \epsilon_i, \quad i=1,\ldots,k,\]

is employed where \(\delta_i \sim N(0,\tau^2)\) is a normal random effect and \(\epsilon_i \sim N(0,\sigma_i^2)\) is a normal random error, all random effects and errors being independent, and \(\tau^2 \ge 0\). Furthermore, let \(w_i=1/\sigma_i^2\) and \(W_i=1/(\sigma_i^2+\tau^2)\). The heterogeneity statistic Q is defined as

\[Q = \sum_{i=1}^{k} w_i \left(\hat{\theta}_i - \bar{\theta}\right)^2,\]

where \(\bar{\theta}= \sum_{i=1}^{k} w_{i} \hat{\theta}_{i}/\sum_{i=1}^{k} w_i\). The distribution of Q has been investigated by, among others, Biggerstaff and Jackson (2008), and a critical appraisal of Q is given by Hoaglin (2016). More importantly for this work, Q is also the basis of the DerSimonian–Laird estimator for the heterogeneity variance \(\tau^2\), which is given, in its untruncated form, by

\[\hat{\tau}^2 = \frac{Q-(k-1)}{\sum_{i=1}^{k} w_i - \sum_{i=1}^{k} w_i^2 / \sum_{i=1}^{k} w_i}.\]

Q is also the foundation of Higgins’ \(I^2\), defined as

\[I^2 = \frac{Q-(k-1)}{Q}, \tag{1}\]

designed to quantify the magnitude of heterogeneity involved in the meta-analysis (Higgins and Thompson 2002; Borenstein et al. 2009). Higgins’ \(I^2\) is very popular and has been discussed intensively, including critical appraisals (for example, of its dependence on the study-specific precision) given in Rücker et al. (2008) and, more recently, in Borenstein et al. (2017). \(I^2\) has also recently been generalized to the multivariate context, see Jackson et al. (2012). \(I^2\) is indeed a proportion and, if multiplied by 100, a percentage. In addition, \(I^2\) has a variance component interpretation. The variance of the effect measure \(Var(\hat{\theta}_{i})= \tau^2+\sigma_i^2\) can be partitioned into the within-study variance and the variance between studies. If all studies had the same within-study variance \(\sigma_i^2=\sigma^2\), then it would be easy to define the proportion of variance due to across-study variation, or simply due to heterogeneity, as \(\tau^2/(\tau^2+\sigma^2)\). With study-specific variances an average variance needs to be used and, if a specific average is selected, then \(I^2\) can be interpreted as the proportion of variation due to heterogeneity. This might not be obvious from the definition provided in (1) but becomes more evident from the identity (although this can be found elsewhere, a proof is given in the Appendix for completeness)

\[I^2 = \frac{\hat{\tau}^2}{\hat{\tau}^2 + s^2}, \tag{2}\]

where \(s^2=(k-1)\sum_{i=1}^k w_i/[(\sum_{i=1}^k w_i)^2 - \sum_{i=1}^k w_i^2]\). If \(s^2\) could be viewed as some sort of average of the study-specific variances \(\sigma_1^2, \ldots, \sigma_k^2\), then \(I^2\) could be validly interpreted, as is typically done in variance component models, as the proportion of the total variance (variance due to heterogeneity plus within-study variance) that is due to heterogeneity.
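As a quick numerical check of this variance-component reading, the following Python sketch computes Q, the untruncated DerSimonian–Laird estimate, \(I^2\) and \(s^2\), and compares \(I^2\) with \(\hat{\tau}^2/(\hat{\tau}^2+s^2)\). It assumes the standard forms \(Q=\sum_i w_i(\hat{\theta}_i-\bar{\theta})^2\), \(\hat{\tau}^2=(Q-(k-1))/(\sum_i w_i-\sum_i w_i^2/\sum_i w_i)\) and \(I^2=(Q-(k-1))/Q\). The effect estimates, variances and the function name `heterogeneity_summary` are made up for illustration and are not taken from the paper.

```python
from typing import Sequence


def heterogeneity_summary(theta_hat: Sequence[float],
                          variances: Sequence[float]) -> dict:
    """Return Q, the untruncated DL estimate tau2, I2 and s2."""
    k = len(theta_hat)
    w = [1.0 / v for v in variances]              # w_i = 1 / sigma_i^2
    sum_w = sum(w)
    sum_w2 = sum(wi * wi for wi in w)
    # Inverse-variance weighted mean of the effect estimates
    theta_bar = sum(wi * t for wi, t in zip(w, theta_hat)) / sum_w
    q = sum(wi * (t - theta_bar) ** 2 for wi, t in zip(w, theta_hat))
    tau2 = (q - (k - 1)) / (sum_w - sum_w2 / sum_w)   # untruncated DL
    i2 = (q - (k - 1)) / q
    s2 = (k - 1) * sum_w / (sum_w ** 2 - sum_w2)
    return {"Q": q, "tau2": tau2, "I2": i2, "s2": s2}


# Hypothetical data for five studies (illustration only)
theta_hat = [0.10, 0.55, -0.20, 0.90, 0.30]
variances = [0.04, 0.09, 0.02, 0.16, 0.05]

res = heterogeneity_summary(theta_hat, variances)
ratio = res["tau2"] / (res["tau2"] + res["s2"])
print(res)
print("I2 equals tau2 / (tau2 + s2):", abs(res["I2"] - ratio) < 1e-9)
```

The agreement is algebraic rather than approximate, so it holds for any data with \(Q>0\); the open question addressed in the remainder of the note is only whether \(s^2\) deserves to be called an average of the \(\sigma_i^2\).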

This short note serves two purposes:

- we will show that, under mild regularity assumptions, \(s^2\) is asymptotically identical to the harmonic mean \(\bar{\sigma}^2 =\left[ \frac{1}{k} \sum_{i=1}^k w_i\right]^{-1}\) of the study-specific variances;
- we will show that \(s^2 \ge \bar{\sigma}^2\), with the difference \(s^2 - \bar{\sigma}^2\) being zero if all study-specific variances are identical and, in the more general case of non-identical study-specific variances, approaching zero as k becomes large.

2 Main results

2.1 The harmonic mean result

We have the following result:

Theorem 1

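If there exist positive constants b and B such that for all \(i=1,2,\ldots,k\)

\[0 < b \le \sigma_i^2 \le B < \infty,\]

then

\[s^2 - \bar{\sigma}^2 \rightarrow 0 \quad \text{for } k \rightarrow \infty.\]

Proof

We can write \(s^2\) as

\[s^2 = \frac{k-1}{k} \left[ \frac{1}{k}\sum_{i=1}^k w_i - \frac{\frac{1}{k}\sum_{i=1}^k w_i^2}{\sum_{i=1}^k w_i} \right]^{-1}.\]

As \((k-1)/k \rightarrow 1\) for \(k \rightarrow \infty\), and as \(\frac{1}{k}\sum_{i=1}^k w_i\) stays within \([1/B, 1/b]\), it is sufficient to show that

\[\frac{\frac{1}{k}\sum_{i=1}^k w_i^2}{\sum_{i=1}^k w_i} \rightarrow 0\]

as \(k \rightarrow \infty\). As \(\sigma_i^2\) is bounded below by b for all i, we have that

\[w_i^2 \le \frac{1}{b^2}\]

for all i, so that

\[\frac{1}{k}\sum_{i=1}^k w_i^2 \le \frac{1}{b^2}. \tag{3}\]

In addition, as \(\sigma_i^2\) is bounded above by B for all i, we have that

\[w_i \ge \frac{1}{B},\]

so that

\[\sum_{i=1}^k w_i \ge \frac{k}{B}. \tag{4}\]

Taking (3) and (4) together yields

\[\frac{\frac{1}{k}\sum_{i=1}^k w_i^2}{\sum_{i=1}^k w_i} \le \frac{B}{k\,b^2} \rightarrow 0\]

for \(k \rightarrow \infty\). This ends the proof. \(\square\)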
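The convergence can be made tangible with a small Python sketch. It draws variances from a bounded interval \([b, B]\), evaluates the remainder term \(\frac{1}{k}\sum_i w_i^2/\sum_i w_i\), the bound \(B/(k b^2)\) that follows from the two boundedness conditions, and the difference between \(s^2\) and the harmonic mean. The values of b and B, the uniform sampling of the variances, the seed and the function name are assumptions chosen purely for illustration.

```python
import random


def s2_harmonic_remainder(variances):
    """Return s2, the harmonic mean, and (1/k) * sum(w^2) / sum(w)."""
    k = len(variances)
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    sum_w2 = sum(wi * wi for wi in w)
    s2 = (k - 1) * sum_w / (sum_w ** 2 - sum_w2)
    harmonic = k / sum_w
    remainder = (sum_w2 / k) / sum_w
    return s2, harmonic, remainder


b, B = 0.01, 0.1            # assumed lower and upper bounds on sigma_i^2
rng = random.Random(1)      # fixed seed so the run is reproducible

for k in (5, 50, 500, 5000):
    variances = [rng.uniform(b, B) for _ in range(k)]
    s2, harmonic, remainder = s2_harmonic_remainder(variances)
    bound = B / (k * b ** 2)
    print(f"k={k:5d}  s2 - harmonic = {s2 - harmonic:.2e}  "
          f"remainder = {remainder:.3e}  bound = {bound:.3e}")
```

The bound is deliberately crude, so the remainder term is typically far below it; what matters for the argument is only that both shrink at rate 1/k.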

2.2 The inequality

Further clarification on the relation between \(s^2\) and \({{\bar{\sigma }^2}}\) is given by the following inequality. Note that this result does not require any assumption on the variances \( \sigma _1^2\), ..., \(\sigma _k^2\).

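Theorem 2

\[s^2 \ge \bar{\sigma}^2,\]

with equality if

\[\sigma_1^2 = \sigma_2^2 = \cdots = \sigma_k^2.\]

Proof

We need to show that

\[\frac{(k-1)\sum_{i=1}^k w_i}{\left(\sum_{i=1}^k w_i\right)^2 - \sum_{i=1}^k w_i^2} \ge \frac{k}{\sum_{i=1}^k w_i}.\]

This is equivalent to showing that

\[(k-1)\left(\sum_{i=1}^k w_i\right)^2 \ge k\left(\sum_{i=1}^k w_i\right)^2 - k\sum_{i=1}^k w_i^2\]

or

\[\frac{1}{k}\sum_{i=1}^k w_i^2 \ge \left(\frac{1}{k}\sum_{i=1}^k w_i\right)^2.\]

This is equivalent to

\[E(V^2) \ge [E(V)]^2\]

for a random variable V giving equal weights to \(w_1,\ldots, w_k\). This inequality holds as

\[Var(V) = E(V^2) - [E(V)]^2 \ge 0,\]

with equality if \(w_1=w_2=\cdots =w_k\), and this ends the proof. \(\square\)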
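The inequality and its equality case can be checked in exact arithmetic. The sketch below uses Python's `fractions.Fraction` so that equality for identical weights is exact rather than subject to rounding; the weight vectors and function names are hypothetical examples.

```python
from fractions import Fraction


def s2_exact(weights):
    """s2 = (k-1) * sum(w) / (sum(w)^2 - sum(w^2)) in exact arithmetic."""
    k = len(weights)
    sum_w = sum(weights)
    sum_w2 = sum(w * w for w in weights)
    return Fraction(k - 1) * sum_w / (sum_w ** 2 - sum_w2)


def harmonic_mean_exact(weights):
    """Harmonic mean of the variances 1/w_i, i.e. k / sum(w)."""
    return Fraction(len(weights)) / sum(weights)


unequal = [Fraction(1), Fraction(3), Fraction(10)]   # variances 1, 1/3, 1/10
equal = [Fraction(4)] * 3                            # all variances 1/4

gap_unequal = s2_exact(unequal) - harmonic_mean_exact(unequal)
gap_equal = s2_exact(equal) - harmonic_mean_exact(equal)

print("gap with unequal weights:", gap_unequal)   # strictly positive
print("gap with equal weights:  ", gap_equal)     # exactly zero
```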

3 Empirical illustrations

Here, we illustrate these relationships on the basis of 15 meta-analyses. Details on these are given in Table 1. These meta-analyses were not selected in any particular way; they were simply collected from the literature while teaching a course on statistical methods for meta-analysis.

It is clear from Theorem 1 that the difference between \(s^2\) and \(\bar{\sigma}^2\) should become smaller with an increasing number of studies k. In Fig. 1, we examine \(\log (s^2 /\bar{\sigma}^2)\) as a function of k. We take the log-ratio of \(s^2\) and \(\bar{\sigma}^2\) to remove any scale variation across different meta-analyses. There is a clear decreasing trend for \(\log (s^2 /\bar{\sigma}^2)\) with increasing k.

In Fig. 2, we examine \(\log (s^2 /\bar{\sigma}^2)\) vs. \(\sqrt{\frac{1}{k-1}\sum_i(\sigma_i^2-\tilde{\sigma}^2)^2}/\tilde{\sigma}^2\) where \(\tilde{\sigma}^2=\frac{1}{k}\sum_i \sigma_i^2\). Here we consider the coefficient of variation as a measure of the variability of the within-study variances. Again, we take the coefficient of variation (rather than the standard deviation) to remove scale variation across different meta-analyses. There is a clear increasing trend for \(\log (s^2 /\bar{\sigma}^2)\) with increasing variability of the study-specific variances involved in the meta-analysis.

4 A simulation study

To further investigate these findings, we have undertaken the following simulation work. We assume that study-specific variances differ only through the study size. Hence \(\sigma_i^2 = \sigma^2/n_i\) where \(n_i\) is the sample size of study i. We take \(\sigma^2=1\) so that \(w_i= 1/\sigma_i^2= n_i\). We consider three settings in which \(w_i=n_i\), for \(i=1,\ldots,k\), is sampled from a uniform distribution with common mean 55 but different range:

- setting 1: \(w_i=n_i \sim Uniform(10,100)\), a uniform distribution on [10, 100];
- setting 2: \(w_i=n_i \sim Uniform(30,80)\), a uniform distribution on [30, 80];
- setting 3: \(w_i=n_i \sim Uniform(45,65)\), a uniform distribution on [45, 65].

Setting 3 has the smallest range whereas setting 1 has the largest, and setting 2 is in between. For \(i=1,\ldots , k\) the following measures are calculated:

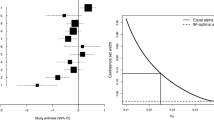

Scatter plot of \(\log (s^2 /{\bar{\sigma }^2})\) versus the number of studies k involved in the meta-analysis for the three settings of the simulation study as described in Sect. 4

-

1.

the arithmetic mean \({\tilde{\sigma }^2}=\frac{1}{k}\sum _i \sigma _i^2= \frac{1}{k} \sum _{i=1}^k w_i^{-1}\) of the study-specific variances;

-

2.

the harmonic mean \({\bar{\sigma }^2} =[\frac{1}{k} \sum _{i=1}^k w_i]^{-1}\) of the study-specific variances;

-

3.

\(s^2=(k-1)\sum _{i=1}^k w_i/[(\sum _{i=1}^k w_i)^2 - \sum _{i=1}^k w_i^2]\);

-

4.

\(\log (s^2/{\bar{\sigma }^2})\);

-

5.

the coefficient of variation \(CV = \sqrt{\frac{1}{k-1}\sum _i(\sigma _i^2-{\tilde{\sigma }^2})^2}/{\tilde{\sigma }^2}\) where \({\tilde{\sigma }^2}=\frac{1}{k}\sum _i \sigma _i^2\).

This process has been repeated 10,000 times and the means of the above measures have been calculated. Figure 3 shows a scatter plot of \(\log (s^2 /\bar{\sigma}^2)\) vs. the number of studies k involved in the meta-analysis. It can be seen that for k larger than 20 the difference between \(s^2\) and the harmonic mean becomes rather small. Of course, this occurs much earlier for the small-range setting 3 and the moderate-range setting 2. This illustrates the result of Theorem 1 and also indicates when the limit is approached with acceptable approximation. Figure 4 shows that \(\log (s^2 /\bar{\sigma}^2)\) also depends strongly on the variability of the study-specific variances involved in the meta-analysis. Clearly, the smaller the coefficient of variation, the closer the ratio of \(s^2\) to \(\bar{\sigma}^2\) is to one. This illustrates Theorem 2.

Fig. 4 Scatter plot of \(\log (s^2 /\bar{\sigma}^2)\) versus the coefficient of variation (CV) of the study-specific variances involved in the meta-analysis for the three settings of the simulation study as described in Sect. 4; the numbers next to the symbols in the graph indicate the number of studies involved in the meta-analysis
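The simulation design of this section can be sketched in Python as follows. This is a minimal illustration rather than the authors' code: it assumes continuous uniform draws for \(n_i\) (integer sample sizes would be an equally plausible reading), uses 2,000 instead of 10,000 replications to keep the run short, and the grid of k values, the seed and all function names are hypothetical.

```python
import math
import random
import statistics

# (lower, upper) limits of the uniform distribution for w_i = n_i
SETTINGS = {1: (10, 100), 2: (30, 80), 3: (45, 65)}


def one_replication(rng, k, low, high):
    """Return log(s2 / harmonic mean) and the CV for one simulated meta-analysis."""
    w = [rng.uniform(low, high) for _ in range(k)]
    variances = [1.0 / wi for wi in w]            # sigma_i^2 = 1 / n_i
    sum_w = sum(w)
    sum_w2 = sum(wi * wi for wi in w)
    s2 = (k - 1) * sum_w / (sum_w ** 2 - sum_w2)
    harmonic = k / sum_w
    arithmetic = sum(variances) / k
    # Sample standard deviation of the variances (denominator k - 1)
    sd = math.sqrt(sum((v - arithmetic) ** 2 for v in variances) / (k - 1))
    return math.log(s2 / harmonic), sd / arithmetic


def simulate(k, low, high, reps=2000, seed=0):
    """Average the two measures over `reps` replications."""
    rng = random.Random(seed)
    results = [one_replication(rng, k, low, high) for _ in range(reps)]
    mean_log_ratio = statistics.fmean(r[0] for r in results)
    mean_cv = statistics.fmean(r[1] for r in results)
    return mean_log_ratio, mean_cv


for setting, (low, high) in SETTINGS.items():
    for k in (5, 10, 20, 50):
        log_ratio, cv = simulate(k, low, high)
        print(f"setting {setting}, k={k:2d}: "
              f"mean log(s2/harmonic)={log_ratio:.4f}, mean CV={cv:.3f}")
```

Under these assumptions the mean log-ratio should fall with k within each setting and, for fixed k, be smallest in the narrow-range setting 3, which is the qualitative pattern described for Figs. 3 and 4.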

5 Discussion and conclusion

It was seen that \(s^2\) approximates the harmonic mean \([\frac{1}{k} \sum_{i=1}^k w_i]^{-1}\). This asymptotic result has been achieved under fairly mild assumptions (study-specific variances need to be bounded). As a referee helpfully pointed out, Jackson and Bowden (2009, Sect. 3.1) also mention this result without proof in a simulation study, and it remains unclear in their work how generally the result is meant to hold. Theorem 1 gives general conditions under which the asymptotic result is valid.

The harmonic mean appears to be a more reasonable summary measure of the study-specific variances than the arithmetic mean. To make this point clearer, consider the situation that \(\sigma_i^2=\sigma^2/n_i\) with \(\sigma^2=1\) for simplicity. Let us further assume that \(\sigma_i^2\) is estimated by \(s_i^2/n_i\) where \(s_i^2\) is the study-specific variance of study i. Then, under normality, \(Var(s_i^2)\) is proportional to \(1/n_i\); in other words, \(Var(s_i^2/n_i)\) is proportional to \(1/n_i^3\). As an optimal meta-analytic weighting scheme uses inverse variances, the optimal summary measure would be

The harmonic mean \([\frac{1}{k} \sum_{i=1}^k n_i]^{-1}\) is an upper bound for the right-hand side of (5), with equality if all sample sizes agree. Hence we can understand the harmonic mean as an approximation of the optimal weighted summary of the study-specific variances.

Higgins’ \(I^2\) can be validly interpreted as the proportion of variance due to heterogeneity if the variability of the study-specific variances is small and/or the number of component studies is moderately large. This provides a valuable way of interpreting any value of \(I^2\). If, however, the number of component studies is small and there is considerable variation in the variances of the component studies, it may be advisable to consider a more direct measure of the proportion due to heterogeneity.

Evidently, there is interest in developing a measure of heterogeneity which has an interpretation as a ‘proportion of total variation due to heterogeneity’. Along this line, Wetterslev et al. (2009) suggest a measure of heterogeneity, denoted by \(D^2\), involving, besides \(\tau^2\), a new measure of average sampling error. The basis of \(D^2\) is the ratio of the harmonic mean of the study-specific variances increased by the heterogeneity variance to the harmonic mean of the study-specific variances. This new measure of heterogeneity seems to be well motivated in its construction; however, it remains to be seen whether it establishes itself as an alternative to Higgins’ \(I^2\).

In conclusion, a measure of heterogeneity of the form \(\tau^2/(\tau^2+ \bar{\sigma}^2)\), with \(\bar{\sigma}^2\) being the harmonic mean of the study-specific variances, seems to be a reasonably good choice. It also seems possible to extend this most straightforward definition of \(I^2\) to meta-regressive contexts. Clearly, estimating \(I^2\) as \(\frac{Q-(k-1)}{Q}\) (and not as \(\hat{\tau}^{2}/(\hat{\tau}^{2}+ \bar{\sigma}^2)\)) has considerable benefits, in particular as it is easily carried forward to meta-analytic regression approaches. The results given here justify that \(\frac{Q-(k-1)}{Q}\) can still be interpreted as the proportion of explained variance due to heterogeneity. In fact, it is the latter way of defining \(I^2\) which allows Jackson et al. (2012) to extend \(I^2\) to a multivariate meta-analytic context. Here, multivariate meta-analysis is understood in the sense of having several outcome measures or endpoints simultaneously available for meta-analysis. In the approach of Jackson et al. (2012), Q is first extended to a quadratic form incorporating all outcome measures and, in a second step, \(I^2\) is defined as \(\frac{Q-\nu}{Q}\) where \(\nu\) corresponds to the degrees of freedom involved in the quadratic form. The concept of ‘explained variance due to heterogeneity’ is evidently more difficult to generalize, as within-study and between-study variation involve covariance matrices due to the multivariate nature of the meta-analysis. This area is clearly of great interest for future work.
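To see how the two ways of estimating the proportion compare on a single data set, the following Python sketch computes both \(\frac{Q-(k-1)}{Q}\) and \(\hat{\tau}^2/(\hat{\tau}^2+\bar{\sigma}^2)\). It assumes the DerSimonian–Laird estimate truncated at zero and uses made-up effect estimates and variances; the function name is hypothetical. Because \(s^2 \ge \bar{\sigma}^2\), the harmonic-mean version can never be smaller than the Q-based version, and the two coincide when all study-specific variances are equal.

```python
def compare_heterogeneity_measures(theta_hat, variances):
    """Return (I2 from Q, tau2 / (tau2 + harmonic mean)), both truncated at 0."""
    k = len(theta_hat)
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    sum_w2 = sum(wi * wi for wi in w)
    theta_bar = sum(wi * t for wi, t in zip(w, theta_hat)) / sum_w
    q = sum(wi * (t - theta_bar) ** 2 for wi, t in zip(w, theta_hat))
    # DerSimonian-Laird estimate, truncated at zero (an assumption made here)
    tau2 = max(0.0, (q - (k - 1)) / (sum_w - sum_w2 / sum_w))
    harmonic = k / sum_w                      # harmonic mean of the variances
    i2_from_q = max(0.0, (q - (k - 1)) / q)
    i2_harmonic = tau2 / (tau2 + harmonic)
    return i2_from_q, i2_harmonic


# Hypothetical data for five studies (illustration only)
theta_hat = [0.10, 0.55, -0.20, 0.90, 0.30]
unequal_variances = [0.04, 0.09, 0.02, 0.16, 0.05]
equal_variances = [0.05] * 5

print("unequal variances:", compare_heterogeneity_measures(theta_hat, unequal_variances))
print("equal variances:  ", compare_heterogeneity_measures(theta_hat, equal_variances))
```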

References

Biggerstaff BJ, Jackson D (2008) The exact distribution of Cochran’s heterogeneity statistic in one-way random-effects meta-analysis. Stat Med 27:6093–6110

Böhning D, Kuhnert R, Rattanasiri S (2008) Meta-analysis of binary data using profile likelihood. Chapman & Hall, London

Borenstein M, Hedges LV, Higgins JPT, Rothstein HR (2009) Introduction to meta-analysis. John Wiley & Sons, Chichester

Borenstein M, Higgins JPT, Hedges LV, Rothstein HR (2017) Basics of meta-analysis: \(I^2\) is not an absolute measure of heterogeneity. Res Synth Methods 8:5–18

Egger M, Smith GD, Altman DG (2001) Systematic reviews in health care: meta-analysis in context. BMJ Publishing Group, London

Hoaglin DC (2016) Misunderstandings about \(Q\) and ‘Cochran’s \(Q\) test’ in meta-analysis. Stat Med 35:485–495

Higgins JPT, Thompson SG (2002) Quantifying heterogeneity in meta-analysis. Stat Med 21:1539–1558

Jackson D, Bowden J (2009) A re-evaluation of the ‘quantile approximation method’ for random effects meta-analysis. Stat Med 28:338–348

Jackson D, White IR, Riley RD (2012) Quantifying the impact of between-study heterogeneity in multivariate meta-analysis. Stat Med 31:3805–3820

Petitti DB (1994) Meta-analysis, decision analysis, and cost-effectiveness analysis. Oxford University Press, New York

Rücker G, Schwarzer G, Carpenter JR, Schumacher M (2008) Undue reliance on \(I^2\) in assessing heterogeneity may mislead. BMC Med Res Methodol 8:79

Schulze R, Holling H, Böhning D (2003) Meta-analysis: new developments and applications in medical and social sciences. Hogrefe & Huber, Göttingen

Sutton AJ, Abrams KR, Jones DR, Sheldon TA, Song F (2000) Methods for meta-analysis in medical research. Wiley, New York

Wetterslev J, Thorlund K, Brok J, Gluud C (2009) Estimating required information size by quantifying diversity in random-effects model meta-analyses. BMC Med Res Methodol 9:86

Acknowledgements

DB is grateful to the PhD students of statistics at Thammasat University, Faculty of Sciences, Rangsit Campus, Bangkok for their interest and discussions on the occasion of a course on Statistical Methods for Meta-Analysis which DB gave in August 2016. This research is funded by the German Research Foundation (DFG) under project HO 1286/7-3. All authors are grateful to two anonymous referees for their very insightful comments. Thanks also go to the Editor for helpful remarks.


Appendix


Theorem 3

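Let \(I^2\) be defined as \(I^2=\frac{Q-(k-1)}{Q}\) and let \(\hat{\tau}^2\) be the untruncated DerSimonian–Laird estimator. Then

\[I^2 = \frac{\hat{\tau}^2}{\hat{\tau}^2+s^2},\]

where \(s^2=(k-1)\sum_{i=1}^k w_i/[(\sum_{i=1}^k w_i)^2 - \sum_{i=1}^k w_i^2]\).

Proof

Note that \(s^2 = (k-1)/\left[\sum_{i=1}^k w_i - \sum_{i=1}^k w_i^2/\sum_{i=1}^k w_i\right]\), so that \(\hat{\tau}^2\) and \(s^2\) share the same denominator. We have that

\[\frac{\hat{\tau}^2}{\hat{\tau}^2+s^2} = \frac{\dfrac{Q-(k-1)}{\sum_{i} w_i - \sum_{i} w_i^2/\sum_{i} w_i}}{\dfrac{Q-(k-1)}{\sum_{i} w_i - \sum_{i} w_i^2/\sum_{i} w_i} + \dfrac{k-1}{\sum_{i} w_i - \sum_{i} w_i^2/\sum_{i} w_i}} = \frac{Q-(k-1)}{Q-(k-1)+(k-1)} = \frac{Q-(k-1)}{Q} = I^2,\]

which ends the proof. \(\square\)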

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Böhning, D., Lerdsuwansri, R. & Holling, H. Some general points on the \(I^2\)-measure of heterogeneity in meta-analysis. Metrika 80, 685–695 (2017). https://doi.org/10.1007/s00184-017-0622-3


DOI: https://doi.org/10.1007/s00184-017-0622-3