Abstract

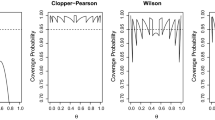

Two-sided confidence intervals for a probability \(p\) under a prescribed confidence level \(\gamma \) are an elementary tool of statistical data analysis. A confidence interval has two basic quality characteristics: i) exactness, i.e., whether the actual coverage probability equals or exceeds the prescribed level \(\gamma \); ii) inferential precision, measured by the length of the confidence interval. The interval provided by Clopper and Pearson (Biometrika 26:404–413, 1934) is the only exact interval actually used in statistical data analysis. Various authors have suggested shorter, i.e., more precise, exact intervals. The present paper makes two contributions. i) We provide a general design scheme for minimum volume confidence regions under prior knowledge on the target parameter. ii) We apply the scheme to the problem of confidence intervals for a probability \(p\), where prior knowledge is expressed in a flexible way by a beta distribution on a subset of the unit interval.

References

Abramowitz M, Stegun IA (eds) (1972) Handbook of mathematical functions with formulas, graphs, and mathematical tables. Dover Publications, New York

Agresti A, Coull BA (1998) Approximate is better than “exact” for interval estimation of binomial proportions. Am Stat 52:119–126

Agresti A, Min Y (2001) On small-sample confidence intervals for parameters in discrete distributions. Biometrics 57(3):963–971

Berg N (2006) A simple Bayesian procedure for sample size determination in an audit of property value appraisals. Real Estate Econ 34(1):133–155

Blaker H (2000) Confidence curves and improved exact confidence intervals for discrete distributions. Can J Stat 28(4):783–798

Blocher E, Robertson JC (1976) Bayesian sampling procedures for auditors: computer-assisted instruction. Acc Rev 51(2):359–363

Blyth CR, Hutchinson DW (1960) Tables of Neyman-shortest unbiased confidence intervals for the binomial parameter. Biometrika 47:381–391

Blyth CR, Still HA (1983) Binomial confidence intervals. J Am Stat Assoc 78(381):108–116

Bunke O (1959/1960) Neue Konfidenzintervalle für den Parameter der Binomialverteilung. Wissenschaftliche Zeitschrift der Humboldt-Universität Berlin, Mathematisch-Naturwissenschaftliche Reihe 9:335–363

Chaloner KM, Duncan GT (1983) Assessment of a beta prior distribution: PM elicitation. The Statistician 32:174–180

Clopper CJ, Pearson ES (1934) The use of confidence or fiducial limits illustrated in the case of the binomial. Biometrika 26:404–413

von Collani E, Dräger K (2001) Binomial distribution handbook for scientists and engineers. Birkhäuser, Boston

von Collani E, Dumitrescu M (2001) Complete Neyman measurement procedure. Metrika 54(2):111–130

von Collani E, Dumitrescu M, Lepenis R (2001) Neyman measurement and prediction procedures. Econ Qual Control 16(1):109–132

Corless JC (1972) Assessing prior distributions for applying Bayesian statistics in auditing. Acc Rev 47(3):556–566

Crow EL (1956) Confidence interval for a proportion. Biometrika 43:423–435

Garthwaite PH, O’Hagan A (2000) Quantifying expert opinion in the UK water industry: an experimental study. The Statistician 49:455–477

Godfrey J, Andrews R (1982) A finite population Bayesian model for compliance testing. J Acc Res 20(2):304–315

Hald A (1981) Statistical theory of sampling inspection by attributes. Academic Press, London

Ham J, Losell D, Smieliauskas W (1985) An empirical study of error characteristics in accounting populations. Acc Rev 60(3):387–406

Hewitt E, Stromberg K (1969) Real and abstract analysis, 2nd edn. Springer, New York

Hogarth RM (1975) Cognitive processes and the assessment of subjective probability distributions. J Am Stat Assoc 70:271–289

Jeffreys H (1946) An invariant form for the prior probability in estimation problems. Proc Royal Soc Series A, Math Phys Sci 186(1007):453–461

Johnson JR, Leitch RA, Neter J (1981) Characteristics of errors in accounts receivable and inventory audits. Acc Rev 56(2):270–293

Kadane JB, Dickey JM, Winkler RL, Smith WS, Peters SC (1980) Interactive elicitation of opinion for a normal linear model. J Am Stat Assoc 75:845–854

Kendrick T (2009) Identifying and managing project risk: essential tools for failure-proofing your project, 2nd edn. AMACOM, New York

Krishnamoorthy K, Peng J (2007) Some properties of the exact and score methods for binomial proportion and sample size calculation. Commun Stat Simul Comput 36:1171–1186

Lurz K, Göb R (2013) Shortest two-sided confidence intervals for a probability under prior information: computational algorithm and the R package CI. Preprints of the Institute of Mathematics, 331, University of Würzburg

Neyman J (1935) On the problem of a confidence interval. Ann Math Stat 6(3):111–116

Neyman J (1937) Outline of a theory of statistical estimation based on the classical theory of probability. Philos Trans Royal Soc, Series A, Math Phys Sci 236(767):333–380

O’Hagan A (1998) Eliciting expert beliefs in substantial practical applications. The Statistician 47(1):21–35

Sterne TE (1954) Some remarks on confidence or fiducial limits. Biometrika 41:275–278

Uhlmann W (1982) Statistische Qualitätskontrolle, 2. Auflage, Teubner, Stuttgart

Walls L, Quigley J (2001) Building prior distributions to support Bayesian reliability growth modeling using expert judgment. Reliab Eng Sys Saf 74(2):117–128

Wilson EB (1927) Probable inference, the law of succession, and statistical inference. J Am Stat Assoc 22:209–212

Appendices

Proofs related to Section 2

1.1 Proof of theorem 1

Proof of assertion b) of theorem 1

Let \(A\) be an arbitrary level \(\gamma \) MPS. Let \(y\in R_2\) be fixed. We have

and hence \(\text{ P }_{y} (X\in A^{\star }_y{\setminus }A_y) \le \text{ P }_{y} (X\in A_y{\setminus }A^{\star }_y)\). We have \(A^{\star }_y{\setminus }A_y \subset D_{\ge s_y} (y)\) and \(A_y{\setminus }A^{\star }_y \subset D_{\le s_y} (y)\), thus \(\inf _{x\in A^{\star }_y{\setminus }A_y} Q_y(x) \ge \sup _{x\in A_y{\setminus }A^{\star }_y} Q_y(x)\). If \(\inf _{x\in A^{\star }_y{\setminus }A_y} Q_y(x)=0\), then \(s_y =0\), hence \(D_{> s_y}(y)=\{x\vert f_{X\vert Y=y}(x)>0\}\), and thus \(G_y(s_y)=\text{ P }_{y} (X\in D_{> s_y} (y)) =1>\gamma \) contradictory to the assumptions of theorem 1. Hence \(\inf _{x\in A^{\star }_y{\setminus }A_y} Q_y(x)>0\). We obtain the inequalities

Hence \(\int _{A^{\star }_y{\setminus }A_y} f_{X}(x) \, \text{ d }\mu _1(x)\le \int _{A_y{\setminus }A^{\star }_y} f_{X}(x) \, \text{ d }\mu _1(x)\), and thus we have \( \int _{A^{\star }_y} f_{X}(x) \, \text{ d }\mu _1 (x)\le \int _{A_y} f_{X}(x) \, \text{ d }\mu _1(x)\).

The latter inequality was proven for arbitrary \(y\in R_2\). Hence by definition (3) we obtain \(V(A^{\star }) \le V(A)\). This completes the proof of assertion b) of theorem 1.

Proof of assertion c) of theorem 1

Consider \(y\in R_2\) where \(D_{= s_y} (y)= \bigcup _I B_i\) is a countable union of intervals \(B_i\) with disjoint interior. We can assume \(G_y(s_y^-)- G_y(s_y)= \text{ P }_y (X\in D_{= s_y}(y))>0\). From properties of the Lebesgue–Borel integral it follows that for any \(0\le \alpha _i\le \int _{B_i}f_X\, \text{ d }\mu _1\) there is a subinterval \(B_{i,\alpha _i}\subset B_i\) with \(\int _{B_{i,\alpha _i}}f_X\, \text{ d }\mu _1 =\alpha _i\). Let \(\rho = [\gamma -G_y(s_y)]/[G_y(s_y^-)- G_y(s_y)]\). For \(i\in I\), let \(\alpha _i= \rho \int _{B_{i}}f_X\, \text{ d }\mu _1\). Let \(E(y)= \bigcup _I B_{i,\alpha _i}\). Then

hence \(\text{ P }_{y} (X\in D_{> s_y}(y)\cup E(y)) = G_y(s_y) + \gamma -G_y(s_y)=\gamma \).
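As a numerical illustration of this construction, the following minimal Python sketch uses hypothetical values for \(\gamma \), \(G_y(s_y)\), \(s_y\) and the marginal masses \(\int _{B_i}f_X\, \text{ d }\mu _1\). It assumes, as the proof of assertion b) suggests, that \(Q_y = f_{X\vert Y=y}/f_X\) takes the constant value \(s_y\) on \(D_{= s_y}(y)\), so that the \(\text{ P }_y\)-mass of a subset of the level set is \(s_y\) times its marginal mass. The function name `split_level_set` is not from the paper.

```python
def split_level_set(gamma, g_s, s_y, marginal_masses):
    """Return (rho, alphas) for the sub-intervals B_{i,alpha_i} of the level set.

    gamma            prescribed level
    g_s              G_y(s_y) = P_y(X in D_{>s_y}(y))
    s_y              assumed constant value of Q_y on D_{=s_y}(y)
    marginal_masses  hypothetical values of the integrals of f_X over the B_i
    """
    # P_y-mass of the level set, i.e. G_y(s_y^-) - G_y(s_y), under the assumption above.
    level_set_prob = s_y * sum(marginal_masses)
    rho = (gamma - g_s) / level_set_prob
    alphas = [rho * mass for mass in marginal_masses]
    return rho, alphas


gamma, g_s, s_y = 0.95, 0.90, 2.0
marginal_masses = [0.010, 0.020, 0.015]

rho, alphas = split_level_set(gamma, g_s, s_y, marginal_masses)
# Coverage of D_{>s_y}(y) together with E(y), the union of the B_{i,alpha_i}.
coverage = g_s + s_y * sum(alphas)
print(rho, coverage)
```

With these numbers \(\rho = 5/9\), every \(\alpha _i\) stays below the mass of its interval \(B_i\), and the coverage equals \(\gamma \) up to rounding.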

1.2 Proof of proposition 2

We prove assertion a) of proposition 2. The proof of assertion b) is completely analogous. The sets of conditions a.i) and a.ii) are completely symmetric. Hence it suffices to prove the implication from a.i) to a.ii). Assume that the conditions a.i.1), a.i.2), a.i.3) are valid.

To prove the validity of a.ii.1), assume \(y\in R_2\) such that \(A_y\) is not an interval. Then there are \(x_1, x^{\prime }, x_2 \in R_1, x_1 < x^{\prime }< x_2\), with \(x_1, x_2\in A_y, x^{\prime }\notin A_y\). Hence \(y\notin A_{x^{\prime }}\), i.e., either \(y < A_{x^{\prime }}\), or \(y > A_{x^{\prime }}\). Consider the former case. By property a.i.2), \(y<A_{x_2}\), hence \(x_2\notin A_y\), contradicting the assumptions on \(x_2\). The second case \(y > A_{x^{\prime }}\) is treated analogously. It follows that \(A_y\) is an interval.

To prove the validity of a.ii.2), consider \(y_1, y_2 \in R_2, y_1 < y_2, x\in R_1\) with \(x < A_{y_1}\). Assume \(x^{\prime }\in A_{y_2}\) with \(x\ge x^{\prime }\). Then \(x^{\prime } \le x < A_{y_1}\). Hence \(x^{\prime } \in A_{y_2}{\setminus }A_{y_1}\). Since \(x\notin A_{y_1}\) we have \(y_1\notin A_{x}\). Because of the stipulated interval property of \(A_x\), see assumption a.i.1), we have either \(y_1< A_x\) or \(y_1> A_x\). Assume \(y_1> A_x\). Then by property a.i.3) also \(y_1> A_{x^{\prime }}\), hence \(y_2> A_{x^{\prime }}\), hence \(x^{\prime }\notin A_{y_2}\), in contradiction to the above result \(x^{\prime } \in A_{y_2}{\setminus }A_{y_1}\). Thus \(y_1< A_x\). From \(x^{\prime } \le x < A_{y_1}\) we obtain by property a.i.2) for all \(x^{\prime \prime }\in A_{y_1}\) that \(y_1< A_{x^{\prime \prime }}\), hence in particular \(x^{\prime \prime }\notin A_{y_1}\), in contradiction to the assumption \(x^{\prime \prime }\in A_{y_1}\). This final contradiction results from assuming an \(x^{\prime }\in A_{y_2}\) with \(x\ge x^{\prime }\). Hence \(x < A_{y_2}\). This proves the validity of a.ii.2).

The proof of the validity of a.ii.3) proceeds analogously.

Proofs related to Section 5

1.1 Proof of proposition 5

Proof of assertion a) of proposition 5

Let \(0<p_0 <p_1 <1\). We have

\([p_0;p_1]\ni t \mapsto \ln (t/(1-t))^m\) is a continuous function on \([p_0; p_1]\). Hence

Hence, by applying the well-known theorem on differentiation under the integral sign to (13), we find that \(v_{p_0,p_1,a,b}(x)\) can be differentiated with respect to \(x\) with the result given by Eq. (16). By Eq. (16) we have \(v^{\prime \prime }_{p_0,p_1,a,b}(x)>0\) on \([0;n]\). Hence \(v^{\prime }_{p_0,p_1,a,b}\) is strictly increasing on \([0;n]\). The remainder of assertion a) follows obviously.
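The differentiation step can be checked numerically. The sketch below is only an illustration: it assumes that Eq. (13) has the form \(v_{p_0,p_1,a,b}(x)=\int _{p_0}^{p_1} t^{x+a-1}(1-t)^{n-x+b-1}\, \text{ d }t\) and that the \(m\)-th derivative carries the additional factor \([\ln (t/(1-t))]^m\) under the integral. The function name `v_deriv`, the parameter values and the midpoint rule are choices made for this example.

```python
import math


def v_deriv(m, x, n, a, b, p0, p1, steps=2000):
    """Midpoint-rule value of the integral over [p0, p1] of
    ln(t/(1-t))**m * t**(x+a-1) * (1-t)**(n-x+b-1).

    For m = 0 this is the ASSUMED form of v(x); for m >= 1 it is the m-th
    derivative obtained by differentiating under the integral sign.
    """
    h = (p1 - p0) / steps
    total = 0.0
    for k in range(steps):
        t = p0 + (k + 0.5) * h
        weight = t ** (x + a - 1) * (1 - t) ** (n - x + b - 1)
        total += math.log(t / (1 - t)) ** m * weight
    return total * h


n, a, b, p0, p1 = 10, 2.0, 3.0, 0.1, 0.6   # hypothetical parameters
x, eps = 4.0, 1e-4

# Central finite difference of v versus the integral formula for v'.
finite_diff = (v_deriv(0, x + eps, n, a, b, p0, p1)
               - v_deriv(0, x - eps, n, a, b, p0, p1)) / (2 * eps)
first_deriv = v_deriv(1, x, n, a, b, p0, p1)

# Second derivative on a grid of x values in [0, n]; it should be positive.
second_derivs = [v_deriv(2, 0.5 * j, n, a, b, p0, p1) for j in range(2 * n + 1)]
print(finite_diff, first_deriv, min(second_derivs))
```

Under the stated assumption, the finite difference and the integral formula agree closely, and the second derivative is positive on the whole grid, in line with the strict monotonicity of \(v^{\prime }_{p_0,p_1,a,b}\).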

Proof of assertion b) of proposition 5

The first partial derivatives of the symmetric beta function are given by the equation \(\frac{\partial }{\partial s} B(s,t) = B(s,t) \Big [\psi (s)-\psi (s+t)\Big ]\), see Abramowitz and Stegun (1972), equation 6.6.8, where \(\psi \) is the digamma function. With Eqs. (9) and (13) we obtain the derivative (17). Since \(B(x+a,n-x+b)>0\), the sign of \(v^{\prime }_{0,1,a,b}(x)\) is determined by the sign of \(\psi (x+a)-\psi (n-x+b)\). The integral representation 6.3.21 of Abramowitz and Stegun (1972) shows that \(\psi \) is strictly increasing on \((0;+\infty )\), hence \([0;n] \ni x \mapsto \psi (x+a)-\psi (n-x+b)\) is strictly increasing.
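The role of the digamma function can be illustrated numerically. The following sketch is not part of the paper: it implements \(\psi \) with the standard recurrence \(\psi (x)=\psi (x+1)-1/x\) and the usual asymptotic series, compares a finite-difference derivative of \(\ln B(x+a,n-x+b)\) with \(\psi (x+a)-\psi (n-x+b)\), and checks the monotonicity on a grid. The parameter values and the helper names `digamma`, `log_beta` and `psi_difference` are hypothetical.

```python
import math


def digamma(x):
    """Digamma function for x > 0 (recurrence plus asymptotic series)."""
    shift = 0.0
    while x < 6.0:            # psi(x) = psi(x + 1) - 1/x
        shift -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = math.log(x) - 0.5 / x - inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 / 252))
    return shift + series


def log_beta(s, t):
    """Logarithm of the beta function B(s, t)."""
    return math.lgamma(s) + math.lgamma(t) - math.lgamma(s + t)


n, a, b = 10, 2.0, 3.0     # hypothetical parameters
eps = 1e-5


def psi_difference(x):
    """psi(x + a) - psi(n - x + b), the factor that fixes the sign of the derivative."""
    return digamma(x + a) - digamma(n - x + b)


# d/dx ln B(x+a, n-x+b) by central differences at one point.
x0 = 3.0
finite_diff = (log_beta(x0 + eps + a, n - x0 - eps + b)
               - log_beta(x0 - eps + a, n - x0 + eps + b)) / (2 * eps)

# Values of the digamma difference on a grid in [0, n].
grid = [0.5 * j for j in range(2 * n + 1)]
differences = [psi_difference(x_j) for x_j in grid]
print(finite_diff, psi_difference(x0))
```

For these parameters the difference is negative at \(x=0\), positive at \(x=n\), and increases along the grid, so there is a single change of sign from \(-\) to \(+\).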

Proof of assertion c) of proposition 5

Let \(0<p_0 < 1\). For \(p_0 <q \le 1, x\in [0;n]\) let

Evidently, \(\lim _{q\uparrow 1} h_{p_0,q,a,b}(x) = h_{p_0,1,a,b}(x) = v_{p_0,1,a,b}(x)\) for \(x\in [0;n]\). Analogously to the proof of assertion a) of proposition 5 we find

for \(m=0,1,\ldots \), and we see that there exists a value \(0\le z_{p_0,q,a,b}\le n\) such that \(h^{\prime }_{p_0,q,a,b}(x)<0\) for \(0 < x < z_{p_0,q,a,b}\), and \(h^{\prime }_{p_0,q,a,b}(x)>0\) for \( z_{p_0,q,a,b}< x < n\). From Eq. (24) we obtain for fixed \(x\in [0;n], m=0,1,\ldots \),

for \( p_0 <q < 1\), with the recursion

From (25) we find in particular for \(m=1,2,\ldots \)

Let \(p_0 < q_1<q_2<\cdots <1\) with \(\lim _k q_k=1, q_k>0.5\). From (27) we see that the sequence \((z_{p_0, q_k,a,b})_{k\in \mathbb{N }}\) is decreasing. Since \(0\le z_{p_0, q_k,a,b} \le n, (z_{p_0, q_k,a,b})_{k\in \mathbb{N }}\) converges to a value \(x_{p_0,1,a,b} \in [0;n]\). Let \(0<u <w <x_{p_0,1,a,b}\). Then \(0<u <w <z_{p_0, q_k,a,b}\) for all \(k\in \mathbb{N }\), thus \(h_{p_0,q_k,a,b}(u) > h_{p_0,q_k,a,b}(w)\) for all \(k\in \mathbb{N }\), and hence by convergence \(v_{p_0,1,a,b}(u) \ge v_{p_0, 1,a,b}(w)\). Let \(x_{p_0,1,a,b} <u <w <n\). Then there is a \(k_0\in \mathbb{N }\) with \(z_{p_0, q_k,a,b} <u <w <n\) for all \(k\ge k_0\). Thus \(h_{p_0,q_k,a,b}(u) < h_{p_0,q_k,a,b}(w)\) for all \(k\ge k_0\), and hence by convergence \(v_{p_0,1,a,b}(u) \le v_{p_0, 1,a,b}(w)\).

The proof of assertion d) of proposition 5 is completely analogous to the proof of assertion c).

1.2 Proof of proposition 6

Elementary calculus provides the derivative (18) in assertion a).

Proof of assertion b) of proposition 6

Consider assertion b) with the case \(0<p_0 <p_1 <1\). Let \(0<x<n\) and let the measure \(\mu _x\) on the Borel field in \([p_0;p_1]\) be defined by

For \(x\in (0;n)\) we obtain from Eq. (13) \(\mu _x([p_0;p_1])= v_{p_0,p_1,a,b}(x)\). With Eq. (16) we obtain for \(x\in (0;n)\)

The strict Cauchy–Schwarz inequality \(\;[\int _B \vert g_1 g_2\vert \, \text{ d }\mu _x]^2 < \int _B g_1^2 \, \text{ d }\mu _x(t) \int _B g_2^2 \, \text{ d }\mu _x(t)\;\) holds for \(g_1(t)= \ln (t/(1-t)), g_2(t)=1\), since these functions are not linearly dependent, see Hewitt and Stromberg (1969). Hence

Hence \({{\text{ d }}\over {\text{ d }} x} {v^{\prime }_{p_0,p_1,a,b}(x) \over v_{p_0,p_1,a,b}(x)} >0\) on \([0;n]\), and \([0;n]\ni x \mapsto v^{\prime }_{p_0,p_1,a,b}(x) / v_{p_0,p_1,a,b}(x)\) is strictly increasing. The remainder of assertion b) follows from Eq. (18) for the derivative \(Q_{p_0,p_1,a,b,y}^{\prime } \) on \([0;n]\).
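The Cauchy–Schwarz step can be illustrated with a small numerical experiment. The sketch assumes that \(\mu _x\) has the density \(t^{x+a-1}(1-t)^{n-x+b-1}\) on \([p_0;p_1]\), which is consistent with \(\mu _x([p_0;p_1])= v_{p_0,p_1,a,b}(x)\) but is not reproduced above. The name `moment` and all parameter values are hypothetical.

```python
import math


def moment(m, x, n, a, b, p0, p1, steps=2000):
    """Midpoint-rule integral of ln(t/(1-t))**m with respect to mu_x, where mu_x is
    ASSUMED to have density t**(x+a-1) * (1-t)**(n-x+b-1) on [p0, p1]."""
    h = (p1 - p0) / steps
    total = 0.0
    for k in range(steps):
        t = p0 + (k + 0.5) * h
        density = t ** (x + a - 1) * (1 - t) ** (n - x + b - 1)
        total += math.log(t / (1 - t)) ** m * density
    return total * h


n, a, b, p0, p1 = 10, 2.0, 3.0, 0.1, 0.6   # hypothetical parameters
grid = [0.5 * j for j in range(2 * n + 1)]

gaps = []     # v * v'' - (v')**2, positive by the strict Cauchy-Schwarz inequality
ratios = []   # v' / v, expected to increase in x
for x in grid:
    m0, m1, m2 = (moment(m, x, n, a, b, p0, p1) for m in (0, 1, 2))
    gaps.append(m0 * m2 - m1 ** 2)
    ratios.append(m1 / m0)

print(min(gaps), ratios[0], ratios[-1])
```

Since the derivative of \(v^{\prime }/v\) equals \((v\, v^{\prime \prime } - v^{\prime 2})/v^2\), positive gaps correspond to an increasing ratio, which is what the grid values show.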

Consider assertion b) with the case \(0=p_0, 1=p_1\). From Eq. (17) we obtain

The proof of assertion b) of proposition 5 demonstrates that \([0;n] \ni x \mapsto \psi (x+a)-\psi (n-x+b)\) has at most one change of sign on \([0;n]\), which is from \(-\) to \(+\) if it exists. Again, the remainder of assertion b) follows from Eq. (18) for the derivative \(Q_{p_0,p_1,a,b,y}^{\prime }\) on \([0;n]\).

Proof of assertion c) of proposition 6

For \(p_0 <q \le 1, x\in [0;n]\), consider \(h_{p_0,q,a,b}(x) \) as defined by Eq. (23). Proceeding analogously to the proof of assertion b) of proposition 6, we find that \({\text{ d } \over \text{ d } x} {h^{\prime }_{p_0,q,a,b}(x) \over h_{p_0,q,a,b}(x)} >0\) on \([0;n]\), and \([0;n]\ni x \mapsto h^{\prime }_{p_0,q,a,b}(x) / h_{p_0,q,a,b}(x)\) is strictly increasing for \(p_0 < q< 1\). In analogy to the definition of \(x_{p_0,q,a,b,y}\) in assertion b), define \(0\le z_{p_0,q,a,b,y}\le n\) by comparing \(h^{\prime }_{p_0,q,a,b}/ h_{p_0,q,a,b}\) with \(\ln (y /[ 1-y])\). From Eqs. (23) and (24) we find

With the recursion (26) and with the inequality (28) we obtain for fixed \(x\in [0;n]\)

for \(p_0 <q < 1, q>0.5\). Let \(p_0 < q_1<q_2<\cdots <1\) with \(\lim _k q_k=1, q_k>0.5\). From (29) we see that the sequence \((z_{p_0, q_k,a,b,y})_{k\in \mathbb{N }}\) is decreasing. Since \(0\le z_{p_0, q_k,a,b,y} \le n, (z_{p_0, q_k,a,b,y})_{k\in \mathbb{N }}\) converges to a value \(x_{p_0, 1,a,b, y} \in [0;n]\). The remainder of the proof proceeds analogously to the proof of assertion c) of proposition 5.

The proof of assertion d) of proposition 6 is completely analogous to the proof of assertion c).

Proofs related to Section 6

1.1 Proof of proposition 7

Consider the assumptions of assertion a). By proposition 2, \(A_x =\{y \in [p_0;p_1] \vert c_L(y) \le x\le c_U(y)\}\) is an interval for all \(x\in \{0,\ldots ,n\}\) with endpoints \(\inf A_x, \sup A_x\) increasing in \(x\). We have to prove that the endpoints \(\inf A_x, \sup A_x\) are elements of \(A_x\) for \(x\in \{0,\ldots ,n\}\). Let \(x\in \{0,\ldots ,n\}\). If \(A_x\) is a singleton, then clearly \(\inf A_x, \sup A_x \in A_x\). Let \(A_x\) have a nonempty interior, and let \((y_l)\) be a sequence from \(A_x\) with \(y_1 > y_2 >\ldots , \lim _l y_l= \inf A_x\). Then \(c_L(y_l)\le x \le c_U(y_l)\) for all \(l\). Hence by convergence \(c_L ({\inf A_x}) \le c_L ({\inf A_x}^+) \le x \le c_U ({\inf A_x}^+)=c_U ({\inf A_x})\), hence \(\inf A_x \in A_x\), and thus \(\inf A_x =\min A_x\). The proof of \(\sup A_x =\max A_x \in A_x\) is completely analogous.

Assertion b) is an application of proposition 2.

1.2 Proof of proposition 8

The proof makes use of the subsequent proposition 9 on the binomial OC \(L_{n,c}(y)\) defined by Eq. (22), see Uhlmann (1982).

Proposition 9

(Binomial OC) Let \(n\in \mathbb{N }, c \in \mathbb{N }_{0}, c\le n\).

For \(y \in (0;1)\) we have \(L^{\prime }_{n, c} (y) = - n \binom{n-1}{c} y^c (1-y)^{n -c-1} = -(n -c) \binom{n}{c} y^c (1-y)^{n-c-1}\). In case of \(c < n, L_{n,c}\) is strictly decreasing on the interval \([0;1]\) with \(L_{n,c}(0)=1, L_{n,c}(1)=0\). In case of \(c= n \) we have \(L_{n,c}(y) = L_{n, n}(y) = 1\) for \(y\in [0;1]\).
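The statements of proposition 9 are easy to check numerically. The sketch below assumes that Eq. (22), which is not reproduced here, defines the binomial OC in the standard way as \(L_{n,c}(y)=\sum _{i=0}^{c} \binom{n}{i} y^i (1-y)^{n-i}\). The function names and the values of \(n\), \(c\) and \(y\) are chosen for illustration only.

```python
from math import comb, isclose


def binomial_oc(n, c, y):
    """Binomial OC L_{n,c}(y), ASSUMED to be the binomial distribution function at c."""
    return sum(comb(n, i) * y ** i * (1 - y) ** (n - i) for i in range(c + 1))


def oc_derivative(n, c, y):
    """Closed-form derivative of L_{n,c} on (0, 1) as stated in proposition 9."""
    return -(n - c) * comb(n, c) * y ** c * (1 - y) ** (n - c - 1)


n, c, y, eps = 10, 3, 0.3, 1e-6   # hypothetical values

# Central finite difference versus the closed form.
finite_diff = (binomial_oc(n, c, y + eps) - binomial_oc(n, c, y - eps)) / (2 * eps)
closed_form = oc_derivative(n, c, y)

# The two coefficient forms n*C(n-1,c) and (n-c)*C(n,c) coincide.
same_coefficient = n * comb(n - 1, c) == (n - c) * comb(n, c)

# OC values on a grid in [0, 1]; they should decrease from 1 to 0 when c < n.
oc_values = [binomial_oc(n, c, j / 20) for j in range(21)]
print(finite_diff, closed_form, same_coefficient)
```

For \(n=10, c=3\) both coefficient forms equal 840, and the grid values of \(L_{n,c}\) fall from 1 at \(y=0\) to 0 at \(y=1\).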

Assertion a) of proposition 8 follows directly from the definition of the quantities \(p_{x,\gamma }, \widetilde{p}_{x, \gamma }\), and the monotonicity properties of the binomial OC explained by proposition 9.

For the proof of assertion b), let \(\gamma \ge 0.5\). Let \(0<x<n\). Then

hence \(\widetilde{p}_{x, \gamma }> p_{x, \gamma }\) since \(L_{n,x}\) is strictly decreasing on \([0;1]\). In the case of \(x=0\), we have by definition \(p_{x, \gamma }=0.0 <\widetilde{p}_{x, \gamma }\), and similarly in the case of \(x=n\) by definition \(p_{x, \gamma }<1.0=\widetilde{p}_{x, \gamma }\).

For the proof of assertion c), let \(x\in \{0,\ldots ,n\}, y\in (p_{x, \gamma }; \widetilde{p}_{x, \gamma }) \cap [p_0;p_1]\). Assume \(x\le c_L(y)-1\). Then we have \(x\le n-1\) and we find

in contradiction to the property (21). Now assume \(x\ge c_U(y)+1\). Then we have \(x\ge 1\) and we find

in contradiction to the property (21). This proves \(c_L(y) \le x\le c_U(y)\), and hence also \(y_L(x)\le y \le y_U(x)\).

Cite this article

Göb, R., Lurz, K. Design and analysis of shortest two-sided confidence intervals for a probability under prior information. Metrika 77, 389–413 (2014). https://doi.org/10.1007/s00184-013-0445-9

DOI: https://doi.org/10.1007/s00184-013-0445-9