Abstract

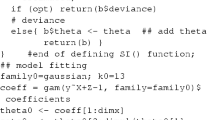

The single-index model is an important tool in multivariate nonparametric regression. This paper deals with M-estimators for the single-index model. Unlike existing M-estimators for the single-index model, the unknown link function is approximated by B-splines, and the M-estimators of the parameter and of the nonparametric component are obtained in one step. The proposed M-estimator of the unknown function is shown to attain the optimal global rate of convergence for nonparametric regression established by Stone (Ann Stat 8:1348–1360, 1980; Ann Stat 10:1040–1053, 1982), and the M-estimator of the parameter is \(\sqrt{n}\)-consistent and asymptotically normal. A small-sample simulation study shows that the proposed M-estimators are robust, and an application to real data illustrates their usefulness.
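As a minimal sketch of the one-step construction, the Python code below evaluates a joint M-criterion \(\sum_i \rho(y_i - B(x_i^\tau\beta)^\tau\delta)\) in the index parameter \(\beta\) and the spline coefficients \(\delta\). It assumes Huber's \(\rho\) with tuning constant 1.345 and a clamped cubic B-spline basis on \([0,1]\); the function names, knots and data are hypothetical, and the paper's exact criterion, knot placement and identifiability constraint on \(\beta\) may differ. Minimizing such a function jointly over \((\beta,\delta)\), rather than alternating between the two, would correspond to obtaining both estimators in one step.

```python
def huber_rho(t, c=1.345):
    """Huber's convex loss: t^2/2 for |t| <= c, c|t| - c^2/2 otherwise."""
    a = abs(t)
    return 0.5 * t * t if a <= c else c * a - 0.5 * c * c


def bspline_basis(u, knots, degree):
    """All B-spline basis values of the given degree at u (Cox-de Boor recursion).

    `knots` is a non-decreasing, clamped knot vector and u must lie in
    [knots[0], knots[-1]]; len(knots) - degree - 1 values are returned.
    """
    m = len(knots) - 1
    # Degree-0 indicators of the half-open knot intervals.
    vals = [1.0 if knots[i] <= u < knots[i + 1] else 0.0 for i in range(m)]
    if u == knots[-1]:
        # Close the last non-degenerate interval on the right.
        last = max(i for i in range(m) if knots[i] < knots[i + 1])
        vals[last] = 1.0
    for d in range(1, degree + 1):
        new = []
        for i in range(m - d):
            left = right = 0.0
            if knots[i + d] > knots[i]:
                left = (u - knots[i]) / (knots[i + d] - knots[i]) * vals[i]
            if knots[i + d + 1] > knots[i + 1]:
                right = (knots[i + d + 1] - u) / (knots[i + d + 1] - knots[i + 1]) * vals[i + 1]
            new.append(left + right)
        vals = new
    return vals


def criterion(beta, delta, X, y, knots, degree=3, c=1.345):
    """Joint M-criterion sum_i rho(y_i - B(x_i' beta)' delta) in (beta, delta)."""
    total = 0.0
    for xi, yi in zip(X, y):
        u = sum(a * b for a, b in zip(xi, beta))  # single index x_i' beta
        fit = sum(bj * dj for bj, dj in zip(bspline_basis(u, knots, degree), delta))
        total += huber_rho(yi - fit, c)
    return total


# Hypothetical setup: clamped cubic knots on [0, 1] (7 basis functions).
knots = [0.0] * 4 + [0.25, 0.5, 0.75] + [1.0] * 4
beta = [0.6, 0.8]                                # unit-norm index direction
X = [[0.3, 0.4], [0.5, 0.5], [0.1, 0.2]]         # indices 0.5, 0.7, 0.22
y = [1.0, 1.0, 11.0]                             # third response is an outlier
# With delta = 1 the spline fit is identically 1 (partition of unity).
print(criterion(beta, [1.0] * 7, X, y, knots))
```

Because \(\rho\) grows only linearly beyond the tuning constant, the outlying third response contributes about 12.5 to the criterion rather than \(10^2/2=50\) under the quadratic loss \(t^2/2\).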


References

Carroll RJ, Fan J, Gijbels I, Wand MP (1997) Generalized partially linear single-index models. J Am Stat Assoc 92:477–489

Cheng G, Huang JZ (2010) Bootstrap consistency for general semiparametric M-estimation. Ann Stat 38:2884–2915

Cox DD (1983) Asymptotics for M-type smoothing splines. Ann Stat 11:530–551

Delecroix M, Hristache M, Patilea V (2006) On semiparametric M-estimation in single-index regression. J Stat Plan Infer 136:730–769

Eggleston HG (1958) Convexity, Cambridge tracts in mathematics and mathematical physics, vol 47. Cambridge University Press, Cambridge

Elmi A, Ratcliffe SJ, Parrey S, Guo WS (2011) A B-spline based semiparametric nonlinear mixed effects model. J Comput Graph Stat 20:492–509

Fan J, Hu T, Truong Y (1994) Robust nonparametric function estimation. Scand J Stat 21:433–446

Gao J, Liang H (1997) Statistical inference in single-index and partially nonlinear models. Ann Inst Stat Math 49:493–517

Hampel FR, Ronchetti EM, Rousseeuw PJ, Stahel WA (1986) Robust statistics: the approach based on influence functions. Wiley, New York

Härdle W, Stoker TM (1989) Investigating smooth multiple regression by the method of average derivatives. J Am Stat Assoc 84:986–995

Härdle W, Hall P, Ichimura H (1993) Optimal smoothing in single-index models. Ann Stat 21:157–178

He X, Shi P (1994) Convergence rate of B-spline estimators of nonparametric conditional quantile functions. J Nonparametric Stat 3:299–308

He X, Shi P (1996) Bivariate tensor—product B-spline in a partly linear model. J Multivar Anal 58:162–181

He X, Zhu ZY, Fung WK (2002) Estimation in a semiparametric model for longitudinal data with unspecified dependence structure. Biometrika 89:579–590

He X, Fung WK, Zhu ZY (2005) Robust estimation in generalized partial linear models for clustered data. J Am Stat Assoc 100:1176–1184

Hristache M, Juditsky A, Spokoiny V (2001) Direct estimation of the index coefficient in a single-index model. Ann Stat 29:595–623

Huang JZ (2003) Local asymptotics for polynomial spline regression. Ann Stat 31:1600–1635

Huang JZ, Liu L (2006) Polynomial spline estimation and inference of proportional hazards regression models with flexible relative risk form. Biometrics 62:793–802

Huang J, Wu C, Zhou L (2002) Varying-coefficient models and basis function approximations for the analysis of repeated measurements. Biometrika 89:111–128

Huber PJ (1964) Robust estimation of a location parameter. Ann Math Stat 35:73–101

Huber PJ (1981) Robust statistics. Wiley, New York

Ichimura H (1993) Semiparametric least squares (SLS) and weighted SLS estimation of single-index models. J Econom 58:71–120

Jiang CR, Wang JL (2011) Functional single index models for longitudinal data. Ann Stat 39:362–388

Kozek AS (2003) On M-estimators and normal quantiles. Ann Stat 31:1170–1185

Li JB, Zhang RQ (2011) Partially varying coefficient single-index proportional hazards regression models. Comput Stat Data Anal 55:389–400

Liang H, Liu X, Li RZ, Tsai CL (2010) Estimation and testing for partially linear single-index models. Ann Stat 38:3811–3836

Lin X, Wang N, Welsh AH, Carroll RJ (2004) Equivalent kernel of smoothing splines in nonparametric regression for clustered/longitudinal data. Biometrika 91:177–193

Naik P, Tsai CL (2000) Partial least squares estimator for single-index models. J R Stat Soc Ser B 62:763–771

Powell JL, Stock JH, Stoker TM (1989) Semiparametric estimation of index coefficients. Econometrica 57:1403–1430

Rice J (1986) Convergence rates for partially splined models. Stat Probab Lett 4:203–208

Schumaker LL (1981) Spline functions. Wiley, New York

Shi P, Li G (1995) Optimal global rates of convergence of B-spline M-estimators for nonparametric regression. Stat Sinica 5:303–318

Silverman BW (1984) Spline smoothing: the equivalent variable kernel method. Ann Stat 12:898–916

Speckman P (1988) Kernel smoothing in partial linear models. J R Stat Soc Ser B 50:413–436

Stoker TM (1986) Consistent estimation of scaled coefficients. Econometrica 54:1461–1481

Stone C (1980) Optimal rates of convergence for nonparametric estimators. Ann Stat 8:1348–1360

Stone C (1982) Optimal global rates of convergence for nonparametric regression. Ann Stat 10:1040–1053

Wang N (2003) Marginal nonparametric kernel regression accounting for within-subject correlation. Biometrika 90:43–52

Wang L, Yang LJ (2009) Spline estimation of single-index models. Stat Sinica 19:765–783

Welsh AH (1996) Robust estimation of smooth regression and spread functions and their derivatives. Stat Sinica 6:347–366

Welsh AH, Lin X, Carroll RJ (2002) Marginal nonparametric regression: locality and efficiency of spline and kernel methods. J Am Stat Assoc 97:482–493

Wu WB (2007) M-estimation of linear models with dependent errors. Ann Stat 35:495–521

Wu TZ, Yu KM, Yu Y (2010) Single-index quantile regression. J Multivar Anal 101:1607–1621

Xia YC, Härdle W (2006) Semi-parametric estimation of partially linear single-index models. J Multivar Anal 97:1162–1184

Xia YC, Tong H, Li WK, Zhu LX (2002) An adaptive estimation of dimension reduction space. J R Stat Soc Ser B 64:363–410

Yu Y, Ruppert D (2002) Penalized spline estimation for partially linear single-index models. J Am Stat Assoc 97:1042–1054


Appendix


In this section, we prove Theorems 1 and 2. Let \(H_n^2=NZ_n^\tau Z_n\) and \(z_i=H_n^{-1}B(x_i^\tau \beta _0)\), and let \(\lambda _n\) denote the smallest eigenvalue of \(N/nH_n^2\).

Lemma 6.1

If Assumptions 1–2 are satisfied, then

where \(I\) is an identity matrix.

Proof

For the proof of Lemma 6.1, see Gao and Liang (1997). \(\square \)

Lemma 6.2

Under Assumption 1, there exists a constant \(c\) such that

where \(k_n\), the number of knots, is of the same order as \(N\).

This lemma quantifies the approximation power of B-splines. Its proof follows readily from Corollary 6.21 of Schumaker (1981).
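To see the rate in Lemma 6.2 numerically, the sketch below uses the simplest case: degree-1 B-splines (hat functions) on \(k\) equally spaced subintervals of \([0,1]\), with coefficients equal to the function values at the knots. For a smooth \(g\) this piecewise-linear spline has sup-norm error of order \(k^{-2}\), i.e. the lemma's \(k_n^{-r}\) with \(r=2\) (for degree-1 splines the attainable order is 2, whatever the smoothness of \(g\)), so doubling \(k\) should divide the error by roughly 4. The choice \(g=\exp\), the interpolating coefficients and the evaluation grid are illustrative assumptions, not the construction used in the paper.

```python
import math


def hat(x, j, k):
    """Degree-1 B-spline (hat function) centred at knot t_j = j/k on [0, 1]."""
    return max(0.0, 1.0 - abs(k * x - j))


def linear_spline(g, k):
    """Linear B-spline interpolant s(x) = sum_j g(t_j) B_j(x) on k equal subintervals."""
    coef = [g(j / k) for j in range(k + 1)]
    return lambda x: sum(c * hat(x, j, k) for j, c in enumerate(coef))


def sup_error(g, k, grid=4001):
    """Approximate sup_{x in [0,1]} |g(x) - s(x)| on an equally spaced evaluation grid."""
    s = linear_spline(g, k)
    xs = [i / (grid - 1) for i in range(grid)]
    return max(abs(g(x) - s(x)) for x in xs)


errors = {k: sup_error(math.exp, k) for k in (8, 16, 32, 64)}
for k in (8, 16, 32):
    # Halving the mesh should cut the error by about 2**r = 4 when r = 2.
    print(k, errors[k], errors[k] / errors[2 * k])
```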

Lemma 6.3

Assume that \(\lim _{n\rightarrow \infty } n^{\gamma -1}k_n^2=0\) for some \(\gamma \ge 0\). Then, with probability one,

A complete proof can be found in Shi and Li (1995).
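For orientation, assuming the knot number \(k_n\asymp n^{1/(2r+1)}\) that is used in the proof of Lemma 6.4, the rate condition of Lemma 6.3 reduces to a simple restriction on \(\gamma\):

```latex
n^{\gamma-1}k_n^{2}\asymp n^{\gamma-1}\,n^{2/(2r+1)}
= n^{\gamma-\frac{2r-1}{2r+1}}\longrightarrow 0
\iff 0\le\gamma<\frac{2r-1}{2r+1},
\qquad\text{e.g. } r=2:\ 0\le\gamma<\tfrac{3}{5}.
```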

Proof of Theorem 1

By Lemma 6.2, there exists a constant \(M\) such that

Thus,

Therefore, to prove that (2.5) holds, it suffices to show that

Denote \(V^*(\theta _0)=(I-P)V(\theta _0)= (v_1^*(\theta _0),\ldots ,v_n^*(\theta _0))^\tau \) and \(S_n=V^*(\theta _0)^\tau V^*(\theta _0)\).
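The construction of \(V^*(\theta_0)\) and \(S_n\) can be illustrated numerically. This excerpt does not define \(P\); the sketch below assumes it is the orthogonal projection \(Z_n(Z_n^\tau Z_n)^{-1}Z_n^\tau\) onto the column space of \(Z_n\), whose \(i\)th row is taken to be \(B(x_i^\tau\beta_0)^\tau\). The random index values, the hat-function basis standing in for \(B\), and the random rows of \(V\) are hypothetical. Under these assumptions the printed value \(\max|V^*(\theta_0)^\tau Z_n|\) is zero up to rounding, which is the property behind the identity \(\sum_{i=1}^n\tilde{v_i}(\theta_0)\tilde{B}(x_i^\tau\beta_0)^\tau=0\) used later in the proof.

```python
import random


def solve(A, b):
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def residualize(Z, v):
    """Return (I - P) v, where P = Z (Z'Z)^{-1} Z' projects onto the column space of Z."""
    q = len(Z[0])
    ZtZ = [[sum(row[a] * row[b] for row in Z) for b in range(q)] for a in range(q)]
    Ztv = [sum(row[a] * vi for row, vi in zip(Z, v)) for a in range(q)]
    coef = solve(ZtZ, Ztv)
    return [vi - sum(c * z for c, z in zip(coef, row)) for row, vi in zip(Z, v)]


random.seed(0)
n, p, k = 60, 3, 4
u = [random.random() for _ in range(n)]                                     # stand-ins for x_i' beta_0
Z = [[max(0.0, 1.0 - abs(k * ui - j)) for j in range(k + 1)] for ui in u]  # rows B(x_i' beta_0)'
V = [[random.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]          # rows v_i(theta_0)'

# Columns of V* = (I - P) V, then S_n = V*' V*.
cols = [residualize(Z, [row[a] for row in V]) for a in range(p)]
Vstar = [[cols[a][i] for a in range(p)] for i in range(n)]
S_n = [[sum(Vstar[i][a] * Vstar[i][b] for i in range(n)) for b in range(p)] for a in range(p)]
# V*' Z_n vanishes up to rounding error because (I - P) Z_n = 0.
cross = max(abs(sum(Vstar[i][a] * Z[i][j] for i in range(n))) for a in range(p) for j in range(k + 1))
print(cross)
```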

Let

To establish (6.7), we first show that \(||\hat{\theta }||=O_p(k_n^{1/2})\). Let \(\tilde{v_i}(\theta _0)=S_n^{-1/2}v_i^*(\theta _0)\) and \(\tilde{B}(x_i^\tau \beta _0)=H_n^{-1}B(x_i^\tau \beta _0)N^{1/2}\).

Then, we have that

which is minimized at \(\hat{\theta }\), where \(R^*(\theta )=B^{\prime }(x_i^\tau \beta _0)^\tau \delta _0 x_i^\tau S_n^{-1/2}\theta _1-B^{\prime }(x_i^\tau \beta ^*)^\tau \delta x_i^\tau S_n^{-1/2}\theta _1\) and \(\beta ^*\) lies between \(\beta \) and \(\beta _0\).

By the properties of B-splines, for each \(h_j(x_i^\tau \beta _0)\) there exists a constant vector \(s_j\), \(j=1,\ldots ,N\), such that

where \(\tilde{R}_{nij}\) is the remainder of the B-spline approximation to \(h_j(x_i^\tau \beta _0)\). By Lemma 6.2, \(g^{\prime }(x_i^\tau \beta _0)x_i=B^{\prime }(x_i^\tau \beta _0)^\tau \delta _0 x_i+\tilde{R}_{ni}\) with \(\sup _{1\le i\le n}|\tilde{R}_{ni}|\le k_n^{-(r-1)}\). Then, by Assumption 3, there exist matrices \(G\) and \(W_n\) such that

where \(W_n=\tilde{R}_n+U_n\), \(\tilde{R}_n=(\tilde{R}_{nij})_{n\times N}\), \(U_n=(u_{ij})_{n\times N}\) and \(G=(s_1,\ldots ,s_N)\). Hence, each column of \(V^*(\theta _0)\) is of order \(O_p(n^{1/2})\),

Therefore, by (6.8) and Lemmas 6.1, 6.2 and 6.3,

Thus, when \(|\theta |\le L\), \(\delta =\delta _0+O_p(Nn^{-1/2})\). Because the derivative of \(g(\cdot )\) is continuous and bounded, Lemma 6.2 gives

Since the support of \(X\) is convex, when \(|\theta |\le L\),

Therefore

By Assumptions 4–5 and an argument similar to that for Lemma 6.4 below, we have, for any \(L>0\),

Note that \(|k_n^{-1/2}\sum _{i=1}^n\tilde{v_i}(\theta _0)R_{ni}b| =||k_n^{-1/2}S_n^{-\frac{1}{2}}(W_n^\tau (I-P)+G^\tau Z_n^\tau (I-P))r_n||=o_p(1)\), where \(r_n\) is the vector with components \(R_{ni}b\), and that \(R^*(\theta )=O_p(n^{-1/2})=o_p(R_{ni})\) for any \(L>0\).

By Assumptions 4–5 and the fact that \(\sum _{i=1}^n\tilde{v_i} (\theta _0)\tilde{B}(x_i^\tau \beta _0)^\tau =0\), we have

By the Tchebychev inequality and Assumption 4, we obtain

Hence, \(\sup _{||\theta ||=L} k_n^{-1/2}|\sum _{i=1}^n \tilde{v_i}(\theta _0)^\tau \theta _1\psi (e_i)|=O_p(L)\).

Similarly, \(\sup _{||\theta ||=L} k_n^{-1/2}|\sum _{i=1}^n \tilde{B}(x_i^\tau \beta _0)^\tau \theta _2\psi (e_i)|=O_p(L)\).
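The Chebyshev step rests on a second-moment identity. Since \(\sum_{i=1}^n\tilde{v_i}(\theta_0)\tilde{v_i}(\theta_0)^\tau=S_n^{-1/2}S_nS_n^{-1/2}=I\), if the \(\psi(e_i)\) are independent with mean zero (an assumption made here), then \(E|\sum_{i=1}^n\tilde{v_i}(\theta_0)\psi(e_i)|^2=pE\psi(e)^2\); by the Cauchy–Schwarz inequality, \(\sup_{|\theta_1|\le L}|\sum_{i}\tilde{v_i}(\theta_0)^\tau\theta_1\psi(e_i)|\le L|\sum_i\tilde{v_i}(\theta_0)\psi(e_i)|\), which is \(O_p(L)\). The Monte Carlo sketch below checks the identity with an arbitrary orthonormal design in place of \(\tilde{v_i}(\theta_0)\), Huber's \(\psi\) and standard normal errors; all of these are illustrative assumptions.

```python
import math
import random


def huber_psi(t, c=1.345):
    """Huber's score function psi = rho', i.e. t clipped to [-c, c]."""
    return max(-c, min(c, t))


def gram_schmidt(cols):
    """Orthonormalize a list of column vectors (classical Gram-Schmidt)."""
    out = []
    for v in cols:
        w = list(v)
        for q in out:
            proj = sum(a * b for a, b in zip(q, w))
            w = [wi - proj * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(sum(wi * wi for wi in w))
        out.append([wi / norm for wi in w])
    return out


random.seed(1)
n, p, reps, c = 40, 3, 4000, 1.345
Q = gram_schmidt([[random.gauss(0, 1) for _ in range(n)] for _ in range(p)])
# Rows (Q[0][i], ..., Q[p-1][i]) play the role of v~_i: sum_i v~_i v~_i' = I_p.

# E psi(e)^2 for e ~ N(0, 1): E[e^2; |e| <= c] + c^2 P(|e| > c).
Phi = 0.5 * (1 + math.erf(c / math.sqrt(2)))
phi = math.exp(-c * c / 2) / math.sqrt(2 * math.pi)
E_psi2 = (2 * Phi - 1) - 2 * c * phi + 2 * c * c * (1 - Phi)

total = 0.0
for _ in range(reps):
    psi = [huber_psi(random.gauss(0, 1), c) for _ in range(n)]
    S = [sum(Q[a][i] * psi[i] for i in range(n)) for a in range(p)]
    total += sum(s * s for s in S)
mc = total / reps
print(mc, p * E_psi2)  # Monte Carlo mean of |sum_i v~_i psi(e_i)|^2 vs p E psi(e)^2
```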

Thus, it follows from (6.13) that, for sufficiently large \(L\),

with probability tending to 1 as \(n\rightarrow \infty \). Combining (6.12) and (6.14) yields

which implies, by the convexity of \(\rho \), that

We then conclude that \(||\hat{\theta }||=O_p(k_n^{1/2})\). By (6.8), we have

Thus

Hence,

Since the derivative of \(g(\cdot )\) is bounded and the support of \(X\) is compact,

This completes the proof of Theorem 1.
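As a heuristic check of the knot rate \(k_n=n^{1/(2r+1)}\) used in this appendix (the standard bias-variance balance for spline estimators, not the argument of the proof above), one can equate the approximation error of Lemma 6.2 with the usual stochastic error of a spline fit with \(k_n\) knots, assumed here to be of order \((k_n/n)^{1/2}\):

```latex
\underbrace{k_n^{-r}}_{\text{approximation error}}
\asymp \underbrace{\Big(\frac{k_n}{n}\Big)^{1/2}}_{\text{stochastic error}}
\iff k_n^{2r+1}\asymp n
\iff k_n\asymp n^{1/(2r+1)},
\qquad\text{so that } k_n^{-r}\asymp\Big(\frac{k_n}{n}\Big)^{1/2}\asymp n^{-r/(2r+1)}.
```

The resulting rate \(n^{-r/(2r+1)}\) is Stone's (1982) optimal global rate for a one-dimensional regression function with smoothness \(r\), consistent with the claim in the abstract.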

To obtain the asymptotic normality of \(\hat{\beta }_0\), we make use of several asymptotic linearization results analogous to Lemmas 6.3 and 6.4 of He and Shi (1996); their proofs follow the arguments given there.

Lemma 6.4

Under the assumptions of Theorem 2, for any \(L>0\) and \(M_0>0\), we have

where \(E_e\) is the expectation with respect to \(e\).

Proof

Because \(f_e(\cdot )\) is continuous at 0, there exist two positive numbers \(\omega _1,\omega _2\) such that \(|f_e(t)|<\omega _1\) whenever \(|t|\le \omega _2\). By (6.13), (6.14) and Lemma 6.2, we have

To prove (6.19), it suffices to show that, for any given \(\varepsilon >0\),

Partition \(\Gamma =\{\theta _1:|\theta _1|\le 1, \theta _1\in R^{p}\}\) into \(K_n\) disjoint parts \(\Gamma _1,\ldots ,\Gamma _{K_n}\), each of diameter at most \(q_0=k_n\varepsilon /(8b_2M\omega _2n)\). Then \(K_n\le (2\sqrt{p}/q_0+1)^p\). Choosing \(\theta _{1j}\in \Gamma _j\) for each \(j=1,\ldots , K_n\), by Assumptions 5–6 and Lemma 6.3 we have

where \(I(\cdot )\) is the indicator function. By Assumption 6 and the mean value theorem, we have

It follows again from Assumption 6 that

Therefore, given \(X^{*}=(X_1,\ldots ,X_n)\), by (6.23), we have

By (6.21)–(6.24) and Bernstein's inequality, for any given \(\varepsilon >0\) we have

where \(\gamma =\varepsilon ^2/(8b_2^2M^2\omega _1\omega _2)\). Since \(k_n=n^{1/(2r+1)}\), (6.19) holds. This proves Lemma 6.4. \(\square \)
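The bound \(K_n\le(2\sqrt{p}/q_0+1)^p\) in the proof of Lemma 6.4 can be obtained from a cubic grid; the proof does not say which partition is used, so the following is one construction consistent with it. Cover \([-1,1]^p\supset\Gamma\) by \(m^p\) congruent half-open cubes with \(m=\lceil 2\sqrt{p}/q_0\rceil\); each has side \(2/m\le q_0/\sqrt{p}\), hence diameter at most \(q_0\), and \(m\le 2\sqrt{p}/q_0+1\). Intersecting the cubes with \(\Gamma\) gives at most \(m^p\) disjoint parts. The sketch checks both facts for a few hypothetical values of \(p\) and \(q_0\).

```python
import math


def grid_partition_count(p, q0):
    """Number of congruent sub-cubes of [-1, 1]^p and their diameter.

    Each axis is split into m = ceil(2 sqrt(p) / q0) equal pieces of side 2/m,
    so every sub-cube has diameter (2/m) sqrt(p) <= q0.
    """
    m = math.ceil(2 * math.sqrt(p) / q0)
    side = 2.0 / m
    return m ** p, side * math.sqrt(p)


for p in (1, 2, 3, 5):
    for q0 in (0.05, 0.3, 1.0):
        count, diam = grid_partition_count(p, q0)
        bound = (2 * math.sqrt(p) / q0 + 1) ** p
        print(p, q0, count, round(bound, 1), diam <= q0 + 1e-12)
```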

Lemma 6.5

If the assumptions of Theorem 2 hold, then

where \(\sup _{|\theta _1|\le M_0,|\theta _2|\le Lk_n^{1/2}}|r(\theta _1,\theta _2)|=o_p(1)\).

The proof of Lemma 6.5 is similar to that of (6.16) and is omitted.

Proof of Theorem 2

Let \(\hat{\theta }^\tau =(\hat{\theta }_1^\tau , \hat{\theta }_2^\tau )\) be as in the proof of Theorem 1, and let \(\tilde{\theta }_1=b^{-1}\sum _{i=1}^n\tilde{v_i}(\theta _0)\psi (e_i)\). By Lemmas 6.4 and 6.5 and the triangle inequality, for any \(L>0\) and \(\varepsilon >0\), we obtain

Notice that, as \(L\rightarrow \infty \), \(P\{|\tilde{\theta }_1|\le L\}\rightarrow 1\) and \(P\{|\hat{\theta }_2|\le Lk_n^{1/2}\}\rightarrow 1\). Then

Therefore, for \(\theta _1\in R^p\) with \(|\theta _1-\tilde{\theta }_1|=\varepsilon \), we obtain

It follows from the above formula that

According to Corollary 25 of Eggleston (1958), we have

By the definition of \(\hat{\theta }\), we obtain

According to Lemma 6.1, the central limit theorem and Slutsky’s theorem, we have
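In the simplest special case, a location model with \(\tilde{v_i}(\theta_0)=n^{-1/2}\), the representation \(\tilde{\theta}_1=b^{-1}\sum_{i=1}^n\tilde{v_i}(\theta_0)\psi(e_i)\) and the central limit theorem give a normal limit with variance \(E\psi(e)^2/b^2\). The Monte Carlo sketch below checks this for Huber's location M-estimator with known unit scale and standard normal errors, assuming \(b=E\psi'(e)\) (the constant \(b\) is not defined in this excerpt); the tuning constant, sample size and number of replications are illustrative choices.

```python
import math
import random
import statistics


def huber_location(x, c=1.345, tol=1e-8, max_iter=200):
    """Huber M-estimate of location (unit scale) by iteratively reweighted means.

    The weights psi(r)/r = min(1, c/|r|) make the fixed point solve sum_i psi(x_i - mu) = 0.
    """
    mu = statistics.median(x)
    for _ in range(max_iter):
        w = [1.0 if abs(xi - mu) <= c else c / abs(xi - mu) for xi in x]
        new = sum(wi * xi for wi, xi in zip(w, x)) / sum(w)
        if abs(new - mu) < tol:
            return new
        mu = new
    return mu


c = 1.345
Phi = 0.5 * (1 + math.erf(c / math.sqrt(2)))
phi = math.exp(-c * c / 2) / math.sqrt(2 * math.pi)
b = 2 * Phi - 1                                             # E psi'(e) for e ~ N(0, 1)
E_psi2 = (2 * Phi - 1) - 2 * c * phi + 2 * c * c * (1 - Phi)
asym_var = E_psi2 / b ** 2                                  # sandwich variance E psi^2 / b^2

random.seed(2)
n, reps = 100, 1500
est = [huber_location([random.gauss(0, 1) for _ in range(n)], c) for _ in range(reps)]
mc_var = n * sum(e * e for e in est) / reps                 # true location is 0
print(mc_var, asym_var)
```

For \(c=1.345\) the theoretical variance is about 1.053, i.e. roughly 95% efficiency relative to the sample mean under normal errors.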


Zou, Q., Zhu, Z. M-estimators for single-index model using B-spline. Metrika 77, 225–246 (2014). https://doi.org/10.1007/s00184-013-0434-z


DOI: https://doi.org/10.1007/s00184-013-0434-z