Abstract

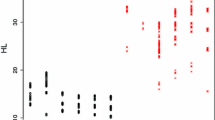

The finite mixture modeling approach is widely used to analyze bimodal or multimodal data when each observation is recorded individually. In some applications, however, the analysis becomes substantially more challenging because the available data are grouped into categories. In this work, we assume that the observed data are grouped into distinct non-overlapping intervals and follow a finite mixture of normal distributions. For inference on the model parameters, we propose a parametric approach that accounts for the categorical nature of the data. The main idea of our method is to impute the missing information in the original data within a Bayesian framework using Gibbs sampling. The proposed method is compared with the maximum likelihood approach, which uses the Expectation-Maximization algorithm to estimate the model parameters, and is illustrated with an application to the Old Faithful geyser data.

Acknowledgments

We thank the Editor and two anonymous reviewers for their insightful comments, which helped improve the content of the paper. We also acknowledge the support of the National Science Council (NSC) of Taiwan, Grant NSC101-2118-M-035-004-MY2 (Lee).

Appendices

Appendix 1: Conditional distribution of \({\mathbf {Z}}_{i}\) given \(\varvec{\Psi }\) and \(D_{ik}=1\)

The conditional distribution of \({\mathbf {D}}_i\) given \((\varvec{\Psi },Z_{ri}=1)\) is the multinomial distribution \(\mathrm{Multinomial}\left( 1,P_{1r}(\varvec{\Theta }_r), \ldots ,P_{Kr}(\varvec{\Theta }_r)\right) \). Hence, conditionally on \(\varvec{\Psi }\) and \(Z_{ri} = 1\), the probability density function (pdf) of \(\left( D_{i1},\ldots ,D_{iK}\right) \) can be expressed as \(\prod ^K_{k=1}\{P_{kr}(\varvec{\Theta }_r)\}^{D_{ik}}\). It follows that the general expression of the pdf of \(\left( D_{i1},\ldots ,D_{iK}\right) \) given \((Z_{1i},\ldots ,Z_{Ri})\) is \(\prod ^R_{r=1} [\prod ^K_{k=1}\{P_{kr}(\varvec{\Theta }_r)\}^{D_{ik}}]^{Z_{ri}}\). As a result, the joint pdf of \(\left( D_{i1},\ldots ,D_{iK}\right) \) and \((Z_{1i},\ldots ,Z_{Ri})\) is given as follows:

Moreover, the marginal pdf of \((D_{i1} = d_{i1},\ldots ,D_{iK} = d_{iK})\) is given as

Therefore,

and
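As a sketch of the steps in this appendix, write \(\pi _r = P(Z_{ri}=1\mid \varvec{\Psi })\) for the mixing proportion of component \(r\); the symbol \(\pi _r\) is an assumption introduced here for illustration and may differ from the notation of the main text. Multiplying the conditional pdf of \({\mathbf {D}}_i\) by \(\prod ^R_{r=1}\pi _r^{Z_{ri}}\), summing over the components, and applying Bayes' rule would then give:

\[
\begin{aligned}
f({\mathbf {d}}_i, {\mathbf {z}}_i \mid \varvec{\Psi }) &= \prod ^R_{r=1}\Big [\pi _r \prod ^K_{k=1}\{P_{kr}(\varvec{\Theta }_r)\}^{d_{ik}}\Big ]^{z_{ri}},\\
f({\mathbf {d}}_i \mid \varvec{\Psi }) &= \sum ^R_{r=1}\pi _r \prod ^K_{k=1}\{P_{kr}(\varvec{\Theta }_r)\}^{d_{ik}},\\
P(Z_{ri}=1 \mid D_{ik}=1, \varvec{\Psi }) &= \frac{\pi _r P_{kr}(\varvec{\Theta }_r)}{\sum ^R_{s=1}\pi _s P_{ks}(\varvec{\Theta }_s)}.
\end{aligned}
\]

Under this notation, \({\mathbf {Z}}_i\) given \(\varvec{\Psi }\) and \(D_{ik}=1\) is multinomial with one trial and the cell probabilities in the last line, for \(r=1,\ldots ,R\).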

Appendix 2: Joint probability density function of \((x_i,{\mathbf {D}}_{i},{\mathbf {Z}}_{i},\varvec{\Psi })\)

The conditional pdf of \(x_i\) given \(D_{ik}=1\) and \(Z_{ri}=1\) is specified as in (3). It follows that the general expression of the pdf of \(x_i\) given \(\varvec{\Psi }\), \({\mathbf {D}}_{i}\) and \({\mathbf {Z}}_{i}\) can be expressed as

and the joint pdf of \((x_i, {\mathbf {D}}_i, {\mathbf {Z}}_i, \varvec{\Psi })\) is

Under the assumption that the components of the mixture distribution are normal we have

for \(r=1,\ldots ,R\). Moreover, noting that \(\sum _{k=1}^{K}D_{ik}=1, i=1,\ldots ,n\), it is straightforward to see that
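The imputation step that these conditional distributions support can be illustrated with a minimal Python sketch. It assumes normal components, known interval endpoints, and mixing proportions passed as `weights`; all function names, the interval endpoints, and the parameter values are hypothetical and are not taken from the paper. The sketch draws a component label for a grouped observation with probability proportional to `weights[r] * P_kr`, then draws \(x_i\) from that component's normal distribution restricted to the observed interval. Inverse-CDF sampling is used only because it is simple; it is not necessarily the scheme the authors implemented.

```python
import random
from statistics import NormalDist


def bin_probs(edges, mu, sigma):
    """P_kr: probability that a N(mu, sigma^2) draw falls in each interval
    (edges[k], edges[k + 1])."""
    comp = NormalDist(mu, sigma)
    cdf = [comp.cdf(e) for e in edges]
    return [cdf[k + 1] - cdf[k] for k in range(len(edges) - 1)]


def membership_probs(k, edges, weights, mus, sigmas):
    """P(Z_ri = 1 | D_ik = 1), proportional to weights[r] * P_kr.
    Assumes at least one component puts positive mass on interval k."""
    unnorm = [w * bin_probs(edges, m, s)[k]
              for w, m, s in zip(weights, mus, sigmas)]
    total = sum(unnorm)
    return [u / total for u in unnorm]


def sample_truncated_normal(lo, hi, mu, sigma, rng):
    """Draw from N(mu, sigma^2) restricted to (lo, hi) by inverting the CDF."""
    comp = NormalDist(mu, sigma)
    p_lo, p_hi = comp.cdf(lo), comp.cdf(hi)
    u = p_lo + rng.random() * (p_hi - p_lo)
    u = min(max(u, 1e-12), 1.0 - 1e-12)   # inv_cdf needs 0 < u < 1
    return min(max(comp.inv_cdf(u), lo), hi)


def impute_observation(k, edges, weights, mus, sigmas, rng):
    """One data-augmentation draw for an observation known only to lie in
    interval k: first the component label r, then the latent value x."""
    probs = membership_probs(k, edges, weights, mus, sigmas)
    r = rng.choices(range(len(probs)), weights=probs)[0]
    x = sample_truncated_normal(edges[k], edges[k + 1], mus[r], sigmas[r], rng)
    return r, x


# Illustrative values only (hypothetical, not estimates from the paper).
edges = [1.5, 2.5, 3.5, 4.5, 5.5]
weights, mus, sigmas = [0.35, 0.65], [2.0, 4.3], [0.3, 0.4]
rng = random.Random(0)
label, value = impute_observation(0, edges, weights, mus, sigmas, rng)
```

In a full Gibbs sampler, a step of this kind would alternate with draws of the component means, variances, and mixing proportions from their conditional posteriors given the imputed values; those updates depend on the chosen priors and are not sketched here.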

Cite this article

Gau, SL., de Dieu Tapsoba, J. & Lee, SM. Bayesian approach for mixture models with grouped data. Comput Stat 29, 1025–1043 (2014). https://doi.org/10.1007/s00180-013-0478-6

DOI: https://doi.org/10.1007/s00180-013-0478-6