Abstract

An exponential penalty function (EPF) formulation based on the method of multipliers is presented for solving multilevel optimization problems within the framework of analytical target cascading. The original all-at-once constrained optimization problem is decomposed into a hierarchical system with consistency constraints enforcing the target-response coupling between connected elements. The objective function is combined with the consistency constraints in each element to formulate an augmented Lagrangian with EPF. The EPF formulation is implemented using double-loop (EPF I) and single-loop (EPF II) coordination strategies and two penalty-parameter-updating schemes. Four benchmark problems representing nonlinear convex and non-convex optimization problems with different numbers of design variables and design constraints are used to evaluate the computational characteristics of the proposed approaches. The same problems are also solved using four other approaches suggested in the literature, and the overall computational efficiency characteristics are compared and discussed.

Notes

MATLAB Version 7.12.0.635 (R2011a); OS: XP SP3; Processor: Intel(R) Core(TM)2 Duo CPU E8400 @ 3 GHz and 3.25 GB RAM.

Additional information

An earlier version of this paper was presented at 14th AIAA/ISSMO Multidisciplinary Analysis and Optimization Conference, Indianapolis, IN, 17–19 September 2012.

Appendices

Appendix A

Alternative approaches for choosing the penalty parameters are considered. In the first approach, the penalty parameters do not depend on the values of the multipliers: they are either held constant, \(a_{ij}^{k} = a_{0}\) and \(b_{ij}^{k} = b_{0}\;\forall k\), or increased at every iteration, \(a_{ij}^{k+1} = \beta a_{ij}^{k} > a_{ij}^{k}\) and \(b_{ij}^{k+1} = \beta b_{ij}^{k} > b_{ij}^{k}\;\forall k\). For this case, \(a_{ij} = b_{ij} = 1\) when the parameters are held constant, and \(\beta = 2\) when they are updated. In the second approach, the penalty parameters depend on the values of the multipliers: either \(\boldsymbol{\omega}_{ij}^{k} = \boldsymbol{\omega}_{0} = 1\) and \(\boldsymbol{\nu}_{ij}^{k} = \boldsymbol{\nu}_{0} = 1\;\forall k\), or \(\boldsymbol{\omega}_{ij}^{k+1} = \beta \boldsymbol{\omega}_{ij}^{k}\) and \(\boldsymbol{\nu}_{ij}^{k+1} = \beta \boldsymbol{\nu}_{ij}^{k}\) with \(\beta = 2\). These approaches were applied to Problem 2 using the EPF I approach. The same initial design point \(\mathbf{x}^{(0)} = [3, 3, 3, 3, 3, 3, 3]\) was selected for all approaches, with \(\mu^{(0)} = 1\) and \(\gamma^{(0)} = 1\).

Results in Table 2 show that, for the same level of accuracy, the number of function evaluations and the CPU time are generally reduced when the penalty parameters are kept independent of the multipliers. In addition, updating the penalty parameters during the optimization process improves solution efficiency.
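The effect of a constant versus a geometrically increasing penalty parameter can be illustrated on a toy problem. The sketch below is not the ATC formulation of this paper: it applies the classical exponential multiplier method to a single inequality constraint, minimizing \(x^{2}\) subject to \(1 - x \le 0\), whose solution is \(x^{*} = 1\) with multiplier \(\mu^{*} = 2\). The function names, the toy problem, the bisection solver, and the iteration count are all assumptions made for illustration; only the schedule \(c^{k+1} = \beta c^{k}\) with \(\beta = 2\) and the unit starting values mirror the settings above.

```python
import math


def penalty_schedule(c0, beta, n_iters):
    """Return [c0, beta*c0, beta**2*c0, ...]; beta = 1 keeps the parameter constant."""
    return [c0 * beta**k for k in range(n_iters)]


def inner_minimizer(mu, c, lo=0.0, hi=2.0, n_bisect=60):
    """Minimize x**2 + (mu/c)*(exp(c*(1 - x)) - 1) over x by bisection.

    The derivative 2*x - mu*exp(c*(1 - x)) is strictly increasing in x,
    so its single root inside [lo, hi] is the minimizer.
    """
    def grad(x):
        return 2.0 * x - mu * math.exp(c * (1.0 - x))

    for _ in range(n_bisect):
        mid = 0.5 * (lo + hi)
        if grad(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def exponential_multiplier_method(c0=1.0, beta=2.0, mu0=1.0, n_iters=8):
    """Toy run: minimize x**2 subject to g(x) = 1 - x <= 0."""
    mu = mu0
    x = None
    for c in penalty_schedule(c0, beta, n_iters):
        x = inner_minimizer(mu, c)          # inner loop: minimize penalized objective
        mu = mu * math.exp(c * (1.0 - x))   # outer loop: exponential multiplier update
    return x, mu


# Constant penalty parameter (beta = 1) versus doubling it each iteration (beta = 2).
x_fixed, mu_fixed = exponential_multiplier_method(beta=1.0)
x_updated, mu_updated = exponential_multiplier_method(beta=2.0)
print("constant:", x_fixed, mu_fixed)
print("updated: ", x_updated, mu_updated)
```

With the same number of outer iterations, the run that doubles the penalty parameter ends much closer to \(x^{*} = 1\) and \(\mu^{*} = 2\) than the run with a constant parameter, which matches the qualitative trend reported above. The iteration count is kept small because the exponential term overflows for very large penalty parameters.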

Appendix B

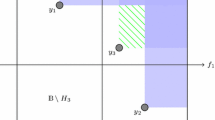

Problem 3 is solved using three different decompositions. Decomposition 1, shown in Fig. 13a, consists of two elements: element 11 at the top level and element 22 at the bottom level. The target/response variables are \(x_{3}\) and \(x_{6}\); {\(x_{1}\), \(x_{2}\), \(x_{4}\), \(x_{5}\), \(x_{7}\)} are the local variables for element 11, and {\(x_{8}\), \(x_{9}\), \(x_{10}\), \(x_{11}\), \(x_{12}\), \(x_{13}\), \(x_{14}\)} are the local variables for element 22. The objective function is assigned to element 11. The constraints \(g_{1}\), \(g_{2}\), \(h_{1}\), \(h_{2}\) are allocated to element 11 and the others to element 22. Decomposition 2, shown in Fig. 13b, also consists of two elements, but the target/response variables are \(x_{5}\) and \(x_{11}\); {\(x_{3}\), \(x_{4}\), \(x_{8}\), \(x_{9}\), \(x_{10}\)} are the local variables for element 11, and {\(x_{2}\), \(x_{6}\), \(x_{7}\), \(x_{12}\), \(x_{13}\), \(x_{14}\)} are the local variables for element 22. The objective function is decomposed into two parts, with \(x_1^2\) assigned to element 11 and \(x_2^2\) to element 22. The constraints \(g_{1}\), \(g_{3}\), \(g_{4}\), \(h_{1}\), \(h_{3}\) are allocated to element 11 and the others to element 22. Decomposition 3 is the three-level hierarchy presented in Fig. 9.
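The variable allocation of the two-level decompositions can be written down as a small data structure and checked for completeness. The sketch below is illustrative only: the dictionary layout and the helper names are assumptions, and the variable count of 14 is inferred from the indices \(x_{1}\) to \(x_{14}\) used in this appendix. The index sets are copied as listed in the text.

```python
from collections import Counter

ALL_VARIABLES = set(range(1, 15))  # indices of x_1 ... x_14 (assumed count)

# Index sets as listed in the text; "shared" holds the target/response variables.
decompositions = {
    1: {
        "shared": {3, 6},
        "local": {"11": {1, 2, 4, 5, 7}, "22": {8, 9, 10, 11, 12, 13, 14}},
    },
    2: {
        "shared": {5, 11},
        "local": {"11": {3, 4, 8, 9, 10}, "22": {2, 6, 7, 12, 13, 14}},
    },
}


def unassigned_variables(decomposition):
    """Return variable indices that are neither shared nor local to any element."""
    assigned = set(decomposition["shared"])
    for local_set in decomposition["local"].values():
        assigned |= local_set
    return ALL_VARIABLES - assigned


def overlapping_variables(decomposition):
    """Return variable indices that appear in more than one set."""
    counts = Counter(decomposition["shared"])
    for local_set in decomposition["local"].values():
        counts.update(local_set)
    return {index for index, count in counts.items() if count > 1}


for label, decomposition in decompositions.items():
    print(label, unassigned_variables(decomposition), overlapping_variables(decomposition))
```

For decomposition 1 both checks come back empty, so the listed sets partition all 14 variables. For decomposition 2 the check reports \(x_{1}\) as unassigned: it does not appear in either local set as listed, although the term \(x_1^2\) is assigned to the top-level element.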

Figure 14 displays the number of function evaluations and the CPU time versus the absolute solution error \(e\) for termination tolerances \(\tau = 10^{-2}, 10^{-3}, 10^{-4}, 10^{-5}\). The initial values for the penalty parameters in EPF I and EPF II are set to \(\mu ^{(0)} = 1\) and \(\gamma ^{(0)} = 1\). The starting point for all decompositions is \(\mathbf{x}^{(0)} = [5.0, 5.0, 2.76, 0.25, 1.26, 4.64, 1.39, 0.67, 0.76, 1.7, 2.26, 1.41, 2.71, 2.66]\).

The results show that the form of decomposition affects computational efficiency. For all the cases considered, decomposition 1 is more efficient than the other two. Moreover, EPF II (single-loop) is more computationally efficient than EPF I (double-loop) regardless of the decomposition used. In particular, EPF II with decomposition 1 requires the fewest function evaluations and the least CPU time, whereas EPF I with decomposition 3 requires the most. Comparing the two-level decompositions 1 and 2, with the subscript denoting the decomposition, it appears that EPF I_1 requires 61 % fewer function evaluations than EPF I_2, whereas EPF II_1 requires 32 % fewer function evaluations than EPF II_2. In terms of CPU time, EPF I_1 is 78 % faster than EPF I_2, whereas EPF II_1 is 16 % faster than EPF II_2.
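The appendix does not state how these percentages are computed. One plausible reading, assumed here, is a relative reduction with decomposition 2 as the baseline, where \(N_{d}\) and \(t_{d}\) are hypothetical symbols for the number of function evaluations and the CPU time obtained with decomposition \(d\):

\[
\Delta_{N} = 100 \times \frac{N_{2} - N_{1}}{N_{2}}\ \%, \qquad
\Delta_{t} = 100 \times \frac{t_{2} - t_{1}}{t_{2}}\ \%.
\]

Under this reading, 61 % fewer function evaluations corresponds to \(N_{1} = 0.39\,N_{2}\), and 78 % faster corresponds to \(t_{1} = 0.22\,t_{2}\). If "faster" were instead meant as a speed ratio, the numbers would translate differently, so the interpretation should be checked against the data in Fig. 14.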

Cite this article

DorMohammadi, S., Rais-Rohani, M. Exponential penalty function formulation for multilevel optimization using the analytical target cascading framework. Struct Multidisc Optim 47, 599–612 (2013). https://doi.org/10.1007/s00158-012-0861-x
