Abstract

This naturalistic study integrates specific ‘question moments’ into lesson plans to increase pupils’ classroom interactions. A range of tools explored students’ ideas by providing students with opportunities to ask and write questions. Their oral and written outcomes provided data on individual and group misunderstandings. Changes to the schedule of lessons were introduced to explore these questions and address disparities. Flexible lesson planning over 14 lessons across a 4-week period of high school chemistry accommodated students’ contributions, increased student participation and promoted inquiry and individualised teaching, with each teaching strategy feeding forward into the next.

Introduction

Chomsky (1995) argued that the formation of questions is an essential, integral part of a universal grammar: Forming questions is part of the blueprint for language that is hard-wired into the human brain. In this vein, Jordania (2006) suggested that the ability to ask questions is in fact the central cognitive element that distinguishes humans from other mammals. The position we adopt in this paper differs—not in the essentially human qualities of asking questions, but by directing our research from within a constructivist perspective. In this, we are akin to Dabrowska and Lieven (2005) for whom question-asking—hard-wired or otherwise—is an act of meaning-making. Question askers, they say, build questions by recycling and recombining previously experienced ‘chunks’ of language, knowledge and understanding.

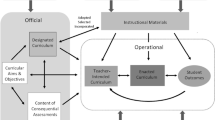

In this paper, we explore everyday classroom mechanisms for enabling construction of understanding to take place. The teacher of chemistry, in this case, explores a range of approaches to encourage question-asking with young adolescents in school-time lessons. She (SL) uses student-generated questions when designing teaching to cover the required curriculum (Hagay et al. 2013) and works to provide ‘question moments’ when the members of the class have opportunities to turn the tables: to ask the teacher questions rather than the other way round. Such student-centred approaches are commonly challenging for teachers, not least because they ‘require teachers to assume a guiding role and to simultaneously attend to many different aspects of the classroom’ (Brush and Saye 2000, p.8). This naturally results in a broader set of teacher ‘management responsibilities’ and skills than those required in more traditional classrooms (Mergendoller and Thomas 2005, p.8). These approaches also demand a predisposition to understand students’ points of view about the matters discussed in class. In order to do this the teacher must adopt, on the one hand, a reflective attitude towards the interpretation of data and, on the other, an investigative attitude to collecting questions and answers. In this study, there is a third requirement: She must also develop and implement tools and strategies fitted to improving the process of teaching and learning in future lessons.

We have combined here the three dimensions of reflection on practice, collection of data about that practice and the creation of strategies to improve it and interrelated these in a continuous process of reciprocal feedback. Action research, or in this case, teacher action research, was the most appropriate approach to tackle this in practice. In such research, the teacher studies her own situation ‘to improve the quality of processes and results within it’ (Schmuck 2009, p.19). In other words, the teacher conducts research on practice in order to improve the experiences of her students. This kind of research differs from traditional approaches in that it studies personal practice, seeks continuous change, is reflective about thoughts and feelings and strives for improvements through planned change. In this way, the traditional gap between researcher and research subject is removed because they are one and the same person.

In this paper, we describe a teacher fostering the participation of students in her class, collecting their ideas and queries and reflecting on the information collected with the intent to make adaptations for the next set of lessons. After each lesson, she designs flexible instruction that takes account of the questions collected, and these designs are then implemented in the next series of classes. More responses are collected, a further stage of re-design takes place, and so on as the series of lessons progresses. Our report captures the adoption of such approaches, and by doing so, we chart the forging of educative interventions informed by the teacher, the subject matter and the students involved. That is, we have provided opportunity for ‘question moments’, collected the questions, identified students’ individual learning needs and implemented suitable strategies to deal with these. This is essentially a co-constructivist approach to teaching (Watts and Pedrosa de Jesus 2005), where knowledge and understanding are built, developed and tailored to particular needs within a classroom community.

Students Asking Questions

At a surface level, it would seem rather straightforward to ask students about their uncertainties and difficulties. However, as Maskill and Pedrosa de Jesus (1997) have pointed out, pupils may not feel at ease when answering teachers’ questions because they link questioning to assessment, and perhaps fear exposing ideas that might reveal learning problems. Hence, we needed a secondary route to access pupils’ states of knowledge. Maskill and Pedrosa de Jesus (1997) have claimed that pupils’ questions can reveal even more about their thinking than their answers. However, research shows that students seldom ask questions in the classroom, commonly keeping their doubts and uncertainties to themselves (Dillon 1988; Maskill and Pedrosa de Jesus 1997; Watts and Pedrosa de Jesus 2010; Moreira 2012). Pedrosa de Jesus and Watts (2012) explore some of the reasons for this and suggest that asking a question in class can give rise to feelings of exposure and vulnerability that may prevail over curiosity, doubt and uncertainty and prevent the act of questioning. Therefore, pupils need to feel safe before they risk asking any important question. Another factor pointed out in the literature that might hinder question-asking is the lack of time to develop and articulate questions (Chin and Brown 2000; Dillon 1988).

To overcome some of these difficulties, Maskill and Pedrosa de Jesus (1997), Moreira (2012), Neri de Souza (2006), Pedrosa de Jesus et al. (2001), Silva (2002) and Teixeira-Dias et al. (2005) all suggest that teachers might use learners’ written questions. These can be used as a ‘secure’ and private way of exposing doubts and knowledge gaps, while also providing a longer time for reflection than the short time commonly prevailing in oral interactions (Maskill and Pedrosa de Jesus 1997). Etkina (2000) discussed the use of a weekly report, a structured journal in which students answered three questions: (a) ‘What did you learn this week?’ (b) ‘What questions remain unclear?’ and (c) ‘If you were the teacher, what questions would you ask to find out whether the students understood the most important material of this week?’. Etkina’s suggestion was that this approach not only encourages students to think about the gaps in their current knowledge, but also serves as an assessment tool and allows the instructor to modify subsequent instruction to address students’ needs. The difficulty here is that these were teachers’ questions, not students’, and little is offered by way of discussion of how subsequent instruction was actually modified. Teixeira-Dias et al. (2005) do describe how they used students’ questions as the springboard for instructional interventions. They collected questions using a ‘question box’ within classrooms for anonymous written questions and an email facility for ‘out-of-hours’ questions. In this instance, teachers were able to respond to direct queries and created a series of special lecture sessions to tackle obstinate issues. Students broadly welcomed these although the pace of curricular change was difficult to maintain. 
In other studies (for example, Kulas 1995), students recorded their ‘puzzle questions’ in a diary or learning journal, setting out their ‘I wonder’ questions; Dixon (1996) used a ‘question board’ to display students’ questions and suggested that these questions could be used as starting points for scientific investigations. In Maskill and Pedrosa de Jesus (1997), we argued for a question ‘brainstorm’ at the start of a topic, a question box on a side table where students could submit their questions, turn-taking questioning around the class where each student or group of students must prepare a question to be asked of others, and ‘question-making’ homework.

The two gaps in this literature, then, relate to (i) the provision of specific in-lesson time for students’ questions and (ii) a systematic approach to using student questions as part of the plans of forthcoming lessons. In Watts, Alsop, Gould, and Walsh (1997), we suggested including specific times for questions, such as a period of ‘free question time’ within a lesson or block of lessons, but failed to carry this through and explore the outcomes. With Teixeira-Dias et al. (2005), we designed instructional interventions but lacked a systematic form of iterative lesson planning. In the study described here, we sought to address both issues by testing the situations that best generated questions by students, providing vehicles for question-asking in class, and then acting thoughtfully and reflectively on the questions that were asked.

The Study Design

Action research may be divided into several steps, although it would be misleading to suggest strict order and linearity in work of this kind. We organised our study through three phases: (i) an initial exploratory or ‘pre-study’ phase as defined by Craig (2009), (ii) a question-generating phase or ‘data collection phase’ as defined by Henning et al. (2009), and (iii) an implementation or ‘intervention’ phase (Meyer 2006). In our case, the exploratory phase comprised three separate tasks: (i) observing the classroom and students in order to uncover problems, issues and concerns; (ii) deciding on a focus by asking questions such as ‘What do I want to find about these specific students or situation?’ ‘What do I want to improve?’, and finally (iii) ‘reviewing helpful theories in order to gather information to make informed decisions and to design the action plan’ (Craig 2009, p.17). During the ‘question-generating’ data collection phase, new teaching strategies were put into action. During the third phase, implementation, a number of ‘spirals of activity’ emerged comprising periods of planning, acting, observing, reflecting and re-planning and often ‘leading to other spin-off spirals of further work’ (Meyer 2006, p. 265). At all points, data were collected through participant and non-participant observation, field notes, pupils’ written questions and answers, and also by audio-taping classroom lessons in order to analyse oral interactions.

Context

The research is naturalistic, conducted in situ with two parallel classes and a total of 54 pupils (year 7, 12–13-year-olds) in a Portuguese secondary school. The timescale covers 14 1-h lessons across a 4-week period of high school chemistry. Both classes were taught by the same chemistry teacher (SL) while she was undertaking master’s research in education. These circumstances helped in establishing a bridge between academic research and professional practice. The chemistry topics included ‘physical and chemical transformations’, ‘physical properties of materials’ and the ‘separation of mixtures’, all fairly traditional components of the science curriculum at this level.

The First Phase: Exploratory

The initial exploratory phase lasted 5 months, and during this time data were collected on the students’ characteristics and the class dynamics through both participant and non-participant observation. We also sought to identify issues that were problematic for students’ learning and therefore worthy of study in more detail. The key observations of both classes during the exploratory phase related directly to problems of pupil non-participation: Very few students asked questions. There were three aspects to this:

- Pupils’ verbal contributions were generally low and this was reflected in the very sparse level of their question-asking
- Most student contributions followed a request from the teacher, i.e. few of them were spontaneous
- Spontaneous contributions that did arise were almost always from the same pupils.

The Second Phase: Question-Asking

In the second phase, we sought to implement question-asking strategies and therefore our research question became the following:

- How can we stimulate seventh grade pupils’ question-asking during chemistry lessons, both in oral and written format?

During this phase, efforts were made to increase pupils’ interactions. Specific tools were designed and incorporated in lessons to encourage pupils to be more involved, participate, ask questions and explore their ideas. These tools included:

Students’ Oral Questions

As noted earlier, these were elicited during the process of the classes, which were audio-recorded. The recorded sessions were transcribed and carefully read for content analysis—in order to identify possible doubts, as well as incorrect ideas or concepts that needed to be re-discussed in class.

Written Questions

Three changes were made to the usual conduct of chemistry lessons:

- Question sheets: Drawing on the work of Pedrosa de Jesus et al. (2001) and Neri de Souza (2006), question sheets were introduced at the start and collected at the end of every lesson, and all questions were then read by the teacher and analysed for content and issues
- Group open-ended questions: For this study, we created a set of open-ended questions, which were part of the sheets handed out by the teacher to each group. These provided questions that were designed to foster discussion and hypothesising. Open-ended questions are defined as questions that have multiple possible answers. This kind of question allows students to incorporate previous experiences into their explanations, contributing to richer and more meaningful answers. Such questions also require students to justify their statements and explain the underlying logic. Hence, they help develop argumentative skills and provide deeper information about students’ understanding. They may even reveal that students have a broader understanding of a particular topic than the teacher might have imagined (Lund and Kirk 2010)
- Closed questions: These were also created by the teacher and included in order to identify possible gaps at the conceptual level, which could render difficulty in the development of deeper reasoning and explanations. The written answers given by students during the group work were also gathered and organised according to the key ideas they presented.

Question Moments

For us, a question moment is a pause in proceedings to allow students to write down their questions and doubts. While question moments were intended as short breaks in normal class time to collect students’ individual questions, students were nevertheless encouraged to write and ask questions whenever they wished. Importantly, they could also write questions about any topic—even those that had nothing to do with the matters being considered in the classroom. We expected that these questions would provide some insight into pupils’ wonderings, reasoning, doubts and difficulties.

During this second phase, we explored the effect of such question moments and so varied their inclusion. As Table 1 shows (in grey), class A had two lessons where question moments were provided, class B had one. The results are clear: Perhaps unsurprisingly, the number of written questions is substantially higher in the lessons where specific question moments were provided, far outstripping lessons where no moments (shown in the table in white) were provided.

Group Discussion Time

After working in groups, pupils shared their answers to the open-ended questions with the class, presenting their arguments for and against each of the alternatives presented. The main aim of this discussion was to choose procedures that could provide solutions for the problems posed in the open-ended questions. These discussions, we hoped, would also provide greater insights into pupils’ understanding as well as allow them to test their ideas.

Oral and Written Questions

Each class was divided in two (shift 1 and shift 2), each having lessons at different times of the school day.

As shown in Table 2 below, in both lessons, the number of pupils who wrote questions was higher than the number who asked them verbally. This means that some of the pupils who did not participate verbally during those lessons wrote questions, which suggests that those pupils are more disposed to write their doubts than to speak them out loud.

The Third Phase: Implementation

In this phase, our question became the following:

- How can we systematically shape lesson planning to incorporate responses to pupils’ questions and integrate their questions and ideas in the teaching and learning process?

Two approaches were adopted to explore this. First, all the ‘research data’, the written and oral questions, the group open and closed questions and the audio records, were objects of content analysis, interpretation and reflection in an attempt to gain insights into pupils’ understanding, their doubts and possible lack of knowledge and understanding. Content analysis is widely used in naturalistic qualitative research (Hsieh and Shannon 2005) to interpret meaning from text and observational data. In this study, we used conventional content analysis, isolating and coding categories derived directly from the recorded data in order to count instances and make comparisons. This allowed us to identify student problems, which then served as a basis for planning the next lessons. Second, these data were presented to the class for discussion, as a form of respondent analysis while also enabling students to revisit topics previously addressed and to clarify doubts.

We hoped that integrating pupils’ data of this kind into the lesson would increase their motivation to participate. Given the volume of data, it became necessary to select items that would be discussed. Our choices were based largely on two criteria: the number of pupils who expressed similar doubts, concerns or curiosity, and the relevance of those worries to the organisation of, and the approach to, the curriculum matters in hand. A first example of this is the lesson of 30th March when, of a total of seven written questions, four focussed on the phenomenon of sublimation:

- How can it [a substance] pass from the solid state into the gaseous state and vice-versa?
- How can the solid state transform into gaseous and vice-versa and not pass through the liquid state?
- How can it [a substance] pass directly to the gaseous state?
- How can the ice transform into water vapour and not pass through the liquid state and vice-versa?

These are essentially the same question, and about a relevant aspect of the topic—physical transformation. Therefore, we thought that it was important to promote class discussion on this in order to address these questions.
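The selection step described above (coding each question, counting instances, then choosing topics by frequency and curricular relevance) can be illustrated with a minimal sketch. It is not the procedure used in the study: the topic codes are assumed to be assigned by hand during content analysis, the function name `select_topics`, the `min_count` threshold and the way the two criteria are combined are all assumptions, and the three questions that were not about sublimation are shown as hypothetical placeholders because their content is not reported.

```python
from collections import Counter

# (question text, hand-assigned topic code) for the lesson of 30th March.
# The four sublimation questions are quoted from the text; the remaining
# three are HYPOTHETICAL placeholders, since their content is not reported.
coded_questions = [
    ("How can it [a substance] pass from the solid state into the gaseous state and vice-versa?", "sublimation"),
    ("How can the solid state transform into gaseous and vice-versa and not pass through the liquid state?", "sublimation"),
    ("How can it [a substance] pass directly to the gaseous state?", "sublimation"),
    ("How can the ice transform into water vapour and not pass through the liquid state and vice-versa?", "sublimation"),
    ("(placeholder question)", "other-a"),
    ("(placeholder question)", "other-b"),
    ("(placeholder question)", "other-c"),
]


def select_topics(coded, core_topics, min_count=2):
    """Return (topic, count) pairs worth revisiting, most frequent first.

    A topic is kept when at least `min_count` questions share its code
    AND the teacher has judged it relevant to the curriculum
    (`core_topics`). Combining the criteria this way is an assumption.
    """
    counts = Counter(code for _, code in coded)
    return [
        (topic, n)
        for topic, n in counts.most_common()
        if n >= min_count and topic in core_topics
    ]


print(select_topics(coded_questions, core_topics={"sublimation"}))
```

The judgement of which topics count as relevant stays with the teacher; the tally only makes it easier to see how many pupils share a doubt.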

A second example is drawn from the lesson of March 22nd, when two similar written questions were received about the concept of pressure:

- I did not understand the explanation about pressures.
- I did not understand very well that part of the lesson about pressure.

Both these questions concern the meaning of the word pressure—the two students had simply not understood the explanation presented to them. There was no oral intervention (no question or comment of any kind) from any student during either class, no questions asked of the presentation of the concept of pressure at the time. One possibility is that the concept was so abstract that these two students (at least) felt too uneasy even to comment/question it at the time. Therefore, although these two questions were only a small fraction of the whole set of questions (49), we thought it was appropriate to discuss this concept again. In addition, pressure is a term used to define boiling and fusion points, both central concepts of the programme, and we therefore thought students needed a ‘palpable’ understanding. This, then, prompted a class discussion on the concept while referring to a chemical reaction, where a balloon was inflated because of the pressure exerted by a gas formed during the reaction.

Outcomes and Discussion

The outcomes of this work are not straightforward, but naturalistic research is seldom direct and clear-cut. While observing and recording people in natural, unstructured settings comes closest to what we all do in our daily lives, it can prove ‘messy’, with many of the key issues intermingled.

The initial exploratory phase allowed us to identify two groups of pupils whose preferences were distinct: the minority of ‘oral askers’ who would speak out and ask questions in class, airing their doubts and reasoning, and the majority of ‘question writers’ in the two classes, who preferred writing their ideas in a more individual and private manner. The difference in the numbers may be due to factors discussed before—social discomfort or fear of ridicule (Graesser and McMahen 1993), or even the arduousness of asking a question in the short time frames that characterise oral interaction. These observations, though, do support the idea put forward by several authors (Dillon 1988; Maskill and Pedrosa de Jesus 1997; Watts and Pedrosa de Jesus 2010) that pupils do have questions to ask and are able to ask them if the right conditions are provided.

The second, question-asking phase introduced a series of measures to promote students’ questions. These were the following:

1. The classroom question moments

Table 1 above shows that the inclusion of specific question moments for writing questions in class clearly favours the writing of questions (Watts and Pedrosa de Jesus 2005). Interestingly, even in the lessons where no such moments were provided, some students did write questions, which suggests that the question sheet alone was also a meaningful tool for these students.

2. Students’ written questions

The moments for writing questions allowed the collection of a varied set of questions (a total of 105 over the course of the study). Analysis of these showed that they fit the picture described by others (Chin and Brown 2000; Watts and Pedrosa de Jesus 2005), with most of the questions seeking factual information and concerning concepts or terms used in the classroom. Some examples are the following:

- How can we know if the transformation is chemical or physical?
- What is the name of the temperature symbol θ?
- What is a reagent?

There were a few questions, however, which were more specialised and, for example, tried to establish connections between issues learnt in the classroom and pupils’ previous knowledge, or imagined scenarios as a way of ‘testing’ the new information:

- When the water passes into the gaseous state, does it disperse in the atmosphere? If so, can we say that an ice cube is created from the liquid water that came from the gaseous state of several places?
- If water modifies its physical state (when it is too hot it evaporates and when it is too cold it solidifies) why are there more clouds in winter, when it is colder?

The number of questions written during two of the lessons where question moments were provided was compared with the pupils’ oral interactions during the same lessons. Table 2 above shows the results. As noted earlier, in the lesson on March 30th, four of the seven written questions were related to the idea of sublimation, the topic taught for the first time in that lesson. In this case, in order to help pupils understand the phenomenon, the teacher decided to break with her schedule for the next lesson in order to demonstrate sublimation with a sphere of naphthalene, which sublimes at room temperature and was a familiar example for pupils. Table 3 below contains a small extract of the subsequent discussion.

This discussion, built from the pupils’ initial written questions, addressed their doubts and also allowed other pupils to express their ideas and reveal misconceptions. For example, the idea of the physical transformation of the naphthalene from solid state into gaseous state as a ‘transmission of particles’ was deconstructed and given adequate feedback for a better understanding of the phenomenon.

Within this strategy, it is worth noting that many of the questions that pupils wrote concerned matters discussed in lessons that had taken place 2 weeks before. This means that those pupils had kept their doubts to themselves for all that time, revealing them only when they had the opportunity to write them down.

3. Open-ended and closed question activity sheets

The group activity sheets used in the lessons comprised a total of six open-ended and three closed questions. Four of the open-ended questions allowed for at least two valid solutions. That is, those questions could be answered in (at least) two different, yet valid ways. Our approach was that this activity was best suited to group work, so that students collaborated to develop an answer to the question. They could also explore the issues involved by raising their own questions. Some of the solutions that students proposed, and that could be considered valid, had not been foreseen by the teacher. For instance, two groups suggested separating a mixture of flour and iron filings by adding water to the mixture:

- We could separate the iron filings from flour using water because the flour would stay at the surface and the iron filings would sink.
- To separate the flour from the iron, we would have to put them in glassware with water. One stays on top and the other stays at the bottom.

That is, students thought of taking advantage of the different densities of flour and iron filings. Although the density concept had not been formally taught, students seemed to have an intuitive understanding of the different behaviour that the two components of the mixture would display in the presence of water. Alluding to this proposal in the next lesson was then a useful ‘starting point’ for the introduction of the concept of density, incorporating students’ own ideas as part of the lesson plan. The ‘expected’ answer in this instance was the use of a magnet, and it was, indeed, the answer given by the majority of students (12 groups):

- We could use a magnet and it would attract the iron filings.

Class discussion was then used to help choose the more efficient means to separate, in practice, the components of the flour and iron filing mixture. This process increased participation and involved a greater number of students because it incorporated their different ideas and presented reasons for choosing one proposal over the other, instead of dismissing or simply neglecting the unexpected ideas.

Examples of the implementation phase of the research have already been included in the comments made above. They suggest that the strategies, which were designed and implemented to accommodate individual differences and placed a strong emphasis on teacher reflective practice, stimulated and increased pupils’ participation, increased pupil-teacher classroom cooperation and helped the teacher to individualise teaching, that is, to take into account pupils’ learning preferences. As discussed, one of the pupils’ tasks was to answer a set of open-ended questions using work sheets. The analysis of the answers allowed the teacher to characterise their main misunderstandings of the concepts involved, choosing some she considered relevant to stimulate the discussion in the following lesson. For example, on March 22nd, a lesson dealing with the concepts of boiling and fusion point was planned, taking into account the answers already provided for the following question:

- Why is water in a solid state at normal pressure and ambient temperature of −5 °C?

The answers required pupils to understand the concept of boiling point, so the teacher decided to begin the discussion using one of the students’ answers to the question.

The example presented in Table 4 is a small extract of the discussion raised by that answer.

Similar analyses of classroom talk, for further classroom use, were taken from the recordings of pupils’ oral interactions in the course of their practical activities.

4. The rolling programme

The classroom discussions are examples of a ‘rolling programme’ of revisions and classroom interventions. In this instance, the teacher modified the lesson on March 30th (Table 1) in the light of the previous lesson, then the lesson on April 6th in the light of March 30th; the lesson on April 27th after April 6th, and so on, a series of previously unplanned departures from her curriculum schedule of lessons as a result of students’ questions. Her reflective log at the end of each lesson served as a basis for the next lessons.

In some of the lessons (for example, April 6th, April 26th and 27th), the ‘activity sheets’ were used and the discussions in the lessons centred on students’ oral inquiries and the written answers given there and then to the questions on the sheets. Lesson time was tight, to both undertake the activity and maintain momentum within the curriculum and, in some instances, students began to answer the questions on the sheets in one lesson (22nd March) but finished them in the next (29th March). Time was then made in that subsequent lesson to discuss students’ written answers.

Summary

Question moments like the ones described above are a basic form of inquiry-based learning, where learners identify and explore issues and questions as they develop their knowledge. Inquiry learning is a frame of mind (Kuhlthau 2013), where knowledge is built from experience and process, especially socially-based experience. In this instance, through the work of the teacher, it is closer to a form of ‘guided inquiry’ (Moog and Spencer 2008): Through question moments, students learn through interaction with each other alongside direct instruction. Students asked, and were asked, questions at multiple levels, including their concrete knowledge, their ability to analyse and their creativity in synthesis. As Yoon et al. (2012) point out, this kind of inquiry is most successful when students have several opportunities to learn and practise different ways to plan experiments and record data. The kind of teacher guidance we describe here provides an environment that supports classroom questioning and channels students into productive inquiry while not making excessive demands on the teacher.

Reflection on students’ oral and written questions, and subsequent classroom discussions, provided the teacher-researcher with relevant information about individual and group ‘knowledge gaps’, doubts and perplexities, both implicit and explicit. The teacher created time in subsequent lessons to address the identified problems and to promote class discussions initiated by presenting the pupils’ ‘questioning products’. The exemplar data above illustrate how the teacher used the collected information and managed discussions, using pupils’ questions and answers to trigger conversations.

There is no doubt that promoting learners’ questioning demands more of the teacher than simply lecturing to a sea of blank faces; the price for prompting engagement and inquiry in the classroom is the development of a set of planning and organisational skills, alongside a willingness to receive, analyse, evaluate and address students’ questions. These examples are merely illustrative and serve only to illuminate some of the key issues involved in inquiry-based teaching. The data do not build theory but provide empirically grounded contexts for demonstrating the advent of classroom questions, descriptions of the situations that give rise to them, and some of the possible ways in which teachers can manage them.

Limitations of This Research and Suggestions for Future Research

Qualitative investigators in general, and teacher-researchers in particular, maintain that research respondents must ‘speak for themselves’ (Sherman and Webb 2001, p. 5). While it is true that the main focus of this research is students’ outputs, this has been limited to classroom discourse and did not include students’ critique or views about the strategies we introduced. It would be important, in a future study, to allow students to express themselves more about the research intervention itself, and the strategies used. Such studies might also use peer collaboration between a group of teachers and joint analysis of the data collected (van Kraayenoord et al. 2011).

One of the main concerns during the study was the limited classroom time spent with students. For example, wish as we might, we could not include question moments for all students to write questions in every lesson. Since students do improve the quality of their questions with practice, it would have been desirable to use this strategy regularly and for longer periods of time. It would also be interesting to follow each student’s individual progress in writing questions. In this way, students’ potential for writing questions could be explored even further. Each student used the same question sheet for writing questions throughout the research so they could read their previous questions, think about them and ‘act’ upon them. For instance, one student took the initiative of later answering one of his own questions (written in a previous lesson). Inspired by this, it would be interesting to encourage all students to answer their own questions (and others’) once they felt able to do so. This is not a new idea and has been suggested by Chin and Kayalvizhi (2002) and Kuhlthau (2013), among others. It does, however, deserve more attention: Teachers who find ways to help students answer their own questions are helping learners become more knowledgeable, reflective and in control of their own cognitive resources.

The constraints of curriculum time also meant that, of all the alternative, creative solutions the groups put forward to solve problems, only one was actually tested in practice. This procedure was chosen as the most efficient and, importantly, the one that could be undertaken using the available laboratory materials. While the other suggestions were taken into serious consideration, and their pros and cons debated, there was simply too little time and too few immediate resources to undertake these experimentally. The next step is to find the means, within a fairly standardized and regulated curriculum system, to enable students to enact and compare their alternative solutions and to decide which is the most effective procedure based on the answers to their own questions.

References

Brush, T., & Saye, J. (2000). Design, implementation, and evaluation of student-centered learning: a case study. Educational Technology Research and Development, 48(3), 79–100.

Chin, C., & Brown, D. E. (2000). Learning in science: a comparison of deep and surface approaches. Journal of Research in Science Teaching, 37(2), 109–138.

Chin, C., & Kayalvizhi, G. (2002). Posing questions for open investigations: what questions do pupils ask? Research in Science & Technological Education, 20(2), 269–287.

Chomsky, N. (1995). The minimalist program. Cambridge: MIT.

Craig, D. (2009). Introduction to action research. In J. Bass (Ed.), Action Research Essentials (pp. 1–28). San Francisco: Jossey-Bass.

Dabrowska, E., & Lieven, E. (2005). Towards a lexically specific grammar of children’s question constructions. Cognitive Linguistics, 16, 437–474.

Dillon, J. T. (1988). Questioning and teaching: a manual of practice. Beckenham: Croom Helm Ltd.

Dixon, N. (1996). Developing children’s questioning skills through the use of a question board. Primary Science Review, 44, 8–10.

Etkina, E. (2000). Weekly reports: a two-way feedback tool. Science Education, 84(5), 594–605.

Graesser, A. C., & McMahen, C. L. (1993). Anomalous information triggers questions when adults solve quantitative problems and comprehend stories. Journal of Educational Psychology, 85(s.a), 136–151.

Hagay, G., Peleg, R., Laslo, E., & Baram-Tsabari, A. (2013). Nature or nurture? A lesson incorporating students’ interests in a high-school biology class. Journal of Biological Education, 47(2), 117–122.

Henning, J. E., Stone, J. M., & Kelly, J. L. (2009). An introduction to action research. In Using action research to improve instruction: an interactive guide for teachers. New York, NY: Routledge.

Hsieh, H.-F., & Shannon, S. E. (2005). Three approaches to qualitative content analysis. Qualitative Health Research, 15(9), 1277–1288.

Jordania, J. (2006). Who asked the first question? The origins of human choral singing, intelligence, language and speech. Tbilisi: Logos.

Kuhlthau, C.C. (2013). Inquiry inspires original research. School Library Monthly, November, 5–8.

Kulas, L. L. (1995). I wonder. Science and Children, 32(4), 16–18.

Lund, J. L., & Kirk, M. F. (2010). Open-response questions. In S. Quinn (Ed.), Performance-based assessment for middle and high school physical education (pp. 95–110). Windsor: Human Kinetics.

Maskill, R. & Pedrosa-de-Jesus, H. (1997). Pupils' questions, alternative frameworks and the design of science teaching. International Journal of Science Education, 19(7), 781–799.

Mergendoller, J., & Thomas, J. W. (2005). Managing project-based learning: principles from the field. Retrieved June 14, 2005, from http://www.bie.org/tmp/research/researchmanagePBL.pdf.

Meyer, J. (2006). Action research. In K. Gerrish & A. Lacey (Eds.), The research process in nursing. Oxford: Blackwell.

Moog, R.S. & Spencer, J.N. (Eds.) (2008). POGIL: Process Oriented Guided Inquiry Learning. ACS Symposium Series 994. American Chemical Society: Washington, DC.

Moreira, A.C. (2012). O questionamento no alinhamento do ensino, aprendizagem e avaliação. [Questioning for the alignment of teaching, learning and assessment. Unpublished Master Dissertation. Aveiro: University of Aveiro, Portugal].

Neri de Souza, F. (2006). Perguntas na aprendizagem de Química no Ensino Superior. Tese de Doutoramento não publicada. Aveiro: Universidade de Aveiro, Portugal. [Questioning in Higher Education Chemistry learning. Unpublished PhD thesis. University of Aveiro, Portugal].

Pedrosa-de-Jesus, H., Neri de Souza, F., Teixeira-Dias, J. J. C. & Watts, D.M. (2001). Questioning in Chemistry at The University. Paper presented at the 6th European Conference on Research in Chemical Education, University of Aveiro, Aveiro, 4–8 September.

Pedrosa de Jesus, M.H. & Watts, D.M. (2012). Managing the affect of learners’ questions in undergraduate chemistry. Studies in Higher Education, 1–15.

Schmuck, A. (Ed.). (2009). Practical action research. Thousand Oaks: Corwin.

Sherman, R. R., & Webb, R. B. (2001). Qualitative research in education: a focus. In R. R. Sherman & R. B. Webb (Eds.), Qualitative research in education: focus and methods (Chapter I). London: Routledge Falmer.

Silva, M. R. P. (2002). O desenvolvimento de competências de comunicação e a formação inicial de professores de Ciências: o caso particular das perguntas na sala de aula. Dissertação de Mestrado não publicada. Aveiro: Universidade de Aveiro, Portugal. [The development of communication competencies in Science teachers training: the case of classroom questioning. Unpublished Master Dissertation. University of Aveiro, Portugal].

Teixeira-Dias, J. J. C., Pedrosa-de-Jesus, M. H., Neri de Souza, F., & Watts, D. M. (2005). Teaching for quality learning in chemistry. International Journal of Science Education, 27(9), 1123–1137.

van Kraayenoord, C. E., Honan, E., & Moni, K. B. (2011). Negotiating knowledge in a researcher and teacher collaborative research partnership. Teacher Development: An International Journal of Teachers’ Professional Development, 15(4), 403–420.

Watts, D.M., Alsop, S., Gould, G. & Walsh, A. (1997). Prompting teachers’ constructive reflection: pupils’ questions as critical incidents. International Journal of Science Education 19(9), 1025–1037.

Watts, D.M. & Pedrosa-de-Jesus, H. (2005). The cause and affect of asking questions: Reflective case studies from undergraduate sciences. Canadian Journal of Science, Mathematics and Technology Education, 5(4), 437–452.

Watts, D.M. & Pedrosa-de-Jesus, H. (2010). Questions and Science. In R. Toplis (Ed.), How Science Works - Exploring effective pedagogy and practice (pp. 85–102). London: Routledge.

Yoon, H., Joung, Y. J., & Kim, M. (2012). The challenges of science inquiry teaching for pre-service teachers in elementary classrooms: difficulties on and under the scene. Research in Science Education, 42(3), 589–608.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Pedrosa-de-Jesus, H., Leite, S. & Watts, M. ‘Question Moments’: A Rolling Programme of Question Opportunities in Classroom Science. Res Sci Educ 46, 329–341 (2016). https://doi.org/10.1007/s11165-014-9453-7

DOI: https://doi.org/10.1007/s11165-014-9453-7