Abstract

Purpose

Confirmatory factor analysis fit criteria typically are used to evaluate the unidimensionality of item banks. This study explored the degree to which the values of these statistics are affected by two characteristics of item banks developed to measure health outcomes: large numbers of items and nonnormal data.

Methods

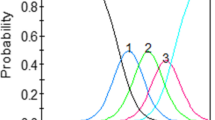

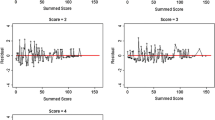

Analyses were conducted on simulated and observed data. Observed data were responses to the Patient-Reported Outcomes Measurement Information System (PROMIS) Pain Impact Item Bank. Simulated data fit the graded response model and either conformed to a normal distribution or mirrored the distribution of the observed data. Confirmatory factor analyses (CFA), parallel analysis, and bifactor analysis were conducted.
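As an illustration of the simulation step, the following is a minimal sketch of drawing polytomous responses from a graded response model. It is not the authors' procedure (the reference list points to dedicated software for data generation); the function name `simulate_grm`, the parameter ranges, and the sample sizes are all assumptions chosen for the example.

```python
import numpy as np

def simulate_grm(theta, a, b, rng):
    """Draw item responses under a graded response model (illustrative sketch).

    theta: (n_persons,) latent trait values
    a:     (n_items,) discrimination parameters
    b:     (n_items, n_cats - 1) thresholds, ascending within each item
    Returns an (n_persons, n_items) integer array with categories 0..n_cats-1.
    """
    # Cumulative boundary probabilities P(X >= k | theta), k = 1..n_cats-1.
    z = a[None, :, None] * (theta[:, None, None] - b[None, :, :])
    p_star = 1.0 / (1.0 + np.exp(-z))
    # One uniform draw per person-item pair; the response category equals
    # the number of boundary probabilities that exceed the draw.
    u = rng.random((theta.size, a.size, 1))
    return (u < p_star).sum(axis=2)

# Hypothetical settings, not taken from the study.
rng = np.random.default_rng(0)
n_persons, n_items, n_cats = 1000, 40, 5
theta = rng.standard_normal(n_persons)            # normal trait distribution
a = rng.uniform(1.0, 3.0, n_items)                # assumed discrimination range
b = np.sort(rng.normal(0.0, 1.0, (n_items, n_cats - 1)), axis=1)
responses = simulate_grm(theta, a, b, rng)
```

To approximate the nonnormal condition, one could pass a skewed `theta` (for example, values resampled from observed score estimates) in place of the standard normal draw; that substitution is likewise an assumption about how such data might be produced.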

Results

CFA fit values were sensitive to both data distribution and number of items. In some instances, the impact of distribution and number of items was quite large.

Conclusions

We do not recommend relying on traditional cutoffs and standards for CFA fit statistics to establish the unidimensionality of item banks. An investigative approach is favored over reliance on published criteria. We found bifactor analysis appealing in this regard because it allows evaluation of the relative impact of secondary dimensions. In addition to these methodological conclusions, we judged the items of the PROMIS Pain Impact bank to be sufficiently unidimensional for item response theory (IRT) modeling.
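One way to quantify the relative impact of secondary dimensions in a bifactor solution is the explained common variance (ECV): the share of common variance attributable to the general factor. The abstract does not name this index, so treat the sketch below as one possible summary rather than the analysis reported here; the loadings are hypothetical values invented for the example.

```python
import numpy as np

def explained_common_variance(general, groups):
    """ECV = general-factor common variance / total common variance.

    general: (n_items,) standardized loadings on the general factor
    groups:  (n_items, n_group_factors) standardized loadings on the
             secondary (group) factors, zero where an item does not load
    """
    general_var = np.sum(np.square(general))
    group_var = np.sum(np.square(groups))
    return general_var / (general_var + group_var)

# Hypothetical bifactor loadings: six items, two secondary factors.
general = np.full(6, 0.7)
groups = np.zeros((6, 2))
groups[:3, 0] = 0.3   # items 1-3 load on the first secondary factor
groups[3:, 1] = 0.3   # items 4-6 load on the second secondary factor

ecv = explained_common_variance(general, groups)
```

Values of ECV near 1 indicate that the secondary factors account for little common variance, which is the kind of evidence that would support treating a bank as sufficiently unidimensional.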

Abbreviations

- CAT: Computer adaptive testing
- CFA: Confirmatory factor analyses
- CFI: Comparative Fit Index
- EAP: Expected a posteriori
- EFA: Exploratory factor analyses
- GED: General Educational Development
- GRM: Graded response model
- IRT: Item response theory
- NIH: National Institutes of Health
- NNFI: Nonnormed Fit Index
- PROMIS: Patient-Reported Outcomes Measurement Information System
- PROs: Patient-reported outcomes
- RMSEA: Root-mean-square error of approximation
- SD: Standard deviation
- SRMR: Standardized root-mean-square residual
- TLI: Tucker–Lewis index
- WLSMV: Weighted least squares with mean and variance adjustment
- WRMR: Weighted root-mean-square residual

Acknowledgements

The Patient-Reported Outcomes Measurement Information System (PROMIS) is a National Institutes of Health (NIH) roadmap initiative to develop a computerized system measuring patient-reported outcomes in respondents with a wide range of chronic diseases and demographic characteristics. PROMIS was funded by cooperative agreements to a Statistical Coordinating Center (Evanston Northwestern Healthcare, PI: David Cella, PhD, U01AR52177) and six Primary Research Sites (Duke University, PI: Kevin Weinfurt, PhD, U01AR52186; University of North Carolina, PI: Darren DeWalt, MD, MPH, U01AR52181; University of Pittsburgh, PI: Paul A. Pilkonis, PhD, U01AR52155; Stanford University, PI: James Fries, MD, U01AR52158; Stony Brook University, PI: Arthur Stone, PhD, U01AR52170; and University of Washington, PI: Dagmar Amtmann, PhD, U01AR52171). NIH Science Officers on this project are Deborah Ader, PhD, Susan Czajkowski, PhD, Lawrence Fine, MD, DrPH, Louis Quatrano, PhD, Bryce Reeve, PhD, William Riley, PhD, and Susana Serrate-Sztein, PhD. This manuscript was reviewed by the PROMIS Publications Subcommittee prior to external peer review. See the web site at www.nihpromis.org for additional information on the PROMIS cooperative group.

Cite this article

Cook, K.F., Kallen, M.A. & Amtmann, D. Having a fit: impact of number of items and distribution of data on traditional criteria for assessing IRT’s unidimensionality assumption. Qual Life Res 18, 447–460 (2009). https://doi.org/10.1007/s11136-009-9464-4

DOI: https://doi.org/10.1007/s11136-009-9464-4