Abstract

The acquisition of bidirectional action–effect associations plays a central role in the ability to intentionally control actions. Humans learn about actions not only through active experience, but also through observing the actions of others. In Experiment 1, we examined whether action–effect associations can be acquired by observational learning. To this end, participants observed how a model repeatedly pressed two buttons during an observation phase. Each of the buttonpresses led to a specific tone (action effect). In a subsequent test phase, the tones served as target stimuli to which the participants had to respond with buttonpresses. Reaction times were shorter if the stimulus–response mapping in the test phase was compatible with the action–effect association in the observation phase. Experiment 2 excluded the possibility that the impact of perceived action effects on own actions was driven merely by an association of spatial features with the particular tones. Furthermore, we demonstrated that the presence of an agent is necessary to acquire novel action–effect associations through observation. Altogether, the study provides evidence for the claim that bidirectional action–effect associations can be acquired by observational learning. Our findings are discussed in the context of the idea that the acquisition of action–effect associations through observation is an important cognitive mechanism subserving the human ability for social learning.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

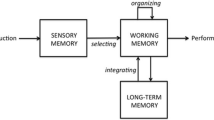

An influential account of action control has proposed that actions are controlled through bidirectional action–effect associations (Elsner & Hommel, 2001; Hommel, Müsseler, Aschersleben, & Prinz, 2001; Kühn, Elsner, Prinz, & Brass, 2009; Kunde, Hoffmann, & Zellmann, 2002). According to this ideomotor approach, actions are represented in terms of their sensory consequences, and action knowledge is acquired through the repeated experience of co-occurrences of actions and their effects. The cognitive representations of intentional actions are therefore characterized by associations of motor codes with sensory codes. Since the intention to elicit a particular sensory effect is assumed to directly activate the motor program associated with this effect, acquired action–effect associations can be used for the control of actions (Hommel, 2009). Evidence for this notion has been provided by Elsner and Hommel (2001). Participants were required to press buttons in response to a visual stimulus and experienced the co-occurrence of their actions with specific tones. Importantly, these tones were irrelevant to the participants’ task. In a subsequent test phase, participants were presented with both tones again and were asked to press the buttons in response to the tones. The authors reported that participants’ responses were faster when the presented tone had previously been the consequence of the required action (i.e., had been perceived as an effect of that action) and concluded that the perception of the tone activated the corresponding motor program.

However, action knowledge is acquired not only through active action experience, but also through the observation of other people’s actions (e.g., Blandin, Lhuisset, & Proteau, 1999; Brass & Heyes, 2005; Cross, Kraemer, Hamilton, Kelley, & Grafton, 2009; Jeannerod, 2001; see also Bandura, 1977). One might, therefore, speculate that observers use information about other people’s actions and their outcomes to control their own actions. More precisely, the claim that all actions are cognitively represented and selected in terms of their effects (e.g., Hommel et al., 2001), together with the finding that action knowledge can also be acquired through mere observation, suggests that bidirectional action–effect associations can also be acquired by observational learning. Importantly, to use observed action–effect contingencies for controlling our own actions, we need to relate our own motor codes to the cognitive representation of the observed effect of another person’s action. Previous research has shown that the mere perception of someone else’s action facilitates the execution of the same action by the observer (Brass, Bekkering, Wohlschläger, & Prinz, 2000; Fadiga, Fogassi, Pavesi, & Rizzolatti, 1995; Prinz, 1997). Given this automatic motor activation during observation, we hypothesize that the motor codes associated with an observed action and the sensory representations of its observed outcome become activated simultaneously, so that a new bidirectional action–effect association can be acquired (cf. Elsner & Hommel, 2001).

In contrast to this consideration, however, motor and sensory codes might not become associated while others’ actions are observed. This alternative hypothesis has recently received support from findings suggesting that action–effect binding occurs only when people intend to elicit an effect with their action (Herwig, Prinz, & Waszak, 2007). More precisely, Herwig and colleagues showed that participants acquired an action–effect association only when their action was determined by their own choice, but not when their actions were triggered by external stimulus events. Importantly, when observing another person’s action, people do not intend to execute this action and merely perceive it as triggered by someone else. Following this argument, one would expect no action–effect binding to occur in such a situation.

Taken together, the aim of the present study was to integrate the ideas of observational learning and ideomotor action control and to examine whether the observation of another person’s actions and their effects results in an incidental learning of action–effect associations that modulates subsequent action execution (see Elsner & Hommel, 2001). In an action observation phase, the participants observed another actor pressing two buttons that triggered two different auditory effects. In a subsequent test phase, the same tones were presented as stimuli to which the participants had to react as quickly as possible with buttonpresses. A finding of faster responses in the test phase for stimulus–response (S–R) mappings that are compatible with the action–effect mappings in the observation phase (as compared with incompatible mappings) would provide evidence for the notion that participants acquire action–effect associations via the observation of others’ actions (i.e., social learning; Bandura, 1977).

Experiment 1

Method

Participants

A total of 24 students at Radboud University Nijmegen (18–31 years; 6 of them male) participated in the experiment in return for €8 or course credits.

Setup and stimuli

Participants were seated opposite the experimenter. An LED device was used to present the visual stimuli in the observation phase. The device was positioned in the middle of a table and contained two displays (77 × 18 mm), one facing the participant and the other facing the experimenter (viewing distance of approximately 75 cm; see Fig. 1). The participant could not see the experimenter’s display. Visual stimuli consisted of left- and right-pointing arrowheads. Two buzzer buttons (diameter, 5 cm; height, 3.5 cm) were placed at the left- and right-hand sides of the device, close to the experimenter’s side of the table. One of the buttons was red, the other black. Auditory stimuli were 400- and 800-Hz tones, presented for 200 ms simultaneously through two speakers placed to the left and right.

Procedure and design

Observation phase

At the beginning of each trial, arrowheads were presented on the displays of the LED device. The experimenter performed left and right buttonpress responses as instructed by the arrowhead on his display. Each button triggered one of the two different sound effects. On about 20% of the trials (randomly distributed), the arrowheads on the two displays pointed in opposite directions, so that the experimenter’s response, which followed his own display, appeared to the participant as a mistake.

Participants were informed that the tones were irrelevant to the task (see Elsner & Hommel, 2001) and were instructed to closely observe the experimenter’s actions and count the number of mistakes. An incorrect response occurred if the experimenter performed a buttonpress on the side that had not been indicated by the arrowhead on the participants’ display. Importantly, independently of the correctness of the responses, the buttonpresses were always coupled with the same tones. Participants were provided with a pen and a sheet of paper. Every time they registered a mistake, they had to cross out one of a row of circles on the sheet.

Each observation trial started with a fixation cross for 500 ms. After an interstimulus interval of 1,000 ms, an arrowhead was presented. It remained visible until the experimenter pressed one of the two buttons. The corresponding tone was presented 50 ms after the experimenter’s response. The next trial started after an intertrial interval of 1,500 ms.
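The trial sequence just described can be summarized as a simple event timeline. The following Python sketch computes event onsets for one observation trial from the model’s response latency. The function and field names are hypothetical, and two details are assumptions not stated in the text: the 1,000-ms interval is taken to start at fixation offset, and the intertrial interval is counted from tone offset (using the 200-ms tone duration given under Setup and stimuli).

```python
from dataclasses import dataclass

# Durations in ms, taken from the Method of Experiment 1.
FIXATION_MS = 500
ISI_MS = 1000           # assumed to start at fixation offset
TONE_DELAY_MS = 50      # tone onset after the buttonpress
TONE_DURATION_MS = 200
ITI_MS = 1500           # assumed to be counted from tone offset


@dataclass
class TrialTimeline:
    fixation_onset: int
    arrow_onset: int
    response_time: int
    tone_onset: int
    tone_offset: int
    next_trial_onset: int


def observation_trial(response_latency_ms: int, start_ms: int = 0) -> TrialTimeline:
    """Event onsets (ms) for one observation trial, given the model's response latency."""
    arrow_onset = start_ms + FIXATION_MS + ISI_MS
    # The arrowhead stays on screen until the model presses a button.
    response_time = arrow_onset + response_latency_ms
    tone_onset = response_time + TONE_DELAY_MS
    tone_offset = tone_onset + TONE_DURATION_MS
    return TrialTimeline(
        fixation_onset=start_ms,
        arrow_onset=arrow_onset,
        response_time=response_time,
        tone_onset=tone_onset,
        tone_offset=tone_offset,
        next_trial_onset=tone_offset + ITI_MS,
    )
```

For example, with a response latency of 400 ms the arrowhead appears at 1,500 ms, the tone starts at 1,950 ms, and, under the stated assumptions, the next trial begins at 3,650 ms.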

The observation phase comprised 300 trials composed of the factorial combination of two pointing directions of the arrowheads and the accuracy of the experimenter’s performance (i.e., 80% correct trials, 20% “mistakes”). Trials were presented in a randomized order. The action–effect mapping was counterbalanced across participants. For half of the participants, the right buttonpress elicited a high tone and the left buttonpress a low tone (mapping A), whereas the action–effect mapping was reversed for the other half of the participants (mapping B).
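A minimal sketch of how such a trial list could be generated for one participant, assuming that the 300 trials are split evenly over the two arrow directions (150 each), with 20% “mistake” trials within each direction (i.e., 120 correct and 30 mistake trials per direction). The helper names and the alternating assignment of mappings A and B are hypothetical; the text states only that the mapping was counterbalanced across participants.

```python
import random
from typing import Dict, List, Optional

N_TRIALS = 300
MISTAKE_RATE = 0.20

# Action-effect mappings from the text: which tone each button triggers.
MAPPINGS: Dict[str, Dict[str, str]] = {
    "A": {"right": "high", "left": "low"},
    "B": {"right": "low", "left": "high"},
}


def mapping_for(participant_id: int) -> str:
    """Hypothetical counterbalancing: alternate mappings A and B across participants."""
    return "A" if participant_id % 2 == 0 else "B"


def make_observation_trials(mapping: str, seed: Optional[int] = None) -> List[dict]:
    """Shuffled observation-phase trials for one participant."""
    rng = random.Random(seed)
    per_direction = N_TRIALS // 2                       # 150 trials per arrow direction
    n_mistakes = round(per_direction * MISTAKE_RATE)    # 30 "mistake" trials per direction
    trials = []
    for direction in ("left", "right"):
        opposite = "right" if direction == "left" else "left"
        for i in range(per_direction):
            mistake = i < n_mistakes
            # On "mistake" trials the model's display points the other way,
            # so the pressed button differs from the participant's arrowhead.
            button = opposite if mistake else direction
            trials.append({
                "participant_arrow": direction,
                "button": button,
                "mistake": mistake,
                # The tone depends only on the button pressed, never on correctness.
                "tone": MAPPINGS[mapping][button],
            })
    rng.shuffle(trials)
    return trials
```

Because the tone is looked up from the pressed button alone, the action–effect contingency is perfect on every trial, including the “mistake” trials, as the Procedure requires.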

Test phase

The procedure for the test phase followed exactly that of Elsner and Hommel (2001). Before leaving the room, the experimenter positioned the buttons in front of the participant. Participants were asked to discriminate between the presented tones and to respond as quickly and accurately as possible by pressing one of the two buttons. Participants were randomly assigned to one of two S–R mapping conditions. In the compatible condition, each tone had to be answered with the buttonpress that had produced it in the observation phase. In the incompatible condition, the relation was reversed: Participants had to respond to each tone with the buttonpress opposite to the one associated with that tone in the observation phase. Responses had to be given within 2,000 ms. The intertrial interval was 1,500 ms.

The test phase consisted of 100 randomly ordered trials (50 high and 50 low tones). Half of the participants in each condition had experienced action–effect mapping A in the observation phase, and the other half had experienced action–effect mapping B.
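The resulting 2 × 2 between-subjects arrangement (S–R compatibility × observed action–effect mapping) can be sketched as follows. This is an illustration under assumptions: the balanced-then-shuffled assignment is one hypothetical way of randomly filling the four cells equally, and all function names are invented for this example.

```python
import random
from itertools import product
from typing import Dict, List, Optional, Tuple

# Observation-phase action-effect mappings (button -> tone), as in the text.
MAPPINGS = {
    "A": {"right": "high", "left": "low"},
    "B": {"right": "low", "left": "high"},
}
FLIP = {"left": "right", "right": "left"}

# Four between-subjects cells: (compatible?, observed mapping).
CELLS: List[Tuple[bool, str]] = list(product((True, False), ("A", "B")))


def sr_mapping(observed: str, compatible: bool) -> Dict[str, str]:
    """Tone -> required button in the test phase."""
    # Invert button->tone so that each tone points to the button that produced it.
    tone_to_button = {tone: button for button, tone in MAPPINGS[observed].items()}
    if compatible:
        return tone_to_button
    # Incompatible condition: respond with the opposite button.
    return {tone: FLIP[button] for tone, button in tone_to_button.items()}


def assign_cells(n_participants: int, seed: Optional[int] = None) -> List[Tuple[bool, str]]:
    """Balanced random assignment of participants to the four cells."""
    rng = random.Random(seed)
    cells = [CELLS[i % len(CELLS)] for i in range(n_participants)]
    rng.shuffle(cells)
    return cells


def make_test_tones(seed: Optional[int] = None) -> List[str]:
    """100 randomly ordered test trials: 50 high and 50 low tones."""
    rng = random.Random(seed)
    tones = ["high"] * 50 + ["low"] * 50
    rng.shuffle(tones)
    return tones
```

With 24 participants, this scheme places 6 participants in each cell, so that half of each compatibility group has observed mapping A and half mapping B.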

Results

Reaction times (RTs) were measured relative to tone onset (see Fig. 2 for means). Trials with incorrect responses or no response, as well as trials with RTs deviating by more than two standard deviations from the mean RT, were excluded from the subsequent analyses. The 100 trials were divided into three blocks of 33 or 34 trials each. Mean RTs were calculated and submitted to an analysis of variance (ANOVA) with the between-subjects factor of compatibility (compatible, incompatible) and the within-subjects factor of block (1, 2, 3). The RT analysis revealed a main effect of compatibility, F(1, 22) = 7.86, p = .01, ηp² = .26: Response latencies in the compatible group (371 ms) were significantly shorter than those in the incompatible group (427 ms). There were no other significant effects (all ps > .25).

Fig. 2 Mean reaction times in Experiments 1 and 2. Dark bars represent reaction times in the compatible condition, light bars those in the incompatible condition. Error bars indicate standard errors.
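The exclusion and blocking steps described in the Results can be sketched as follows for a single participant. The sketch assumes, as the text does not specify, that the two-standard-deviation criterion is applied per participant to all correct, answered trials, and that the 100 trials are split by position into blocks of 33, 33, and 34 trials; the trimming scope and exact split used in the original analysis may differ.

```python
from statistics import mean, stdev
from typing import List, Optional


def block_means(rts: List[Optional[float]], correct: List[bool],
                n_blocks: int = 3, sd_cutoff: float = 2.0) -> List[float]:
    """Mean RT per block for one participant.

    rts: RT in ms for each trial, in presentation order (None = no response).
    correct: whether each response was correct.
    """
    # Assumed trimming scope: bounds computed per participant from all
    # correct, answered trials.
    valid = [rt for rt, ok in zip(rts, correct) if ok and rt is not None]
    m, sd = mean(valid), stdev(valid)
    lo, hi = m - sd_cutoff * sd, m + sd_cutoff * sd

    # Split by trial position; with 100 trials this yields 33, 33, and 34 trials.
    n = len(rts)
    bounds = [k * n // n_blocks for k in range(n_blocks + 1)]

    means = []
    for start, stop in zip(bounds, bounds[1:]):
        kept = [rt for rt, ok in zip(rts[start:stop], correct[start:stop])
                if ok and rt is not None and lo <= rt <= hi]
        means.append(mean(kept))
    return means
```

The per-participant block means returned here are the values that would enter the compatibility × block ANOVA.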

Discussion

Responses in the test phase of Experiment 1 were faster when the S–R mapping was compatible with the action–effect mapping of another person’s actions in the observation phase than when it was incompatible. This suggests that participants had acquired action–effect associations by observational learning.

What are the underlying cognitive mechanisms? Previous research has provided evidence for motor activation when participants merely observe the action of another person. In particular, it has been suggested that when performing an action (e.g., a hand movement), people associate the visual effect of this action (e.g., the moving hand) with the activated motor code (Catmur, Walsh, & Heyes, 2007; Heyes, 2010). When they subsequently perceive the hand movement of another person, the associated motor code becomes activated, and the action is thus “mirrored.” We suggest that in our experiment, the perception of the other’s buttonpress (i.e., hand action) led to an activation of the corresponding motor code in the observer’s own motor system. When the observer concurrently perceived the auditory effect of the hand action, the representation of the effect (i.e., effect code) became associated with the activated motor code by means of Hebbian learning (see Hommel et al., 2001; Keysers & Perrett, 2004). The presentation of the tone in the subsequent test phase then led to an activation of the associated motor code and, thus, to facilitation of the respective action in the compatible condition (see Elsner & Hommel, 2001).
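The proposed mechanism can be illustrated with a toy Hebbian model. This is not the authors’ model: it is a hypothetical sketch in which motor and effect codes are binary units, the mirrored motor code is simply assumed to be active whenever a buttonpress is observed, and the learning rate is arbitrary. A single set of weights is read in both directions, which is what makes the learned association bidirectional.

```python
from typing import Dict, Iterable, Tuple

MOTOR = ("left", "right")   # motor codes for the two buttonpresses
EFFECT = ("low", "high")    # sensory codes for the two tones
Weights = Dict[Tuple[str, str], float]


def hebbian_learning(events: Iterable[Tuple[str, str]], rate: float = 0.1) -> Weights:
    """Accumulate motor-effect weights with a plain Hebbian rule: dw = rate * pre * post."""
    w: Weights = {(m, e): 0.0 for m in MOTOR for e in EFFECT}
    for active_motor, active_effect in events:
        for m in MOTOR:
            for e in EFFECT:
                # Activation is 1 for the currently active codes (the mirrored
                # motor code and the heard tone) and 0 otherwise.
                pre = 1.0 if m == active_motor else 0.0
                post = 1.0 if e == active_effect else 0.0
                w[(m, e)] += rate * pre * post
    return w


def motor_activation(w: Weights, tone: str) -> Dict[str, float]:
    """Effect -> action direction: which motor code does a perceived tone prime?"""
    return {m: w[(m, tone)] for m in MOTOR}


def effect_activation(w: Weights, motor: str) -> Dict[str, float]:
    """Action -> effect direction: which tone does a motor code anticipate?"""
    return {e: w[(motor, e)] for e in EFFECT}
```

After observing mapping A (right→high, left→low), `motor_activation(w, "high")` favors the right motor code. In the compatible test condition this preactivates the required response; in the incompatible condition it preactivates the competing one.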

Experiment 2

The aim of Experiment 2 was twofold. First, it was designed to examine whether the observation of a real action is necessary to acquire novel action–effect contingencies or whether the mere belief that an observed effect was caused by another person’s action would be sufficient (see Sebanz, Knoblich, & Prinz, 2005). To investigate this question, participants in Experiment 2 experienced co-occurrences of a visual stimulus (a yellow circle) presented on the right or left side of the screen, followed by one of two auditory events (i.e., a low or a high tone). By means of a cover story, participants were led to believe that the circles on the left and right sides of the screen indicated another human agent’s left or right buttonpresses (action belief condition; see the Procedure section of Experiment 2). On the basis of findings that action observation and action imagination share common features (e.g., Jeannerod, 2001; Munzert, Lorey, & Zentgraf, 2009), one would expect the same effects as in Experiment 1.

The second aim of the experiment was to control for a possible alternative explanation of the findings in Experiment 1. At this point, it cannot be excluded that the faster responses in the compatible condition of Experiment 1 were driven by learned associations between two perceptual features, that is, between a spatial event feature (e.g., left) and a particular tone (e.g., low tone). When this tone was presented again in the test phase, participants might have responded faster with the corresponding buttonpress because the perception of this specific tone primed actions on the side that had been associated with it. In that case, participants’ facilitated responding would have resulted from a previously acquired association of visuospatial feature codes with different sounds (i.e., perceptual associations), rather than from the acquisition of action–effect associations.

Consequently, half of the participants in Experiment 2 followed the same protocol as the participants in the action belief condition, with the only difference that no cover story was presented to them (nonaction belief condition). That means that the participants experienced the co-occurrences of the visual stimuli (i.e., yellow circles) and the auditory stimuli (i.e., tones) without linking these events to an action. If the effect of Experiment 1 was driven only by an association of visuospatial feature codes with different sounds, the nonaction belief condition should result in the same pattern of effects (i.e., a facilitation in the compatible condition). If, however, the effect of Experiment 1 was due to the acquisition of action–effect associations, no facilitation effects would be expected in this condition.

Method

Participants

A total of 48 students at Radboud University Nijmegen (18–33 years; 12 of them male) participated in the experiment in return for €8 or course credits.

Setup and stimuli

Participants were seated in front of a computer screen with no other person present in the room. On the screen, white left- or right-pointing arrowheads were displayed centrally on a black background. Additionally, yellow circles appeared as targets on either the right or the left side of the screen. Motor responses and auditory effects were the same as in Experiment 1.

Procedure and design

Observation phase

The procedure was similar to that in Experiment 1. Instead of a human model pressing the right or left button, participants in both conditions of Experiment 2 (action belief and nonaction belief) viewed left or right target circles preceded by arrowheads. The interstimulus interval varied randomly between 250 and 1,250 ms (matching the experimenter’s performance in Experiment 1). Each appearance of a circle was followed by a tone. As in Experiment 1, participants were asked to indicate mismatches between arrow direction and target position (20% of the trials).
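A hypothetical sketch of an Experiment 2 observation sequence. Several details are assumptions rather than reported facts: the number of trials is left as a parameter (the text does not state it), the arrowhead-to-circle interval is drawn as a whole number of milliseconds, arrow directions are drawn at random rather than balanced, and the side-to-tone mapping is assumed to be counterbalanced as in Experiment 1.

```python
import random
from typing import Dict, List, Optional

FLIP = {"left": "right", "right": "left"}


def make_exp2_observation_trials(n_trials: int, side_to_tone: Dict[str, str],
                                 mismatch_rate: float = 0.20,
                                 seed: Optional[int] = None) -> List[dict]:
    """Circle-and-tone trials; on mismatch trials the circle appears opposite the arrow."""
    rng = random.Random(seed)
    n_mismatch = round(n_trials * mismatch_rate)
    flags = [True] * n_mismatch + [False] * (n_trials - n_mismatch)
    rng.shuffle(flags)
    trials = []
    for mismatch in flags:
        arrow = rng.choice(("left", "right"))
        circle = FLIP[arrow] if mismatch else arrow
        trials.append({
            "arrow": arrow,
            "circle": circle,
            # Arrowhead-to-circle interval, uniform over 250-1,250 ms (inclusive).
            "circle_delay_ms": rng.randint(250, 1250),
            # The tone depends only on the circle's side.
            "tone": side_to_tone[circle],
            "mismatch": mismatch,
        })
    return trials
```

In both conditions the stimulus sequence would be identical; only the instructions (action belief vs. nonaction belief) differ.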

Importantly, the participants in the action belief condition were scheduled in pairs. When they arrived at the lab, they were told that they were going to perform a task together on two different computers. Each of them was brought into a separate room and instructed in the same way as the participants in the nonaction belief condition, with the important difference that they were told that the circles were caused by the other person’s buttonpresses. Before the observation phase started, the experimenter demonstrated that the circles could be caused by a buttonpress (e.g., pressing the right button caused a circle to appear on the right side of the screen). The participants were then told that in the first phase of the experiment, their partner would perform an RT task, namely, pressing buttons in response to the arrowheads on the screen. It was mentioned that their screen was an exact copy of their partner’s screen, so that they could see both the arrowheads and their partner’s reactions to them, as indicated by the circle positions.

Test phase

The test phase was identical to that in Experiment 1.

Results

Mean RTs were calculated and submitted to an ANOVA with the between-subjects factors of compatibility (compatible, incompatible) and instruction (action belief, nonaction belief) and the within-subjects factor of block (1, 2, 3). The analysis revealed a main effect of block, F(2, 43) = 7.76, p < .01, ηp² = .27, but no main effect of compatibility, F < 1, or instruction, F(1, 44) = 2.47, p = .12, ηp² = .05, and no interaction between compatibility and instruction, F < 1 (see Fig. 2). Post hoc t-tests revealed that response latencies were significantly longer in the first block (390 ms) than in the second (369 ms) and third (371 ms) blocks, t(47) = 3.94, p < .001, and t(47) = 3.07, p < .01, respectively. This indicates that participants became faster over time, suggesting a practice effect.
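The reported effect sizes can be checked against the F values and degrees of freedom with the standard identity ηp² = F·df_effect / (F·df_effect + df_error). The short sketch below applies it to the F tests reported in Experiments 1 and 2; the function name is chosen for this example.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


reported = [
    ("Exp. 1, compatibility", 7.86, 1, 22),
    ("Exp. 2, block", 7.76, 2, 43),
    ("Exp. 2, instruction", 2.47, 1, 44),
]
for label, f_value, df1, df2 in reported:
    print(f"{label}: {partial_eta_squared(f_value, df1, df2):.2f}")
# Exp. 1, compatibility: 0.26
# Exp. 2, block: 0.27
# Exp. 2, instruction: 0.05
```

All three recomputed values agree with the effect sizes given in the text.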

To investigate whether the null effect in Experiment 2 was due to a lack of power, we performed a post hoc power analysis. Given the conventional significance level of α = .05 and a sample size of 48 participants, this analysis revealed an excellent statistical power of (1 − β) = .98 for detecting a compatibility main effect of η² = .23 (i.e., the same size as the effect observed in Experiment 1). This suggests that Experiment 2 had sufficient power to detect possible differences between the conditions.
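The logic of such a power analysis can be illustrated with a Monte Carlo sketch under simplifying assumptions. These assumptions are not the authors’ procedure: compatibility is treated as a between-subjects factor in a 2 × 2 design with 12 participants per cell, errors are normal, instruction has no effect, and the repeated block measurements are ignored. Because of these simplifications, the sketch need not reproduce the reported (1 − β) = .98, which may rest on different design assumptions. The critical value F(1, 44) ≈ 4.06 is hard-coded.

```python
import math
import random
from typing import Dict, List, Tuple

F_CRIT = 4.06   # critical F(1, 44) at alpha = .05 (approximately 2.015 squared)
CELL_N = 12     # 48 participants spread over a 2 x 2 between-subjects design


def _avg(values: List[float]) -> float:
    return sum(values) / len(values)


def compatibility_f(rng: random.Random, shift: float) -> float:
    """Simulate one data set and return F for the compatibility main effect."""
    cells: Dict[Tuple[int, int], List[float]] = {}
    for comp in (0, 1):
        for instr in (0, 1):
            mu = shift if comp else -shift   # no instruction effect assumed
            cells[(comp, instr)] = [rng.gauss(mu, 1.0) for _ in range(CELL_N)]

    # Error term: pooled within-cell variance (df = 48 - 4 = 44).
    ss_within = 0.0
    for values in cells.values():
        m = _avg(values)
        ss_within += sum((x - m) ** 2 for x in values)
    ms_within = ss_within / (4 * (CELL_N - 1))

    # Effect term: compatibility levels pooled over instruction (df = 1).
    grand = _avg([x for values in cells.values() for x in values])
    ss_comp = 0.0
    for comp in (0, 1):
        level = cells[(comp, 0)] + cells[(comp, 1)]
        ss_comp += len(level) * (_avg(level) - grand) ** 2
    return ss_comp / ms_within


def simulated_power(eta_sq: float = 0.23, n_sims: int = 4000, seed: int = 0) -> float:
    """Share of simulated experiments in which the compatibility effect is significant."""
    # Cohen's f from eta squared; group means at -f and +f with SD 1
    # give a population eta squared equal to eta_sq.
    f = math.sqrt(eta_sq / (1.0 - eta_sq))
    rng = random.Random(seed)
    hits = sum(compatibility_f(rng, f) > F_CRIT for _ in range(n_sims))
    return hits / n_sims
```

Under these assumptions, `simulated_power()` returns a value of roughly .96, which likewise indicates high power for an effect of the size observed in Experiment 1.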

Discussion

The results of Experiment 2 show that participants did not respond faster in the compatible than in the incompatible condition, in either the nonaction belief or the action belief condition. This suggests that no bidirectional action–effect association was acquired.

One aim of Experiment 2 was to examine whether an acquired association between tones and the spatial perceptual features of left and right would affect subsequent left and right buttonpresses when the tones later served as response signals (nonaction belief condition). The fact that participants did not respond faster in the compatible condition allows the conclusion that merely perceiving visuospatial features together with tones does not facilitate subsequent responses in the corresponding left or right action space. This suggests that participants’ faster responses in the compatible condition of Experiment 1 cannot have been due to simple perceptual priming based on a previously acquired association between a visuospatial feature and a tone.

Moreover, Experiment 2 tested whether the mere belief that these spatial perceptual features were the consequences of another person’s actions allowed participants to acquire action–effect associations through observational learning (action belief condition). In this condition, we found no evidence that participants acquired a novel action–effect association. There are three possible explanations for this finding. First, one could argue that merely imagining a goal-directed action does not lead to an action representation comparable to that evoked by observing a goal-directed action (see also Cattaneo, Caruana, Jezzini, & Rizzolatti, 2009), even though the two share many features (e.g., Jeannerod, 2001). Second, we assumed that the verbal description of the other person performing an action would lead to an activation of the respective motor code (see Paulus, Lindemann, & Bekkering, 2009). However, participants were not directly asked to imagine the other’s action, and different results might have been obtained had they been asked to do so. Third, since we used yellow circles to indicate the action of the other person, participants may have learned the circles, rather than the tones, as effects of the other’s action (see Ziessler, Nattkemper, & Frensch, 2004). Future research is thus necessary to examine whether action–effect associations can be acquired through the mere belief that another person has performed an action.

General discussion

The present study shows that bidirectional action–effect associations can also be acquired through the observation of other people’s actions and their effects and, thereby, extends the ideomotor approach to the realm of observational learning. These findings have implications for notions regarding the acquisition of S–R mappings and for social learning theories.

Recent research has shown that an acquired action–effect mapping affects participants’ subsequent performance on an S–R task when the previous effect of an action serves as the stimulus to which participants have to respond with the same action (Elsner & Hommel, 2001; Kunde et al., 2002). Our study adds to these results the finding that observed actions performed by others and their effects also affect subsequent S–R tasks. Importantly, these effects were present only when the associations were experienced in an action context, but not when participants merely believed that an outcome was caused by another person’s action. Our results thus extend recent theoretical approaches to perception–action coupling (Heyes, 2010; Hommel et al., 2001; Keysers & Perrett, 2004) and provide the first empirical evidence that people can acquire bidirectional associations between actions and distal effects through observing others’ actions.

Interestingly, it has recently been suggested that action–effect associations are acquired only if someone intends to produce an effect by his or her action (Herwig et al., 2007). Our finding of observation-based action–effect learning does not necessarily contradict this proposal. Rather, it shows that the processes involved in observing another person’s action share crucial features with those involved in the planning and execution of intentional action (e.g., Jeannerod, 2001). Future research is necessary to investigate in greater detail which features are necessary for action–effect binding to occur.

Our finding, moreover, has implications for theories of social learning (Bandura, 1977; Miller & Dollard, 1941), since it suggests a cognitive mechanism that enables humans to learn through the observation of others’ actions. Whereas it has been established that humans learn through the observation of others’ actions (Cross et al., 2009; Torriero, Oliveri, Koch, Caltagirone, & Petrosini, 2007), enabling them to avoid costly learning by trial and error, the cognitive basis behind this ability has remained a topic of intense discussion (e.g., Brass & Heyes, 2005). The present findings provide evidence that the acquisition of bidirectional action–effect associations might be a crucial mechanism that allows humans to acquire novel action knowledge through the observation of others’ actions (Paulus, Hunnius, Vissers, & Bekkering, 2011).

In particular, we suggest that the perception of an action leads to the activation of the same motor code in the observer’s own motor repertoire. When the effect of this action is perceived concurrently with the action, the representation of the effect (perceptual code) will be associated with the activated motor code, leading to the acquisition of a novel action–effect association (see Elsner & Hommel, 2001). When, on a later occasion, the same effect is perceived or intended and the perceptual code is thus activated, the associated motor code will also be activated, leading to or facilitating the execution of the action (Hommel et al., 2001). We propose that the acquisition of bidirectional action–effect associations through observation might play an important role in the uniquely human ability for social learning and imitation of different behaviors, such as aggressive behavioral tendencies in children (Bandura, Ross, & Ross, 1961) or the acquisition of novel action knowledge already seen in infancy (Elsner & Aschersleben, 2003; Paulus et al., 2011). Future research is needed to examine the scope and limitations of this cognitive mechanism, as well as possible differences between action–effect associations acquired through observation and those acquired through active action experience.

In sum, the present study demonstrates an influence of observed action–effect contingencies on own-action execution. Our results suggest that bidirectional action–effect associations can be acquired via observation and that this cognitive mechanism might underlie the human ability for social learning.

References

Bandura, A. (1977). Social learning theory. Englewood Cliffs, NJ: Prentice Hall.

Bandura, A., Ross, D., & Ross, S. A. (1961). Transmission of aggression through imitation of aggressive models. Journal of Abnormal and Social Psychology, 63, 575–582.

Blandin, Y., Lhuisset, L., & Proteau, L. (1999). Cognitive processes underlying observational learning of motor skills. Quarterly Journal of Experimental Psychology, 52A, 957–979.

Brass, M., Bekkering, H., Wohlschläger, A., & Prinz, W. (2000). Compatibility between observed and executed finger movements: Comparing symbolic, spatial, and imitative cues. Brain and Cognition, 44, 124–143.

Brass, M., & Heyes, C. M. (2005). Imitation: Is cognitive neuroscience solving the correspondence problem? Trends in Cognitive Sciences, 9, 489–495.

Cattaneo, L., Caruana, F., Jezzini, A., & Rizzolatti, G. (2009). Representation of goal and movements without overt motor behaviour in the human motor cortex: A transcranial magnetic stimulation study. Journal of Neuroscience, 29, 11134–11138.

Catmur, C., Walsh, V., & Heyes, C. M. (2007). Sensorimotor learning configures the human mirror neuron system. Current Biology, 17, 1527–1531.

Cross, E. S., Kraemer, D. J. M., Hamilton, A. F. de C., Kelley, W. M., & Grafton, S. T. (2009). Sensitivity of the action observation network to physical and observational learning. Cerebral Cortex, 19, 315–326.

Elsner, B., & Aschersleben, G. (2003). Do I get what you get? Learning about effects of self-performed and observed actions in infants. Consciousness and Cognition, 12, 732–751.

Elsner, B., & Hommel, B. (2001). Effect anticipation and action control. Journal of Experimental Psychology: Human Perception and Performance, 27, 229–240.

Fadiga, L., Fogassi, L., Pavesi, G., & Rizzolatti, G. (1995). Motor facilitation during action observation: A magnetic stimulation study. Journal of Neurophysiology, 73, 2608–2611.

Herwig, A., Prinz, W., & Waszak, F. (2007). Two modes of sensorimotor integration in intention-based and stimulus-based actions. Quarterly Journal of Experimental Psychology, 60, 1540–1554.

Heyes, C. M. (2010). Where do mirror neurons come from? Neuroscience and Biobehavioral Reviews, 34, 575–583.

Hommel, B. (2009). Action control according to TEC (theory of event coding). Psychological Research, 73, 512–526.

Hommel, B., Müsseler, J., Aschersleben, G., & Prinz, W. (2001). The theory of event coding (TEC): A framework for perception and action planning. Behavioral and Brain Sciences, 24, 849–878.

Jeannerod, M. (2001). Neural simulation of action: A unifying mechanism for motor cognition. NeuroImage, 14, S103–S109.

Keysers, C., & Perrett, D. I. (2004). Demystifying social cognition: A Hebbian perspective. Trends in Cognitive Sciences, 8, 501–507.

Kühn, S., Elsner, B., Prinz, W., & Brass, M. (2009). Busy doing nothing: Evidence for nonaction-effect binding. Psychonomic Bulletin & Review, 16, 542–549.

Kunde, W., Hoffmann, J., & Zellmann, P. (2002). The impact of anticipated action effects on action planning. Acta Psychologica, 109, 137–155.

Miller, N. E., & Dollard, J. (1941). Social learning and imitation. New Haven, CT: Yale University Press.

Munzert, J., Lorey, B., & Zentgraf, K. (2009). Cognitive motor processes: The role of motor imagery in the study of motor representations. Brain Research Reviews, 60, 306–326.

Paulus, M., Hunnius, S., Vissers, M., & Bekkering, H. (2011). Bridging the gap between the other and me: The functional role of motor resonance and action effects in infants’ imitation. Developmental Science, 14, 901–910. doi:10.1111/j.1467-7687.2011.01040.x

Paulus, M., Lindemann, O., & Bekkering, H. (2009). Motor simulation in verbal knowledge acquisition. Quarterly Journal of Experimental Psychology, 62, 2298–2305.

Prinz, W. (1997). Perception and action planning. European Journal of Cognitive Psychology, 9, 129–154.

Sebanz, N., Knoblich, G., & Prinz, W. (2005). How to share a task: Corepresenting stimulus–response mappings. Journal of Experimental Psychology: Human Perception and Performance, 31, 1234–1246.

Torriero, S., Oliveri, M., Koch, G., Caltagirone, C., & Petrosini, L. (2007). The what and how of observational learning. Journal of Cognitive Neuroscience, 19, 1656–1663.

Ziessler, M., Nattkemper, D., & Frensch, P. A. (2004). The role of anticipation and intention in the learning of effects of self-performed action. Psychological Research, 68, 163–175.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.


Additional information

We thank Gunda Carina Echeverria for help with data acquisition and Angela Khadar for proofreading the manuscript.


About this article

Cite this article

Paulus, M., van Dam, W., Hunnius, S. et al. Action-effect binding by observational learning. Psychon Bull Rev 18, 1022–1028 (2011). https://doi.org/10.3758/s13423-011-0136-3
