Abstract

This paper reviews Vlasov-based numerical methods used to model plasma in space physics and astrophysics. Plasma consists of collectively behaving charged particles that form the major part of baryonic matter in the Universe. Many concepts ranging from our own planetary environment to the Solar system and beyond can be understood in terms of kinetic plasma physics, represented by the Vlasov equation. We introduce the physical basis for the Vlasov system, and then outline the associated numerical methods that are typically used. A particular application of the Vlasov system is Vlasiator, the world’s first global hybrid-Vlasov simulation for the Earth’s magnetic domain, the magnetosphere. We introduce the design strategies for Vlasiator and outline its numerical concepts ranging from solvers to coupling schemes. We review Vlasiator’s parallelisation methods and introduce the high-performance computing (HPC) techniques it uses. A short review of verification, validation and physical results is included. The purpose of the paper is to present the Vlasov system and introduce an example implementation, and to illustrate that even with massive computational challenges, an accurate description of physics can be rewarding in itself and significantly advance our understanding. Upcoming supercomputing resources are making similar efforts feasible in other fields as well, making our design options relevant for others facing similar challenges.


1 Introduction

While physical understanding is inherently based on empirical evidence, numerical simulation tools have become an integral part of the majority of fields within physics. When tested against observations, numerical models can strengthen or invalidate existing theories and quantify the degree to which the theories have to be improved. Simulation results can also complement observations by giving them a larger context. In space physics, spacecraft measurements concern only one point at one time in the vast volume of space, which makes it difficult to discern spatial phenomena from temporal changes. This shortcoming has led to the use of spacecraft constellations, like the European Space Agency’s Cluster mission (Escoubet et al. 2001). However, simulations are considerably more cost-effective than spacecraft, and they can be applied to physical systems that cannot be reached by in situ experiments, like distant galaxies. Finally, and most importantly, predictions of physical environments under varying conditions are always based on modelling. Predicting the near-Earth environment in particular has become increasingly important, not least because near-Earth space hosts expensive assets used to monitor our planet. The space environmental conditions threatening space- or ground-based technology or human life are commonly termed space weather. Space weather predictions include two types of modelling efforts: those targeting real-time modelling (similar to terrestrial weather models), and those which test and improve the current space physical understanding together with top-tier experiments. This paper concerns the latter approach.

The physical conditions within the near-Earth space are mostly determined by physics of collisionless plasmas, where the dominant physical interactions are caused by electromagnetic forces over a collection of charged particles. There are three main approaches to model plasmas: (1) the fluid approach (e.g., magnetohydrodynamics, MHD), (2) the fully kinetic approach, and (3) hybrid approaches combining the first two. Present global models including the entire near-Earth space in three dimensions (3D) and resolving the couplings between different regions are largely based on MHD (e.g., Janhunen et al. 2012). However, single-fluid MHD models are basically scale-less in that they assume that plasmas have a single temperature approximated by a Maxwellian distribution. Therefore they provide a limited context to the newest space missions, which produce high-fidelity multi-point observations of spatially overlapping multi-temperature plasmas. The second approach uses a kinetic formulation as represented by the Vlasov theory (Vlasov 1961). In this approach, plasmas are treated as velocity distribution functions in a six-dimensional phase space consisting of three-dimensional ordinary space (3D) and a three-dimensional velocity space (3V). The majority of kinetic simulations model the Vlasov theory by a particle-in-cell (PIC) method (Lapenta 2012), where a large number of particles are propagated within the simulation, and the distribution function is constructed from particle statistics in space and time. The fully kinetic PIC approach means that both electrons and protons are treated as particles within the simulation. Such simulations in 3D are computationally extremely costly, and can only be carried out in local geometries (e.g., Daughton et al. 2011).

A hybrid approach in the kinetic simulation regime usually means that electrons are treated with a fluid description, but protons and heavier ions are treated kinetically. Again, the vast majority of simulations use a hybrid-PIC approach. Such simulations have previously considered 2D spatial regimes due to computational challenges (e.g., Omidi et al. 2005; Karimabadi et al. 2014), but have recently been extended into 3D using a limited resolution (e.g., Lu et al. 2015; Lin et al. 2017). This paper does not discuss the details of the PIC approach, but instead concentrates on a hybrid-Vlasov method, where the ion velocity distribution is discretised and modelled with a 3D–3V grid. The difference to hybrid-PIC is that in hybrid-Vlasov the distribution functions are evolved in time as an entity, and not constructed from particle statistics. The main advantage is therefore that the distribution function becomes noiseless. This can be important for the problem at hand, because the distribution function is in many respects the core of plasma physics as the majority of the plasma parameters and processes can be derived from it. As will be described, hybrid-Vlasov methods have been used mostly in local geometries, because the 3D–3V requirement implies a large computational cost. A global approach, which in space physics means simulation box sizes exceeding thousands of ion inertial lengths or gyroradii per dimension, has not been possible, because every cell of the large spatial volume must also carry its own velocity space. The world’s (so far) only global magnetospheric hybrid-Vlasov simulation, the massively parallel Vlasiator, is therefore the prime application in this article.

This paper is organised as follows: Sect. 2 introduces the typical plasma systems and relevant processes one encounters in space. Sections 3 and 4 introduce the Vlasov theory and its numerical representations. Section 5 describes Vlasiator in detail and justifies the decisions made in the design of the code to aid those who would like to design their own (hybrid-)Vlasov system. At the time of writing, there are no standard verification cases for a (hybrid-)Vlasov system, but we describe the test cases used for Vlasiator. The physical findings are then illustrated briefly, showing that Vlasiator has made a paradigm change in space physics, emphasising the role of scale coupling in large-scale plasma systems. While this paper concerns mostly the near-Earth environment, we hope it is useful for astrophysical applications as well. Astrophysical large-scale modelling is still mostly based on non-magnetised gas (Springel 2005; Bryan et al. 2014), while in reality astrophysical objects are in the plasma state. In the future, pending new supercomputer infrastructure, it may be possible to design astrophysical simulations based on MHD first, and later possibly on kinetic theories. If this becomes feasible, we hope that our design strategies, complemented and validated by in situ measurements, can be helpful.

2 Kinetic physics in astrophysical plasmas

Thermal and non-thermal interactions between charged particles and electromagnetic fields follow the same basic rules throughout the universe, but the applicability of simplified theories and the relevant spatial, temporal, and virial scales vary greatly between different scopes of research. In this section, we present an overview of regions of interest and the phenomena found within them.

2.1 Astrophysical media and objects

Prime examples of themes requiring modelling include the dynamics of hot, cold, and dark matter in an expanding universe with unknown boundaries. The birth of the universe connects the rapid expansion and cooling of baryonic matter with quantum fluctuation anisotropies that eventually lead to the formation of galactic superclusters. Astrophysical simulations of the universe should naturally account for expansion of space-time and associated effects of general relativity, and modelling of high-energy phenomena should correctly account for special relativity due to velocities approaching the speed of light. A recent forerunner in modelling the universe is EAGLE (Schaye et al. 2015), which utilises smoothed particle hydrodynamics, with subgrid modelling of star formation, radiative cooling, stellar mass loss, and feedback from stars and accreting black holes. These simulations operate on much larger scales than those addressed by the Vlasov equation for ions and electrons, yet they depend strongly on knowledge of processes at smaller length and time scales. Because the majority of the universe consists of the mostly empty interstellar and intergalactic media, the energy content of turbulent space plasmas must be understood. This has been investigated through the Vlasov equation (see, e.g., Weinstock 1969). Conversely, turbulent behaviour at large scales can act as a model for extending power laws to smaller scales (Maier et al. 2009). An alternative, if less common, approach for modelling galactic dynamics is to describe the distribution of stars as a Vlasov–Poisson system, to be explained below, with gravitational force terms instead of electromagnetic effects (Guo and Li 2008). This approach highlights the use of the Vlasov equation also on large spatial scales.

2.2 Solar system

Plasma simulations of the solar system are mostly concerned with the modelling of solar activity and its influence on the heliosphere. Solar activity can be divided into two components: the solar wind, consisting of particles escaping continuously from the solar corona due to its thermal expansion, and carrying with them turbulent fields; and transient phenomena such as flares and coronal mass ejections, during which energy and plasma are released explosively from the Sun into the heliosphere. Topics of active research in solar physics include for example the acceleration and expansion of the solar wind (Yang et al. 2012; Verdini et al. 2010; Pinto and Rouillard 2017), coronal heating (De Moortel and Browning 2015; Cranmer et al. 2017) and flux emergence (Schmieder et al. 2014). The latter is particularly important for transient solar activity, as flares and coronal mass ejections are due to the destabilisation of coronal magnetic structures through magnetic reconnection. The typical length of these coronal structures ranges between \(10^{6}\) and \(10^{8}\) m. Of great interest is also the propagation of the solar wind and solar transients into the heliosphere, in particular for studying their interaction with Earth and other planetary environments. Because of the large scales of the systems considered, solar and heliospheric simulations are generally based on MHD, indicating that currently existing theories of the Sun and the solar eruption are mostly based on the MHD approximation. Applying the Vlasov approach to near-Earth physics, having important analogies to the solar plasmas, may therefore provide important feedback to existing solar theories as well.

2.3 Near-Earth space and other planetary environments

Figure 1 illustrates the near-Earth space. The shock separating the terrestrial magnetic domain from the solar wind is called the bow shock (e.g., Omidi 1995), and the region of shocked plasma downstream is the magnetosheath (Balogh and Treumann 2013). The interplanetary magnetic field (IMF), which at 1 AU typically forms an angle of 45\(^{\circ }\) relative to the plasma flow direction, intensifies at the shock, increasing the magnetic field strength roughly fourfold compared to that in the solar wind (e.g., Spreiter and Stahara 1994).

The bow shock–magnetosheath system hosts highly variable and turbulent environmental conditions, with the bow shock normal angle with respect to the IMF direction being one of the most important factors controlling the level of variability. At portions of the bow shock where the IMF is quasi-parallel with the bow shock normal (termed quasi-parallel shock), some particles reflect at the shock and propagate back upstream causing instabilities and waves in the foreshock upstream of the bow shock (e.g., Hoppe et al. 1981). On the quasi-perpendicular side of the shock, where the IMF direction is more perpendicular to the bow shock normal, the downstream magnetosheath is much smoother, but exhibits large-scale waves originating from anisotropies in the ion distribution function (e.g., Génot et al. 2011; Soucek et al. 2015; Hoilijoki et al. 2016). The foreshock–bow shock–magnetosheath coupled system is under active research, and since it is the magnetosheath plasma which ultimately determines the conditions within the near-Earth space, the most important open questions include the processes which determine the plasma characteristics in space and time. The entire system has previously been modelled with MHD, which can be used to infer average properties of the dayside system (e.g., Palmroth et al. 2001; Chapman and Cairns 2003; Dimmock and Nykyri 2013; Mejnertsen et al. 2018), but which cannot take into account particle reflection, kinetic waves, or turbulence, and which neglects, e.g., plasma asymmetries between the quasi-parallel and quasi-perpendicular sides of the shock that require a non-Maxwellian ion distribution function.

The earthward boundary of the magnetosheath is called the magnetopause, a current layer exhibiting large gradients in the plasma parameter space. Energy and mass exchange between the upstream plasma and the magnetosphere occurs at the magnetopause (Palmroth et al. 2003, 2006c; Pulkkinen et al. 2006; Anekallu et al. 2011; Daughton et al. 2014; Nakamura et al. 2017), and therefore its processes are important in determining the amount of energy driving the space weather phenomena, which can endanger technological systems or human health (Watermann et al. 2009; Eastwood et al. 2017). Space weather phenomena are complicated and varied, and we name only a few of the most important categories here. Direct energetic particle flows from the Sun alter the communication conditions especially at high latitudes, affecting radio broadcasts, aircraft communication with air traffic control, and radar signals. Sudden changes in the magnetic field induce currents in long terrestrial conductors, such as gas pipelines, railways, and power grids, which can sometimes be disrupted (e.g., Wik et al. 2008). An increasing number of satellites are being launched, and they are vulnerable to sudden events in geospace: some spacecraft have stopped operating in response to space weather events (Green et al. 2017). Overall, some estimates indicate that in the worst case, an extreme space weather event could induce economic costs of the order of 1–2 trillion USD during the first year following its occurrence, and that it could take 4–10 years for society to recover from its effects (National Research Council 2008). Understanding and predicting the geospace is ultimately done by modelling. While the previous global MHD models can be executed near real-time and they provide the average description of the system, they cannot capture the kinetic physics that is needed to explain the most severe space weather events.

One additional factor in the accurate modelling of the geospace as a global system is that one needs to address the ionised upper atmosphere, called the ionosphere, within the simulation. The Earth’s ionosphere is a weakly ionised medium, divided into three regions, named D (60–90 km), E (90–150 km), and F (> 150 km), corresponding to three peaks in the electron density profile (Hargreaves 1995). From the magnetospheric point of view, the ionosphere represents a conducting layer closing currents flowing between the ionosphere and magnetosphere (Merkin and Lyon 2010), reflecting waves (Wright and Russell 2014), and depositing precipitating particles (e.g., Rodger et al. 2013). Further, the ionosphere is a source of cold electrons (Cran-McGreehin and Wright 2005) and heavier ions (e.g., Peterson et al. 1981). These cold ions of ionospheric origin may affect local processes in the magnetosphere, such as magnetic reconnection at the magnetopause (André et al. 2010; Toledo-Redondo et al. 2016). The global MHD models typically use an electrostatic module for the ionosphere, coupled to the magnetosphere by currents, precipitation and electric potential (e.g., Janhunen et al. 2012; Palmroth et al. 2006a). The ionosphere itself is modelled either empirically or based on first principles: The International Reference Ionosphere (IRI) model describes the ionosphere empirically from 50 to 1500 km altitude (Bilitza and Reinisch 2008), while for instance, the Sodankylä Ion and Neutral Chemistry model solves the photochemistry of the D region, taking into account several hundred chemical reactions involving 63 ions and 13 neutral species (Verronen et al. 2005, and references therein). At higher altitudes, transport processes become important, and models such as TRANSCAR (Blelly et al. 2005, and references therein) or the IRAP Plasmasphere–Ionosphere Model (Marchaudon and Blelly 2015) couple a kinetic model for the transport of suprathermal electrons with a fluid approach to resolve the chemistry and transport of ions and thermal electrons in the convecting ionosphere. Neither the empirical nor the first-principles models use the Vlasov equation, which in the ionosphere concerns much finer scales.

In general, the interaction of the solar wind with the other magnetized planets in our solar system is essentially similar to that with Earth. The main differences stem from the scales of the systems, which depend on the strength of their intrinsic magnetic field and the solar wind parameters changing with heliospheric distance. While the modelling of the magnetospheres of the outer giants is only achievable to date using fluid approaches, the small size of Mercury’s magnetosphere has been targeted for global kinetic simulations (Richer et al. 2012). For the same reason, kinetic models are also a popular tool to investigate the plasma environment of non-magnetized bodies such as Mars, Venus, comets, and asteroids. In particular, Umeda and Ito (2014) and Umeda and Fukazawa (2015) have studied the interaction of a weakly magnetized body with the solar wind by means of full-Vlasov simulations.

2.4 Scales and processes

The following processes are central in explaining plasma behaviour in the Solar–Terrestrial system and astrophysical domains: (1) magnetic reconnection enabling energy and mass transfer between different magnetic domains, (2) shocks forming due to supersonic relative flow speeds between plasma populations, (3) turbulence providing energy dissipation across scales, and (4) plasma instabilities transferring energy between the plasma and waves. All these processes contribute to particle acceleration, which is one of the most researched topics within Solar–Terrestrial and astrophysical domains, and notorious in requiring understanding of both local microphysics and global scales. Below, we introduce some examples of these processes within systems having scales that can be addressed with the Vlasov approach. Simulations of non-thermal space plasmas encompass a vast range of scales, from the smallest ones (electron scales, ion kinetic scales) to local and even global structures. Table 1 lists typical ranges of a handful of plasma parameters encountered in different branches of space sciences and astrophysics. Especially in a larger astrophysical context, simulations cannot directly encompass all relevant spatial and temporal scales. It is important to note, however, that scientific results of kinetic effects can be achieved even without directly resolving all the spatial scales that may at first glance appear to be a requirement (Pfau-Kempf et al. 2018).

Reconnection is a process whereby oppositely oriented magnetic fields break and re-join, allowing a change in magnetic topology, plasma mixing, and energy transfer between different magnetic domains. Within the magnetosphere, reconnection occurs between the terrestrial northward oriented magnetic field and the magnetosheath magnetic field that mostly mimics the direction of the IMF, but can sometimes be significantly altered due to magnetosheath processes (Turc et al. 2017). Magnetospheric energy transfer is most efficient when the magnetosheath magnetic field is southward, while for northward IMF reconnection locations move to the nightside lobes (Palmroth et al. 2006c). Actively researched topics focus on understanding the nature and location of reconnection as a function of driving conditions (e.g., Hoilijoki et al. 2014; Fuselier et al. 2017). Energy transfer at the magnetopause sets the near-Earth space into a global circulation (Dungey 1961), leading to reconnection in the magnetospheric tail. The tail centre hosts a hot and dense plasma sheet, the subject of perhaps the most intensive scientific investigations within the domain of magnetospheric physics. Especially in focus have been explosive times when the magnetospheric tail disrupts and launches into space, accelerating particles and causing abrupt changes in the global geospace (e.g., Sergeev et al. 2012). Reconnection has been suggested as one of the main drivers of tail disruptions (e.g., Angelopoulos et al. 2008), while other theories related to plasma kinetic instabilities exist as well (e.g., Lui 1996). Tail disruptions have important analogues in solar eruptions (e.g., Birn and Hesse 2009), and investigating the tail disruptions with global simulations together with in situ measurements may shed light on other astrophysical systems as well.

Collisionless shocks form due to plasma populations flowing supersonically with respect to each other, redistributing flow energy into thermal energy and accelerating particles (e.g., Balogh and Treumann 2013; Marcowith et al. 2016). Shock fronts such as those found at supernova explosions are efficient accelerators (Fermi 1949). Diffusive shock acceleration (e.g., Axford et al. 1977; Krymskii 1977; Blandford and Ostriker 1978; Bell 1978) is the primary source of solar energetic particles, and occurs from the non-relativistic (e.g., Lee 2005) to the hyper-relativistic (Aguilar et al. 2015) energy regimes. Shock–particle interactions including kinetic effects have been modelled using various analytical and semiempirical methods (see, e.g., Afanasiev et al. 2015, 2018; Hu et al. 2017; Kozarev and Schwadron 2016; Le Roux and Arthur 2017; Luhmann et al. 2010; Ng and Reames 2008; Sokolov et al. 2009; Vainio et al. 2014), but drastic approximations are usually required in order to model the whole acceleration process, and Vlasov methods have not yet been utilised. The classic extension of hydrodynamic shocks into the MHD regime has been disproven by a number of hybrid models due to, e.g., shock reformation (Caprioli and Spitkovsky 2013; Hao et al. 2017) and anisotropic pressure and energy imbalances due to non-thermal particle populations (Chao et al. 1995; Génot 2009). Only a self-consistent treatment including kinetic effects is capable of describing diffusive shock acceleration accurately. Recent works coupling shocks and high-energy particle effects include, e.g., those by Guo and Giacalone (2013), Bykov et al. (2014), Bai et al. (2015) and van Marle et al. (2018). Challenges associated with simulating shocks include modelling gyrokinetic scales for ions whilst allowing the simulation to cover the large spatial volume involved in the particle trapping and energisation process. Radially expanding shock fronts within strong magnetic domains result in a requirement for high resolution both spatially and temporally. Modern numerical approaches usually make some sacrifices, e.g., performing 1D–2V self-consistent calculations or advancing 3D–1V semi-analytical models. The Vlasov approach is especially interesting in probing the physics of particle injection, trapping, acceleration and escape.

In addition to shock acceleration, kinetic simulations of solar system plasmas are also applied to the study of solar wind turbulence. How energy cascades from large to small scales and is eventually dissipated is an outstanding question, which can be addressed using kinetic simulations (Bruno and Carbone 2013). Hybrid-Vlasov simulations (Valentini et al. 2010; Verscharen et al. 2012; Perrone et al. 2013) have in particular been utilised to study the fluctuations around the ion inertial scale, which is of particular importance as it marks the transition between the fluid and kinetic scales.

Plasma instabilities arise when a source of free energy in the plasma allows a wave mode to grow non-linearly. They are ubiquitous in our universe, and play an important role both in solar–terrestrial physics, where, for example, the Kelvin–Helmholtz instability transfers solar wind plasma into the Earth’s magnetosphere (e.g., Nakamura et al. 2017), and in astrophysical media, for instance in accretion disks, where the turbulence is driven by the magnetorotational instability. Vlasov models have been applied to the study of many instabilities, such as the Rayleigh–Taylor instability (Umeda and Wada 2016, 2017), Weibel-type instabilities (Inglebert et al. 2011; Ghizzo et al. 2017), and the Kelvin–Helmholtz instability (Umeda et al. 2010b).

3 Modelling with the Vlasov equation

Simulating plasma poses numerous challenges from the modelling perspective. Constructing a perfect representation of the plasma, where every single charge carrier is factored into the equations, would require an immense amount of computational power. The spatial scales required to fully describe the plasma environment range from the microscale Debye length up to the macroscale of the phenomena one is trying to simulate. The particle density needed is such that performing a fully kinetic simulation of even a low-density plasma self-consistently, using Maxwell’s equations for the electric and magnetic fields and the Lorentz force equation for the protons and electrons, is out of reach even for present-day large supercomputers. Currently, only plasma phenomena that occur on relatively short spatial and temporal scales, such as magnetic reconnection and high-frequency waves, are modelled using this approach. For this reason, adopting a continuous representation of the velocity space and using distribution functions as the main object to be simulated provides an attractive way of simulating plasmas.
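To give a feel for the separation between the Debye length and the ion kinetic scales, the following minimal sketch evaluates three textbook length scales in Python: the Debye length, the ion inertial length and the thermal proton gyroradius. The input values (a density of 5 cm^-3, a temperature of 10^5 K and a magnetic field of 5 nT) are illustrative assumptions loosely representative of the solar wind near Earth, not values taken from this paper, and the function names are hypothetical.

```python
import math

# Physical constants in SI units
EPS0 = 8.8541878128e-12  # vacuum permittivity [F/m]
KB = 1.380649e-23        # Boltzmann constant [J/K]
QE = 1.602176634e-19     # elementary charge [C]
MP = 1.67262192369e-27   # proton mass [kg]
C = 299792458.0          # speed of light [m/s]


def debye_length(n, temperature):
    """Debye length sqrt(eps0 k T / (n e^2)) for singly charged particles."""
    return math.sqrt(EPS0 * KB * temperature / (n * QE**2))


def ion_inertial_length(n, mass=MP):
    """Ion inertial length c / omega_pi for singly charged ions."""
    omega_pi = math.sqrt(n * QE**2 / (EPS0 * mass))
    return C / omega_pi


def thermal_gyroradius(temperature, b, mass=MP):
    """Gyroradius m v_th / (e B), with v_th = sqrt(2 k T / m)."""
    v_th = math.sqrt(2.0 * KB * temperature / mass)
    return mass * v_th / (QE * b)


# Assumed, illustrative solar-wind-like values (not from the paper)
N_SW = 5.0e6   # number density [m^-3], i.e. 5 cm^-3
T_SW = 1.0e5   # temperature [K]
B_SW = 5.0e-9  # magnetic field strength [T]

if __name__ == "__main__":
    print(f"Debye length:        {debye_length(N_SW, T_SW):.3g} m")
    print(f"Ion inertial length: {ion_inertial_length(N_SW):.3g} m")
    print(f"Proton gyroradius:   {thermal_gyroradius(T_SW, B_SW):.3g} m")
```

With these assumed inputs the Debye length comes out at roughly ten metres, while both ion scales are of the order of a hundred kilometres, a separation of about four orders of magnitude before the macroscale of the system is even considered.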

Plasmas can also be treated as a fluid, and the standard way of doing so is the magnetohydrodynamic (MHD) approximation. This modelling approach is more suitable for large domain sizes where detailed information is not necessary. However, MHD does not offer information about small spatio-temporal scales. Statistical mechanics provides information about a neutral gas based on assumptions made at the atomic scale. The kinetic plasma approach treated in the following is based on this same principle. It describes the plasma using distribution functions in phase space and uses Maxwell’s equations and the Vlasov equation to advance the fields and the distribution functions, respectively.

3.1 The Vlasov equation

In plasmas, as in neutral gases, the dynamical state of every constituent particle can be described by its position (\(\mathbf {x}\)) and momentum (\(\mathbf {p}\)) (or velocity \(\mathbf {v}\)) at a given time t. It is also common to separate the different species s in a plasma (electrons, protons, helium ions, etc). Accordingly, the dynamical state of a system of particles of species s, at a given time, can be described by a distribution function \(f_s(\mathbf {x},\mathbf {v},t)\) in 6-dimensional space, also called phase space.
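To make the size of a six-dimensional phase space concrete, the arithmetic below counts the cells of a uniform 3D–3V grid and the memory needed to store one value of the distribution function per cell. The grid dimensions and the single-precision storage are hypothetical choices for illustration only; they do not describe any particular code.

```python
def phase_space_cells(n_space, n_velocity):
    """Number of cells in a uniform 3D-3V grid with n_space cells per spatial
    dimension and n_velocity cells per velocity dimension."""
    return n_space**3 * n_velocity**3


def storage_bytes(cells, bytes_per_value=4):
    """Memory for one scalar per cell (4 bytes = single precision)."""
    return cells * bytes_per_value


# Hypothetical example: 100^3 spatial cells, each carrying 50^3 velocity cells
cells = phase_space_cells(100, 50)
print(cells)                       # 125000000000 cells
print(storage_bytes(cells) / 1e9)  # 500.0 (gigabytes, decimal)
```

Doubling the resolution in every dimension multiplies the cell count by 2^6 = 64, which is why the dimensionality of phase space dominates the cost of grid-based kinetic models.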

The distribution function \(f_{s}(\mathbf {x},\mathbf {v},t)\) represents the phase-space density of the species inside a phase-space volume element of size \(\mathrm {d}^3\mathbf {x}\, \mathrm {d}^3\mathbf {v}\) at the point (\(\mathbf {x},\mathbf {v}\)) at time t. Hence, in a system with N particles, integrating over the spatial volume \(\mathcal {V}_r\) and the velocity volume \(\mathcal {V}_v\) (i.e., the entire phase-space volume \(\mathcal {V}\)) one obtains

\[ \int _{\mathcal {V}_r}\int _{\mathcal {V}_v} f_{s}(\mathbf {x},\mathbf {v},t)\, \mathrm {d}^3\mathbf {x}\, \mathrm {d}^3\mathbf {v} = N. \tag{1} \]

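It is important to represent and describe the time evolution of the distribution functions given some external conditions. The Boltzmann equation,

\[ \frac{\partial f_{s}}{\partial t} + \mathbf {v}\cdot \frac{\partial f_{s}}{\partial \mathbf {x}} + \frac{\mathbf {F}}{m_s}\cdot \frac{\partial f_{s}}{\partial \mathbf {v}} = \left( \frac{\partial f_{s}}{\partial t}\right) _{\mathrm {coll}}, \tag{2} \]

uses the distribution function \(f_{s}\) to describe the collective behaviour of a system of particles of mass \(m_s\) subject to collisions and external forces \(\mathbf {F}\), where the term on the right-hand side represents the effect of collisions between particles. Its derivation starts from the standard equation of motion and also takes Liouville’s theorem into account (see Sect. 3.3) and it is therefore valid for any Hamiltonian system. In plasmas, the Lorentz force takes the role of the external force and collisions between particles are often neglected. Taking these two assumptions into consideration, one obtains the Vlasov equation (Vlasov 1961), often called “the collisionless Boltzmann equation”:

\[ \frac{\partial f_{s}}{\partial t} + \mathbf {v}\cdot \frac{\partial f_{s}}{\partial \mathbf {x}} + \frac{q_s}{m_s}\left( \mathbf {E} + \mathbf {v}\times \mathbf {B}\right) \cdot \frac{\partial f_{s}}{\partial \mathbf {v}} = 0. \tag{3} \]

Here \(q_s\) is the charge of a particle of species s, and \(\mathbf {E}\) and \(\mathbf {B}\) are the electric and magnetic fields.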

If a significant part of the plasma acquires high enough kinetic energy, then relativistic effects start to become important. It can be shown that the Vlasov equation (3) is Lorentz-invariant and therefore holds in such cases if \(\mathbf {v}\) is simply considered to be the proper velocity (Thomas 2016). There are only very few numerical applications related to space or astrophysics that directly solve the relativistic Vlasov equation (as opposed to the particle-in-cell approach, which solves the same physical system through statistical sampling of particles and their propagation, compare Sect. 1), but it is more common in other contexts, such as laser–plasma interaction applications (Martins et al. 2010; Inglebert et al. 2011). By using a frame that is propagating at relativistic speeds, a Lorentz-boosted frame, the smallest time or space scales to be resolved become larger and the plasma length shrinks due to Lorentz contraction, so that simulation execution times are reduced.

3.2 Closing the Vlasov equation system

In any simulation, it is necessary to couple the Vlasov equation with the field equations to form a closed set of equations. The Vlasov equation deals with the time evolution of the distribution function and uses the electromagnetic fields as input. Thus the fields need to be evolved based on the updated distribution function. There are two main ways of closing the equation set: the electrostatic approach, which uses the Poisson equation to close the system and the electromagnetic approach, which uses the Maxwell equations to that end. They are typically referred to as the Vlasov–Poisson system and the Vlasov–Maxwell system of equations. With appropriate approximations, the system can also be closed without solving the Vlasov equation for all species.

3.2.1 The Vlasov–Poisson equations

The Vlasov–Poisson equations model plasma in the electrostatic limit without a magnetic field (corresponding to the assumption that \(v/c \rightarrow 0\) for any relevant velocity v in the system). Thus Eq. (3) takes the form

for all species and the system is closed by the Poisson equation

\[ \nabla ^2 \varPhi = -\frac{\rho _q}{\epsilon _0}, \tag{5} \]

where \(\varPhi \) is the electric potential and \(\epsilon _0\) is the vacuum permittivity. Using Eq. (1), the total charge density \(\rho _q\) is obtained by taking the zeroth moment of f for all species:

\[ \rho _q = \sum _s q_s \int f_s(\mathbf {x},\mathbf {v},t)\,\mathrm {d}^3v. \tag{6} \]

3.2.2 The Vlasov–Maxwell equations

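In the electromagnetic case, the Vlasov equation (3) is retained for all species and complemented by the Maxwell equations, namely the Ampère law

\[ \nabla \times \mathbf {B} = \mu _0 \mathbf {j} + \frac{1}{c^2}\frac{\partial \mathbf {E}}{\partial t}, \tag{7} \]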

the Faraday law

\[ \nabla \times \mathbf {E} = -\frac{\partial \mathbf {B}}{\partial t}, \tag{8} \]

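and the Gauss laws

\[ \nabla \cdot \mathbf {B} = 0 \quad \text {and} \quad \nabla \cdot \mathbf {E} = \frac{\rho _q}{\epsilon _0}. \tag{9} \]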

Usually in a numerical scheme only Eqs. (7) and (8) are discretised. If the numerical method used does not also satisfy Eq. (9), numerical instabilities can occur, because the physical system requires in particular that the magnetic field remains divergence-free.

3.2.3 Hybrid-Vlasov systems

The hybrid-Vlasov systems retain only the Vlasov equation for the ions, thus neglecting the electrons to a certain extent. This has the advantage that the model is not required to resolve the short temporal and spatial scales associated with electron dynamics. Typically, the system is closed by taking moments of the Vlasov equation and making approximations pertinent to the simulation system at hand.

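Integrating (3) over the velocity space, one gets the continuity equation or the zeroth moment of the Vlasov equation:

\[ \frac{\partial n_s}{\partial t} + \nabla \cdot \left( n_s \mathbf {V}_s \right) = 0, \tag{10} \]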

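where \(n_s\) and \(\mathbf {V}_s\) are the number density and the bulk velocity of species s, respectively. Multiplying (3) by the phase-space momentum \(m_s \mathbf {v}_s\) and integrating over the velocity space, one obtains the equation of motion or the first moment of the Vlasov equation:

\[ m_s n_s \left( \frac{\partial }{\partial t} + \mathbf {V}_s \cdot \nabla \right) \mathbf {V}_s = q_s n_s \left( \mathbf {E} + \mathbf {V}_s \times \mathbf {B} \right) - \nabla \cdot \mathcal {P}_s, \tag{11} \]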

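where \(\mathcal {P}_s\) is the pressure tensor of species s, which can in turn be obtained as the second moment of \(f_s\). This leads to a chain of equations where each step depends on the next moment of \(f_s\). The most common closure of hybrid-Vlasov systems is taken at this level by summing the electron and ion equations of motion and neglecting terms based on the electron-to-ion mass ratio \(m_\mathrm{e}/m_\mathrm{i} \ll 1\), leading to the generalised Ohm’s law

\[ \mathbf {E} + \mathbf {V}\times \mathbf {B} = \frac{\mathbf {j}}{\sigma } + \frac{\mathbf {j}\times \mathbf {B}}{\rho _q} - \frac{\nabla \cdot \mathcal {P}_\mathrm{e}}{\rho _q} + \frac{m_\mathrm{e}}{n_e e^2}\frac{\partial \mathbf {j}}{\partial t}, \tag{12} \]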

where \(\sigma \) is the conductivity, e is the elementary charge, \(n_e\) is the electron number density, and \(\mathbf {j}\) is the total current density. In the limit of slow temporal variations, the rightmost electron inertia term is typically dropped from the equation. Further, assuming high conductivity, one is left with the Hall term \(\mathbf {j}\times \mathbf {B}/\rho _q\) and the electron pressure gradient term \(\nabla \cdot \mathcal {P}_\mathrm{e}/\rho _q\) on the right-hand side. The electron pressure term can be handled in a number of ways, such as using isobaric or isothermal assumptions. If electrons are assumed to be completely cold, the equation can be written as the Hall MHD Ohm’s law

\[ \mathbf {E} + \mathbf {V}\times \mathbf {B} = \frac{\mathbf {j}\times \mathbf {B}}{\rho _q}. \tag{13} \]

Thus the hybrid-Vlasov system of equations retains the Vlasov equation (3) for ions and the Maxwell–Ampère and Maxwell–Faraday equations (7) and (8) but replaces the Gauss equations by a generalised Ohm equation (12) with appropriate approximations. If rapid field fluctuations are excluded from the solution, the displacement current from Ampère’s law can be omitted, resulting in the Darwin approximation and yielding

\[ \nabla \times \mathbf {B} = \mu _0 \mathbf {j}. \tag{14} \]

This makes the equation system more easily tractable.

Note that conversely, neglecting ion dynamics and retaining the electron Vlasov equation can be advantageous in certain contexts and is formally equivalent to the above with switched electron and ion variables.
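The velocity moments used in the closure above can be illustrated numerically. The following is a minimal sketch, assuming a one-dimensional velocity grid, normalised units and a drifting Maxwellian test distribution; the function name `moments_1d` and all parameter values are hypothetical and chosen for illustration only.

```python
import math

def moments_1d(f, v, dv, mass):
    """Return number density, bulk velocity and scalar pressure of f(v)."""
    density = sum(f) * dv                                        # zeroth moment
    bulk = sum(fi * vi for fi, vi in zip(f, v)) * dv / density   # first moment
    # Second central moment, i.e. the 1D analogue of the pressure tensor.
    pressure = mass * sum(fi * (vi - bulk) ** 2 for fi, vi in zip(f, v)) * dv
    return density, bulk, pressure

# Drifting Maxwellian in normalised units (all values are assumptions).
n0, v0, v_th, mass = 2.0, 0.5, 1.0, 1.0
dv = 0.05
v = [-10.0 + i * dv for i in range(401)]
f = [n0 / (math.sqrt(2.0 * math.pi) * v_th)
     * math.exp(-((vi - v0) ** 2) / (2.0 * v_th ** 2)) for vi in v]

density, bulk, pressure = moments_1d(f, v, dv, mass)
print(density, bulk, pressure)
```

For this test distribution the three moments should recover the input density \(n_0\), the drift velocity and the pressure \(m n_0 v_\mathrm{th}^2\) to high accuracy, since the velocity grid resolves the thermal width well.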

3.3 Properties of the Vlasov equation

When solving the Vlasov equation, a number of useful properties can be exploited in numerical solvers. In its fundamental structure, the Vlasov equation is a 6D advection equation, which states that the material derivative of the phase-space density vanishes. In the absence of any source terms, finding a solution at any given point in time requires determining the motion of the phase-space density. One particularly handy property follows from Liouville’s theorem, from which the Vlasov equation is derived: phase-space density is constant along the trajectories of the system. This means that a solution of the system at one point in time can be followed forward or backward in time arbitrarily, as long as the trajectories of phase-space elements, i.e., the characteristics of the Vlasov equation, are known.

One consequence of Liouville’s theorem is that initial density perturbations tend to form smaller and smaller structures, as trajectories of the phase-space regions with different densities converge over time. In physical reality, this so-called filamentation has a natural cutoff at scales where diffusive scattering effects become important. This cutoff, however, is not part of the pure mathematical description of the Vlasov equation. Therefore, numerical implementations need to address this issue either by explicit filtering steps or through innate numerical diffusivity.

A fundamental consideration in any physical modelling is the conservation of certain quantities, like mass, momentum, energy, and electric charge. This of course applies to Vlasov-based plasma modelling as well. Variational approaches have been used to derive the Vlasov–Maxwell and Vlasov–Poisson systems of equations as well as reduced forms (e.g., Marsden and Weinstein 1982; Ye and Morrison 1992; Brizard 2000; Brizard and Tronko 2011). Care has to be taken when developing numerical solutions of the Vlasov equation that quantities relevant to the problem to be solved are conserved adequately by the method (e.g., Filbet et al. 2001; Crouseilles et al. 2010; Cheng et al. 2013, 2014; Becerra-Sagredo et al. 2016; Einkemmer and Lubich 2018).

4 Numerical modelling and HPC aspects

Finding solutions to the Vlasov equation in order to model physical systems typically involves computer simulations. Therefore, the phase space density \(f(\mathbf {x},\mathbf {v},t)\) needs to be numerically represented, which strongly influences the choice of solvers and the resulting simulation code. This section is structured around different numerical representations of f.

A problem common to all numerical approaches solving the Vlasov equation is the curse of dimensionality—to fully reproduce all physical behaviour, the simulation domain must be 6-dimensional, with all 6 dimensions being of sufficient resolution or fidelity for the desired physical system. This considerably impacts the size of the phase space and hence the computational burden of the algorithm.

Fig. 2 Different ways of numerically representing the phase-space density \(f(\mathbf {x},\mathbf {v},t)\): (a) In a Eulerian grid, every grid cell stores the local value of phase-space density, which is transported across cell boundaries. (b) Spectral representations (shown here: Fourier-space in \(\mathbf {v}\)) allow for some update steps of phase-space density to be performed locally. (c) In a tensor train representation, phase-space density is represented as a sum of tensor products of single coordinates’ distribution functions, which get transported individually.

4.1 Eulerian approach

In a straightforward manner, the phase-space distribution function \(f(\mathbf {x},\mathbf {v},t)\) can be discretised on a Eulerian grid, on which different kinds of solvers can operate (see Fig. 2a). The structure of the Vlasov equation allows both finite volume (FV) and semi-Lagrangian solvers to be employed, and both have been operated with some success (Arber and Vann 2002). Discretisation of velocity space to a finite grid size \(\varDelta v\) also automatically imposes a lower limit for phase-space filamentation (compare Sect. 3.3), at which the grid will naturally smooth out the structure. In some cases this is a purely numerically diffusive process, whereas others use explicit smoothing, filtering or subgrid modelling (e.g., Klimas 1987; Klimas and Farrell 1994). The 6-dimensional structure of the phase space, along with the physical scales and resolutions imposed by the underlying physical system (compare Sect. 2), makes a Eulerian representation in a Cartesian grid computationally impractical in the vast majority of cases concerning a large simulation volume.

Let us consider as an example a simulation of the Earth’s entire magnetosphere using a full 3D–3V, Eulerian hybrid-Vlasov model. Let it extend up to the lunar orbit (\(x \sim \pm \,60 \, R_E\) in every direction) resolving approximately the solar wind ion inertial length (\(\varDelta x \sim 100\) km), and let the velocity space encompass typical solar wind velocities with some safety margin (\(v \sim \pm \,2000\) km/s) while resolving the solar wind thermal speed (\(\varDelta v \sim 30\) km/s). In this case the resulting phase space would contain a total of \(10^{18}\) cells. If each of them were represented by a single-precision floating point value, a minimum of 4 EiB of memory would be required to represent it!
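The arithmetic behind this estimate can be retraced with a few lines of code. This is a back-of-the-envelope sketch that assumes a uniform Cartesian grid in all six dimensions and an Earth radius of 6371 km; the function name `phase_space_cells` is hypothetical.

```python
# Rough size of a uniform 3D-3V Eulerian grid for the magnetosphere example.
R_E_KM = 6371.0   # assumed Earth radius in km

def phase_space_cells(half_extent_re=60.0, dx_km=100.0,
                      v_max_kms=2000.0, dv_kms=30.0):
    """Return (spatial cells, velocity cells, total phase-space cells)."""
    n_x = 2.0 * half_extent_re * R_E_KM / dx_km   # cells per spatial axis
    n_v = 2.0 * v_max_kms / dv_kms                # cells per velocity axis
    spatial = n_x ** 3
    velocity = n_v ** 3
    return spatial, velocity, spatial * velocity

spatial, velocity, total = phase_space_cells()
bytes_needed = 4.0 * total          # one 4-byte single-precision value per cell
eib = bytes_needed / 2.0 ** 60      # 1 EiB = 2**60 bytes
print(f"{spatial:.2e} spatial x {velocity:.2e} velocity = {total:.2e} cells")
print(f"memory: {eib:.1f} EiB")
```

With these inputs, roughly \(4.5 \times 10^{11}\) spatial cells each carry about \(2.4 \times 10^{6}\) velocity cells, giving on the order of \(10^{18}\) phase-space cells and somewhat under 4 EiB of memory.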

Fortunately, there are many possibilities for simplification of the computational grid size:

- Reduction of phase-space dimension, if the physical system under consideration allows it, is an easy and efficient way to reduce computational load. Simulations of longitudinal wave instabilities (Jenab and Kourakis 2014; Shoucri 2008) and fundamental studies of filamentation have been performed in a 1D–1V setup, whereas laser wakefield and wave interaction simulations tend to be modelled in 2D–2V or 2D–3V setups (Besse et al. 2007; Sircombe et al. 2004; Thomas 2016). Another possibility is to globally reduce the number of grid points by using a grid that may be uneven with respect to Cartesian coordinates, while remaining static during runtime (Kormann and Sonnendrücker 2016; Guo and Cheng 2016). This is sometimes referred to as a sparse grid representation.

- Gyrokinetic simulations reduce the velocity space by dropping the azimuthal (gyrophase) velocity dimension around the magnetic field direction, thus assuming complete gyrotropy of the distribution functions (e.g., Görler et al. 2011).

- Adaptively refined grids can be employed to reduce resolution and thus computational expense in areas of phase space that are considered less important for the physical problem at hand (Wettervik et al. 2017; Besse et al. 2008).

- In many physical scenarios, large parts of phase space contain an extremely low, if not zero, density, and contribute nothing to the overall dynamic development. Suitable pruning of the phase-space grid can thus be performed to obtain a data structure that dynamically removes grid elements during runtime and keeps them only in regions deemed relevant for the physical system dynamics. The computational speed-up gained through this reduction of phase-space volume can in some cases come at the expense of physical accuracy, and the tradeoff needs to be carefully considered. We have implemented this option in Vlasiator, and call it the dynamic sparse phase space representation, discussed more in Sect. 5.2. This method is not to be confused with the static sparse grid methods (Kormann and Sonnendrücker 2016; Guo and Cheng 2016) that are fundamentally dimension reduction techniques, similar to the low-rank approximations.
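The pruning idea in the last item can be sketched with a dictionary-based velocity grid. This is an illustrative toy and not Vlasiator's actual data structure; the rule of keeping all nearest neighbours of above-threshold cells, the threshold value and the names `prune` and `maxwellian_grid` are assumptions made for this example.

```python
import math
from itertools import product

def prune(cells, threshold):
    """Keep cells with f >= threshold together with their nearest neighbours.

    `cells` maps integer velocity-grid indices (i, j, k) to phase-space density.
    Neighbours that did not exist before are created with zero density, so that
    the distribution has room to move into them during the next update.
    """
    offsets = list(product((-1, 0, 1), repeat=3))
    keep = {}
    for (i, j, k), density in cells.items():
        if density < threshold:
            continue
        for di, dj, dk in offsets:
            idx = (i + di, j + dj, k + dk)
            keep[idx] = cells.get(idx, 0.0)
    return keep

def maxwellian_grid(half_width, dv, v_th):
    """Fully populated Cartesian velocity grid holding an isotropic Maxwellian."""
    cells = {}
    for i, j, k in product(range(-half_width, half_width + 1), repeat=3):
        v2 = (i * i + j * j + k * k) * dv * dv
        cells[(i, j, k)] = math.exp(-v2 / (2.0 * v_th ** 2))
    return cells

full = maxwellian_grid(half_width=20, dv=30.0, v_th=60.0)
sparse = prune(full, threshold=1e-6)
kept_fraction = sum(sparse.values()) / sum(full.values())
print(len(full), len(sparse), kept_fraction)
```

In this toy setup only a small fraction of the velocity cells survives the pruning while essentially all of the density is retained, which illustrates the kind of saving the text describes. The accuracy cost depends on the chosen threshold.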

In plasmas, the magnetic field \(\mathbf {B}\) makes the particles gyrate while the electric field \(\mathbf {E}\) causes them to accelerate and drift. It can be advantageous to take the characteristics of acceleration due to the Lorentz force into consideration when choosing an appropriate grid for the numerical phase-space representation. Common ideas include:

- A polar velocity coordinate system aligned with the magnetic field and centred around the drift velocity, \(\mathbf {v} = ( v_\parallel ,\, v_r,\, v_\phi ),\) in which the gyrophase coordinate \(v_\phi \) has a much lower resolution than \(v_\parallel \) and \(v_r\). This can be employed in cases where the velocity distribution functions are known not to deviate strongly from gyrotropy, i.e., to exhibit cylindrical symmetry with respect to the magnetic field direction. However, the disadvantage of a polar velocity space over a Cartesian one is the more complex coordinate transformation required for transport into the spatial neighbours.

- A Cartesian representation of velocity space, in which the coordinate axes co-rotate with the local gyration at every given spatial cell. Such a setup has the advantage that no transformation of velocity space due to the magnetic field has to be performed at all, and no numerical diffusion due to gyration motion occurs. It does however come at the cost of more complicated spatial updates, since neighbouring spatial domains no longer have identical velocity space axes. A suitable interpolation or reconstruction has to take place in the spatial transport, thus potentially negating the advantage in numerical diffusivity.

For the actual process of solving the Vlasov equation, a fundamental decision has to be made in the structure of the code: whether the solution steps are to be performed in a proper 6D manner (e.g., Vogman 2016), or whether a Strang-splitting approach is taken (Strang 1968; Cheng and Knorr 1976; Mangeney et al. 2002), in which the position and velocity space solution steps are performed independently of each other. Because each split solver step involves fewer dimensions and thus a smaller computational context, the latter approach tends to have significant performance benefits, whilst still achieving convergence (Einkemmer and Ostermann 2014). Alternative time-splitting methods based on Hamiltonians have also been proposed (e.g., Crouseilles et al. 2015; Casas et al. 2017).
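The effect of the symmetric (Strang) ordering can be seen by applying it to a single characteristic. The sketch below is a toy model that assumes a linear restoring acceleration \(a(x) = -x\) in normalised units, for which the exact characteristic is known; it compares the half-full-half ordering with an unsymmetrised splitting. The function names are hypothetical.

```python
import math

def strang_step(x, v, dt, accel):
    """Half translation, full acceleration, half translation."""
    x = x + 0.5 * dt * v
    v = v + dt * accel(x)
    x = x + 0.5 * dt * v
    return x, v

def lie_step(x, v, dt, accel):
    """Unsymmetrised splitting: full translation, then full acceleration."""
    x = x + dt * v
    v = v + dt * accel(x)
    return x, v

def final_error(step, n_steps, t_end=1.0):
    """Integrate dx/dt = v, dv/dt = -x from (1, 0); compare with (cos t, -sin t)."""
    x, v = 1.0, 0.0
    dt = t_end / n_steps
    for _ in range(n_steps):
        x, v = step(x, v, dt, lambda pos: -pos)
    return math.hypot(x - math.cos(t_end), v + math.sin(t_end))

strang_ratio = final_error(strang_step, 100) / final_error(strang_step, 200)
lie_ratio = final_error(lie_step, 100) / final_error(lie_step, 200)
print(f"error ratio when halving dt: Strang {strang_ratio:.2f}, Lie {lie_ratio:.2f}")
```

Halving the time step reduces the error of the symmetric splitting by a factor close to four (second order), whereas the unsymmetrised variant only gains a factor close to two (first order).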

If a Cartesian velocity grid is employed in the simulation, multiple families of solvers are available for it (Filbet and Sonnendrücker 2003b). In all cases, the effects of diffusivity of the solver need to be considered. In particular, uncontrolled velocity space diffusion manifests itself as numerical heating, as the distribution function tends to broaden over time. Higher-order solvers and reconstruction methods, as well as explicit formulations in which moments of the distribution function are conserved, are therefore advisable (Balsara 2017).

The choice of a Eulerian representation of phase space brings certain implementation details for high performance computing (HPC) aspects with it. The relative ease of spatial decomposition into independent computational domains, which communicate through ghost cells, can be employed readily for Eulerian Vlasov simulations, providing a straightforward path to parallel implementations. On the other hand, the inherent limitations of Eulerian schemes (such as conditions for time steps, compare Sect. 5.3) limit their overall numerical efficiency, and the high-dimensional nature of phase space can lead to challenges in appropriately represented and resolved numerical grids. As so often in HPC, design decisions have to be based on the specific properties of the physical system under investigation.

4.1.1 Finite volume solvers

As the Vlasov equation (3) is fundamentally a hyperbolic conservation law in 6D, it can be solved using the well-established methods of Finite Volumes (FV, LeVeque 2002). In this approach, the phase-space fluxes are calculated through each interface of a discrete simulation volume (or phase-space cell) by reconstructing the phase-space density distribution through an appropriate interpolation scheme. The characteristic velocities at both sides of this interface are determined and the Riemann problem (Toro 2014) at each of these interfaces is solved to update the material content in each cell.

If Strang splitting is used to perform separate spatial and velocity-space updates, it is noteworthy that the state vector only contains a single scalar quantity (the phase-space density) and each cell interface update only needs to take a single characteristic velocity into consideration: for the update in a spatial direction, the characteristic is given by the corresponding cell’s velocity space coordinates, whereas in the velocity space update step, the acceleration due to magnetic, electric and external field forces is homogeneous throughout each spatial cell. The Riemann problem for the Vlasov update therefore does not require the solution or approximation of an eigenvalue problem, which significantly simplifies its solution in comparison to hydrodynamic or MHD FV solvers. This property also enables the efficient use of semi-Lagrangian solvers (discussed more in Sect. 4.4).
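Because only one characteristic speed is involved, the interface flux reduces to the upwind state. The following is a deliberately low-order sketch, assuming a periodic 1D grid and a first-order reconstruction (not the higher-order schemes used in production codes); the function names are hypothetical. It also makes the numerical diffusion of such a scheme visible as a broadening of the advected profile.

```python
import math

def fv_upwind_step(f, speed, dx, dt):
    """One first-order finite-volume step for df/dt + speed * df/dx = 0 (periodic)."""
    n = len(f)
    if abs(speed) * dt > dx:
        raise ValueError("time step violates the CFL condition")
    # flux[i] is the flux through the interface between cells i-1 and i.
    # With a single characteristic speed, the Riemann solution is the upwind state.
    flux = [speed * (f[i - 1] if speed >= 0.0 else f[i]) for i in range(n)]
    return [f[i] - dt / dx * (flux[(i + 1) % n] - flux[i]) for i in range(n)]

def mean_and_variance(f, dx):
    """Centroid and variance of the profile, treating f as a weight per cell."""
    total = sum(f)
    mean = sum(i * dx * fi for i, fi in enumerate(f)) / total
    var = sum((i * dx - mean) ** 2 * fi for i, fi in enumerate(f)) / total
    return mean, var

dx, dt, speed = 1.0, 0.5, 1.0
f0 = [math.exp(-((i - 30.0) ** 2) / (2.0 * 5.0 ** 2)) for i in range(100)]
f = list(f0)
for _ in range(40):
    f = fv_upwind_step(f, speed, dx, dt)

mean0, var0 = mean_and_variance(f0, dx)
mean1, var1 = mean_and_variance(f, dx)
print(sum(f0), sum(f))
print(mean0, var0, mean1, var1)
```

The total content is conserved to rounding error and the centroid moves by the expected 20 cells, but the variance of the profile grows. In a velocity-space update, the same broadening would appear as numerical heating.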

As will be shown in Sect. 5, versions of the Vlasiator code until 2014 employed an FV formulation of the phase space update (von Alfthan et al. 2014), and numerous other implementations exist (Banks and Hittinger 2010; Wettervik et al. 2017). A comprehensive introduction to the implementation and a thorough analysis of the behaviour of a fully 6D implementation of an FV Vlasov solver is given by Vogman (2016).

4.1.2 Finite difference solvers

While the Vlasov equation (3) could in principle be solved by directly employing finite difference methods, this approach does not seem to be favoured, and its applications in the literature appear to be limited to fundamental theory studies only (e.g., Schaeffer 1998; Holloway 1995). The biggest issue with finite difference formulations is the lack of explicit conservation of moments of the distribution function and related quantities. While high-order methods can still maintain suitable approximate conservation properties, their computational demands and/or diffusivity make them impractical.

4.2 Spectral solvers

Instead of a direct discretisation of the phase-space density \(f(\mathbf {x},\mathbf {v}, t)\) in its \(\mathbf {x}\) and \(\mathbf {v}\) coordinates, a change of basis functions can be employed, each coming with benefits and limitations. The transformation of f into a different basis can be performed in the velocity coordinates only (cf. Fig. 2b), or in both spatial and velocity coordinates, depending on the physical application.

If a splitting scheme is employed, where velocity and real space advection updates are treated separately, the advection in a Fourier-transformed coordinate can be completely performed in Fourier space, as the transform of any coordinate \(x \rightarrow k_x\) results in the differential advection operator \(v_x \,\nabla _x\) turning into a simple multiplication:

\[ v_x \,\nabla _x f(x,\mathbf {v},t) \;\rightarrow \; \mathrm {i}\,k_x v_x\,\tilde{f}(k_x,\mathbf {v},t). \]

However, for the acceleration update, this transformation brings in the additional complication that the acceleration \(\mathbf {a}\) would have to be independent of \(\mathbf {v}\), which is true for the electrostatic Vlasov–Poisson system, but not in full Vlasov–Maxwell scenarios, due to the \(\mathbf {v}\)-dependence of the Lorentz force. In order to accommodate velocity-dependent acceleration, solving a system in such a way typically requires multiple forward and backward Fourier transforms within one time step (Klimas and Farrell 1994).
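The spatial advection step in Fourier space can be sketched in a few lines. This is a minimal illustration that assumes a periodic 1D domain, a constant advection speed and a naive \(O(N^2)\) transform written out by hand for self-containment (a production code would use an FFT library); all names and parameter values are hypothetical.

```python
import cmath
import math

def dft(values, sign):
    """Naive O(N^2) discrete Fourier transform (sign=-1 forward, +1 inverse, unnormalised)."""
    n = len(values)
    return [sum(values[idx] * cmath.exp(sign * 2j * math.pi * m * idx / n)
                for idx in range(n))
            for m in range(n)]

def advect_fourier(f, speed, dt, length):
    """Advance df/dt + speed * df/dx = 0 by dt: each mode is multiplied by exp(-i k v dt)."""
    n = len(f)
    f_hat = dft(f, -1)
    shifted = []
    for m in range(n):
        mode = m if m < n // 2 else m - n          # signed mode number
        k = 2.0 * math.pi * mode / length
        shifted.append(f_hat[m] * cmath.exp(-1j * k * speed * dt))
    return [z.real / n for z in dft(shifted, +1)]

def profile(x, length):
    """Band-limited periodic test profile (an arbitrary choice)."""
    return (1.0 + 0.5 * math.cos(2.0 * math.pi * x / length)
            + 0.2 * math.sin(4.0 * math.pi * x / length))

n, length, speed, dt = 32, 1.0, 0.3, 0.7
x = [i * length / n for i in range(n)]
f_new = advect_fourier([profile(xi, length) for xi in x], speed, dt, length)
max_error = max(abs(fn - profile(xi - speed * dt, length)) for fn, xi in zip(f_new, x))
print(max_error)
```

For a band-limited profile the result agrees with the exactly shifted function to rounding error, and no time step restriction is involved in this step.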

The limit of filamentation in a thus-represented velocity space becomes the question of which maximum velocity space frequency \(\mathbf {k}_{v,\text {max}}\) is available, and the filamentation problem itself becomes a boundary problem at the maximum extents of velocity \(\mathbf {k}\)-space (Eliasson 2011). However, stability issues of this scheme remain under discussion (Figua et al. 2000; Klimas et al. 2017).

Finally, a full Fourier-space representation of \(\tilde{f}\left( \mathbf {k}_r,\mathbf {k}_v, t\right) \), in which also the real space coordinate \(\mathbf {x} \rightarrow \mathbf {k}_r\) is transformed is a possibility. However, it further complicates the treatment of configuration and velocity space boundaries (Eliasson 2001). When used with periodic spatial boundary conditions, such a setup can be quite efficient for the study of kinetic plasma wave interactions.

Apart from the Fourier basis, other orthogonal function systems can be used as the basis for description of phase-space densities. A popular choice is presented by Hermite functions (Delzanno 2015; Camporeale et al. 2016), whose \(\mathbf {L}^2\) convergence behaviour closely matches that of physical velocity distribution functions, and whose property of being eigenfunctions to the Fourier transform can be used as a numerical advantage. Since the zeroth Hermite function \(H_0(\mathbf {v})\) is simply a Maxwellian particle distribution, a hybrid-Vlasov code with Hermite basis functions should replicate MHD behaviour in this limit. Adaptive inclusion of higher-order Hermite functions then allows an increasing amount of kinetic physics to be numerically represented.

A common problem of any kind of spectral method, be it Fourier-based, or using any other choice of nonlocalised basis functions, is the formulation of boundary conditions. While microphysical simulations of wave or scattering behaviour can usually get away with periodic boundary conditions, macroscopic systems will require boundaries at which interaction of plasma with solid or gaseous matter is to be modelled (such as planetary or stellar surfaces), as well as inflow and outflow boundaries. Due to the unavailability of suitable spectral formulations for these boundaries, spectral-domain solvers have not gained a foothold in modelling efforts of macroscopic astrophysical systems.

In any nonlocal choice of basis function for the phase-space representation, be it Fourier-, Hermite- or wavelet-based (Besse et al. 2008), extra thought has to be put into scalability of parallel solvers for it. If a change of basis function (such as a switch from a real-space to a Fourier space representation) is required as part of the simulation update step, this will typically not scale beyond hundreds of cores in supercomputing environments.

4.3 Tensor train

An entirely separate class of numerical representations for the phase-space density is provided by the tensor train formalism (Kormann 2015) illustrated in Fig. 2c. The idea behind this approach is inspired by Strang-splitting solvers, in which spatial and velocity dimensions are treated in individual and subsequent solver steps. The overall distribution function \(f(x_1,x_2,\ldots ,x_n)\) is represented as a tensor product of component basis functions,

\[ f(x_1,x_2,\ldots ,x_n) \approx f_1(x_1) \otimes f_2(x_2) \otimes \cdots \otimes f_n(x_n), \]

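in which each \(f_k(x_k)\) is only dependent on a single coordinate \(x_k\), and thus only affected by a single dimension’s update step. The generalised formulation is called the Tensor Train of ranks \(r_1\ldots r_n\) (compare Fig. 2c),

\[ f(x_1,x_2,\ldots ,x_n) \approx \sum _{\alpha _1=1}^{r_1} \cdots \sum _{\alpha _n=1}^{r_n} Q_1(x_1,\alpha _1)\, Q_2(\alpha _1,x_2,\alpha _2) \cdots Q_n(\alpha _{n-1},x_n,\alpha _n), \]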

in which the distribution function is entirely formulated in terms of sums of products of \(Q_k\), which themselves only depend on a single coordinate \(x_k\). Transport can be performed by individually affecting each \(Q_k\), followed by a rounding step to keep the tensor train compact.
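How such a representation is stored and evaluated can be sketched for a two-dimensional (1D–1V) toy case. The example below assumes a hypothetical rank-2 distribution made of two beams that are each separable in \(x\) and \(v\), with boundary ranks equal to one; the transport and rounding steps are not shown, and all names are illustrative.

```python
import math

def tt_eval(cores, index):
    """Evaluate a tensor train at one multi-index.

    cores[k][a][i][b] holds Q_k(alpha_{k-1}=a, x_k=i, alpha_k=b); the first and
    the last core have boundary rank 1.
    """
    vec = [1.0]
    for core, i in zip(cores, index):
        r_out = len(core[0][i])
        vec = [sum(vec[a] * core[a][i][b] for a in range(len(vec)))
               for b in range(r_out)]
    return vec[0]

def gauss(u, centre, width):
    return math.exp(-((u - centre) ** 2) / (2.0 * width ** 2))

# Hypothetical rank-2 example: two beams, each separable in x and v.
xs = [0.1 * i for i in range(50)]
vs = [-3.0 + 0.1 * j for j in range(61)]
g = [lambda x: 1.0 + 0.1 * math.sin(x), lambda x: 0.5 + 0.1 * math.cos(x)]
h = [lambda v: gauss(v, -1.0, 0.5), lambda v: gauss(v, 1.5, 0.3)]

core_x = [[[g[0](x), g[1](x)] for x in xs]]              # shape (1, n_x, 2)
core_v = [[[h[a](v)] for v in vs] for a in range(2)]     # shape (2, n_v, 1)
cores = [core_x, core_v]

i, j = 12, 40
tt_value = tt_eval(cores, (i, j))
direct = g[0](xs[i]) * h[0](vs[j]) + g[1](xs[i]) * h[1](vs[j])
stored_tt = 2 * len(xs) + 2 * len(vs)      # numbers held in the two cores
stored_full = len(xs) * len(vs)            # numbers held in a full grid
print(tt_value, direct, stored_tt, stored_full)
```

The cores hold a few hundred numbers compared with a few thousand for the full grid. The saving grows rapidly with the number of dimensions as long as the ranks remain small.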

While this approach has so far only been employed in low-dimensional approaches and for feasibility studies, and attempts at large numerical simulations using tensor train models have not yet been performed, efforts to integrate them into existing codebases are underway.

Fig. 3 Illustration of Lagrangian and semi-Lagrangian approaches: (a) In a full Lagrangian solver, phase-space samples are moved along their characteristic trajectories and never mapped to a grid; the phase-space values in between are obtained by interpolation. (b) In a forward semi-Lagrangian method, the same process is used, but the phase-space values are redistributed to a grid at regular intervals. (c) A backward semi-Lagrangian method follows the characteristic of every target phase-space point backwards in time and obtains the source value by interpolation.

4.4 Semi-Lagrangian and fully Lagrangian solvers

As a consequence of Liouville’s theorem (cf. Sect. 3.3), numerical solutions of the Vlasov equation can be elegantly formulated in Lagrangian and semi-Lagrangian ways, by following the characteristics in phase space. Since the spatial velocity of any point in phase space is simply given by its velocity space coordinates, and its acceleration due to the Lorentz force is provided by the local electromagnetic field quantities, a unique characteristic for each point in phase space is easily obtained in a simulation (cf. Sect. 4.1.1).

As the simulation progresses, the distribution of the phase-space sample points will shift, each maintaining its initial phase-space density value, and the volumes in between them obtain phase-space density values through interpolation. If necessary, new samples can be created, or existing ones merged, where filamentation requires it. In essence, fully Lagrangian simulation codes (Kazeminezhad et al. 2003; Nunn 2005; Jenab and Kourakis 2014) track the motion of samples of density through phase space, stepping forward in time, resulting in an updated phase-space distribution. This is illustrated in Fig. 3a. Sometimes, these methods are referred to as Lagrangian particle methods, as each phase-space sample can be modelled as a macroparticle.

Much more common than the fully Lagrangian formulation of Vlasov solvers is the family of semi-Lagrangian solvers (Sonnendrücker et al. 1999). In these, the phase-space samples to be propagated are obtained at every time step from a Eulerian description of phase space, their transport along the characteristics is calculated within the time step, and the resulting updated phase-space density is sampled back into a Eulerian grid (which can be either structured or unstructured, see Besse and Sonnendrücker 2003). This process can be performed either forwards in time (Crouseilles et al. 2009, see Fig. 3b), in which the source grid points are scattered into the target locations, or backwards in time (Sonnendrücker et al. 1999; Pfau-Kempf 2016), where each target grid point performs a gather operation, interpolating within the phase-space state of the previous time step (Fig. 3c). Backwards semi-Lagrangian methods are sometimes also referred to as Flux Balance Methods (see Filbet et al. 2001). Either way, an interpolation step is involved, which may again lead to significant numerical diffusion unless methods are used to minimise it. Some of the more common interpolation procedures used are cubic splines and Hermite reconstruction, because they produce smooth results with reasonable accuracy and are less dissipative than other methods using continuous interpolations (Filbet and Sonnendrücker 2003a). Lagrange interpolation methods produce more accurate results but require higher order polynomials and large stencils to limit diffusion. The high-order discontinuous Galerkin method for spatial discretisation, along with a semi-Lagrangian time stepping method, has also been used in Vlasov–Poisson systems, providing an improvement in accuracy compared to previously used techniques (Rossmanith and Seal 2011). The flexibility of combining different approaches is also seen in a recent particle-based semi-Lagrangian method for solving the Vlasov–Poisson equation (Cottet 2018).
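A backward semi-Lagrangian update can be sketched for a single advection direction. The example assumes a periodic, uniform 1D grid with constant advection speed and uses cubic Lagrange interpolation as a simple stand-in for the spline or Hermite reconstructions mentioned above; the function name `bsl_step` is hypothetical.

```python
import math

def bsl_step(f, speed, dx, dt):
    """Backward semi-Lagrangian step for df/dt + speed * df/dx = 0 (periodic grid)."""
    n = len(f)
    shift = speed * dt / dx                  # displacement in units of cells
    new = []
    for i in range(n):
        foot = i - shift                     # foot of the characteristic through node i
        j = math.floor(foot)
        t = foot - j                         # fractional position in [0, 1)
        # Cubic Lagrange weights for the nodes j-1, j, j+1, j+2.
        weights = (
            -t * (t - 1.0) * (t - 2.0) / 6.0,
            (t + 1.0) * (t - 1.0) * (t - 2.0) / 2.0,
            -(t + 1.0) * t * (t - 2.0) / 2.0,
            (t + 1.0) * t * (t - 1.0) / 6.0,
        )
        new.append(sum(w * f[(j - 1 + m) % n] for m, w in enumerate(weights)))
    return new

n, dx, speed = 64, 1.0, 1.0
f0 = [math.exp(-((i - 20.0) ** 2) / (2.0 * 4.0 ** 2)) for i in range(n)]
f_int = bsl_step(f0, speed, dx, dt=2.0)     # shift by exactly two cells
f_frac = bsl_step(f0, speed, dx, dt=2.6)    # fractional shift, Courant number 2.6
print(sum(f0), sum(f_frac))
```

Each target node gathers its value from the foot of its characteristic, so the step remains usable at Courant numbers above one. An integer shift is reproduced exactly, whereas a fractional shift involves interpolation and hence some numerical diffusion.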

Cheng and Knorr (1976) were the first authors to employ semi-Lagrangian updates of a Vlasov–Poisson problem in a Strang-splitting setup, which they refer to as the time-splitting scheme, in which they treated the advection in position space and in velocity space independently. Mangeney et al. (2002) later formulated a Strang-splitting scheme for the Vlasov–Maxwell equation. As such a splitting scheme performs acceleration and translation steps separately, the phase-space trajectory of any simulation point approximates its physical behaviour in a staircase-like dimension-by-dimension pattern.

4.5 Field solvers

The Vlasov equation does not stand alone in describing the physical system in consideration, but requires a further prescription of the fields introducing the force terms. In the vast majority of cases in computational astrophysics, these will be electromagnetic forces self-consistently produced through the motion of charged particles in plasma, although there have been examples of Vlasov-gravity simulations (Guo and Li 2008), in which the Poisson equation was solved based on the simulation’s mass distribution. Also in a few cases, the fields affecting phase-space distributions are considered entirely an external simulation input, with no feedback from the phase-space density onto the fields (Palmroth et al. 2013), which can be called “test-Vlasov” simulations, in analogy to test-particle runs. These are particularly useful as test cases before the fully operational code can be launched.

A key requirement for any field solver is to preserve the solenoidality of the magnetic field \(\mathbf {B}\) expressed by Eq. (9). There are two main avenues used to achieve this goal (e.g., Tóth 2000; Balsara and Kim 2004; Zhang and Feng 2016, and references therein). Either the field reconstruction is divergence-free by design, such as the one used in Vlasiator (see Sect. 5.3), or a procedure is needed to periodically clean up the divergence of \(\mathbf {B}\) arising from numerical errors.

In the following sections, different solvers for electromagnetic fields (and their simplifications) will be discussed in relation to astrophysical Vlasov simulations. These are fundamentally very similar in structure to the field solvers used in other simulation methods, such as PIC and MHD, and can in many cases be adapted directly from these with little change.

4.5.1 Electrostatic solvers

If modelling a physical system in which the interaction of plasma with magnetic fields is of little importance (such as electrostatic wave instabilities, dusty plasmas, surface interactions (Chane-Yook et al. 2006) and other typically local phenomena), the magnetic field force (\(q \mathbf {v} \times \mathbf {B}\)) part of the Vlasov equation can be neglected, and a purely electrostatic system remains.

Neglecting the effects of \(\mathbf {B}\) completely leads to the Vlasov–Poisson system of equations (4) and (5), for which the field solver needs to find a solution to the Poisson equation at every time step. As this is an elliptic differential equation that is solved instantaneously in time, no time step limit arises from the field solver itself. Typically, solvers use approximate iterative approaches, multigrid methods or Fourier-space solutions (Birdsall and Langdon 2004).
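For a one-dimensional problem the discretised Poisson equation becomes a tridiagonal system that can be solved directly. The sketch below assumes Dirichlet boundaries with zero potential at both ends, normalised units with \(\epsilon _0 = 1\), and a second-order finite-difference Laplacian. It uses the Thomas algorithm instead of the iterative, multigrid or Fourier methods named above, and the function name is hypothetical.

```python
import math

def solve_poisson_1d(rho, dx, eps0=1.0):
    """Solve d2(phi)/dx2 = -rho/eps0 on interior nodes with phi = 0 at both ends."""
    n = len(rho)
    diag = -2.0                                   # main diagonal; off-diagonals are 1
    rhs = [-r * dx * dx / eps0 for r in rho]
    c_prime = [0.0] * n
    d_prime = [0.0] * n
    c_prime[0] = 1.0 / diag
    d_prime[0] = rhs[0] / diag
    for i in range(1, n):                         # forward elimination
        denom = diag - c_prime[i - 1]
        c_prime[i] = 1.0 / denom
        d_prime[i] = (rhs[i] - d_prime[i - 1]) / denom
    phi = [0.0] * n
    phi[-1] = d_prime[-1]
    for i in range(n - 2, -1, -1):                # back substitution
        phi[i] = d_prime[i] - c_prime[i] * phi[i + 1]
    return phi

n = 99
dx = 1.0 / (n + 1)
x = [(i + 1) * dx for i in range(n)]
rho = [math.pi ** 2 * math.sin(math.pi * xi) for xi in x]   # exact phi = sin(pi x)
phi = solve_poisson_1d(rho, dx)
max_error = max(abs(p - math.sin(math.pi * xi)) for p, xi in zip(phi, x))
print(max_error)
```

The remaining error is the second-order discretisation error of the Laplacian. The electric field then follows from a finite-difference gradient of the potential.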

Another option, if an initial solution for the electric field has been found (or happens to be trivial), is to update it in time by using Ampère’s equation in the absence of \(\mathbf {B}\),

\[ \frac{\partial \mathbf {E}}{\partial t} = -\frac{\mathbf {j}}{\epsilon _0}, \]

in either an explicit finite-difference manner, or using more advanced implicit formulations (Cheng et al. 2014). Special care should however be taken to prevent the violation of the Gauss law [cf. Eq. (9)] by using appropriate numerical methods.

4.5.2 Full electromagnetic solvers

If the full plasma microphysics of both electrons and ions is to be considered, and particularly if radio wave or synchrotron emissions are intended outcomes of the system, one must use the full set of Maxwell’s equations. A popular and well-established family of electromagnetic field solvers is the finite difference time-domain (FDTD) approach, which has a longstanding history in electrical engineering applications. In formulating the finite differences for the \(\partial \mathbf {E} / \partial t \sim \nabla \times \mathbf {B}\) and \(\partial \mathbf {B} / \partial t \sim \nabla \times \mathbf {E}\) terms of Maxwell’s equations, it is often advantageous to use a staggered-grid approach, in which the electric field and magnetic field grids are offset from one another by half a grid spacing in every direction (Yee 1966). In this setup, every component of the electric field vector is surrounded by magnetic field components and vice versa, so that the finite difference evaluation of the curl can be performed without any need for interpolation.
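The staggering can be illustrated in one dimension, where only \(E_y\) and \(B_z\) remain. The sketch below assumes vacuum, normalised units with \(c = 1\), a periodic domain, \(E_y\) stored on the nodes and \(B_z\) on the half-nodes, with the two fields also offset by half a time step (leapfrog); the function name `yee_1d` is hypothetical.

```python
import math

def yee_1d(e, b, courant, n_steps):
    """Leapfrog update of E_y (nodes) and B_z (half-nodes) on a periodic grid, c = 1.

    b[i] lives at x = (i + 1/2) dx and half a time step before e[i];
    courant = c * dt / dx.
    """
    n = len(e)
    e = list(e)
    b = list(b)
    for _ in range(n_steps):
        # dB_z/dt = -dE_y/dx, centred on the half-node between e[i] and e[i+1]
        b = [b[i] - courant * (e[(i + 1) % n] - e[i]) for i in range(n)]
        # dE_y/dt = -c^2 dB_z/dx, centred on the node between b[i-1] and b[i]
        e = [e[i] - courant * (b[i] - b[i - 1]) for i in range(n)]
    return e, b

n = 64
pulse = [math.exp(-((i - 16.0) ** 2) / (2.0 * 3.0 ** 2)) for i in range(n)]
e0 = list(pulse)
# Right-moving pulse: B_z = E_y / c, sampled at x + dx/2 and t - dt/2.
b0 = [pulse[(i + 1) % n] for i in range(n)]
e1, b1 = yee_1d(e0, b0, courant=1.0, n_steps=20)
max_error = max(abs(e1[i] - pulse[(i - 20) % n]) for i in range(n))
print(max_error)
```

No interpolation is needed in either update because each difference is centred on the location of the quantity being advanced. In this one-dimensional case with \(c\,\varDelta t = \varDelta x\), the pulse is transported by exactly one cell per step; for smaller time steps or in higher dimensions, numerical dispersion appears.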

Care should be taken when employing FDTD solvers for studies of wave propagation at high frequencies or wave numbers, as the numerical dispersion relations of waves deviate from their physical counterparts for high \(\mathbf {k}\). This effect is anisotropic in nature and most strongly pronounced in cases of diagonal propagation, due to the intrinsic differences in the manner by which grid-aligned and non-grid-aligned computations are handled. Kärkkäinen et al. (2006) and Vay et al. (2011) present a thorough analysis of this problem in the case of PIC simulations, and provide suggestions for mitigating its effects. The largest disadvantage of FDTD solvers is their stringent requirement to resolve the propagation of fields at the speed of light, which leads to extremely short time steps. In order to simulate anything at non-microscopic timescales, other methods need to be used. Fourier-space solvers of Maxwell’s equations are advantageous in this respect, as they do not come with fundamental time step limitations. This is offset by the fact that their parallelisation is more difficult, and the formulation of appropriate boundary conditions is not always possible (cf. Sect. 4.2).

4.5.3 Hybrid solvers

If large-scale phenomena with timescales much longer than the local light crossing time are being investigated, FDTD Maxwell solvers quickly lose their appeal. If magnetic field phenomena are still to be considered self-consistently in the simulation, the electrodynamic behaviour has to be modified appropriately, so that simulation with longer time steps becomes feasible.

One common way to remove the speed of light as a limiting factor is to eliminate the electromagnetic mode as a solution to Maxwell’s equations altogether, in a process called the Darwin approximation (see Sect. 3.2.3 and Schmitz and Grauer 2006; Bauer and Kunze 2005). In this process, the electric field is decomposed into its longitudinal and transverse components \(\mathbf {E} = \mathbf {E}_L + \mathbf {E}_T\), with \(\nabla \times \mathbf {E}_L = 0\) and \(\nabla \cdot \mathbf {E}_T = 0\). Only \(\mathbf {E}_L\) is allowed to participate in the temporal update of \(\mathbf {B}\), so that the electromagnetic mode drops out of the simulated physical system. As a result, the fastest remaining wave mode in the system becomes the Alfvén wave, and the maximum time step rises significantly, by a factor of \(c/v_A\).
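The size of the factor \(c/v_A\) can be estimated with a short Python sketch using the standard definition \(v_A = B / \sqrt{\mu_0 \rho}\) for a proton plasma. The input values (a magnetic field of 5 nT and a number density of 5 cm\(^{-3}\)) are assumptions chosen to resemble typical solar wind conditions, and the function names are hypothetical.

```python
import math

MU_0 = 4.0e-7 * math.pi    # vacuum permeability [H/m] (approximate)
C_LIGHT = 2.99792458e8     # speed of light [m/s]
M_PROTON = 1.67262192e-27  # proton mass [kg]


def alfven_speed(b_tesla, n_per_m3, ion_mass=M_PROTON):
    """Alfven speed v_A = B / sqrt(mu_0 * n * m_i) in m/s."""
    return b_tesla / math.sqrt(MU_0 * n_per_m3 * ion_mass)


def darwin_time_step_gain(b_tesla, n_per_m3):
    """Ratio c / v_A by which the wave-limited time step can grow."""
    return C_LIGHT / alfven_speed(b_tesla, n_per_m3)


if __name__ == "__main__":
    b, n = 5e-9, 5e6  # assumed values: 5 nT, 5 protons per cubic centimetre
    print(f"v_A   = {alfven_speed(b, n) / 1e3:.1f} km/s")
    print(f"c/v_A = {darwin_time_step_gain(b, n):.0f}")
```

For these assumed inputs the Alfvén speed is a few tens of km/s, so the gain is of the order of several thousand.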

Approximating the full set of Maxwell equations comes at the cost of not having a closed set of equations any more. As already shown in Sect. 3.2.3, the system is typically closed by providing a relation between the electric and magnetic field such as Eq. (12), called Ohm’s law. The level of complexity of Ohm’s law directly influences the simulation results as it immediately affects the kinetic physics described by the model.

4.6 Coupling schemes

A reduction of the computational burden of a model can be achieved by coupling different schemes in order to focus the use of the costlier kinetic model on the region(s) of interest while solving other parts of the system with less intensive algorithms. This is also a means of extending the simulation domain where one system is taken as the boundary condition of the other. Various classes of coupled models exist, depending on the coupling interface chosen.

One strategy is to define a spatial region of interest in which the expensive kinetic model is applied, embedded in a wider domain covered by a significantly cheaper fluid model. For Vlasov–fluid coupling, this method is under investigation and has been tested on classic small problems (Rieke et al. 2015), but it has not yet been applied in the context of large-scale astrophysical simulations. However, this type of coupling is being used successfully in the case of fluid–PIC coupling (e.g., Tóth et al. 2016; Chen et al. 2017) and also in reconnection studies (e.g., Usami et al. 2013). The disadvantage of this strategy is that scale coupling cannot be addressed: the kinetic effects do not spread into the fluid regime, and smaller-scale physics can only affect the solution in the domain in which the kinetic physics is in force.

Another strategy consists in defining the regions of interest in velocity space, that is, coupling a fluid scheme describing the large-scale behaviour of a system with a Vlasov model that handles suprathermal populations and thereby introduces kinetics into the model. Again, this is a recent development for which a certain amount of theoretical work and testing on small cases has been done (e.g., Tronci et al. 2014), but which has not yet been extended to larger-scale applications.

4.7 Computational burden of Vlasov simulations

Numerically representing the complete velocity phase space of a kinetic plasma system, including all required physical processes, is computationally intensive, and a large amount of data needs to be stored and processed. This section outlines different possible representations of the phase-space distribution, the associated solution methods, and their expected scaling. Computational requirements for equivalent PIC simulations are estimated for comparison, although a rigorous comparison is difficult because of their different tuneable parameters, and is beyond the scope of this work.

As shown in Sect. 4.1, a blunt Eulerian discretisation of a magnetospheric simulation without any velocity space sparsity results in \(10^{18}\) sample points or a minimum of 4 EiB memory requirement, which is unrealistic on current and next-generation architectures. A first approach is to reduce the dimensionality from a full 3D space to a 2D slice, which results in a reduction of sample points of the order of \(10^4\). Obviously a further reduction to 1D yields a similar gain. With a sparse velocity space strategy as used in Vlasiator (see Sect. 5.2 below) a further reduction by a factor of \(10^2\)–\(10^3\) sample points can be achieved. Typically modern large-scale kinetic simulations both with Vlasov-based methods (e.g., Palmroth et al. 2017) and PIC methods (e.g., Daughton et al. 2011) reach an order of magnitude of \(10^{11}\)–\(10^{12}\) sample points.
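The reduction chain quoted above can be followed with a few lines of Python arithmetic. The only assumption beyond the numbers in the text is the storage size of 4 bytes per sample point (one single-precision value); the variable and function names are hypothetical.

```python
BYTES_PER_SAMPLE = 4  # assumption: one single-precision float per sample point


def memory_bytes(n_samples, bytes_per_sample=BYTES_PER_SAMPLE):
    """Minimum memory needed to hold one value per sample point."""
    return n_samples * bytes_per_sample


full_6d = 10**18                  # blunt Eulerian 3D-3V discretisation
slice_2d = full_6d // 10**4       # reduction from 3D to a 2D slice
sparse_low = slice_2d // 10**3    # sparse velocity space, upper reduction
sparse_high = slice_2d // 10**2   # sparse velocity space, lower reduction

if __name__ == "__main__":
    for label, n in [
        ("full 3D-3V", full_6d),
        ("2D slice", slice_2d),
        ("2D slice + sparsity (max)", sparse_low),
        ("2D slice + sparsity (min)", sparse_high),
    ]:
        print(f"{label:26s} {n:.0e} samples, {memory_bytes(n) / 2**40:.3g} TiB")
```

Under this assumption the full discretisation needs \(4 \times 10^{18}\) bytes, i.e., a few EiB, while the reduced problem lands in the \(10^{11}\)–\(10^{12}\) sample range reached by current large-scale simulations.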

Beyond the number of sample points to be treated, the length of the propagation time step relative to the total simulation time aimed for is a crucial component of the computational burden of a model. Certain classes of solvers are limited in that respect, as they cannot allow a signal to propagate more than one sampling interval or discretisation cell per time step (see Sect. 5.3). Compared with hybrid models using the Darwin approximation, the inclusion of electromagnetic (light) waves in the model description reduces the allowable time step by a factor of \(10^3\) or more. Eulerian solvers typically have similar limitations, which can reduce the time step by a factor of approximately \(10^2\) due to the Larmor motion in velocity space in the presence of a magnetic field. Subcycling strategies and the use of Lagrangian algorithms are common approaches to alleviate these issues, although potentially at the cost of some physical detail.

A choice of basis function for the representation of velocity space other than Eulerian grids (like spectral or Hermite bases) can in many cases be beneficial to limit the memory requirements for reasonable approximations of the velocity space morphology. Care must however be taken that non-local transformations from one basis to another, such as a Fourier transform, tend to have unfavourable scaling behaviour in massively parallel implementations. Tensor-train formulations appear to be a promising avenue for the representation of phase space densities that have suitable computational properties, but large-scale space plasma applications have not been demonstrated yet.

Higher computational requirements are expected if the physics of multiple particle species (especially electrons) is essential for the system under investigation. The need to represent multiple separate distribution functions multiplies the memory and computation requirements. The relative mass ratios of these species also have an effect on the kinetic time and length scales that need to be resolved. Going from a purely proton-based hybrid-Vlasov simulation to a “full-Vlasov” simulation, in which electrons are included as a kinetic species, shortens the Larmor times by a factor of \(m_p / m_e = 1836\) and, depending on the employed solver, may require resolution of the plasma’s Debye length. The latter factor means that, with respect to the reference hybrid simulation considered above, which approximately resolves the ion kinetic scales, a spatial resolution increase of the rough order of \(10^5\) would be required (see Table 1). Applied along each of the three spatial dimensions, this amounts to a staggering factor of \(10^{15}\) more sampling points. In order to reduce this considerable overhead, a common approach is to rescale physical quantities such as the electron-to-proton mass ratio and/or the speed of light (e.g., Hockney and Eastwood 1988), while hoping to maintain quantitatively correct kinetic physics behaviour.

Most of these scaling relations likewise apply to PIC simulations. In PIC, however, the parameter most strongly affecting the computational burden of the phase space representation is the particle count. As a rule of thumb, a PIC simulation with a particle count similar to the sampling point count of an equivalent Vlasov simulation will have a similar overall computational cost. For many physical scenarios, this particle count can be chosen to be significantly lower (on the order of 100–1000 particles/cell), especially if noisy representations of the velocity spaces are acceptable. In simulations with high dynamic density contrasts, in which certain simulation regions become depleted of particles, as well as in setups in which a minimisation of sampling noise is essential (such as investigations of nonlinear wave phenomena), PIC and Vlasov simulations are expected to reach a break-even point.
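The trade-off between particle count and noise can be made tangible with the usual statistical estimate that the relative sampling noise of a particle-based moment scales as \(1/\sqrt{N}\) for \(N\) particles per cell. The following Python sketch applies this generic Monte Carlo scaling to the particle counts quoted above; it is an order-of-magnitude illustration with hypothetical function names, not a model of any specific PIC code.

```python
import math


def relative_sampling_noise(particles_per_cell):
    """Generic 1/sqrt(N) estimate of the relative statistical noise."""
    return 1.0 / math.sqrt(particles_per_cell)


def particle_cost_factor(noise_reduction):
    """Factor by which the particle count grows to cut the noise by `noise_reduction`."""
    return noise_reduction ** 2


if __name__ == "__main__":
    for n in (100, 1000):
        print(f"{n:5d} particles/cell -> noise ~ {100 * relative_sampling_noise(n):.1f} %")
    print("10x less noise costs", particle_cost_factor(10), "x more particles")
```

Under this scaling, each tenfold reduction in noise requires a hundredfold increase in particle count, which is why noise-sensitive problems push PIC towards the cost of a Vlasov simulation.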

4.8 Achievements in Vlasov-based modelling

The progress of available scientific computing capabilities towards and beyond petascale in the last decade has driven the interest in and applicability of Vlasov-based methods to multidimensional space and astrophysical plasma problems. Table 2 compiles existing research work using direct solutions of the Vlasov equation in plasma physics. Table 2 only includes works with a direct link to space physics and astrophysics, meaning that purely theoretical work as well as research from adjacent fields, in particular nuclear fusion and laser–plasma interaction, has been omitted from this list on purpose. As of 2018, the Vlasov equation has thus been used in space plasma physics and plasma astrophysics to model magnetic reconnection, instabilities and turbulence, the interaction of the solar wind with the Earth and other bodies, radio emissions in near-Earth space and the charge distribution around spacecraft.

5 Vlasiator

This section considers the choices and approaches made for the Vlasiator code, which aims to describe the near-Earth space at ion kinetic scales. Vlasiator simulates the global near-Earth plasma environment through a hybrid-Vlasov approach. The evolution of the phase-space density of kinetic ions is solved with Vlasov’s equation (Eq. 3), with the evolution of electromagnetic fields described through Faraday’s law (Eq. 8), Gauss’ law and the solenoid condition (Eq. 9), and the Darwin approximation of Ampère’s law (Eq. 14). Electrons are modelled as a massless charge-neutralising fluid. Closure is provided via the generalised Ohm’s law (Eq. 12) under the assumptions of high conductivity, slow temporal variations, and cold electrons, i.e., the Hall MHD Ohm’s law (Eq. 13). The source code of Vlasiator is available at http://github.com/fmihpc/vlasiator according to the Rules of the Road mapped out at http://www.physics.helsinki.fi/vlasiator.

5.1 Background

Vlasiator has its roots in the discussions within the global MHD simulation community around 2005. It was becoming evident that while global MHD simulations are important, their capabilities, especially in the inner magnetosphere, are limited. The inner magnetosphere consists of spatially overlapping plasma populations of different temperatures (e.g., Baker 1995) and therefore the environment cannot be satisfactorily modelled with MHD to a degree allowing e.g., environmental predictions for societally critical spacecraft or as a context for upcoming missions, like the Van Allen Probes (e.g., Fox and Burch 2013). To this end, two strategies emerged: either coupling a global MHD simulation with an inner magnetospheric simulation (e.g., Huang et al. 2006), or going beyond MHD with the then newly introduced hybrid-PIC approach (e.g., Omidi and Sibeck 2007). Coupling different codes carries a risk that the effects of the coupling scheme dominate over the improved physics. On the other hand, while hybrid-PIC simulations had produced important breakthroughs (e.g., Omidi et al. 2005), the velocity distributions computed through binning are noisy due to the limited number of launched particles, which could compromise physical conclusions. Further, due to the limited number of particles, the hybrid-PIC simulations could not provide sharp gradients, which would become a problem especially in the magnetotail, where the lobes surrounding the dense plasma sheet are almost empty. As the tail physics is critical in the global description of the magnetosphere, the idea of a hybrid-Vlasov simulation emerged.

The objective was simple: to go beyond MHD by introducing protons as a kinetic population modelled by a distribution function, thus getting rid of the noise. Several challenges were identified. First, if one neglects electrons as a kinetic population, one will, e.g., lose electron-scale instabilities that can be important in the tail physics (e.g., Pritchett 2005). Second, a global hybrid-Vlasov approach is still an extreme computational challenge even with a coarse ion-scale resolution, since it must be carried out in six dimensions. Further, doubts existed about whether grid resolutions achievable with the computational resources of the time would facilitate ion kinetic physics. However, with a new approach without historical heritage, one could utilise the latest high-performance computing methods and new computational architectures, provided that the code would always be portable to the latest technology. The computational resources were still obeying “Moore’s law”, and petascale systems had just become operational (Kogge 2009). With these prospects in mind, Vlasiator was proposed to the newly established European Research Council in 2007, which solicited new ideas with a high risk–high gain vision.

5.2 Grid discretisation

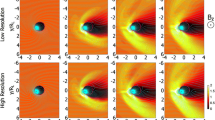

The position space is discretised on a uniform Cartesian grid of cubic cells. Each cell holds the values of variables that are either being propagated or reconstructed (e.g., the electric and magnetic fields and the ion velocity distribution moments) as well as housekeeping variables. In addition, a three-dimensional uniform Cartesian velocity space grid is stored in each spatial cell. For position space Vlasiator uses the Distributed Cartesian Cell-Refinable Grid library (DCCRG; Honkonen et al. 2013) albeit without making use of the adaptive mesh refinement capabilities it offers. The library can distribute the grid over a large supercomputer using the domain decomposition approach (see Sect. 5.5 for details on the parallelisation strategies).
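The layout described above, with a uniform velocity grid stored inside every position-space cell, can be sketched as a small Python data structure. This is a conceptual illustration only: the class `SpatialCell`, the helper `make_grid` and all field names and default sizes are hypothetical, and they reflect neither Vlasiator’s actual classes nor the DCCRG interface.

```python
from dataclasses import dataclass, field


@dataclass
class SpatialCell:
    """Hypothetical position-space cell holding fields and a velocity grid."""
    e_field: tuple = (0.0, 0.0, 0.0)
    b_field: tuple = (0.0, 0.0, 0.0)
    nv: int = 4            # velocity cells per dimension (assumed)
    v_min: float = -2.0    # lower edge of the velocity grid (assumed units)
    dv: float = 1.0        # velocity cell size
    f: list = field(default_factory=list)  # flattened nv**3 phase-space densities

    def __post_init__(self):
        if not self.f:
            self.f = [0.0] * self.nv ** 3

    def index(self, i, j, k):
        """Flattened index of velocity cell (i, j, k)."""
        return (k * self.nv + j) * self.nv + i

    def velocity(self, i):
        """Cell-centre velocity along one axis."""
        return self.v_min + (i + 0.5) * self.dv

    def density(self):
        """Zeroth velocity moment: sum of f times the velocity cell volume."""
        return sum(self.f) * self.dv ** 3

    def bulk_velocity(self):
        """First velocity moment divided by the density."""
        n = self.density()
        if n == 0.0:
            return (0.0, 0.0, 0.0)
        mom = [0.0, 0.0, 0.0]
        for k in range(self.nv):
            for j in range(self.nv):
                for i in range(self.nv):
                    w = self.f[self.index(i, j, k)] * self.dv ** 3
                    mom[0] += w * self.velocity(i)
                    mom[1] += w * self.velocity(j)
                    mom[2] += w * self.velocity(k)
        return tuple(m / n for m in mom)


def make_grid(nx, ny, nz):
    """Uniform Cartesian position grid; every cell owns its own velocity grid."""
    return [[[SpatialCell() for _ in range(nx)] for _ in range(ny)] for _ in range(nz)]
```

In this picture the velocity distribution moments stored per cell are reconstructed by summing over the cell’s velocity grid, while the total storage scales as the number of spatial cells times the number of velocity cells.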