Abstract

Despite the importance of network analysis in biological practice, dominant models of scientific explanation do not account satisfactorily for how this family of explanations gains its explanatory power in each specific application. This insufficiency is particularly salient in the study of the ecology of the microbiome. Drawing on Coyte et al.’s (2015) study of the ecology of the microbiome, Deulofeu et al. (2021) argue that these explanations are neither mechanistic nor purely mathematical, yet they are substantially empirical. Building on their criticisms, in the present work we go a step further in elucidating this kind of explanation with a general analytical framework according to which scientific explanations are ampliative, specialized embeddings (ASE), which has recently been successfully applied to other biological subfields. We use ASE to reconstruct in detail Coyte et al.’s case study and, on its basis, we claim that network explanations of the ecology of the microbiome, and other similar explanations in ecology, gain their epistemological force in virtue of their capacity to embed biological phenomena in non-accidental generalizations that are simultaneously ampliative and specialized.

1 Introduction

The first contemporary models of scientific explanation attributed a fundamental role to scientific laws in explanatory practice. Under this schema, an explanation was conceived as the nomological inference of the set of propositions contained in the explanandum from the set of propositions that constituted the explanans, among which a law of nature must necessarily figure (and no non-nomological regularity can take its place) (Hempel & Oppenheim, 1948; Hempel, 1965). Explanations would thus be deductive-nomological (DN) or inductive-statistical (IS), depending on whether the inference is deductive or inductive. This model of explanation has, however, been judged by many to be inadequate to capture the epistemology of explanations in the life sciences. These critics argue that one can hardly find in biology the type of universal and exceptionless nomological generalizations that Hempel-like explanations require (Beatty, 1995; Mitchell, 1997, 2003). In a related vein, it is also claimed that the aim of discovering laws fits poorly with the heuristics of biological research, which seems to be oriented towards the construction of local models of different types of biological phenomena (Godfrey-Smith, 2006).

The general criticisms of the DN/IS model of explanation motivated the emergence of other general accounts, mainly unificationism (Kitcher, 1989, 1993) and causalism (Scriven, 1975; Salmon, 1984; Lewis, 1986), each of which is also prone to its own problems. Additionally, the alleged inapplicability of the DN/IS model to the biological sciences motivated the formulation of a whole variety of new types of explanations in biology (functional, teleological, mechanistic, etc.). In fact, contemporary biological research relies on a plurality of explanatory practices to account for biological phenomena (Braillard & Malaterre, 2015; Green, 2016; Moreno & Suárez, 2020). Molecular biology, for instance, appeals to the way in which certain biomolecules interact with each other to produce certain outputs. Evolutionary biology appeals to the theory of natural selection and its concrete implementation in genetic models to explain why some organisms bear certain traits, or why some phenotypic forms have evolved. Developmental biology tries to uncover how aspects such as the relative position of some cells during gastrulation constrain their biological fate, determining whether they will specialize into one type of tissue or another. These and other (sub)fields combine different resources to produce epistemologically satisfactory explanations of why the biological world is how it is, and why certain biological phenomena occur.

One of the explanatory heuristics that has received more philosophical attention in the last decades is the so-called new-mechanistic strategy, which is presented in sharp opposition to the Hempelian DN/IS conception of explanation (Craver & Darden, 2013; Glennan, 1996, 2002; Machamer et al., 2000). The defining feature of new-mechanism is the belief that scientific explanations work because scientists can show how the phenomenon of interest (explanandum) is produced via the operations of a discrete set of parts and their interactions. Conceptually speaking, one may distinguish two essential components in any mechanistic explanation, which jointly make mechanistic explanations explanatory: a model of mechanism, consisting in the arrangement of parts, operations and the way these are organized in a system, and a causal story that systematically connects the interactions between these parts and a specific outcome. Both elements are necessary and sufficient for a mechanistic explanation (Issad & Malaterre, 2015), and for this reason new-mechanism has been characterized as a form of causalism about explanation (Woodward, 2017). Heuristically speaking, new-mechanism exploits the possibility that biological systems can often be de-composed into discrete parts whose behaviour can be causally mapped to a specific function within the global system. This leads to a research methodology based on the heuristics of de-composition/re-composition, and localization (Bechtel & Abrahamsen, 2005; Bechtel & Richardson, 1993). 
These strategies require four minimal elements, which are necessary for a successful mechanistic explanation (Kaiser, 2015; Moreno & Suárez, 2020): (a) the system must be experimentally divided into discrete parts and their operations; (b) the experimental division must occur via an empirical intervention on the system; (c) each part must be causally mapped to a specific operation within the system; (d) the phenomenon must be shown to be a product of the interactions between the parts of the mechanism.

Despite its increasing popularity and success in several areas of contemporary biology, the universal application of the new-mechanistic strategy in every field of biology has been severely questioned. First, several biological phenomena are explained by a variety of processes whose level of complexity and high degree of robustness make them incompatible with the ontological requirements that characterize new-mechanistic philosophy (Alleva et al., 2017; Brigandt, 2015; Deulofeu & Suárez, 2018; Dupré, 2013; McManus, 2012; Nicholson, 2018). Second, several biological explanations seem not to be grounded on the causal-mechanistic features of the systems under study, but rather on their structural properties. These explanations, instead of looking for a causal-mechanistic story of how the parts of a system inter-relate to produce the phenomenon, seek to determine how the global properties of the system constrain or limit its behaviour in such a way that the biological phenomenon becomes predictable (Brigandt et al., 2017; Deulofeu et al., 2021; Green & Jones, 2016; Huneman, 2010, 2018a; Sober, 1983).

The latter strategy has been implemented in biology, giving rise to the development of systems biology (Chen et al., 2009; Green, 2017; Mason & Verwoerd, 2007). Systems biology employs a diverse array of mathematical techniques to uncover the global properties of complex biological systems and to predict their future states or behaviours. It has been suggested that explanations in systems biology are better understood in Hempelian terms than in mechanistic terms (Fagan, 2016), although without a detailed characterization and defence of this suggestion. One of the techniques that has acquired special prominence is network analysis, together with the application of principles from graph theory to uncover the inner dynamics that some biological phenomena follow, which provides a specific way of generating biological explanations (Green et al., 2015, 2018; Huneman, 2010, 2018b). While some accounts of the explanatory power of network methodologies have been proposed in the recent literature in philosophy of biology, we will show that they are epistemologically unsatisfactory (§ 3), which leaves network explanations epistemologically mysterious.

To solve this deficiency, in this paper we provide an alternative elucidation of network explanations as they are used in the context of ecological microbiome research, by applying Díez’s (2014) neo-Hempelian analysis to Deulofeu et al.’s (2021) study of the network explanation of the ecology of the microbiome. We argue that, insofar as network methodologies are applied in the study of the ecology of the microbiome, they generate explanations that explain in virtue of their capacity to embed some biological phenomena into a theoretical model using a generalization which is simultaneously non-accidental, ampliative and specialized (ASE, for “ampliative, specialized embedding”). In this sense, network explanations of the ecology of the microbiome explain because they make the biological phenomenon expectable in this specific manner. Our paper thus complements Deulofeu et al.’s (2021) study by showing that ecological network explanations are ultimately explanations of the ASE-type.

In § 2, we introduce network approaches to the study of biological complexity. In § 3 we argue, by drawing on the explanation of the ecology of the microbiome, that network explanations, as they are used to study the ecology of the microbiome, are not satisfactorily accounted for by existing proposals, whether mechanistic or topological. In § 4, we introduce the ampliative specialized embedding account and show how it has been successfully applied to several cases of explanation in biology. In § 5, we apply this account to network explanations of the ecology of the microbiome and argue that it elucidates their explanatory power better than its rivals. Finally, in § 6, we present some concluding remarks.

2 The explanatory role of networks in biology: the case of the ecology of the microbiome

The growth of systems biology since the early 2000s has been partially triggered by the extensive application of mathematical techniques derived from graph theory to study the properties of biological systems. Within systems biology, network approaches have acquired a notable prominence as explanatory tools and have merited extensive philosophical examination in recent years (Fox Keller, 2005; Green, 2017; Moreno & Suárez, 2020). The use of networks to provide explanations in contemporary biology is plural, and the philosophical implications of different uses of network analyses strongly depend on the type of questions that different research groups are trying to address, as Green et al. (2018) have convincingly argued. These philosophical implications strongly depend on whether the use of the network analysis is subsidiary to the discovery of mechanisms, or whether it is by itself sufficient for explanatory purposes (Deulofeu et al., 2021). For instance, some network approaches are used to systematically analyse biological datasets in a way that allows the discovery of the mechanisms that underlie the biological systems for which these datasets have been generated (Bechtel, 2019, 2020). This use of network analysis has been argued to constitute an example in which network tools are ancillary to mechanistic explanation, a first step in the discovery of the mechanisms that are causally (and explanatorily) responsible for the occurrence of certain biological phenomena.

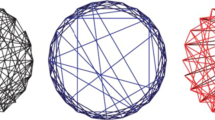

Other uses of network analyses are however more substantive, in the sense that the use of networks seems to be explanatory by itself, independently of mechanistic considerations. We refer to these cases as genuine network explanations. A good example of genuine network explanations in the natural sciences is provided by the use of network analysis to uncover the set of constraints that a concrete biological system may have as a result of its organization, i.e., as a consequence of the number of components that the system has and the way in which those are arranged or related to each other. In these cases, the nature of the entities that compose the system and the activities that these entities engage in are largely irrelevant to explaining the biological phenomena under investigation (Green, 2018, 2021; Green & Jones, 2016; Huneman, 2010, 2018b; Jones, 2014; Rathkopf, 2018). We consider that this second group of cases, which includes examples in stem cell biology (Fagan, 2016), developmental biology (McManus, 2012), and ecology (Huneman, 2010; Deulofeu et al., 2021), among other fields, constitutes the best example of a family of biological explanations that derive their explanatory power not from mechanistic properties of the empirical systems, but rather from some network-like properties that are specific to the biological system. To clarify this last point, it is useful to introduce a distinction between a biological network-like system and a general network-like system. A general network-like system refers to the type of system usually built by mathematicians with the aim of exploring the implications of the relationships between objects regardless of their specific nature. For example, the networks built in graph theory explore the properties derived from the distribution of objects in space. 
In contrast, a biological network-like system requires biological interpretation and it thus refers to the network systems built with specific biological purposes in mind. In this case, the objects are not interpreted abstractly, but as specific types of objects (species, microorganisms, multicellular organisms, predators, prey, etc.). This strongly affects how the network is built for two reasons: first, because some perfectly possible mathematical objects are biologically impossible, and thus are discarded; second, because the properties of the objects, while yet being too abstract for mechanistic manipulation, will partially condition the construction of the network by constraining aspects like the strength of interaction between the nodes, the type of interactions, etc.

To illustrate how genuine biological network-like system explanations work, how they differ from mechanistic explanations, and how they differ from network-like systems generally, let us consider recent applications of network analyses to the study of the ecology of the microbiome (Coyte et al., 2015; Layeghifard et al., 2017; Naqvi et al., 2010). This is a well-described case in contemporary philosophy of biology, which has already been established as a standard example of a genuine biological network-like explanation (Deulofeu et al., 2021).

The microbiome is the set of microorganisms of different species that cohabit with an animal or plant host. In most cases, a microbiome includes hundreds or even thousands of species (Ronai et al., 2020). Microbiome composition is shaped by many factors, including the host genetics, but also environmental factors such as the host diet, or even seasonal variation (Stencel, 2021; Suárez, 2020; Theis et al., 2016). Recent biological research has shown that the microbiome of an organism is ecologically stable during its lifetime, even though it is highly diverse, it instantiates a random network, and its species composition is constantly being disturbed. That is, the relative density of the species that compose a host’s microbiome remains constant across time, even though our current knowledge about ecology strongly suggests that the microbiome should be unstable. There are two reasons to believe that the microbiome should be unstable. On the one hand, since the microbiome is constantly disturbed by several disrupting environmental factors, it is expected that the arrival of new species would constantly alter its relative densities and even produce the extinction of some of the already existing species. On the other hand, the microbiome is a highly diverse random ecological network, and current ecological knowledge suggests that high diversity triggers instability in random networks (May, 1972). A key biological phenomenon that needs to be explained is thus why the microbiome remains ecologically stable, even while all our current ecological knowledge suggests that it should be unstable. Or, in other words, how the microbiome is organised such that certain types of interactions (perturbations) do not make any difference to its global stability (Coyte et al., 2015).
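
The expectation that diversity destabilizes random networks can be illustrated numerically. The following is only a minimal sketch under our own assumptions, not a reconstruction of May’s (1972) or Coyte et al.’s (2015) computations: the function name `fraction_stable` and all parameter values (connectance, interaction spread `sigma`, self-regulation `self_reg`) are hypothetical choices made for illustration. A random community matrix counts as stable when all its eigenvalues have negative real part.

```python
import numpy as np

def fraction_stable(n_species, connectance=0.3, sigma=0.5, self_reg=1.0,
                    trials=50, seed=0):
    """Fraction of random community matrices that are locally stable.

    All parameter values are illustrative assumptions, not empirical estimates.
    """
    rng = np.random.default_rng(seed)
    stable = 0
    for _ in range(trials):
        # Each off-diagonal interaction exists with probability `connectance`
        # and, when present, has a strength drawn from N(0, sigma^2).
        mask = rng.random((n_species, n_species)) < connectance
        m = rng.normal(0.0, sigma, (n_species, n_species)) * mask
        # Every species regulates itself with the same strength.
        np.fill_diagonal(m, -self_reg)
        # Stable if the eigenvalue with the largest real part is negative.
        if np.linalg.eigvals(m).real.max() < 0:
            stable += 1
    return stable / trials

if __name__ == "__main__":
    for s in (5, 20, 200):
        print(s, fraction_stable(s))
```

With these illustrative defaults, the quantity sigma·√(S·C) that figures in May’s criterion is below the self-regulation strength for S = 5 and well above it for S = 200, so the proportion of stable matrices is expected to fall as the number of species grows.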

The standard way of studying the global stability of the microbiome is via the construction of network models, akin to their use in other areas of systems biology. In this vein, biologists Coyte et al. (2015) built a well-recognised network-based explanation of microbiome stability, which we will focus on here.

To build their explanation, they start by describing the microbiome as a random unstructured network of up to S interacting species, where S = 1000 (an average number of species in a normal microbiome), whose relative density, Xi, will change over time in response to the intrinsic growth rate of each species, ri, its interaction with other members of the same species, si, and its interaction with members of all the other species within the network, aij (with i ≠ j). In Coyte et al.’s model, the interactions refer to five types of biological interactions between species: cooperation, competition, exploitation, commensalism and amensalism. The choice of five interaction types derives from the knowledge that diversity fosters instability in random networks. Concretely, Coyte et al. realized that this was only the case if the network had only one interaction type, as was the case in the early studies by May (1972). However, recent research by Mougi and Kondoh (2012) had shown that this was not the case if five possible types of interactions were considered, although Mougi and Kondoh’s research was restricted to macroscopic communities. Based on this, Coyte et al. concluded that the dynamics of the network would be captured by the equation:

$$\frac{dX_i}{dt} = X_i\left(r_i - s_i X_i + \sum_{j \neq i} a_{ij} X_j\right) \qquad (1)$$

The next step in the research consists in studying the behaviour of the network, i.e. of (1), when different perturbations alter the relative density of one or more species. This allows discovering the type of interactions aij that would make the network unstable, thus revealing which interactions make it unfeasible for the network to recover its relative distribution of species densities after dt, and which interactions make the recovery feasible. The mathematical analysis requires a linear stability analysis (LSA) of the system, which generates a very large number of ordinary differential equations (ODEs) whose computation reveals which types of interactions aij make the microbiome stable. LSA requires the computation of thousands of equations in a complex process in which any alleged causal equivalence between each ODE and a real property of the empirical system is ultimately lost (Issad & Malaterre, 2015). Once the LSA was conducted, Coyte et al. deduced that, assuming that the network has a non-zero degree of connectivity (i.e. that there are interactions between many species in it), a high proportion of competitive interactions between the species that compose the network will make it stable, whereas a high proportion of cooperative interactions will make it unstable. This provides a spectrum which constrains the ranges of interactions where the microbiome is ecologically stable, given its internal architecture as this emerges from the LSA of the network model system. In other words, the explanans explains the explanandum because it depicts how the species’ densities relate to each other on average for the system to be stable, which is the empirically observable explanandum. Coyte et al. thus concluded that the high proportion of inter-species competition explains the stability of the microbiome. 
This was a surprising discovery since it contradicts Mougi and Kondoh’s (2012) result, which had shown that a high degree of mutualism was key to explaining stability in diverse ecosystems. However, Coyte et al. (2015) argued that the same cannot be the case for microscopic communities, since a high degree of mutualism would tend to generate feedback loops in the community which would ultimately erode its stability. In view of this analysis, it is important to note that Coyte et al.’s explanation constitutes a highly specific example in which the network-like structure becomes strongly shaped by the knowledge derived from ecology in general, and from microbial ecology in particular. Concretely, some empirical knowledge derives from the known properties of the microbiome (that it is diverse, stable and constantly perturbed), and some theoretical knowledge derives from some ecological results (that diversity and stability only correlate in systems with several interaction types, and that microscopic and macroscopic systems do not need to behave ecologically in the same way).
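
The qualitative contrast between competitive and cooperative networks can be sketched with a toy linear stability check. The sketch below is not Coyte et al.’s model or code. It assumes, for illustration only, that the dynamics have a generalized Lotka-Volterra form built from the terms defined above (ri, si, aij), that all equilibrium densities are equal so that the community matrix can be written down directly, and that only two of the five interaction types (cooperation and competition) occur. The function names and all parameter values are hypothetical.

```python
import numpy as np

def community_matrix(n_species, p_coop, connectance=0.2, sigma=0.05,
                     self_reg=1.0, rng=None):
    """Toy community matrix with cooperative (+,+) and competitive (-,-) pairs.

    Simplifying assumptions: equal equilibrium densities, two interaction
    types only, illustrative parameter values.
    """
    rng = np.random.default_rng() if rng is None else rng
    m = np.zeros((n_species, n_species))
    for i in range(n_species):
        for j in range(i + 1, n_species):
            if rng.random() < connectance:
                # A connected pair is cooperative with probability p_coop,
                # otherwise competitive; both partners share the sign.
                sign = 1.0 if rng.random() < p_coop else -1.0
                m[i, j] = sign * abs(rng.normal(0.0, sigma))
                m[j, i] = sign * abs(rng.normal(0.0, sigma))
    # Intraspecific self-regulation on the diagonal.
    np.fill_diagonal(m, -self_reg)
    return m

def leading_real_part(m):
    """Largest real part among the eigenvalues; negative means locally stable."""
    return np.linalg.eigvals(m).real.max()

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    for p in (0.0, 0.5, 1.0):
        print(p, leading_real_part(community_matrix(200, p, rng=rng)))
```

Under these assumptions, a purely competitive network returns to equilibrium after small perturbations, whereas a purely cooperative one does not. Note that the check never identifies which species interacts with which: only the proportions and strengths of the interaction types enter the result.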

The interesting philosophical question that we raise about this case study concerns how exactly the explanans in Coyte et al.’s explanation, and in other similar explanations, gains its explanatory force with respect to its explanandum.

3 Philosophical elucidations of the explanatory role of networks

One option to elucidate Coyte et al.’s case study would be to argue that the explanans provides a mechanism that is productive of the explanandum, and in doing so it accounts for it. However, as Deulofeu et al. (2021) have convincingly argued, none of the requirements of the new-mechanistic strategy can be found in Coyte et al.’s explanation. To start with, the microbiome is not amenable to mechanistic intervention as a means to study its ecological stability. One of the reasons why this is so concerns its size. The microbiome of most individuals is composed of hundreds or thousands of species, whose relative abundance varies. A system of this size is not easily amenable to laboratory intervention, and the strategies of de-composition/re-composition and localization that characterize new-mechanistic research seem of little help here (Green et al., 2018). A second reason concerns its variability: the microbiome is unique to each host species, and different individuals bear different species in their microbiomes, even though this variability does not affect the global stability of the system. These two aspects entail that: (a) there is no division of the system into parts and their operations (as these would be unique to every microbiome, and Coyte et al. study the conditions affecting every ecologically stable microbiome); (b) there is no intervention on any empirical system (i.e. no de-composition/re-composition, no localization). In addition to these two problems, Deulofeu et al. (2021) show that: (c) there is no causal story of how the interactions between the elements of the system (species) produce the stability behaviour, as no element of the microbiome is causally mapped to a specific operation within the system; (d) there is no specification of which species interact with each other, or of the specific nature (competitive, cooperative, etc.) of these interactions, but rather a global description of the range(s) of proportions of the interaction types. 
Therefore, Deulofeu et al. (2021) convincingly conclude that new-mechanism cannot elucidate this question: the global properties of the microbiome, including the spectrum where it is ecologically stable, are inferred from the type of biological constraints that the system must possess given its internal architecture, and the internal architecture is in turn known via the LSA of the network model of the system. In other words, the explanans explains the explanandum because it depicts how the parts interact with each other on average for the system to be stable, which is the empirically observable explanandum.

Other authors agree with us that genuine network explanations cannot be accounted for in mechanistic terms, and propose their own elucidation of how this type of explanation explains (Huneman, 2010, 2018b; Kostić, 2020). Even while these elucidations are interesting and reveal important elements of some network explanations, we do not think they account satisfactorily for the specific kind of ecological cases we are analysing in this paper.

For example, Huneman (2010, pp. 216–218) argues that ecological explanations that appeal to networks are topological explanations, which are in turn a subtype of structural explanations (Huneman, 2018b). In his view, a topological explanation explains that a system S has a property X in virtue of S possessing a specific topological property Ti; that is, S has elements or parts whose behaviours can be represented by a network of a specific variety, $, in a topological space of possibilities or networks E. $ characterizes a class within E with different properties than other subclasses, $*, in E. Huneman’s idea is that the topological properties Ti of the system S explain because “they specify the nature of the properties whose existence entail[s] the fact that the explanandum happens” (2010, p. 217). For example, this occurs when one explains the impossibility of crossing the seven bridges of Königsberg without crossing one of them at least twice: the seven bridges exemplify a topological graph from which it can be demonstrated that no single-cross path can cross all the bridges. $ generally constitutes an equivalence class because many Sj realize the same topological property Ti as S. What happens in this kind of topological explanation is that once the network is correctly represented in a graph displaying the topological properties Ti, these Ti constrain the dynamics of the empirical system S such that it can only have one outcome when placed under certain conditions.
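
The Königsberg case shows how a purely topological property settles the outcome. By Euler’s classical result, a connected graph admits a walk that uses every edge exactly once only if it has zero or exactly two nodes of odd degree. The short sketch below checks this parity condition on the standard graph representation of the seven bridges; the node labels A to D and the function names are our own hypothetical choices, with A standing for the central island.

```python
from collections import Counter

# The seven bridges as edges between four land masses (labels are illustrative).
BRIDGES = [("A", "B"), ("A", "B"), ("A", "C"), ("A", "C"),
           ("A", "D"), ("B", "D"), ("C", "D")]

def odd_degree_nodes(edges):
    """Return the nodes touched by an odd number of edges, in sorted order."""
    degree = Counter()
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    return sorted(node for node, d in degree.items() if d % 2 == 1)

def has_single_cross_walk(edges):
    """Euler's parity condition; assumes the graph is connected."""
    return len(odd_degree_nodes(edges)) in (0, 2)

if __name__ == "__main__":
    print(odd_degree_nodes(BRIDGES))
    print(has_single_cross_walk(BRIDGES))
```

All four land masses have odd degree, so the condition fails whatever the bridges are made of or who walks across them. Any system realizing the same graph falls in the same equivalence class and yields the same verdict.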

Though Huneman’s account applies well to cases like that of the Königsberg bridges, and other virtues notwithstanding, we think it suffers from two flaws when applied to explanations in ecology like Coyte et al.’s. One rather technical issue has already been noted by Deulofeu et al. (2021). Because Coyte et al. build their system as a random network, topology is necessary, but it is by itself not sufficient to elucidate the explanation. It needs to be complemented by facts about the interaction matrix spelled out in (1), which are particularly realized through Coyte et al.’s LSA. The explanation works then as a combination of topological properties plus the interaction of the elements in the network. But we also have a second concern with this type of elucidation, as we think Huneman’s account fails to differentiate between biological network-like systems and general network-like systems. We believe that this leads him to present an account which, despite being a good elucidation of general network-like systems, does not work well for biological network-like systems. Let us explain this in some detail. Even if Huneman were correct that the topological properties together with the interaction matrix are enough to imply the explanandum, one may wonder why considering exclusively the topological properties and the interaction matrix would be a satisfactory explanation of an ecological phenomenon. Both topological properties and LSAs are applicable to systems of a very different empirical nature (biological, geographical, economic, etc.) provided the network they realize belongs to the same equivalence class. In a sense, if the only properties accounting for the explanandum are topological, it seems there is no difference between, e.g., explanations in geography and in ecology. Note that this is problematic regardless of one’s philosophical commitments about scientific explanation. First, Coyte et al. 
do not claim to be carrying out mathematical research; on the contrary, they are interested in explaining observable ecological properties of an empirical system. Second, their study is not taken to yield knowledge about the properties of random networks; it yields knowledge about the ecological properties of the microbiome. A good philosophical elucidation of this type of explanation should preserve its specific empirical nature, at least if it aims to account for what actually occurs in scientific practice. The question that Huneman’s account leaves unanswered, thus, is how to connect the topological properties and the interaction matrix discovered by Coyte et al. to specific ecological research.

Kostić (2020) has recently presented an account of topological explanations that one could try to apply to network explanations such as Coyte et al.’s to elucidate why they are explanatory. His account includes three elements, which he considers necessary and sufficient for a topological explanation to be explanatory: (a) factivity, i.e., explanans and explanandum must be approximately true; (b) counterfactual dependence between explanans and explanandum, which can take a horizontal or a vertical mode (Kostić, 2019); (c) explanatory perspectivism, i.e., that the explanans is a relevant answer to a specific question about the explanandum (2020, p. 2). The account also justifies what Kostić considers to be two salient features of topological explanations. First, that “the same topological explanatory pattern can be used to explain” a family of heterogeneous empirical systems (p. 1). Second, “that topological explanations are non-causal and that counterfactual dependency relations (…) hold independently from the contingent facts about any particular system” (p. 7).

We think that, despite its alleged success in other fields, the explanation of the ecology of the microbiome is not satisfactorily elucidated in Kostić’s terms either. Kostić’s account correctly puts the focus on the counterfactual yet non-causal-mechanistic aspect of topological explanations, as well as on the perspectival aspects that characterize any explanation as opposed to a description, but it does not elucidate essential aspects of the explanatoriness of Coyte et al.’s explanation, nor of other network explanations that are built on well-structured scientific theories. In Kostić’s account, the reason why scientists use a specific network model to explain certain features of an empirical system, and whether they appeal to a horizontal-mode or vertical-mode counterfactual explanans, depends on the specific question that the scientists are asking (i.e., perspectivism). So constructed, Kostić’s account puts excessive weight on the pragmatic dimension of scientifically explaining a phenomenon, leaving it completely unconstrained. Our main concern is that not every question a scientist asks about a phenomenon necessarily gives rise to a scientific explanation. Occasionally, scientists build descriptive models (Ankeny, 2000; Colyvan et al., 2009; Findl & Suárez, 2021), exploratory models (Gelfert, 2016), or phenomenological models (Cartwright, 1983; Díez, 2014). None of these models are considered explanations of the phenomena, yet they clearly respond to questions that scientists consider important. Kostić could object that even if this is a general problem for his account, it does not necessarily arise in ecology, where the pragmatic dimension determines that ecological models are explanatory. We think this answer would be incorrect, as the problem of distinguishing which models are explanatory is particularly salient in ecology. 
In ecology, models play many different functions not necessarily limited to explaining phenomena (Odenbaugh, 2019); yet some of these models are explanatory. The philosophical question is what makes these explanatory models different from those models that play other epistemic roles. Therefore, we consider that this type of account fails to elucidate what network explanations are, at least in the context of ecology we are considering in this paper.

To summarize, regardless of their successful application to other cases, neither new-mechanist accounts, nor Huneman’s nor Kostic’s, provide a satisfactory elucidation of Coyte et al.’s-like ecological explanations, whose explanatory force thus remains in need of elucidation. In the next section we present Díez’s ASE account of scientific explanation, which we argue fills this gap.

4 Explanations as ampliative, specialized embeddings: a neo-Hempelian approach

Díez’s (2014) account is elaborated by drawing on some ideas from Sneedean structuralism (Balzer et al., 1987; Bartelborth, 2002; Forge, 2002). It is formulated as a general account of explanation that can fix the issues usually raised against Hempel’s DN/IS model while sticking close to Hempel’s idea that explaining consists, basically, in making the explanandum nomologically expectable from antecedent conditions. The account allows doing so without moving to conditions as demanding as those posited by causalism or standard unificationism (see below). Díez claims that his neo-Hempelian approach can qualify as a general theory of explanation applicable across scientific practice, which may be supplemented with additional (causal manipulativist, causal mechanistic, unifying, reductive, topological, etc.) features in specific fields. The idea is that non-accidental, ampliative, specialized embedding is the minimal common sufficient condition for explanatoriness, yet in some specific contexts this condition is supplemented with one or more such additional features, which provide additional explanatory value according to certain pragmatic contextual demands, if any.

Ampliative, specialized embedding is then sufficient in general for minimal explanatoriness, which can eventually be additionally enriched in certain contexts. Yet, according to Díez, even when not so enriched, ampliative specialized embedding suffices for explanation. This generality is then compatible with the plurality of specific different kinds of explanation that one may find in different fields, including the variety one finds in biological practice that we referred to above, provided they all share the ASE minimal core. This plural variety originates in the additional features (if any) that the context may demand in a particular scientific field/practice.Footnote 2

ASE preserves the core of the Hempelian nomological expectability, though formulated within a model-theoretic framework with the notion of nomological embedding. The basic idea is that explaining a phenomenon consists of (at least) embedding it into a nomic pattern within a theory-net. Now explanandum and explanans are certain kinds of models or structures:

Let Di be domains of objects, and fi, gi relations or functions; then the explanandum is constituted by a data model DM = <D1, …, Dn, f1, …, fi> and the explanans by a theoretical model TM = <D1, …, Dm, g1, …, gj>. TM is defined by the satisfaction of certain nomological regularities and must involve at least all the kinds of objects and functions included in DM, but it can introduce new ones and frequently does so in satisfactory scientific explanations (see below on ampliativeness).

For instance, in the Classical Mechanics Earth-Moon case, the explanandum is the purely kinematical data model that represents the Moon’s spatio-temporal trajectory around the Earth as actually measured, and the explanans model is the dynamical structure including masses and forces and satisfying Newton’s Second Law and the Law of Gravitation. To explain the Moon’s trajectory consists in embedding it in the mechanical system, i.e., in making the Moon’s kinematic trajectory “nomologically expectable” from the mechanical model. Embedding here is a technical model-theoretic notion that means (if we simplify and leave idealizations aside for now) that the data model is, or is isomorphic to, a part of the theoretical model. This account is not confined to the physical sciences, and it applies well also to biological research. In Mendelian Genetics, for instance, the explanandum model describes the patterns of transmission of certain phenotypes, e.g., for peas, and the explanans model includes genes as an addition and satisfies certain genetic laws. The transmission of traits is explained when one embeds phenotype transmission into the theoretical genetic model, that is, when the observed phenotype sequence is identical (or isomorphic) to a part of the full genetic model (Díez & Lorenzano, 2022).

ASE’s basic idea is that if the explanation succeeds, then we find the explanandum data model as part of the theoretical model defined by certain laws. That is, if things behave in the way that some non-accidental regularities in the explanans say, then one should obtain as a result the explanandum data independently measured; and when the actual data coincide with the expected results, the embedding obtains, and the explanation succeeds. In this regard, ASE preserves the core of the Hempelian nomic-expectability idea. The embedding provides the expectability part, for if the embedding obtains then the expectation of finding the explanandum data as part of the theoretical model succeeds. This expectability, though, is weaker than Hempel’s inferentialism, for it does not demand that explanations be logical inferences stricto sensu (hence it is not subject to the “explanations are not inferences” criticisms); nevertheless, it makes room for both deterministic and probabilistic (including low probability cases, if needed) explanations, depending on whether the regularities that define the explanans models are deterministic or probabilistic. The nomic component comes from the fact that the theoretical model that embeds the explanandum model is defined by the structure satisfying certain laws, understood merely as non-accidental, counterfactual-supporting generalizations (in a way that is compatible with Mitchell’s characterization of the so-called “pragmatic laws”, see Mitchell, 1997, 2003). As Díez emphasizes, this sense of nomicity is quite modest, meaning just that the explanans model satisfies certain non-accidental generalizations, no matter how ceteris paribus, local, or domain restricted they are. On the other hand, the explanandum data model is measured without using the laws that define the theoretical model, i.e., independently of such laws, which guarantees that the intended embedding is not trivial and may fail.

This is basically a model-theoretic elaboration of the general Hempelian idea of explanations as nomological expectabilities. This idea, though, is substantially modified by adding two new conditions for the embedding to be properly explanatory: it must be both ampliative and specialized. As our mechanical and genetic examples illustrate, the explanans model must include new, additional ontological (in metaphysical terms) or conceptual (if one prefers a more epistemic formulationFootnote 3) components with respect to the explanandum model. In the mechanical case, the explanans includes, together with kinematic properties, new dynamical ones, namely masses and forces, behaving with the former as the mechanical laws establish. In the genetic case, the explanans model includes, together with the phenotypic properties, the genetic properties, i.e., the genes or factors that relate to the phenotypic properties as the genetic laws establish.

The ampliative character of these embeddings is what accounts for their explanatory nature compared to other embeddings that, although also nomological, lack explanatory import. In the Keplerian case, for instance, a nomological embedding is also present (Kepler’s regularities are not accidental generalizations; they support counterfactuals), but this embedding does not qualify as explanatory because the explanans model (defined by Kepler’s laws) does not introduce additional conceptual/ontological apparatus with respect to the explanandum, namely the kinematic (realFootnote 4) trajectories. Both the explanandum and the explanans model include only kinematical properties. The same applies to Galilean kinematics. As for biological cases, a case in point is merely phenotypic genetics, in which one takes purely phenotypic statistical regularities as defining models that embed certain phenotypic data. Again, this would be a case of a nomological embedding with no explanatory import. Embedding, even when nomological (i.e., counterfactual supporting), without ontological/conceptual ampliation, is not explanatory.

The second, additional condition is that the ampliative laws used for defining the explanans model must include “special” laws, which cannot consist in merely schematic or programmatic principles. This distinction originates in Kuhn’s difference between “generalization-sketches” and “detailed symbolic expressions” (Kuhn, 1974, p. 465) further elaborated by structuralist metatheory as the distinction between guiding-principles and their specializations in a theory-net (Balzer et al., 1987, Balzer & Moulines, 1998 for several examples).Footnote 5

Most theories are hierarchical net-like systems with laws of very different degrees of generality within the same conceptual framework. Often there is a single fundamental law or guiding-principle “at the top” of the hierarchy and a variety of special laws that apply to different phenomena. Fundamental laws/guiding-principles are “programmatic”, in the sense that they establish the kind of things we should look for when we want to explain a specific phenomenon, setting the general lawful scheme that specific laws must specify. It is worth emphasizing that general guiding-principles taken in isolation, without their specializations, are not very empirically informative, for they are too unspecific to be tested in isolation. To be tested/applied, fundamental laws or guiding-principles must be specialized (“concretized” or “specified”) by particular forms that, in Kuhn’s sense referred to above, specify some functional dependences that are left open in the general guiding-principle (Moulines, 1984; Díez & Lorenzano, 2013).

The resulting structure of a theory may be represented as a net, where the nodes are given by the different theory-elements, and the links represent different directions of specialization. For instance, the theory-net of Classical Mechanics (CM) has Newton’s Second Law as the top unifying nomological component, i.e. as its Fundamental Law or Guiding-principle (Balzer & Moulines, 1981; Moulines, 1984; Balzer et al., 1987) that can be read as follows:

CMGP

For a mechanical trajectory of a particle with mass m, the change in quantity of movement, i.e. m·a, is due to the combination of the forces acting on the particle.

This CMGP at the top specializes down opening different branches for different phenomena or explananda. This branching is reconstructed in different steps: first, symmetry forces, space-dependent forces, velocity-dependent forces, and time-dependent ones; then, e.g., the space-dependent branch further specializes into direct and indirect space-dependent; direct space-dependent branch specializes in turn into linear negative space-dependent and…; inverse space-dependent branch specializes into square inverse and…; at the bottom of every branch we have a completely specified law that is the version of the guiding-principle for the specific phenomenon in point: pendula, planets, inclined planes, etc. (Kuhn’s “detailed symbolic expressions”).

The theory-net of CM looks (at a certain historical moment) as follows (only some simplified terminal nodes are shown here, which suffices for our present purposes; at the bottom, in capitals, we include examples of phenomena explained by the branch):

Now we can spell out the second additional condition for an embedding to be explanatory, namely, the embedding must be specialized. The basic idea is that among the (non-accidental) generalizations that define the explanans model, at least one must be a special law, i.e. the explanans model cannot be defined exclusively using general guiding-principles, otherwise the embedding becomes empirically trivial. Take for instance Newton’s second law Σ fi = m·d²s/dt² alone. If there is no additional constraint on the kind of functions fi that one can make use of, no matter how crazy these functions are, then, as Díez points out, “with just some mathematical skill we could embed any trajectory” (2014, p. 1425). This holds even for weird trajectories, such as that of the pen in my hand moved at will: with enough purely mathematical smartness one could find a series of functions f1, f2, … whose combination embeds it; or, as a more realistic case, with no restriction on the kind of forces, CM could embed diffraction phenomena. As another example, take what can be considered the general guiding-principle of Ptolemaic astronomy: “For every planetary trajectory there is a deferent and a finite series of nested epicycles (with angular velocities) whose combination fits the trajectory”. As has been proved, any continuous, bounded periodical trajectory may be so embedded (Hanson, 1960), if no additional constraint on the epicycles system is imposed. The moral, then, is that for a nomological embedding, even an ampliative one, to be properly empirically explanatory and not merely an ad-hoc trick, the explanans model must be specified using some special law in the referred sense.
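The triviality worry can be made concrete with a small numerical sketch. It is only an illustration under our own assumptions: the trajectory `arbitrary_trajectory`, the mass value and all function names are hypothetical and do not come from Díez (2014). The sketch reads an ad hoc "force" directly off an arbitrary trajectory and then shows that Newton's second law, with no restriction on the form of the force function, reproduces that trajectory.

```python
import math


def second_derivative(s, t, h=1e-4):
    # Central finite-difference estimate of s''(t).
    return (s(t + h) - 2.0 * s(t) + s(t - h)) / (h * h)


def tailor_force(s, mass):
    # Ad hoc "force law": the force is read off the trajectory itself,
    # with no constraint on its functional form (no special law).
    return lambda t: mass * second_derivative(s, t)


def integrate(force, mass, s0, v0, t_end, dt=1e-3):
    # Velocity-Verlet integration of m * s'' = force(t), starting at t = 0.
    steps = int(round(t_end / dt))
    s, v = s0, v0
    a = force(0.0) / mass
    for k in range(steps):
        t_next = (k + 1) * dt
        s += v * dt + 0.5 * a * dt * dt
        a_next = force(t_next) / mass
        v += 0.5 * (a + a_next) * dt
        a = a_next
    return s


def arbitrary_trajectory(t):
    # Hypothetical "weird" trajectory, chosen only for illustration.
    return t ** 3 - 2.0 * t + math.sin(5.0 * t)


def embedding_error(s, mass=2.0, t_end=1.0, h=1e-4):
    # Distance between the measured position and the position predicted
    # by the second law with the tailored force.
    force = tailor_force(s, mass)
    v0 = (s(h) - s(-h)) / (2.0 * h)  # initial velocity, also read off the data
    predicted = integrate(force, mass, s(0.0), v0, t_end)
    return abs(predicted - s(t_end))


if __name__ == "__main__":
    print(embedding_error(arbitrary_trajectory))
```

The error is small for any smooth trajectory one cares to plug in, which is exactly the problem: the "embedding" succeeds by construction and so cannot fail, whereas a special law (for instance, an inverse-square force) fixes the form of the force in advance and can be refuted by the data.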

These notions of specialization and of special laws may raise two concerns worth clarifying. First, it may seem that ASE involves a tension between “acts of generalization”, providing guiding principles, and “acts of specification”, generating special laws.Footnote 6 It is true that complex, highly unified theories involve these two “acts”, but we believe that they are not in tension but, rather, in intimate collaboration. This collaboration is in fact constitutively essential in unified theories with a wide scope of application, such as Classical Mechanics or Natural Selection (and, as we defend in the next section, Theoretical Ecology). It is because of the act of generalization involved in the guiding principle (Kuhn’s “generalization-sketches”) that the unified theory is one (unified) theory, and not a mere set of disconnected applications. It is because of the acts of specialization, which generate special laws as different “specifications” of the schematic parameters of the guiding principle, that the single theory has a diverse variety of (partially independent, partially dependent) applications, constituting a unified system/theory.

Second, one might then object that, according to ASE, it would only be possible to find bona fide scientific explanations in highly developed unified theories with a net-like structure. This seems counterintuitive for, as we have noted above, there are quite isolated bona fide explanations. The objection, though, is unsound. Special laws do not need the explicit presence of a guiding principle. Firstly, the guiding principle is often not explicitly formulated, but only implicitly assumed (see e.g. Lorenzano, 2006). Secondly, special laws may exist without unified theories. Although the notion of special law is particularly clear by contrast to that of general principle in the framework of a unified theory-net, this does not mean that special laws exist only within the framework of a unified theory-net. The law for harmonic oscillators, for instance, is a special law no matter when it was discovered or later integrated in a bigger, unified theory-net (Díez, 2014).

Although ASE has some unificationist flavor in the complex, theory-net cases, a unifying network, though desirable, is not strictly necessary for explanatoriness. Unification is an additional virtue, but not a precondition for explanatoriness. Additionally, given the ampliativeness condition, it is clear that ASE does not make unification sufficient for explanation either: a unification that is not ampliative can hardly be considered explanatory, as illustrated by the Keplerian and the Galilean examples discussed above. On the other hand, while some theory-nets may be built based on some causal structures, this is not always the case, thus making room for non-causal explanations, as the several counterexamples to causalism found in the literature show (see Ruben, 1990 and Díez, 2014, for a summary). For instance, reductive explanations with identity, or non-reductive examples such as relativistic mechanics, many social sciences, or some biological theories, including the case of the microbiome we study here, provide explanations that can hardly qualify as causal. In the next section we argue that this last case may be elucidated in terms of ampliative, specialized embeddings; thus, ASE would account for its non-causal explanatoriness.

Note that the two conditions added to the core Hempelian nomological expectability idea (ampliativeness and specialization), though substantive and crucial for fixing classical counterexamples to standard Hempelianism, are much weaker than both causality and unification, which, we have claimed, fail to be present in some bona fide explanations. These weak demands added to nomological expectability make ASE, though substantial, a sober empiricist account, aligned with Hempel’s empiricist strictures, without the strong metaphysical commitments that both causalism and unificationism must assume.Footnote 7

5 The explanation of the ecology of microbiome as ampliative, specialized embedding

The ASE account of scientific explanation has been applied to elucidate the explanatory import of many scientific theories, including several biological theories, e.g., Natural Selection (Díez & Lorenzano, 2013, 2015; Ginnobili, 2016); Symbiogenesis (Deulofeu & Suárez, 2018); Biochemistry (Alleva & Federico, 2013); Allosterism (Alleva et al., 2017); Classical Genetics (Díez & Lorenzano, 2022). We argue that ASE can also characterize the explanatory nature of network ecological explanations as they are used in microbiome research, which we have already claimed to be non-mechanistic and unsatisfactorily elucidated by other current accounts of topological explanations (§ 3). While we illustrate our case with the example of Coyte et al.’s (2015) analysis, it can be generalized to any research that realizes its embedding by appealing to certain specializations characteristic of the ecological sciences, and the basic principles for building ecological models, whenever these are constructed to explain natural control, that is, the fluctuations of the sizes of the populations of all the species in an ecosystem.Footnote 8 Our claim, hence, is that Coyte et al.’s explanation, like other explanations about the natural control of the microbiome in Theoretical Ecology, acquires its explanatory power by embedding a specific empirical model under the basic theoretical principles of ecological modelling, introducing new conceptual/ontological machinery connected to the properties of the explananda via special laws/non-accidental regularities.

Note that what follows is our own philosophical reconstruction of how Coyte et al.’s explanation is theoretically grounded. We acknowledge that ecology lacks explicit accepted global principles, and theory in ecology consists in the heuristics used to build the models in each sub-discipline (Sarkar & Elliott-Graves, 2016). But we do not think that from the fact that a discipline lacks explicit theoretical or shared principles, it necessarily lacks implicit ones. These implicit principles are used precisely in the construction of the models, and in their justification in the context of the scientific community. This is what the concept of “guiding principle”, as we use it in this paper, is supposed to capture and, as we will show, it is a key element to elucidate the explanatory character of Coyte et al.’s model (a similar situation happens in other fields, e.g., genetics: Lorenzano, 2007; Díez & Lorenzano, 2022; and symbiogenesis: Deulofeu & Suárez, 2018).

By Theoretical or Mathematical Ecology (TE) we mean a sub-discipline of ecology widely understood (Codling & Dumbrell, 2012), studying how organisms establish a natural control of their population size through their relationship to their physical environment or niche.Footnote 9 One of the main purposes of TE is to explain the dynamical behavior experienced among different groups of species that interact with each other in virtue of sharing the same environment. In contrast with other biological disciplines, such as Population Genetics within Evolutionary Theory, which aims to explain how the distribution of phenotypic and genotypic properties of certain populations of organisms will change over time in virtue of different evolutionary factors (Lloyd 1988/1994, 2021), TE as we conceive it here is not primarily concerned with how these types of properties change. Rather, it studies how different species dynamically change their relative densities in a given environment in virtue of their interactions with the environment and other species that share the same environment. The dynamical changes studied in TE include the study of species extinction, species recovery, or species equilibria or stability, among many others.

To identify TE’s theoretical framework, it is useful to look at the works of Volterra and Lotka, who started its mathematization for prey-predator systems in the 1920s, in what would become known as the Lotka-Volterra equations, or Lotka-Volterra model.

Lotka and Volterra’s work provides the clues for identifying the relevant guiding-principle in TE. Their research is built upon two assumptions that can be considered as the basic components of TE’s guiding-principle: (1) that a species’ relative density in a given population will always tend to increase over time according to an internal growth rate fi that will depend on its reproductive capacity, which is unique to each species; (2) that the species density will be limited (either decreased or enhanced) by its interaction gij with other species in their niche. The appeal to these two assumptions introduces the type of novel ontological or conceptual machinery that confers an ampliative character on the embeddings of data models in TE-theoretical models, in the sense explicated by ASE.

By analogy with the case of Newton’s CM, we can consider that TE is structured around these two principles as a basic nomological component that expresses the basic types of counterfactual dependencies between species:

TEGP

For the relative density of a species Xi in an environment, the net change in its value over time is due to the combination of the internal growth rate of the species fi and the limiting (either decreasing or enhancing) role played by its interaction gij with other species in the environment.

We can formalize this TEGP as follows: “dXi/dt = f(Xi) + g(…)”, where Xi corresponds to the density of the relevant species, f to the intrinsic growth function, and g to the relation-to-environment (including other species) density-affecting function.
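To make the schematic character of TEGP concrete, the following minimal sketch separates the bare schema from one possible way of filling it in. Everything beyond the schema itself is our own assumption for illustration: the function names, the logistic form chosen for f, the pairwise linear form chosen for g, the simple Euler integration and the parameter values are hypothetical and are not taken from Coyte et al. (2015).

```python
def tegp_rate(i, densities, f, g):
    # Schematic TEGP: dX_i/dt = f(X_i) + g(...).
    # The forms of f and g are deliberately left open here.
    return f(densities[i]) + g(i, densities)


def logistic_growth(rate, capacity):
    # One assumed form for f: growth limited by a carrying capacity.
    return lambda x: rate * x * (1.0 - x / capacity)


def pairwise_interaction(a):
    # One assumed form for g: a[i][j] is the per-capita effect of
    # species j on species i (negative values model competition).
    def g(i, xs):
        return xs[i] * sum(a[i][j] * xs[j] for j in range(len(xs)) if j != i)
    return g


def simulate(x0, f, g, dt=0.01, steps=5000):
    # Forward-Euler integration of the filled-in schema.
    xs = list(x0)
    for _ in range(steps):
        rates = [tegp_rate(i, xs, f, g) for i in range(len(xs))]
        xs = [max(x + dt * r, 0.0) for x, r in zip(xs, rates)]
    return xs


if __name__ == "__main__":
    growth = logistic_growth(rate=1.0, capacity=1.0)
    competition = pairwise_interaction([[0.0, -0.5], [-0.5, 0.0]])
    print(simulate([0.1, 0.4], growth, competition))
```

With these assumed parameters the two competing species settle close to a density of 2/3 each, the coexistence equilibrium of this particular filling-in. The point of the sketch is that `tegp_rate` on its own predicts nothing; only once `f` and `g` are given definite forms does the model yield densities that measured data could contradict.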

Note that, as it is formulated, the TEGP is empirically almost empty, for, as occurred in the case of CMGP, any change in species density (even one produced by the action of a chemical that drives the species to extinction) could be embedded in TEGP alone, provided the scientists have enough skill to assign ad hoc values to fi and formulate an adequate set of functions g1, g2, …, gn for each moment in the process of extinction. To become empirically meaningful, TEGP needs to be constrained for specific applications to different empirical systems. These constraints come through TEGP specializations, which specify the mathematical form of the functions involved depending on different factors of interest for ecologists: whether species growth is exponential or logistically limited by the carrying capacity of the niche, the number of species interactions (including the case where there is only constrained/unconstrained growth with no species interaction), the type of inter-species functional response (Holling types 1, 2, or 3), the predominant nature of the interactions between species (competitive, cooperative, mutualistic, etc.), and so on. Note that the historical development of all these specializations results from the combination of theoretical knowledge about the ecological systems plus the empirical knowledge about how some concrete ecological systems behave given their structure; as in CM, the theory-net cannot be understood as a deductive system. The pattern of specialization of TEGP can be presented in a theory-net hierarchical structure as follows (for the sake of simplicity, we make explicit the relevant special laws only in some of the branches):

Coyte et al.’s (2015) explanation is, we claim, (implicitly) based on this theory-net, and it simply consists in a particular application of TE to explain a specific data-set by making it expectable in the ASE form in a terminal node, as a specialization of the competitive case within the TE theory-net. The data set that is embedded includes empirical observations about the stability behaviour of the microbiome, despite the large number of species composing it (S = 1000) and the presence of constant perturbations due to constant food intake, seasonality, or other types of factors that would alter the initial species densities. To make this data set expectable, the way of specializing the theoretical model needs to be appropriate and in agreement with the tenets of TE. Coyte et al. need to computationally investigate which specialization makes that data set expectable for a system with the properties of the microbiome, and which specializations would not make it expectable because the system would collapse (i.e. one or more species would go extinct) (see Sect. 2, as well as Deulofeu et al. (2021) for an extremely detailed analysis of the process and its philosophical implications).

In summary, thus, the process of discovering an explanans in ecological explanations goes hand in hand with the process of making the explanandum expectable were the specialized explanans correct. Note thus that this points to an immediate relationship between the theory-net system that we have presented here and how biological network-like explanations are built: biological network-like explanations are primarily built on the basis of empirical and theoretical knowledge about the empirical system, whereas the theory-net system is mostly built theoretically. However, empirical knowledge will determine how the theory-net system must develop over time: which specializations are feasible, which are discarded, how the theory-net must continue, etc. The specializations of a theory-net, thus, reflect the empirical development of the discipline and are always specific to it. In the case of microbial ecology, the theory-net results not just from the development of general network-like systems, but from the historical development of scientists’ knowledge of the biological-like system.

In Coyte et al.’s work, a network with a high degree of interspecies competition and moderate to high interaction strength is stable. However, adding cooperative or mutualistic interacting species to the network renders it unstable due to the generation of feedback loops. Hence, Coyte et al. (2015) conclude that the microbiome is stable because the proportion of competitive interspecies interactions is high. Competition, hence, explains stability. And ASE elucidates the way in which Coyte et al.’s explanans gains its explanatory power. Explanatoriness is not gained merely through a complex computational calculus, but through the way in which the computational calculus has an ampliative character and a specific form within the TE framework as a special law, i.e., a non-accidental regularity with counterfactual import that introduces, as new explanatory machinery, specific internal growth and external interactions with the environment.
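The destabilizing role of positive feedback can be illustrated with a deliberately simplified toy system. This is not Coyte et al.’s model, data or parameters: the uniform interaction strength, the ten-species community, the self-regulation value and all function names are assumptions made only for this sketch. It linearizes a community around an equilibrium and follows a small perturbation over time, once with uniformly competitive interactions and once with uniformly cooperative ones.

```python
def community_matrix(n, self_regulation, interaction):
    # Toy linearized community: every species damps itself (diagonal)
    # and affects every other species with the same strength
    # (negative = competition, positive = cooperation).
    return [[-self_regulation if i == j else interaction for j in range(n)]
            for i in range(n)]


def perturbation_size_after(matrix, x0, dt=0.01, steps=2000):
    # Forward-Euler integration of dx/dt = M x for a small perturbation x
    # around equilibrium; returns the largest remaining deviation.
    x = list(x0)
    n = len(x)
    for _ in range(steps):
        dx = [sum(matrix[i][j] * x[j] for j in range(n)) for i in range(n)]
        x = [xi + dt * di for xi, di in zip(x, dx)]
    return max(abs(v) for v in x)


if __name__ == "__main__":
    start = [0.01 * (i + 1) for i in range(10)]
    competitive = community_matrix(10, 1.0, -0.2)
    cooperative = community_matrix(10, 1.0, 0.2)
    print("competition:", perturbation_size_after(competitive, start))
    print("cooperation:", perturbation_size_after(cooperative, start))
```

In this toy setting the perturbation dies out under competition and grows under cooperation, because with uniform interaction strength a and self-regulation s the leading eigenvalue of the matrix is -s + (n - 1)·a for a > 0 but only -s + |a| for a < 0. The sketch shows only this sign effect; it says nothing about the mixed, randomly structured networks that Coyte et al. analyze.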

Contrary to Huneman’s account, ASE elucidates the specific ecological nature of Coyte et al.’s-like explanations by showing how the type of topological constraints derived from the LSA performed in system (1) are embedded in an ecological theoretical network, rather than in an economical or geographic theoretical network. Particularly, we show that the topological properties make the explanandum expectable because the topological structure being instantiated involves at the same time an ampliative specialized non-accidental regularity that implies stability under the concrete ecological conditions of this case study.

On the other hand, our account shares with Kostic’s account the commitment to the notion that ecological explanations, as a species of topological explanations, are counterfactual. But, in contrast with Kostic, who reduces the application of a specific mathematical structure to an empirical phenomenon to a pragmatic choice by the group of scientists guided by their question/perspective, our account allows elucidating why such a choice is explanatory in the present case, whereas it is not in other cases of ecological modelling. Ecologists do not choose to build a network-based explanation of a phenomenon based uniquely on (unconstrained) pragmatic considerations. They proceed as they do by choosing a specific ampliative equation that specifies TEGP general parameters for the case in point. The pragmatic choice is then constrained by the formal possibilities that TEGP establishes for ecological research on specific ecosystems, so that all these possibilities must at least specify the two new parameters related in a specific manner. Coyte et al.’s choice is a result of their knowledge of some basic principles of TE plus their empirical knowledge of the microbiome. The explanatory import of their analysis derives from these specific embedding constraints, and not merely from an unconstrained pragmatic choice of a specific equation as the one that answers their research question. Contrary to Kostic’s account, ASE elucidates why the application of a mathematical equation is explanatory, as opposed to merely descriptive, heuristic or phenomenological, in the present case.

6 Conclusion

Coyte et al.’s network ecological explanation of the stability behavior of the microbiome is commonly recognized in current biology as a paradigmatic example of how ecological explanations of the microbiome proceed. We have argued that such a paradigmatic biological explanation is well elucidated neither by unificationist nor by causalist accounts, including among the latter the recent new mechanistic approach. Nor is it correctly understood by alternative accounts, such as Huneman’s or Kostic’s, the conclusion being that current philosophy of biology lacks a satisfactory philosophical elucidation of Coyte et al.’s network explanation and of other similar network explanations in ecology. In this article we have proposed to elucidate Coyte et al.’s and other explanations in ecology in terms of a new, neo-Hempelian account of scientific explanation as ampliative, specialized embedding (ASE), already successfully applied to other biological explanations.

ASE’s main idea is a modification of Hempel’s nomological expectability account: to explain a phenomenon consists in embedding the explanandum data model in a theoretical model defined by certain non-accidental, nomological regularities. For the embedding to be explanatory it must satisfy two crucial new conditions: the explanans model must introduce new (ontological/conceptual) machinery, and the nomological regularities that define the theoretical model cannot simply be general, schematic guiding-principles. ASE does not require the ampliative components to have necessarily a causal nature, although they may have such a nature. It does not require unification either, for there may be ampliative embeddings that use special laws not yet integrated in a wholly complex unified theory-net. But unification, when present, may provide additional explanatory value (see also Moreno and Suárez, 2020).

We have shown how ASE satisfactorily elucidates Coyte et al.’s as well as other network explanations in ecology. All such explanations have in common that the specific laws defining the explanans models are particular specializations/specifications of a general, schematic guiding-principle that indicates only the kind of things one has to look for in ecological explanations: every population/species density in a niche is explained by a specific combination of two general factors, an inner growth function and a relational affection by the environment (including other interacting populations/species). Depending on the specific concretizations of both parameters and of their combination, ecologists provide particular explanations for different concrete ecological phenomena, such as the stability of the microbiome, the predator-prey equilibrium, or a population’s rate of growth. It is our claim that this correctly elucidates the explanatory import of such explanatory practices in ecological research, and thus that ASE fares well as a philosophical elucidation of explanations in ecology, particularly vis-à-vis other existing accounts. While this paper focuses on the use of network analysis in TE, we believe the account can also be applied to other, new cases, including Evolutionary Biology (Huitzil et al., 2018, 2020) and Dynamic Systems Theory (Fagan, 2016; Green, 2017).
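
The relation between the schematic guiding-principle and its specializations can be sketched as follows. The notation is an illustrative assumption introduced only for this sketch ($N_i$ for the density of population $i$, $g_i$ for its inner growth function, $e_i$ for the relational affection by the environment $E$ and the other populations, $\Phi$ for their unspecified combination); it is not taken from Coyte et al. The two specializations are written in the standard textbook form of the Lotka-Volterra predator-prey equations and of a generalized Lotka-Volterra network model of the kind used in microbiome ecology.

```latex
% Schematic guiding-principle (illustrative notation, assumed for this sketch):
% the density N_i combines an inner growth term g_i with a relational,
% environmental term e_i; the form of the combination \Phi is left open.
\frac{dN_i}{dt} = \Phi\bigl(g_i(N_i),\; e_i(N_1,\dots,N_n,\,E)\bigr)

% Specialization 1: Lotka-Volterra predator-prey model
% (N_1 = prey, N_2 = predator; \alpha, \beta, \gamma, \delta > 0).
\frac{dN_1}{dt} = \alpha N_1 - \beta N_1 N_2,
\qquad
\frac{dN_2}{dt} = \delta N_1 N_2 - \gamma N_2

% Specialization 2: generalized Lotka-Volterra network model
% (r_i = intrinsic growth rate, s_i = self-regulation,
%  a_{ij} = effect of population j on population i).
\frac{dN_i}{dt} = N_i\Bigl(r_i - s_i N_i + \sum_{j \neq i} a_{ij} N_j\Bigr)
```

On this reading, the first line by itself fixes no testable relation; each specialization becomes explanatory only once $g_i$, $e_i$ and their combination are given a definite form for the ecosystem under study.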

Notes

We do not aim to provide any argument against new mechanism beyond those already discussed in Deulofeu et al. (2021). This paragraph summarizes their argument.

This section introduces Díez’s account but does not discuss its alleged general applicability (for a sustained defence see Díez, 2014). Since we are here interested only in showing its applicability in the specific case of the ecology of the microbiome, we do not need to commit to Díez’s generality goals.

For a specific kind of explanation, namely reductive explanations with identity (e.g. “temperature = mean kinetic energy”), the choice is not optional: because of the identity, in these cases the explanans does not introduce new entities but just different conceptualizations of the same entities.

We emphasize “real” because we refer to real positions as the explanandum. If the explanandum were “apparent” positions, then Kepler’s laws would be explanatory, “real trajectories” being the ampliative element introduced by the explanans with respect to the explanandum “apparent positions”.

“[G]eneralizations [like f = ma…] are not so much generalizations as generalization-sketches, schematic forms whose detailed symbolic expression varies from one application to the next. For the problem of free fall, f = ma becomes mg = m d²s/dt². For the simple pendulum, it becomes mg Sin θ = – m d²s/dt². For coupled harmonic oscillators it becomes two equations, the first of which may be written m₁ d²s₁/dt² + k₁s₁ = k₂(d + s₂ – s₁). More interesting mechanical problems, for example the motion of a gyroscope, would display still greater disparity between f = ma and the actual symbolic generalization to which logic and mathematics are applied.” (Kuhn, 1974, p. 465).

We thank an anonymous reviewer for raising this concern. The generalization/specification aspects discussed here are also related to the “specific ecological” dimension of Coyte et al.’s explanation, which we claimed is lost in both Huneman’s and Kostic’s accounts (Sect. 3).

Although unificationism initially aimed at being as metaphysically sober as Hempel’s account, it was soon noticed that the notion of simplicity upon which unificationism is construed is not as metaphysically innocent as intended, for it depends on the primitive vocabulary chosen and thus, on pain of relativism, on a metaphysically burdened notion of “natural kind predicates” (cf. Lewis, 1983; Díez, 2014; see Cohen & Callender, 2009 for an empiricist approximation to the problem).

We are aware that ecology involves more questions than those about natural control, including coexistence, ecosystem functioning, etc. Yet here we confine ourselves to the specific branch of ecological research on natural control exemplified by Coyte et al.’s explanation. We do not claim that our theses here also apply to other parts of ecology. This also explains why we connect it to Lotka-Volterra modelling later, insofar as Lotka and Volterra did pioneering work in this area.

References

Alleva, K., & Federico, L. (2013). A structuralist analysis of Hill’s theories: An elucidation of explanation in Biochemistry. Scientiae Studia, 11(2), 333–353.

Alleva, K., Díez, J., & Federico, L. (2017). Models, theory structure and mechanisms in biochemistry: The case of allosterism. Studies in History and Philosophy of Science Part C, 63, 1–14.

Ankeny, R. (2000). Fashioning descriptive models in biology. Philosophy of Science, 67(3), 272.

Balzer, W., & Moulines, C. (1981). Die Grundstruktur der klassischen Partikelmechanik und ihre Spezialisierungen. Zeitschrift für Naturforschung A, 36(6), 600–608.

Balzer, W., & Moulines, C. U. (1998). Structuralist theory of science: Paradigmatic reconstructions. Rodopi.

Balzer, W., Moulines, C. U., & Sneed, J. D. (1987). An architectonic for science: The structuralist program (Vol. 186). Springer Science & Business Media.

Bartelborth, T. (2002). Explanatory unification. Synthese, 130(1), 91–107.

Beatty, J. (1995). The Evolutionary Contingency Thesis. In G. Wolters & J. C. Lennox (Eds.), Concepts, Theories, and Rationality in the Biological Sciences (pp. 45–81). University of Pittsburgh Press.

Bechtel, W. (2019). Analysing Network Models to make discoveries about Biological Mechanisms. The British Journal for the Philosophy of Science, 70(2), 459–484.

Bechtel, W. (2020). Hierarchy and levels: Analysing networks to study mechanisms in molecular biology. Philosophical Transactions of the Royal Society B: Biological Sciences, 375(1796), 20190320. https://doi.org/10.1098/rstb.2019.0320.

Bechtel, W., & Abrahamsen, A. (2005). Explanation: A mechanist alternative. Studies in History and Philosophy of Science Part C, 36(2), 421–441.

Bechtel, W., & Richardson, R. C. (1993). Discovering complexity: Decomposition and localization as strategies in Scientific Research. The MIT Press.

Braillard, P. A., & Malaterre, C. (2015). Explanation in Biology: An introduction. In P. A. Braillard, & C. Malaterre (Eds.), Explanation in Biology: An Enquiry into the diversity of explanatory patterns in the Life Sciences (pp. 1–28). Springer.

Brigandt, I. (2015). Evolutionary developmental biology and the limits of philosophical accounts of mechanistic explanation. In P. A. Braillard, & C. Malaterre (Eds.), Explanation in biology (pp. 135–173). Springer.

Brigandt, I., Green, S., & O’Malley, M. (2017). Systems biology and mechanistic explanation. In S. S. Glennan, & P. Illari (Eds.), The Routledge Handbook of Mechanisms and Mechanical Philosophy (pp. 362–374). Routledge.

Cartwright, N. (1983). How the Laws of Physics Lie. Oxford University Press.

Chen, L., Wang, R. S., & Zhang, X. S. (2009). Biomolecular networks: Methods and applications in systems biology (Vol. 10). John Wiley & Sons.

Codling, E. A., & Dumbrell, A. J. (2012). Mathematical and theoretical ecology: Linking models with ecological processes. Interface Focus, 2, 144–149.

Cohen, J., & Callender, C. (2009). Special sciences, conspiracy and the better best system account of lawhood. Erkenntnis, 73, 427–447.

Colyvan, M., Linquist, S., Grey, W., Griffiths, P., Odenbaugh, J., & Possingham, H. P. (2009). Philosophical issues in ecology: Recent trends and future directions. Ecology and Society, 14(2), 22.

Coyte, K. Z., Schluter, J., & Foster, K. R. (2015). The ecology of the microbiome: Networks, competition, and stability. Science, 350(6261), 663.

Craver, C. F., & Darden, L. (2013). In Search of Mechanisms: Discoveries across the Life Sciences. University of Chicago Press.

Deulofeu, R., & Suárez, J. (2018). When mechanisms are not enough: The origin of Eukaryotes and Scientific Explanation. In A. Christian, D. Hommen, N. Retzlaff, & G. Schurz (Eds.), Philosophy of Science: Between the Natural Sciences, the Social Sciences, and the Humanities (pp. 95–115). Springer.

Deulofeu, R., Suárez, J., & Pérez-Cervera, A. (2021). Explaining the behaviour of random ecological networks: The stability of the microbiome as a case of integrative pluralism. Synthese, 198, 2003–2025.

Díez, J. A. (2014). Scientific explanation as ampliative, specialized embedding: A neo-hempelian account. Erkenntnis, 79, 1413–1443.

Díez, J. A., & Lorenzano, P. (2013). Who got what wrong? Fodor and Piattelli on Darwin: Guiding-principles and explanatory models in natural selection. Erkenntnis, 78(5), 1143–1175.

Díez, J. A., & Lorenzano, P. (2015). Are natural selection explanatory models a Priori? Biology and Philosophy, 30(6), 787–809.

Díez, J. A., & Lorenzano, P. (2022). Scientific explanation as ampliative, specialized embedding: The case of classical genetics. Synthese, 200(6), 1–25.

Dupré, J. (2013). I—John Dupré: Living causes. Aristotelian Society Supplementary Volume, 87(1), 19–37.

Fagan, M. (2016). Stem cells and system models: Clashing views of explanation. Synthese, 193, 873–907.

Forge, J. (2002). Reflections on structuralism and scientific explanation. Synthese, 130(1), 109–121.

Findl, J., & Suárez, J. (2021). Descriptive understanding in COVID-19 modelling. History and Philosophy of the Life Sciences, 43, 107.

Fox Keller, E. (2005). Revisiting scale-free networks. Bioessays, 27(10), 1060–1068.

Gelfert, A. (2016). How to do Science with Models: A philosophical primer. Springer.

Ginnobili, S. (2016). Missing concepts in natural selection theory reconstructions. History and Philosophy of the Life Sciences, 38, 1–33.

Glennan, S. (1996). Mechanisms and the nature of causation. Erkenntnis, 44(1), 49–71. https://doi.org/10.1007/BF00172853.

Glennan, S. (2002). Rethinking mechanistic explanation. Philosophy of Science, 69(S3), S342–S353.

Godfrey-Smith, P. (2006). The strategy of model-based science. Biology and Philosophy, 21(5), 725–740.

Green, S. (2016). Explanatory pluralism in biology. Studies in History and Philosophy of Science Part C, 59, 154–157.

Green, S. (2017). Philosophy of systems and synthetic biology. In E. N. Zalta (Ed.), The Stanford Encyclopedia of Philosophy. https://plato.stanford.edu/archives/sum2017/entries/systems-synthetic-biology/.

Green, S. (2018). Scale dependency and downward causation in biology. Philosophy of Science, 85(5), 998–1011.

Green, S. (2021). Cancer beyond genetics: On the practical implications of downward causation. In D. S. Brooks, J. DiFrisco, & W. C. Wimsatt (Eds.), Levels of organization in the biological sciences. The MIT Press.

Green, S., & Jones, N. (2016). Constraint-based reasoning for search and explanation: Strategies for understanding variation and patterns in Biology. Dialectica, 70(3), 343–374. https://doi.org/10.1111/1746-8361.12145.

Green, S., Fagan, M., & Jaeger, J. (2015). Explanatory integration challenges in Evolutionary Systems Biology. Biological Theory, 10(1), 18–35.

Green, S., Şerban, M., Scholl, R., Jones, N., Brigandt, I., & Bechtel, W. (2018). Network analyses in systems biology: New strategies for dealing with biological complexity. Synthese, 195(4), 1751–1777. https://doi.org/10.1007/s11229-016-1307-6.

Hanson, N. R. (1960). The mathematical power of epicyclical astronomy. Isis, 51(2), 150–158.

Hempel, C. (1965). Aspects of scientific explanation, and other essays in the philosophy of Science. The Free Press.

Hempel, C., & Oppenheim, P. (1948). Studies in the logic of explanation. Philosophy of Science, 15(2), 135–175.

Huitzil, S., Sandoval-Motta, S., Frank, A., & Aldana, M. (2018). Modeling the role of the microbiome in evolution. Frontiers in Physiology, 9, 1836.

Huitzil, S., Sandoval-Motta, S., Frank, A., & Aldana, M. (2020). Phenotype heritability in holobionts: An evolutionary model. Symbiosis: Cellular, Molecular, Medical and Evolutionary aspects (pp. 199–223). Springer.

Huneman, P. (2010). Topological explanations and robustness in biological sciences. Synthese, 177(2), 213–245.

Huneman, P. (2018a). Diversifying the picture of explanations in biological sciences: Ways of combining topology with mechanisms. Synthese, 195(1), 115–146.

Huneman, P. (2018b). Outlines of a theory of structural explanations. Philosophical Studies, 175(3), 665–702. https://doi.org/10.1007/s11098-017-0887-4.

Issad, T., & Malaterre, C. (2015). Are dynamic mechanistic explanations still mechanistic? In P. A. Braillard, & C. Malaterre (Eds.), Explanation in Biology (pp. 265–292). Springer.

Jones, N. (2014). Bowtie Structures, Pathway Diagrams, and topological explanation. Erkenntnis, 79(5), 1135–1155.

Kaiser, M. I. (2015). Reductive explanation in the Biological Sciences. Springer Cham.

Kitcher, P. (1989). Explanatory unification and the causal structure of the world. In P. Kitcher, & W. Salmon (Eds.), Scientific explanation (pp. 410–505). University of Minnesota Press.

Kitcher, P. (1993). The advancement of science. Oxford University Press.

Kostić, D. (2019). Minimal structure explanations, scientific understanding and explanatory depth. Perspectives on Science, 27(1), 48–67.

Kostić, D. (2020). General theory of topological explanations and explanatory asymmetry. Philosophical Transactions of the Royal Society B: Biological Sciences, 375(1796), 20190321.

Křivan, V. (2008). Prey–predator models. In S. E. Jorgensen, & B. D. Fath (Eds.), Encyclopedia of Ecology (Vol. 4, pp. 2929–2940). Elsevier.

Kuhn, T. S. (1974). Second thoughts on paradigms. The Structure of Scientific Theories, 2, 459–482.

Layeghifard, M., Hwang, D. M., & Guttman, D. S. (2017). Disentangling interactions in the Microbiome: A Network Perspective. Trends in Microbiology, 25(3), 217–228.

Lewis, D. (1983). New Work for a theory of universals. Australasian Journal of Philosophy, 61, 343–377.

Lewis, D. (1986). Causal explanation. Philosophical papers II (pp. 214–240). Oxford University Press.

Lloyd, E. A. (2021). Adaptation. Cambridge University Press.

Lloyd, E. A. (1988/1992). The structure and confirmation of Evolutionary Theory. Princeton University Press.

Lorenzano, P. (2006). Fundamental laws and laws of biology. In N. Karl-Georg (Ed.), Philosophie der Wissenschaft – Wissenschaft der Philosophie (pp. 129–155). Mentis. Dordrecht.

Lorenzano, P. (2007). The influence of genetics on philosophy of science: Classical genetics and the structuralist view of theories. In A. Fagot-Largeault, S. Rahman, & J. M. Torres (Eds.), The influence of genetics on contemporary thinking (pp. 97–114). Springer.

Machamer, P., Darden, L., & Craver, C. F. (2000). Thinking about mechanisms. Philosophy of Science, 67(1), 1–25.

Mason, O., & Verwoerd, M. (2007). Graph theory and networks in biology. IET Systems Biology, 1(2), 89–119.

May, R. M. (1972). Will a large complex system be stable? Nature, 238, 413–414.

McManus, F. (2012). Development and mechanistic explanation. Studies in History and Philosophy of Science Part C, 43(2), 532–541.

Mitchell, S. D. (1997). Pragmatic laws. Philosophy of Science, 64, S468–S479.