Abstract

The assessment of prevalence on regional levels is an important element of public health reporting. Since regional prevalence is rarely collected in registers, corresponding figures are often estimated via small area estimation using suitable health data. However, such data are frequently subject to uncertainty as values have been estimated from surveys. In that case, the method for prevalence estimation must explicitly account for data uncertainty to allow for reliable results. This can be achieved via measurement error models that introduce distribution assumptions on the noisy data. However, these methods usually require target and explanatory variable errors to be independent. This does not hold when data for both have been estimated from the same survey, which is sometimes the case in official statistics. If not accounted for, prevalence estimates can be severely biased. We propose a new measurement error model for regional prevalence estimation that is suitable for settings where target and explanatory variable errors are dependent. We derive empirical best predictors and demonstrate mean-squared error estimation. A maximum likelihood approach for model parameter estimation is presented. Simulation experiments are conducted to prove the effectiveness of the method. An application to regional hypertension prevalence estimation in Germany is provided.

Similar content being viewed by others

1 Introduction

In recent years, the demand for detailed population health indicators has increased considerably. Policy-makers and researchers have realized the importance of monitoring disease frequencies on geographic regions and demographic groups over time. These insights mark important elements of public health reporting and are key to the provision of comprehensive health care programs. However, regional prevalence is rarely collected in registers due to confidentiality restrictions. Instead, such figures are often estimated via methods of small area estimation (SAE) using suitable health data. See Rao and Molina (2015) and Jiang and Lahiri (2006), or Pfeffermann (2002) and Pfeffermann (2013) for comprehensive overviews on SAE. Health data records are used as auxiliary variables in regression models that generate predictions for domain characteristics of interest. This approach allows for efficient estimates when the model has sufficient explanatory power (Münnich et al. 2019; Burgard et al. 2020b). Some recent papers in small area estimation for regional estimation are Trandafir et al. (2020), Bernal et al. (2020), Mills et al. (2020) and Saeedi et al. (2019), as well as Adin et al. (2019).

Due to the confidentiality of the data and the sensitive nature of health variables, area-level models are good options for predicting regional prevalence. Fay and Herriot (1979) proposed the original area-level model, which regresses regional direct estimates of target variables on covariates using cross-sectional data. This model was later extended and applied to multivariate data by Benavent and Morales (2016), Arima et al. (2017), Ubaidillah et al. (2019), Burgard et al. (2021) and Esteban et al. (2020). Since analyzing the development of domain characteristics over time is often relevant in empirical studies, generalizations of the area-level model to the temporal context soon appeared. Among them, the papers of Pfeffermann and Burck (1990), Rao and Yu (1994), Ghosh et al. (1996), Datta et al. (1999), You and Rao (2000) and Datta et al. (2002) provided generalizations of this model by considering time-series data across domains, multi-level geographic segmentation, Bayesian modeling and robust approaches. More recently, Esteban et al. (2012), Marhuenda et al. (2013), Morales et al. (2015), Jedrejczak and Kubacki (2017), Boubeta et al. (2017) and Benavent and Morales (2021) applied temporal area-level models to poverty mapping.

In SAE models, health variables are often subject to uncertainty. Their values are typically estimated from periodic large-scale health surveys, such as the National Health and Nutrition Examination Survey (NHANES) in the US (National Center for Health Statistics 2020) or Gesundheit in Deutschland aktuell (GEDA) in Germany (Robert Koch Institute 2012; Lange et al. 2015). Accordingly, the data records are not accurate, but associated with errors. In that case, the regression models must explicitly account for data uncertainty to allow for reliable results. These issues motivated researchers over the years to develop SAE methods that allow for uncertain data in both dependent and explanatory variables. Such SAE methods are often called measurement error models. The basic idea of these methods is to introduce distribution assumptions on the errors and make statistical inference under this premise. On that note, Ybarra and Lohr (2008), Burgard et al. (2020a) and Burgard et al. (2021) provided extensions of the area-level model that explicitly account for explanatory variable errors. A related approach was developed by Torabi et al. (2009) for unit-level observations. However, despite accounting for errors on both target and explanatory variables, these methods are still limited in their applicability. They require the target variable errors to be independent from the explanatory variable errors.

This condition is not fulfilled when some explanatory variables are estimated from the same survey as the target variable, which may occur in practice. That is to say, if we do not have enough data from administrative records, the alternative of using data from other surveys or from the same survey allows proposing new model-based estimates that may improve the direct estimates.

For prevalence estimation, the above-described scenario is relevant. Explanatory variables for health mapping are often health-related indicators as well. Since surveys like NHANES or GEDA are primary data sources for public health reporting in their respective countries, it may happen that both target variable and some explanatory variables are estimated from them. If the resulting dependencies between target and explanatory variable errors are ignored, prevalence estimates based on these records may be biased or inefficient.

We propose a new measurement error area-level model for regional prevalence estimation that allows for dependent errors on target and explanatory variables. With this feature, it is the first SAE method that is suitable for settings where data records for both model components have been estimated on a single survey. The model is an extension of the Rao–Yu model proposed by Rao and Yu (1994), which implies that it allows for either regional time-series data (domains and periods), or nested geographic segmentation (domains and subdomains). Thus, we refer to it as the measurement error Rao–Yu (MERY) model. We use it to estimate the development of regional prevalence over time based on uncertain data with dependent errors. Yet, we also show that the MERY model contains many existing measurement error SAE models as particular or limits cases, which makes it applicable to a wide range of empirical settings.

We first present a detailed description of the MERY model. A special emphasis is placed on the conditional component distributions, as the main innovation of the method is to allow for dependent errors on the variables. Next, the best predictors (BPs) and empirical best predictors (EBPs) of the domain characteristics under the model are derived. We subsequently address mean-squared error (MSE) estimation. The MSE of the BP is derived analytically. We further present a set of parametric bootstrap estimators for the MSE of the EBP. A maximum likelihood (ML) approach based on the Fisher scoring algorithm (Jennrich and Sampson 1976; Longford 1987) for model parameter estimation is stated. The methodology is tested in simulation experiments to demonstrate its effectiveness. An empirical application to temporal health mapping in Germany is provided. We estimate the hypertension prevalence for Nielsen regions crossed by age groups (domains), over three periods, using survey estimates from GEDA for the years 2009, 2010 and 2012 (Robert Koch Institute 2013, 2014a, b). We remark that the domains do not correspond to any geographic segmentation in administrative health records of German statistical offices. Therefore, it is not possible to apply the standard SAE methodology based on area-level models, where the auxiliary variables are taken from out-of-sample data sources. In addition, a quarter of the domains have a sample size of less than 271 and the minimum sample size is equal to 51. Therefore, the estimation of the hypertension prevalence by domains and time periods can be treated by using SAE statistical methodology.

We show that applying the new MERY model in this situation allows for considerable efficiency gains relative to direct estimation.

The remainder of the paper is organized as follows. Section 2 introduces the MERY model and derives the BPs. Section 3 states the MSE of the BP and gives parametric bootstrap estimators for the MSE of the EBP. Section 4 addresses model parameter estimation. Section 5 contains the simulation experiments. Section 6 presents the empirical application to temporal health mapping. Section 7 closes with some conclusive remarks. A supplemental material file with five appendices is provided. Appendix 1 contains the proof for the main theorem. Appendix 2 presents several MSE calculations. Appendix 3 derives the Fisher information matrix required for the ML approach for model parameter estimation. Finally, Appendices 4 and 5 show additional results for simulation and application.

2 Model

2.1 Formulation

Let U be a finite population that is segmented into D disjoint sets \(U_d\), \(d=1,\ldots ,D\). Suppose that every set \(U_d\) is further segmented into disjoint subsets \(U_{dt}\), \(d=1,\ldots ,D\), \(t=1,\ldots ,m_{d}\). For ease of exposition, we assume that the number of subsets per set is constant with \(m_d=T\), \(d=1,\ldots ,D\). This is to say, \(U = \cup _{d=1}^D \cup _{t=1}^{\mathrm{T}} U_{dt}\). In practice, the structure may correspond to either a population with a two-stage hierarchical geographic segmentation (domain–subdomain), or a population with geographic segmentation that is observed over several time periods (domain–period). This section introduces the MERY model by using the domain–subdomain terminology. The domain–period interpretation is straightforward. Let \(\mu _{dt}\) be a characteristic of interest in set d and subset t, for instance the mean or the total of some target variable. The objective is to estimate \(\mu _{dt}\) for all \(d=1,\ldots ,D\), \(t=1,\ldots ,T\). Suppose that for each \(\mu _{dt}\) a corresponding direct estimator \(y_{dt}\) is available from a target survey \(S \subset U\). By analogy with population structure, we assume that \(S = \cup _{d=1}^D \cup _{t=1}^{\mathrm{T}} S_{dt}\) with \(S_{dt} \subset U_{dt}\) and \(|S_{dt}|> 0\), \(d=1,\ldots ,D\), \(t=1,\ldots ,T\).

The MERY model is composed of three stages. In the first stage a model, called sampling model, is used to represent the sampling error of direct estimators. The sampling model indicates that direct estimators, \(y_{dt}\)’s, are unbiased and can be expressed as

where the sampling errors, \(e_{dt}\)’s, are independent and normally distributed with known variances. This is to say, \(e_{dt}\sim N(0,\sigma _{edt}^2)\), where \(\sigma _{edt}^2\) is known. In the second stage, called linking model, the true domain characteristics, \(\mu _{dt}\)’s, are assumed to vary linearly with a number p of domain-level auxiliary variables. The linking model is

where \({\tilde{\varvec{x}}}_{0,dt}\) and \({\tilde{\varvec{x}}}_{1,dt}\) are a \(1\times p_0\) and \(1\times p_1\) row vectors, respectively, containing the true aggregated (subdomain-level) values of \(p=p_0+p_1\) auxiliary variables for domain d. The terms \(\varvec{\beta }_0\) and \(\varvec{\beta }_1\) are the corresponding column vectors of regression coefficients. The \(u_{1,d}\)’ s and \(u_{2,dt}\)’s are model errors (domain and subdomain random effects), assumed to be independent and identically distributed (i.i.d.) from \(N(0,\sigma _1^2)\) and \(N(0,\sigma _2^2)\), respectively, with variance \(\sigma _1^2\) and \(\sigma _2^2\) unknown, and independent of the \(e_{dt}\)’s. We assume that \({\tilde{\varvec{x}}}_{0,dt}\) is known and equal to \(\varvec{x}_{0,dt}\), \(d=1,\ldots ,D\), \(t=1,\ldots ,T\). Further, we assume that the \({\tilde{\varvec{x}}}_{1,dt}\)’s are unknown random vectors that are predicted from the same survey as \(\mu _{dt}\) or from different surveys. This is to say, the direct estimators \(y_{dt}\) and \(\varvec{x}_{1,dt}\), of \(\mu _{dt}\) and \({\tilde{\varvec{x}}}_{1,dt}\), respectively, can be calculated from the same or from a different survey sample. In the third stage, we consider the measurement error model

where \(\varvec{x}_{1,dt}\) is a row vector containing the direct estimators of \({\tilde{\varvec{x}}}_{1,dt}\). The term \(\varvec{v}_{dt}\) is a column vector of random measurement errors, \(\varvec{\Sigma }_{vdt}\) is the known covariance matrix of \(\varvec{v}_{dt}\) (typically taken as a design-based covariance matrix estimate) and \(\varvec{v}_{11},\ldots ,\varvec{v}_{DT}\), \(\varvec{x}_{1,11},\ldots ,\varvec{x}_{1,DT}\) are independent. Model (1) assumes that the vector of true aggregated values \({\tilde{\varvec{x}}}_{1,dt}^\prime \) is random and it is the sum of two independent random vectors. The first one contains the predictions of the realizations of \({\tilde{\varvec{x}}}_{1,dt}^\prime \) and the second one is a random error term. Further, the model assumes that the joint distribution of the \((p_1+1)\times 1\) column vector \(\varvec{\varepsilon }_{dt}=(e_{dt},\varvec{v}_{dt}^\prime )^\prime \) is multivariate normal with zero mean and covariance matrix

where \(\varvec{\sigma }_{12dt}\) is a \(p_1\times 1\) vector containing the covariances between \(e_{dt}\) and the components of \(\varvec{v}_{dt}\). The covariances of \(\varvec{\sigma }_{12dt}\), associated to auxiliary variables calculated from a different sample, are zero. The MERY model can be expressed as single model in the form

or in the simpler form

where \(\varvec{x}_{dt}=(\varvec{x}_{0,dt},\varvec{x}_{1,dt})\) and \(\varvec{\beta }=(\varvec{\beta }_0^\prime ,\varvec{\beta }_1^\prime )^\prime \). We finally assume that \(\varvec{x}_{1,dt}\), \(u_{1,d}\), \(u_{2,dt}\), \(\varvec{\varepsilon }_{dt}=(e_{dt},\varvec{v}_{dt}^\prime )^\prime \), \(d=1,\ldots ,D\), \(t=1,\ldots ,T\), are mutually independent, but we only introduce inference procedures conditionally on \((\varvec{x}_{11},\ldots ,\varvec{x}_{DT})\). Under Model (2), it holds that

The variance of \(y_{dt}\), given \(\varvec{x}_{dt}\), is

Thus, the distribution of \(y_{dt}\), given \(\varvec{x}_{dt}\), is \(y_{dt}|_{\varvec{x}_{dt}}\sim N\big (\varvec{x}_{dt}\varvec{\beta },\sigma _{ydt}^2\big )\). Let us define the vectors

and matrices \(\varvec{Z}_{1d}=\varvec{1}_T\), \(\varvec{Z}_{2d}=\varvec{I}_T\), \(\varvec{V}_{2d}=\sigma _2^2\varvec{I}_T\),

where \(\varvec{1}_T\) is a \(T\times 1\) vector with all elements equal to 1 and \(\varvec{I}_T\) is the \(T\times T\) identity matrix. At the domain level, Model (2) is

where \(u_{1,d}\sim N(0,\sigma _1^2)\), \(\varvec{u}_{2,d}\sim N_T(\varvec{0}, \varvec{V}_{2d})\), \(\varvec{\varepsilon }_d\sim N_{T(p_1+1)}(\varvec{0}, \varvec{\Sigma }_{d})\) are mutually independent. Further, we have \(\varvec{v}_d\sim N_{Tp}(\varvec{0}, \varvec{\Sigma }_{vd})\). The variance matrix of \(\varvec{y}_d\), given \(\varvec{X}_d\), is

Define \(M=DT\), the vectors \(\varvec{y}=\underset{1\le d \le D}{\hbox {col}}(\varvec{y}_{d})\),

and matrices \(\varvec{V}_1=\sigma _1^2\underset{1\le d \le D}{\hbox {diag}}(\varvec{1}_T\varvec{1}_T^\prime )\), \(\varvec{V}_{2}=\sigma _2^2\varvec{I}_M\), \(\varvec{Z}_1=\underset{1\le d \le D}{\hbox {diag}}(\varvec{1}_{T})\), \(\varvec{Z}_2=\varvec{I}_{M}\),

In matrix form, Model (2) can be alternatively written as

where \(\varvec{u}_{1}\sim N_D(0,\varvec{V}_1)\), \(\varvec{u}_{2}\sim N_{DT}(\varvec{0}, \varvec{V}_{2})\), \(\varvec{\varepsilon }\sim N_{DT(p_1+1)}(\varvec{0}, \varvec{\Sigma })\) are mutually independent. The variance matrix of \(\varvec{y}\), given \(\varvec{X}\), is

Hereafter, we state some further conditional distributions (CDs) resulting under the model. They allow for a clearer picture of the dependencies between specific model components, as the main innovation of our contribution is to allow for SAE from dependent sample estimates. Recall that if \(\varvec{y}\) is normally distributed with partitioned mean vector \(\varvec{\mu }=(\varvec{\mu }_s^\prime ,\varvec{\mu }_r^\prime )^\prime \), where \(\varvec{\mu }_s\) and \(\varvec{\mu }_r\) are partitions of \(\varvec{\mu }\), as well as covariance matrix

then the distribution of \(\varvec{y}_r\), given \(\varvec{y}_s\), is also multivariate normal, i.e., \(\varvec{y}_r|\varvec{y}_s\sim N(\varvec{\mu }_{r|s}, \varvec{V}_{r|s})\), with mean vector and covariance matrix

At the subdomain level, we have the following CDs. The CD of \(e_{dt}\), given \(\varvec{v}_{dt}\), is \(N(\mu _{e|vdt}, \sigma _{e|vdt}^2)\), where

The CD of \(\varvec{v}_{dt}\), given \(e_{dt}\), is \(N_p(\varvec{\mu }_{v|edt}, \varvec{\Sigma }_{v|edt})\), where

The conditional expectation and variance of \(y_{dt}\), given \(\varvec{x}_{dt}\) and \(\varvec{v}_{dt}\), are

The CD of \(y_{dt}\), given \(\varvec{x}_{dt}\) and \(\varvec{v}_{dt}\), is \(y_{dt}|_{\varvec{x}_{dt},\varvec{v}_{dt}}\sim N(\mu _{y|vdt},\sigma _{y|vdt}^2)\), where

The CD of \(y_{dt}\), given \(\varvec{x}_{dt}\) and \(u_{1,d}\), is \(y_{dt}|_{\varvec{x}_{dt},u_{1,d}}\sim N(\mu _{y|u_1dt},\sigma _{y|u_1dt}^2)\), where

The CD of \(y_{dt}\), given \(\varvec{x}_{dt}\) and \(u_{2,dt}\), is \(y_{dt}|_{\varvec{x}_{dt},u_{2,dt}}\sim N(\mu _{y|u_2dt},\sigma _{y|u_2dt}^2)\), with

At the domain level, we have the following CDs. The CD of \(\varvec{y}_{d}\), given \(\varvec{X}_{d}\) and \(\varvec{v}_{d}\), is \(\varvec{y}_{d}|_{\varvec{X}_{d},\varvec{v}_{d}}\sim N_T(\varvec{\mu }_{y|vd},\quad \varvec{\Sigma }_{y|vd}),\) where

The CD of \(\varvec{y}_{d}\), given \(\varvec{X}_{d}\) and \(u_{1,d}\), is \(\varvec{y}_{d}|_{\varvec{X}_{d},u_{1,d}}\sim N_T(\varvec{\mu }_{y|u_1d},\quad \varvec{\Sigma }_{y|u_1d})\), for

The CD of \(\varvec{y}_{d}\), given \(\varvec{X}_{d}\) and \(\varvec{u}_{2,d}\), is \(\varvec{y}_{d}|_{\varvec{X}_{d},\varvec{u}_{2,d}}\sim N_T(\varvec{\mu }_{y|u_2d},\,\,\varvec{\Sigma }_{y|u_2d})\), for

2.2 Empirical best prediction

In this section, we obtain the EBP of the \(\mu _{dt}\)’s under the MERY model. For this, we first derive the respective BP under the assumption of known model parameters. Thereafter, the EBP is obtained from substituting the model parameters with consistent estimators. The BP is calculated according to the subsequent theorem.

Theorem 1

Under model (2), the BP of \(\varvec{\mu }_{d}=\varvec{X}_{d}\varvec{\beta }+\varvec{v}_{d}^\prime \varvec{\beta }+\varvec{1}_Tu_{1,d}+\varvec{u}_{2,d}\) is

where

and

The proof can be found in Appendix 1 of the supplemental material. Define the full parameter vector \(\varvec{\theta }= (\varvec{\beta }', \sigma _1^2, \sigma _2^2)'\). Observe that \({\hat{\varvec{\mu }}}_{d}^{bp} = {\hat{\varvec{\mu }}}_{d}^{bp}(\varvec{\theta })\). The EBP of \(\varvec{\mu }_d\) is obtained by replacing \(\varvec{\theta }\) with a consistent estimator \({\hat{\varvec{\theta }}}\), that is, \({\hat{\varvec{\mu }}}_{d}^{ebp} = {\hat{\varvec{\mu }}}_{d}^{bp}({\hat{\varvec{\theta }}})\). Please note that we describe how to obtain \({\hat{\varvec{\theta }}}\) in Sect. 4. The MERY model is quite general. It allows for explanatory variables with no errors, errors that are independent of the sampling errors (out-of-sample errors) and errors that depend on the sampling errors (in-sample errors). If \(x_{dtk}\) is measured without error, we have \(\sigma _{dtk}^2 = \text{ var }(x_{dtk}) = 0\) and zero covariances. For the case that all explanatory variables are measured without error, that is, \(\varvec{\Sigma }_{vdt} = {\varvec{0}}_p\), which implies that \(\varvec{\varepsilon }_{dt} = (e_{dt}, {\varvec{0}})'\), \(d=1,\ldots ,D\), \(t=1,\ldots ,T\), then \({\hat{\varvec{\mu }}}_{d}^{ebp}\) corresponds to the Rao–Yu predictor proposed by Rao and Yu (1994). When \(x_{dtk}\) is an out-of-sample direct estimator, then all its covariances with in-sample direct estimators are zero. For \(\sigma _1^2 = 0\) and \(\varvec{\sigma }_{12dt} = {\varvec{0}}\), \(d=1,\ldots ,D\), \(t=1,\ldots ,T\), then \({\hat{\varvec{\mu }}}_{d}^{ebp}\) is equivalent to the EBP under the measurement error Fay–Herriot model studied by Ybarra and Lohr (2008) as well as Burgard et al. (2020a).

3 Mean-squared error estimation

In this section, we elaborate on MSE estimation for the EBP under the MERY model. Let us first decompose the MSE into components. By summing and subtracting \({\hat{\varvec{\mu }}}_d^{bp}\), we obtain

By taking expectations, we get

In what follows, we present two parametric bootstrap estimators of \(MSE({\hat{\varvec{\mu }}}_d^{ebp})\). The first is a standard estimator that approximates all four MSE components via the Monte Carlo method. The second estimator is a bias-corrected estimator that uses an analytical approximation for \(\varvec{G}_{1d}(\varvec{\theta })\). We will show via simulation in Sect. 5.4 that including the analytical formula improves MSE estimation over the standard estimator considerably.

3.1 Standard parametric bootstrap estimator

This section presents the standard estimator, denoted by \(mse_{1d}^*\), which approximates all four MSE components numerically. It is calculated with the subsequent algorithm.

-

1.

By using the data \((\varvec{y}_d,\varvec{X}_d)\), \(d=1,\ldots ,D\), calculate \({\hat{\varvec{\theta }}}\) and \({\hat{\varvec{V}}}_{2d}={\hat{\sigma }}_2^2\varvec{T}_T\).

-

2.

Repeat B times

-

2.1

For \(d=1,\ldots ,D\), generate \(u_{1,d}^{*(b)}\sim N(0,{\hat{\sigma }}_1^2)\), \(\varvec{u}_{2,d}^{*(b)}\sim N_T(\varvec{0}, {\hat{\varvec{V}}}_{2d})\), \(\varvec{\varepsilon }_d^{*(b)}=(\varvec{e}_d^{*(b)\prime },\varvec{v}_d^{*(b)\prime })^\prime \sim N_{T(p_1+1)}(\varvec{0}, \varvec{\Sigma }_{d})\), \(\varvec{\mu }_d^{*(b)}=\varvec{X}_d{{\hat{\varvec{\beta }}}}+{\hat{\varvec{B}}}_d\varvec{v}_d^{*(b)}+\varvec{1}_Tu_{1,d}^{*(b)}+\varvec{u}_{2,d}^{*(b)}\) and \(\varvec{y}_d^{*(b)}=\varvec{\mu }_d^{*(b)}+\varvec{e}_{d}^{*(b)}\).

-

2.2

By using the data \((\varvec{y}_d^{*(b)},\varvec{X}_d)\), \(d=1,\ldots ,D\), calculate the estimator \({\hat{\varvec{\theta }}}^{*(b)}\).

-

2.3

Calculate the EBPs \({\hat{\varvec{\mu }}}^{ebp*(b)}_{d}\), \(d=1,\ldots ,D\), under the model of Step 2.1.

-

2.1

-

3.

For \(d=1,\ldots ,D\), obtain the \(T\times T\) matrix of estimators of \(MSE({\hat{\varvec{\mu }}}^{ebp}_d)\); i.e.,

$$\begin{aligned} mse_{1d}^{*}=\frac{1}{B}\sum _{b=1}^B\big ({\hat{\varvec{\mu }}}^{ebp*(b)}_{d}-\varvec{\mu }^{*(b)}_{d}\big )\big ({\hat{\varvec{\mu }}}^{ebp*(b)}_{d}-\varvec{\mu }^{*(b)}_{d}\big )^\prime . \end{aligned}$$

3.2 Bias-corrected parametric bootstrap estimator

This section presents the bias-corrected estimator, denoted by \(mse_{2d}^*\), that uses an analytical approximation for \(\varvec{G}_{1d}(\varvec{\theta })\). Observe that \(\varvec{G}_{1d}(\varvec{\theta })\) denotes the MSE of the BP in the course of the resampling process. Hence, we first derive the analytical expression for \(MSE({\hat{\varvec{\mu }}}_d^{bp}|\varvec{X}_d)\) under the assumption of known model parameters \(\varvec{\theta }\). Afterward, we use this expression in another parametric bootstrap algorithm that yields us the bias-corrected estimator. Please note that we only present the final results of the corresponding mathematical developments. For further details regarding the derivation, see Appendix 2 of the supplemental material. Under model (2), the mean-squared error, \(MSE({\hat{\varvec{\mu }}}_d^{bp}|\varvec{X}_d)\), of \({\hat{\varvec{\mu }}}_d^{bp}\) is

where the first summand is

the second summand is

and the third summand is

For the last component, it holds that \(\varvec{S}_4=\varvec{S}_3^\prime \). After calculating the MSE of the BP, we use this result to estimate \(MSE({\hat{\varvec{\mu }}}_d^{ebp})\) with a bias-corrected parametric bootstrap estimator. Define \(\varvec{G}_{1d}({\hat{\varvec{\theta }}}) \triangleq \varvec{S}_1({\hat{\varvec{\theta }}})+\varvec{S}_2({\hat{\varvec{\theta }}})-\varvec{S}_3({\hat{\varvec{\theta }}})-\varvec{S}_4({\hat{\varvec{\theta }}})\), where the known model parameters \(\varvec{\theta }\) are substituted by the estimate \({\hat{\varvec{\theta }}}\). The bias-corrected estimator is obtained as follows.

-

1.

By using the data \((\varvec{y}_d,\varvec{X}_d)\), \(d=1,\ldots ,D\), calculate \({\hat{\varvec{\theta }}}\) and \({\hat{\varvec{V}}}_{2d}={\hat{\sigma }}_2^2\varvec{T}_T\).

-

2.

Repeat B times

-

2.1

For \(d=1,\ldots ,D\), generate \(u_{1,d}^{*(b)}\sim N(0,{\hat{\sigma }}_1^2)\), \(\varvec{u}_{2,d}^{*(b)}\sim N_T(\varvec{0}, {\hat{\varvec{V}}}_{2d})\), \(\varvec{\varepsilon }_d^{*(b)}=(\varvec{e}_d^{*(b)\prime },\varvec{v}_d^{*(b)\prime })^\prime \sim N_{T(p_1+1)}(\varvec{0}, \varvec{\Sigma }_{d})\), \(\varvec{\mu }_d^{*(b)}=\varvec{X}_d{{\hat{\varvec{\beta }}}}+{\hat{\varvec{B}}}_d\varvec{v}_d^{*(b)}+\varvec{1}_Tu_{1,d}^{*(b)}+\varvec{u}_{2,d}^{*(b)}\) and \(\varvec{y}_d^{*(b)}=\varvec{\mu }_d^{*(b)}+\varvec{e}_{d}^{*(b)}\).

-

2.2

By using the data \((\varvec{y}_d^{*(b)},\varvec{X}_d)\), \(d=1,\ldots ,D\), calculate the estimator \({\hat{\varvec{\theta }}}^{*(b)}\).

-

2.3

Calculate the BPs \({\hat{\varvec{\mu }}}^{*bp(b)}_{d}={\hat{\varvec{\mu }}}^{*bp(b)}_{d}(\varvec{y}_d^{*(b)},{{\hat{\varvec{\theta }}}})\) and EBPs \({\hat{\varvec{\mu }}}^{*ebp(b)}_{d}={\hat{\varvec{\mu }}}^{*ebp(b)}_{d}(\varvec{y}_d^{*(b)},{\hat{\varvec{\theta }}}^{*(b)})\), \(d=1,\ldots ,D\), under the model of Step 2.1.

-

2.1

-

3.

For \(d=1,\ldots ,D\), obtain the \(T\times T\) matrix of estimators of \(MSE({\hat{\varvec{\mu }}}^{ebp}_d)\); i.e.,

$$\begin{aligned}&E_*\big [\varvec{G}_{1d}({\hat{\varvec{\theta }}}^{*})\big ]\approx \frac{1}{B}\sum _{b=1}^B\varvec{G}_{1d}({\hat{\varvec{\theta }}}^{*(b)}) =\varvec{H}_{1d*}. \\&\quad E_*\big [({\hat{\varvec{\mu }}}^{*ebp}_{d}-\varvec{\mu }^{*bp}_{d})({\hat{\varvec{\mu }}}^{*ebp}_{d}-\varvec{\mu }^{*bp)}_{d})^\prime \big ]\approx \\&\quad \frac{1}{B}\sum _{b=1}^B\big ({\hat{\varvec{\mu }}}^{*ebp(b)}_{d}-\varvec{\mu }^{*bp(b)}_{d}\big )\big ({\hat{\varvec{\mu }}}^{*ebp(b)}_{d}-\varvec{\mu }^{*bp(b)}_{d}\big )^\prime =\varvec{G}_{2d*}, \\&\quad E_*\big [({\hat{\varvec{\mu }}}^{*ebp}_{d}-\varvec{\mu }^{*bp}_{d})({\hat{\varvec{\mu }}}^{*bp}_{d}-\varvec{\mu }^{*}_{d})^\prime \big ]\approx \\&\quad \frac{1}{B}\sum _{b=1}^B\big ({\hat{\varvec{\mu }}}^{*ebp(b)}_{d}-\varvec{\mu }^{*bp(b)}_{d}\big )\big ({\hat{\varvec{\mu }}}^{*bp(b)}_{d}-\varvec{\mu }^{*(b)}_{d}\big )^\prime =\varvec{G}_{3d*}, \\&\quad E_*\big [({\hat{\varvec{\mu }}}^{*bp}_{d}-\varvec{\mu }^{*}_{d})({\hat{\varvec{\mu }}}^{*ebp}_{d}-\varvec{\mu }^{*bp}_{d})^\prime \big ]\approx \\&\quad \frac{1}{B}\sum _{b=1}^B\big ({\hat{\varvec{\mu }}}^{*bp(b)}_{d}-\varvec{\mu }^{*(b)}_{d}\big )\big ({\hat{\varvec{\mu }}}^{*ebp(b)}_{d}-\varvec{\mu }^{*bp(b)}_{d}\big )^\prime =\varvec{G}_{4d*}. \end{aligned}$$ -

4.

For \(d=1,\ldots ,D\), obtain the \(T\times T\) matrices of bootstrap estimators of \(MSE({\hat{\varvec{\mu }}}^{ebp}_d)\).

$$\begin{aligned} MSE_{2d}^{*}= & {} 2\varvec{G}_{1d}({{\hat{\varvec{\theta }}}})-E_*\big [\varvec{G}_{1d}({\hat{\varvec{\theta }}}^{*})\big ] +E_*\big [({\hat{\varvec{\mu }}}^{*ebp}_{d}-\varvec{\mu }^{*bp}_{d})({\hat{\varvec{\mu }}}^{*ebp}_{d}-\varvec{\mu }^{*bp)}_{d})^\prime \big ] \\&+\,E_*\big [({\hat{\varvec{\mu }}}^{*ebp}_{d}-\varvec{\mu }^{*bp}_{d})({\hat{\varvec{\mu }}}^{*bp}_{d}-\varvec{\mu }^{*}_{d})^\prime \big ] + E_*\big [({\hat{\varvec{\mu }}}^{*bp}_{d}-\varvec{\mu }^{*}_{d})({\hat{\varvec{\mu }}}^{*ebp}_{d}-\varvec{\mu }^{*bp}_{d})^\prime \big ], \end{aligned}$$which can be approximated by the Monte Carlo method, i.e.,

$$\begin{aligned} mse_{2d}^{*}= & {} 2\varvec{G}_{1d}({{\hat{\varvec{\theta }}}})-\varvec{H}_{1d*} +\varvec{G}_{2d*}+\varvec{G}_{3d*}+\varvec{G}_{4d*}. \end{aligned}$$

Observe that the difference between \(mse_{1d}^{*}\) and \(mse_{2d}^{*}\) lies within the approximation of \(\varvec{G}_{1d}(\varvec{\theta })\). While \(mse_{1d}^{*}\) uses a purely numerical approximation over the Monte Carlo iterations, \(mse_{2d}^{*}\) uses the bias-corrected term \(2\varvec{G}_{1d}({{\hat{\varvec{\theta }}}})-\varvec{H}_{1d*}\). Here, both components \(\varvec{G}_{1d}({{\hat{\varvec{\theta }}}})\) and \(\varvec{H}_{1d*}\) are calculated based on the analytical formula for \(MSE({\hat{\varvec{\mu }}}_d^{bp}|\varvec{X}_d)\). This allows for an efficiency advantage over the standard estimator.

Under the Fay–Herriot model, this fact was proved in Theorem 3 of González-Manteiga et al. (2008). These authors showed that the biases of the parametric bootstrap estimators \(mse_{1d}^{*}\) and \(mse_{2d}^{*}\) are of orders \(O(D^{-1/2})\) and \(o(D^{-1})\), respectively, under some model assumptions. The extension of their results to the MERY model is not straightforward, but similar asymptotic results may be expected under the MERY model after adding regularity conditions on the matrices \(\varvec{\Sigma }_{vdt}\), \(d=1,\ldots ,D\), \(t=1,\ldots ,T\). Simulations of Sect. 5.4 cover the lack of theoretical results and gives some information about the behavior of \(mse_{1d}^{*}\) and \(mse_{2d}^{*}\) under the MERY model.

Finally, note that the properties of the bootstrap estimators \(mse_{1d}^{*}\) and \(mse_{2d}^{*}\) rely on parametric distributional assumptions. Deviations from the normal distribution may produce biases in the MSE estimates. Fortunately, in the application to real data the sample sizes are not very small. Therefore, the asymptotic normality of the direct estimators, \(y_{dt}\) and \(\varvec{x}_{1,dt}\), does not allow important deviations from normality.

4 Model parameter estimation

This section presents a ML approach for model parameter estimation. It is based on the Fisher scoring algorithm (Jennrich and Sampson 1976; Longford 1987). Recall that

For calculating \(\varvec{V}_d^{-1}\), we apply the inversion formula

with \(u=\sigma _1^2\varvec{1}_T\), \(v^\prime =\varvec{1}_T^\prime \), \(A=\varvec{H}_d\). We obtain

For calculating the derivatives of \(\varvec{V}_d\), we define the \(p\times 1\) vector

For \(k=1,\ldots ,p\), the derivative of \(\varvec{\beta }\), with respect to \(\beta _k\), is \(\partial \varvec{\beta }/\partial \beta _k=\varvec{\delta }_k\). For \(k=1,\ldots ,p_1\), the derivative of \(\varvec{V}_d\), with respect to \(\beta _k\), is \(\partial \varvec{V}_d/\partial \beta _k=\varvec{0}\). For \(k=p_1+1,\ldots ,p\), we have the following derivatives

It holds that \(\varvec{y}|\varvec{X}\sim N(\varvec{X}\varvec{\beta },\varvec{V})\), with covariance matrix \(\varvec{V}=\underset{1\le d \le D}{\hbox {diag}}(\varvec{V}_d)\). The vector of model parameters is \(\varvec{\theta }=(\varvec{\beta }^\prime ,\sigma _1^2,\sigma _2^2)^\prime \). The log-likelihood is

In what follows, we apply the formulas

The first partial derivatives of \(\ell \) are

The second partial derivatives and the elements of the Fisher information matrix can be found in Appendix 3 of the supplemental material. Let \(\varvec{\theta }=(\varvec{\beta }^\prime ,\sigma _1^2,\sigma _2^2)\) denote the full parameter. In matrix form, the vector of scores and the Fisher information matrix are

The updating formula of the Fisher scoring algorithm, for calculating the ML estimators of the MERY model parameters, is

Please note that, depending on the data setting, Fisher scoring algorithms can be numerically instable and sensitive regarding starting values. Thus, we recommend using \(\varvec{\theta }^{(0)}={\hat{\varvec{\theta }}}^{ry}\) as seed, where \({\hat{\varvec{\theta }}}^{ry}\) is the model parameter estimate obtained under the Rao–Yu model.

5 Simulation experiments

This section presents simulation experiments that are conducted in order to demonstrate the effectiveness of the method in a controlled environment. We investigate three aspects: (i) model parameter estimation, (ii) characteristic prediction, as well as (iii) mean-squared error estimation. Note that additional simulation results can be found in Appendix 4.

5.1 Setup

A simulation study with \(R=500\) iterations, \(r=1,\ldots ,R\), is conducted. We define \(p=p_0+p_1\) with \(p_0=1\), \(p_1=2\). Let \(\beta _0=10\), \(\varvec{\beta }_1=(2,2)^\prime \) and \(\varvec{\beta }=(\beta _0^\prime ,\varvec{\beta }_1^\prime )^\prime \). For \(d=1,\ldots ,D\), \(t=1,\ldots ,T\), with \(D \in \{50, 100, 200\}\) and \(T \in \{5, 10, 20\}\), the auxiliary variables are

and the measurement errors are \(\varvec{\varepsilon }_{dt}=(e_{dt},\varvec{v}_{dt}^\prime )^\prime \sim N_{p}(\varvec{0},\varvec{\Sigma }_{dt})\), where

and \(\varvec{\sigma }_{12dt}=(\rho _{e1}\sigma _{edt}\sigma _{v1dt},\rho _{e2}\sigma _{edt}\sigma _{v2dt})^\prime .\) We choose \(\rho _{v12} \in \{0, 0.4, 0.6\}\) to resemble the correlation between the covariate measurement errors. Further, we define \(\rho _{e1} = \rho _{e2} \in \{0, 0.4, 0.6\}\) for the correlation between \(e_{dt}\) and \(\varvec{v}_{dt}\). The realizations for the parameters \(\sigma _{v1dt}, \sigma _{v2dt}, \sigma _{edt}\) are drawn for each subdomain from uniform distributions. Here, we distinguish between three error settings: small error (A), moderate error (B) and large error (C). Let \(j \in \{1,2\}\). We consider a total of 15 simulation scenarios as described in Table 1.

The A-scenarios include almost no covariate measurement errors with no dependencies. In these cases, the true model is very close to the original Rao–Yu model. The B- and C-scenarios contain moderate and large covariate measurement errors with dependencies. For \(d=1,\ldots ,D\), \(t=1,\ldots ,T\), the random effects are \(u_{1,d}\sim N(0,\sigma _1^2)\), \(\varvec{u}_{2,d}\sim N_T(\varvec{0}, \sigma _2^2\varvec{I}_T)\), where \(\sigma _1 =7\), \(\sigma _2 = 2\). Define the vectors and matrices

For \(d=1,\ldots ,D\), the target vectors are given by

We apply a fixed covariate setting. The covariate realizations are generated once and held fixed over all Monte Carlo iterations. Note that the same applies to the error covariance matrices \(\varvec{\Sigma }_{dt}\). However, the realizations for \(\varvec{v}_{d}\), \(u_{1,d}\), \(\varvec{u}_{2,d}\) and \(\varvec{e}_d\), \(d=1,\ldots ,D\), are drawn in every iteration from their respective distributions. This implies that both \(\varvec{y}_d\) and \(\varvec{\mu }_d\), \(d=1,\ldots ,D\) are also generated in each iteration. We study the performance of two methods:

-

EBP based on the Rao–Yu (RY) model with REML estimation (R-package saery),

-

EBP based on the MERY model with ML estimation.

5.2 Results of model parameter estimation

This section studies the behavior of the ML fitting algorithm presented in Sect. 4. We use the root mean-squared error (RMSE) and the bias as performance measures. For \({\tau }\in \{\beta _0,\beta _1,\beta _2,\sigma _1^2,\sigma _2^2\}\), they are given by

Since \(\varvec{\beta }_1 = (2,2)'\), we can average the performance measures for the regression parameters. We start with the RMSE, which is displayed in Table 2. We observe that the model parameter estimates under the MERY model are always more efficient than under the RY model. The respective RMSE is smaller for all scenarios and for all model parameters. In the A-scenarios, MERY and RY perform very similarly with respect to regression parameter estimation. This was expected, since scenarios A generate the target data under the MERY model and not under the RY model. So, the simulation results showing that the MERY model performs slightly better compared to the RY model in the presence of small covariate measurement error are coherent with the theory.

In the B- and C-scenarios, the MERY model is clearly more efficient as it uses the additional information on the measurement error distributions. The biggest performance differences are in variance parameter estimation. Here, the RY model cannot distinguish between random effect variation and variation caused by the measurement errors. This leads to highly inefficient subdomain variance parameter estimates. The MERY model, on the other hand, shows a very stable estimation performance, as it can distinguish between the individual sources of variation.

We continue with the bias. The results are summarized in Table 3. For regression parameter estimation, the results are mixed. The MERY model is less biased with respect to intercept estimation, but often more biased in slope parameter estimation. However, the relative bias of slope parameter estimates is at most 2.6%, which is a reasonable magnitude under measurement error settings. Regarding variance parameter estimation, the MERY model is considerably less biased than the RY model. Especially on the subdomain level, which is also the level of the measurement errors, the RY model vastly overestimates the random effect variance. This is due to its incapability to distinguish between measurement errors and random effect variation. The MERY model, on the other hand, shows a much weaker tendency of overestimation because it uses the additional distribution information. The remaining bias is likely due to the likelihood function being flat under measurement errors.

5.3 Results of subdomain characteristic prediction

This section studies the performance of the EBP \({\hat{\mu }}_{dt}^{ebp}\) based on the MERY model, derived in Sect. 2.2, as well as the EBLUP \({\hat{\mu }}_{dt}^{ebp0}\) based on the RY model. We consider RMSE, RRMSE, absolute bias and relative absolute bias as measures. For \({\hat{\mu }}_{dt}^{(i)}\in \{{\hat{\mu }}_{dt}^{ebp(i)}, {\hat{\mu }}_{dt}^{ebp0(i)}\}\), \(d=1,\ldots ,D\), \(t=1,\ldots ,T\), we have

For a compact presentation, we average the performance measures over the subdomains. The averaged results are presented in Table 4. We observe that the EBP based on the MERY model is superior to the EBLUP based on the RY model in all measures and scenarios. In the A-scenarios, the performance difference is very small, which is consistent with the theoretical developments from Sect. 2. In the B- and C-scenarios, the MERY model shows more distinct efficiency advantages. The inclusion of the additional information from the subdomain covariance matrices \(\varvec{\Sigma }_{dt}\) in combination with the more efficient model parameter estimates allows for more accurate results. Further, we see that the predictions under the MERY are also less biased than under the RY model in terms of the absolute bias.

5.4 Results of mean-squared error estimation

This section studies the parametric bootstrap procedures for MSE estimation in Sect. 3. In particular, we look at two estimators \(mse_{1}^*\) and \(mse_{2}^*\), where the first is the standard parametric bootstrap estimator (Sect. 3.1) and the second is the bias-corrected estimator (Sect. 3.2). We consider bias, relative bias and RMSE as performance measures:

Note that \(mse_{dt}\), \(d=1,\ldots ,D\), \(t=1,\ldots ,T\), is the MSE of the EBP over Monte Carlo iterations, which we take as approximation for the real value \(MSE({\hat{\mu }}_{dt})\). For a more compact presentation, we use averaged measures over the subdomains, as in the previous section. For the number of bootstrap replicates, we use \(B=500\).

The results are summarized in Table 5. Note that the columns MSE and \(mse^*\) correspond to the means of \(mse_{dt}\) and \(mse_{dt}^*\) over all subdomains of the respective scenario. From Table 5, we observe that in the A- and B-scenarios, the performance of both MSE estimators is decent. The relative bias ranges between 0.1 and 7.2%, which is a reasonable magnitude for a mixed model with a multi-level random effect structure. However, we see that the bias of the MSE estimator \(mse_2^*\) is always smaller than for the standard estimator \(mse_1^*\) except for Scenario A.5. Further, by looking at the RMSE, it can be concluded that the second MSE estimator is more efficient than the first. In the C-scenarios, which resemble large measurement errors, the standard estimator \(mse_{1}^*\) become visibly biased. Its relative bias ranges from 10.0 to 24.6%. The bias-corrected estimator \(mse_2^*\), on the other hand, is not as much affected by the large errors. Its relative bias only ranges from 0.6 1.9%. In addition to that, by looking at the RMSE, a significant efficiency advantage by the second estimator becomes evident. Accordingly, including the analytical approximation to the first MSE component improves the estimation accuracy considerably in these settings.

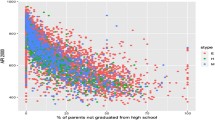

Figure 1 shows the convergence behavior of \(mse_{2}^*\). In particular, it shows the distribution of \(mse_{2dt}^*(B) - mse_{2dt}^{*}(500)\), \(d=1,\ldots ,D\), \(t=1,\ldots ,T\), when the parametric bootstrap estimate \(mse_{2dt}^*(B)\) is calculated based on \(B=1,\ldots , 500\) replicates. The light gray band displays the inner 90% of differences over all subdomains, while the gray band marks the inner 70%. We see that the mean stabilizes at around \(B=150\), implying that 150 bootstrap replicates are sufficient to obtain decent MSE estimates in terms of overall performance. However, if we require that the inner 90% differences vary less than 1% of \(MSE({\hat{\mu }})\), which would roughly be \(\pm 0.48\) in Scenario 1, \(B=300\) is an appropriate choice.

6 Application

This section presents an empirical application to health mapping on the example of regional age-referenced hypertension prevalence in Germany. Note that further results are located in Appendix 5 of the supplemental material.

6.1 Data and specification

We use the health survey GEDA (Robert Koch Institute 2013, 2014a, b) from 2009 (\(n_1 = 21{,}262\)), 2010 (\(n_2 = 22{,}050\)) and 2012 (\(n_3=19{,}294\)) as data basis. It contains citizens of age 18+ from the German population that are interviewed via CATI in a representative telephone sample. The data sets contain health-related information of participants on the unit level. For further information on the survey as well as its sampling design and response rates, see Robert Koch Institute (2013) and Robert Koch Institute (2014a, 2014b).

We define the domains as cross combinations of the seven Nielsen regions of Germany and the age groups I: 18–29, II: 30–39, III: 40–49, IV: 50–59, V: 60–69, VI: 70–79 and VII: 80+. Accordingly, we have \(D=49\) domains that are observed in \(T=3\), periods, which implies \(M=147\) domain–time sets in total. The Nielsen population structure does not correspond to the one used by the German statistical offices, and therefore, it is difficult to collect aggregated auxiliary variables to apply the standard SAE approach based on area-level models. However, we can obtain auxiliary data from the health survey GEDA and apply the statistical methodology based on the MERY model. For this sake, we calculate a set of Horvitz–Thompson estimators for the regional hypertension prevalence as target variable and other health indicators that we use as explanatory variables. Further, we exploit the fact that the hierarchical structure of the MERY model in Sect. 2.1 can be interpreted as a collection of domains that is observed over multiple time periods.

The objective is to estimate the hypertension prevalence per each domain d and time period t. That is to say, we define the number of individuals with high blood pressure as the model component \(\mu _{dt}\), \(d=1,\ldots ,D\), \(t=1,\ldots ,T\), in the sense of Sect. 2.1, and divide by the known domain–time size \(N_{dt}\) afterward. In order to predict the total of hypertensive people, we use the estimated totals of people with diabetes and increased blood lipids per domain and time period as covariates, \(\tilde{\varvec{x}}_{1,dt}=({\tilde{x}}_{1,1dt}, {\tilde{x}}_{1,2dt})\) in the notation of Sect. 2.1, since these diagnoses are well known to be associated with hypertension (see, e.g., Lastra et al. 2014). To determine whether a person suffers from any of these medical conditions in the sample, we rely on the 12-month prevalence profiles used by Robert Koch Institute (2012).

The main advantage of the new MERY model is that it allows for SAE based on uncertain data obtained from dependent survey estimates. In order to provide a suitable data basis for our method, we calculate Horvitz–Thompson estimators for the number of citizens per domain–time in the population that are associated with any of the three medical conditions. These results yield us the model components \(y_{dt}\) and \(\varvec{x}_{1,dt}\) that are direct estimators of \(\mu _{dt}\) and \(\tilde{\varvec{x}}_{1,dt}\), respectively. Note that the estimators are obtained from the same set of sample observations per domain and time period. In standard measurement error models for SAE, this would not be possible as \(y_{dt}\) and \(\varvec{x}_{1,dt}\) are not allowed to have a covariances different from zero. The MERY model, on the other hand, allows for covariances between the direct estimators. In fact, one could argue that it even encourages dependency between the errors, as the covariances between \(y_{dt}\) and \(\varvec{x}_{1,dt}\) mark additional information that can be used to improve the prediction. Recall that in Sect. 2.1, normality was assumed for these components. For the application, the applied Horvitz–Thompson estimators satisfy this assumption asymptotically, as demonstrated by Hájek (1960), Berger (1998) and Chen and Rao (2007).

We further calculate direct estimators of the variances and covariances of \(y_{dt}\) and \(\varvec{x}_{1,dt}\). In this way, we construct the matrices \(\varvec{\Sigma }_{dt}\) and \(\varvec{\Sigma }_{vdt}\) for every domain and time period, in accordance with Sect. 2.1. This can be done using the unit-level observations in GEDA. We calculate direct estimators of the variances \(\text{ var }(y_{dt})\) and \(\text{ var }(x_{1,jdt})\), \(j=1,2\), based on the unit-level survey data. For \(j=1,2\), the estimators of the covariances between the direct target and covariate estimators are obtained from Wood (2008)

The estimator of the covariance between the direct covariate estimators \(x_{1,1dt}\) and \(x_{1,2dt}\) is obtained analogously.

Table 6 presents the quartiles, across domains and time periods, of the sample sizes \(n_{dt}\), the coefficients of variation, \(\text{ cv }(y_{dt})\), \(\text{ cv }(x_{1,1dt})\) and \(\text{ cv }(x_{1,2dt})\), of the direct estimators of the totals of the objective variable (hypertension) and of the two auxiliary variables (diabetes and lipids), and the corresponding correlation coefficients \(\rho (y_{dt},x_{1,1dt})\), \(\rho (y_{dt},x_{1,2dt})\), \(\rho (x_{1,1dt},x_{1,2dt})\). This table shows that some domain–time sample sizes are small and that the direct estimators of the totals of the considered medical variables are correlated. The introduced MERY model is the first one that can deal with this situation.

The full specification of the MERY model is

where \(y_{dt}\), \(x_{1,1dt}\) and \(x_{1,2dt}\) are the Horvitz–Thompson estimators of the number of individuals with high blood pressure, diabetes and increased blood lipids per domain d and time period t, respectively. We apply the ML algorithm from Sect. 4 for model parameter estimation. Further, we use the subsequent parametric bootstrap procedure in order to approximate the standard deviation of the model parameter estimates in order to evaluate their significations.

-

1.

By using the data \((\varvec{y}_d,\varvec{X}_d)\), \(d=1,\ldots ,D\), calculate \({\hat{\varvec{\theta }}}\) and \({\hat{\varvec{V}}}_{2d}={\hat{\sigma }}_2^2\varvec{T}_T\).

-

2.

Repeat B times

-

2.1

For \(d=1,\ldots ,D\), generate \(u_{1,d}^{*(b)}\sim N(0,{\hat{\sigma }}_1^2)\), \(\varvec{u}_{2,d}^{*(b)}\sim N_T(\varvec{0}, {\hat{\varvec{V}}}_{2d})\), \(\varvec{\varepsilon }_d^{*(b)}=(\varvec{e}_d^{*(b)\prime },\varvec{v}_d^{*(b)\prime })^\prime \sim N_{T(p_1+1)}(\varvec{0}, \varvec{\Sigma }_{d})\), \(\varvec{\mu }_d^{*(b)}=\varvec{X}_d{{\hat{\varvec{\beta }}}}+{\hat{\varvec{B}}}_d\varvec{v}_d^{*(b)}+\varvec{1}_Tu_{1,d}^{*(b)}+\varvec{u}_{2,d}^{*(b)}\) and \(\varvec{y}_d^{*(b)}=\varvec{\mu }_d^{*(b)}+\varvec{e}_{d}^{*(b)}\).

-

2.2

By using the data \((\varvec{y}_d^{*(b)},\varvec{X}_d)\), \(d=1,\ldots ,D\), calculate the estimator \({\hat{\varvec{\theta }}}^{*(b)}\).

-

2.1

-

3.

For \(\tau \in \{ \beta _0, \beta _1, \beta _1, \sigma _1^2, \sigma _2^2\}\), calculate the bootstrap estimator of \(sd({\hat{\tau }})\); i.e.,

$$\begin{aligned} sd^*({\hat{\tau }}) = \sqrt{\frac{1}{B} \sum _{b=1}^B \left( {\hat{\tau }}^{(b)} - \frac{1}{B} \sum _{k=1}^B {\hat{\tau }}^{(k)} \right) ^2}. \end{aligned}$$

In accordance with Sect. 5.4, we rely on \(B=300\) bootstrap replicates. For significance testing, we use a t-test with test statistic \(t({\hat{\tau }}) = {\hat{\tau }} / sd^*({\hat{\tau }})\) that is asymptotically distributed as standard normal. The resulting values are located in the probability density function of the standard normal to find the respective p values. Confidence intervals for the model parameter estimates are calculated by \({\hat{\tau }} \pm t_{(m,1-\alpha /2)} sd^*({\hat{\tau }})\), where \(t_{(\cdot )}\) is the corresponding quantile of the t-distribution with \(m=DT-3\) degrees of freedom and significance level \(\alpha \). Please note that the mathematically correct test distribution would be the normal distribution, as maximum likelihood estimates are asymptotically normal distributed. However, the t-distribution provides slightly more conservative test decisions and confidence intervals. This seems reasonable to us in a small area setting, where asymptotic arguments, due to small sample sizes, are critical. In this particular application, the confidence intervals are almost the same, as the number of degrees of freedom is considerably large, such that the corresponding t-distribution already approximates the normal distribution quite well.

Table 7 displays the results of model parameter estimation obtained from the ML algorithm as well as the parametric bootstrap procedure. For the sake of presentability, we show the random effect standard deviations, \(\hat{\sigma }_1\) and \(\hat{\sigma }_2\), rather than the respective variances. It can be seen that all model parameters except for the intercept are significant on a 1% level. The intercept is not significantly different from zero. The signs of the regression parameters \(\beta _ 1\) and \(\beta _2\) are plausible, as diabetes and increased blood lipids are positively correlated with hypertension. Furthermore, the domain and domain–time-level random effects are clearly visible in the distribution of \(y_{dt}\).

6.2 Results

The estimated prevalence for 2009 over all domains is 26.1%. The estimated prevalence for 2010 is 26.4%, and for 2012, we have 27.1%. The estimated evolution of the hypertension prevalence over age groups for all three considered periods is displayed in Fig. 2. We see that the hypertension prevalence is low in the youngest cohorts (18–39). From age 40–79, a steep monotonous increase is evident for all three periods. For individuals of age 80+, the curve flattens on a high level in 2010 and 2012. An interesting observation is that in 2009, the prevalence for the eldest cohort is slightly lower than for the second eldest. A possible explanation is that for age 80+, individuals without hypertension have higher life expectancy than individuals with hypertension due to a lesser risk of suffering a stroke or other cardiovascular events (Kassai et al. 2005).

Figure 3 shows the empirical best predictions dependent on the respective direct estimates. The left plot displays the point estimates. The right plot marks the comparison of the EBP RMSEs and the direct estimator standard deviations. We have used the bias-corrected parametric bootstrap estimator presented in Sect. 3.2, to estimate the EBP RMSEs of the domain–time characteristic predictions.

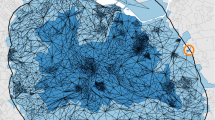

From the plot, we see that the direct estimates vary randomly around the prevalence predictions. This is in line with theory, as the EBP based on the MERY model exploits the design unbiasedness of the direct estimators with the error components being zero on expectation. Furthermore, it indicates that we do not have systematic prediction bias, which is further supported by the simulation results in Sect. 5.3. From the right plot, we see that the MERY predictions are always more efficient than the direct estimates. The EBP RMSEs are always smaller than the corresponding standard deviations of the direct estimators. Accordingly, we can conclude that the application of the MERY model allows for a considerable gain in accuracy. On the other hand, we also remark that most coefficients of variation of the direct estimators are below 20%, since the sample sizes in several domains are quite high. For some Statistical Offices, 20% marks a quality threshold that admits a publication of the results. However, the MERY still manages to improve these results uniformly, which is why its application is recommendable in this setting.

Figure 4 displays the estimated regional hypertension prevalence over the seven Nielsen regions in percent for 2010. The figures are with respect to the total regional populations (without age referencing). We see that the highest prevalences are located in the east of Germany, which is the former territory of the German Democratic Republic. The lowest prevalences are located in Badem-Württemberg and Bavaria. The estimated distribution is plausible, as similar patterns have been found for hypertension for instance by Burgard et al. (2020b). Further, similar distributions are known for strongly correlated conditions, such as diabetes (Schipf et al. 2014).

7 Conclusions

This paper introduces a new small area model for the estimation of regional population characteristics that allows for explanatory variables with measurement errors that are possibly correlated with the errors of the target variable. The approach enriches the modeling process by admitting auxiliary information that comes from administrative records, from survey samples other than the target survey, or even from that same target survey. The MERY model is a generalization of the Rao–Yu model that can be applied to both temporal data (domains and periods) or geographically nested data (domains and subdomains). With these features, it is broadly applicable in practice and marks an important contribution to both the SAE and the measurement error model literature.

Concerning model parameter estimation, the paper proposes the maximum likelihood method by implementing a Fisher scoring algorithm. For predicting the measurement errors, the random effects, as well as the target population quantities, the paper derives best predictors and calculates the corresponding EBPs. After calculating the variances and covariances of the random effects and errors, the MSEs of the BPs are approximated and applied to construct parametric bootstrap procedures with bias correction term. Several simulation experiments are performed to study the behavior of the fitting algorithm, the EBPs and the parametric bootstrap estimators of the MSEs. In particular, the efficiency gain derived from the modeling of measurement errors and their correlations is shown and a recommendation about the number of bootstrap replicates is given. An interesting finding is that if the measurements errors are ignored, then the MSEs of predictors are underestimated, which leads to the predictions seeming more accurate than they actually are (Appendix 4 of supplemental material). The new statistical methodology is applied to the health survey GEDA from 2009, 2010 and 2012. The estimation target is the regional hypertension prevalence in Germany. In order to predict hypertension, the employed covariates, diabetes and increased blood lipids prevalence, are also taken from the health survey GEDA. The new MERY model is the only model in the statistical literature that admits this possibility. Finally, the obtained EBPs are shown to be more precise than the corresponding direct estimates.

Data availability

The data used for this study are retrieved from the German health survey Gesundheit in Deutschland aktuell (GEDA). The related data sets are available on request at the Robert Koch Institute, Germany. The authors have no permission to share them with third parties.

Code availability

The R-code for this paper is accessible as online supplement.

References

Adin A, Lee D, Goicoa T, Ugarte MD (2019) A two-stage approach to estimate spatial and spatio-temporal disease risks in the presence of local discontinuities and clusters. Stat Methods Med Res 28(9):2595–2613

Arima S, Bell WR, Datta GS, Franco C, Liseo B (2017) Multivariate Fay–Herriot Bayesian estimation of small area means under functional measurement error. J R Stat Soc Ser A 180(4):1191–1209

Benavent R, Morales D (2016) Multivariate Fay–Herriot models for small area estimation. Comput Stat Data Anal 94:372–390

Benavent R, Morales D (2021) Small area estimation under a temporal bivariate area-level linear mixed model with independent time effects. Stat Methods Appl 30:195–222. https://doi.org/10.1007/s10260-020-00521-x

Berger YG (1998) Rate of convergence to normal distribution for the Horvitz–Thompson estimator. J Stat Plan Inference 67(2):209–226

Bernal RTI, de Carvalho QH, Pell JP, Leyland AH, Dundas R, Barreto ML, Malta DC (2020) A methodology for small area prevalence estimation based on survey data. Int J Equity Health 19:124

Boubeta M, Lombardía MJ, Morales D (2017) Poisson mixed models for studying the poverty in small areas. Comput Stat Data Anal 107(2):32–47

Burgard JP, Esteban MD, Morales D, Pérez A (2020a) A Fay–Herriot model when auxiliary variables are measured with error. TEST 29:166–195. https://doi.org/10.1007/S11749-019-00649-3

Burgard JP, Krause J, Münnich R (2020b) An elastic net penalized small area model combining unit- and area-level data for regional hypertension prevalence estimation. J Appl Stat. https://doi.org/10.1080/02664763.2020.1765323

Burgard JP, Esteban MD, Morales D, Pérez A (2021) Small area estimation under a measurement error bivariate Fay–Herriot model. Stat Methods Appl 30:79–108. https://doi.org/10.1007/s10260-020-00515-9

Chen J, Rao JNK (2007) Asymptotic normality under two-phase sampling designs. Stat Sin 17(3):1047–1064

Datta GS, Lahiri P, Maiti T, Lu KL (1999) Hierarchical Bayes estimation of unemployment rates for the US States. J Am Stat Assoc 94(448):1074–1082

Datta GS, Lahiri P, Maiti T (2002) Empirical Bayes estimation of median income of four-person families by state using time series and cross-sectional data. J Stat Plan Inference 102(1):83–97

Esteban MD, Morales D, Pérez A, Santamaría L (2012) Small area estimation of poverty proportions under area-level time models. Comput Stat Data Anal 56:2840–2855

Esteban MD, Lombardía MJ, López-Vizcaíno E, Morales D, Pérez A (2020) Small area estimation of proportions under area-level compositional mixed models. TEST 29(3):793–818

Fay RE, Herriot RA (1979) Estimates of income for small places: an application of James–Stein procedures to census data. J Am Stat Assoc 74(366):269–277

Ghosh M, Nangia N, Kim D (1996) Estimation of median income of four-person families: a Bayesian time series approach. J Am Stat Assoc 91(43):1423–1431

González-Manteiga W, Lombardía MJ, Molina I, Morales D, Santamaría L (2008) Analytic and bootstrap approximations of prediction errors under a multivariate Fay–Herriot model. Comput Stat Data Anal 52:5242–5252

Hájek J (1960) Limiting distributions in simple random sampling from a finite population. Publ Math Inst Hung Acad Sci 5:361–374

Jedrejczak A, Kubacki J (2017) Estimation of small area characteristics using multivariate Rao–Yu model. Stat Trans 18(4):725–742

Jennrich RI, Sampson PF (1976) Newton–Raphson and related algorithms for maximum likelihood variance component estimation. Technometrics 18(1):11–17

Jiang J, Lahiri P (2006) Mixed model prediction and small area estimation. TEST 15(1):1–96

Kassai B, Boissel JP, Cucherat M, Boutitie F, Gueyffier F (2005) Treatment of high blood pressure and gain in event-free life expectancy. Vasc Health Risk Manag 1(2):163–169

Lange C, Jentsch F, Allen J, Hoebel J, Kratz AL, von der Lippe E, Müters S, Schmich P, Thelen J, Wetzstein M, Fuchs J, Ziese T (2015) Data resource profile: German health update (GEDA)—the health interview survey for adults in Germany. Int J Epidemiol 44(2):442–450

Lastra G, Syed S, Kurukulasuriya L, Manrique C, Sowers J (2014) Type 2 diabetes mellitus and hypertension: an update. Endocrinol Metab Clin North Am 43(1):103–122

Longford NT (1987) A fast scoring algorithm for maximum likelihood estimation in unbalanced mixed models with nested random effects. Biometrika 74(4):817–827

Marhuenda Y, Molina I, Morales D (2013) Small area estimation with spatio-temporal Fay–Herriot models. Comput Stat Data Anal 58:308–325

Mills CW, Johnson G, Huang TTK, Balk D, Wyka K (2020) Use of small-area estimates to describe county-level geographic variation in prevalence of extreme obesity among US adults. JAMA Netw Open 3(5):e204289

Morales D, Pagliarella MC, Salvatore R (2015) Small area estimation of poverty indicators under partitioned area-level time models. SORT Stat Oper Res 39(1):19–34

Münnich R, Burgard JP, Krause J (2019) Adjusting selection bias in German health insurance records for regional prevalence estimation. Popul Health Met 17:13

National Center for Health Statistics (2020) National health and nutrition examination survey: 1999–2020 survey content brochure. Online. https://wwwn.cdc.gov/nchs/data/nhanes/survey_contents.pdf

Pfeffermann D (2002) Small area estimation—new developments and directions. Int Stat Rev 70(1):55–76

Pfeffermann D (2013) New important developments in small area estimation. Stat Sci 28(1):40–68

Pfeffermann D, Burck L (1990) Robust small area estimation combining time series and cross-sectional data. Surv Methodol 16(2):217–237

Rao JNK, Molina I (2015) Small area estimation. Wiley series in survey methodology. Wiley, Hoboken

Rao JNK, Yu M (1994) Small-area estimation by combining time-series and cross-sectional data. Can J Stat 22(4):511–528

Robert Koch Institute (2012) Daten und Fakten: Ergebnisse der Studie “Gesundheit in Deutschland aktuell 2010”. Online. https://www.gbe-bund.de/pdf/GEDA_2010_Gesamtausgabe.pdf

Robert Koch Institute (2013) German Health Update 2010 (GEDA 2010). Public use file third version. Online. https://doi.org/10.7797/27-200910-1-1-3

Robert Koch Institute (2014a) German Health Update 2009 (GEDA 2009). Public use file second version. Online. https://doi.org/10.7797/26-200809-1-1-2

Robert Koch Institute (2014b) German Health Update 2012 (GEDA 2012). Public use file first version. Online. https://doi.org/10.7797/29-201213-1-1-1

Saeedi P, Petersohn I, Salpea P, Malanda B, Karuranga S, Unwin N, Colagiuri S, Guariguata L, Motala AA, Ogurtsova K, Shaw JE, Bright D, Williams R (2019) Global and regional diabetes prevalence estimates for 2019 and projections for 2030 and 2045: results from the international diabetes federation diabetes atlas, 9th edition. Diab Res Clin Pract 157:107843

Schipf S, Ittermann T, Tamayo T, Holle R, Schunk M, Maier W, Meisinger C, Thorand B, Kluttig A, Greiser KH, Berger K, Müller G, Moebus S, Slomiany U, Rathmann W, Völzke H (2014) Regional differences in the incidence of self-reported type 2 diabetes in germany: Results from five population-based studies in Germany (Diab-core consortium). J Epidemiol Community Health 68:1088–1095

Torabi M, Datta GS, Rao JNK (2009) Empirical Bayes estimation of small area means under a nested error linear regression model with measurement errors in the covariates. Scand J Stat 36(2):355–368

Trandafir PC, Adin A, Ugarte MD (2020) Space-time analysis of ovarian cancer mortality rates by age groups in Spanish provinces (1989–2015). BMC Public Health 20:1244. https://doi.org/10.1186/s12889-020-09267-3

Ubaidillah A, Notodiputro KA, Kurnia A, Wayan I (2019) Multivariate Fay–Herriot models for small area estimation with application to household consumption per capita expenditure in Indonesia. J Appl Stat 46(15):2845–2861

Wood J (2008) On the covariance between related Horvitz–Thompson estimators. J Offic Stat 24(1):53–78

Ybarra LMR, Lohr SL (2008) Small area estimation when auxiliary information is measured with error. Biometrika 95:919–931

You Y, Rao JNK (2000) Hierarchical Bayes estimation of small area means using multi-level models. Surv Methodol 26(2):173–181

Acknowledgements

This research is supported by the Spanish Grant PGC2018-096840-B-I00, by the Grant “Algorithmic Optimization (ALOP)—Graduate School 2126” funded by the German Research Foundation, as well as the Grant “RIFOSS—Research Innovation for Official and Survey Statistics” funded by the German Federal Statistical Office.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict interest to report.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Burgard, J.P., Krause, J. & Morales, D. A measurement error Rao–Yu model for regional prevalence estimation over time using uncertain data obtained from dependent survey estimates. TEST 31, 204–234 (2022). https://doi.org/10.1007/s11749-021-00776-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11749-021-00776-w

Keywords

- Dependent errors

- Empirical best prediction

- Hierarchical model

- Parametric bootstrap

- Small area estimation

- Temporal data