Abstract

We extended research on scaffolds for formulating scientific hypotheses, namely the Hypothesis Scratchpad (HS), in the domain of relative density. The sample comprised secondary school students who used three different configurations of the HS: fully structured, containing all words needed to formulate a hypothesis in the domain of the study; partially structured, containing some words; and unstructured, containing no words. We used a design with two different measures of student ability to formulate hypotheses (the targeted skill): a global, domain-independent measure and a domain-specific measure. Students used the HS first in an intervention context and then in a novel context, where they addressed a transfer task. The fully and partially structured versions of the HS improved the global measure of the targeted skill, while the unstructured version and, to a lesser extent, the partially structured version favored student performance as assessed by the domain-specific measure. The partially structured version revealed strengths for both measures of the targeted skill (global and domain-specific), which may be attributed to its resemblance to completion problems (partially worked examples). The unstructured version of the HS seems to have promoted schema construction for students who showed an improvement in advanced cognitive processes (thinking critically and creatively). We suggest that a comprehensive assessment of scaffolding student work when formulating hypotheses should incorporate both global and domain-specific measures and should also involve transfer tasks.

Notes

To effectively manage a transfer task in a new learning setting, students need to identify both the surface features that may differ from the prior instructional context and the underlying core aspects shared by the two learning contexts (e.g., Schwartz et al., 2011; Shemwell et al., 2015). Learners would be expected to bypass surface features and apply the learned underlying core aspects that are shared between the previous learning setting and the novel setting (Barnett & Ceci, 2002; Belenky & Schalk, 2014; Kaminski et al., 2008).

Coding for the number of trials in the Splash-Lab versus “smart” trials in the lab (with the “vary-one-variable-at-a-time”, VOTAT heuristic) as well as for the number of observations noted in the observation tool vs. “smart” observations (with a comparison between density of object and density of fluid) was performed by means of the computer screen capture software (River Past Screen Recorder Pro) and did not necessitate any control for inter-rater reliability.
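
The VOTAT rule mentioned in this note can be illustrated with a minimal sketch. It is not the coding procedure used in the study, which relied on screen recordings; it only shows one way to count trials that differ from the preceding trial in exactly one variable. The variable names (`object_density`, `fluid_density`) and the trial values are hypothetical.

```python
from typing import Dict, List

# A trial maps a variable name to the value the student set for it.
Trial = Dict[str, float]


def is_votat_pair(previous: Trial, current: Trial) -> bool:
    """Return True when exactly one variable differs between two trials."""
    changed = [name for name in current if current[name] != previous.get(name)]
    return len(changed) == 1


def count_votat_trials(trials: List[Trial]) -> int:
    """Count trials that change exactly one variable relative to the trial before."""
    return sum(is_votat_pair(a, b) for a, b in zip(trials, trials[1:]))


# Hypothetical sequence of four trials (values are made up for illustration).
trials = [
    {"object_density": 0.5, "fluid_density": 1.0},
    {"object_density": 1.5, "fluid_density": 1.0},  # only object density changed
    {"object_density": 0.8, "fluid_density": 1.2},  # both variables changed
    {"object_density": 0.8, "fluid_density": 0.7},  # only fluid density changed
]
smart_trials = count_votat_trials(trials)
```

Under this definition the first trial can never count as a "smart" trial, because it has no predecessor to compare against; a different convention would need a different baseline.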

The global and domain-specific measures of the targeted skill (hypothesis formulation) should be related, since we would expect a student who scores high on the global (domain-independent) measure to also be capable of addressing the domain-specific task effectively, as assessed by means of the rubric. This is what we examined in “Preliminary analysis” through non-parametric analyses (global measure treated as a scale variable; domain-specific measure treated as a nominal variable). A possible relation between the two measures should not lead us to collapse them into one, however, since the global measure (scale variable) would still denote the targeted skill in a context-independent manner, while the domain-specific measure (nominal variable) would be confined within the frame of relative density (the domain of the present study). In this domain, formulating testable hypotheses is not enough, since students also need to identify and incorporate in their hypotheses the interaction effect between the density of the object and the density of the fluid.
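
This note does not name the non-parametric test that was used. As an illustration only, the sketch below computes the Kruskal-Wallis H statistic, one common choice for comparing a scale variable across the categories of a nominal variable. The category labels and scores are hypothetical and are not data from the study.

```python
from collections import Counter
from typing import Dict, List


def average_ranks(values: List[float]) -> List[float]:
    """Rank values from 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups: Dict[str, List[float]]) -> float:
    """Tie-corrected Kruskal-Wallis H for scores grouped by category.

    Raises ZeroDivisionError if every pooled score is identical.
    """
    pooled = [score for scores in groups.values() for score in scores]
    n = len(pooled)
    ranks = average_ranks(pooled)
    weighted = 0.0
    start = 0
    for scores in groups.values():
        rank_sum = sum(ranks[start:start + len(scores)])
        weighted += rank_sum ** 2 / len(scores)
        start += len(scores)
    h = 12.0 / (n * (n + 1)) * weighted - 3 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    return h / (1 - ties / (n ** 3 - n))


# Hypothetical global-measure scores grouped by a hypothetical rubric category.
scores_by_category = {
    "category_a": [1.0, 2.0, 3.0],
    "category_b": [4.0, 5.0, 6.0],
    "category_c": [7.0, 8.0, 9.0],
}
h_statistic = kruskal_wallis_h(scores_by_category)
```

H is referred to a chi-square distribution with (number of categories minus 1) degrees of freedom to obtain a p value; that step is omitted here to keep the sketch free of external dependencies.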

References

Anderson, L. W., & Krathwohl, D. R. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. Addison Wesley Longman.

Arnold, J. C., Kremer, K., & Mayer, J. (2014). Understanding students’ experiments—what kind of support do they need in inquiry tasks? International Journal of Science Education, 36, 2719–2749. https://doi.org/10.1080/09500693.2014.930209

Baars, M., Visser, S., van Gog, T., Bruin, A. D., & Paas, F. (2013). Completion of partially worked-out examples as a generation strategy for improving monitoring accuracy. Contemporary Educational Psychology, 38, 395–406. https://doi.org/10.1016/j.cedpsych.2013.09.001

Barnett, S. M., & Ceci, S. J. (2002). When and where do we apply what we learn? A taxonomy for far transfer. Psychological Bulletin, 128, 612–637. https://doi.org/10.1037/0033-2909.128.4.612

Belenky, D. M., & Schalk, L. (2014). The effects of idealized and grounded materials on learning, transfer, and interest: An organizing framework for categorizing external knowledge representations. Educational Psychology Review, 26, 27–50. https://doi.org/10.1007/s10648-014-9251-9

Bell, T., Urhahne, D., Schanze, S., & Ploetzner, R. (2010). Collaborative inquiry learning: Models, tools and challenges. International Journal of Science Education, 32, 349–377. https://doi.org/10.1080/09500690802582241

Bloom, B. S. (1956). Taxonomy of educational objectives. Handbook I: The cognitive domain. David McKay.

Burns, J. C., Okey, J. R., & Wise, K. C. (1985). Development of an integrated process skill test: TIPS II. Journal of Research in Science Teaching, 22, 169–177. https://doi.org/10.1002/tea.3660220208

Chang, K. E., Chen, Y. L., Lin, H. Y., & Sung, Y. T. (2008). Effects of learning support in simulation-based physics learning. Computers & Education, 51, 1486–1498. https://doi.org/10.1016/j.compedu.2008.01.007

Chen, J., Wang, M., Grotzer, T. A., & Dede, C. (2018). Using a three-dimensional thinking graph to support inquiry learning. Journal of Research in Science Teaching, 55, 1239–1263. https://doi.org/10.1002/tea.21450

Chi, M. T. H., & VanLehn, K. A. (2012). Seeing deep structure from the interactions of surface features. Educational Psychologist, 47, 177–188. https://doi.org/10.1080/00461520.2012.695709

Cohen, L., Manion, L., & Morrison, K. (2007). Research methods in education (6th ed.). Routledge.

de Jong, T. (2006a). Scaffolds for scientific discovery learning. In J. Elen & R. E. Clark (Eds.), Handling complexity in learning environments: Theory and research (pp. 107–128). London: Elsevier.

de Jong, T. (2006b). Computer simulations – Technological advances in inquiry learning. Science, 312, 532–533. https://doi.org/10.1126/science.1127750

de Jong, T. (Ed.). (2014). Preliminary inquiry classroom scenarios and guidelines. D1.3. Go-Lab Project (Global Online Science Labs for Inquiry Learning at School).

de Jong, T., Gillet, D., Rodríguez-Triana, M. J., Hovardas, T., Dikke, D., Doran, R., Dziabenko, O., Koslowsky, J., Korventausta, M., Law, E., Pedaste, M., Tasiopoulou, E., Vidal, G., & Zacharia, Z. C. (2021). Understanding teacher design practices for digital inquiry–based science learning: The case of Go-Lab. Educational Technology Research & Development, 69, 417–444. https://doi.org/10.1007/s11423-020-09904-z

de Jong, T., & van Joolingen, W. R. (1998). Scientific discovery learning with computer simulations of conceptual domains. Review of Educational Research, 68, 179–202. https://doi.org/10.3102/00346543068002179

de Jong, T., Sotiriou, S., & Gillet, D. (2014). Innovations in STEM education: the Go-Lab federation of online labs. Smart Learning Environments, 1, 1–16. https://doi.org/10.1186/s40561-014-0003-6

Efstathiou, C., Hovardas, T., Xenofontos, N., Zacharia, Z., de Jong, T., Anjewierden, A., & van Riesen, S. A. N. (2018). Providing guidance in virtual lab experimentation: The case of an experiment design tool. Educational Technology Research & Development, 66, 767–791. https://doi.org/10.1007/s11423-018-9576-z

Gijlers, H., & de Jong, T. (2005). The relation between prior knowledge and students’ collaborative discovery learning processes. Journal of Research in Science Teaching, 42, 264–282. https://doi.org/10.1002/tea.20056

Gijlers, H., & de Jong, T. (2009). Sharing and confronting propositions in collaborative inquiry learning. Cognition and Instruction, 27, 239–268. https://doi.org/10.1080/07370000903014352

Hovardas, T. (2016). A learning progression should address regression: Insights from developing non-linear reasoning in ecology. Journal of Research in Science Teaching, 53, 1447–1470. https://doi.org/10.1002/tea.21330

Eiriksdottir, E., & Catrambone, R. (2011). Procedural instructions, principles, and examples: How to structure instructions for procedural tasks to enhance performance, learning, and transfer. Human Factors, 53, 749–770. https://doi.org/10.1177/0018720811419154

Großmann, N., & Wilde, M. (2019). Experimentation in biology lessons: Guided discovery through incremental scaffolds. International Journal of Science Education, 41, 759–781. https://doi.org/10.1080/09500693.2019.1579392

Hmelo-Silver, C. E., Duncan, R. G., & Chinn, C. A. (2007). Scaffolding and achievement in problem-based and inquiry learning: A response to Kirschner, Sweller, and Clark (2006). Educational Psychologist, 42, 99–107. https://doi.org/10.1080/00461520701263368

Hsin, C.-T., & Wu, H.-K. (2011). Using scaffolding strategies to promote young children’s scientific understandings of floating and sinking. Journal of Science Education and Technology, 20, 656–666. https://doi.org/10.1007/s10956-011-9310-7

Kalyuga, S. (2007). Expertise reversal effect and its implications for learner-tailored instruction. Educational Psychology Review, 19, 509–539. https://doi.org/10.1007/s10648-007-9054-3

Kaminski, J. A., Sloutsky, V. M., & Heckler, A. F. (2008). The advantage of abstract examples in learning math. Science, 320, 454–455. https://doi.org/10.1126/science.1154659

Kao, G. Y. M., Chiang, C. H., & Sun, C. T. (2017). Customizing scaffolds for game-based learning in physics: Impacts on knowledge acquisition and game design creativity. Computers & Education, 113, 294–312. https://doi.org/10.1016/j.compedu.2017.05.022

Karweit, N., & Slavin, R. E. (1982). Time-on-task: Issues of timing, sampling, and definition. Journal of Educational Psychology, 74, 844–851. https://doi.org/10.1037/0022-0663.74.6.844

Kim, J. H., & Pedersen, S. (2011). Advancing young adolescents’ hypothesis-development performance in a computer-supported and problem-based learning environment. Computers & Education, 57, 1780–1789. https://doi.org/10.1016/j.compedu.2011.03.014

Kirschner, P. A., Sweller, J., & Clark, R. E. (2006). Why minimal guidance during instruction does not work: An analysis of the failure of constructivist, discovery, problem-based, experiential, and inquiry-based teaching. Educational Psychologist, 41, 75–86. https://doi.org/10.1207/s15326985ep4102_1

Klahr, D. (2005). A framework for cognitive studies and technology. In M. Gorman, R. D. Tweney, D. C. Gooding, & A. P. Kincannon (Eds.), Scientific and technological thinking (pp. 81–95). Lawrence Erlbaum.

Koksal, E. A., & Berberoglu, G. (2014). The effect of guided inquiry instruction on 6th grade Turkish students’ achievement, science process skills, and attitudes toward science. International Journal of Science Education, 36, 66–78. https://doi.org/10.1080/09500693.2012.721942

Loverude, M. E., Kautz, C. H., & Heron, P. R. L. (2003). Helping students develop an understanding of Archimedes’ principle. I. Research on student understanding. American Journal of Physics, 71, 1178–1187. https://doi.org/10.1119/1.1607335

Margulieux, L. E., & Catrambone, R. (2016). Improving problem solving with subgoal labels in expository text and worked examples. Learning and Instruction, 42, 58–71. https://doi.org/10.1016/j.learninstruc.2015.12.002

Meindertsma, H. B., van Dijk, M. W. G., Steenbeek, H. W., & van Geert, P. L. C. (2014). Stability and variability in young children’s understanding of floating and sinking during one single-task session. Mind, Brain, and Education, 8, 149–158. https://doi.org/10.1111/mbe.12049

Molenaar, I., & Roda, C. (2008). Attention management for dynamic and adaptive scaffolding. Pragmatics & Cognition, 16, 224–271. https://doi.org/10.1075/pc.16.2.04mol

Mulder, Y. G., Bollen, L., de Jong, T., & Lazonder, A. W. (2016). Scaffolding learning by modelling: The effects of partially worked-out models. Journal of Research in Science Teaching, 53, 502–523. https://doi.org/10.1002/tea.21260

Oh, P. S. (2010). How can teachers help students formulate scientific hypotheses? Some strategies found in abductive inquiry activities of earth science. International Journal of Science Education, 32, 541–560. https://doi.org/10.1080/09500690903104457

Paas, F. G. (1992). Training strategies for attaining transfer of problem-solving skill in statistics: A cognitive-load approach. Journal of Educational Psychology, 84, 429–434. https://doi.org/10.1037/0022-0663.84.4.429

Pedaste, M., Mäeots, M., Siiman, L. A., de Jong, T., van Riesen, S. A. N., Kamp, E. T., Manoli, C. C., Zacharia, Z. C., & Tsourlidaki, E. (2015). Phases of inquiry-based learning: Definitions and the inquiry cycle. Educational Research Review, 14, 47–61. https://doi.org/10.1016/j.edurev.2015.02.003

Potvin, P., & Cyr, G. (2017). Toward a durable prevalence of scientific conceptions: Tracking the effects of two interfering misconceptions about buoyancy from preschoolers to science teachers. Journal of Research in Science Teaching, 54, 1121–1142. https://doi.org/10.1002/tea.21396

Quintana, C., Reiser, B. J., Davis, E. A., Krajcik, J., Fretz, E., Duncan, R. G., Kyza, E., Edelson, D., & Soloway, E. (2004). A scaffolding design framework for software to support science inquiry. Journal of the Learning Sciences, 13, 337–386. https://doi.org/10.1207/s15327809jls1303_4

Reiser, B. J. (2004). Scaffolding complex learning: The mechanisms of structuring and problematizing student work. The Journal of the Learning Sciences, 13, 273–304. https://doi.org/10.1207/s15327809jls1303_2

Schwartz, D. L., Chase, C. C., Oppezzo, M. A., & Chin, D. B. (2011). Practicing versus inventing with contrasting cases: The effects of telling first on learning and transfer. Journal of Educational Psychology, 103, 759–775. https://doi.org/10.1037/a0025140

Shemwell, J. T., Chase, C. C., & Schwartz, D. L. (2015). Seeking the general explanation: A test of inductive activities for learning and transfer. Journal of Research in Science Teaching, 52, 58–83. https://doi.org/10.1002/tea.21185

Slavin, R. E. (2014). Educational psychology: Theory and practice (11th ed.). Pearson Education.

Sweller, J., van Merrienboer, J. J., & Paas, F. G. (1998). Cognitive architecture and instructional design. Educational Psychology Review, 10, 251–296. https://doi.org/10.1023/A:1022193728205

Sweller, J., Kirschner, P. A., & Clark, R. E. (2007). Why minimally guided teaching techniques do not work: A reply to commentaries. Educational Psychologist, 42, 115–121. https://doi.org/10.1080/00461520701263426

Van Merriënboer, J. J. G. (1990). Strategies for programming instruction in high school: Program completion vs. program generation. Journal of Educational Computing Research, 6, 265–285. https://doi.org/10.2190/4NK5-17L7-TWQV-1EHL

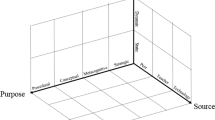

van Joolingen, W. R., & de Jong, T. (1991). Supporting hypothesis generation by learners exploring an interactive computer simulation. Instructional Science, 20, 389–404. https://doi.org/10.1007/BF00116355

van Joolingen, W. R., & de Jong, T. (1993). Exploring a domain through a computer simulation: Traversing variable and relation space with the help of a hypothesis scratchpad. In D. Towne, T. de Jong, & H. Spada (Eds.), Simulation-based experiential learning (pp. 191–206). (NATO ASI series). Berlin: Springer.

van Joolingen, W. R., & de Jong, T. (1997). An extended dual search space model of learning with computer simulations. Instructional Science, 25, 307–346. https://doi.org/10.1023/A:1002993406499

van Joolingen, W. R., & de Jong, T. (2003). SimQuest: authoring educational simulations. In T. Murray, S. Blessing, & S. Ainsworth (Eds.), Authoring tools for advanced technology educational software: Toward cost-effective production of adaptive, interactive, and intelligent educational software (pp. 1–31). Dordrecht: Kluwer Academic Publishers.

van Joolingen, W. R., de Jong, T., Lazonder, A. W., Savelsbergh, E. R., & Manlove, S. (2005). Co-Lab: Research and development of an online learning environment for collaborative scientific discovery learning. Computers in Human Behavior, 21, 671–688. https://doi.org/10.1016/j.chb.2004.10.039

Van Merriënboer, J. J. G., & de Croock, M. B. M. (1992). Strategies for computer-based programming instruction—program completion vs program generation. Journal of Educational Computing Research, 8, 365–394. https://doi.org/10.2190/MJDX-9PP4-KFMT-09PM

Xenofontos, N. A., Hovardas, T., Zacharia, Z. C., & de Jong, T. (2020). Inquiry-based learning and retrospective action: Problematizing student work in a computer-supported learning environment. Journal of Computer Assisted Learning, 36, 12–28. https://doi.org/10.1111/jcal.12384

Zacharia, Z. C., Manoli, C., Xenofontos, N., de Jong, T., Pedaste, M., van Riesen, S., Kamp, E., Mäeots, M., Siiman, L., & Tsourlidaki, E. (2015). Identifying potential types of guidance for supporting student inquiry when using virtual and remote labs: A literature review. Educational Technology Research and Development, 63, 257–302. https://doi.org/10.1007/s11423-015-9370-0

Zervas, P. (Ed.). (2013). The Go-Lab inventory and integration of online labs—Labs offered by large scientific organisations. D2.1. Go-Lab Project (Global Online Science Labs for Inquiry Learning at School).

Acknowledgements

The present study was undertaken within the frame of the research project “Go-Lab: Global Online Science Labs for Inquiry Learning at School”, funded by the European Community (Grant Agreement No. 317601; Information and Communication Technologies (ICT) theme; 7th Framework Programme for R&D). We are grateful to our colleagues Ellen T. Wassink-Kamp, Casper H. W. de Jong, Margus Pedaste, Mario Mäeots, Leo Siiman, Effie Law, Matthias Heintz, and Rob Edlin White for their help in developing the rubric to score hypotheses.

Cite this article

Hovardas, T., Zacharia, Z., Xenofontos, N. et al. How many words are enough? Investigating the effect of different configurations of a software scaffold for formulating scientific hypotheses in inquiry-oriented contexts. Instr Sci 50, 361–390 (2022). https://doi.org/10.1007/s11251-022-09580-x

DOI: https://doi.org/10.1007/s11251-022-09580-x