Abstract

Combinatorial estimation is a new area of application for sequential Monte Carlo methods. We use ideas from sampling theory to introduce new without-replacement sampling methods in such discrete settings. These without-replacement sampling methods allow the addition of merging steps, which can significantly improve the resulting estimators. We give examples showing the use of the proposed methods in combinatorial rare-event probability estimation and in discrete state-space models.

References

Aires, N.: Comparisons between conditional Poisson sampling and Pareto \(\pi \)ps sampling designs. J. Stat. Plan. Inference 88(1), 133–147 (2000)

Bondesson, L., Traat, I., Lundqvist, A.: Pareto sampling versus Sampford and conditional Poisson sampling. Scand. J. Stat. 33(4), 699–720 (2006)

Brewer, K.R.W., Hanif, M.: Sampling with Unequal Probabilities, vol. 15. Springer, New York (1983)

Brockwell, A., Del Moral, P., Doucet, A.: Sequentially interacting Markov chain Monte Carlo methods. Ann. Stat. 38(6), 3387–3411 (2010)

Carpenter, J., Clifford, P., Fearnhead, P.: Improved particle filter for nonlinear problems. IEE Proc. Radar Sonar Navig. 146(1), 2–7 (1999)

Chen, R., Liu, J.S.: Mixture Kalman filters. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 62(3), 493–508 (2000)

Chen, Y., Diaconis, P., Holmes, S.P., Liu, J.S.: Sequential Monte Carlo methods for statistical analysis of tables. J. Am. Stat. Assoc. 100(469), 109–120 (2005)

Cochran, W.G.: Sampling Techniques, 3rd edn. Wiley, New York (1977)

Del Moral, P., Doucet, A., Jasra, A.: Sequential Monte Carlo samplers. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 68(3), 411–436 (2006)

Douc, R., Cappé, O., Moulines, E.: Comparison of resampling schemes for particle filtering. In: ISPA 2005. Proceedings of the 4th International Symposium on Image and Signal Processing and Analysis, pp. 64–69 (2005)

Doucet, A., de Freitas, N., Gordon, N. (eds.): Sequential Monte Carlo Methods in Practice. Statistics for Engineering and Information Science. Springer, New York (2001)

Elperin, T.I., Gertsbakh, I., Lomonosov, M.: Estimation of network reliability using graph evolution models. IEEE Trans. Reliab. 40(5), 572–581 (1991)

Fearnhead, P.: Sequential Monte Carlo Methods in Filter Theory. Ph.D. thesis, University of Oxford (1998)

Fearnhead, P., Clifford, P.: On-line inference for hidden Markov models via particle filters. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 65(4), 887–899 (2003)

Gerber, M., Chopin, N.: Sequential quasi Monte Carlo. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 77(3), 509–579 (2015)

Gilks, W.R., Berzuini, C.: Following a moving target-Monte Carlo inference for dynamic Bayesian models. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 63(1), 127–146 (2001)

Gordon, N., Salmond, D., Smith, A.: Novel approach to nonlinear/non-Gaussian Bayesian state estimation. IEE Proc. F Radar Signal Process. 140(2), 107–113 (1993)

Hammersley, J.M., Morton, K.W.: Poor man’s Monte Carlo. J. R. Stat. Soc. Ser. B (Methodol.) 16(1), 23–38 (1954)

Hartley, H.O., Rao, J.N.K.: Sampling with unequal probabilities and without replacement. Ann. Math. Stat. 33(2), 350–374 (1962)

Horvitz, D.G., Thompson, D.J.: A generalization of sampling without replacement from a finite universe. J. Am. Stat. Assoc. 47(260), 663–685 (1952)

Iachan, R.: Systematic sampling: a critical review. Int. Stat. Rev. 50(3), 293–303 (1982)

Kong, A., Liu, J.S., Wong, W.H.: Sequential imputations and Bayesian missing data problems. J. Am. Stat. Assoc. 89(425), 278–288 (1994)

Kou, S.C., McCullagh, P.: Approximating the \(\alpha \)-permanent. Biometrika 96(3), 635–644 (2009)

L’Ecuyer, P., Rubino, G., Saggadi, S., Tuffin, B.: Approximate zero-variance importance sampling for static network reliability estimation. IEEE Trans. Reliab. 60(3), 590–604 (2011)

Liu, J.S.: Monte Carlo Strategies in Scientific Computing. Springer, New York (2001)

Liu, J.S., Chen, R.: Blind deconvolution via sequential imputations. J. Am. Stat. Assoc. 90(430), 567–576 (1995)

Liu, J.S., Chen, R., Logvinenko, T.: A theoretical framework for sequential importance sampling with resampling. In: Doucet, A., de Freitas, N., Gordon, N. (eds.) Sequential Monte Carlo Methods in Practice. Statistics for Engineering and Information Science, pp. 225–246. Springer, New York (2001)

Lomonosov, M.: On Monte Carlo estimates in network reliability. Probab. Eng. Inf. Sci. 8, 245–264 (1994)

Madow, W.G.: On the theory of systematic sampling, II. Ann. Math. Stat. 20(3), 333–354 (1949)

Madow, W.G., Madow, L.H.: On the theory of systematic sampling, I. Ann. Math. Stat. 15(1), 1–24 (1944)

Marshall, A.: The use of multi-stage sampling schemes in Monte Carlo computations. In: Meyer, H.A. (ed.) Symposium on Monte Carlo Methods. Wiley, Hoboken (1956)

Ó Ruanaidh, J.J.K., Fitzgerald, W.J.: Numerical Bayesian Methods Applied to Signal Processing. Springer, New York (1996)

Paige, B., Wood, F., Doucet, A., Teh, Y.W.: Asynchronous anytime sequential Monte Carlo. In: Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., Weinberger, K. (eds.) Advances in Neural Information Processing Systems, vol. 27, pp. 3410–3418. Curran Associates, Inc. (2014)

Rosén, B.: Asymptotic theory for order sampling. J. Stat. Plan. Inference 62(2), 135–158 (1997a)

Rosén, B.: On sampling with probability proportional to size. J. Stat. Plan. Inference 62(2), 159–191 (1997b)

Rosenbluth, M.N., Rosenbluth, A.W.: Monte Carlo calculation of the average extension of molecular chains. J. Chem. Phys. 23(2), 356–359 (1955)

Rubinstein, R.Y., Kroese, D.P.: Simulation and the Monte Carlo Method, 3rd edn. Wiley, New York (2017)

Sampford, M.R.: On sampling without replacement with unequal probabilities of selection. Biometrika 54(3–4), 499–513 (1967)

Tillé, Y.: Sampling Algorithms. Springer, New York (2006)

Vaisman, R., Kroese, D.P.: Stochastic enumeration method for counting trees. Methodol. Comput. Appl. Probab. 19(1), 31–73 (2017)

Wall, F.T., Erpenbeck, J.J.: New method for the statistical computation of polymer dimensions. J. Chem. Phys. 30(3), 634–637 (1959)

Acknowledgements

This work was supported by the Australian Research Council Centre of Excellence for Mathematical & Statistical Frontiers, under grant number CE140100049. The authors would like to thank the reviewers for their valuable comments, which improved the quality of this paper.

Appendices

Appendix 1: Unbiasedness of sequential without-replacement Monte Carlo

Let \(h^*\left( {\mathbf {x}}_t\right) = \mathbb {E}\left[ h\left( {\mathbf {X}}_d\right) \;\vert \;{\mathbf {X}}_t = {\mathbf {x}}_t\right] \). Note that

Consider the expression

where \(1 \le t < d\). Let \(I_t\left( {\mathbf {x}}_t\right) \) be a binary variable, where \(I_t\left( {\mathbf {x}}_t\right) = 1\) indicates the inclusion of element \({\mathbf {x}}_{t}\) of \(\mathscr {S}_{t}\left( \mathbf {S}_{t-1}\right) \) in \(\mathbf {S}_t\). We can rewrite (20) as

Recall that \(\mathbb {E}\left[ I_t\left( {\mathbf {x}}_t\right) \;\vert \;\mathbf {S}_{t-1}\right] = \pi ^t\left( {\mathbf {x}}_t\right) \). So the expectation of (21) conditional on \(\mathbf {S}_1, \ldots , \mathbf {S}_{t-1}\) is

So

Applying Eq. (22) d times to

shows that \(\mathbb {E}\left[ \widehat{\ell }\right] = \ell \).
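The step that drives this proof is that each inclusion indicator has expectation equal to its inclusion probability, so dividing by that probability removes the bias. The following minimal Python sketch checks this exactly for a single sampling stage. The population values `h`, the subset weights, and the function names are hypothetical and chosen only for illustration; the design is an arbitrary unequal-probability design over subsets of size 2, not one of the designs used in the paper.

```python
from fractions import Fraction
from itertools import combinations


def inclusion_probabilities(design):
    """Return pi(x) = P(x in S) for a design given as {frozenset: probability}."""
    pi = {}
    for sample, prob in design.items():
        for unit in sample:
            pi[unit] = pi.get(unit, Fraction(0)) + prob
    return pi


def expected_ht_estimate(design, h):
    """Exact expectation, over the design, of sum_{x in S} h(x) / pi(x)."""
    pi = inclusion_probabilities(design)
    return sum(
        prob * sum(Fraction(h[x]) / pi[x] for x in sample)
        for sample, prob in design.items()
    )


# Hypothetical population values h(x) for four units.
h = {"a": 3, "b": 5, "c": 7, "d": 11}

# Arbitrary (assumed) unequal-probability design over all subsets of size 2.
subsets = [frozenset(pair) for pair in combinations(h, 2)]
weights = [1, 2, 3, 4, 5, 6]
design = {s: Fraction(w, sum(weights)) for s, w in zip(subsets, weights)}

expected = expected_ht_estimate(design, h)  # equals sum(h.values()) = 26
```

Because the check uses exact rational arithmetic and enumerates every possible sample, the equality holds exactly rather than approximately. The sequential estimator in the appendix applies the same cancellation once per step.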

Appendix 2: Unbiasedness of sequential without-replacement Monte Carlo, with merging

The proof is similar to that of Appendix 1. In this case, all the sample spaces and samples are sets of triples. Consider any expression of the form

It is clear that if the proposed merging rule is applied to \(\mathscr {T}_t\left( \mathbf {S}_{t-1}\right) \), then the value of (23) is unchanged. Using the definition of \(\mathscr {T}_t\left( \mathbf {S}_{t-1}\right) \), Eq. (23) can be written as

The expectation of (24) conditional on \(\mathbf {S}_{t-2}\) is

So

Applying Eq. (26) \(d-1\) times to

shows that \(\widehat{\ell }\) is unbiased.

Appendix 3: Without-replacement sampling for the change-point example

We now give the details of the application of without-replacement sampling to the change-point example in Sect. 1. Recall that \({\mathbf {X}}_d = \left\{ X_t \right\} _{t=1}^d\) is a Markov chain and \({\mathbf {Y}}_d = \left\{ Y_t \right\} _{t=1}^d\) are the observations. Let f be the joint density of \({\mathbf {X}}_d\) and \({\mathbf {Y}}_d\). Note that

for some unknown constants \(\left\{ c_t\right\} _{t=1}^d\). Define the size variables recursively as

This updating rule is slightly different from that given in (17). Equations (30) and (27) require an initial distribution for \(X_1 = \left( C_1, O_1\right) \), which we take to be

Define

and let \(\mathbf {S}_1\) be a sample chosen from \(\mathscr {U}_1\), with probability proportional to the last component. Assume that sample \(\mathbf {S}_{t-1}\) has been chosen, and let

We account for the unknown normalizing constants in (27) by using an estimator of the form (12). This results in Algorithm 5.
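The sampling steps above draw units without replacement with probability proportional to a size variable. As a rough illustration of what such a draw can look like, the sketch below implements classical systematic sampling (Madow 1949) with inclusion probabilities proportional to size. This is an assumption made for illustration and is not necessarily the design used in Algorithm 5; the function name and arguments are hypothetical, and units whose proportional inclusion probability would exceed 1 are rejected rather than handled.

```python
import math
import random


def systematic_pps_sample(sizes, n, u=None):
    """Pick n indices without replacement by systematic sampling.

    Unit i is given inclusion probability n * sizes[i] / sum(sizes),
    which must not exceed 1 (this sketch raises an error otherwise).
    """
    total = sum(sizes)
    pis = [n * s / total for s in sizes]
    if max(pis) > 1 + 1e-12:
        raise ValueError("a size is too large for fixed-size proportional sampling")
    if u is None:
        u = random.random()  # single uniform offset shared by all selection points

    chosen = []
    cum = 0.0
    for i, p in enumerate(pis):
        lower, cum = cum, cum + p
        # Unit i is selected when a point u + k (k an integer) lies in [lower, cum).
        if math.ceil(cum - u) > math.ceil(lower - u):
            chosen.append(i)
    return chosen


# Fixed offset so that the example is reproducible.
sample = systematic_pps_sample([1, 2, 3, 4], n=2, u=0.5)  # -> [1, 3]
```

Each interval has length at most 1, so it can contain at most one selection point, which is what makes the draw without replacement. The inclusion probability of unit i is the length of its interval, as the size-proportional requirement demands.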

Proposition 2

The set \(\mathbf {S}_d\) generated by Algorithm 5 has the property that

Proof

Define

Using (27),

Consider any expression of the form

Equation (31) can be written as

The expectation of (32) conditional on \(\mathbf {S}_{t-2}\) is

So

Applying Eq. (33) \(d-1\) times to

completes the proof. \(\square \)

We now describe the merging step outlined in Fearnhead and Clifford (2003), applied to the estimation of the posterior change-point probabilities

The method we describe here extends straightforwardly to the estimation of \(\left\{ \mathbb {P}\left( O_t = 2 \;\vert \;{\mathbf {Y}}_d = {\mathbf {y}}_d\right) \right\} _{t=1}^d\).

In order to perform this merging, we must add more information to all the sample spaces and the samples chosen from them. Each unit of the extended space has \({\mathbf {x}}_t\) as its first entry, the particle weight w as its second entry, and a vector \(\mathbf m_t\) of t values as its third entry. The third entry is an estimate of \(\left\{ \mathbb {P}\left( C_i = 2 \;\vert \;{\mathbf {y}}_t\right) \right\} _{i=1}^t\). Let

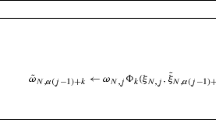

Note that the third component of every element of \(\mathscr {V}_1\) is either 0 or 1. Let \(\mathbf {S}_1\) be a sample drawn from \(\mathscr {V}_1\), with probability proportional to the second element. Assume that sample \(\mathbf {S}_{t-1}\) has been chosen, and let \(\mathscr {V}_t\left( \mathbf {S}_{t-1}\right) \) be

We can now define Algorithm 6, which uses the merging step outlined in Proposition 4.

Proposition 3

If the merging step is omitted, then the set \(\mathbf {S}_d\) generated by Algorithm 6 has the property that

Proof

Define

It can be shown that

Consider any expression of the form

Equation (34) can be written as

The expectation of (35) conditional on \(\mathbf {S}_{t-2}\) is

So

Applying Eq. (36) \(d-1\) times to

completes the proof. \(\square \)

Proposition 4

Assume we have two units \(\left( {\mathbf {x}}_t, w, \mathbf m_t\right) \) and \(\left( {\mathbf {x}}_t', w', \mathbf m_t'\right) \), both corresponding to paths of the Markov chain with \(C_t = 2\) and \(O_t = 2\). Then, we can remove these units, and replace them with the single unit

This rule also applies if both units correspond to \(C_t = 2\) and \(O_t = 1\).

Proof

Under the specified conditions on \({\mathbf {x}}_t\) and \({\mathbf {x}}_t'\),

This shows that

So replacement of this pair of units by the specified single unit does not bias the resulting estimator. \(\square \)
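The displayed formula for the merged unit is not reproduced in this copy, so the following Python sketch rests on an assumption: the merged unit keeps the shared current state, sums the two weights, and takes the weight-averaged third component, in the spirit of the merging idea of Fearnhead and Clifford (2003). Units are simplified to triples whose first entry is only the current pair \(\left( C_t, O_t\right) \), and the function name is hypothetical.

```python
from fractions import Fraction


def merge_units(unit_a, unit_b):
    """Merge two units (state, weight, m) that share the same current state.

    Assumed rule (not taken verbatim from the paper): weights add, and the
    third component becomes the weight-averaged vector.
    """
    state_a, w_a, m_a = unit_a
    state_b, w_b, m_b = unit_b
    # The proposition requires C_t = 2 for both units and equal O_t.
    if state_a != state_b or state_a[0] != 2:
        raise ValueError("units do not satisfy the merging condition")
    w = w_a + w_b
    m = [(w_a * a + w_b * b) / w for a, b in zip(m_a, m_b)]
    return (state_a, w, m)


# Two hypothetical units with C_t = 2 and O_t = 2.
unit_a = ((2, 2), Fraction(1, 4), [Fraction(0), Fraction(1)])
unit_b = ((2, 2), Fraction(3, 4), [Fraction(1), Fraction(1)])
merged = merge_units(unit_a, unit_b)

# Weighted totals before and after merging, component by component.
before = [unit_a[1] * a + unit_b[1] * b for a, b in zip(unit_a[2], unit_b[2])]
after = [merged[1] * m for m in merged[2]]
```

Under this assumed rule, both the total weight and the weighted sum of the third components are identical before and after merging. That invariance is the property an unbiasedness argument of this kind relies on.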

Cite this article

Shah, R., Kroese, D.P. Without-replacement sampling for particle methods on finite state spaces. Stat Comput 28, 633–652 (2018). https://doi.org/10.1007/s11222-017-9752-8

DOI: https://doi.org/10.1007/s11222-017-9752-8