Abstract

Measuring corruption has become a global industry. An important and commonly used data source is the set of large-scale multi-country projects that survey citizens directly about their perceptions and experiences of corruption. Such indicators are regularly used by political scientists to test theories on political attitudes and behavior. However, we still know little about the quality of many of these measures. This paper deploys a large survey with two embedded experiments to investigate two potential sources of bias in indicators based on citizens’ perceptions and experiences of corruption: political bias and sensitivity bias. First, I draw upon research on economic perceptions and argue that respondents are likely to respond in a political manner when asked how they perceive the level of corruption in their country. I test this argument by experimentally priming respondents’ political affiliations before asking for their perception of corruption. Second, I argue that standard questions probing people’s corruption experiences are likely to be subject to sensitivity bias. I test this second argument by constructing a list experiment. Overall, the results show strong and predictable sources of response bias that also vary significantly between important subgroups. I discuss implications for researchers and practitioners.


Introduction

There is now a near consensus among researchers about the destructive consequences of corruptionFootnote 1 and the societal benefits of clean government (Bardhan 1997; Holmberg and Rothstein 2011; Mauro 1995; Mungiu-Pippidi 2013; Rose-Ackerman 1999; Rothstein 2011). As a consequence, measuring corruption has become a global industry, with leading actors like Transparency International (TI) spending millions of dollars on constructing corruption indicators and surveying ordinary citizens’ attitudes about, and experiences of, corruption. These measures are now used to estimate the incidence of corruption in different countries and to research various corruption-related questions in the social sciences. The growing literature on corruption and political attitudes and behavior has relied in large part on such indicators (e.g. Agerberg 2019; Anderson and Tverdova 2003; Dahlberg and Holmberg 2014; Klasnja et al. 2016; Morris and Klesner 2010; Seligson 2002; Xezonakis et al. 2016; Zechmeister and Zizumbo-Colunga 2013). However, we still know little about the quality of many of these commonly used measures. This paper investigates two potential sources of bias in frequently used indicators based on citizens’ perceptions and experiences of corruption: political bias and sensitivity bias.

Most existing corruption indicators are either perception-based or based on self-reported experiences of corruption. While important corruption measures like TI’s Corruption Perception Index (CPI)Footnote 2 are largely based on the (aggregated) perceptions of business people and country experts, many large-scale multi-country projects survey citizens directly about their perceptions and experiences of corruption.Footnote 3 Some researchers argue that these citizen surveys, especially experience-based surveys, provide a more objective measure of corruption that can serve as a benchmark against which expert-based measures can be compared (Donchev and Ujhelyi 2014; Razafindrakoto and Roubaud 2010; Treisman 2007). Citizen surveys on corruption include, for instance, the Global Corruption Barometer (GCB)Footnote 4 and the Eurobarometers on corruption.Footnote 5 One important advantage of these surveys is that they provide individual-level data from ordinary people around the world. These data can then be used to study important individual-level research questions on topics like clientelism, political legitimacy, and voting. Standard corruption questions are therefore now incorporated into general citizen surveys like LAPOP,Footnote 6 the World Values Survey,Footnote 7 the Comparative Study of Electoral Systems,Footnote 8 and the International Social Survey Programme.Footnote 9 Wysmulek (2019) finds that the number of items on corruption (both perception- and experience-based) in international survey projects has grown from just one in 1989 to nearly a hundred in 2017. However, while data availability has increased rapidly, little is known about how people form their perceptions of corruption and to what extent their reports of encounters with corruption are accurate.Footnote 10

A large body of research has documented reporting bias among survey respondents, where an individual’s reported perception of some phenomenon might be influenced by factors other than the actual occurrence of the phenomenon (see, for instance, Bartels 2002; Berinsky 1999; Bertrand and Mullainathan 2001; Tourangeau and Yan 2007). This paper explores two potential sources of bias in individual reports on perceptions and experiences of corruption. First, I draw upon research on economic perceptions and economic voting, and related research on political bias and motivated reasoning, and argue that respondents are likely to respond in a political manner when asked how they perceive corruption in their country. Corruption is an issue that citizens view as important, especially in developing democracies and countries with poor governance (see Eurobarometer 2017; Fisman and Golden 2017; Holmes 2015; World Economic Forum 2017). It is also an issue that often receives intense media coverage (not least in the case of high-level corruption scandals) and hence an issue where citizens can be expected to react to information on the basis of prior affect and political affiliations (see Anduiza et al. 2013; Fischle 2000; Jerit and Barabas 2012). As a result, we should, for instance, expect incumbent supporters to report, in general, a substantially more positive view of corruption in their country than other groups. In this sense, reported corruption perceptions can be a way of justifying one’s political affiliations (Flynn et al. 2017; Leeper and Slothuus 2014).

Second, I argue that direct questions about corruption experiences are likely to be sensitive and hence subject to ‘sensitivity bias’ (or ‘social desirability bias’) (Blair et al. 2018). Research shows that citizens around the world in general have a strong distaste for corruption and bribe giving (see below). Bribery is also illegal in essentially every country (Fisman and Golden 2017, pp. 26–29). Even in countries where corruption is very widespread, most citizens still view bribe payments and the misuse of public money as a serious moral wrong that cannot be justified (Persson et al. 2013; Rothstein and Varraich 2017). Therefore, admitting to having been part of a corrupt transaction is arguably an act of revealing sensitive information and hence likely to be under-reported. This, in turn, makes estimates of the level of corruption in society based on experiential surveys likely to be biased.

I test these conjectures with two preregistered experiments embedded in a large survey fielded in Romania to over 3000 respondents.Footnote 11 The first experiment is designed to randomly make the political affiliations of one group of respondents more salient before they answer questions about corruption perceptions. The second experiment, deploying a so-called ‘list experiment’, is designed to minimize the likelihood of sensitivity bias among another group of respondents (Blair and Imai 2012; Glynn 2013). The results show strong evidence of different types of response bias with regard to questions about corruption. First, government supporters systematically report a much more positive view of the corruption situation in Romania. A simple question order prime (asking about political affiliation before corruption perceptions) makes this effect almost twice as large, suggesting that a substantial part of the gap is the result of respondents defending and justifying their political identity (Flynn et al. 2017; Leeper and Slothuus 2014). Second, the results suggest that direct questions about corruption experiences are sensitive and under-reported. For some groups, such as women, the estimated under-reporting is massive: for this group, the true rate of corruption victimization might be three times as high as the reported rate.

The study makes several contributions to the literature. Previous research has found that respondents often display strong political bias in their attitudes and perceptions about the economy. This study shows that questions about corruption exhibit very similar patterns, and that responses to these questions are malleable and susceptible to political bias, even in a political system with weak party identification such as Romania (Tatar 2013). The study also demonstrates that direct questions about corruption experiences need to be treated as sensitive questions, with different groups showing diverging patterns of reporting bias. These results also call into question some conclusions from previous research about who is most likely to be the victim of corruption.

Response Bias in Surveys

I consider two commonly used individual-level corruption indicators in this article: perception-based and experience-based measures. These two are complementary and capture different aspects of how individuals view and experience corruption in society. Perception-based indicators capture something more abstract, since it is hard to find an objective benchmark and determine the ‘actual’ level of corruption in society. Rather, these measures tap into individuals’ general perceptions of how common corruption is overall in different sectors. In the case of perceived political corruption, for instance, these indicators are likely affected by corruption scandals discussed in the media and overall news reporting on corruption. Experience-based measures, on the other hand, should in theory capture something objective: whether or not an individual has been asked to pay a bribe. This is a relatively unambiguous question, even though there might be some variation in what exactly is considered a ‘bribe’. Within a specific country, however, this variation is likely to be rather modest. I thus expect perception-based measures to track high-level (or ‘grand’) corruption to a larger extent, whereas experience-based measures should mostly capture direct experiences with ‘petty’ corruption (Fisman and Golden 2017). Due to the different nature of these two categories of measures, I expect them to be subject to different forms of bias.Footnote 12 I elaborate on this in the next two sections.

Political Bias

Researchers have long acknowledged that survey responses are sometimes unstable and inaccurate, and that there are clear psychological incentives to shape responses in certain ways (Berinsky 1999; Bertrand and Mullainathan 2001; Zaller 1992). In particular, research on citizens’ evaluations of the economy has uncovered strong response biases stemming from the respondent’s political leanings (Evans and Andersen 2006; Lau et al. 1990; Palmer and Duch 2001; Sears and Lau 1983; Wilcox and Wlezien 1996).

An early strand of research argued that this type of bias can arise because party identification works as a ‘perceptual screen’ through which economic performance is assessed, and that an individual tends to see what is favorable to his or her partisan orientation (Campbell 1960). Later research shows that even beliefs about basic objective political facts can be strongly affected by political identification, partly due to selective information processing (Bartels 2002; Gerber and Huber 2010). Respondents might also reason their way to the conclusion that the economy is doing better when their preferred party or politician is in power. The theory of motivated reasoning holds that all reasoning is motivated in the sense that it is driven by specific motives and goals. Taber and Lodge (2006) argue that these goals often are directional goals (as opposed to accuracy goals) where individuals apply their reasoning powers in defense of a prior specific conclusion. Directional goals are thus often defensive of particular identities, attitudes, or beliefs that are strongly held (Flynn et al. 2017; Leeper and Slothuus 2014).

In political science, a large body of research has focused on political bias in economic perceptions. Much less is known about how political affiliations might interact with corruption perceptions. Recent decades have seen a rapid increase in efforts to measure corruption, both via expert surveys and surveys of the public (Fisman and Golden 2017; Holmes 2015; Wysmulek 2019). Many of the survey instruments used in the latter category clearly resemble the instruments used to tap into people’s economic perceptions; Klasnja et al. (2016) even adopt the terms ‘sociotropic’ and ‘egotropic corruption voting’ directly from the economic voting literature. Researchers use such measures to study, for instance, how individual corruption perceptions and experiences are related to incumbent support and vote choice (Agerberg 2017; Gingerich 2009; Klasnja et al. 2016; Xezonakis et al. 2016; Zechmeister and Zizumbo-Colunga 2013), and how corruption perceptions relate to political legitimacy and support for the democratic system (Anderson and Tverdova 2003; Dahlberg and Holmberg 2014; Morris and Klesner 2010; Seligson 2002). To obtain unbiased estimates of the effect of corruption in such settings, corruption perceptions need to be determined (exclusively) exogenously. In this sense, I define ‘political bias’ as a situation where respondents’ reported corruption perceptions are instead partly endogenous to their political identity.

Researchers interested in the effects of corruption would hence hope that these perceptions are determined only by external changes in an individual’s environment. However, recent studies give us reason to believe that this might not be the case. Anduiza et al. (2013) show that tolerance for corruption can have a clear political dimension. In a survey experiment fielded in Spain, the authors show that respondents’ judgment of the seriousness of a political corruption scandal is partly determined by whether the accused politician belongs to the respondent’s preferred party. The authors argue, in line with the literature on economic perceptions, that this is a way for respondents to reduce cognitive dissonance: by downplaying the importance of corruption when it affects their own party, respondents make the political world more consistent with their political predispositions.

Jerit and Barabas (2012) show that individual-level motivated bias is present on a wide range of topics, as long as a question has importance or strong political implications (see also Bolsen et al. 2014). The authors show that such bias is particularly likely for issues receiving high media coverage. I argue that corruption perceptions are likely to be affected in this way: people, especially in developing democracies, view corruption in society as a question of great importance (Fisman and Golden 2017; Holmes 2015). For instance, when the World Economic Forum in 2017 surveyed individuals in 186 countries about the most pressing political issue, ‘government accountability and transparency/corruption’ ranked first (World Economic Forum 2017). About 25% of Europeans say that they are ‘personally affected by corruption in their daily lives’; the figure for countries like Romania, Croatia, and Spain is as high as 60–70% (Eurobarometer 2017). In such countries, corruption trends in society and both local and high-level scandals are often intensely covered by the media (see Hajdu 2018). Voters in countries where corruption is widespread also tend to associate current levels of perceived corruption with the incumbent government (Klasnja 2015; Klasnja et al. 2016; Xezonakis et al. 2016), and view ‘the fight against corruption’ as one of the most important priorities for political leaders (Holmes 2015). Government supporters therefore have a ‘preferred world-state’ where corruption levels are decreasing (this supports their political leanings), while opposition supporters have incentives to view the situation as worse (this would be a reason to oust the current incumbent) (Jerit and Barabas 2012). As suggested by the literature on motivated reasoning, voters who sympathize with the government (for whatever reason) might therefore convince themselves that the corruption situation is more positive than the evidence warrants.

In general, a connection between reported corruption perceptions and government support can exist for two reasons: (1) the respondent might experience changing corruption levels in society and adjust his or her support for the incumbent accordingly; or (2) the respondent reports perceived corruption levels that are consistent with his or her political affiliations. If the latter is true, making political affiliations more salient should affect reported corruption perceptions, whereas if corruption perceptions are determined only exogenously this should not be the case. In line with the literature reviewed above, I argue that we have reason to believe that some degree of political bias is present in the reporting of corruption perceptions. In this sense, a respondent’s reported corruption levels can be a way to defend and justify his or her beliefs about the current government. I focus specifically on government supporters since this group is relatively easy to define, even in a multiparty system (I discuss this in more detail below). Drawing on this discussion, my first hypothesis has two parts and can be stated as follows:

- \(H_{1a}.\) On average, government supporters will report lower perceived levels of corruption compared to other respondents.

- \(H_{1b}.\) Increasing the salience of political affiliations will cause government supporters to report even lower levels of corruption.

\(H_{1a}\) predicts an empirical pattern that is more likely to be observed if the political bias (PB) hypothesis is true. Finding this pattern does not, however, provide definitive evidence for the PB hypothesis; the pattern could, in theory, arise exclusively because people with low corruption perceptions become government supporters. \(H_{1b}\) instead provides a direct test of one of the main implications of the hypothesis.
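Why \(H_{1b}\) discriminates where \(H_{1a}\) cannot can be illustrated with a toy simulation. The sketch below is not the study’s model: the data-generating process, the strength of selection, and the size of the hypothetical prime effect (`prime_shift`) are assumptions chosen purely for illustration. Under pure selection, supporters report lower corruption in both arms, but the prime leaves the gap unchanged; only when salience shifts supporters’ *reported* perceptions does the gap widen in the primed arm.

```python
import numpy as np


def simulate(n, prime_shift, seed=0):
    """Toy data-generating process (illustrative assumptions only).

    Perceptions are drawn exogenously, and respondents with low perceived
    corruption are more likely to support the government (pure selection).
    `prime_shift` is a hypothetical shift in supporters' *reported*
    perceptions when political identity is made salient (political bias).
    """
    rng = np.random.default_rng(seed)
    perception = rng.normal(0.0, 1.0, n)
    # Selection: lower perceived corruption raises the probability of support.
    p_support = 1.0 / (1.0 + np.exp(2.0 * perception))
    supporter = rng.random(n) < p_support
    treated = rng.random(n) < 0.5  # random question-order prime
    # Reported perception equals true perception, except that primed
    # supporters shift their answer by `prime_shift`.
    reported = perception + prime_shift * (supporter & treated)
    return reported, supporter, treated


def supporter_gaps(reported, supporter, treated):
    """Mean reported perception of supporters minus others, per arm."""
    def gap(arm):
        return reported[arm & supporter].mean() - reported[arm & ~supporter].mean()
    return gap(~treated), gap(treated)


# Pure selection: a gap exists in both arms, but the prime leaves it unchanged.
control_gap, treated_gap = supporter_gaps(*simulate(200_000, prime_shift=0.0))
# Political bias: the prime widens the gap by roughly `prime_shift`.
control_gap_pb, treated_gap_pb = supporter_gaps(*simulate(200_000, prime_shift=-0.5))
```

In both scenarios the control-arm gap looks the same, which is the sense in which an \(H_{1a}\)-type pattern alone cannot separate selection from political bias.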

Sensitivity Bias

According to Tourangeau and Yan (2007), a survey question is likely to be ‘sensitive’ if it touches on ‘taboo’ topics, if it induces concerns that the information given will become known to a third party, or if the question elicits answers that are socially unacceptable or undesirable. If this is the case, the respondent can be expected to give a ‘socially desirable’ answer, that is, an answer that the respondent thinks will be viewed favorably by others, resulting in under-reporting of ‘undesirable’ attitudes and behavior. Such sensitivity bias (SB) has been shown to be present on a wide range of topics based on self-reports, from questions about drug use (Fendrich and Vaughn 1994) to questions on voter turnout (Holbrook and Krosnick 2010).Footnote 13

Surveys based on self-reported experiences are also common in corruption research. These so-called ‘experiential surveys’ are one of the most direct methods for gauging the amount of corruption in society, by simply asking citizens about their experiences of corruption (e.g. “Have you in the past 12 months had to pay a bribe in any form?”). The method is now widely deployed by several large organizations in multi-country surveys (Holmes 2015), including, for instance, the Global Corruption Barometer. Should we expect citizens to truthfully report their first-hand experiences with corruption and bribery?

Kraay and Murrell (2016) argue that some respondents might be ‘reticent’ to tell interviewers about their experiences with corruption. The authors develop a statistical model of potential under-reporting which suggests that the true rate of bribe payment in ten developing countries might be substantially higher than conventional estimates based on direct questions. One reason that direct questions might be sensitive is that bribery, in the sense of an official demanding a bribe for a service, is illegal in essentially every country in the world (Fisman and Golden 2017, pp. 26–29). In an overview of the research on sensitive questions, Krumpal (2013) identifies several studies reporting substantial SB on topics related to criminal behavior and crime victimization. Admitting to having been part of a corrupt exchange (for example, paying a bribe) means admitting to taking part in an illegal transaction, which could therefore be considered sensitive. Even a question about whether an individual has been asked to pay a bribe is likely to be sensitive, given that an affirmative answer signals that the individual may also have completed the corrupt transaction.

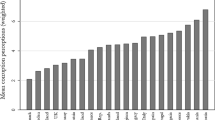

Moreover, corruption is something that people find morally reprehensible. This is true even in countries where corruption is ubiquitous (Karklins 2005; Persson et al. 2013; Rothstein and Varraich 2017). The World Values Survey (WVS) has asked about people’s attitudes towards bribery in several waves, and respondents all over the world typically show a strong distaste for corruption.Footnote 14 Figure 1 shows data for this question from Romania, one of the most corrupt countries in Europe, based on the most recent WVS wave. There is clearly a very strong norm against bribery, even in this context where corruption is widespread: over 80% of respondents say that accepting a bribe can never be justified.

Given this norm, it is reasonable to expect that people view admitting to being part of a corrupt transaction as ‘socially undesirable’ (Tourangeau and Yan 2007). The topic of vote-buying has been extensively studied and has obvious similarities with bribe taking: both acts are illegal transactions that are generally stigmatized. Traditionally, studies on vote-buying asked direct questions about its occurrence and found limited evidence. In recent years, researchers have acknowledged that such direct questions might be sensitive and have instead turned to more sophisticated survey methods. As a result, several recent studies have uncovered substantial under-reporting of vote-buying due to SB (Carkoglu and Erdem Aytac 2015; Corstange 2012; Gonzalez-Ocantos et al. 2012). Importantly, severe under-reporting has been found even for questions asking respondents whether someone ‘offered’ to buy their vote, not only for questions asking whether they actually sold it. Given these similarities to vote-buying, the illegality of bribery, and people’s almost unanimous distaste for its occurrence, I argue that we should expect a similar pattern with regard to reported corruption experiences:

- \(H_{2}.\) Reported experiences of bribery are subject to sensitivity bias and hence under-reported.

Research Design and Methods

An Experimental Study in Romania

To test these hypotheses about political bias (\(H_{1}\)) and sensitivity bias (\(H_{2}\)) in corruption reporting, I conducted a large survey in Romania with two embedded survey experiments. Romania is one of the most corrupt countries in Europe, and corruption is a highly current issue there. After the legislative elections of 2016, the Social Democratic Party (PSD) and the Alliance of Liberals and Democrats (ALDE) formed the governing coalition. Following massive protests in 2017 against a bill that (among other things) would have pardoned officials imprisoned for bribery offenses (see The New York Times May 4th, 2017), the government resigned and was replaced by a second iteration of the coalition. After an internal power struggle in PSD, a third iteration of the PSD–ALDE coalition took office in January 2018. Due to the political turbulence, and partly related to accusations of corruption within the government, public support for the coalition decreased over the course of 2018. At the end of 2018, many opinion polls showed support of around 35% for the governing coalition.Footnote 15

Similar situations, with strong politicization of corruption, are not uncommon, particularly in other Eastern European countries (Bågenholm 2013). Researchers even speak of an incumbency disadvantage in many developing democracies, where holding office seems to decrease the chances of reelection. Such an effect has been demonstrated in, for instance, Brazil (Klasnja and Titiunik 2017), India (Uppal 2009), and post-Communist Eastern Europe (Roberts 2008). Klasnja (2015) shows, in a study of Romanian mayors, that one plausible explanation for this pattern is corruption, where office holders exploit their position to reap private gains at the cost of subsequent electoral success.

The turbulent situation during the past years in Romanian politics is thus rather typical for many young democracies (e.g. Eastern Europe, Latin America), a group of countries that also tend to struggle with widespread corruption in politics and in society overall (Keefer 2007). This makes Romania a relevant case to study. Moreover, partisanship has generally been relatively weak in Romania in the post-Communist period (Tatar 2013), a pattern that is common in developing democracies. This makes the case a relatively tough test for the political bias hypothesis. Any effects found in a context like this, with weak partisanship, are likely to be more pronounced in contexts where partisanship is stronger. The results from the study at hand should hence be of high relevance for developing democracies in Europe and elsewhere, where questions about governance are on the political agenda and politicization of corruption is common.

Testing the Political Bias Hypothesis

The aim of the study is to assess each hypothesis in turn with two different research designs. Codings and analysis strategies were pre-specified in the pre-analysis plan. To test the political bias hypothesis, the first design exploits question order effects (Bertrand and Mullainathan 2001). With regard to economic voting, researchers have shown that question order effects can be substantial (e.g. Wilcox and Wlezien 1996). Sears and Lau (1983) argue that two such effects are common: political preferences might be personalized when assessed immediately after the respondent’s own economic situation has been made salient, or perceptions of the economic situation might be politicized when assessed immediately after important political preferences have been made salient. Given my hypotheses in this paper, I focus on the latter. Questions about corruption perceptions asked after the political questions are assumed to exhibit stronger politically biased response patterns, since asking the political questions makes the respondent’s political identity more salient (Klar 2013). In this sense, the question ordering activates a particular political ‘frame’ around the corruption questions (Zaller 1992). If my hypothesis about PB is correct, ‘politicizing’ corruption perceptions in this way will significantly affect the responses to these questions.

In this setup, some respondents (the treatment group) were randomly assigned to a question ordering where the questions about their political views were asked before a specific corruption question (political prime), while the rest of the respondents (the control group) were given an ordering where the same corruption question instead was asked before the political questions. The setup hence randomly increases the salience of political affiliations for a group of respondents with regard to a specific corruption question.

Following Evans and Andersen (2006), I asked the following political questions: (1) What political party would you vote for if the national parliamentary election were today? (2) Please choose one of the following phrases to say how you feel about the current government of Romania: ‘Strongly against’, ‘Against’, ‘Neither in favor nor against’, ‘In favor’, ‘Strongly in favor’. Based on these questions, I coded respondents as government supporters if they said that they would vote for one of the parties in the current ruling coalition in Romania (PSD and ALDE) and answered that they were ‘Neither in favor nor against’, ‘In favor’, or ‘Strongly in favor’ of the current government in Romania. This way, I identify government supporters in terms of vote intention, but exclude from the definition respondents who state that they are ‘against the government’. I consider different coding decisions with regard to this variable below.
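The supporter coding rule can be written as a small predicate, which makes the two conditions (coalition vote intention and not being against the government) explicit. The string labels below are hypothetical stand-ins for the actual response codes; only the party names and the answer categories come from the text.

```python
# Hypothetical response labels; the actual survey codes may differ.
COALITION_PARTIES = {"PSD", "ALDE"}
NOT_AGAINST_GOVERNMENT = {"Neither in favor nor against", "In favor", "Strongly in favor"}


def is_government_supporter(vote_intention: str, government_attitude: str) -> bool:
    """True if the respondent would vote for a coalition party AND is
    not against the current government (both conditions required)."""
    return (vote_intention in COALITION_PARTIES
            and government_attitude in NOT_AGAINST_GOVERNMENT)


# A PSD voter who is 'Against' the government is not coded as a supporter.
example = is_government_supporter("PSD", "Against")
```

Keeping the two answer sets as named constants makes the alternative codings mentioned in the text (for example, also excluding ‘Neither in favor nor against’) a one-line change.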

To measure corruption perceptions, I asked three different questions that are commonly used in the literature and that are of theoretical interest. The questions are regularly asked in multi-country surveys like the GCB and the ISSP. Together, the three questions give a reasonably comprehensive picture of how the respondent perceives current corruption in Romania, both in terms of absolute levels and in terms of recent change. The questions ask whether the respondent perceives that corruption has increased during the past year (corruption increase), how many politicians are involved in corruption (corruption in politics), and how worried the respondent is about the consequences of corruption for society (corruption worry). I also included a standard question about economic perceptions as a point of comparison (economy worse). All outcome questions were coded so that high values indicate ‘bad’ outcomes: increased corruption, worsened economy, high political corruption, and high worry about corruption. See the Supplementary Materials for more details about these questions.

To avoid artificially induced correlation between different corruption items, while still retaining statistical power, the experiment had the following structure. First, a respondent was randomly assigned to one of the four corruption/economy questions above. This question was asked before any questions about political preferences. A couple of questions later in the survey the respondent was asked the two political questions described above, after which the respondent was randomly assigned to one of the three remaining corruption/economy questions. This means that each respondent is part of the control group with regard to one of the corruption/economy questions, and part of the treatment group with regard to another of these questions. For each specific corruption/economy question, about a fourth of the sample was hence assigned to the control group and a fourth was assigned to the treatment group. The basic structure of the experiment is illustrated in the Supplementary Materials where I also test the basic assumptions underlying the design empirically.
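The assignment scheme can be checked with a short simulation. This is a sketch assuming uniform random draws at both stages (the text states only that assignment was random); the question labels are the short names used above. Because a question ends up in the treatment slot with probability \(\tfrac{3}{4} \times \tfrac{1}{3} = \tfrac{1}{4}\), each question should receive about a fourth of the sample in each arm.

```python
import random
from collections import Counter

# Short labels for the four outcome questions described in the text.
QUESTIONS = ["corruption increase", "corruption in politics",
             "corruption worry", "economy worse"]


def assign(rng):
    """Return (control_question, treatment_question) for one respondent."""
    control = rng.choice(QUESTIONS)      # asked before the political questions
    remaining = [q for q in QUESTIONS if q != control]
    treatment = rng.choice(remaining)    # asked after the political questions
    return control, treatment


def assignment_shares(n, seed=1):
    """Share of respondents in the control and treatment arm for each question."""
    rng = random.Random(seed)
    in_control, in_treatment = Counter(), Counter()
    for _ in range(n):
        c, t = assign(rng)
        in_control[c] += 1
        in_treatment[t] += 1
    return {q: (in_control[q] / n, in_treatment[q] / n) for q in QUESTIONS}


shares = assignment_shares(100_000)
```

Drawing the treatment question from the three remaining items guarantees that no respondent is in both arms for the same question.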

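The effects of interest with regard to the corruption/economy questions can then be estimated with a simple regression model:

\[ y_{i} = \beta_{0} + \beta_{1} x_{i} + \beta_{2} T_{i} + \delta (x_{i} \times T_{i}) + \epsilon_{i} \qquad (1) \]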

where \(y_{i}\) represents the outcome variable of interest, \(x_{i}\) is an indicator variable equal to 1 if a respondent is a government supporter and 0 otherwise, \(T_{i}\) indicates if a respondent is in the treatment group (\(T_{i}=1\)) or the control group (\(T_{i}=0\)), \((x_{i} \times T_{i})\) is an interaction term including \(x_{i}\) and \(T_{i},\) and \(\epsilon _{i}\) represents the error term. The treatment, again, consists of the intervention of ‘priming’ respondents with their political preference before answering one of the three corruption questions. In the interest of space I simply refer to ‘corruption perceptions’ as a catch-all term referring to all three questions (change, level, and worry—coded in the way described above). As per \(H_{1a},\) I expect \(\beta _{1}\) to be \(<0\) (on average, government supporters perceive corruption to be lower) and, as per \(H_{1b},\) I expect \(\delta \) to be \(<0\) (the effect of the prime is negative for government supporters—that is, government supporters report even lower perceived corruption when their political preference has been made salient).Footnote 16 I consider a confirmation of these expectations for all three corruption outcomes to be strong evidence in favor of \(H_{1a}\) and \(H_{1b}.\) I consider a partial confirmation of the expectations (finding significant results in the expected direction for one or two of the outcomes) to be somewhat weaker evidence in favor of \(H_{1a}\) and \(H_{1b}.\)Footnote 17

To facilitate interpretation of the results, I first estimate Eq. (1) using OLS with robust standard errors as the baseline model. The Supplementary Materials include several additional robustness checks.
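Because \(x_{i}\) and \(T_{i}\) are both binary and Eq. (1) includes their interaction, the model is saturated, so the OLS coefficients are simple contrasts of the four cell means. The sketch below assumes HC2 robust standard errors (the text says only "robust"); in a saturated model the HC2 variance of a contrast reduces to the sum of \(s_{c}^{2}/n_{c}\) over the cells it uses. The function `fit_eq1` and the toy data are hypothetical and not the author's code.

```python
from math import sqrt
from statistics import mean, variance


def fit_eq1(y, x, t):
    """OLS estimates of y = b0 + b1*x + b2*T + delta*(x*T) + e, with HC2 standard errors.

    With binary x and T plus their interaction the model is saturated, so every
    coefficient is a contrast of the four cell means, and the HC2 variance of a
    contrast is the sum of s_c^2 / n_c over the cells involved. Each of the four
    (x, T) cells must contain at least two observations.
    """
    cells = {}
    for yi, xi, ti in zip(y, x, t):
        cells.setdefault((xi, ti), []).append(yi)
    m = {k: mean(vals) for k, vals in cells.items()}
    v = {k: variance(vals) / len(vals) for k, vals in cells.items()}  # s_c^2 / n_c
    est = {
        "b0": m[(0, 0)],                                         # others, control
        "b1": m[(1, 0)] - m[(0, 0)],                             # supporter gap, control
        "b2": m[(0, 1)] - m[(0, 0)],                             # prime effect, others
        "delta": m[(1, 1)] - m[(0, 1)] - m[(1, 0)] + m[(0, 0)],  # interaction
    }
    se = {
        "b0": sqrt(v[(0, 0)]),
        "b1": sqrt(v[(1, 0)] + v[(0, 0)]),
        "b2": sqrt(v[(0, 1)] + v[(0, 0)]),
        "delta": sqrt(sum(v.values())),
    }
    return est, se


if __name__ == "__main__":
    # Invented toy data: four respondents per (x, T) cell.
    y = [3, 4, 3, 4, 2, 3, 2, 3, 4, 3, 4, 3, 1, 2, 1, 2]
    x = [0] * 4 + [1] * 4 + [0] * 4 + [1] * 4
    t = [0] * 8 + [1] * 8
    est, se = fit_eq1(y, x, t)
    print(est, se)
```

For the toy data the supporter gap is -1.0 in the control cells and b1 + delta = -2.0 in the treatment cells. With covariates the model is no longer saturated, and a general matrix OLS routine would be needed instead.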

Testing the Sensitivity Bias Hypothesis

Kraay and Murrell (2016) develop a method to estimate under-reporting of corruption that is based on modeling respondents’ answers to a combination of a direct corruption question and several ‘random response’ questions (see Blair et al. 2015). While the approach has clear strengths, it also relies on fairly strong assumptions about which respondents are actually ‘reticent’ (and hence would give an untruthful answer to the sensitive question). Moreover, the approach involves asking ten different ‘random response’ questions, each requiring the respondent to flip a coin (see Azfar and Murrell 2009 for an earlier version of this approach).

To test the sensitivity bias hypothesis I instead deploy a list experiment, which was implemented in the middle of the survey.Footnote 18 This is a conveniently simple survey method, previously used to estimate the prevalence of sensitive behavior such as drug abuse, cheating, and vote buying, in which the respondent does not have to disclose any information about the sensitive item directly (see Glynn 2013).Footnote 19 The list experiment works by aggregating the sensitive item with a list of non-sensitive items, so that the respondent only has to indicate how many items apply, not which specific items are true. To implement this design, I asked the respondents to do the following:

Here is a list with different things that you might have done or experienced during the past 12 months. Please read the list carefully and enter how many of these things that you have done or experienced. Do not indicate which things, only HOW MANY.

Attending a work-related meeting;

Investing money in stocks;

Being unemployed for more than 9 months;

Discussing politics with friends or family.

The treatment group was shown the same list but with a fifth item added (the item-order was randomized for all lists):

Being asked to pay a bribe to a public official.

The design protects respondents’ privacy: as long as respondents in the treatment group answer anything less than “five”, no one directly admits to answering the sensitive question affirmatively (having been asked to pay a bribe). Following the advice in Glynn (2013), the control items were chosen to be negatively correlated to avoid floor and ceiling effects (where respondents would select either 0 or all items). As shown by Blair and Imai (2012), if we assume that adding the sensitive item does not alter responses to the control items and that responses to the sensitive item are truthful, then randomizing respondents into the treatment and control groups allows the analyst to estimate the proportion of affirmative answers to the sensitive item by taking the difference between the average response in the treatment group and the average response in the control group (i.e. a difference-in-means estimator).Footnote 20
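The difference-in-means estimator can be illustrated with simulated item counts. This is a minimal sketch, not the analysis code: `diff_in_means` and `simulate_list_experiment` are hypothetical names, the control-item probabilities and the 30% prevalence are invented, and the simulation builds in the two assumptions above (no design effect, truthful answers). For simplicity it draws the control items independently, whereas the actual design deliberately used negatively correlated items.

```python
import random
from math import sqrt
from statistics import mean, variance


def diff_in_means(treat_counts, control_counts):
    """Difference-in-means estimate of the sensitive-item share and its standard error."""
    est = mean(treat_counts) - mean(control_counts)
    se = sqrt(variance(treat_counts) / len(treat_counts)
              + variance(control_counts) / len(control_counts))
    return est, se


def simulate_list_experiment(n_per_arm, p_control, p_sensitive, seed=0):
    """Simulate item counts assuming no design effect and truthful answers.

    Control items are drawn independently here for simplicity; the actual
    design chose negatively correlated items to avoid floor and ceiling effects.
    """
    rng = random.Random(seed)

    def count(probs):
        return sum(rng.random() < p for p in probs)

    control = [count(p_control) for _ in range(n_per_arm)]
    treat = [count(p_control) + (rng.random() < p_sensitive) for _ in range(n_per_arm)]
    return treat, control


if __name__ == "__main__":
    # Hypothetical control-item probabilities and sensitive-item prevalence.
    treat, control = simulate_list_experiment(1500, [0.6, 0.2, 0.1, 0.5], 0.30)
    est, se = diff_in_means(treat, control)
    print(f"estimated share: {est:.3f} (SE {se:.3f})")
```

The estimate recovers the simulated prevalence on average, but its standard error depends on the variance of the whole item count, not just the sensitive item, which is why list experiments need larger samples than direct questions.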

By asking the sensitive question directly to the control group (who did not receive the sensitive item on their list) I can also model the amount of sensitivity bias by comparing the direct question with the estimated proportion of affirmative answers to the sensitive item in the list experiment. For the direct question I asked: In the past 12 months were you at any point asked to pay a bribe to a public official? The answer alternatives given were ‘yes’, ‘no’, and ‘prefer to not answer’. I coded affirmative answers as 1 and other answers as 0.Footnote 21

For the basic analysis of the list experiment I rely on the linear estimator in Imai (2011), which corresponds to a standard difference-in-means estimator. To estimate the overall level of SB I use the procedure described in Blair and Imai (2012): the response to the direct question is modeled with a logistic regression model, and its predicted value is compared to the predicted response to the sensitive item in the list experiment.Footnote 22 The difference between the two gives an estimate of the amount of SB, with 95% confidence intervals obtained via Monte Carlo simulations. I consider an SB estimate that is positive and statistically different from 0 to be evidence in favor of \(H_{2}.\)
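The comparison step can be sketched in a simplified form. This is an illustration under stated assumptions, not the implementation in the R package list: it summarizes the direct question with an intercept-only logit (whose fitted probability is just the sample share), assumes a normal sampling distribution for the list estimate, and takes percentile intervals from the simulated differences. The function `sb_monte_carlo` and all input values are hypothetical.

```python
import random
from math import exp, log, sqrt


def sb_monte_carlo(list_est, list_se, direct_yes, direct_n, draws=10000, seed=0):
    """Sensitivity bias (list estimate minus direct estimate) with a simulated 95% interval.

    The direct question is summarized by an intercept-only logit, whose fitted
    probability is simply the sample share (which must lie strictly between 0 and 1).
    """
    rng = random.Random(seed)
    p = direct_yes / direct_n
    intercept = log(p / (1 - p))                     # MLE of the logit intercept
    intercept_se = 1 / sqrt(direct_n * p * (1 - p))  # its asymptotic standard error
    sims = []
    for _ in range(draws):
        direct = 1 / (1 + exp(-rng.gauss(intercept, intercept_se)))  # back to a probability
        listed = rng.gauss(list_est, list_se)  # normal approximation for the list estimate
        sims.append(listed - direct)
    sims.sort()
    interval = (sims[int(0.025 * draws)], sims[int(0.975 * draws) - 1])
    return list_est - p, interval


if __name__ == "__main__":
    # Hypothetical inputs, not the estimates reported in Table 2.
    point, (lo, hi) = sb_monte_carlo(0.30, 0.04, 150, 1000)
    print(f"SB = {point:.3f}, 95% interval [{lo:.3f}, {hi:.3f}]")
```

A positive point estimate whose interval excludes 0 corresponds to the evidence criterion for \(H_{2}\) stated above. With covariates, the same idea applies to predicted values drawn from the fitted models' coefficient distributions.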

To check for heterogeneity in SB I also perform exploratory analyses with regard to the following variables (the variables were identified based on previous research; see discussion below): Government supporter, Gender, University degree, Big city inhabitant, Age, High-income household (top 20% of the distribution in the data set). For the exploratory analyses I rely on the non-linear least squares (NLS) estimator developed in Imai (2011) (see Supplementary Materials).

The Survey

After a pilot study was conducted to test the questions in the survey as well as one of the assumptions underlying the PB experiment (see Supplementary Materials), the final survey was fielded between 19 December 2018 and 24 January 2019 in collaboration with the public opinion research company Lucid.Footnote 23 Lucid is a survey respondent aggregator that uses quota sampling to target a nationally representative sample. Research from the US finds that experimental treatment effects on Lucid match well with effects found in US national probability samples (Coppock and McClellan 2019). Based on two series of power analyses (see Supplementary Materials), the target number of respondents was set to at least 2900. The sample was collected based on nationally representative quotas on gender, age, and region.Footnote 24 In total, 3027 respondents completed the survey. Descriptive statistics for the sample are available in the Supplementary Materials.

Results

Political Bias Experiment

I start by evaluating \(H_{1}\) (a and b). The unpopularity of the current government is reflected in the survey: about 24% of the sample said they would vote for a party in the governing coalition if the national parliamentary election were held today. PSD is still the most popular party in the sample, but its share of the total is reduced because many respondents indicated that they would ‘not vote’. The share of true ‘government supporters’ according to the definition above is smaller, at about 14%. While this group is relatively small in proportional terms, it still contains a large number of respondents (about 410) given the large overall sample. Below I also consider alternative ways of coding the ‘government support’ variable that use the sample in a different way.

The PB hypothesis predicts that government supporters, on average, should have a more positive view of corruption in Romania, and that this group should report an even more positive view when primed with their political affiliation. To test this, I estimated Eq. (1) for each of the four outcome variables (the three corruption variables + the economy variable), using OLS.Footnote 25 The results are reported in Table 1.

The coefficient for Government support shows the baseline difference between government supporters and others in the control group, that is, in the group that answered the outcome questions before the political questions. The first two models show the results for the outcomes corruption change and economy change, the two outcome questions that are most similar in structure. The results for these outcomes are also very similar: government supporters in general place themselves about one category lower (in the direction of less corruption/better economy) than the rest of the respondents. The corresponding coefficients for the other two outcomes are somewhat smaller, but still highly significant (\(p<0.001\)). Overall, this is in line with \(H_{1a}\): government supporters report a much less negative view of corruption in Romania in general and say that they are less worried about the problem.

The interaction effect (Gov. support \(\times \) Prime) estimates the effect of the ‘political prime’, i.e. being asked about political affiliation before the corruption questions rather than the other way around. As shown in the table, the effect is large. For the corruption increase outcome, the difference between government supporters and others increases from 0.9 in the control group to about 1.6 (\((-0.89)+(-0.66)\)) in the treatment group (\(p<0.001\)). The pattern is again similar for the economy worse outcome, where the difference increases from 1.2 to 1.7 (\((-1.19)+(-0.47),\) \(p<0.01\)). In both cases, government supporters are substantially more positive (or less negative) to begin with, and become even more positive when randomly assigned to the political prime.
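Writing Eq. (1) with an intercept \(\beta_{0}\) (a symbol assumed here, since the text does not name it) and assuming \(E[\epsilon_{i} \mid x_{i}, T_{i}] = 0\), the expected cell values show why the supporter gap in the treatment group is the sum of the two coefficients:

\[
\begin{aligned}
E[y_{i} \mid x_{i}=1, T_{i}=0] - E[y_{i} \mid x_{i}=0, T_{i}=0] &= (\beta_{0} + \beta_{1}) - \beta_{0} = \beta_{1},\\
E[y_{i} \mid x_{i}=1, T_{i}=1] - E[y_{i} \mid x_{i}=0, T_{i}=1] &= (\beta_{0} + \beta_{1} + \beta_{2} + \delta) - (\beta_{0} + \beta_{2}) = \beta_{1} + \delta.
\end{aligned}
\]

Plugging in the reported estimates gives \(\hat{\beta}_{1} + \hat{\delta} = -0.89 + (-0.66) = -1.55\) for the corruption increase outcome and \(-1.19 + (-0.47) = -1.66\) for the economy worse outcome, which round to the gaps of about 1.6 and 1.7 stated above.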

The last two outcomes show the same pattern: government supporters think corruption in politics is lower and worry less about corruption, a difference that becomes significantly more pronounced with the political prime (both interaction effects are statistically significant at the 0.05-level). In this experimental condition government supporters answer on average about 0.8 to 1 categories lower. To graphically display the results, predicted responses for all four outcomes are shown in Fig. 2.Footnote 26

Fig. 2 Predicted responses based on the regression estimates in Table 1, with 95% confidence intervals

In sum, the results provide strong evidence in favor of the PB hypothesis. The estimates show that reported corruption perceptions differ substantially depending on whether a respondent supports the government or not. Moreover, the experiment shows how a simple prime (changing the order of the questions) can strongly affect the results and increase the ‘supporter effect’. This is in line with previous research on economic perceptions (as the economy estimates here also show), and suggests that respondents, to a significant extent, shape their reported perceptions to align with their stated political affiliation. Respondents’ reported corruption perceptions are thus not simply a reflection of external circumstances in society. Rather, when the salience of political affiliations is increased, respondents seem to engage in a ‘directional reasoning process’ in which they use their response to the corruption question to substantiate their previously stated political preferences.

The Supplementary Materials include several robustness checks, including alternative codings of the supporter variable. While the robustness checks in general corroborate the main results, they also confirm that the ‘prime effect’ for the outcome corruption in politics is slightly weaker overall.Footnote 27

List Experiment

I now turn to the SB hypothesis. As argued above, it is reasonable to assume that the often-used direct question about bribe experience is sensitive and hence under-reported. To test this, I started by estimating the proportion of affirmative responses to the sensitive item in the list experiment using the basic difference-in-means estimator (Glynn 2013). I then estimated an intercept-only logistic regression model with the responses to the direct bribe question as the dependent variable. The two estimates show the proportion of respondents who give an affirmative answer when their privacy is protected (in the list experiment) versus when it is not (the direct question). I also used the procedure in Blair and Imai (2012) to compute 95% confidence intervals for the estimates and for the difference between them. The results are presented in Table 2 and displayed graphically in Fig. 3.

The direct estimate of 19% ‘yes’ is very close to the statistic reported for Romania in the 2017 Eurobarometer, at about 18% (Eurobarometer 2017). This stands in stark contrast to the list estimate of over 35%. The difference of more than 16 percentage points is highly statistically significant. This is clear evidence that respondents under-report the sensitive item when asked directly, and suggests that the true proportion might be 90% higher than the estimate based on the commonly used bribe question.Footnote 28 As noted above, these estimates are based on the ‘less sensitive’ version of the bribe question (the other version asks whether the respondent actually paid the bribe), and on a survey mode (online) that should be less likely to elicit SB.

Voters under-reporting their experiences with corruption is obviously a serious problem for researchers or organizations trying to estimate the occurrence of bribery based on direct questions. However, if this sort of measurement error were randomly distributed across the population, it would still be possible to use direct questions to explore the dynamics of bribery and assess which individuals or groups are most likely to be asked to pay bribes. This is, for instance, done in Mocan (2004). To explore whether the extent of under-reporting differs between groups, I used the NLS estimator to model the relationship between respondent characteristics and responses to the sensitive item (Blair and Imai 2012), based on the six variables described above. I also estimated a logistic regression model regressing the direct bribe question on the same variables. Comparisons between the direct estimate and the list estimate based on these models are shown in Fig. 4. The figure displays the results for the variables government supporter, gender, and income. In the interest of space, the results for the variables age, city inhabitant, and education are presented and discussed in the Supplementary Materials.

Figure 4 reveals interesting differences in under-reporting among subgroups. The left-hand graph indicates that government supporters tend to severely under-report the sensitive item. When asked directly, under 9% of government supporters say that someone asked them to pay a bribe, compared to a list estimate of 58%. Because this group is relatively small, the point estimate from the list experiment is subject to substantial uncertainty and needs to be taken with a grain of salt.Footnote 29 The results do suggest, however, that under-reporting is very large among government supporters. This is fully in line with both the SB and PB hypotheses: government supporters might under-report the sensitive item to make their group look better and to depict overall corruption levels as lower (Carkoglu and Erdem Aytac 2015 find a similar pattern with regard to vote buying in Turkey).

It has long been noted that women seem to be less involved in corruption than men. In an overview of the literature, Heath et al. (2016, pp. 67–72) find that women have a lower risk of being asked for a bribe in all nine studies reviewed. Some have argued that one reason for this might be that women simply have fewer opportunities to engage in corrupt activities and that they get asked to pay bribes less often than men (e.g. Goetz 2007; Mocan 2004). This is also the pattern shown in the direct estimates of about 13% for women and 21% for men. The list estimates, however, suggest the opposite pattern: when the indirect questioning method is used, women seem to be asked for bribes more often than men. The list estimate for women is over three times as high as the direct estimate (43% vs 13%). This result is interesting, given that it goes against much of what previous research has argued. At this point I can only speculate about the reasons behind this pattern. One possibility is that women as a group are more affected by sensitivity bias.Footnote 30 The higher list estimate could also reflect the fact that women use the health care sector more than men, and that this sector, according to many estimates, is the sector most permeated by corruption (see Eurobarometer 2014, 2017).

Finally, Mocan (2004) argues that we should expect income to be positively related to bribe victimization, given that it should be possible for a rent-seeking official to extract higher bribes from a wealthier individual (see also Heath et al. 2016, pp. 67–72). This is also the pattern found in the study at hand. Interestingly, both the list estimate and the direct estimate are substantially higher for individuals in the top 20% of the income distribution, possibly suggesting a ‘normalization’ of bribe-paying in this group.

Overall, these results provide evidence in favor of the SB hypothesis and suggest not only that bribe victimization is under-reported in general, but also that under-reporting differs substantially between subgroups. As a consequence, researchers and practitioners should be very cautious in using direct, obtrusive questions about corruption experiences to gauge overall levels of corruption or to model the dynamics of bribery. As the comparison of male and female respondents shows, different questioning techniques might lead to opposite conclusions. Some researchers have suggested that direct questions (or ‘victim surveys’) might be ‘the least problematic of the currently available [corruption] measures’ (Heath et al. 2016, p. 58). The results in this section suggest that this is far from obvious.

Conclusions

Respondents’ answers to survey questions are constructed and shaped in many different ways. Research on survey methodology and public opinion has convincingly shown that responses are often unstable and strongly affected by factors such as social context, motivated reasoning, and particular frames (Bartels 2002; Berinsky 1999; Bertrand and Mullainathan 2001; Taber and Lodge 2006; Tourangeau and Yan 2007; Zaller 1992). In this paper I argue that these findings have been underappreciated by corruption researchers and practitioners using individual-level survey data. Recent years have seen a steady increase in the availability of different corruption measures and in the use of corruption questions in large multi-country surveys (Fisman and Golden 2017; Heath et al. 2016; Holmes 2015; Wysmulek 2019). Many important measures and data sets are based on surveys directly probing the perceptions and experiences of the general public. These indicators have been of great interest to political scientists and have opened up several new research avenues that utilize individual-level data, such as the study of corruption and political behavior. The increase in data availability has not, however, been accompanied by sufficient reflection on the problems and potential pitfalls of these survey-based measures.

This paper starts from two potential sources of bias that have been demonstrated in previous research: political bias and sensitivity bias. As a first test of the prevalence of these biases in corruption surveys, I conducted an original survey fielded to over 3000 respondents in Romania. The survey aimed to test two specific hypotheses, in two different embedded experiments, based on these suggested patterns of response bias. The results from the first experiment provide strong evidence in favor of the political bias hypothesis (\(H_{1}\)). Government supporters report a much more positive view when asked common corruption questions that, in principle, ask about the objective state of society (Has corruption increased? How common is political corruption?). Government supporters also report being less worried about corruption in general, possibly signaling that they attach less importance to the issue. Priming these respondents with their political affiliation makes this general effect even more pronounced. This suggests that corruption reports might to a significant extent be subject to politically motivated reasoning and expressive ‘political cheerleading’.

Researchers should hence be cautious in estimating models with individual-level measures of corruption perceptions and individual-level political outcomes such as incumbent support or vote intention. Relationships like these are likely to be affected by strong feedback mechanisms and reversed causality, especially in surveys that ask political questions before corruption questions. The results also show that responses to questions about corruption perceptions are in general malleable and affected by simple frames. This means, for instance, that corruption perceptions among the public should be expected to be more polarized along political lines when political affiliations are more salient, such as during an election year. From a broader perspective, the results show that political bias can be substantial even outside of traditionally studied topics like perceptions about unemployment and inflation (Bartels 2002; Gerber and Huber 2010; Jerit and Barabas 2012), and that it can be an important factor shaping public perceptions in a multiparty system like Romania with traditionally weak party identification (Tatar 2013).

The findings from the second experiment on sensitivity bias strongly suggest that direct questions about corruption experiences need to be treated as sensitive questions. According to the results, the direct question fails both to accurately capture the overall occurrence of bribery (which is heavily under-reported) and to capture the dynamics of bribery and which groups are most likely to be targeted. Anyone who uses this, or a similar, question needs to take this into account. At the same time, direct questions are an important tool for gauging actual rates of corruption victimization, given that alternatives such as perceptions of ‘general levels of corruption’ can be unreliable, as shown in the PB experiment. Different techniques for asking sensitive questions unobtrusively do exist, of which the list experiment is one. In general, these techniques come at the cost of statistical efficiency, but when bias is large (as in the study at hand) the bias–variance trade-off should come down in favor of unbiased (or less biased) estimators (Blair et al. 2018). In essence, this means that researchers will need larger samples and more sophisticated survey designs to accurately capture sensitive topics like corruption victimization. Fortunately, recent methodological developments make many of these techniques more accessible and powerful (Blair and Imai 2012; Blair et al. 2015, 2019; Kraay and Murrell 2016).
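The bias–variance argument can be made concrete with mean squared error (squared bias plus sampling variance). The sketch below uses entirely hypothetical values (a true rate of 0.30, item-count standard deviations near 1, 1500 respondents per group) and hypothetical function names; it simply assumes the direct question is biased by under-reporting and the list experiment is unbiased but noisier.

```python
from math import sqrt


def mse(bias, se):
    """Mean squared error: squared bias plus sampling variance."""
    return bias ** 2 + se ** 2


def compare(true_p, reported_p, n_direct, n_per_arm, sd_treat, sd_control):
    """Return (MSE of direct question, MSE of list experiment) under stated assumptions."""
    # Direct question: a binomial share centred on the under-reported rate.
    direct_se = sqrt(reported_p * (1 - reported_p) / n_direct)
    direct = mse(reported_p - true_p, direct_se)
    # List experiment: assumed unbiased, but its difference-in-means standard
    # error is driven by the (larger) variance of the item counts.
    list_se = sqrt(sd_treat ** 2 / n_per_arm + sd_control ** 2 / n_per_arm)
    return direct, mse(0.0, list_se)


if __name__ == "__main__":
    # Hypothetical values only.
    for reported in (0.18, 0.29):
        d, l = compare(0.30, reported, 1500, 1500, 1.0, 0.9)
        print(f"reported={reported:.2f}  MSE direct={d:.5f}  MSE list={l:.5f}")
```

Under these assumed numbers, a 12-point under-report leaves the list experiment with a much lower MSE despite its larger variance, while a 1-point under-report favors the direct question. Where the crossover lies depends on the sample size and item-count variances.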

The results thus strongly suggest that corruption measures based on citizen surveys cannot be treated as an ‘objective benchmark’ for the true level of corruption in a country. At the same time, the findings in this paper should not be taken as a discouragement of research on corruption or of efforts to quantify the incidence of corruption. Rather, given the immense importance of the topic, it is crucial that we scrutinize the methods we use and remain cognizant of potential sources of error and bias. Nor am I saying that existing measures based on citizen surveys should not be used, only that researchers should approach such measures with caution and think about how reporting bias might influence a given estimate.

The experiments in this study are only a first step in identifying these potential issues. A task for future research is to think more deeply about when and in what contexts reporting bias is most likely to be present, and which techniques can best mitigate the problem. As argued above, the Romanian case is rather typical of many developing democracies, where the question of corruption plays a prominent role in political discourse. The patterns uncovered in the study at hand are hence likely to be present in countries with similar dynamics, including much of Eastern Europe and several countries in Latin America. An interesting avenue for future studies is to extend the experiments to political systems with different dynamics from Romania's, for instance systems where party identification is stronger, like Spain. Overall, this study suggests that paying more attention to issues of response bias is an important part of further advancing the field of corruption research.

Notes

Corruption in the World Bank’s definition is “the extent to which public power is exercised for private gain, including both petty and grand forms of corruption as well as ‘capture’ of the state by elites and private interests” (Kaufmann et al. 2011, p. 4).

Unlike corruption surveys based on citizen interviews, expert-based corruption indicators have been widely discussed and criticized (see Hamilton and Hammer 2018 for an overview of this debate).

An exception in the literature, further discussed below, that focuses on potential under-reporting with regard to experiential corruption questions is Kraay and Murrell (2016).

The hypotheses and overall analysis plan were preregistered at EGAP (https://osf.io/pwdqt/). Full replication materials are available at the Harvard Dataverse: Agerberg (2020).

Arguably, the term bias is better defined in the case of corruption experiences, where the sensitivity of the issue might lead to under-reporting and hence to deviations from a true population parameter in the aggregate. The analogue in the case of corruption perceptions is less straightforward. I still use the term ‘political bias’ in the latter case to be consistent with the previous literature.

See Tourangeau and Yan (2007) for an overview of research on sensitive survey questions.

In the WVS data (wave 6) the mean answer in the full sample to the question of whether accepting a bribe can be justified is below 2 on a scale from 1 to 10, where 1 corresponds to “never justifiable”. The mean value is below 3 for every country in the sample except South Africa (4.1), the Philippines (4.1), and Haiti (5.2).

Specifically, \({\hat{\delta }}\) and \(\hat{\beta _{2}}\) are estimates of the conditional average treatment effect (CATE) for government supporters and others, respectively (Morgan and Winship 2014). A statistically significant \({\hat{\delta }}\) indicates that the effect for government supporters is different from the effect in the ‘others’ group.

The economy outcome is used as a point of comparison and does not represent a formal hypothesis to be tested. My expectations here are analogous to those with regard to the corruption variables: I expect government supporters to report a more positive view of the economy, and I expect these reports to be even more positive when respondents are primed with their political affiliation.

The list experiment was always implemented before the political questions. The randomization with regard to the list experiment was independent of the randomization with regard to the political bias experiment.

Research shows that the method works well for items with relatively low incidence (de Jonge and Nickerson 2014)—like corruption experiences.

Since the treatment assignment for the political bias experiment was independent of the treatment assignment in the list experiment a respondent can either be in both treatment groups (for both experiments), in one treatment group, or in no treatment group.

The formulation of the sensitive item in the list experiment and of the direct bribe question follows the formulation used in Eurobarometer (2017). This is the less sensitive of the two commonly used versions of the question; the other version asks directly whether the respondent has actually paid a bribe. Any SB found with regard to the somewhat less sensitive bribe question should therefore arguably be larger for the more sensitive question.

The procedure is implemented in the R package list.

The age quota had to be relaxed to reach the desired number of completes. The final sample is thus—as is often the case with online samples—slightly younger and also more educated than the overall Romanian population (see Supplementary Materials).

Given random assignment of the treatment, the basic model in (1) yields an unbiased estimate of the CATE (for government supporters and ‘others’) (Morgan and Winship 2014). The Supplementary Materials report several additional specifications, including models with covariates.

The graph excludes the treatment group for the ‘others’ category to make the graph easier to interpret. As can be seen in Table 1, this category differs little from the ‘others’ category in the control group.

The interaction effect is, however, significant at the 0.01-level in all models, and the effect size of 0.31–0.38 scale steps is still substantial. It should also be noted that the estimates for the first part of \(H_{1}\) are highly significant in all models for this outcome.

The magnitude of this estimate is quite similar to the estimates of under-reporting in Kraay and Murrell (2016).

It is hence not obvious that this estimate is actually substantially higher than the overall list estimate of 35%. At the same time, the results strongly suggest that the estimate for the direct question is substantially lower for government supporters.

This pattern is found in Gonzalez-Ocantos et al. (2012), although the estimates are uncertain.

References

Agerberg, M. (2017). Failed expectations: Quality of government and support for populist parties in Europe. European Journal of Political Research, 56, 578–600.

Agerberg, M. (2019). The curse of knowledge? Education, corruption, and politics. Political Behavior, 41(2), 369–399.

Agerberg, M. (2020). Replication data for: “Corrupted Estimates? Response Bias in Citizen Surveys on Corruption”. In Harvard Dataverse. https://dataverse.harvard.edu/dataset.xhtml?persistentId.

Anderson, C. J., & Tverdova, Y. V. (2003). Corruption, political allegiances, and attitudes toward government in contemporary democracies. American Journal of Political Science, 47(1), 91–109.

Anduiza, E., Gallego, A., & Munoz, J. (2013). Turning a blind eye: Experimental evidence of partisan bias in attitudes toward corruption. Comparative Political Studies, 46(12), 1664–1692.

Azfar, O., & Murrell, P. (2009). Identifying reticent respondents: Assessing the quality of survey data on corruption and values. Economic Development and Cultural Change, 57(2), 387–411.

Bardhan, P. (1997). Corruption and development: A review of issues. Journal of Economic Literature, 35(3), 1320–1346.

Bartels, L. M. (2002). Beyond the running tally: Partisan bias in political perceptions. Political Behavior, 24(2), 117–150.

Berinsky, A. J. (1999). The two faces of public opinion. American Journal of Political Science, 43(4), 1209–1230.

Bertrand, M., & Mullainathan, S. (2001). Do people mean what they say? Implications for subjective survey data. American Economic Review, 91(2), 67–72.

Blair, G., Chou, W., & Imai, K. (2019). List experiments with measurement error. Political Analysis, 27(4), 455–480.

Blair, G., Coppock, A., & Moor, M. (2018). When to worry about sensitivity bias: Evidence from 30 years of list experiments. Working Paper.

Blair, G., & Imai, K. (2012). Statistical analysis of list experiments. Political Analysis, 20(1), 47–77.

Blair, G., Imai, K., & Zhou, Y.-Y. (2015). Design and analysis of the randomized response technique. Journal of the American Statistical Association, 110(511), 1304–1319.

Bolsen, T., Druckman, J. N., & Cook, F. L. (2014). The influence of partisan motivated reasoning on public opinion. Political Behavior, 36(2), 235–262.

Bågenholm, A. (2013). Throwing the rascals out? The electoral effects of corruption allegations and corruption scandals in Europe 1981–2011. Crime, Law and Social Change, 60(5), 595–609.

Campbell, A., et al. (1960). The American voter. New York: Wiley.

Carkoglu, A., & Erdem Aytac, S. (2015). Who gets targeted for vote-buying? Evidence from an augmented list experiment in Turkey. European Political Science Review, 7(4), 547–566.

Coppock, A., & McClellan, O. A. (2019). Validating the demographic, political, psychological, and experimental results obtained from a new source of online survey respondents. Research and Politics, 6(1), 1–14.

Corstange, D. (2012). Vote trafficking in Lebanon. International Journal of Middle East Studies, 44, 483–505.

Dahlberg, S., & Holmberg, S. (2014). Democracy and bureaucracy: How their quality matters for popular satisfaction. West European Politics, 37(3), 515–537.

De Jonge, C. P. K., & Nickerson, D. W. (2014). Artificial inflation or deflation? Assessing the item count technique in comparative surveys. Political Behavior, 36, 659–682.

Donchev, D., & Ujhelyi, G. (2014). What do corruption indices measure? Economics and Politics, 26(2), 309–331.

Eurobarometer. (2014). Special Eurobarometer 397: Corruption. Technical report. Conducted by TNS Opinion and Social at the request of the European Commission.

Eurobarometer. (2017). Special Eurobarometer 470: Corruption. Technical report. Conducted by TNS Opinion and Social at the request of the European Commission.

Evans, G., & Andersen, R. (2006). The political conditioning of economic perceptions. The Journal of Politics, 68(1), 194–207.

Fendrich, M., & Vaughn, C. M. (1994). Diminished lifetime substance use over time: An inquiry into differential underreporting. Public Opinion Quarterly, 58, 96–123.

Fischle, M. (2000). Mass response to the Lewinsky scandal: Motivated reasoning or Bayesian updating? Political Psychology, 21(1), 135–158.

Fisman, R., & Golden, M. A. (2017). Corruption: What everyone needs to know. New York: Oxford University Press.

Flynn, D. J., Nyhan, B., & Reifler, J. (2017). The nature and origins of misperceptions: Understanding false and unsupported beliefs about politics. Advances in Political Psychology, 38(1), 127–150.

Gerber, A. S., & Huber, G. A. (2010). Partisanship, political control, and economic assessments. American Journal of Political Science, 54(1), 153–173.

Gingerich, D. W. (2009). Corruption and political decay: Evidence from Bolivia. Quarterly Journal of Political Science, 4(1), 1–34.

Glynn, A. N. (2013). What can we learn with statistical truth serum? Public Opinion Quarterly, 77, 159–172.

Goetz, A. M. (2007). Political cleaners: Women as the new anti-corruption force? Development and Change, 38(1), 87–105.

Gonzalez-Ocantos, E., et al. (2012). Vote buying and social desirability bias: Experimental evidence from Nicaragua. American Journal of Political Science, 56(1), 202–217.

Hajdu, M., et al. (2018). Content analysis of corruption coverage: Cross-national differences and commonalities. European Journal of Communication, 33(1), 7–21.

Hamilton, A., & Hammer, C. (2018). Can we measure the power of the grabbing hand? A comparative analysis of different indicators of corruption. Technical report. World Bank, Policy Research Working Paper 8299.

Heath, A. F., Richards, L., & de Graaf, N. D. (2016). Explaining corruption in the developed world: The potential of sociological approaches. Annual Review of Sociology, 42, 51–79.

Holbrook, A. L., & Krosnick, J. A. (2010). Social desirability bias in voter turnout reports: Tests using the item count technique. The Public Opinion Quarterly, 74(1), 37–67.

Holmberg, S., & Rothstein, B. (2011). Dying of corruption. Health Economics, Policy and Law, 6(4), 529–547.

Holmes, L. (2015). Corruption: A very short introduction. Oxford: Oxford University Press.

Imai, K. (2011). Multivariate regression analysis for the item count technique. Journal of the American Statistical Association, 106(494), 407–416.

Jerit, J., & Barabas, J. (2012). Partisan perceptual bias and the information environment. Journal of Politics, 74(3), 672–684.

Karklins, R. (2005). The system made me do it: Corruption in post-Communist societies. Armonk, NY: M.E. Sharpe.

Kaufmann, D., Kraay, A., & Mastruzzi, M. (2011). The worldwide governance indicators: Methodology and analytical issues. Hague Journal on the Rule of Law, 3(2), 220–246.

Keefer, P. (2007). Clientelism, credibility, and the policy choices of young democracies. American Journal of Political Science, 51(4), 804–821.

Klar, S. (2013). The influence of competing identity primes on political preferences. The Journal of Politics, 75(4), 1108–1124.

Klasnja, M. (2015). Corruption and the incumbency disadvantage: Theory and evidence. Journal of Politics, 77(4), 928–942.

Klasnja, M., & Titiunik, R. (2017). The incumbency curse: Weak parties, term limits, and unfulfilled accountability. American Political Science Review, 111(1), 129–148.

Klasnja, M., Tucker, J. A., & Deegan-Krause, K. (2016). Pocketbook vs. sociotropic corruption voting. British Journal of Political Science, 46(1), 67–94.

Kraay, A., & Murrell, P. (2016). Misunderestimating corruption. The Review of Economics and Statistics, 98(3), 455–466.

Krumpal, I. (2013). Determinants of social desirability bias in sensitive surveys: A literature review. Quality and Quantity, 47, 2025–2047.

Lau, R. R., Sears, D. O., & Jessor, T. (1990). Fact or artifact revisited: Survey instrument effects and pocketbook voting. Political Behavior, 12(3), 217–242.

Leeper, T. J., & Slothuus, R. (2014). Political parties, motivated reasoning, and public opinion formation. Advances in Political Psychology, 35(1), 129–156.

Mauro, P. (1995). Corruption and growth. The Quarterly Journal of Economics, 110(3), 681–712.

Mocan, N. (2004). What determines corruption? International evidence from micro data. NBER Working Paper Series 10460.

Morgan, S. L., & Winship, C. (2014). Counterfactuals and causal inference: Methods and principles for social research. New York: Cambridge University Press.

Morris, S. D., & Klesner, J. L. (2010). Corruption and trust: Theoretical considerations and evidence from Mexico. Comparative Political Studies, 43(10), 1258–1285.

Mungiu-Pippidi, A. (2013). Becoming Denmark: Historical designs of corruption control. Social Research: An International Quarterly, 80(4), 1259–1286.

Palmer, H. D., & Duch, R. M. (2001). Do surveys provide representative or whimsical assessments of the economy? Political Analysis, 9(1), 58–77.

Persson, A., Rothstein, B., & Teorell, J. (2013). Why anticorruption reforms fail: Systemic corruption as a collective action problem. Governance, 26(3), 449–471.

Razafindrakoto, M., & Roubaud, F. (2010). Are international databases on corruption reliable? A comparison of expert opinion surveys and household surveys in Sub-Saharan Africa. World Development, 38(8), 1057–1069.

Roberts, A. (2008). Hyperaccountability: Economic voting in Central and Eastern Europe. Electoral Studies, 27(3), 533–546.

Rose-Ackerman, S. (1999). Corruption and government: Causes, consequences, and reform. Cambridge: Cambridge University Press.

Rothstein, B. (2011). The quality of government: Corruption, social trust and inequality in international perspective. Chicago: The University of Chicago Press.

Rothstein, B., & Varraich, A. (2017). Making sense of corruption. New York: Cambridge University Press.

Sears, D. O., & Lau, R. R. (1983). Inducing apparently self-interested political preferences. American Journal of Political Science, 27(2), 223–252.

Seligson, M. A. (2002). The impact of corruption on regime legitimacy: A comparative study of four Latin American countries. The Journal of Politics, 64(2), 408–433.

Taber, C. S., & Lodge, M. (2006). Motivated skepticism in the evaluation of political beliefs. American Journal of Political Science, 50(3), 755–769.

Tatar, M. I. (2013). From partisanship to abstention: Changing types of electoral behavior in a new democracy. Journal of Identity and Migration Studies, 7(1), 2–30.

The New York Times. (2017, May 4). Romania drops measure to pardon corrupt officials. https://www.nytimes.com/2017/05/04/world/europe/romania-corruption-protests.html.

Tourangeau, R., & Yan, T. (2007). Sensitive questions in surveys. Psychological Bulletin, 133(5), 859–883.

Treisman, D. (2007). What have we learned about the causes of corruption from ten years of cross-national empirical research? Annual Review of Political Science, 10, 211–244.

Uppal, Y. (2009). The disadvantaged incumbents: Estimating incumbency effects in Indian state legislatures. Public Choice, 138(1–2), 9–27.

Wilcox, N. T., & Wlezien, C. (1996). The contamination of responses to survey items: Economic perceptions and political judgments. Political Analysis, 5(1), 181–213.

World Economic Forum. (2017). Global shapers survey. http://shaperssurvey.org/static/data/WEF_GSC_Annual_Survey_2017.pdf.

Wysmulek, I. (2019). Using public opinion surveys to evaluate corruption in Europe: Trends in the corruption items of 21 international survey projects, 1989–2017. Quality and Quantity, 53, 2589–2610.

Xezonakis, G., Kosmidis, S., & Dahlberg, S. (2016). Can electors combat corruption? Institutional arrangements and citizen behaviour. European Journal of Political Research, 55, 160–176.

Zaller, J. (1992). The nature and origins of mass opinion. Cambridge: Cambridge University Press.

Zechmeister, E. J., & Zizumbo-Colunga, D. (2013). The varying political toll of concerns about corruption in good versus bad economic times. Comparative Political Studies, 46(10), 1190–1218.

Acknowledgements

Open access funding provided by University of Gothenburg. The author would like to thank Lena Wängnerud, Nicholas Charron, the Quality of Government Institute, and the four anonymous reviewers at Political Behavior for their valuable comments and suggestions on earlier drafts of this article.

Funding

Mattias Agerberg was supported by Helge Ax:son Johnsons Stiftelse (Grant No. F18-0296) and Kungl. Vetenskaps- och Vitterhets-Samhället i Göteborg (Grant No. W2018-0103).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Agerberg, M. Corrupted Estimates? Response Bias in Citizen Surveys on Corruption. Polit Behav 44, 653–678 (2022). https://doi.org/10.1007/s11109-020-09630-5