Abstract

Embodied learning environments have a substantial share in teaching interventions and research for enhancing learning in science, technology, engineering, and mathematics (STEM) education. In these learning environments, students’ bodily experiences are an essential part of the learning activities and hence, of the learning. In this systematic review, we focused on embodied learning environments supporting students’ understanding of graphing change in the context of modeling motion. Our goal was to deepen the theoretical understanding of what aspects of these embodied learning environments are important for teaching and learning. We specified four embodied configurations by juxtaposing embodied learning environments on the degree of bodily involvement (own and others/objects’ motion) and immediacy (immediate and non-immediate) resulting in four classes of embodied learning environments. Our review included 44 articles (comprising 62 learning environments) and uncovered eight mediating factors, as described by the authors of the reviewed articles: real-world context, multimodality, linking motion to graph, multiple representations, semiotics, student control, attention capturing, and cognitive conflict. Different combinations of mediating factors were identified in each class of embodied learning environments. Additionally, we found that learning environments making use of students’ own motion immediately linked to its representation were most effective in terms of learning outcomes. Implications of this review for future research and the design of embodied learning environments are discussed.

Introduction

Within the domain of STEM teaching and learning, a large number of studies have been conducted incorporating embodied mathematics activities (e.g., Abrahamson and Lindgren 2014; Tran et al. 2017). These are activities in which students’ perceptual-motor experiences play an explicit role in the learning process (Lindgren and Johnson-Glenberg 2013). Using perceptual-motor activities within mathematics education fits within the theoretical framework of embodied cognition (e.g., Barsalou 2010; Gallese and Lakoff 2005; Glenberg and Gallese 2012; Núñez et al. 1999; Wilson 2002). This theory emphasizes the idea that learning and cognitive processes take place in the interaction between one’s body and its physical environment. Yet, as described by Hayes and Kraemer (2017), little is known about how embodied processes, such as moving one’s body through space, contribute to STEM learning (see also DeSutter and Stieff 2017; Han and Black 2011; Kontra et al. 2015). Therefore, it is no surprise that recent reviews call for more research into principles of embodied (i.e., motion- and body-based) interventions for mathematics learning, as well as a systematic inventory of their presumed usefulness (Nathan et al. 2017; Nathan and Walkington 2017). In line with these reviews, we want to shed light on the significance of embodied cognition theory for mathematics teaching and learning. Yet, we want to take a small step back and take a critical look at the extant research. We particularly focus on a mathematics domain that has a tradition of including bodily experiences for learning: graphing change in the context of modeling motion.

Reviewing the operationalization of aspects of a theory in learning environments can be a helpful strategy to elaborate a theoretical perspective (Bikner-Ahsbahs and Prediger 2006) and can help demonstrate how theoretical considerations are useful for the teaching and learning of mathematics (Sriraman and English 2010). Therefore, we decided to review research literature to map the existing landscape of embodied learning environments supporting students’ understanding of graphing motion. In this way, we aim to elucidate the potential of these embodied learning environments for students, teachers, mathematics education researchers, and curriculum designers, and to assess their theoretical relevance in order to advance and inform the embodied cognition thesis.

Embodied Cognition

Considering bodily experiences as fundamental for learning has a rather long history in the educational and developmental sciences, and has recently received an increased interest through the embodied cognition paradigm (e.g., Abrahamson and Bakker 2016; Radford et al. 2005; Wilson 2002). Piaget (1964) described how during the first sensorimotor developmental stage a child acquires “the practical knowledge which constitutes the substructure of later representational knowledge” (p. 177). However, according to Piaget, the significance of sensorimotor cognition would be temporary and limited to the first stages of cognitive development. In the 1980s, this interpretation changed (Núñez et al. 1999), leading to the now common proposition that “sensorimotor activity is not merely a stage of development that fades away in more advanced stages, but rather is thoroughly present in thinking and conceptualizing” (Radford et al. 2005, p. 114, see also Oudgenoeg-Paz et al. 2016). Accordingly, current embodied cognition theories emphasize that the role of perception-action structures is not limited to concrete operational thought but extends to abstract higher-order cognitive processes involved in language and mathematics as well (Barsalou 1999). Likewise, accepting perception-action as a basic building block of cognition implies a view on cognition as, at least partly, situated (or embedded), where the interaction of the body with objects in their real spatial context is a major gateway to cognition.

Embodied cognition theory refers to a variety of different but related theories varying in how the relationship between (lower-order) sensorimotor processes and (higher-order) abstract cognitive processes is conceived. The conceptualization of this relationship can be more or less radical, a distinction that relates to, but does not coincide with, the distinction between “simple” and “radical” embodiment proposed by Clark (1999). As Clark (1999) describes, simple, non-radical views of embodiment posit that bodily experiences and interactions of the body with the environment can support or influence (“on-line” and “off-line”) cognitive processes; for example, finger counting can help to build the concept of number. The bodily experiences are considered to add “color” to abstract concepts, yet without fundamentally altering the a-modal discursive nature of these concepts. This simple, non-radical view on embodiment is fully compatible with the computational (cognitivist) approach to cognition, as the embodiment of cognition is seen as an additional but not essential phenomenon (Goldinger et al. 2016; Goldman 2012; Wilson 2002).

A radical reading of embodiment, in contrast, holds that all human cognition emerges through, and exists in, the recurrent cycles of perception-action of the physical body in its environment (Glenberg 1997; Kiverstein 2012). Per this view, real knowledge resides in immediate environmental perception-action cycles (Wilson and Golonka 2013), which make mental representations, such as abstract concepts in mathematics, “empty and misguided notions” (Goldinger et al. 2016, p. 962). Hence, the radical view has difficulty with explaining how cognition evolves in the absence of direct environmental stimuli (as in off-line cognitive activities, see also Pouw et al. 2014) or, for example, when dealing with symbolic language or mental arithmetic, which are “hungry” for mental representation (Clark 1999; Wilson and Golonka 2013). This view is at odds with rationalist or mentalist approaches as in computational models of cognition.

Many embodiment researchers position themselves somewhere in-between the simple and radical view in line with Goldman (2012), who claims that there is compelling behavioral and neuroscientific evidence for a moderate view of embodiment (see also Gallese and Lakoff 2005; Lakoff 2014; Pulvermüller 2013). A moderate view on embodied cognition acknowledges the critical importance of bodily experiences as part of the meaning of both concrete and abstract concepts, thus as constituting the fundament of all human knowledge, but allows for two additional resources: (1) the non-immediate (off-line) grounding of cognition in bodily experiences through imagining or mentally simulating perceptions and actions by re-using the sensorimotor circuits of the brain involved in actual (on-line) perceiving or performing these actions (also referred to as mirroring, see below); and (2) the connection, based on Hebbian-associative learning, of the system of multimodal sensorimotor cognition to a system of a-modal (verbal) conceptual knowledge (Anderson 2010; Lakoff 2014; Pulvermüller 2013). With these two additional resources, moderate embodiment endorses a view on human cognition as essentially situated and embodied, while allowing for grounded but abstract mental processes, such as reasoning and combining elementary embodied concepts into more complex abstract concepts. According to this view, acquired action-perception structures can be re-used through mental simulation, as perceptual symbols (Barsalou 1999), in situations where on the basis of previous experiences and well-established skills, new (and increasingly abstract) ideas need to be constructed and understood, also in off-line contexts (Anderson 2010; Koziol et al. 2011).

In line with embodiment theories, various studies have shown the positive effects of one’s own bodily involvement on learning (e.g., Dackermann et al. 2017; Johnson-Glenberg et al. 2014; Nemirovsky et al. 2012). For example, a study by Ruiter et al. (2015) investigated the influence of task-relevant whole bodily motion on first-grade students’ learning of two-digit numbers. Here, step size (small, medium, large) represented different sized number units (1, 5, 10). They found that students in the task-relevant whole bodily motion conditions outperformed students in the non-motion condition (where the movements were task-irrelevant) in learning two-digit numbers. Other studies have shown the beneficial effects of part-bodily motion on learning, such as students’ hand gestures (Alibali and Nathan 2012; Goldin-Meadow et al. 2009), finger tracing (Agostinho et al. 2015), finger counting (Domahs et al. 2010), or arm movements (Lindgren and Johnson-Glenberg 2013; Smith et al. 2014). Similarly, giving students the opportunity to observe or influence movements of other persons or of objects, instead of making these movements themselves, can lead to improved understanding as well, which suggests, in line with the moderate embodiment position, involvement of mirroring or simulation mechanisms (De Koning and Tabbers 2011; Van Gog et al. 2009). In the study of Bokosmaty et al. (2017), fifth-grade students observed a teacher demonstrating a geometry concept. The students improved their understanding of geometry after manipulating the geometric properties of triangles as well as observing their teacher doing so. Influencing and observing the movements of others and objects entails other ways of bodily involvement than making movements of your own. A large portion of the research on observing others or objects has been devoted to observing teachers’ use of gestures (e.g., Singer and Goldin-Meadow 2005) and observing the movements of somebody or something else through video examples or animations (e.g., De Koning and Tabbers 2011; Post et al. 2013).

Perceptual-motor experiences encompass a wide variety of bodily activities ranging from observing and influencing other (human) movements to making movements oneself. In a moderate embodiment perspective, following the mirroring systems hypothesis (e.g., Rizzolatti and Craighero 2004), all these ways of directly and indirectly involving the body can be regarded as “embodied” (Van Gog et al. 2009). According to the mirroring systems hypothesis, the same sensorimotor areas in the brain are activated when observing actions by others as when performing these actions oneself (e.g., Anderson 2010; Calvo-Merino et al. 2006; Gallese and Lakoff 2005; Schwartz et al. 2012). Indeed, brain imaging studies show similar patterns of brain activation when subjects hear or read a story in which a particular action is described, when they imagine the event involving this action, or when they act out the specific event (Grèzes and Decety 2001; Pulvermüller 2013; Pulvermüller and Fadiga 2010), implying that understanding a concept (e.g., the verb kicking) relies on motor activation (Goldman 2012; Pulvermüller and Fadiga 2010).

In addition to the different levels of bodily involvement, the immediacy of the embodiment of cognitive activities can also differ between learning situations. Immediate cognitive activities are activities where immediate, or on-line, perceptual-motor interaction with the physical environment is available to the student (Borghi and Cimatti 2010; Wilson 2002). For example, Smith et al. (2014) had fourth-grade students create both static and dynamic angle representations by moving their arms in front of a Kinect sensor. The angles, reflected in the position of their arms, were immediately represented on the digital blackboard. This immediate link between students’ physical experiences and the abstract visual representation of angles facilitated students’ improved understanding of angle measurement after completing the body-based angle task. However, many embodied learning environments present learners with non-immediate, or off-line, cognitive activities. Typically, in non-immediate learning situations, students first have bodily experiences, for example, when they explore the shapes of particular objects, which are then followed by the learning activity in which the to-be-learned concepts are presented (Pouw et al. 2014). In situations where an immediate task-relevant interaction with the physical environment is not available, embodied simulation mechanisms may play a crucial role. According to De Koning and Tabbers (2011), through embodied simulations, previously acquired sensorimotor experiences are made available for knowledge construction processes in the learning activity (e.g., Barsalou 1999).

Embodied Learning Environments for Graphing Motion

Relevance of Embodied Learning Environments for Graphing Motion

Through learning environments based on embodied cognition theory, students are provided with opportunities to ground abstract formal concepts in concrete bodily experiences (Glenberg 2010). Such embodiment-based learning environments are often used in efforts to support students’ understanding of graphing motion by, for example, showing how distance changing over time is represented graphically. Like many topics within mathematics, developing an understanding of graphical representations describing dynamic situations can be challenging for students. Among other things, students experience difficulties with distinguishing between discrete and continuous representations of change, recognizing the meaning of the represented variables and their pattern of co-variation (Leinhardt et al. 1990), and differentiating between the shape of a graph and characteristics of the situation or the construct it represents (e.g., McDermott et al. 1987; Radford 2009a). Yet, graphical representations of dynamic situations are foundational for the study of mathematics and science, and the absence of a solid understanding of graphical representations can make learning about rate and functions in the study of calculus and kinematics even more difficult (Glazer 2011).

Learning environments supporting students’ understanding of graphs of change and motion often incorporate students’ own motion experiences. According to Lakoff and Núñez (2000), experiencing change, in the context of graphs and functions, is related to the embodied image schemes of fictive motion and the source-path-goal schema. Essentially, these embodied image schemes allow static representations to be conceptualized as having dynamic components (Botzer and Yerushalmy 2008). Metaphorical projection, by means of these image schemes, is the main embodied cognitive mechanism providing the link between the source domain experiences (such as moving through space) and target domain mathematical knowledge (such as developing an understanding of graphically represented motion) (e.g., Font et al. 2010; Núñez et al. 1999).

Operationalizing Embodied Learning Environments for Graphing Motion

Over the past years, many efforts have been undertaken to categorize embodied learning. For example, taxonomies of embodied learning have been developed in the context of technology (Johnson-Glenberg et al. 2014; Melcer and Isbister 2016), full-body interactions (Malinverni and Pares 2014), learning with manipulatives (Reed 2018), and, more generally, for the field of learning and instruction (Skulmowski and Rey 2018). The taxonomy of embodied learning described by Johnson-Glenberg et al. (2014) consists of four degrees of embodiment in which each degree entails a different level of bodily involvement, or motoric engagement. Skulmowski and Rey (2018) combined the two lowest degrees of motoric engagement found in the research of Johnson-Glenberg et al. (2014) into the category lower levels of bodily engagement, such as observation and finger tracing, and the two highest degrees into the category higher levels of bodily engagement, such as performing bodily movements and locomotion. Both taxonomies consider the conceptual link between the concrete bodily experience and the intended concept, termed gestural congruency (Johnson-Glenberg et al. 2014) or task-integration (Skulmowski and Rey 2018). In both taxonomies, the bodily experience can be conceptually related to the learning content or not. We also see gestural congruency and task integration as important elements on which embodied learning environments can vary. However, for embodied learning environments supporting students’ understanding of graphing change, the congruency between a motion event (either experienced or observed) and the graph of that motion is already an essential element of the learning environment, which will make task integration a less informative dimension for the purpose of this review.

The aforementioned levels of bodily involvement provide us with a base to categorize embodied learning environments supporting students’ understanding of graphing motion. A further way to categorize these learning environments refers to the contiguity of motion and graph. The graphical representation of motion can be constructed simultaneously with the motion event or at a later moment. For this temporal aspect, we use the term immediacy. Because the motion and the corresponding representation are located in different representational spaces (i.e., the space in which you move/influence/observe versus the space in which the motion is represented), this distinction between immediate (or on-line) activities versus non-immediate (or off-line) activities might be especially relevant for classifying embodied learning environments supporting students’ understanding of graphing motion.

In sum, to get a grip on the plethora of embodied configurations of the learning environments that one can come across in educational research literature, we propose to categorize embodied learning environments supporting students’ understanding of graphing motion on two dimensions: bodily involvement and immediacy (see Fig. 1). For bodily involvement, a distinction is made between own motion and observing others/objects’ motion. One’s own motion entails a direct bodily experience, while the motion of others/objects is experienced indirectly. For the latter, mirror neural activity is the main embodied cognitive mechanism, as the mirror-neuron system is activated when observing movements made by others/objects. In line with this, we defined bodily involvement on a scale ranging from “motor execution,” referring to one’s own motion, to “motor mirroring,” indicating that when observing others/objects’ motion, an individual starts to rely on (neural) mirroring mechanisms (e.g., Anderson 2010; Gallese and Lakoff 2005; Schwartz et al. 2012).

For immediacy, a distinction is made between immediate and non-immediate (see Fig. 1), taking into account the distinction between “on-line” cognitive activities and “off-line” cognitive activities (Pouw et al. 2014; Wilson 2002). In the first case, an immediate task-relevant interaction with the physical environment is acted out, whereas in the second case this interaction is not simultaneously available. For the latter, embodied simulation is the main theoretical embodied cognitive mechanism, meaning that previously acquired sensorimotor experiences are activated. Accordingly, we defined immediacy on a scale ranging from “direct enactment,” referring to cognitive activity that is situated in the participant–environment interaction in the presence of direct environmental stimuli, to “reactivated enactment,” indicating that within non-immediate learning environments, an individual starts to rely on embodied simulations, which are re-activations of previous sensorimotor experiences.

Each quadrant of the taxonomy presented in Fig. 1 may give room for specific factors that are prone to mediate learning. Reviews on embodied learning have identified valuable features of embodied learning environments that impact students’ learning processes. For example, in their review of embodied numerical training programs, Dackermann et al. (2017) detected three working mechanisms of embodied learning environments: mapping mechanisms between the bodily experience and the intended concept, interactions between different regions of personal space, and the integration of different spatial frames of reference. Tran et al. (2017) also found mapping mechanisms (as movements being in accordance with the mental model of the mathematical concept) to be an important factor within embodied learning environments. Additionally, they posit that the movements students make should be represented visibly to give them the opportunity to observe and reflect on these movements. Within the context of graphing motion, we expect aspects like participant–environment interactions, attentive processes, mapping mechanisms, and multimodal aspects of the learning environment to be of importance.

Research Focus

In this article, we describe a review of the research literature on teaching graphing change and, more specifically, graphing motion (e.g., graphical representations of distance changing over time). We focused on learning environments in which students’ bodily experiences are an essential part of the learning activities and the learning. We were especially interested in articles in which these embodied learning environments are used, described, and empirically evaluated, for example, by means of an experiment. Based on these articles, we aimed to specify the embodied configurations that constitute these learning environments; identify the presumed factors that mediate learning within these learning environments, as described by the authors; and evaluate the efficacy of these learning environments by considering the learning outcomes. Since graphing motion is a key topic within both mathematics and science and already present within the early grades, we decided to include studies from primary education to higher education. To guide our review, we formulated the following four research questions:

What does the research literature on teaching students graphing motion using learning environments that incorporate students’ own bodily experiences report on…

1. …the embodied configuration (in terms of bodily involvement and immediacy) of these learning environments?

2. …the presumed factors mediating learning within these learning environments?

3. …the relationship between the learning environments’ embodied configuration and the factors that mediate learning?

4. …the efficacy of embodied learning environments for graphing motion?

Method

Literature Search

The literature search was carried out in four databases: Web of Science, ERIC, PsycINFO, and Scopus. As a first quality criterion, we searched for empirical research articles published in peer-reviewed journals and written in the English language. We did not restrict the publication date because we were also interested in articles not (yet) mentioning embodied cognition as the main or related theory, but still applying its core features, for example, in the field of kinesthetic learning. There were no further methodological restrictions, so we included articles with qualitative studies, quantitative studies, and mixed-method studies. In a stepwise process, we defined a query consisting of Education × Learning facilitator × Domain × Graph × Graph variables (for the full query, see Appendix 1). Our initial search, conducted on April 6, 2017, generated 1953 journal articles (see Fig. 2). After deduplication, 1651 unique publications remained.

Selection of Articles

The selection process was facilitated by organizing all publications and coding information in a database, using Excel. Selection decisions were frequently discussed with all authors. We first performed a quick scan of the full text of the 1651 articles to identify the articles on graphing motion. Articles not written in English (153), not about education and learning (979), not in the STEM domain (306), not including graphing activities (79), not containing motion data (94), or not having a full-text available (2) were excluded (see Fig. 2). This resulted in 36 relevant articles for the purpose of the review. By snowballing the reference lists of these articles, 13 additional articles of interest were found. Then we inspected the full texts of these 49 articles’ methodology and results, only including articles in which the embodied learning environments were sufficiently described (i.e., containing a clear description of tools and tasks) and the bodily experiences could be considered task relevant. This resulted in the exclusion of five articles and the final selection of 44 articles for our analysis.

Data Extraction and Analysis

The 44 articles were first coded in terms of the contextual information regarding the studies carried out, comprising school level, sample, subject matter domain, research design, tools, learning activities, intervention length, dependent measures, and reported learning outcomes. Then we zoomed in on the learning environments, our units of analysis. A learning environment is a setting (e.g., a classroom) in which a set of activities is provided to the participants (e.g., a teaching sequence given to a group of Grade 5 students). In many articles, the learning environments differed between conditions. The 44 articles contained a total of 62 different embodied learning environments. Some of these learning environments were used as a control condition and some as experimental conditions. Hereafter, we coded the learning environments on their bodily involvement and immediacy as an indication of their embodied configuration. Finally, we extracted the presumed mediating factors for students’ understanding of graphing motion from each article and looked at the four classes of embodied learning environments in which they were mentioned.

Bodily involvement gives an indication of students’ engagement with a movement, ranging from an action of the whole body to observing the movement of others. For example, a learning environment in which a student has to move a small toy car over the table by moving part of her/his body was qualified as part bodily motion. However, because most articles lacked this information, the number of bodily actions and their duration were not coded. Immediacy gives an indication of the temporal alignment of motion and graph. This temporal alignment relates to whether or not there is an immediate task-relevant interaction with the physical environment. For example, a learning environment in which a student has to move in front of a motion sensor and later constructs a graph using these data was qualified as non-immediate, whereas a learning environment where the graphical representation is constructed in parallel with the movement of that student was qualified as immediate. These latter learning environments were often technology enriched, since technology eases the immediate display of a graph alongside a motion event. See Table 1 for a description of the degrees of bodily involvement and immediacy.

Learning environments containing more than one degree of bodily involvement and immediacy were assigned to the highest degree. For example, when a learning environment included both whole-bodily motion and influencing and observing others’ or objects’ motion, the learning environment was assigned to the category whole bodily motion. The same holds for immediacy. Learning environments containing both immediate and non-immediate bodily experiences were assigned to the category immediate. An independent second rater coded a subsample of 12 articles containing 20 learning environments (> 25%). Inter-rater reliability was very good for the bodily involvement dimension (Cohen’s Kappa = 1.00) and good for the immediacy dimension (Cohen’s Kappa = 0.74). We clustered the learning environments into four main classes in which the degrees of bodily involvement and immediacy are combined: Class I—Immediate Own Motion, Class II—Immediate Others/Objects’ Motion, Class III—Non-immediate Own Motion, and Class IV—Non-immediate Others/Objects’ Motion.
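As an illustration of the agreement statistic reported above, Cohen's kappa can be computed from two raters' codes as sketched below. This is a minimal sketch under stated assumptions: the function name `cohens_kappa` and the example codes are hypothetical and made up for illustration; they are not the review's data and do not reproduce the reported values.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning nominal codes to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same code by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over codes.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[code] * count_b[code] for code in count_a) / (n * n)
    if p_e == 1.0:
        # Both raters used one identical code throughout; treated here as perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Made-up immediacy codes for ten learning environments (illustration only).
first_rater = ["immediate"] * 6 + ["non-immediate"] * 4
second_rater = ["immediate"] * 5 + ["non-immediate"] * 5
kappa = cohens_kappa(first_rater, second_rater)
print(round(kappa, 2))
```

In this made-up example the raters disagree on one of ten items, so observed agreement is 0.9 while chance agreement is 0.5; kappa corrects the former for the latter.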

In order to extract the mediating factors from the described studies, the articles were carefully read and indications of mediating factors, presumed by the authors, were recorded. First, these mediating factors were recorded based on the terminology used by the authors. Later, these factors were clustered in categories. Finding a new mediating factor sometimes led to changing the categories or combining and splitting mediator categories. For example, an article mentioning gesturing as supporting students’ understanding of graphing motion first fell in a category labeled “gestures.” Later, we decided to create a category “semiotics” in which we grouped all mediating factors related to meaning-supporting sign systems. In this way, throughout several iterations of reading and data extraction, we arrived at eight overarching mediator categories: real-world context, multimodality, linking motion to graph, multiple representations, semiotics, student control, attention capturing, and cognitive conflict.

The Subject Matter Domains Addressed in the Articles

As a result of our search query, all articles either addressed topics from the domains of mathematics and physics or integrated topics from both domains. The mathematics-oriented articles used motion to address the teaching and learning of graphs as visual representations of dynamic data (e.g., Boyd and Rubin 1996; Robutti 2006). Some of these articles also included more advanced topics like functions and the mathematics of change (calculus) (e.g., Ferrara 2014; Salinas et al. 2016). Most of the articles in physics addressed the relation between distance traveled, velocity, and acceleration (kinematics) (e.g., Anderson and Wall 2016; Mitnik et al. 2009). Articles that used an integrated approach addressed aspects from both physics, such as distance traveled, velocity, and acceleration, and mathematics, such as slope and rate of change (e.g., Nemirovsky et al. 1998; Noble et al. 2001).

All articles, in both mathematics and physics, included learning environments in which data are represented by means of graphs. These data can be first-order data such as distance and time measures, which can be represented in distance–time graphs (e.g., Deniz and Dulger 2012; Kurz and Serrano 2015) or derived data resulting in velocity–time graphs or acceleration–time graphs (e.g., Anderson and Wall 2016; Nemirovsky et al. 1998; Struck and Yerrick 2010). Also, in some mathematical learning environments, graphs were drawn of functions (e.g., linear and quadratic functions) (e.g., Noble et al. 2004; Salinas et al. 2016; Stylianou et al. 2005; Wilhelm and Confrey 2003) or, related to physics, of uniform and oscillatory motion (e.g., Kelly and Crawford 1996; Metcalf and Tinker 2004).
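The distinction between first-order and derived data can be made concrete with a small numerical sketch. Assuming a motion sensor delivers time-stamped distance samples (the sample values and the helper name `finite_difference` below are hypothetical, chosen only for illustration), the points of a velocity–time graph and an acceleration–time graph can be approximated by taking average rates of change between consecutive samples.

```python
def finite_difference(times, values):
    """Average rate of change of `values` between consecutive time samples."""
    if len(times) != len(values):
        raise ValueError("times and values must have the same length")
    return [
        (values[i + 1] - values[i]) / (times[i + 1] - times[i])
        for i in range(len(times) - 1)
    ]


def midpoints(times):
    """Times halfway between consecutive samples, where each rate is plotted."""
    return [(times[i] + times[i + 1]) / 2 for i in range(len(times) - 1)]


# Made-up first-order data: distance (m) sampled once per second.
times = [0.0, 1.0, 2.0, 3.0, 4.0]
distance = [0.0, 1.0, 3.0, 6.0, 10.0]

# Derived data: velocity (m/s) from distance, then acceleration (m/s^2) from velocity.
velocity = finite_difference(times, distance)
velocity_times = midpoints(times)
acceleration = finite_difference(velocity_times, velocity)

print(velocity)      # points of a velocity-time graph
print(acceleration)  # points of an acceleration-time graph
```

Each derived series has one point fewer than the series it comes from, which is one reason derived graphs are further removed from the measured motion than distance–time graphs.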

Efficacy of Embodied Learning Environments for Graphing Motion

Of all included articles (n = 44), 26 articles gave information about the efficacy of the embodied learning environments for graphing motion. In these articles, the learning outcomes of multiple groups or pre- and post-tests were compared. To ensure the robustness of our evaluation of the reported learning outcomes, we carried out a quality check of the research design of the articles and the reported learning outcomes per learning environment (Appendix 2). The study design of these 26 articles was either (quasi)experimental (n = 15) or descriptive (n = 11). The mean quality rating (range, 5–20) for this subset of articles was 11.77, with a standard deviation of 2.93. From this quality rating, we infer that the methodological quality of this subset of articles is sufficient.

Classes of Embodied Learning Environments

The 62 learning environments were classified on bodily involvement and immediacy (see Fig. 3). Class I—Immediate Own Motion was the largest (34 learning environments). Class III—Non-immediate Own Motion was the smallest (4 learning environments). The other two classes contained the same number of learning environments (12 learning environments each).

Class I—Immediate Own Motion

In 30 out of the 34 learning environments that belonged to Class I—Immediate Own Motion, motion sensor technology was used (e.g., Anderson and Wall 2016; Ferrara 2014; Nemirovsky et al. 1998), allowing for the immediate representation of a student’s motion as a graph. For example, in the study of Robutti (2006), students started by interpreting a description of a motion situation, which was followed by sketching a graph of this situation. Finally, students acted out the motion event by walking in front of the motion sensor. The translation of their movements into a graphical representation happened immediately and was represented on the screen of a graphing calculator. An example where students used parts of their body can be found in Anastopoulou et al. (2011). They asked students to replicate distance–time and velocity–time graphs by moving their hands in front of a motion sensor. Again, an immediate translation of the motion into a graphical representation was provided. In other studies, it was not the motion of students’ hands that was represented, but the motion of an object that students moved with their hands, for example, a motion sensor attached to a wheel which was rolled over a table (Russell et al. 2003). In another study, students were asked to replicate given distance–time, speed–time, or acceleration–time graphs by rotating a disc-shaped handle on top of a rotational motion sensor (Kuech and Lunetta 2002). In the remaining studies of this class, no motion sensor technology was used. Instead, students were for example asked to move a computer mouse over a mousepad, while at the same time this motion was represented on the screen of the computer (Botzer and Yerushalmy 2006, 2008).

Class II—Immediate Others/Objects’ Motion

A total of 12 learning environments fell within the category of activities in which students influenced or observed the motion of another person or object without moving (parts of) their own body while getting an immediate representation of that motion. Most studies dealing with moving physical objects were situated in kinematics laboratory settings within physics classes. The objects used varied widely. In one learning environment (Espinoza 2015), a pendulum system was used, allowing students to exert control over its movement, while a graph of the pendulum's movement was immediately presented to the students by means of motion sensor technology.

Other learning environments in this class dealt with simulated motion using computer software, such as SimCalc Mathworlds. In Salinas et al. (2016), students controlled the movements of an animated avatar by building and editing mathematical functions. The students pressed play to see the corresponding animation, while both the animation and graph were presented simultaneously to the students. Another example of using software can be found in Noble et al. (2004). They provided students with a simulation of an elevator moving up and down and a two-dimensional graph with unlabeled axes, representing the velocity in floors per second on the y-axis and the time in seconds on the x-axis.

Finally, in some learning environments within Class II—Immediate Others/Objects’ Motion, another person demonstrated motion events. For example, in Anastopoulou et al. (2011), a teacher demonstrated hand movements that were captured by a motion sensor and transferred to distance–time and velocity–time graphs, thus allowing the students to see the teacher’s hand motion and the corresponding graphs in real time (see also Kozhevnikov and Thornton 2006; Zucker et al. 2014).

Class III—Non-immediate Own Motion

In three out of the four learning environments belonging to Class III—Non-immediate Own Motion, the data collection occurred manually, which caused a slight delay between the motion event and its graphical representation (e.g., Anderson and Wall 2016; Heck and Uylings 2006). For example, in Deniz and Dulger (2012), students walked at varying speeds while carrying a bottle of water with a hole in the bottom. Every second, one drop of water fell through this hole. Thus, by measuring the time of traveling and the distance between the drops of water, the students could construct position–time graphs.

In the fourth learning environment within this class, the construction of the graphical representation was intentionally delayed. Brasell (1987) tested whether different time delays between the whole-bodily motion and the graphical representation could facilitate an equivalent linking in memory.

Class IV—Non-immediate Others/Objects’ Motion

In 6 of the 12 learning environments within Class IV—Non-immediate Others/Objects’ Motion, students had to construct a graph after they had observed the movements of physical objects (e.g., Anderson and Wall 2016; Carrejo and Marshall 2007; Mitnik et al. 2009) or the movements within a video or a simulation environment (e.g., Boyd and Rubin 1996; Zajkov and Mitrevski 2012). For example, in Carrejo and Marshall (2007), students had to record time and distance measures of a ball, using a spark timer, and then construct several graphs of the ball’s motion. Here, graph construction happened some time after the motion was finished. Similarly, in another learning environment (Anderson and Wall 2016), students built ramps and had to choose three objects to roll off the ramp while collecting time and distance measures with timers and measuring tapes. In the article of Mitnik et al. (2009), students observed the movements of a robot moving through space. After all data were collected (i.e., the robot had completed the movement), the students combined distance and time measures of the robot’s movements and used this for constructing distance–time and velocity–time graphs. In Boyd and Rubin (1996), students watched videotaped motion events and analyzed these videotaped motion events at a later stage.

In the learning environment described by Brungardt and Zollman (1995), the delay between motion and graph was deliberately used. Students were shown graphs of object motion, several minutes after they had seen the real videotaped motion event, to assess whether the real-time nature of simultaneously presenting graph and motion had an effect on students’ understanding of graphs. Finally, some of the simulation environments within this class asked students to first program the movements of an animated object, either in algebraic or graphical form, after which they could see the movements of the objects (e.g., Roschelle et al. 2010). Also, a simulation environment (ToonTalk) was used in the article of Simpson et al. (2006). Using this software, students were asked to define the properties of a spacecraft in such a way that it could successfully land on the moon. Here, the graphical representation was not immediately presented after the movement. First, students saw the movements of the ToonTalk object, and second, position–time and velocity–time graphs were plotted from the data.

Mediating Factors within Embodied Learning Environments

Our analysis uncovered eight mediating factors: real-world context, multimodality, linking motion to graph, multiple representations, semiotics, student control, attention capturing, and cognitive conflict. These mediating factors are theoretically aligned with the embodiment framework to differing extents. Authors sometimes attributed more than one mediating factor to a learning environment. For the 62 embodied learning environments, we found 127 instances in which authors mentioned a mediating factor. In two articles, with two learning environments each, the authors did not mention mediating factors at all (Brungardt and Zollman 1995; Deniz and Dulger 2012). In Table 2, the eight mediating factors and the articles in which they were mentioned are presented.

Real-World Context

When authors mention the real-world context as a mediating factor, they refer to experiences of the students with the real world (e.g., Boyd and Rubin 1996; Carrejo and Marshall 2007; Heck and Uylings 2006; Struck and Yerrick 2010; Wilhelm and Confrey 2003). Mitnik et al. (2009) gave students the opportunity to study the motion of a robot in the real world, by making the environment more explorative and immersive. In another example, specific parts of the learning environment were related to both the real world and formal contexts, by having authentic player-created graphs that looked like typical velocity–time graphs (Holbert and Wilensky 2014). Also, other authors claim their learning environments to be almost identical to the real world (e.g., Mitnik et al. 2009; Thornton and Sokoloff 1990). Solomon et al. (1991) use the term “micro world” to indicate that the used learning environment consisted of a world less complex than the real world. According to Thornton and Sokoloff (1990), through a learning environment containing real-world elements, links can be made between students’ personal experiences, physical actions, and formal mathematics or physics concepts.

Another finding was that embodied learning environments using a real-world context are often presented as a natural venue for scientific exploration (Holbert and Wilensky 2014; Thornton and Sokoloff 1990; Woolnough 2000). For example, Mokros and Tinker (1987) emphasize how the use of microcomputer-based laboratories provided students with genuine scientific experiences. Using elements from the real world also has the advantage of being prone to draw on students’ prior knowledge and experiences (Altiparmak 2014; Taylor et al. 1995). For example, in a simulation environment used in Noble et al. (2004), students, over the course of the activities, started recognizing the movement of an elevator in the graph.

Multimodality

The articles describing learning facilitators related to the multimodality aspect of the learning environment are all referring to the role of intertwining modalities. This means that by the nature of the tool or the instruction, at least two of the modalities of seeing, hearing, touching, imagining, or motor actions are simultaneously activated. In most of the learning environments, seeing and motor action are involved (Anderson and Wall 2016; Botzer and Yerushalmy 2006; Nemirovsky et al. 1998; Noble et al. 2004; Radford 2009b; Russell et al. 2003). Additionally, Anastopoulou et al. (2011) mention how the interactive technology in their learning environment activated communicative modalities together with these two sensory modalities. In the same line, Mokros and Tinker (1987) emphasize how their use of microcomputer-based laboratories gave students valuable kinesthetic experiences, sometimes using their own bodily motion as data, thus activating the learning modalities perception and motor action (see also Robutti 2006). Furthermore, Botzer and Yerushalmy (2008) mention the modality touching. In their learning environment, students’ hand motion with a computer mouse was captured and shown in graphs. When students retraced the graphs with their fingers on the visual display of the computer, a blend of seeing, touching, and motor action manifested itself. A similar intertwining of multiple modalities is discussed by Ferrara (2014) focusing on the multimodal nature of mathematical thinking. The motion of a student walking in front of a motion sensor was represented on a larger screen in front of the classroom. When the student tried to make sense of the graphical representation of his own motion, this resulted in perceptual, perceptual-motor, and imaginary experiences, manifested by the student’s verbal expression of thinking.

Linking Motion to Graph

Linking motion to graph as a mediating factor can either refer to the motion of the student (e.g., Anderson and Wall 2016; Espinoza 2015), to the motion of somebody else (e.g., Anastopoulou et al. 2011; Skordoulis et al. 2014), or to the motion of objects (e.g., Brungardt and Zollman 1995; Simpson et al. 2006). In these learning environments, students experienced or observed a link between motion and the corresponding graphical representation. In some instances, authors primarily focus on how the learning environment provided this linkage between motion and graph (e.g., Kurz and Serrano 2015; Metcalf and Tinker 2004; Stylianou et al. 2005; Svec 1999), while other authors focus more on how students were engaged in connecting the graph to the motion (e.g., Anastopoulou et al. 2011; Deniz and Dulger 2012; Nemirovsky et al. 1998; Heck and Uylings 2006). A few authors emphasize how this linkage might facilitate a corresponding linking in memory, because the information in the graph is a direct result of students’ own motion (e.g., Brasell 1987; Brungardt and Zollman 1995; Kozhevnikov and Thornton 2006; Mokros and Tinker 1987; Struck and Yerrick 2010). While in almost all learning environments the linkage between an actual (or simulated) motion and the corresponding graph is explicit, some authors also refer to linking motion to graph at a more abstract level (e.g., Botzer and Yerushalmy 2006, 2008; Boyd and Rubin 1996; Espinoza 2015; Ferrara 2014; Holbert and Wilensky 2014; Robutti 2006; Russell et al. 2003; Thornton and Sokoloff 1990). This means that the actual motion helped to conceptualize what lies behind the graphical representation, such as the sensory aspects of the motion experience (Mokros and Tinker 1987) or mathematical abstractions (Mitnik et al. 2009; Svec 1999).

Multiple Representations

All learning environments mentioning the mediating factor multiple representations refer to multiple representations of a particular motion. Sometimes one and the same motion is represented in multiple graphs (e.g., Anastopoulou et al. 2011; Brasell 1987; Kelly and Crawford 1996; Kozhevnikov and Thornton 2006; Nemirovsky 1994; Skordoulis et al. 2014; Wilson and Brown 1998; Svec 1999). For example, in Kuech and Lunetta (2002), the same motion was represented as a position–time, velocity–time, or acceleration–time graph. Also in the article of Botzer and Yerushalmy (2006, 2008), the students’ own motion was visualized in multiple graphical formats. Here, the two dimensions of the motion of the students’ hand over the mousepad were represented in two graphs.

Within other learning environments, the motion was represented to the students in multiple formats (e.g., Altiparmak 2014; Espinoza 2015; Nemirovsky 1994; Wilhelm and Confrey 2003; Wilson and Brown 1998). In these learning environments, a motion was represented by, for example, a graph, table, or formula (e.g., Kuech and Lunetta 2002). Furthermore, some articles mention acting out of the motion itself as a representation. In this respect, Anastopoulou et al. (2011) refer to kinesthetic, in addition to graphical and linguistic, representations of motion. Similarly, Zucker et al. (2014) mention how the representations in their learning environment included the “physical motion of an object in front of the sensor” (p. 443) in addition to words, graphs, tables, and animated icons (see also Simpson et al. 2006).

Semiotics

The mediating factor semiotics entails the use of meaning-supporting sign systems. This means that in the learning environment, symbols, signs, gestures, and language, including metaphors, are explicitly used to signify meaning. Botzer and Yerushalmy (2006) describe how gestures served as “an intermediate stage between the sensory experience and the use of formal language” (p. 8) (see also Anastopoulou et al. 2011; Ferrara 2014). Representing the graphs’ mathematical features through gesturing enabled students to elaborate on the meaning of graphs (Botzer and Yerushalmy 2008). Another important component of semiotics is the role of (conceptual) metaphor and metaphorical projection. For example, Botzer and Yerushalmy (2008) mention the possible activation of the fictive motion mechanism, when students actively explored graphical representations, enabling them to conceptualize static graphs as representing motion (see also Ferrara 2014; Nemirovsky et al. 1998). Nemirovsky (1994) and Noble et al. (2004) describe how the learning environment and its tools offered the student a so-called field of possibilities with graphically represented symbols which had to be interpreted. In this respect, Nemirovsky (1994) refers to symbol-use, in which symbol-use not only depends on the configuration of the learning environment but also on personal intentions and specific histories, conceptualized as extra symbolic components. Other authors concentrate on students’ knowledge objectification (i.e., the meaning making process) from an explicit semiotic perspective. Robutti (2006) uses semiotic mediation to refer to the objectification of knowledge, consisting of several steps marked by different semiotic means, including gestures, words, metaphors, and cultural elements to explain the graphical representation (see also Botzer and Yerushalmy 2008). 
Similarly, Radford (2009b) provides a semiotic analysis of the way students used their semiotic means in the process of knowledge objectification. Throughout this analysis, the interplay of action, gesture, and language is emphasized.

Student Control

The mediating factor student control explicitly refers to students being in control in the learning environment, allowing them to manipulate either the motion event or its graphical representation. Most of these articles refer to student control as being in control of the (physical) motion (e.g., Anderson and Wall 2016; Nemirovsky et al. 1998; Russell et al. 2003; Struck and Yerrick 2010). In this respect, students are able to directly manipulate the visual display (Anastopoulou et al. 2011). Brasell (1987) adds how this direct manipulation of the graphical representation made the graphs “more responsive […] and more concrete” (p. 394). Moreover, when students are able to control the movement represented in the graphical representation, they might feel more engaged (Anastopoulou et al. 2011), making the learning activities more meaningful (Mokros and Tinker 1987). Other articles refer to student control as being in control of the graphical representations already present in the learning environment. In this respect, Botzer and Yerushalmy (2008) mention how student control over the graphical tools was stimulated by actions such as dragging, stretching, and shrinking the graphs, and that these actions strongly contributed to students’ understanding of graphical signs. Similarly, in the learning environment of Salinas et al. (2016), students performed their own actions on a graphical representation, which resulted in a change of the graph. For example, an action on a position–time graph led to a corresponding change in a velocity–time graph. These actions in the learning environment produced by the students are an essential component of doing mathematics (e.g., formulating and testing mathematical conjectures).

Attention Capturing

Learning environments mentioning the mediating factor attention capturing as a learning facilitator refer to affordances in the learning environment that direct students’ attention. In most learning environments, attention capturing implies directing students’ attention toward important visual features of the graphical representation (e.g., Botzer and Yerushalmy 2008; Deniz and Dulger 2012; Nemirovsky et al. 1998; Russell et al. 2003). These visual features are especially prominent when the graph is displayed alongside the motion event, making specific changes in the motion event (e.g., changes in speed or changes in direction) directly observable to the student (e.g., Brasell 1987). Moreover, because changes in motion are highlighted in the graphical representation, it becomes clearer to the student what the relevant aspects of the graph are that they have to attend to (Kozhevnikov and Thornton 2006). In the learning environment of Holbert and Wilensky (2014), students explored the relationship between a car’s velocity and acceleration using several game mechanics, which ultimately allowed students to relate the car’s graphically represented speed with visual environmental cues. Other learning environments intend to capture the students’ attention by making changes in the representations. Boyd and Rubin (1996) mention how the changes between video frames in their video environment drew students’ attention to the differences between the frames. The learning environment of Noble et al. (2004) involves activities related to velocity, using different but related representations. These authors talk about the active nature of perception and how, in a familiar display, students are prone to recognize, and focus on, what is new.

Cognitive Conflict

The final mediating factor we identified in the articles is cognitive conflict, which refers to students’ conflicting conceptions. In general, this means that students, by means of a tool, are confronted with new information that conflicts with their existing knowledge or ideas (e.g., Simpson et al. 2006; Zajkov and Mitrevski 2012). The student taking part in the learning environment of Nemirovsky (1994) already had some ideas about the concept of velocity and the meaning of velocity graphs. While progressing through the activities, she continuously had to deal with symbolic representations of her movement that did not make any sense to her. This conflict made her rethink the meaning of the graphs. Something similar is described in the article of Svec et al. (1995), who use the term disequilibrium to denote the conflict between the students’ own beliefs and the gathered data. In addition to cognitive conflict within a person, cognitive conflict between students, initiated through (small) group discussions, is also mentioned (Kuech and Lunetta 2002). In a matching activity, students disagreed about the specific motion that would best match a particular graph, which resulted in cognitive conflict among the students. Other articles use the mediating factor cognitive conflict to indicate not only students’ personal conflicting beliefs about a certain concept or phenomenon but also conflicting beliefs generated within different educational domains (i.e., mathematics and physics). Both Carrejo and Marshall (2007) and Woolnough (2000) focus on students’ personal experiences with a concept and the concept taught within the domain of mathematics and the domain of physics. It appeared to be difficult for students to integrate similar concepts within these different areas, causing cognitive conflict (Woolnough 2000).

Mediating Factors within the Four Classes of Mathematical Learning Environments

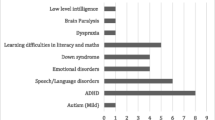

In this section, we elaborate on the relationship between the eight mediating factors and each class of embodied learning environments. The bar chart given in Fig. 4 shows the occurrence of the perceived mediating factors per learning environment for each class.

In Class I—Immediate Own Motion, all eight perceived mediating factors were present, as opposed to seven mediating factors for Class II—Immediate Others/Objects’ Motion, five mediating factors for Class III—Non-immediate Own Motion, and six mediating factors in Class IV—Non-immediate Others/Objects’ Motion. Class I—Immediate Own Motion was the largest class, containing most learning environments (n = 34). Moreover, Class III—Non-immediate Own Motion, only containing four learning environments in total, mentioned five (different) mediating factors in total. Therefore, each mediating factor has a share of 20% within this class.
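The 20% share reported for Class III follows from a simple proportion. The sketch below is illustrative only: it assumes that a factor's share is its number of mentions divided by all mentions within a class, and that each of the five factors was mentioned exactly once in Class III (the per-factor counts are not given in the text). The function name `factor_shares` is hypothetical.

```python
from collections import Counter

def factor_shares(mentions):
    """Return each factor's share (in percent) of all mentions in one class."""
    counts = Counter(mentions)
    total = sum(counts.values())
    return {factor: 100 * n / total for factor, n in counts.items()}

# Assumed data: one mention per factor in Class III (not taken from the article).
class_iii_mentions = [
    "real-world context",
    "multimodality",
    "linking motion to graph",
    "multiple representations",
    "attention capturing",
]

shares = factor_shares(class_iii_mentions)
for factor, share in shares.items():
    print(f"{factor}: {share:.0f}%")
```

With so few mentions in a class, a single additional mention shifts every share considerably, which is worth keeping in mind when comparing percentages across classes.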

The mediating factors linking motion to graph and multiple representations are present in each of the four classes. Moreover, these two mediating factors have a substantial share within each class (between 16% and 35%). The mediating factors real-world context and attention capturing are present in each of the four classes as well (between 5% and 20%). Multimodality is mentioned as a mediating factor in learning environments present in Class I—Immediate Own Motion (14%), Class II—Immediate Others/Objects’ Motion (8%), and Class III—Non-immediate Own Motion (20%), but not in Class IV—Non-immediate Others/Objects’ Motion.

The mediating factor cognitive conflict is most prominent within Class IV—Non-immediate Others/Objects’ Motion. Cognitive conflict has a substantial share within this class (26%), especially when compared with the other three classes, where cognitive conflict either is not perceived as a mediating factor (Class II—Immediate Others/Objects’ Motion and Class III—Non-immediate Own Motion) or holds a minor share (Class I—Immediate Own Motion, 4%). Student control is mentioned relatively little as a mediating factor when compared with the other mediating factors (less than 9%). Something similar holds for the mediating factor semiotics. Semiotics is only present in the first two classes, representing learning environments containing an immediate translation of the embodied experiences (less than 10%).

Impact of Embodied Learning Environments

For 26 articles, we conducted a more fine-grained analysis of the reported effects on students’ learning in order to give an indication of the efficacy of each class of embodied learning environments. A summary of these 26 articles, including study design, tools, intervention length, description of activities, outcome measures, effect sizes, reported results, and quality appraisal score, is given in Appendix 3.

Effect Sizes

We calculated effect sizes using the common standardized mean difference statistic Hedges’ g for all learning environments for which adequate statistical information regarding their effectiveness was provided (n = 11). A positive g value indicates that the experimental group has a higher outcome score than the control group, or, in the case of pre–post comparisons, that the post-test outcome score was higher than the pretest outcome score (see Borenstein et al. 2009). When articles made a comparison between groups and included a pretest, we calculated an adjusted effect size by subtracting the pretest effect size from the post-test effect size (Durlak 2009). Additionally, we corrected for upward bias in samples smaller than N = 50 (Durlak 2009). Information regarding the statistical significance of the mean differences as provided by the authors was documented as well. Since there was variability in the experimental design, the data used, and outcome measures between the reviewed studies, we could not directly compare effect sizes or compute an overall effect size.
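The effect size procedure described above can be illustrated with a short sketch. It is not the computation used in the review itself: it assumes the standardized mean difference is based on the pooled standard deviation of two independent groups, and that the small-sample correction takes the commonly used form 1 − 3/(4N − 9), applied only when the total sample size N is below 50. The function names and the example numbers are hypothetical.

```python
import math

def hedges_g(mean_exp, mean_ctrl, sd_exp, sd_ctrl, n_exp, n_ctrl):
    """Standardized mean difference, bias-corrected when total N < 50."""
    # Pooled standard deviation of the two groups.
    pooled_sd = math.sqrt(
        ((n_exp - 1) * sd_exp**2 + (n_ctrl - 1) * sd_ctrl**2)
        / (n_exp + n_ctrl - 2)
    )
    g = (mean_exp - mean_ctrl) / pooled_sd
    total_n = n_exp + n_ctrl
    if total_n < 50:
        # Assumed small-sample correction factor: 1 - 3 / (4N - 9).
        g *= 1 - 3 / (4 * total_n - 9)
    return g

def adjusted_effect_size(g_post, g_pre):
    """Post-test effect size minus pretest effect size (between-group designs)."""
    return g_post - g_pre

# Made-up numbers, for illustration only.
g_small = hedges_g(12.0, 10.0, 2.0, 2.0, 10, 10)   # N = 20, correction applied
g_large = hedges_g(12.0, 10.0, 2.0, 2.0, 30, 30)   # N = 60, no correction
print(round(g_small, 3))  # 0.958
print(round(g_large, 3))  # 1.0
print(round(adjusted_effect_size(0.8, 0.2), 2))  # 0.6
```

A positive value means the experimental group (or the post-test) scored higher, matching the sign convention stated in the text. For pre–post comparisons within one group, other estimators that account for the correlation between measurements may be more appropriate than this two-group form.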

Learning Outcomes and Reported Effects

All 20 learning environments within Class I—Immediate Own Motion reported positive learning outcomes. In most of these learning environments, comparisons were made with other embodied learning environments and/or a control condition (n = 13), while for the other learning environments, pre–post comparisons were made (n = 7). In some articles, a statistical analysis was performed on the data (n = 6), all of which resulted in statistically significant differences. Among these articles, three reported at least one significant medium to large effect (g > 0.50; Ellis 2010) on a measure associated with graphing motion (Brasell 1987; Mokros and Tinker 1987; Svec 1999). For example, in Brasell (1987), viewing an immediate representation of one’s own motion seems to be advantageous for pre-university students’ understanding of distance–time graphs (g = 1.22 and g = 1.01), although students in the non-immediate motion representation environment also outperformed the controls on distance–time graphs, but not on velocity–time graphs.

For five of the ten learning environments within Class II—Immediate Others/Objects’ Motion, comparisons were made with other learning environments (n = 3) or a control condition (n = 2). When comparisons were made with learning environments belonging to the first class (n = 2), students in the second class performed less well (e.g., Anastopoulou et al. 2011; Zucker et al. 2014). When a comparison was made with a learning environment belonging to the fourth class (n = 1), the reported learning outcomes were positive, but non-significant with a small effect (g = 0.29; Brungardt and Zollman 1995). When comparisons were made with a control condition (n = 2), the results were positive, with either a statistically significant medium to large effect (g = 0.81; Altiparmak 2014) or a non-significant small effect (g = 0.20; Espinoza 2015). For the other five learning environments, pre–post comparisons were made. Three of these belonged to the same article (Kozhevnikov and Thornton 2006), in which the reported learning outcomes were all positive, of which one showed a significant large effect (g = 2.09) of the intervention on students’ understanding of force and motion and a moderate effect on spatial visualization ability (g = 0.62).

For both learning environments within Class III—Non-immediate Own Motion, a comparison was made with a learning environment belonging to the first class and/or a control condition, for which no strong results in favor of this class were reported (Brasell 1987; Deniz and Dulger 2012). In the article of Deniz and Dulger (2012), students seemed to benefit less from the intervention, which consisted of walking with a bottle of water with a hole in it, than the students who received an immediate graphical representation of their own movement on the screen of the computer, with a relatively small negative effect size (g = − 0.39).

Finally, in Class IV—Non-immediate Others/Objects’ Motion (n = 10), six learning environments were compared with another learning environment belonging to Class I (n = 1), Class II (n = 1), or Class IV (n = 2), or with a control condition (n = 2). In the article making a comparison with a learning environment in the second class, a non-significant small negative effect was found (g = − 0.29; Brungardt and Zollman 1995). In Mitnik et al. (2009), two learning environments belonging to Class IV were compared. In one condition, students made use of a real robot whereas in the other condition students watched a simulation of a robot. Results were in favor of the first condition with a statistically significant large effect (g = 0.76). The two learning environments in Roschelle et al. (2010) involved SimCalc Mathworlds software, one for Grade 7 and one for Grade 8 students. Students using this software seemed to score higher on the outcome measures than students in the control conditions, especially on outcome measures associated with reasoning about and representing change over time. The four learning environments in which pre–post comparisons were made all reported positive learning outcomes regarding outcome measures associated with graphing change, for example, students’ ability to interpret and calculate slope (Woolnough 2000).

Discussion and Conclusion

Summary of the Results

In this study, we evaluated 62 embodied learning environments supporting students’ understanding of graphing motion, derived from 44 research articles. To learn more about the embodied configurations of these learning environments, we developed a taxonomy in which embodied learning environments were juxtaposed on their degree of bodily involvement (own and others/objects’ motion) and immediacy (immediate and non-immediate). This resulted in four classes of embodied learning environments: Class I—Immediate Own Motion, Class II—Immediate Others/Objects’ Motion, Class III—Non-immediate Own Motion, and Class IV—Non-immediate Others/Objects’ Motion. Our analysis showed that immediate own motion experiences were most common in the embodied learning environments; 34 of the 62 learning environments belonged to this class.
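To make the two-dimensional structure of the taxonomy concrete, the mapping from the two dimensions to the four classes can be sketched as a small lookup. This is only an illustrative sketch: the names `Involvement`, `Immediacy`, `TAXONOMY`, and `classify` are hypothetical and do not come from the reviewed articles, and each dimension is reduced to the two levels named in this summary.

```python
from enum import Enum


class Involvement(Enum):
    """Degree of bodily involvement (two levels, as in this summary)."""
    OWN_MOTION = "own motion"
    OTHERS_OBJECTS_MOTION = "others/objects' motion"


class Immediacy(Enum):
    """Whether the motion is linked to its representation immediately."""
    IMMEDIATE = "immediate"
    NON_IMMEDIATE = "non-immediate"


# Hypothetical lookup table: (immediacy, involvement) -> class label.
TAXONOMY = {
    (Immediacy.IMMEDIATE, Involvement.OWN_MOTION):
        "Class I: Immediate Own Motion",
    (Immediacy.IMMEDIATE, Involvement.OTHERS_OBJECTS_MOTION):
        "Class II: Immediate Others/Objects' Motion",
    (Immediacy.NON_IMMEDIATE, Involvement.OWN_MOTION):
        "Class III: Non-immediate Own Motion",
    (Immediacy.NON_IMMEDIATE, Involvement.OTHERS_OBJECTS_MOTION):
        "Class IV: Non-immediate Others/Objects' Motion",
}


def classify(involvement: Involvement, immediacy: Immediacy) -> str:
    """Return the taxonomy class for one embodied configuration."""
    return TAXONOMY[(immediacy, involvement)]


if __name__ == "__main__":
    # A student walking in front of a motion sensor with a live graph.
    print(classify(Involvement.OWN_MOTION, Immediacy.IMMEDIATE))
```

Because every combination of the two dimensions maps to exactly one class, the four classes are mutually exclusive and jointly exhaustive under this simplified two-level reading.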

According to the authors of the reviewed articles, a large variety of situations or characteristics of the embodied learning environments mediated the learning of students. After clustering these situations or characteristics, we recognized eight mediating factors, namely, real-world context, multimodality, linking motion to graph, semiotics, attention capturing, multiple representations, student control, and cognitive conflict. Each of these factors has its own specific role in how and why it mediates learning within such a learning environment. Each class of embodied learning environments has a particular embodied configuration and entails different combinations of mediating factors. Within some classes, particular mediating factors are more common than within other classes. For example, within Class I—Immediate Own Motion, the factors multimodality, linking motion to graph, and multiple representations were most common. This implies that the embodied configuration in this class of learning environments gives students the opportunity to deploy multiple modalities in order to link their own motion to the graphically represented motion and to interact with multiple representations throughout this process. Out of the eight mediating factors, two were mentioned in all four classes: real-world context and multiple representations. These mediating factors seem to be relevant for learning environments supporting students’ understanding of graphing motion, regardless of their embodied configuration.

Our analysis covered the 26 studies in which a comparison was made with another learning environment, with a control condition, or between pre- and post-measures to demonstrate the possible impact of embodied learning environments on students’ learning. It revealed that embodied learning environments with only lower levels of bodily involvement seemed to be less effective, irrespective of the immediacy of the translation of the embodied experiences. These findings imply that students’ own motion experiences might be most beneficial for learning graphs of change and that learning by observing others/objects’ motion is not as effective within this domain. Consequently, these findings give more weight to the mediating factors (e.g., multimodality, linking motion to graph, and multiple representations) present in Class I—Immediate Own Motion than to the mediating factors in the other learning environments with different embodied configurations.

Limitations

For quality purposes, we only reviewed research articles published in peer-reviewed journals. As a result, we might have missed studies on embodied learning environments that have been published elsewhere. Another limitation of our study is related to the challenges we met when classifying the embodied learning environments. Because the learning environments often included multiple activities with different levels of bodily involvement and different levels of immediacy, we assigned to each learning environment the highest level of bodily involvement and immediacy that was found in its activities. Consequently, the learning environments with multiple activities were more often classified as whole bodily motion and immediate than as one of the lower levels of bodily involvement and immediacy. Moreover, regarding the levels of bodily involvement, to avoid further complexity of our review, we did not distinguish between observing the movement of a human model and observing the movement of an object, even though some studies have suggested that this is a relevant aspect to consider (Höffler and Leutner 2007; Van Gog et al. 2009). These studies posit that non-human movements are less likely than human movements to trigger the mirror-neuron system. We, however, consider our topic of interest (graphs of objects/humans moving through space) to have such a clear and direct link to the human action repertoire of moving through space that we assume that observing a moving object, such as a cart or a car, elicits the same brain activation as observing a moving human (see also Martin 2007). Another shortcoming of our review is that we did not limit our investigation of mediating factors to those factors that were empirically tested. To have a broad scope of possible mediating factors, we included each mediating aspect mentioned in the articles in our review and clustered them.
Therefore, since these mediators are based on the self-reported information provided by the authors of the articles, the evidence for the mediating factors we found is not equally strong. Interestingly, though, not many authors seem to link their study to semiotics, even though this factor can be seen as fundamental to these kinds of learning environments. Similarly, even though all learning environments seem to contain some aspects of multimodality and multiple representations, not all authors see those aspects as essential or helpful elements of their learning environments, which is another interesting finding of our study. Therefore, more research is needed into the effects of these mediating factors, to determine whether, and to what extent, they are helpful in learning environments supporting students’ understanding of graphing motion. A further limitation we would like to address is the wide variety of articles included in our review. This variety led to considerable variation in, for example, the level of education of the participants (ranging from primary education to higher education) and intervention length (ranging from two tasks to 20 class sessions). Even though including this wide variety of articles gives insight into the breadth and depth of the research conducted in this mathematics domain, it also makes it difficult to generalize the results found in the various articles. This especially pertains to the presumed mediating factors per class of embodied learning environments and to the respective efficacy of each class of embodied learning environments. To be more precise about the efficacy of embodied learning environments, for example about the influence of age, topic, or intervention length, more targeted research is necessary.
Our review is also limited by the fact that the reviewed articles include few comparison studies and, as a result, our evaluation of the efficacy of embodied learning environments on student learning was rather constrained. A final limitation of our study relates to our choice to focus on the embodied approach to teaching graphing motion. This means that we did not address the full spectrum of a mathematical topic such as graphing change. Moreover, besides an embodied learning environment, other approaches can also be used for teaching students graphing motion. The embodiment approach is just one perspective, yet, in our opinion, a valuable one.
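The classification rule discussed among the limitations above, in which an environment with several activities receives the highest level of bodily involvement and immediacy found in its activities, can be illustrated with a minimal sketch. The rank tables, the string labels, and the function `environment_levels` are hypothetical, and the sketch assumes that the maximum is taken separately on each dimension, which is one possible reading of the rule rather than a documented procedure.

```python
# Hypothetical rank orderings: a larger number means a higher level.
INVOLVEMENT_RANK = {"others_objects_motion": 0, "own_motion": 1}
IMMEDIACY_RANK = {"non_immediate": 0, "immediate": 1}


def environment_levels(activities):
    """Return the (involvement, immediacy) levels for one environment.

    `activities` is a non-empty list of (involvement, immediacy) pairs,
    one pair per activity. The highest level is taken on each dimension
    independently (an assumption of this sketch).
    """
    if not activities:
        raise ValueError("an environment needs at least one activity")
    involvement = max(
        (inv for inv, _ in activities), key=INVOLVEMENT_RANK.__getitem__
    )
    immediacy = max(
        (imm for _, imm in activities), key=IMMEDIACY_RANK.__getitem__
    )
    return involvement, immediacy


if __name__ == "__main__":
    mixed = [
        ("own_motion", "non_immediate"),
        ("others_objects_motion", "immediate"),
    ]
    print(environment_levels(mixed))  # ('own_motion', 'immediate')
```

Under this reading, a mixed environment can be assigned a combination of levels that no single activity exhibits, which illustrates why environments with multiple activities tend to end up in the own-motion, immediate class.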

Future Directions

In this review, we presented a new taxonomy to classify embodied learning environments (see Fig. 1). The taxonomy was based on two important embodied cognitive mechanisms, mirror neural activity and embodied simulation, which were operationalized by looking into the levels of bodily involvement and immediacy. This combination was not encountered in any of the existing embodied learning taxonomies, although some taxonomies also considered levels of bodily involvement (e.g., Johnson-Glenberg et al. 2014; Skulmowski and Rey 2018). By including immediacy as a further way to classify embodied learning environments, we developed a method to consider learning environments that deal with both immediate, or on-line, cognitive activities and non-immediate, or off-line, cognitive activities. To be precise, observing others/objects can be theoretically aligned with off-line cognitive activity because the mirroring systems hypothesis and embodied simulation share some common characteristics (i.e., the sensorimotor circuits of the brain are re-used through embodied simulation when perceiving someone else performing a particular action). For that reason, we consider our taxonomy especially relevant for embodied learning environments supporting students’ understanding of graphing motion: when graphing motion, the bodily experience (e.g., the space in which you move) is often separated from the visualization of the motion (e.g., the graphical representation) in both space and time (e.g., Gallese and Lakoff 2005; Rizzolatti et al. 1997), a separation that is captured as immediacy in our scheme.

Although we think that combining bodily involvement and immediacy in our taxonomy provides more precise insight into embodied learning environments than previous taxonomies did, further research is necessary into the distinctions between the different quadrants of our taxonomy. Therefore, comparisons of the different configurations of embodied learning environments are needed to find out whether and how these configurations affect learning. Moreover, the specificity of this taxonomy, including the two embodied cognitive mechanisms, mirror neural activity and embodied simulation, presented along two dimensions, is crucial for categorizing learning environments concerned with dynamic representations. This expresses the need for different subfields of embodied learning research to take into account tailored taxonomies when systematically reviewing the embodiment literature.

We see at least two ways to use our newly developed taxonomy. First, the classification of embodied learning environments on bodily involvement and immediacy may be used to inform and design new learning environments. The levels of bodily involvement and immediacy can be used as guidance for researchers and curriculum designers, providing them with concrete support on how to incorporate important theoretical distinctions (i.e., mirror neural activity and embodied simulation) in the design of embodied learning environments supporting students’ understanding of graphing motion. Second, the taxonomy may also serve as a framework to categorize embodied learning environments related not only to the graphical representation of motion but also to other changing quantities, for example temperature. Temperature, like motion, can be directly experienced through the senses. Reed and Evans (1987) found that students’ experiences with the mixing of water at two different temperatures helped them to perform a similar task in an unfamiliar domain (mixing acid solutions) and helped them to understand functional relations. Additionally, we suggest that our taxonomy may be valuable for embodied activities outside the domain of graphing change, for example, within the domain of number learning and number representation. As with graphs of change in the context of modeling motion, embodied activities within this domain often involve (whole) bodily activities. In the article of Fischer et al. (2011), students are given tasks that aim to train their basic numerical competencies by making whole bodily movements. In this study, kindergartners saw a number on a digital blackboard, after which they had to jump left or right depending on whether this number was smaller or larger than a standard value. Here, the experience is immediate since students are immediately confronted with the position of the numbers on the number line.