Abstract

Oropharyngeal dysphagia is prevalent in several at-risk populations, including post-stroke patients, patients in intensive care and the elderly. Dysphagia contributes to longer hospital stays and poor outcomes, including pneumonia. Early identification of dysphagia is recommended as part of the evaluation of at-risk patients, but available bedside screening tools perform inconsistently. In this study, we developed algorithms to detect swallowing impairment using a novel accelerometer-based dysphagia detection system (DDS). A sample of 344 individuals was enrolled across seven sites in the United States. Dual-axis accelerometry signals were collected prospectively with simultaneous videofluoroscopy (VFSS) during swallows of liquid barium stimuli in thin, mildly, moderately and extremely thick consistencies. Signal processing classifiers were trained using linear discriminant analysis and 10,000 random training–test data splits. The primary objective was to develop an algorithm to detect impaired swallowing safety with thin liquids with an area under the receiver operating characteristic curve (AUC) > 80% compared to the VFSS reference standard. Impaired swallowing safety was identified in 7.2% of the thin liquid boluses collected. At least one unsafe thin liquid bolus was found in 19.7% of participants, but participants did not exhibit impaired safety consistently. The DDS classifier algorithms identified participants with impaired thin liquid swallowing safety with a mean AUC of 81.5% (sensitivity 90.4%, specificity 60.0%). Thicker consistencies were effective for reducing the frequency of penetration–aspiration. This DDS reached targeted performance goals in detecting impaired swallowing safety with thin liquids. Simultaneous measures by DDS and VFSS, as performed here, will be used for future validation studies.

Introduction

Individuals with dysphagia are faced with two functional concerns: (1) the inability to swallow safely, whereby material enters the airway (“penetration–aspiration” [1]); and/or (2) the inability to swallow efficiently, with residue remaining in the pharynx [2, 3]. Impaired swallowing safety is associated with pneumonia [4] whereas impaired efficiency contributes to the risk of malnutrition [2, 5, 6] and of aspirating residue after the swallow [7]. Dysphagia is estimated to affect 6.7% of hospitalized patients in the United States, with an annual attributable cost of $547 million [8]. The burden of dysphagia is significant, as shown in a recent analysis of the United States Agency for Healthcare Research and Quality (AHRQ) Healthcare Cost and Utilization Project National Inpatient Sample database (2009–2013) [9]. The estimated additional cost of dysphagia over the study period was $16.8 billion. Mean length of stay was 8.8 days for those with a dysphagia diagnosis versus 5.0 days for those without. Adult patients with dysphagia were 1.7 times more likely to die in hospital and 33% more likely to be discharged to a post-acute care facility than those without dysphagia. Similar results from a European study of dysphagia following acute ischemic stroke showed that patients with dysphagia more frequently had pneumonia (23.1% vs. 1.1%), had higher mortality (13.6% vs. 1.6%) and longer lengths of stay, and were less frequently discharged home (19.5% vs. 63.7%) [10].

Stroke practice guidelines recommend early screening for swallowing impairment [11,12,13]. For example, the 2018 American Heart Association/American Stroke Association guidelines for acute ischemic stroke recommend early screening to identify dysphagia and, for those in whom risk of dysphagia is identified, a swallowing assessment before the patient begins eating, drinking or receiving oral medications [11, 12]. Evidence suggests that formal screening programs are more effective at detecting dysphagia than informal approaches [14] and are effective for reducing pneumonia [15,16,17]. There is, however, a lack of agreement regarding optimal screening methodologies [18,19,20,21,22,23,24,25,26,27,28]. Most swallow screening protocols rely on observations by trained health care professionals who perform subjective evaluations of non-swallowing tasks (e.g., speech clarity, tongue motor function, voice quality and voluntary cough function) and swallows of water or other stimuli [23,24,25,26,27,28]. The detection of ≥ 1 sign(s) of concern has relatively good sensitivity (i.e., > 80%) for identifying patients in whom prior or subsequent videofluoroscopic or endoscopic examinations of swallowing confirm the presence of dysphagia or aspiration [24, 26,27,28,29]. Specificity is reported to range from 49 to 79%.

One acknowledged limitation of existing screening protocols is their reliance on observation of overt signs of aspiration; by definition, this results in poor sensitivity for identifying silent aspiration (i.e., aspiration without outward signs of difficulty), which is estimated to occur in up to 2/3 of stroke patients who aspirate [30, 31]. A further limitation is that the sensitivity and specificity of screening tests are typically determined through comparison of the net (i.e., worst) result observed across several screening criteria and the worst result obtained across several swallowing tasks in the reference assessment. Studies suggest that individuals who aspirate do not do so consistently across repeated presentations of similar boluses, even within a single examination [32, 33]. Such variability challenges the idea that the accuracy of screening test results can be properly evaluated through comparison to instrumental reference data collected on a separate occasion.

For the past few years, we have been developing a non-invasive medical device (the Dysphagia Detection System, DDS) to detect swallowing impairment based on automated machine analysis of cervical accelerometry signals, trained through direct comparison to simultaneous videofluoroscopy [34]. If an accurate and reliable device can be developed, concerns regarding the reliance of swallow screening on subjective clinical observations would be obviated, together with the current burden of training health care professionals to recognize signs of swallowing impairment. We report the results of a prospective study to develop signal processing algorithms for the DDS device, with the ultimate goal of using this device to screen swallowing function in adults at risk of oropharyngeal dysphagia.

Methods

This study involved prospective collection and classification of dual-axis (superior–inferior and anterior–posterior) accelerometry signals, collected from the front of the neck during swallows of water and of barium stimuli of different consistencies. These signals were collected with time-synchronized videofluoroscopy (VFSS), which was used as the clinical reference standard for determining true status (safe/unsafe; efficient/inefficient). The study was conducted at seven hospitals (six acute care; one inpatient rehabilitation hospital) between November 7, 2013, and March 11, 2015. The protocol was approved by the Institutional Review Board (IRB) of each participating medical center.

Participants

Consenting participants were recruited from a broad population of adults considered at risk for non-congenital, non-surgical, and non-oncologic oropharyngeal dysphagia. The population included those with stroke or other brain injury aged ≥ 21 years and other inpatients or outpatients with dysphagia risk aged ≥ 50 years. Exclusion criteria included the presence of a nasogastric feeding tube; neck surgery (including tracheotomy within the past year, resection for oral or pharyngeal cancer, radical neck dissection, cervical spine surgery, carotid endarterectomy, orofacial reconstruction, pharyngoplasty, or thyroidectomy; routine tonsillectomy and/or adenoidectomy were not excluded); non-surgical trauma to the neck resulting in musculoskeletal or nerve injury; or radiation to the neck. These exclusions were applied due to the possibility that these conditions might alter or interfere with the ability to collect swallowing accelerometry signals using a sensor placed on the surface of the neck. Participants had to have sufficient cognitive function to be able to comply with study procedures.

Investigational Device Description

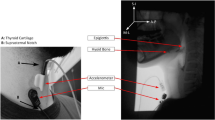

The DDS is a portable, non-invasive device consisting of a dual-axis accelerometer (bandwidth up to 1600 Hz) contained in the plastic housing of a sensor unit that is attached by a single-use, disposable fixation unit to the front of a patient’s neck in midline, just below the palpable lower border of the thyroid cartilage. Vibrations are detected in the superior–inferior and anterior–posterior axes. The sensor unit was connected via a cable to an A/D converter (12-bit resolution, 10 kHz sampling frequency), which in turn was connected to a laptop computer where the signal was recorded.

Procedures

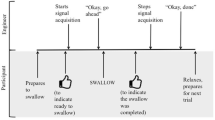

The data collection protocol began by asking participants to swallow six comfortably sized sips of water from a cup. These water data were collected to demonstrate equivalence of the DDS signal profiles for water and thin-barium stimuli and will not be further discussed in this manuscript. The protocol continued with six sips of thin liquid barium, followed by three sips each of mildly thick, moderately thick and extremely thick barium. The thin and mildly thick liquids were taken by sip from 7-oz cups containing 4 oz of liquid. The moderately thick and extremely thick barium were taken by teaspoon. Sip volume was measured based on pre- and post-sip cup weights. The thickened stimuli were prepared by mixing a powdered xanthan gum thickener (Nestlé Resource® ThickenUp® Clear™) with the thin liquid barium powder (Bracco Varibar® Thin) and water in a 20% w/v barium concentration according to standard recipes (see Online Appendix for more details). Lateral view videofluoroscopy (recorded at 30 frames/second) and simultaneous accelerometry signals were captured on a laptop computer. Stopping rules were applied for safety: testing of a particular stimulus was discontinued after two penetration–aspiration events, and the entire protocol was terminated after a total of five penetration–aspiration events.

Videofluoroscopy Rating

All videofluoroscopy records were analyzed in a central lab utilizing a standard operating procedure (see Online Appendix for more details). Each bolus recording was randomly assigned to be assessed independently by two raters who were masked to the identity and diagnosis of the participant, the study site, the bolus consistency and the order of the bolus within the data collection sequence. The rating for each bolus included a measure of swallowing safety, scored according to the 8-point Penetration–Aspiration Scale [1], and pixel-based measures of residue severity taken on the last frame of each swallow [35]. When necessary, a meeting of at least three raters was convened to resolve discrepancies by consensus. If the raters concurred that visualization of the structures necessary for a particular rating was obscured, the feature in question was documented as not-rateable and became a missing data point, resulting in exclusion of that record from the data available for algorithm training. Confirmed ratings were transformed to binary scores as follows: swallowing safety was scored as safe (1–2) versus unsafe (3–8) on the Penetration–Aspiration Scale [1]; inefficiency was operationally defined as the presence of residue at the end of any swallow, filling ≥ 50% of the valleculae and/or the pyriform sinuses [36]. A participant was considered to have impaired swallowing safety (or efficiency) on a given consistency if at least one bolus in the series for that stimulus was rated as unsafe or inefficient, respectively.
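The binary transformation and the participant-level rule described above can be sketched in a few lines of Python. This is a minimal illustration rather than the study's analysis code: the function names are hypothetical, residue is assumed to be expressed as a fill fraction between 0 and 1 for each pharyngeal space, and a not-rateable bolus is represented here as `None`.

```python
from typing import Iterable, Optional


def is_unsafe(pas_score: int) -> bool:
    """Binary safety score: PAS 1-2 is safe, PAS 3-8 is unsafe."""
    if not 1 <= pas_score <= 8:
        raise ValueError("PAS scores range from 1 to 8")
    return pas_score >= 3


def is_inefficient(vallecular_fill: float, pyriform_fill: float) -> bool:
    """Binary efficiency score: residue filling >= 50% of either space.

    The fill fractions (0.0 to 1.0) are an assumed representation of the
    pixel-based residue measures.
    """
    return vallecular_fill >= 0.5 or pyriform_fill >= 0.5


def participant_impaired(bolus_flags: Iterable[Optional[bool]]) -> Optional[bool]:
    """'At least one positive' rule across the boluses of one consistency.

    None marks a not-rateable bolus, which is treated as missing data.
    Returns None when no bolus in the series could be rated.
    """
    rated = [flag for flag in bolus_flags if flag is not None]
    if not rated:
        return None
    return any(rated)


# Hypothetical series of thin liquid boluses for one participant.
pas_scores = [1, 2, None, 5]
flags = [None if s is None else is_unsafe(s) for s in pas_scores]
print(participant_impaired(flags))
```

Under this rule, a single unsafe bolus is enough to label the participant as impaired on that consistency, regardless of how many safe boluses surround it.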

Accelerometry Signal Processing and Classifier Development

The classifier development path is illustrated in Fig. 1. During preprocessing, the accelerometry signals were filtered first with a second-order high-pass Butterworth filter (0.1 Hz corner frequency) and then with a low-pass filter (1000 Hz corner frequency). This was followed by automated signal segmentation to isolate regions of swallow activity within each signal recording and feature extraction for analysis. Several well-established models for training classifiers were explored, including Support Vector Machine, Random Forest, Quadratic Discriminant Analysis and Linear Discriminant Analysis (LDA) [37]. Ultimately, a regularized LDA model approach using an equal covariance matrix for all estimated classes was selected because it had the most robust performance and a low risk of over-fitting to the training data [37]. Classifier models were built and validated in an iterative fashion using internal repeated random sub-sampling (also known as the Monte Carlo method [38, 39]). From the whole set of data, 20% of the participants were set aside as a validation set and the signals from the remaining 80% of participants were used to train the classifier (training set). The trained classifier was then used to estimate the classes (i.e., safe, unsafe; efficient, inefficient) of the cases in the validation set that were previously unseen by the classifier. These results were then compared to the true classes obtained from the videofluoroscopy analysis. This process was repeated 10,000 times with randomly selected validation and training sets and the classifier was re-generated during each iteration with the new training set. In this manner, each independent iteration could be considered analogous to training the device on a sample of approximately 244 individuals, representing 80% of individuals in a particular cohort in a particular setting, before being applied unsupervised to the remaining 20% of unseen patients (approximately 61) in that cohort and setting. 
Random splitting of the data was performed at the participant level and stratified by the participant’s status derived from the VFSS results; thus, all boluses from a given participant were either in the training or the validation set on a given iteration. The area under the receiver operating characteristic (ROC) curve (AUC) values from each of the independent validation sets across all runs were averaged to provide the mean and standard deviation of the AUC for the classifier at the bolus level. The large number of iterations (i.e., 10,000) was chosen in light of the high standard deviation of the resulting AUC.
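The repeated random sub-sampling scheme can be sketched as follows. This is an illustrative outline under stated assumptions, not the study's implementation: the function names are hypothetical, participants are keyed by an arbitrary identifier, and `evaluate` stands in for a user-supplied routine that trains a classifier on the training participants and returns the AUC obtained on the validation participants.

```python
import random
import statistics
from collections import defaultdict


def stratified_participant_split(labels, validation_fraction=0.2, rng=None):
    """Split participant IDs into training and validation sets.

    labels maps participant ID -> True (impaired) or False (unimpaired).
    Splitting by participant keeps all boluses from one person on the
    same side; stratifying keeps the class balance similar in both sets.
    """
    rng = rng or random.Random()
    by_class = defaultdict(list)
    for pid, impaired in labels.items():
        by_class[impaired].append(pid)
    train, validation = set(), set()
    for ids in by_class.values():
        ids = sorted(ids)  # fixed order so a seeded shuffle is repeatable
        rng.shuffle(ids)
        n_val = round(len(ids) * validation_fraction)
        validation.update(ids[:n_val])
        train.update(ids[n_val:])
    return train, validation


def monte_carlo_cv(labels, evaluate, n_iterations=10_000, seed=0):
    """Repeat the random split and summarize the validation scores.

    evaluate(train_ids, validation_ids) is a hypothetical callback that
    retrains the classifier on each iteration and returns its AUC.
    """
    rng = random.Random(seed)
    scores = []
    for _ in range(n_iterations):
        train, validation = stratified_participant_split(labels, 0.2, rng)
        scores.append(evaluate(train, validation))
    return statistics.mean(scores), statistics.stdev(scores)
```

With 305 participants, a 20% validation fraction yields 61 validation and 244 training participants per iteration, matching the approximate sizes given above.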

In total, six different LDA classifiers were developed: three algorithms to detect impaired swallowing safety (thin liquids; mildly thick liquids; moderately thick liquids) and three to detect impaired swallowing efficiency (thin, mildly and moderately thick liquids). Given the limited availability of impaired swallowing safety data for the moderately thick consistency, it was decided to use combined moderately and extremely thick stimuli in the training set of the moderately thick safety classifier; the corresponding validation sets included only moderately thick consistency swallows.

Analysis of the cumulative frequency of impaired swallowing safety by bolus number, across the series of thin liquid boluses administered, showed that new occurrences continued to accumulate from the first bolus (7.5%) to the second (15%), third (18.5%) and fourth bolus (22.2%), with relatively small incremental detection rates on the fifth (23.4%) and sixth (25.6%) boluses. Given this finding, the mean predicted probability of impairment was summarized at the participant level using up to four boluses for thin and up to three boluses for other consistencies. The ROC at participant level was obtained by comparing these mean predicted probabilities with the participant level class label obtained using the “at least one positive” roll-up rule on the VFSS binary data. Thus, if the VFSS showed a problem on at least one bolus of a given consistency, that participant was classified as having impaired swallowing function on that consistency.

Results

Participants

A total of 344 patients consented to participate in the study. Of these, 12 were excluded based on protocol requirements (see Fig. 2). Videofluoroscopy was performed in 332 participants for whom the demographic characteristics are summarized in Table 1. Complete VFSS and DDS signal data were available for at least 2 boluses for 305 participants. There were no serious adverse events related to the device or the swallowing trial protocol.

Prevalence of Impaired Swallowing Safety and Efficiency in the Study Population

Videofluoroscopy data for 1730 thin, 872 mildly thick, 833 moderately thick and 794 extremely thick boluses were analyzed. Table 2 shows the frequencies of unsafe swallows and efficiency issues by consistency at both the bolus and the participant levels. The number of available data points differs for the safety versus efficiency ratings because the visibility of the airway (necessary for rating safety) on a given recording may have differed from visibility of the valleculae and pyriform sinuses required for rating efficiency. Post hoc examination of the frequency distribution of Penetration–Aspiration Scale rating data by the central laboratory showed that 23 participants (i.e., 7.5%) had silent aspiration (i.e., PAS scores of 8) on at least one thin liquid bolus. The participant-level prevalence of silent aspiration dropped to 5.3% on the mildly thick liquids and < 1% on the moderately and extremely thick liquids.

Accuracy of Classifiers

Tables 3 and 4 summarize the estimated accuracy of the DDS classifiers for swallowing safety and efficiency, respectively. For the primary outcome of detecting impaired swallowing safety on thin liquids, a mean AUC of 80.9% on the ROC was obtained at the bolus level. At the participant level, the mean AUC was 81.5%, with sensitivity (i.e., true positive rate) of 90.4% and specificity (i.e., true negative rate) of 60.0%. Classifier performance was similar for detecting impaired swallowing safety on thicker consistencies. The efficiency classifiers achieved sensitivities of ~ 80% and specificities of 60% across the consistencies tested.

Discussion

In this study, signal processing classifiers were trained using a large dataset collected from a heterogeneous sample of individuals with risk for dysphagia. As the first step in identifying dysphagia, it is desirable for swallow screening methods to have wide applicability and accuracy in patient populations with dysphagia risk related to varied medical conditions and for whom the pathophysiological mechanisms leading to impaired swallowing safety or efficiency may be heterogeneous. Within this broader objective, the eligibility criteria for this particular study excluded individuals with oncological, structural or congenital etiologies of dysphagia, and the definition of impaired swallowing safety was set conservatively as a Penetration–Aspiration Scale score ≥ 3.

The DDS classifiers developed in this study were able to identify impaired swallowing safety on thin liquid swallows with high accuracy. In clinical practice, the achieved classifier performance results would translate to failure to identify impaired swallowing safety in approximately 10% of patients who have it and over-detection in approximately 40% of patients who do not. This degree of over-detection is acceptable in screening, provided that referral for assessment to verify the patient’s swallowing status occurs in a timely manner [40, 41].
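To make these rates concrete, the following sketch converts sensitivity and specificity into expected counts for a screened group. It is a worked illustration only: the function name is hypothetical, and applying the study's participant-level prevalence of 19.7% to a screening population of 1000 patients is an assumption made here for the example, not a result reported by the study.

```python
def screening_outcomes(n_patients, prevalence, sensitivity, specificity):
    """Expected confusion-matrix counts and predictive values."""
    impaired = n_patients * prevalence
    unimpaired = n_patients - impaired
    true_pos = impaired * sensitivity
    false_neg = impaired - true_pos        # impaired but missed
    true_neg = unimpaired * specificity
    false_pos = unimpaired - true_neg      # unimpaired but flagged
    return {
        "true_pos": true_pos,
        "false_neg": false_neg,
        "true_neg": true_neg,
        "false_pos": false_pos,
        "ppv": true_pos / (true_pos + false_pos),
        "npv": true_neg / (true_neg + false_neg),
    }


# Assumed scenario: 1000 screened patients at the study's prevalence.
outcomes = screening_outcomes(1000, 0.197, 0.904, 0.60)
for name, value in outcomes.items():
    print(f"{name}: {value:.3f}")
```

Under these assumptions, roughly 19 of the 197 impaired patients would be missed and roughly 321 of the 803 unimpaired patients would be flagged for further assessment, which is why timely referral after a positive screen matters.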

Several differences between the classifier results of this study and commonly used swallow screening protocols should be noted. First, the results for thin liquids in this study were obtained using a small number of sips (i.e., 4). Second, the results obtained in this project are consistency specific. It is encouraging that similar algorithm performance was achieved for detecting impaired swallowing safety across a range of consistencies. The current data are consistent with previous studies in showing reduced rates of penetration–aspiration with thickened liquids [42,43,44,45]. However, this also meant that a smaller number of impaired signal examples were available for algorithm training for moderately thick liquids. Additionally, protocol-mandated stopping rules did not permit more severely affected participants to receive the thicker consistencies.

Our data corroborate observations from previous studies that the occurrence of penetration–aspiration on a given consistency varies from bolus to bolus in individuals with impaired swallowing safety [32, 33]. This finding has two major implications: (1) more than one swallow should be assessed to determine if a patient can swallow a given consistency safely; and (2) validation of the accuracy of any method for detecting swallowing impairment at the bedside requires direct comparison to a simultaneous reference standard rather than a reference test performed at a different time [26,27,28]. An advantage of using direct comparison to simultaneous videofluoroscopy is that we were able to include verified examples of silent aspiration in the training sets of unsafe swallows in this study.

Given the overall objective of developing a swallow screening instrument that would have broad utility to identify impaired swallowing safety in a heterogeneous sample of patients, no attempts were made to stratify the data by PAS severity beyond the binary groupings of < versus ≥ 3. Similarly, we did not limit the examples of impaired swallowing safety to cases of airway invasion before, during or after the swallow. Although previous studies have identified links between specific swallowing parameters (such as measures of hyolaryngeal excursion) and the features of swallowing accelerometry signals [46, 47], the algorithm training process in this study allowed for a variety of pathophysiological mechanisms to be included in the subset of cases that the device learned to identify as impaired. The study is not powered to allow stratification by severity, timing or mechanism of swallowing impairment; stratifications of this sort would require much larger datasets with adequate representation of the different groupings of interest.

The majority of swallow screening protocols in current use focus on signs of impaired safety and risk of aspiration, without consideration of swallow efficiency. Given this, it is encouraging that sensitivities and specificities of ~ 80 and 60%, respectively, were also obtained for the separate classifier algorithms developed to detect impaired swallowing efficiency using the DDS. Individuals who have impaired swallowing efficiency may require more time to complete a meal, and are thought to be at risk both for post-swallow aspiration and malnutrition [6, 36]. Our data show that swallowing inefficiency is a common finding. Furthermore, the data suggest that residue can be common after swallows of thin liquids. We found no evidence to support the widespread assumption that residue increases with thicker stimuli (Table 2). This finding may be explained by the use of a xanthan gum thickener rather than starch-based thickeners [48], which are commonly used in clinical practice.

Conclusion

In this study, we generated accelerometry signal classification algorithms to detect impaired swallowing safety in patients at risk for dysphagia with high accuracy using a pragmatic DDS protocol involving a series of four thin liquid sips. Additional algorithms to detect impaired swallowing safety with thicker consistencies and to detect impaired swallowing efficiency across the range from thin to moderately thick liquids were also developed. Development of these algorithms represents a critical first step in the process of developing an accurate, non-invasive, accelerometry-based device for swallow screening. The next step will be to validate these algorithms in a prospective study using a novel patient sample. Based on the current study results, a validation study of this sort will require simultaneous collection of screening and reference data. If such a validation study were to be performed with a fixed design in a population with 60% participant-level prevalence of impaired swallowing safety on thin liquids, with at least 30% of the thin liquid swallows displaying the swallowing safety problem in those participants, power calculations based on the results of this study suggest that a minimum sample of 500–600 participants would be required, assuming performance targets of 86% sensitivity, 60% specificity and 90% power. If accurate device performance can be confirmed for detecting impaired swallowing safety and/or impaired swallowing efficiency on a range of liquid consistencies in the broad population of individuals at risk for dysphagia, comparisons to current screening methods, which rely on subjective clinical observations of swallowing difficulty, will also be warranted.

References

Rosenbek JC, Robbins JA, Roecker EB, Coyle JL, Wood JL. A penetration-aspiration scale. Dysphagia. 1996;11:93–8.

Rofes L, Arreola V, Almirall J, Cabre M, Campins L, Garcia-Peris P, Speyer R, Clave P. Diagnosis and management of oropharyngeal Dysphagia and its nutritional and respiratory complications in the elderly. Gastroenterol Res Pract. 2011;2011:818979.

Clave P, Shaker R. Dysphagia: current reality and scope of the problem. Nat Rev Gastroenterol Hepatol. 2015;12:259–70.

Pikus L, Levine MS, Yang YX, Rubesin SE, Katzka DA, Laufer I, Gefter WB. Videofluoroscopic studies of swallowing dysfunction and the relative risk of pneumonia. AJR Am J Roentgenol. 2003;180:1613–6.

Carrion S, Cabre M, Monteis R, Roca M, Palomera E, Serra-Prat M, Clave P. Prevalence and association between oropharyngeal Dysphagia and malnutrition in patients hospitalized in an acute geriatric unit (AGU). Dysphagia. 2011;26(4):479.

Carrion S, Cabre M, Monteis R, Roca M, Palomera E, Serra-Prat M, Rofes L, Clave P. Oropharyngeal Dysphagia is a prevalent risk factor for malnutrition in a cohort of older patients admitted with an acute disease to a general hospital. Clin Nutr. 2015;34:436–42.

Molfenter SM, Steele CM. The relationship between residue and aspiration on the subsequent swallow: an application of the normalized residue ratio scale. Dysphagia. 2013;28(4):494–500.

Altman KW, Yu GP, Schaefer SD. Consequence of Dysphagia in the hospitalized patient: impact on prognosis and hospital resources. Arch Otolaryngol Head Neck Surg. 2010;136:784–9.

Patel DA, Krishnaswami S, Steger E, Conover E, Vaezi MF, Ciucci MR, Francis DO. Economic and survival burden of Dysphagia among inpatients in the United States. Dis Esophagus. 2018;31:1–7.

Arnold M, Liesirova K, Broeg-Morvay A, Meisterernst J, Schlager M, Mono ML, El-Koussy M, Kagi G, Jung S, Sarikaya H. Dysphagia in acute stroke: incidence, burden and impact on clinical outcome. PLoS ONE. 2016;11:e0148424.

Powers WJ, Rabinstein AA, Ackerson T, Adeoye OM, Bambakidis NC, Becker K, Biller J, Brown M, Demaerschalk BM, Hoh B, Jauch EC, Kidwell CS, Leslie-Mazwi TM, Ovbiagele B, Scott PA, Sheth KN, Southerland AM, Summers DV, Tirschwell DL, American Heart Association Stroke C. 2018 guidelines for the early management of patients with acute ischemic stroke: a Guideline for Healthcare Professionals from the American Heart Association/American Stroke Association. Stroke. 2018;49:e46–110.

Smith EE, Kent DM, Bulsara KR, Leung LY, Lichtman JH, Reeves MJ, Towfighi A, Whiteley WN, Zahuranec DB, American Heart Association Stroke C. Effect of Dysphagia screening strategies on clinical outcomes after stroke: a systematic review for the 2018 guidelines for the early management of patients with acute ischemic stroke. Stroke. 2018;49:e123–8.

Boulanger JM, Lindsay MP, Gubitz G, Smith EE, Stotts G, Foley N, Bhogal S, Boyle K, Braun L, Goddard T, Heran M, Kanya-Forster N, Lang E, Lavoie P, McClelland M, O’Kelly C, Pageau P, Pettersen J, Purvis H, Shamy M, Tampieri D, vanAdel B, Verbeek R, Blacquiere D, Casaubon L, Ferguson D, Hegedus Y, Jacquin GJ, Kelly M, Kamal N, Linkewich B, Lum C, Mann B, Milot G, Newcommon N, Poirier P, Simpkin W, Snieder E, Trivedi A, Whelan R, Eustace M, Smitko E, Butcher K, Acute Stroke Management Best Practice Writing G, the Canadian Stroke Best P, Quality Advisory C, in collaboration with the Canadian Stroke C, the Canadian Association of Emergency P: Canadian Stroke Best Practice Recommendations for Acute Stroke Management: Prehospital, Emergency Department, and Acute Inpatient Stroke Care, 6th edn., Update 2018. Int J Stroke: 1747493018786616, 2018.

Sherman V, Flowers H, Kapral MK, Nicholson G, Silver F, Martino R. Screening for Dysphagia in adult patients with stroke: assessing the accuracy of informal detection. Dysphagia. 2018;33(5):662–9.

Lakshminarayan K, Tsai AW, Tong X, Vazquez G, Peacock JM, George MG, Luepker RV, Anderson DC. Utility of Dysphagia screening results in predicting poststroke pneumonia. Stroke. 2010;41:2849–54.

Hinchey JA, Shephard T, Furie K, Smith D, Wang D, Tonn S. Formal Dysphagia screening protocols prevent pneumonia. Stroke. 2005;36:1972–6.

Titsworth WL, Abram J, Fullerton A, Hester J, Guin P, Waters MF, Mocco J. Prospective quality initiative to maximize Dysphagia screening reduces hospital-acquired pneumonia prevalence in patients with stroke. Stroke. 2013;44:3154–60.

Ramsey DJ, Smithard DG, Kalra L. Can pulse oximetry or a bedside swallowing assessment be used to detect aspiration after stroke? Stroke. 2006;37:2984–8.

Bours GJ, Speyer R, Lemmens J, Limburg M, de Wit R. Bedside screening tests vs. videofluoroscopy or fibre optic endoscopic evaluation of swallowing to detect Dysphagia in patients with neurological disorders: systematic review. J Adv Nurs. 2009;65:477–93.

Donovan NJ, Daniels SK, Edmiaston J, Weinhardt J, Summers D, Mitchell PH, American Heart Association Council on Cardiovascular N, Stroke C. Dysphagia screening: state of the art: invitational conference proceeding from the State-of-the-Art Nursing Symposium, International Stroke Conference 2012. Stroke. 2013;44:e24–31.

O’Horo JC, Rogus-Pulia N, Garcia-Arguello L, Robbins J, Safdar N. Bedside diagnosis of Dysphagia: a systematic review. J Hosp Med. 2015;10:256–65.

Kertscher B, Speyer R, Palmieri M, Plant C. Bedside screening to detect oropharyngeal Dysphagia in patients with neurological disorders: an updated systematic review. Dysphagia. 2014;29:204–12.

Brodsky MB, Suiter DM, Gonzalez-Fernandez M, Michtalik HJ, Frymark TB, Venediktov R, Schooling T. Screening accuracy for aspiration using bedside water swallow tests: a systematic review and meta-analysis. Chest. 2016;150:148–63.

Daniels SK, Anderson JA, Willson PC. Valid items for screening Dysphagia risk in patients with stroke: a systematic review. Stroke. 2012;43:892–7.

Steele CM, Molfenter SM, Bailey GL, Cliffe Polacco R, Waito AA, Zoratto DCBH, Chau T. Exploration of the utility of a brief swallow screening protocol with comparison to concurrent videofluoroscopy. Can J Speech-Lang Pathol Audiol. 2011;35:228–42.

Martino R, Silver F, Teasell R, Bayley M, Nicholson G, Streiner DL, Diamant NE. The Toronto Bedside Swallowing Screening Test (TOR-BSST): development and validation of a Dysphagia screening tool for patients with stroke. Stroke. 2009;40:555–61.

Suiter DM, Sloggy J, Leder SB. Validation of the Yale Swallow Protocol: a prospective double-blinded videofluoroscopic study. Dysphagia. 2014;29:199–203.

Trapl M, Enderle P, Nowotny M, Teuschl Y, Matz K, Dachenhausen A, Barinin M. Dysphagia bedside screening for acute-stroke patients: the Gugging swallowing screen. Stroke. 2007;38:2948–52.

Clave P, Arreola V, Romea M, Medina L, Palomera E, Serra-Prat M. Accuracy of the volume-viscosity swallow test for clinical screening of oropharyngeal Dysphagia and aspiration. Clin Nutr. 2008;27:806–15.

Horner J, Massey EW. Silent aspiration following stroke. Neurology. 1988;38:317–9.

Daniels SK, Brailey K, Priestly DH, Herrington LR, Weisberg LA, Foundas AL. Aspiration in patients with acute stroke. Arch Phys Med Rehabil. 1998;79:14–9.

Power ML, Hamdy S, Goulermas JY, Tyrrell PJ, Turnbull I, Thompson DG. Predicting aspiration after hemispheric stroke from timing measures of oropharyngeal bolus flow and laryngeal closure. Dysphagia. 2009;24:257–64.

Bath PM, Scutt P, Love J, Clave P, Cohen D, Dziewas R, Iversen HK, Ledl C, Ragab S, Soda H, Warusevitane A, Woisard V, Hamdy S. Swallowing treatment using pharyngeal electrical stimulation trial I: pharyngeal electrical stimulation for treatment of dysphagia in subacute stroke: a randomized controlled trial. Stroke. 2016;47:1562–70.

Steele CM, Sejdic E, Chau T. Noninvasive detection of thin-liquid aspiration using dual-axis swallowing accelerometry. Dysphagia. 2013;28:105–12.

Pearson WG Jr, Molfenter SM, Smith ZM, Steele CM. Image-based measurement of post-swallow residue: the normalized residue ratio scale. Dysphagia. 2013;28:167–77.

Eisenhuber E, Schima W, Schober E, Pokieser P, Stadler A, Scharitzer M, Oschatz E. Videofluoroscopic assessment of patients with dysphagia: pharyngeal retention is a predictive factor for aspiration. AJR Am J Roentgenol. 2002;178:393–8.

Hastie T, Tibshirani R, Friedman J. The elements of statistical learning: data mining, inference and prediction. 2nd ed. New York: Springer; 2001.

Simon R. Resampling strategies for model assessment and selection. In: Dubitzky W, Granzow M, Berrar D, editors. Fundamentals of data mining in genomics and proteomics. New York: Springer; 2007.

Remesan R, Mathew J. Model data selection and data pre-processing approaches. Hydrological data driven modelling: a case study. New York: Springer; 2014. p. 41–70.

Swets JA. The science of choosing the right decision threshold in high-stakes diagnostics. Am Psychol. 1992;47:522–32.

Samuelson FW. Inference based on diagnostic measures from studies of new imaging devices. Acad Radiol. 2013;20:816–24.

Logemann JA, Gensler G, Robbins J, Lindblad AS, Brandt D, Hind JA, Kosek S, Dikeman K, Kazandjian M, Gramigna GD, Lundy D, McGarvey-Toler S, Miller Gardner PJ. A randomized study of three interventions for aspiration of thin liquids in patients with dementia or Parkinson’s disease. J Speech Lang Hear Res. 2008;51:173–83.

Leonard RJ, White C, McKenzie S, Belafsky PC. Effects of bolus rheology on aspiration in patients with dysphagia. J Acad Nutr Diet. 2014;114:590–4.

Steele CM, Alsanei WA, Ayanikalath S, Barbon CE, Chen J, Cichero JA, Coutts K, Dantas RO, Duivestein J, Giosa L, Hanson B, Lam P, Lecko C, Leigh C, Nagy A, Namasivayam AM, Nascimento WV, Odendaal I, Smith CH, Wang H. The influence of food texture and liquid consistency modification on swallowing physiology and function: a systematic review. Dysphagia. 2015;30:2–26.

Newman R, Vilardell N, Clave P, Speyer R. Effect of bolus viscosity on the safety and efficacy of swallowing and the kinematics of the swallow response in patients with oropharyngeal dysphagia: White Paper by the European Society for Swallowing Disorders (ESSD). Dysphagia. 2016;31:232–49.

Zoratto DCBH, Chau T, Steele CM. Hyolaryngeal excursion as the physiological source of swallowing accelerometry signals. Physiol Meas. 2010;31:843–56.

Dudik JM, Kurosu A, Coyle JL, Sejdic E. Dysphagia and its effects on swallowing sounds and vibrations in adults. Biomed Eng Online. 2018;17:69.

Vilardell N, Rofes L, Arreola V, Speyer R, Clave P. A comparative study between modified starch and xanthan gum thickeners in post-stroke oropharyngeal dysphagia. Dysphagia. 2016;31:169–79.

Acknowledgements

The authors would like to thank Maryam Kadjar Olesen, Head of Global Clinical Operations at Nestlé Health Science, for her contributions to the study. The authors would like to thank Paula Kamman, Nancy Schurhammer and Ryan Ping from RCRI Regulatory and Clinical Research Institute, Minneapolis, MN for their contributions to oversight of data collection, database management and quality control. The authors would like to thank Liza Blumenfeld (Scripps Memorial Hospital, La Jolla, CA), Gintas Krisciunas (Boston Medical Centre, Boston, MA), Andrea Tobochnik (Bronson Healthcare, Kalamazoo, MI), Ka Lun Tam (Bloorview Research Institute, Toronto), Melanie Peladeau-Pigeon, Melanie Tapson, Talia Wolkin and Navid Zohouri-Haghian (Toronto Rehabilitation Institute—University Health Network) for their contributions to data acquisition. The authors would like to thank Carly Barbon, Vivian Chak, Amy Dhindsa, Robbyn Draimin, Natalie Muradian, Ahmed Nagy, Ashwini Namasivayam-MacDonald, Sonya Torreiter, Teresa Valenzano and Ashley Waito (Toronto Rehabilitation Institute—University Health Network) for their contributions to videofluoroscopy rating. The authors would like to thank Jaakko Lähteenmäki, Juha Pajula and co-workers of VTT Technical Research Centre of Finland Ltd. for their contributions to data analysis and input on technical implementation considerations. The authors would like to thank Tom Chau, Helia Mohammadi, Ali Akbar-Samadani and Ka Lun Tam from the PRISM lab, Bloorview Research Institute, Toronto, for their contributions to data analysis. The authors would like to thank Munshiimran Hossain and Andrea Hita from Cytel Inc. for their contributions to data analysis.

Funding

The study was sponsored by Nestec S.A., Vevey, Switzerland, through contracts negotiated through a contract research organization with participating hospitals and directly with Cytel, Massachusetts, USA; VTT Technical Research Centre of Finland, Espoo, Finland; and University Health Network, Toronto. The sponsor was responsible for site management, data collection, database management and data archiving activities.

Author information

Contributions

Catriona M. Steele is the guarantor of the paper and takes responsibility for the integrity of the work as a whole, from inception to published article. The study was designed by Catriona M. Steele and Nestlé (co-authors NM, MJ). Classifier development and analysis were conducted by Nestlé (co-authors NM, MJ) with VTT (co-authors KM, HP), Cytel (co-author RM) and the PRISM lab, Bloorview Research Institute, Toronto. Data were collected by site investigator teams (co-authors SLB, KBT, SL, LFR, and NBS). The statistical analyses were performed by Cytel (co-author RM) according to the statistical analysis plan. The study was guided by an advisory group who interpreted the results (co-authors CMS, PMB, LBG, RLH, DL, KRL, and AM). Preparation, review and approval of the manuscript were performed by all co-authors.

Ethics declarations

Conflict of interest

Philip M. Bath is Stroke Association Professor of Stroke Medicine and a NIHR Senior Investigator. He is a member of a Nestlé Advisory Board for which he receives an honorarium and travel expenses. He is a consultant to Nestec SA and for Phagenesis Ltd (which is partly owned by Nestlé), has given education talks for them, was Chief Investigator of their STEPS trial, is Co-Chief Investigator of their PhEED trial, and receives an honorarium and travel expenses for this work. Larry B. Goldstein is the Ruth L. Works Professor and Chairman, Department of Neurology and Co-Director, Kentucky Neuroscience Institute at the University of Kentucky. He is a member of a Nestlé Advisory Board for which he receives an honorarium and travel expenses. Richard L. Hughes is Professor of Neurology and participates in clinical research sponsored by the NIH and Industry. He is a member of a Nestlé Advisory Board for which he receives an honorarium and travel expenses. Kennedy R. Lees is Professor of Cerebrovascular Medicine at the Institute of Cardiovascular and Medical Sciences, University of Glasgow. He is a consultant for Nestec SA. He has also received consulting fees or research funding from the American Heart Association, Applied Clinical Intelligence, Boehringer Ingelheim, EVER NeuroPharma, Hilicon, Parexel, Translation Medical Academy, University Newcastle (Australia), European Union, IQVIA, National Institutes of Health (USA), Novartis, and Sunovion. Dana Leifer is Associate Professor of Neurology and an NIH StrokeNet Principal Investigator. He is a member of a Nestlé Advisory Board for which he receives an honorarium. Atte Meretoja has consulted for Nestec SA and Phagenesis Ltd. He is a member of a Nestlé Advisory Board for which he receives an honorarium and travel expenses. Luis F. Riquelme is a member of the board of directors for the International Dysphagia Diet Standardisation Initiative. Catriona M. Steele has served on Nestlé expert panels for which she has received honoraria and travel expenses. She is an NIH-funded principal investigator and a member of the board of directors for the International Dysphagia Diet Standardisation Initiative. Nancy B. Swigert is a paid consultant for Nestlé and serves on the Medical Advisory Board of the National Foundation on Swallowing Disorders. Natalia Muehlemann and Michael Jedwab are full-time employees of Nestlé Health Science, Nestec SA. Juha M. Kortelainen and Harri Pölönen are employees of VTT Technical Research Centre of Finland Ltd. Rajat Mukherjee is an employee of Cytel Inc. Susan L. Brady, Kayla Brinkman Theimer and Susan Langmore declare no conflicts of interest.

Ethical Approval

The protocol was approved by the Institutional Review Board (IRB) of each participating medical center.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Steele, C.M., Mukherjee, R., Kortelainen, J.M. et al. Development of a Non-invasive Device for Swallow Screening in Patients at Risk of Oropharyngeal Dysphagia: Results from a Prospective Exploratory Study. Dysphagia 34, 698–707 (2019). https://doi.org/10.1007/s00455-018-09974-5

DOI: https://doi.org/10.1007/s00455-018-09974-5