Abstract

Since the 1970s, scientists have developed statistical methods intended to formalize detection of changes in global climate and to attribute such changes to relevant causal factors, natural and anthropogenic. Detection and attribution (D&A) of climate change trends is commonly performed using a variant of Hasselmann’s “optimal fingerprinting” method, which involves a linear regression of historical climate observations on corresponding output from numerical climate models. However, it has long been known in the field of time series analysis that regressions of “non-stationary” or “trending” variables are, in general, statistically inconsistent and often spurious. When non-stationarity is caused by “integrated” processes, as is likely the case for climate variables, consistency of least-squares estimators depends on “cointegration” of regressors. Using an idealized linear-response-model framework, this study shows that if standard assumptions hold, then the optimal fingerprinting estimator is consistent, and hence robust against spurious regression. In the case of global mean surface temperature (GMST), parameterizing abstract linear response models in terms of energy balance gives this result a physical interpretation. Hypothesis tests conducted using observations of historical GMST and simulation output from 13 CMIP6 general circulation models produced no evidence that the standard assumptions required for consistency were violated. It is therefore concluded that, at least in the case of GMST, detection and attribution of climate change trends is very likely not spurious regression. Furthermore, detection of significant cointegration between observations and model output indicates that the least-squares estimator is “superconsistent”, with better convergence properties than might previously have been assumed.
Finally, a new method has been developed for quantifying D&A uncertainty, exploiting the notion of cointegration to eliminate the need for pre-industrial control simulations.


1 Introduction

Statistical methods for detection and attribution (D&A) of climate change trends have been widely used in climate change studies over the last two decades, and the resulting inferences have informed assessment reports from the Intergovernmental Panel on Climate Change (IPCC) (Mitchell et al. 2001; Hegerl et al. 2007; Bindoff et al. 2013). Formal D&A studies commonly employ some variant of the method known as “optimal fingerprinting”, introduced by Hasselmann (1979, 1997). Optimal fingerprinting frames D&A as the problem of separating the forced component of historical climate observations (i.e. the signal) from internal climate variability (the noise) (Hegerl and Zwiers 2011). In practice, this separation of signal and noise is performed by projecting climate observations onto corresponding simulation output from general circulation models (GCMs), in a procedure analogous to a multivariate linear regression (Allen and Tett 1999). Optimal fingerprinting assumes a regression model of the form
\[\varvec{y} = X\varvec{\beta } + \varvec{e}, \tag{1}\]
where \(\varvec{y}\) denotes historical climate observations; X is a matrix of predicted climate-change signals, typically consisting of simulation output from a GCM; and \(\varvec{e}\) is a composite error term containing internal climate variability noise as well as other sources of uncertainty. Regression coefficients \(\varvec{\beta }\) are known in D&A as “scaling factors”. Detection and attribution inferences depend on obtaining reliable estimates of these scaling factors and establishing their statistical significance. Fingerprinting methods have become increasingly sophisticated due to a succession of proposed refinements, e.g. Hegerl et al. (1996), Hegerl et al. (1997), Allen and Tett (1999), Allen and Stott (2003), Huntingford et al. (2006), Ribes et al. (2009), Ribes et al. (2013), Hannart et al. (2014), Hannart (2016), Katzfuss et al. (2017) and Hannart (2019).

While originally conceived as a multivariate method for use with gridded spatio-temporal datasets, typically requiring application of dimension-reduction techniques, simplified variants of optimal fingerprinting have more recently been applied to time series data, specifically to observations of global mean surface temperature (GMST) (Otto et al. 2015; Rypdal 2015; Haustein et al. 2017). Global mean surface temperature is an important climate variable, both as a predictor of changes in local climate (Sutton et al. 2015), and as the metric of global warming used in communication with policymakers, e.g. the 1.5 and 2.0 degrees Celsius global warming limits of the 2015 Paris Agreement. There is also evidence that the additivity assumption (see Sect. 3) implicit in optimal fingerprinting is more likely to hold for GMST than for other variables such as precipitation (Good et al. 2011).

The present study is motivated by an apparent failure in the canonical D&A literature to explicitly recognize the time-indexed nature of climate datasets, an omission which could potentially draw that literature into question. It has long been known in the field of time series analysis that regressing variables containing time trends comes with a unique set of pitfalls, the most serious of which is the “spurious regression” phenomenon (discussed in detail in Sect. 2). In this paper, the threat to D&A inferences posed by spurious regression will be assessed, using a combination of theoretical argument (Sect. 3) and empirical evidence (Sect. 4). The validity (or otherwise) of optimal fingerprinting studies will be found to depend on a property known as “cointegration”, which may be described informally as a stricter form of correlation arising between time series. Section 4 will also introduce and demonstrate a new method for estimating uncertainty in D&A results, which exploits the notion of cointegration to remove the dependence on pre-industrial control (piControl) simulations (and the strong assumptions they entail).

2 Regression of non-stationary variables

When discussing optimal fingerprinting as applied to global temperatures, it is necessary to introduce some definitions from time series analysis, the most important of which is the notion of “stationarity”. In this paper, a time series variable u(t) is said to be stationary if and only if its mean and variance are finite and do not depend on time t. Such a time series exhibits mean-reverting behaviour. An example of a stationary time series is the first-order autoregressive or AR(1) model
\[u(t) = \rho \, u(t-1) + \varepsilon (t), \tag{2}\]
where \(-1< \rho < 1\) is the lag-one autocorrelation coefficient and \(\varepsilon (t)\) is a white-noise process, commonly called a “shock” or “innovation”. The AR(1) model in Eq. (2) has mean zero and constant variance \(\sigma ^2/(1-\rho ^2)\), where \(\sigma ^2=\mathrm {Var}(\varepsilon )\). Stationary autoregressive processes have been proposed as simple models of internal climate variability (Hasselmann 1976). If a trend in a time series is defined as a change over time in the mean (deterministic trend) or in the variance (stochastic trend), then stationary time series, by definition, do not exhibit trends.
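As a quick numerical check of the stationary variance formula, the sketch below simulates a long AR(1) series using only the Python standard library and compares its sample variance with \(\sigma ^2/(1-\rho ^2)\). The parameter values (\(\rho = 0.6\), \(\sigma = 1\)) and the helper name `simulate_ar1` are illustrative assumptions, not taken from the study.

```python
import random
import statistics


def simulate_ar1(n, rho, sigma=1.0, seed=0):
    """Simulate u(t) = rho * u(t-1) + eps(t) with Gaussian white-noise shocks eps(t)."""
    if not -1.0 < rho < 1.0:
        raise ValueError("an AR(1) process is stationary only for -1 < rho < 1")
    rng = random.Random(seed)
    # Draw u(0) from the stationary distribution so that no burn-in period is needed.
    u = rng.gauss(0.0, sigma / (1.0 - rho**2) ** 0.5)
    series = []
    for _ in range(n):
        u = rho * u + rng.gauss(0.0, sigma)
        series.append(u)
    return series


rho, sigma = 0.6, 1.0
series = simulate_ar1(200_000, rho, sigma)
theoretical = sigma**2 / (1.0 - rho**2)
empirical = statistics.pvariance(series)
print(f"theoretical variance {theoretical:.3f}, sample variance {empirical:.3f}")
print(f"sample mean {statistics.fmean(series):.3f}")
```

Starting from the stationary distribution is a design choice that avoids discarding an initial transient.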

Detection and attribution is concerned with “trending” (non-stationary) climate variables. While the above definition of stationarity is quite prescriptive, the corresponding class of non-stationary time series, i.e. those violating the conditions, is too broad to be practically useful for the purposes of this paper. Instead, attention will be restricted to the class of non-stationary time series known as “integrated” or “difference-stationary”. For a time series v(t) to be difference-stationary, the differenced series \(\Delta v(t)=v(t)-v(t-1)\) must be stationary. The equivalent term “integrated” comes from the fact that v(t) can be constructed by integrating (taking partial sums of) the stationary time series \(\Delta v(t)\). In this paper, integrated will be abbreviated to I(1), where 1, the “order of integration”, denotes the number of times a series must be differenced to achieve stationarity. An example of an I(1) time series, the simple random walk, can be obtained from Eq. (2) by setting \(\rho =1\):
\[v(t) = v(t-1) + \varepsilon (t). \tag{3}\]
The variance of v(t) grows linearly in time, so the series is non-stationary. It may be said that v(t) contains a “unit root” stochastic trend (see Sect. 3).
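The contrast with the stationary case can be seen by simulating many independent random walks: the cross-sectional variance at time t should be close to \(t\sigma ^2\), while differencing each path recovers the stationary shocks. This is a minimal Python sketch with illustrative settings (4000 paths of 100 steps, \(\sigma = 1\)); `random_walk` is a hypothetical helper name.

```python
import random
import statistics


def random_walk(n, rng, sigma=1.0):
    """Partial sums of white noise: v(t) = v(t-1) + eps(t), starting from v(0) = 0."""
    v, path = 0.0, []
    for _ in range(n):
        v += rng.gauss(0.0, sigma)
        path.append(v)
    return path


rng = random.Random(42)
paths = [random_walk(100, rng) for _ in range(4000)]

# Variance across paths at selected times; for sigma = 1 it should grow roughly like t.
var_at = {t: statistics.pvariance([p[t - 1] for p in paths]) for t in (10, 50, 100)}
for t, v in var_at.items():
    print(f"t = {t:3d}: variance {v:6.1f} (theory {t})")

# First differences of every path are the original i.i.d. shocks, hence stationary.
diffs = [b - a for p in paths for a, b in zip(p, p[1:])]
print(f"variance of differences {statistics.pvariance(diffs):.3f} (theory 1)")
```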

The climate variables in D&A studies are hypothesized to be non-stationary, due to the presence of externally forced trends, both natural and anthropogenic. Radiative forcing due to greenhouse gas (GHG) emissions has a natural representation as an integrated process, where the integration is the accumulation of gases in the atmosphere over time. The idea of unit-root stochastic trends has a long history in climate change studies, e.g. Kaufmann and Stern (1997), Stern and Kaufmann (2000), Kaufmann and Stern (2002), Kaufmann et al. (2006), Mills (2008), Kaufmann et al. (2011) and Kaufmann et al. (2013), although it has been disputed (Gay-Garcia et al. 2009). It might further be argued that the processes driving anthropogenic GHG emissions are themselves integrated, where the integration represents accumulation of industrial capacity; however, there is no numerical evidence for significant higher-order integration in annual records of historical GMST (see Sect. 4).

In optimal fingerprinting, observed and simulated realizations of non-stationary variables are commonly regressed on one another using classical estimators such as ordinary least squares (OLS) (Allen and Tett 1999) or total least squares (TLS) (Allen and Stott 2003), depending on the size of the GCM ensemble. However, it has long been known that regressions involving non-stationary variables are susceptible to a phenomenon called “spurious regression”, whereby statistically significant linear relationships are found between completely unrelated time series (e.g. Yule 1926). Granger and Newbold (1974) showed that regressing two independent random walks on one another produces inflated t-statistics and often leads to the detection of a statistically significant relationship when in reality none exists. In general, OLS regressions of I(1) time series are statistically inconsistent, i.e. the coefficient estimates do not converge to their true values in the limit of infinite data, except in the special case where the series are “cointegrated” (Engle and Granger 1987). Two or more I(1) time series are said to cointegrate when there exists a linear combination of the series which is itself stationary. Regressing cointegrated time series using OLS yields coefficient estimates which are not only consistent but “superconsistent”, meaning that their estimation error shrinks in proportion to \(1/n\), where n is the length of the series, rather than at the usual rate of \(1/\sqrt{n}\). Thus the question of whether the regressors in optimal fingerprinting are cointegrated is critical for evaluating the reliability of D&A of climate change trends.
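The Granger and Newbold (1974) result can be reproduced in a few lines. The Python sketch below, which uses illustrative settings (series of length 100, 500 replications, a nominal 5% two-sided test) and hypothetical helper names, regresses independent series on one another and records how often the conventional OLS t-test declares a significant slope. For independent white noise the rejection rate should be close to the nominal 5%; for independent random walks it is far higher.

```python
import math
import random


def ols_slope_tstat(x, y):
    """OLS of y on an intercept and x; returns the slope and its conventional t-statistic."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    # The textbook standard error assumes serially uncorrelated errors, which fails for random walks.
    se = math.sqrt(rss / (n - 2) / sxx)
    return slope, slope / se


def cumulate(shocks):
    """Partial sums of a sequence, turning white noise into a random walk."""
    total, out = 0.0, []
    for e in shocks:
        total += e
        out.append(total)
    return out


def rejection_rate(integrated, n=100, reps=500, seed=1):
    """Fraction of regressions between independent series with |t| > 1.96."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        ex = [rng.gauss(0.0, 1.0) for _ in range(n)]
        ey = [rng.gauss(0.0, 1.0) for _ in range(n)]
        x, y = (cumulate(ex), cumulate(ey)) if integrated else (ex, ey)
        _, t_stat = ols_slope_tstat(x, y)
        hits += abs(t_stat) > 1.96
    return hits / reps


rate_rw = rejection_rate(integrated=True)
rate_wn = rejection_rate(integrated=False)
print(f"independent random walks: {rate_rw:.2f}; independent white noise: {rate_wn:.2f}")
```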

The risk of spurious regression in D&A pertains specifically to the attribution problem. This is because, in the case of detection, p-values are calculated “under the null”, i.e. under an assumption of no climate change. In the absence of climate change, the left-hand side of Eq. (1) would be stationary by definition, and there would be no risk of spurious regression. In the case of attribution, where climate change is taken as given, spurious regression refers to the misattribution of climate trends to one or more candidate factors, meaning that the resulting allocation of blame is inaccurate. Such misattribution does not require the presence of an “exogenous” trend (e.g. caused by a hidden forcing mechanism), but may instead be caused by flawed representation of forced trends included in the climate model. In particular, any discrepancy between true and modelled forcing which accumulates over time (e.g. due to inaccurate data/incomplete understanding of physical processes) has the potential to induce non-stationarity in the error term of the regression equation, leading to inconsistent scaling factor estimates and invalid confidence intervals. Given that climate models are known to differ in their representation of radiative forcings, there is a prima facie case for investigating this possibility (Myhre et al. 2013).

Methods based on the notion of cointegration have been used previously in analyses of climatic time series (Bindoff et al. 2013). Much effort has gone into studying cointegrations between groups of real-world variables, such as temperatures and forcings (Stern 2006; Turasie 2012; Beenstock et al. 2012; Stern and Kaufmann 2014; Pretis et al. 2015; Storelvmo et al. 2016; Estrada and Perron 2017; Bruns et al. 2020) or temperatures and sea level (Schmith et al. 2012). However, little effort has gone into discussing the presence or lack of cointegration between observed and model-simulated realizations of the same climate variable.

This study has two primary aims: firstly, to determine mathematically whether the experimental design and model assumptions of optimal fingerprinting together imply cointegration of the regression and therefore consistency of the least squares estimator; secondly, to investigate whether there is empirical evidence of such a cointegration arising in practice for the GMST variable. The first aim will be addressed in Sect. 3 and key results proved within an idealized linear-response-model framework. It will be shown how, by parameterizing the impulse response as an energy-balance model (EBM), the formulas in the proof can assume physical interpretability in terms of real-world quantities. Section 4 deals with the second aim by means of hypothesis testing, applied to historical observations and output from the latest generation of GCMs. A new method for calculating confidence regions without recourse to piControl simulations will also be introduced. The content of the paper is summarized in Sect. 5.

3 Theoretical reasons for cointegration

This section will assess the consistency of optimal fingerprinting regression in the presence of I(1) non-stationary forcings. To begin with, some definitions are required.

3.1 Impulse-response model definition

Let y denote a climate variable of interest for which historical observations are available. Assuming that a change in y in response to an externally imposed effective radiative forcing (ERF) F may be adequately described by a linear and time-invariant (LTI) impulse-response function, the time series of observations y(t) may be written as an autoregressive moving-average (ARMA) model of arbitrary order \(p,q \ge 0\),

where \(\mu = c/(1-\sum _i \phi _i)\) is variable y’s pre-industrial baseline, i.e. its mean value in the absence of any forcing F; coefficients \(\phi _i\) and \(\theta _i\) are sequences of weights determining the autoregressive (AR) and moving-average (MA) parts of the impulse-response function; and \(\xi (t)\) is a stationary zero-mean stochastic process representing internal climate variability, plus other sources of noise/uncertainty reasonably considered stationary, such as observational error. The definition of ERF is given in Myhre et al. (2013) and is such that the climate system’s response to ERF should be indifferent to the particular forcing agent responsible. The ARMA model in Eq. (4) is very general, incorporating all finite-impulse-response (FIR) models, as well as all infinite-impulse-response (IIR) models of exponential type. Defining the “backshift operator” \(\mathrm {B}\) such that \(\mathrm {B}^i x(t) = x(t-i)\), Eq. (4) may be written

where the rational function
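\[\varvec{\Phi }(\mathrm {B}) = \frac{\varvec{\theta }(\mathrm {B})}{\varvec{\phi }(\mathrm {B})} = \frac{\theta _0 + \theta _1 \mathrm {B} + \dots + \theta _q \mathrm {B}^q}{1 - \phi _1 \mathrm {B} - \dots - \phi _p \mathrm {B}^p}, \tag{6}\]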
is known as the “transfer function”. The time series of radiative forcings F(t) is assumed to be non-stationary with non-stationarity modelled as I(1),
\[F(t) = F(t-1) + \Delta F(t), \tag{7}\]
where the series of forcing increments \(\Delta F(t)\) is a stationary stochastic process. The rationale for modelling \(\Delta F(t)\) as stochastic is the fact that it cannot be predicted from past values of F(t) only, as evidenced by the impact on \(\text {CO}_2\) emissions of the recent SARS-CoV-2 pandemic (Tollefson 2021). Equation (7) is quite general since, beyond the assumption of stationarity, no specific parametric model is assumed for \(\Delta F(t)\). Note that \(\Delta F(t)\) need not have zero mean: for example, in the case of forcing due to exponentially increasing atmospheric \(\text {CO}_2\) concentration, \(\Delta F(t)\) would on average (and indeed almost always) take a positive value. Using the backshift operator,
\[F(t) = \frac{1}{1 - \mathrm {B}}\, \Delta F(t), \tag{8}\]
whence the term “unit-root” non-stationarity originates, as the polynomial in the transfer function’s denominator contains a unit root. To guarantee the existence of a finite climate sensitivity, it is assumed that all roots of the AR polynomial \(\varvec{\phi }(\mathrm {B})\) lie strictly outside the unit circle in the complex plane. Noting that the backshift operator \(\mathrm {B}\) reduces to the identity when the system is in equilibrium, the familiar equilibrium climate sensitivity (ECS) for variable y is then
\[\mathrm {ECS} = \varvec{\Phi }(1)\, F_{2 \times \text {CO}_2}, \tag{9}\]
where \(F_{2 \times \text {CO}_2}\) denotes the increase in radiative forcing associated with a doubling of atmospheric carbon dioxide \(\text {CO}_2\) concentration.
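Because \(\mathrm {B}\) acts as the identity in equilibrium, the equilibrium response is the transfer function evaluated at \(\mathrm {B}=1\), i.e. \(\varvec{\Phi }(1) = \sum _i \theta _i / (1 - \sum _i \phi _i)\). The Python sketch below evaluates this and cross-checks it by iterating the ARMA recursion under a sustained forcing step until it settles. The example uses one-box coefficients \(\phi _1 = e^{-\lambda /C}\), \(\theta _0 = (1 - e^{-\lambda /C})/\lambda\), which follow from an exact one-year solution of a one-box EBM under piecewise-constant forcing (a derivation assumed here), together with illustrative values \(\lambda = 1.2\) W m\(^{-2}\) K\(^{-1}\), \(C = 8\) W year m\(^{-2}\) K\(^{-1}\) and \(F_{2 \times \text {CO}_2} = 3.7\) W m\(^{-2}\). All values and function names are assumptions.

```python
import math


def steady_state_gain(theta, phi):
    """Phi(1) = theta(1) / phi(1) for phi(B) = 1 - sum_i phi_i B^i.

    theta: [theta_0, ..., theta_q]; phi: [phi_1, ..., phi_p].
    Note: phi(1) != 0 only rules out a unit root; stability additionally
    requires every root of phi(z) to lie outside the unit circle.
    """
    phi_at_1 = 1.0 - sum(phi)
    if abs(phi_at_1) < 1e-12:
        raise ValueError("phi(B) has a unit root, so there is no finite sensitivity")
    return sum(theta) / phi_at_1


def step_response(theta, phi, forcing, n_steps):
    """Anomaly from y(t) = sum_i phi_i y(t-i) + sum_j theta_j F(t-j), with a step F switched on at t = 0."""
    y = []
    for t in range(n_steps):
        ar = sum(p * y[t - i] for i, p in enumerate(phi, start=1) if t - i >= 0)
        ma = sum(th * forcing for j, th in enumerate(theta) if t - j >= 0)
        y.append(ar + ma)
    return y


# Illustrative one-box EBM coefficients (assumed values, not from the study).
lam, heat_cap, f2x = 1.2, 8.0, 3.7
a = math.exp(-lam / heat_cap)
theta, phi = [(1.0 - a) / lam], [a]

ecs = steady_state_gain(theta, phi) * f2x   # equals f2x / lam for this model
settled = step_response(theta, phi, f2x, 500)[-1]
print(f"ECS from Phi(1): {ecs:.4f} K; step response after 500 years: {settled:.4f} K")
```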

3.2 Optimal fingerprinting experimental design

Consider an optimal fingerprinting study where observed changes in y are to be attributed to a set of p candidate forcings \(F_1, \dots , F_p\). Using the ARMA model in Eq. (4) and the backshift operator notation, the study’s experimental design may be written

The equation for observations y(t) is unchanged. New variables \(x_i\) denote output from climate model runs (or ensembles thereof) where forcings \(F_i\) have been applied individually. If no important forcing factors are missing from the candidate set, and if there are no interactions between forcing factors, it may be assumed that the total forcing F driving the observed trend in y has the decomposition \(F = F_1 + \dots + F_p\). This is the “additivity assumption” of optimal fingerprinting. Another fundamental assumption of optimal fingerprinting is that radiative forcings driving model runs \(x_i\) have the correct temporal structure, i.e. are identical to their real-world counterparts up to multiplicative constants \(\pi _i\). In practice this assumption may be relaxed using errors-in-variables (EIV) methods, but at the cost of introducing further assumptions such as model exchangeability (Huntingford et al. 2006). Note that, in Eqs. (11) to (12), the climate model is not assumed to perfectly reproduce the properties of the true climate. In general, the climate model may have a different mean \(\mu ' \ne \mu\), a different noise process \(\varepsilon ' \ne \varepsilon\), and an impulse response differing from the truth in shape and scale \(\varvec{\Phi }' \ne \varvec{\Phi }\).

3.3 Consistency of the least squares estimator

If at least one of the candidate forcings is I(1) non-stationary then a multiple regression of y on \(x_1, \dots , x_p\) is integrated on both sides of the equation. An integrated regression of this type is known to be consistent if and only if the I(1) variables are cointegrated (Engle and Granger 1987). To establish consistency of optimal fingerprinting, as described by Eqs. (10) to (12), it is therefore necessary and sufficient to prove that y and \(x_1, \dots , x_p\) cointegrate. From the definition of cointegration, this may be achieved by proving the existence of a linear combination of the \(x_i\) which, when subtracted from y, yields a stationary process.

Lemma

The rational transfer function \(\varvec{\Phi }(\mathrm {B}) = \varvec{\theta }(\mathrm {B}) / \varvec{\phi }(\mathrm {B})\) permits the following decomposition:
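\[\varvec{\Phi }(\mathrm {B}) = \varvec{\Phi }(1) + (1 - \mathrm {B})\, \frac{\varvec{\psi }(\mathrm {B})}{\varvec{\omega }(\mathrm {B})}, \tag{13}\]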
where \(\varvec{\psi }(\mathrm {B})\) and \(\varvec{\omega }(\mathrm {B})\) are polynomial operators, with \(\varvec{\omega }(\mathrm {B})\) containing no unit root.

Proof

Define the abstract rational function
\[\varvec{\Omega }(z) = \varvec{\Phi }(z) - \varvec{\Phi }(1), \tag{14}\]
where \(\varvec{\Phi }(z) = \varvec{\theta }(z) / \varvec{\phi }(z)\) as before. Since \(\varvec{\phi }(z)\) has no unit root, \(\varvec{\Phi }(1)\) is a finite constant, and it follows that \(\varvec{\Omega }(z)\) has no pole at \(z=1\). From Eq. (14) it may be seen that \(\varvec{\Omega }(1) = 0\). Thus \(\varvec{\Omega }(z)\) may be factorized
\[\varvec{\Omega }(z) = (1 - z)\, \frac{\varvec{\psi }(z)}{\varvec{\omega }(z)}, \tag{15}\]
where \(\varvec{\omega }(z)\) contains no unit root. \(\square\)
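The constructive step in the proof, dividing the numerator of \(\varvec{\Omega }(z)\) by \((1-z)\), can be checked numerically. The Python sketch below takes \(\varvec{\omega }(z) = \varvec{\phi }(z)\), which is one valid choice because \(\varvec{\phi }\) has no unit root (the text does not prescribe a particular \(\varvec{\omega }\)), writes \(\varvec{\Omega }(z) = [\varvec{\theta }(z) - \varvec{\Phi }(1)\varvec{\phi }(z)]/\varvec{\phi }(z)\), and obtains \(\varvec{\psi }(z)\) by synthetic division. The ARMA(2, 1) coefficients in the example are arbitrary illustrative values whose AR roots (2 and 5) lie outside the unit circle; the function names are hypothetical.

```python
def poly_eval(coeffs, z):
    """Evaluate sum_k coeffs[k] * z**k using Horner's rule (works for complex z)."""
    acc = 0.0
    for c in reversed(coeffs):
        acc = acc * z + c
    return acc


def decompose(theta, phi):
    """Return (gain, psi, omega) with Phi(z) = gain + (1 - z) * psi(z) / omega(z).

    theta: numerator coefficients [theta_0, ..., theta_q] in increasing powers of z
    phi:   AR weights [phi_1, ..., phi_p], so phi(z) = 1 - phi_1 z - ... - phi_p z^p
    """
    omega = [1.0] + [-p for p in phi]                       # choice made here: omega(z) = phi(z)
    gain = poly_eval(theta, 1.0) / poly_eval(omega, 1.0)    # Phi(1)
    size = max(len(theta), len(omega))
    theta_p = list(theta) + [0.0] * (size - len(theta))
    omega_p = omega + [0.0] * (size - len(omega))
    # Numerator of Omega(z) = Phi(z) - Phi(1) over the common denominator phi(z).
    num = [t - gain * w for t, w in zip(theta_p, omega_p)]
    # Dividing num(z) by (1 - z): the quotient coefficients are running partial sums.
    psi, running = [], 0.0
    for c in num[:-1]:
        running += c
        psi.append(running)
    remainder = running + num[-1]                           # equals num(1), zero up to rounding
    assert abs(remainder) < 1e-10, "num(z) should vanish at z = 1"
    return gain, psi, omega


theta, phi = [0.5, 0.2], [0.7, -0.1]
gain, psi, omega = decompose(theta, phi)
print("Phi(1) =", gain, "psi coefficients =", psi)
```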

Theorem

There exists a p-vector of coefficients \((\beta _1, \dots , \beta _p)'\) such that the linear combination
\[r(t) = y(t) - \sum _{j=1}^{p} \beta _j x_j(t) \tag{16}\]
is a stationary time series. Specifically, r(t) is stationary when
\[\beta _j = \frac{\varvec{\Phi }(1)}{\pi _j \varvec{\Phi }'(1)} \tag{17}\]
for all j in \(1, \dots , p\).

Proof

Applying the lemma to Eq. (10) yields

Substituting Eq. (8) into (18) gives

Observe that all terms on the right-hand side are stationary except for \(\varvec{\Phi }(1) F(t)\), so the non-stationary component of the forced response is simply a scaled version of the forcing series. This holds similarly for series of model output \(x_i\) and their respective forcings. It therefore follows that the non-stationarity in y due to forcing \(F_i\) may be eliminated by subtracting an appropriately scaled version of the corresponding model output series \(x_i\). Expressions for the scaling factors \(\beta _i\) in Eq. (17) are readily obtained by considering the relative magnitudes of the non-stationary components of the forced responses. \(\square\)

Thus it has been established that the optimal fingerprinting regression described in this section is cointegrated, given standard model assumptions, and may be consistently estimated using OLS. The presence of cointegration also renders the OLS estimator superconsistent (Engle and Granger 1987). In practice, the experimental design of a D&A study can be more complicated: GCM simulations are often run with linearly independent combinations of forcing factors, rather than each forcing being applied separately, in order to reduce collinearity of the forced responses (Jones et al. 2016; Jones and Kennedy 2017). Due to the additivity assumption of optimal fingerprinting, the reasoning applied in this section holds similarly in the case of linear combinations of forcings.
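The theorem can be illustrated by simulation. The Python sketch below is a toy version of the experimental design built from explicitly assumed ingredients: two I(1) forcings (a random walk with positive drift standing in for GHG forcing and a driftless random walk for everything else), a “true” ARMA(1, 0) impulse response, a deliberately different ARMA(2, 1) “model” response, multiplicative forcing errors \(\pi _j\), and white noise standing in for internal variability. It computes \(\beta _j = \varvec{\Phi }(1)/(\pi _j \varvec{\Phi }'(1))\) and checks that the mean of \(r(t)\) does not drift, whereas a 10% error in \(\beta _1\) leaves a clear trend. All parameter values and function names are hypothetical.

```python
import random
import statistics


def arma_filter(theta, phi, x):
    """Apply Phi(B) = theta(B) / phi(B) to x with zero (pre-industrial) initial conditions."""
    y = []
    for t in range(len(x)):
        ar = sum(p * y[t - i] for i, p in enumerate(phi, start=1) if t >= i)
        ma = sum(th * x[t - j] for j, th in enumerate(theta) if t >= j)
        y.append(ar + ma)
    return y


def gain(theta, phi):
    """Steady-state gain Phi(1)."""
    return sum(theta) / (1.0 - sum(phi))


def i1_forcing(n, drift, sd, rng):
    """I(1) forcing: F(t) = F(t-1) + dF(t), with stationary increments dF(t) ~ N(drift, sd^2)."""
    f, out = 0.0, []
    for _ in range(n):
        f += rng.gauss(drift, sd)
        out.append(f)
    return out


def half_mean_shift(series):
    """Difference between the means of the second and first halves of a series."""
    h = len(series) // 2
    return statistics.fmean(series[h:]) - statistics.fmean(series[:h])


rng = random.Random(7)
n = 2000
forcings = [i1_forcing(n, 0.04, 0.1, rng), i1_forcing(n, 0.0, 0.1, rng)]
true_resp = ([0.25], [0.8])               # assumed "real-world" Phi, gain 1.25
model_resp = ([0.1, 0.05], [1.2, -0.3])   # assumed GCM Phi', gain 1.5
pis = [0.9, 1.1]                          # assumed forcing scale errors pi_j

total = [a + b for a, b in zip(*forcings)]
y = [14.0 + v + rng.gauss(0, 0.1) for v in arma_filter(*true_resp, total)]
xs = []
for pi, fj in zip(pis, forcings):
    scaled = [pi * f for f in fj]         # model forcing = pi_j * F_j
    xs.append([13.5 + v + rng.gauss(0, 0.1) for v in arma_filter(*model_resp, scaled)])

betas = [gain(*true_resp) / (pi * gain(*model_resp)) for pi in pis]


def residual(bs):
    """r(t) = y(t) - sum_j b_j x_j(t)."""
    return [yt - sum(b * x[t] for b, x in zip(bs, xs)) for t, yt in enumerate(y)]


r = residual(betas)
r_wrong = residual([1.1 * betas[0], betas[1]])
print("beta_j:", [round(b, 4) for b in betas])
print(f"mean shift: y {half_mean_shift(y):.2f}, r {half_mean_shift(r):.3f}, "
      f"r with 10% error in beta_1 {half_mean_shift(r_wrong):.2f}")
```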

3.4 Energy-balance model parameterization

The result presented above holds for a general LTI impulse-response model of the form given in Eq. (4). By choosing a suitable parameterization for the impulse response, this result can be given some physical interpretability. For example, when variable y denotes GMST, the impulse response may be parameterized as a k-box EBM, which is known to have a discrete-time representation as an ARMA(k, \(k-1\)) filter (Cummins et al. 2020a). In the simplest case, when \(k=1\), the EBM reduces to a single ordinary differential equation,
\[C \frac{\mathrm {d}T}{\mathrm {d}t} = F(t) - \lambda \left( T(t) - T_0 \right), \tag{21}\]
where T (K) denotes GMST, \(T_0\) (K) is the pre-industrial baseline temperature, C (W year m\(^{-2}\) K\(^{-1}\)) is a heat capacity, and \(\lambda\) (W m\(^{-2}\) K\(^{-1}\)) is the climate feedback parameter. When \(k>1\) the GMST “box” is coupled to a system of additional boxes representing the heat capacity of the deep ocean. Recent studies have identified \(k=3\) as the optimal EBM complexity for reproducing the thermal characteristics of recent-generation GCMs (Caldeira and Myhrvold 2013; Tsutsui 2017; Fredriksen and Rypdal 2017; Cummins et al. 2020b). Unfortunately, analytical solutions to k-box models quickly become quite complicated, even for \(k=2\) (Geoffroy et al. 2013), so in this illustrative example equations are shown for the one-box model only.

If radiative forcing is assumed constant between timesteps, i.e. \(F(t) = F(s)\) for \(s \in (t-1, t]\), Eq. (21) can be discretized and written in the form of Eq. (10):

which may be decomposed into

The non-stationary component of T(t) is simply the input forcing F(t) scaled by the climate sensitivity parameter \(\lambda ^{-1}\), while the stationary component is an AR(1)-filtered version of the forcing increment series \(\Delta F(t)\). The AR(1) filter is stationary because \(\lambda\) and C are both strictly positive. For EBMs with \(k>1\) the ARMA filter applied to \(\Delta F(t)\) in Eq. (24) will have higher-order polynomials in the numerator and denominator; however, the non-stationary component will be unchanged, as this term depends only on the climate feedback parameter \(\lambda\).
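The one-box decomposition can be verified numerically. Under the stated assumption that F is constant on each interval \((t-1, t]\), solving Eq. (21) exactly over one year gives \(T(t) - T_0 = a\,[T(t-1) - T_0] + (1-a)F(t)/\lambda\) with \(a = e^{-\lambda /C}\). Subtracting \(F(t)/\lambda\) from both sides shows that \(D(t) = T(t) - T_0 - F(t)/\lambda\) obeys \(D(t) = a\,D(t-1) - (a/\lambda )\Delta F(t)\), so \(T(t) = T_0 + F(t)/\lambda - (a/\lambda )\,s(t)\) with \(s(t) = a\,s(t-1) + \Delta F(t)\). This derivation is sketched here for illustration rather than copied from the paper’s displayed equations. The Python code below checks the identity and compares the recursion with a fine-step Euler integration of the ODE; the parameter values and forcing path are assumptions.

```python
import math
import random


def one_box_discrete(forcing, lam, heat_cap, t0=0.0):
    """Exact one-year update of C dT/dt = F - lam (T - T0) with F held constant on (t-1, t]."""
    a = math.exp(-lam / heat_cap)
    temps, anomaly = [], 0.0          # start in pre-industrial equilibrium, T = T0
    for f in forcing:
        anomaly = a * anomaly + (1.0 - a) * f / lam
        temps.append(t0 + anomaly)
    return temps


def decomposition(forcing, lam, heat_cap, t0=0.0):
    """T0 + F(t)/lam (non-stationary part) - (a/lam) s(t), with s(t) = a s(t-1) + dF(t)."""
    a = math.exp(-lam / heat_cap)
    out, s, f_prev = [], 0.0, 0.0     # F = 0 before the first step
    for f in forcing:
        s = a * s + (f - f_prev)
        out.append(t0 + f / lam - (a / lam) * s)
        f_prev = f
    return out


def one_box_euler(forcing, lam, heat_cap, t0=0.0, substeps=1000):
    """Brute-force Euler integration of the ODE, as an independent check."""
    dt, temp, temps = 1.0 / substeps, t0, []
    for f in forcing:
        for _ in range(substeps):
            temp += dt * (f - lam * (temp - t0)) / heat_cap
        temps.append(temp)
    return temps


rng = random.Random(3)
forcing, f = [], 0.0
for _ in range(300):                  # assumed I(1) forcing with positive drift
    f += rng.gauss(0.03, 0.1)
    forcing.append(f)

lam, heat_cap, t0 = 1.2, 8.0, 287.0   # illustrative parameter values
exact = one_box_discrete(forcing, lam, heat_cap, t0)
split = decomposition(forcing, lam, heat_cap, t0)
euler = one_box_euler(forcing, lam, heat_cap, t0)
print("max |recursion - decomposition|:", max(abs(p - q) for p, q in zip(exact, split)))
print("max |recursion - Euler|:", max(abs(p - q) for p, q in zip(exact, euler)))
```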

Returning to the question of D&A, let y(t) and x(t) denote time series of observed and GCM-simulated historical GMST, driven by forcing series F(t) and \(\pi F(t)\) respectively. If temperature series y(t) and x(t) are adequately described by k-box EBMs (not necessarily of the same order) with respective climate feedback parameters \(\lambda\) and \(\lambda '\), then it follows from the theorem that
\[r(t) = y(t) - \frac{\lambda '}{\pi \lambda }\, x(t) \tag{25}\]
is a stationary time series. It also follows that the estimator \({\hat{\beta }}_{\mathrm {OLS}}\) obtained by an OLS regression of y(t) on x(t) is a superconsistent estimator of \(\beta = \lambda '/(\pi \lambda )\) (Engle and Granger 1987).
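Superconsistency can be seen in a small Monte Carlo experiment. The Python sketch below simulates observed and “model” GMST anomalies from two different one-box EBMs (illustrative parameters \(\lambda = 1.2\), \(C = 8\) for the truth; \(\lambda ' = 1.0\), \(C' = 10\) for the model; \(\pi = 1.1\)), driven by a driftless random-walk forcing plus white observational noise. It regresses y on x by OLS and reports the mean absolute error of \({\hat{\beta }}_{\mathrm {OLS}}\) around \(\lambda '/(\pi \lambda )\). If the error shrinks like \(1/n\), a 16-fold increase in n should reduce it roughly 16-fold, compared with 4-fold at the usual \(1/\sqrt{n}\) rate. All settings and helper names are assumptions for illustration.

```python
import math
import random
import statistics


def one_box(forcing, lam, heat_cap):
    """Exact annual discretization of a one-box EBM (temperature anomaly, zero initial state)."""
    a = math.exp(-lam / heat_cap)
    temp, out = 0.0, []
    for f in forcing:
        temp = a * temp + (1.0 - a) * f / lam
        out.append(temp)
    return out


def ols_slope(x, y):
    """Slope from an OLS regression of y on an intercept and x."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx


lam, cap = 1.2, 8.0          # assumed "true" climate
lam_m, cap_m = 1.0, 10.0     # assumed GCM
pi = 1.1                     # assumed forcing scale error in the GCM
beta_true = lam_m / (pi * lam)


def mean_abs_error(n, reps=100, seed=11):
    """Mean |beta_hat - beta| over independent simulated histories of length n."""
    rng = random.Random(seed)
    errors = []
    for _ in range(reps):
        forcing, f = [], 0.0
        for _ in range(n):
            f += rng.gauss(0.0, 0.2)           # driftless I(1) forcing
            forcing.append(f)
        y = [v + rng.gauss(0, 0.1) for v in one_box(forcing, lam, cap)]
        model_forcing = [pi * fv for fv in forcing]
        x = [v + rng.gauss(0, 0.1) for v in one_box(model_forcing, lam_m, cap_m)]
        errors.append(abs(ols_slope(x, y) - beta_true))
    return statistics.fmean(errors)


errs = {n: mean_abs_error(n) for n in (100, 400, 1600)}
for n, e in errs.items():
    print(f"n = {n:4d}: mean |beta_hat - beta| = {e:.5f}")
```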

4 Empirical evidence

In the previous section, it was established that, under certain conditions, the variables in optimal fingerprinting regression are provably cointegrated, implying consistency of least-squares parameter estimation. Though cointegration is predicted by the theory, whether it arises in reality will depend on the validity of the model assumptions. Two of the main assumptions used to obtain results in Sect. 3 are standard in optimal fingerprinting:

1. additivity, that the combined effect of multiple forcing factors is the sum of their effects had they been applied separately;
2. correct forcing specification, that radiative forcings in GCMs have correct temporal structure up to a multiplicative constant.

Identifying these assumptions as necessary prerequisites for cointegration of GCM output and historical observations allows them to be assessed using numerical cointegration tests. If there is strong numerical evidence of cointegration, then this gives no reason to doubt the validity of assumptions 1 and 2, and by extension the consistency of optimal fingerprinting. On the other hand, should significant cointegration fail to be detected, then the possibility of violated assumptions, spurious regression and meaningless results cannot be discounted without further investigation.

Two further assumptions were made in Sect. 3 which are non-standard in optimal fingerprinting. Firstly, it was assumed that non-stationary forcings are I(1), for reasons set out in Sect. 2. Since the true underlying forcing series are not directly observable, it is infeasible to assess this assumption directly. However, as with the standard optimal fingerprinting assumptions above, the idealized concept of I(1) forcings may be shown “not inconsistent” with observation in the event that significant cointegration is detected. The second non-standard assumption is that of LTI impulse responses, which may be seen as a strengthening of the standard additivity assumption. To limit the influence of this strengthening, numerical results in this section have been calculated using the GMST climate variable, whose response to a radiative forcing perturbation is known to be well-modelled using LTI impulse responses (Li and Jarvis 2009; Good et al. 2011; Geoffroy et al. 2013).

4.1 Data

The numerical analyses in this section were performed using observed and GCM-simulated time series of GMST, averaged annually (Jan–Dec) for the period 1880–2014.

Observational datasets were, in alphabetical order: Berkeley Earth (Rohde and Hausfather 2020), Cowtan and Way 2.0 (Cowtan and Way 2014), GISTEMP v4 (Lenssen et al. 2019; GISTEMP Team 2021), HadCRUT5 (Morice et al. 2021) and NOAAGlobalTemp V5 (Smith et al. 2008; Huai-Min Zhang et al. 2019). The choice of observational dataset was found not to affect hypothesis test results. The results presented here were calculated using HadCRUT5; however, the whole analysis may be re-run for the other observational datasets by changing a single line of code (see Data Availability statement for details).

Predicted climate change signals were calculated using simulation output from 13 GCMs of the CMIP6 generation (Eyring et al. 2016). The chosen models are from modelling centres that contributed runs to the DAMIP project (Gillett et al. 2016). For each GCM, ensemble-mean annual-GMST time series were calculated for the historical and hist-GHG experiments. These two forcing scenarios were chosen because GHG-attributable warming is of primary interest, and Jones et al. (2016) recommend a two-way attribution of this form on the grounds of robustness. Table 1 gives the respective sizes of the historical and hist-GHG ensembles for each GCM, as well as the corresponding model citations.

4.2 Cointegration tests

Let y denote observed historical GMST and let \(x_1,x_2\) denote GCM-predicted signals corresponding to the historical and hist-GHG experiments respectively. The theory in Sect. 3 predicts that the time series \(y(t), x_1(t), x_2(t)\) are cointegrated. A simple test for cointegration consists of fitting the linear regression model
\[y(t) = \beta _0 + \beta _1 x_1(t) + \beta _2 x_2(t) + \varepsilon (t) \tag{26}\]
using OLS and then testing the series of residuals \({\hat{\varepsilon }}(t)\) for stationarity (Engle and Granger 1987). Residual stationarity may be tested using the procedure of Dickey and Fuller (1979), whereby the autoregression

is estimated using OLS and the t-statistic corresponding to \({\hat{\delta }}_1\), denoted \({\hat{\tau }}_c\), compared with a relevant quantile of the reference “Dickey–Fuller” distribution. The null hypothesis \(H_0\) is that no cointegrating relationship exists between \(y(t), x_1(t), x_2(t)\) and the residuals exhibit unit-root non-stationarity. A significant negative estimate \({\hat{\delta }}_1\) provides evidence of mean-reverting residuals and leads to a rejection of \(H_0\) in favour of the alternative hypothesis: that the residuals are stationary and series \(y(t), x_1(t), x_2(t)\) cointegrate. The appropriate reference distribution depends on the number \(N=3\) and length \(n=135\) of potentially I(1) time series being regressed in Eq. (26). Using the third-order approximation formula in MacKinnon (2010), the critical value for a cointegration test at the one-percent level is \(\tau _c = -4.40\). It should be noted that the I(1) assumption refers to the degree of differencing required to achieve stationarity and is best regarded as an upper bound on the level of trendiness anywhere in the time series. The above cointegration test is therefore also valid for I(0) data: the results would just be more conservative.
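The two-step procedure can be sketched in Python with the standard library. The sketch fits Eq. (26) by OLS (solving the normal equations directly), then runs a Dickey–Fuller regression of the differenced residuals on the lagged residuals and compares the t-statistic with \(\tau _c = -4.40\). The Dickey–Fuller regression here has no constant and no lagged differences; that specification, the simulated toy series (not GMST) and all function names are assumptions of this sketch.

```python
import math
import random


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ols_residuals(y, regressors):
    """Residuals from OLS of y on an intercept plus the given regressor series."""
    rows = [[1.0] + [x[t] for x in regressors] for t in range(len(y))]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yt for r, yt in zip(rows, y)) for i in range(k)]
    coef = solve(xtx, xty)
    return [yt - sum(c * v for c, v in zip(coef, r)) for r, yt in zip(rows, y)]


def engle_granger_tau(y, regressors):
    """t-statistic of delta in de(t) = delta * e(t-1) + error (no constant, no lagged differences)."""
    e = ols_residuals(y, regressors)
    lag, diff = e[:-1], [b - a for a, b in zip(e, e[1:])]
    sxx = sum(v * v for v in lag)
    delta = sum(u * v for u, v in zip(lag, diff)) / sxx
    s2 = sum((d - delta * v) ** 2 for v, d in zip(lag, diff)) / (len(diff) - 1)
    return delta / math.sqrt(s2 / sxx)


def walk(n, rng):
    """Gaussian random walk of length n."""
    out, s = [], 0.0
    for _ in range(n):
        s += rng.gauss(0.0, 1.0)
        out.append(s)
    return out


TAU_CRIT_1PCT = -4.40    # N = 3 series, n = 135, MacKinnon (2010) value quoted in the text
rng = random.Random(5)
n = 135

# Cointegrated toy case: y is a linear combination of two random walks plus AR(1) noise.
x1, x2 = walk(n, rng), walk(n, rng)
noise, u = [], 0.0
for _ in range(n):
    u = 0.3 * u + rng.gauss(0.0, 0.5)
    noise.append(u)
y = [0.5 + 0.8 * a + 0.3 * b + c for a, b, c in zip(x1, x2, noise)]
tau_coint = engle_granger_tau(y, [x1, x2])

# Null case: three independent random walks, repeated to estimate the rejection rate.
rejections = sum(
    engle_granger_tau(walk(n, rng), [walk(n, rng), walk(n, rng)]) < TAU_CRIT_1PCT
    for _ in range(200)
)
print(f"tau (cointegrated) = {tau_coint:.2f}; reject H0: {tau_coint < TAU_CRIT_1PCT}")
print(f"rejection rate under H0: {rejections / 200:.3f}")
```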

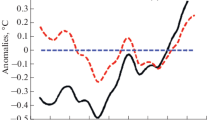

Tests of this form were performed for combinations of the HadCRUT5 historical observations with output from each of the 13 CMIP6 GCMs considered in this study. Figure 1 shows time series of residuals \({\hat{\varepsilon }}(t)\) from the fitted regression in Eq. (26), with test statistics \({\hat{\tau }}_c\) and associated p-values. It can be seen from the residual plots that all 13 time series exhibit strong mean-reverting behaviour. This is confirmed by the results of the cointegration tests: the null hypothesis of “no cointegration” was rejected at the one-percent level for all 13 GCMs.

The residual time series in Fig. 1 share common features, such as an apparent bump around the year 1940. This is as expected. From the theorem in Sect. 3 it follows that the regression residuals in Eq. (26) include contributions from the stationary components of the forced trends in \(y(t), x_1(t), x_2(t)\) (i.e. those terms involving \(\Delta F(t)\)), as well as from internal climate variability in those series. Since the GCMs have similar impulse responses, and since the realization of internal variability in HadCRUT5 is common to all 13 regressions, the only truly independent contribution to each residual series comes from that GCM’s realizations of internal variability.

4.3 Attribution of surface temperature warming

Having detected significant cointegration of \(y(t), x_1(t), x_2(t)\), it follows that the coefficients \(\varvec{\beta } = (\beta _1, \beta _2)'\) in Eq. (26) may be consistently estimated using OLS. However, the regression residuals (see Fig. 1) are serially correlated, meaning that the usual formulas for calculating standard errors and confidence regions are invalid. Optimal fingerprinting studies commonly address this problem by estimating the covariance structure of internal climate variability from a GCM’s piControl simulation (Allen and Tett 1999). This approach ignores the stationary forced component of the residuals, which arises due to differences between the impulse responses of GCMs and the true climate. Using piControl also relies on GCMs accurately simulating the pre-industrial climate, which cannot be verified through observation. The use of piControl for hypothesis testing in optimal fingerprinting has recently been criticized (McKitrick 2021).

An alternative way to avoid the problem of serially correlated residuals, without introducing dependence on piControl simulations, is to fit a dynamic regression model that includes lagged versions of the time series \(y(t), x_1(t), x_2(t)\) (Hendry and Juselius 2000). Fitting dynamic regressions of the form

\[ y(t) = \beta _0' + \beta _1' x_1(t) + \beta _2' x_2(t) + \beta _3' y(t-1) + \beta _4' x_1(t-1) + \beta _5' x_2(t-1) + \varepsilon '(t) \tag{28} \]

using OLS yields serially uncorrelated residual series for each of the 13 GCMs. Standard normal distribution theory may then be assumed to hold asymptotically for estimates of the coefficients \(\varvec{\beta }'=(\beta _1',\dots ,\beta _5')'\) in Eq. (28).
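A sketch of the dynamic regression in Eq. (28) follows. It again uses hypothetical simulated data: the data-generating process and the helper names are assumptions and are not the study's code. Because the simulated disturbance is AR(1), the static regression leaves autocorrelated residuals. Adding one lag of each series absorbs that structure. In this toy set-up, the true dynamic coefficients are \((\beta _0',\dots ,\beta _5') = (0.25, 1.0, 0.3, 0.5, -0.5, -0.15)\) by construction.

```python
import random


def ols(X, y):
    """Least-squares coefficients from the normal equations (Gauss-Jordan elimination)."""
    k = len(X[0])
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * v for r, v in zip(X, y))] for i in range(k)]
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(A[r][c]))  # partial pivoting
        A[c], A[p] = A[p], A[c]
        for r in range(k):
            if r != c:
                m = A[r][c] / A[c][c]
                A[r] = [a - m * b for a, b in zip(A[r], A[c])]
    return [A[i][k] / A[i][i] for i in range(k)]


def simulate(n, seed=1, phi=0.5):
    """Hypothetical cointegrated data: random-walk regressors, AR(1) noise in y."""
    rng = random.Random(seed)
    x1, x2, y = [], [], []
    a = b = u = 0.0
    for _ in range(n):
        a += rng.gauss(0.0, 1.0)
        b += rng.gauss(0.0, 1.0)
        u = phi * u + rng.gauss(0.0, 1.0)
        x1.append(a)
        x2.append(b)
        y.append(0.5 + 1.0 * a + 0.3 * b + u)
    return y, x1, x2


def lag1_autocorr(e):
    """Sample lag-1 autocorrelation of a residual series."""
    m = sum(e) / len(e)
    d = [v - m for v in e]
    return sum(a * b for a, b in zip(d[1:], d[:-1])) / sum(v * v for v in d)


def fit_static_and_dynamic(y, x1, x2):
    """Return the Eq. (28) coefficients and the lag-1 residual autocorrelation of both fits."""
    # Static regression (cf. Eq. 26): y(t) on 1, x1(t), x2(t).
    Xs = [[1.0, a, b] for a, b in zip(x1, x2)]
    bs = ols(Xs, y)
    es = [v - sum(c * x for c, x in zip(bs, row)) for row, v in zip(Xs, y)]
    # Dynamic regression (Eq. 28): add y(t-1), x1(t-1), x2(t-1); the first year is lost.
    Xd = [[1.0, x1[t], x2[t], y[t - 1], x1[t - 1], x2[t - 1]] for t in range(1, len(y))]
    yd = y[1:]
    bd = ols(Xd, yd)
    ed = [v - sum(c * x for c, x in zip(bd, row)) for row, v in zip(Xd, yd)]
    return bd, lag1_autocorr(es), lag1_autocorr(ed)


b_dyn, r_static, r_dynamic = fit_static_and_dynamic(*simulate(135))
print("beta' estimates:", [round(b, 3) for b in b_dyn])
print("lag-1 autocorrelation, static vs dynamic:", round(r_static, 2), round(r_dynamic, 2))
```

The lag-1 autocorrelation of the static residuals should sit near the simulated AR coefficient (0.5). The autocorrelation of the dynamic residuals should be close to zero.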

Dynamic regression coefficients \(\varvec{\beta }'\) in Eq. (28) can be related back to coefficients \(\varvec{\beta }\) in Eq. (26) via the Granger representation theorem. This theorem states that a system of cointegrated series has an equivalent representation as an error-correction model (ECM) (Engle and Granger 1987). Subtracting \(y(t-1)\) from both sides and rearranging, Eq. (28) may be written

\[ \Delta y(t) = \beta _1' \Delta x_1(t) + \beta _2' \Delta x_2(t) - \alpha \left[ y(t-1) - \beta _0 - \beta _1 x_1(t-1) - \beta _2 x_2(t-1) \right] + \varepsilon '(t), \tag{29} \]

where \(\Delta\) denotes the first difference (e.g. \(\Delta y(t) = y(t) - y(t-1)\)), \(\alpha =1-\beta _3'\), \(\beta _0=\beta _0'/\alpha\), \(\beta _1=(\beta _1'+\beta _4')/\alpha\), and \(\beta _2=(\beta _2'+\beta _5')/\alpha\). The expression inside the square brackets is called the error-correction term. It is a stationary linear combination of \(y(t), x_1(t), x_2(t)\), and it is stationary precisely because the series are cointegrated. By estimating the dynamic regression model in Eq. (28) using OLS, and then reparameterizing to obtain the ECM in Eq. (29), it is possible to recover estimates of the coefficients \(\varvec{\beta }\) in Eq. (26).
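The reparameterization from Eq. (28) to the ECM in Eq. (29) is plain arithmetic. The sketch below maps hypothetical coefficients \(\beta _0',\dots ,\beta _5'\) to \(\alpha , \beta _0, \beta _1, \beta _2\). It then checks numerically that both forms give the same predicted change \(\Delta y(t)\). The coefficient values and function names are illustrative assumptions.

```python
# Hypothetical Eq. (28) coefficients (b0', b1', ..., b5'), for illustration only.
BETA_PRIME = (0.25, 1.0, 0.3, 0.5, -0.5, -0.15)


def ecm_parameters(bp):
    """Map Eq. (28) coefficients to the ECM parameters of Eq. (29)."""
    b0p, b1p, b2p, b3p, b4p, b5p = bp
    alpha = 1.0 - b3p  # speed of adjustment towards the long-run relation
    return {
        "alpha": alpha,
        "beta0": b0p / alpha,
        "beta1": (b1p + b4p) / alpha,
        "beta2": (b2p + b5p) / alpha,
    }


def dynamic_rhs(bp, y_lag, x1, x1_lag, x2, x2_lag):
    """Systematic part of Eq. (28): predicted y(t)."""
    b0p, b1p, b2p, b3p, b4p, b5p = bp
    return b0p + b1p * x1 + b2p * x2 + b3p * y_lag + b4p * x1_lag + b5p * x2_lag


def ecm_rhs(bp, y_lag, x1, x1_lag, x2, x2_lag):
    """Systematic part of Eq. (29): predicted change y(t) - y(t-1)."""
    p = ecm_parameters(bp)
    error_correction = y_lag - p["beta0"] - p["beta1"] * x1_lag - p["beta2"] * x2_lag
    return bp[1] * (x1 - x1_lag) + bp[2] * (x2 - x2_lag) - p["alpha"] * error_correction


print(ecm_parameters(BETA_PRIME))
# Arbitrary inputs: y(t-1), x1(t), x1(t-1), x2(t), x2(t-1).
args = (2.0, 1.5, 1.2, -0.4, 0.1)
print(dynamic_rhs(BETA_PRIME, *args) - args[0], ecm_rhs(BETA_PRIME, *args))
```

The hypothetical values correspond to \(\alpha = 0.5\), \(\beta _1 = 1.0\) and \(\beta _2 = 0.3\). The long-run coefficients are therefore recovered exactly, even though \(\beta _1'\) and \(\beta _4'\) individually differ from \(\beta _1\).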

Because the historical CMIP6 experiment includes GHG forcing, the coefficients \(\beta _1\) and \(\beta _2\) must be transformed to obtain the scaling factors of primary interest. Following the notation of Jones et al. (2016), let \(\beta _{\mathrm {G}}=\beta _1 + \beta _2\) and \(\beta _{\mathrm {OAN}}=\beta _1\) denote the scaling factors to be applied to GCM-predicted signals forced by GHG emissions and by “other anthropogenic and natural” (OAN) factors, respectively. Writing the historical signal \(x_1(t)\) as the sum of the GHG signal \(x_2(t)\) and the OAN signal gives \(\beta _1 x_1(t) + \beta _2 x_2(t) = (\beta _1 + \beta _2) x_2(t) + \beta _1 [x_1(t) - x_2(t)]\). Although \(\varvec{\beta }^* = (\beta _{\mathrm {G}},\beta _{\mathrm {OAN}})'\) is linearly related to the coefficients \(\varvec{\beta }\), the function relating \(\varvec{\beta }\) to \(\varvec{\beta }'\) is non-linear. The partially linear function \(f: \varvec{\beta }' \mapsto \varvec{\beta }^*\) may be written

\[ \varvec{\beta }^* = f(\varvec{\beta }') = g(\varvec{\beta }')\, h(\varvec{\beta }'), \]

where \(g(\varvec{\beta }') = (1-\beta _3')^{-1}\) is the non-linear part of f, and \(h(\varvec{\beta }') = M \varvec{\beta }'\) is the linear part, with

\[ M = \begin{pmatrix} 1 & 1 & 0 & 1 & 1 \\ 1 & 0 & 0 & 1 & 0 \end{pmatrix}. \]

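Let

\[ J(\varvec{\beta }') = g(\varvec{\beta }')\, M + g(\varvec{\beta }')^{2}\, M \varvec{\beta }' \varvec{e}_3', \qquad \varvec{e}_3 = (0,0,1,0,0)', \]
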
denote the Jacobian of f. If the coefficient estimates \(\hat{\varvec{\beta }}'\), obtained by fitting Eq. (28) using OLS, have estimated covariance \({\hat{\Sigma }}'\), then a linearized (delta-method) estimate of the covariance of \(\hat{\varvec{\beta }}^* = f(\hat{\varvec{\beta }}')\) is given by \({\hat{\Sigma }}^* = J(\hat{\varvec{\beta }}') {\hat{\Sigma }}' J(\hat{\varvec{\beta }}')'\). An approximate 90% confidence ellipse for \(\varvec{\beta }^*\) is the set of points satisfying

\[ (\varvec{\beta }^* - \hat{\varvec{\beta }}^*)' \, ({\hat{\Sigma }}^*)^{-1} (\varvec{\beta }^* - \hat{\varvec{\beta }}^*) \le 2 F_{2,128}(0.90), \]

where \(F_{2,128}(0.90)\) denotes the 90th percentile of the F distribution with 2 and 128 degrees of freedom. The residual degrees of freedom are 135 (annual observations from 1880 to 2014), minus one (the first year is lost to the one-year lag), minus six (the parameters estimated to fit Eq. (28)), giving 128.
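The back-transformation, delta-method covariance and ellipse test can be sketched in a few lines of pure Python. The inputs `BETA_PRIME_HAT` and `SIGMA_PRIME_HAT` are made-up numbers, not estimates from the study. The study itself drew its ellipses with the car package in R. For two numerator degrees of freedom, the F quantile has a closed form, \(F_{2,\nu }(p) = \tfrac{\nu }{2}\left[(1-p)^{-2/\nu } - 1\right]\), which avoids a dependency on a statistics library. For \(\nu = 128\) and \(p = 0.90\), this formula gives roughly 2.34.

```python
# Hypothetical inputs (made-up numbers, not results from the study):
# estimates of beta' = (b1', ..., b5') from Eq. (28) and their covariance.
BETA_PRIME_HAT = [1.0, 0.3, 0.5, -0.5, -0.15]
SIGMA_PRIME_HAT = [[0.01 if i == j else 0.0 for j in range(5)] for i in range(5)]

# Rows of M select the entries of beta' that enter beta_G and beta_OAN.
M = [[1.0, 1.0, 0.0, 1.0, 1.0],
     [1.0, 0.0, 0.0, 1.0, 0.0]]


def f(bp):
    """beta* = (beta_G, beta_OAN) = g(beta') * M beta', with g = 1 / (1 - b3')."""
    g = 1.0 / (1.0 - bp[2])
    return [g * sum(m * b for m, b in zip(row, bp)) for row in M]


def jacobian(bp):
    """J = g M + g^2 (M beta') e3', where e3 selects b3' (product rule)."""
    g = 1.0 / (1.0 - bp[2])
    h = [sum(m * b for m, b in zip(row, bp)) for row in M]
    J = [[g * m for m in row] for row in M]
    for i in range(2):
        J[i][2] += g * g * h[i]
    return J


def propagate(bp, cov):
    """Delta-method covariance Sigma* = J Sigma' J' (2 x 2)."""
    J = jacobian(bp)
    JS = [[sum(J[i][k] * cov[k][j] for k in range(5)) for j in range(5)] for i in range(2)]
    return [[sum(JS[i][k] * J[j][k] for k in range(5)) for j in range(2)] for i in range(2)]


def f2_quantile(p, df2):
    """Exact quantile of F(2, df2), whose CDF is 1 - (1 + 2x/df2)^(-df2/2)."""
    return 0.5 * df2 * ((1.0 - p) ** (-2.0 / df2) - 1.0)


def in_ellipse(point, centre, cov, level=0.90, df2=128):
    """True if (b - b_hat)' Sigma*^{-1} (b - b_hat) <= 2 F_{2,df2}(level)."""
    d0, d1 = point[0] - centre[0], point[1] - centre[1]
    (a, b), (c, d) = cov
    # Quadratic form using the explicit inverse of a 2 x 2 matrix.
    q = (d * d0 * d0 - (b + c) * d0 * d1 + a * d1 * d1) / (a * d - b * c)
    return q <= 2.0 * f2_quantile(level, df2)


df_resid = (2014 - 1880 + 1) - 1 - 6  # 135 years, minus the lag, minus six parameters
beta_star = f(BETA_PRIME_HAT)
sigma_star = propagate(BETA_PRIME_HAT, SIGMA_PRIME_HAT)
print("beta_G, beta_OAN:", beta_star)
print("Sigma*:", sigma_star)
print("F_{2,%d}(0.90) = %.4f" % (df_resid, f2_quantile(0.90, df_resid)))
```

Because the Jacobian is coded by hand from the product rule, checking it against central finite differences of `f` is a cheap safeguard.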

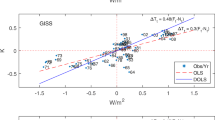

Point estimates and confidence ellipses have been calculated for the scaling factors \(\beta _{\mathrm {G}}\) and \(\beta _{\mathrm {OAN}}\) using the methodology described above (see Fig. 2 and Table 2). Collinearity between the time series \(y(t), x_1(t), x_2(t)\) and their lagged counterparts means that, taken individually, the coefficient estimates \(\hat{\varvec{\beta }}'\) are subject to greater uncertainty than the classical OLS estimates \(\hat{\varvec{\beta }}\). However, the estimates \({\hat{\beta }}_{\mathrm {G}}\) and \({\hat{\beta }}_{\mathrm {OAN}}\) obtained by back-transforming \(\hat{\varvec{\beta }}'\) are well constrained and closely resemble the classical estimates (the proportional change has mean 0.008 and standard deviation 0.07). The inflation of parameter uncertainty that results from including lagged variables is a necessary consequence of accounting for residual autocorrelation. Scaling factor standard errors vary across GCMs. This is partly a consequence of variation in the size of the available ensembles, with larger ensembles leading to smaller standard errors. Standard errors are also affected by each GCM's ability to reproduce the forced patterns in the observations.

Table 2 also includes estimates of attributable warming between the reference periods 1880–1899 and 2005–2014. Attributable warming was calculated in two steps. First, the mean difference in GMST between the reference periods was computed for each of the historical and hist-GHG GCM experiments. Second, linear combinations of these temperature differences were taken, with coefficients determined by the relevant scaling factors. These results are visualized in Fig. 3, where total historical warming and GHG-attributable warming are plotted side by side. Figure 3 shows that for 12 of the 13 CMIP6 GCMs, GHG-attributable warming exceeds total historical warming. This implies a net cooling contribution from other anthropogenic and natural factors in those cases.
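One reading of the "linear combinations" used for attributable warming is sketched below. It assumes that the forced responses add linearly, so that the OAN signal equals the historical response minus the hist-GHG response. The function name and all input values are hypothetical, and the numbers are not taken from Table 2.

```python
def attributable_warming(beta_g, beta_oan, dT_hist, dT_ghg):
    """Scaled warming contributions between two reference periods.

    dT_hist, dT_ghg: simulated GMST changes in the historical and hist-GHG experiments.
    Assumes forced responses add linearly, so the OAN signal is dT_hist - dT_ghg.
    """
    ghg = beta_g * dT_ghg
    oan = beta_oan * (dT_hist - dT_ghg)
    return {"GHG": ghg, "OAN": oan, "total": ghg + oan}


# Made-up illustrative inputs (degrees), not values from Table 2.
result = attributable_warming(beta_g=1.1, beta_oan=0.9, dT_hist=1.0, dT_ghg=1.3)
print(result)
```

Under this assumption, the total equals \(\beta _1 \Delta T_{\mathrm {hist}} + \beta _2 \Delta T_{\mathrm {GHG}}\), because \(\beta _1 = \beta _{\mathrm {OAN}}\) and \(\beta _2 = \beta _{\mathrm {G}} - \beta _{\mathrm {OAN}}\). In the made-up example, the GHG contribution exceeds the total, so the OAN contribution is negative.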

There are some differences between the attribution results of the present study and those of Gillett et al. (2021) and Chapter 3 of IPCC AR6 (Eyring et al. 2021). For example, in Table 1 of Gillett et al. (2021), the authors report GHG-attributable warming of 1.16–1.95 °C in 2010–2019 relative to an 1850–1900 reference period. In contrast, the point estimates of GHG-attributable warming obtained in the present study, for 2005–2014 relative to 1880–1899, range from 0.93 to 1.47 °C. The likely range given in AR6 is 1.0–2.0 °C. Because the reference periods differ, these figures are not directly comparable. The attribution of surface warming performed in this section demonstrates how cointegration theory may be applied to uncertainty quantification for scaling factors in D&A of climate trends. However, a rigorous assessment of the new method's performance, and a true “apples-to-apples” comparison with current IPCC estimates, will require a full suite of “perfect-model” numerical experiments. Such experiments have recently been used to test the coverage rates of confidence intervals in optimal fingerprinting (Li et al. 2021).

5 Summary

Optimal fingerprinting, the statistical methodology commonly used for D&A of climate change trends, is typically performed by linearly regressing non-stationary climate variables. Non-stationary time series regressions are, in general, statistically inconsistent and liable to produce spurious results. This study has shown, by modelling radiative forcing as an integrated stochastic process within an idealized linear-response-model framework, that the optimal fingerprinting estimator is consistent under standard D&A assumptions. Hypothesis tests, combining observations of historical GMST with simulation output from 13 CMIP6-generation GCMs, produce no evidence that standard assumptions have been violated. It is therefore concluded that, at least in the case of GMST, detection and attribution of climate change trends is very likely not spurious regression. Furthermore, detection of significant cointegration between observations and GCM output indicates that the OLS estimator is superconsistent, with better convergence properties than might previously have been assumed. Finally, a new method has been developed for quantifying D&A uncertainty, which exploits the notion of cointegration to eliminate the need to rely on piControl GCM simulations and the corresponding strong assumptions.

Data and code availability

The datasets and code used to perform the analyses in this study are available online at https://doi.org/10.5281/zenodo.5008827. Code is written in the statistical programming language R (R Core Team 2021). The ellipses in Fig. 2 were plotted using the “ellipse” function from the car package (Fox and Weisberg 2011).

References

Allen MR, Stott PA (2003) Estimating signal amplitudes in optimal fingerprinting, part I: theory. Clim Dyn 21(5):477–491. https://doi.org/10.1007/s00382-003-0313-9

Allen MR, Tett SFB (1999) Checking for model consistency in optimal fingerprinting. Clim Dyn 15(6):419–434. https://doi.org/10.1007/s003820050291

Beenstock M, Reingewertz Y, Paldor N (2012) Polynomial cointegration tests of anthropogenic impact on global warming. Earth Syst Dyn 3(2):173–188

Bindoff NL, Stott PA, AchutaRao KM, Allen MR, Gillett N, Gutzler D, Hansingo K, Hegerl G, Hu Y, Jain S, Mokhov II, Overland J, Perlwitz J, Sebbari R, Zhang X (2013) Chapter 10—Detection and attribution of climate change: from global to regional. In: Climate change 2013: the physical science basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge. https://www.ipcc.ch/site/assets/uploads/2018/02/WG1AR5_Chapter10_FINAL.pdf

Boucher O, Servonnat J, Albright AL, Aumont O, Balkanski Y, Bastrikov V, Bekki S, Bonnet R, Bony S, Bopp L, Braconnot P, Brockmann P, Cadule P, Caubel A, Cheruy F, Codron F, Cozic A, Cugnet D, D’Andrea F, Davini P, de Lavergne C, Denvil S, Deshayes J, Devilliers M, Ducharne A, Dufresne J-L, Dupont E, Éthé C, Fairhead L, Falletti L, Flavoni S, Foujols M-A, Gardoll S, Gastineau G, Ghattas J, Grandpeix J-Y, Guenet B, Guez LE, Guilyardi E, Guimberteau M, Hauglustaine D, Hourdin F, Idelkadi A, Joussaume S, Kageyama M, Khodri M, Krinner G, Lebas N, Levavasseur G, Lévy C, Li L, Lott F, Lurton T, Luyssaert S, Madec G, Madeleine J-B, Maignan F, Marchand M, Marti O, Mellul L, Meurdesoif Y, Mignot J, Musat I, Ottlé C, Peylin P, Planton Y, Polcher J, Rio C, Rochetin N, Rousset C, Sepulchre P, Sima A, Swingedouw D, Thiéblemont R, Traore AK, Vancoppenolle M, Vial J, Vialard J, Viovy N, Vuichard N (2020) Presentation and evaluation of the IPSL-CM6A-LR climate model. J Adv Model Earth Syst 12(7):e2019MS002010. https://doi.org/10.1029/2019MS002010

Bruns SB, Csereklyei Z, Stern DI (2020) A multicointegration model of global climate change. J Econom 214(1):175–197

Caldeira K, Myhrvold NP (2013) Projections of the pace of warming following an abrupt increase in atmospheric carbon dioxide concentration. Environ Res Lett 8(3):034039. https://doi.org/10.1088/1748-9326/8/3/034039

Cowtan K, Way RG (2014) Coverage bias in the HadCRUT4 temperature series and its impact on recent temperature trends. Q J R Meteorol Soc 140(683):1935–1944. https://doi.org/10.1002/qj.2297

Cummins DP, Stephenson DB, Stott PA (2020) A new energy-balance approach to linear filtering for estimating effective radiative forcing from temperature time series. Adv Stat Climatol Meteorol Oceanogr 6(2):91–102

Cummins DP, Stephenson DB, Stott PA (2020) Optimal estimation of stochastic energy balance model parameters. J Clim 33(18):7909–7926

Danabasoglu G, Lamarque J-F, Bacmeister J, Bailey DA, DuVivier AK, Edwards J, Emmons LK, Fasullo J, Garcia R, Gettelman A, Hannay C, Holland MM, Large WG, Lauritzen PH, Lawrence DM, Lenaerts JTM, Lindsay K, Lipscomb WH, Mills MJ, Neale R, Oleson KW, Otto-Bliesner B, Phillips AS, Sacks W, Tilmes S, van Kampenhout L, Vertenstein M, Bertini A, Dennis J, Deser C, Fischer C, Fox-Kemper B, Kay JE, Kinnison D, Kushner PJ, Larson VE, Long MC, Mickelson S, Moore JK, Nienhouse E, Polvani L, Rasch PJ, Strand WG (2020) The Community Earth System Model Version 2 (CESM2). J Adv Model Earth Syst 12(2):e2019MS001916. https://doi.org/10.1029/2019MS001916

Dickey DA, Fuller WA (1979) Distribution of the estimators for autoregressive time series with a unit root. J Am Stat Assoc 74(366a):427–431. https://doi.org/10.1080/01621459.1979.10482531

Dunne JP, Horowitz LW, Adcroft AJ, Ginoux P, Held IM, John JG, Krasting JP, Malyshev S, Naik V, Paulot F, Shevliakova E, Stock CA, Zadeh N, Balaji V, Blanton C, Dunne KA, Dupuis C, Durachta J, Dussin R, Gauthier PPG, Griffies SM, Guo H, Hallberg RW, Harrison M, He J, Hurlin W, McHugh C, Menzel R, Milly PCD, Nikonov S, Paynter DJ, Ploshay J, Radhakrishnan A, Rand K, Reichl BG, Robinson T, Schwarzkopf DM, Sentman LT, Underwood S, Vahlenkamp H, Winton M, Wittenberg AT, Wyman B, Zeng Y, Zhao M (2020) The GFDL Earth System Model Version 4.1 (GFDL-ESM 4.1): Overall coupled model description and simulation characteristics. J Adv Model Earth Syst 12(11):e2019MS002015. https://doi.org/10.1029/2019MS002015

Engle RF, Granger CWJ (1987) Co-integration and error correction: Representation, estimation, and testing. Econometrica 55(2):251–276

Estrada F, Perron P (2017) Extracting and analyzing the warming trend in global and hemispheric temperatures. J Time Ser Anal 38(5):711–732. https://doi.org/10.1111/jtsa.12246

Eyring V, Bony S, Meehl GA, Senior CA, Stevens B, Stouffer RJ, Taylor KE (2016) Overview of the Coupled Model Intercomparison Project Phase 6 (CMIP6) experimental design and organization. Geosci Model Dev 9(5):1937–1958

Eyring V, Gillett NP, AchutaRao KM, Barimalala R, Barreiro Parrillo M, Bellouin N, Cassou C, Durack PJ, Kosaka Y, McGregor S, Min S, Morgenstern O, Sun Y (2021) Chapter 3—Human influence on the climate system. In: Masson-Delmotte V, Zhai P, Pirani A, Connors SL, Péan C, Berger S, Caud N, Chen Y, Goldfarb L, Gomis MI, Huang M, Leitzell K, Lonnoy E, Matthews JBR, Maycock TK, Waterfield T, Yelekci O, Yu R, Zhou B (eds) Climate Change 2021: The Physical Science Basis. Contribution of Working Group I to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge. https://www.ipcc.ch/report/ar6/wg1/downloads/report/IPCC_AR6_WGI_Chapter_03.pdf

Fox J, Weisberg S (2011) An R companion to applied regression, 2nd edn. SAGE Publications, Los Angeles

Fredriksen H-B, Rypdal M (2017) Long-range persistence in global surface temperatures explained by linear multibox energy balance models. J Clim 30(18):7157–7168

Gay-Garcia C, Estrada F, Sánchez A (2009) Global and hemispheric temperatures revisited. Clim Change 94(3):333–349. https://doi.org/10.1007/s10584-008-9524-8

Geoffroy O, Saint-Martin D, Bellon G, Voldoire A, Olivié DJL, Tytéca S (2013) Transient climate response in a two-layer energy-balance model Part II: representation of the efficacy of deep-ocean heat uptake and validation for CMIP5 AOGCMs. J Clim 26(6):1859–1876

Gillett NP, Kirchmeier-Young M, Ribes A, Shiogama H, Hegerl GC, Knutti R, Gastineau G, John JG, Li L, Nazarenko L, Rosenbloom N, Seland Ø, Wu T, Yukimoto S, Ziehn T (2021) Constraining human contributions to observed warming since the pre-industrial period. Nat Clim Change 11(3):207–212

Gillett NP, Shiogama H, Funke B, Hegerl G, Knutti R, Matthes K, Santer BD, Stone D, Tebaldi C (2016) The Detection and Attribution Model Intercomparison Project (DAMIP v1.0) contribution to CMIP6. Geosci Model Dev 9(10):3685–3697

GISTEMP Team (2021) GISS Surface Temperature Analysis (GISTEMP), version 4. https://data.giss.nasa.gov/gistemp/

Good P, Gregory JM, Lowe JA (2011) A step-response simple climate model to reconstruct and interpret AOGCM projections. Geophys Res Lett. https://doi.org/10.1029/2010GL045208

Granger CWJ, Newbold P (1974) Spurious regressions in econometrics. J Econom 2(2):111–120

Hannart A (2016) Integrated optimal fingerprinting: method description and illustration. J Clim 29(6):1977–1998

Hannart A (2019) An improved projection of climate observations for detection and attribution. Adv Stat Climatol Meteorol Oceanogr 5(2):161–171

Hannart A, Ribes A, Naveau P (2014) Optimal fingerprinting under multiple sources of uncertainty. Geophys Res Lett 41(4):1261–1268. https://doi.org/10.1002/2013GL058653

Hasselmann K (1976) Stochastic climate models. Part I. Theory. Tellus 28(6):473–485. https://doi.org/10.1111/j.2153-3490.1976.tb00696.x

Hasselmann K (1997) Multi-pattern fingerprint method for detection and attribution of climate change. Clim Dyn 13(9):601–611. https://doi.org/10.1007/s003820050185

Hasselmann KF (1979) On the signal-to-noise problem in atmospheric response studies. In: Joint Conference of Royal Meteorological Society, American Meteorological Society, Deutsche Meteorologische Gesellschaft and the Royal Society, pp 251–259. Royal Meteorological Society. https://pure.mpg.de/pubman/faces/ViewItemOverviewPage.jsp?itemId=item_3030122

Haustein K, Allen MR, Forster PM, Otto FEL, Mitchell DM, Matthews HD, Frame DJ (2017) A real-time Global Warming Index. Sci Rep 7(1):15417

Hegerl G, Zwiers F (2011) Use of models in detection and attribution of climate change. WIREs Clim Change 2(4):570–591. https://doi.org/10.1002/wcc.121

Hegerl GC, Hasselmann K, Cubasch U, Mitchell JFB, Roeckner E, Voss R, Waszkewitz J (1997) Multi-fingerprint detection and attribution analysis of greenhouse gas, greenhouse gas-plus-aerosol and solar forced climate change. Clim Dyn 13(9):613–634. https://doi.org/10.1007/s003820050186

Hegerl GC, von Storch H, Hasselmann K, Santer BD, Cubasch U, Jones PD (1996) Detecting greenhouse-gas-induced climate change with an optimal fingerprint method. J Clim 9(10):2281–2306

Hegerl GC, Zwiers FW, Braconnot P, Gillett N, Luo YM, Marengo Orsini J, Nicholls N, Penner J, Stott P (2007) Chapter 9—Understanding and attributing climate change. In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate Change 2007: the physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge. https://www.ipcc.ch/site/assets/uploads/2018/02/ar4-wg1-chapter9-1.pdf

Hendry DF, Juselius K (2000) Explaining cointegration analysis: Part 1. Energy J 21(1):1–42

Huntingford C, Stott PA, Allen MR, Lambert FH (2006) Incorporating model uncertainty into attribution of observed temperature change. Geophys Res Lett. https://doi.org/10.1029/2005GL024831

Jones GS, Kennedy JJ (2017) Sensitivity of attribution of anthropogenic near-surface warming to observational uncertainty. J Clim 30(12):4677–4691

Jones GS, Stott PA, Mitchell JFB (2016) Uncertainties in the attribution of greenhouse gas warming and implications for climate prediction. J Geophys Res Atmos 121(12):6969–6992. https://doi.org/10.1002/2015JD024337

Katzfuss M, Hammerling D, Smith RL (2017) A Bayesian hierarchical model for climate change detection and attribution. Geophys Res Lett 44(11):5720–5728. https://doi.org/10.1002/2017GL073688

Kaufmann RK, Kauppi H, Mann ML, Stock JH (2011) Reconciling anthropogenic climate change with observed temperature 1998–2008. Proc Natl Acad Sci 108(29):11790–11793

Kaufmann RK, Kauppi H, Mann ML, Stock JH (2013) Does temperature contain a stochastic trend: linking statistical results to physical mechanisms. Clim Change 118(3):729–743. https://doi.org/10.1007/s10584-012-0683-2

Kaufmann RK, Kauppi H, Stock JH (2006) Emissions, concentrations, & temperature: a time series analysis. Clim Change 77(3):249–278. https://doi.org/10.1007/s10584-006-9062-1

Kaufmann RK, Stern DI (1997) Evidence for human influence on climate from hemispheric temperature relations. Nature 388(6637):39–44

Kaufmann RK, Stern DI (2002) Cointegration analysis of hemispheric temperature relations. J Geophys Res Atmos 107(D2):ACL 8-1-ACL 8-10. https://doi.org/10.1029/2000JD000174

Kelley M, Schmidt GA, Nazarenko LS, Bauer SE, Ruedy R, Russell GL, Ackerman AS, Aleinov I, Bauer M, Bleck R, Canuto V, Cesana G, Cheng Y, Clune TL, Cook BI, Cruz CA, Del Genio AD, Elsaesser GS, Faluvegi G, Kiang NY, Kim D, Lacis AA, Leboissetier A, LeGrande AN, Lo KK, Marshall J, Matthews EE, McDermid S, Mezuman K, Miller RL, Murray LT, Oinas V, Orbe C, García-Pando CP, Perlwitz JP, Puma MJ, Rind D, Romanou A, Shindell DT, Sun S, Tausnev N, Tsigaridis K, Tselioudis G, Weng E, Wu J, Yao M-S (2020) GISS-E2.1: configurations and climatology. J Adv Model Earth Syst 12(8):e2019MS002025. https://doi.org/10.1029/2019MS002025

Lenssen NJL, Schmidt GA, Hansen JE, Menne MJ, Persin A, Ruedy R, Zyss D (2019) Improvements in the GISTEMP uncertainty model. J Geophys Res Atmos 124(12):6307–6326. https://doi.org/10.1029/2018JD029522

Li L, Yu Y, Tang Y, Lin P, Xie J, Song M, Dong L, Zhou T, Liu L, Wang L, Pu Y, Chen X, Chen L, Xie Z, Liu H, Zhang L, Huang X, Feng T, Zheng W, Xia K, Liu H, Liu J, Wang Y, Wang L, Jia B, Xie F, Wang B, Zhao S, Yu Z, Zhao B, Wei J (2020) The flexible global ocean–atmosphere-land system model grid-point version 3 (FGOALS-g3): description and evaluation. J Adv Model Earth Syst 12(9):e2019MS002012. https://doi.org/10.1029/2019MS002012

Li S, Jarvis A (2009) Long run surface temperature dynamics of an A-OGCM: the HadCM3 4\(\times\)CO2 forcing experiment revisited. Clim Dyn 33(6):817–825. https://doi.org/10.1007/s00382-009-0581-0

Li Y, Chen K, Yan J, Zhang X (2021) Uncertainty in optimal fingerprinting is underestimated. Environ Res Lett 16(8):084043. https://doi.org/10.1088/1748-9326/ac14ee

MacKinnon JG (2010) Critical values for cointegration tests. Queen’s Economics Department Working Paper 1227, Queen’s University, Department of Economics, Kingston. http://hdl.handle.net/10419/67744

McKitrick R (2021) Checking for model consistency in optimal fingerprinting: a comment. Clim Dyn. https://doi.org/10.1007/s00382-021-05913-7

Mills TC (2008) How robust is the long-run relationship between temperature and radiative forcing? Clim Change 94(3):351. https://doi.org/10.1007/s10584-008-9525-7

Mitchell JFB, Karoly DJ, Hegerl GC, Zwiers FW, Allen MR, Marengo J, Barros V, Berliner M, Boer G, Crowley T, Folland C, Free M, Gillett N, Groisman P, Haigh J, Hasselmann K, Jones P, Kandlikar M, Kharin V, Kheshgi H, Knutson T, MacCracken M, Mann M, North G, Risbey J, Robock A, Santer B, Schnur R, Schönwiese C, Sexton D, Stott P, Tett S, Vinnikov K, Wigley T (2001) Chapter 12—Detection of climate change and attribution of causes. In: Semazzi F, Zillman J (eds) Climate Change 2001: the scientific basis. Contribution of Working Group I to the Third Assessment Report of the Intergovernmental Panel on Climate Change, p 44. Cambridge University Press, Cambridge. https://www.ipcc.ch/site/assets/uploads/2018/03/TAR-12.pdf

Morice CP, Kennedy JJ, Rayner NA, Winn JP, Hogan E, Killick RE, Dunn RJH, Osborn TJ, Jones PD, Simpson IR (2021) An updated assessment of near-surface temperature change from 1850: the HadCRUT5 data set. J Geophys Res Atmos 126(3):e2019JD032361. https://doi.org/10.1029/2019JD032361

Myhre G, Shindell D, Bréon F-M, Collins W, Fuglestvedt J, Huang J, Koch D, Lamarque J-F, Lee D, Mendoza B, Nakajima T, Robock A, Stephens G, Takemura T, Zhang H (2013) Chapter 8– Anthropogenic and natural radiative forcing. In: Stocker TF, Qin D, Plattner G-K, Tignor M, Allen SK, Boschung J, Nauels A, Xia Y, Bex V, Midgley PM (eds) Climate change 2013: the physical science basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change, pp 659–740. Cambridge University Press, Cambridge. https://www.ipcc.ch/site/assets/uploads/2018/02/WG1AR5_Chapter08_FINAL.pdf

Otto FEL, Frame DJ, Otto A, Allen MR (2015) Embracing uncertainty in climate change policy. Nat Clim Change 5(10):917–920

Pretis F, Mann ML, Kaufmann RK (2015) Testing competing models of the temperature hiatus: assessing the effects of conditioning variables and temporal uncertainties through sample-wide break detection. Clim Change 131(4):705–718. https://doi.org/10.1007/s10584-015-1391-5

R Core Team (2021) R: a language and environment for statistical computing. R Foundation for Statistical Computing. https://www.R-project.org/

Ribes A, Azaïs J-M, Planton S (2009) Adaptation of the optimal fingerprint method for climate change detection using a well-conditioned covariance matrix estimate. Clim Dyn 33(5):707–722. https://doi.org/10.1007/s00382-009-0561-4

Ribes A, Planton S, Terray L (2013) Application of regularised optimal fingerprinting to attribution. Part I: Method, properties and idealised analysis. Clim Dyn 41(11):2817–2836. https://doi.org/10.1007/s00382-013-1735-7

Rohde RA, Hausfather Z (2020) The Berkeley Earth land/ocean temperature record. Earth Syst Sci Data 12(4):3469–3479

Rypdal K (2015) Attribution in the presence of a long-memory climate response. Earth Syst Dyn 6(2):719–730

Schmith T, Johansen S, Thejll P (2012) Statistical analysis of global surface temperature and sea level using cointegration methods. J Clim 25(22):7822–7833

Seland Ø, Bentsen M, Olivié D, Toniazzo T, Gjermundsen A, Graff LS, Debernard JB, Gupta AK, He Y-C, Kirkevåg A, Schwinger J, Tjiputra J, Aas KS, Bethke I, Fan Y, Griesfeller J, Grini A, Guo C, Ilicak M, Karset IHH, Landgren O, Liakka J, Moseid KO, Nummelin A, Spensberger C, Tang H, Zhang Z, Heinze C, Iversen T, Schulz M (2020) Overview of the Norwegian Earth System Model (NorESM2) and key climate response of CMIP6 DECK, historical, and scenario simulations. Geosci Model Dev 13(12):6165–6200

Smith TM, Reynolds RW, Peterson TC, Lawrimore J (2008) Improvements to NOAA’s historical merged land–ocean surface temperature analysis (1880–2006). J Clim 21(10):2283–2296

Stern DI (2006) An atmosphere–ocean time series model of global climate change. Comput Stat Data Anal 51(2):1330–1346

Stern DI, Kaufmann RK (2000) Detecting a global warming signal in hemispheric temperature series: a structural time series analysis. Clim Change 47(4):411–438. https://doi.org/10.1023/A:1005672231474

Stern DI, Kaufmann RK (2014) Anthropogenic and natural causes of climate change. Clim Change 122(1):257–269. https://doi.org/10.1007/s10584-013-1007-x

Storelvmo T, Leirvik T, Lohmann U, Phillips PCB, Wild M (2016) Disentangling greenhouse warming and aerosol cooling to reveal Earth’s climate sensitivity. Nat Geosci 9(4):286–289

Sutton R, Suckling E, Hawkins E (2015) What does global mean temperature tell us about local climate? Philos Trans R Soc A Math Phys Eng Sci 373(2054):20140426. https://doi.org/10.1098/rsta.2014.0426

Swart NC, Cole JNS, Kharin VV, Lazare M, Scinocca JF, Gillett NP, Anstey J, Arora V, Christian JR, Hanna S, Jiao Y, Lee WG, Majaess F, Saenko OA, Seiler C, Seinen C, Shao A, Sigmond M, Solheim L, von Salzen K, Yang D, Winter B (2019) The Canadian Earth System Model version 5 (CanESM5.0.3). Geosci Model Dev 12(11):4823–4873

Tatebe H, Ogura T, Nitta T, Komuro Y, Ogochi K, Takemura T, Sudo K, Sekiguchi M, Abe M, Saito F, Chikira M, Watanabe S, Mori M, Hirota N, Kawatani Y, Mochizuki T, Yoshimura K, Takata K, O’ishi R, Yamazaki D, Suzuki T, Kurogi M, Kataoka T, Watanabe M, Kimoto M (2019) Description and basic evaluation of simulated mean state, internal variability, and climate sensitivity in MIROC6. Geosci Model Dev 12(7):2727–2765

Tollefson J (2021) COVID curbed carbon emissions in 2020—but not by much. Nature 589(7842):343–343

Tsutsui J (2017) Quantification of temperature response to CO2 forcing in atmosphere–ocean general circulation models. Clim Change 140(2):287–305. https://doi.org/10.1007/s10584-016-1832-9

Turasie AA (2012) Cointegration modelling of climatic time series. p 166

Voldoire A, Saint-Martin D, Sénési S, Decharme B, Alias A, Chevallier M, Colin J, Guérémy J-F, Michou M, Moine M-P, Nabat P, Roehrig R, Salas y Mélia D, Séférian R, Valcke S, Beau I, Belamari S, Berthet S, Cassou C, Cattiaux J, Deshayes J, Douville H, Ethé C, Franchistéguy L, Geoffroy O, Lévy C, Madec G, Meurdesoif Y, Msadek R, Ribes A, Sanchez-Gomez E, Terray L, Waldman R (2019) Evaluation of CMIP6 DECK experiments with CNRM-CM6-1. J Adv Model Earth Syst 11(7):2177–2213. https://doi.org/10.1029/2019MS001683

Williams KD, Copsey D, Blockley EW, Bodas-Salcedo A, Calvert D, Comer R, Davis P, Graham T, Hewitt HT, Hill R, Hyder P, Ineson S, Johns TC, Keen AB, Lee RW, Megann A, Milton SF, Rae JGL, Roberts MJ, Scaife AA, Schiemann R, Storkey D, Thorpe L, Watterson IG, Walters DN, West A, Wood RA, Woollings T, Xavier PK (2018) The Met Office Global Coupled Model 3.0 and 3.1 (GC3.0 and GC3.1) configurations. J Adv Model Earth Syst 10(2):357–380. https://doi.org/10.1002/2017MS001115

Wu T, Lu Y, Fang Y, Xin X, Li L, Li W, Jie W, Zhang J, Liu Y, Zhang L, Zhang F, Zhang Y, Wu F, Li J, Chu M, Wang Z, Shi X, Liu X, Wei M, Huang A, Zhang Y, Liu X (2019) The Beijing Climate Center Climate System Model (BCC-CSM): the main progress from CMIP5 to CMIP6. Geosci Model Dev 12(4):1573–1600

Yukimoto S, Kawai H, Koshiro T, Oshima N, Yoshida K, Urakawa S, Tsujino H, Deushi M, Tanaka T, Hosaka M, Yabu S, Yoshimura H, Shindo E, Mizuta R, Obata A, Adachi Y, Ishii M (2019) The Meteorological Research Institute Earth System Model Version 2.0, MRI-ESM2.0: description and basic evaluation of the physical component. J Meteorol Soc Jpn Ser II 97(5):931–965

Yule GU (1926) Why do we sometimes get nonsense-correlations between time-series? A study in sampling and the nature of time-series. J R Stat Soc 89(1):1–63

Zhang HM, Lawrimore JH, Huang B, Menne MJ, Yin X, Sanchez-Lugo A, Gleason BE, Vose R, Arndt D, Rennie JJ, Williams CN (2019) Updated temperature data give a sharper view of climate trends. Eos. https://doi.org/10.1029/2019EO128229

Ziehn T, Chamberlain MA, Law RM, Lenton A, Bodman RW, Dix M, Stevens L, Wang Y-P, Srbinovsky J (2020) The Australian Earth System Model: ACCESS-ESM1.5. J South Hemisph Earth Syst Sci 70(1):193–214

Acknowledgements

We are grateful to the reviewers for their insightful and constructive comments. We acknowledge the World Climate Research Programme’s Working Group on Coupled Modelling, which is responsible for CMIP, and we thank the climate modelling groups for producing and making available their model output. A list of the models used in this study and the respective modelling centres is available at the repository linked below. For CMIP the U.S. Department of Energy’s Program for Climate Model Diagnosis and Intercomparison provides coordinating support and led development of software infrastructure in partnership with the Global Organization for Earth System Science Portals. Donald P. Cummins wishes to thank Philip G. Sansom for kindly sharing his R script, which was used for batch processing of CMIP6 gridded data.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cummins, D.P., Stephenson, D.B. & Stott, P.A. Could detection and attribution of climate change trends be spurious regression? Clim Dyn 59, 2785–2799 (2022). https://doi.org/10.1007/s00382-022-06242-z

DOI: https://doi.org/10.1007/s00382-022-06242-z