Abstract

Tuning of model-based boosting algorithms relies mainly on the number of iterations, while the step-length is fixed at a predefined value. For complex models with several predictors such as Generalized additive models for location, scale and shape (GAMLSS), imbalanced updates of predictors, where some distribution parameters are updated more frequently than others, can be a problem that prevents some submodels from being appropriately fitted within a limited number of boosting iterations. We propose an approach using adaptive step-length (ASL) determination within a non-cyclical boosting algorithm for Gaussian location and scale models, as an important special case of the wider class of GAMLSS, to prevent such imbalance. Moreover, we discuss properties of the ASL and derive a semi-analytical form of the ASL that avoids manual selection of the search interval and numerical optimization to find the optimal step-length, and consequently improves computational efficiency. We show competitive behavior of the proposed approaches compared to penalized maximum likelihood and boosting with a fixed step-length for Gaussian location and scale models in two simulations and two applications, in particular for cases of large variance and/or more variables than observations. In addition, the underlying concept of the ASL is also applicable to the whole GAMLSS framework and to other models with more than one predictor like zero-inflated count models, and provides insights into the choice of reasonable defaults for the step-length in the simpler special case of (Gaussian) additive models.


1 Introduction

Generalized additive models for location, scale and shape (GAMLSS) (Rigby and Stasinopoulos 2005) are distribution-based approaches, where all parameters of the assumed distribution for the response can be modelled as additive functions of the explanatory variables (Ripley 2004; Stasinopoulos et al. 2017). Specifically, the GAMLSS framework allows the conditional distribution of the response variable to come from a wide variety of discrete, continuous and mixed discrete-continuous distributions, see Stasinopoulos and Rigby (2007). Unlike conventional generalized additive models (GAMs), GAMLSS not only model the location parameter, e.g. the mean for Gaussian distributions, but also further distribution parameters such as scale (variance) and shape (skewness and kurtosis) through the explanatory variables in linear, nonlinear or smooth functional form.

The coefficients of GAMLSS are usually estimated with the penalized maximum likelihood method (Rigby and Stasinopoulos 2005). However, this approach cannot deal with high-dimensional data, or more precisely, the case of more variables than observations (Bühlmann 2006). As the selection of informative covariates is an important part of practical analysis, Mayr et al. (2012) combined the GAMLSS framework with componentwise gradient boosting (Bühlmann and Yu 2003; Hofner et al. 2014; Hothorn et al. 2018) such that variable selection and estimation can be performed simultaneously. The original method cyclically updates the distribution parameters, i.e. all predictors are updated sequentially in each boosting iteration (Hofner et al. 2016). Because the levels of complexity vary across the prediction functions, separate stopping values are required for each distribution parameter. Consequently, these stopping values have to be optimized jointly, as they are not independent of each other. The commonly applied joint optimization methods like grid search are, however, computationally very demanding. For this reason, Thomas et al. (2018) proposed an alternative non-cyclical algorithm that updates only one distribution parameter (the one yielding the strongest improvement) in each boosting iteration. This way, only one global stopping value is needed, and the resulting one-dimensional optimization procedure vastly reduces the computing complexity of the boosting algorithm compared to the previous multi-dimensional one. The non-cyclical algorithm can be combined with stability selection (Meinshausen and Bühlmann 2010; Hofner et al. 2015) to further reduce the selection of false positives (Hothorn et al. 2010).

In contrast to the cyclical approach, the non-cyclical algorithm does not enforce an equal number of updates for all distribution parameters, as it is not useful to artificially force updates for parameters with a less complex structure than other parameters. However, it then becomes even more important to fairly select the predictor to be updated in any given iteration. The current implementation of Thomas et al. (2018) uses fixed and equal step-lengths for all updates, regardless of the loss reduction achieved for the different distribution parameters. Since different parameters affect the loss in different ways, an update of the same size on all predictors results in different improvements with respect to loss reduction. As a consequence, a more useful update of one parameter could be rejected in favor of another just because its relevance in the loss function differs. As we demonstrate later, this leads to imbalanced updates that undermine the fair selection, and predictors that receive a large number of boosting iterations still tend to be underfitted. This seems counterintuitive, since one would expect underfitting mainly for predictors that are updated in only a few iterations. As we show later, a large \(\varvec{\sigma }\) in a Gaussian distribution leads to a small negative gradient of \(\varvec{\mu }\), and consequently the improvement for \(\varvec{\mu }\) with fixed small step-lengths in each boosting iteration is also small. As a result, the algorithm needs many updates of \(\varvec{\mu }\) until its empirical risk decreases to the level of \(\varvec{\sigma }\), and it may stop long before the corresponding coefficients are well estimated.

We address this problem by proposing a version of the non-cyclical boosting algorithm for GAMLSS, especially for Gaussian location and scale models, that adaptively and automatically optimizes the step-lengths for all predictors in each boosting iteration. This ensures that no parameter is favored over the others: for each update, we find the step-length factor that results in the overall best model improvement and then base the decision on which parameter to update on this comparison. While the adaptive approach does not enforce equal numbers of updates for all distribution parameters, it yields a fair selection of predictors to update and a natural balance in the updates. For the special Gaussian case, we also derive (semi-)analytical adaptive step-lengths that reduce the need for numerical optimization, and discuss their properties. Our findings have implications beyond boosted Gaussian location and scale models for boosting other models with several predictors, e.g. the whole GAMLSS framework in general or zero-inflated count models, and also give insights into the step-length choice for the simpler special case of (Gaussian) additive models.

This paper is organized as follows: Section 2 introduces the boosted GAMLSS models, including the cyclical and non-cyclical algorithms. Section 3 discusses how to apply the adaptive step-length in the non-cyclical boosted GAMLSS algorithm, introduces the semi-analytical solutions of the adaptive step-length for Gaussian location and scale models and discusses their properties. Section 4 evaluates the performance of the adaptive algorithms and the problem of the fixed step-length in two simulations. Section 5 presents the application of the adaptive algorithms to two datasets: the malnutrition data, where the outcome variance is very large, and the riboflavin data, which has more variables than observations. Section 6 concludes with a summary and discussion. Further relevant materials and results are included in the appendix.

2 Boosted GAMLSS

In this section, we briefly introduce GAMLSS and the cyclical and non-cyclical boosting methods for their estimation.

2.1 GAMLSS and componentwise gradient boosting

Conventional generalized additive models (GAM) assume a dependence only of the conditional mean \(\mu \) of the response on the covariates. GAMLSS, however, also model other distribution parameters such as the scale \(\sigma \), skewness \(\nu \) and/or kurtosis \(\tau \) with a set of statistical models.

The K distribution parameters \(\varvec{\theta }^T = (\varvec{\theta }_1, \varvec{\theta }_2, \ldots , \varvec{\theta }_K)\) of a density function \(f({\varvec{y}}|\varvec{\theta })\) are modelled by a set of up to K additive models. The model class assumes that the observations \(y_i\) for \(i \in \{1, \ldots , n\}\) are conditionally independent given a set of explanatory variables. Let \({\varvec{y}}^T = (y_1, y_2, \ldots , y_n)\) be the response vector and \({\varvec{X}}\) an \(n \times J\) data matrix. In addition, we denote by \({\varvec{X}}_{i \cdot }\), \({\varvec{X}}_{\cdot j}\) and \(X_{ij}\) the i-th observation (vector of length J), the j-th variable (vector of length n) and the i-th observation of the j-th variable (a single value), respectively. Let \(g_k(\cdot ), k = 1, \ldots , K\) be known monotonic link functions that relate the K distribution parameters to the explanatory variables through additive models given by
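\[ g_k(\varvec{\theta }_k) = \varvec{\eta }_{\theta _k} = \beta _{0, \varvec{\theta }_k} {\varvec{1}}_n + \sum _{j=1}^{J} f_{j, \varvec{\theta }_k}({\varvec{X}}_{\cdot j} | \beta _{j, \varvec{\theta }_k}), \quad k = 1, \ldots , K, \tag{1} \]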
where \(\varvec{\theta }_k = (\theta _{k, 1}, \ldots , \theta _{k, n})^T\) contains the n parameter values for the n observations and functions are applied elementwise if the argument is a vector, \(\varvec{\eta }_{\theta _k}\) is a vector of length n, \({\varvec{1}}_n\) is a vector of ones and \(\beta _{0, \varvec{\theta }_k}\) is the intercept specific to model parameter \(\varvec{\theta }_k\). The function \(f_{j, \varvec{\theta }_k}({\varvec{X}}_{\cdot j} | \beta _{j, \varvec{\theta }_k})\) represents the effect of the j-th explanatory variable \({\varvec{X}}_{\cdot j}\) (vector of length n) on the model parameter \(\varvec{\theta }_k\), and \(\beta _{j, \varvec{\theta _k}}\) is the parameter of the additive predictor \(f_{j, \varvec{\theta }_k}(\cdot )\). Various types of effects (e.g., linear, smooth, random) are allowed for \(f(\cdot )\). If the location parameter \((\theta _1 = \mu )\) is the only distribution parameter to be regressed (\(K=1\)) and the response variable is from the exponential family, (1) reduces to the conventional GAM. In addition, \(f_j\) can depend on more than one variable (interaction), in which case \(X_{\cdot j}\) would be e.g. an \(n \times 2\) matrix, but for simplicity we ignore this case in the notation.

A penalized likelihood approach can be used to estimate the unknown quantities; for more details, see Rigby and Stasinopoulos (2005). This approach does not allow parameter estimation in the case of more explanatory variables than observations, and variable selection for high-dimensional data is not possible; both limitations can be addressed by boosting. The theoretical foundations regarding numerical convergence and consistency of boosting with general loss functions have been studied by Zhang and Yu (2005). The work of Bühlmann and Yu (2003) on L2 boosting with linear learners and of Hastie et al. (2007) on the equivalence of the lasso and forward stagewise regression paved the way for componentwise gradient boosting (Hothorn et al. 2018), which emphasizes the importance of weak learners to reduce the tendency to overfit. To deal with high-dimensional problems, Mayr et al. (2012) proposed a boosted GAMLSS algorithm, which estimates the predictors in GAMLSS with componentwise gradient boosting. As this method in general updates only one variable in each iteration, it can deal with data that has more variables than observations, and the important variables can be selected by controlling the stopping iterations.

To estimate the unknown predictor parameters \(\beta _{j, \varvec{\theta }_k}\), \(j \in \{1, \ldots , J\}\) in equation (1), the componentwise gradient boosting algorithm minimizes the empirical risk R, i.e., the loss \(\rho \) summed over all observations,
\[ R = \sum _{i=1}^{n} \rho \left( y_i, \varvec{\eta }({\varvec{X}}_{i \cdot }) \right) , \]
where the loss \(\rho \) measures the discrepancy between the response \(y_i\) and the predictor \(\varvec{\eta }({\varvec{X}}_{i \cdot })\). The predictor \(\varvec{\eta }({\varvec{X}}_{i \cdot }) = \left( \eta _{\varvec{\theta }_1}({\varvec{X}}_{i\cdot }), \ldots , \eta _{\varvec{\theta }_K}({\varvec{X}}_{i\cdot }) \right) \) is a vector of length K. For the i-th observation \({\varvec{X}}_{i\cdot }\), each predictor \(\eta _{\varvec{\theta }_k}({\varvec{X}}_{i\cdot })\) is a single value corresponding to the i-th entry in \(\eta _{\varvec{\theta _k}}\) in equation (1). The loss function \(\rho \) usually used in GAMLSS is the negative log-likelihood of the assumed distribution of \({\varvec{y}}\) (Thomas et al. 2018; Friedman et al. 2000).

The main idea of gradient boosting is to fit simple regression base-learners \(h_j(\cdot )\) to the pseudo-residuals vector \({\varvec{u}}^T = (u_1, \ldots , u_n)\), which is defined as the negative partial derivatives of loss \(\rho \), i.e.,
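\[ u_{k, i}^{[m]} = - \left. \frac{\partial \rho (y_i, \varvec{\eta })}{\partial \eta _{\varvec{\theta }_k}} \right| _{\varvec{\eta } = {\hat{\varvec{\eta }}}^{[m-1]}({\varvec{X}}_{i \cdot })}, \qquad {\varvec{u}}_k^{[m]} = \left( u_{k, 1}^{[m]}, \ldots , u_{k, n}^{[m]}\right) ^T, \]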
where m denotes the current boosting iteration. In a componentwise gradient boosting iteration, each base-learner usually involves one explanatory variable (interactions are also allowed) and is fitted separately to \({\varvec{u}}_k^{[m]}\),

For a linear base-learner, the correspondence to the model terms in (1) is

where the estimated coefficients can be obtained by the maximum likelihood or least squares method. The best-fitting base-learner is selected based on the residual sum of squares, i.e.,
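\[ j^* = \underset{j \in \{1, \ldots , J\}}{\arg \min } \sum _{i=1}^{n} \left( u_{k, i}^{[m]} - {\hat{h}}_{j, \theta _k}^{[m]}(X_{ij}) \right) ^2, \]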
thereby allowing for easy interpretability of the estimated model and also the use of hypothesis tests for single base-learners (Hepp et al. 2019). The additive predictor will be updated based on the best-fitting base-learner \({\hat{h}}_{j^*, \theta _{k^*}}({\varvec{X}}_{\cdot j^*})\) in terms of the best-performing sub-model \(\eta _{\varvec{\theta }_{k^*}}\),
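\[ {\hat{\eta }}_{\varvec{\theta }_{k^*}}^{[m]} = {\hat{\eta }}_{\varvec{\theta }_{k^*}}^{[m-1]} + \nu \, {\hat{h}}_{j^*, \theta _{k^*}}({\varvec{X}}_{\cdot j^*}), \tag{2} \]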
where \(\nu \) denotes the step-length. In order to prevent overfitting, the step-length is usually set to a small value, in most cases 0.1. Equation (2) updates only the best-performing predictor \({\hat{\eta }}_{\varvec{\theta }_{k^*}}^{[m]}\); all other predictors (i.e. for \(k \ne k^*\)) remain the same as in the previous boosting iteration. The best-performing sub-model \(\varvec{\theta }_{k^*}\) is selected by comparing the empirical risks, i.e. by determining which model parameter achieves the largest model improvement.
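To make the componentwise update concrete, here is a minimal sketch of boosting iterations for the simplest single-predictor case. It assumes squared-error loss (so the negative gradient is the ordinary residual), simple linear base-learners without intercept on centred covariates, and NumPy; the function name `componentwise_step` and the simulated data are hypothetical and not part of any package.

```python
import numpy as np


def componentwise_step(X, u, eta, nu=0.1):
    """One componentwise boosting update with simple linear base-learners.

    X   : (n, J) covariate matrix with centred columns
    u   : (n,) negative gradient (pseudo-residuals) at the current predictor
    eta : (n,) current additive predictor
    nu  : fixed step-length
    """
    # Least-squares fit of u on every column separately (one base-learner each)
    betas = X.T @ u / np.sum(X ** 2, axis=0)
    fits = X * betas                      # column j holds h_j(X_.j)
    # Select the best-fitting base-learner by residual sum of squares
    rss = np.sum((u[:, None] - fits) ** 2, axis=0)
    j_star = int(np.argmin(rss))
    # Update only the selected component, shrunken by the step-length
    return j_star, eta + nu * fits[:, j_star]


rng = np.random.default_rng(1)
n, J = 200, 10
X = rng.uniform(-1, 1, size=(n, J))
X -= X.mean(axis=0)
y = 2.0 * X[:, 3] + rng.normal(scale=0.1, size=n)

eta = np.zeros(n)
selected = []
for m in range(100):
    u = y - eta                           # squared-error loss: u is the residual
    j, eta = componentwise_step(X, u, eta)
    selected.append(j)
```

Only one coefficient moves per iteration, which is why variables that are never selected stay out of the model.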

The main tuning parameter in this procedure, as in other boosting algorithms, is the number of iterations performed before the algorithm stops, denoted as \(m_{\theta _{\text {stop}}}\). As too large or too small values of \(m_{\theta _{\text {stop}}}\) lead to overfitting or underfitting, cross-validation (Kohavi 1995) is one of the most widely used methods to find the optimal \(m_{\theta _{\text {stop}}}\).
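A minimal sketch of choosing the stopping iteration from out-of-sample risk. It assumes squared-error loss, NumPy, and, for brevity, a single training/validation split instead of full cross-validation; `boost_path` is a hypothetical helper, and in practice one would average the validation risk over several folds.

```python
import numpy as np


def boost_path(X, y, X_val, y_val, mstop=300, nu=0.1):
    """Componentwise L2 boosting; returns the validation risk after each iteration."""
    eta = np.zeros(len(y))
    eta_val = np.zeros(len(y_val))
    col_ss = np.sum(X ** 2, axis=0)
    val_risk = []
    for _ in range(mstop):
        u = y - eta                               # negative gradient of the L2 loss
        betas = X.T @ u / col_ss                  # one least-squares fit per column
        rss = np.sum((u[:, None] - X * betas) ** 2, axis=0)
        j = int(np.argmin(rss))                   # best-fitting base-learner
        eta += nu * betas[j] * X[:, j]
        eta_val += nu * betas[j] * X_val[:, j]
        val_risk.append(np.mean((y_val - eta_val) ** 2))
    return np.array(val_risk)


rng = np.random.default_rng(2)
n, J = 60, 100                                    # more variables than observations
X_all = rng.normal(size=(2 * n, J))
y_all = X_all[:, 0] - 2 * X_all[:, 1] + rng.normal(size=2 * n)
X, X_val, y, y_val = X_all[:n], X_all[n:], y_all[:n], y_all[n:]

val_risk = boost_path(X, y, X_val, y_val)
m_stop = int(np.argmin(val_risk)) + 1             # iterations are counted from 1
```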

2.2 Cyclical boosted GAMLSS

The boosted GAMLSS can deal with data that has more variables than observations, as componentwise gradient boosting updates only one variable in each iteration. It also performs variable selection: less important variables that are never selected as the best-performing variable before the stopping iteration \(m_{\theta _{\text {stop}}}\) are not included in the final model.

The original framework of boosted GAMLSS proposed by Mayr et al. (2012) is a cyclical approach, which means every predictor \(\eta _{\varvec{\theta }_k}, k \in \{1, \ldots , K\}\) is updated in a cyclical manner inside each boosting iteration. The iteration starts by updating the predictor for the location parameter and uses the predictors from the previous iteration for all other parameters. Then, the updated location model will be used for updating the scale model and so on. A schematic overview of the updating process in iteration \(m+1\) for \(K = 4\) is
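\[ \begin{array}{lcl} \dfrac{\partial \rho }{\partial \eta _{\theta _1}}\left( {\varvec{y}}, {\hat{\eta }}_{\theta _1}^{[m]}, {\hat{\eta }}_{\theta _2}^{[m]}, {\hat{\eta }}_{\theta _3}^{[m]}, {\hat{\eta }}_{\theta _4}^{[m]}\right) & \xrightarrow {\text {update}} & {\hat{\eta }}_{\theta _1}^{[m+1]} \\
\dfrac{\partial \rho }{\partial \eta _{\theta _2}}\left( {\varvec{y}}, {\hat{\eta }}_{\theta _1}^{[m+1]}, {\hat{\eta }}_{\theta _2}^{[m]}, {\hat{\eta }}_{\theta _3}^{[m]}, {\hat{\eta }}_{\theta _4}^{[m]}\right) & \xrightarrow {\text {update}} & {\hat{\eta }}_{\theta _2}^{[m+1]} \\
\dfrac{\partial \rho }{\partial \eta _{\theta _3}}\left( {\varvec{y}}, {\hat{\eta }}_{\theta _1}^{[m+1]}, {\hat{\eta }}_{\theta _2}^{[m+1]}, {\hat{\eta }}_{\theta _3}^{[m]}, {\hat{\eta }}_{\theta _4}^{[m]}\right) & \xrightarrow {\text {update}} & {\hat{\eta }}_{\theta _3}^{[m+1]} \\
\dfrac{\partial \rho }{\partial \eta _{\theta _4}}\left( {\varvec{y}}, {\hat{\eta }}_{\theta _1}^{[m+1]}, {\hat{\eta }}_{\theta _2}^{[m+1]}, {\hat{\eta }}_{\theta _3}^{[m+1]}, {\hat{\eta }}_{\theta _4}^{[m]}\right) & \xrightarrow {\text {update}} & {\hat{\eta }}_{\theta _4}^{[m+1]} \end{array} \]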
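The cyclical order described in this subsection can be sketched for a Gaussian model with K = 2, i.e. \(\mu \) with identity link and \(\log \sigma \) as the second predictor. This is a minimal sketch assuming NumPy, linear base-learners that each use one column of a design matrix containing an intercept column, and a fixed step-length; the function names and data are hypothetical. Within one iteration, the \(\sigma \) update uses the \(\mu \) predictor that was just updated.

```python
import numpy as np


def best_fit(X, u):
    """Least-squares fit of u on each column separately; return the best fit."""
    betas = X.T @ u / np.sum(X ** 2, axis=0)
    rss = np.sum((u[:, None] - X * betas) ** 2, axis=0)
    j = int(np.argmin(rss))
    return X[:, j] * betas[j]


def gaussian_risk(y, mu, log_sigma):
    """Empirical risk: Gaussian negative log-likelihood without the constant."""
    return np.sum(log_sigma + (y - mu) ** 2 / (2 * np.exp(2 * log_sigma)))


def cyclical_iteration(X, y, mu, log_sigma, nu=0.1):
    # 1) update mu, using the scale predictor from the previous iteration
    u_mu = (y - mu) / np.exp(2 * log_sigma)
    mu = mu + nu * best_fit(X, u_mu)
    # 2) update log(sigma), using the *updated* mu
    u_sigma = (y - mu) ** 2 / np.exp(2 * log_sigma) - 1
    log_sigma = log_sigma + nu * best_fit(X, u_sigma)
    return mu, log_sigma


rng = np.random.default_rng(4)
n = 300
x = rng.uniform(-1, 1, size=(n, 3))
X = np.column_stack([np.ones(n), x])
y = 1 + 2 * x[:, 0] + np.exp(0.5 * x[:, 1]) * rng.normal(size=n)

mu, log_sigma = np.full(n, y.mean()), np.full(n, np.log(y.std()))
risk_start = gaussian_risk(y, mu, log_sigma)
for m in range(200):
    mu, log_sigma = cyclical_iteration(X, y, mu, log_sigma)
```

Both predictors are updated in every iteration by construction, which is the source of the inherent balance and of the need for separate stopping values.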
However, not all of the distribution parameters have the same complexity, so the stopping iterations \(m_{\theta _{\text {stop}}}\) should be set separately for the different parameters, or jointly optimized, for example by grid search. Since the size of the grid grows exponentially with the number of distribution parameters, such optimization can be very slow.

2.3 Non-cyclical boosted GAMLSS

In order to deal with these issues of the cyclical approach, Thomas et al. (2018) proposed a non-cyclical version that, instead of successively updating all parameters, updates only one distribution parameter in each boosting iteration, chosen by comparing the model improvement (negative log-likelihood) of each model parameter, see Algorithm 1 (especially step 11). Consequently, instead of specifying separate stopping iterations \(m_{\varvec{\theta }\text {stop}}\) for different parameters and tuning them with the computationally demanding grid search, only one overall stopping iteration, denoted as \(m_{\text {stop}}\), needs to be tuned, e.g. with a line search (Friedman 2001; Brent 2013). The tuning problem thus reduces from a multi-dimensional to a one-dimensional problem, which vastly reduces the computing time.

Algorithm 1 has a nested structure, with the outer loop executing the boosting iterations and the inner loops addressing the different distribution parameters. The best-fitting base-learner and its contribution to the model improvement are determined for every parameter in the inner loop and compared in the outer loop (step 11). Therefore, only the best-performing base-learner is updated in a single iteration by adding \(\nu {\hat{h}}(X_{\cdot j^*})\) to the predictor of the corresponding parameter \(\theta _{k^*}\). Over the course of the iterations, the boosting algorithm steadily builds up the model in small steps, and the final estimate for each base-learner is simply the sum of all updates it has received.
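A compact sketch of the non-cyclical selection rule with a fixed step-length for the Gaussian location and scale case. It assumes NumPy, one-column linear base-learners (with an intercept column in the design), \(\mu \) as the first predictor and \(\log \sigma \) as the second; the names and data are hypothetical, and this is an illustration rather than the reference implementation of Algorithm 1. For each parameter the best base-learner is found first (inner loop); then only the parameter whose fixed-step update gives the lower empirical risk is updated (outer comparison).

```python
import numpy as np


def best_fit(X, u):
    betas = X.T @ u / np.sum(X ** 2, axis=0)
    rss = np.sum((u[:, None] - X * betas) ** 2, axis=0)
    j = int(np.argmin(rss))
    return j, X[:, j] * betas[j]


def gaussian_risk(y, mu, log_sigma):
    return np.sum(log_sigma + (y - mu) ** 2 / (2 * np.exp(2 * log_sigma)))


def noncyclical_fsl(X, y, mstop=300, nu=0.1):
    n = len(y)
    mu, log_sigma = np.full(n, y.mean()), np.full(n, np.log(y.std()))
    path = []
    for _ in range(mstop):
        s2 = np.exp(2 * log_sigma)
        j_mu, h_mu = best_fit(X, (y - mu) / s2)               # inner loop: mu
        j_sig, h_sig = best_fit(X, (y - mu) ** 2 / s2 - 1)    # inner loop: sigma
        # outer comparison: empirical risk after a fixed-step update of each
        risk_mu = gaussian_risk(y, mu + nu * h_mu, log_sigma)
        risk_sig = gaussian_risk(y, mu, log_sigma + nu * h_sig)
        if risk_mu <= risk_sig:
            mu = mu + nu * h_mu
            path.append(("mu", j_mu))
        else:
            log_sigma = log_sigma + nu * h_sig
            path.append(("sigma", j_sig))
    return mu, log_sigma, path


rng = np.random.default_rng(5)
n = 300
x = rng.uniform(-1, 1, size=(n, 3))
X = np.column_stack([np.ones(n), x])
y = 1 + 2 * x[:, 0] + np.exp(0.5 * x[:, 1]) * rng.normal(size=n)

mu, log_sigma, path = noncyclical_fsl(X, y)
```

The returned `path` records which parameter was updated in each iteration, so the balance of updates between \(\mu \) and \(\sigma \) can be inspected directly.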

The cyclical approach leads to an inherent but somewhat artificial balance between the distribution parameters, as the predictors for all distribution parameters are updated in each iteration. The different final stopping values \(m_{\varvec{\theta }\text {stop}}\) for the different distribution parameters, chosen by tuning methods such as cross-validation, allow updates to stop at different times for distribution parameters of different complexity to avoid overfitting. In the non-cyclical algorithm, especially when \(m_{\text {stop}}\) is not large enough, there is the danger of an imbalance between predictors. If the selection of the predictor to update is not fair, iterations could primarily update some of the predictors and leave others underfitted. We will provide a detailed example for the Gaussian distribution with large \(\varvec{\sigma }\) in Sect. 4.2.

A related challenge is to choose an appropriate step-length \(\nu _{\varvec{\theta }_k}^{[m]}\) for both the cyclical and non-cyclical approaches. Tuning boosted GAMLSS models relies mainly on the number of boosting iterations (\(m_{\text {stop}}\)), with the step-length \(\nu \) usually set to a small value such as 0.1. Bühlmann and Hothorn (2007) argued that using a small step-length like 0.1 (potentially resulting in a larger number of iterations \(m_{\text {stop}}\)) had a similar computing speed as using an adaptive step-length determined by a line search, but made tuning easier, with one parameter (\(m_{\text {stop}}\)) instead of two. However, this result referred to models with a single predictor. A fixed step-length can lead to an imbalance in the case of several predictors that may live on quite different scales. For example, 0.1 may be too small for \(\mu \) but too large for \(\sigma \). We will discuss such cases analytically and with empirical evidence in the later sections. Moreover, varying the step-lengths for the different sub-models directly influences the choice of the best-performing sub-model in the non-cyclical boosting algorithm, so choosing a step-length subjectively is not appropriate. In the following, we refer to a fixed predefined step-length such as 0.1 as the fixed step-length (FSL) approach.

To overcome the problems stated above, we propose using an adaptive step-length (ASL) while boosting. In particular, we propose to optimize the step-length for each predictor in each iteration to obtain a fair comparison between the predictors. While the adaptive step-length has been used before, the proposal to use different ASLs for different predictors is new and we will see that this leads to balanced updates of the different predictors.

3 Adaptive step-length

In this section, we first introduce the general idea of implementing adaptive step-lengths for the different predictors in GAMLSS. For the important special case of a Gaussian location and scale model with two model parameters (\(\mu \) and \(\sigma \)), we then derive the adaptive step-lengths and discuss their properties, which also serves as an important illustration of the relevant issues more generally.

3.1 Boosted GAMLSS with adaptive step-length

Unlike the fixed step-length in Eq. (2) and in step 11 of Algorithm 1, the adaptive step-length may vary across boosting iterations according to the loss reduction.

The adaptive step-length can be derived by solving the optimization problem
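\[ \nu _{j^*, \varvec{\theta }_k}^{*[m]} = \underset{\nu }{\arg \min } \sum _{i=1}^{n} \rho \left( y_i, {\hat{\eta }}_{\varvec{\theta }_k}^{[m-1]}({\varvec{X}}_{i \cdot }) + \nu \, {\hat{h}}_{j^*, \theta _k}^{[m]}(X_{ij^*}) \right) , \]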
note that \(\nu _{j^*, \varvec{\theta }_k}^{*[m]}\) is the optimal step-length of the model parameter \(\theta _k\) dependent on \(j^*\) in iteration m. The optimal step-length leads to the largest possible decrease of the empirical risk and usually leads to overfitting of the corresponding variable if no shrinkage is used (Hepp et al. 2016). Therefore, the adaptive step-length (ASL) we actually apply in the boosting algorithm is the product of two parts, the shrinkage parameter \(\lambda \) and the optimal step-length \(\nu _{j^*, \varvec{\theta }_k}^{*[m]}\), i.e.,
\[ \nu _{j^*, \varvec{\theta }_k}^{[m]} = \lambda \, \nu _{j^*, \varvec{\theta }_k}^{*[m]}. \]
In this article, we take \(\lambda = 0.1\), thus 10% of the optimal step-length. By comparison, the fixed step-length \(\nu = 0.1\) would correspond to a combination of a shrinkage parameter \(\lambda = 0.1\) with the “optimal” step-length \(\nu ^*\) set to one.
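The ASL can be computed generically with a one-dimensional line search on the risk along the selected base-learner direction. The sketch below is plain Python and uses golden-section search as a stand-in for whatever line search is used in practice; it works with any callable `risk(nu)`, and `golden_section` and `adaptive_step_length` are hypothetical names. The toy risk is an assumed quadratic with its minimum at 2.5. The second call illustrates the search-interval issue discussed in Sect. 3.2: if the interval is too narrow, the returned value sits at the boundary.

```python
import math


def golden_section(f, a, b, tol=1e-9):
    """Minimise a unimodal function f on [a, b] by golden-section search."""
    invphi = (math.sqrt(5) - 1) / 2          # 1 / golden ratio
    while b - a > tol:
        c = b - invphi * (b - a)
        d = a + invphi * (b - a)
        if f(c) < f(d):
            b = d                            # minimum lies in [a, d]
        else:
            a = c                            # minimum lies in [c, b]
    return (a + b) / 2


def adaptive_step_length(risk, lam=0.1, interval=(0.0, 10.0)):
    """Return (ASL, optimal step) with ASL = lam * nu_star."""
    nu_star = golden_section(risk, *interval)
    return lam * nu_star, nu_star


# Toy risk along the update direction with its minimum at nu = 2.5
risk = lambda nu: (nu - 2.5) ** 2 + 1.0
asl, nu_star = adaptive_step_length(risk)
_, nu_clipped = adaptive_step_length(risk, interval=(0.0, 1.0))  # too narrow
```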

The non-cyclical algorithm can be extended with the ASL by replacing the fixed step-length in step 8 of Algorithm 1 with the adaptive one. We formulate this change in Algorithm 2.

As the parameters in GAMLSS may have quite different scales, updates with a fixed step-length can lead to an imbalance between model parameters, especially when \(m_{\text {stop}}\) is not large enough. When using FSL, a single update of predictor \(\eta _{\varvec{\theta }_1}\) may achieve the same amount of global loss reduction as several updates of another predictor \(\eta _{\varvec{\theta }_2}\), even if the actually possible contribution of the competing base-learners is similar, because, owing to the different scales, the loss reductions of \(\eta _{\varvec{\theta }_2}\) in these iterations are always smaller than that of \(\eta _{\varvec{\theta }_1}\). Such unfair selections can be avoided by using ASL, because the model improvement is then based on the largest possible decrease for each predictor, i.e., the potential reductions in the empirical risk for all predictors are on the same level and their comparison is therefore fair. Fair selection does not enforce an equal number of updates as in the cyclical approach. The ASL approach can lead to imbalanced updates of predictors, but such imbalance reflects the intrinsically different complexities of the sub-models.
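The scale effect can be illustrated numerically. The sketch below (NumPy; the data and names are hypothetical) fits one least-squares linear base-learner to the \(\mu \)-gradient when \(\sigma = 10\) and compares the risk reduction achieved by the fixed step 0.1 with that of the ASL \(\lambda \nu ^*\) with \(\lambda = 0.1\). Here \(\nu ^*\) is taken as the minimiser of the Gaussian risk along the fitted direction, which for a least-squares base-learner works out to \(\sum _i h_i^2 / \sum _i (h_i^2/\sigma _i^2)\) (see Sect. 3.2.1) and equals \(\sigma ^2 = 100\) in this setting.

```python
import numpy as np


def gaussian_risk(y, mu, sigma2):
    """Gaussian negative log-likelihood without the constant term."""
    return np.sum(0.5 * np.log(sigma2) + (y - mu) ** 2 / (2 * sigma2))


rng = np.random.default_rng(11)
n = 500
x = rng.uniform(-1, 1, n)
X = np.column_stack([np.ones(n), x])          # linear base-learner with intercept
sigma2 = np.full(n, 10.0 ** 2)                # large, known variance
y = 5 * x + np.sqrt(sigma2) * rng.normal(size=n)
mu = np.zeros(n)

u_mu = (y - mu) / sigma2                      # small because sigma is large
h_mu = X @ np.linalg.lstsq(X, u_mu, rcond=None)[0]

risk0 = gaussian_risk(y, mu, sigma2)
gain_fsl = risk0 - gaussian_risk(y, mu + 0.1 * h_mu, sigma2)
nu_star = np.sum(h_mu ** 2) / np.sum(h_mu ** 2 / sigma2)
gain_asl = risk0 - gaussian_risk(y, mu + 0.1 * nu_star * h_mu, sigma2)
```

With these settings the ASL update achieves roughly two orders of magnitude more risk reduction for \(\mu \) than the fixed step, which is the kind of gap that biases the non-cyclical selection under FSL.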

The main contribution of this paper is the proposal to use ASLs for each predictor in GAMLSS. This idea can also be applied to other complex models (e.g. zero-inflated count models) with several predictors for the different parameters, because these models face the same problem, i.e. the scales of these parameters might differ considerably. If a boosting algorithm is preferred for estimating such a model, we provide a new solution to this kind of problem, i.e. separate adaptive step-lengths for each distribution parameter.

3.2 Gaussian location and scale models

In general, the adaptive step-length \(\nu \) can be found by optimization procedures such as a line search. However, such methods do not reveal the properties of adaptive step-lengths and their relationship with the model parameters. Moreover, a line search looks for the optimal value within a predefined search interval, which can be difficult to specify, since too narrow intervals might not include the optimal value and too wide intervals increase the search time. Direct computation from an analytical expression is faster than a search. By investigating the important special case of a Gaussian distribution with two parameters, we will learn a lot about the adaptive step-length for the general case. Nevertheless, we stress that in many cases an explicit closed form for the adaptive step-length may not exist, and line search still plays an irreplaceable role. We study the analytical solutions for the Gaussian special case to uncover their relationship with the model parameters, in order to better understand the limitations of the fixed step-length and how adaptive values improve the learning process.

Consider the data points \((y_i, {\varvec{x}}_{i\cdot }), i \in \{1, \ldots , n\}\), where \({\varvec{x}}\) is an \(n \times J\) matrix. Assume the true data generating mechanism is the normal model

As we talk about the observed data, we replace \(\eta _{\varvec{\theta }_k}\), where \(k = 1, 2\) for the Gaussian distribution, with \(\varvec{\mu }\) and \(\varvec{\sigma }\), and replace \({\varvec{X}}\) with \({\varvec{x}}\). The identity and exponential functions are thus the inverse links for \(\varvec{\mu }\) and \(\varvec{\sigma }\), respectively. Taking the negative log-likelihood as the loss function, its negative partial derivatives \({\varvec{u}}_{\varvec{\mu }}\) and \({\varvec{u}}_{\varvec{\sigma }}\) in iteration m for both parameters can then be modelled with the base-learners \({\hat{h}}_{j, \varvec{\mu }}^{[m]}\) and \({\hat{h}}_{j, \varvec{\sigma }}^{[m]}\). The optimization process can then be divided into two parts: one is the ASL for the location parameter \(\varvec{\mu }\), and the other is for the scale parameter \(\varvec{\sigma }\). As the ASL is just a shrunken version of the optimal value, we consider only the optimal step-lengths for both parameters.
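For reference, the negative partial derivatives used as pseudo-residuals can be written down explicitly. The sketch below (NumPy; function names and values hypothetical) assumes the loss is the Gaussian negative log-likelihood with the \(\mu \) predictor on the identity scale and the \(\sigma \) predictor on the log scale, which gives \(u_{\mu } = (y - \mu )/\sigma ^2\) and \(u_{\sigma } = (y - \mu )^2/\sigma ^2 - 1\), and checks both against central finite differences. The factor \(1/\sigma ^2\) in \(u_{\mu }\) is what makes the \(\mu \)-gradient small when \(\sigma \) is large.

```python
import numpy as np


def gaussian_loss(y, eta_mu, eta_sigma):
    """Per-observation negative log-likelihood; eta_sigma = log(sigma)."""
    sigma2 = np.exp(2 * eta_sigma)
    return eta_sigma + (y - eta_mu) ** 2 / (2 * sigma2) + 0.5 * np.log(2 * np.pi)


def negative_gradients(y, eta_mu, eta_sigma):
    sigma2 = np.exp(2 * eta_sigma)
    u_mu = (y - eta_mu) / sigma2                  # -d rho / d eta_mu
    u_sigma = (y - eta_mu) ** 2 / sigma2 - 1.0    # -d rho / d eta_sigma
    return u_mu, u_sigma


y = np.array([0.3, -1.2, 2.0])
eta_mu = np.array([0.1, 0.0, 1.5])
eta_sigma = np.array([0.0, 0.5, -0.3])
u_mu, u_sigma = negative_gradients(y, eta_mu, eta_sigma)

# Central finite differences of the loss as an independent check
eps = 1e-6
fd_mu = -(gaussian_loss(y, eta_mu + eps, eta_sigma)
          - gaussian_loss(y, eta_mu - eps, eta_sigma)) / (2 * eps)
fd_sigma = -(gaussian_loss(y, eta_mu, eta_sigma + eps)
             - gaussian_loss(y, eta_mu, eta_sigma - eps)) / (2 * eps)
```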

3.2.1 Optimal step-length for \(\varvec{\mu }\)

The analytical optimal step-length for \(\varvec{\mu }\) in iteration m is obtained through minimizing the empirical risk
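\[ \nu _{j^*, \varvec{\mu }}^{*[m]} = \underset{\nu }{\arg \min } \sum _{i=1}^{n} \left( \log {\hat{\sigma }}_i^{[m-1]} + \frac{\left( y_i - {\hat{\mu }}_i^{[m-1]} - \nu \, {\hat{h}}_{j^*, \varvec{\mu }}^{[m]}(x_{ij^*}) \right) ^2}{2 {\hat{\sigma }}_i^{2[m-1]}} \right) , \]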
where the expression \({\hat{\sigma }}_i^{2[m-1]}\) represents the square of the standard deviation in the previous iteration, i.e. \({\hat{\sigma }}_i^{2[m-1]} = ({\hat{\sigma }}_i^{[m-1]})^2\). The optimal value of \(\nu _{j^*, \varvec{\mu }}^{*[m]}\) is obtained by letting the derivative of the equation equal zero, so we get the analytical ASL for \(\varvec{\mu }\) (for more derivation details, see also appendix A.1):
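\[ \nu _{j^*, \varvec{\mu }}^{*[m]} = \frac{\sum _{i=1}^{n} \left( {\hat{h}}_{j^*, \varvec{\mu }}^{[m]}(x_{ij^*}) \right) ^2}{\sum _{i=1}^{n} \left( {\hat{h}}_{j^*, \varvec{\mu }}^{[m]}(x_{ij^*}) \right) ^2 \big/ {\hat{\sigma }}_i^{2[m-1]}}. \]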
Clearly, \(\nu ^{*[m]}_{j^*, \varvec{\mu }}\) is not an independent parameter in GAMLSS but depends on the base-learner \({\hat{h}}_{\varvec{\mu }}^{[m]}(x_{ij^*})\) with respect to the best-performing variable \({\varvec{x}}_{\cdot j^*}\) and on the estimated variance in the previous iteration \({\hat{\sigma }}_i^{2[m-1]}\).
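The analytical optimal step-length for \(\mu \) can be checked numerically. The sketch below (NumPy; names and data hypothetical) assumes the Gaussian risk written in terms of the previous-iteration variances and a least-squares linear base-learner with intercept. Under these assumptions the first-order condition gives \(\nu ^* = \sum _i h_i u_i / \sum _i (h_i^2/{\hat{\sigma }}_i^2)\), and because a least-squares fit satisfies \(\sum _i h_i u_i = \sum _i h_i^2\), this equals \(\sum _i h_i^2 / \sum _i (h_i^2/{\hat{\sigma }}_i^2)\), which depends only on the base-learner fit and the previous variances.

```python
import numpy as np


def optimal_step_mu(h, sigma2_prev):
    """Optimal step-length for mu: sum(h^2) / sum(h^2 / sigma^2)."""
    return np.sum(h ** 2) / np.sum(h ** 2 / sigma2_prev)


def risk_mu(nu, y, mu_prev, sigma2_prev, h):
    """Gaussian risk after the update mu_prev + nu * h (constant dropped)."""
    return np.sum(0.5 * np.log(sigma2_prev)
                  + (y - mu_prev - nu * h) ** 2 / (2 * sigma2_prev))


rng = np.random.default_rng(0)
n = 300
x = rng.uniform(-1, 1, n)
X = np.column_stack([np.ones(n), x])          # base-learner design with intercept
sigma2_prev = np.exp(rng.uniform(-1, 2, n))   # heteroscedastic previous variances
mu_prev = np.zeros(n)
y = 1 + 3 * x + np.sqrt(sigma2_prev) * rng.normal(size=n)

u = (y - mu_prev) / sigma2_prev               # negative gradient for mu
h = X @ np.linalg.lstsq(X, u, rcond=None)[0]  # least-squares base-learner fit
nu_star = optimal_step_mu(h, sigma2_prev)
```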

In the special case of a Gaussian additive model, the scale parameter \(\sigma \) is assumed to be constant, i.e. \({\hat{\sigma }}_i^{[m-1]} = {\hat{\sigma }}^{[m-1]}\) for all \(i \in \{1, \ldots , n\}\). We then obtain
\[ \nu _{j^*, \varvec{\mu }}^{*[m]} = {\hat{\sigma }}^{2[m-1]}. \tag{6} \]
This gives us an interesting property of the optimal step-length: in the Gaussian additive model, the analytical optimal step-length for \(\mu \) is exactly the variance (as computed in the previous boosting iteration). This property makes our results applicable not only to the special GAMLSS case, but also to boosting additive models with normal responses. Therefore, in the case of Gaussian additive models, we can use \(\nu _{j^*, \varvec{\mu }}^{[m]} = \lambda {\hat{\sigma }}^{2[m-1]}\) as the step-length, which has a stronger theoretical foundation than the common choice 0.1.
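In the Gaussian additive model the optimal step-length no longer depends on the base-learner fit. A minimal NumPy check with hypothetical values: with a constant previous variance \({\hat{\sigma }}^2 = 6.25\), the optimal step equals \({\hat{\sigma }}^2\) for any non-zero fit, so the ASL with \(\lambda = 0.1\) would be 0.625 rather than the conventional 0.1.

```python
import numpy as np


def optimal_step_mu(h, sigma2_prev):
    """Optimal step-length for mu: sum(h^2) / sum(h^2 / sigma^2)."""
    return np.sum(h ** 2) / np.sum(h ** 2 / sigma2_prev)


rng = np.random.default_rng(3)
lam, sigma_hat, n = 0.1, 2.5, 50
steps = []
for _ in range(3):
    h = rng.normal(size=n)                          # arbitrary non-zero fit
    nu_star = optimal_step_mu(h, np.full(n, sigma_hat ** 2))
    steps.append((nu_star, lam * nu_star))          # (optimal step, ASL)
```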

Back to the general GAMLSS case, we can further investigate the behavior of the step-length by considering the limiting case of \(m \rightarrow \infty \). For large m, all base-learner fits \({\hat{h}}_{j^*, \varvec{\mu }}^{[m]}(x_{ij^*})\) converge to zero or are similarly small. If we consequently approximate all \({\hat{h}}_{j^*, \varvec{\mu }}^{[m]}(x_{ij^*})\) by some small constant h, this gives an approximation of the analytical optimal step-length of
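\[ \nu _{j^*, \varvec{\mu }}^{*[m]} \approx \frac{n h^2}{\sum _{i=1}^{n} h^2 / {\hat{\sigma }}_i^{2[m-1]}} = \frac{n}{\sum _{i=1}^{n} 1 / {\hat{\sigma }}_i^{2[m-1]}}, \tag{7} \]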
which is the harmonic mean of the estimated variances \({\hat{\sigma }}_i^{2[m-1]}\) in the previous iteration. While this expression requires m to be large, which may not be reached if \(m_{\text {stop}}\) is of moderate size to prevent overfitting, the expression still gives an indication of the strong dependence of the optimal step-length on the variances \({\hat{\sigma }}_i^{2[m-1]}\), which generalizes the optimal value of the additive model in (6).
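With (nearly) constant fits h, the optimal step reduces to the harmonic mean of the previous variances, which is dominated by the smallest variances. A NumPy check with hypothetical values:

```python
import numpy as np


def optimal_step_mu(h, sigma2_prev):
    """Optimal step-length for mu: sum(h^2) / sum(h^2 / sigma^2)."""
    return np.sum(h ** 2) / np.sum(h ** 2 / sigma2_prev)


sigma2_prev = np.array([0.5, 1.0, 4.0, 9.0])     # previous-iteration variances
h = np.full(4, 1e-3)                            # (near-)constant small fits
nu_star = optimal_step_mu(h, sigma2_prev)
harmonic_mean = len(sigma2_prev) / np.sum(1.0 / sigma2_prev)
```

The result (about 1.19) is well below the arithmetic mean of the variances (3.625), so a few observations with small variance pull the step-length down.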

3.2.2 Optimal step-length for \(\varvec{\sigma }\)

The optimal step-length for the scale parameter \(\varvec{\sigma }\) can be obtained analogously by minimizing the empirical risk, now with respect to \(\nu _{j^*, \varvec{\sigma }}^{*[m]}\). We obtain
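\[ \nu _{j^*, \varvec{\sigma }}^{*[m]} = \underset{\nu }{\arg \min } \sum _{i=1}^{n} \left( \log {\hat{\sigma }}_i^{[m-1]} + \nu \, {\hat{h}}_{\varvec{\sigma }}^{[m]}(x_{ij^*}) + \frac{\left( y_i - {\hat{\mu }}_i^{[m-1]}\right) ^2}{2 {\hat{\sigma }}_i^{2[m-1]} \exp \left( 2 \nu \, {\hat{h}}_{\varvec{\sigma }}^{[m]}(x_{ij^*}) \right) } \right) . \tag{8} \]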
After checking the positivity of the second-order derivative of the expression in equation (8), the optimal value can be obtained by setting the first-order derivative equal to zero:
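\[ \sum _{i=1}^{n} {\hat{h}}_{\varvec{\sigma }}^{[m]}(x_{ij^*}) \left[ 1 - \left( {\hat{h}}_{\varvec{\sigma }}^{[m]}(x_{ij^*}) + \epsilon _{i, \varvec{\sigma }} + 1 \right) \exp \left( -2 \nu _{j^*, \varvec{\sigma }}^{*[m]} \, {\hat{h}}_{\varvec{\sigma }}^{[m]}(x_{ij^*}) \right) \right] = 0, \tag{9} \]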
where \(\epsilon _{i, \varvec{\sigma }}\) denotes the residuals when regressing the negative partial derivatives \({\varvec{u}}_{\varvec{\sigma }, i}^{[m]}\) on the base-learner \({\hat{h}}_{\varvec{\sigma }}^{[m]}(x_{ij^*})\), i.e., \(u_{\varvec{\sigma }, i} = {\hat{h}}_{\varvec{\sigma }}^{[m]}({\varvec{x}}_{i \cdot }) + \epsilon _{i, \varvec{\sigma }}\). Unfortunately, equation (9) cannot be simplified further, which means that there is no analytical ASL for the scale parameter \(\varvec{\sigma }\) in the Gaussian distribution. Hence, the optimal step-length must be found by a conventional line search. For more details, see also Appendix A.2.
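Since the optimal step-length for \(\sigma \) has no closed form, it has to be found numerically. A minimal sketch (NumPy; names and data hypothetical), assuming the risk for \(\sigma \) is the Gaussian negative log-likelihood with \(\log \sigma _i\) updated by \(\nu h_i\), so that (9) reads \(\sum _i h_i [1 - (h_i + \epsilon _i + 1)\exp (-2 \nu h_i)] = 0\) with \(u_{\sigma , i} = (y_i - \mu _i)^2/\sigma _i^2 - 1 = h_i + \epsilon _i\). Because the second derivative of the risk is positive, the left-hand side increases in \(\nu \); it is negative at \(\nu = 0\), so a bracketing bisection finds the root.

```python
import numpy as np


def risk_sigma(nu, y, mu_prev, log_sigma_prev, h):
    """Gaussian risk after updating log(sigma) by nu * h (constant dropped)."""
    log_sigma = log_sigma_prev + nu * h
    return np.sum(log_sigma + (y - mu_prev) ** 2 / (2 * np.exp(2 * log_sigma)))


def score_sigma(nu, h, eps):
    """Left-hand side of the first-order condition (derivative of the risk in nu)."""
    return np.sum(h * (1.0 - (h + eps + 1.0) * np.exp(-2.0 * nu * h)))


def optimal_step_sigma(h, eps, tol=1e-12):
    """Bisection for the root of score_sigma; the score is negative at nu = 0."""
    lo, hi = 0.0, 1.0
    while score_sigma(hi, h, eps) < 0:    # expand until the root is bracketed
        lo, hi = hi, 2 * hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if score_sigma(mid, h, eps) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


rng = np.random.default_rng(7)
n = 400
x = rng.uniform(-1, 1, n)
X = np.column_stack([np.ones(n), x])
y = np.exp(0.8 * x) * rng.normal(size=n)   # true mu = 0, log(sigma) = 0.8 x
mu_prev = np.zeros(n)
log_sigma_prev = np.zeros(n)               # underfitted scale predictor

u = (y - mu_prev) ** 2 / np.exp(2 * log_sigma_prev) - 1.0
h = X @ np.linalg.lstsq(X, u, rcond=None)[0]
eps = u - h                                # residuals of the base-learner fit
nu_sigma = optimal_step_sigma(h, eps)
```

Solving the first-order condition by bisection avoids choosing a search interval by hand, since the bracket is expanded automatically.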

Even without an analytical solution, we can still use (9) to further study the behavior of the ASL. Analogous to the derivation of (7), \({\hat{h}}_{\varvec{\sigma }}^{[m]}(x_{ij^*})\) converges to zero for \(m \rightarrow \infty \). Approximating it by a (small) constant, \({\hat{h}}_{\varvec{\sigma }}^{[m]}(x_{ij^*}) \approx h\) for all \(i \in \{1, \ldots , n\}\), (9) simplifies to
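\[ \nu _{j^*, \varvec{\sigma }}^{*[m]} \approx \frac{1}{2h} \log \left( 1 + h + \frac{1}{n}\sum _{i=1}^n \epsilon _{i, \varvec{\sigma }} \right) = \frac{\log (1 + h)}{2h}, \tag{10} \]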
where \(\frac{1}{n}\sum _{i=1}^n \epsilon _{i, \varvec{\sigma }} = 0\) in the regression model. Equation (10) can be further simplified by approximating the logarithm function with a Taylor series at \(h = 0\), thus
\[ \nu _{j^*, \varvec{\sigma }}^{*[m]} \approx \frac{h - h^2/2}{2h} = \frac{1}{2} - \frac{h}{4}. \]
As \(h \rightarrow 0\) for \(m \rightarrow \infty \), the limit of this approximate optimal step-length for \(\sigma \) is
\[ \lim _{h \rightarrow 0} \left( \frac{1}{2} - \frac{h}{4} \right) = \frac{1}{2}. \]
Thus, the ASL for \(\varvec{\sigma }\) approaches approximately 0.05 if we take the shrinkage parameter \(\lambda = 0.1\) and the iterations run long enough, i.e. provided the boosting algorithm is not stopped too early (e.g. to prevent overfitting) for this trend to show.
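The limiting behavior can be checked directly. Assuming the constant-h approximation \(\nu ^* \approx \log (1 + h)/(2h)\) and its first-order Taylor expansion \(1/2 - h/4\), a few lines of plain Python show both approaching 1/2, so that \(\lambda \nu ^*\) approaches 0.05 for \(\lambda = 0.1\). The function name is hypothetical.

```python
import math


def approx_opt_step_sigma(h):
    """Constant-h approximation of the optimal step for sigma: log(1 + h) / (2h)."""
    return math.log1p(h) / (2.0 * h)


lam = 0.1
for h in (0.5, 0.1, 0.01, 1e-4):
    exact = approx_opt_step_sigma(h)
    taylor = 0.5 - h / 4.0                 # first-order Taylor approximation
    print(f"h={h:g}  log-form={exact:.6f}  taylor={taylor:.6f}  ASL={lam * exact:.6f}")
```

`math.log1p` is used instead of `math.log(1 + h)` to keep the computation accurate for very small h.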

3.3 (Semi-)Analytical adaptive step-length

Knowing the properties of the analytical ASL in boosting GAMLSS for the Gaussian distribution, we can replace the line search with the analytical solution for the location parameter \(\varvec{\mu }\). If we keep the line search for the scale parameter \(\varvec{\sigma }\), we call this the Semi-Analytical Adaptive Step-Length (SAASL). Moreover, we are interested in the performance of combining the analytical ASL for \(\varvec{\mu }\) with the approximate value \(0.05 = \lambda \cdot \frac{1}{2}\) (with \(\lambda = 0.1\)) for the ASL for \(\varvec{\sigma }\), which is motivated by the limiting considerations discussed above and has a better theoretical foundation than selecting an arbitrary small value in the common FSL. We call this step-length setup SAASL05. In either of these cases, it is straightforward and computationally efficient to obtain the (approximate) optimal value(s) and both alternatives are faster than performing two line searches.

The semi-analytical solution avoids the need for selecting a search interval for the line search, at least for the ASL for \(\varvec{\mu }\) in the case of SAASL and for both parameters for SAASL05. This is an advantage, since too large search intervals will cause additional computing time, but too small intervals may miss the optimal ASL value and again lead to an imbalance of updates between the parameters. Also note that the value 0.5 gives an indication for a reasonable range for the search interval for \(\nu _{j^*, \varvec{\sigma }}^{*[m]}\) if a line search is conducted after all.

The boosting GAMLSS algorithm with ASL for the Gaussian distribution is shown in Algorithm 3.

For a chosen shrinkage parameter of \(\lambda = 0.1\), the \(\nu _{\varvec{\sigma }}\) in SAASL05 is 0.05, which is a smaller or “less aggressive” value than the 0.1 used in FSL. This leads to a somewhat larger number of boosting iterations, but also to a smaller risk of overfitting and a better balance with the ASL for \(\varvec{\mu }\).
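Algorithm 3 itself is not reproduced in this section. As a rough illustration of how the SAASL05 step-lengths enter a non-cyclical boosting iteration, the following Python sketch makes several simplifying assumptions of our own: component-wise simple linear base-learners (intercept and slope) on centred covariates, the sample mean and log standard deviation of y as offsets, the analytical optimal step for \(\varvec{\mu }\) shrunk by \(\lambda = 0.1\), the fixed value 0.05 for \(\varvec{\sigma }\), and, in each iteration, selection of the distribution parameter whose candidate update gives the lower negative log-likelihood. The stopping iteration is fixed rather than tuned by cross-validation, and all names are hypothetical; this is not the code of the ASL package.

```python
import numpy as np


def nll(y, eta_mu, eta_sigma):
    # Gaussian negative log-likelihood (constants dropped), log link for sigma
    return np.sum(eta_sigma + 0.5 * (y - eta_mu) ** 2 * np.exp(-2.0 * eta_sigma))


def best_base_learner(X, u):
    # Component-wise simple linear base-learners (intercept + slope) on centred X;
    # returns index, intercept and slope of the least-squares fit with smallest RSS
    u_bar = u.mean()
    slopes = X.T @ (u - u_bar) / np.sum(X**2, axis=0)
    rss = [np.sum((u - u_bar - b * X[:, j]) ** 2) for j, b in enumerate(slopes)]
    j = int(np.argmin(rss))
    return j, u_bar, slopes[j]


def boost_saasl05(X, y, m_stop=500, lam=0.1, nu_sigma=0.05):
    X = X - X.mean(axis=0)                       # centre the covariates
    p = X.shape[1]
    int_mu, int_sg = y.mean(), np.log(y.std())   # offsets
    beta_mu, beta_sg = np.zeros(p), np.zeros(p)
    selected, losses = [], []
    for _ in range(m_stop):
        eta_mu, eta_sg = int_mu + X @ beta_mu, int_sg + X @ beta_sg
        sigma2 = np.exp(2.0 * eta_sg)
        losses.append(nll(y, eta_mu, eta_sg))
        # Negative gradients with respect to eta_mu and eta_sigma
        u_mu = (y - eta_mu) / sigma2
        u_sg = (y - eta_mu) ** 2 / sigma2 - 1.0
        j_mu, a_mu, b_mu = best_base_learner(X, u_mu)
        j_sg, a_sg, b_sg = best_base_learner(X, u_sg)
        h_mu = a_mu + b_mu * X[:, j_mu]
        h_sg = a_sg + b_sg * X[:, j_sg]
        # Analytical optimal step for mu, shrunk by lam; fixed 0.05 for sigma
        nu_mu = lam * np.sum((y - eta_mu) * h_mu / sigma2) / np.sum(h_mu**2 / sigma2)
        # Non-cyclical choice: keep the candidate update with the lower loss
        if nll(y, eta_mu + nu_mu * h_mu, eta_sg) <= nll(y, eta_mu, eta_sg + nu_sigma * h_sg):
            int_mu += nu_mu * a_mu
            beta_mu[j_mu] += nu_mu * b_mu
            selected.append("mu")
        else:
            int_sg += nu_sigma * a_sg
            beta_sg[j_sg] += nu_sigma * b_sg
            selected.append("sigma")
    losses.append(nll(y, int_mu + X @ beta_mu, int_sg + X @ beta_sg))
    return (int_mu, beta_mu), (int_sg, beta_sg), selected, losses


# Hypothetical data: columns 1 and 2 act on mu, column 3 on sigma, column 4 is noise
rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, size=(500, 4))
y = rng.normal(1.0 + 2.0 * X[:, 0] - X[:, 1], np.exp(0.5 + 0.5 * X[:, 2]))
(mu_int, mu_beta), (sg_int, sg_beta), selected, losses = boost_saasl05(X, y)
print(np.round(mu_beta, 2), np.round(sg_beta, 2), selected.count("mu"))
```

Replacing the fixed 0.05 by a line search over \(\nu _{\varvec{\sigma }}\) would turn this sketch into the SAASL variant.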

4 Simulation study

In the following, two simulations demonstrate the performance of the adaptive algorithms. The first one compares the estimation accuracy of the different non-cyclical boosted GAMLSS algorithms with FSL or ASL in a Gaussian regression model for location and scale. The second one highlights the problem of FSL, and the performance of the adaptive approaches, when the variance is large.

4.1 Gaussian location and scale model

The simulation study in Thomas et al. (2018) showed that their FSL non-cyclical approach outperforms the classical cyclical approach. We use the same setup to show that the ASL approach performs at least as well as the FSL non-cyclical approach (and hence also outperforms the classical cyclical approach). At the end of this subsection we will show that the good performance of FSL is due to the structure of the simulated data. The setup is the following: the response \(y_i\) is drawn from \(N(\mu _i, \sigma _i)\) for \(n=500\) observations, with 6 informative covariates \(x_{ij}, j \in \{1, \ldots , 6\}\) drawn independently from \(\text {Uni}(-1, 1)\). The predictors of both distribution parameters are:

where \(x_3\) and \(x_4\) are shared between both \(\mu \) and \(\sigma \). Moreover, \(p_{\text {n-inf}} = 0, 50, 250 \text { or } 500\) non-informative variables sampled from \(\text {Uni}(-1, 1)\) are also added to the model. We conduct \(B = 100\) simulation runs.

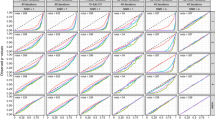

The estimated coefficients of \(\eta _{\varvec{\mu }}\) and \(\eta _{\varvec{\sigma }}\), whose values are taken at stopping iterations tuned by 10-fold CV with the maximum number of boosting iterations set to 1000, are shown in Appendix Figs. 8 and 9.

Overall, the estimated coefficients are similar between all four methods, with the shrinkage bias of boosting only becoming apparent with an increasing number of noise variables.

Figure 1 shows the comparison of the mean squared error (MSE) among the non-cyclical boosted algorithms for \(\varvec{\mu }\) and \(\varvec{\sigma }\), where the MSEs are defined on the predictor level as \(\text {MSE}_{\varvec{\mu }} = \frac{1}{n}\sum _{i=1}^n(\mu _i - \eta _{\varvec{\mu }}({\varvec{x}}_{i \cdot }))^2\) and \(\text {MSE}_{\varvec{\sigma }} = \frac{1}{n}\sum _{i=1}^n(\log (\sigma _i) - \eta _{\varvec{\sigma }}({\varvec{x}}_{i \cdot }))^2\), respectively. In general, all methods have a similar MSE. However, the MSE of FSL increases more strongly with the number of non-informative variables \(p_{\text {n-inf}}\), so that the ASL methods slightly outperform FSL for the variance predictor when the number of non-informative variables is high. ASL and SAASL show identical results, as they should if the line search is conducted correctly, and the results of SAASL05 are very similar.
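The two predictor-level MSEs defined above can be computed as in the following minimal Python sketch (the function name is hypothetical; the fitted predictors are assumed to be evaluated at the observed covariates):

```python
import numpy as np


def predictor_mse(mu_true, sigma_true, eta_mu_hat, eta_sigma_hat):
    """Predictor-level MSEs: the location predictor is compared with mu_i
    (identity link), the scale predictor with log(sigma_i) (log link)."""
    mse_mu = np.mean((np.asarray(mu_true) - eta_mu_hat) ** 2)
    mse_sigma = np.mean((np.log(sigma_true) - eta_sigma_hat) ** 2)
    return mse_mu, mse_sigma


# Toy values: each predictor is off by 1 for one of three observations
mu_true = np.array([1.0, 2.0, 3.0])
sigma_true = np.exp([0.0, 1.0, 2.0])
print(predictor_mse(mu_true, sigma_true, np.array([1.0, 2.0, 4.0]), np.array([0.0, 1.0, 1.0])))
```

Comparing \(\eta _{\varvec{\sigma }}\) with \(\log (\sigma _i)\) rather than with \(\sigma _i\) keeps both MSEs on the scale of the respective additive predictor.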

The in-sample negative log-likelihood of the model fits reveals a slight advantage for FSL (see Appendix Fig. 10). However, this can be linked to the fact that FSL selects more false positive variables on average than the adaptive approaches and thus shows a stronger tendency to overfit the training data (Fig. 2).

Figure 2 illustrates the false positives of each method for each parameter. For \(\varvec{\sigma }\), even if \(p_{\text {n-inf}}\) is small, the false positive rates of the adaptive approaches are notably smaller than those of FSL. As discussed above, \(\nu _{j^*, \varvec{\sigma }}^{[m]} \approx 0.05\) for large m in the adaptive approach, which is smaller than \(\nu _{\varvec{\sigma }} = 0.1\) for FSL. An update with a smaller, more conservative step-length can apparently help to avoid overfitting, and the adaptive step-length here seems to strike a balance between learning speed and the number of false positives. While it would also be possible to lower the step-length for FSL to reduce the number of non-informative variables included in the final model, this would increase the number of boosting iterations and the computing time, and it would not address the imbalance between updates for \(\varvec{\mu }\) and \(\varvec{\sigma }\). Moreover, the optimal choice of the step-length is difficult without further tuning or an automatic selection as in ASL.

With respect to omitting truly informative variables, i.e. false negatives, all four methods find and select all variables for \(\varvec{\mu }\) in all simulation runs. Regarding \(\varvec{\sigma }\), the risk of false negatives slightly increases with the number of noise variables. However, even with 500 noise variables, a single false negative is observed in only 3% to 6% of the runs, independently of the algorithm in question.

To some extent, the low false negative rate could be expected given the somewhat greedy nature of boosting algorithms. For this reason, performance in terms of false positive selections is arguably the more important aspect, and it speaks in favor of the adaptive updates.

In Fig. 3 we show an example comparing the optimal step-lengths in this setting. As can be seen, the step-lengths for \(\varvec{\sigma }\) (depicted in grey) converge to 0.5, as shown in Sect. 3.2.2. Second, the figure shows that the optimal step-lengths for the two predictors do not differ much. Even though differences can be observed, in particular in early iterations, the step-lengths have the same order of magnitude. This holds not only for this example but overall in this simulation setup. With this in mind, the similar results of FSL and ASL are not surprising: the two approaches hardly differ here, since the updates do not need different step-lengths to be balanced. In the next subsection we examine a case in which the data call for different step-lengths, and see how both methods perform under those changed circumstances.

Comparison of the optimal step-lengths \(\nu _{j^*, \varvec{\mu }}^{*[m]}\) and \(\nu _{j^*, \varvec{\sigma }}^{*[m]}\) in SAASL from one of the 100 simulation runs. The step-lengths for \(\mu \) are shown as black dots, the step-lengths for \(\sigma \) as grey crosses. Different horizontal layers of dots/crosses correspond to different covariates

4.2 Large variance with resulting imbalance between location and scale

As discussed above, the Gaussian location and scale model in Sect. 4.1 did not lead to a large difference between FSL and ASL, as the optimal step-lengths for \(\varvec{\mu }\) and \(\varvec{\sigma }\) were roughly similar and the imbalance between the updates for the two predictors in FSL was thus not large. In this section, we investigate a setting with a large variance, which leads to a stronger imbalance between the two parts of the model.

In the following, we use SAASL as a representative of the adaptive approaches in our presentation, as it yields identical results to ASL, but avoids the numerical search for the optimal \(\nu _{\varvec{\mu }}\) by using the analytical result (5). Since estimated effects generally deviated more strongly from the theoretical values than before due to the large variance (details will be discussed later), we additionally compared the results to those obtained using GAMLSS with penalized maximum likelihood estimation as implemented in the R-package gamlss (Rigby and Stasinopoulos 2005).

Consider the data generating mechanism \(y_i \sim N(\mu _i, \sigma _i), i \in \{1, \ldots , 500\}\) with \(B = 100\) simulation runs. The predictors are determined by

where \({\varvec{x}}_{\cdot j} \sim \text {Uni}(-1, 1), j \in \{1, 2, 3, 4, 5\}\), and \({\varvec{x}}_{\cdot 4}\) and \({\varvec{x}}_{\cdot 5}\) are noise variables. The choice of \(\eta _{\varvec{\sigma }}\) leads to an extremely large standard deviation of the order of 150 due to the large intercept 5 (\(\exp (5) \approx 148\)). The stopping iteration is obtained by 10-fold CV, and the maximum number of iterations is 3000 for SAASL and 2,000,000 for FSL. The main goal of this simulation setting is to highlight the imbalance problem of FSL when the scale parameter is large. Since many noise variables would make it difficult to demonstrate the differences between FSL and the adaptive approaches, we include only two noise variables in this example for illustration.

As can be seen in Fig. 4, both fixed and adaptive step-lengths yield reasonable estimates for \(\eta _{\varvec{\sigma }}\), but FSL yields estimates equal to zero (i.e. false negatives) for \(\eta _{\varvec{\mu }}\) in the majority of the simulation runs. This is connected to the relative importance of the variance component in this setting, which should in itself already lead to a preference for updating \(\eta _{\varvec{\sigma }}\) rather than \(\eta _{\varvec{\mu }}\) in early boosting iterations: the negative gradient for \(\varvec{\mu }\), \(u_{\varvec{\mu }, i} = (y_i - \mu _i)/\sigma _i^2\), is scaled by the inverse of the large variance \(\sigma _i^2\) (recall the large intercept 5, the log-link and the resulting exponential transformation) and is hence very small. As a consequence, the impact on the global loss of the base-learners fitted to this gradient is also small compared to that of the updates suggested for \(\sigma \) in step 11 of Algorithm 1. Using the same step-length for both parameters then makes it clearly harder to identify informative effects on \(\mu \), as they appear negligible in comparison.
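A rough numerical illustration of this argument, using hypothetical data rather than the predictors of this simulation: a mean effect of \(10 x\) and a scale predictor with intercept 5 on the log scale. Starting both predictors from their offsets, the Python sketch below compares the average magnitude of the two negative gradients and the reduction of the negative log-likelihood achieved by the fixed step of 0.1 used in FSL along the least-squares fit of each gradient. All names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 500
x = rng.uniform(-1.0, 1.0, n)
# Hypothetical data: mean effect 10 * x, log-scale predictor 5 + 0.5 * x
y = rng.normal(10.0 * x, np.exp(5.0 + 0.5 * x))


def nll(eta_mu, eta_sigma):
    # Gaussian negative log-likelihood (constants dropped), log link for sigma
    return np.sum(eta_sigma + 0.5 * (y - eta_mu) ** 2 * np.exp(-2.0 * eta_sigma))


def ls_fit(u):
    # Simple linear base-learner (intercept + slope) fitted by least squares
    design = np.column_stack([np.ones(n), x])
    coef, *_ = np.linalg.lstsq(design, u, rcond=None)
    return design @ coef


# Both predictors start at their offsets
eta_mu0 = np.full(n, y.mean())
eta_sigma0 = np.full(n, np.log(y.std()))
sigma2 = np.exp(2.0 * eta_sigma0)
u_mu = (y - eta_mu0) / sigma2                 # tiny: divided by a huge variance
u_sigma = (y - eta_mu0) ** 2 / sigma2 - 1.0   # of order one
base = nll(eta_mu0, eta_sigma0)
gain_mu = base - nll(eta_mu0 + 0.1 * ls_fit(u_mu), eta_sigma0)
gain_sigma = base - nll(eta_mu0, eta_sigma0 + 0.1 * ls_fit(u_sigma))
print(np.abs(u_mu).mean(), np.abs(u_sigma).mean(), gain_mu, gain_sigma)
```

In such a setting the fixed-step update for \(\mu \) barely changes the loss, so a non-cyclical algorithm that picks the larger loss reduction keeps choosing \(\sigma \).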

The adaptive step-lengths implemented in SAASL compensate for this disadvantage. Compared to the simulation results in the previous subsection, the estimates for \(\eta _{\varvec{\mu }}\) are less precise, with large variability around the true values. This is not a problem of SAASL but again a consequence of the large variance, which obscures the effects on the mean; it is also encountered with the penalized maximum likelihood approach implemented in the gamlss package (called GAMLSS in Fig. 4). The variability of the estimates is actually somewhat smaller than for GAMLSS due to the regularization inherent in the boosting approach. This is also illustrated in Fig. 5 by the pairwise comparison of the estimated coefficients for both methods, where SAASL yields estimates that are similar to, but slightly closer to zero than, those of the penalized maximum likelihood based method GAMLSS.

Interestingly, Fig. 4 also reveals that the inability to identify the informative variables results in the lowest MSE for all three individual coefficients for \(\varvec{\mu }\) when using FSL (for more numerical details, see Appendix C). As can be seen from Table 1, however, the combined additive predictor performs worse in terms of overall MSE than both GAMLSS and SAASL, with the latter performing best.

To further highlight the differences in the selection behavior between FSL and SAASL, Fig. 6 illustrates the proportion of boosting iterations used to update \(\varvec{\mu }\) over the course of the model fits, i.e. \(p_{m_{\varvec{\mu }}} = m_{\varvec{\mu }} / (m_{\varvec{\mu }} + m_{\varvec{\sigma }})\), where \(m_{\varvec{\mu }} + m_{\varvec{\sigma }} = m_{\text {stop}}\). The bimodal distribution for FSL in the histogram in panel (a) demonstrates another problem of fixed step-lengths in this setting. Considering the many estimates equal or close to zero in Fig. 4, the mode close to \(p_{m_{\varvec{\mu }}} = 0\) is expected, as it corresponds to the simulation runs in which \(\mu \) has not been updated at all. However, as soon as at least one base-learner for \(\varvec{\mu }\) is selected, the small step-length fixed at 0.1 requires a huge number of updates for the base-learner to actually make an impact on the global loss (hence the large maximum number of iterations allowed for FSL). This results in a second mode around \(p_{m_{\varvec{\mu }}} = 1\), as the algorithm is mainly occupied with \(\mu \) in the corresponding runs.

This is illustrated by the scatter plot in Fig. 6b, where \(p_{m_{\varvec{\mu }}}\) is plotted against the stopping iteration \(m_{\text {stop}}\). Note that the y-axis is displayed on a logarithmic scale, so that each tick represents a tenfold increase over the previous one. The few FSL points with \(m_{\text {stop}}\) between \(10^2\) and \(10^3\) lie in the middle region of \(p_{m_{\varvec{\mu }}}\), i.e. they show a better balance between the updates of \(\varvec{\mu }\) and \(\varvec{\sigma }\) than the other points. The bimodal distribution for FSL is visible here as well: many points are equal or close to \(p_{m_\mu } = 0\) and 1, with very low and extremely large values of \(m_{\text {stop}}\), respectively.

In contrast, for SAASL we observe a unimodal distribution of \(p_{m_{\varvec{\mu }}}\) in Fig. 6a. The mode below 0.5 indicates that SAASL updates \(\varvec{\sigma }\) slightly more frequently than \(\varvec{\mu }\). Unlike the cyclical approach, which enforces an equal number of updates for all distribution parameters, the balance formed by SAASL is more natural. This balance enables alternating updates of the two predictors even though they lie on different scales. Therefore, the information in \(\varvec{\mu }\) can be discovered in time, which reduces the risk of overlooking informative base-learners for \(\varvec{\mu }\). The number of simulation runs in which \(\varvec{\mu }\) is not updated at all (\(p_{m_{\varvec{\mu }}} = 0\)) decreases from 39 with FSL to only 5 with SAASL. Moreover, none of the 100 simulation runs requires an excessive number of updates for \(\varvec{\mu }\) to obtain well-estimated coefficients (cf. also Fig. 4).
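The proportion \(p_{m_{\varvec{\mu }}}\) only requires a record of which distribution parameter was updated in each iteration. A minimal Python sketch, with a hypothetical function name and hypothetical selection traces:

```python
from collections import Counter


def proportion_mu_updates(selected):
    """p_{m_mu} = m_mu / (m_mu + m_sigma), where `selected` records which
    distribution parameter was updated in each of the m_stop iterations."""
    counts = Counter(selected)
    m_mu, m_sigma = counts["mu"], counts["sigma"]
    return m_mu / (m_mu + m_sigma)


# Hypothetical selection traces with m_stop = 6
balanced = ["sigma", "mu", "sigma", "mu", "sigma", "sigma"]
mu_never = ["sigma"] * 6   # mu never updated, as in the mode at 0 for FSL
print(proportion_mu_updates(balanced), proportion_mu_updates(mu_never))
```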

Distribution of \(p_{m_{\varvec{\mu }}}\) in \(B = 100\) simulation runs. (a) Histogram of \(p_{m_{\varvec{\mu }}}\). The histograms of the two approaches are overlaid using transparency. (b) Scatter plot of \(m_{\text {stop}}\) against \(p_{m_{\varvec{\mu }}}\). Points and crosses are displayed with transparency. The y-axis is displayed on a logarithmic scale with base 10. Each tick represents a tenfold increase over the previous one

Table 2 displays the false positives and false negatives of the two approaches in all 100 simulations with respect to \(\varvec{\mu }\) and \(\varvec{\sigma }\). For example, the second and fourth numbers in the first row, 77 and 21, indicate that the informative variable \({\varvec{x}}_{\cdot 2}\) is not included in the final model in 77 out of 100 simulation runs (i.e. false negative), while the final model contains the non-informative variable \({\varvec{x}}_{\cdot 4}\) in 21 simulation runs (i.e. false positive). Similar to Fig. 2 in Sect. 4.1, the conservative small step-length for \(\varvec{\mu }\) in FSL increases the number of boosting iterations, but reduces the risk of overfitting. The smaller number of simulation runs that include noise variables for \(\varvec{\mu }\) with FSL than with SAASL confirms this behavior. According to Eq. (11), the ASLs \(\nu _{j^*, \varvec{\sigma }}\) are a sequence of values around 0.05, most of which (except for the values at early boosting iterations) are smaller than 0.1. Correspondingly, slightly more simulation runs overfit the \(\varvec{\sigma }\)-submodel with FSL than with SAASL.

Although non-informative variables for \(\varvec{\mu }\) are excluded from the FSL model, the informative ones are excluded as well. In fact, \(\varvec{\mu }\) was not updated at all in many simulation runs (cf. Fig. 6a). The false negative part of Table 2 for \(\varvec{\mu }\) confirms this: the informative variables \({\varvec{x}}_{\cdot 1}\) to \({\varvec{x}}_{\cdot 3}\) are excluded from the final model in the majority of simulation runs with FSL, but not with SAASL. For \(\varvec{\sigma }\), the smaller step-length \(\nu _{j^*, \varvec{\sigma }}\) in SAASL selects variables more conservatively; as a consequence, slightly more simulation runs underfit the \(\varvec{\sigma }\)-submodel with SAASL than with FSL, but the difference is far less pronounced.

5 Applications

We apply the proposed algorithms to two datasets. The malnutrition dataset demonstrates the shortcomings of FSL and the pitfalls of using numerical determination of ASL with a fixed search interval, and with the riboflavin dataset we illustrate the variable selection properties of each algorithm.

5.1 Malnutrition of children in India

The first dataset, india, from the R package gamboostLSS (Hofner et al. 2018; Fahrmeir and Kneib 2011) is a sample from the Standard Demographic and Health Survey between 1998 and 1999 on malnutrition of children in India (Fahrmeir and Kneib 2011). The sample contains 4000 observations and four variables: BMI of the child (cBMI), age of the child in months (cAge), BMI of the mother (mBMI) and age of the mother in years (mAge). The outcome of interest is a numeric z-score for malnutrition ranging from -6 to 6, where negative values represent malnourished children. To highlight the problems of using a fixed step-length, we work with the original variable stunting (corresponding to 100 × z-score). The identity and logarithm functions are used as the link functions for \(\varvec{\mu }\) and \(\varvec{\sigma }\), respectively.

Because this is not a high-dimensional data example, we use the GAMLSS with penalized maximum-likelihood estimation as a gold standard to examine the effectiveness of the adaptive approaches.

Table 3 lists the estimated coefficients of each variable in the predictors \(\eta _{\varvec{\mu }}\) and \(\eta _{\varvec{\sigma }}\) at the stopping iteration tuned by 10-fold CV, where the maximum number of iterations is set to 2000. The estimated intercept in \(\eta _{\varvec{\sigma }}\) indicates a large variance of the response, so the setting is similar to the second simulation above. It is therefore not surprising that FSL selects only one variable (cAge) for \(\eta _{\varvec{\mu }}\): a large number of updates of the base-learners would be required, but the given maximum number of boosting iterations is not large enough. In practice, we could increase the maximum number of iterations as well as enlarge the commonly applied step-length 0.1 in order to estimate the coefficients well. However, these choices are very subjective and likely to result in tedious manual fine-tuning based on trial and error.

The ASL method with the default predefined search interval [0, 10] encounters a similar problem to FSL. Apart from the only selected (and underfitted) variable cAge for \(\varvec{\mu }\), the two variables (cBMI and cAge) for the \(\varvec{\sigma }\)-submodel are also underfitted compared with the results from the gold standard GAMLSS. The reason for this phenomenon lies in the relationship between the variance and the step-length discussed in Eq. (5). Because of the log-link, i.e. the exponential transformation, for \(\eta _{\varvec{\sigma }}\), the large variance in this example requires a sequence of huge step-lengths for \(\varvec{\mu }\), but the default search interval does not contain them.

Re-estimating ASL with the search interval enlarged to [0, 50000], denoted as ASL5 in Table 3, yields coefficients comparable to those of GAMLSS. However, choosing a suitable search interval becomes an unavoidable side task for ASL when analyzing this kind of dataset.
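The pitfall of a fixed search interval can be reproduced in a toy setting. The Python sketch below is our own illustration: it uses a plain golden-section search as a simple stand-in for a bounded one-dimensional optimizer (the ASL implementation may use a different method), and hypothetical data with a constant scale \(\sigma = 150\), for which the optimal step for \(\varvec{\mu }\) along the least-squares fit of the negative gradient equals \(\sigma ^2 = 22500\). A search in [0, 10] can then only return (approximately) its upper bound, whereas the enlarged interval [0, 50000] recovers the optimum.

```python
import math

import numpy as np


def golden_section(f, lower, upper, tol=1e-6):
    """Minimize a unimodal function f on [lower, upper] by golden-section search."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lower, upper
    while abs(b - a) > tol * max(1.0, abs(a) + abs(b)):
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if f(c) < f(d):
            b = d   # minimum lies in [a, d]
        else:
            a = c   # minimum lies in [c, b]
    return (a + b) / 2.0


# Hypothetical data with a large, constant scale sigma = 150
rng = np.random.default_rng(3)
n = 300
x = rng.uniform(-1.0, 1.0, n)
sigma = np.full(n, 150.0)
y = 20.0 * x + rng.normal(0.0, 150.0, n)
mu = np.full(n, y.mean())                 # current location fit (offset)
u = (y - mu) / sigma**2                   # negative gradient for mu
design = np.column_stack([np.ones(n), x])
h = design @ np.linalg.lstsq(design, u, rcond=None)[0]


def mu_loss(nu):
    # Gaussian negative log-likelihood in nu (constants dropped)
    return np.sum((y - mu - nu * h) ** 2 / (2.0 * sigma**2))


nu_default = golden_section(mu_loss, 0.0, 10.0)      # optimum outside: hits the bound
nu_enlarged = golden_section(mu_loss, 0.0, 50000.0)  # optimum sigma**2 = 22500 inside
print(nu_default, nu_enlarged)
```

Since the loss is decreasing on the whole of [0, 10], the bounded search silently returns the boundary instead of signalling that the interval is too small.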

The results of the two semi-analytical approaches hardly differ from those of the maximum likelihood based GAMLSS. Unlike the numerical determination with a fixed search interval in ASL, the analytical approaches replace this procedure with a direct and precise solution that removes the need for manual intervention (e.g. increasing the search interval). In contrast to the direct influence of the variance on \(\nu _{j^*, \varvec{\mu }}^{*[m]}\) in Eq. (5), the optimal step-length \(\nu _{j^*, \varvec{\sigma }}^{*[m]}\) is dominated by the chosen base-learner; as the number of boosting iterations increases, this effect gradually disappears and \(\nu _{j^*, \varvec{\sigma }}^{*[m]}\) finally converges to 0.5. Thus, our default search interval [0, 1] is sufficient for \(\nu _{j^*, \varvec{\sigma }}^{*[m]}\), and enlarging the search interval, as was needed for \(\nu _{j^*, \varvec{\mu }}^{*[m]}\) in ASL, is almost never necessary.

Theoretically, ASL with a sufficiently large search interval (ASL5 in this example) and SAASL should yield the same values, as discussed in the theoretical section. Due to finite numerical precision and the tolerance of the numerical optimization, their outputs are very similar but can differ slightly for the malnutrition data.

Figure 7 presents the optimal step-lengths \(\nu _{j^*, \varvec{\mu }}^{*[m]}\) and \(\nu _{j^*, \varvec{\sigma }}^{*[m]}\) using SAASL for each variable up to the 769 boosting iterations specified by 10-fold CV for one simulation run. The optimal step-lengths for \(\varvec{\mu }\) exceed 20000 over the entire learning process, which is far larger than the fixed step-length 0.1 and the upper boundary 10 of the predefined search interval in ASL. Without this information, it is not trivial to determine the search interval for \(\nu _{j^*, \varvec{\mu }}^{*[m]}\). We therefore (after obtaining this graphic) re-estimated the model for the example data with ASL5.

Additionally, Fig. 7b illustrates the optimal step-length for \(\varvec{\sigma }\). After several boosting iterations, the optimal values for each covariate converge to their own stable regions (ranging from about 0.38 to 0.56). As discussed above, the optimal step-lengths for \(\varvec{\sigma }\) should be around 0.5, which this graphic confirms.

As this example is not high-dimensional and does not necessarily require variable selection, we can use GAMLSS with penalized maximum likelihood estimation for comparison. The fact that its results are very similar to those of the semi-analytical approaches indicates that the results from SAASL and SAASL05 are reliable. The only alternative to achieve balance between predictors would be using a cyclical algorithm (with the downsides discussed in the introduction). Rescaling the response variable or standardizing the negative partial derivatives could reduce the scaling problem to some extent, but would not eliminate the need to increase the step-length or to reduce the imbalance between predictors.

5.2 Riboflavin dataset

This data set describes the riboflavin (also known as vitamin \(B_2\)) production by Bacillus subtilis, containing 71 observations and 4088 predictors (gene expressions) (Bühlmann et al. 2014; Dezeure et al. 2015). The log-transformed riboflavin production rate, which is close to a Gaussian distribution, is regarded as the response. This data set is chosen to demonstrate the capability of the boosting algorithm to deal with situations in which the number of covariates exceeds the number of observations. Please note that a comparison to the original GAMLSS algorithm is not possible in this case, since the algorithm is not able to deal with more model parameters than available observations. In order to compare the out-of-sample MSE of each algorithm, we select 10 observations randomly as the validation set.

Table 4 summarizes the selected informative variables for \(\varvec{\mu }\) and \(\varvec{\sigma }\) separately at the stopping iteration tuned by 5-fold CV; the corresponding coefficients are listed in Appendix D. The results in both tables show the intersections of the selected variables: for example, FSL selects 13 informative variables in total, 9 of which are also chosen by ASL and SAASL, and 11 of which are also chosen by SAASL05. In general, for both \(\varvec{\mu }\) and \(\varvec{\sigma }\), more variables are included by the adaptive approaches, and the difference in the selected variables mainly lies between the adaptive and the fixed approach. Because the optimal step-length \(\nu _{j^*, \varvec{\mu }}^{*[m]}\) lies within the predefined search interval [0, 10] (and is actually smaller than 1, i.e. the adaptive step-length \(\nu _{j^*, \varvec{\mu }}^{[m]} < 0.1\)), and \(\nu _{j^*, \varvec{\sigma }}^{*[m]}\) also lies within the narrower predefined search interval [0, 1], ASL and SAASL give the same results. Moreover, as the adaptive step-length is smaller than the fixed step-length 0.1, the adaptive approaches make conservative (small) updates, leading to more boosting iterations. Several of the gene expressions for \(\varvec{\mu }\) and \(\varvec{\sigma }\) are selected by all algorithms and are thus consistently included in the set of informative covariates. In fact, almost all gene expressions chosen by FSL are also recognized as informative by all other methods.

To compare the performance of the algorithms, Table 5 lists the out-of-sample MSE. In contrast to the fixed approach, the three adaptive approaches generally perform well, with SAASL05 performing slightly worse than the other two. In addition, Table 5 reports the result of the Lasso estimator from the R package glmnet (Friedman et al. 2010), as suggested by Bühlmann et al. (2014). The mean squared prediction error of glmnet is the smallest among the five approaches, but the difference to the adaptive approaches is relatively small.

As glmnet cannot model the scale parameter \(\varvec{\sigma }\), only the estimated coefficients of the \(\varvec{\mu }\)-submodel are provided in Appendix D. Of the 21 genes selected by glmnet, 7 and 9 are shared with ASL/SAASL and SAASL05, respectively. The signs (positive/negative) of the glmnet coefficient estimates for these common covariates match those of the adaptive approaches. This comparison indicates that boosted GAMLSS with adaptive step-length is an applicable and competitive approach for high-dimensional data analysis.

6 Conclusions and outlook

The step-length is often not treated as an important tuning parameter in boosting algorithms, as long as it is set to a small value. However, as we show in this paper, if complex models like GAMLSS with several predictors for the different distribution parameters are estimated, the different scales of the distribution parameters can lead to imbalanced updates, and consequently poor performance, if one common small fixed step-length is used.

The main contribution of this article is the proposal to use separate adaptive step-lengths for each distribution parameter in a non-cyclical boosting algorithm for GAMLSS. In addition to the resulting balance in updates between different distribution parameters, a balance between over- and underfitting is obtained by taking only a proportion (shrinkage parameter) such as 10% of the determined optimal step-length as the adaptive step-length. The optimal step-length can be found by optimization procedures such as a line search. We illustrated with an example the importance of enlarging the search interval, if necessary, to find the optimal solution.

For the Gaussian location and scale models, we derived an analytical solution for the adaptive step-length for the mean parameter \(\varvec{\mu }\), which avoids numerical optimization and specification of a search interval. For the scale parameter \(\varvec{\sigma }\), we obtained an approximate solution of 0.5 (or 0.05 with 10% proportion), which gives a better motivated default value than 0.1 relative to the step-length for \(\varvec{\mu }\), and discussed a combination with a one-dimensional line search in the semi-analytical approach.

In simulations and empirical applications, we showed favorable behavior compared to using a fixed step-length (FSL). We showed highly competitive results of our adaptive approaches compared to a standard GAMLSS with respect to estimation accuracy in the low-dimensional case, while the adaptive boosting approach has the advantages of shrinkage and variable selection, which make it also applicable to the high-dimensional case of more covariates than observations. Overall, the semi-analytical method for adaptive step-length selection performed best among the considered methods.

In this paper we focused on Gaussian location and scale models to derive analytical or semi-analytical solutions for the optimal step-length, but for most other distributions a line search has to be conducted for all distribution parameters. In the future, it is worth investigating analytical adaptive step-lengths for other distributions where possible, because analytical or approximate adaptive step-lengths increase the numerical efficiency and also reveal the relationships between the optimal step-lengths for different parameters and the model parameters (as well as properties of commonly used but probably less than ideal step-length settings).

We are confident that the adaptive step-length concept is relevant well beyond the Gaussian specification, so further work should study the stability and effectiveness of adaptive step-lengths for other common distributions or zero-inflated count models. Further work should also include further (e.g. non-linear, spatial) effects (Hothorn et al. 2011) in the model and test the influence of the adaptive step-length on such effects. Moreover, through our application of the algorithm we discovered correlations between the optimal step-length \(\nu _{j^*, \varvec{\mu }}^{*[m]}\) of a variable and the coefficient of this variable in the \(\varvec{\sigma }\)-submodel. Future work should also investigate the relationship among the optimal step-lengths of different parameters and the relationship of these step-lengths to the model coefficients.

A basic R package ASL based on this article is available online at https://github.com/BoyaoZhang/ASL. This package contains the source code of Algorithm 3 and a function for the corresponding cross-validation, as well as some simple examples. The package is derived from the R package gamboostLSS, and we hope to implement the ASL functions in the latter in the future.

References

Brent RP (2013) Algorithms for minimization without derivatives. Courier Corporation

Bühlmann P (2006) Boosting for high-dimensional linear models. Ann Stat 34(2):559–583

Bühlmann P, Hothorn T (2007) Boosting algorithms: regularization, prediction and model fitting. Stat Sci 22(4):477–505

Bühlmann P, Yu B (2003) Boosting with the L2 loss. J Am Stat Assoc 98(462):324–339. https://doi.org/10.1198/016214503000125

Bühlmann P, Kalisch M, Meier L (2014) High-dimensional statistics with a view toward applications in biology. Annu Rev Stat Appl 1(1):255–278. https://doi.org/10.1146/annurev-statistics-022513-115545

Dezeure R, Bühlmann P, Meier L, Meinshausen N (2015) High-dimensional inference: Confidence intervals, p-values and R-software HDI. Stat Sci 30(4):533–558

Fahrmeir L, Kneib T (2011) Bayesian smoothing and regression for longitudinal, spatial and event history data. Oxford University Press, Oxford

Friedman J, Hastie T, Tibshirani R (2000) Additive logistic regression: a statistical view of boosting (with discussion and a rejoinder by the authors). Ann Stat 28(2):337–407

Friedman J, Hastie T, Tibshirani R (2010) Regularization paths for generalized linear models via coordinate descent. J Stat Softw 33(1):1–22

Friedman JH (2001) Greedy function approximation: a gradient boosting machine. Ann Stat 29(5):1189–1232

Hastie T, Taylor J, Tibshirani R, Walther G (2007) Forward stagewise regression and the monotone lasso. Electron J Stat 1:1–29

Hepp T, Schmid M, Gefeller O, Waldmann E, Mayr A (2016) Approaches to regularized regression—a comparison between gradient boosting and the Lasso. Methods Inf Med 55(5):422–430

Hepp T, Schmid M, Mayr A (2019) Significance tests for boosted location and scale models with linear base-learners. Int J Biostat 15(1):20180110

Hofner B, Mayr A, Robinzonov N, Schmid M (2014) Model-based boosting in R: a hands-on tutorial using the R package mboost. Comput Stat 29(1–2):3–35

Hofner B, Boccuto L, Göker M (2015) Controlling false discoveries in high-dimensional situations: boosting with stability selection. BMC Bioinf 16(1):144

Hofner B, Mayr A, Schmid M (2016) gamboostLSS: an R package for model building and variable selection in the GAMLSS framework. J Stat Softw 74(1):1–31

Hofner B, Mayr A, Fenske N, Schmid M (2018) gamboostLSS: Boosting methods for GAMLSS models. R package version 2.0-1

Hothorn T, Buehlmann P, Kneib T, Schmid M, Hofner B (2010) Model-based boosting 2.0. J Mach Learn Res 11:2109–2113

Hothorn T, Müller J, Schröder B, Kneib T, Brandl R (2011) Decomposing environmental, spatial, and spatiotemporal components of species distributions. Ecol Monogr 81(2):329–347. https://doi.org/10.1890/10-0602.1

Hothorn T, Buehlmann P, Kneib T, Schmid M, Hofner B (2018) mboost: Model-based boosting. R package version 2.9-1

Kohavi R (1995) A study of cross-validation and bootstrap for accuracy estimation and model selection. In: IJCAI 14(2):1137–1145

Mayr A, Fenske N, Hofner B, Kneib T, Schmid M (2012) Generalized additive models for location, scale and shape for high dimensional data-a flexible approach based on boosting. J R Stat Soc Ser C Appl Stat 61(3):403–427. https://doi.org/10.1111/j.1467-9876.2011.01033.x

Meinshausen N, Bühlmann P (2010) Stability selection. J R Stat Soc Ser B Stat Methodol 72(4):417–473. https://doi.org/10.1111/j.1467-9868.2010.00740.x

Rigby RA, Stasinopoulos DM (2005) Generalized additive models for location, scale and shape. J R Stat Soc Ser C Appl Stat 54(3):507–554. https://doi.org/10.1111/j.1467-9876.2005.00510.x

Ripley BD (2004) Selecting amongst large classes of models. Methods Models Stat. https://doi.org/10.1142/9781860945410_0007

Stasinopoulos D, Rigby R (2007) Generalized additive models for location scale and shape (GAMLSS) in R. J Stat Softw 23(7):1–46

Stasinopoulos D, Rigby R, Heller G, Voudouris V, De Bastiani F (2017) Flexible regression and smoothing: using GAMLSS in R. Chapman and Hall/CRC

Thomas J, Mayr A, Bischl B, Schmid M, Smith A, Hofner B (2018) Gradient boosting for distributional regression: faster tuning and improved variable selection via noncyclical updates. Stat Comput 28(3):673–687

Zhang T, Yu B (2005) Boosting with early stopping: convergence and consistency. Ann Stat 33(4):1538–1579

Acknowledgements

This work was supported by the Freigeist-Fellowships of Volkswagen Stiftung, project “Bayesian Boosting - A new approach to data science, unifying two statistical philosophies”. Boyao Zhang performed the present work in partial fulfilment of the requirements for obtaining the degree “Dr. rer. pol.” at the Georg-August-Universität Göttingen.

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Derivation of the analytical ASL for the Gaussian distribution

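Taking the negative log-likelihood as the loss function, with \(\mu _i = \eta _{\varvec{\mu }}({\varvec{x}}_{i\cdot })\) and \(\sigma _i = \exp (\eta _{\varvec{\sigma }}({\varvec{x}}_{i\cdot }))\), the loss for the Gaussian distribution can be written as

\[
\rho \big (y_i, \eta _{\varvec{\mu }}({\varvec{x}}_{i\cdot }), \eta _{\varvec{\sigma }}({\varvec{x}}_{i\cdot })\big ) = \frac{1}{2}\log (2\pi ) + \eta _{\varvec{\sigma }}({\varvec{x}}_{i\cdot }) + \frac{\big (y_i - \eta _{\varvec{\mu }}({\varvec{x}}_{i\cdot })\big )^2}{2\exp \big (2\eta _{\varvec{\sigma }}({\varvec{x}}_{i\cdot })\big )}.
\]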

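The negative partial derivatives for both distribution parameters in iteration m, evaluated at the fit from the previous iteration, are then

\[
u_{\varvec{\mu }, i}^{[m]} = \frac{y_i - {\hat{\eta }}_{\varvec{\mu }}^{[m-1]}({\varvec{x}}_{i\cdot })}{{\hat{\sigma }}_i^{2[m-1]}}, \qquad u_{\varvec{\sigma }, i}^{[m]} = \frac{\big (y_i - {\hat{\eta }}_{\varvec{\mu }}^{[m-1]}({\varvec{x}}_{i\cdot })\big )^2}{{\hat{\sigma }}_i^{2[m-1]}} - 1,
\]

where \({\hat{\sigma }}_i^{2[m-1]} = \exp (2{\hat{\eta }}_{\varvec{\sigma }}^{[m-1]}({\varvec{x}}_{i\cdot }))\) is the current variance estimate.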

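Both \({\varvec{u}}_{\varvec{\theta }}^{[m]}, \varvec{\theta } \in \{\varvec{\mu }, \varvec{\sigma }\}\), are regressed on the simple linear base-learner \(h_{j^*, \varvec{\theta }}^{[m]}({\varvec{x}}_{\cdot j^*})\), where \(j^*\) denotes the best-fitting variable:

\[
{\varvec{u}}_{\varvec{\mu }}^{[m]} = {\hat{h}}_{j^*\varvec{\mu }}^{[m]}({\varvec{x}}_{\cdot j^*}) + \hat{\varvec{\epsilon }}_{\varvec{\mu }}^{[m]}, \qquad {\varvec{u}}_{\varvec{\sigma }}^{[m]} = {\hat{h}}_{j^*\varvec{\sigma }}^{[m]}({\varvec{x}}_{\cdot j^*}) + \hat{\varvec{\epsilon }}_{\varvec{\sigma }}^{[m]},
\]

where \(\hat{\varvec{\epsilon }}_{\varvec{\mu }}^{[m]}\) and \(\hat{\varvec{\epsilon }}_{\varvec{\sigma }}^{[m]}\) denote the residuals of the two simple linear regression models.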
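The following Python sketch mirrors these quantities for a single boosting iteration: the Gaussian negative log-likelihood with identity link for \(\mu \) and log link for \(\sigma \), its negative gradients, and componentwise simple linear base-learners fitted to each covariate. It is an illustration under stated assumptions, not the code of the ASL package: the helper names and the simulated data are hypothetical, the base-learners are least-squares fits without intercept on centered covariates, and the best-fitting variable \(j^*\) is assumed to be the one with the smallest residual sum of squares.

```python
import numpy as np


def gaussian_risk(y, eta_mu, eta_sigma):
    """Empirical risk: summed Gaussian negative log-likelihood with
    mu = eta_mu (identity link) and sigma = exp(eta_sigma) (log link)."""
    return np.sum(0.5 * np.log(2.0 * np.pi) + eta_sigma
                  + (y - eta_mu) ** 2 / (2.0 * np.exp(2.0 * eta_sigma)))


def negative_gradients(y, eta_mu, eta_sigma):
    """Negative partial derivatives of the loss w.r.t. eta_mu and eta_sigma."""
    sigma2 = np.exp(2.0 * eta_sigma)
    u_mu = (y - eta_mu) / sigma2
    u_sigma = (y - eta_mu) ** 2 / sigma2 - 1.0
    return u_mu, u_sigma


def best_linear_base_learner(X, u):
    """Regress u on each column of X separately (least squares, no intercept)
    and keep the column j* with the smallest residual sum of squares."""
    betas = X.T @ u / np.sum(X ** 2, axis=0)     # one coefficient per column
    rss = np.sum((u[:, None] - X * betas) ** 2, axis=0)
    j_star = int(np.argmin(rss))
    h_hat = betas[j_star] * X[:, j_star]         # fitted base-learner
    eps_hat = u - h_hat                          # residuals
    return j_star, h_hat, eps_hat


# Hypothetical data: column 0 drives the mean, column 1 the standard deviation.
rng = np.random.default_rng(1)
n, p = 200, 5
X = rng.standard_normal((n, p))
X -= X.mean(axis=0)
y = 1.0 + 2.0 * X[:, 0] + np.exp(0.5 * X[:, 1]) * rng.standard_normal(n)

# Start from intercept-only fits for both distribution parameters.
eta_mu = np.full(n, y.mean())
eta_sigma = np.full(n, np.log(y.std()))

u_mu, u_sigma = negative_gradients(y, eta_mu, eta_sigma)
j_mu, h_mu, eps_mu = best_linear_base_learner(X, u_mu)
j_sigma, h_sigma, eps_sigma = best_linear_base_learner(X, u_sigma)
print(j_mu, j_sigma, h_mu @ eps_mu)  # the inner product is zero up to rounding
```

The final inner product illustrates the orthogonality of least-squares residuals and fitted values, which is the property used in the derivation of the optimal step-length for \(\varvec{\mu }\).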

1.1 Optimal step-length for \(\varvec{\mu }\)

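The analytical optimal step-length for \(\varvec{\mu }\) in iteration m is obtained by minimizing the empirical risk,

\[
\nu _{\varvec{\mu }}^{*[m]} = \mathop {\arg \min }\limits _{\nu } \sum _{i=1}^n \left[ \frac{1}{2}\log (2\pi ) + {\hat{\eta }}_{\varvec{\sigma }}^{[m-1]}({\varvec{x}}_{i\cdot }) + \frac{\big (y_i - {\hat{\eta }}_{\varvec{\mu }}^{[m-1]}({\varvec{x}}_{i\cdot }) - \nu {\hat{h}}_{j^*\varvec{\mu }}^{[m]}(x_{ij^*})\big )^2}{2{\hat{\sigma }}_i^{2[m-1]}} \right].
\]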

Here \({\hat{\sigma }}_i^{2[m-1]} = ({\hat{\sigma }}_i^{[m-1]})^2\) denotes the squared standard deviation from the previous boosting iteration, where, by the model specification, \({\hat{\sigma }}_i^{[m-1]} = \exp ({\hat{\eta }}_{\varvec{\sigma }}^{[m-1]}({\varvec{x}}_{i\cdot }))\).

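The expression is a convex function of \(\nu \): it is quadratic in \(\nu \) with second-order derivative \(\sum _{i=1}^n ({\hat{h}}_{j^*\varvec{\mu }}^{[m]}(x_{ij^*}))^2 / {\hat{\sigma }}_i^{2[m-1]} > 0\). The optimal value \(\nu _{\varvec{\mu }}^{*[m]}\) is therefore obtained by setting the first-order derivative to zero,

\[
\nu _{\varvec{\mu }}^{*[m]} = \frac{\sum _{i=1}^n {\hat{h}}_{j^*\varvec{\mu }}^{[m]}(x_{ij^*}) \big (y_i - {\hat{\eta }}_{\varvec{\mu }}^{[m-1]}({\varvec{x}}_{i\cdot })\big ) / {\hat{\sigma }}_i^{2[m-1]}}{\sum _{i=1}^n \big ({\hat{h}}_{j^*\varvec{\mu }}^{[m]}(x_{ij^*})\big )^2 / {\hat{\sigma }}_i^{2[m-1]}} = \frac{\sum _{i=1}^n {\hat{h}}_{j^*\varvec{\mu }}^{[m]}(x_{ij^*}) \big ({\hat{h}}_{j^*\varvec{\mu }}^{[m]}(x_{ij^*}) + {\hat{\epsilon }}_{\varvec{\mu }, i}\big )}{\sum _{i=1}^n \big ({\hat{h}}_{j^*\varvec{\mu }}^{[m]}(x_{ij^*})\big )^2 / {\hat{\sigma }}_i^{2[m-1]}} = \frac{\sum _{i=1}^n \big ({\hat{h}}_{j^*\varvec{\mu }}^{[m]}(x_{ij^*})\big )^2}{\sum _{i=1}^n \big ({\hat{h}}_{j^*\varvec{\mu }}^{[m]}(x_{ij^*})\big )^2 / {\hat{\sigma }}_i^{2[m-1]}},
\]

where the second equality uses \((y_i - {\hat{\eta }}_{\varvec{\mu }}^{[m-1]}({\varvec{x}}_{i\cdot })) / {\hat{\sigma }}_i^{2[m-1]} = u_{\varvec{\mu }, i}^{[m]} = {\hat{h}}_{j^*\varvec{\mu }}^{[m]}(x_{ij^*}) + {\hat{\epsilon }}_{\varvec{\mu }, i}\), and the last equality uses \(\sum _{i=1}^n {\hat{h}}_{j^*\varvec{\mu }}^{[m]}(x_{ij^*}) {\hat{\epsilon }}_{\varvec{\mu }, i} = 0\), which holds because least-squares residuals are orthogonal to the fitted values. \(\square \)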
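A small numerical check of this closed form, written as a Python sketch under the same assumptions as before (a least-squares simple linear base-learner without intercept on a centered covariate; the function names and the simulated data are hypothetical). It compares \(\sum _i {\hat{h}}_i^2 / \sum _i ({\hat{h}}_i^2 / {\hat{\sigma }}_i^2)\) with the direct minimizer of the quadratic risk, which does not use the orthogonality argument, and with a crude grid search.

```python
import numpy as np


def optimal_step_mu(h_hat, sigma2_hat):
    """Closed-form optimal step-length for mu: sum(h^2) / sum(h^2 / sigma^2)."""
    return np.sum(h_hat ** 2) / np.sum(h_hat ** 2 / sigma2_hat)


def risk_mu(nu, y, eta_mu, h_hat, sigma2_hat):
    """Part of the empirical risk that depends on nu when only mu is updated."""
    return np.sum((y - eta_mu - nu * h_hat) ** 2 / (2.0 * sigma2_hat))


rng = np.random.default_rng(2)
n = 300
x = rng.standard_normal(n)
x -= x.mean()
sigma2_hat = np.exp(0.6 * rng.standard_normal(n))   # heterogeneous current variances
eta_mu = np.zeros(n)                                # current fit for mu
y = 1.5 * x + np.sqrt(sigma2_hat) * rng.standard_normal(n)

u_mu = (y - eta_mu) / sigma2_hat        # negative gradient for mu
beta = x @ u_mu / (x @ x)               # simple linear base-learner (no intercept)
h_hat = beta * x

nu_star = optimal_step_mu(h_hat, sigma2_hat)
# Direct minimizer of the quadratic risk, without the orthogonality step
nu_direct = np.sum(h_hat * (y - eta_mu) / sigma2_hat) / np.sum(h_hat ** 2 / sigma2_hat)
# Crude grid search over [0, 2 * nu_star]
grid = np.linspace(0.0, 2.0 * nu_star, 2001)
nu_grid = grid[np.argmin([risk_mu(v, y, eta_mu, h_hat, sigma2_hat) for v in grid])]
print(nu_star, nu_direct, nu_grid)
```

The formula also makes one special case transparent: with a constant variance estimate \({\hat{\sigma }}_i^{2[m-1]} \equiv {\hat{\sigma }}^2\), the optimal step-length reduces to \({\hat{\sigma }}^2\); for instance, `optimal_step_mu(h_hat, np.full(n, 2.5))` returns 2.5 up to floating-point rounding.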

1.2 Optimal step-length for \(\varvec{\sigma }\)

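The analytical optimal step-length for \(\varvec{\sigma }\) in iteration m is obtained by minimizing the empirical risk,

\[
\nu _{\varvec{\sigma }}^{*[m]} = \mathop {\arg \min }\limits _{\nu } \sum _{i=1}^n \left[ \frac{1}{2}\log (2\pi ) + {\hat{\eta }}_{\varvec{\sigma }}^{[m-1]}({\varvec{x}}_{i\cdot }) + \nu {\hat{h}}_{j^*\varvec{\sigma }}^{[m]}(x_{ij^*}) + \frac{\big (y_i - {\hat{\eta }}_{\varvec{\mu }}^{[m-1]}({\varvec{x}}_{i\cdot })\big )^2}{2{\hat{\sigma }}_i^{2[m-1]} \exp \big (2\nu {\hat{h}}_{j^*\varvec{\sigma }}^{[m]}(x_{ij^*})\big )} \right].
\]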

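The second-order derivative of this expression with respect to \(\nu \), \(\sum _{i=1}^n 2\big ({\hat{h}}_{j^*\varvec{\sigma }}^{[m]}(x_{ij^*})\big )^2 \big (y_i - {\hat{\eta }}_{\varvec{\mu }}^{[m-1]}({\varvec{x}}_{i\cdot })\big )^2 \exp \big (-2\nu {\hat{h}}_{j^*\varvec{\sigma }}^{[m]}(x_{ij^*})\big ) / {\hat{\sigma }}_i^{2[m-1]}\), is positive, and thus the expression is a convex function of \(\nu \). Setting the first-order derivative to zero, we get

\[
\sum _{i=1}^n {\hat{h}}_{j^*\varvec{\sigma }}^{[m]}(x_{ij^*}) \frac{\big (y_i - {\hat{\eta }}_{\varvec{\mu }}^{[m-1]}({\varvec{x}}_{i\cdot })\big )^2}{{\hat{\sigma }}_i^{2[m-1]}} \exp \big (-2\nu _{\varvec{\sigma }}^{*[m]} {\hat{h}}_{j^*\varvec{\sigma }}^{[m]}(x_{ij^*})\big ) = \sum _{i=1}^n {\hat{h}}_{j^*\varvec{\sigma }}^{[m]}(x_{ij^*}),
\]

where \((y_i - {\hat{\eta }}_{\varvec{\mu }}^{[m-1]}({\varvec{x}}_{i\cdot }))^2 / {\hat{\sigma }}_i^{2[m-1]} = u_{\varvec{\sigma }, i}^{[m]} + 1\). In general, this equation has no closed-form solution in \(\nu \). \(\square \)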
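Because the derivative of this convex risk is strictly increasing in \(\nu \), its root can be located with any one-dimensional root finder. The Python sketch below uses bisection; the choice of bisection, the function names and the simulated data are illustrative assumptions, not the procedure implemented in the ASL package. It also assumes that the fitted base-learner takes both positive and negative values and that the scaled squared residuals are nonzero, which guarantees that a root exists.

```python
import numpy as np


def risk_sigma(nu, h_hat, r):
    """nu-dependent part of the empirical risk when only sigma is updated;
    r_i = (y_i - mu_hat_i)^2 / sigma_hat_i^2, h_hat is the sigma base-learner."""
    return np.sum(nu * h_hat + 0.5 * r * np.exp(-2.0 * nu * h_hat))


def d_risk_sigma(nu, h_hat, r):
    """First-order derivative of risk_sigma with respect to nu."""
    return np.sum(h_hat - h_hat * r * np.exp(-2.0 * nu * h_hat))


def optimal_step_sigma(h_hat, r, tol=1e-12, max_iter=200):
    """Root of the increasing function d_risk_sigma, found by bisection."""
    lo, hi = -1.0, 1.0
    while d_risk_sigma(lo, h_hat, r) > 0:   # widen the bracket to the left
        lo *= 2.0
    while d_risk_sigma(hi, h_hat, r) < 0:   # widen the bracket to the right
        hi *= 2.0
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if d_risk_sigma(mid, h_hat, r) < 0:
            lo = mid                        # root lies to the right of mid
        else:
            hi = mid                        # root lies at or left of mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


rng = np.random.default_rng(3)
n = 300
x = rng.standard_normal(n)
x -= x.mean()
y = np.exp(0.4 * x) * rng.standard_normal(n)    # true log standard deviation is 0.4 * x
eta_mu = np.zeros(n)                            # current fit for mu
sigma2_hat = np.ones(n)                         # current fit: sigma = 1

r = (y - eta_mu) ** 2 / sigma2_hat
u_sigma = r - 1.0                               # negative gradient for sigma
beta = x @ u_sigma / (x @ x)                    # simple linear base-learner
h_hat = beta * x

nu_sigma = optimal_step_sigma(h_hat, r)
print(nu_sigma, d_risk_sigma(nu_sigma, h_hat, r))
```

Bisection is chosen here only for robustness and simplicity; a safeguarded Newton iteration using the positive second-order derivative would converge faster.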

Additional simulation graphics

In this appendix, we present the results for some of the simulated examples in Sect. 4.1. Boxplots of the estimated coefficients are shown in Figs. 8 and 9, Fig. 10 illustrates the negative log-likelihood, and Fig. 11 summarizes the stopping iterations \(m_{\text {stop}}\).

Boxplots of the estimated coefficients of \(\eta _\mu \) in 100 simulation runs. Values are taken at the stopping iterations determined by 10-fold cross-validation. The results are shown separately for the fixed and adaptive approaches and for different numbers of non-informative variables, i.e. \(p_{\text {n-inf}} = 0, 50, 250\) and 500. The horizontal red lines indicate the true coefficients; the shrinkage of the coefficients towards zero is clearly visible

Boxplots of the estimated coefficients of \(\eta _\sigma \) in 100 simulation runs. Values are taken at the stopping iterations determined by 10-fold cross-validation. The results are shown separately for the fixed and adaptive approaches and for different numbers of non-informative variables, i.e. \(p_{\text {n-inf}} = 0, 50, 250\) and 500. The horizontal red lines indicate the true coefficients; the shrinkage of the coefficients towards zero is clearly visible

Additional simulation table

Table 6 summarizes the average MSE of the estimated coefficients for both Gaussian distribution parameters in the simulation of Sect. 4.2.

Estimated coefficients for the riboflavin dataset

In this appendix, we provide the coefficients estimated with the fixed and adaptive approaches for the riboflavin data in Sect. 5.2. Tables 7 and 8 refer to the \(\varvec{\mu }\)-submodel and the \(\varvec{\sigma }\)-submodel, respectively.

About this article

Cite this article

Zhang, B., Hepp, T., Greven, S. et al. Adaptive step-length selection in gradient boosting for Gaussian location and scale models. Comput Stat 37, 2295–2332 (2022). https://doi.org/10.1007/s00180-022-01199-3

DOI: https://doi.org/10.1007/s00180-022-01199-3