Abstract

Ventricular arrhythmias (VAs) and sudden cardiac death (SCD) are significant adverse events that affect the morbidity and mortality of both the general population and patients with predisposing cardiovascular risk factors. Currently, conventional disease-specific scores are used for risk stratification purposes. However, these risk scores have several limitations, including variations among validation cohorts, the inclusion of a limited number of predictors while omitting important variables, and hidden relationships between predictors. Machine learning (ML) techniques are based on algorithms that describe intervariable relationships. Recent studies have implemented ML techniques to construct models for the prediction of fatal VAs. However, the application of ML study findings is limited by the absence of established frameworks for their implementation, in addition to clinicians’ unfamiliarity with ML techniques. This review, therefore, aims to provide an accessible and easy-to-understand summary of the existing evidence about the use of ML techniques in the prediction of VAs. Our findings suggest that ML algorithms improve arrhythmic prediction performance in different clinical settings. However, it should be emphasized that prospective studies comparing ML algorithms to conventional risk models are needed, and a regulatory framework is required prior to their implementation in clinical practice.

Introduction

Fatal ventricular arrhythmias (VAs) and sudden cardiac death (SCD) are some of the most important study outcomes in the field of cardiology. Current efforts have focused on the prediction of VAs in different diseases, including hypertrophic cardiomyopathy, arrhythmogenic cardiomyopathy, heart failure (HF), congenital heart diseases and cardiac ion channelopathies, in addition to the risk of VAs among the general public [1,2,3,4,5,6]. Conventional risk scores are the most widely used tools for risk stratification purposes in clinical practice [7]. However, these risk scores have several limitations, including variations among validation cohorts and the inclusion of a limited number of predictors while omitting some variables that might be important. As a result, clinical scores that can accurately predict major outcomes, and can therefore aid personalized clinical management, are needed.

Machine learning (ML) can integrate and interpret data from different domains in settings where conventional statistical methods may not perform well [8]. Recently, the role of ML techniques has been studied in different aspects of medicine, including electronic health records, diagnosis, risk stratification, timely identification of abnormal heart rhythms in the intensive care unit [9, 10], prognosis and guidance of personalized management [11, 12]. However, application of ML study findings has been limited by the lack of a regulatory framework for their implementation and by clinicians’ unfamiliarity with using, as well as trusting, ML techniques [13]. This review aims to present existing data regarding the role of ML techniques in the risk stratification of VAs in different clinical settings.

Machine learning algorithms

ML algorithms can aid the interpretation of complex data, stratification of patient diagnosis and delivery of personalized care and therefore are particularly useful in the management of cardiovascular diseases [8]. Random forest, convolutional neural network (CNN) and long short-term memory network (LSTM) models are three commonly used ML approaches in cardiovascular medicine.

Random forest model

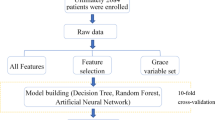

One common model ensemble method is the random forest. The underlying idea of random forest algorithms is that predictions derived from a large ensemble of models are more accurate and robust than those of a single model. Within a random forest model, numerous decision trees form the basic building blocks, performing either classification or regression tasks. To classify the data, each tree passes it through a series of true-or-false questions, splitting it into the purest possible subgroups. Each decision tree then classifies a new object based on specific attributes and casts a vote, and the final classification is the class with the most votes. In the case of regression, the outputs from the different trees are averaged [14]. With this algorithm, large data sets with high dimensionality can be processed. A group of uncorrelated decision trees working together can reduce the effect of individual variability and errors, thus outperforming its constituent trees [14]. Two main techniques are used to keep the trees uncorrelated: bagging and feature randomness. The former trains each tree on a subset of the data drawn by random sampling with replacement, improving the stability of the model; the latter lets each tree consider only a random subset of the features, increasing the diversity among trees. Because individual trees are simple, training time is low, and random forests have been applied in academia, e-commerce and banking. An illustration of one decision tree in a random forest model used to predict atrial fibrillation is shown in Fig. 1 [15]. The model was trained with a sample of 682,237 Chinese subjects. Each decision tree had a maximum depth of four nodes; deeper trees were found to cause overfitting.

First decision tree of the random forest model predicting risk of atrial fibrillation [15]
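The bagging, feature randomness and majority-vote steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the model of [15]: each "tree" is reduced to a single-split stump, and the two features (age and resting heart rate), the toy data and all function names are hypothetical.

```python
import random
from collections import Counter

def fit_stump(rows, labels, feature_ids):
    """Find the single (feature, threshold) split with the fewest misclassifications."""
    best = None
    for f in feature_ids:
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            left_vote = Counter(left).most_common(1)[0][0]
            right_vote = Counter(right).most_common(1)[0][0]
            errors = sum(y != left_vote for y in left) + sum(y != right_vote for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, left_vote, right_vote)
    if best is None:  # no usable split: always predict the majority class
        majority = Counter(labels).most_common(1)[0][0]
        return (feature_ids[0], float("inf"), majority, majority)
    return best[1:]

def fit_forest(rows, labels, n_trees=25, n_features=1, seed=0):
    rng = random.Random(seed)
    n = len(rows)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]           # bagging: sample with replacement
        feats = rng.sample(range(len(rows[0])), n_features)  # feature randomness
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feats))
    return forest

def predict(forest, row):
    """Majority vote over all stumps."""
    votes = [lv if row[f] <= t else rv for f, t, lv, rv in forest]
    return Counter(votes).most_common(1)[0][0]

# Hypothetical toy data: (age in years, resting heart rate in bpm) -> 0/1 outcome.
rows = [(30, 60), (35, 65), (40, 62), (45, 70), (65, 95), (70, 100), (75, 98), (80, 110)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_forest(rows, labels)
print(predict(forest, (28, 58)), predict(forest, (78, 105)))
```

A production model would normally use deeper trees and an established library implementation; the stump version is chosen here only to keep the voting mechanism visible.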

Convolutional neural networks

Convolutional neural networks (CNNs) are used to detect patterns and to classify images with a high level of precision using filters. Different types of filters detect various forms of patterns depending on their level of sophistication. Pattern detection can range from simple geometric shapes to complex objects such as eyes and dogs. The main purpose of a CNN is to receive and transform an input through a convolutional operation. A convolutional operation requires an input image, a feature detector and a feature map [14]. A feature detector consists of a matrix whose values correspond to the specific color or feature being measured. This detector is placed over the input image, and the number of cells that match between the feature detector and the image is counted pixel by pixel. Often, CNN analysis may break down an image into smaller parts for higher precision during matching. After a series of calculations, this generates a feature map that indicates where a specific feature occurs. This process of convolving and filtering an image to generate a stack of filtered images is known as a convolution layer [14]. In practice, a CNN requires multiple feature detectors to produce multiple feature maps. The output is then passed on to the adjoining layer. This process is repeated until it reaches the final layer, known as a fully connected layer, where a list of feature values is converted into a list of votes for a category. Through training, a CNN learns which features to detect, and in what order, with increasing accuracy. An example of an optimal architecture of a CNN model used to predict atrial fibrillation is shown in Fig. 2 [16]. A one-dimensional convolution was used in the convolution layer because electrocardiographic (ECG) signals are one-dimensional time series. Other functions including dropout, batch normalization and a rectified linear unit were also included to prevent divergence.

Seven-layered optimal architecture of the CNN model predicting risk of atrial fibrillation [16]
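To make the convolution step concrete, the sketch below slides a small feature detector (kernel) over a one-dimensional signal and applies a rectified linear unit to obtain a feature map. It is an illustrative example only: the signal, the kernel values and the function names are assumptions, not taken from the architecture in [16].

```python
def conv1d(signal, kernel):
    """Valid (no padding) 1-D sliding dot product, the core of a convolution layer."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

def relu(values):
    """Rectified linear unit: negative responses are set to zero."""
    return [max(0.0, v) for v in values]

# Hypothetical toy "ECG-like" trace with a single sharp peak.
signal = [0, 0, 1, 5, 1, 0, 0, 0]
# Hypothetical feature detector that responds to a local peak.
kernel = [-1, 2, -1]

feature_map = relu(conv1d(signal, kernel))
print(feature_map)  # the largest value marks where the peak pattern occurs
```

The feature map is non-zero only at the window centred on the peak, which is the sense in which a feature map "indicates where a specific feature occurs". In a trained CNN the kernel values are learned rather than set by hand.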

Long short-term memory network

Long short-term memory network (LSTM) is a type of gated recurrent neural network that regulates the flow of information. This allows the algorithm to learn to differentiate between unnecessary and relevant information for making predictions. The process begins with transforming an input sequence, such as a sequence of words, into machine-readable vectors. These vectors are processed one at a time, with the previous hidden state, which carries information learned from earlier steps, passed into the next cell. Within an LSTM unit cell, there are three gates: the input gate controls whether the memory cell is updated, the output gate controls the visibility of the current cell state, and the forget gate controls whether the memory cell is reset to 0. The previous hidden state and the current input are combined to form a vector, which then goes through tanh activation. Through these gated updates, LSTM can learn long-term dependencies. An example of an LSTM recurrent network architecture with focal loss, used to detect arrhythmia, is shown in Fig. 3 [17]. The four-layered LSTM network was designed to decipher the timing features in complex ECG signals and was coupled with focal loss to address category imbalance. The number of epochs was set to 350 to achieve stability in classification accuracy.

LSTM recurrent network architecture detecting arrhythmia on imbalanced ECG datasets [17]
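The role of the three gates can be illustrated with a single-unit LSTM cell written out by hand. This is a minimal sketch of the standard LSTM update, assuming scalar inputs and states; the weight values and names below are hypothetical and are not those of the network in [17].

```python
import math

def sigmoid(x):
    """Logistic function: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, w):
    """One time step of a single-unit LSTM cell.

    w maps each gate name to (weight on input, weight on previous hidden state, bias).
    """
    def pre(gate):
        w_x, w_h, b = w[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))        # how much of the old memory to keep
    i = sigmoid(pre("input"))         # how much new information to write
    o = sigmoid(pre("output"))        # how much of the memory to expose
    g = math.tanh(pre("candidate"))   # candidate memory from current input and h_prev
    c = f * c_prev + i * g            # updated cell state
    h = o * math.tanh(c)              # new hidden state
    return h, c

# Hypothetical weights: forget gate open, input gate closed -> memory is kept.
keep = {"forget": (0.0, 0.0, 50.0), "input": (0.0, 0.0, -50.0),
        "output": (0.0, 0.0, 50.0), "candidate": (1.0, 0.0, 0.0)}
# Hypothetical weights: forget gate closed, input gate open -> memory is overwritten.
reset = {"forget": (0.0, 0.0, -50.0), "input": (0.0, 0.0, 50.0),
         "output": (0.0, 0.0, 50.0), "candidate": (1.0, 0.0, 0.0)}

h_keep, c_keep = lstm_step(1.0, 0.0, 0.7, keep)
h_reset, c_reset = lstm_step(1.0, 0.0, 0.7, reset)
print(c_keep, c_reset)
```

With the first set of weights the previous cell state of 0.7 is carried forward essentially unchanged, while with the second it is replaced by the new candidate value. In a trained network these gate weights are learned, which is how the model decides what to remember over long sequences.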

Specific patient populations

ML algorithms have been used for arrhythmic risk stratification purposes and can provide an incremental value for the risk stratification of cardiomyopathies (Table 1).

Hypertrophic cardiomyopathy

A simple clinical score is recommended according to the current guidelines for the VA risk stratification of patients with hypertrophic cardiomyopathy (HCM) [18]. However, the analysis and implementation of more variables for VA risk stratification purposes seem to improve the predictive accuracy in this population. Specifically, the application of ML methods to electronic health data has identified new predictors of VAs in this population, while the ML-derived model performed better compared to current prediction algorithms [19]. In the same study, the ensemble of logistic regression and naïve Bayes classifiers was most effective in separating patients with and without VAs [19]. A recent study proposed a novel ML risk stratification tool for the prediction of five-year risk in HCM, which showed a better performance compared to conventional risk stratification tools regarding SCD, cardiac and all-cause mortality, while the best performance was achieved using boosted trees [20]; specifically, the authors used demographic characteristics, genetic data, clinical investigations, medications and disease-related events for risk stratification purposes.

Cardiac magnetic resonance (CMR) has been found to provide important data for risk stratification purposes in HCM patients [21]. Of the studied CMR indices, late gadolinium enhancement (LGE) has a major role in the risk stratification of this population [22]. The extent of LGE has been found to outperform current guideline-recommended criteria in the identification of HCM patients at risk of VAs [23]. In this context, ML techniques can improve the accuracy in the identification of high-risk patients. Specifically, ML-based texture analysis of LGE-positive areas has been proposed as a promising tool for the classification of HCM patients with and without ventricular tachycardia (VT) [24]. In this study, of eight ML models investigated, k-nearest-neighbors with synthetic minority oversampling technique showed the best diagnostic accuracy for the presence or absence of VT [24].
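The combination of synthetic minority oversampling and k-nearest-neighbors mentioned above can be sketched as follows. This is a simplified illustration of the general idea (new minority samples are interpolated between existing minority samples before a nearest-neighbor vote), not the pipeline of [24]; the two-dimensional "texture features", the data and the function names are hypothetical.

```python
import math
import random
from collections import Counter

def nearest(point, candidates, k):
    """Return the k candidates closest to point (Euclidean distance)."""
    return sorted(candidates, key=lambda c: math.dist(point, c))[:k]

def smote(minority, n_new, k=2, seed=0):
    """Create n_new synthetic minority samples by interpolating towards a near neighbor."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbours = nearest(base, [m for m in minority if m != base], k)
        other = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from base to other
        synthetic.append(tuple(b + gap * (o - b) for b, o in zip(base, other)))
    return synthetic

def knn_predict(train, point, k=3):
    """train is a list of (features, label) pairs; returns the majority label of the k nearest."""
    closest = sorted(train, key=lambda item: math.dist(point, item[0]))[:k]
    return Counter(label for _, label in closest).most_common(1)[0][0]

# Hypothetical, imbalanced toy data: six patients without VT, three with VT.
majority = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (1.1, 1.3), (0.9, 0.9), (1.3, 1.0)]
minority = [(3.0, 3.0), (3.2, 2.8), (2.9, 3.3)]

synthetic = smote(minority, n_new=3)  # balance the classes at 6 vs 6
train = [(p, "no VT") for p in majority] + [(p, "VT") for p in minority + synthetic]
print(knn_predict(train, (3.1, 3.0)))
```

Oversampling is applied to the training data only; evaluating on synthetic samples would overstate performance.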

12-lead Holter ECGs have also been analyzed using mathematical modeling and computational clustering to identify phenotypic subgroups of HCM patients [25]. Specifically, using these methods, it has been found that HCM patients can be classified into those with T-wave inversion that is secondary to QRS abnormalities and those with T-wave inversion that is not. T-wave inversion not secondary to QRS abnormalities has been associated with an increased risk of SCD in HCM patients [25].

Other cardiomyopathies

The risk stratification of fatal arrhythmias is also significant in myocardial infarction patients. CMR has also been found to provide incremental data for risk stratification purposes in this population [26]. Quantitative discriminative features extracted from LGE in post-myocardial infarction patients have been studied for the discrimination of high- versus low-risk patients. In one study, a leave-one-out cross-validation scheme was implemented to classify high- and low-risk groups, achieving high classification accuracy for a feature combination that captures the size, location and heterogeneity of the scar [27]. Furthermore, nested cross-validation was performed with k-nearest neighbor, support vector machine, adjusting decision tree and random forest classifiers to differentiate high-risk and low-risk patients. In this context, the support vector machine classifier provided an average accuracy of 92.6% and an area under the receiver operating characteristic curve (AUC) of 0.921 for a feature combination capturing the location and heterogeneity of the scar [27].
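Leave-one-out cross-validation, as used above, holds out each patient in turn, trains on all the others and records whether the held-out patient is classified correctly. The sketch below shows the scheme with a simple one-nearest-neighbor classifier; the classifier choice, the two scar features and the data are assumptions made for illustration and do not reproduce [27].

```python
import math

def one_nn(train, point):
    """Label of the single nearest training sample (Euclidean distance)."""
    return min(train, key=lambda item: math.dist(point, item[0]))[1]

def leave_one_out_accuracy(samples, classify):
    """Hold out each sample once, train on the rest, and average the hits."""
    correct = 0
    for i, (features, label) in enumerate(samples):
        training = samples[:i] + samples[i + 1:]
        correct += classify(training, features) == label
    return correct / len(samples)

# Hypothetical (scar size, scar heterogeneity) features, scaled to 0..1.
samples = [
    ((0.10, 0.20), "low"),
    ((0.20, 0.10), "low"),
    ((0.15, 0.25), "low"),
    ((0.80, 0.90), "high"),
    ((0.90, 0.80), "high"),
    ((0.30, 0.30), "high"),  # atypical high-risk case lying near the low-risk cluster
]

print(leave_one_out_accuracy(samples, one_nn))
```

On this toy data five of the six held-out cases are classified correctly; the atypical case is the one that is missed. The scheme is attractive for small cohorts because every patient is used for both training and testing, at the cost of fitting the model once per patient.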

Recently, a novel ML approach was studied for quantifying the three-dimensional spatial complexity of grayscale patterns on LGE-CMR images to predict VAs in patients with ischemic cardiomyopathy [28]. Specifically, in this study, a substrate spatial complexity profile was created for each patient. The ML algorithm classified patients with 81% overall accuracy, while the overall negative predictive value was estimated at 91% [28]. The clinical importance of these findings is mainly attributed to the high negative predictive value of the method, which can identify ischemic cardiomyopathy patients who will not benefit from an implantable cardioverter-defibrillator (ICD).
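For readers less familiar with these metrics, accuracy and negative predictive value are both derived from the counts of true and false positives and negatives. The sketch below uses invented counts purely for illustration; they are not the data of [28].

```python
def accuracy(tp, fp, tn, fn):
    """Share of all patients who were classified correctly."""
    return (tp + tn) / (tp + fp + tn + fn)

def negative_predictive_value(tn, fn):
    """Share of patients predicted to be event-free who truly had no event."""
    return tn / (tn + fn)

# Invented counts for 100 hypothetical patients (not taken from any study).
tp, fp, tn, fn = 20, 15, 60, 5

print(accuracy(tp, fp, tn, fn))           # (20 + 60) / 100
print(negative_predictive_value(tn, fn))  # 60 / 65
```

A high negative predictive value means that a "low-risk" prediction is rarely wrong, which is why it matters when deciding who may safely forgo an ICD.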

In addition to clinical and imaging variables, cellular electrophysiological characteristics have also been studied using ML algorithms to identify ischemic cardiomyopathy patients at risk of SCD [29]. ML analysis of monophasic action potential recordings in ischemic cardiomyopathy patients revealed novel phenotypes for predicting sustained VT/ventricular fibrillation (VF) [29].

Another clinical entity that needs a better risk stratification tool is HF with reduced ejection fraction (HFrEF) due to non-ischemic dilated cardiomyopathy. While previous studies had shown the benefit of ICDs in the primary prevention of SCD in this setting [30], the Danish Study to Assess the Efficacy of ICDs in Patients with Non-ischemic Systolic Heart Failure on Mortality (DANISH) trial showed that prophylactic ICD implantation in patients with symptomatic systolic HF that was not caused by coronary artery disease was not associated with a significantly lower rate of all-cause mortality [31]. It is of great clinical importance to identify those patients with HFrEF due to non-ischemic etiology who will benefit from an ICD. ML techniques can play a crucial role in better stratifying this group of patients [32].

In a recent study, ML techniques were used to identify cardiac imaging and time-varying risk predictors of appropriate ICD therapy in HFrEF patients [33]. It was found that baseline CMR imaging metrics (specifically, left ventricle heterogeneous gray and total scar, left ventricle and left atrial volumes, and left atrial total emptying fraction) and interleukin-6 levels were the strongest predictors of subsequent appropriate ICD therapies [33]. It is well known that ICD shocks have been associated with adverse events in patients with ICDs [34]. ML techniques and specifically random forest have been used for the prediction of short-term risk of electrical storm in patients with an ICD using daily summaries of ICD measurements [35]. The clinical importance of these methods can be mainly attributed to the preventive measures that can be adopted to avoid an imminent arrhythmic event. ML algorithms can further improve the existing risk stratification tools, and the ML-derived models can help clinicians to optimize the management of HFrEF patients.

Non-compaction cardiomyopathy is another clinical setting that needs further research for better characterization and risk stratification. In a recent study, the presence of significant compacted myocardial thinning, an elevated B‐type natriuretic peptide or increased left ventricular dimensions were significantly associated with adverse events in non-compaction cardiomyopathy patients [36]. ML techniques have been implemented for improving risk stratification in these patients. Specifically, echocardiographic and CMR data were analyzed using ML algorithms to identify predictors of adverse events in non-compaction cardiomyopathy patients [37]. The combination of CMR-derived left ventricular ejection fraction, CMR-derived right ventricular end systolic volume, echocardiogram-derived right ventricular systolic dysfunction and CMR-derived right ventricular lower diameter was found to achieve the best performance in predicting major adverse events in these patients [37].

Sarcoidosis

Cardiac sarcoidosis is another clinical condition that mandates a better arrhythmic risk stratification model given the increased risk of complete heart block, VA and SCD. Currently, an ICD should be considered in patients with atrioventricular block requiring pacemaker implantation independently of the left ventricular ejection fraction [38].

ML techniques have been used for diagnosing and optimizing the arrhythmic risk stratification of these patients. While the 18F-fluorodeoxyglucose (18F-FDG) positron emission tomography (PET) plays a critical role in the diagnosis of cardiac sarcoidosis, there are significant interobserver differences that warrant more objective quantitative evaluation methods, which can be achieved by ML approaches [39].

It has been reported that deep CNN analysis can achieve superior diagnostic performance for sarcoidosis in comparison with conventional quantitative analysis [40]. Moreover, it is known that myocardial scarring on CMR has prognostic value in cardiac sarcoidosis patients [41]. An ML approach using regional CMR analysis predicted the combined endpoint of death, heart transplantation or arrhythmic events with reasonable accuracy in cardiac sarcoidosis patients [42].

Ion channelopathies

Specific clinical and electrocardiographic markers have been associated with VT/VF occurrence in patients with Brugada syndrome. ML techniques can further improve the risk stratification performance of existing prediction models. Specifically, the combination of nonnegative matrix factorization and random forest models showed the best predictive performance compared with the random forest model alone and Cox regression models in this clinical setting [43]. Similarly, the random forest can better predict the occurrence of VT/VF post-diagnosis in congenital long QT syndrome in comparison with the conventional multivariate Cox regression model [44].

Furthermore, ML approaches can be used to explore the associations between genetic mutations and the occurrence of VAs triggered by ion channelopathies. As mutations of the SCN5A gene are known to be associated with Brugada syndrome and long QT syndrome, one study applied ML methods to a list of missense SCN5A mutations and found that mutations causing changes to the sodium current increase the risk of VAs [45]. However, the location, rather than the physicochemical properties of the mutation, is predictive, which highlights that functional studies remain important in this area of research [45]. As with Brugada and long QT syndromes, random forest analysis has been applied to identify important factors of ventricular arrhythmogenesis in catecholaminergic polymorphic VT [46].

Drug-induced arrhythmias

Another interesting area pertinent to the implementation of ML techniques involves the prediction of drug-induced VAs. Specifically, using a support vector machine classifier, clustering by gene expression profile similarities showed that certain drugs prolong the QT interval in a limited number of patient groups [47]. As a result, ML methods may provide additional benefit in the current process of testing ion channel activities in the preclinical setting of cardiac safety assessment of drugs. Support vector machines have also been used for the prediction of torsade de pointes for different pharmacological agents [48]. It should be mentioned that ML methods have been implemented not only to identify a potential association between drugs and arrhythmic risk but also to identify moderators of the arrhythmic potential of specific medications [49]. Specifically, one study constructed a surrogate model for the QT interval using multi-fidelity Gaussian regression and found that compounds blocking the rapid delayed rectifier potassium channels have the greatest QT-prolonging effect [50].

Congenital structural heart disease

An integrated approach should be implemented in patients with complex congenital structural heart disease for the early prediction of adverse outcomes. For example, patients with Tetralogy of Fallot require risk stratification for the early identification of high-risk patients who require advanced healthcare management. ML techniques and specifically deep learning imaging analysis have been proposed to improve the risk stratification of Tetralogy of Fallot patients [51]. Using CMR data, a composite score of the enlarged right atrial area and depressed right ventricular longitudinal function identified a tetralogy of Fallot subgroup at increased risk of adverse outcome [51].

Furthermore, ML techniques have also been studied for the prediction of postoperative arrhythmias following atrial septal defect closure. In this setting, a prediction model based on the synthetic minority oversampling technique algorithm and the random forest was found to predict arrhythmias with excellent accuracy in a pediatric population [52]. This is further supported by Guo et al., who used a combination of ML techniques including support vector machine (SVM), random forest, naïve Bayes and adaptive boost to predict postoperative blood coagulation function for children with congenital heart disease [53]. The results offer promising evidence that ML models are more robust and accurate relative to traditional statistical methods.

Arterial hypertension

Hypertension is a common condition that has been associated with adverse outcomes in the long-term setting. However, a prediction model is difficult to construct for young hypertensive patients, mainly due to the lack of sufficient data in this population. Wu et al. used two ML methods, recursive feature elimination and extreme gradient boosting, to predict outcomes in young patients with hypertension [54]. The outcome was the composite of all-cause mortality, acute myocardial infarction, coronary artery revascularization, new-onset HF, new-onset atrial fibrillation/atrial flutter, sustained VT/VF, peripheral artery revascularization, new-onset stroke and end-stage renal disease [54]. While the proposed ML model was comparable with Cox regression for the measured outcome in young patients with hypertension, it performed better than the recalibrated Framingham Risk Score model [54].

Discussion

The role of ML algorithms for the risk stratification of VAs has been studied in different clinical settings. ML algorithms can provide an incremental value for the risk stratification of cardiomyopathies (HCM, ischemic and non-ischemic cardiomyopathy), cardiac sarcoidosis, channelopathies, congenital heart disease, arterial hypertension, as well as in predicting pharmacologically induced life-threatening arrhythmias.

The management and especially the prediction of life-threatening arrhythmias are paramount in clinical cardiology. A prediction model for VT one hour before its occurrence, using an artificial neural network, has been generated using 14 parameters obtained from heart rate variability and respiratory rate variability analysis [55]. ML techniques have been used to predict the occurrence of VAs using heartbeat interval time series. In this setting, the random forest model showed better performance using a length of heartbeat interval time series of 800 heartbeats, 108 s before the occurrence of arrhythmias [56]. These results can be implemented mainly in the prevention of cardiac arrest by identifying high-risk patients prior to the occurrence of life-threatening VAs. Another study proposed a CNN algorithm to predict the onset of VT using heart rate variability data [57]. The authors found that, compared to other ML algorithms, the proposed one showed the highest prediction accuracy. Furthermore, ML algorithms have also been used for the prediction of VF. In this setting, QRS complex shape features were analyzed using artificial neural network classifiers [58]. This proposed model was found to achieve a better performance compared to the prediction accuracy using heart rate variability features [58].
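Heart rate variability features of the kind fed into such models are computed from the series of beat-to-beat (RR) intervals. The sketch below computes two widely used time-domain measures, SDNN and RMSSD. It is an assumption that measures like these were among the inputs; the cited studies' exact feature sets are not reproduced here, and the RR values are invented.

```python
import math
import statistics

def sdnn(rr_ms):
    """Standard deviation of the RR intervals (sample standard deviation), in ms."""
    return statistics.stdev(rr_ms)

def rmssd(rr_ms):
    """Root mean square of successive RR-interval differences, in ms."""
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))

# Invented RR intervals in milliseconds for five consecutive beats.
rr = [800, 810, 790, 805, 795]

features = {"sdnn_ms": sdnn(rr), "rmssd_ms": rmssd(rr)}
print(features)
```

In practice such features are computed over sliding windows of the heartbeat interval series, and the resulting feature vectors are what a classifier such as a random forest or neural network receives.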

Another area of ML implementation is in in-hospital monitoring. Timely and accurate discrimination of shockable versus non-shockable rhythms from external detectors and ICDs is of great clinical importance. Recently, the fixed frequency range empirical wavelet transform filter bank and deep CNN were used to analyze electrocardiographic signals [59]. The results showed excellent accuracy rates in classifying shockable versus non-shockable rhythms, VF versus non-VF and VT versus VF [59]. A deep learning architecture based on one-dimensional CNN layers and an LSTM network was found to be timely and accurate for the detection of VF in automated external defibrillators [60]. Furthermore, ML-based intensive care unit alarm systems have been found to achieve higher positive predictive values for the identification of asystole, extreme bradycardia, VT and VF compared to the bedside monitors used in the PhysioNet 2015 competition [9, 10, 61].

Beyond life-threatening arrhythmias, ML algorithms have been used for the management of atrial fibrillation. Artificial intelligence-enabled electrocardiography was used to predict incident atrial fibrillation [62]. In the same setting, ML algorithms have been implemented and outperformed conventional tools for the prediction of atrial fibrillation in critically ill patients who were hospitalized with sepsis [63]. In the field of invasive management of atrial fibrillation, ML-based classification of 12-lead ECG has been proposed as a useful tool for guiding atrial fibrillation ablation procedures and specifically in identifying patients suitable for pulmonary vein isolation alone vs. those needing additional ablation to pulmonary vein isolation [64]. Moreover, an ensemble classifier that used clinical and heart rate variability features was found to predict atrial fibrillation catheter ablation outcomes [65]. As a result, ML algorithms can have a role in the prevention and management of patients at risk or with documented atrial fibrillation, respectively.

However, ML algorithms have a series of limitations. Not only does ML require large sets of data for training, but a considerable amount of time and resources is also necessary. Moreover, ML algorithms are susceptible to errors, such as mislabeled data, overfitting and unavoidable bias [66]. Furthermore, a given ML technique is typically suited to a specific type of data or task; for example, although the random forest is highly effective in performing classification tasks, it is less effective when performing regression tasks, as the algorithm cannot produce precise, continuous predictions. Similarly, CNN is most effective in analyzing spatial patterns such as those in images. As a result, different ML algorithms should be used for different purposes. Finally, ML algorithms raise questions regarding transparency, authority and other ethical ramifications. Therefore, an established framework that regulates the implementation of ML in clinical practice is needed [13].

Conclusions

ML algorithms have been shown to improve arrhythmic prediction performance in different clinical settings. However, it should be emphasized that prospective studies comparing ML algorithms to conventional risk models are needed, and a framework is required prior to their implementation in clinical practice.

Availability of data and materials

All data are included in the tables.

References

Holkeri A, Eranti A, Haukilahti MAE, Kerola T, Kentta TV, Tikkanen JT, et al. Predicting sudden cardiac death in a general population using an electrocardiographic risk score. Heart. 2020;106(6):427–33.

Oliver JM, Gallego P, Gonzalez AE, Avila P, Alonso A, Garcia-Hamilton D, et al. Predicting sudden cardiac death in adults with congenital heart disease. Heart. 2021;107(1):67–75.

Lee S, Wong WT, Wong ICK, Mak C, Mok NS, Liu T, et al. Ventricular tachyarrhythmia risk in paediatric/young vs. adult Brugada syndrome patients: a territory-wide study. Front Cardiovasc Med. 2021;8:671666.

Kayvanpour E, Sammani A, Sedaghat-Hamedani F, Lehmann DH, Broezel A, Koelemenoglu J, et al. A novel risk model for predicting potentially life-threatening arrhythmias in non-ischemic dilated cardiomyopathy (DCM-SVA risk). Int J Cardiol. 2021.

Maupain C, Badenco N, Pousset F, Waintraub X, Duthoit G, Chastre T, et al. Risk stratification in arrhythmogenic right ventricular cardiomyopathy/dysplasia without an implantable cardioverter-defibrillator. JACC Clin Electrophysiol. 2018;4(6):757–68.

Ostman-Smith I, Sjoberg G, Alenius Dahlqvist J, Larsson P, Fernlund E. Sudden cardiac death in childhood hypertrophic cardiomyopathy is best predicted by a combination of ECG risk-score and HCMRisk-Kids score. Acta Paediatr. 2021.

Mohd Faizal AS, Thevarajah TM, Khor SM, Chang SW. A review of risk prediction models in cardiovascular disease: conventional approach vs. artificial intelligent approach. Comput Methods Programs Biomed. 2021;207:106190.

Shameer K, Johnson KW, Glicksberg BS, Dudley JT, Sengupta PP. Machine learning in cardiovascular medicine: are we there yet? Heart. 2018;104(14):1156–64.

Au-Yeung WM, Sahani AK, Isselbacher EM, Armoundas AA. Reduction of false alarms in the intensive care unit using an optimized machine learning based approach. NPJ Digit Med. 2019;2:86.

Bollepalli SC, Sevakula RK, Au-Yeung WM, Kassab MB, Merchant FM, Bazoukis G, et al. Real-time arrhythmia detection using hybrid convolutional neural networks. JAHA (in press).

Sevakula RK, Au-Yeung WM, Singh JP, Heist EK, Isselbacher EM, Armoundas AA. State-of-the-art machine learning techniques aiming to improve patient outcomes pertaining to the cardiovascular system. J Am Heart Assoc. 2020;9(4):e013924.

Bazoukis G, Stavrakis S, Zhou J, Bollepalli SC, Tse G, Zhang Q, et al. Machine learning versus conventional clinical methods in guiding management of heart failure patients-a systematic review. Heart Fail Rev. 2021;26(1):23–34.

Bazoukis G, Hall J, Loscalzo J, Antman E, Fuster V, Armoundas AA. The augmented intelligence in medicine: a framework for successful implementation. Cell Rep Med. (in press).

Saad Albawi OB, Saad Al-Azawi, Osman N. Ucan. Understanding of a convolutional neural network. IEEE. 2017.

Hu W, Hsieh MH, Lin CL. A novel atrial fibrillation prediction model for Chinese subjects: a nationwide cohort investigation of 682 237 study participants with random forest model. EP Europace. 2019;21(9):1307–12.

Erdenebayar U, Kim H, Park JU, Kang D, Lee KJ. Automatic prediction of atrial fibrillation based on convolutional neural network using a short-term normal electrocardiogram signal. J Korean Med Sci. 2019;34(7):e64.

Gao J, Zhang H, Lu P, Wang Z. An effective LSTM recurrent network to detect arrhythmia on imbalanced ECG dataset. J Healthc Eng. 2019.

Ommen SR, Mital S, Burke MA, Day SM, Deswal A, Elliott P, et al. 2020 AHA/ACC guideline for the diagnosis and treatment of patients with hypertrophic cardiomyopathy: executive summary: a report of the American College of Cardiology/American Heart Association Joint Committee on clinical practice guidelines. Circulation. 2020;142(25):e533–57.

Bhattacharya M, Lu DY, Kudchadkar SM, Greenland GV, Lingamaneni P, Corona-Villalobos CP, et al. Identifying ventricular arrhythmias and their predictors by applying machine learning methods to electronic health records in patients with hypertrophic cardiomyopathy (HCM-VAr-Risk Model). Am J Cardiol. 2019;123(10):1681–9.

Smole T, Zunkovic B, Piculin M, Kokalj E, Robnik-Sikonja M, Kukar M, et al. A machine learning-based risk stratification model for ventricular tachycardia and heart failure in hypertrophic cardiomyopathy. Comput Biol Med. 2021;135:104648.

Rowin EJ, Maron MS. The role of cardiac MRI in the diagnosis and risk stratification of hypertrophic cardiomyopathy. Arrhythm Electrophysiol Rev. 2016;5(3):197–202.

Kamp NJ, Chery G, Kosinski AS, Desai MY, Wazni O, Schmidler GS, et al. Risk stratification using late gadolinium enhancement on cardiac magnetic resonance imaging in patients with hypertrophic cardiomyopathy: a systematic review and meta-analysis. Prog Cardiovasc Dis. 2021;66:10–6.

Freitas P, Ferreira AM, Arteaga-Fernández E, de Oliveira AM, Mesquita J, Abecasis J, et al. The amount of late gadolinium enhancement outperforms current guideline-recommended criteria in the identification of patients with hypertrophic cardiomyopathy at risk of sudden cardiac death. J Cardiovasc Magn Reson. 2019;21(1):50.

Alis D, Guler A, Yergin M, Asmakutlu O. Assessment of ventricular tachyarrhythmia in patients with hypertrophic cardiomyopathy with machine learning-based texture analysis of late gadolinium enhancement cardiac MRI. Diagn Interv Imaging. 2020;101(3):137–46.

Lyon A, Ariga R, Minchole A, Mahmod M, Ormondroyd E, Laguna P, et al. Distinct ECG phenotypes identified in hypertrophic cardiomyopathy using machine learning associate with arrhythmic risk markers. Front Physiol. 2018;9:213.

Yalin K, Golcuk E, Aksu T. Cardiac magnetic resonance for ventricular arrhythmia therapies in patients with coronary artery disease. J Atr Fibrillation. 2015;8(1):1242.

Kotu LP, Engan K, Borhani R, Katsaggelos AK, Orn S, Woie L, et al. Cardiac magnetic resonance image-based classification of the risk of arrhythmias in post-myocardial infarction patients. Artif Intell Med. 2015;64(3):205–15.

Okada DR, Miller J, Chrispin J, Prakosa A, Trayanova N, Jones S, et al. Substrate spatial complexity analysis for the prediction of ventricular arrhythmias in patients with ischemic cardiomyopathy. Circ Arrhythm Electrophysiol. 2020;13(4):e007975.

Rogers AJ, Selvalingam A, Alhusseini MI, Krummen DE, Corrado C, Abuzaid F, et al. Machine learned cellular phenotypes in cardiomyopathy predict sudden death. Circ Res. 2021;128(2):172–84.

Bardy GH, Lee KL, Mark DB, Poole JE, Packer DL, Boineau R, et al. Amiodarone or an implantable cardioverter-defibrillator for congestive heart failure. N Engl J Med. 2005;352(3):225–37.

Kober L, Thune JJ, Nielsen JC, Haarbo J, Videbaek L, Korup E, et al. Defibrillator implantation in patients with nonischemic systolic heart failure. N Engl J Med. 2016;375(13):1221–30.

Meng F, Zhang Z, Hou X, Qian Z, Wang Y, Chen Y, et al. Machine learning for prediction of sudden cardiac death in heart failure patients with low left ventricular ejection fraction: study protocol for a retroprospective multicentre registry in China. BMJ Open. 2019;9(5):e023724.

Wu KC, Wongvibulsin S, Tao S, Ashikaga H, Stillabower M, Dickfeld TM, et al. Baseline and dynamic risk predictors of appropriate implantable cardioverter defibrillator therapy. J Am Heart Assoc. 2020;9(20):e017002.

Bazoukis G, Tse G, Korantzopoulos P, Liu T, Letsas KP, Stavrakis S, et al. Impact of implantable cardioverter-defibrillator interventions on all-cause mortality in heart failure patients: a meta-analysis. Cardiol Rev. 2019;27(3):160–6.

Shakibfar S, Krause O, Lund-Andersen C, Aranda A, Moll J, Andersen TO, et al. Predicting electrical storms by remote monitoring of implantable cardioverter-defibrillator patients using machine learning. Europace. 2019;21(2):268–74.

Ramchand J, Podugu P, Obuchowski N, Harb SC, Chetrit M, Milinovich A, et al. Novel approach to risk stratification in left ventricular non-compaction using a combined cardiac imaging and plasma biomarker approach. J Am Heart Assoc. 2021;10(8):e019209.

Rocon C, Tabassian M, Tavares de Melo MD, de Araujo Filho JA, Grupi CJ, Parga Filho JR, et al. Biventricular imaging markers to predict outcomes in non-compaction cardiomyopathy: a machine learning study. ESC Heart Fail. 2020;7(5):2431–9.

Al-Khatib SM, Stevenson WG, Ackerman MJ, Bryant WJ, Callans DJ, Curtis AB, et al. 2017 AHA/ACC/HRS guideline for management of patients with ventricular arrhythmias and the prevention of sudden cardiac death: executive summary: a report of the American College of Cardiology/American Heart Association Task Force on Clinical Practice Guidelines and the Heart Rhythm Society. Circulation. 2018;138(13):e210–71.

Ohira H, Ardle BM, deKemp RA, Nery P, Juneau D, Renaud JM, et al. Inter- and intraobserver agreement of (18)F-FDG PET/CT image interpretation in patients referred for assessment of cardiac sarcoidosis. J Nucl Med. 2017;58(8):1324–9.

Togo R, Hirata K, Manabe O, Ohira H, Tsujino I, Magota K, et al. Cardiac sarcoidosis classification with deep convolutional neural network-based features using polar maps. Comput Biol Med. 2019;104:81–6.

Coleman GC, Shaw PW, Balfour PC Jr, Gonzalez JA, Kramer CM, Patel AR, et al. Prognostic value of myocardial scarring on CMR in patients with cardiac sarcoidosis. JACC Cardiovasc Imaging. 2017;10(4):411–20.

Okada DR, Xie E, Assis F, Smith J, Derakhshan A, Gowani Z, et al. Regional abnormalities on cardiac magnetic resonance imaging and arrhythmic events in patients with cardiac sarcoidosis. J Cardiovasc Electrophysiol. 2019;30(10):1967–76.

Lee S, Zhou J, Li KHC, Leung KSK, Lakhani I, Liu T, et al. Territory-wide cohort study of Brugada syndrome in Hong Kong: predictors of long-term outcomes using random survival forests and non-negative matrix factorisation. Open Heart. 2021;8(1).

Tse G, Lee S, Zhou J, Liu T, Wong ICK, Mak C, et al. Territory-wide Chinese cohort of long QT syndrome: random survival forest and cox analyses. Front Cardiovasc Med. 2021;8:608592.

Clerx M, Heijman J, Collins P, Volders PGA. Predicting changes to INa from missense mutations in human SCN5A. Sci Rep. 2018;8(1):12797.

Lee S, Zhou J, Jeevaratnam K, Lakhani I, Wong WT, Wong IC, et al. Arrhythmic outcomes in catecholaminergic polymorphic ventricular tachycardia. medRxiv. 2021:2021.01.04.21249214.

Bergau DM, Liu C, Magin RL, Lu H. Machine-learning prediction of drug-induced cardiac arrhythmia: analysis of gene expression and clustering. Crit Rev Biomed Eng. 2018;46(3):245–75.

Yap CW, Cai CZ, Xue Y, Chen YZ. Prediction of torsade-causing potential of drugs by support vector machine approach. Toxicol Sci. 2004;79(1):170–7.

Sessa M, Mascolo A, Dalhoff KP, Andersen M. The risk of fractures, acute myocardial infarction, atrial fibrillation and ventricular arrhythmia in geriatric patients exposed to promethazine. Expert Opin Drug Saf. 2020;19(3):349–57.

Costabal FS, Matsuno K, Yao J, Perdikaris P, Kuhl E. Machine learning in drug development: characterizing the effect of 30 drugs on the QT interval using Gaussian process regression, sensitivity analysis, and uncertainty quantification. Comput Methods Appl Mech Eng. 2019;348:313–33.

Diller GP, Orwat S, Vahle J, Bauer UMM, Urban A, Sarikouch S, et al. Prediction of prognosis in patients with tetralogy of Fallot based on deep learning imaging analysis. Heart. 2020;106(13):1007–14.

Sun H, Liu Y, Song B, Cui X, Luo G, Pan S. Prediction of arrhythmia after intervention in children with atrial septal defect based on random forest. BMC Pediatr. 2021;21(1):280.

Guo K, Fu X, Zhang H, Wang M, Hong S, Ma S. Predicting the postoperative blood coagulation state of children with congenital heart disease by machine learning based on real-world data. Transl Pediatr. 2021;10(1):33.

Wu X, Yuan X, Wang W, Liu K, Qin Y, Sun X, et al. Value of a machine learning approach for predicting clinical outcomes in young patients with hypertension. Hypertension. 2020;75(5):1271–8.

Lee H, Shin SY, Seo M, Nam GB, Joo S. Prediction of ventricular tachycardia one hour before occurrence using artificial neural networks. Sci Rep. 2016;6:32390.

Chen Z, Ono N, Chen W, Tamura T, Altaf-Ul-Amin MD, Kanaya S, et al. The feasibility of predicting impending malignant ventricular arrhythmias by using nonlinear features of short heartbeat intervals. Comput Methods Programs Biomed. 2021;205:106102.

Taye GT, Hwang HJ, Lim KM. Application of a convolutional neural network for predicting the occurrence of ventricular tachyarrhythmia using heart rate variability features. Sci Rep. 2020;10(1):6769.

Taye GT, Shim EB, Hwang HJ, Lim KM. Machine learning approach to predict ventricular fibrillation based on QRS complex shape. Front Physiol. 2019;10:1193.

Panda R, Jain S, Tripathy RK, Acharya UR. Detection of shockable ventricular cardiac arrhythmias from ECG signals using FFREWT filter-bank and deep convolutional neural network. Comput Biol Med. 2020;124:103939.

Picon A, Irusta U, Alvarez-Gila A, Aramendi E, Alonso-Atienza F, Figuera C, et al. Mixed convolutional and long short-term memory network for the detection of lethal ventricular arrhythmia. PLoS ONE. 2019;14(5):e0216756.

Au-Yeung WM, Sevakula RK, Sahani AK, Kassab M, Boyer R, Isselbacher EM, et al. Real-time machine learning-based intensive care unit alarm classification without prior knowledge of the underlying rhythm. Eur Heart J Digit Health. 2021;2(3):437–45.

Christopoulos G, Graff-Radford J, Lopez CL, Yao X, Attia ZI, Rabinstein AA, et al. Artificial intelligence-electrocardiography to predict incident atrial fibrillation: a population-based study. Circ Arrhythm Electrophysiol. 2020;13(12):e009355.

Bashar SK, Ding EY, Walkey AJ, McManus DD, Chon KH. Atrial fibrillation prediction from critically ill sepsis patients. Biosensors (Basel). 2021;11(8).

Luongo G, Azzolin L, Schuler S, Rivolta MW, Almeida TP, Martinez JP, et al. Machine learning enables noninvasive prediction of atrial fibrillation driver location and acute pulmonary vein ablation success using the 12-lead ECG. Cardiovasc Digit Health J. 2021;2(2):126–36.

Saiz-Vivo J, Corino VDA, Hatala R, de Melis M, Mainardi LT. Heart rate variability and clinical features as predictors of atrial fibrillation recurrence after catheter ablation: a pilot study. Front Physiol. 2021;12:672896.

Ren Q, Cheng H, Han H. Research on machine learning framework based on random forest algorithm. In: AIP conference proceedings. 2017;1820(1).

Au-Yeung W, Reinhall PG, Bardy GH, Brunton SL. Development and validation of warning system of ventricular tachyarrhythmia in patients with heart failure with heart rate variability data. PLoS ONE. 2018;13(11):e0207215.

Marzec L, Raghavan S, Banaei-Kashani F, Creasy S, Melanson EL, Lange L, et al. Device-measured physical activity data for classification of patients with ventricular arrhythmia events: a pilot investigation. PLoS ONE. 2018;13(10):e0206153.

Acknowledgements

None.

Funding

This work was supported by a grant from the Institute of Precision Medicine (17UNPG33840017) of the American Heart Association (AHA), by the RICBAC Foundation, and by NIH grants 1 R01 HL135335-01, 1 R21 HL137870-01, 1 R21EB026164-01 and 3R21EB026164-02S1.

Author information

Contributions

CTC and GB carried out the literature search, data extraction and analysis, and drafted and critically revised the manuscript. SL, YL, TL and KPL interpreted the data and critically revised the manuscript. AAA and GT conceived the study, carried out the data analysis and interpretation, and drafted and critically revised the manuscript.

Ethics declarations

Ethical approval and consent to participate

Not applicable.

Consent for publication

All authors consent to publication.

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chung, C.T., Bazoukis, G., Lee, S. et al. Machine learning techniques for arrhythmic risk stratification: a review of the literature. Int J Arrhythm 23, 10 (2022). https://doi.org/10.1186/s42444-022-00062-2
