Abstract

Decision-making based on reliable evidence is more likely to lead to effective and efficient treatments. Evidence-based dentistry (EBD) was developed, like evidence-based medicine, to help clinicians apply current and valid research findings to their own clinical practice. Interpreting and appraising the literature are fundamental EBD skills. Systematic reviews (SRs) of randomized controlled trials (RCTs) are considered the highest level of evidence for evaluating the effectiveness of interventions. Furthermore, assessing the report of an RCT or an SR allows readers to judge how the study was designed and conducted.


Review

Introduction

We live in an age of information, innovation, and change. The number of studies published in the dental literature increases dramatically every year. Clinicians are required to base their decisions on the best available research evidence by critically appraising sound scientific evidence and incorporating it into everyday clinical practice [1]. Staying current is difficult, but it can be made easier by integrating basic evidence-based dentistry (EBD) skills, such as the ability to identify and critically appraise evidence, into everyday practice [2].

Defining evidence-based dentistry

The practice of evidence-based dentistry consists of dentists critically applying relevant research findings to the care of their patients [3]. The American Dental Association (ADA) defines evidence-based dentistry as "an approach to oral health care that requires the judicious integration of systematic assessments of clinically relevant scientific evidence, relating to the patient's oral and medical condition and history, with the dentist's clinical expertise and the patient's treatment needs and preferences." Evidence-based dentistry rests on three domains: the best available scientific evidence, the dentist's clinical skills and judgment, and the patient's needs and preferences. EBD is actually being practiced only when all three are given due consideration in the care of the individual patient [4].

Why is evidence-based dentistry important?

Practicing evidence-based dentistry supports quality improvement in health-care delivery by incorporating effective practices and eliminating those that are ineffective or inappropriate [5]. The main advantage of EBD is that it takes significant findings from large clinical trials and systematic reviews and applies them to the individual patient's needs. In this way, clinicians can deliver more focused treatment, and patients receive optimal care [6].

The five steps of evidence-based dentistry practice

The practice of EBD involves five essential steps [4],[7],[8]:

1. Developing a clear, clinically focused question
2. Identifying, summarizing, and synthesizing all relevant studies that directly answer the formulated question
3. Appraising the evidence in terms of validity and applicability
4. Combining research evidence with clinical expertise and patients' characteristics
5. Evaluating whether the previous steps have been implemented successfully

Choosing the best form of evidence

Some research designs are better suited than others to answering specific research questions. Rules of evidence have been established to grade evidence according to its strength, giving rise to the concept of the "hierarchy of evidence." The hierarchy provides a framework for rating evidence and indicates which study types should be given more weight when they address the same question [9]. At the top of the hierarchy are high-quality systematic reviews of randomized controlled trials, with or without meta-analysis, together with randomized controlled trials (RCTs) with a very low risk of bias (Table 1) [10].

Classification of studies based on research design

Clinical research can be either observational or experimental. In observational studies, the investigator observes patients at a point in time or over time, without intervening. They may be cross-sectional, providing a snapshot picture of a population at a particular point in time, or longitudinal, following the individuals over a period of time. Observational studies may also be prospective, when the data are collected forward in time from the beginning of the study, or retrospective, in which the information is obtained by going backwards in time [11],[12].

The most common types of observational studies are:

Cohort studies

In a cohort study, people are grouped according to whether or not they have been exposed to a factor, such as a treatment, and are then followed over a period of time to record whether an event occurs [11].

Case-control studies

In a case-control study, a sample of cases is compared with a group of controls who do not have the outcome of interest. The cases and controls are then categorized according to whether or not they have been exposed to the risk factor [12].
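Because a case-control study selects participants by outcome status, the risk of the outcome cannot be estimated directly, and the association is usually summarized as an odds ratio of exposure. The minimal Python sketch below computes that odds ratio as the cross-product (a × d)/(b × c) of a 2 × 2 table; the function name and the counts are illustrative assumptions, not data from the cited studies.

```python
def exposure_odds_ratio(exposed_cases: int, unexposed_cases: int,
                        exposed_controls: int, unexposed_controls: int) -> float:
    """Odds ratio from a case-control 2x2 table (cross-product ratio a*d / b*c).

    a = exposed cases,    b = exposed controls,
    c = unexposed cases,  d = unexposed controls.
    """
    a, b = exposed_cases, exposed_controls
    c, d = unexposed_cases, unexposed_controls
    if b == 0 or c == 0:
        raise ValueError("zero cell: the cross-product ratio is undefined")
    return (a * d) / (b * c)


# Hypothetical data: 40 of 100 cases and 20 of 100 controls were exposed.
or_value = exposure_odds_ratio(exposed_cases=40, unexposed_cases=60,
                               exposed_controls=20, unexposed_controls=80)
print(round(or_value, 2))  # 2.67
```

An odds ratio above 1 means that exposure was more common among cases than among controls; it says nothing by itself about cause.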

Cross-sectional studies

Cross-sectional studies investigate an association between a possible risk factor and a condition. Data on exposure to the causal agent and on the outcome are collected simultaneously [13].

Case reports and case series

A case report is a descriptive report of a single patient. A case series is a descriptive report on a series of patients with a condition of interest. No control group is involved [14].

An experimental study is one in which the investigator intervenes in order to observe the effect on the outcome being studied [12]. Experimental studies can be either controlled (a comparison group exists) or uncontrolled. Sutherland [11] suggests that uncontrolled studies provide very weak evidence and should not be used as a basis for decision-making. Experimental studies can also be distinguished by whether comparisons are made between subjects (parallel-group design) or within subjects (matched, cross-over, or split-mouth designs). The clinical trial is a particular form of experimental study performed on humans [12]. A well-designed trial compares groups formed by randomly allocating patients to treatments. This type of study is called a randomized controlled clinical trial (RCT) [15].

The randomized controlled clinical trial

The randomized controlled clinical trial is a specific type of scientific experiment with several distinguishing features. A fundamental feature of a clinical trial is that it is comparative: the results of a group of patients receiving the treatment under investigation (treatment group) are compared with those of another group of patients with similar characteristics who are not receiving that treatment (control group) [15]. In an RCT, participants are randomly assigned to the experimental or control group in such a way that each participant has an equal probability of being assigned to any given group (randomization) [16]. Because the groups are expected to be alike apart from the treatment being compared, any differences in outcomes can be attributed to the intervention [17].
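The paragraph above describes simple randomization, in which every participant has the same chance of entering each group. Many trials instead use permuted blocks so that group sizes stay balanced throughout recruitment. The Python sketch below shows both; the block size of 4, the seed, and the function names are illustrative assumptions rather than a recommended scheme.

```python
import random

ARMS = ("treatment", "control")


def simple_randomization(n: int, rng: random.Random) -> list[str]:
    """Each participant independently has the same chance of entering either arm."""
    return [rng.choice(ARMS) for _ in range(n)]


def block_randomization(n: int, rng: random.Random, block_size: int = 4) -> list[str]:
    """Permuted-block randomization for 1:1 allocation.

    Every complete block contains equal numbers of each arm in random order,
    so at any point the arm sizes differ by at most block_size // 2.
    """
    if block_size <= 0 or block_size % 2:
        raise ValueError("block_size must be a positive even number for 1:1 allocation")
    sequence: list[str] = []
    while len(sequence) < n:
        block = list(ARMS) * (block_size // 2)
        rng.shuffle(block)  # random order within the block
        sequence.extend(block)
    return sequence[:n]


# A fixed seed makes the allocation list reproducible for auditing.
rng = random.Random(2014)
schedule = block_randomization(10, rng)
print(schedule.count("treatment"), schedule.count("control"))
```

In practice, such a list would be prepared and held by someone who is not involved in enrolling participants, so that the next assignment cannot be foreseen; small fixed block sizes can make later assignments predictable, which is why some trials vary the block size.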

An essential feature of an RCT is that the allocation sequence is concealed from the investigators who assign participants to treatment groups (allocation concealment). Allocation concealment should not be confused with blinding, which refers to whether patients and investigators know which treatment was received [18]. Even though the validity of an RCT depends mainly on the randomization procedure, it is almost inevitable that some participants will not complete the study after randomization [19]. Intention-to-treat (ITT) analysis is a strategy that ensures that all patients are analyzed in the groups to which they were originally randomized, regardless of whether they withdrew or did not receive the treatment. Applying a true ITT analysis requires some assumptions and some form of imputation for the missing data [20],[21].
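To make the distinction concrete, the Python sketch below compares an intention-to-treat estimate with a per-protocol estimate (which analyzes only participants who received the allocated treatment) on four hypothetical participants. Treating missing outcomes as failures is only one simple, conservative imputation assumption chosen for illustration; real trials use a range of imputation methods.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Participant:
    randomized_arm: str          # arm assigned at randomization
    received_allocated: bool     # False if the participant never started or switched treatment
    success: Optional[bool]      # observed outcome; None if lost to follow-up


def success_rate(group: list[Participant], impute_missing_as_failure: bool) -> float:
    outcomes = []
    for p in group:
        if p.success is not None:
            outcomes.append(p.success)
        elif impute_missing_as_failure:
            outcomes.append(False)  # conservative imputation assumption
    if not outcomes:
        raise ValueError("no outcomes to analyze")
    return sum(outcomes) / len(outcomes)


def intention_to_treat(participants: list[Participant], arm: str) -> float:
    # Everyone is analyzed in the arm they were randomized to, whatever happened later.
    group = [p for p in participants if p.randomized_arm == arm]
    return success_rate(group, impute_missing_as_failure=True)


def per_protocol(participants: list[Participant], arm: str) -> float:
    # Only participants who received the allocated treatment and have an observed outcome.
    group = [p for p in participants if p.randomized_arm == arm and p.received_allocated]
    return success_rate(group, impute_missing_as_failure=False)


trial = [
    Participant("treatment", True, True),
    Participant("treatment", True, True),
    Participant("treatment", False, False),  # never started the allocated treatment
    Participant("treatment", True, None),    # dropped out before the outcome was measured
]
print(intention_to_treat(trial, "treatment"), per_protocol(trial, "treatment"))  # 0.5 1.0
```

The per-protocol estimate looks more favorable because it drops the participants who did worse; keeping everyone in their randomized group preserves the balance that randomization created.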

The RCT is the most scientifically rigorous method of hypothesis testing available [20] and is regarded as the gold standard for evaluating the effectiveness of interventions [17]. Its main advantage is that it is the only design that allows investigators to balance unknown prognostic factors at baseline, thereby protecting against selection bias. Random allocation does not, however, protect RCTs against other types of bias (Table 2) [21]. Bias refers to a systematic (not random) deviation from the truth [22].

Most randomized controlled clinical trials have parallel designs in which each group of participants is exposed to only one of the interventions of interest (Figure 1) [23].

Figure 1. Flow diagram of the progress through the phases of a parallel RCT of two groups. Modified from CONSORT 2010 [23].

Understanding systematic reviews

It is well recognized that health-care decisions should not be based on one or two studies alone but should take into account all the scientific research available on a specific topic [24]. Still, applying research to practice can be time-consuming, as it is often impractical for readers to track down and review all of the primary studies [2],[25]. Systematic reviews are particularly useful for synthesizing studies, even those with conflicting findings [24]. A systematic review is a form of research that uses explicit methods to identify comprehensively all studies on a specific focused question, critically appraise them, and synthesize the world literature systematically [26]-[28]. The explicit methods used in systematic reviews limit bias and should improve the reliability and accuracy of conclusions [29].

Developing a systematic review requires a number of discrete steps [22]:

1. Defining an appropriate health-care question
2. Searching the literature
3. Assessing the selected studies
4. Synthesizing the findings
5. Placing the findings in context
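Searching the literature usually means turning the focused question into a Boolean query, with synonyms for each concept combined by OR and the concepts combined by AND. The Python sketch below assembles such a string; the concept names and search terms are hypothetical, and each database (for example, PubMed) has its own field tags and syntax that a real search strategy would add.

```python
def build_query(concepts: dict[str, list[str]]) -> str:
    """Combine synonyms with OR inside each concept, and concepts with AND."""
    blocks = []
    for name, terms in concepts.items():
        if not terms:
            raise ValueError(f"concept {name!r} has no search terms")
        # Quote multi-word phrases so they are searched as phrases.
        quoted = [f'"{t}"' if " " in t else t for t in terms]
        blocks.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(blocks)


# Hypothetical orthodontic question; the terms are illustrative only.
query = build_query({
    "population": ["orthodontic patients", "malocclusion"],
    "intervention": ["self-ligating brackets"],
    "design": ["randomized controlled trial", "randomised controlled trial"],
})
print(query)
```

Keeping each concept as a separate OR block makes it easy to broaden one concept (for example, by adding spelling variants) without changing the others.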

What are Cochrane reviews?

Cochrane reviews are systematic reviews of primary research in human health care and health policy, undertaken by members of The Cochrane Collaboration [30] following a specific methodology [31]. The Cochrane Collaboration is an international organization that aims to organize medical research information systematically and to promote the accessibility of systematic reviews of the effects of health-care interventions [31].

What is a meta-analysis?

Frequently, data from all studies included in a systematic review are pooled quantitatively and reanalyzed; this is called a meta-analysis [32]. This technique increases the size of the "overall sample" and ultimately enhances the statistical power of the analysis [33]. A meta-analysis can be included in a well-executed systematic review, but quantitative synthesis should only be applied under certain conditions, for example, when the studies to be combined are clinically and statistically homogeneous. If the original review was biased or unsystematic, the meta-analysis may provide a false measure of the treatment effect [34].
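The pooling step can be illustrated with the inverse-variance fixed-effect method, one of several meta-analytic models (random-effects models are often preferred when studies differ). The Python sketch below weights each study by the inverse of its variance and also reports Cochran's Q and I², common statistics for checking the statistical homogeneity mentioned above; the study data and the 1.96 multiplier for a 95% interval are illustrative assumptions.

```python
import math


def fixed_effect_meta(effects: list[float], std_errors: list[float]) -> dict[str, float]:
    """Inverse-variance fixed-effect pooling with Cochran's Q and the I2 statistic."""
    if len(effects) != len(std_errors) or len(effects) < 2:
        raise ValueError("need at least two studies with matching standard errors")
    weights = [1 / se**2 for se in std_errors]  # more precise studies weigh more
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1 / sum(weights))
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0  # share of variation beyond chance
    return {
        "pooled": pooled,
        "se": pooled_se,
        "ci_low": pooled - 1.96 * pooled_se,
        "ci_high": pooled + 1.96 * pooled_se,
        "Q": q,
        "I2": i2,
    }


# Hypothetical mean differences (mm) and standard errors from three trials.
result = fixed_effect_meta([0.4, 0.6, 0.5], [0.2, 0.25, 0.1])
print({k: round(v, 3) for k, v in result.items()})
```

A large I² would suggest that the studies are too heterogeneous to be summarized by a single fixed-effect estimate.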

Principles of critical appraisal

For any clinician, the key to assessing the usefulness of a clinical study and applying its results to a particular patient is critical appraisal. Critical appraisal is an essential evidence-based dentistry skill that involves the systematic assessment of research, allowing clinicians to apply valid evidence efficiently. When critically appraising research, three main areas should be assessed: validity, importance, and applicability [35].

A study that is sufficiently free from bias is considered to have internal validity [36]. Common sources of bias that threaten internal validity relate to allocation, blinding, data collection methods, and dropouts [37]. External validity refers to whether the study asks an appropriate research question and whether its results reflect what can be expected in the population of interest (generalizability) [22].

Appraising a randomized controlled trial

Randomized controlled trials are the standard for testing the efficacy of health-care interventions. The better an RCT is designed and conducted, the more likely it is to provide a true estimate of the effect of an intervention. When reading the report of an RCT, we should focus on certain issues in order to decide whether the results are reliable and applicable to our patients [38].

The validity of the trial methodology

When appraising an RCT, readers should evaluate the following:

1. Clear, appropriate research question

The clinical question should specify the type of participants, interventions, and outcomes that are of interest [22].

2. Randomization

3. Allocation concealment

4. Blinding

5. Intention-to-treat analysis

The concepts of randomization, allocation concealment, blinding, and ITT analysis were explained earlier.

6. Statistical power

McAlister et al. define the statistical power of an RCT as "the ability of the study to detect a difference between the groups when such a difference exists." The sample must be large enough for the trial to have a good chance of answering the research question; increasing the sample size increases the statistical power of the trial [39].
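The link between sample size and power can be made explicit with the usual normal-approximation formula for comparing two means, n = 2σ²(z_{1-α/2} + z_{1-β})²/Δ² per group, where Δ is the smallest difference worth detecting, σ the standard deviation, α the significance level, and 1 - β the power. The Python sketch below implements it; the 0.5 mm difference and 1 mm standard deviation are hypothetical, and the formula ignores dropouts, which would require recruiting more participants.

```python
import math
from statistics import NormalDist


def n_per_group(delta: float, sd: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Participants per arm for a two-sided comparison of two means (normal approximation)."""
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)    # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)             # about 0.84 for 80% power
    n = 2 * (z_alpha + z_beta) ** 2 * sd**2 / delta**2
    return math.ceil(n)                   # round up to whole participants


print(n_per_group(delta=0.5, sd=1.0))              # 63
print(n_per_group(delta=0.5, sd=1.0, power=0.90))  # 85
```

Raising the desired power from 80% to 90% increases the required sample from 63 to 85 participants per group, which illustrates why underpowered trials are common when recruitment is limited.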

The magnitude and significance of the treatment effect

Magnitude refers to the size of the treatment effect. The effect size represents the degree to which the two interventions differ. Effect size in RCTs may be reported in various ways, including the mean difference, risk ratio, odds ratio, risk difference, rate ratio, hazard ratio, and number needed to treat [40],[41].
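For a binary outcome, several of these measures come from the same four counts. The Python sketch below computes the risk ratio, risk difference, odds ratio, and number needed to treat (NNT) for a hypothetical two-arm trial; the class and function names are illustrative, and the code assumes no zero or 100% event rates, which would make some ratios undefined.

```python
import math
from dataclasses import dataclass


@dataclass
class BinaryOutcomes:
    events_treatment: int
    total_treatment: int
    events_control: int
    total_control: int


def effect_measures(t: BinaryOutcomes) -> dict[str, float]:
    risk_t = t.events_treatment / t.total_treatment
    risk_c = t.events_control / t.total_control
    risk_difference = risk_t - risk_c
    return {
        "risk_ratio": risk_t / risk_c,
        "risk_difference": risk_difference,
        "odds_ratio": (risk_t / (1 - risk_t)) / (risk_c / (1 - risk_c)),
        # NNT: patients to treat to prevent (or, if harmful, cause) one extra event.
        "nnt": 1 / abs(risk_difference) if risk_difference else math.inf,
    }


# Hypothetical trial: 10/100 failures with the new treatment vs 20/100 with control.
print(effect_measures(BinaryOutcomes(10, 100, 20, 100)))
```

Here the risk of failure is halved (risk ratio 0.5), the absolute reduction is 10 percentage points, and about 10 patients would need the new treatment to prevent one failure; relative and absolute measures can give quite different impressions of the same result.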

Statistical significance indicates how unlikely it is that an observed relationship is due to random chance alone. Probability (p) values and confidence intervals (CI) are used to assess statistical significance [42]. A statistically significant result indicates that there is probably a relationship between the variables of interest, but it does not show whether this relationship is important. Clinical significance refers to the practical importance of the effect of an intervention for patients and health-care providers [43].
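The relationship between the p value and the confidence interval can be seen in a simple large-sample (Wald) calculation for a risk difference between two arms. The Python sketch below uses hypothetical counts; the Wald approximation is an assumption that performs poorly with small samples or rare events, where exact or other methods are preferred.

```python
import math
from statistics import NormalDist


def risk_difference_ci(events_1: int, n_1: int, events_2: int, n_2: int,
                       level: float = 0.95) -> tuple[float, tuple[float, float], float]:
    """Risk difference with a Wald confidence interval and two-sided p value."""
    p1, p2 = events_1 / n_1, events_2 / n_2
    rd = p1 - p2
    se = math.sqrt(p1 * (1 - p1) / n_1 + p2 * (1 - p2) / n_2)  # unpooled standard error
    if se == 0:
        raise ValueError("standard error is zero; the Wald approximation does not apply")
    z_crit = NormalDist().inv_cdf(1 - (1 - level) / 2)         # about 1.96 for 95%
    ci = (rd - z_crit * se, rd + z_crit * se)
    p_value = 2 * (1 - NormalDist().cdf(abs(rd) / se))          # two-sided Wald test
    return rd, ci, p_value


rd, (low, high), p = risk_difference_ci(10, 100, 20, 100)
print(f"RD = {rd:.3f}, 95% CI {low:.3f} to {high:.3f}, p = {p:.4f}")
```

For 10/100 versus 20/100 events, the 95% CI of roughly -0.198 to -0.002 excludes zero and p is about 0.046, so the result is statistically significant; whether a 10-percentage-point difference matters to patients is the separate question of clinical significance.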

Generalizability of trial results

Evidence that a treatment is effective does not mean that all patients will necessarily benefit from it. A clinician should therefore always examine how similar the study participants are to their own patients and weigh the benefits of the therapy against its adverse events [38].

Tools assessing the quality of RCTs

Many tools have been proposed for assessing the quality of studies. Most are either scales, in which various quality elements are scored, or checklists, in which specific questions are asked [44]. One method used to critically appraise randomized trials is the Risk of Bias Tool, developed by The Cochrane Collaboration, which is based on a critical evaluation of six domains [22]. The Critical Appraisal Skills Programme (CASP) also aims to help people develop the skills needed to interpret scientific evidence. CASP has released critical appraisal checklists for different types of research studies that cover three main areas of a study: validity, results, and clinical relevance [45]. Likewise, the Scottish Intercollegiate Guidelines Network (SIGN) has developed a methodology checklist to facilitate the assessment of an RCT; it consists of three sections, each covering key topics of a trial [46].
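Domain-based tools such as the Risk of Bias Tool record a judgment (low, unclear, or high risk) for each domain and then summarize them. The Python sketch below uses one version of the six domains and a simple summary rule in which any high-risk domain makes the trial high risk and any unclear domain makes it unclear; treating every domain as equally important is a simplifying assumption, and the Cochrane Handbook should be consulted for the actual guidance.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    UNCLEAR = "unclear"
    HIGH = "high"


# One version of the six domains; the labels are illustrative.
DOMAINS = (
    "sequence generation",
    "allocation concealment",
    "blinding",
    "incomplete outcome data",
    "selective outcome reporting",
    "other sources of bias",
)


def overall_risk(judgments: dict[str, Risk]) -> Risk:
    """Summarize domain judgments: worst judgment wins (simplifying assumption)."""
    missing = set(DOMAINS) - set(judgments)
    if missing:
        raise ValueError(f"no judgment recorded for: {sorted(missing)}")
    values = [judgments[d] for d in DOMAINS]
    if Risk.HIGH in values:
        return Risk.HIGH
    if Risk.UNCLEAR in values:
        return Risk.UNCLEAR
    return Risk.LOW


trial = {d: Risk.LOW for d in DOMAINS}
trial["blinding"] = Risk.UNCLEAR  # e.g., the report does not say who was blinded
print(overall_risk(trial).value)  # unclear
```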

Tools assessing the reporting quality of RCTs

To assess a trial accurately, readers need complete and transparent information on its methodology and findings. However, the reporting of RCTs is often incomplete [45]-[50], compounding the problems arising from poor methodology [51]-[56]. The Consolidated Standards of Reporting Trials (CONSORT) statement, which is aimed at trials with a parallel design, consists of a checklist of essential items that should be included in the report of an RCT, together with a diagram showing the flow of participants through the phases of the trial. The objective of CONSORT is to guide authors in improving the reporting of their trials. It can also be used by readers, peer reviewers, and editors to help them critically appraise reports of RCTs [23].

Many clinicians base their assessment of a trial, or even their clinical decisions, on the information reported in the abstract, because they read, or have access to, only the abstracts of journal articles [57]. A well-written and well-constructed abstract should help readers quickly assess the validity and applicability of the trial findings [58]. Several studies have highlighted the need to improve the reporting of RCTs in conference and journal abstracts [59]. CONSORT for Abstracts (Table 3) provides a list of essential items that authors should consider when reporting a randomized trial in any journal or conference abstract [60].

Appraising systematic reviews and meta-analyses

Calling a review systematic does not make it valid, even though systematic reviews are considered the highest level of evidence in the hierarchy of studies evaluating the effectiveness of interventions [10]. Readers need to be sure that the methodology used to synthesize the relevant information was appropriate. This can be checked by answering a few critical questions [61]:

1. Did the review have a predetermined protocol?
2. Was the question well formulated?
3. Did the review include the appropriate types of studies?
4. Were all relevant studies identified using a comprehensive method?
5. Were the included studies assessed in terms of their validity?
6. Were the data abstracted appropriately from each study?
7. How was the information synthesized, and was the synthesis appropriate?

Tools assessing the quality of systematic reviews and meta-analyses

A tool commonly used to critically appraise the quality of a systematic review (with or without meta-analysis) is the Assessment of Multiple Systematic Reviews (AMSTAR), an 11-item questionnaire that assesses the presence of key methodological features [62]. SIGN has also developed methodology checklists for the most common types of studies, including systematic reviews; the checklist for systematic reviews (SRs) covers all their main topics to facilitate critical appraisal [46].

Tools assessing the reporting quality of systematic reviews and meta-analyses

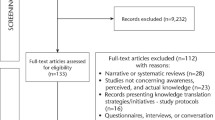

The Meta-analysis of Observational Studies in Epidemiology (MOOSE) guidelines were designed to improve the reporting quality of reviews of observational studies [63]. The MOOSE checklist includes guidelines for reporting the background, search strategy, methods, results, discussion, and conclusions of a study [64]. The Institute of Medicine (IOM) of the National Academies has also developed standards for conducting systematic reviews to help authors improve the reporting quality of their studies [65]. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) Statement focuses on ways in which authors can ensure the transparent and complete reporting of systematic reviews and meta-analyses. PRISMA consists of a 27-item checklist of items essential to the reporting of SRs and meta-analyses and a flow diagram illustrating the flow of information through the four phases of a systematic review [66].

The main function of the abstract of a systematic review is to signal its systematic methodology. However, despite the guidance on abstracts given in PRISMA, the quality of abstracts of systematic reviews, as with those of RCTs, has remained poor [67]. PRISMA for Abstracts is a 12-item checklist of what to report when writing the abstract of an SR with or without meta-analysis (Table 4) [68].

Applying evidence into practice - introducing GRADE

Health-care professionals are urged to base their clinical decisions on the best relevant evidence. Clinicians are therefore expected not only to deliver a treatment but to choose the best treatment option for their patients' needs and preferences, balancing all benefits and harms. However, translating research findings into clinical guidelines can be challenging, and users of guidelines need to be confident that they can rely on existing recommendations. Grading of Recommendations Assessment, Development and Evaluation (GRADE) is a system for grading the quality of evidence in order to develop recommendations that are as evidence-based as possible [69]. The GRADE methodology begins with the formulation of a clear clinical question, followed by the identification of all relevant outcomes from systematic reviews, which are rated according to how important they are for the development of a recommendation [70]. Judgments about the quality of the evidence for important outcomes are then made, and specific recommendations are formulated based on the strength of the evidence and the net benefits [69]. GRADE is more than a rating system: it provides a structured process for determining the strength of recommendations, and its explicit and comprehensive approach ensures that the judgments made are transparent [71].
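The rating step can be sketched as simple bookkeeping: in GRADE, evidence from randomized trials starts as high quality and evidence from observational studies as low, is rated down for concerns such as risk of bias, inconsistency, indirectness, imprecision, and publication bias, and may be rated up, for example, for a large effect. The Python sketch below encodes only that arithmetic; in GRADE itself these are structured judgments rather than a mechanical score, and the function name and inputs are illustrative assumptions.

```python
from typing import Optional

LEVELS = ("very low", "low", "moderate", "high")
DOWNGRADE_REASONS = ("risk of bias", "inconsistency", "indirectness",
                     "imprecision", "publication bias")
UPGRADE_REASONS = ("large effect", "dose-response gradient", "plausible confounding")


def grade_quality(randomized: bool,
                  downgrades: Optional[dict[str, int]] = None,
                  upgrades: Optional[dict[str, int]] = None) -> str:
    """Bookkeeping for a GRADE-style quality rating of the evidence for one outcome.

    RCT evidence starts as high, observational evidence as low; each serious concern
    moves the rating down (usually by 1 or 2 levels) and special strengths move it up.
    """
    downgrades = downgrades or {}
    upgrades = upgrades or {}
    unknown = (set(downgrades) - set(DOWNGRADE_REASONS)) | (set(upgrades) - set(UPGRADE_REASONS))
    if unknown:
        raise ValueError(f"unrecognized reasons: {sorted(unknown)}")
    level = 3 if randomized else 1          # index into LEVELS
    level -= sum(downgrades.values())
    level += sum(upgrades.values())
    return LEVELS[max(0, min(level, 3))]   # clamp to the four levels


print(grade_quality(True, downgrades={"risk of bias": 1, "imprecision": 1}))  # low
print(grade_quality(False, upgrades={"large effect": 1}))                      # moderate
```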

Conclusions

Clinical decisions guided by valid and reliable research findings are considered more focused and effective than those that are not [1]. Evidence indicates that controlled trials and systematic reviews with inadequate methodology are more susceptible to bias [6],[14]. Complete reporting of RCT and SR abstracts is particularly important for improving access to evidence. The development of standards for reporting scientific results is essential for the dissemination of new knowledge [58].

Authors' contributions

JK drafted the manuscript. AP revised, edited, and reviewed the information in the article. NP and PM edited the final manuscript. All authors read and approved the final manuscript.

References

Akobeng AK: Evidence-based child health 1: principles of evidence-based medicine. Arch Dis Child 2005, 90: 837–840. 10.1136/adc.2005.071761

Green S: Systematic reviews and meta-analysis. Singapore Med J 2005, 46: 270–273. quiz 274.

Richardson WS, Wilson MC, Nishikawa J, Hayward RS: The well-built clinical question: a key to evidence-based decisions. ACP J Club 1995, 123: A12-A13.

Policy on evidence-based dentistry: introduction. 2008.

Gray GE, Pinson LA: Evidence-based medicine and psychiatric practice. Psychiatr Q 2003, 74: 387–399. 10.1023/A:1026091611425

Prisant LM: Hypertension. In Current Diagnosis. Edited by: Conn RB, Borer WZ, Snyder JW. W.B. Saunders, Philadelphia; 1997:349–359.

Brownson RC, Baker EA, Leet TL, Gillespie KN, True WN: Evidence-Based Public Health. Oxford University Press, New York; 2003.

Sackett DL: Evidence-based medicine. Semin Perinatol 1997, 21: 3–5. 10.1016/S0146-0005(97)80013-4

Rychetnik L, Hawe P, Waters E, Barratt A, Frommer M: A glossary for evidence based public health. J Epidemiol Community Health 2004, 58: 538–545. 10.1136/jech.2003.011585

Harbour R, Miller J: A new system for grading recommendations in evidence based guidelines. Br Med J 2001, 323: 334. 10.1136/bmj.323.7308.334

Sutherland SE: Evidence-based dentistry: part IV: research design and levels of evidence. J Can Dent Assoc 2001, 67: 375–378.

Petrie A, Bulman JS, Osborn JF: Further statistics in dentistry part 2: research designs 2. Br Dent J 2002, 193: 435–440. 10.1038/sj.bdj.4801591

Coggon D, Rose G, Barker DJP: Case-control and cross-sectional studies. In Epidemiology for the Uninitiated. BMJ Publishing Group, London; 1997:46–50.

Straus SE, Richardson WS, Glasziou P, Haynes RB: Evidence-Based Medicine: How to Practice and Teach EBM. Elsevier Churchill Livingstone, Philadelphia; 2005.

Petrie A, Bulman JS, Osborn JF: Further statistics in dentistry Part 3: clinical trials 1. Br Dent J 2002, 193: 495–498. 10.1038/sj.bdj.4801608

Lang TA, Secic M: How to Report Statistics in Medicine. American College of Physicians, Philadelphia; 1997.

McGovern DPB: Randomized controlled trials. In Key Topics in Evidence Based Medicine. Edited by: McGovern DPB, Valori RM, Summerskill WSM. BIOS Scientific Publishers, Oxford; 2001:26–29.

Chalmers TC, Levin H, Sacks HS, Reitman D, Berrier J, Nagalingam R: Meta-analysis of clinical trials as a scientific discipline: I: control of bias and comparison with large co-operative trials. Statistics in Medicine 1987, 6: 315–328. 10.1002/sim.4780060320

Summerskill WSM: Intention to treat. In Key Topics in Evidence Based Medicine. Edited by: McGovern DPB, Valori RM, Summerskill WSM. BIOS Scientific Publishers, Oxford; 2001:105–107.

Last JM: A Dictionary of Epidemiology. Oxford University Press, New York; 2001.

Jadad AR: Randomized Controlled Trials: A User's Guide. BMJ Books, London; 1998.

Higgins JPT, Green S, editors: Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. The Cochrane Collaboration; 2011. [www.cochrane-handbook.org] Accessed 1 Mar 2013.

Moher D, Hopewell S, Schulz KF, Montori V, Gøtzsche PC, Devereaux PJ, Elbourne D, Egger M, Altman DG: CONSORT 2010 explanation and elaboration: updated guidelines for reporting parallel group randomized trials. BMJ 2010, 340: c869. 10.1136/bmj.c869

Akobeng AK: Understanding systematic reviews and meta-analysis. Arch Dis Child 2005, 90: 845–848. 10.1136/adc.2004.058230

Garg AX, Iansavichus AV, Kastner M, Walters LA, Wilczynski N, McKibbon KA, Yang RC, Rehman F, Haynes RB: Lost in publication: half of all renal practice evidence is published in non-renal journals. Kidney Int 2006, 70: 1995–2005.

Sackett DL, Straus SE, Richardson WS, Rosenberg W, Haynes RB: Evidence-Based Medicine: How to Practice and Teach EBM. Churchill Livingstone, London; 2000.

Cook DJ, Mulrow CD, Haynes RB: Systematic reviews: synthesis of best evidence for clinical decisions. Ann Intern Med 1997, 126: 376–380. 10.7326/0003-4819-126-5-199703010-00006

Oxman AD, Cook DJ, Guyatt GH: Users' guides to the medical literature: VI how to use an overview evidence-based medicine working group. JAMA 1994, 272: 1367–1371. 10.1001/jama.1994.03520170077040

Jorgensen AW, Hilden J, Gotzsche PC: Cochrane reviews compared with industry supported meta-analyses and other meta-analyses of the same drugs: systematic review. BMJ 2006, 333: 782. 10.1136/bmj.38973.444699.0B

Oxford, UK: The Cochrane Collaboration; c2004–2006. 2013.

Hill GB: Archie Cochrane and his legacy: an internal challenge to physicians' autonomy? J Clin Epidemiol 2000, 53: 1189–1192. 10.1016/S0895-4356(00)00253-5

Mulrow CD: Rationale for systematic reviews. BMJ 1994, 309: 597–599. 10.1136/bmj.309.6954.597

Fouque D, Laville M, Haugh M, Boissel JP: Systematic reviews and their roles in promoting evidence-based medicine in renal disease. Nephrol Dial Transplant 1996, 11: 2398–2401. 10.1093/oxfordjournals.ndt.a027201

Bailar JC 3rd: The promise and problems of meta-analysis. N Engl J Med 1997, 337: 559–561. 10.1056/NEJM199708213370810

Rosenberg W, Donald A: Evidence based medicine: an approach to clinical problem-solving. BMJ 1995, 310: 1122–1126. 10.1136/bmj.310.6987.1122

Burls A: What Is Critical Appraisal?. Hayward Group, London; 2009.

Attia A: Bias in RCTs: confounders, selection bias and allocation concealment. Middle East Fertil Soc J 2005, 3: 258–261.

Akobeng AK: Evidence based child health 2: understanding randomized controlled trials. Arch Dis Child 2005, 90: 840–844. 10.1136/adc.2004.058222

McAlister FA, Clark HD, van Walraven C, Straus SE, Lawson FM, Moher D, Mulrow CD: The medical review article revisited: has the science improved? Ann Intern Med 1999, 131: 947–951. 10.7326/0003-4819-131-12-199912210-00007

Pandis N: The effect size. Am J Orthod Dentofacial Orthop 2012, 142: 739–740. 10.1016/j.ajodo.2012.06.011

Akobeng AK: Understanding measures of treatment effect in clinical trials. Arch Dis Child 2005, 90: 54–56. 10.1136/adc.2004.052233

Kremer LCM, Van Dalen EC: Tips and tricks for understanding and using SR results - no 11: P -values and confidence intervals. Evid Base Child Health 2008, 3: 904–906. 10.1002/ebch.260

Kazdin AE: The meanings and measurement of clinical significance. J Consult Clin Psychol 1999, 67: 332–339. 10.1037/0022-006X.67.3.332

Juni P, Altman DG, Egger M: Systematic reviews in health care: assessing the quality of controlled clinical trials. Br Med J 2001, 323: 42–46. 10.1136/bmj.323.7303.42

Evidence-Based Medicine Working Group: Evidence-based medicine: a new approach to teaching the practice of medicine. JAMA 1992, 268: 2420–2425. 10.1001/jama.1992.03490170092032

SIGN 50: a Guideline Developer's Handbook. 2013.

van Zanten SJ V, Cleary C, Talley NJ, Peterson TC, Nyren O, Bradley LA, Verlinden M, Tytgat GN: Drug treatment of functional dyspepsia: a systematic analysis of trial methodology with recommendations for design of future trials. Am J Gastroenterol 1996, 91: 660–673.

Talley NJ, Owen BK, Boyce P, Paterson K: Psychological treatments for irritable bowel syndrome: a critique of controlled treatment trials. Am J Gastroenterol 1996, 91: 277–283.

Adetugbo K, Williams H: How well are randomized controlled trials reported in the dermatology literature? Arch Dermatol 2000, 136: 381–385. 10.1001/archderm.136.3.381

Kjaergard LL, Nikolova D, Gluud C: Randomized clinical trials in hepatology: predictors of quality. Hepatology 1999, 30: 1134–1138. 10.1002/hep.510300510

Schor S, Karten I: Statistical evaluation of medical journal manuscripts. JAMA 1966, 195: 1123–1128. 10.1001/jama.1966.03100130097026

Gore SM, Jones IG, Rytter EC: Misuse of statistical methods: critical assessment of articles in BMJ from January to March 1976. BMJ 1977, 1: 85–87. 10.1136/bmj.1.6053.85

Hall JC, Hill D, Watts JM: Misuse of statistical methods in the Australasian surgical literature. Aust N Z J Surg 1982, 52: 541–543. 10.1111/j.1445-2197.1982.tb06050.x

Altman DG: Statistics in medical journals. Stat Med 1982, 1: 59–71. 10.1002/sim.4780010109

Pocock SJ, Hughes MD, Lee RJ: Statistical problems in the reporting of clinical trials: a survey of three medical journals. N Engl J Med 1987, 317: 426–432. 10.1056/NEJM198708133170706

Altman DG: The scandal of poor medical research. BMJ 1994, 308: 283–284. 10.1136/bmj.308.6924.283

Barry HC, Ebell MH, Shaughnessy AF, Slawson DC, Nietzke F: Family physicians' use of medical abstracts to guide decision making: style or substance? J Am Board Fam Pract 2001, 14: 437–442.

Moher D, Schulz KF, Altman DG: The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomized trials. Lancet 2001, 357: 1191–1194. 10.1016/S0140-6736(00)04337-3

Hopewell S, Eisinga A, Clarke M: Better reporting of randomized trials in biomedical journal and conference abstracts. J Inform Sci 2008, 34: 162–173. 10.1177/0165551507080415

Hopewell S, Clarke M, Moher D, Wager E, Middleton P, Altman DG, Schulz KF: CONSORT for reporting randomized controlled trials in journal and conference abstracts: explanation and elaboration. PLoS Med 2008, 5: e20. 10.1371/journal.pmed.0050020

Garg AX, Hackam D, Tonelli M: Systematic review and meta-analysis: when one study is just not enough. Clin J Am Soc Nephrol 2008, 3: 253–260. 10.2215/CJN.01430307

AMSTAR: Assessing Methodological Quality of Systematic Reviews. McMaster University, Hamilton, ON; 2011.

Huston P: Health services research. CMAJ 1996, 155: 1697–1702.

Stroup DF, Berlin JA, Morton SC, Olkin I, Williamson GD, Rennie D, Thacker SB: Meta-analysis of observational studies in epidemiology. JAMA 2000, 283: 2008–2012. 10.1001/jama.283.15.2008

Finding What Works in Health Care: Standards for Reporting of Systematic Reviews. 2011.

Moher D, Liberati A, Tetzlaff J, Altman DG: Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med 2009, 6: e1000097. 10.1371/journal.pmed.1000097

Beller EM, Glasziou PP, Hopewell S, Altman DG: Reporting of effect direction and size in abstracts of systematic reviews. JAMA 2011, 306: 1981–1982. 10.1001/jama.2011.1620

Beller EM, Glasziou PP, Altman DG, Hopewell S, Bastian H, Chalmers I, Gøtzsche PC, Lasserson T, Tovey D: PRISMA for Abstracts: reporting systematic reviews in journal and conference abstracts. PLoS Med 2013, 10: e1001419. 10.1371/journal.pmed.1001419

Atkins D, Best D, Briss PA, Eccles M, Falck-Ytter Y, Flottorp S, Guyatt GH, Harbour RT, Haugh MC, Henry D, Hill S, Jaeschke R, Leng G, Liberati A, Magrini N, Mason J, Middleton P, Mrukowicz J, O'Connell D, Oxman AD, Phillips B, Schünemann HJ, Edejer T, Varonen H, Vist GE, Williams JW Jr, Zaza S: Grading quality of evidence and strength of recommendations. BMJ 2004, 328: 1490. 10.1136/bmj.328.7454.1490

Schunemann HJ, Hill SR, Kakad M, Vist GE, Bellamy R, Stockman L, Wisløff TF, Del Mar C, Hayden F, Uyeki TM, Farrar J, Yazdanpanah Y, Zucker H, Beigel J, Chotpitayasunondh T, Hien TT, Ozbay B, Sugaya N, Oxman AD: Transparent development of the WHO rapid advice guidelines. PLoS Med 2007, 4: e119. 10.1371/journal.pmed.0040119

Brozek JL, Akl EA, Alonso-Coello P, Lang D, Jaeschke R, Williams JW, Phillips B, Lelgemann M, Lethaby A, Bousquet J, Guyatt GH, Schünemann HJ: Grading quality of evidence and strength of recommendations in clinical practice guidelines. Part 1 of 3. An overview of the GRADE approach and grading quality of evidence about interventions. Allergy 2009, 64: 669–677. 10.1111/j.1398-9995.2009.01973.x


Additional information

Competing interests

The authors declare that they have no competing interests.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.

The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

To view a copy of this licence, visit https://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kiriakou, J., Pandis, N., Madianos, P. et al. Developing evidence-based dentistry skills: how to interpret randomized clinical trials and systematic reviews. Prog Orthod. 15, 58 (2014). https://doi.org/10.1186/s40510-014-0058-5


DOI: https://doi.org/10.1186/s40510-014-0058-5