Abstract

In this work, we study the problem of identifying an unknown source term in a fractional diffusion equation with the Atangana–Baleanu fractional derivative. In general, the problem is severely ill-posed in the sense of Hadamard. We apply the generalized Tikhonov method to regularize the unstable solution of the problem. On the theoretical side, we establish error estimates between the regularized and exact solutions under a priori parameter choice rules. We present a numerical example to illustrate the theoretical result; this example shows that the proposed regularization method converges.


1 Introduction

In the last decade, fractional derivatives have been used in many physical problems, see [1–9]. A newly defined type of fractional derivative without a singular kernel is the one introduced by Atangana and Baleanu, whose definition uses the Mittag-Leffler function as a nonlocal kernel. For some real-world problems, this fractional derivative is better suited for modeling than classical derivatives. It has several interesting properties that are useful for modeling in many branches of science, with applications to real-world problems, see [10, 11]. For instance, Atangana and Baleanu studied some useful properties of the new derivative and applied it to a fractional heat transfer model, see [12]; Algahtani applied it to the model of groundwater within an unconfined aquifer, see [13]; and Alkahtani used the Atangana–Baleanu derivative to study Chua's circuit model, see [14]. Although there are many results on ordinary differential equations with this ABC fractional derivative, results on partial differential equations with this derivative remain limited. In particular, results on determining an unknown source function are scarce. Therefore, we focus on the fractional diffusion equation with the Atangana–Baleanu fractional derivative and determine an unknown source term as follows:

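Here, the Atangana–Baleanu fractional derivative \({}^{\mathrm{ABC}}_{0} D_{t}^{\gamma }u(x,t)\) is defined by

$$\begin{aligned} {}^{\mathrm{ABC}}_{0} D_{t}^{\gamma }u(x,t) = \frac{L(\gamma )}{1-\gamma } \int _{0}^{t} \frac{\partial u}{\partial s}(x,s) E_{\gamma ,1} \biggl( -\frac{\gamma }{1-\gamma } (t-s)^{\gamma } \biggr) \,ds, \end{aligned}$$
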
where the normalization function \(L(\gamma )\) satisfies \(L(0) = L(1) = 1\); here we take \(L(\gamma ) = 1 - \gamma + \frac{\gamma }{\varGamma (\gamma )}\) (see Definition 2.1 in [15]), and \(E_{\gamma ,1}\) is the Mittag-Leffler function introduced in Sect. 2. Our inverse source problem consists in finding \(f(x)\) from the given data Φ and the measured final data \(u(x,T)= g(x) \), \(g \in L^{2}(\varOmega )\).
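As a small numerical illustration of the definition, the sketch below approximates \({}^{\mathrm{ABC}}_{0} D_{t}^{\gamma }u\) for the test function \(u(t) = t\) by the trapezoidal rule and compares it with the closed form \(\frac{L(\gamma )}{1-\gamma } t E_{\gamma ,2} ( -\frac{\gamma }{1-\gamma } t^{\gamma } )\), obtained by integrating the Mittag-Leffler series term by term. It assumes the standard Atangana–Baleanu–Caputo form with kernel \(E_{\gamma ,1}(-\frac{\gamma }{1-\gamma }(t-s)^{\gamma })\) [12] and the normalization \(L(\gamma )\) above; the function names are ours, and the plain power series used for the Mittag-Leffler function is only adequate for moderate arguments.

```python
import math


def normalization(gamma):
    """Normalization function L(gamma) = 1 - gamma + gamma / Gamma(gamma)."""
    return 1.0 - gamma + gamma / math.gamma(gamma)


def mittag_leffler(z, alpha, beta, kmax=200):
    """Power series of E_{alpha,beta}(z); only reliable for moderate |z|."""
    if z == 0.0:
        return 1.0 / math.gamma(beta)
    log_abs = math.log(abs(z))
    sign = -1.0 if z < 0 else 1.0
    return sum(sign ** k * math.exp(k * log_abs - math.lgamma(alpha * k + beta))
               for k in range(kmax))


def abc_derivative(du, t, gamma, n=2000):
    """Trapezoidal approximation of the ABC derivative at time t, given du = u'."""
    mu = gamma / (1.0 - gamma)
    h = t / n
    vals = []
    for i in range(n + 1):
        lag = max(t - i * h, 0.0)  # t - s, clipped so rounding never makes it negative
        vals.append(du(i * h) * mittag_leffler(-mu * lag ** gamma, gamma, 1.0))
    integral = h * (sum(vals) - 0.5 * (vals[0] + vals[-1]))
    return normalization(gamma) / (1.0 - gamma) * integral
```

For example, `abc_derivative(lambda s: 1.0, 1.0, 0.75)` should agree with `normalization(0.75) / 0.25 * mittag_leffler(-3.0, 0.75, 2.0)` to several digits; the trapezoidal error is limited by the \((t-s)^{\gamma }\) behavior of the kernel near \(s = t\).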

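In practice, the exact data \((\varPhi ,g)\) are known only through observation data \((\varPhi _{\epsilon }, g_{\epsilon })\) with noise level \(\epsilon >0\):

$$\begin{aligned} \Vert \varPhi _{\epsilon } - \varPhi \Vert _{L^{\infty }(0,T)} \le \epsilon , \qquad \Vert g_{\epsilon } - g \Vert _{L^{2}(\varOmega )} \le \epsilon , \end{aligned}$$(1.3)
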
where \(\Vert \varXi \Vert _{L^{\infty }(0,T)}= {\sup }_{0\le t \le T} \vert \varXi (t) \vert \) for any \(\varXi \in L^{\infty }(0,T)\).

In the sense of Hadamard, the inverse source problem (1.1) with observation data satisfying (1.3) is ill-posed in general, i.e., its solution does not depend continuously on the input data \((\varPhi ,g)\). Consequently, even a small noise level ϵ may cause a large error in the sought solution f, which makes numerical computation troublesome. Therefore, a regularization method is required.

The goal of this paper is to determine the source function f from the observation \(g_{\epsilon }\) of \(g(x)\) at the final time \(t=T\), with noise level ϵ. To the best of the authors' knowledge, there are no results on the inverse source problem (1.1) for the Atangana–Baleanu fractional derivative. Motivated by the ideas mentioned above, we apply the generalized Tikhonov method with variable coefficients in a general bounded domain to solve this fractional inverse source problem. We estimate the convergence rate under an a priori bound assumption on the exact solution and an a priori parameter choice rule. Inverse source problems have attracted many authors; their physical background can be found in [16], and related work includes Wei et al. [17–19] and Kirane et al. [20, 21]. In [22], Uçar et al. studied the mathematical analysis and a numerical scheme for a smoking model with the Atangana–Baleanu fractional derivative. They analyzed the dynamics of the smoking model with the ABC fractional derivative and established the existence and uniqueness of its solutions by fixed point theory. Their numerical results are illustrated by graphics that include the variation of the fractional order.

The paper is organized into six sections. Section 1 introduces the problem. In Sect. 2, some preliminary results are presented. In Sect. 3, we show the ill-posedness of the fractional inverse source problem (1.1) and a conditional stability result. In Sect. 4, we propose a generalized Tikhonov regularization method and derive convergence estimates under an a priori assumption. In Sect. 5, we consider a numerical example to verify the proposed regularization method. Finally, Sect. 6 gives some concluding comments.

2 Preliminary results

Definition 2.1

(Hilbert scale space, see [23])

Consider the spectral problem

which admits the eigenvalues

with corresponding eigenfunctions \(\mathrm{e}_{k} \in H_{0}^{1}(\varOmega )\). The Hilbert scale space \(\mathbb{H}^{m+1} \) (\(m >0\)) is defined by

with the norm

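Let X be a Hilbert space. We denote by \(C ( [0,T ];X )\) and \(L^{p} (0,T;X )\) the Banach spaces of continuous, respectively measurable, functions \(f:[0,T]\to X\) such that

$$\begin{aligned} \Vert f \Vert _{C([0,T];X)} = \sup_{0 \le t \le T} \Vert f(t) \Vert _{X} < \infty \end{aligned}$$

and

$$\begin{aligned} \Vert f \Vert _{L^{p}(0,T;X)} = \biggl( \int _{0}^{T} \Vert f(t) \Vert _{X}^{p} \,dt \biggr)^{1/p} < \infty , \quad 1 \le p < \infty . \end{aligned}$$
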
Lemma 2.1

([24])

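The Mittag-Leffler function is defined by

$$\begin{aligned} E_{\alpha ,\beta }(z) = \sum_{k=0}^{\infty } \frac{z^{k}}{\varGamma (\alpha k + \beta )}, \quad z \in \mathbb{C}, \end{aligned}$$

where \(\alpha > 0\) and \(\beta \in \mathbb{R}\) are arbitrary constants.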
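The series can be summed directly when |z| is moderate. The following Python sketch (function name ours, restricted to real z and β > 0 for simplicity) evaluates \(E_{\alpha ,\beta }(z)\) term by term through the log-gamma function and checks it against the classical special cases \(E_{1,1}(z) = e^{z}\), \(E_{1,2}(z) = (e^{z}-1)/z\), and \(E_{2,1}(-z^{2}) = \cos z\). For large negative arguments, the cancellation in the alternating series becomes severe, and dedicated routines such as [28] are preferable.

```python
import math


def mittag_leffler(z, alpha, beta, kmax=500, tol=1e-15):
    """E_{alpha,beta}(z) = sum_k z^k / Gamma(alpha k + beta) for real z, alpha > 0, beta > 0.

    Terms are formed as exp(k log|z| - log Gamma(alpha k + beta)) to avoid overflow
    in Gamma; the direct sum is only trustworthy for moderate |z|."""
    if alpha <= 0.0 or beta <= 0.0:
        raise ValueError("this sketch assumes alpha > 0 and beta > 0")
    if z == 0.0:
        return 1.0 / math.gamma(beta)
    log_abs = math.log(abs(z))
    total, term = 0.0, 0.0
    for k in range(kmax):
        term = math.exp(k * log_abs - math.lgamma(alpha * k + beta))
        total += term if (z > 0.0 or k % 2 == 0) else -term
    # The last term must be negligible, otherwise the truncated sum is meaningless.
    if term > tol * max(1.0, abs(total)):
        raise ArithmeticError("series not converged; |z| is too large for this sketch")
    return total
```

For example, `mittag_leffler(-4.0, 2.0, 1.0)` reproduces `math.cos(2.0)`.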

Lemma 2.2

([25])

For \(\beta > 0\) and \(\alpha \in \mathbb {R} \), we obtain

Lemma 2.3

([25])

Let \(\xi > 0\). Then we obtain

Lemma 2.4

([25])

For \(0 < \gamma < 1 \) and \(\zeta > 0\), we obtain \(0 < E_{\gamma ,\gamma }(-\zeta ) < \frac{1}{\varGamma (\gamma )}\). Moreover, \(E_{\gamma ,\gamma }(-\zeta )\) is monotonically decreasing in \(\zeta >0\).
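Lemma 2.4 is easy to probe numerically. The sketch below uses a plain series evaluation of \(E_{\gamma ,\gamma }\) (adequate only for moderate ζ) and checks both the bounds and the monotone decrease on the grid \(\zeta = 0.1, 0.2, \ldots , 3\) for the three orders used in Sect. 5. A finite check of this kind illustrates the lemma; it does not prove it.

```python
import math


def mittag_leffler(z, alpha, beta, kmax=200):
    """Power series of E_{alpha,beta}(z); adequate for the moderate |z| used here."""
    if z == 0.0:
        return 1.0 / math.gamma(beta)
    log_abs = math.log(abs(z))
    sign = -1.0 if z < 0 else 1.0
    return sum(sign ** k * math.exp(k * log_abs - math.lgamma(alpha * k + beta))
               for k in range(kmax))


def check_lemma_2_4(gamma, zetas):
    """Return (bounded, decreasing) for E_{gamma,gamma}(-zeta) on a sorted grid of zetas."""
    values = [mittag_leffler(-zeta, gamma, gamma) for zeta in zetas]
    upper = 1.0 / math.gamma(gamma)
    bounded = all(0.0 < v < upper for v in values)
    decreasing = all(a > b for a, b in zip(values, values[1:]))
    return bounded, decreasing


zetas = [0.1 * i for i in range(1, 31)]  # zeta in (0, 3]
for gamma in (0.75, 0.85, 0.95):
    print(gamma, check_lemma_2_4(gamma, zetas))
```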

Lemma 2.5

([24])

Let \(0 < \gamma _{0} < \gamma _{1} < 1\). Then there exist positive constants \(A_{1}\), \(A_{2}\), \(A_{3}\) depending only on \(\gamma _{0}\), \(\gamma _{1}\) such that, for all \(\gamma \in [\gamma _{0}, \gamma _{1}] \) and

Lemma 2.6

([25])

For any \(\lambda _{k}\) satisfying \(\lambda _{k} \geq \lambda _{1} > 0\), there exists a positive constant \(A_{4}\) depending on γ, T, \(\lambda _{1}\) such that

Lemma 2.7

For \(\gamma \in (0,1)\) and \(\lambda _{k} \ge \lambda _{1}\), \(\forall k > 1\), one obtains

Lemma 2.8

For any \(\lambda _{1} \le \lambda _{k}\), \(\forall k \in {\mathbb{N}}\), and \(\gamma \in (0,1)\), we denote

Using Lemma 2.7, we obtain

Proof

Since \(E_{\gamma , \gamma }(-y) \geq 0\) for \(0 < \gamma < 1\) and \(y \geq 0\), we obtain

and

□

Lemma 2.9

Assume that there exist positive constants \(\vert \varPhi _{0} \vert \) and \(\Vert \varPhi \Vert _{L^{\infty }(0,T)}\) such that \(\vert \varPhi _{0} \vert \le \vert \varPhi (t) \vert \le \Vert \varPhi \Vert _{L^{\infty }(0,T)}\) for all \(t \in [0,T]\). Choosing \(\varepsilon \in (0, \frac{ \vert \varPhi _{0} \vert }{4} )\), we obtain

Proof

We notice that

From (2.13), we obtain

Similarly, we get

Denoting \(\mathcal{S} ( \vert \varPhi _{0} \vert , \Vert \varPhi \Vert _{L^{\infty }(0,T)} ) = \Vert \varPhi \Vert _{L^{\infty }(0,T)} + \frac{ \vert \varPhi _{0} \vert }{4}\) and combining (2.14) and (2.15), we conclude that (2.12) holds. □
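For the reader's convenience, here is one way to write out the two triangle-inequality bounds that the proof combines, assuming the noise condition \(\Vert \varPhi _{\epsilon } - \varPhi \Vert _{L^{\infty }(0,T)} \le \varepsilon \); this is our reconstruction, and the exact form of (2.12) may differ:

$$\begin{aligned} \vert \varPhi _{\epsilon }(t) \vert &\ge \vert \varPhi (t) \vert - \vert \varPhi _{\epsilon }(t) - \varPhi (t) \vert \ge \vert \varPhi _{0} \vert - \varepsilon > \frac{3 \vert \varPhi _{0} \vert }{4}, \\ \vert \varPhi _{\epsilon }(t) \vert &\le \vert \varPhi (t) \vert + \vert \varPhi _{\epsilon }(t) - \varPhi (t) \vert \le \Vert \varPhi \Vert _{L^{\infty }(0,T)} + \varepsilon < \Vert \varPhi \Vert _{L^{\infty }(0,T)} + \frac{ \vert \varPhi _{0} \vert }{4}, \end{aligned}$$

for all \(t \in [0,T]\), where both strict inequalities use \(\varepsilon < \frac{ \vert \varPhi _{0} \vert }{4}\). The right-hand side of the second line is exactly \(\mathcal{S} ( \vert \varPhi _{0} \vert , \Vert \varPhi \Vert _{L^{\infty }(0,T)} )\).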

3 Regularization and error estimate for unknown source (1.1)

Assume that problem (1.1) has a solution u of the form \(u(x,t) = \sum_{k=1}^{\infty } u_{k}(t) \mathrm{e}_{k}(x)\) with \(u_{k}(t) = \langle u(x,t), \mathrm{e}_{k}(x) \rangle \). Then \(u_{k}\) satisfies the following fractional integro-differential equation involving the Atangana–Baleanu fractional derivative:

together with the initial condition \(u_{k}(0) = \langle u_{0}(x), \mathrm{e}_{k}(x)\rangle \). The solution of this initial value problem is as follows (see [26]):

From (3.2) we obtain

From (3.3), applying \(u(x,0) = 0\) and letting \(t=T\), we have

Next, substituting \(u(x,T) = g(x)\) and using \(\varPhi (T)=0\), we get

A simple transformation gives

From (3.6), we can see that

Next, recalling \(A_{k}(\gamma )\) and \(H_{\gamma }(\lambda _{k},s)\) from Lemma 2.8, we obtain the source function f as follows:

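To make the mode-by-mode relation behind (3.7)–(3.8) concrete, the sketch below computes the factor \(\sigma _{k} = A_{k}(\gamma ) \int _{0}^{T} H_{\gamma }(\lambda _{k},s) \varPhi (s) \,ds\), for which \(\langle g, \mathrm{e}_{k}\rangle = \sigma _{k} \langle f, \mathrm{e}_{k}\rangle \), and inverts it for exact data. Since the explicit expressions of Lemma 2.8 are not shown here, it assumes the forms that follow from the solution formula of [26] with \(u(x,0)=0\) and \(\varPhi (T)=0\), namely \(A_{k}(\gamma ) = \frac{\gamma L(\gamma )}{(L(\gamma ) + \lambda _{k}(1-\gamma ))^{2}}\) and \(H_{\gamma }(\lambda _{k},s) = (T-s)^{\gamma -1} E_{\gamma ,\gamma } (-\omega _{k}(T-s)^{\gamma } )\) with \(\omega _{k} = \frac{\gamma \lambda _{k}}{L(\gamma ) + \lambda _{k}(1-\gamma )}\). The weak singularity at \(s = T\) is removed by the substitution \(v = (T-s)^{\gamma }\). The data \(\varPhi (s) = 1-s\) and \(T = 1\) are hypothetical; for them the integral equals \(E_{\gamma ,\gamma +1}(-\omega _{k}) - E_{\gamma ,\gamma +2}(-\omega _{k})\), which serves as a check.

```python
import math


def mittag_leffler(z, alpha, beta, kmax=200):
    """Power series of E_{alpha,beta}(z); adequate for moderate |z|."""
    if z == 0.0:
        return 1.0 / math.gamma(beta)
    log_abs = math.log(abs(z))
    sign = -1.0 if z < 0 else 1.0
    return sum(sign ** k * math.exp(k * log_abs - math.lgamma(alpha * k + beta))
               for k in range(kmax))


def mode_constants(lam, gamma):
    """Assumed A_k(gamma) and omega_k for eigenvalue lam, with L(gamma) = 1 - gamma + gamma/Gamma(gamma)."""
    L = 1.0 - gamma + gamma / math.gamma(gamma)
    denom = L + lam * (1.0 - gamma)
    return gamma * L / denom ** 2, gamma * lam / denom


def forward_factor(lam, gamma, phi, T=1.0, n=1000):
    """sigma_k = A_k * int_0^T (T-s)^(gamma-1) E_{gamma,gamma}(-omega_k (T-s)^gamma) phi(s) ds.

    With v = (T - s)^gamma the integral becomes
    (1/gamma) int_0^{T^gamma} E_{gamma,gamma}(-omega_k v) phi(T - v^(1/gamma)) dv,
    evaluated here by composite Simpson's rule (n must be even)."""
    a_k, omega = mode_constants(lam, gamma)
    upper = T ** gamma
    h = upper / n
    total = 0.0
    for i in range(n + 1):
        v = min(i * h, upper)
        weight = 1.0 if i in (0, n) else (4.0 if i % 2 else 2.0)
        total += weight * mittag_leffler(-omega * v, gamma, gamma) * phi(T - v ** (1.0 / gamma))
    return a_k * total * h / (3.0 * gamma)


def source_coefficient(g_k, lam, gamma, phi, T=1.0):
    """Invert g_k = sigma_k f_k for exact data (no regularization)."""
    return g_k / forward_factor(lam, gamma, phi, T)
```

Dividing by \(\sigma _{k}\) is harmless for a single low mode but is exactly what fails for large \(\lambda _{k}\), since \(\sigma _{k}\) tends to zero.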
3.1 The ill-posedness of the inverse source problem

Theorem 3.1

The unknown source problem (1.1) is not well-posed.

Proof

First of all, we define a linear operator as follows:

in which

Since \(k(x,\omega ) = k(\omega ,x)\), the operator \(\mathcal{P}\) is self-adjoint. Next, we prove its compactness. To this end, we define the finite-rank operator \(\mathcal{P}_{N}\) as follows:

Then, from (3.9) and (3.11), we obtain

This implies that

Hence \(\Vert \mathcal{P}_{N} - \mathcal{P} \Vert _{L^{2}(\varOmega )} \to 0\) in the sense of the operator norm in \(L(L^{2}(\varOmega );L^{2}(\varOmega ))\) as \(N \to \infty \). Since \(\mathcal{P}\) is the limit in operator norm of the finite-rank operators \(\mathcal{P}_{N}\), it is compact.
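If, as (3.9)–(3.14) indicate, \(\mathcal{P}\) acts diagonally in the eigenbasis with singular values \(\sigma _{k}\), then \(\Vert \mathcal{P} - \mathcal{P}_{N} \Vert = \sup_{k>N} \sigma _{k}\), so compactness amounts to \(\sigma _{k} \to 0\). The sketch below computes \(\sigma _{k}\) for the hypothetical choices \(\lambda _{k} = k^{2}\), \(T = 1\), \(\varPhi (s) = 1-s\), taking \(A_{k}(\gamma ) = \gamma L(\gamma )/(L(\gamma ) + \lambda _{k}(1-\gamma ))^{2}\) and \(\omega _{k} = \gamma \lambda _{k}/(L(\gamma ) + \lambda _{k}(1-\gamma ))\) as they follow from the solution formula of [26] (an assumption, since the expressions of Lemma 2.8 are not shown). It also illustrates the decay rate: \(\lambda _{k}^{2}\sigma _{k}\) tends to a finite positive limit, i.e., \(\sigma _{k}\) decays like \(\lambda _{k}^{-2}\).

```python
import math


def mittag_leffler(z, alpha, beta, kmax=200):
    """Power series of E_{alpha,beta}(z); adequate for moderate |z|."""
    if z == 0.0:
        return 1.0 / math.gamma(beta)
    log_abs = math.log(abs(z))
    sign = -1.0 if z < 0 else 1.0
    return sum(sign ** k * math.exp(k * log_abs - math.lgamma(alpha * k + beta))
               for k in range(kmax))


def singular_value(lam, gamma):
    """Assumed sigma_k for eigenvalue lam, T = 1 and Phi(s) = 1 - s (closed-form integral)."""
    L = 1.0 - gamma + gamma / math.gamma(gamma)
    denom = L + lam * (1.0 - gamma)
    omega = gamma * lam / denom
    integral = mittag_leffler(-omega, gamma, gamma + 1.0) - mittag_leffler(-omega, gamma, gamma + 2.0)
    return gamma * L / denom ** 2 * integral


gamma = 0.75
sigmas = [singular_value(k * k, gamma) for k in range(1, 201)]  # lambda_k = k^2, k = 1..200

# Tail of the spectrum: ||P - P_N|| = sup_{k > N} sigma_k (within the computed range).
tail = {N: max(sigmas[N:]) for N in (10, 50, 100)}

# Limit of lambda_k^2 sigma_k as lambda_k -> infinity (omega_k -> gamma / (1 - gamma)).
L = 1.0 - gamma + gamma / math.gamma(gamma)
w = gamma / (1.0 - gamma)
limit = gamma * L / (1.0 - gamma) ** 2 * (
    mittag_leffler(-w, gamma, gamma + 1.0) - mittag_leffler(-w, gamma, gamma + 2.0))
print(tail, (200 ** 2) ** 2 * sigmas[-1], limit)
```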

Next, the singular values for the linear self-adjoint compact operator \(\mathcal{P}\) are

and the corresponding eigenvectors \({\mathrm{e}}_{k}\) form an orthonormal basis of \(L^{2}(\varOmega )\). From (3.9), following Kirsch [27], the above can be formulated as

We now give an example illustrating the ill-posedness of our problem. Choose the final data \(\overline{g}_{j}:= \lambda _{j}^{-1/2} \mathrm{e}_{j}\), and let the other final data be \(g=0\); by (3.7), the source term corresponding to g is \(f=0\). We obtain the following error in the \(L^{2}\) norm:

The source term corresponding to \(\overline{g}_{j}\) is

Using Lemma 2.8, we obtain

And the error estimation between f and \(\overline{f}_{j}\) is as follows:

Combining (3.16) and (3.19), we conclude that

Thus our problem is ill-posed in the Hadamard sense in the \(L^{2}(\varOmega )\)-norm. □
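The blow-up in this example can be seen in numbers. Under hypothetical choices (\(\lambda _{j} = j^{2}\), \(T = 1\), \(\varPhi (s) = 1-s\)) and the forms of \(A_{j}(\gamma )\) and \(\omega _{j}\) that follow from the solution formula of [26] (an assumption, not taken from Lemma 2.8), the sketch tabulates \(\Vert \overline{g}_{j} \Vert = \lambda _{j}^{-1/2}\) and \(\Vert \overline{f}_{j} \Vert = \lambda _{j}^{-1/2}/\sigma _{j}\): the data tend to zero while the corresponding sources grow without bound.

```python
import math


def mittag_leffler(z, alpha, beta, kmax=200):
    """Power series of E_{alpha,beta}(z); adequate for moderate |z|."""
    if z == 0.0:
        return 1.0 / math.gamma(beta)
    log_abs = math.log(abs(z))
    sign = -1.0 if z < 0 else 1.0
    return sum(sign ** k * math.exp(k * log_abs - math.lgamma(alpha * k + beta))
               for k in range(kmax))


def singular_value(lam, gamma):
    """Assumed sigma_j for eigenvalue lam, T = 1 and Phi(s) = 1 - s (closed-form integral)."""
    L = 1.0 - gamma + gamma / math.gamma(gamma)
    denom = L + lam * (1.0 - gamma)
    omega = gamma * lam / denom
    integral = mittag_leffler(-omega, gamma, gamma + 1.0) - mittag_leffler(-omega, gamma, gamma + 2.0)
    return gamma * L / denom ** 2 * integral


gamma = 0.75
rows = []
for j in (1, 2, 4, 8, 16, 32):
    lam = float(j * j)                            # hypothetical lambda_j = j^2
    g_norm = lam ** -0.5                          # ||g_j|| = lambda_j^{-1/2}, since ||e_j|| = 1
    f_norm = g_norm / singular_value(lam, gamma)  # ||f_j|| = ||g_j|| / sigma_j
    rows.append((j, g_norm, f_norm))
    print(f"j = {j:2d}   ||g_j|| = {g_norm:.3e}   ||f_j|| = {f_norm:.3e}")
```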

3.2 Conditional stability of source term f

We begin this section with a theorem establishing the conditional stability of the source term.

Theorem 3.2

Let E be a positive number such that

Then

where

Proof

Thanks to the Hölder inequality and (3.7), we get

Using Lemma 2.8 part (c), we can easily see that

and this inequality leads to

Combining (3.23) and (3.25), we get

□

4 A generalized Tikhonov method

Following the ideas mentioned above, we apply the generalized Tikhonov regularization method to problem (1.1), that is, we seek the function f that minimizes the functional

Let \(f^{\alpha (\epsilon )}\) be the solution of problem (4.1); then \(f^{\alpha (\epsilon )}\) satisfies

Since the operator \(\mathcal{P}\) is compact and self-adjoint (see [27]), we obtain

If the measured data \((\varPhi _{\epsilon }(t), g_{\epsilon }(x))\) of \((\varPhi (t), g(x))\) with a noise level of ϵ satisfies

then we define the regularized solution as follows:

and denote

Therefore, from (4.3), (4.5), and (4.6), one has

and

4.1 Convergence estimates of the generalized Tikhonov regularization method under a priori parameter choice rules

The main objective of this section is to prove an error estimate for \(\Vert f-f_{\epsilon }^{\alpha (\epsilon )} \Vert _{L^{2}(\varOmega )}\) and to derive the convergence rate under an a priori choice rule for the regularization parameter.
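Because the exact penalty in (4.1) is not reproduced here, the sketch below uses the classical Tikhonov functional \(\Vert \mathcal{P}f - g \Vert ^{2} + \alpha \Vert f \Vert ^{2}\) as a stand-in, the simplest member of the generalized family; in the eigenbasis its minimizer is \(f_{k} = \sigma _{k} g_{k}/(\sigma _{k}^{2} + \alpha )\). It also checks the noise-amplification bound \(\sigma /(\sigma ^{2} + \alpha ) \le 1/(2\sqrt{\alpha })\), which follows from \(\sigma ^{2} + \alpha \ge 2\sigma \sqrt{\alpha }\), i.e., the inequality \(a^{2}+b^{2} \ge 2ab\) used in Step 1 of Lemma 4.1. The singular values \(\sigma _{k} = k^{-4}\) are illustrative only.

```python
import math


def tikhonov_coefficients(sigmas, g_coeffs, alpha):
    """Minimizer of sum_k (sigma_k f_k - g_k)^2 + alpha f_k^2: f_k = sigma_k g_k / (sigma_k^2 + alpha)."""
    return [s * g / (s * s + alpha) for s, g in zip(sigmas, g_coeffs)]


def tikhonov_functional(f_coeffs, sigmas, g_coeffs, alpha):
    """Classical Tikhonov functional ||P f - g||^2 + alpha ||f||^2 in the eigenbasis."""
    return sum((s * f - g) ** 2 + alpha * f * f for s, f, g in zip(sigmas, f_coeffs, g_coeffs))


def max_noise_gain(sigmas, alpha):
    """Largest amplification sigma / (sigma^2 + alpha) of a data error in a single mode."""
    return max(s / (s * s + alpha) for s in sigmas)


def noise_gain_bound(alpha):
    """Bound 1 / (2 sqrt(alpha)), from sigma^2 + alpha >= 2 sigma sqrt(alpha)."""
    return 1.0 / (2.0 * math.sqrt(alpha))


# Illustrative data only: sigma_k = k^-4 mimics a lambda_k^-2 decay with lambda_k = k^2.
sigmas = [1.0 / k ** 4 for k in range(1, 201)]
f_true = [1.0 / k ** 3 for k in range(1, 201)]
g = [s * f for s, f in zip(sigmas, f_true)]
alpha = 1e-4
f_alpha = tikhonov_coefficients(sigmas, g, alpha)
print(max_noise_gain(sigmas, alpha), noise_gain_bound(alpha))
```

The bound shows why a data error of size ϵ produces an error of order \(\epsilon /\sqrt{\alpha }\) in the regularized solution, which is balanced against the regularization bias when α is chosen.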

Theorem 4.1

Let Φ, \(\varPhi _{\epsilon }\) satisfy Lemma 2.9, and assume the a priori bound (3.21). Then the following estimates hold:

(a) If \(0 < m < 3\) and \(\alpha (\epsilon ) = (\frac{\epsilon }{E} )^{\frac{4}{m+1}}\), then from (4.18) and (4.29) we obtain

$$\begin{aligned} \bigl\Vert f - f_{\epsilon }^{\alpha (\epsilon )} \bigr\Vert _{L^{2}(\varOmega )} \textit{ is of order } \epsilon ^{\frac{4}{m+5}}. \end{aligned}$$(4.9)

(b) If \(m \geq 3\) and \(\alpha (\epsilon ) = \frac{\epsilon }{E}\), then from (4.18) and (4.29) we obtain

$$\begin{aligned} \bigl\Vert f - f_{\epsilon }^{\alpha (\epsilon )} \bigr\Vert _{L^{2}(\varOmega )} \textit{ is of order } \epsilon ^{\frac{1}{2}}. \end{aligned}$$(4.10)
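The parameter choices and rates in Theorem 4.1 can be tabulated directly. The small sketch below (helper names ours) returns the exponent p in \(\alpha (\epsilon ) = (\epsilon /E)^{p}\) and the exponent r in the rate \(\epsilon ^{r}\), using exact rational arithmetic; it also makes visible that the two cases agree at \(m = 3\), where \(\frac{4}{m+1} = 1\) and \(\frac{4}{m+5} = \frac{1}{2}\).

```python
from fractions import Fraction


def a_priori_rule(m):
    """Exponents in Theorem 4.1: alpha = (eps/E)^p and an error of order eps^r."""
    m = Fraction(m)
    if m <= 0:
        raise ValueError("Theorem 4.1 assumes m > 0")
    if m < 3:
        return Fraction(4) / (m + 1), Fraction(4) / (m + 5)
    return Fraction(1), Fraction(1, 2)


def alpha_of(eps, E, m):
    """Regularization parameter alpha(eps) prescribed by the a priori rule."""
    p, _ = a_priori_rule(m)
    return (eps / E) ** float(p)


for m in (1, 2, 3, 5):
    p, r = a_priori_rule(m)
    print(f"m = {m}: alpha = (eps/E)^{p}, error of order eps^{r}")
```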

Proof

From (4.3), (4.5) and using the triangle inequality, we get

We prove this theorem through the following two lemmas.

Lemma 4.1

Assume that (4.4) holds. Then we have the following estimate:

Proof

From (4.11), we have

in which \(\mathcal{S}_{1}\), \(\mathcal{S}_{2}\), and \(\mathcal{S}_{3}\) are as follows:

Step 1: To estimate \(\Vert \mathcal{S}_{1} \Vert _{L^{2}(\varOmega )}\), we use the inequality \(a^{2}+b^{2} \ge 2ab\), \(\forall a,b \ge 0\), and obtain

Step 2: We estimate \(\Vert \mathcal{S}_{2} \Vert _{L^{2}(\varOmega )}\) as follows:

Step 3: Finally, \(\Vert \mathcal{S}_{3} \Vert _{L^{2}(\varOmega )}\) can be bounded by

Combining (4.15) to (4.17), we obtain

The proof is completed. □

Next, we estimate the second term of \(\mathcal{I}_{2}\) as follows.

Lemma 4.2

Let \(f \in \mathbb{H}^{m+1}(\varOmega )\). Subtracting (3.8) from (4.7), we obtain

in which \(Q_{\gamma } (\lambda _{1},T,E )\) is defined in (4.30).

Proof

By using Parseval’s equality, (3.7), and (4.3), we obtain

where

The function G can be bounded as follows:

Based on (4.22), we distinguish two cases.

Case 1: \(m \ge 3\). We have

Combining (4.20) and (4.23), we obtain

Case 2: \(0 < m < 3\). We write \(\mathbb{N} = \mathbb{V}_{1} \cup \mathbb{V}_{2}\) and choose any \(\ell \in (0,3 )\), where

In this case, we again distinguish two subcases:

(a) If \(k \in \mathbb{V}_{1}\), one has

$$\begin{aligned} \bigl\Vert f - f^{\alpha (\epsilon )} \bigr\Vert _{L^{2}(\varOmega )} & \le \frac{{[\alpha (\epsilon )]^{\frac{1}{2}-\ell }} ( \frac{L(\gamma )}{\lambda _{1}} + (1-\gamma ) )^{2}}{2\gamma L(\gamma ) \vert \varPhi _{0} \vert } \biggl( \frac{1-\gamma }{\gamma } \biggr)^{-1} \\ &\quad{} \times \biggl(1 - E_{\gamma ,1} \biggl( \frac{-\gamma \lambda _{1} T^{\gamma }}{L(\gamma ) + \lambda _{1} (1-\gamma )} \biggr) \biggr)^{-1} \Vert f \Vert _{\mathbb{H}^{m+1}(\varOmega )}. \end{aligned}$$(4.26)

(b) If \(k \in \mathbb{V}_{2}\), the inequality \(a+b \geq 2\sqrt{ab}\), \(\forall a,b > 0\), gives

$$\begin{aligned} \bigl\Vert f - f^{\alpha (\epsilon )} \bigr\Vert _{L^{2}(\varOmega )} &\le \frac{{[\alpha (\epsilon )]^{\frac{2\ell (m+1)}{3-m}}} ( \frac{L(\gamma )}{\lambda _{1}} + (1-\gamma ) )^{2}}{2\gamma L(\gamma ) \vert \varPhi _{0} \vert } \biggl( \frac{1-\gamma }{\gamma } \biggr)^{-1} \\ &\quad{} \times \biggl(1 - E_{\gamma ,1} \biggl( \frac{-\gamma \lambda _{1} T^{\gamma }}{L(\gamma ) + \lambda _{1} (1-\gamma )} \biggr) \biggr)^{-1} \Vert f \Vert _{\mathbb{H}^{m+1}(\varOmega )}. \end{aligned}$$(4.27)

Combining (4.25) to (4.30), we have thus proved

Choosing \(\ell =\frac{3-m}{2(m+5)}\), which balances the two exponents since \(\frac{1}{2}-\ell = \frac{2\ell (m+1)}{3-m} = \frac{m+1}{m+5}\), and using \(\Vert f \Vert _{\mathbb{H}^{m+1}(\varOmega )} \le E\), we obtain

where

□
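The choice of ℓ in the proof of Lemma 4.2 can be double-checked with exact rational arithmetic. The sketch below (helper names ours) confirms, for a few values of m in (0,3), that the powers of \(\alpha (\epsilon )\) in (4.26) and (4.27) coincide and equal \(\frac{m+1}{m+5}\), and that the a priori choice \(\alpha (\epsilon ) = (\epsilon /E)^{\frac{4}{m+1}}\) turns this into the rate \(\epsilon ^{\frac{4}{m+5}}\) of Theorem 4.1(a).

```python
from fractions import Fraction


def balanced_ell(m):
    """The choice ell = (3 - m) / (2 (m + 5)) from the proof of Lemma 4.2."""
    return (3 - m) / (2 * (m + 5))


def exponents(m):
    """Powers of alpha(eps) appearing in (4.26) and (4.27) for this ell."""
    ell = balanced_ell(m)
    return Fraction(1, 2) - ell, 2 * ell * (m + 1) / (3 - m)


for m in (Fraction(1, 2), Fraction(1), Fraction(2), Fraction(5, 2)):
    e1, e2 = exponents(m)
    # With alpha = (eps/E)^(4/(m+1)), the common exponent becomes a power of eps.
    rate = e1 * Fraction(4) / (m + 1)
    print(m, e1, e2, rate)
```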

Combining (4.18) and (4.29), we complete the proof:

(a) If \(0 < m < 3\) and \(\alpha (\epsilon ) = (\frac{\epsilon }{E} )^{\frac{4}{m+1}}\), then from (4.18) and (4.29) we get

$$\begin{aligned} \bigl\Vert f_{\epsilon }^{\alpha (\epsilon )} - f \bigr\Vert _{L^{2}(\varOmega )} \text{ is of order } \epsilon ^{\frac{4}{m+5}} . \end{aligned}$$(4.31)

(b) If \(m \geq 3\) and \(\alpha (\epsilon ) = \frac{\epsilon }{E}\), then from (4.18) and (4.29) we get

$$\begin{aligned}& \bigl\Vert f_{\epsilon }^{\alpha (\epsilon )} - f \bigr\Vert _{L^{2}(\varOmega )} \text{ is of order } \epsilon ^{\frac{1}{2}} . \end{aligned}$$(4.32)

□

5 Simulation example

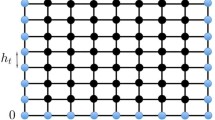

In this section, we present a numerical example to illustrate the theory. We choose \(T = 1\) and \(m=1\), and show the results for \(\gamma = 0.75\), \(\gamma =0.85\), and \(\gamma =0.95\). The computations use the MATLAB routine for the Mittag-Leffler function by Podlubny and Kacenak [28]; here, we compute the generalized Mittag-Leffler function with \(P = 10^{-10}\). We consider the following problem:

where \({}^{\mathrm{ABC}}_{0} D_{t}^{\gamma }u(x,t)\) is the Atangana–Baleanu fractional derivative.

Let \(\Delta u = \frac{\partial ^{2}}{\partial x^{2}} u \) on the domain \(\varOmega = (0,\pi )\) with the Dirichlet boundary condition such that \(u(0,t) = u(\pi ,t) = 0\), \(t \in (0, 1)\). Then we have the eigenvalues and corresponding eigenvectors: \(\lambda _{k} = k^{2}\), \(k = 1, 2,\ldots \) , and \(\mathrm{e}_{k}(x) = \sqrt{\frac{2}{\pi }}\sin (kx)\), respectively.
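A quick numerical sanity check of these eigenpairs: the sketch below verifies the orthonormality of \(\mathrm{e}_{k}\) on \((0,\pi )\) with the trapezoidal rule (exact here up to rounding, by the discrete orthogonality of the sine functions) and checks \(-\mathrm{e}_{k}'' = k^{2}\mathrm{e}_{k}\) with a central difference. Grid sizes and tolerances are illustrative choices.

```python
import math


def e(k, x):
    """Eigenfunction e_k(x) = sqrt(2/pi) sin(k x) of -d^2/dx^2 on (0, pi) with Dirichlet data."""
    return math.sqrt(2.0 / math.pi) * math.sin(k * x)


def inner(k, j, n=2000):
    """Trapezoidal approximation of <e_k, e_j> on (0, pi); the endpoint terms vanish."""
    h = math.pi / n
    return h * sum(e(k, i * h) * e(j, i * h) for i in range(1, n))


def eigen_residual(k, x, h=1e-4):
    """Central-difference residual of -e_k'' = k^2 e_k at an interior point x."""
    second = (e(k, x + h) - 2.0 * e(k, x) + e(k, x - h)) / h ** 2
    return abs(-second - k * k * e(k, x))


print([round(inner(k, k), 12) for k in range(1, 6)], eigen_residual(3, 1.0))
```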

In addition, problem (5.1) satisfies the following condition:

Then we have the following solution:

We have

From (5.3) and (5.4), after some simple transformations, we find

The numerical procedure consists of the following steps.

Step 1: On the domain \((x,t) \in (0,\pi ) \times (0,1)\), we discretize the time and space variables by the following finite difference grid:

Step 2: The data \((g,\varPhi )\) are perturbed to produce the observation data \((g_{\epsilon },\varPhi _{\epsilon })\) as follows:

Step 3: The relative error estimation is given by

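Steps 1–3 can be sketched as three small helpers. The noise model in `add_noise` is an assumption (a uniform perturbation of size at most ϵ at each grid node), since the exact formula of Step 2 is not reproduced here; note that such a perturbation has \(L^{2}(0,\pi )\) norm at most \(\epsilon \sqrt{\pi }\) rather than ϵ. The relative error is taken as the discrete \(\ell ^{2}\) ratio on the grid.

```python
import math
import random


def uniform_grid(a, b, n):
    """Step 1: n + 1 equally spaced nodes on [a, b]."""
    h = (b - a) / n
    return [a + i * h for i in range(n + 1)]


def add_noise(values, eps, rng):
    """Step 2 (assumed noise model): perturb each value by eps * (2U - 1), U ~ Uniform[0, 1)."""
    return [v + eps * (2.0 * rng.random() - 1.0) for v in values]


def relative_error(exact, approx):
    """Step 3: discrete relative l2 error ||exact - approx|| / ||exact||."""
    num = math.sqrt(sum((a - b) ** 2 for a, b in zip(exact, approx)))
    den = math.sqrt(sum(a * a for a in exact))
    return num / den


xs = uniform_grid(0.0, math.pi, 100)
rng = random.Random(2020)
g_exact = [math.sin(x) for x in xs]
g_noisy = add_noise(g_exact, 0.01, rng)
print(relative_error(g_exact, g_noisy))
```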
In addition, for the a priori parameter choice rule we choose the regularization parameter \(\alpha (\epsilon ) = \frac{\epsilon }{E}\), where the a priori bound E is computed as \(\Vert f \Vert _{\mathbb{H}^{2}(0,\pi )}\). We also use the following fact (see [29]):

From (5.8), substituting \(\alpha = \gamma \) and \(z = -\frac{\gamma k^{2}}{L(\gamma ) + k^{2}(1-\gamma )}\), we find

From (4.5), we have the following regularized solution with a truncation number N:

in which \(A_{k}(\gamma )\) and \(H_{\gamma }(k^{2},s)\) are defined in Lemma 2.8. From (5.9) and (5.10), we can calculate the integral \(\int _{0}^{1} H_{\gamma }(k^{2},s) \varPhi _{\epsilon }(s) \,ds \) as follows:

In these calculations, we choose \(N = 40\). Figures 1, 2, and 3 show the 2D graphs of the exact source function and its approximation under the a priori parameter choice rule, together with the error estimates for \(\epsilon = 0.1\), \(\epsilon = 0.01\), and \(\epsilon = 0.001\), for \(\gamma =0.75\), \(\gamma =0.85\), and \(\gamma =0.95\), respectively. Table 1 shows the errors between the exact source function and its approximation from the measured data under the a priori parameter choice rule for the three values of γ. These results indicate that the approximation is acceptable, which supports the effectiveness of the proposed method.
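The whole procedure can be imitated in a few lines in spectral coordinates. This is a hedged sketch, not the computation behind Figs. 1–3 or Table 1. It assumes the hypothetical source \(f(x) = x(\pi -x)\), whose sine coefficients are \(\sqrt{2/\pi } \cdot 4/k^{3}\) for odd k and 0 for even k; \(\varPhi (s) = 1-s\), kept noise-free; data noise of \(L^{2}\) norm exactly ϵ; the forms of \(A_{k}(\gamma )\) and \(H_{\gamma }\) that follow from the solution formula of [26]; the classical Tikhonov filter \(\sigma _{k}/(\sigma _{k}^{2}+\alpha )\) in place of the generalized one; and E computed as the spectral norm \((\sum_{k} \lambda _{k}^{2} f_{k}^{2})^{1/2}\). With \(N = 40\) and \(\alpha = \epsilon /E\), the relative error decreases as ϵ decreases.

```python
import math
import random


def mittag_leffler(z, alpha, beta, kmax=200):
    """Power series of E_{alpha,beta}(z); adequate for the moderate |z| arising here."""
    if z == 0.0:
        return 1.0 / math.gamma(beta)
    log_abs = math.log(abs(z))
    sign = -1.0 if z < 0 else 1.0
    return sum(sign ** k * math.exp(k * log_abs - math.lgamma(alpha * k + beta))
               for k in range(kmax))


def sigma(k, gamma):
    """Assumed forward factor for lambda_k = k^2, T = 1 and Phi(s) = 1 - s (closed form)."""
    L = 1.0 - gamma + gamma / math.gamma(gamma)
    denom = L + k * k * (1.0 - gamma)
    omega = gamma * k * k / denom
    integral = mittag_leffler(-omega, gamma, gamma + 1.0) - mittag_leffler(-omega, gamma, gamma + 2.0)
    return gamma * L / denom ** 2 * integral


def exact_coefficients(N):
    """Sine coefficients <f, e_k> of the hypothetical source f(x) = x (pi - x)."""
    c = math.sqrt(2.0 / math.pi)
    return [c * 4.0 / k ** 3 if k % 2 else 0.0 for k in range(1, N + 1)]


def relative_error_for(gamma, eps, N=40, seed=0):
    """Relative L^2 error of the spectral Tikhonov reconstruction with alpha = eps / E."""
    rng = random.Random(seed)
    f = exact_coefficients(N)
    s = [sigma(k, gamma) for k in range(1, N + 1)]
    g = [sk * fk for sk, fk in zip(s, f)]
    # Data noise with L^2 norm exactly eps (Parseval); Phi is left noise-free here.
    xi = [2.0 * rng.random() - 1.0 for _ in range(N)]
    scale = eps / math.sqrt(sum(x * x for x in xi))
    g_eps = [gk + scale * x for gk, x in zip(g, xi)]
    # A priori bound E as the spectral norm (sum lambda_k^2 f_k^2)^(1/2).
    E = math.sqrt(sum((k * k) ** 2 * fk ** 2 for k, fk in enumerate(f, start=1)))
    alpha = eps / E
    f_eps = [sk * gk / (sk ** 2 + alpha) for sk, gk in zip(s, g_eps)]
    err = math.sqrt(sum((a - b) ** 2 for a, b in zip(f, f_eps)))
    return err / math.sqrt(sum(a * a for a in f))


for gamma in (0.75, 0.85, 0.95):
    print(gamma, [round(relative_error_for(gamma, eps), 4) for eps in (0.1, 0.01, 0.001)])
```

Working with coefficients avoids the spatial grid entirely, and by Parseval's equality the coefficient error equals the \(L^{2}(0,\pi )\) error of the truncated reconstruction.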

6 Conclusion

We used the generalized Tikhonov method to regularize the inverse problem of identifying an unknown source term in fractional diffusion equations with the Atangana–Baleanu fractional derivative. By an example, we showed that this problem is ill-posed in the sense of Hadamard. In addition, we established convergence estimates between the sought solution and the regularized solution under an a priori parameter choice rule. Finally, we presented a numerical example to illustrate the proposed regularization. In future work, we will extend this research to other questions for this type of derivative, such as the regularity of solutions, continuity with respect to the fractional order, and comparisons between existing derivatives.

References

Tuan, N.H., Ngoc, T.B., Zhou, Y., O’Regan, D.: On existence and regularity of a terminal value problem for the time fractional diffusion equation. Inverse Probl. (2020)

Binh, T.T., Luc, N.H., O’Regan, D., Can, N.H.: On an initial inverse problem for a diffusion equation with a conformable derivative. Adv. Differ. Equ. 2019, 481 (2019)

Huynh, L.N., Zhou, Y., O’Regan, D., Tuan, N.H.: Fractional Landweber method for an initial inverse problem for time-fractional wave equations. Appl. Anal. 1–19

Ibrahim, R.W., Jafari, H., Jalab, H.A., et al.: Local fractional system for economic order quantity using entropy solution. Adv. Differ. Equ. 2019, 96 (2019)

Sanjay, B., Amit, M., Devendra, K., Singh, J.: A new analysis of fractional Drinfeld–Sokolov–Wilson model with exponential memory. Phys. A, Stat. Mech. Appl. 537(C) (2020)

Sanjay, B., Amit, M., Devendra, K., Sooppy, N.K., Singh, J.: Fractional modified Kawahara equation with Mittag-Leffler law. Chaos Solitons Fractals 131, 109508 (2020)

Singh, J., Kilicman, A., Kumar, D., Swroop, R.: Numerical study for fractional model of nonlinear predator–prey biological population dynamic system. Therm. Sci. 23(Suppl. 6), 2017–2025 (2019)

Dubey, V.P., Kumar, R., Kumar, D., et al.: An efficient computational scheme for nonlinear time fractional systems of partial differential equations arising in physical sciences. Adv. Differ. Equ. 2020, 46 (2020)

Goswami, A., Sushila, Singh, J., Kumar, D.: Numerical computation of fractional Kersten–Krasil’shchik coupled KdV-mKdV system occurring in multi-component plasmas. AIMS Math. 5, 2346–2368 (2020)

Jafari, H., Babaei, A., Banihashemi, S.: A novel approach for solving an inverse reaction–diffusion–convection problem. J. Optim. Theory Appl. 183, 688–704 (2019)

Ganji, R.M., Jafari, H., Baleanu, D.: A new approach for solving multi variable orders differential equations with Mittag-Leffler kernel. Chaos Solitons Fractals 130, 109405 (2020)

Atangana, A., Baleanu, D.: New fractional derivatives with nonlocal and non-singular kernel: theory and application to heat transfer model. Therm. Sci. 20, 763–769 (2016)

Algahtani, R.T.: Atangana–Baleanu derivative with fractional order applied to the model of groundwater within an unconfined aquifer. J. Nonlinear Sci. Appl. 9, 3647–3654 (2016)

Alkahtani, B.S.T.: Chua's circuit model with Atangana–Baleanu derivative with fractional order. Chaos Solitons Fractals 89, 547–551 (2016)

Bahaa, G.M., Hamiaz, A.: Optimality conditions for fractional differential inclusions with nonsingular Mittag-Leffler kernel. Adv. Differ. Equ. 2018, 257 (2018)

Tuan, N.H., Kirane, M., Long, L.D., Thinh, N.V.: Filter regularization for an inverse parabolic problem in several variables. Electron. J. Differ. Equ. 2016, 24 (2016)

Wang, J.G., Zhou, Y.B., Wei, T.: Two regularization methods to identify a space-dependent source for the time-fractional diffusion equation. Appl. Numer. Math. 68, 39–57 (2013)

Wei, T., Wang, J.: A modified quasi-boundary value method for an inverse source problem of the time-fractional diffusion equation. Appl. Numer. Math. 78, 95–111 (2014)

Zhang, Z.Q., Wei, T.: Identifying an unknown source in time-fractional diffusion equation by a truncation method. Appl. Math. Comput. 219(11), 5972–5983 (2013)

Kirane, M., Malik, A.S., Al-Gwaiz, M.A.: An inverse source problem for a two dimensional time fractional diffusion equation with nonlocal boundary conditions. Math. Methods Appl. Sci. 36(9), 1056–1069 (2013)

Kirane, M., Malik, A.S.: Determination of an unknown source term and the temperature distribution for the linear heat equation involving fractional derivative in time. Appl. Math. Comput. 218(1), 163–170 (2011)

Uçar, S., Uçar, E., Özdemir, N., Hammouch, Z.: Mathematical analysis and numerical simulation for a smoking model with Atangana–Baleanu derivative. Chaos Solitons Fractals 118, 300–306 (2019)

Nair, M.T., Pereverzev, S.V., Tautenhahn, U.: Regularization in Hilbert scales under general smoothing conditions. Inverse Probl. 21(6), 1851–1869 (2005)

Podlubny, I.: Fractional Differential Equations, Mathematics in Science and Engineering. Academic Press, New York (1999)

Ma, Y.-K., Prakash, P., Deiveegan, A.: Generalized Tikhonov methods for an inverse source problem of the time-fractional diffusion equation. Chaos Solitons Fractals 108, 39–48 (2018)

Musalhi, F.S.A., Nasser, S.A.S., Erkinjon, K.: Initial and boundary value problems for fractional differential equations involving Atangana–Baleanu derivative. SQU J. Sci. 23(2), 137–146 (2018)

Kirsch, A.: An Introduction to the Mathematical Theory of Inverse Problems, 2nd edn. Springer, New York (2011)

Podlubny, I., Kacenak, M.: Mittag-Leffler function. The MATLAB routine. http://www.mathworks.com/matlabcentral/fileexchange (2006)

Mathai, A.M.: Mittag-Leffler function and fractional calculus. India

Acknowledgements

The authors wish to express their sincere appreciation to the editor and the anonymous referees for their valuable comments and suggestions.

Availability of data and materials

Not applicable.

Funding

Not applicable.

Author information


Contributions

All authors contributed equally. All the authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no financial and non-financial competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Can, N.H., Luc, N.H., Baleanu, D. et al. Inverse source problem for time fractional diffusion equation with Mittag-Leffler kernel. Adv Differ Equ 2020, 210 (2020). https://doi.org/10.1186/s13662-020-02657-2
