Abstract

This paper investigates the design of disturbance attenuating controllers for memristive recurrent neural networks (MRNNs) with mixed time-varying delays. By combining differential inclusions, set-valued maps, and the Lyapunov–Razumikhin theorem, a feedback control law is obtained in the simple form of a linear matrix inequality (LMI) to ensure disturbance attenuation of memristor-based neural networks. Finally, a numerical example is given to show the effectiveness of the proposed criteria.


1 Introduction

It is well known that neural networks have been widely applied in various areas such as reconstruction of moving images, signal processing, pattern recognition, and optimization (see [1–48]). In recent years, increasing attention has been paid to state-dependent switching recurrent neural networks, whose connection weights vary with their states. Such switching neural networks are generally called memristive neural networks or memristor-based neural networks. We briefly recall the development of memristive neural networks. In 1971, Chua [10] first postulated that a fourth basic circuit element should exist. Distinct from the other three elements (the resistor, the inductor, and the capacitor), this fourth element was named the memristor, and according to Chua's theory it should possess a distinctive memory property. It was not until 2008 that researchers at HP Labs built a prototype of the memristor [11]. The memristor is a two-terminal element that shares many properties of resistors, including the same unit of measurement; its characteristic feature is a variable resistance, called memristance, which depends on how much electric charge has passed through the device in a particular direction. This dependence allows the memristor to memorize the quantity of charge that has passed through it. Since 2008, its potential applications, for example in next-generation computers and powerful brain-like neural computers, have attracted worldwide attention (see [11–30]). For neural networks, the first question is whether they are stable.
Therefore, many scholars have studied various stability properties of memristive neural networks, such as asymptotic stability, global stability, and exponential stability (see [12, 13, 15, 17–20]). Moreover, passivity theory plays an important role in the stability analysis of dynamical systems, in nonlinear control, and in other areas, and several researchers have investigated passivity or dissipativity criteria for MRNNs (see [14, 16, 23–25, 27–30]).

On the other hand, neural networks with time-varying delays are inevitably subject to persistent disturbances, and how to attenuate persistent disturbances in delayed neural networks is still an open problem. He et al. [31] studied the design of disturbance attenuating controllers for delayed cellular neural networks (DCNNs); by employing the Lyapunov–Razumikhin theorem, they designed a feedback control law that guarantees disturbance attenuation for DCNNs. However, first, [31] considered only delayed cellular neural networks, so the activation function was restricted to \(f(x(\cdot ))=0.5(|x(\cdot)+1|-|x(\cdot)-1|)\). Besides cellular neural networks, there are also Hopfield neural networks, and both belong to the class of recurrent neural networks. Thus, how to design disturbance attenuating controllers for general recurrent neural networks is our first motivation. Second, the results in [31] were derived only for systems with discrete delays. Another type of time delay is distributed delay; systems with distributed delays arise, for example, in the modeling of feeding systems and of combustion chambers in a liquid monopropellant rocket motor with pressure feeding. Hence, attenuating persistent disturbances in neural networks with both discrete and distributed time-varying delays still leaves room for further study.

Motivated by the above discussion, we extend the problem of disturbance attenuating controller design to memristor-based neural networks. To the best of our knowledge, no paper has yet discussed disturbance attenuating controller design for MRNNs, which motivates our study. Our objective is to give an effective feedback control law that ensures disturbance attenuation and to describe the bounded attractor set for MRNNs with mixed time-varying delays. The main contributions of this paper are as follows. First, this paper is the first to investigate disturbance attenuating controllers for MRNNs, which is expected to strengthen the systematic theory of MRNNs and to enrich their basis for applications. Second, compared with the existing paper [31] on disturbance attenuating controller design, the systems studied here not only admit more general activation functions but also include both discrete and distributed time-varying delays. Third, by combining differential inclusions, set-valued maps, and the Lyapunov–Razumikhin theorem, a feedback control law is designed in the simple form of a linear matrix inequality (LMI) to ensure disturbance attenuation of memristor-based neural networks.

2 Problem statement and preliminaries

Throughout this paper, solutions of all the systems considered are understood in Filippov's sense (see [1, 36]). \([\cdot ,\cdot]\) denotes an interval. The superscripts '−1' and 'T' stand for the inverse and transpose of a matrix, respectively. \(P>0\) (\(P\geqslant0\), \(P<0\), \(P\leqslant0\)) means that the matrix P is symmetric positive definite (positive semidefinite, negative definite, negative semidefinite). \(\Vert\cdot\Vert\) refers to the Euclidean vector norm. \(R^{n}\) denotes the n-dimensional Euclidean space. \(\mathcal{C}([-\rho,0],R^{n})\) denotes the Banach space of all continuous functions from \([-\rho,0]\) to \(R^{n}\). \(R^{{m}\times{n}}\) is the set of \(m\times n\) real matrices. ∗ denotes the symmetric block in a symmetric matrix. For matrices \(\mathcal{M}=(m_{ij})_{m\times{n}}\) and \(\mathcal{N}=(n_{ij})_{m\times{n}}\), \(\mathcal{M}\gg\mathcal{N}\) (\(\mathcal{M}\ll\mathcal{N}\)) means that \(m_{ij}\geq n_{ij}\) (\(m_{ij}\leq n_{ij}\)) for \(i=1,2,\ldots,m\), \(j=1,2,\ldots,n\). The interval matrix \([\mathcal{M},\mathcal{N}]\) presupposes \(\mathcal{M}\ll\mathcal{N}\), and \(\mathcal{L}=(l_{ij})_{m\times{n}}\in[\mathcal{M},\mathcal{N}]\) means \(\mathcal{M}\ll\mathcal{L}\ll\mathcal{N}\), i.e., \(m_{ij}\leq l_{ij}\leq n_{ij}\) for \(i=1,2,\ldots,m\), \(j=1,2,\ldots,n\). \(\operatorname{co}\{\Pi_{1},\Pi_{2}\}\) denotes the closure of the convex hull generated by the real numbers \(\Pi_{1}\) and \(\Pi_{2}\). Let \(\bar{a}_{i}=\max\{\hat{a}_{i},\check{a}_{i}\}\), \(\underline{a}_{i}=\min\{\hat{a}_{i},\check{a}_{i}\}\), \(\bar{b}_{ij}=\max\{\hat{b}_{ij},\check{b}_{ij}\}\), \(\underline{b}_{ij}=\min\{\hat{b}_{ij},\check{b}_{ij}\}\), \(\bar{c}_{ij}=\max\{\hat{c}_{ij},\check{c}_{ij}\}\), \(\underline{c}_{ij}=\min\{\hat{c}_{ij},\check{c}_{ij}\}\), \(\bar{d}_{ij}=\max\{\hat{d}_{ij},\check{d}_{ij}\}\), \(\underline{d}_{ij}=\min\{\hat{d}_{ij},\check{d}_{ij}\}\).
Matrix dimensions, if not explicitly stated, are assumed to be compatible with algebraic operations.

Using Kirchhoff's current law, we introduce the following general class of memristor-based recurrent neural networks with persistent disturbances and mixed time-varying delays:

where \(x_{i}(t)\) represents the voltage of the capacitor \(C_{i}\), \(f_{i}(x_{i}(t))\in R\) is the nonlinear activation function, \(u_{i}(t)\) is the control input, and \(h_{l}(t)\) (\(l=1,2,\ldots,m\)) is the bounded disturbance. \(\tau_{i}(t)\) is the discrete time-varying delay and \(\rho_{i}(t)\) is the distributed time-varying delay; they satisfy \(0\leq\tau_{i}(t)\leq\tau\) and \(0\leq\rho_{i}(t)\leq\rho\), where τ and ρ are constants. The initial condition \(\phi_{i}(t)\) is bounded and continuously differentiable on \([-\delta,0]\), where \(\delta=\max\{\tau,\rho\}\). \(g_{il}\) are the weighting coefficients of the disturbance. \(\breve{a}_{i}\) is the rate at which neuron i resets its potential to the resting state in isolation, when disconnected from the network and external inputs. \(\breve{b}_{ij}\), \(\breve{c}_{ij}\), and \(\breve{d}_{ij}\) are the elements of the connection weight matrix, the discretely delayed connection weight matrix, and the distributively delayed connection weight matrix, respectively. They satisfy the following conditions:

where the switching jumps \(T_{i}>0\) and \(\hat{a}_{i}\), \(\check{a}_{i}\), \(\hat{b}_{ij}\), \(\check{b}_{ij}\), \(\hat{c}_{ij}\), \(\check{c}_{ij}\), \(\hat{d}_{ij}\), \(\check{d}_{ij}\), \(i,j=1,2,\ldots,n\), are all constants.
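For orientation, a network with the ingredients listed above is commonly written in the following form, assuming the state-dependent switching convention used in the memristive literature (for example [12, 18]), in which each weight takes its "hat" value when \(|x_{i}(t)|\le T_{i}\) and its "check" value otherwise. This is an illustrative sketch consistent with the variable descriptions above, not a verbatim copy of system (1); in particular, the indexing of the delays and the switching convention are assumptions.

```latex
\dot{x}_{i}(t) = -\breve{a}_{i}(x_{i}(t))\,x_{i}(t)
  + \sum_{j=1}^{n}\breve{b}_{ij}(x_{i}(t))\,f_{j}(x_{j}(t))
  + \sum_{j=1}^{n}\breve{c}_{ij}(x_{i}(t))\,f_{j}\bigl(x_{j}(t-\tau_{j}(t))\bigr)
  + \sum_{j=1}^{n}\breve{d}_{ij}(x_{i}(t))\int_{t-\rho_{j}(t)}^{t}f_{j}(x_{j}(s))\,ds
  + u_{i}(t) + \sum_{l=1}^{m}g_{il}\,h_{l}(t),
\qquad
\breve{a}_{i}(x_{i}(t)) =
\begin{cases}
  \hat{a}_{i}, & |x_{i}(t)| \le T_{i},\\
  \check{a}_{i}, & |x_{i}(t)| > T_{i}.
\end{cases}
```

Under this convention, \(\breve{b}_{ij}\), \(\breve{c}_{ij}\), and \(\breve{d}_{ij}\) switch between their hat and check values in the same way.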

Remark 2.1

The relation between the memristances and the coefficients of switching system (1) is explained in detail in [12, 18], to which the reader is referred for more information.

From the above description, the studied networks are state-dependent switching recurrent neural networks whose connection weights vary according to their states. To convert these state-dependent networks into conventional systems with uncertain parameters, the following definitions are needed.

Definition 2.1

Let \(E\subseteq{R}^{n}\). The map \(x\mapsto{F}(x)\) is called a set-valued map from E to \(R^{n}\) if, to each point \(x\in E\), there corresponds a nonempty set \(F(x)\subseteq{R}^{n}\).

Definition 2.2

A set-valued map F with nonempty values is said to be upper semi-continuous at \(x_{0}\in{E}\subseteq{R}^{n}\) if, for any open set N containing \(F(x_{0})\), there exists a neighborhood M of \(x_{0}\) such that \(F(M)\subseteq{N}\). \(F(x)\) is said to have a closed (convex, compact) image if, for each \(x\in{E}\), \(F(x)\) is closed (convex, compact).

Definition 2.3

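For the differential system \(\frac{dx}{dt}=f(t,x)\), where \(f(t,x)\) is discontinuous in x, the set-valued map of \(f(t,x)\) is defined as \(F(t,x)=\bigcap_{\epsilon>0}\bigcap_{\mu(N)=0}\overline{\operatorname{co}}\,[f(t,B(x,\epsilon)\setminus N)]\),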

where \(B(x,\epsilon)=\{y: \Vert y-x \Vert\leq\epsilon\}\) is the ball with center x and radius ϵ, the intersection is taken over all sets N of measure zero and over all \(\epsilon>0\), and \(\mu(N)\) is the Lebesgue measure of the set N.

A Filippov solution of system (1) with initial condition \(x(0)=x_{0}\) is a function that is absolutely continuous on any subinterval \([t_{1},t_{2}]\) of \([0,T]\), satisfies \(x(0)=x_{0}\), and satisfies the differential inclusion \(\dot{x}(t)\in F(t,x(t))\) for almost all \(t\in[0,T]\).

Applying the theory of differential inclusions and set-valued maps to (1), it follows that

or equivalently, for \(i,j=1,2,\ldots,n\), there exist \(a_{i}\in\operatorname{co}\{\hat {a}_{i},\check{a}_{i}\}\), \(b_{ij}\in\operatorname{co}\{\hat{b}_{ij},\check{b}_{ij}\} \), \(c_{ij}\in\operatorname{co}\{\hat{c}_{ij},\check{c}_{ij}\}\), and \(d_{ij}\in\operatorname{co}\{ \hat{d}_{ij},\check{d}_{ij}\}\) such that

Clearly, \(\operatorname{co}\{\hat{a}_{i},\check{a}_{i}\}=[\underline{a}_{i},\bar{a}_{i}]\), \(\operatorname{co}\{\hat{b}_{ij},\check{b}_{ij}\}=[\underline{b}_{ij},\bar{b}_{ij}]\), \(\operatorname{co}\{\hat{c}_{ij},\check{c}_{ij}\}=[\underline{c}_{ij},\bar{c}_{ij}]\), and \(\operatorname{co}\{\hat{d}_{ij},\check{d}_{ij}\}=[\underline{d}_{ij},\bar{d}_{ij}]\) for \(i,j=1,2,\ldots,n\).
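To make the convexification concrete, the following Python sketch evaluates a state-dependent weight under an assumed switching convention (the "hat" value when \(|x_{i}|\le T_{i}\), the "check" value otherwise) and returns the Filippov interval \(\operatorname{co}\{\hat{a}_{i},\check{a}_{i}\}=[\underline{a}_{i},\bar{a}_{i}]\) that replaces it. The function names and numerical values are hypothetical and serve only as an illustration.

```python
def switched_weight(x_i: float, T_i: float, hat: float, check: float) -> float:
    """Memristive weight: 'hat' value if |x_i| <= T_i, 'check' value otherwise (assumed convention)."""
    return hat if abs(x_i) <= T_i else check


def filippov_interval(hat: float, check: float) -> tuple[float, float]:
    """Closed convex hull co{hat, check} of two reals, i.e. [min, max]."""
    return (min(hat, check), max(hat, check))


# Hypothetical parameters for one self-feedback coefficient a_i.
a_hat, a_check, T = 1.2, 0.8, 1.0
lower, upper = filippov_interval(a_hat, a_check)

for x in (0.5, 1.0, 1.5):
    a = switched_weight(x, T, a_hat, a_check)
    # Every value the switching rule can produce lies in the Filippov interval.
    assert lower <= a <= upper
    print(x, a)

print("co{a_hat, a_check} =", [lower, upper])
```

The discontinuous switching rule is what makes Filippov solutions necessary; once each weight is replaced by its interval, the analysis only needs the bounds \(\underline{a}_{i}\) and \(\bar{a}_{i}\).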

A solution \(x(t)=[x_{1}(t),x_{2}(t),\ldots,x_{n}(t)]^{T}\in{R}^{n}\) (in the sense of Filippov) of system (1) is absolutely continuous on any compact interval of \([0,+\infty)\) and satisfies, for \(i=1,2,\ldots,n\),

For convenience, transform (1) into the compact form as follows:

or equivalently, there exist \(A^{\ast}\in\operatorname{co}\{\hat{A},\check{A}\}\), \(B^{\ast}\in\operatorname{co}\{\hat{B},\check{B}\}\), \(C^{\ast}\in\operatorname{co}\{\hat{C},\check {C}\}\), and \(D^{\ast}\in\operatorname{co}\{\hat{D},\check{D}\}\) such that

where \(A^{\ast}=A(x)\), \(B^{\ast}=B(x)\), \(C^{\ast}=C(x)\), \(D^{\ast}=D(x)\), \(\hat{A}=\operatorname{diag}\{\hat{a}_{1},\ldots,\hat{a}_{n}\}\), \(\hat{B}=(\hat{b}_{ij})_{n\times{n}}\), \(\hat{C}=(\hat{c}_{ij})_{n\times{n}}\), \(\hat{D}=(\hat{d}_{ij})_{n\times{n}}\), \(\check{A}=\operatorname{diag}\{\check{a}_{1},\ldots,\check{a}_{n}\}\), \(\check{B}=(\check{b}_{ij})_{n\times{n}}\), \(\check{C}=(\check{c}_{ij})_{n\times{n}}\), \(\check{D}=(\check{d}_{ij})_{n\times{n}}\), \(G=(g_{il})_{n\times{m}}\), \(x(t)=[x_{1}(t),x_{2}(t),\ldots,x_{n}(t)]^{T}\in{R}^{n}\), \(f(x(t))=[f_{1}(x_{1}(t)),f_{2}(x_{2}(t)),\ldots,f_{n}(x_{n}(t))]^{T}\in{R}^{n}\), \(f(x(t-\tau(t)))=[f_{1}(x_{1}(t-\tau_{1}(t))),f_{2}(x_{2}(t-\tau_{2}(t))),\ldots,f_{n}(x_{n}(t-\tau_{n}(t)))]^{T}\in{R}^{n}\), \(y(t)=[y_{1}(t),y_{2}(t),\ldots,y_{n}(t)]^{T}\in{R}^{n}\), and \(u(t)=[u_{1}(t),u_{2}(t),\ldots,u_{n}(t)]^{T}\in{R}^{n}\). The bounded disturbance \(h(t)=[h_{1}(t),h_{2}(t),\ldots,h_{m}(t)]^{T}\in{R}^{m}\) is assumed to belong to the set \(\mathcal{H}=\{h|h^{T}h\leq1\}\).

Clearly, \(\operatorname{co}\{\hat{A},\check{A}\}=[\underline{A},\bar{A}]\), \(\operatorname{co}\{\hat{B},\check{B}\}=[\underline{B},\bar{B}]\), \(\operatorname{co}\{\hat{C},\check{C}\}=[\underline{C},\bar{C}]\), and \(\operatorname{co}\{\hat{D},\check{D}\}=[\underline{D},\bar{D}]\), where \(\bar{A}=\operatorname{diag}\{\bar{a}_{1},\ldots,\bar{a}_{n}\}\), \(\underline{A}=\operatorname{diag}\{\underline{a}_{1},\ldots,\underline{a}_{n}\}\), \(\bar{B}=(\bar{b}_{ij})_{n\times{n}}\), \(\underline{B}=(\underline{b}_{ij})_{n\times{n}}\), \(\bar{C}=(\bar{c}_{ij})_{n\times{n}}\), \(\underline{C}=(\underline{c}_{ij})_{n\times{n}}\), \(\bar{D}=(\bar{d}_{ij})_{n\times{n}}\), \(\underline{D}=(\underline{d}_{ij})_{n\times{n}}\).
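Splitting any matrix \(B^{\ast}\) in the interval \([\underline{B},\bar{B}]\) into the midpoint \(B=(\underline{B}+\bar{B})/2\) plus a perturbation \(\Delta B(t)\) gives an entrywise bound \(|\Delta B(t)|\leq(\bar{B}-\underline{B})/2\). The sketch below checks this numerically on hypothetical 2×2 data with NumPy; the particular factorization of \(\Delta B(t)\) used later with Lemma 2.2 is not modeled.

```python
import numpy as np

def midpoint_decomposition(M_hat: np.ndarray, M_check: np.ndarray):
    """Midpoint (lower + upper)/2 and entrywise radius (upper - lower)/2 of co{M_hat, M_check}."""
    lower = np.minimum(M_hat, M_check)   # underline{M}
    upper = np.maximum(M_hat, M_check)   # bar{M}
    mid = (lower + upper) / 2.0
    radius = (upper - lower) / 2.0
    return mid, radius

# Hypothetical 2x2 connection weights B_hat and B_check.
B_hat = np.array([[1.0, -0.5], [0.3, 2.0]])
B_check = np.array([[0.6, -0.9], [0.3, 1.4]])
B, R = midpoint_decomposition(B_hat, B_check)

# An arbitrary B* in the interval matrix: an entrywise convex combination.
rng = np.random.default_rng(0)
theta = rng.uniform(0.0, 1.0, size=B_hat.shape)
B_star = theta * B_hat + (1.0 - theta) * B_check
Delta_B = B_star - B

# |Delta B(t)| never exceeds the radius entrywise.
assert np.all(np.abs(Delta_B) <= R + 1e-12)
print(B)
print(R)
```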

Let \(A=\frac{\underline{A}+\bar{A}}{2}\), \(B=\frac{\underline{B}+\bar{B}}{2}\), \(C=\frac{\underline{C}+\bar{C}}{2}\), and \(D=\frac{\underline{D}+\bar{D}}{2}\). Then every \(A^{\ast}\in\operatorname{co}\{\hat{A},\check{A}\}\), \(B^{\ast}\in\operatorname{co}\{\hat{B},\check{B}\}\), \(C^{\ast}\in\operatorname{co}\{\hat{C},\check{C}\}\), and \(D^{\ast}\in\operatorname{co}\{\hat{D},\check{D}\}\) can be written as \(A^{\ast}=A+\Delta{A}(t)\), \(B^{\ast}=B+\Delta{B}(t)\), \(C^{\ast}=C+\Delta{C}(t)\), and \(D^{\ast}=D+\Delta{D}(t)\), so that (4) can be described as follows:

Moreover, if \(\hat{a}_{i}=\check{a}_{i}\), \(\hat{c}_{ij}=\check {c}_{ij}\), \(\hat{b}_{ij}=\check{b}_{ij}\), \(\hat{d}_{ij}=\check{d}_{ij}\) (\(i,j=1,2,\ldots,n\)), (5) can be expressed as follows:

With the state feedback \(u=-Fx\), system (6) becomes

Moreover, throughout this paper, the neuron activation functions are assumed to satisfy the following assumption.

Assumption 2.1

The neuron activation function \(f(x(t))\) satisfies

where \(l_{j}>0\) is a known real constant.
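Remark 3.1 states that Assumption 2.1 only requires a Lipschitz-type condition. Assuming it takes the standard form \(|f_{j}(s_{1})-f_{j}(s_{2})|\leq l_{j}|s_{1}-s_{2}|\), the sketch below estimates the smallest such constant for the saturation function used in [31] and in Example 4.1 by sampling difference quotients on a grid; the grid range and resolution are arbitrary choices.

```python
import numpy as np

def saturation(s):
    """Activation f(s) = 0.5(|s + 1| - |s - 1|), as in [31] and Example 4.1."""
    return 0.5 * (np.abs(s + 1.0) - np.abs(s - 1.0))

def estimate_lipschitz(f, lo=-5.0, hi=5.0, n=401):
    """Largest |f(a) - f(b)| / |a - b| over all grid pairs (a lower estimate of the constant)."""
    s = np.linspace(lo, hi, n)
    fs = f(s)
    ds = s[:, None] - s[None, :]      # pairwise differences of arguments
    dfs = fs[:, None] - fs[None, :]   # pairwise differences of values
    off_diag = ds != 0                # exclude pairs with a == b
    return float(np.max(np.abs(dfs[off_diag]) / np.abs(ds[off_diag])))

l_est = estimate_lipschitz(saturation)
print(f"estimated Lipschitz constant: {l_est:.6f}")  # close to 1; the exact constant is 1
```

For this activation the estimate matches \(l_{j}=1\), which is why the saturation function of [31] is a special case of Assumption 2.1.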

To get the main results in this paper, the definition of disturbance attenuation is introduced as follows.

Definition 2.4

Given system (6), the controller \(u=-Fx\) is called disturbance attenuating if system (7) satisfies the following conditions:

(1) when \(h(t)=0\), system (7) is globally asymptotically stable;

(2) when \(h(t)\neq0\), system (7) has a bounded attractor.

Remark 2.2

The attractor of system (7) is an invariant set Ω with two properties: every trajectory starting in Ω remains in Ω for any \(h\in\mathcal{H}\), and every trajectory starting outside Ω eventually enters Ω for any \(h\in\mathcal{H}\).
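Theorem 3.2 takes the attractor to be the ellipsoid \(\Phi=\{x|x^{T}Qx\leq1\}\), so membership is a single quadratic-form test, and the eigenvalues of Q give the semi-axes \(1/\sqrt{\lambda_{i}(Q)}\). The sketch below uses a hypothetical Q, not the matrix computed in Example 4.1.

```python
import numpy as np

def in_attractor(x: np.ndarray, Q: np.ndarray) -> bool:
    """Membership in Phi = {x | x^T Q x <= 1}."""
    return float(x @ Q @ x) <= 1.0

def semi_axes(Q: np.ndarray) -> np.ndarray:
    """Semi-axis lengths of the ellipsoid {x | x^T Q x <= 1} for symmetric Q > 0."""
    eigvals = np.linalg.eigvalsh(Q)   # eigenvalues in ascending order
    return 1.0 / np.sqrt(eigvals)

Q = np.array([[4.0, 0.0], [0.0, 1.0]])        # hypothetical Q > 0
print(in_attractor(np.array([0.4, 0.5]), Q))  # 0.64 + 0.25 = 0.89 <= 1 -> True
print(in_attractor(np.array([0.6, 0.0]), Q))  # 1.44 > 1 -> False
print(semi_axes(Q))                           # semi-axes 1.0 and 0.5
```

A larger Q yields a smaller attractor, so the size of Φ directly reflects how strongly the disturbance is attenuated.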

To establish the feedback controller for systems (7), the following lemmas will be used in this paper.

Lemma 2.1

(Lyapunov–Razumikhin theorem [35])

Consider the following functional differential equation:

Assume that \(\phi\in{C}_{n,\tau}\) and that the map \(f:{C}_{n,\tau}\to{R}^{n}\) is continuous and Lipschitzian in ϕ with \(f(0)=0\). Suppose that \(u(s)\), \(\nu(s)\), \(w(s)\), and \(p(s)\), mapping \(R^{+}\) to \(R^{+}\), are scalar, continuous, nondecreasing functions, that \(u(s)\), \(\nu(s)\), and \(w(s)\) are positive for \(s>0\), that \(u(0)=\nu(0)=0\), and that \(p(s)>s\) for \(s>0\). If there exist a continuous function \(V:R^{n}\to{R}\) and a positive number ρ such that, for all \(x_{t}\in{M}_{V(\rho)}:=\{\phi\in{C}_{n,\tau}:V(\phi(\theta))\leq\rho,\forall\theta\in[-\tau,0]\}\), the following conditions hold:

(1) \(u( \Vert x \Vert )\leq{V}(x)\leq\nu( \Vert x \Vert )\);

(2) \(\dot{V}(x(t))\leq-w( \Vert x \Vert )\) whenever \(V(x(t+\theta))<p(V(x(t)))\) for all \(\theta\in[-\tau,0]\).

Then the solution \(x(t)\equiv0\) of (9) is asymptotically stable. Moreover, the set \({M}_{V(\rho)}\) is an invariant set inside the domain of attraction. Further, if \(u(s)\rightarrow\infty\) as \(s\rightarrow\infty\), then the solution \(x(t)\equiv0\) of (9) is globally asymptotically stable.

Lemma 2.2

([27])

Let H, E, and \(G(t)\) be real matrices of appropriate dimensions with \(G(t)\) satisfying \(G^{T}(t)G(t)\leq{I}\). Then, for any scalar \(\varepsilon>0\), \(HG(t)E+E^{T}G^{T}(t)H^{T}\leq\varepsilon HH^{T}+\varepsilon^{-1}E^{T}E\).
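In its standard form, Lemma 2.2 states that \(HG(t)E+E^{T}G^{T}(t)H^{T}\leq\varepsilon HH^{T}+\varepsilon^{-1}E^{T}E\) for any \(\varepsilon>0\) whenever \(G^{T}(t)G(t)\leq I\). The sketch below spot-checks this numerically: it draws hypothetical random H and E, scales a random G to spectral norm 1 (so that \(G^{T}G\leq I\)), and reports the smallest eigenvalue of the difference, which should be nonnegative. It is a sanity check, not a proof.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p, q = 3, 2, 4                 # H is n x p, G is p x q, E is q x n (hypothetical sizes)
H = rng.standard_normal((n, p))
E = rng.standard_normal((q, n))
G = rng.standard_normal((p, q))
G /= np.linalg.norm(G, 2)         # spectral norm 1, hence G^T G <= I

def lemma_gap(eps: float) -> float:
    """Smallest eigenvalue of eps*H H^T + E^T E / eps - (H G E + E^T G^T H^T)."""
    HGE = H @ G @ E
    lhs = HGE + HGE.T
    rhs = eps * H @ H.T + E.T @ E / eps
    return float(np.linalg.eigvalsh(rhs - lhs).min())

for eps in (0.1, 1.0, 10.0):
    print(eps, lemma_gap(eps))    # each value should be >= 0 up to round-off
```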

3 Main results

In this section, disturbance attenuation is investigated for memristive recurrent neural networks with mixed time-varying delays. Following Definition 2.4, we first derive a condition for the global asymptotic stability of system (7) when \(h(t)=0\), and then prove that system (7) has a bounded attractor when \(h(t)\neq0\). For convenience, denote \(L=\operatorname{diag}\{l_{1},l_{2},\ldots,l_{n}\}\).

Theorem 3.1

Under Assumption 2.1, the memristive neural network (7) with \(h(t)=0\) under the disturbance attenuating controller \(u(t)=-Fx(t)\) is asymptotically stable if there exist matrices \(Q>0\) and F, positive constants \(\varepsilon_{i}\) (\(i=0,1,2\)), and a given positive constant ϵ such that the following inequality holds:

Proof

Consider the following Lyapunov–Razumikhin function candidate:

Taking the time derivative of \(V(t)\) along the solutions of (7) with \(h(t)=0\) yields

For positive constants \(\varepsilon_{i}\) (\(i=0,1,2\)), Lemma 2.2 yields

Moreover, according to Assumption 2.1, it is not difficult to get

Choose \(p(s)=r\cdot{s}\) with \(r>1\) in Lemma 2.1, so that \(p(s)>s\) for \(s>0\). The Razumikhin condition \(V(x(\theta))\leq{p}(V(x(t)))\), \(\theta\in[t-\tau(t),t]\), reads \(x^{T}(\theta)Qx(\theta)\leq r\,x^{T}(t)Qx(t)\). Thus, it follows that

In addition, choosing the controller to be \(u(t)=-Fx(t)\), it is easy to obtain

Since (11) holds, \(r>1\) can be chosen sufficiently close to 1 to guarantee

Thus, combining (13) with (14), it is not difficult to obtain

Hence, \(\dot{V}(t)<0\) for \(x(t)\neq0\) whenever the Razumikhin condition holds. By Lemma 2.1, system (7) with \(h(t)=0\) is asymptotically stable. This completes the proof for the case \(h(t)=0\); the existence of a bounded attractor when \(h(t)\neq0\) is established in Theorem 3.2. □
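In the proof above, \(V(x)=x^{T}Qx\) and \(p(s)=rs\) with \(r>1\), so the decrease of \(V\) only has to be verified along histories satisfying \(x^{T}(\theta)Qx(\theta)\leq r\,x^{T}(t)Qx(t)\) on the delay window. The hypothetical helper below tests this Razumikhin condition on a sampled history; Q, r, and the samples are illustrative values, not data from the paper.

```python
import numpy as np

def razumikhin_condition(history: np.ndarray, x_now: np.ndarray, Q: np.ndarray, r: float) -> bool:
    """True if V(x(theta)) <= r * V(x(t)) for every sampled past state x(theta),
    where V(x) = x^T Q x and p(s) = r * s with r > 1."""
    v_now = float(x_now @ Q @ x_now)
    v_hist = np.einsum("ki,ij,kj->k", history, Q, history)  # V at each sampled past state
    return bool(np.all(v_hist <= r * v_now))

Q = np.array([[2.0, 0.0], [0.0, 1.0]])
x_now = np.array([1.0, 0.0])                               # V(x(t)) = 2
history = np.array([[1.1, 0.0], [0.9, 0.5], [1.0, 0.2]])   # V values 2.42, 1.87, 2.04

print(razumikhin_condition(history, x_now, Q, r=1.3))  # 2.42 <= 2.6 -> True
print(razumikhin_condition(history, x_now, Q, r=1.1))  # 2.42 > 2.2  -> False
```

A larger r enlarges the set of histories on which the decrease of V must be shown, which is why the proof picks r only slightly above 1.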

Theorem 3.2

Under Assumption 2.1, the memristive neural network (7) with \(h(t)\neq0\) has a disturbance attenuating controller \(u(t)=-Fx(t)\) with attractor \(\Phi=\{x|x^{T}Qx\leq1\}\) if there exist matrices \(Q>0\), M, and F, positive constants \(\varepsilon_{i}\) (\(i=0,1,2\)), and a given positive constant ϵ such that the following inequality holds:

where

Proof

Consider the same Lyapunov–Razumikhin function candidate:

Taking the time derivative of \(V(t)\) along the solutions of (7) with \(h(t)\neq0\) yields

Proceeding as in the proof of Theorem 3.1 and letting \(M=QF\), we obtain

Applying Lemma 2.2 to the term \(2x^{T}(t)QGh(t)\) with the given \(\epsilon>0\) yields

Since the bounded disturbances belong to the set \(\mathcal{H}=\{h|h^{T}h\leq1\}\), it follows that

Thus, it follows that

By the Schur complement, (16) is equivalent to

Choosing \(r>1\) sufficiently close to 1 then implies that

Thus, it follows that

Hence, \(\dot{V}(t)<0\) outside Φ, that is, whenever \(x^{T}Qx>1\). Consequently, trajectories starting outside Φ eventually enter Φ for any \(h\in\mathcal{H}\), and trajectories starting in Φ cannot leave it. Therefore, Φ is an invariant set of system (7), and condition (2) of Definition 2.4 holds. Thus, \(u(t)=-Fx(t)\) is a disturbance attenuating controller with attractor \(\Phi=\{x|x^{T}Qx\leq1\}\). This completes the proof. □
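In the proof above, the cross term \(2x^{T}(t)QGh(t)\) is handled with the elementary inequality \(2a^{T}b\leq\epsilon a^{T}a+\epsilon^{-1}b^{T}b\), valid for any \(\epsilon>0\) (the special case of Lemma 2.2 with \(G=I\)), taking \(a=G^{T}Qx(t)\) and \(b=h(t)\), together with \(h^{T}h\leq1\). The derivation below sketches this step; the arrangement of terms in the paper's displayed inequalities may differ.

```latex
2x^{T}(t)QGh(t) = 2\bigl(G^{T}Qx(t)\bigr)^{T}h(t)
  \leq \epsilon\,x^{T}(t)QGG^{T}Qx(t) + \epsilon^{-1}h^{T}(t)h(t)
  \leq \epsilon\,x^{T}(t)QGG^{T}Qx(t) + \epsilon^{-1},
  \qquad h\in\mathcal{H}.
```

The constant \(\epsilon^{-1}\) is what prevents convergence to the origin when \(h(t)\neq0\) and leads instead to the bounded attractor Φ.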

Remark 3.1

Compared with [31], the contributions of this paper lie in three aspects. First, the memristive neural networks studied here have attracted considerable recent interest. Second, the activation function is no longer restricted to \(f(x(\cdot))=0.5(|x(\cdot)+1|-|x(\cdot)-1|)\); it is only required to satisfy a Lipschitz condition. Third, the model contains not only a discrete time-varying delay but also a distributed time-varying delay. Therefore, our results apply to a more general class of networks.

Remark 3.2

Recently, many scholars have studied different control problems for MRNNs, such as exponential synchronization control [12], finite-time synchronization control [13], exponential lag adaptive synchronization control [18], and lag synchronization control [19]. However, to the best of our knowledge, no paper has discussed disturbance attenuating controller design for MRNNs, and this paper is the first to investigate it.

4 Numerical examples

In this section, one example is presented to demonstrate the effectiveness of our results.

Example 4.1

Consider a two-neuron memristive neural network containing both persistent disturbances and mixed time-varying delays:

where

The discrete time-varying delay is assumed to be \(\tau(t)=1+0.4\sin(5t)\), and the distributed time-varying delay is \(\rho(t)=0.81|\cos(t)|\). In addition, the activation functions are \(f_{i}(x_{i})=0.5(|x_{i}+1|-|x_{i}-1|)\) (\(i=1,2\)), and the disturbance is \(h(t)=[0.03\cos t;0.02\sin t]\). Choosing \(\varepsilon_{0}=\varepsilon_{1}=\varepsilon_{2}=\epsilon=1\) and solving LMI (16), we obtain

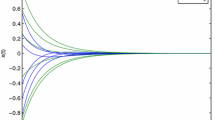

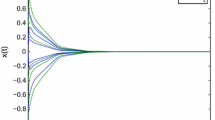

Figure 1 shows the state trajectories of neural network (7) with \(u(t)=-Fx(t)\) when \(h(t)=0\); it indicates that the network is globally asymptotically stable under the feedback controller \(u(t)\). Figure 2 shows neural network (7) under the disturbance attenuating controller \(u(t)=-Fx(t)\) together with the attractor \(\Phi=\{x|x^{T}Qx\leq1\}\).

State trajectories of neural network (1) with \(u(t)\) in the case \(h=0\)
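The delay bounds and the membership \(h\in\mathcal{H}\) used in Example 4.1 can be checked directly: \(\tau(t)=1+0.4\sin(5t)\) gives \(\tau=1.4\), \(\rho(t)=0.81|\cos t|\) gives \(\rho=0.81\), so \(\delta=\max\{\tau,\rho\}=1.4\), and \(h^{T}h=0.0009\cos^{2}t+0.0004\sin^{2}t\leq0.0009\leq1\). The short NumPy sketch below confirms these bounds on a dense time grid (the horizon and grid spacing are arbitrary choices); it does not solve LMI (16).

```python
import numpy as np

t = np.linspace(0.0, 20.0, 200001)                   # dense time grid (arbitrary horizon)
tau_t = 1.0 + 0.4 * np.sin(5.0 * t)                  # discrete delay tau(t)
rho_t = 0.81 * np.abs(np.cos(t))                     # distributed delay rho(t)
h = np.vstack([0.03 * np.cos(t), 0.02 * np.sin(t)])  # disturbance h(t), shape (2, len(t))

tau_bar = 1.4                   # analytic upper bound 1 + 0.4
rho_bar = 0.81                  # analytic upper bound
delta = max(tau_bar, rho_bar)   # delta = max{tau, rho}

hTh = np.sum(h**2, axis=0)      # h^T(t) h(t) at each grid point
print(tau_t.min(), tau_t.max(), rho_t.max())
print(delta, hTh.max())         # h^T h never exceeds 0.03**2 = 0.0009

assert np.all(tau_t >= 0.0) and np.all(tau_t <= tau_bar + 1e-12)
assert np.all(rho_t <= rho_bar + 1e-12)
assert np.all(hTh <= 1.0)       # h(t) belongs to the set H
```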

Remark 4.1

Although the feedback control law is established in the form of a bilinear matrix inequality (BMI), it can be solved easily by alternately fixing some parameters and optimizing the others. By comparison, the approach in [31] requires solving at least four LMIs, which increases the computational burden.
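Whichever formulation is used, checking a candidate solution of a matrix inequality reduces to eigenvalue tests, and the Schur complement invoked in the proof of Theorem 3.2 allows a block inequality to be checked through smaller blocks: \(\begin{bmatrix}S_{11}&S_{12}\\ \ast&S_{22}\end{bmatrix}<0\) if and only if \(S_{22}<0\) and \(S_{11}-S_{12}S_{22}^{-1}S_{12}^{T}<0\). The sketch below verifies this equivalence with NumPy on hypothetical blocks unrelated to (16); it is not a BMI or LMI solver.

```python
import numpy as np

def is_negative_definite(S: np.ndarray, tol: float = 1e-12) -> bool:
    """Symmetric S < 0 iff its largest eigenvalue is negative."""
    return bool(np.linalg.eigvalsh((S + S.T) / 2.0).max() < -tol)

def schur_test(S11: np.ndarray, S12: np.ndarray, S22: np.ndarray) -> bool:
    """[[S11, S12], [S12^T, S22]] < 0  <=>  S22 < 0 and S11 - S12 S22^{-1} S12^T < 0."""
    if not is_negative_definite(S22):
        return False
    return is_negative_definite(S11 - S12 @ np.linalg.solve(S22, S12.T))

# Hypothetical blocks.
S11 = np.array([[-3.0, 0.5], [0.5, -2.0]])
S12 = np.array([[1.0], [0.5]])
S22 = np.array([[-1.0]])
S = np.block([[S11, S12], [S12.T, S22]])

# Both tests should agree (here both report True).
print(is_negative_definite(S), schur_test(S11, S12, S22))
```

The reduced test works on smaller matrices, which is convenient when checking candidate values of Q, M, and F produced by an alternating optimization.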

5 Conclusions

In this paper, the theory of differential inclusions and set-valued maps, together with the Lyapunov–Razumikhin theorem, is employed to design a feedback control law for MRNNs with mixed time-varying delays. The resulting control law has a lower computational burden than the approach in [31]. In the future, other approaches, such as the delay-partitioning technique, may be employed to further reduce the conservativeness of the obtained results.

References

Filippov, A.: Differential Equations with Discontinuous Right Hand Sides. Kluwer Academic, Boston (1988)

Cao, J.D., Huang, D., Qu, Y.: Global robust stability of delayed recurrent neural networks. Chaos Solitons Fractals 23, 221–229 (2005)

Cao, J.D., Wang, J.: Global asymptotic and robust stability of recurrent neural networks with time delays. IEEE Trans. Circuits Syst. I 52, 417–426 (2005)

Ozcan, N., Arik, S.: Global robust stability analysis of neural networks with multiple time delays. IEEE Trans. Circuits Syst. I 53, 166–176 (2006)

Faydasicok, O., Arik, S.: Robust stability analysis of a class of neural networks with discrete time delays. Neural Netw. 29–30, 52–59 (2012)

Zeng, Z.G., Wang, J.: Global exponential stability of recurrent neural networks with time-varying delays in the presence of strong external stimuli. Neural Netw. 19, 1528–1537 (2006)

Zeng, Z.G., Wang, J., Liao, X.: Global asymptotic stability and global exponential stability of neural networks with unbounded time-varying delays. IEEE Trans. Circuits Syst. II, Express Briefs 52, 168–173 (2005)

Shen, Y., Wang, J.: An improved algebraic criterion for global exponential stability of recurrent neural networks with time-varying delays. IEEE Trans. Neural Netw. 19, 528–531 (2008)

Shen, Y., Xia, X.H.: Semi-global finite-time observers for nonlinear systems. Automatica 44, 3152–3156 (2008)

Chua, L.O.: Memristor—The missing circuit element. IEEE Trans. Circuit Theory 18, 507–519 (1971)

Strukov, D.B., Snider, G.S., Stewart, D.R., Williams, R.S.: The missing memristor found. Nature 453, 80–83 (2008)

Wu, A.L., Zeng, Z.G., Zhu, X., Zhang, J.: Exponential synchronization of memristor-based recurrent neural networks with time delays. Neurocomputing 74, 3043–3050 (2011)

Jiang, M.H., Wang, S., Mei, J., Shen, Y.: Finite-time synchronization control of a class of memristor-based recurrent neural networks. Neural Netw. 63, 133–140 (2015)

Wen, S.P., Zeng, Z.G., Huang, T.W., Chen, Y.R.: Passivity analysis of memristor-based recurrent neural networks with time-varying delays. J. Franklin Inst. 350, 2354–2370 (2013)

Xiao, J.Y., Zhong, S.M., Li, Y.T., Xu, F.: Finite-time Mittag–Leffler synchronization of fractional-order memristive BAM neural networks with time delays. Neurocomputing 219, 431–439 (2017)

Wu, A.L., Zeng, Z.G.: Exponential passivity of memristive neural networks with time delays. Neural Netw. 49, 11–18 (2014)

Chandrasekar, A., Rakkiyappan, R., Cao, J., Lakshmanan, S.: Synchronization of memristor-based recurrent neural networks with two delay components based on second-order reciprocally convex approach. Neural Netw. 57, 79–93 (2014)

Wen, S.P., Zeng, Z.G., Huang, T.W., Zhang, Y.D.: Exponential lag adaptive synchronization of memristive neural networks and applications in pseudo-random generators. IEEE Trans. Fuzzy Syst. 22, 1704–1713 (2014)

Wen, S.P., Zeng, Z.G., Huang, T.W., Meng, Q.G., Yao, W.: Lag synchronization of switched neural networks via neural activation function and applications in image encryption. IEEE Trans. Neural Netw. Learn. Syst. 26, 1493–1502 (2015)

Wen, S.P., Huang, T.W., Zeng, Z.G., Chen, Y., Li, P.: Circuit design and exponential stabilization of memristive neural networks. Neural Netw. 63, 48–56 (2015)

Wen, S.P., Bao, G., Zeng, Z.G., Chen, Y.R., Huang, T.W.: Global exponential synchronization of memristor-based recurrent neural networks with time-varying delays. Neural Netw. 48, 195–203 (2013)

Wen, S.P., Zeng, Z.G., Huang, T.W.: \(H_{\infty}\) filtering for neutral systems with mixed delays and multiplicative noises. IEEE Trans. Circuits Syst. II, Express Briefs 59, 820–824 (2012)

Wen, S.P., Zeng, Z.G., Huang, T.W., Li, C.J., Chen, Y.R.: Passivity and passification of stochastic impulsive memristor-based piecewise linear system with mixed delays. Int. J. Robust Nonlinear Control 25, 610–624 (2015)

Xiao, J.Y., Zhong, S.M., Li, Y.T.: Relaxed dissipativity criteria for memristive neural networks with leakage and time-varying delays. Neurocomputing 171, 707–718 (2016)

Xiao, J.Y., Zhong, S.M., Li, Y.T.: Improved passivity criteria for memristive neural networks with interval multiple time-varying delays. Neurocomputing 171, 1414–1430 (2016)

Mathiyalagan, K., Anbuvithya, R., Sakthivel, R., Park, J.H., Prakash, P.: Reliable stabilization for memristor-based recurrent neural networks with time-varying delays. Neurocomputing 153, 140–147 (2015)

Xiao, J.Y., Zhong, S.M., Li, Y.T.: New passivity criteria for memristive uncertain neural networks with leakage and time-varying delays. ISA Trans. 59, 133–148 (2015)

Du, Y., Zhong, S., Zhou, N., Nie, L., Wang, W.: Exponential passivity of BAM neural networks with time-varying delays. Appl. Math. Comput. 221, 727–740 (2013)

Xiao, J., Li, Y., Zhong, S., Xu, F.: Extended dissipative state estimation for memristive neural networks with time-varying delay. ISA Trans. 64, 113–128 (2016)

Zhang, R., Zeng, D., Zhong, S.M., Yu, Y.: Event-triggered sampling control for stability and stabilization of memristive neural networks with communication delays. Appl. Math. Comput. 310, 57–74 (2017)

He, H.L., Yan, L., Jiang, M.: Study on disturbance attenuation of cellular neural networks with time-varying delays. Appl. Math. Comput. 244, 533–541 (2014)

Xiao, J.Y., Zhong, S.M.: Extended dissipative conditions for memristive neural networks with multiple time delays. Appl. Math. Comput. 323, 145–163 (2018)

Kwon, O.M., Park, J.H.: New delay-dependent robust stability criterion for uncertain neural networks with time-varying delays. Appl. Math. Comput. 205, 417–427 (2008)

Park, P., Ko, J.W., Jeong, C.K.: Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47, 235–238 (2011)

Hale, J.K., Verduyn Lunel, S.M.: Introduction to Functional Differential Equations. Springer, New York (1993)

Aubin, J., Frankowska, H.: Set-Valued Analysis. Springer, Berlin (2009)

Wu, X., Ma, X., Zhu, Z., Lu, T.: Topological ergodic shadowing and chaos on uniform spaces. Int. J. Bifurc. Chaos 28, 1850043 (2018)

Wu, X., Ding, X., Lu, T., Wang, J.: Topological dynamics of Zadeh’s extension on upper semi-continuous fuzzy sets. Int. J. Bifurc. Chaos 27, 1750165 (2017)

Wu, X., Wang, X., Chen, G.: On the large deviations theorem of weaker types. Int. J. Bifurc. Chaos 27, 1750127 (2017)

Wu, X., Wang, J., Chen, G.: F-sensitivity and multi-sensitivity of hyperspatial dynamical systems. J. Math. Anal. Appl. 429, 16–26 (2015)

Liu, Y., Park, J.H., Guo, B., Shu, Y.: Further results on stabilization of chaotic systems based on fuzzy memory sampled-data control. IEEE Trans. Fuzzy Syst. 26, 1040–1045 (2018)

Shi, K., Tang, Y., Liu, X., Zhong, S.: Non-fragile sampled-data robust synchronization of uncertain delayed chaotic Lurie systems with randomly occurring controller gain fluctuation. ISA Trans. 66, 185–199 (2017)

Shi, K., Liu, X., Tang, Y., Zhu, H., Zhong, S.: Some novel approaches on state estimation of delayed neural networks. Inf. Sci. 372, 313–331 (2016)

Shi, K., Liu, X., Zhu, H., Zhong, S., Liu, Y., Yin, C.: Novel integral inequality approach on master–slave synchronization of chaotic delayed Lur’e systems with sampled-data feedback control. Nonlinear Dyn. 83, 1259–1274 (2016)

Shi, L., Zhu, H., Zhong, S., Shi, K., Cheng, J.: Cluster synchronization of linearly coupled complex networks via linear and adaptive feedback pinning controls. Nonlinear Dyn. 88, 859–870 (2017)

Zhu, G., Meng, X., Cheng, L.: The dynamics of a mutual interference age structured predator-prey model with time delay and impulsive perturbations on predators. Appl. Math. Comput. 216, 308–316 (2010)

Guo, R., Zhang, Z., Liu, X., Lin, C.: Existence, uniqueness, and exponential stability analysis for complex-valued memristor-based BAM neural networks with time delays. Appl. Math. Comput. 311, 100–107 (2017)

Zhang, T., Meng, X.: Stability analysis of a chemostat model with maintenance energy. Appl. Math. Lett. 68, 1–7 (2017)

Acknowledgements

This work was supported in part by the National Science Foundation of China under Grant 61202045, Grant 11501475, and Grant 61703060, in part by the Science and Technology Innovation Team of Education Department of Sichuan for Dynamical System and its Applications (No. 18TD0013), and in part by the Program of Science and Technology of Sichuan Province of China under Grant No. 2016JY0067.

Author information


Contributions

All authors contributed equally to the writing of this paper. All authors read and approved the manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Xiao, J., Zhong, S. & Xu, F. Design disturbance attenuating controller for memristive recurrent neural networks with mixed time-varying delays. Adv Differ Equ 2018, 189 (2018). https://doi.org/10.1186/s13662-018-1641-8
