Abstract

The exact solution of the fractional combined Korteweg-de Vries and modified Korteweg-de Vries (KdV–mKdV) equation is obtained by using the \((1/G^{\prime})\) expansion method. To investigate the geometrical surface of the exact solution, we choose \(\gamma=1\). The collocation method is applied to the fractional combined KdV–mKdV equation with the help of radial basis functions for \(0<\gamma<1\). The \(L_{2}\) and \(L_{\infty}\) error norms are computed with the Mathematica program. Stability is investigated by von Neumann analysis. Unstable numerical solutions are obtained as the number of node points increases. It is shown that the reason for this is that the exact solution contains some degenerate points in the Lorentz–Minkowski space.

1 Introduction

Fractional calculus generalizes differentiation and integration to non-integer order. Its origins go back to the differential calculus of Leibniz and Newton. Leibniz first introduced derivatives of non-integer order for the function \(f(x)=e^{mx}\), \(m\in R\), as follows:

\[\frac{d^{n}e^{mx}}{dx^{n}}=m^{n}e^{mx},\]

where n is a non-integer value.
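The exponential rule \(d^{n}e^{mx}/dx^{n}=m^{n}e^{mx}\) for non-integer n can be checked numerically. The following is a minimal sketch, assuming the Grünwald–Letnikov definition with the lower terminal at minus infinity and \(m>0\); the function name, step size and truncation length are illustrative choices, not taken from the paper.

```python
import math

def gl_fractional_derivative(f, x, order, h=1e-3, terms=40000):
    """Truncated Grunwald-Letnikov sum for the derivative of the given order.

    Assumes f decays fast enough towards minus infinity that the
    truncated tail is negligible (true for exp(m*x) with m > 0).
    """
    weight = 1.0           # w_0 = 1
    total = f(x)
    for k in range(1, terms):
        # w_k = (-1)^k * binom(order, k), from the standard recurrence
        weight *= 1.0 - (order + 1.0) / k
        total += weight * f(x - k * h)
    return total / h ** order

m, n, x = 1.0, 0.5, 0.3    # illustrative values
approx = gl_fractional_derivative(lambda s: math.exp(m * s), x, n)
exact = m ** n * math.exp(m * x)
print(approx, exact)
```

The two printed values should agree to roughly three significant digits; the remaining gap comes from the first-order accuracy of the sum in h.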

Later, this type of derivative was studied by Liouville, Riemann, Weyl, Lacroix, Leibniz, Grünwald, Letnikov, etc. (cf. [1]).

Since Leibniz introduced the definition of fractional order derivatives, fractional partial differential equations have drawn the attention of many mathematicians and have developed rapidly (cf. [2–14] etc.). Recently, analytical solutions of fractional differential equations have been obtained by the authors in [15, 16]. Furthermore, fractional partial differential equations have many applications in physics and engineering, such as viscoelastic mechanics, power-law phenomena in fluids and complex networks, allometric scaling laws in biology and ecology, colored noise, electrode–electrolyte polarization, dielectric polarization, electromagnetic waves, numerical finance, etc. (cf. [17–21]).

Besides these facts, the combined KdV–mKdV equation, which combines the KdV and mKdV equations, has drawn the attention of several authors (cf. [22–30] etc.). The combined KdV–mKdV equation is one of the most popular equations in soliton physics and in the wave propagation of bound particles. The combined KdV–mKdV equation can be expressed as follows:

where α, β, and s are real constants. In general, the fractional combined KdV–mKdV equation is given in the following form for \(\alpha=2\), \(\beta=3\), \(s=-1\):

with the initial condition

and with boundary conditions

In recent years, the collocation method has been a useful alternative tool to obtain numerical solutions since this method yields multiple numerical solutions depending on whether numerical methods such as finite differences, Runge–Kutta and Crank–Nicolson methods yield only numerical solutions. Using a small number of collocation points, this method has been widely studied by various authors to obtain high accuracy in numerical analysis (cf. [31–36]). On the other hand, radial basis functions are univariate functions which depend only on the distance between points, and they are attractive for high dimensional differential equations. Furthermore, implementation and coding of the collocation method are very practical with these bases. Usually, this method gives more accurate results as the number of node points is increased. We will see that this is not the case when finding numerical solutions of the fractional combined KdV–mKdV equation in the present paper. This situation led us to examine the geometry of numerical solutions.

In the present paper, we obtain the exact solution of the fractional combined KdV–mKdV equation by using the \((1/G^{\prime })\) expansion method. With the help of radial basis functions, we apply the collocation method to this equation and obtain numerical solutions. We recognize that numerical solutions are more accurate for \(h=0.1\) than for \(h=0.01\). Therefore, we investigate the exact solution of the combined KdV–mKdV equation in a Lorentz–Minkowski space. Furthermore, we compute the Gauss curvature and the mean curvature of the exact solution and give a geometrical interpretation of these curvatures at the node points of our numerical solution. Finally, we observe that the exact solution contains some degenerate points in the Lorentz–Minkowski space at \(h=0.01\).

2 Analysis of \((1/G^{\prime})\)-expansion method

The \((1/G^{\prime})\) expansion method is used to obtain traveling wave solutions of nonlinear differential equations. In this section, we first give a brief description of the \((1/G^{\prime})\)-expansion method by following [37]. Then we obtain the exact solution of the combined KdV–mKdV equation by using this method.

Let us consider the following two-variable general form of nonlinear partial differential equation:

If we apply the transformation \(u=u ( x,t ) =u ( \xi ) \), \(\xi=x-Vt\), where V is a constant, to Eq. (5), we get a nonlinear ordinary differential equation for \(u ( \xi ) \) as follows:

Now, assume that a solution of Eq. (6) can be stated as a polynomial in \((1/G^{\prime})\) by

where \(a_{i}\) (\(i=0,1,2,\ldots,m\)) are constants, m is a positive integer determined by balancing the highest order derivative with the highest nonlinear terms in Eq. (6), and \(G=G ( \xi ) \) satisfies the following second order linear ordinary differential equation:

Here, μ and λ are constants.

The method is constructed as follows.

First, we substitute solution (7) into Eq. (6) and use the second order linear ODE given in (8). This gives a set of algebraic equations, one for each power of \((1/G^{\prime})\), and all of their coefficients have to vanish. After solving these algebraic equations, we find the constants \(a_{i}\), \(i\geq0\), and V. Then, substituting \(a_{i}\) and the general solution of Eq. (8) into (7), we obtain solutions of Eq. (5).
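The reason powers of \((1/G^{\prime})\) close under differentiation can be illustrated numerically. The sketch below assumes that the auxiliary equation (8) has the commonly used form \(G^{\prime\prime}+\lambda G^{\prime}+\mu=0\) (the equation itself is not reproduced in this text), so that \(G^{\prime}(\xi)=-\mu/\lambda+Ae^{-\lambda\xi}\) and \(F=1/G^{\prime}\) satisfies \(F^{\prime}=\lambda F+\mu F^{2}\). The integration constant A and the sample point are hypothetical.

```python
import math

def g_prime(xi, lam, mu, A):
    """G'(xi) for the assumed auxiliary ODE G'' + lam*G' + mu = 0."""
    return -mu / lam + A * math.exp(-lam * xi)

def inv_g_prime(xi, lam, mu, A):
    """The expansion variable F = 1/G'."""
    return 1.0 / g_prime(xi, lam, mu, A)

lam, mu = 0.834, 1.0     # parameter values quoted in the paper
A = 3.0                  # hypothetical integration constant
xi, h = 0.2, 1e-5        # sample point and finite-difference step

F = inv_g_prime(xi, lam, mu, A)
# Central difference approximation of F'(xi)
dF = (inv_g_prime(xi + h, lam, mu, A) - inv_g_prime(xi - h, lam, mu, A)) / (2 * h)
# Residual of the Riccati-type identity F' = lam*F + mu*F^2
residual = dF - (lam * F + mu * F * F)
print(residual)
```

Because every derivative of F is again a polynomial in F, substituting a polynomial ansatz in \((1/G^{\prime})\) into the ODE yields a polynomial whose coefficients can be set to zero one by one.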

Example 2.1

Let us consider Eq. (2) for \(\gamma=1\). Balancing \(u^{2}u_{x}\) with \(u_{xxx}\), we obtain \(m=1\). Thus, putting \(u=u ( x,t ) =u ( \xi ) \), \(\xi=x-Vt\) and integrating Eq. (2) once, we get

Now let us write

Substituting Eq. (9) into Eq. (10), we have a system of algebraic equations for the coefficients \(a_{0}\), \(a_{1}\), δ, μ, c, λ, and V. This system is given as follows:

If we find the solutions of system (11) with the aid of Mathematica, then the following cases occur.

Case 1. If we put

and substitute \(a_{0}\) and \(a_{1}\) values into (10), we have the following two types of wave solutions of Eq. (2):

(see Fig. 1).

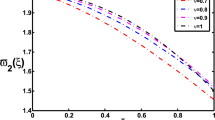

Figure 1 Exact solution \(u_{1} ( x,t ) \) of Eq. (2) by substituting the values \(\lambda=0.834\), \(\mu =1\), \(\alpha=0.8\), \(-3\leq x\leq3\), \(-4\leq t\leq4\), \(\gamma=1\) for the 2D graphic

Case 2. If we put

and substitute \(a_{0}\) and \(a_{1}\) values into (10), we have the following two types of wave solutions of Eq. (2):

(see Fig. 2).

Figure 2 Exact solution \(u_{2} ( x,t )\) of Eq. (2) by substituting the values \(\lambda=0.834\), \(\mu=1\), \(\alpha=0.8\), \(-3\leq x\leq3\), \(-4\leq t\leq4\), \(\gamma=1\) for the 2D graphic

3 Collocation method using radial basis functions

In Eq. (2), \(\frac{\partial^{\gamma}u}{\partial t^{\gamma}}\) is the Caputo fractional derivative of \(u ( x,t )\), which can be approximated through the L1 formula as follows:
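For orientation, the L1 formula in its standard textbook form approximates the Caputo derivative of order \(0<\gamma<1\) at time level n by \(\frac{(\Delta t)^{-\gamma}}{\Gamma(2-\gamma)}\sum_{k=0}^{n-1} [ (k+1)^{1-\gamma}-k^{1-\gamma} ] ( u^{n-k}-u^{n-k-1} )\). The sketch below implements this standard form, which may differ in indexing from the formula used by the authors; the function name and test functions are illustrative.

```python
import math

def caputo_l1(values, dt, gamma):
    """L1 approximation of the Caputo derivative (0 < gamma < 1).

    values[j] holds u(t_j) with t_j = j*dt; the derivative is
    approximated at the last time level.
    """
    n = len(values) - 1
    scale = dt ** (-gamma) / math.gamma(2.0 - gamma)
    total = 0.0
    for k in range(n):
        # Weight b_k = (k+1)^(1-gamma) - k^(1-gamma)
        b_k = (k + 1) ** (1.0 - gamma) - k ** (1.0 - gamma)
        total += b_k * (values[n - k] - values[n - k - 1])
    return scale * total

gamma, dt, steps = 0.8, 0.01, 100          # final time t = 1
times = [j * dt for j in range(steps + 1)]

# u(t) = t: the exact Caputo derivative is t^(1-gamma) / Gamma(2-gamma)
linear = caputo_l1(times, dt, gamma)
linear_exact = 1.0 / math.gamma(2.0 - gamma)

# u(t) = t^2: the exact Caputo derivative is 2 t^(2-gamma) / Gamma(3-gamma)
quadratic = caputo_l1([t * t for t in times], dt, gamma)
quadratic_exact = 2.0 / math.gamma(3.0 - gamma)
print(linear - linear_exact, quadratic - quadratic_exact)
```

The formula is exact for functions that are linear in time, and for smooth functions its error decreases as the time step is refined.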

Now, we discretize the time derivative in (2) by using the Caputo derivative defined in [38] through L1 formula and the space derivative by the Crank–Nicolson formula between two time levels n and \(n+1\), respectively. Thus, we have

Nonlinear terms of the above equation can be linearized by using the following equations:

By a straightforward computation in Eq. (19), it follows that

Now, we shall use radial basis functions.

Radial basis functions are real-valued functions whose values depend only on the distance from the origin, so that \(\phi ( x ) =\phi ( r ) \in \mathbb{R}\) for \(x\in \mathbb{R}^{n}\), or on the distance from a given point \(x_{j}\), so that \(\phi ( x-x_{j} ) =\phi ( r_{j} ) \in \mathbb{R}\). Any function satisfying \(\phi(x)=\phi ( \Vert x \Vert _{2} ) \) is a radial function. In general, the norm \(r_{j}= \Vert x-x_{j} \Vert _{2}\) is taken to be the Euclidean distance. The globally supported radial basis functions used throughout the present paper are given as follows:

where c is the shape parameter. Let \(x_{i}\) (\(i=0,\ldots,n\)) be the collocation points in the interval \([ a,b ] \) such that \(x_{0}=a\) and \(x_{n}=b\). Then the solution of Eq. (2) is approximated as follows:

Here, n is the number of data points, ϕ is a radial basis function, and \(\lambda_{j}\) are unknown coefficients determined by the collocation method. Thus, for each collocation point, Eq. (24) becomes

Putting Eq. (25) into Eq. (22) and writing the collocation points \(x_{i}\) (\(i=0,\ldots,n\)) instead of x, we obtain the following equations:

and

Equations (26) and (27) form a linear system of \((n+1)\) equations in the \((n+1)\) unknown parameters \(\lambda_{j}^{n+1}\). Before the system is solved, the boundary conditions \(u ( a,t ) =\alpha_{1}\) and \(u ( b,t ) =\alpha_{2}\) are applied. Thus, an \(n\times n\) system of equations is obtained at each point of the range from Eq. (25).
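The structure of such a collocation system can be seen in its simplest instance, plain interpolation, where the unknown coefficients are found by requiring the expansion \(\sum_{j}\lambda_{j}\phi ( r_{j} )\) to match given values at the nodes. The sketch below is not the authors' scheme for Eq. (2): it omits the time stepping and the differential operator, assumes the common textbook forms of the MQ, IMQ, IQ and GA functions (the paper's definitions are not reproduced here), and uses a hypothetical shape parameter and test function.

```python
import math

# Common textbook forms of globally supported RBFs (assumed, see lead-in)
def mq(r, c):  return math.sqrt(r * r + c * c)        # multiquadric
def imq(r, c): return 1.0 / math.sqrt(r * r + c * c)  # inverse multiquadric
def iq(r, c):  return 1.0 / (r * r + c * c)           # inverse quadratic
def ga(r, c):  return math.exp(-(c * r) ** 2)         # Gaussian

def solve(matrix, rhs):
    """Solve a dense linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(a[i][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for row in range(col + 1, n):
            factor = a[row][col] / a[col][col]
            for j in range(col, n + 1):
                a[row][j] -= factor * a[col][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (a[i][n] - s) / a[i][i]
    return x

def rbf_fit(nodes, values, phi, c):
    """Coefficients lambda_j such that the expansion matches values at the nodes."""
    matrix = [[phi(abs(xi - xj), c) for xj in nodes] for xi in nodes]
    return solve(matrix, values)

def rbf_eval(x, nodes, coeffs, phi, c):
    """Evaluate the expansion sum_j lambda_j * phi(|x - x_j|)."""
    return sum(l * phi(abs(x - xj), c) for l, xj in zip(coeffs, nodes))

shape = 0.2                                  # hypothetical shape parameter
nodes = [i / 10 for i in range(11)]          # h = 0.1 on [0, 1]
values = [math.sin(x) for x in nodes]        # hypothetical data
coeffs = rbf_fit(nodes, values, mq, shape)
print(rbf_eval(0.55, nodes, coeffs, mq, shape), math.sin(0.55))
```

In a full collocation scheme, the rows of the matrix would contain the discretized differential operator applied to \(\phi\) at interior nodes and the boundary conditions at the end nodes.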

3.1 Stability analysis

In this subsection, we investigate the stability of this method with the help of von Neumann analysis.

The third order derivatives in (25) can be approximated using linear combinations of values of \(u^{k}( x)\) as follows:

where \(\{ \beta_{i}^{ ( k,i ) } \} _{i=1}^{N}\) are the RBF-FD coefficients corresponding to the third order derivatives. For simplicity, stencils with three uniform nodes are used [39]. It is well known that von Neumann stability analysis applies only to partial differential equations with constant coefficients. For \(x= \{ x_{m-1},x_{m},x_{m+1} \} \), we get

where \(A=u^{n}\), \(B=u_{x}^{n}\).

Assume the solutions of (29) to be as follows:

where ℓ is the imaginary unit and φ is real. Firstly, substituting the Fourier mode (30) into the recurrence relationship (29), we obtain

Next, let \(\xi^{n+1}=\zeta\xi^{n}\) and assume that \(\zeta=\zeta ( \varphi ) \) is independent of time. Then we easily obtain the following expression:

Let us denote Eq. (32) as follows:

where

for

Therefore, we get

If the condition \(\vert \zeta \vert \leq1\) is satisfied for every φ, then the proposed method is unconditionally stable.
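The mechanics of a von Neumann analysis can be illustrated on a deliberately simplified model. The sketch below is not the scheme analyzed above: it assumes the integer-order linear equation \(u_{t}+au_{x}+su_{xxx}=0\) with frozen constant coefficients a and s, standard central differences in space (a five-point stencil for \(u_{xxx}\)), and Crank–Nicolson averaging in time. All names and parameter values are hypothetical.

```python
import math

def amplification_factor(phi, dt, h, a, s):
    """Amplification factor zeta(phi) for the simplified model scheme."""
    # Fourier symbols of the central differences acting on exp(i*m*phi)
    d1 = 1j * math.sin(phi) / h
    d3 = 1j * (math.sin(2 * phi) - 2 * math.sin(phi)) / h ** 3
    symbol = a * d1 + s * d3              # symbol of a*u_x + s*u_xxx
    # Crank-Nicolson: (1 + dt/2 * symbol) * zeta = (1 - dt/2 * symbol)
    return (1 - 0.5 * dt * symbol) / (1 + 0.5 * dt * symbol)

dt, h, a, s = 0.01, 0.1, 2.0, -1.0        # hypothetical values
angles = [k * math.pi / 50 for k in range(-50, 51)]
worst = max(abs(abs(amplification_factor(p, dt, h, a, s)) - 1.0) for p in angles)
print(worst)
```

Since the spatial symbol is purely imaginary, the factor has modulus one for every φ and every choice of dt and h, which is what unconditional stability means in this simplified setting.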

3.2 \(L_{2}\) and \(L_{\infty}\) error norms

For the test problem used in the present study, numerical solutions of Eq. (2) are computed with the help of Mathematica software. The \(L_{2}\) error norm is given by

and \(L_{\infty}\) error norm is given by

They are calculated to show the accuracy of the results.
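A minimal sketch of how such error norms are typically computed is given below. It assumes the common discrete definitions \(L_{2}=\sqrt{h\sum_{j} \vert u_{j}^{\mathrm{exact}}-u_{j}^{\mathrm{num}} \vert ^{2}}\) and \(L_{\infty}=\max_{j} \vert u_{j}^{\mathrm{exact}}-u_{j}^{\mathrm{num}} \vert \), which may differ from the authors' formulas by a scaling factor; the sample data are hypothetical.

```python
import math

def l2_error(exact, numeric, h):
    """Discrete L2 norm of the error, weighted by the node spacing h."""
    return math.sqrt(h * sum((e - n) ** 2 for e, n in zip(exact, numeric)))

def linf_error(exact, numeric):
    """Maximum absolute error over all nodes."""
    return max(abs(e - n) for e, n in zip(exact, numeric))

# Hypothetical nodal values, for illustration only
exact = [0.0, 1.0, 2.0]
numeric = [0.0, 1.1, 1.8]
print(l2_error(exact, numeric, h=0.5), linf_error(exact, numeric))
```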

3.3 Test problem

For \(\lambda=0.834\), \(\mu=1\), \(\gamma=0.8\), the exact solution of the fractional combined KdV–mKdV equation is given as follows:

for \(t\geq t_{0}\), \(0\leq x\leq1\).

In our computations, the linearization technique has been applied for the numerical solution of the test problem. The numerical tests are then performed using the Gaussian (GA), inverse quadratic (IQ), inverse multiquadric (IMQ), and multiquadric (MQ) radial basis functions. The collocation matrix does not become ill-conditioned while running the algorithms with GA radial basis functions for \(c=10^{-15}\) for Eq. (2). In Table 1, the error norms \(L_{2}\) and \(L_{\infty}\) of numerical solutions with the IQ basis are compared for \(c=10^{-15}\) and \(\Delta t=0.01\) at time \(t=1\). In Fig. 3, the numerical solutions obtained by the IQ, IMQ, and MQ bases are compared for \(h=0.1\) at time \(t=1\).

We obtain good results for \(h=0.1\). However, the results do not improve for \(h=0.01\). Furthermore, we cannot obtain any result as the number of nodes increases further.

4 Geometry of the exact solution

The geometry of the exact solutions of various equations has been intensely studied by different authors in various ways (cf. [40–44]). In this section, we are going to investigate the exact solution and the numerical solutions in the 3-dimensional space-time known as Lorentz–Minkowski space \(\mathbb{R}_{1}^{3}\). The main reason for choosing to work in this space is that the Lorentz–Minkowski space plays an important role in both special relativity and general relativity with space coordinates and time coordinates.

First, we need to recall some basic facts and notations in \(\mathbb{R}_{1}^{3}\) (cf. [45–49]).

Let \(X= ( x_{1},x_{2},x_{3} ) \) and \(Y= ( y_{1},y_{2},y_{3} ) \) be any two vector fields in \(\mathbb{R}_{1}^{3}\). Then the inner product of X and Y is defined by

Note that a vector field X is called

- (i) a timelike vector if \(\langle X,X \rangle<0\),
- (ii) a spacelike vector if \(\langle X,X \rangle>0\),
- (iii) a lightlike \(( \text{or degenerate} ) \) vector if \(\langle X,X \rangle=0\) and \(X\neq0\).
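The classification above can be sketched in a few lines. The code assumes the inner product \(\langle X,Y \rangle=x_{1}y_{1}+x_{2}y_{2}-x_{3}y_{3}\), that is, signature \((+,+,-)\) with the third coordinate timelike; this is a common convention for \(\mathbb{R}_{1}^{3}\), but the paper's defining formula is not reproduced in this text. The function names and the tolerance are illustrative.

```python
def lorentz_inner(x, y):
    """Inner product of R^3_1 with assumed signature (+, +, -)."""
    return x[0] * y[0] + x[1] * y[1] - x[2] * y[2]

def causal_character(x, tol=1e-12):
    """Classify a vector as timelike, spacelike or lightlike.

    The zero vector is reported separately because the definition of a
    lightlike vector requires X != 0.
    """
    if all(abs(c) <= tol for c in x):
        return "zero"
    q = lorentz_inner(x, x)
    if q < -tol:
        return "timelike"
    if q > tol:
        return "spacelike"
    return "lightlike"

for vector in [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (1.0, 0.0, 1.0)]:
    print(vector, causal_character(vector))
```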

Thus, the inner product in \(\mathbb{R}_{1}^{3}\) splits vector fields into three categories, namely (i) timelike, (ii) spacelike, and (iii) lightlike (degenerate) vectors. The category is known as the causal character of a vector. The set of all lightlike vectors is called the null cone. Furthermore, the norm of a vector X is defined by its causal character as follows:

- (i) \(\Vert X \Vert =\sqrt{ \langle X,X \rangle}\) if X is a spacelike vector,
- (ii) \(\Vert X \Vert =\sqrt{- \langle X,X \rangle}\) if X is a timelike vector.

Let X be a unit timelike vector and \(e=(0,0,1)\) in \(\mathbb{R}_{1}^{3}\). Then X is called

- (i) a timelike future pointing vector if \(\langle X,e \rangle>0\),
- (ii) a timelike past pointing vector if \(\langle X,e \rangle <0\).

Now, let \(r ( x,t ) \) be a surface in \(\mathbb{R}_{1}^{3}\). Then the normal vector N at a point in \(r ( x,t ) \) is given by

where ∧ denotes the wedge product in \(\mathbb{R}_{1}^{3}\). A surface is called

- (i) a timelike surface if N is spacelike,
- (ii) a spacelike surface if N is timelike,
- (iii) a lightlike (or degenerate) surface if N is lightlike.

We note that a point is called regular if \(N\neq0\) and singular if \(N=0\).

Now, let us consider a surface given by

where \(u ( x,t )\) is the exact solution of the fractional combined KdV–mKdV equation given by

In view of (36), the normal vector field of \(r ( x,t ) \) becomes

From (38), it is clear that \(r ( x,t ) \) is a regular surface, that is, every point of it is a regular point.
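The pointwise causal character of such a surface can be explored numerically. The sketch below assumes that the surface is the graph \(r ( x,t ) = ( x,t,u ( x,t ) )\) and that \(\mathbb{R}_{1}^{3}\) carries the signature \((+,+,-)\); under these assumptions \(r_{x}\wedge r_{t}\) is proportional to \(( u_{x},u_{t},1 )\), which never vanishes, and \(\langle N,N \rangle\) has the sign of \(u_{x}^{2}+u_{t}^{2}-1\). The profile `u_demo` is a hypothetical traveling wave, not the exact solution of the paper.

```python
import math

def graph_normal(u, x, t, h=1e-5):
    """Non-normalized normal r_x ^ r_t of the graph (x, t, u(x, t))."""
    ux = (u(x + h, t) - u(x - h, t)) / (2 * h)   # central differences
    ut = (u(x, t + h) - u(x, t - h)) / (2 * h)
    return (-ux, -ut, -1.0)

def surface_character(u, x, t, tol=1e-6):
    """Causal character of the surface at (x, t), read off from the normal."""
    n = graph_normal(u, x, t)
    q = n[0] ** 2 + n[1] ** 2 - n[2] ** 2        # <N, N> with signature (+, +, -)
    if q > tol:
        return "timelike"      # normal is spacelike
    if q < -tol:
        return "spacelike"     # normal is timelike
    return "lightlike"         # degenerate point

def u_demo(x, t):
    """Hypothetical traveling-wave profile used only for illustration."""
    return math.tanh(x - t)

for x in [0.0, 0.5, 1.0, 2.0]:
    print(x, surface_character(u_demo, x, 0.0))
```

For this illustrative profile the character changes from timelike to spacelike as x grows, so a degenerate point lies in between. Refining a node grid places nodes closer to such a point.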

As a consequence of the above facts, we immediately get the following.

Corollary 4.1

For the node points, we have Table 2.

Remark 4.2

From Table 2, we see that the surface \(r(x,t)\) contains at least one degenerate point near \(x=0.94\). As the number of node points increases, the nodes approach the degenerate points. Therefore, numerical solutions become unstable when the number of node points increases.

5 Gaussian curvature of node points

Another important quantity of a surface is its Gaussian curvature, which is an intrinsic invariant of the surface. The Gaussian curvature is the determinant of the shape operator. For a surface \(r ( x,t ) \), we use the following formula to compute the Gaussian curvature:

Consider \(\langle N,N\rangle=\varepsilon\|N\|\), where \(\varepsilon =\mp1\). Let us define

and

Then the Gaussian curvature \(K ( p ) \) at a point p of a surface satisfies

We note that

- (i) \(K ( p ) >0\) means that the surface \(r ( x,t ) \) is shaped like an elliptic paraboloid near p. In this case, p is called an elliptic point.
- (ii) \(K ( p ) <0\) means that the surface \(r ( x,t ) \) is shaped like a hyperbolic paraboloid near p. In this case, p is called a hyperbolic point.
- (iii) \(K ( p ) =0\) means that the surface \(r ( x,t ) \) is shaped like a parabolic cylinder or a plane near p. In this case, p is called a parabolic point.
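For a graph surface, the Gaussian curvature can be evaluated directly from partial derivatives. The sketch below assumes the surface \(( x,t,u ( x,t ) )\) in \(\mathbb{R}_{1}^{3}\) with signature \((+,+,-)\) and the convention \(K=\varepsilon ( eg-f^{2} ) / ( EG-F^{2} )\), which gives \(K=- ( u_{xx}u_{tt}-u_{xt}^{2} ) / ( 1-u_{x}^{2}-u_{t}^{2} ) ^{2}\) at non-degenerate points. The authors' own formulas are not reproduced in this text and may follow a different sign convention, so the signs produced here need not match their classification; the function name is hypothetical.

```python
def gaussian_curvature_graph(ux, ut, uxx, uxt, utt, tol=1e-12):
    """Gaussian curvature of the graph (x, t, u) under the stated assumptions.

    The arguments are the first and second partial derivatives of u at
    the point of interest.
    """
    w = 1.0 - ux * ux - ut * ut      # equals EG - F^2 of the induced metric
    if abs(w) <= tol:
        # The induced metric is singular at a degenerate (lightlike) point
        raise ValueError("degenerate point: Gaussian curvature is undefined")
    return -(uxx * utt - uxt * uxt) / (w * w)

# Illustrative derivative values at a point with horizontal tangent plane
print(gaussian_curvature_graph(0.0, 0.0, 1.0, 0.0, 1.0))
print(gaussian_curvature_graph(0.0, 0.0, 1.0, 0.0, -1.0))
```

The denominator tends to zero as a point approaches a degenerate point, so curvature values computed at nearby nodes can become very large in magnitude.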

Now, let us consider the surface given in (37). From (39) and (40), by a straightforward computation, we get

Another important kind of curvature is the mean curvature, which measures the surface tension from the surrounding space at a point. The mean curvature is a trace of the second fundamental form. For a surface \(r(x,t) \), we use the following formula to compute the mean curvature \(H(p)\):

If \(H(p)=0\) for all points of \(r(x,t)\), then the surface is called minimal. Furthermore, if the value of the mean curvature at a point p indicates that p receives the least possible amount of tension from the surrounding space, then p is called an ideal point. That is, if a point in a surface is affected as little as possible by external influence, then it is ideal.

From (41), we obtain

As a consequence of the above facts, we get the following corollary:

Corollary 5.1

For the node points of \(r(x,t)\), we have Table 3.

Remark 5.2

From Table 3, we see that if x approaches 0.94, then the values of Gauss curvature and mean curvature change remarkably. Therefore, there exists the maximum external influence near the point 0.94.

6 Conclusions

Using the \((1/G')\) expansion method, the exact solution \(r(x,t)\) of the fractional combined KdV–mKdV equation is obtained. The numerical solutions of the fractional combined KdV–mKdV equation are obtained by using the collocation method. These solutions are compared with the exact solution. The computational efficiency and effectiveness of the proposed method were tested on a problem. The error norms \(L_{2}\) and \(L_{\infty}\) have been calculated. The obtained results show that the error norms are small during all computer runs for all bases except for the MQ basis. It was shown that the present method is a particularly successful numerical scheme to solve the fractional combined KdV–mKdV equation. However, numerical solutions are more accurate for \(h=0.1\) than for \(h=0.01\). Therefore, the causal character of the exact solution was determined at the nodal points. From Tables 1 and 2, it was found that the most accurate numerical solution occurred in the timelike case of \(r(x,t)\), and there exists at least one degenerate point near \(x=0.94\). Furthermore, from Table 3, it was found that the most accurate numerical solution occurred at the elliptic points of \(r(x,t)\), and the ideal node point of \(r(x,t)\) is \(x=0\).

References

[1] Das, S.: Functional Fractional Calculus. Springer, Berlin (2011)

[2] Agarwal, R.P., Benchohra, M., Hamani, S., Pinelas, S.: Boundary value problems for differential equations involving Riemann–Liouville fractional derivative on the half line. Dyn. Contin. Discrete Impuls. Syst., Ser. A Math. Anal. 18(2), 235–244 (2011)

[3] Agarwal, R.P., Benchohra, M., Hamani, S.: Boundary value problems for fractional differential equations. Georgian Math. J. 16(3), 401–411 (2009)

[4] Kaya, D., Yokuş, A.: Stability analysis and numerical solutions for time fractional KdVb equation. In: International Conference on Computational Experimental Science and Engineering, Antalya (2014)

[5] Khater, A., Helal, M., El-Kalaawy, O.: Bäcklund transformations: exact solutions for the KdV and the Calogero–Degasperis–Fokas mKdV equations. Math. Methods Appl. Sci. 21(8), 719–731 (1998)

[6] Kumar, D., Singh, J., Baleanu, D., et al.: Analysis of regularized long-wave equation associated with a new fractional operator with Mittag–Leffler type kernel. Phys. A, Stat. Mech. Appl. 492, 155–167 (2018)

[7] Kumar, D., Singh, J., Baleanu, D.: A new fractional model for convective straight fins with temperature-dependent thermal conductivity. Therm. Sci. 1, 1–12 (2017)

[8] Momani, S., Noor, M.A.: Numerical methods for fourth-order fractional integro-differential equations. Appl. Math. Comput. 182(1), 754–760 (2006)

[9] Momani, S., Odibat, Z.: Homotopy perturbation method for nonlinear partial differential equations of fractional order. Phys. Lett. A 365(5), 345–350 (2007)

[10] Safari, M., Ganji, D., Moslemi, M.: Application of He’s variational iteration method and Adomian’s decomposition method to the fractional KdV–Burgers–Kuramoto equation. Comput. Math. Appl. 58(11), 2091–2097 (2009)

[11] Sakar, M.G., Akgül, A., Baleanu, D.: On solutions of fractional Riccati differential equations. Adv. Differ. Equ. 2017(1), 39 (2017)

[12] Singh, J., Kumar, D., Baleanu, D.: On the analysis of chemical kinetics system pertaining to a fractional derivative with Mittag–Leffler type kernel. Chaos, Interdiscip. J. Nonlinear Sci. 27(10), 103113 (2017)

[13] Song, L., Zhang, H.: Application of homotopy analysis method to fractional KdV–Burgers–Kuramoto equation. Phys. Lett. A 367(1), 88–94 (2007)

[14] Wei, L., He, Y., Yildirim, A., Kumar, S.: Numerical algorithm based on an implicit fully discrete local discontinuous Galerkin method for the time-fractional KdV–Burgers–Kuramoto equation. Z. Angew. Math. Mech. 93(1), 14–28 (2013)

[15] Choi, J., Kumar, D., Singh, J., Swroop, R.: Analytical techniques for system of time fractional nonlinear differential equations. J. Korean Math. Soc. 54(4), 1209–1229 (2017)

[16] Kumar, D., Singh, J., Baleanu, D.: A new numerical algorithm for fractional Fitzhugh–Nagumo equation arising in transmission of nerve impulses. Nonlinear Dyn. 91, 307–317 (2018)

[17] Baleanu, D., Jajarmi, A., Hajipour, M.: A new formulation of the fractional optimal control problems involving Mittag–Leffler nonsingular kernel. J. Optim. Theory Appl. 175(3), 718–737 (2017)

[18] Baleanu, D., Jajarmi, A., Asad, J., Blaszczyk, T.: The motion of a bead sliding on a wire in fractional sense. Acta Phys. Pol. A 131(6), 1561–1564 (2017)

[19] Hajipour, M., Jajarmi, A., Baleanu, D.: An efficient nonstandard finite difference scheme for a class of fractional chaotic systems. J. Comput. Nonlinear Dyn. 13(2), 021013 (2018)

[20] Jajarmi, A., Hajipour, M., Baleanu, D.: New aspects of the adaptive synchronization and hyperchaos suppression of a financial model. Chaos Solitons Fractals 99, 285–296 (2017)

[21] Miller, K.S., Ross, B.: An Introduction to the Fractional Calculus and Fractional Differential Equations (1993)

[22] Aslan, E.C., Inc, M., Qurashi, M.A., Baleanu, D.: On numerical solutions of time-fraction generalized Hirota Satsuma coupled KdV equation. J. Nonlinear Sci. Appl. 10(2), 724–733 (2017)

[23] Bandyopadhyay, S.: A new class of solutions of combined KdV–mKdV equation. arXiv preprint (2014). arXiv:1411.7077

[24] Djoudi, W., Zerarka, A.: Exact solutions for the KdV–mKdV equation with time-dependent coefficients using the modified functional variable method. Cogent Math. 3(1), 1218405 (2016)

[25] Jafari, H., Tajadodi, H., Baleanu, D., Al-Zahrani, A.A., Alhamed, Y.A., Zahid, A.H.: Exact solutions of Boussinesq and KdV–mKdV equations by fractional sub-equation method. Rom. Rep. Phys. 65(4), 1119–1124 (2013)

[26] Kaya, D., Inan, I.E.: A numerical application of the decomposition method for the combined KdV–mKdV equation. Appl. Math. Comput. 168(2), 915–926 (2005)

[27] Krishnan, E., Peng, Y.-Z.: Exact solutions to the combined KdV–mKdV equation by the extended mapping method. Phys. Scr. 73(4), 405–409 (2006)

[28] Lu, D., Shi, Q.: New solitary wave solutions for the combined KdV–mKdV equation. J. Inf. Comput. Sci. 8(7), 1733–1737 (2010)

[29] Sierra, C.G., Molati, M., Ramollo, M.P.: Exact solutions of a generalized KdV–mKdV equation. Int. J. Nonlinear Sci. 13(1), 94–98 (2012)

[30] Triki, H., Taha, T.R., Wazwaz, A.-M.: Solitary wave solutions for a generalized KdV–mKdV equation with variable coefficients. Math. Comput. Simul. 80(9), 1867–1873 (2010)

[31] Golbabai, A., Nikan, O.: A meshless method for numerical solution of fractional differential equations. Casp. J. Math. Sci. 4(1), 1–8 (2015)

[32] Rong-Jun, C., Yu-Min, C.: A meshless method for the compound KdV–Burgers equation. Chin. Phys. B 20(7), 070206 (2011)

[33] Hu, H.-Y., Li, Z.-C., Cheng, A.H.-D.: Radial basis collocation methods for elliptic boundary value problems. Comput. Math. Appl. 50(1–2), 289–320 (2005)

[34] Hon, Y., Schaback, R.: On unsymmetric collocation by radial basis functions. Appl. Math. Comput. 119(2), 177–186 (2001)

[35] Haq, S., Uddin, M., et al.: Numerical solution of nonlinear Schrodinger equations by collocation method using radial basis functions. Comput. Model. Eng. Sci. 44(2), 115–136 (2009)

[36] Šarler, B., Vertnik, R., Kosec, G., et al.: Radial basis function collocation method for the numerical solution of the two-dimensional transient nonlinear coupled Burgers equations. Appl. Math. Model. 36(3), 1148–1160 (2012)

[37] Yokus, A., Kaya, D.: Numerical and exact solutions for time fractional Burgers’ equation. J. Nonlinear Sci. Appl. 10(7), 3419–3428 (2017)

[38] Oldham, K., Spanier, J.: The Fractional Calculus. Academic Press, New York (1974)

[39] Golbabai, A., Mohebianfar, E.: A new stable local radial basis function approach for option pricing. Comput. Econ. 49(2), 271–288 (2017)

[40] Sasaki, R.: Soliton equations and pseudospherical surfaces. Nucl. Phys. B 154(2), 343–357 (1979)

[41] Bracken, P.: Surfaces specified by integrable systems of partial differential equations determined by structure equations and Lax pair. J. Geom. Phys. 60(4), 562–569 (2010)

[42] Altalla, F.H.: Exact solution for some nonlinear partial differential equation which describes pseudo-spherical surfaces. PhD thesis, Zarqa University (2015)

[43] Matveev, V.B., Matveev, V.: Darboux Transformations and Solitons (1991)

[44] Rogers, C., Schief, W.K.: Bäcklund and Darboux Transformations: Geometry and Modern Applications in Soliton Theory. Cambridge Texts in Applied Mathematics, vol. 30. Cambridge University Press, Cambridge (2002)

[45] Duggal, K.L., Bejancu, A.: Lightlike Submanifolds of Semi-Riemannian Manifolds and Applications. Mathematics and Its Applications, vol. 364. Springer, Dordrecht (2013)

[46] Duggal, K.L., Jin, D.H.: Null Curves and Hypersurfaces of Semi-Riemannian Manifolds. World Scientific, Singapore (2007)

[47] Duggal, K.L., Sahin, B.: Differential Geometry of Lightlike Submanifolds. Springer, Basel (2011)

[48] López, R.: Differential geometry of curves and surfaces in Lorentz–Minkowski space. arXiv preprint (2008). arXiv:0810.3351

[49] O’Neill, B.: Semi-Riemannian Geometry with Applications to Relativity. Pure and Applied Mathematics, vol. 103. Academic Press, New York (1983)

Acknowledgements

The authors are thankful to the referees for their valuable comments and constructive suggestions towards the improvement of the paper.

Author information

Contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Kaya, D., Gülbahar, S., Yokuş, A. et al. Solutions of the fractional combined KdV–mKdV equation with collocation method using radial basis function and their geometrical obstructions. Adv Differ Equ 2018, 77 (2018). https://doi.org/10.1186/s13662-018-1531-0

DOI: https://doi.org/10.1186/s13662-018-1531-0