Abstract

In this paper, we investigate the existence of solutions for a fourth-order differential equation with multi-strip integral boundary conditions. Our analysis relies on the shooting method and the Sturm comparison theorem. Finally, an example is given to illustrate the main result.

1 Introduction

In this paper, we study the existence of positive solutions for a boundary value problem for a nonlinear fourth-order impulsive differential equation with multi-strip integral conditions given by

where \(0<\alpha_{i}<\beta_{i} <1\), \(0<\xi_{i}<\eta_{i}<1\), \(\gamma_{i} >0\), \(\rho _{i}>0\), \(i=1,2,\ldots,n\), \(\lambda>0\), \(\mu>0\). \(J=[0,1]\), \(J^{*}=J\backslash\{t_{1},t_{2},\ldots,t_{p}\}\), \(0=t_{0}< t_{1}< t_{2}<\cdots<t_{p}<t_{p+1}=1\), \(\Delta u(t_{k})=u(t_{k}^{+})-u(t_{k}^{-})\) denotes the jump of \(u(t)\) at \(t=t_{k}\), \(u(t_{k}^{+})\) and \(u(t_{k}^{-})\) represent the right and left limits of \(u(t)\) at \(t=t_{k}\), respectively, \(\Delta u'(t_{k})\) has a similar meaning for \(u'(t)\).

Multi-strip conditions correspond to a linear combination of integrals of the unknown function over sub-intervals of J. Such conditions appear in mathematical models of physical phenomena; see, for instance, [1, 2]. They have applications in areas such as blood flow problems, semiconductor problems, hydrodynamic problems, thermoelectric flexibility, underground water flow and so on. For a detailed description of multi-strip integral boundary conditions, we refer the reader to the papers [3–6].

Impulsive differential equations, which provide a natural description of observed evolution processes, are regarded as important mathematical tools for a better understanding of several real world problems in the applied sciences. For an overview of existing results and of recent research areas of impulsive differential equations, see [7–10].

The existing literature on fourth-order nonlocal integral boundary value problems is extensive, and a variety of methods have been developed. In general, fixed point theorems in cones, the method of upper and lower solutions, the monotone iterative technique, critical point theory and variational methods play important roles in establishing the existence of solutions to boundary value problems. It is well known that the classical shooting method can also be used effectively to prove existence results for boundary value problems of differential equations. To some extent, this approach has an advantage over the traditional methods. See [11–16] and the references therein for details.

To the best of our knowledge, no paper has yet used the shooting method to study the existence of positive solutions for a fourth-order impulsive differential equation with multi-strip integral boundary conditions. Motivated by the work mentioned above, in this paper we employ the shooting method to establish criteria for the existence of positive solutions to BVP (1.1).

The rest of the paper is organized as follows. In Section 2, we provide some necessary lemmas. In particular, we transform the fourth-order impulsive problem (1.1) into a second-order differential integral equation BVP (2.10), and by using the shooting method, we convert BVP (2.10) into a corresponding IVP (initial value problem). In Section 3, the main theorem is stated and proved. Finally, in Section 4, an example is given to illustrate the main result.

Throughout the paper, we always assume that:

- (H1): \(f\in C(\mathbb{R^{+}}\times\mathbb{R^{-}}\times\mathbb{R^{-}} , \mathbb{R}^{+})\), \(I_{k}\in C(\mathbb{R^{+}},\mathbb{R^{-}})\), \(J_{k}\in C(\mathbb{R^{+}}\times\mathbb{R},\mathbb{R^{-}})\) for \(1\leqslant k\leqslant p \), where \(\mathbb{R}^{+}=[0,\infty)\), \(\mathbb{R}^{-}=(-\infty ,0]\);

- (H2): \(h\), \(g_{1}\), \(g_{2}\in C(J,\mathbb{R}^{+}) \);

- (H3): \(\lambda,\mu,\gamma_{i} ,\rho_{i} >0\) for \(i=1,2,\ldots,n\) and \(0<\Gamma=\sum_{i=1}^{n} \gamma_{i}\int_{\alpha_{i}}^{\beta _{i}}g_{1}(t)\,dt<1\).

2 Preliminaries

For \(v(t)\in C(J)\), we consider the equation

subject to the boundary conditions of (1.1).

We need to reduce BVP (1.1) to two second-order BVPs. To this end, first of all, by means of the transformation

we convert problem (2.1) into the BVP

and the BVP

Lemma 2.1

Assume that conditions (H1)-(H3) hold. Then, for any \(y(t)\in C(J)\), BVP (2.3) has a unique solution as follows:

where

and

Proof

Integrating (2.3), we get

In the same way, we get

Setting \(t=1\) in (2.6) and (2.7) yields

Taking into account the integral boundary condition of (2.3), we have

Combining with (2.7), we have

where \(G(t,s)\) is defined in Lemma 2.1.

Further, it holds that

where Γ is given by (H3). Therefore,

Substituting (2.9) into (2.8), we get

where \(H(t,s)\) and \(\Phi(t,I_{k}(u(t_{k})))\) are given previously. □

Lemma 2.2

Assume that conditions (H2)-(H3) hold. \(G(t,s)\) and \(H(t,s)\) are given as in the statement of Lemma 2.1. Then \(G(t,s)\geqslant0\), \(H(t,s)\geqslant0\) for any \(t,s\in[0,1]\).

We consider the operator A defined by

and the operator B defined by

Then BVP (1.1) is equivalent to the following second-order differential integral equation BVP:

Lemma 2.3

If y is a positive solution of (2.10), then \(u=Ay\) is a positive solution of (1.1).

Proof

Assume y is a positive solution of (2.10), then \(y(t)>0\) for \(t\in (0,1)\), and it follows from \(u(t)=(Ay)(t)\) that \(u(t)\) satisfies (2.5). By (H1) and Lemma 2.2, we can obtain

This ends the proof. □

The shooting method converts the BVP into an IVP: we look for a suitable initial value m such that the solution of the equation in (2.10), subject to the following initial conditions, also satisfies the boundary condition:

Under assumptions (H1)-(H3), denote by \(y(t,m)\) the solution of IVP (2.11). We assume that f is sufficiently smooth to guarantee that \(y(t,m)\) is uniquely defined and depends continuously on both t and m. Problems of this kind are studied in [17]. Consequently, the solution of IVP (2.11) exists.

Denote

Then solving (2.10) is equivalent to finding \(m^{*}\) such that \(k(m^{*})=1\).
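The shooting idea described above can be sketched numerically. The following is a minimal illustration and not the authors' construction. It assumes a simplified model \(y''+h(t)f(y)=0\), \(y(0)=m\), \(y'(0)=0\), in which the nonlinearity depends on y only (the operators A and B are dropped), and it assumes that \(k(m)\) is the ratio of the strip integral \(\sum_{i}\rho_{i}\int_{\xi_{i}}^{\eta_{i}}g_{2}(t)y(t,m)\,dt\) to \(y(1,m)\). The data (\(f(y)=y^{2}\), \(h\equiv1\), \(g_{2}\equiv1\), one strip \([\frac{1}{4},\frac{3}{4}]\) with \(\rho_{1}=1\)) and the names `solve_ivp_rk4`, `k_of_m` and `shoot` are hypothetical.

```python
def solve_ivp_rk4(f, h, m, n_steps=400):
    """Integrate the assumed model IVP y'' = -h(t) f(y), y(0) = m, y'(0) = 0
    on [0, 1] with the classical fourth-order Runge-Kutta scheme."""
    dt = 1.0 / n_steps
    t, y, v = 0.0, m, 0.0  # v stands for y'
    ts, ys = [t], [y]

    def rhs(t, y, v):
        # First-order system: y' = v, v' = -h(t) f(y)
        return v, -h(t) * f(y)

    for i in range(n_steps):
        k1y, k1v = rhs(t, y, v)
        k2y, k2v = rhs(t + dt / 2, y + dt / 2 * k1y, v + dt / 2 * k1v)
        k3y, k3v = rhs(t + dt / 2, y + dt / 2 * k2y, v + dt / 2 * k2v)
        k4y, k4v = rhs(t + dt, y + dt * k3y, v + dt * k3v)
        y += dt / 6 * (k1y + 2 * k2y + 2 * k3y + k4y)
        v += dt / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
        t = (i + 1) * dt
        ts.append(t)
        ys.append(y)
    return ts, ys


def k_of_m(m, f, h, g2, strips, n_steps=400):
    """Assumed shooting function: strip integral of g2 * y divided by y(1, m).
    Each strip is (rho, xi, eta); endpoints are assumed to lie on the grid."""
    ts, ys = solve_ivp_rk4(f, h, m, n_steps)
    dt = 1.0 / n_steps
    total = 0.0
    for rho, xi, eta in strips:
        i0, i1 = round(xi * n_steps), round(eta * n_steps)
        vals = [g2(ts[i]) * ys[i] for i in range(i0, i1 + 1)]
        # Composite trapezoidal rule on [xi, eta]
        total += rho * dt * (sum(vals) - 0.5 * (vals[0] + vals[-1]))
    return total / ys[-1]


def shoot(f, h, g2, strips, m_lo, m_hi, tol=1e-10):
    """Bisection for k(m*) = 1, given a bracket with k(m_lo) <= 1 <= k(m_hi)."""
    k = lambda m: k_of_m(m, f, h, g2, strips)
    if not (k(m_lo) <= 1.0 <= k(m_hi)):
        raise ValueError("k(m_lo) <= 1 <= k(m_hi) is required")
    while m_hi - m_lo > tol:
        mid = 0.5 * (m_lo + m_hi)
        if k(mid) < 1.0:
            m_lo = mid
        else:
            m_hi = mid
    return 0.5 * (m_lo + m_hi)


# Hypothetical data, chosen only so that the bracket exists.
f = lambda y: y * y
h = lambda t: 1.0
g2 = lambda t: 1.0
strips = [(1.0, 0.25, 0.75)]
m_star = shoot(f, h, g2, strips, m_lo=0.01, m_hi=2.5)
print(m_star, k_of_m(m_star, f, h, g2, strips))
```

Bisection is used here only because it needs nothing beyond continuity of \(k\) in m and a bracket; it is a numerical stand-in for the existence argument, not a replacement for it.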

Lemma 2.4

([18])

If there exist two initial values \(m_{1}>0\) and \(m_{2}>0\) such that

- (i) the solution \(y(t,m_{1})\) of (2.11) remains positive in \((0,1)\) and \(k(m_{1})\leqslant1\);

- (ii) the solution \(y(t,m_{2})\) of (2.11) remains positive in \((0,1)\) and \(k(m_{2})\geqslant1\).

Then BVP (2.10) has a positive solution with \(y(0)=m^{*}\) between \(m_{1}\) and \(m_{2}\).

Now we introduce the Sturm comparison theorem derived from [19].

Lemma 2.5

Let \(y(t,m)\), \(z(t,m)\), \(Z(t,m)\) be the solutions of the following IVPs, respectively:

and suppose that \(F(t)\), \(g(t)\) and \(G(t)\) are continuous functions defined on \([0,1]\) satisfying

If \(Z(t,m)\) does not vanish in \([0,1]\), then for any \(0 \leqslant t\leqslant1\) we have

further

Proof

The classical Sturm comparison theorem gives us the inequalities

Integrating these inequalities over \([t,1]\) yields (2.12). In view of (H2), (H3) and the continuity of the integral, (2.13) can be obtained. □
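For constant coefficients the comparison can be checked by hand, because the IVP \(y''+a^{2}y=0\), \(y(0)=m\), \(y'(0)=0\) has the solution \(m\cos(at)\). The sketch below assumes that the ratio inequality (2.12) has the form \(z(t)/z(1)\leqslant y(t)/y(1)\leqslant Z(t)/Z(1)\); the displayed formula is not reproduced here, so this form is an assumption inferred from how the lemma is used later. The constants \(g=a^{2}\), \(F=b^{2}\), \(G=c^{2}\) with \(0\leqslant a\leqslant b\leqslant c<\frac{\pi}{2}\) and the function names are hypothetical.

```python
import math


def ratio(a, t):
    """Normalized solution y(t)/y(1) of y'' + a^2 y = 0, y(0) = m, y'(0) = 0.
    The initial value m cancels, leaving cos(a t) / cos(a)."""
    return math.cos(a * t) / math.cos(a)


def check_ordering(a, b, c, n_grid=200):
    """Return True if ratio(a, t) <= ratio(b, t) <= ratio(c, t) on a grid of [0, 1]."""
    assert 0.0 <= a <= b <= c < math.pi / 2
    eps = 1e-12  # tolerance for rounding error where the ratios coincide
    for j in range(n_grid + 1):
        t = j / n_grid
        lower_ok = ratio(a, t) <= ratio(b, t) + eps
        upper_ok = ratio(b, t) <= ratio(c, t) + eps
        if not (lower_ok and upper_ok):
            return False
    return True


print(check_ordering(0.0, 0.5, 1.5))
```

The case \(a=0\) corresponds to the constant comparison solution \(z(t)=m\), whose normalized ratio is identically 1.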

Lemma 2.6

Under assumptions (H1)-(H3), the shooting solution \(y(t,m)\) of IVP (2.11) has the following properties:

Proof

Integrating both sides of equation (2.11) from 0 to t, we have

Indeed,

This ends the proof. □

Lemma 2.7

Let \(\sum_{i=1}^{n}\rho_{i}\int_{\xi_{i}}^{\eta_{i}}g_{2}(t)\,dt>1\), then BVP (2.10) has no positive solution.

Proof

Suppose, to the contrary, that BVP (2.10) has a positive solution \(y(t)\). Then \(y(t)=y(t,m)\) is a positive solution of IVP (2.11) with \(m=y(0)>0\). For any given \(m>0\), the solution \(y(t,m)\) of IVP (2.11) will be compared with the solution \(z(t)=m\) of

By Lemmas 2.4 and 2.5, we have

Since \(k(m)=1\), this gives \(\sum_{i=1}^{n} \rho_{i}\int_{\xi _{i}}^{\eta_{i}}g_{2}(t)\,dt\leqslant1\), which contradicts the hypothesis. □
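Written out, the comparison in this proof amounts to a single chain of inequalities. The display below is a sketch under the assumption that \(k(m)\) denotes the ratio of the strip integral to \(y(1,m)\) (its definition is not reproduced above) and that Lemma 2.5 gives \(y(t,m)/y(1,m)\geqslant z(t)/z(1)\):

\[
k(m)=\sum_{i=1}^{n}\rho_{i}\int_{\xi_{i}}^{\eta_{i}}g_{2}(t)\frac{y(t,m)}{y(1,m)}\,dt
\geqslant\sum_{i=1}^{n}\rho_{i}\int_{\xi_{i}}^{\eta_{i}}g_{2}(t)\frac{z(t)}{z(1)}\,dt
=\sum_{i=1}^{n}\rho_{i}\int_{\xi_{i}}^{\eta_{i}}g_{2}(t)\,dt .
\]

With \(z(t)=m\) the quotient \(z(t)/z(1)\) equals 1, so \(k(m)=1\) is incompatible with the right-hand side being greater than 1.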

In the rest of the paper, we always assume

- (H4): \(0<\Lambda=\sum_{i=1}^{n} \rho_{i}\int_{\xi_{i}}^{\eta _{i}}g_{2}(t)\,dt<1\).

3 Existence results

For the sake of convenience, we denote

Lemma 3.1

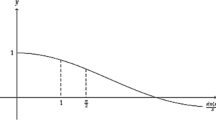

Let \(g_{2}^{L} \sum_{i=1}^{n} \rho_{i}(\eta_{i} -\xi_{i})\leqslant1\). Then there exists a real number \(x=A_{1}\in (0,\frac{\pi}{2} )\) satisfying the inequality

and another real number \(x=A_{2}\in (0,\frac{\pi}{2} )\) satisfying the inequality

Proof

It is easy to show that

Since the function \(f_{1}(x)\) is continuous on \((0,\frac{\pi}{2} )\), there exists a real number \(A_{1}\in (0,\frac{\pi}{2} )\) such that \(f_{1}(A_{1})\leqslant1\).

Analogously,

Thus, there exists a real number \(A_{2}\in (0,\frac{\pi}{2} )\) such that \(f_{2}(A_{2})\geqslant1\). □

Set

Theorem 3.1

Assume that (H1)-(H4) hold. Suppose that one of the following conditions holds:

- (i) \(0\leqslant f^{0} < \frac{\underline{A}^{2}}{h^{L}}\), \(f_{\infty}>\frac{\bar{A}^{2}}{h^{l}}\);

- (ii) \(0\leqslant f^{\infty}< \frac{\underline{A}^{2}}{h^{L}}\), \(f_{0}>\frac{\bar{A}^{2}}{h^{l}}\).

Then problem (2.10) has at least one positive solution, where

\(A_{1}\) and \(A_{2}\) are defined by Lemma 3.1.

Proof

(i) Since \(0\leqslant f^{0} < \frac{\underline{A}^{2}}{h^{L}}\), there exists a positive number r such that

Let \(0< m_{1}< r\). Then \(y(t,m_{1})\leqslant y(0,m_{1})= m_{1}< r\). According to (H1) and (H2), we have

Let \(Z(t)=m_{1}\cos(A_{1}t)\) for \(t\in[0,1]\), then \(Z(t)\) satisfies the following IVP:
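That \(Z\) solves a linear IVP of the type used in Lemma 2.5 follows from a one-line computation, written here as a quick check rather than as the paper's numbered display:

\[
Z'(t)=-m_{1}A_{1}\sin(A_{1}t),\qquad Z''(t)=-m_{1}A_{1}^{2}\cos(A_{1}t)=-A_{1}^{2}Z(t),
\]

so \(Z''(t)+A_{1}^{2}Z(t)=0\) with \(Z(0)=m_{1}\) and \(Z'(0)=0\). Since \(A_{1}\in(0,\frac{\pi}{2})\), we also have \(Z(t)\geqslant m_{1}\cos A_{1}>0\) on \([0,1]\), so \(Z\) does not vanish there.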

Applying the Sturm comparison theorem, from (3.2), Lemma 2.5 and Lemma 3.1, we have

On the other hand, the second part of condition (i) guarantees that there exists a number L large enough such that

and there exists some fixed positive constant \(\varepsilon<\min \{A_{2},\frac{\pi}{2}-A_{2} \}\) small enough such that

In what follows, we need to find a positive number \(m_{2}\) satisfying \(k(m_{2})>1\).

Claim

There exists an initial value \(m_{2}\) and a positive number σ such that

Since the function \(y(t,m)\) is concave and \(y'(0,m)=0\), the graph of \(y(t,m)\) and the line \(y=L\) intersect at most once for the constant L defined in (3.5) and \(t\in(0,1]\). The intersection point, provided it exists, is denoted by \(\bar{\delta} _{m}\). Furthermore, we set \(I_{m}=(0,\bar{\delta} _{m}]\subseteq (0,1]\). If \(y(1,m)\geqslant L\), then \(\bar{\delta} _{m}=1\).

Next, we divide the discussion into three steps.

Step 1. We claim that there exists \(m_{0}\) large enough such that \(0\leqslant y(t,m_{0})\leqslant L\) for \(t\in[\bar{\delta} _{m_{0}},1]\) and \(y(t,m_{0})\geqslant L\) for \(t\in I_{m_{0}}\).

If not, then \(y(t,m)\leqslant L\) for all \(t\in(0,1]\) as \(m\to \infty\). Integrating both sides of the equation in (2.10) over \([0,t]\), we get

Setting \(t=1\), we have

where \(L_{f}=\max_{y\in[0,L]}f(Ay,By,-y)\), which is a contradiction as \(m\to\infty\). Hence we have the claim.

Step 2. There exists a monotone increasing sequence \(\{m_{k}\}\) such that the sequence \(\{\bar{\delta}_{m_{k}}\}\) is increasing in k. That is,

We prove that

Since \(y(t,m)\) is uniquely defined, the solutions \(y(t,m_{k-1})\) and \(y(t,m_{k})\) have no intersection in the interval \([\bar{\delta} _{m_{k-1}},1)\). It follows from

that

Therefore, (3.7) is obtained.

Step 3. We seek a suitable \(m_{2}^{*}\) and a positive number σ such that \(0<\max \{\frac{A_{2}}{A_{2}+\varepsilon}, \eta_{n} \}\leqslant\sigma\leqslant1\) and \(y(t,m_{2}^{*})\geqslant L\) for \(t\in(0,\sigma]\).

From Step 1, Step 2 and the extension principle of solutions, there exists a positive integer n large enough such that

If we take \(m_{2}^{*}=m_{n}\), \(\sigma=\bar{\delta} _{m_{n}}\), then

Next, we show that \(k(m_{2}^{*})\geqslant1\) for the chosen \(m_{2}^{*}\) and σ.

Take \(z(t)=m_{2}^{*}\cos( (A_{2}+\varepsilon)t)\), then \(z(t)\) satisfies the following IVP:

where \(\sigma\leqslant1\). Noting (3.6), we have

Taking into account Lemmas 2.4, 2.5 and 3.1, we have

For \(t\in (0,\frac{\pi}{2} )\), define

where \(0<\xi_{i} \leqslant\sigma\leqslant1\) for \(i=1,2,\ldots,n\). A direct calculation gives

Since \(\sin\sigma t \geqslant\sin\xi_{i} t\), \(\cos \xi_{i} t\geqslant \cos\sigma t\), we have \(C_{i}'(t)\geqslant0\), for \(i=1,2,\ldots,n\), which implies that \(C_{i}(t)\) is nondecreasing for \(t\in (0,\frac{\pi}{2} )\). Similarly, we can show that \(\frac{\cos\eta_{i} t}{\cos\sigma t}\) is nondecreasing for \(t\in (0,\frac{\pi}{2} )\), \(i=1,2,\ldots,n\).
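The monotonicity step can be made explicit. The display below is a sketch that assumes \(C_{i}(t)=\frac{\cos\xi_{i}t}{\cos\sigma t}\), a form suggested by the analogous quotient \(\frac{\cos\eta_{i}t}{\cos\sigma t}\) mentioned in the text; the paper's own definition of \(C_{i}\) is not reproduced here. By the quotient rule,

\[
C_{i}'(t)=\frac{\sigma\sin(\sigma t)\cos(\xi_{i}t)-\xi_{i}\sin(\xi_{i}t)\cos(\sigma t)}{\cos^{2}(\sigma t)} .
\]

For \(t\in(0,\frac{\pi}{2})\) and \(0<\xi_{i}\leqslant\sigma\leqslant1\), both \(\xi_{i}t\) and \(\sigma t\) lie in \((0,\frac{\pi}{2})\), so \(\sigma\sin(\sigma t)\geqslant\xi_{i}\sin(\xi_{i}t)\geqslant0\) and \(\cos(\xi_{i}t)\geqslant\cos(\sigma t)>0\). Multiplying these two inequalities shows that the numerator is nonnegative.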

The above discussion guarantees that

On account of Lemma 2.4, some initial value \(m^{*}\) can be found such that \(y(t,m^{*})\) is the solution of (2.10). Consequently, \(u(t,m^{*})=(Ay)(t,m^{*})\) is the solution of BVP (1.1).

We omit the derivation for (ii) since it is similar to the above proof. □

4 Example

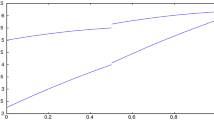

Consider the following boundary value problem:

with \(\gamma_{1}=\rho_{1}=\frac{1}{2}\), \(\gamma_{2}=\rho _{2}=\frac{3}{5} \) and

It is easy to check that (H1) and (H2) are satisfied. A simple calculation shows that \(\Gamma=\Lambda=\frac{9}{16}<1\), which implies that (H3) and (H4) are satisfied.

Take \(A_{1}=\frac{1}{2}\) and \(A_{2}=\frac{3}{2}\). Then \(f_{1}(A_{1})=0.9552<1\) and \(f_{2}(A_{2})>4.2304>1\).

Hence,

Then condition (ii) of Theorem 3.1 is satisfied. Consequently, Theorem 3.1 guarantees that problem (4.1) has at least one positive solution \(u(t)\).

References

1. Asghar, S, Ahmad, B, Ayub, M: Diffraction from an absorbing half plane due to a finite cylindrical source. Acta Acust. Acust. 82, 365-367 (1996)

2. Boucherif, A: Second-order boundary value problem with integral boundary conditions. Nonlinear Anal. 70, 364-371 (2009)

3. Alsulami, HH, Ntouyas, SK: A study of third-order single-valued and multi-valued problems with integral boundary conditions. Bound. Value Probl. 2015, 25 (2015)

4. Ahmad, B, Alsaedi, A, Al-Malki, N: On higher-order nonlinear boundary value problems with nonlocal multipoint integral boundary conditions. Lith. Math. J. 2016, 143-163 (2016)

5. Alsaedi, A, Ntouyas, SK, Garout, D, Ahmad, B: Coupled fractional-order systems with nonlocal coupled integral and discrete boundary conditions. Bull. Malays. Math. Sci. Soc. (2017). https://doi.org/10.1007/s40840-017-0480-1

6. Ntouyas, SK, Tariboon, J, Sudsutad, W: Boundary value problems for Riemann-Liouville fractional differential inclusions with nonlocal Hadamard fractional integral conditions. Mediterr. J. Math. 13, 939-954 (2016)

7. Bai, Z, Dong, X, Yin, C: Existence results for impulsive nonlinear fractional differential equation with mixed boundary conditions. Bound. Value Probl. 2016, 63 (2016)

8. Pang, Y, Bai, Z: Upper and lower solution method for a fourth-order four-point boundary value problem on time scales. Appl. Math. Comput. 215, 2243-2247 (2009)

9. Feng, M: Multiple positive solutions of fourth-order impulsive differential equations with integral boundary conditions and one-dimensional p-Laplacian. Bound. Value Probl. 2011, 654871 (2011)

10. Ding, W, Wang, Y: New result for a class of impulsive differential equation with integral boundary conditions. Commun. Nonlinear Sci. Numer. Simul. 18, 1095-1105 (2013)

11. Amster, P, Alzate, PPC: A shooting method for a nonlinear beam equation. Nonlinear Anal. 68, 2072-2078 (2008)

12. Kwong, MK, Wong, JSW: The shooting method and nonhomogeneous multipoint BVPs of second-order ODE. Bound. Value Probl. 2007, 64012 (2007)

13. Kwong, MK, Wong, JSW: Solvability of second-order nonlinear three-point boundary value problems. Nonlinear Anal. 73, 2343-2352 (2010)

14. Wang, H, Ouyang, Z, Tang, H: A note on the shooting method and its applications in the Stieltjes integral boundary value problems. Bound. Value Probl. 2015, 102 (2015)

15. Ahsan, M, Farrukh, S: A new type of shooting method for nonlinear boundary value problems. Alex. Eng. J. 52, 801-805 (2013)

16. Feng, M, Qiu, J: Multi-parameter fourth order impulsive integral boundary value problems with one-dimensional m-Laplacian and deviating arguments. J. Inequal. Appl. 2015, 64 (2015)

17. Kwong, MK: The shooting method and multiple solutions of two/multi-point BVPs of second-order ODE. Electron. J. Qual. Theory Differ. Equ. 2006, 6 (2006)

18. Wang, H, Ouyang, Z, Wang, L: Application of the shooting method to second-order multi-point integral boundary-value problems. Bound. Value Probl. 2013, 205 (2013)

19. Xie, W, Pang, H: The shooting method and integral boundary value problems of third-order differential equation. Adv. Differ. Equ. 2016, 138 (2016)

Acknowledgements

The work is supported by Chinese Universities Scientific Fund (Project No. 2017LX003) and National Training Program of Innovation (Project No. 201710019252).

Contributions

All authors contributed equally to the manuscript, read and approved the final draft.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zhu, Y., Pang, H. The shooting method and positive solutions of fourth-order impulsive differential equations with multi-strip integral boundary conditions. Adv Differ Equ 2018, 5 (2018). https://doi.org/10.1186/s13662-017-1453-2
