Abstract

This paper is concerned with enhanced results on robust finite-time passivity for uncertain discrete-time Markovian jumping BAM delayed neural networks with leakage delay. By constructing a proper Lyapunov-Krasovskii functional candidate and applying the reciprocally convex combination method together with the linear matrix inequality technique, several sufficient conditions are derived for verifying the passivity of discrete-time BAM neural networks. An important feature of the paper is the use of the reciprocally convex combination lemma in the main section; the relevance of that lemma arises from the derivation of stability conditions by using Jensen’s inequality. Further, zero inequalities help to establish the sufficient conditions for finite-time boundedness and passivity under uncertainties. Finally, the enhancement of the feasible region of the proposed criteria is shown via a numerical example with simulations, which illustrates the applicability and usefulness of the proposed method.

1 Introduction

Over the past decades, delayed neural networks have found successful applications in many areas such as signal processing, pattern recognition, associative memories and optimization solvers. In such applications, analyzing the quantitative behavior of the dynamical system is an important step in the practical design of neural networks [1]. Therefore, the dynamic characteristics of discrete-time neural networks have been extensively investigated; see, for example, [2–12]. Neural networks are mostly studied in the continuous-time setting, but they are often discretized for experimental or computational purposes. Neural networks with leakage delay form one important class, because time delay in the leakage term has a great impact on the dynamics: time delay in the stabilizing negative feedback term tends to destabilize a neural network system [13–16], so a delay in the leakage term can destroy the stability of the network. Gopalsamy [17] initially investigated the dynamics of the bidirectional associative memory (BAM) network model with leakage delay. Based on this work, the authors of [15] considered the global stability of a class of nonlinear systems with leakage delay. Li and Cao discussed the stability of memristive neural networks with both a reaction-diffusion term and leakage delay, and established some easily checked criteria by employing differential inclusion theory and Jensen’s integral inequality [18].

The BAM neural network model, proposed by Kosko [19, 20], is a two-layered nonlinear feedback network model in which the neurons in one layer always interconnect with the neurons in the other layer, while there are no interconnections among neurons in the same layer. Owing to its applications in many fields, the study of BAM neural networks has attracted the attention of many researchers, who have studied the stability properties of these networks and presented various sufficient conditions for their asymptotic or exponential stability [4, 15, 21–23].

On the one hand, time delay is one of the main sources of instability and is encountered in many engineering systems such as chemical processes, long transmission lines in pneumatic systems and networked control systems. Over the past years, the study of time delay systems has received considerable attention, and a great number of research results on time delay systems exist in the literature. The stability of time delay systems is a fundamental problem because it is important in the synthesis and analysis of such neural network systems [3, 5, 24, 25]. For example, the exponential stability of stochastic BAM networks with mixed delays was discussed using Lyapunov theory in [25].

On the other hand, the theory of passivity was first implemented in circuit analysis and has generated increasing interest among researchers. It is a useful tool for the stability analysis of both linear and nonlinear systems, especially high-order systems, since passivity properties can keep a system internally stable. Owing to its importance and applicability, the problem of passivity analysis for delayed dynamic systems has been investigated, and many results have been reported in the literature [26–30]. For instance, in [28] Wu et al. derived the passivity condition for discrete-time switched neural networks with various functions and mixed time delays. Moreover, the passivity and synchronization of switched neural networks were investigated in [30], and some delay-dependent as well as delay-independent criteria were provided. In [6, 7], passivity criteria were established for neural networks with time-varying delays.

However, it should be mentioned that all these existing studies on passivity analysis rely on conventional Lyapunov asymptotic stability theory and are therefore defined over an infinite time interval. The finite-time (or short-time) analysis problem was first initiated by Dorato in 1961 [31]. Communication network systems, missile systems and robot control systems are examples of systems that work over a short time interval. In recent years, many biologists have been focusing on the transient values of the actual network states. Many interesting results on the finite-time stability of various types of systems can be found in [32–34]. Recently, an extended finite-time \(H_{\infty}\) control problem for uncertain switched linear neutral systems with time-varying delays was investigated in [27], and time-varying delays were also treated in [22, 35]. Results on the finite-time stabilization of neural networks with discontinuous activations were proposed in [36].

Motivated by the aforementioned discussions, in this paper we focus on the finite-time boundedness and passivity of uncertain discrete-time Markovian jumping BAM neural networks with leakage time delays. We use a new type of Lyapunov-Krasovskii functional (LKF) to handle the given range of the time delay interval, together with the free weighting matrix approach, to derive the main results. Our main contributions are highlighted as follows:

- The finite-time passivity result for discrete-time Markovian jumping uncertain BAM neural networks with leakage delay is proposed for the first time.

- Reciprocally convex combination approach is used to handle the triple summation terms and a new type of zero inequalities is introduced.

- Delay-dependent results for finite-time boundedness and finite-time passivity are derived by using the finite-time stability method and the Lyapunov-Krasovskii functional approach.

The rest of this paper is organized as follows. Problem formulation and mathematical preliminaries are presented in Section 2. Section 3 gives the main result on robust finite-time boundedness. Robust finite-time passivity is derived in Section 4. A numerical example is given in Section 5 to illustrate the effectiveness of the proposed method. Finally, the conclusion is given in Section 6.

1.1 Notations

The notations in this paper are standard. Throughout this paper, \(\mathbb{R}^{n}\) and \(\mathbb{R}^{n\times m}\) denote, respectively, the n-dimensional Euclidean space and the set of all \(n \times m\) real matrices. I denotes the identity matrix with appropriate dimensions and \(\operatorname{diag}(\cdot)\) denotes the diagonal matrix. \(A^{T}\) denotes the transpose of matrix A. k denotes the set of positive integers. For real symmetric matrices X and Y, the notation \(X \geq Y\) (resp., \(X > Y\)) means that the matrix \(X-Y\) is positive semi-definite (resp., positive definite). \(\mathbb{N}= \{1, 2,\ldots, n\}\) and \(\|\cdot\|\) stands for the Euclidean norm in \(\mathbb{R}^{n}\). \(\lambda_{\mathrm{max}}(X)\) (resp., \(\lambda _{\mathrm{min}}(X)\)) stands for the maximum (resp., minimum) eigenvalue of the matrix X. \(I_{n}\) and \(0_{n}\) represent the identity matrix and zero matrix, respectively. \(l_{2}(0, \infty)\) denotes the space of square summable infinite vector sequences. The symbol ∗ within a matrix represents the symmetric term of the matrix.

2 Problem formulation and mathematical preliminaries

Let \((\Omega, \mathfrak{F}, \{\mathfrak{F}_{t}\}_{t\geq0}, P)\) be a complete probability space with filtration \(\{\mathfrak{F}_{t}\}_{t\geq 0}\) satisfying the usual conditions (i.e., it is right continuous and \(\mathfrak{F}_{0}\) contains all P-null sets); \(E\{\cdot\}\) stands for the mathematical expectation operator with respect to the given probability measure P. Let \(r(k)\), \(k\geq0\), be a Markov chain taking values in a finite state space \(S = \{1, 2, 3,\ldots, N\}\) with transition probability matrix \(\pi = (\pi_{ij})_{N\times N}\) given by

\[\operatorname{Pr}\bigl\{r(k+1)=j \mid r(k)=i\bigr\} = \pi_{ij},\]

where \(\pi_{ij}\geq0\) (\(i, j \in S\)) is the transition probability from i to j and \(\sum_{j=1}^{N}\pi_{ij} = 1\), \(\forall i \in S\).
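As an illustration, the following minimal Python sketch samples a mode sequence \(r(k)\) from a row-stochastic matrix. The function name `simulate_chain` and the two-mode matrix `PI_EXAMPLE` are hypothetical and are not taken from the paper; modes are indexed from 0 rather than from 1.

```python
import numpy as np

def simulate_chain(pi, r0, steps, seed=0):
    """Sample r(0), ..., r(steps) for a row-stochastic matrix pi."""
    pi = np.asarray(pi, dtype=float)
    # Each row must be a probability distribution over the N modes.
    if np.any(pi < 0) or not np.allclose(pi.sum(axis=1), 1.0):
        raise ValueError("pi must be row-stochastic")
    rng = np.random.default_rng(seed)
    path = [r0]
    for _ in range(steps):
        # Draw the next mode from the row of pi that belongs to r(k).
        path.append(int(rng.choice(pi.shape[0], p=pi[path[-1]])))
    return path

PI_EXAMPLE = np.array([[0.7, 0.3], [0.4, 0.6]])  # hypothetical values
PATH = simulate_chain(PI_EXAMPLE, r0=0, steps=50)
```

The row-sum check mirrors the condition \(\sum_{j=1}^{N}\pi_{ij} = 1\); a matrix that violates it is rejected before any sampling takes place.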

Consider the following uncertain discrete-time Markovian jumping BAM neural network (DNN) with time-varying delays and leakage delay, described by

where \(x(k), y(k) \in \mathbb{R}^{n}\) are the neural state vectors, \(v(k)\), \(u(k)\) are the exogenous disturbance input vectors belonging to \(\mathfrak{L}_{2}[0,\infty)\), \(g^{*}(k)\), \(h(k)\) are the output vectors of the neural network, \(f(y(k))\), \(g(x(k))\) are the neuron activation functions, and the positive integers \(\tau(k)\), \(\sigma (k)\) denote the time-varying delays satisfying \(\tau_{m}\leq\tau (k)\leq\tau_{M}\) and \(\sigma_{m}\leq\sigma(k)\leq\sigma_{M}\) for all \(k \in N\), where \(\tau_{m}\), \(\tau_{M}\) and \(\sigma_{m}\), \(\sigma_{M}\) are constant positive scalars representing the minimum and maximum delays, respectively.

in which \(A = \operatorname{diag}\{a_{1},a_{2},\ldots,a_{n}\}\), \(D = \operatorname{diag}\{ d_{1},d_{2},\ldots,d_{n}\} \) are the state feedback coefficient matrices with \(|a_{i}|<1\), \(|d_{i}|<1\); \(B=[b_{ij}]_{n\times n}\), \(E=[e_{ij}]_{n\times n}\), \(C=[c_{ij}]_{n\times n}\), \(F=[f_{ij}]_{n\times n}\) are, respectively, the connection weight matrices and the delayed connection weight matrices; and the initial functions \(\phi(k)\), \(\psi(k)\) are continuous and defined on \([-\tau_{M},0]\), \([-\sigma_{M},0]\), respectively. Further, the uncertainty parameters are defined as follows:

where \(M_{a}\), \(M_{b}\), \(M_{c}\), \(M_{d}\), \(M_{f}\), M are known constant matrices of appropriate dimensions and \(N(k)\) is an unknown time-varying matrix with Lebesgue measurable elements bounded by \(N^{T}(k)N(k) \leq I\).
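A minimal sketch of how an admissible \(N(k)\) can be generated numerically is given below. It assumes that each uncertainty enters in the common norm-bounded form \(\Delta A(k) = M N(k) M_{a}\); this structure, the helper names and the matrix values are illustrative assumptions.

```python
import numpy as np

def admissible_n(rng, rows, cols):
    """Draw a random N with N^T N <= I, i.e. spectral norm at most 1."""
    n = rng.standard_normal((rows, cols))
    return n / max(1.0, np.linalg.norm(n, 2))

def perturbation(m, n, m_a):
    """Assumed norm-bounded structure: Delta A = M N M_a."""
    return m @ n @ m_a

rng = np.random.default_rng(0)
M = np.array([[0.1, 0.0], [0.0, 0.2]])      # hypothetical values
M_A = np.array([[0.3, 0.1], [0.0, 0.3]])    # hypothetical values
N_K = admissible_n(rng, 2, 2)
DELTA_A = perturbation(M, N_K, M_A)
```

Because the spectral norm of \(N(k)\) is at most one, the size of the perturbation is controlled by the known matrices alone: \(\|\Delta A\| \leq \|M\|\,\|M_{a}\|\).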

The following assumptions help to complete the main result.

Assumption I

For any \(i = 1, 2, 3, \ldots,n\), there exist constants \(F_{i}^{-}\), \(F_{i}^{+}\), \(G_{i}^{-}\), \(G_{i}^{+}\) such that

For presentation convenience, in the following we denote

Remark 2.1

In biologically inspired neural networks, the activation function is usually an abstraction representing the rate of action potential firing in the cell. Non-monotonic functions can be more suitable than other activation functions; in many electronic circuits, the input-output functions of amplifiers may be neither monotonically increasing nor continuously differentiable. The constants in the above assumption may be positive, negative or zero, so the activation functions may be non-monotonic and are more general than the usual sigmoid functions and Lipschitz functions. Such a description is flexible in quantifying the lower and upper bounds of the activation functions. Therefore, by using the LMI-based technique, the generalized activation function is considered to reduce the possible conservatism.
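The following sketch checks such bounds numerically, assuming that Assumption I takes the usual sector form \(F_{i}^{-} \leq \frac{f_{i}(a)-f_{i}(b)}{a-b} \leq F_{i}^{+}\) for \(a \neq b\). The helper names and the two test activations are hypothetical.

```python
import numpy as np

def quotient_range(f, grid):
    """Smallest and largest difference quotient of f over distinct grid points."""
    a, b = np.meshgrid(grid, grid, indexing="ij")
    mask = a != b
    q = (f(a[mask]) - f(b[mask])) / (a[mask] - b[mask])
    return q.min(), q.max()

def in_sector(f, grid, lower, upper, tol=1e-12):
    """True if every sampled quotient lies in [lower, upper]."""
    q_min, q_max = quotient_range(f, grid)
    return bool(lower - tol <= q_min and q_max <= upper + tol)

GRID = np.linspace(-5.0, 5.0, 201)

def non_monotonic(s):
    """Hypothetical activation whose slope changes sign."""
    return np.tanh(s) - 0.5 * s
```

On this grid, `np.tanh` fits the sector \([0, 1]\), while `non_monotonic` fits \([-0.5, 0.5]\), a sector with a negative lower constant that a monotone sigmoid assumption would exclude. A sampled check of this kind can only refute a sector bound, not prove it.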

Assumption II

The disturbance input vectors \(v(x)\) and \(u(x)\) are time-varying and, for given \(v > 0\), \(u > 0\), satisfy \(v^{T}(x) v(x) \leq v\), \(u^{T}(x) u(x) \leq u\).

Before deriving our main results, the following definitions and lemmas will be stated.

Definition 2.2

[37]

DNN (1), (3) is said to be robustly finite-time bounded with respect to \((\eta_{1}, \eta_{2}, \eta, \chi, L, Q, L_{1}, Q_{1}, u, v)\), where \(0 < \eta_{1} < \eta_{2} < \eta< \chi\) and \(L, L_{1} > 0\), if

∀ \(k_{1} \in \{-\tau_{M}, -\tau_{M}+1,\ldots, 0 \} \), \(k_{2} \in \{-\sigma_{M}, -\sigma_{M}+1,\ldots, 0 \} \), \(k_{4}=k_{3} \in \{1, 2,\ldots, N \}\) holds for any nonzero \(v(x)\), \(u(x)\) satisfying Assumption II.

Definition 2.3

[37]

DNN (1), (3) with output (2), (4) is said to be robustly finite-time passive with respect to \((\eta_{1}, \eta_{2}, \eta, \chi, L, Q, L_{1}, Q_{1}, \gamma, \tilde{\gamma }_{1}, u, v)\), where \(0 < \eta_{1} < \eta_{2} < \eta< \chi\), \(\omega\), \(\tilde{\omega}\) are prescribed positive scalars, \(L > 0\) and \(L_{1} > 0\), if and only if DNN (1), (3) with output (2), (4) is robustly finite-time bounded with respect to \((\eta_{1}, \eta _{2}, \eta, \chi, L, Q, L_{1}, Q_{1}, u, v)\) and, under the zero initial condition, the outputs \(G(k)\), \(H(k)\) satisfy

for any nonzero \(v(x)\), \(u(x)\) satisfying Assumption II.

Remark 2.4

The concept of finite-time passivity is different from that of usual passivity. Usual passivity places no restriction on the size of the states, which may exceed prescribed bounds. Finite-time passivity additionally requires the states to remain within the bounds prescribed in Definition 2.2 over the given time interval, for disturbances bounded as in Assumption II; this is what is used to prove finite-time passivity in the main result.
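The passivity requirement can be evaluated on recorded data. The sketch below assumes that the inequality of Definition 2.3 has the usual form \(2\sum_{k=0}^{N} g^{T}(k)u(k) \geq -\gamma\sum_{k=0}^{N}u^{T}(k)u(k)\) for one input-output pair; this form, the function name and the test signals are assumptions made for illustration.

```python
import numpy as np

def passivity_margin(outputs, inputs, gamma):
    """Return 2*sum g(k)^T u(k) + gamma*sum u(k)^T u(k) over the horizon.

    Rows of `outputs` and `inputs` are the vectors g(k) and u(k).
    A nonnegative value means the assumed passivity inequality holds.
    """
    g = np.asarray(outputs, dtype=float)
    u = np.asarray(inputs, dtype=float)
    return float(2.0 * np.sum(g * u) + gamma * np.sum(u * u))

rng = np.random.default_rng(2)
U = rng.standard_normal((20, 2))            # hypothetical disturbance record
MARGIN_PASSIVE = passivity_margin(U, U, gamma=1.0)     # output equals input
MARGIN_ACTIVE = passivity_margin(-U, U, gamma=1.0)     # output opposes input
```

With the output equal to the input the margin is \(3\sum u^{T}u > 0\), whereas the sign-reversed output gives \(-\sum u^{T}u < 0\), so only the first record is consistent with the assumed inequality for \(\gamma = 1\).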

Lemma 2.5

[12]

For any symmetric constant matrix \(Z \in \mathbb{R}^{n \times n}\), \(Z \geq0\), two scalars \(\tau_{m}\) and \(\tau_{M}\) satisfying \(\tau_{m} \leq\tau_{M}\), and a vector-valued function \(\eta(k) = x(k+1)-x(k)\) (\(k \in\mathbb{Z}^{+}\)), we have

Lemma 2.6

[38]

Let \(g_{1}, g_{2},\ldots,g_{N}: \mathbb{R}^{m} \rightarrow\mathbb{R}\) have positive values in an open subset \(\mathbb{D}\) of \(\mathbb{R}^{m}\). Then the reciprocally convex combination of \(g_{i}\) over \(\mathbb{D}\) satisfies

subject to

Remark 2.7

There are two main methods to find lower bounds. The first is based on Moon’s inequality. The second is the so-called reciprocally convex combination lemma, which also helps to reduce the number of decision variables. The conservatism induced by these two inequalities is independent. However, in some cases, such as stability conditions resulting from the application of Jensen’s inequality, the reciprocally convex combination lemma is in general more conservative than Moon’s inequality. Also, note that the reciprocally convex combination approach is successfully applied to double summation terms.
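Both tools mentioned in this remark can be checked numerically. The sketch below assumes the standard summation form of Jensen’s inequality, \(n\sum_{i}\eta_{i}^{T}Z\eta_{i} \geq (\sum_{i}\eta_{i})^{T}Z(\sum_{i}\eta_{i})\) for \(Z \geq 0\) and n terms, and the matrix form of the reciprocally convex bound of [38], \(\frac{1}{\alpha}a^{T}Ra + \frac{1}{1-\alpha}b^{T}Rb \geq \begin{bmatrix} a \\ b \end{bmatrix}^{T}\begin{bmatrix} R & S \\ S^{T} & R \end{bmatrix}\begin{bmatrix} a \\ b \end{bmatrix}\) for \(\alpha \in (0,1)\) whenever the block matrix is positive semi-definite. Function names and random test data are illustrative.

```python
import numpy as np

def jensen_gap(z, etas):
    """n * sum(eta_i^T Z eta_i) - (sum eta_i)^T Z (sum eta_i)."""
    etas = np.asarray(etas, dtype=float)
    total = etas.sum(axis=0)
    quad = np.einsum("ij,jk,ik->", etas, z, etas)
    return len(etas) * quad - total @ z @ total

def reciprocal_gap(r, s, a, b, alpha):
    """Weighted sum of quadratic forms minus the reciprocally convex bound."""
    lhs = (a @ r @ a) / alpha + (b @ r @ b) / (1.0 - alpha)
    rhs = a @ r @ a + b @ r @ b + 2.0 * (a @ s @ b)
    return lhs - rhs

rng = np.random.default_rng(1)
dim = 3
g = rng.standard_normal((dim, dim))
R = g @ g.T + dim * np.eye(dim)          # positive definite
chol = np.linalg.cholesky(R)
k = rng.standard_normal((dim, dim))
k /= max(1.0, np.linalg.norm(k, 2))      # spectral norm <= 1
S = chol @ k @ chol.T                    # makes [[R, S], [S^T, R]] >= 0

a, b = rng.standard_normal(dim), rng.standard_normal(dim)
GAPS = [reciprocal_gap(R, S, a, b, al) for al in (0.1, 0.5, 0.9)]
JGAP = jensen_gap(R, rng.standard_normal((7, dim)))
```

Both gaps are nonnegative up to rounding. The single slack matrix `S` replaces the \(\alpha\)-dependent weights by one \(\alpha\)-independent bound, which is why the lemma keeps the number of decision variables small.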

3 Robust finite-time boundedness

In this section, sufficient conditions for the finite-time boundedness of DNN (1), (3) are derived in the form of LMI-based robust conditions using the Lyapunov technique.

Theorem 3.1

Under Assumptions I and II, for given scalars \(\mu>1\), \(\rho>1\), \(\tau_{m}\), \(\tau_{M}\), \(\sigma_{m}\), \(\sigma_{M}\), DNN model (1), (3) is robustly finite-time bounded with respect to \((\eta_{1}, \eta_{2}, \eta, \chi, L, Q, L_{1}, Q_{1}, u, v)\) if there exist symmetric positive definite matrices \(P_{1i}\), \(P_{2i}\), W, \(W_{1}\), \(R_{1}\), R, \(S_{1}\), \(S_{2}\), \(S_{3}\), \(S_{4}\), \(Z_{1}\), \(Z_{2}\), \(Z_{3}\), \(Z_{4}\), matrices \(U_{1}\), \(U_{2}\), \(U_{3}\), \(U_{4}\), \(U_{5}\), \(U_{6}\), \(U_{7}\), \(U_{8}\), positive diagonal matrices \(H_{1}\), \(H_{2}\), \(H_{3}\), \(H_{4}\) and positive scalars \(\lambda^{P_{1}}\), \(\lambda^{P_{2}}\), \(\lambda _{P_{1}}\), \(\lambda_{P_{2}}\), \(\lambda_{W_{3}}\), \(\lambda_{W_{4}}\), \(\lambda_{R_{3}}\), \(\lambda_{R_{4}}\), \(\lambda_{S_{1}}\), \(\lambda _{S_{2}}\), \(\lambda_{S_{3}}\), \(\lambda_{S_{4}}\), \(\lambda_{Z_{1}}\), \(\lambda_{Z_{2}}\), \(\lambda_{Z_{3}}\), \(\lambda_{Z_{4}}\), ϵ, \(\epsilon_{1}\), \(P_{1i}=P_{1}(r_{k})\), \(P_{2i}=P_{2}(r_{k})\), \(\bar {P}_{1i}= \sum_{j=1}^{N}\Pi_{ij}P_{1j}\), \(\bar{P}_{2i}= \sum_{j=1}^{N}\Pi_{ij}P_{2j}\) such that the following LMIs hold for \(r=1, 2, 3, 4\):

where

Proof

To prove the required results, the following LKF for finite-time passivity BAM DNN model (1)-(4) is considered:

where

where \(\eta(k) = x(k+1)-x(k)\) and \(\zeta(k) = y(k+1)-y(k)\). Calculating the forward difference of \(V(k)\), defined as \(\Delta V(k) = V(k+1)-V(k)\), along the solution of (1) and (3), we obtain

Using Lemma 2.5(i), the first summation term in \(\Delta V_{5i}\) can be written as

Similarly,

Further, using Lemma 2.5(ii), the second summation term in \(\Delta V_{5i}(k)\) becomes

By reciprocally convex combination Lemma 2.6, if LMIs in (8) hold, then the following inequalities hold:

where \(\alpha_{1}=\frac{\sigma_{1}}{\hat{\sigma}}\), \(\alpha _{2}=\frac{\sigma_{2}}{\hat{\sigma}}\), \(\beta_{1}=\frac{\sigma _{3}}{\check{\sigma}}\), \(\beta_{2}=\frac{\sigma_{4}}{\check {\sigma}}\) with \(\sigma_{1}=(\sigma_{M}-\sigma(k))\), \(\sigma_{2}=(\sigma(k)-\sigma_{m})\), \(\sigma_{3}=(\sigma_{M}-\sigma(k))(\sigma_{M}+\sigma(k)+1)\), \(\sigma_{4}=(\sigma(k)-\sigma_{m})(\sigma(k)+\sigma_{m}+1)\).

Similarly,

By reciprocally convex combination Lemma 2.6, if LMIs in (9) hold, then the following inequalities hold:

where \(\alpha_{3}=\frac{\tau_{1}}{\hat{\tau}}\), \(\alpha _{4}=\frac{\tau_{2}}{\hat{\tau}}\), \(\beta_{3}=\frac{\tau _{3}}{\check{\tau}}\), \(\beta_{4}=\frac{\tau_{4}}{\check{\tau }}\) with \(\tau_{1}=(\tau_{M}-\tau(k))\), \(\tau_{2}=(\tau(k)-\tau_{m})\), \(\tau_{3}=(\tau_{M}-\tau(k))(\tau _{M}+\tau(k)+1)\), \(\tau_{4}=(\tau(k)-\tau_{m})(\tau(k)+\tau_{m}+1)\).

Then inequalities (19) and (20) can be rewritten as

Similarly,

It is noted that when \(\sigma(k)=\sigma_{m}\) or \(\sigma(k)=\sigma _{M}\) and \(\tau(k)=\tau_{m}\) or \(\tau(k)=\tau_{M}\), we have \(x(k-\sigma(k))-x(k-\sigma_{M})=0\) or \(x(k-\sigma_{m})-x(k-\sigma(k))=0\) and \(y(k-\tau(k)) -y(k-\tau_{M})=0\) or \(y(k-\tau_{m})-y(k-\tau(k))=0\), respectively. So, inequalities (20) and (21) still hold.

For any matrices \(U_{5}\), \(U_{6}\), \(U_{7}\), \(U_{8}\), the following equalities hold:

On the other hand, from Assumption I, we have

which is equivalent to

and

where \(e_{i}\) denotes the unit column vector having the element 1 on its ith row and zero elsewhere. Let \(H_{1} = \operatorname{diag}\{ h_{11},h_{12},\ldots,h_{1n}\}\), \(H_{2} = \operatorname{diag}\{h_{21},h_{22},\ldots,h_{2n}\}\), \(H_{3} = \operatorname{diag}\{h_{31},h_{32},\ldots, h_{3n}\}\), \(H_{4} = \operatorname{diag}\{ h_{41},h_{42},\ldots,h_{4n}\}\).

Then

Similarly,

Similarly, one can get

and

Then, combining (13) with (25)-(30) gives

where

and

Next, in view of Schur complement [39], the RHS of (31) can be written as

Similarly, for

Then, by using uncertainty description (2), (4) and procedure as in Lemma 2.6, we have

where

where

Hence, if LMIs (6), (7), (8), (9) hold, it is easy to get

where \(\lambda_{Q_{1}}=\lambda_{\mathrm{max}}(Q_{1})\) and \(\lambda _{Q}=\lambda_{\mathrm{max}}(Q)\). Simple computation gives

Noticing \(\mu\geq1\) and \(\rho\geq1\), it follows that

Further, from (12), we can get

Letting

we obtain

where \(\lambda_{P_{1}}= \lambda_{\max}(P_{1})\), \(\lambda_{P_{2}}= \lambda_{\max}(P_{2})\), \(\lambda^{P_{1}}= \lambda_{\min}(P_{1})\), \(\lambda^{P_{2}}= \lambda_{\min}(P_{2})\), \(\lambda_{W_{3}}= \lambda _{\max}(W_{3})\), \(\lambda_{W_{4}}= \lambda_{\max}(W_{4})\), \(\lambda _{R_{3}}= \lambda_{\max}(R_{3})\), \(\lambda_{R_{4}}= \lambda_{\max }(R_{4})\), \(\lambda_{S_{1}}= \lambda_{\max}(S_{11})\), \(\lambda _{S_{2}}= \lambda_{\max}(S_{21})\), \(\lambda_{S_{3}}= \lambda_{\max }(S_{31})\), \(\lambda_{S_{4}}= \lambda_{\max}(S_{41})\), \(\lambda _{Z_{1}}= \lambda_{\max}(Z_{1})\), \(\lambda_{Z_{2}}= \lambda_{\max }(Z_{2})\), \(\lambda_{Z_{3}}= \lambda_{\max}(Z_{3})\), \(\lambda _{Z_{4}}= \lambda_{\max}(Z_{4})\).

On the other hand, from (12), we can obtain that

Put \(M^{*}=\min\{\lambda^{P_{1}},\lambda^{P_{2}}\}\).

Therefore, from (11), we get that

Then, using Definition 2.2, DNN (1), (3) is robustly finite-time bounded. □

Remark 3.2

Leakage time delay in the stabilizing negative feedback term has a tendency to destabilize a system. The terms \(x(k-\gamma_{1})\), \(y(k-\gamma_{2})\) in system (1) and (3) correspond to a stabilizing negative feedback of the system that acts with the time delays \(\gamma_{1}\), \(\gamma_{2}\) rather than instantaneously. Such a term is variously known as a leakage (or forgetting) term, and its delay is the leakage delay.
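To make the effect of the leakage delay concrete, consider the scalar leakage recursion \(x(k+1) = a\,x(k-\gamma)\) with \(|a|<1\). This is a simplifying assumption that isolates the leakage term from the rest of system (1). The sketch below computes the spectral radius of its companion matrix; the function name is hypothetical.

```python
import numpy as np

def leakage_spectral_radius(a, gamma):
    """Spectral radius of the companion matrix of x(k+1) = a * x(k - gamma)."""
    size = gamma + 1
    comp = np.zeros((size, size))
    # State z(k) = [x(k), x(k-1), ..., x(k-gamma)].
    comp[0, -1] = a                 # new value depends on the oldest entry
    comp[1:, :-1] = np.eye(gamma)   # remaining entries shift down by one
    return float(np.max(np.abs(np.linalg.eigvals(comp))))

RADII = [leakage_spectral_radius(0.5, g) for g in range(6)]
```

The characteristic equation is \(z^{\gamma+1} = a\), so the radius equals \(|a|^{1/(\gamma+1)}\). For this isolated recursion it stays below one but moves towards one as \(\gamma\) grows, which means that the decay supplied by the feedback term weakens with increasing leakage delay.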

4 Robust finite-time passivity

In this section, we focus on the robust finite-time passivity of DNN (1), (3) with output (2), (4). To this end, we introduce \(I=2g^{T}(k)u(k)+u^{T}(k)Qu(k)\) and \(J=2h^{T}(k)v(k)+v^{T}(k)Q_{1}v(k)\).

Theorem 4.1

Under Assumptions I and II, for given scalars \(\mu>1\), \(\rho>1\), \(\tau_{m}\), \(\tau_{M}\), \(\sigma_{m}\), \(\sigma_{M}\), DNN model (1), (3) is robustly finite-time passive with respect to \((\eta_{1}, \eta_{2}, \eta, \chi, L, Q, L_{1}, Q_{1}, u, v)\), if there exist symmetric positive definite matrices \(P_{1i}\), \(P_{2i}\), W, \(W_{1}\), \(R_{1}\), R, \(S_{1}\), \(S_{2}\), \(S_{3}\), \(S_{4}\), \(Z_{1}\), \(Z_{2}\), \(Z_{3}\), \(Z_{4}\), matrices \(U_{1}\), \(U_{2}\), \(U_{3}\), \(U_{4}\), \(U_{5}\), \(U_{6}\), \(U_{7}\), \(U_{8}\), positive diagonal matrices \(H_{1}\), \(H_{2}\), \(H_{3}\), \(H_{4}\) and positive scalars \(\lambda^{P_{1}}\), \(\lambda^{P_{2}}\), \(\lambda _{P_{1}}\), \(\lambda_{P_{2}}\), \(\lambda_{W_{3}}\), \(\lambda_{W_{4}}\), \(\lambda_{R_{3}}\), \(\lambda_{R_{4}}\), \(\lambda_{S_{1}}\), \(\lambda _{S_{2}}\), \(\lambda_{S_{3}}\), \(\lambda_{S_{4}}\), \(\lambda_{Z_{1}}\), \(\lambda_{Z_{2}}\), \(\lambda_{Z_{3}}\), \(\lambda_{Z_{4}}\), ϵ, \(\epsilon^{*}\), \(P_{1i}=P_{1}(r_{k})\), \(P_{2i}=P_{2}(r_{k})\), \(\bar {P}_{1i}= \sum_{j=1}^{N}\Pi_{ij}P_{1j}\), \(\bar{P}_{2i}= \sum_{j=1}^{N}\Pi_{ij}P_{2j}\), ω, \(\omega_{1}\) such that the following LMIs (8), (9) hold for \(r=1, 2, 3, 4\):

where

and the parameters are defined in the above theorem.

Proof

The proof follows from that of Theorem 3.1 by choosing \(u = \omega \mu^{-T}\) in I and \(v = \omega_{1}\rho^{-T_{1}}\) in J. Proceeding along similar lines to (34), it follows that

By simple computation,

Under the zero initial condition and noticing \(V(k)\geq0\) for all \(k \in \{1, 2 , 3, \ldots,N\}\), we have

Noticing that \(\mu, \rho\geq1\), we have

Let \(\gamma^{*}=\max\{\omega, \omega_{1}\}\)

Therefore, from (40), it is easy to get the inequality in Definition 2.3. Hence it can be concluded that DNN model (1), (3) is robustly finite-time passive. This completes the proof. □

Remark 4.2

If the leakage delays \(\gamma_{1}\) and \(\gamma_{2}\) become zero, then the neural network system (1)-(4) reduces to

5 Numerical simulation

In this section, we present a numerical example with simulations to demonstrate the validity of our theoretical results.

Example 5.1

Consider the two-dimensional Markovian jumping discrete-time BAM neural network (1)-(4) with \(x(k) = (x_{1}(k),x_{2}(k))^{T}\), \(y(k) = (y_{1}(k),y_{2}(k))^{T}\); \(r(k)\) denotes a right-continuous Markov chain taking values in \(\mathfrak{M}=\{1,2\}\) with generators

The leakage delays are \(\gamma_{1} = \gamma_{2}= 5\), and the scalars are as follows: \(\mu=5\); \(\rho=9\); \(\eta_{1}=9\); \(\eta _{2}=30\); \(\eta=8\); \(\xi=9\); \(L=5\); \(Q=4\); \(L_{1}=3\); \(Q_{1}=8\); \(u=10\); \(v=30\). The lower and upper bounds of the time-varying delays in system (1)-(4) are \(\tau_{m}=8\), \(\tau _{M}=50\), \(\sigma_{m}=5\), and \(\sigma_{M}=30\).

Now, take the activation functions as follows:

Now, the feasible solutions are as follows:

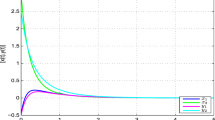

The trajectory of finite-time passivity BAM neural networks system (1)-(4) is shown in Figure 2.

According to Theorem 4.1, system (1)-(4) with the above parameters is robustly finite-time passive. The state trajectories \(x_{1}(k)\), \(x_{2}(k)\), \(y_{1} (k)\), \(y_{2}(k)\) of the above finite-time passive BAM neural network are depicted in Figures 1, 2 and 3.

The performance of Markovian jumping for system (1)-(4) is given in Figure 3.
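A simulation of this kind can be sketched as follows. The recursion \(x(k+1) = A x(k-\gamma) + B f(y(k)) + C f(y(k-\tau(k)))\), \(y(k+1) = D y(k-\gamma) + E g(x(k)) + F g(x(k-\sigma(k)))\) is an assumed single-mode form of system (1), (3) without disturbance or uncertainty and with \(\gamma_{1}=\gamma_{2}=\gamma\). The weight matrices, delays, activation and initial values are hypothetical and are not those of Example 5.1.

```python
import numpy as np

def simulate_bam(a, b, c, d, e, f, gamma, tau, sigma, x0, y0, steps):
    """Iterate an assumed delayed BAM recursion with zero disturbance.

    tau and sigma map the time index k to an integer delay;
    x0 and y0 are held constant over the initial history.
    """
    ks = range(steps)
    h = max(gamma, max(tau(k) for k in ks), max(sigma(k) for k in ks))
    x = np.tile(np.asarray(x0, dtype=float), (h + steps + 1, 1))
    y = np.tile(np.asarray(y0, dtype=float), (h + steps + 1, 1))
    act = np.tanh  # assumed activation for both layers
    for k in ks:
        t = k + h  # array row that stores time k
        x[t + 1] = a @ x[t - gamma] + b @ act(y[t]) + c @ act(y[t - tau(k)])
        y[t + 1] = d @ y[t - gamma] + e @ act(x[t]) + f @ act(x[t - sigma(k)])
    return x[h:], y[h:]

# Hypothetical data, not the matrices of Example 5.1.
A = D = 0.3 * np.eye(2)
B = E = np.array([[0.10, -0.10], [0.05, 0.15]])
C = F = np.array([[0.10, 0.10], [-0.10, 0.10]])
XS, YS = simulate_bam(A, B, C, D, E, F, gamma=2,
                      tau=lambda k: 1 + k % 3, sigma=lambda k: 2 + k % 2,
                      x0=[1.0, -0.5], y0=[0.5, 1.0], steps=200)
```

With these values every row of the update has total gain \(0.3 + 0.2 + 0.2 = 0.7 < 1\), so the trajectories contract towards the origin. The returned arrays hold the states at times \(0, 1, \ldots, 200\) and can be plotted against k.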

6 Conclusion

The passivity of uncertain discrete-time Markovian jumping BAM neural networks with leakage delay has been investigated. By using the Lyapunov theory together with zero inequalities, convex combination and reciprocally convex combination approaches, finite-time boundedness and passivity conditions have been derived in terms of LMIs, which can be easily verified via the LMI toolbox. Leakage delay has been considered as a time-varying delay. By utilizing the reciprocally convex technique, the conservatism of the proposed criteria has been reduced. A numerical example has been provided to illustrate the effectiveness of the results and their improvement over existing results.

References

[1] Chen, T, Lu, W, Chen, G: Dynamical behaviors of a large class of general delayed neural networks. Neural Comput. 17(4), 949-968 (2005)

[2] Huang, Z, Mohamad, S, Xia, Y: Exponential periodic attractor of discrete-time BAM neural networks with transmission delays. Comput. Math. Model. 20(3), 258-277 (2009)

[3] Xiao, J, Li, Y, Zhong, S, Xu, F: Extended dissipative state estimation for memristive neural networks with time-varying delay. ISA Trans. 64, 113-128 (2016)

[4] Xiao, J, Zhong, S, Li, Y, Xu, F: Finite-time Mittag-Leffler synchronization of fractional-order memristive BAM neural networks with time delays. Neurocomputing 219, 431-439 (2017)

[5] Xiao, J, Zhong, S, Li, Y: Relaxed dissipativity criteria for memristive neural networks with leakage and time-varying delays. Neurocomputing 171, 708-718 (2016)

[6] Rajavel, S, Samidurai, R, Cao, J, Alsaedi, A, Ahmad, B: Finite-time non-fragile passivity control for neural networks with time-varying delay. Appl. Math. Comput. 297, 145-158 (2017)

[7] Xiao, J, Zhong, S, Li, Y: New passivity criteria for memristive uncertain neural networks with leakage and time-varying delays. ISA Trans. 59, 133-148 (2015)

[8] Li, R, Cao, J: Dissipativity analysis of memristive neural networks with time-varying delays and randomly occurring uncertainties. Math. Methods Appl. Sci. 39(11), 2896-2915 (2016)

[9] Liu, M: Stability analysis of discrete-time recurrent neural networks based on standard neural network models. Neural Comput. Appl. 18(8), 861-874 (2009)

[10] Li, Y: Global exponential stability of BAM neural networks with delays and impulses. Chaos Solitons Fractals 24, 279-285 (2005)

[11] Lee, TH, Park, JH: A novel Lyapunov functional for stability of time-varying delay systems via matrix-refined-function. Automatica 80, 239-242 (2017)

[12] Mathiyalagan, K, Sakthivel, R: Robust stabilization and \(H_{\infty}\) control for discrete time stochastic genetic regulatory networks with time delays. Can. J. Phys. 90, 939-953 (2012)

[13] Gopalsamy, K: Leakage delays in BAM. J. Math. Anal. Appl. 325(2), 1117-1132 (2007)

[14] Gopalsamy, K: Stability and Oscillations in Delay Differential Equations of Population Dynamics. Kluwer Academic, Dordrecht (1992)

[15] Li, C, Huang, T: On the stability of nonlinear systems with leakage delay. J. Franklin Inst. 346, 366-377 (2009)

[16] Li, X, Cao, J: Delay-dependent stability of neural networks of neutral-type with time delay in the leakage term. Nonlinearity 23(7), 1709-1726 (2010)

[17] Gan, Q: Synchronization of competitive neural networks with different time scales and time-varying delay based on delay partitioning approach. Int. J. Mach. Learn. Cybern. 4(4), 327-337 (2013)

[18] Li, R, Cao, J: Stability analysis of reaction-diffusion uncertain memristive neural networks with time-varying delays and leakage term. Appl. Math. Comput. 278, 54-69 (2016)

[19] Kosko, B: Neural Networks and Fuzzy Systems - A Dynamical System Approach to Machine Intelligence. Prentice Hall, Englewood Cliffs (1992)

[20] Kosko, B: Adaptive bidirectional associative memories. Appl. Opt. 26(23), 4947-4960 (1987)

[21] Mathiyalagan, K, Park, JH, Sakthivel, R: Synchronization for delayed memristive BAM neural networks using impulsive control with random nonlinearities. Appl. Math. Comput. 259, 967-979 (2015)

[22] Liu, YR, Wang, ZD, Serrano, A, Liu, X: Discrete-time recurrent neural networks with time varying delays: exponential stability analysis. Phys. Lett. A 362(5-6), 480-488 (2007)

[23] Park, JH: A novel criterion for global asymptotic stability of BAM neural networks with time delays. Chaos Solitons Fractals 29(2), 446-453 (2006)

[24] Cao, J, Wan, Y: Matrix measure strategies for stability and synchronization of inertial BAM neural network with time delays. Neural Netw. 53, 165-172 (2014)

[25] Bao, H, Cao, J: Exponential stability for stochastic BAM networks with discrete and distributed delays. Appl. Math. Comput. 218(11), 6188-6199 (2012)

[26] Li, R, Cao, J, Tu, Z: Passivity analysis of memristive neural networks with probabilistic time-varying delays. Neurocomputing 91, 249-262 (2016)

[27] Shen, H, Wu, ZG, Park, JH: Finite-time energy-to-peak filtering for Markov jump repeated scalar non-linear systems with packet dropouts. IET Control Theory Appl. 8, 1617-1624 (2014)

[28] Wu, A, Zeng, Z: Exponential passivity of memristive neural networks with time delays. Neural Netw. 49, 11-18 (2014)

[29] Hu, M, Cao, J, Yang, Y, Hu, A: Passivity analysis for switched generalized neural networks with time-varying delay and uncertain output. IMA J. Math. Control Inf. 30(3), 407-422 (2013)

[30] Li, N, Cao, J: Passivity and robust synchronization of switched interval coupled neural networks with time delay. Int. J. Syst. Sci. 47(12), 2827-2836 (2016)

[31] Dorato, P: Short time stability in linear time-varying systems. In: Proc. IRE Int. Convention Record Part 4, pp. 83-87 (1961)

[32] Liu, H, Shen, Y, Zhao, X: Delay-dependent observer-based \(H_{\infty}\) finite-time control for switched systems with time-varying delay. Nonlinear Anal. Hybrid Syst. 6, 885-898 (2012)

[33] Zhang, Y, Shi, P, Nguang, S: Observer-based finite-time \(H_{\infty}\) control for discrete singular stochastic systems. Appl. Math. Lett. 38, 115-121 (2014)

[34] Hu, M, Cao, J, Hu, A, Yang, Y, Jin, Y: A novel finite-time stability criterion for linear discrete-time stochastic system with applications to consensus of multi-agent system. Circuits Syst. Signal Process. 34, 41-59 (2015)

[35] Lee, TH, Park, JH: Stability analysis of sampled-data systems via free-matrix-based time-dependent discontinuous Lyapunov approach. IEEE Trans. Autom. Control 62(7), 3653-3657 (2017)

[36] Liu, X, Park, J, Jiang, N, Cao, J: Non smooth finite-time stabilization of neural networks with discontinuous activations. Neural Netw. 52, 25-32 (2014)

[37] Mathiyalagan, K, Park, JH, Sakthivel, R: Novel results on robust finite-time passivity for discrete-time delayed neural networks. Neurocomputing 177, 585-593 (2016)

[38] Park, P, Ko, J, Jeong, C: Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47, 235-238 (2011)

[39] Wan, X, Xu, L, Fang, H, Ling, G: Robust non-fragile \(H_{\infty}\) state estimation for discrete-time genetic regulatory networks with Markov jump delays and uncertain transition probabilities. Neurocomputing 154, 162-173 (2015)

Acknowledgements

This work was jointly supported by the National Natural Science Foundation of China under Grant nos. 61573096 and 61272530, the Jiangsu Provincial Key Laboratory of Networked Collective Intelligence under Grant No. BM2017002, and the Thailand research grant fund (RSA5980019) and Maejo University.

Contributions

All authors contributed equally and significantly in writing this article. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sowmiya, C., Raja, R., Cao, J. et al. Enhanced robust finite-time passivity for Markovian jumping discrete-time BAM neural networks with leakage delay. Adv Differ Equ 2017, 318 (2017). https://doi.org/10.1186/s13662-017-1378-9

DOI: https://doi.org/10.1186/s13662-017-1378-9