Abstract

In this paper, the global robust Mittag-Leffler stability analysis is performed for fractional-order neural networks (FNNs) with parameter uncertainties. A new inequality with respect to the Caputo derivative of an integer-order integral function with variable upper limit is developed. By means of the properties of the Brouwer degree and the matrix inequality analysis technique, the existence and uniqueness of the equilibrium point are proved. By using an integer-order integral with variable upper limit, a Lur’e-Postnikov type Lyapunov functional candidate is constructed to derive a global robust Mittag-Leffler stability condition in terms of linear matrix inequalities (LMIs). Finally, two examples are provided to illustrate the validity of the theoretical results.


1 Introduction

Recently, dynamical neural networks (DNNs) have been widely applied in many science and engineering fields, such as image and signal processing, pattern recognition, associative memory and combinatorial optimization; see [1–5]. In practical applications, DNNs are usually designed to be globally asymptotically or exponentially stable. Many results on the stability of DNNs are available in the existing literature; see [6–16].

During the implementation of DNNs, the effects of measurement errors, parameter fluctuations and external disturbances are inevitable. Hence, it is important that the designed neural networks be globally robustly stable. In Refs. [17–34], global robust stability conditions were presented for integer-order neural networks (INNs).

Recently, in Refs. [6–8], the stability of a class of FNNs with delays was discussed, and some sufficient conditions were presented by applying the Lyapunov functional approach. In Ref. [9], Wang et al. investigated the global asymptotic stability of FNNs with impulse effects. In Ref. [11], Yang et al. discussed the finite-time stability of FNNs with delay. By employing the Mittag-Leffler stability theorem, Ref. [35] considered the global projective synchronization of FNNs and presented a Mittag-Leffler synchronization condition in terms of LMIs. In Ref. [12], Wu et al. discussed the global Mittag-Leffler stabilization of FNNs with bidirectional associative memory based on the Lyapunov functional approach. Ref. [13] considered the boundedness, Mittag-Leffler stability and asymptotical ω-periodicity of fractional-order fuzzy neural networks, and some Mittag-Leffler stability conditions were developed. In addition, Ref. [32] investigated the robust stability of fractional-order Hopfield DNNs with norm-bounded uncertainties, and some conditions were presented. It should be noted that, in the above papers on FNNs, the Lyapunov function used to establish stability is either the absolute value function \(V(t)=\sum^{n}_{i=1}\delta_{i}|x_{i}|\), see [8–13, 32], or the quadratic function \(V(t)=x^{T}Px\), see [14] and [35]. Since the activation function of the neural network does not enter these Lyapunov functions, the stability results obtained in the above papers are conservative to a certain degree.

Motivated by the discussion above, in this paper we investigate the global robust Mittag-Leffler stability of FNNs with interval uncertainties. The main contributions of this paper are as follows: (1) a new inequality for the Caputo derivative of an integer-order integral function with variable upper limit is developed; (2) the existence of the equilibrium point is proved by means of the properties of the Brouwer degree; (3) the integral term \(\int ^{z_{i}(t)}_{0}f_{i}(s)\,ds \) is utilized in the construction of the Lyapunov functional; (4) criteria for global robust Mittag-Leffler stability are established in terms of LMIs.

The rest of this paper is organized as follows. In Section 2, some definitions, lemmas and a system model are given. In Section 3, the proof of global robust Mittag-Leffler stability of equilibrium point for FNNs with interval uncertainties is presented. In Section 4, two numerical examples are provided to demonstrate the correctness of the proposed results. Some conclusions are drawn in Section 5.

Notation: R denotes the set of real numbers, \(R^{n}\) denotes the n-dimensional Euclidean space, \(R^{n\times m}\) is the set of all \(n\times m\) real matrices, N is the set of integers and C is the set of complex numbers. Vectors are written as \(x= (x_{1},\ldots,x_{n} )^{T}, y= (y_{1},\ldots,y_{n} )^{T}\in R^{n}\). The norm of a vector \(x\in R^{n}\) is defined by \(\|x\|=(\sum_{i=1}^{n}x_{i}^{2})^{\frac{1}{2}}\). \(A^{T}\) represents the transpose of matrix A, \(A^{-1}\) represents the inverse of matrix A, and \(\|A\|\) represents the induced norm of matrix A. \(A>0\) (\(A<0\)) means that A is positive definite (negative definite). \(\lambda_{\max}(A)\) and \(\lambda_{\min}(A)\) represent the maximum and minimum eigenvalues of matrix A, respectively. E denotes an identity matrix.

2 Preliminaries and model description

2.1 Fractional-order integral and derivative

Definition 2.1

[36]

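The Riemann-Liouville fractional integral of order α for a function \(f(t):[0,+\infty)\rightarrow R\) is defined as

$$ \begin{aligned} {}_{0}^{R} I^{\alpha}_{t}f(t)=\frac{1}{\Gamma(\alpha)} \int_{0}^{t}(t-\tau)^{\alpha-1}f(\tau)\,d\tau, \end{aligned} $$

where \(\alpha>0 \), and \(\Gamma(\cdot)\) is the gamma function.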

Definition 2.2

[36]

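Caputo’s fractional derivative of order α for a function \(f\in C^{n}([0,+\infty),R) \) is defined by

$$ \begin{aligned} {}_{0}^{c} D^{\alpha}_{t}f(t)=\frac{1}{\Gamma(n-\alpha)} \int_{0}^{t}\frac{f^{(n)}(\tau)}{(t-\tau)^{\alpha-n+1}}\,d\tau, \end{aligned} $$

where \(t\geqslant0 \) and n is a positive integer such that \(n-1<\alpha<n \). Particularly, when \(0<\alpha<1\),

$$ \begin{aligned} {}_{0}^{c} D^{\alpha}_{t}f(t)=\frac{1}{\Gamma(1-\alpha)} \int_{0}^{t}\frac{f^{\prime}(\tau)}{(t-\tau)^{\alpha}}\,d\tau. \end{aligned} $$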

Lemma 2.1

[36]

(i) \({}_{0}^{c} D^{\alpha}_{t}( {}_{0}^{R} I^{\beta}_{t}x(t))= {}_{0}^{c}D^{\alpha-\beta}_{t}x(t)\), where \(\alpha\geq\beta\geq0\). Especially, when \(\alpha=\beta\), \({}_{0}^{c} D^{\alpha}_{t}( {}_{0}^{R} I^{\beta}_{t}x(t))= x(t)\).

(ii) Let \([0,T]\) be an interval on the real axis R, \(n=[\alpha]+1\) for \(\alpha\notin N\) or \(n=\alpha\) for \(\alpha\in N\). If \(x(t)\in C^{n}[0,T]\), then

$$ \begin{aligned} {}_{0}^{R} I^{\alpha}_{t} \bigl( {}_{0}^{c} D^{\alpha}_{t}x(t) \bigr) =x(t)-\sum_{k=0}^{n-1}\frac{x^{(k)}(0)}{k!}t^{k}. \end{aligned} $$

In particular, if \(0< \alpha<1\) and \(x(t)\in C^{1}[0,T]\), then \({}_{0}^{R} I^{\alpha}_{t} {}_{0}^{c} D^{\alpha}_{t}x(t)=x(t)-x(0)\).

Definition 2.3

[16]

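The Mittag-Leffler function with two parameters has the following form:

$$ \begin{aligned} E_{\alpha,\beta}(z)=\sum_{k=0}^{\infty}\frac{z^{k}}{\Gamma(k\alpha+\beta)}, \end{aligned} $$

where \(\alpha>0\), \(\beta>0 \), and \(z\in C\). For \(\beta=1\),

$$ \begin{aligned} E_{\alpha}(z)=E_{\alpha,1}(z)=\sum_{k=0}^{\infty}\frac{z^{k}}{\Gamma(k\alpha+1)}; \end{aligned} $$

especially, \(E_{1,1}(z)=e^{z}\).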
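The series in Definition 2.3 can be evaluated numerically for moderate values of \(|z|\). The following is a minimal sketch, assuming a plain truncated power series; the function name `mittag_leffler`, the truncation limit and the tolerance are illustrative choices that do not come from the paper, and the approach is not suitable for large \(|z|\), where cancellation and overflow become an issue.

```python
import math


def mittag_leffler(z, alpha, beta=1.0, max_terms=200, tol=1e-15):
    """Truncated power series for E_{alpha,beta}(z) with real z.

    Illustrative sketch only: intended for moderate |z|, alpha > 0, beta > 0.
    """
    total = 0.0
    for k in range(max_terms):
        arg = alpha * k + beta
        if arg > 170.0:
            # math.gamma overflows a double just above 171, so stop here.
            break
        term = z ** k / math.gamma(arg)
        total += term
        # Stop once the current term no longer changes the sum noticeably.
        if k > 0 and abs(term) < tol * max(1.0, abs(total)):
            break
    return total


if __name__ == "__main__":
    # E_{1,1}(z) = exp(z), as stated in Definition 2.3.
    print(mittag_leffler(1.0, 1.0), math.exp(1.0))
    # A decaying value of the kind that appears in Mittag-Leffler stability bounds.
    print(mittag_leffler(-2.0, 0.9))
```

For \(\alpha=1\) the routine reduces to the exponential series, which gives a quick sanity check of the implementation.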

Lemma 2.2

[16]

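Let \(V(t)\) be a continuous function on \([0,+\infty)\) satisfying

$$ \begin{aligned} {}_{0}^{c} D^{\alpha}_{t}V(t)\leq\theta V(t), \end{aligned} $$

where \(0<\alpha< 1\), and θ is a constant. Then

$$ \begin{aligned} V(t)\leq V(0)E_{\alpha}\bigl(\theta t^{\alpha}\bigr),\quad t\geq0. \end{aligned} $$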

Lemma 2.3

[35]

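Suppose that \(x(t)\in R^{n}\) is a continuous and differentiable function, \({P\in R^{n\times n}}\) is a positive definite matrix. Then, for \(\alpha\in(0,1)\), the following inequality holds:

$$ \begin{aligned} {}_{0}^{c} D^{\alpha}_{t}\bigl(x^{T}(t)Px(t)\bigr)\leq2x^{T}(t)P\, {}_{0}^{c} D^{\alpha}_{t}x(t). \end{aligned} $$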

2.2 A new inequality

In this section, we develop an inequality (see Lemma 2.5) with respect to the Caputo derivative of the integer-order integral function.

Lemma 2.4

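Suppose that \(f:R\rightarrow R\) and \(g:R\rightarrow R\) are continuous functions, and \(f(0)=0\), \(tf(t)\geq0\), \(g(t)\geq0\), \(t\in R\). Then, for \(t\in R\),

$$ \begin{aligned} \operatorname{Sign} \biggl( \int^{t}_{0}f(s)\,ds \biggr)=\operatorname{Sign} \biggl( \int^{t}_{0}g(s)f(s)\,ds \biggr), \end{aligned} $$

where \(\operatorname{Sign}(\cdot)\) is the sign function.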

Proof

Case 1. Let \(t\geq0\), then \(f(s)\geq0\), \(s\in(0,t)\). Obviously, \(\int^{t}_{0}f(s)\,ds\geq0\). According to \(g(s)\geq0\), it is easy to obtain that \(\int^{t}_{0}g(s)f(s)\,ds\geq 0\). Thus, \(\operatorname{Sign}(\int^{t}_{0}f(s)\,ds)=\operatorname{Sign}(\int^{t}_{0}g(s) f(s)\,ds)\).

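Case 2. Let \(t<0\), then \(f(s)\leq0\), \(s\in(t, 0)\), and \(\int^{t}_{0}f(s)\,ds= -\int^{0}_{t}f(s)\,ds \geq0\). Noting that \(\int^{t}_{0}g(s)f(s)\,ds=- \int^{0}_{t}g(s)f(s)\,ds \geq0\), we have \(\operatorname{Sign}(\int^{t}_{0}f(s)\,ds)=\operatorname{Sign}(\int^{t}_{0}g(s)f(s)\,ds)\).

From Cases 1 and 2, it follows that

$$ \begin{aligned} \operatorname{Sign} \biggl( \int^{t}_{0}f(s)\,ds \biggr)=\operatorname{Sign} \biggl( \int^{t}_{0}g(s)f(s)\,ds \biggr),\quad t\in R. \end{aligned} $$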

The proof is completed. □

Lemma 2.5

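Suppose that \(z:R\rightarrow R\) is a continuous and differentiable function, and \(g:R\rightarrow R\) is a continuous and monotone nondecreasing function. Then, for \(\alpha\in(0,1)\), the following inequality holds:

$$ \begin{aligned} {}_{0}^{c} D^{\alpha}_{t} \int^{z(t)}_{0}g(s)\,ds\leq g\bigl(z(t)\bigr)\, {}_{0}^{c} D^{\alpha}_{t}z(t). \end{aligned} \tag{1} $$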

Proof

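Obviously, inequality (1) is equivalent to

$$ \begin{aligned} g\bigl(z(t)\bigr)\, {}_{0}^{c} D^{\alpha}_{t}z(t)- {}_{0}^{c} D^{\alpha}_{t} \int^{z(t)}_{0}g(s)\,ds\geq0. \end{aligned} \tag{2} $$

By Definition 2.2, we have

$$ \begin{aligned} {}_{0}^{c} D^{\alpha}_{t}z(t)=\frac{1}{\Gamma(1-\alpha)} \int^{t}_{0}\frac{z^{\prime}(s)}{(t-s)^{\alpha}}\,ds \end{aligned} \tag{3} $$

and

$$ \begin{aligned} {}_{0}^{c} D^{\alpha}_{t} \int^{z(t)}_{0}g(s)\,ds=\frac{1}{\Gamma(1-\alpha)} \int^{t}_{0}\frac{g(z(s))z^{\prime}(s)}{(t-s)^{\alpha}}\,ds. \end{aligned} \tag{4} $$

Substituting (3) and (4) into (2), it can be rewritten as

$$ \begin{aligned} \frac{g(z(t))}{\Gamma(1-\alpha)} \int^{t}_{0}\frac{z^{\prime}(s)}{(t-s)^{\alpha}}\,ds-\frac{1}{\Gamma(1-\alpha)} \int^{t}_{0}\frac{g(z(s))z^{\prime}(s)}{(t-s)^{\alpha}}\,ds\geq0. \end{aligned} \tag{5} $$

Further, we can get

$$ \begin{aligned} \int^{t}_{0}\frac{g(z(t))-g(z(s))}{(t-s)^{\alpha}}z^{\prime}(s)\,ds\geq0. \end{aligned} \tag{6} $$

Inequality (6) can be rewritten as follows:

$$ \begin{aligned} \int^{t}_{0}\frac{g(z(t))-g(z(s))}{(t-s)^{\alpha}(z(t)-z(s))}\bigl(z(t)-z(s)\bigr)z^{\prime}(s)\,ds\geq0. \end{aligned} \tag{7} $$

Set \(\tau=z(t)-z(s)\), \(w(s)=\frac{g(z(t))-g(z(s))}{(t-s)^{\alpha}(z(t)-z(s))}=\bar{w}(\tau) \). Since g is a monotone nondecreasing function, \(\bar{w}(\tau)\geq0\).

As a result, noting that \(d\tau=-z^{\prime}(s)\,ds\), (7) can be transformed into

$$ \begin{aligned} \int^{z(t)-z(0)}_{0}\bar{w}(\tau)\tau\,d\tau\geq0. \end{aligned} \tag{8} $$

By Lemma 2.4 and (8), (2) is true if

$$ \begin{aligned} \int^{z(t)-z(0)}_{0}\tau\,d\tau\geq0. \end{aligned} \tag{9} $$

It is easy to calculate that

$$ \begin{aligned} \int^{z(t)-z(0)}_{0}\tau\,d\tau=\frac{1}{2}\bigl(z(t)-z(0)\bigr)^{2}\geq0. \end{aligned} \tag{10} $$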

The proof is completed. □
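The inequality of Lemma 2.5 can be checked numerically on sample data. The sketch below assumes that the inequality has the form \({}_{0}^{c} D^{\alpha}_{t}\int^{z(t)}_{0}g(s)\,ds\leq g(z(t))\,{}_{0}^{c} D^{\alpha}_{t}z(t)\) and approximates the Caputo derivative with the standard L1 finite-difference scheme. The test trajectory \(z(t)=\sin(2t)+0.3\), the choice \(g(x)=0.5x+\tanh(x)\), the order \(\alpha=0.8\) and the step size are illustrative assumptions, not values from the paper; the function names are hypothetical.

```python
import math


def caputo_l1(u, h, alpha):
    """L1 approximation of the Caputo derivative (0 < alpha < 1).

    u holds samples u(0), u(h), u(2h), ...; the result has the same length,
    with the value at t = 0 set to 0.
    """
    n_pts = len(u)
    c = h ** (-alpha) / math.gamma(2.0 - alpha)
    # b[j] = (j + 1)^(1 - alpha) - j^(1 - alpha), the L1 quadrature weights.
    b = [(j + 1) ** (1.0 - alpha) - j ** (1.0 - alpha) for j in range(n_pts)]
    d = [0.0] * n_pts
    for n in range(1, n_pts):
        d[n] = c * sum(b[n - k] * (u[k] - u[k - 1]) for k in range(1, n + 1))
    return d


def lemma_2_5_gaps(alpha=0.8, h=0.01, steps=300):
    """Return g(z) * D^alpha z - D^alpha G(z) on the grid, where G' = g."""
    g = lambda x: 0.5 * x + math.tanh(x)                      # nondecreasing
    G = lambda x: 0.25 * x * x + math.log(math.cosh(x))       # integral of g from 0 to x
    z = [math.sin(2.0 * n * h) + 0.3 for n in range(steps + 1)]
    d_int = caputo_l1([G(v) for v in z], h, alpha)
    d_z = caputo_l1(z, h, alpha)
    return [g(z[n]) * d_z[n] - d_int[n] for n in range(steps + 1)]


if __name__ == "__main__":
    gaps = lemma_2_5_gaps()
    print("smallest gap:", min(gaps), "largest gap:", max(gaps))
```

Under these assumptions the gap should be nonnegative at every grid point up to rounding error, because the L1 scheme is a nonnegative combination of differences \(u(t_{n})-u(t_{j})\) and \(\int^{z}_{0}g(s)\,ds\) is convex when g is nondecreasing.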

2.3 System description

In this paper, we consider an FNN model described by

$$ \begin{aligned} {}_{0}^{c} D^{\alpha}_{t}x_{i}(t)=-c_{i}x_{i}(t)+\sum_{j=1}^{n}a_{ij}g_{j}\bigl(x_{j}(t)\bigr)+I_{i},\quad i=1,2,\ldots,n. \end{aligned} \tag{11} $$

Equation (11) can be written equivalently as follows:

$$ \begin{aligned} {}_{0}^{c} D^{\alpha}_{t}x(t)=-Cx(t)+Ag\bigl(x(t)\bigr)+I, \end{aligned} \tag{12} $$

where \(x(t)=(x_{1}(t),x_{2}(t),\ldots,x_{n}(t))^{T}\in R^{n}\) is the state vector associated with the neurons, \(C=\text{diag}(c_{1},c_{2},\ldots,c_{n}) \) is a positive diagonal matrix, \(A=(a_{ij})_{n\times n}\) is the interconnection weight matrix, \(g(x)=(g_{1}(x_{1}),g_{2}(x_{2}),\ldots, g_{n}(x_{n}))^{T}\in R^{n}\) and \(I=(I_{1},I_{2},\ldots,I_{n})^{T}\) denote the neuron activation function and constant input vector, respectively.

The parameters C and A are assumed to lie in intervals, \(C\in C_{I}\), \(A\in A_{I}\), where \(C_{I}:=\{C=\text{diag}(c_{i})_{n\times n}:0<\underline{C}\leq{C}\leq\overline{C}, \textit{i.e.}, 0<\underline{c}_{i}\leq c_{i}\leq\overline{c}_{i},i=1,2,\ldots,n\}\), \(\underline{C}=\text{diag}(\underline{c}_{i})_{n\times n}\), \(\overline{C}=\text{diag}(\overline{c}_{i})_{n\times n}\), and \(A_{I}:=\{A=(a_{ij})_{n\times n}:\underline{A}\leq{A}\leq\overline{A}, \textit{i.e.}, \underline{a}_{ij}\leq a_{ij}\leq\overline{a}_{ij},i,j=1,2,\ldots,n\}\), \(\underline{A}=(\underline{a}_{ij})_{n\times n}\), \(\overline{A}=(\overline{a}_{ij})_{n\times n}\).

In this paper, we make the following hypothesis for the neuron activation function \(g_{i}\):

\((H_{1})\): \(g_{i}\) is a continuous and monotone nondecreasing function.

Lemma 2.6

[24]

Let \(x\in R^{n}\) and \(A\in A_{I}\), then, for any positive diagonal matrix Q, the following inequality holds:

where \(A^{\ast}=\frac{1}{2}(\overline{A}+\underline{A})\), \(A_{\ast}=\frac {1}{2}(\overline{A}-\underline{A})\).
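The midpoint matrix \(A^{\ast}\) and the radius matrix \(A_{\ast}\) split every \(A\in A_{I}\) into a nominal part and a bounded perturbation, \(A=A^{\ast}+\Delta A\) with \(|\Delta a_{ij}|\leq(A_{\ast})_{ij}\). The following minimal sketch computes both matrices and tests membership in \(A_{I}\); the bounds used in the usage example are hypothetical and are not the parameters of the examples in Section 4.

```python
def midpoint_and_radius(lower, upper):
    """Return (A_mid, A_rad) with A_mid = (upper + lower) / 2 and
    A_rad = (upper - lower) / 2, computed entrywise."""
    mid = [[0.5 * (u + l) for l, u in zip(row_l, row_u)]
           for row_l, row_u in zip(lower, upper)]
    rad = [[0.5 * (u - l) for l, u in zip(row_l, row_u)]
           for row_l, row_u in zip(lower, upper)]
    return mid, rad


def in_interval(a, lower, upper):
    """Check the entrywise condition lower <= a <= upper."""
    return all(l <= x <= u
               for row_a, row_l, row_u in zip(a, lower, upper)
               for x, l, u in zip(row_a, row_l, row_u))


if __name__ == "__main__":
    # Hypothetical interval bounds, chosen only for illustration.
    A_lower = [[-18.0, 0.0], [-1.0, -19.0]]
    A_upper = [[-16.0, 2.0], [1.0, -17.0]]
    A_mid, A_rad = midpoint_and_radius(A_lower, A_upper)
    print(A_mid)   # nominal matrix A^*
    print(A_rad)   # entrywise bound A_* on the perturbation
    print(in_interval([[-17.5, 1.5], [0.2, -18.0]], A_lower, A_upper))
```

Any matrix that passes `in_interval` differs from `A_mid` by at most `A_rad` in each entry, which is the property Lemma 2.6 relies on.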

Definition 2.4

Mittag-Leffler stability

An equilibrium point \(x^{\ast}\) of the neural network system (12) is said to be Mittag-Leffler stable if there exist constants \(M>0\), \(\lambda>0\), \(\alpha\in(0,1)\) and \(b>0\) such that, for any solutions \(x(t)\) of (12) with initial value \(x_{0}\), the following inequality holds:

Definition 2.5

[32]

The neural network system (12) is said to be globally robust Mittag-Leffler stable if the unique equilibrium point is globally Mittag-Leffler stable for \(C\in C_{I}\) and \(A\in A_{I}\).

3 Main results

Theorem 3.1

Let assumption \((H_{1})\) hold. If there exists a positive diagonal matrix \(Q=\operatorname{diag}(q_{1}, \ldots,q_{n})>0\) such that

then system (12) has a unique equilibrium point which is globally robustly Mittag-Leffler stable.

Proof

The process of proof is divided into three steps.

Step 1: In this step, we prove the existence of equilibrium point of system (12).

For \(C\in C_{I}\) and \(A\in A_{I}\), set

$$ \begin{aligned} W(x)=Cx-Af(x)-\widetilde{W}, \end{aligned} $$

where \(f(x)=g(x)-g(0)\), \(\widetilde{W}=Ag(0)+I\). It is easy to check that \(x^{*}\in R^{n}\) is an equilibrium point of (12) if and only if \(W(x^{*}) = 0\). Let \(\mathcal{H}(\lambda, x)=Cx-\lambda A f(x)-\lambda\widetilde{W}\), \(\lambda\in[0, 1]\). Obviously, \(\mathcal{H}(\lambda, x)\) is a continuous homotopy mapping on x.

By Lemma 2.6 and (13), we can obtain that

Under assumption \((H_{1})\), we have

This implies that, for each \(i\in\{ 1,2,\ldots, n\}\),

Let

By (14), there exists \(l_{i}> |\frac{\widetilde{W}_{j}}{\overline{c}_{j}}|\) such that, for any \(| x_{i}|> l_{i}\),

Set \(\Omega=\{x\in R^{n}:| x_{i}|< l_{i}+1,i=1,2,\ldots,n\}\). It is easy to find that Ω is an open bounded convex set independent of λ. For \(x\in \partial\Omega\), define

and there exists \(i_{0}\in\{1,2,\ldots,n\}\) such that \(| x_{i_{0}}|= l_{i_{0}}+1\).

By the definition of \(L_{i}\) in (15), we have

On the other hand,

Thus, for \(x\in\partial\Omega\) and \(\lambda\in[0,1]\), it follows that

This shows that \(\mathcal{H}(\lambda,x)\neq0\) for any \(x\in \partial\Omega\), \(\lambda\in[0,1]\). By utilizing the homotopy invariance property of the Brouwer degree (Lemma 2.3(1) in [15]), it follows that \(\operatorname{deg}(\mathcal {H}(1,x),\Omega,0)=\operatorname{deg}(\mathcal{H}(0, x),\Omega,0)\), namely, \(\operatorname{deg}(W(x),\Omega,0)=\operatorname{deg}(Cx,\Omega,0)\). Noting that \(\operatorname{deg}(W(x),\Omega,0)=\operatorname{deg}(Cx,\Omega,0)=\operatorname{Sign}|C|\neq 0\), where \(|C|\) is the determinant of C, and applying Lemma 2.3(2) in [15], we obtain that \(W(x)=0 \) has at least one solution in Ω. That is, system (12) has at least one equilibrium point in Ω.

Step 2: In this step, we verify the uniqueness of equilibrium point of system (12).

Let \(x^{\prime}\) and \(x^{\prime\prime}\in R^{n}\) be two different equilibrium points of system (12). Then

Multiplying by \(2(f(x^{\prime})-f(x^{\prime\prime}))^{T}Q\) yields

Obviously, this is a contradiction. Hence, \(x^{\prime}=x^{\prime\prime}\), namely, system (12) has a unique equilibrium point in Ω.

Step 3: In this step, we prove that the equilibrium point of system (12) is globally robustly Mittag-Leffler stable.

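Assume that \(x^{\ast} \in R ^{n}\) is the unique equilibrium point of system (12). Let \(z_{i}(t)=x_{i}(t)-x_{i}^{\ast}\), then system (12) can be rewritten as follows:

$$ \begin{aligned} {}_{0}^{c} D^{\alpha}_{t}z_{i}(t)=-c_{i}z_{i}(t)+\sum_{j=1}^{n}a_{ij}f_{j}\bigl(z_{j}(t)\bigr),\quad i=1,2,\ldots,n, \end{aligned} \tag{17} $$

where \(f_{j}(z_{j}(t))=g_{j}(z_{j}(t)+x_{j}^{\ast})-g_{j}(x_{j}^{\ast})\).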

By assumption \((H_{1})\), \(f_{i}\) is monotone nondecreasing and \(f_{i}(0)=0\). Hence, for any \(z_{i} \in R\), we have

For \(C\in C_{I}\) and \(A\in A_{I}\), consider the following Lyapunov functional \(V: R^{n}\rightarrow R\) of Lur’e-Postnikov type [37]:

where

\(\lambda_{m} =\lambda_{\min}(-\Phi)>0\). Note that, since \(\|C^{-1}A\|^{2}=\lambda_{\max} ((C^{-1}A)^{T}(C^{-1}A))\), under condition (19) it follows that

Calculate the fractional-order derivative of \(V(z(t))\) with respect to time along the solution of system (17). By Lemmas 2.3, 2.5 and 2.6, it can be obtained that

where \(2\beta f^{T}(z(t))(QC)z(t)\geq0\) on the basis of assumption \((H_{1})\). By adding and subtracting \(-f^{T} (z(t)) [(C^{-1}A)^{T} (C^{-1}A) ]f(z(t))\), and accounting for (20), we get

By (18), we have

where \(\underline{c}=\min\{\underline{c}_{i}:1\leq i\leq n\}\). By applying Lemma 2.2, we obtain that

Noting that

where \(\overline{c}=\max\{\overline{c}_{i}:1\leq i\leq n\}\), we have

This implies that

where \(\overline{q}=\max\{q_{i}:1\leq i\leq n\}\).

Hence

where \(M= (\overline{c}+2\beta \overline{q}\overline{c}\frac{f^{T} (x(0)-x^{\ast})(x(0)-x^{\ast})}{\|x(0)-x^{\ast}\|^{2}} )^{\frac{1}{2}}\). According to Definitions 2.4 and 2.5, the equilibrium point of system (12) is globally robustly Mittag-Leffler stable. The proof is completed. □

4 Numerical examples

Example 1

Consider a fractional-order neural network (12) with the following parameters:

Choose the activation functions \(g_{i}(x_{i})=0.5x_{i}+\tanh(x_{i})\), \(i=1,\ldots, n\). Obviously, each \(g_{i}\) is a monotone nondecreasing function.

Using an appropriate LMI solver to obtain a feasible numerical solution of (13), we find that Q can be chosen as follows:

The condition of Theorem 3.1 holds. Thus, system (12) with the above parameters is globally robustly Mittag-Leffler stable.

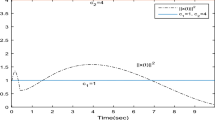

To verify the above result, we divide a numerical simulation into three cases:

Case 1: \(C=\underline{C}\in C_{I} \), \(A=\underline{A}\in A_{I}\), \(x^{*}=(0.8024,0.3089)\), see Figure 1.

Case 2: \(C= \bigl({\scriptsize\begin{matrix}{}2&0\cr0&2 \end{matrix}} \bigr) \in C_{I}\), \(A= \bigl({\scriptsize\begin{matrix}{}-17&1\cr0&-18 \end{matrix}} \bigr) \in A_{I}\), \(x^{*}=(0.8419,0.3535)\), see Figure 2.

Case 3: \(C=\overline{C}\in C_{I}\), \(A=\overline{A}\in A_{I}\), \(x^{*}=(0.8899,0.4037)\), see Figure 3.

From Figures 1, 2, 3, we can see that the state trajectories converge to a unique equilibrium point. This is consistent with the conclusion of Theorem 3.1.
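A simulation of the kind reported for Case 2 can be sketched with an explicit time-stepping scheme based on the L1 discretization of the Caputo derivative. The matrices C and A and the activation \(g_{i}(x_{i})=0.5x_{i}+\tanh(x_{i})\) are those of Case 2. Everything else is an assumption made for illustration: the order \(\alpha=0.9\), the input vector \(I=(20,10)^{T}\) (picked because it is consistent with the reported equilibrium \(x^{*}=(0.8419,0.3535)\)), the initial state, the step size, and the scheme itself, which is not necessarily the method used to produce Figures 1, 2, 3.

```python
import math


def g(x):
    """Activation function of Example 1."""
    return 0.5 * x + math.tanh(x)


def simulate_fnn(c_diag, a, i_ext, x0, alpha, h, steps):
    """Explicit L1-type scheme for D^alpha x = -C x + A g(x) + I, 0 < alpha < 1.

    Returns the list of states x(0), x(h), ..., x(steps * h).
    """
    n = len(x0)
    coef = math.gamma(2.0 - alpha) * h ** alpha
    # L1 weights b[j] = (j + 1)^(1 - alpha) - j^(1 - alpha); b[0] = 1.
    b = [(j + 1) ** (1.0 - alpha) - j ** (1.0 - alpha) for j in range(steps + 1)]
    xs = [list(x0)]
    for m in range(1, steps + 1):
        prev = xs[-1]
        gx = [g(v) for v in prev]
        new = []
        for i in range(n):
            rhs = (-c_diag[i] * prev[i]
                   + sum(a[i][j] * gx[j] for j in range(n))
                   + i_ext[i])
            # History term carrying the memory of the fractional derivative.
            memory = sum(b[m - k] * (xs[k][i] - xs[k - 1][i]) for k in range(1, m))
            new.append(prev[i] + coef * rhs - memory)
        xs.append(new)
    return xs


if __name__ == "__main__":
    c_diag = [2.0, 2.0]                    # C of Case 2
    a = [[-17.0, 1.0], [0.0, -18.0]]       # A of Case 2
    i_ext = [20.0, 10.0]                   # assumed input vector
    traj = simulate_fnn(c_diag, a, i_ext, x0=[-1.0, 2.0],
                        alpha=0.9, h=0.01, steps=500)
    print("state at t = 5:", traj[-1])
```

The history sum makes the cost grow quadratically with the number of steps, which is the usual price of the nonlocal Caputo operator. The slow, algebraic approach to the equilibrium is typical of Mittag-Leffler decay, as opposed to exponential decay.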

Example 2

Consider a fractional-order neural network (12) with the following parameters:

Obviously, \(g_{i}\) is a monotone nondecreasing function. Choose the positive definite diagonal matrix \(Q=\operatorname{diag}(5,8,11)\), then we have

The condition of Theorem 3.1 is satisfied. Thus, system (12) with the above parameters is globally robustly Mittag-Leffler stable.

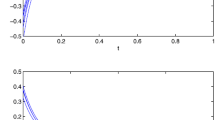

To verify the above result, we divide a numerical simulation into three cases:

Case 1: \(C=\underline{C}\in C_{I} \), \(A=\underline{A}\in A_{I}\), \(x^{*}=(0.2336,0.5032,0.8613)\), see Figure 4.

Case 2:

see Figure 5.

Case 3: \(C=\overline{C}\in C_{I}\), \(A=\overline{A}\in A_{I}\), \(x^{*}=(0.2495,0.6496,1.1050)\), see Figure 6.

From Figures 4, 5, 6, we can see that the state trajectories converge to a unique equilibrium point. This is consistent with the conclusion of Theorem 3.1.

5 Conclusion

In this paper, the global robust Mittag-Leffler stability issue has been investigated for a class of FNNs with parameter uncertainties. A new inequality with respect to the Caputo derivative of an integer-order integral function with the variable upper limit has been developed. The sufficient condition in terms of LMIs has been presented to ensure the existence, uniqueness and robust Mittag-Leffler stability of equilibrium point.

It would be interesting to extend the results proposed in this paper to FNNs with delays and parameter uncertainties. This issue will be the topic of our future research.

References

Gupta, M, Jin, L, Homma, N: Static and Dynamic Neural Networks. Wiley-Interscience, New York (2003)

Banerjee, S, Verghese, G: Nonlinear Phenomena in Power Electronics: Bifurcation, Chaos, Control, and Applications. Wiley-IEEE Press, New York (2001)

Liberzon, D: Switching in System and Control. Birkhäuser, Boston (2001)

Leine, R, Nijmeijer, H: Dynamics and Bifurcation of Nonsmooth Mechanical Systems. Lecture Notes in Applied and Computational Mechanics, vol. 18. Springer, Berlin (2004)

Forti, M, Tesi, A: New conditions for global stability of neural networks with application to linear and quadratic programming problems. IEEE Trans. Circuits Syst. I, Fundam. Theory Appl. 42, 354-366 (1995)

Chen, L, Chai, Y, Wu, R, Ma, T, Zhai, H: Dynamic analysis of a class of fractional-order neural networks with delay. Neurocomputing 111, 190-194 (2013)

Wang, H, Yu, Y, Wen, G: Stability analysis of fractional-order Hopfield neural networks with time delays. Neural Netw. 55, 98-109 (2014)

Wang, H, Yu, Y, Wen, G, Zhang, S, Yu, J: Global stability analysis of fractional-order Hopfield neural networks with time delay. Neurocomputing 154, 15-23 (2015)

Wang, F, Yang, YQ, Hu, M: Asymptotic stability of delayed fractional-order neural networks with impulsive effects. Neurocomputing 154, 239-244 (2015)

Zhang, S, Yu, Y, Wang, H: Mittag-Leffler stability of fractional-order Hopfield neural networks. Nonlinear Anal. Hybrid Syst. 16, 104-121 (2015)

Yang, X, Song, Q, Liu, Y, Zhao, Z: Finite-time stability analysis of fractional-order neural networks with delay. Neurocomputing 152, 19-26 (2015)

Wu, A, Zeng, Z, Song, X: Global Mittag-Leffler stabilization of fractional-order bidirectional associative memory neural networks. Neurocomputing 177, 489-496 (2016)

Wu, A, Zeng, Z: Boundedness, Mittag-Leffler stability and asymptotical omega-periodicity of fractional-order fuzzy neural networks. Neural Netw. 74, 73-84 (2016)

Wu, H, Zhang, X, Xue, S, Wang, L, Wang, Y: LMI conditions to global Mittag-Leffler stability of fractional-order neural networks with impulses. Neurocomputing 193, 148-154 (2016)

Wu, H, Tao, F, Qin, L, Shi, R, He, L: Robust exponential stability for interval neural networks with delays and non-Lipschitz activation functions. Nonlinear Dyn. 66, 479-487 (2011)

Chen, J, Zeng, Z, Jiang, P: Global Mittag-Leffler stability and synchronization of memristor-based fractional-order neural networks. Neural Netw. 51, 1-8 (2014)

Singh, V: New LMI-based criteria for global robust stability of delayed neural networks. Appl. Math. Model. 34, 2958-2965 (2010)

Faydasicok, O, Arik, S: Robust stability analysis of neural networks with discrete time delays. Neural Netw. 30, 52-59 (2012)

Wang, X, Chen, X, Qi, H: Global robust exponential stability in Lagrange sense for interval delayed neural networks. In: Advances in Neural Networks - ISNN 2013. Lecture Notes in Computer Science, vol. 7951, pp. 239-249. Springer, Berlin (2013)

Hu, W, Li, C, Wu, S: Stochastic robust stability for neutral-type impulsive interval neural networks with distributed time-varying delays. Neural Comput. Appl. 21, 1947-1960 (2012)

Li, H, Lam, J, Gao, H: Robust stability for interval stochastic neural networks with time-varying discrete and distributed delays. Differ. Equ. Dyn. Syst. 19, 97-118 (2011)

He, Q, Liu, D, Wu, H, Ding, S: Robust exponential stability analysis for interval Cohen-Grossberg type BAM neural networks with mixed time delays. Int. J. Mach. Learn. Cybern. 5, 23-38 (2014)

Wu, H, Tao, F, Qin, L, Shi, R, He, L: Robust exponential stability for interval neural networks with delays and non-Lipschitz activation functions. Nonlinear Dyn. 66, 479-487 (2011)

Qin, S, Fan, D, Yan, M, Liu, Q: Global robust exponential stability for interval delayed neural networks with possibly unbounded activation functions. Neural Process. Lett. 40, 35-50 (2014)

Arik, S: New criteria for global robust stability of delayed neural networks with norm-bounded uncertainties. IEEE Trans. Neural Netw. Learn. Syst. 25, 1045-1052 (2014)

Ahn, C: Robust stability of recurrent neural networks with ISS learning algorithm. Nonlinear Dyn. 65, 413-419 (2011)

Feng, W, Yang, S, Wu, H: Improved robust stability criteria for bidirectional associative memory neural networks under parameter uncertainties. Neural Comput. Appl. 25, 1205-1214 (2014)

Xie, J, Chen, C, Liu, P, Jeng, Y: Robust exponential stability analysis for delayed neural networks with time-varying delay. Adv. Differ. Equ. 2014, 131-146 (2014)

Banu, L, Balasubramaniam, P, Ratnavelu, K: Robust stability analysis for discrete-time uncertain neural networks with leakage time-varying delay. Neurocomputing 151, 808-816 (2014)

Ali, M, Gunasekaran, N, Rani, M: Robust stability of Hopfield delayed neural networks via an augmented L-K functional. Neurocomputing 234, 198-204 (2017)

Hua, C, Wu, S, Guan, X: New robust stability condition for discrete-time recurrent neural networks with time-varying delays and nonlinear perturbations. Neurocomputing 219, 203-209 (2017)

Zhang, S, Yu, Y, Hu, W: Robust stability analysis of fractional order Hopfield neural networks with parameter uncertainties. Math. Probl. Eng. 4, 1-14 (2014)

Liao, Z, Peng, C, Li, W, Wang, Y: Robust stability analysis for a class of fractional order systems with uncertain parameters. J. Franklin Inst. 348, 1101-1113 (2011)

Ma, Y, Lu, J, Chen, W: Robust stability bounds of uncertain fractional-order systems. Fract. Calc. Appl. Anal. 17, 136-153 (2014)

Wu, H, Wang, L, Wang, Y, Niu, P, Fang, B: Global Mittag-Leffler projective synchronization for fractional-order neural networks: an LMI-based approach. Adv. Differ. Equ. 1, Article ID 132 (2016). doi:10.1186/s13662-016-0857-8

Podlubny, I: Fractional Differential Equations. Mathematics in Science and Engineering, vol. 198. Academic Press, San Diego (1999)

Forti, M, Tesi, A: New conditions for global stability of neural networks with application to linear and quadratic programming problems. IEEE Trans. Circuits Syst. I, Fundam. Theory Appl. 42, 354-366 (1995)

Acknowledgements

This work was jointly supported by the National Natural Science Foundation of China (61573306) and High Level Talent Project of Hebei Province of China (C2015003054).

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to the manuscript. All authors read and approved the final manuscript.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Song, K., Wu, H. & Wang, L. Lur’e-Postnikov Lyapunov function approach to global robust Mittag-Leffler stability of fractional-order neural networks. Adv Differ Equ 2017, 232 (2017). https://doi.org/10.1186/s13662-017-1298-8


DOI: https://doi.org/10.1186/s13662-017-1298-8